ISACA Journal, 2021, Volume 2

Risk Assessment and Analysis Methods: Qualitative and Quantitative

A risk assessment determines the likelihood, consequences and tolerances of possible incidents. “Risk assessment is an inherent part of a broader risk management strategy to introduce control measures to eliminate or reduce any potential risk-related consequences.” 1 The main purpose of risk assessment is to avoid negative consequences related to risk or to evaluate possible opportunities.

It is the combined effort of:

- “…[I]dentifying and analyzing possible future events that could adversely affect individuals, assets, processes and/or the environment (i.e., risk analysis)”

- “…[M]aking judgments about managing and tolerating risk on the basis of a risk analysis while considering influencing factors (i.e., risk evaluation)” 2

Relationships between assets, processes, threats, vulnerabilities and other factors are analyzed in the risk assessment approach. There are many methods available, but quantitative and qualitative analysis are the most widely known and used classifications. In general, the methodology chosen at the beginning of the decision-making process should be able to produce a quantitative explanation about the impact of the risk and security issues along with the identification of risk and formation of a risk register. There should also be qualitative statements that explain the importance and suitability of controls and security measures to minimize these risk areas. 3

In general, the risk management life cycle includes seven main processes that support and complement each other ( figure 1 ):

- Determine the risk context and scope, then design the risk management strategy.

- Choose the responsible and related partners, identify the risk and prepare the risk registers.

- Perform qualitative risk analysis and select the risk that needs detailed analysis.

- Perform quantitative risk analysis on the selected risk.

- Plan the responses and determine controls for the risk that falls outside the risk appetite.

- Implement risk responses and chosen controls.

- Monitor risk improvements and residual risk.

Qualitative and Quantitative Risk Analysis Techniques

Different techniques can be used to evaluate and prioritize risk. Depending on how well the risk is known, and if it can be evaluated and prioritized in a timely manner, it may be possible to reduce the possible negative effects or increase the possible positive effects and take advantage of the opportunities. 4 “Quantitative risk analysis tries to assign objective numerical or measurable values” to the components of the risk assessment and to the assessment of potential loss. Conversely, “a qualitative risk analysis is scenario-based.” 5

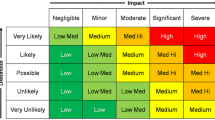

Qualitative Risk

The purpose of qualitative risk analysis is to identify the risk that needs detailed analysis and the necessary controls and actions based on the risk’s effect and impact on objectives. 6 In qualitative risk analysis, two simple methods are well known and easily applied to risk: 7

- Keep It Super Simple (KISS): This method can be used on narrow-framed or small projects where unnecessary complexity should be avoided and the assessment can be made easily by teams that lack maturity in assessing risk. This one-dimensional technique involves rating risk on a basic scale, such as very high/high/medium/low/very low.

- Probability/Impact: This method can be used on larger, more complex issues with multilateral teams that have experience with risk assessments. This two-dimensional technique is used to rate probability and impact. Probability is the likelihood that a risk will occur. The impact is the consequence or effect of the risk, normally associated with impact to schedule, cost, scope and quality. Rate probability and impact using a scale such as 1 to 10 or 1 to 5, where the risk score equals the probability multiplied by the impact.
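As a minimal sketch of the probability/impact technique, the following Python snippet scores and ranks a few register entries on a 1-to-5 scale. The function name `risk_score`, the register entries and their ratings are hypothetical and are used only for illustration.

```python
def risk_score(probability: int, impact: int, scale_max: int = 5) -> int:
    """Return probability x impact for ratings on a 1..scale_max scale."""
    for name, value in (("probability", probability), ("impact", impact)):
        if not 1 <= value <= scale_max:
            raise ValueError(f"{name} must be between 1 and {scale_max}")
    return probability * impact


# Hypothetical register entries: (risk name, probability, impact)
register = [
    ("Key supplier delay", 4, 3),
    ("Data center outage", 2, 5),
    ("Minor scope change", 3, 1),
]

# Rank the register so the highest-scoring risk is reviewed first.
ranked = sorted(register, key=lambda r: risk_score(r[1], r[2]), reverse=True)

for name, probability, impact in ranked:
    print(f"{name}: score {risk_score(probability, impact)}")
```

Risks at the top of the ranked list would be the natural candidates for the more detailed analysis described in the text.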

Qualitative risk analysis can generally be performed on all business risk. The qualitative approach is used to quickly identify risk areas related to normal business functions. The evaluation can assess whether people’s concerns about their jobs are related to these risk areas. Then, the quantitative approach is applied to the relevant risk scenarios to offer more detailed information for decision-making. 8 Before making critical decisions or completing complex tasks, quantitative risk analysis provides more objective information and accurate data than qualitative analysis. Although quantitative analysis is more objective, it should be noted that it is still an estimate or inference. Wise risk managers consider other factors in the decision-making process. 9

Although a qualitative risk analysis is the first choice in terms of ease of application, a quantitative risk analysis may be necessary. After qualitative analysis, quantitative analysis can also be applied. However, if qualitative analysis results are sufficient, there is no need to do a quantitative analysis of each risk.

Quantitative Risk

A quantitative risk analysis is a further analysis of high-priority and/or high-impact risk, in which a numerical or quantitative rating is assigned to develop a probabilistic assessment of business-related issues. In addition, for projects or issues/processes operated with a project management approach, quantitative risk analysis has a more limited use, depending on the type of project, the project risk and the availability of data to be used for quantitative analysis. 10

The purpose of a quantitative risk analysis is to translate the probability and impact of a risk into a measurable quantity. 11 A quantitative analysis: 12

- “Quantifies the possible outcomes for the business issues and assesses the probability of achieving specific business objectives”

- “Provides a quantitative approach to making decisions when there is uncertainty”

- “Creates realistic and achievable cost, schedule or scope targets”

Consider using quantitative risk analysis for: 13

- “Business situations that require schedule and budget control planning”

- “Large, complex issues/projects that require go/no go decisions”

- “Business processes or issues where upper management wants more detail about the probability of completing on schedule and within budget”

The advantages of using quantitative risk analysis include: 14

- Objectivity in the assessment

- Powerful selling tool to management

- Direct projection of cost/benefit

- Flexibility to meet the needs of specific situations

- Flexibility to fit the needs of specific industries

- Much less prone to arouse disagreements during management review

- Analysis is often derived from some irrefutable facts


To conduct a quantitative risk analysis on a business process or project, high-quality data, a definite business plan, a well-developed project model and a prioritized list of business/project risk are necessary. Quantitative risk assessment is based on realistic and measurable data to calculate the impact values that the risk will create with the probability of occurrence. This assessment focuses on mathematical and statistical bases and can “express the risk values in monetary terms, which makes its results useful outside the context of the assessment (loss of money is understandable for any business unit).” 15 The most common problem in quantitative assessment is that there is not enough data to be analyzed. There can also be challenges in expressing the subject of the evaluation in numerical values, or the number of relevant variables may be too high. This makes risk analysis technically difficult.

There are several tools and techniques that can be used in quantitative risk analysis. Those tools and techniques include: 16

- Heuristic methods: Experience-based or expert-based techniques to estimate contingency

- Three-point estimate: A technique that uses the optimistic, most likely and pessimistic values to determine the best estimate

- Decision tree analysis: A diagram that shows the implications of choosing various alternatives

- Expected monetary value (EMV): A method used to establish the contingency reserves for a project or business process budget and schedule

- Monte Carlo analysis: A technique that uses optimistic, most likely and pessimistic estimates to determine the business cost and project completion dates

- Sensitivity analysis: A technique used to determine the risk that has the greatest impact on a project or business process

- Fault tree analysis (FTA) and failure modes and effects analysis (FMEA): The analysis of a structured diagram that identifies elements that can cause system failure
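Three of the techniques above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed method: the three-point estimate uses the common PERT weighting (O + 4M + P) / 6, the Monte Carlo run samples each task from a triangular distribution, and all function names and input figures are hypothetical.

```python
import random


def three_point_estimate(optimistic, most_likely, pessimistic):
    """PERT-weighted estimate (an assumed convention): (O + 4M + P) / 6."""
    return (optimistic + 4 * most_likely + pessimistic) / 6


def expected_monetary_value(outcomes):
    """Sum of probability * monetary impact over (probability, impact) pairs."""
    return sum(probability * impact for probability, impact in outcomes)


def monte_carlo_totals(tasks, trials=10_000, seed=0):
    """Simulate total cost; each task is (optimistic, most_likely, pessimistic).

    Each task cost is drawn from a triangular distribution, which is an
    assumption of this sketch. Returns the simulated totals sorted ascending.
    """
    rng = random.Random(seed)
    totals = [
        sum(rng.triangular(low, high, mode) for low, mode, high in tasks)
        for _ in range(trials)
    ]
    totals.sort()
    return totals


# Hypothetical inputs for illustration only.
tasks = [(10, 12, 20), (5, 8, 15)]
totals = monte_carlo_totals(tasks)
p80 = totals[int(0.8 * len(totals))]  # cost not exceeded in about 80% of trials

print(three_point_estimate(10, 12, 20))
print(expected_monetary_value([(0.2, -50_000), (0.1, 30_000)]))
print(round(p80, 2))
```

A percentile of the simulated totals, such as the 80th used here, is one way to size a contingency reserve; the choice of percentile is a judgment call for the organization.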

There are also some basic (target, estimated or calculated) values used in quantitative risk assessment. Single loss expectancy (SLE) represents the money or value expected to be lost if the incident occurs one time, and the annual rate of occurrence (ARO) is how many times in a one-year interval the incident is expected to occur. The annual loss expectancy (ALE) is the money or value expected to be lost in one year, considering the SLE and ARO, and it can be used to justify the cost of applying countermeasures to protect an asset or a process. This value is calculated by multiplying the SLE by the ARO. 17 For quantitative risk assessment, this is the risk value. 18


Because it relies on factual and measurable data, quantitative risk assessment offers two main benefits: very precise results about risk value and the maximum investment that would make risk treatment worthwhile and profitable for the organization. For quantitative cost-benefit analysis, ALE is a calculation that helps an organization to determine the expected monetary loss for an asset or investment due to the related risk over a single year.

For example, calculating the ALE for a virtualization system investment includes the following:

- Virtualization system hardware value: US$1 million (SLE for HW)

- Virtualization system management software value: US$250,000 (SLE for SW)

- Vendor statistics indicate that a catastrophic system failure (due to software or hardware) occurs one time every 10 years (ARO = 1/10 = 0.1)

- ALE for HW = 1M * 0.1 = US$100,000

- ALE for SW = 250K * 0.1 = US$25,000

In this case, the organization has an annual risk of suffering a loss of US$100,000 for hardware or US$25,000 for software individually in the event of the loss of its virtualization system. Any implemented control (e.g., backup, disaster recovery, fault tolerance system) that costs less than these values would be profitable.
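The virtualization example can be reproduced with a short Python sketch. The SLE and ARO figures come from the example above; the helper names `annual_loss_expectancy` and `control_is_worthwhile` and the US$60,000 control cost are hypothetical.

```python
def annual_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = SLE x ARO."""
    return sle * aro


def control_is_worthwhile(annual_control_cost: float, ale: float) -> bool:
    """A control costing less per year than the ALE it addresses pays for itself."""
    return annual_control_cost < ale


ARO = 1 / 10  # one catastrophic failure every 10 years

ale_hw = annual_loss_expectancy(1_000_000, ARO)  # hardware SLE: US$1 million
ale_sw = annual_loss_expectancy(250_000, ARO)    # software SLE: US$250,000

print(f"ALE for HW: US${ale_hw:,.0f}")
print(f"ALE for SW: US${ale_sw:,.0f}")

# Hypothetical control cost, for illustration only.
print(control_is_worthwhile(60_000, ale_hw))  # compared against the hardware ALE
print(control_is_worthwhile(60_000, ale_sw))  # compared against the software ALE
```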

Some risk assessments require complicated parameters. More examples can be derived according to the following “step-by-step breakdown of the quantitative risk analysis”: 19

- Conduct a risk assessment and vulnerability study to determine the risk factors.

- Determine the exposure factor (EF), which is the percentage of asset loss caused by the identified threat.

- Based on the risk factors determined, establish the value of the tangible or intangible assets at risk, then determine the SLE, which equals the asset value multiplied by the exposure factor.

- Evaluate the historical background and business culture of the institution in terms of reporting security incidents and losses (adjustment factor).

- Estimate the ARO for each risk factor.

- Determine the countermeasures required to overcome each risk factor.

- Add a ranking number from one to 10 for quantifying severity (with 10 being the most severe) as a size correction factor for the risk estimate obtained from company risk profile.

- Determine the ALE for each risk factor. Note that the ARO for the ALE after countermeasure implementation may not always be equal to zero. ALE (corrected) equals ALE (table) times adjustment factor times size correction.

- Calculate an appropriate cost/benefit analysis by finding the differences before and after the implementation of countermeasures for ALE.

- Determine the return on investment (ROI) based on the cost/benefit analysis using internal rate of return (IRR).

- Present a summary of the results to management for review.
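The arithmetic in these steps can be sketched as follows. The class `RiskFactor`, the function `net_benefit` and all figures are hypothetical. Subtracting the annual countermeasure cost from the ALE reduction is a common convention assumed here rather than something the steps spell out, and the IRR-based ROI step is not modeled.

```python
from dataclasses import dataclass


@dataclass
class RiskFactor:
    asset_value: float              # value of the asset at risk
    exposure_factor: float          # fraction of the asset lost per incident (0..1)
    aro: float                      # annual rate of occurrence
    adjustment_factor: float = 1.0  # incident-reporting adjustment (assumed default)
    size_correction: float = 1.0    # severity-based size correction (assumed default)

    @property
    def sle(self) -> float:
        """SLE = asset value x exposure factor."""
        return self.asset_value * self.exposure_factor

    @property
    def ale(self) -> float:
        """ALE = SLE x ARO."""
        return self.sle * self.aro

    @property
    def ale_corrected(self) -> float:
        """ALE (corrected) = ALE x adjustment factor x size correction."""
        return self.ale * self.adjustment_factor * self.size_correction


def net_benefit(before: RiskFactor, after: RiskFactor, annual_cost: float) -> float:
    """ALE reduction from the countermeasure minus its annual cost (assumed convention)."""
    return before.ale_corrected - after.ale_corrected - annual_cost


# Hypothetical figures: the countermeasure lowers the ARO from 0.5 to 0.1.
before = RiskFactor(asset_value=500_000, exposure_factor=0.4, aro=0.5)
after = RiskFactor(asset_value=500_000, exposure_factor=0.4, aro=0.1)

print(before.sle, before.ale, after.ale)
print(net_benefit(before, after, annual_cost=30_000))
```

Note that the ALE after the countermeasure is not zero in this sketch, which matches the caution in the steps that the ARO may remain above zero after implementation.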

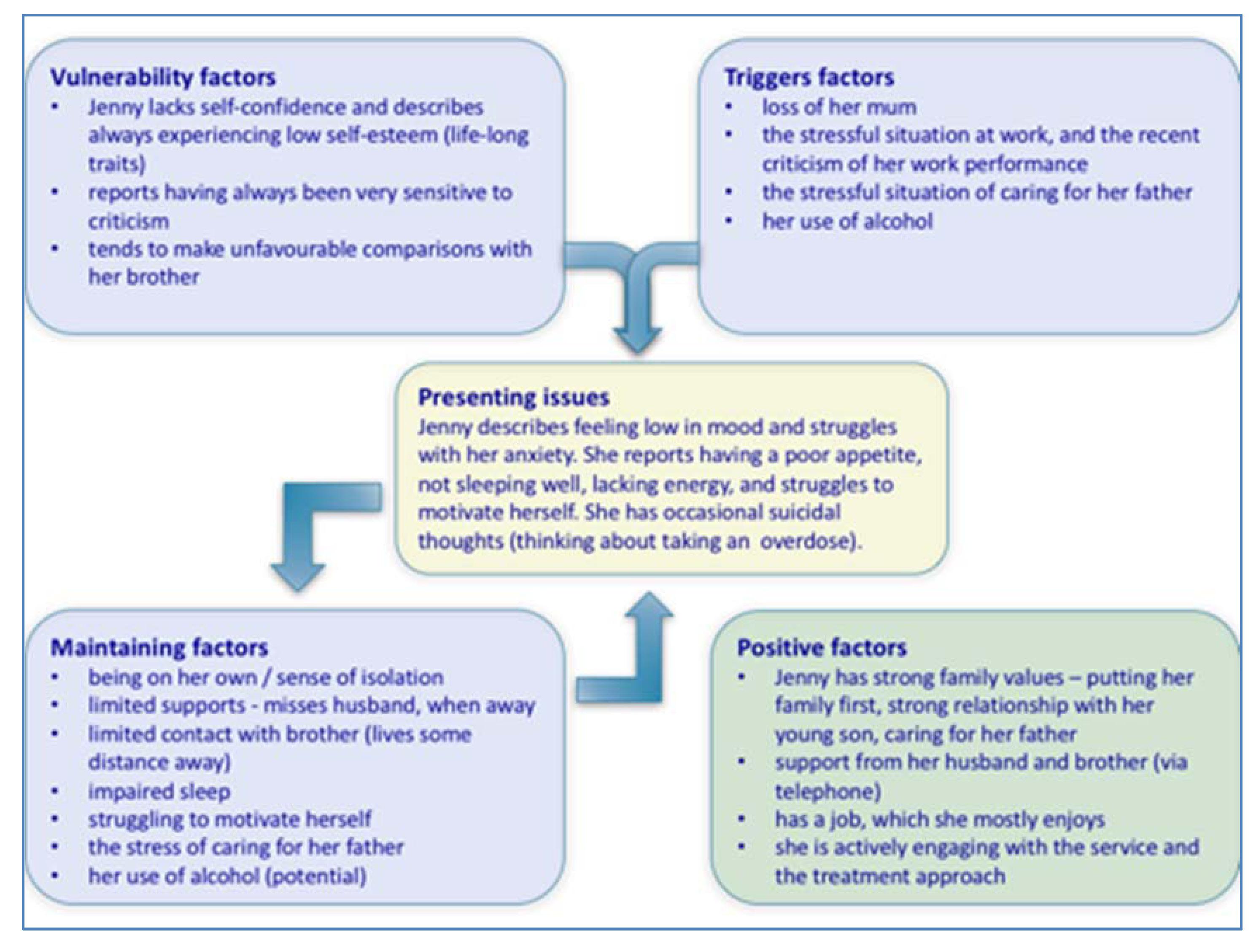

Using both approaches can improve process efficiency and help achieve desired security levels. In the risk assessment process, it is relatively easy to determine whether to use a quantitative or a qualitative approach. Qualitative risk assessment is quick to implement due to the lack of mathematical dependence and measurements and can be performed easily. Organizations also benefit from employees who are experienced in the assets/processes; however, these employees may also bring biases in determining probability and impact. Overall, combining qualitative and quantitative approaches with good assessment planning and appropriate modeling may be the best alternative for a risk assessment process ( figure 2 ). 20

Qualitative risk analysis is quick but subjective. Quantitative risk analysis, on the other hand, is optional and objective and provides more detail, supporting contingency reserves and go/no-go decisions, but it takes more time and is more complex. Quantitative data are difficult to collect, and quality data are prohibitively expensive. Although the mathematical operations applied to quantitative data are reliable, the accuracy of the data is not guaranteed simply because they are numerical. Data that are difficult to collect or whose accuracy is suspect can lead to inaccurate results in terms of value. In that case, business units cannot provide successful protection or may make false risk treatment decisions and waste resources without specifying actions to reduce or eliminate risk. In the qualitative approach, subjectivity is considered part of the process and can provide more flexibility in interpretation than an assessment based on quantitative data. 21 For a quick and easy risk assessment, qualitative assessment is what 99 percent of organizations use. However, for critical security issues, it makes sense to invest time and money into quantitative risk assessment. 22 By adopting a combined approach, considering the information and time response needed, with data and knowledge available, it is possible to enhance the effectiveness and efficiency of the risk assessment process and conform to the organization’s requirements.

1 ISACA®, CRISC Review Manual, 6th Edition, USA, 2015, https://store.isaca.org/s/store#/store/browse/detail/a2S4w000004Ko8ZEAS
2 Ibid.
3 Schmittling, R.; A. Munns; “Performing a Security Risk Assessment,” ISACA® Journal, vol. 1, 2010, https://www.isaca.org/resources/isaca-journal/issues
4 Bansal; “Differentiating Quantitative Risk and Qualitative Risk Analysis,” iZenBridge, 12 February 2019, https://www.izenbridge.com/blog/differentiating-quantitative-risk-analysis-and-qualitative-risk-analysis/
5 Tan, D.; Quantitative Risk Analysis Step-By-Step, SANS Institute Information Security Reading Room, December 2020, https://www.sans.org/reading-room/whitepapers/auditing/quantitative-risk-analysis-step-by-step-849
6 Op cit Bansal
7 Hall, H.; “Evaluating Risks Using Qualitative Risk Analysis,” Project Risk Coach, https://projectriskcoach.com/evaluating-risks-using-qualitative-risk-analysis/
8 Leal, R.; “Qualitative vs. Quantitative Risk Assessments in Information Security: Differences and Similarities,” 27001 Academy, 6 March 2017, https://advisera.com/27001academy/blog/2017/03/06/qualitative-vs-quantitative-risk-assessments-in-information-security/
9 Op cit Hall
10 Goodrich, B.; “Qualitative Risk Analysis vs. Quantitative Risk Analysis,” PM Learning Solutions, https://www.pmlearningsolutions.com/blog/qualitative-risk-analysis-vs-quantitative-risk-analysis-pmp-concept-1
11 Meyer, W.; “Quantifying Risk: Measuring the Invisible,” PMI Global Congress 2015—EMEA, London, England, 10 October 2015, https://www.pmi.org/learning/library/quantitative-risk-assessment-methods-9929
12 Op cit Goodrich
13 Op cit Hall
14 Op cit Tan
15 Op cit Leal
16 Op cit Hall
17 Tierney, M.; “Quantitative Risk Analysis: Annual Loss Expectancy,” Netwrix Blog, 24 July 2020, https://blog.netwrix.com/2020/07/24/annual-loss-expectancy-and-quantitative-risk-analysis
18 Op cit Leal
19 Op cit Tan
20 Op cit Leal
21 ISACA®, Conducting an IT Security Risk Assessment, USA, 2020, https://store.isaca.org/s/store#/store/browse/detail/a2S4w000004KoZeEAK
22 Op cit Leal

Volkan Evrin, CISA, CRISC, COBIT 2019 Foundation, CDPSE, CEHv9, ISO 27001-22301-20000 LA

Has more than 20 years of professional experience in information and technology (I&T) focus areas including information systems and security, governance, risk, privacy, compliance, and audit. He has held executive roles on the management of teams and the implementation of projects such as information systems, enterprise applications, free software, in-house software development, network architectures, vulnerability analysis and penetration testing, informatics law, Internet services, and web technologies. He is also a part-time instructor at Bilkent University in Turkey; an APMG Accredited Trainer for CISA, CRISC and COBIT 2019 Foundation; and a trainer for other I&T-related subjects. He can be reached at [email protected] .


- Open access

- Published: 08 April 2024

A case study on the relationship between risk assessment of scientific research projects and related factors under the Naive Bayesian algorithm

- Xuying Dong 1 &

- Wanlin Qiu 1

Scientific Reports volume 14, Article number: 8244 (2024)


- Computer science

- Mathematics and computing

This paper delves into the nuanced dynamics influencing the outcomes of risk assessment (RA) in scientific research projects (SRPs), employing the Naive Bayes algorithm. The methodology involves the selection of diverse SRP cases, gathering data encompassing project scale, budget investment, team experience, and other pertinent factors. The paper advances the application of the Naive Bayes algorithm by introducing enhancements, specifically integrating the Tree-augmented Naive Bayes (TANB) model. This augmentation serves to estimate risk probabilities for different research projects, shedding light on the intricate interplay and contributions of various factors to the RA process. The findings underscore the efficacy of the TANB algorithm, demonstrating commendable accuracy (average accuracy 89.2%) in RA for SRPs. Notably, budget investment (regression coefficient: 0.68, P < 0.05) and team experience (regression coefficient: 0.51, P < 0.05) emerge as significant determinants of RA outcomes. Conversely, the impact of project size (regression coefficient: 0.31, P < 0.05) is relatively modest. This paper furnishes a concrete reference framework for project managers, facilitating informed decision-making in SRPs. By comprehensively analyzing the influence of various factors on RA, the paper not only contributes empirical insights to project decision-making but also elucidates the intricate relationships between different factors. The research advocates for heightened attention to budget investment and team experience when formulating risk management strategies. This strategic focus is posited to enhance the precision of RAs and the scientific foundation of decision-making processes.


Introduction

Scientific research projects (SRPs) stand as pivotal drivers of technological advancement and societal progress in the contemporary landscape 1 , 2 , 3 . The dynamism of SRP success hinges on a multitude of internal and external factors 4 . Central to effective project management, Risk assessment (RA) in SRPs plays a critical role in identifying and quantifying potential risks. This process not only aids project managers in formulating strategic decision-making approaches but also enhances the overall success rate and benefits of projects. In a recent contribution, Salahuddin 5 provides essential numerical techniques indispensable for conducting RAs in SRPs. Building on this foundation, Awais and Salahuddin 6 delve into the assessment of risk factors within SRPs, notably introducing the consideration of activation energy through an exploration of the radioactive magnetohydrodynamic model. Further expanding the scope, Awais and Salahuddin 7 undertake a study on the natural convection of coupled stress fluids. However, RA of SRPs confronts a myriad of challenges, underscoring the critical need for novel methodologies 8 . Primarily, the intricate nature of SRPs renders precise RA exceptionally complex and challenging. The project’s multifaceted dimensions, encompassing technology, resources, and personnel, are intricately interwoven, posing a formidable challenge for traditional assessment methods to comprehensively capture all potential risks 9 . Furthermore, the intricate and diverse interdependencies among various project factors contribute to the complexity of these relationships, thereby limiting the efficacy of conventional methods 10 , 11 , 12 . Traditional approaches often focus solely on the individual impact of diverse factors, overlooking the nuanced relationships that exist between them—an inherent limitation in the realm of RA for SRPs 13 , 14 , 15 .

The pursuit of a methodology capable of effectively assessing project risks while elucidating the intricate interplay of different factors has emerged as a focal point in SRPs management 16 , 17 , 18 . This approach necessitates a holistic consideration of multiple factors, their quantification in contributing to project risks, and the revelation of their correlations. Such an approach enables project managers to more precisely predict and respond to risks. According to Marx-Stoelting et al. 19 , current approaches for the assessment of environmental and human health risks due to exposure to chemical substances have served their purpose reasonably well. Additionally, Awais et al. 20 highlight the significance of enthalpy changes in SRPs risk considerations, while Awais et al. 21 delve into the comprehensive exploration of risk factors in Eyring-Powell fluid flow in magnetohydrodynamics, particularly addressing viscous dissipation and activation energy effects. The Naive Bayesian algorithm, recognized for its prowess in probability and statistics, has yielded substantial results in information retrieval and data mining in recent years 22 . Leveraging its advantages in classification and probability estimation, the algorithm presents a novel approach for RA of SRPs 23 . Integrating probability analysis into RA enables a more precise estimation of project risks by utilizing existing project data and harnessing the capabilities of the Naive Bayesian algorithm. This method facilitates a quantitative, statistical analysis of various factors, effectively navigating the intricate relationships between them, thereby enhancing the comprehensiveness and accuracy of RA for SRPs.

This paper seeks to employ the Naive Bayesian algorithm to estimate the probability of risks by carefully selecting distinct research project cases and analyzing multidimensional data, encompassing project scale, budget investment, and team experience. Concurrently, Multiple Linear Regression (MLR) analysis is applied to quantify the influence of these factors on the assessment results. The paper places particular emphasis on exploring the intricate interrelationships between different factors, aiming to provide a more specific and accurate reference framework for decision-making in SRPs management.
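To make the idea of estimating risk probabilities with Naive Bayes concrete, here is a minimal sketch of a plain categorical Naive Bayes classifier with Laplace smoothing. It is not the paper's TANB model and does not reproduce its data or results: the functions `train` and `predict_proba`, the feature values and the six training cases are all hypothetical.

```python
import math
from collections import Counter, defaultdict


def train(rows, labels, alpha=1.0):
    """Count class and per-feature value frequencies for categorical data."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (feature index, label) -> value counts
    values = defaultdict(set)              # feature index -> distinct values seen
    for row, label in zip(rows, labels):
        for j, value in enumerate(row):
            feature_counts[(j, label)][value] += 1
            values[j].add(value)
    return class_counts, feature_counts, values, alpha


def predict_proba(model, row):
    """Return P(label | row), assuming features are independent given the label."""
    class_counts, feature_counts, values, alpha = model
    total = sum(class_counts.values())
    log_scores = {}
    for label, n in class_counts.items():
        score = math.log(n / total)  # log prior
        for j, value in enumerate(row):
            count = feature_counts[(j, label)][value]
            # Laplace-smoothed conditional probability of this feature value
            score += math.log((count + alpha) / (n + alpha * len(values[j])))
        log_scores[label] = score
    # Normalize in a numerically stable way.
    top = max(log_scores.values())
    exp_scores = {k: math.exp(s - top) for k, s in log_scores.items()}
    z = sum(exp_scores.values())
    return {k: v / z for k, v in exp_scores.items()}


# Hypothetical training cases: (budget investment, team experience) -> risk label
rows = [("low", "junior"), ("low", "senior"), ("high", "junior"),
        ("high", "senior"), ("low", "junior"), ("high", "senior")]
labels = ["high_risk", "high_risk", "high_risk",
          "low_risk", "high_risk", "low_risk"]

model = train(rows, labels)
probs = predict_proba(model, ("high", "senior"))
print(max(probs, key=probs.get), probs)
```

A tree-augmented variant would relax the independence assumption by letting each feature depend on one other feature in addition to the class; that extension is beyond this sketch.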

This paper introduces several innovations and contributions to the field of RA for SRPs:

Comprehensive Consideration of Key Factors: Unlike traditional research that focuses on a single factor, this paper comprehensively considers multiple key factors, such as project size, budget investment, and team experience. This holistic analysis enhances the realism and thoroughness of RA for SRPs.

Introduction of Tree-Enhanced Naive Bayes Model: The naive Bayes algorithm is introduced and further improved through the proposal of a tree-enhanced naive Bayes model. This algorithm exhibits unique advantages in handling uncertainty and complexity, thereby enhancing its applicability and accuracy in the RA of scientific and technological projects.

Empirical Validation: The effectiveness of the proposed method is not only discussed theoretically but also validated through empirical cases. The analysis of actual cases provides practical support and verification, enhancing the credibility of the research results.

Application of MLR Analysis: The paper employs MLR analysis to delve into the impact of various factors on RA. This quantitative analysis method adds specificity and operability to the research, offering a practical decision-making basis for scientific and technological project management.

Discovery of New Connections and Interactions: The paper uncovers novel connections and interactions, such as the compensatory role of team experience for budget-related risks and the impact of the interaction between project size and budget investment on RA results. These insights provide new perspectives for decision-making in technology projects, contributing significantly to the field of RA for SRPs in terms of both importance and practical value.

The paper is structured as follows: “ Introduction ” briefly outlines the significance of RA for SRPs. Existing challenges within current research are addressed, and the paper’s core objectives are elucidated. A distinct emphasis is placed on the innovative aspects of this research compared to similar studies. The organizational structure of the paper is succinctly introduced, providing a brief overview of each section’s content. “ Literature review ” provides a comprehensive review of relevant theories and methodologies in the domain of RA for SRPs. The current research landscape is systematically examined, highlighting the existing status and potential gaps. Shortcomings in previous research are analyzed, laying the groundwork for the paper’s motivation and unique contributions. “ Research methodology ” delves into the detailed methodologies employed in the paper, encompassing data collection, screening criteria, preprocessing steps, and more. The tree-enhanced naive Bayes model is introduced, elucidating specific steps and the purpose behind MLR analysis. “ Results and discussion ” unfolds the results and discussions based on selected empirical cases. The representativeness and diversity of these cases are expounded upon. An in-depth analysis of each factor’s impact and interaction in the context of RA is presented, offering valuable insights. “ Discussion ” succinctly summarizes the entire research endeavor. Potential directions for further research and suggestions for improvement are proposed, providing a thoughtful conclusion to the paper.

Literature review

A review of RA for SRPs

In recent years, the advancement of SRPs management has led to the evolution of various RA methods tailored for SRPs. The escalating complexity of these projects poses a challenge for traditional methods, often falling short in comprehensively considering the intricate interplay among multiple factors and yielding incomplete assessment outcomes. Scholars, recognizing the pivotal role of factors such as project scale, budget investment, and team experience in influencing project risks, have endeavored to explore these dynamics from diverse perspectives. Siyal et al. 24 pioneered the development and testing of a model geared towards detecting SRPs risks. Chen et al. 25 underscored the significance of visual management in SRPs risk management, emphasizing its importance in understanding and mitigating project risks. Zhao et al. 26 introduced a classic approach based on cumulative prospect theory, offering an optional method to elucidate researchers’ psychological behaviors. Their study demonstrated the enhanced rationality achieved by utilizing the entropy weight method to derive attribute weight information under Pythagorean fuzzy sets. This approach was then applied to RA for SRPs, showcasing a model grounded in the proposed methodology. Suresh and Dillibabu 27 proposed an innovative hybrid fuzzy-based machine learning mechanism tailored for RA in software projects. This hybrid scheme facilitated the identification and ranking of major software project risks, thereby supporting decision-making throughout the software project lifecycle. Akhavan et al. 28 introduced a Bayesian network modeling framework adept at capturing project risks by calculating the uncertainty of project net present value. This model provided an effective means for analyzing risk scenarios and their impact on project success, particularly applicable in evaluating risks for innovative projects that had undergone feasibility studies.

A review of factors affecting SRPs

Within the realm of SRPs management, the assessment and proficient management of project risks stand as imperative components. Consequently, a range of studies has been conducted to explore diverse methods and models aimed at enhancing the comprehension and decision support associated with project risks. Guan et al. 29 introduced a new risk interdependence network model based on Monte Carlo simulation to support decision-makers in more effectively assessing project risks and planning risk management actions. They integrated interpretive structural modeling methods into the model to develop a hierarchical project risk interdependence network based on identified risks and their causal relationships. Vujović et al. 30 provided a new method for research in project management through careful analysis of risk management in SRPs. To confirm the hypothesis, the study focused on educational organizations and outlined specific project management solutions in business systems, thereby improving the business and achieving positive business outcomes. Muñoz-La Rivera et al. 31 described and classified the 100 identified factors based on the dimensions and aspects of the project, assessed their impact, and determined whether they were shaping or directly affecting the occurrence of research project accidents. These factors and their descriptions and classifications made significant contributions to improving the security creation of the system and generating training and awareness materials, fostering the development of a robust security culture within organizations. Nguyen et al. concentrated on the pivotal risk factors inherent in design-build projects within the construction industry. Effective identification and management of these factors enhance project success and foster confidence among owners and contractors in adopting the design-build approach 32 . Their study offers valuable insights into RA in project management and the adoption of new contract forms.
Nguyen and Le delineated risk factors influencing the quality of 20 civil engineering projects during the construction phase 33 . The top five risks identified encompass poor raw material quality, insufficient worker skills, deficient design documents and drawings, geographical challenges at construction sites, and inadequate capabilities of main contractors and subcontractors. Meanwhile, Nguyen and Phu Pham concentrated on office building projects in Ho Chi Minh City, Vietnam, to pinpoint key risk factors during the construction phase 34 . These factors were classified into five groups based on their likelihood and impact: financial, management, schedule, construction, and environmental. Findings revealed that critical factors affecting office building projects encompassed both natural elements (e.g., prolonged rainfall, storms, and climate impacts) and human factors (e.g., unstable soil, safety behavior, owner-initiated design changes), with schedule-related risks exerting the most significant influence during the construction phase of Ho Chi Minh City’s office building projects. This provides construction and project management practitioners with fresh insights into risk management, aiding in the comprehensive identification, mitigation, and management of risk factors in office building projects.

While existing research has made notable strides in RA for SRPs, certain limitations persist. These studies are limited in quantifying the degree of influence of various factors and in analyzing their interrelationships, and thus fall short of offering specific and actionable recommendations. Traditional methods struggle to precisely quantify risk degrees and often overlook the intricate interplay among multiple factors. Consequently, there is an urgent need for a comprehensive method capable of quantifying the impact of diverse factors and revealing their correlations. In response, this paper introduces the TANB model and exploits the advantages of this algorithm in the RA of scientific and technological projects. Tailored to address the characteristics of uncertainty and complexity, the model is intended to improve both applicability and accuracy. In comparison with traditional methods, the TANB model exhibits greater flexibility and a heightened ability to capture dependencies between features, thereby elevating the overall performance of RA. This method provides a more reliable tool for scientific and technological project management, furnishing decision-makers with more comprehensive and accurate support for RA.

Research methodology

This paper centers on the latest iteration of ISO 31000, delving into the project risk management process and scrutinizing the RA for SRPs and their intricate interplay with associated factors. ISO 31000, an international risk management standard, endeavors to furnish businesses, organizations, and individuals with a standardized set of risk management principles and guidelines, defining best practices and establishing a common framework. The paper unfolds in distinct phases aligned with ISO 31000:

Risk Identification: Employing data collection and preparation, a spectrum of factors related to project size, budget investment, team member experience, project duration, and technical difficulty were identified.

RA: Utilizing the Naive Bayes algorithm, the paper conducts RA for SRPs, estimating the probability distribution of various factors influencing RA results.

Risk Response: The application of the Naive Bayes model is positioned as a means to respond to risks, facilitating the formulation of apt risk response strategies based on calculated probabilities.

Monitoring and Control: Through meticulous data collection, model training, and verification, the paper illustrates the steps involved in monitoring and controlling both data and models. Regular monitoring of identified risks and responses allows for adjustments when necessary.

Communication and Reporting: Maintaining effective communication throughout the project lifecycle ensures that stakeholders comprehend the status of project risks. Transparent reporting on discussions and outcomes contributes to an informed project environment.

Data collection and preparation

In this paper, a meticulous approach is undertaken to select representative research project cases, adhering to stringent screening criteria. Additionally, a thorough review of existing literature is conducted and tailored to the practical requirements of SRPs management. According to Nguyen et al., these factors play a pivotal role in influencing the RA outcomes of SRPs 35 . Furthermore, research by He et al. underscored the significant impact of team members’ experience on project success 36 . Therefore, in alignment with our research objectives and supported by the literature, this paper identifies variables such as project scale, budget investment, team member experience, project duration, and technical difficulty as the focal themes. To ensure the universality and scientific rigor of our findings, the paper adheres to stringent selection criteria during the project case selection process. After preliminary screening of SRPs completed in the past 5 years, considering factors such as project diversity, implementation scales, and achieved outcomes, five representative projects spanning diverse fields, including engineering, medicine, and information technology, are ultimately selected. These project cases are chosen based on their capacity to represent various scales and types of SRPs, each possessing a typical risk management process, thereby offering robust and comprehensive data support for our study. The subsequent phase involves detailed data collection on each chosen project, encompassing diverse dimensions such as project scale, budget investment, team member experience, project cycle, and technical difficulty. The collected data undergo meticulous preprocessing to ensure data quality and reliability. The preprocessing steps comprised data cleaning, addressing missing values, handling outliers, culminating in the creation of a self-constructed dataset. 
The dataset encompasses over 500 SRPs across diverse disciplines and fields, ensuring statistically significant and universal outcomes. Particular emphasis is placed on ensuring dataset diversity, incorporating projects of varying scales, budgets, and team experience levels. This comprehensive coverage ensures the representativeness and credibility of the study on RA in SRPs. New influencing factors are introduced to expand the research scope, including project management quality (such as time management and communication efficiency), historical success rate, industry dynamics, and market demand. Detailed definitions and quantifications are provided for each new variable to facilitate comprehensive data processing and analysis. For project management quality, consideration is given to time management accuracy and communication frequency and quality among team members. Historical success rate is determined by reviewing past project records and outcomes. Industry dynamics are assessed by consulting the latest scientific literature and patent information. Market demand is gauged through market research and user demand surveys. The introduction of these variables enriches the understanding of RA in SRPs and opens up avenues for further research exploration.

At the same time, the collected data are integrated and coded in order to apply the Naive Bayes algorithm and MLR analysis. For cases involving qualitative data, this paper uses appropriate coding methods to convert them into quantitative data for processing in the model. For example, for the qualitative feature of team member experience, numerical values are used to represent different experience levels, such as 0 representing beginners, 1 representing intermediate, and 2 representing advanced. The following is a specific sample data set example (Table 1 ). It shows the processed structured data, and the values in the table represent the specific characteristics of each project.

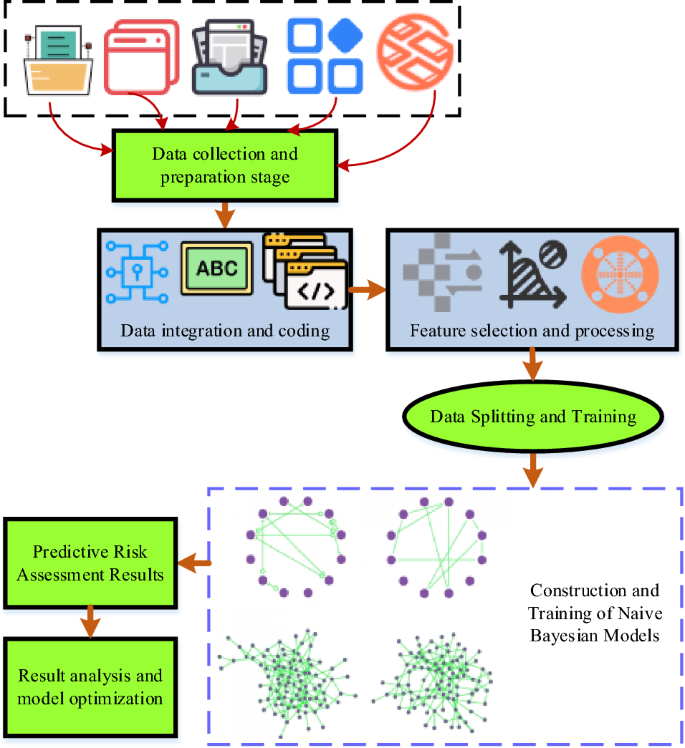
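The coding step described above can be sketched in a few lines. This is only an illustration: the label strings, the record layout, and the function names are hypothetical, and the sketch assumes the codes 0, 1, and 2 for beginner, intermediate, and advanced experience.

```python
# Hypothetical ordinal coding for a qualitative feature. The level names and
# the record layout are illustrative and are not taken from the paper's dataset.
EXPERIENCE_CODES = {"beginner": 0, "intermediate": 1, "advanced": 2}


def encode_experience(level: str) -> int:
    """Map a qualitative experience label to its ordinal code."""
    key = level.strip().lower()
    if key not in EXPERIENCE_CODES:
        raise ValueError(f"unknown experience level: {level!r}")
    return EXPERIENCE_CODES[key]


def encode_projects(projects):
    """Return copies of the project records with experience coded numerically."""
    return [
        {**p, "team_experience": encode_experience(p["team_experience"])}
        for p in projects
    ]


# Made-up records, used only to exercise the functions above.
raw = [
    {"project": "P1", "team_experience": "Beginner"},
    {"project": "P2", "team_experience": "advanced"},
]
encoded = encode_projects(raw)
print(encoded)
```

An ordinal code keeps the ordering of the levels, which suits a feature such as experience; an unordered qualitative feature would need a different scheme, such as one indicator column per category.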

Establishment of naive Bayesian model

The Naive Bayesian algorithm, a probabilistic and statistical classification method renowned for its effectiveness in analyzing and predicting multi-dimensional data, is employed in this paper to conduct the RA for SRPs. The application of the Naive Bayesian algorithm to RA for SRPs aims to discern the influence of various factors on the outcomes of RA. The Naive Bayesian algorithm, depicted in Fig. 1 , operates on the principles of Bayes’ theorem, utilizing posterior probability calculations for classification tasks. The fundamental concept of this algorithm hinges on the assumption that the features are conditionally independent given the class, which is the “naive” hypothesis. In the context of RA for SRPs, the Naive Bayesian algorithm is instrumental in estimating the probability distribution of diverse factors affecting the RA results, thereby enhancing the precision of risk estimates. In the Naive Bayesian model, the initial step is to compute, for each factor, its conditional probability under each RA result; these are combined with the prior probability of each result to obtain the posterior probabilities. Subsequently, the category with the highest posterior probability is selected as the predictive outcome.

Naive Bayesian algorithm process.

In Fig. 1 , the data collection process encompasses vital project details such as project scale, budget investment, team member experience, project cycle, technical difficulty, and RA results. This meticulous collection ensures the integrity and precision of the dataset. Subsequently, the gathered data undergoes integration and encoding to convert qualitative data into quantitative form, facilitating model processing and analysis. Tailored to specific requirements, relevant features are chosen for model construction, accompanied by essential preprocessing steps like standardization and normalization. The dataset is then partitioned into training and testing sets, with the model trained on the former and its performance verified on the latter. Leveraging the training data, a Naive Bayesian model is developed to estimate probability distribution parameters for various features across distinct categories. Ultimately, the trained model is employed to predict new project features, yielding RA results.
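The partitioning and standardization steps can be sketched as follows. This is a minimal illustration on made-up feature rows, not the paper's pipeline; the function names, the 20% test fraction, and the random seed are assumptions. The scaling statistics are taken from the training rows only, so that no information from the test set leaks into training.

```python
import random
import statistics


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle the rows reproducibly and split them into training and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_scaler(rows):
    """Per-feature mean and population standard deviation of the given rows."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # A constant column has zero spread; fall back to 1.0 to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds


def standardize(rows, means, stds):
    """Apply z-score scaling with previously fitted means and standard deviations."""
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


# Made-up rows: (budget in arbitrary units, project cycle in months).
features = [
    [120.0, 12.0], [80.0, 6.0], [200.0, 24.0], [150.0, 18.0], [95.0, 9.0],
    [60.0, 6.0], [175.0, 20.0], [110.0, 12.0], [130.0, 15.0], [90.0, 8.0],
]
train, test = train_test_split(features)
means, stds = fit_scaler(train)  # statistics come from the training rows only
train_z = standardize(train, means, stds)
test_z = standardize(test, means, stds)
print(len(train_z), len(test_z))
```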

Naive Bayesian models, in this context, are deployed to forecast diverse project risk levels. Let X symbolize the feature vector, encompassing project scale, budget investment, team member experience, project cycle, and technical difficulty. The objective is to predict the project’s risk level, denoted as Y. Y assumes discrete values representing distinct risk levels. Applying Bayes’ theorem, the posterior probability P(Y|X) is computed, signifying the probability distribution of projects falling into different risk levels given the feature vector X. The fundamental equation governing the Naive Bayesian model is expressed as:

\[ P(Y \mid X) = \frac{P(X \mid Y)\,P(Y)}{P(X)} \tag{1} \]

In Eq. ( 1 ), P(Y|X) represents the posterior probability, denoting the likelihood of the project belonging to a specific risk level. P(X|Y) signifies the class conditional probability, portraying the likelihood of the feature vector X occurring under known risk level conditions. P(Y) is the prior probability, reflecting the antecedent likelihood of the project pertaining to a particular risk level. P(X) acts as the evidence factor, encapsulating the likelihood of the feature vector X occurring.
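A small numerical example may make the roles of the prior, the class conditional probability, and the evidence factor concrete. The probabilities below are invented for illustration and do not come from the paper's data; the function name is likewise hypothetical.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' theorem: P(Y|X) = P(X|Y) * P(Y) / P(X)."""
    joint = {y: likelihoods[y] * priors[y] for y in priors}
    evidence = sum(joint.values())  # P(X), by the law of total probability
    return {y: joint[y] / evidence for y in joint}


# Invented numbers: prior share of each risk level, and the probability of
# observing one particular feature vector X under each level.
priors = {"low": 0.5, "medium": 0.3, "high": 0.2}
likelihoods = {"low": 0.1, "medium": 0.4, "high": 0.7}

post = posterior(priors, likelihoods)
predicted = max(post, key=post.get)
print(post, predicted)
```

In this example the high risk level has the smallest prior but the largest posterior, because the observed feature vector is much more likely under that level.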

The Naive Bayes model, serving as the most elementary Bayesian network classifier, operates under the assumption of attribute independence given the class label c , as expressed in Eq. ( 2 ):

\[ P(x_{1}, \ldots, x_{d} \mid c) = \prod_{i=1}^{d} P(x_{i} \mid c) \tag{2} \]

The classification decision formula for Naive Bayes is articulated in Eq. ( 3 ):

\[ c^{*} = \arg\max_{c} \; P(c) \prod_{i=1}^{d} P(x_{i} \mid c) \tag{3} \]

The Naive Bayes model, rooted in the assumption of conditional independence among attributes, often encounters deviations from reality. To address this limitation, the Tree-Augmented Naive Bayes (TANB) model relaxes the independence assumption by incorporating a first-order dependency maximum-weight spanning tree. TANB introduces a tree structure that more comprehensively models relationships between features, easing the constraints of the independence assumption and concurrently mitigating issues associated with multicollinearity. This extension bolsters its efficacy in handling intricate real-world data scenarios. TANB employs conditional mutual information \(I(X_{i} ;X_{j} |C)\) to gauge the dependency between attributes \(X_{j}\) and \(X_{i}\) , thereby constructing the maximum weighted spanning tree. In TANB, any attribute variable \(X_{i}\) is permitted to have at most one other attribute variable as its parent node, expressed as \(Pa\left( {X_{i} } \right) \le 2\) . The joint probability \(P_{con} \left( {x,c} \right)\) undergoes transformation using Eq. ( 4 ):

\[ P_{con}\left( x, c \right) = P(c)\, P(x_{r} \mid c) \prod_{i \ne r} P\left( x_{i} \mid c, Pa(x_{i}) \right) \tag{4} \]

In Eq. ( 4 ), \(x_{r}\) refers to the root node, which can be expressed as Eq. ( 5 ):

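The TANB classification decision equation is presented below:

\[ c^{*} = \arg\max_{c} \; P(c)\, P(x_{r} \mid c) \prod_{i \ne r} P\left( x_{i} \mid c, Pa(x_{i}) \right) \tag{6} \]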

In the RA of SRPs, normal distribution parameters, such as mean (μ) and standard deviation (σ), are estimated for each characteristic dimension (project scale, budget investment, team member experience, project cycle, and technical difficulty). This estimation allows the calculation of posterior probabilities for projects belonging to different risk levels under given feature vector conditions. For each feature dimension \(X_{i}\) , the mean \(\mu_{i,j}\) and standard deviation \(\sigma_{i,j}\) under each risk level are computed, where i represents the feature dimension, and j denotes the risk level. Parameter estimation employs the maximum likelihood method, and the specific calculations are as follows.

\[ \mu_{i,j} = \frac{1}{N_{j}} \sum_{k=1}^{N_{j}} x_{i,k} \tag{7} \]

\[ \sigma_{i,j} = \sqrt{ \frac{1}{N_{j}} \sum_{k=1}^{N_{j}} \left( x_{i,k} - \mu_{i,j} \right)^{2} } \tag{8} \]

In Eqs. ( 7 ) and ( 8 ), \(N_{j}\) represents the number of projects belonging to risk level j , and the sums run over those projects. \(x_{i,k}\) denotes the value of the k -th item in the feature dimension i . Finally, under a given feature vector, the posterior probability of a project with risk level j is calculated as Eq. ( 9 ):

\[ P(Y=j \mid X) = \frac{1}{Z}\, P(Y=j) \prod_{i=1}^{d} P(X_{i} \mid Y=j) \tag{9} \]

In Eq. ( 9 ), d represents the number of feature dimensions, and Z is the normalization factor. \(P(Y=j)\) represents the prior probability of category j . \(P({X}_{i}\mid Y=j)\) represents the normal distribution probability density function of feature dimension i under category j . The risk level of a project can be predicted by calculating the posterior probabilities of different risk levels to achieve RA for SRPs.
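The parameter estimation and posterior calculation described above can be sketched in plain Python. This is a minimal illustration on made-up data rather than the paper's implementation: the function names, the toy feature values, and the small floor `min_std` on the standard deviation (added to avoid division by zero) are all assumptions.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate priors and per-class feature means and standard deviations
    by maximum likelihood (the standard deviation divides by N_j, not N_j - 1)."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        stds = [
            math.sqrt(sum((v - m) ** 2 for v in col) / n)
            for col, m in zip(columns, means)
        ]
        model[label] = {"prior": n / len(X), "means": means, "stds": stds}
    return model


def normal_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and standard deviation."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def predict_proba(model, x, min_std=1e-6):
    """Posterior over risk levels: prior times product of densities, divided by Z."""
    unnormalized = {}
    for label, params in model.items():
        score = params["prior"]
        for xi, m, s in zip(x, params["means"], params["stds"]):
            score *= normal_pdf(xi, m, max(s, min_std))  # min_std is an assumed floor
        unnormalized[label] = score
    z = sum(unnormalized.values())  # normalization factor Z
    return {label: s / z for label, s in unnormalized.items()}


# Made-up data: two features per project and two risk levels.
X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [3.0, 4.0], [3.2, 3.8], [2.8, 4.2]]
y = ["low", "low", "low", "high", "high", "high"]
model = fit_gaussian_nb(X, y)
proba = predict_proba(model, [1.1, 2.1])
print(max(proba, key=proba.get), proba)
```

With many features, the product of densities can underflow; summing log-densities instead of multiplying densities is a common remedy that leaves the predicted class unchanged.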

This paper integrates the probability estimation of the Naive Bayes model with actual project risk response strategies, enabling a more flexible and targeted response to various risk scenarios. Such integration offers decision support to project managers, enhancing their ability to address potential challenges effectively and ultimately improving the overall success rate of the project. This underscores the notion that risk management is not solely about problem prevention but stands as a pivotal factor contributing to project success.

MLR analysis

MLR analysis is used to validate the hypothesis to deeply explore the impact of various factors on RA of SRPs. Based on the previous research status, the following research hypotheses are proposed.

Hypothesis 1: There is a positive relationship among project scale, budget investment, and team member experience and RA results. As the project scale, budget investment, and team member experience increase, the RA results also increase.

Hypothesis 2: There is a negative relationship between the project cycle and the RA results. Projects with shorter cycles may have higher RA results.

Hypothesis 3: There is a complex relationship between technical difficulty and RA results, which may be positive, negative, or bidirectional in some cases.

Based on these hypotheses, an MLR model is established to analyze the impact of factors, such as project scale, budget investment, team member experience, project cycle, and technical difficulty, on RA results. The form of an MLR model is as follows.

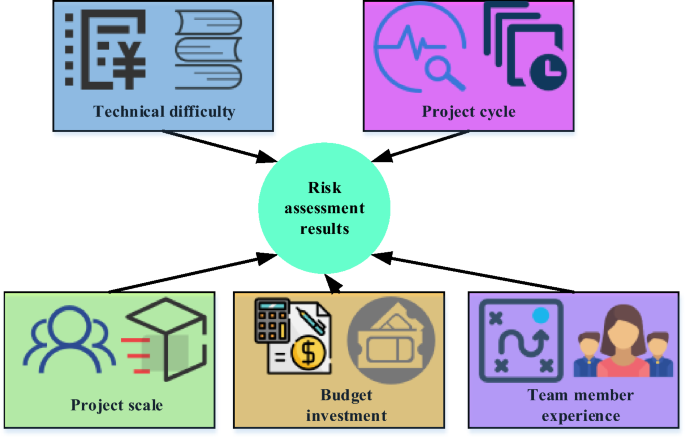

In Eq. ( 10 ), Y represents the RA result (dependent variable). \({X}_{1}\) to \({X}_{5}\) represent factors, such as project scale, budget investment, team member experience, project cycle, and technical difficulty (independent variables). \({\beta }_{0}\) to \({\beta }_{5}\) are the regression coefficients, which represent the impact of various factors on the RA results. \(\epsilon\) represents a random error term. The model structure is shown in Fig. 2 .

Schematic diagram of an MLR model.

In Fig. 2 , the MLR model is employed to scrutinize the influence of various independent variables on the outcomes of RA. In this specific context, the independent variables encompass project scale, budget investment, team member experience, project cycle, and technical difficulty, all presumed to impact the project’s RA results. Each independent variable is denoted as a node in the model, with arrows depicting the relationships between these factors. In an MLR model, the arrow direction indicates the assumed direction of influence from an independent variable to the dependent variable; the regression itself estimates association and does not by itself establish causality.

When conducting MLR analysis, it is necessary to estimate the parameters \(\beta\) in the regression model. These parameters determine the relationship between the independent and dependent variables. Here, the Ordinary Least Squares (OLS) method is applied to estimate these parameters. The OLS method is a commonly used parameter estimation method aimed at finding parameter values that minimize the sum of squared residuals between model predictions and actual observations. The steps are as follows. Firstly, based on the general form of an MLR model, it is assumed that there is a linear relationship between the independent and dependent variables. It can be represented by a linear equation, which includes regression coefficients \(\beta\) and the independent variables X. For each observation value, the difference between its actual and predicted values is calculated, which is called the residual. Residual \(e_{i}\) can be expressed as:

\[ e_{i} = Y_{i} - \widehat{Y}_{i} \tag{11} \]

In Eq. ( 11 ), \(Y_{i}\) is the actual observation value, and \(\widehat{Y}_{i}\) is the value predicted by the model. The goal of the OLS method is to adjust the regression coefficients \(\beta\) to minimize the sum of squared residuals of all observations. This can be achieved by solving an optimization problem in which the objective function is the sum of squared residuals over the n observations:

\[ \min_{\beta} \sum_{i=1}^{n} e_{i}^{2} = \min_{\beta} \sum_{i=1}^{n} \left( Y_{i} - \widehat{Y}_{i} \right)^{2} \tag{12} \]

Then, the estimated value of the regression coefficient \(\beta\) that minimizes the sum of squared residuals can be obtained by taking the derivative of the objective function with respect to \(\beta\) and setting it to zero. The estimated values of the parameters can be obtained by solving the resulting system of equations. The final estimated regression coefficient can be expressed as:

\[ \widehat{\beta} = \left( X^{T} X \right)^{-1} X^{T} Y \tag{13} \]

In Eq. ( 13 ), X represents the independent variable matrix. Y represents the dependent variable vector. \((X^{T}X)^{-1}\) is the inverse of the matrix \(X^{T}X\) , and \(\widehat{\beta}\) is the parameter estimation vector.

Specifically, solving for the estimated value of the regression coefficient \(\beta\) requires matrix operations and statistical analysis. The collected project data are substituted into the model, and the residuals are calculated. Then, the steps of the OLS method are used to obtain the parameter estimates. These parameter estimates are used to establish an MLR model to predict RA results and further analyze the influence of different factors.

The degree of influence of different factors on the RA results can be determined by analyzing the value of the regression coefficient \(\beta\). A positive \(\beta\) value indicates that the factor has a positive impact on the RA results, while a negative \(\beta\) value indicates that the factor has a negative impact on the RA results. Additionally, hypothesis testing can determine whether each factor is significant in the RA results.
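The OLS estimate can be sketched without external libraries by forming the normal equations \(X^{T}X\beta = X^{T}Y\) and solving them by Gaussian elimination, which yields the same \(\widehat{\beta}\) as the closed-form expression without computing the inverse explicitly. The helper names and the toy data are assumptions made for illustration; the data are generated exactly from y = 1 + 2·x1 − 0.5·x2, so the recovered coefficients should match those values.

```python
def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular; the predictors are collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def ols(features, y):
    """Return [beta_0, beta_1, ...] solving the normal equations X^T X beta = X^T y."""
    X = [[1.0] + list(row) for row in features]  # prepend the intercept column
    Xt = [list(col) for col in zip(*X)]
    XtX = [[sum(a * b for a, b in zip(r, c)) for c in Xt] for r in Xt]
    Xty = [sum(a * t for a, t in zip(r, y)) for r in Xt]
    return solve(XtX, Xty)


def predict(beta, row):
    """Predicted value: beta_0 + sum of beta_i * x_i."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], row))


# Toy data generated from y = 1 + 2*x1 - 0.5*x2 with no noise.
features = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]]
y = [2.0, 4.5, 5.0, 7.5, 8.5]

beta = ols(features, y)
residuals = [t - predict(beta, row) for row, t in zip(features, y)]
ssr = sum(e ** 2 for e in residuals)  # sum of squared residuals
print(beta, ssr)
```

The sign of each recovered coefficient matches the interpretation given above: x1 has a positive effect and x2 a negative one.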

The TANB model proposed in this paper extends the traditional naive Bayes model by incorporating conditional dependencies between attributes to enhance the representation of feature interactions. While the traditional naive Bayes model assumes feature independence, real-world scenarios often involve interdependencies among features. To address this, the TANB model is introduced. The TANB model introduces a tree structure atop the naive Bayes model to more accurately model feature relationships, overcoming the limitation of assuming feature independence. Specifically, the TANB model constructs a maximum weight spanning tree to uncover conditional dependencies between features, thereby enabling the model to better capture feature interactions.
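The structure-learning step can be sketched as follows: estimate the conditional mutual information for every pair of attributes, then grow a maximum-weight spanning tree and direct its edges away from a chosen root. This is a simplified sketch under stated assumptions, not the paper's code. It assumes discrete (or already discretized) attributes, uses Prim's algorithm for the spanning tree, and the function names, the choice of attribute 0 as root, and the toy data are all hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def conditional_mutual_information(xi, xj, c):
    """Estimate I(Xi; Xj | C) from three equal-length sequences of discrete values."""
    n = len(c)
    n_ijc = Counter(zip(xi, xj, c))
    n_ic = Counter(zip(xi, c))
    n_jc = Counter(zip(xj, c))
    n_c = Counter(c)
    total = 0.0
    for (a, b, k), count in n_ijc.items():
        # P(a, b | k) / (P(a | k) * P(b | k)), written in terms of raw counts
        ratio = count * n_c[k] / (n_ic[(a, k)] * n_jc[(b, k)])
        total += (count / n) * math.log(ratio)
    return total


def tan_parents(features, c, root=0):
    """Return {attribute: attribute parent} for the maximum-weight spanning tree.

    `features` is a list of columns. The root has no attribute parent; in the
    classifier, every attribute additionally has the class as a parent.
    """
    d = len(features)
    weight = {}
    for i, j in combinations(range(d), 2):
        w = conditional_mutual_information(features[i], features[j], c)
        weight[(i, j)] = weight[(j, i)] = w
    parents = {root: None}
    in_tree = {root}
    while len(in_tree) < d:
        # Prim's algorithm: add the heaviest edge that leaves the current tree.
        u, v = max(
            ((u, v) for u in in_tree for v in range(d) if v not in in_tree),
            key=lambda edge: weight[edge],
        )
        parents[v] = u
        in_tree.add(v)
    return parents


# Toy data: attribute 1 copies attribute 0, while attribute 2 is independent
# of both within each class.
c = [0, 0, 0, 0, 1, 1, 1, 1]
f0 = [0, 0, 1, 1, 0, 0, 1, 1]
f1 = list(f0)
f2 = [0, 1, 0, 1, 0, 1, 0, 1]

parents = tan_parents([f0, f1, f2], c)
print(parents)
```

In the toy data, the strongly dependent pair (attributes 0 and 1) is linked first, which is the behavior the tree structure is meant to provide over plain Naive Bayes.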

Assessment indicators

To comprehensively assess the efficacy of the proposed TANB model in the RA for SRPs, a self-constructed dataset serves as the data source for this experimental evaluation, as outlined in Table 1 . The dataset is split into a training set (80%) and a test set (20%). The assessment indicators cover the accuracy, precision, recall rate, F1 value, and Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC) of the model. The following are the definitions and equations for each assessment indicator. Accuracy is the proportion of correctly predicted samples to the total number of samples. Precision is the proportion of correctly predicted positive samples among all Predicted Positive (PP) samples. The recall rate is the proportion of correctly predicted positive samples among the actual positive samples. The F1 value is the harmonic mean of precision and recall, balancing the precision and completeness of the model’s positive predictions. The area under the ROC curve measures the classification performance of the model, and a larger AUC value indicates better model performance. The ROC curve shows the relationship between the True Positive Rate and the False Positive Rate under different thresholds. The AUC value can be obtained by accumulating the area of each small rectangle under the ROC curve. The confusion matrix is used to display the prediction performance of the model in different categories, including True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN).

Calculating the above assessment indicators gives a comprehensive picture of the performance of TANB in RA for SRPs, including the advantages, disadvantages, and applicability of the model.
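The indicators can be computed from the confusion matrix counts as sketched below. The sketch assumes a binary setting in which one risk level (for example, high risk) is treated as the positive class; the labels, scores, and the 0.5 threshold are made up. For the AUC it uses the pairwise-ranking formulation (the share of positive and negative pairs in which the positive sample receives the higher score, with ties counted as one half), which gives the same value as the area under the empirical ROC curve.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, and FN for a binary labelling."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from the confusion matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores, positive=1):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up labels (1 = high risk) and predicted probabilities of high risk.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.7, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(*counts)
auc = roc_auc(y_true, scores)
print(counts, metrics, auc)
```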

Results and discussion

Accuracy analysis of naive bayesian algorithm.

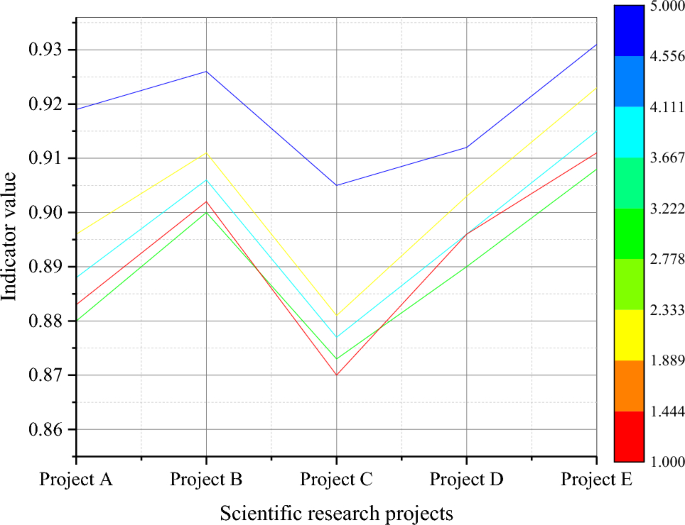

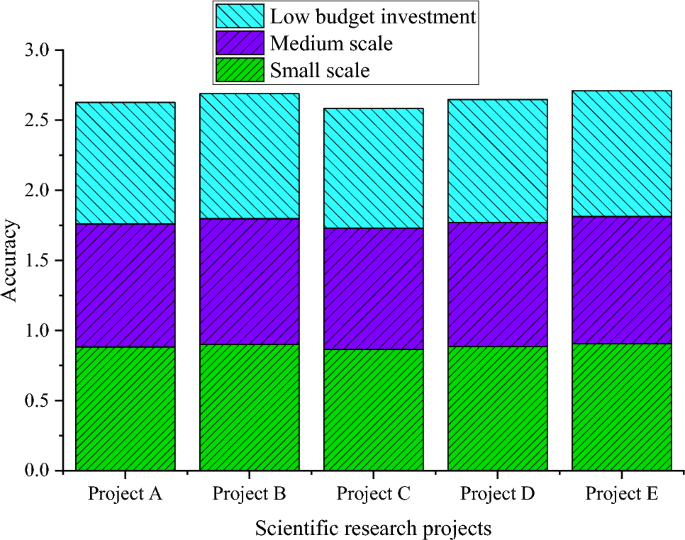

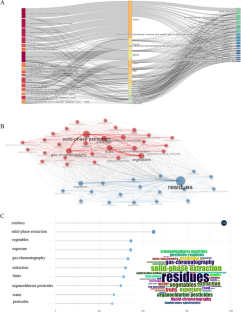

On the dataset of this paper, Fig. 3 reveals the performance of TANB algorithm under different assessment indicators.

Performance assessment of TANB algorithm on different projects.

From Fig. 3 , the TANB algorithm performs well in various projects, ranging from 0.87 to 0.911 in accuracy. This means that the overall accuracy of the model in predicting project risks is quite high. The precision also maintains a high level in various projects, ranging from 0.881 to 0.923, indicating that the model performs well in classifying high-risk categories. The recall rate ranges from 0.872 to 0.908, indicating that the model can effectively capture high-risk samples. Meanwhile, the AUC values in each project are relatively high, ranging from 0.905 to 0.931, which once again emphasizes the effectiveness of the model in risk prediction. From multiple assessment indicators, such as accuracy, precision, recall, F1 value, and AUC, the TANB algorithm has shown good risk prediction performance in representative projects. The performance assessment results of the TANB algorithm under different feature dimensions are plotted in Figs. 4 , 5 , 6 and 7 .

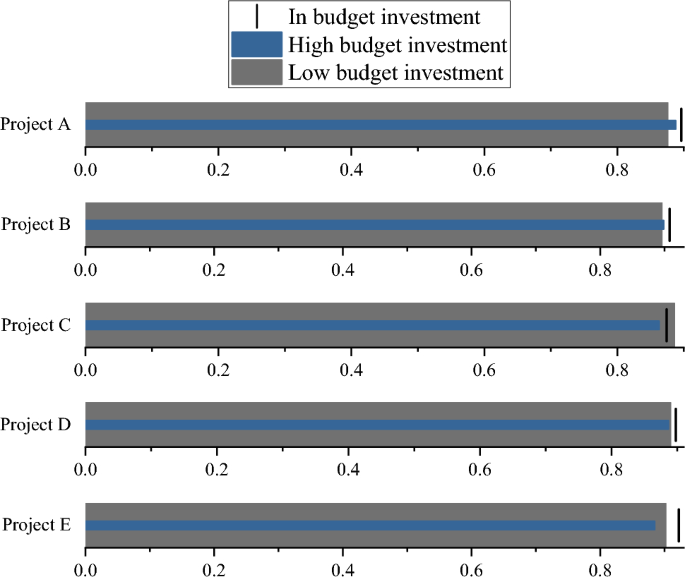

Prediction accuracy of TANB algorithm on different budget investments.

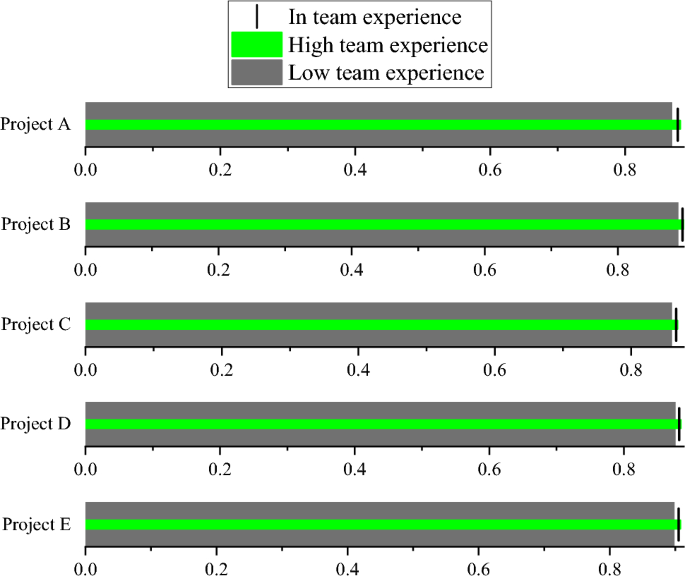

Prediction accuracy of TANB algorithm on different team experiences.

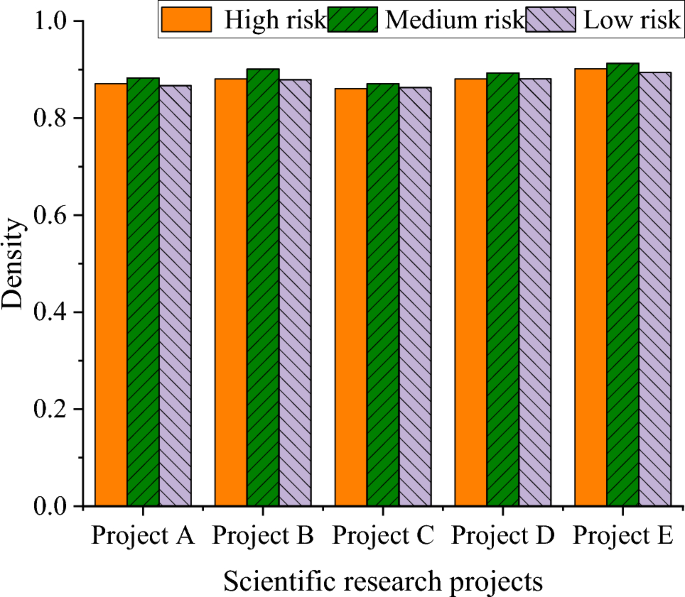

Prediction accuracy of TANB algorithm at different risk levels.

Prediction accuracy of TANB algorithm on different project scales.

From Figs. 4 , 5 , 6 and 7 , as the level of budget investment increases, the accuracy of most projects also shows an increasing trend. Especially in cases of high budget investment, the accuracy of the project is generally high. This may mean that a higher budget investment helps to reduce project risks, thereby improving the prediction accuracy of the TANB algorithm. It can be observed that team experience also affects the accuracy of the model. Projects with high team experience exhibit higher accuracy in TANB algorithms. This may indicate that experienced teams can better cope with project risks to improve the performance of the model. When budget investment and team experience are low, accuracy is relatively low. This may imply that budget investment and team experience can complement each other to affect the model performance.

There are certain differences in the accuracy of projects under different risk levels. Generally speaking, the accuracy of high-risk and medium-risk projects is relatively high, while the accuracy of low-risk projects is relatively low. This may be because high-risk and medium-risk projects require more accurate predictions, resulting in higher accuracy. Similarly, project scale also affects the performance of the model. Large-scale and medium-scale projects exhibit high accuracy in TANB algorithms, while small-scale projects have relatively low accuracy. This may be because the risks of large-scale and medium-scale projects are easier to identify and predict to promote the performance of the model. In high-risk and large-scale projects, accuracy is relatively high. This may indicate that the impact of project scale is more significant in specific risk scenarios.

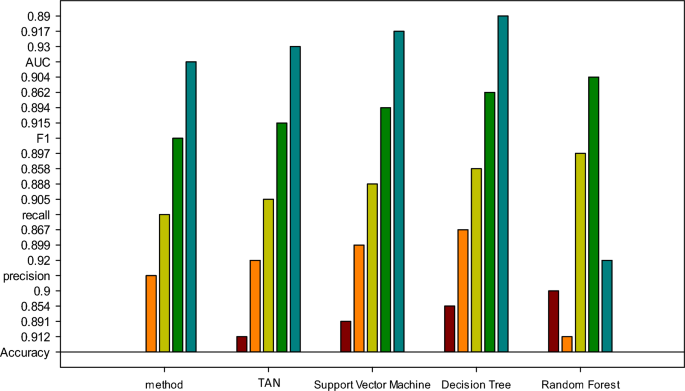

Figure 8 further compares the performance of the TANB algorithm proposed here with other similar algorithms.

Performance comparison of different algorithms in RA of SRPs.

As depicted in Fig. 8 , the TANB algorithm attains an accuracy and precision of 0.912 and 0.920, respectively, surpassing other algorithms. It excels in recall rate and F1 value, registering 0.905 and 0.915, respectively, outperforming alternative algorithms. These findings underscore the proficiency of the TANB algorithm in comprehensively identifying high-risk projects while sustaining high classification accuracy. Moreover, the algorithm achieves an AUC of 0.930, indicative of its exceptional predictive prowess in sample classification. Thus, the TANB algorithm exhibits notable potential for application, particularly in scenarios demanding the recognition and comprehensiveness requisite for high-risk project identification. The evaluation results of the TANB model in predicting project risk levels are presented in Table 2 :

Table 2 demonstrates that the TANB model surpasses the traditional Naive Bayes model across multiple evaluation metrics, including accuracy, precision, and recall. This signifies that, by accounting for feature interdependence, the TANB model can more precisely forecast project risk levels. Furthermore, leveraging the model’s predictive outcomes, project managers can devise tailored risk mitigation strategies corresponding to various risk scenarios. For example, in high-risk projects, more assertive measures can be implemented to address risks, while in low-risk projects, risks can be managed more cautiously. This targeted risk management approach contributes to enhancing project success rates, thereby ensuring the seamless advancement of SRPs.

The exceptional performance of the TANB model in specific scenarios derives from its distinctive characteristics and capabilities. Firstly, compared to traditional Naive Bayes models, the TANB model can better capture the dependencies between attributes. In project RA, project features often exhibit complex interactions. The TANB model introduces first-order dependencies between attributes, allowing features to influence each other, thereby more accurately reflecting real-world situations and improving risk prediction precision. Secondly, the TANB model demonstrates strong adaptability and generalization ability in handling multidimensional data. SRPs typically involve data from multiple dimensions, such as project scale, budget investment, and team experience. The TANB model effectively processes these multidimensional data, extracts key information, and achieves accurate RA for projects. Furthermore, the paper explores the potential of using hybrid models or ensemble learning methods to further enhance model performance. By combining other machine learning algorithms, such as random forests and support vector regressors with sigmoid kernel, through ensemble learning, the shortcomings of individual models in specific scenarios can be overcome, thus improving the accuracy and robustness of RA. For example, in the study, we compared the performance of the TANB model with other algorithms in RA, as shown in Table 3 .

Table 3 illustrates that the TANB model surpasses other models in terms of accuracy, precision, recall, F1 value, and AUC value, further confirming its superiority and practicality in RA. Therefore, the TANB model holds significant application potential in SRPs, offering effective decision support for project managers to better evaluate and manage project risks, thereby enhancing the likelihood of project success.

Analysis of the degree of influence of different factors

Table 4 analyzes the degree of influence and interaction of different factors.

In Table 4 , the regression analysis results reveal that budget investment and team experience exert a significantly positive impact on RA outcomes. This suggests that increasing budget allocation and assembling a team with extensive experience can enhance project RA outcomes. Specifically, the regression coefficient for budget investment is 0.68, and that for team experience is 0.51, both significant at P < 0.05. The impact of project scale is relatively small, at 0.31, although its P-value is also well below 0.05. The degree of interaction influence is as follows. The impact of interaction terms is also significant, especially the interaction between budget investment and team experience and the interaction between budget investment and project scale. The P value of the interaction between budget investment and project scale is 0.002, and the P value of the interaction between team experience and project scale is 0.003. The P value of the interaction among budget investment, team experience, and project scale is 0.005. So, there are complex relationships and interactions among different factors, and budget investment and team experience significantly affect the RA results. However, the budget investment and project scale slightly affect the RA results. Project managers should comprehensively consider the interactive effects of different factors when making decisions to more accurately assess the risks of SRPs.

The interaction between team experience and budget investment

The results of the interaction between team experience and budget investment are demonstrated in Table 5 .

From Table 5 , the degree of interaction impact can be obtained. Budget investment and team experience, along with the interaction between project scale and technical difficulty, are critical factors in risk mitigation. Particularly in scenarios characterized by large project scales and high technical difficulties, adequate budget allocation and a skilled team can substantially reduce project risks. As depicted in Table 5 , under conditions of high team experience and sufficient budget investment, the average RA outcome is 0.895 with a standard deviation of 0.012, significantly lower than assessment outcomes under other conditions. This highlights the synergistic effects of budget investment and team experience in effectively mitigating risks in complex project scenarios. The interaction between team experience and budget investment has a significant impact on RA results. Under high team experience, the impact of different budget investment levels on RA results is not significant, but under medium and low team experience, the impact of different budget investment levels on RA results is significantly different. The joint impact of team experience and budget investment is as follows. Under high team experience, the impact of budget investment is relatively small, possibly because high-level team experience can compensate for the risks brought by insufficient budget to some extent. Under medium and low team experience, the impact of budget investment is more significant, possibly because the lack of team experience makes budget investment play a more important role in RA. Therefore, team experience and budget investment interact in RA of SRPs. They need to be comprehensively considered in project decision-making. High team experience can compensate for the risks brought by insufficient budget to some extent, but in the case of low team experience, the impact of budget investment on RA is more significant. 
Effectively assessing the risks inherent in SRPs requires considering all of these factors and their interplay. Focusing on budget allocation or team expertise alone may not yield a thorough risk evaluation. Project managers should examine the project’s scale and technical complexity together with budget allocation and team experience. This holistic approach supports a more precise RA and the development of tailored risk management strategies, thereby improving the project’s likelihood of success.
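
The interaction described above can be illustrated with a small sketch. It groups RA scores by team experience and budget level, computes the mean and standard deviation of each cell, and compares the budget effect across experience levels. The records, the two-level coding of each factor, and the names `cell_stats` and `budget_effect` are assumptions made for this illustration; the numbers are invented and are not the values in Table 5.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical RA scores invented for illustration only (not the paper's data).
# Each record: (team_experience, budget_level, ra_score)
records = [
    ("high", "sufficient", 0.90), ("high", "sufficient", 0.88),
    ("high", "insufficient", 0.87), ("high", "insufficient", 0.85),
    ("low", "sufficient", 0.80), ("low", "sufficient", 0.78),
    ("low", "insufficient", 0.60), ("low", "insufficient", 0.58),
]


def cell_stats(rows):
    """Return {(experience, budget): (mean, sample standard deviation)}."""
    cells = defaultdict(list)
    for experience, budget, score in rows:
        cells[(experience, budget)].append(score)
    return {cell: (mean(scores), stdev(scores)) for cell, scores in cells.items()}


def budget_effect(stats, experience):
    """Mean RA score with sufficient budget minus mean with insufficient budget."""
    return stats[(experience, "sufficient")][0] - stats[(experience, "insufficient")][0]


stats = cell_stats(records)
effect_high = budget_effect(stats, "high")
effect_low = budget_effect(stats, "low")
# An interaction appears as a budget effect that differs between experience levels.
interaction_contrast = effect_low - effect_high

for cell, (avg, sd) in sorted(stats.items()):
    print(cell, round(avg, 3), round(sd, 3))
print("budget effect (high experience):", round(effect_high, 3))
print("budget effect (low experience):", round(effect_low, 3))
print("interaction contrast:", round(interaction_contrast, 3))
```

In this made-up data the budget effect is small for experienced teams and large for inexperienced ones, which is the qualitative pattern the text describes. A formal analysis would test the contrast for significance rather than only compare cell means.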

Risk mitigation strategies

This section proposes targeted risk mitigation measures, drawing on the risk factors identified by the predictive model and their corresponding risk levels.

First, given the significant influence of budget investment and team experience on project RA outcomes, project managers are advised to prioritize these factors and devise corresponding risk management strategies.

For risks stemming from budget constraints, the adoption of flexible budget allocation strategies is advocated. This may involve optimizing project expenditures, establishing financial reserves, or seeking additional funding avenues.

In addressing risks attributed to inadequate team experience, measures such as enhanced training initiatives, engagement of seasoned project advisors, or collaboration with experienced teams can be employed to mitigate the shortfall in expertise.

Furthermore, recognizing the impact of project scale, duration, and technical complexity on RA outcomes, project managers are advised to holistically consider these factors during project planning. This entails adjusting project scale as necessary, establishing realistic project timelines, and conducting thorough assessments of technical challenges prior to project commencement.

These risk mitigation strategies aim to equip project managers with a comprehensive toolkit for effectively identifying, assessing, and mitigating risks inherent in SRPs.

This paper examines the efficacy of the TANB algorithm in project risk prediction. The findings indicate that the algorithm performs well across diverse projects, achieving high precision, recall, and AUC values and outperforming comparable algorithms, which is consistent with the view of Asadullah et al. 37. Particular emphasis was placed on how budget investment level, team experience, and project size affect algorithm performance. Higher budget investment and more extensive team experience positively influenced the results, while project size had a comparatively minor impact. Regression analysis quantifies the magnitude and interplay of these factors, underscoring the predominant influence of budget investment and team experience on RA outcomes and the relatively marginal role of project size. Decision-makers should therefore consider the interrelationships between these factors to assess project risks more precisely, in line with Testorelli et al. 38.
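
For readers less familiar with the metrics named above, the following sketch shows how precision, recall, and AUC are computed for a binary classifier. It assumes a two-class setting (high risk = 1, otherwise 0) and a 0.5 decision threshold, which may differ from the paper's evaluation setup; the labels and scores are invented, and the function names are hypothetical.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels, with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a correct pair.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    correct = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return correct / (len(pos) * len(neg))


# Invented example: true labels and predicted probabilities of "high risk".
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

precision, recall = precision_recall(y_true, y_pred)
auc_value = auc(y_true, scores)
print(round(precision, 3), round(recall, 3), round(auc_value, 3))
```

Precision and recall depend on the chosen threshold, whereas AUC summarizes ranking quality over all thresholds, which is why the three are usually reported together.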

In sum, this paper provides a comprehensive account of the Naive Bayes algorithm’s application in project risk prediction and offers practical guidance for project management, chiefly for the RA and management of SRPs. These insights help managers of SRPs handle risks on a more scientific basis, thereby improving project success rates and performance. The paper advocates several strategic measures for SRP management: prioritizing resource adjustments and team training to raise team members’ professional skills and offset the effect of limited team experience on risk; implementing project scale management through detailed project stage division and stringent project planning; addressing technical difficulty as a pivotal risk factor through assessment and solution development; incorporating project cycle adjustment and flexibility management to accommodate fluctuations and mitigate the associated risks; and integrating data quality management to bolster data reliability and model accuracy. These targeted risk responses aim to improve the likelihood of project success and ensure that project objectives are realized.

Achievements

This paper explores the application of the Naive Bayes algorithm to the RA of SRPs and investigates how various factors, and the relationships among them, influence RA results. The results demonstrate the accuracy and applicability of the Naive Bayes algorithm in the RA of science and technology projects. Through probability estimation, the risk level of a project can be estimated more accurately, which gives project managers a new decision support tool. Budget investment and team experience are the most significant factors affecting the RA results, with regression coefficients of 0.68 and 0.51, respectively. The influence of project scale is relatively small, with a regression coefficient of 0.31. Budget investment has a particularly significant impact on the RA results when team experience is low. The paper also has limitations. First, the case data are limited and the sample size is relatively small, which may affect the generalizability of the results. Second, the factors considered may not be comprehensive; other factors that may affect RA, such as market changes, policies, and regulations, are not considered.
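
One way to read the coefficients reported above is as terms of a linear model. The form below is a sketch under assumptions: the intercept $\beta_0$, the variable names, and the trailing terms standing for any other predictors are not specified in this section, and the comparison of magnitudes presumes the predictors are on comparable (for example, standardized) scales.

```latex
\widehat{\mathrm{RA}} = \beta_0
  + 0.68\,x_{\text{budget}}
  + 0.51\,x_{\text{team}}
  + 0.31\,x_{\text{scale}}
  + \dots
\qquad
\frac{0.68}{0.31} \approx 2.2
```

Under these assumptions, a one-unit increase in budget investment, holding the other predictors fixed, shifts the predicted RA result by about 2.2 times as much as a one-unit increase in project scale.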

The paper makes several key contributions. First, it applies the Naive Bayes algorithm to assess the risks associated with SRPs, proposing the TANB model and validating its effectiveness empirically. The TANB model broadens the application scope of the Naive Bayes algorithm in scientific research risk management and offers a new methodology for project RA. Second, the study examines the impact of various factors on the RA of SRPs through MLR analysis, highlighting the significance of budget investment and team experience. The results underscore the positive influence of these two factors on RA outcomes and offer valuable insights for project decision-making. Third, the paper examines the interaction between team experience and budget investment, revealing a nuanced relationship between the two in RA. This finding shows that team experience and budget investment must be considered together in project decision-making to achieve a more accurate RA. In summary, the paper provides theoretical foundations and empirical analyses for SRP risk management by investigating RA and its influencing factors in depth. The findings offer guidance for project decision-making and risk management and support efforts to raise the success rate and efficiency of SRPs.

This paper differs from existing research in that it analyzes the interactions among various factors in depth, yielding more nuanced and specific RA outcomes. The primary objective extends beyond problem exploration: it aims to broaden the scope of scientific evaluation and research practice through the application of statistical language, which gives the paper considerable significance for science and technology project management. Compared with traditional methods, this paper examines project risk at a finer granularity and gives project managers more actionable suggestions. The empirical analysis validates the effectiveness of the proposed method and offers a fresh perspective for decision-making in science and technology projects. Future research will expand the sample size and accumulate a more extensive dataset of SRPs to enhance the stability and generalizability of the results. Additional factors such as market demand and technological change will also be incorporated to analyze more comprehensively the elements that influence the risks of SRPs. The aim is to provide more precise and comprehensive decision support for science and technology project management.

Limitations and prospects

Although this paper employs advanced methods such as the TANB model, it has limitations that warrant consideration. First, like any model, TANB has its constraints, and its predictions in specific scenarios may be subject to them. Subsequent research should explore alternative machine learning and statistical models to enhance the precision and applicability of RA. Second, this paper focuses predominantly on the RA of SRPs. Given the distinct characteristics and risk factors of projects in other fields and industries, the generalizability of the paper’s results may be limited. Future research can broaden the scope of applicability by validating the model across various fields and industries, and can further establish its robustness and generalizability by incorporating extensive real project data. Future studies can also investigate additional data preprocessing and feature engineering methods to optimize model performance. In practical applications, integrating the research outcomes into SRP management systems could provide more intuitive and practical support for project decision-making.

Data availability

All data generated or analysed during this study are included in this published article [and its Supplementary Information files].

Moshtaghian, F., Golabchi, M. & Noorzai, E. A framework to dynamic identification of project risks. Smart Sustain. Built Environ. 9 (4), 375–393 (2020).

Nunes, M. & Abreu, A. Managing open innovation project risks based on a social network analysis perspective. Sustainability 12 (8), 3132 (2020).

Elkhatib, M. et al. Agile project management and project risks improvements: Pros and cons. Mod. Econ. 13 (9), 1157–1176 (2022).

Fridgeirsson, T. V. et al. The VUCAlity of projects: A new approach to assess a project risk in a complex world. Sustainability 13 (7), 3808 (2021).

Salahuddin, T. Numerical Techniques in MATLAB: Fundamental to Advanced Concepts (CRC Press, 2023).

Awais, M. & Salahuddin, T. Radiative magnetohydrodynamic cross fluid thermophysical model passing on parabola surface with activation energy. Ain Shams Eng. J. 15 (1), 102282 (2024).

Awais, M. & Salahuddin, T. Natural convection with variable fluid properties of couple stress fluid with Cattaneo-Christov model and enthalpy process. Heliyon 9 (8), e18546 (2023).

Guan, L., Abbasi, A. & Ryan, M. J. Analyzing green building project risk interdependencies using Interpretive Structural Modeling. J. Clean. Prod. 256, 120372 (2020).

Gaudenzi, B. & Qazi, A. Assessing project risks from a supply chain quality management (SCQM) perspective. Int. J. Qual. Reliab. Manag. 38 (4), 908–931 (2021).

Lee, K. T., Park, S. J. & Kim, J. H. Comparative analysis of managers’ perception in overseas construction project risks and cost overrun in actual cases: A perspective of the Republic of Korea. J. Asian Archit. Build. Eng. 22 (4), 2291–2308 (2023).

Garai-Fodor, M., Szemere, T. P. & Csiszárik-Kocsir, Á. Investor segments by perceived project risk and their characteristics based on primary research results. Risks 10 (8), 159 (2022).

Senova, A., Tobisova, A. & Rozenberg, R. New approaches to project risk assessment utilizing the Monte Carlo method. Sustainability 15 (2), 1006 (2023).

Tiwari, P. & Suresha, B. Moderating role of project innovativeness on project flexibility, project risk, project performance, and business success in financial services. Glob. J. Flex. Syst. Manag. 22 (3), 179–196 (2021).

de Araújo, F., Lima, P., Marcelino-Sadaba, S. & Verbano, C. Successful implementation of project risk management in small and medium enterprises: A cross-case analysis. Int. J. Manag. Proj. Bus. 14 (4), 1023–1045 (2021).

Obondi, K. The utilization of project risk monitoring and control practices and their relationship with project success in construction projects. J. Proj. Manag. 7 (1), 35–52 (2022).

Atasoy, G. et al. Empowering risk communication: Use of visualizations to describe project risks. J. Constr. Eng. Manage. 148 (5), 04022015 (2022).

Dandage, R. V., Rane, S. B. & Mantha, S. S. Modelling human resource dimension of international project risk management. J. Global Oper. Strateg. Sourcing 14 (2), 261–290 (2021).

Wang, L. et al. Applying social network analysis to genetic algorithm in optimizing project risk response decisions. Inf. Sci. 512, 1024–1042 (2020).

Marx-Stoelting, P. et al. A walk in the PARC: developing and implementing 21st century chemical risk assessment in Europe. Arch. Toxicol. 97 (3), 893–908 (2023).