Deep Learning for Natural Language Processing: A Survey

- Published: 26 June 2023

- Volume 273, pages 533–582 (2023)

- E. O. Arkhangelskaya

- S. I. Nikolenko

Over the last decade, deep learning has revolutionized machine learning. Neural network architectures have become the method of choice for many different applications; in this paper, we survey the applications of deep learning to natural language processing (NLP) problems. We begin by briefly reviewing the basic notions and major architectures of deep learning, including some recent advances that are especially important for NLP. Then we survey distributed representations of words, showing both how word embeddings can be extended to sentences and paragraphs and how words can be broken down further in character-level models. Finally, the main part of the survey deals with various deep architectures that have either arisen specifically for NLP tasks or have become a method of choice for them; the tasks include sentiment analysis, dependency parsing, machine translation, dialog and conversational models, question answering, and other applications.


Y. Liu, Z. Liu, T.-S. Chua, and M. Sun, “Topical word embeddings,” in: AAAI’15 , AAAI Press (2015), pp. 2418–2424.

A. Lopez, “Statistical machine translation,” ACM Comput. Surv. , 40 , No. 3, 8:1–8:49 (2008).

R. Lowe, M. Noseworthy, I. V. Serban, N. Angelard-Gontier, Y. Bengio, and J. Pineau, “Towards an automatic turing test: Learning to evaluate dialogue responses,” in: Submitted to ICLR 2017 (2017).

R. Lowe, N. Pow, I. Serban, and J. Pineau, “The ubuntu dialogue corpus: A large dataset for research in unstructured multi-turn dialogue systems,” arXiv (2015).

Q. Luo and W. Xu, “Learning word vectors efficiently using shared representations and document representations,” in: AAAI’15 , AAAI Press (2015), pp. 4180–4181.

Q. Luo, W. Xu, and J. Guo, “A study on the cbow model’s overfitting and stability,” in: Web-KR ’14 , ACM (2014), pp. 9–12.

M.-T. Luong, M. Kayser, and C. D. Manning, “Deep neural language models for machine translation,” in: Proc. Conference on Natural Language Learning (CoNLL) (Beijing, China), ACL (2015), pp. 305–309.

M.-T. Luong, R. Socher, and C. D. Manning, “Better word representations with recursive neural networks for morphology,” CoNLL (Sofia, Bulgaria) (2013).

T. Luong, H. Pham, and C. D. Manning, “Effective approaches to attention-based neural machine translation,” in: Proc. 2015 EMNLP (Lisbon, Portugal), ACL, (2015), pp. 1412– 1421.

T. Luong, I. Sutskever, Q. Le, O. Vinyals, and W. Zaremba, “Addressing the rare word problem in neural machine translation,” in: Proc. 53rd ACL and the 7the IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 11–19.

M. Ma, L. Huang, B. Xiang, and B. Zhou, “Dependency-based convolutional neural networks for sentence embedding,” in: Proc. ACL 2015 , Vol. 2, Short Papers (2015), p. 174.

A. L. Maas, R. E. Daly, P. T. Pham, D. Huang, A. Y. Ng, and C. Potts, “Learning word vectors for sentiment analysis,” in: HLT ’11 , ACL (2011), pp. 142–150.

B. MacCartney and C. D. Manning, “An extended model of natural logic,” in: Proc. Eight International Conference on Computational Semantics (Tilburg, The Netherlands), ACL (2009), pp. 140–156.

D. J. MacKay, Information Theory, Inference and Learning Algorithms , Cambridge University Press (2003).

C. D. Manning, Computational Linguistics and Deep Learning , Computational Linguistics (2016).

C. D. Manning, P. Raghavan, and H. Schutze, Introduction to Information Retrieval , Cambridge University Press (2008).

M. Marelli, L. Bentivogli, M. Baroni, R. Bernardi, S. Menini, and R. Zamparelli, Semeval-2014 Task 1: Evaluation of Compositional Distributional Semantic Models on Full Sentences Through Semantic Relatedness and Textual Entailment , SemEval-2014 (2014).

B. Marie and A. Max, “Multi-pass decoding with complex feature guidance for statistical machine translation,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 2, Short Papers (Beijing, China), ACL (2015), pp. 554–559.

W. McCulloch and W. Pitts, “A logical calculus of the ideas immanent in nervous activity,” Bull. Math. Biophysics , 7 , 115–133 (1943).

F. Meng, Z. Lu, M. Wang, H. Li, W. Jiang, and Q. Liu, “Encoding source language with convolutional neural network for machine translation,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 20–30.

T. Mikolov, Statistical Language Models Based on Neural Networks , Ph.D. thesis, Ph. D. thesis, Brno University of Technology (2012).

T. Mikolov, K. Chen, G. Corrado, and J. Dean, “Efficient estimation of word representations in vector space,” arXiv (2013).

T. Mikolov, M. Karafiat, L. Burget, J. Cernocky, and S. Khudanpur, Recurrent Neural Network Based Language Model , INTERSPEECH 2 , 3 (2010).

T. Mikolov, S. Kombrink, L. Burget, J. H. Cernocky, and S. Khudanpur, “Extensions of recurrent neural network language model,” in Acoustics, Speech and Signal Processing (ICASSP), 2011 IEEE International Conference on, IEEE (2011), pp. 5528–5531.

T. Mikolov, I. Sutskever, K. Chen, G. Corrado, and J. Dean, “Distributed representations of words and phrases and their compositionality,” arXiv (2013).

J. Mitchell and M. Lapata, “Composition in distributional models of semantics,” Cognitive Science , 34 , No. 8, 1388–1429 (2010).

A. Mnih and G. E. Hinton, “A scalable hierarchical distributed language model,” in: Advances in Neural Information Processing Systems (2009), pp. 1081–1088.

A. Mnih and K. Kavukcuoglu, “Learning word embeddings efficiently with noisecontrastive estimation,” in: Advances in Neural Information Processing Systems 26 (C. J. C. Burges, L. Bottou, M. Welling, Z. Ghahramani, and K. Q. Weinberger, eds.), Curran Associates, Inc. (2013), pp. 2265–2273.

V. Mnih, N. Heess, A. Graves, and k. Kavukcuoglu, “Recurrent models of visual attention,” in: Advances in Neural Information Processing Systems 27 (Z. Ghahramani, M. Welling, C. Cortes, N. D. Lawrence, and K. Q. Weinberger, eds.), Curran Associates, Inc. (2014), pp. 2204–2212.

V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D.Wierstra, and M. Riedmiller, “Playing atari with deep reinforcement learning,” in: NIPS Deep Learning Workshop (2013).

V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran, D. Wierstra, S. Legg, and D. Hassabis, “Humanlevel control through deep reinforcement learning,” Nature , 518 , No. 7540, 529–533 (2015).

G. Montavon, G. B. Orr, and K. Muller (eds.), Neural Networks: Tricks of the Trade (second ed), Lect. Notes Computer Sci., Vol. 7700, Springer (2012).

L. Morgenstern and C. L. Ortiz, “The winograd schema challenge: Evaluating progress in commonsense reasoning,” in: AAAI’15 , AAAI Press (2015), pp. 4024–4025.

K. P. Murphy, Machine Learning: a Probabilistic Perspective , Cambridge University Press (2013).

A. Neelakantan, B. Roth, and A. McCallum, “Compositional vector space models for knowledge base completion,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 156–166.

V. Ng and C. Cardie, “Improving machine learning approaches to coreference resolution,” in: ACL ’02 , ACL (2002), pp. 104–111.

Y. Oda, G. Neubig, S. Sakti, T. Toda, and S. Nakamura, ‘Syntax-based simultaneous translation through prediction of unseen syntactic constituents,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 198–207.

M. Osborne, S. Moran, R. McCreadie, A. Von Lunen, M. Sykora, E. Cano, N. Ireson, C. Macdonald, I. Ounis, Y. He, T. Jackson, F. Ciravegna, and A. O’Brien, “Real-time detection, tracking, and monitoring of automatically discovered events in social media,” in: Proc. 52nd ACL: System Demonstrations (Baltimore, Maryland), ACL (2014), pp. 37– 42.

B. Pang and L. Lee, “Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales,” in: ACL ’05 , ACL (2005), pp. 115–124.

B. Pang and L. Lee, “Opinion mining and sentiment analysis,” Foundations and Trends in Information Retrieval , 2 , Nos. 1–2, 1–135 (2008).

P. Pantel, “Inducing ontological co-occurrence vectors,” in: ACL ’05 , ACL (2005), pp. 125–132.

D. Paperno, N. T. Pham, and M. Baroni, “A practical and linguistically-motivated approach to compositional distributional semantics,” in: Proc. 52nd ACL , Vol. 1, Long Papers (Baltimore, Maryland), ACL (2014), pp. 90–99.

K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: a method for automatic evaluation of machine translation,” in: Proc. 40th ACL, ACL (2002) pp. 311–318.

K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: A method for automatic evaluation of machine translation,” in: ACL ’02 , ACL (2002), pp. 311–318.

D. B. Parker, Learning-Logic , Tech. Report TR-47, Center for Comp. Research in Economics and Management Sci., MIT (1985).

R. Pascanu, Ç. Gulçehre, K. Cho, and Y. Bengio, “How to construct deep recurrent neural networks,” arXiv (2013).

Y. Peng, S. Wang, and -L. Lu, Marginalized Denoising Autoencoder via Graph Regularization for Domain Adaptation , Springer Berlin Heidelberg, Berlin, Heidelberg, 156–163 (2013).

J. Pennington, R. Socher, and C. Manning, “Glove: Global vectors for word representation,” in: Proc. 2014 EMNLP (Doha, Qatar), ACL (2014), pp. 1532–1543.

J. Pouget-Abadie, D. Bahdanau, B. van Merrienboer, K. Cho, and Y. Bengio, “Overcoming the curse of sentence length for neural machine translation using automatic segmentation,” arXiv (2014).

L. Prechelt, Early Stopping — But When? , Springer Berlin Heidelberg, Berlin, Heidelberg (2012), pp. 53–67.

J. Preiss and M. Stevenson, “Unsupervised domain tuning to improve word sense disambiguation,” in: Proc. 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Atlanta, Georgia), ACL (2013), pp. 680–684.

S. Prince, Computer vision: Models, learning, and inference , Cambridge University Press (2012).

A. Ramesh, S. H. Kumar, J. Foulds, and L. Getoor, “Weakly supervised models of aspectsentiment for online course discussion forums,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 74–83.

R. S. Randhawa, P. Jain, and G. Madan, “Topic modeling using distributed word embeddings,” arXiv (2016).

M. Ranzato, G. E. Hinton, and Y. LeCun, “Guest editorial: Deep learning,” International J. Computer Vision , 113 , No. 1, 1–2 (2015).

J. Reisinger and R. J. Mooney, “Multi-prototype vector-space models of word meaning,” in: HLT ’10 , ACL (2010), pp. 109–117.

X. Rong, “word2vec parameter learning explained,” arXiv (2014).

F. Rosenblatt, Principles of Neurodynamics , Spartan, New York (1962).

F. Rosenblatt, “The perceptron: A probabilistic model for information storage and organization in the brain,” Psychological Review , 65 , No. 6, 386–408 (1958).

H. Rubenstein and J. B. Goodenough, “Contextual correlates of synonymy,” Communications of the ACM , 8 , No. 10, 627–633 (1965).

A. A. Rusu, S. G. Colmenarejo, Ç. Gulçehre, G. Desjardins, J. Kirkpatrick, R. Pascanu, V. Mnih, K. Kavukcuoglu, and R. Hadsell, “Policy distillation,” arXiv (2015).

M. Sachan, K. Dubey, E. Xing, and M. Richardson, “Learning answer-entailing structures for machine comprehension,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 239–249.

M. Sadrzadeh and E. Grefenstette, “A compositional distributional semantics, two concrete constructions, and some experimental evaluations,” in: QI’11 , Springer-Verlag (2011), pp. 35–47.

M. Sahlgren, “The Distributional Hypothesis,” Italian J. Linguistics , 20 , No. 1, 33–54 (2008).

R. Salakhutdinov, “Learning Deep Generative Models,” Annual Review of Statistics and Its Application , 2 , No. 1, 361–385 (2015).

R. Salakhutdinov and G. Hinton, “An efficient learning procedure for deep boltzmann machines,” Neural Computation , 24 , No. 8, 1967–2006 (2012).

R. Salakhutdinov and G. E. Hinton, “Deep boltzmann machines,” in: Proc. Twelfth International Conference on Artificial Intelligence and Statistics, AISTATS Clearwater Beach, Florida, USA (2009), pp. 448–455.

J. Schmidhuber, “Deep learning in neural networks: An overview,” Neural Networks , 61 , 85–117 (2015).

M. Schuster, “On supervised learning from sequential data with applications for speech recognition,” Ph.D. thesis, Nara Institute of Science and Technolog, Kyoto, Japan (1999).

M. Schuster and K. K. Paliwal, “Bidirectional recurrent neural networks,” IEEE Transactions on Signal Processing , 45 , No. 11, 2673–2681 (1997).

H. Schwenk, “Continuous space language models,” Comput. Speech Lang. , 21 , No. 3, 492–518 (2007).

I. V. Serban, A. G. O. II, J. Pineau, and A. C. Courville, “Multi-modal variational encoder-decoders,” arXiv (2016).

I. V. Serban, A. Sordoni, Y. Bengio, A. C. Courville, and J. Pineau, “Hierarchical neural network generative models for movie dialogues,” arXiv (2015).

I. V. Serban, A. Sordoni, R. Lowe, L. Charlin, J. Pineau, A. C. Courville, and Y. Bengio, “A hierarchical latent variable encoder-decoder model for generating dialogues,” in: Proc. 31st AAAI (2017), pp. 3295–3301.

H. Setiawan, Z. Huang, J. Devlin, T. Lamar, R. Zbib, R. Schwartz, and J. Makhoul, “Statistical machine translation features with multitask tensor networks,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 31–41.

A. Severyn and A. Moschitti, “Learning to rank short text pairs with convolutional deep neural networks,” in: SIGIR ’15 , ACM (2015), pp. 373–382.

K. Shah, R. W. M. Ng, F. Bougares, and L. Specia, “Investigating continuous space language models for machine translation quality estimation,” in: Proc. 2015 EMNLP (Lisbon, Portugal), ACL (2015), pp. 1073–1078.

L. Shang, Z. Lu, and H. Li, “Neural responding machine for short-text conversation,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 1577–1586.

Y. Shen, X. He, J. Gao, L. Deng, and G. Mesnil, “A latent semantic model with convolutional-pooling structure for information retrieval,” in: CIKM ’14 , ACM (2014), pp. 101–110.

C. Silberer and M. Lapata, “Learning grounded meaning representations with autoencoders,” ACL , No. 1, 721–732 (2014).

D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. van den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot, S. Dieleman, D. Grewe, J. Nham, N. Kalchbrenner, I. Sutskever, T. Lillicrap, M. Leach, K. Kavukcuoglu, T. Graepel, and D. Hassabis, “Mastering the Game of Go with Deep Neural Networks and Tree Search,” Nature , 529 , No. 7587, 484–489 (2016).

M. Snover, B. Dorr, R. Schwartz, L. Micciulla, and J. Makhoul, “A study of translation edit rate with targeted human annotation,” in: Proc. Association for Machine Translation in the Americas (2006), pp. 223–231.

R. Snow, S. Prakash, D. Jurafsky, and A. Y. Ng, “Learning to Merge Word Senses,” in: Proc. Joint Meeting of the Conference on Empirical Methods on Natural Language Processing and the Conference on Natural Language Learning (2007), pp. 1005–1014.

R. Socher, J. Bauer, C. D. Manning, and A. Y. Ng, “Parsing with compositional vector grammars,” in: Proc. ACL (2013), pp. 455–465.

R. Socher, D. Chen, C. D. Manning, and A. Ng, “ReasoningWith Neural Tensor Networks for Knowledge Base Completion,” Advances in Neural Information Processing Systems (NIPS) (2013).

R. Socher, E. H. Huang, J. Pennin, C. D. Manning, and A. Y. Ng, “Dynamic pooling and unfolding recursive autoencoders for paraphrase detection,” Advances in Neural Information Processing Systems , 801–809 (2011).

R. Socher, A. Karpathy, Q. Le, C. Manning, and A. Ng, “Grounded compositional semantics for finding and describing images with sentences,” Transactions of the Association for Computational Linguistics , 2014 (2014).

R. Socher, J. Pennington, E. H. Huang, A. Y. Ng, and C. D. Manning, “Semi-supervised recursive autoencoders for predicting sentiment distributions,” in: Proc. EMNLP 2011 , ACL (2011), pp. 151–161.

R. Socher, A. Perelygin, J. Y. Wu, J. Chuang, C. D. Manning, A. Y. Ng, and C. Potts, “Recursive deep models for semantic compositionality over a sentiment treebank,” in: Proc. EMNLP 2013 , Vol. 1631, Citeseer (2013), p. 1642.

Y. Song, H. Wang, and X. He, “Adapting deep ranknet for personalized search,” in: WSDM 2014 , ACM (2014).

A. Sordoni, Y. Bengio, H. Vahabi, C. Lioma, J. Grue Simonsen, and J.-Y. Nie, “A hierarchical recurrent encoder-decoder for generative context-aware query suggestion,” in: CIKM ’15 , ACM (2015), pp. 553–562.

R. Soricut and F. Och, “Unsupervised morphology induction using word embeddings,” in: Proc. 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Denver, Colorado), ACL (2015), pp. 1627–1637.

B. Speelpenning, “Compiling fast partial derivatives of functions given by algorithms,” Ph.D. thesis, Department of Computer Science, University of Illinois, Urbana-Champaign (1980).

N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Dropout: A simple way to prevent neural networks from overfitting,” J. Machine Learning Research , 15 , No. 1, 1929–1958 (2014).

MathSciNet MATH Google Scholar

R. K. Srivastava, K. Greff, and J. Schmidhuber, “Training very deep networks,” in: NIPS’15 , MIT Press (2015), pp. 2377–2385.

P. Stenetorp, “Transition-based dependency parsing using recursive neural networks,” in: Deep Learning Workshop at NIPS 2013 (2013).

J. Su, D. Xiong, Y. Liu, X. Han, H. Lin, J. Yao, and M. Zhang, “A context-aware topic model for statistical machine translation,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 229–238.

P.-H. Su, M. Gasic, N. Mrkši, L. M. Rojas Barahona, S. Ultes, D. Vandyke, T.-H.Wen, and S. Young, “On-line active reward learning for policy optimisation in spoken dialogue systems,” in: Proc. 54th ACL , Vol. 1, Long Papers (Berlin, Germany), ACL (2016), pp. 2431–2441.

S. Sukhbaatar, A. Szlam, J. Weston, and R. Fergus, “Weakly supervised memory networks,” arXiv (2015).

F. Sun, J. Guo, Y. Lan, J. Xu, and X. Cheng, “Learning word representations by jointly modeling syntagmatic and paradigmatic relations,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 136–145.

I. Sutskever and G. E. Hinton, “Deep, narrow sigmoid belief networks are universal approximators,” Neural Computation , 20 , No. 11, 2629–2636 (2008).

Article MATH Google Scholar

I. Sutskever, J. Martens, and G. Hinton, “Generating text with recurrent neural networks,” in: ICML ’11 , ACM (2011), pp. 1017–1024.

I. Sutskever, O. Vinyals, and Q. V. Le, “Sequence to sequence learning with neural networks,” arXiv (2014).

Y. Tagami, H. Kobayashi, S. Ono, and A. Tajima, “Modeling user activities on the web using paragraph vector,” in: WWW ’15 Companion , ACM (2015), pp. 125–126.

K. S. Tai, R. Socher, and C. D. Manning, “Improved semantic representations from treestructured long short-term memory networks,” in: Proc. 53rd ACL and 7th IJCNLP , Vol. 1 (2015), pp. 1556–1566.

Y. Taigman, M. Yang, M. Ranzato, and L. Wolf, “Deepface: Closing the gap to humanlevel performance in face verification,” in: CVPR ’14, IEEE Computer Society (2014), pp. 1701–1708.

D. Tang, F. Wei, N. Yang, M. Zhou, T. Liu, and B. Qin, “Learning sentiment-specific word embedding for twitter sentiment classification,” ACL , 1 , 1555–1565 (2014).

W. T. Yih, X. He, and C. Meek, “Semantic parsing for single-relation question answering,” in: Proc. ACL , ACL (2014).

J. Tiedemann, “News from OPUS - A Collection of Multilingual Parallel Corpora with Tools and Interfaces,”in: Recent Advances in Natural Language Processing , Vol. V, (Amsterdam/Philadelphia) (N. Nicolov, K. Bontcheva, G. Angelova, and R. Mitkov, eds.), John Benjamins, Amsterdam/Philadelphia (2009), pp. 237–248.

I. Titov and J. Henderson, “A latent variable model for generative dependency parsing,” in: IWPT ’07 , ACL (2007), pp. 144–155.

E. F. Tjong Kim Sang and S. Buchholz, “Introduction to the conll-2000 shared task: Chunking,” in: ConLL ’00 , ACL (2000), pp. 127–132.

B. Y. Tong Zhang, “Boosting with early stopping: Convergence and consistency,” Annals of Statistics , 33 , No. 4, 1538–1579 (2005).

K. Toutanova, D. Klein, C. D. Manning, and Y. Singer, “Feature-rich part-of-speech tagging with a cyclic dependency network,” in: NAACL ’03 , ACL (2003), pp. 173–180.

Y. Tsuboi and H. Ouchi, “Neural dialog models: A survey,” Available from http://2boy.org/~yuta/publications/neural-dialog-models-survey-20150906.pdf., 2015.

J. Turian, L. Ratinov, and Y. Bengio, “Word representations: A simple and general method for semi-supervised learning,” in: ACL ’10 , ACL (2010), pp. 384–394.

P. D. Turney, P. Pantel, et al., “From frequency to meaning: Vector space models of semantics,” J. Artificial Intelligence Research , 37 , No. 1, 141–188 (2010).

E. Tutubalina and S. I. Nikolenko, “Constructing aspect-based sentiment lexicons with topic modeling,” in: Proc. 5th International Conference on Analysis of Images, Social Networks, and Texts (AIST 2016).

B. van Merri¨enboer, D. Bahdanau, V. Dumoulin, D. Serdyuk, D. Warde-Farley, J. Chorowski, and Y. Bengio, “Blocks and fuel: Frameworks for deep learning,” arXiv (2015).

D. Venugopal, C. Chen, V. Gogate, and V. Ng, “Relieving the computational bottleneck: Joint inference for event extraction with high-dimensional features,” in: Proc. 2014 EMNLP (Doha, Qatar), ACL (2014), pp. 831–843.

P. Vincent, “A connection between score matching and denoising autoencoders,” Neural Computation , 23 , No. 7, 1661–1674 (2011).

P. Vincent, H. Larochelle, Y. Bengio, and P.-A. Manzagol, “Extracting and composing robust features with denoising autoencoders,” in: ICML ’08 , ACM (2008), pp. 1096–1103.

P. Vincent, H. Larochelle, I. Lajoie, Y. Bengio, and P.-A. Manzagol, “Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion,” J. Machine Learning Research , 11 , 3371–3408 (2010).

O. Vinyals, L. Kaiser, T. Koo, S. Petrov, I. Sutskever, and G. E. Hinton, “Grammar as a foreign language,” arXiv (2014).

O. Vinyals and Q. V. Le, “A neural conversational model,” in: ICML Deep Learning Workshop , arXiv:1506.05869 (2015).

V. Viswanathan, N. F. Rajani, Y. Bentor, and R. Mooney, “Stacked ensembles of information extractors for knowledge-base population,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 177–187.

X. Wang, Y. Liu, C. Sun, B. Wang, and X. Wang, “Predicting polarities of tweets by composing word embeddings with long short-term memory,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 1343–1353.

D. Weiss, C. Alberti, M. Collins, and S. Petrov, “Structured training for neural network transition-based parsing,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 323–333.

J. Weizenbaum, “Eliza – a computer program for the study of natural language communication between man and machine,” Communications of the ACM , 9 , No. 1, 36–45 (1966).

T. Wen, M. Gasic, N. Mrksic, L. M. Rojas-Barahona, P. Su, S. Ultes, D. Vandyke, and S. J. Young, “Conditional generation and snapshot learning in neural dialogue systems,” in: Proc. 2016 Conference on Empirical Methods in Natural Language Processing , EMNLP 2016, Austin, Texas, USA (2016), pp. 2153–2162.

P. J. Werbos, “Applications of advances in nonlinear sensitivity analysis,” in: Proc. 10th IFIP Conference , NYC (1981), pp. 762–770.

P. J. Werbos, “Backpropagation through time: what it does and how to do it,” Proc. IEEE , 78 , No. 10, 1550–1560 (1990).

P. J. Werbos, “Backwards differentiation in AD and neural nets: Past links and new opportunities,” in: Automatic Differentiation: Applications, Theory, and Implementations , Springer (2006), pp. 15–34.

Chapter MATH Google Scholar

J. Weston, A. Bordes, S. Chopra, and T. Mikolov, “Towards ai-complete question answering: A set of prerequisite toy tasks,” arXiv (2015).

J. Weston, S. Chopra, and A. Bordes, “Memory networks,” arXiv (2014).

L. White, R. Togneri, W. Liu, and M. Bennamoun, “How well sentence embeddings capture meaning,” in; ADCS ’15 , ACM (2015), pp. 9:1–9:8.

R. J. Williams and D. Zipser, “Gradient-based learning algorithms for recurrent networks and their computational complexity,” in: Backpropagation (Hillsdale, NJ, USA) (Y. Chauvin and D. E. Rumelhart, eds.), L. Erlbaum Associates Inc., Hillsdale, NJ, USA (1995), pp. 433–486.

Y. Wu, M. Schuster, Z. Chen, Q. V. Le, M. Norouzi, W. Macherey, M. Krikun, Y. Cao, Q. Gao, K. Macherey, J. Klingner, A. Shah, M. Johnson, X. Liu, L. Kaiser, S. Gouws, Y. Kato, T. Kudo, H. Kazawa, K. Stevens, G. Kurian, N. Patil, W. Wang, C. Young, J. Smith, J. Riesa, A. Rudnick, O. Vinyals, G. Corrado, M. Hughes, and J. Dean, “Google’s neural machine translation system: Bridging the gap between human and machine translation,” arXiv (2016).

Z. Wu and C. L. Giles, “Sense-aware semantic analysis: A multi-prototype word representation model using wikipedia,” in: AAAI’15 , AAAI Press (2015), pp. 2188–2194.

S. Wubben, A. van den Bosch, and E. Krahmer, “Paraphrase generation as monolingual translation: Data and evaluation,” in: INLG ’10 , ACL (2010), pp. 203–207.

C. Xu, Y. Bai, J. Bian, B. Gao, G. Wang, X. Liu, and T.-Y. Liu, “Rc-net: A general framework for incorporating knowledge into word representations,” in: CIKM ’14 , ACM (2014), pp. 1219–1228.

K. Xu, J. Ba, R. Kiros, K. Cho, A. C. Courville, R. Salakhutdinov, R. S. Zemel, and Y. Bengio, “Show, attend and tell: Neural image caption generation with visual attention,” arXiv (2015).

R. Xu and D. Wunsch, Clustering , Wiley-IEEE Press (2008).

X. Xue, J. Jeon, and W. B. Croft, “Retrieval models for question and answer archives,” in: SIGIR ’08 , ACM (2008), pp. 475–482.

M. Yang, T. Cui, and W. Tu, “Ordering-sensitive and semantic-aware topic modeling,” arXiv (2015).

Y. Yang and J. Eisenstein, “Unsupervised multi-domain adaptation with feature embeddings,” in: Proc. 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Denver, Colorado), ACL (2015), pp. 672–682.

Z. Yang, X. He, J. Gao, L. Deng, and A. J. Smola, “Stacked attention networks for image question answering,” arXiv (2015).

K. Yao, G. Zweig, and B. Peng, “Attention with intention for a neural network conversation model,” arXiv (2015).

X. Yao, J. Berant, and B. Van Durme, “Freebase qa: Information extraction or semantic parsing?” in: Proc. ACL 2014 Workshop on Semantic Parsing (Baltimore, MD), ACL (2014), pp. 82–86.

Y. Yao, L. Rosasco, and A. Caponnetto, “On early stopping in gradient descent learning,” Constructive Approximation , 26 , No. 2, 289–315 (2007).

W.-t. Yih, M.-W. Chang, C. Meek, and A. Pastusiak, “Question answering using enhanced lexical semantic models,” in: Proc. 51st ACL , Vol. 1, Long Papers (Sofia, Bulgaria), ACL (2013), pp. 1744–1753.

W.-t. Yih, G. Zweig, and J. C. Platt, “Polarity inducing latent semantic analysis,” in: EMNLP-CoNLL ’12 , ACL (2012), pp. 1212–1222.

W. Yin and H. Schutze, “Multigrancnn: An architecture for general matching of text chunks on multiple levels of granularity,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 63–73.

W. Yin, H. Schutze, B. Xiang, and B. Zhou, “ABCNN: attention-based convolutional neural network for modeling sentence pairs,” arXiv (2015).

J. Yohan and O. A. H., “Aspect and sentiment unification model for online review analysis,” in: WSDM ’11 , ACM (2011), pp. 815–824.

A. M. Z. Yang, A. Kotov, and S. Lu, “Parametric and non-parametric user-aware sentiment topic models,” in: Proc. 38th ACM SIGIR (2015).

W. Zaremba and I. Sutskever, “Reinforcement learning neural Turing machines,” arXiv (2015).

W. Zaremba, I. Sutskever, and O. Vinyals, “Recurrent neural network regularization,” arXiv (2014).

M. D. Zeiler, “ADADELTA: an adaptive learning rate method,” arXiv (2012).

L. S. Zettlemoyer and M. Collins, “Learning to map sentences to l51ogical form: Structured classification with probabilistic categorial grammars,” arXiv (2012).

X. Zhang and Y. LeCun, “Text understanding from scratch,” arXiv (2015).

X. Zhang, J. Zhao, and Y. LeCun, “Character-level convolutional networks for text classification,” in: Advances in Neural Information Processing Systems 28 (C. Cortes, N. D. Lawrence, D. D. Lee, M. Sugiyama, and R. Garnett, eds.), Curran Associates, Inc. (2015), pp. 649–657.

G. Zhou, T. He, J. Zhao, and P. Hu, “Learning continuous word embedding with metadata for question retrieval in community question answering,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 250–259.

H. Zhou, Y. Zhang, S. Huang, and J. Chen, “A neural probabilistic structured-prediction model for transition-based dependency parsing,” in: Proc. 53rd ACL and the 7th IJCNLP , Vol. 1, Long Papers (Beijing, China), ACL (2015), pp. 1213–1222.

Download references

Author information

Authors and affiliations.

Saarland University, 66123, Saarbrücken, Germany

E. O. Arkhangelskaya

St. Petersburg State University, St. Petersburg, Russia

S. I. Nikolenko

St. Petersburg Department of Steklov Mathematical Institute RAS, St. Petersburg, Russia


Corresponding author

Correspondence to E. O. Arkhangelskaya.

Additional information

Published in Zapiski Nauchnykh Seminarov POMI, Vol. 499, 2021, pp. 137–205.


About this article

Arkhangelskaya, E.O., Nikolenko, S.I. Deep Learning for Natural Language Processing: A Survey. J Math Sci 273, 533–582 (2023). https://doi.org/10.1007/s10958-023-06519-6


Received: 14 January 2019

Published: 26 June 2023

Issue Date: July 2023

DOI: https://doi.org/10.1007/s10958-023-06519-6




Jan Sawicki , Maria Ganzha , Marcin Paprzycki; The State of the Art of Natural Language Processing—A Systematic Automated Review of NLP Literature Using NLP Techniques. Data Intelligence 2023; 5 (3): 707–749. doi: https://doi.org/10.1162/dint_a_00213


Nowadays, natural language processing (NLP) is one of the most popular areas of broadly understood artificial intelligence. New research contributions are posted every day, for instance, to the arXiv repository. Hence, it is rather difficult to capture the current “state of the field” and, thus, to enter it. This brought the idea to use state-of-the-art NLP techniques to analyse the NLP-focused literature. As a result, (1) meta-level knowledge concerning the current state of NLP has been captured, and (2) a guide to the use of basic NLP tools is provided. It should be noted that all the tools and the dataset described in this contribution are publicly available. Furthermore, the originality of this review lies in its full automation. This allows easy reproducibility, as well as continuation and updating of this research in the future, as new research emerges in the field of NLP.

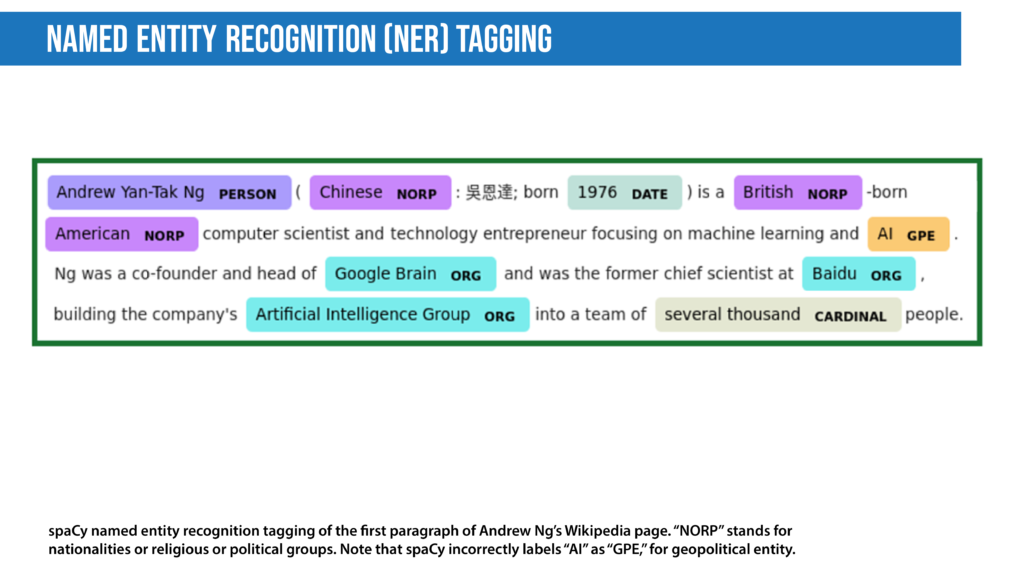

1. Introduction

Natural language processing (NLP) is rapidly growing in popularity in a variety of domains, from closely related ones, like semantics [ 1 , 2 ], linguistics [ 3 , 4 ] (e.g. inflection [ 5 ], phonetics and onomastics [ 6 ], automatic text correction [ 7 ]) and named entity recognition [ 8 , 9 ], to distant ones, like bibliometry [ 10 ], cybersecurity [ 11 ], quantum mechanics [ 12 , 13 ], gender studies [ 14 , 15 ], chemistry [ 16 ] or orthodontia [ 17 ]. This, among other things, gives early-stage researchers an opportunity to enter the area. Since NLP can be applied to many domains and languages, and involves the use of many techniques and approaches, it is important to know where to start.

This contribution attempts to address this issue by applying NLP techniques to the analysis of NLP-focused literature. As a result, a guide to the state of the art of natural language processing is presented, based on a fully automated, systematic, visualization-driven literature analysis. In this way, two goals are achieved: (1) providing an introduction to NLP for scientists entering the field, and (2) supporting a possible knowledge update for experienced researchers. The main research questions (RQs) considered in this work are:

RQ1: What datasets are considered to be most useful?

RQ2: Which languages, other than English, appear in NLP research?

RQ3: What are the most popular fields and topics in current NLP research?

RQ4: What particular tasks and problems are most often studied?

RQ5: Is the field “homogenous”, or are there easily identifiable “subgroups”?

RQ6: How difficult is it to comprehend the NLP literature?

Taking into account that the proposed approach is itself anchored in NLP, this work also illustrates how selected standard NLP techniques can be used in practice, and which of them should be used for which purpose. However, it should be made clear that the considerations presented in what follows should be treated as “illustrative examples”, not “strict guidelines”. Moreover, it should be stressed that none of the applied techniques has been optimized for the task (e.g. no hyperparameter tuning has been applied). This is a deliberate choice, as the goal is to provide an overview and “general ideas”, rather than to overwhelm the reader with technical details of individual NLP approaches. For technical details concerning optimization of the mentioned approaches, the reader should consult the referenced literature.

The whole analysis has been performed in Python, a programming language that has been ubiquitous in data science research and projects for years [ 18 , 19 , 20 , 21 , 22 , 23 ]. Python was also chosen for the following reasons:

It provides a heterogeneous environment

It allows use of Jupyter Notebooks ① , which allow quick and easy prototyping, testing and code sharing

There exists an abundance of data science libraries ② , which allow everything from acquiring the dataset, to visualizing the result

It offers readability and speed in development [ 24 ]

The presented analysis follows the order of the research questions. To make the text more readable, pertinent NLP methods are introduced in the context of answering individual questions.

2. Data and Preprocessing

At the beginning of NLP research, there is always data. This section introduces the dataset consisting of research papers used in this work, and describes how it was preprocessed.

2.1 Data Used in the Research

To adequately represent the domain, and to apply NLP techniques, it is necessary to select an abundant and well-documented repository of related texts (stored in a digital format). Moreover, to automate the conducted analysis, and to allow easy reproduction, it is crucial to choose a set of papers that can be easily accessed, e.g. a database with a functional Application Programming Interface (API). Finally, for obvious reasons, open access datasets are the natural targets for NLP-oriented work.

In the context of this work, while multiple repositories contain NLP-related literature, the best choice turned out to be arXiv (for the papers themselves, and for the metadata it provided), combined with Semantic Scholar (for the “citation network” and other important metadata; see Section 3.3.1).

Note that other datasets have been considered, but were not selected. The reasons for this decision are summarized in Table 1.
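
To make the notion of “a database with a functional API” more concrete, the following minimal sketch builds a request URL for the public arXiv API and parses the Atom feed that such a request returns. The endpoint and the `search_query`, `start` and `max_results` parameters belong to the arXiv API; the exact query string, the page size and the helper names `build_query_url` and `parse_entries` are assumptions made for illustration, not the script used in this work.

```python
import urllib.parse
import xml.etree.ElementTree as ET

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # XML namespace of Atom feeds


def build_query_url(phrase, start=0, max_results=100):
    """Return an arXiv API URL that searches all fields for an exact phrase."""
    params = {
        "search_query": f'all:"{phrase}"',  # assumed form of the query
        "start": start,
        "max_results": max_results,
    }
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def parse_entries(atom_xml):
    """Extract identifier, title and submission date from an Atom feed string."""
    root = ET.fromstring(atom_xml)
    entries = []
    for entry in root.iter(f"{ATOM}entry"):
        raw_id = entry.findtext(f"{ATOM}id", default="")
        raw_title = entry.findtext(f"{ATOM}title", default="")
        raw_date = entry.findtext(f"{ATOM}published", default="")
        entries.append({
            # Entry ids look like "http://arxiv.org/abs/<identifier>".
            "id": raw_id.split("/abs/")[-1],
            # Titles may be wrapped over several lines; normalize whitespace.
            "title": " ".join(raw_title.split()),
            # Keep only the YYYY-MM-DD part of the timestamp.
            "published": raw_date[:10],
        })
    return entries
```

The URL can then be fetched page by page (increasing `start`) with any HTTP client, and each response passed to `parse_entries`.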

| Database | Reason for inapplicability in this research task |
|---|---|
| Google Scholar | Google Scholar does not contain actual data (text, PDF, etc.) of any work; there are only links to other databases. Moreover, performed tests determined that the API (Python “scholarly” library) works well with small queries, but fetching information about thousands of papers results in download rate limits and temporary IP address blocking. Finally, Google Scholar is criticized, among other things, for excessive secrecy [ ], biased search algorithms [ ], and incorrect citation counts [ ]. |
| PubMed | PubMed is mainly focused on medical and biological papers. Therefore, the number of works related to NLP is somewhat limited, and difficult to identify using straightforward approaches. |
| ResearchGate | There are two main problems with ResearchGate, as seen from the perspective of this work: lack of an easily accessible API and restrictions on the availability of some articles (a large number of papers have to be requested from the authors, and such requests may not be fulfilled, or the wait time may be excessive). |
| Scopus | The Scopus API is not fully open-access, and has restrictions on the number of requests that can be issued within a specific time. |
| JSTOR | Even though the JSTOR website declares that an API exists, the link does not provide any information about it (404 not found). |
| Microsoft Academic | The Microsoft Academic API is very well documented, but it does not provide true open access (requires a subscription key). Moreover, it does not contain the actual text of works; mostly metadata. |


2.1.1 Dataset Downloading and Filtering

The papers were fetched from arXiv on 26 August 2021. The dataset includes all articles returned by the query “natural language processing” ④ ; in total, 4712 articles were retrieved. Two articles were discarded because their PDFs were too complicated for the tools used for text extraction (1710.10229v1: problems with the chart on page 15; 1803.07136v1: problems with the chart on page 6; see also Section 2.2). Even though the query was not bounded by the time when an article was uploaded to arXiv, it turned out that a solid majority of the articles had submission dates from the last decade. Specifically, the distribution was as follows:

192 records uploaded before 2010-01-01

243 records uploaded from 2010-01-01 to 2014-12-31 (inclusive)

697 records uploaded from 2015-01-01 to 2017-12-31 (inclusive)

3580 records uploaded on or after 2018-01-01

On the basis of this distribution, it was decided that there is no reason to impose time constraints, because the relatively few “old” works should not be able to “overshadow” the “newest” literature. Moreover, it was decided that it is worth keeping all available publications, as they might result in additional findings (e.g., concerning the most original work, described in Section 3.7.4).

Finally, all articles not written in English were discarded, reducing the total count to 4576 texts. This decision, while somewhat controversial, was made so that the authors of this contribution could understand the results, and to avoid complex issues related to text translation. Note that the number of texts not written in English (and stored in arXiv) was relatively small (< 5%). Nevertheless, this leaves open the question of how NLP-related work written in English relates to that written in other languages. Addressing this topic, however, is outside the scope of this contribution.
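
The bucketing behind the distribution reported above can be sketched as follows. This is only an illustration: the helper names and period labels are hypothetical, and it is assumed here that each boundary date belongs to the later period (so that 2018-01-01 falls into the last bucket).

```python
from bisect import bisect_right
from collections import Counter
from datetime import date

# First day of the second, third and fourth period (assumed inclusive).
BOUNDARIES = [date(2010, 1, 1), date(2015, 1, 1), date(2018, 1, 1)]
LABELS = ["before 2010", "2010-2014", "2015-2017", "2018 onward"]


def period_of(day):
    """Return the label of the period that a submission date falls into."""
    # bisect_right counts how many boundaries are <= day.
    return LABELS[bisect_right(BOUNDARIES, day)]


def period_counts(days):
    """Count submissions per period, in chronological order of the periods."""
    counts = Counter(period_of(day) for day in days)
    return {label: counts.get(label, 0) for label in LABELS}
```

The four reported counts add up to the number of retrieved records (192 + 243 + 697 + 3580 = 4712), and the last period accounts for roughly 76% of them (3580 / 4712).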
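
The text does not state how the non-English articles were identified. As one possible stand-in, the sketch below flags a text as English when a sufficient share of its words are frequent English function words. The word list, the threshold and the function names are assumptions chosen for illustration; a dedicated language-identification library would normally be preferable for real data.

```python
import re

# A tiny, hand-picked list of frequent English function words (an assumption).
ENGLISH_STOPWORDS = frozenset(
    "the of and to in a is that for it as with on are this be by an or from".split()
)


def english_stopword_ratio(text):
    """Return the fraction of word tokens that are common English function words."""
    # [^\W\d_]+ matches runs of letters, including non-ASCII ones.
    tokens = re.findall(r"[^\W\d_]+", text.lower())
    if not tokens:
        return 0.0
    hits = sum(token in ENGLISH_STOPWORDS for token in tokens)
    return hits / len(tokens)


def looks_english(text, threshold=0.2):
    """Heuristic language check; the threshold is an illustrative guess."""
    return english_stopword_ratio(text) >= threshold
```

Such a filter would be applied to the extracted text of each paper, keeping only the documents for which `looks_english` returns `True`.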

2.2 Text Preprocessing

Obviously, the key information about a research contribution is contained in its text. Therefore, the subsequent analysis applied NLP techniques to the texts of the downloaded papers. To do this, the following preprocessing has been applied. The PDFs have been converted to plain text using pdfminer.six (a Python library ⑤ ). Note that several other libraries can also be used to convert PDF to text. Specifically, the following libraries have been tried: pdfminer ⑥ , pdftotree ⑦ , BeautifulSoup ⑧ . On the basis of the performed tests, pdfminer.six was selected, because it provided the simplest API, produced results that did not have to be further converted (as opposed to, e.g., BeautifulSoup), and performed the fastest conversion.
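
A minimal sketch of such a conversion step is given below. It relies on `extract_text` from `pdfminer.high_level`, which is part of pdfminer.six; the batch helper `convert_all`, its `converter` parameter and the output layout are hypothetical and only illustrate how PDFs that cannot be converted (like the two articles mentioned in Section 2.1.1) could be set aside.

```python
from pathlib import Path


def pdf_to_text(pdf_path):
    """Convert one PDF to plain text with pdfminer.six."""
    # Imported lazily so the batch logic below can be tried without the library.
    from pdfminer.high_level import extract_text
    return extract_text(str(pdf_path))


def convert_all(pdf_paths, out_dir, converter=pdf_to_text):
    """Write one .txt file per PDF and return the paths that failed to convert."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    failed = []
    for pdf_path in map(Path, pdf_paths):
        try:
            text = converter(pdf_path)
        except Exception:  # a broad catch is deliberate in this sketch
            failed.append(pdf_path)
            continue
        (out_dir / f"{pdf_path.stem}.txt").write_text(text, encoding="utf-8")
    return failed
```

Passing the converter as a parameter makes it easy to swap in another library when comparing conversion quality and speed.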

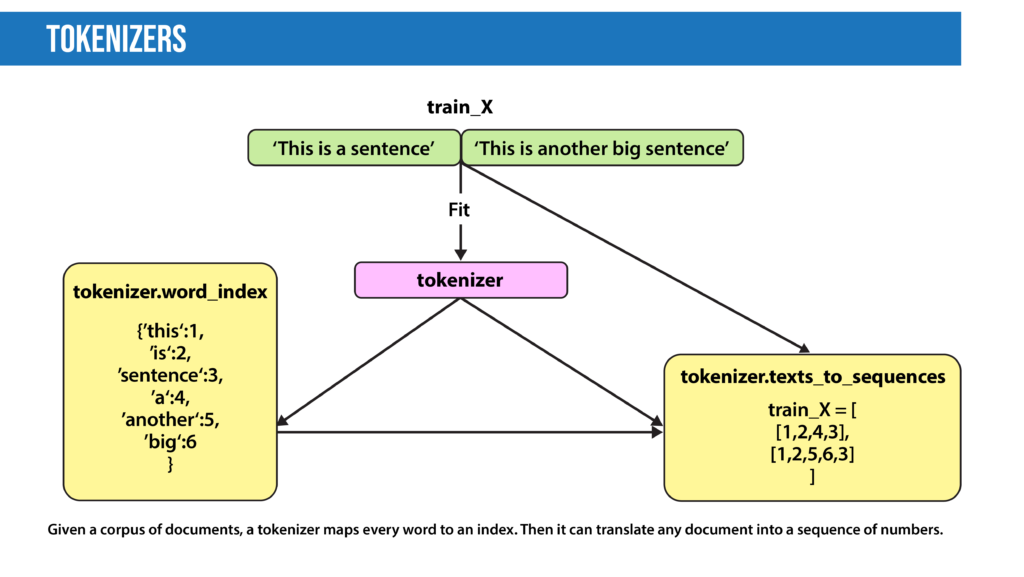

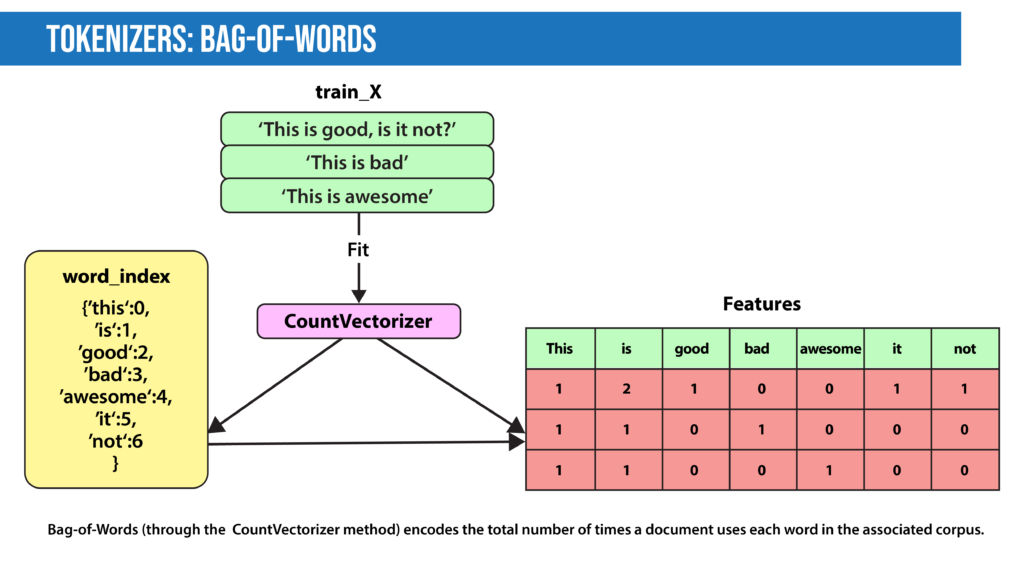

The use of different text analysis methods may require different preprocessing. Some methods, like keyphrase search, work best when the text is “thoroughly cleaned”, i.e. almost reduced to a “bag of words” [ 28 ]. This means that, for instance, words are lemmatized, there is no punctuation, etc. However, some more recent techniques (like text embeddings [ 29 ]) can (and should) be trained on “dirty” text, like Wikipedia [ 30 ] dumps ⑨ or Common Crawl ⑩ . Hence, it is necessary to distinguish between (at least) two levels of text cleaning: (A) “delicately cleaned” text (in what follows, called “Stage 1” cleaning), where only parts insignificant to the NLP analysis are removed, and (B) “very strictly cleaned” text (called “Stage 2” cleaning). Specifically, “Stage 1” cleaning includes removal of:

charts and diagrams improperly converted to text,

arXiv “watermarks”,

reference sections (which were not needed, since metadata from Semantic Scholar was used),

links, formulas, misconverted characters (e.g. “ff”).
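
Part of the Stage 1 cleaning listed above can be sketched with a few regular expressions. The patterns below are assumptions made for illustration, not the rules used in this work: the watermark is taken to be a line starting with `arXiv:`, the reference section is taken to start at the last line consisting only of “References” or “Bibliography”, and ligature characters are replaced by plain letters rather than removed. Removal of misconverted charts and formulas is not covered.

```python
import re

# Illustrative patterns (assumptions), not the rules used in the original work.
URL_RE = re.compile(r"(?:https?://|www\.)\S+")
WATERMARK_RE = re.compile(r"^\s*arXiv:\S+.*$", re.MULTILINE)
REFERENCES_RE = re.compile(
    r"^\s*(?:References|Bibliography)\s*$", re.MULTILINE | re.IGNORECASE
)
# Typographic ligatures that PDF extraction often leaves as single characters.
LIGATURES = {
    "\ufb00": "ff", "\ufb01": "fi", "\ufb02": "fl", "\ufb03": "ffi", "\ufb04": "ffl",
}


def stage1_clean(text):
    """Light cleaning: drop watermark lines, the reference section and links."""
    text = WATERMARK_RE.sub("", text)
    # Keep only the text before the last "References" heading, if there is one.
    headings = list(REFERENCES_RE.finditer(text))
    if headings:
        text = text[: headings[-1].start()]
    text = URL_RE.sub("", text)
    for ligature, plain in LIGATURES.items():
        text = text.replace(ligature, plain)
    # Collapse runs of spaces or tabs, and runs of blank lines, left behind.
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n\s*\n+", "\n\n", text)
    return text.strip()
```

Punctuation, numbers and letter case are deliberately left untouched here, since the result is meant to remain “delicately cleaned” text.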

Stage 2 cleaning is applied to the results of Stage 1 cleaning, and consists of the following operations:

All punctuation, numbers and other non-letter characters were removed, leaving only letters.