The present and future of AI

Finale Doshi-Velez on how AI is shaping our lives and how we can shape AI.

Finale Doshi-Velez, the John L. Loeb Professor of Engineering and Applied Sciences. (Photo courtesy of Eliza Grinnell/Harvard SEAS)

How has artificial intelligence changed and shaped our world over the last five years? How will AI continue to impact our lives in the coming years? Those were the questions addressed in the most recent report from the One Hundred Year Study on Artificial Intelligence (AI100), an ongoing project hosted at Stanford University that will study the status of AI technology and its impacts on the world over the next 100 years.

The 2021 report is the second in a series that will be released every five years until 2116. Titled “Gathering Strength, Gathering Storms,” the report explores the various ways AI is increasingly touching people’s lives in settings that range from movie recommendations and voice assistants to autonomous driving and automated medical diagnoses.

Barbara Grosz, the Higgins Research Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is a member of the standing committee overseeing the AI100 project, and Finale Doshi-Velez, Gordon McKay Professor of Computer Science, is part of the panel of interdisciplinary researchers who wrote this year’s report.

We spoke with Doshi-Velez about the report, what it says about the role AI is currently playing in our lives, and how it will change in the future.

Q: Let's start with a snapshot: What is the current state of AI and its potential?

Doshi-Velez: Some of the biggest changes in the last five years have been how well AIs now perform in large data regimes on specific types of tasks. We've seen [DeepMind’s] AlphaZero become the best Go player entirely through self-play, and everyday uses of AI such as grammar checks and autocomplete, automatic personal photo organization and search, and speech recognition become commonplace for large numbers of people.

In terms of potential, I'm most excited about AIs that might augment and assist people. They can be used to drive insights in drug discovery, help with decision making such as identifying a menu of likely treatment options for patients, and provide basic assistance, such as lane keeping while driving or text-to-speech based on images from a phone for the visually impaired. In many situations, people and AIs have complementary strengths. I think we're getting closer to unlocking the potential of people and AI teams.

Q: Over the course of 100 years, these reports will tell the story of AI and its evolving role in society. Even though there have only been two reports, what's the story so far?

There's actually a lot of change even in five years. The first report is fairly rosy. For example, it mentions how algorithmic risk assessments may mitigate the human biases of judges. The second has a much more mixed view. I think this comes from the fact that as AI tools have come into the mainstream — both in higher stakes and everyday settings — we are appropriately much less willing to tolerate flaws, especially discriminatory ones. There have also been questions of information and disinformation control as people get their news, social media, and entertainment via searches and rankings personalized to them. So, there's a much greater recognition that we should not be waiting for AI tools to become mainstream before making sure they are ethical.

Q: What is the responsibility of institutes of higher education in preparing students and the next generation of computer scientists for the future of AI and its impact on society?

First, I'll say that the need to understand the basics of AI and data science starts much earlier than higher education! Children are being exposed to AIs as soon as they click on videos on YouTube or browse photo albums. They need to understand aspects of AI such as how their actions affect future recommendations.

But for computer science students in college, I think a key thing that future engineers need to realize is when to demand input and how to talk across disciplinary boundaries to get at often difficult-to-quantify notions of safety, equity, fairness, etc. I'm really excited that Harvard has the Embedded EthiCS program to provide some of this education. Of course, this is in addition to standard good engineering practices like building robust models, validating them, and so forth, which is all a bit harder with AI.

Q: Your work focuses on machine learning with applications to healthcare, which is also an area of focus of this report. What is the state of AI in healthcare?

A lot of AI in healthcare has been on the business end, used for optimizing billing, scheduling surgeries, that sort of thing. When it comes to AI for better patient care, which is what we usually think about, there are few legal, regulatory, and financial incentives to do so, and many disincentives. Still, there's been slow but steady integration of AI-based tools, often in the form of risk scoring and alert systems.

In the near future, two applications that I'm really excited about are triage in low-resource settings — having AIs do initial reads of pathology slides, for example, if there are not enough pathologists, or get an initial check of whether a mole looks suspicious — and ways in which AIs can help identify promising treatment options for discussion with a clinician team and patient.

Q: Any predictions for the next report?

I'll be keen to see where currently nascent AI regulation initiatives have gotten to. Accountability is such a difficult question in AI; it's tricky to nurture both innovation and basic protections. Perhaps the most important innovation will be in approaches for AI accountability.

Topics: AI / Machine Learning, Computer Science

04 March 2020

How robots change the world: their impact on regional inequalities.

How robots change the world [ pdf | 617 kB ]

The WIRED Guide to Robots

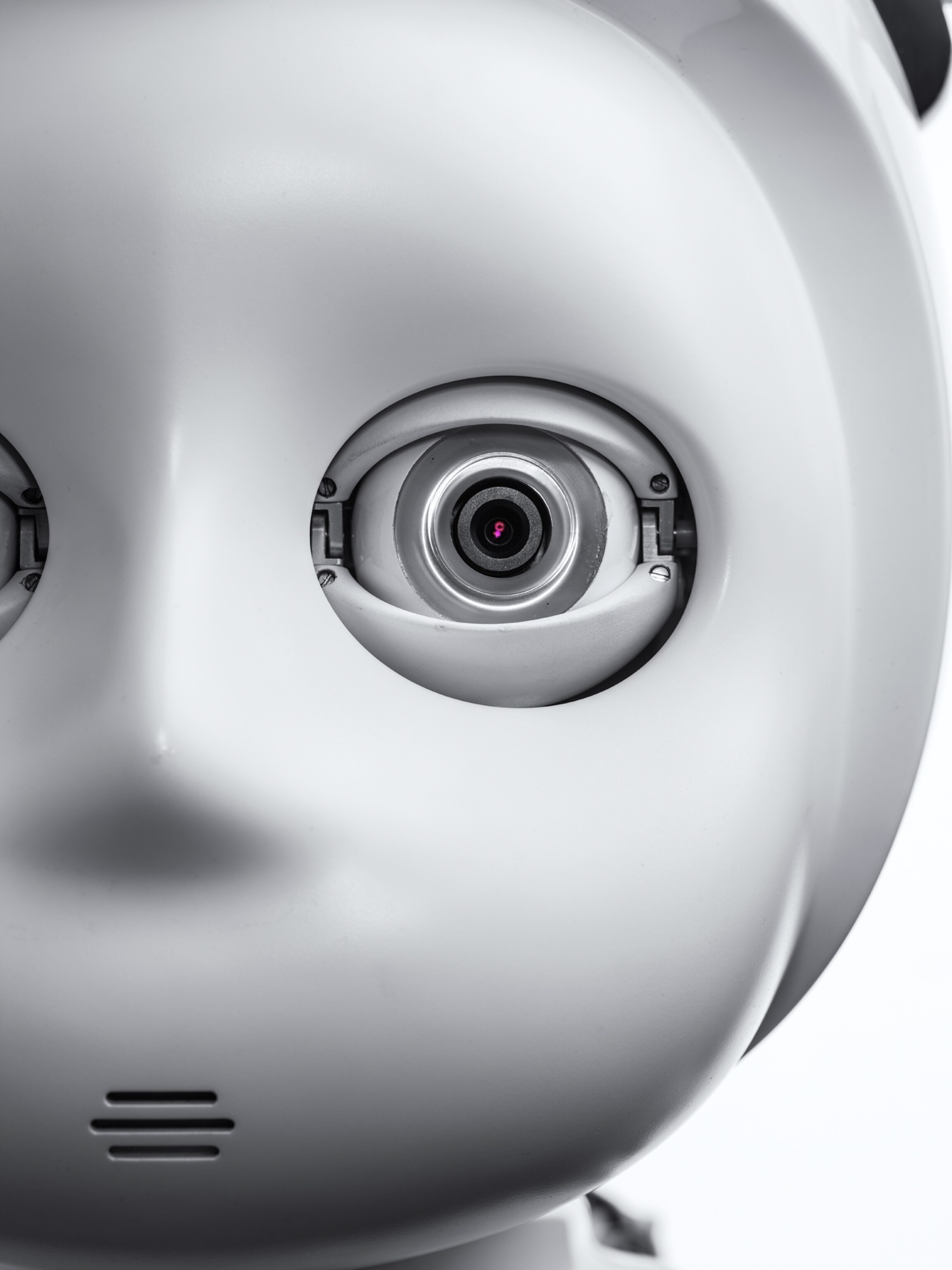

Modern robots are not unlike toddlers: It’s hilarious to watch them fall over, but deep down we know that if we laugh too hard, they might develop a complex and grow up to start World War III. None of humanity’s creations inspires such a confusing mix of awe, admiration, and fear: We want robots to make our lives easier and safer, yet we can’t quite bring ourselves to trust them. We’re crafting them in our own image, yet we are terrified they’ll supplant us.

But that trepidation is no obstacle to the booming field of robotics. Robots have finally grown smart enough and physically capable enough to make their way out of factories and labs to walk and roll and even leap among us. The machines have arrived.

You may be worried a robot is going to steal your job, and we get that. This is capitalism, after all, and automation is inevitable. But you may be more likely to work alongside a robot in the near future than have one replace you. And even better news: You’re more likely to make friends with a robot than have one murder you. Hooray for the future!

The definition of “robot” has been confusing from the very beginning. The word first appeared in 1921, in Karel Čapek’s play R.U.R., or Rossum's Universal Robots. “Robot” comes from the Czech for “forced labor.” These robots were robots more in spirit than form, though. They looked like humans, and instead of being made of metal, they were made of chemical batter. The robots were far more efficient than their human counterparts, and also way more murder-y—they ended up going on a killing spree.

R.U.R. would establish the trope of the Not-to-Be-Trusted Machine (e.g., Terminator, The Stepford Wives, Blade Runner, etc.) that continues to this day—which is not to say pop culture hasn’t embraced friendlier robots. Think Rosie from The Jetsons. (Ornery, sure, but certainly not homicidal.) And it doesn’t get much family-friendlier than Robin Williams as Bicentennial Man.

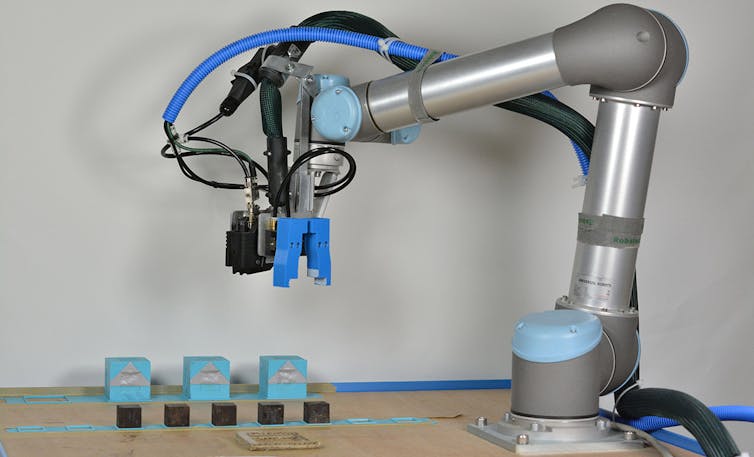

The real-world definition of “robot” is just as slippery as those fictional depictions. Ask 10 roboticists and you’ll get 10 answers—how autonomous does it need to be, for instance. But they do agree on some general guidelines: A robot is an intelligent, physically embodied machine. A robot can perform tasks autonomously to some degree. And a robot can sense and manipulate its environment.

Think of a simple drone that you pilot around. That’s no robot. But give a drone the power to take off and land on its own and sense objects and suddenly it’s a lot more robot-ish. It’s the intelligence and sensing and autonomy that’s key.

But it wasn’t until the 1960s that a company built something that started meeting those guidelines. That’s when SRI International in Silicon Valley developed Shakey, the first truly mobile and perceptive robot. This tower on wheels was well-named—awkward, slow, twitchy. Equipped with a camera and bump sensors, Shakey could navigate a complex environment. It wasn’t a particularly confident-looking machine, but it was the beginning of the robotic revolution.

Around the time Shakey was trembling about, robot arms were beginning to transform manufacturing. The first among them was Unimate, which welded auto bodies. Today, its descendants rule car factories, performing tedious, dangerous tasks with far more precision and speed than any human could muster. Even though they’re stuck in place, they still very much fit our definition of a robot—they’re intelligent machines that sense and manipulate their environment.

Robots, though, remained largely confined to factories and labs, where they either rolled about or were stuck in place lifting objects. Then, in the mid-1980s Honda started up a humanoid robotics program. It developed P3, which could walk pretty darn good and also wave and shake hands, much to the delight of a roomful of suits. The work would culminate in Asimo, the famed biped, which once tried to take out President Obama with a well-kicked soccer ball. (OK, perhaps it was more innocent than that.)

Today, advanced robots are popping up everywhere. For that you can thank three technologies in particular: sensors, actuators, and AI.

So, sensors. Machines that roll on sidewalks to deliver falafel can only navigate our world thanks in large part to the 2004 Darpa Grand Challenge, in which teams of roboticists cobbled together self-driving cars to race through the desert. Their secret? Lidar, which shoots out lasers to build a 3-D map of the world. The ensuing private-sector race to develop self-driving cars has dramatically driven down the price of lidar, to the point that engineers can create perceptive robots on the (relative) cheap.

Lidar is often combined with something called machine vision—2-D or 3-D cameras that allow the robot to build an even better picture of its world. You know how Facebook automatically recognizes your mug and tags you in pictures? Same principle with robots. Fancy algorithms allow them to pick out certain landmarks or objects.

Sensors are what keep robots from smashing into things. They’re why a robot mule of sorts can keep an eye on you, following you and schlepping your stuff around; machine vision also allows robots to scan cherry trees to determine where best to shake them, helping fill massive labor gaps in agriculture.

New technologies promise to let robots sense the world in ways that are far beyond humans’ capabilities. We’re talking about seeing around corners: At MIT, researchers have developed a system that watches the floor at the corner of, say, a hallway, and picks out subtle movements being reflected from the other side that the piddling human eye can’t see. Such technology could one day ensure that robots don’t crash into humans in labyrinthine buildings, and even allow self-driving cars to see occluded scenes.

Within each of these robots is the next secret ingredient: the actuator, which is a fancy word for the combo electric motor and gearbox that you’ll find in a robot’s joint. It’s this actuator that determines how strong a robot is and how smoothly or not smoothly it moves. Without actuators, robots would crumple like rag dolls. Even relatively simple robots like Roombas owe their existence to actuators. Self-driving cars, too, are loaded with the things.

Actuators are great for powering massive robot arms on a car assembly line, but a newish field, known as soft robotics, is devoted to creating actuators that operate on a whole new level. Unlike mule robots, soft robots are generally squishy, and use air or oil to get themselves moving. So for instance, one particular kind of robot muscle uses electrodes to squeeze a pouch of oil, expanding and contracting to tug on weights. Unlike with bulky traditional actuators, you could stack a bunch of these to magnify the strength: A robot named Kengoro, for instance, moves with 116 actuators that tug on cables, allowing the machine to do unsettlingly human maneuvers like pushups. It’s a far more natural-looking form of movement than what you’d get with traditional electric motors housed in the joints.

And then there’s Boston Dynamics, which created the Atlas humanoid robot for the Darpa Robotics Challenge in 2013. At first, university robotics research teams struggled to get the machine to tackle the basic tasks of the original 2013 challenge and the finals round in 2015, like turning valves and opening doors. But Boston Dynamics has since that time turned Atlas into a marvel that can do backflips, far outpacing other bipeds that still have a hard time walking. (Unlike the Terminator, though, it does not pack heat.) Boston Dynamics has also begun leasing a quadruped robot called Spot, which can recover in unsettling fashion when humans kick or tug on it. That kind of stability will be key if we want to build a world where we don’t spend all our time helping robots out of jams. And it’s all thanks to the humble actuator.

At the same time that robots like Atlas and Spot are getting more physically robust, they’re getting smarter, thanks to AI. Robotics seems to be reaching an inflection point, where processing power and artificial intelligence are combining to truly ensmarten the machines. And for the machines, just as in humans, the senses and intelligence are inseparable—if you pick up a fake apple and don’t realize it’s plastic before shoving it in your mouth, you’re not very smart.

This is a fascinating frontier in robotics (replicating the sense of touch, not eating fake apples). A company called SynTouch, for instance, has developed robotic fingertips that can detect a range of sensations, from temperature to coarseness. Another robot fingertip from Columbia University replicates touch with light, so in a sense it sees touch: It’s embedded with 32 photodiodes and 30 LEDs, overlaid with a skin of silicone. When that skin is deformed, the photodiodes detect how light from the LEDs changes to pinpoint where exactly you touched the fingertip, and how hard.

Far from the hulking dullards that lift car doors on automotive assembly lines, the robots of tomorrow will be very sensitive indeed.

Increasingly sophisticated machines may populate our world, but for robots to be really useful, they’ll have to become more self-sufficient. After all, it would be impossible to program a home robot with the instructions for gripping each and every object it ever might encounter. You want it to learn on its own, and that is where advances in artificial intelligence come in.

Take Brett. In a UC Berkeley lab, the humanoid robot has taught itself to conquer one of those children’s puzzles where you cram pegs into different shaped holes. It did so by trial and error through a process called reinforcement learning. No one told it how to get a square peg into a square hole, just that it needed to. So by making random movements and getting a digital reward (basically, yes, do that kind of thing again) each time it got closer to success, Brett learned something new on its own. The process is super slow, sure, but with time roboticists will hone the machines’ ability to teach themselves novel skills in novel environments, which is pivotal if we don’t want to get stuck babysitting them.
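For the curious, here is roughly what that reward loop can look like in code. This is a toy sketch, not the Berkeley lab's software: the one-dimensional "slide the peg toward the hole" task, the function names `train_peg_agent` and `greedy_action`, and every number in it are assumptions made up for illustration. The learning rule is tabular Q-learning, one simple member of the reinforcement learning family.

```python
import random


def train_peg_agent(n_positions=7, goal=5, episodes=500, seed=0):
    """Tabular Q-learning on a made-up 1-D 'slide the peg to the hole' task."""
    rng = random.Random(seed)
    actions = (-1, +1)                     # nudge the peg left or right
    alpha, gamma, epsilon = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate
    # q[(position, action)] is the robot's running estimate of how good a move is.
    q = {(s, a): 0.0 for s in range(n_positions) for a in actions}

    for _ in range(episodes):
        state = rng.randrange(n_positions)  # start each try somewhere random
        for _ in range(50):                 # cap the length of each try
            if state == goal:
                break
            # Mostly repeat what has worked so far; sometimes make a random move.
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q[(state, a)])
            nxt = min(max(state + action, 0), n_positions - 1)
            # The "digital reward": +1 for getting closer to the hole, -1 otherwise.
            reward = 1.0 if abs(goal - nxt) < abs(goal - state) else -1.0
            best_next = 0.0 if nxt == goal else max(q[(nxt, a)] for a in actions)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q


def greedy_action(q, state):
    """The move the trained agent currently prefers at a given position."""
    return max((-1, +1), key=lambda a: q[(state, a)])


if __name__ == "__main__":
    q = train_peg_agent()
    for position in range(7):
        if position != 5:
            print(position, greedy_action(q, position))
```

Nobody tells the agent which direction the hole is in. It only ever sees the reward, and the table of estimates gradually tilts toward the moves that earned one. A real robot faces the same idea with cameras and motors instead of seven positions and two moves, which is a big part of why the process is so slow.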

Another tack here is to have a digital version of a robot train first in simulation, then port what it has learned to the physical robot in a lab. Over at Google, researchers used motion-capture videos of dogs to program a simulated dog, then used reinforcement learning to get a simulated four-legged robot to teach itself to make the same movements. That is, even though both have four legs, the robot’s body is mechanically distinct from a dog’s, so they move in distinct ways. But after many random movements, the simulated robot got enough rewards to match the simulated dog. Then the researchers transferred that knowledge to the real robot in the lab, and sure enough, the thing could walk—in fact, it walked even faster than the robot manufacturer’s default gait, though in fairness it was less stable.

They may be getting smarter day by day, but for the near future we are going to have to babysit the robots. As advanced as they’ve become, they still struggle to navigate our world. They plunge into fountains, for instance. So the solution, at least for the short term, is to set up call centers where robots can phone humans to help them out in a pinch. For example, Tug the hospital robot can call for help if it’s roaming the halls at night and there’s no human around to move a cart blocking its path. The operator would then teleoperate the robot around the obstruction.

Speaking of hospital robots. When the coronavirus crisis took hold in early 2020, a group of roboticists saw an opportunity: Robots are the perfect coworkers in a pandemic. Engineers must use the crisis, they argued in an editorial, to supercharge the development of medical robots, which never get sick and can do the dull, dirty, and dangerous work that puts human medical workers in harm’s way. Robot helpers could take patients’ temperatures and deliver drugs, for instance. This would free up human doctors and nurses to do what they do best: problem-solving and being empathetic with patients, skills that robots may never be able to replicate.

The rapidly developing relationship between humans and robots is so complex that it has spawned its own field, known as human-robot interaction. The overarching challenge is this: It’s easy enough to adapt robots to get along with humans—make them soft and give them a sense of touch—but it’s another issue entirely to train humans to get along with the machines. With Tug the hospital robot, for example, doctors and nurses learn to treat it like a grandparent—get the hell out of its way and help it get unstuck if you have to. We also have to manage our expectations: Robots like Atlas may seem advanced, but they’re far from the autonomous wonders you might think.

What humanity has done is essentially invent a new species, and now we’re maybe having a little buyer’s remorse. Namely, what if the robots steal all our jobs? Not even white-collar workers are safe from hyper-intelligent AI, after all.

A lot of smart people are thinking about the singularity, when the machines grow advanced enough to make humanity obsolete. That will result in a massive societal realignment and species-wide existential crisis. What will we do if we no longer have to work? How does income inequality look anything other than exponentially more dire as industries replace people with machines?

These seem like far-out problems, but now is the time to start pondering them. Which you might consider an upside to the killer-robot narrative that Hollywood has fed us all these years: The machines may be limited at the moment, but we as a society need to think seriously about how much power we want to cede. Take San Francisco, for instance, which is exploring the idea of a robot tax, which would force companies to pay up when they displace human workers.

I can’t sit here and promise you that the robots won’t one day turn us all into batteries, but the more realistic scenario is that, unlike in the world of R.U.R., humans and robots are poised to live in harmony—because it’s already happening. This is the idea of multiplicity, that you’re more likely to work alongside a robot than be replaced by one. If your car has adaptive cruise control, you’re already doing this, letting the robot handle the boring highway work while you take over for the complexity of city driving. The fact that the US economy ground to a standstill during the coronavirus pandemic made it abundantly clear that robots are nowhere near ready to replace humans en masse.

The machines promise to change virtually every aspect of human life, from health care to transportation to work. Should they help us drive? Absolutely. (They will, though, have to make the decision to sometimes kill, but the benefits of precision driving far outweigh the risks.) Should they replace nurses and cops? Maybe not—certain jobs may always require a human touch.

One thing is abundantly clear: The machines have arrived. Now we have to figure out how to handle the responsibility of having invented a whole new species.

If You Want a Robot to Learn Better, Be a Jerk to It
A good way to make a robot learn is to do the work in simulation, so the machine doesn’t accidentally hurt itself. Even better, you can give it tough love by trying to knock objects out of its hand.

Spot the Robot Dog Trots Into the Big, Bad World
Boston Dynamics' creation is starting to sniff out its role in the workforce: as a helpful canine that still sometimes needs you to hold its paw.

Finally, a Robot That Moves Kind of Like a Tongue
Octopus arms and elephant trunks and human tongues move in a fascinating way, which has now inspired a fascinating new kind of robot.

Robots Are Fueling the Quiet Ascendance of the Electric Motor
For something born over a century ago, the electric motor really hasn’t fully extended its wings. The problem? Fossil fuels are just too easy, and for the time being, cheap. But now, it’s actually robots, with their actuators, that are fueling the secret ascendance of the electric motor.

This Robot Fish Powers Itself With Fake Blood
A robot lionfish uses a rudimentary vasculature and “blood” to both energize itself and hydraulically power its fins.

Inside the Amazon Warehouse Where Humans and Machines Become One
In an Amazon sorting center, a swarm of robots works alongside humans. Here’s what that says about Amazon—and the future of work.

This guide was last updated on April 13, 2020.

The robot revolution has arrived

Machines now perform all sorts of tasks: They clean big stores, patrol borders, and help children with autism. But will they improve our lives?

If you’re like most people, you’ve probably never met a robot. But you will.

I met one on a windy, bright day last January, on the short-grass prairie near Colorado’s border with Kansas, in the company of a rail-thin 31-year-old from San Francisco named Noah Ready-Campbell. To the south, wind turbines stretched to the horizon in uneven ranks, like a silent army of gleaming three-armed giants. In front of me was a hole that would become the foundation for another one.

A Caterpillar 336 excavator was digging that hole—62 feet in diameter, with walls that slope up at a 34-degree angle, and a floor 10 feet deep and almost perfectly level. The Cat piled the dug-up earth on a spot where it wouldn’t get in the way; it would start a new pile when necessary. Every dip, dig, raise, turn, and drop of the 41-ton machine required firm control and well-tuned judgment. In North America, skilled excavator operators earn as much as $100,000 a year.

The seat in this excavator, though, was empty. The operator lay on the cab’s roof. It had no hands; three snaky black cables linked it directly to the excavator’s control system. It had no eyes or ears either, since it used lasers, GPS, video cameras, and gyroscope-like sensors that estimate an object’s orientation in space to watch over its work. Ready-Campbell, co-founder of a San Francisco company called Built Robotics, clomped across the coarse dirt, climbed onto the excavator, and lifted the lid of a fancy luggage carrier on the roof. Inside was his company’s product—a 200-pound device that does work that once required a human being.

“This is where the AI runs,” he said, pointing into the collection of circuit boards, wires, and metal boxes that made up the machine: Sensors to tell it where it is, cameras to let it see, controllers to send its commands to the excavator, communication devices that allow humans to monitor it, and the processor where its artificial intelligence, or AI, makes the decisions a human driver would. “These control signals get passed down to the computers that usually respond to the joysticks and pedals in the cab.”

Some roboticists believe people are more comfortable around robots that look like Curi, from the Socially Intelligent Machines Lab at Georgia Tech. If a robot seems too much like a human, they say, people’s acceptance can plummet into “the uncanny valley,” Masahiro Mori’s term for our feelings when a robot seems less like an enhanced machine and more like a disturbingly diminished human—or a corpse.

Others create machines that imitate humans in detail—like Harmony, an expressive talking head that attaches to a silicone and steel sex doll made by Abyss Creations in San Marcos, California.

When I was a child in the 20th century, hoping to encounter a robot when I grew up, I expected it would look and act human, like C-3PO from Star Wars. Instead, the real robots that were being set up in factories were very different. Today millions of these industrial machines bolt, weld, paint, and do other repetitive, assembly-line tasks. Often fenced off to keep the remaining human workers safe, they are what roboticist Andrea Thomaz at the University of Texas has called “mute and brute” behemoths.

Ready-Campbell’s device isn’t like that (although the Cat did have the words “CAUTION Robotic Equipment Moves Without Warning” stamped on its side). And of course it isn’t like C-3PO, either. It is, instead, a new kind of robot, far from human but still smart, adept, and mobile. Once rare, these devices—designed to “live” and work with people who have never met a robot—are migrating steadily into daily life.

Already, in 2020, robots take inventory and clean floors in Walmart. They shelve goods and fetch them for mailing in warehouses. They cut lettuce and pick apples and even raspberries. They help autistic children socialize and stroke victims regain the use of their limbs. They patrol borders and, in the case of Israel’s Harop drone, attack targets they deem hostile. Robots arrange flowers, perform religious ceremonies, do stand-up comedy, and serve as sexual partners.

And that was before the COVID-19 pandemic. Suddenly, replacing people with robots—an idea majorities of people around the world dislike, according to polls—looks medically wise, if not essential.

Robots now deliver food in Milton Keynes, England, tote supplies in a Dallas hospital, disinfect patients’ rooms in China and Europe, and wander parks in Singapore, nagging pedestrians to maintain social distance.

This past spring, in the middle of a global economic collapse, the robotmakers I’d contacted in 2019, when I started working on this article, said they were getting more, not fewer, inquiries from potential customers. The pandemic has made more people realize that “automation is going to be a part of work,” Ready-Campbell told me in May. “The driver of that had been efficiency and productivity, but now there’s this other layer to it, which is health and safety.”

Even before the COVID crisis added its impetus, technological trends were accelerating the creation of robots that could fan out into our lives. Mechanical parts got lighter, cheaper, and sturdier. Electronics packed more computing power into smaller packages. Breakthroughs let engineers put powerful data-crunching tools into robot bodies. Better digital communications let them keep some robot “brains” in a computer elsewhere—or connect a simple robot to hundreds of others, letting them share a collective intelligence, like a beehive’s.

The workplace of the near future “will be an ecosystem of humans and robots working together to maximize efficiency,” said Ahti Heinla, co-founder of the Skype internet-call platform, now co-founder and chief technology officer of Starship Technologies, whose six-wheeled, self-driving delivery robots are rolling around Milton Keynes and other cities in Europe and the United States.

“We’ve gotten used to having machine intelligence that we can carry around with us,” said Manuela Veloso, an AI roboticist at Carnegie Mellon University in Pittsburgh. She held up her smartphone. “Now we’re going to have to get used to intelligence that has a body and moves around without us.”

Outside her office, her team’s “cobots”—collaborative robots—roam the halls, guiding visitors and delivering paperwork. They look like iPads on wheeled display stands. But they move about on their own, even taking elevators when they need to (they beep and flash a polite request to nearby humans to push the buttons for them).

“It’s an inevitable fact that we are going to have machines, artificial creatures, that will be a part of our daily life,” Veloso said. “When you start accepting robots around you, like a third species, along with pets and humans, you want to relate to them.”

We’re all going to have to figure out how. “People have to understand that this isn’t science fiction; it’s not something that’s going to happen 20 years from now,” Veloso said. “It’s started to happen.”

Vidal Pérez likes his new co-worker.

For seven years, working for Taylor Farms in Salinas, California, the 34-year-old used a seven-inch knife to cut lettuce. Bending at the waist, over and over, he would slice off a head of romaine or iceberg, shear off imperfect leaves, and toss it into a bin.

Since 2016, though, a robot has done the slicing. It’s a 28-foot-long, tractorlike harvester that moves steadily down the rows in a cloud of mist from the high-pressure water jet it uses to cut off a lettuce head every time its sensor detects one. The cut lettuce falls onto a sloped conveyor belt that carries it up to the harvester’s platform, where a team of about 20 workers sorts it into bins.

I met Pérez early one morning in June 2019, as he took a break from working a 22-acre field of romaine destined for Taylor’s fast-food and grocery store customers. A couple hundred yards away, another crew of lettuce cutters hunched over the plants, knives flashing as they worked in the old pre-robot style.

“This is better, because you get a lot more tired cutting lettuce with a knife than with this machine,” Pérez said. Riding on the robot, he rotates bins on the conveyor belt. Not all the workers prefer the new system, he said. “Some people want to stay with what they know. And some get bored with standing on the machine, since they’re used to moving all the time through a field.”

Some humans make use of wearable robots, or exoskeletons—combinations of sensors, computers, and motors. Arms with hooks attached, demonstrated by Sarcos Robotics engineer Fletcher Garrison, can lift up to 200 pounds—perhaps as an aid to airport luggage handlers.

Yukio Taguchi, a 59-year-old paraplegic, wears HAL (Hybrid Assistive Limb), developed by Cyberdyne. Taguchi was a surfer and snowboarder for more than 30 years. After a spinal cord injury, he began to train with HAL two times a month at Tsukuba Robocare Center in Tsukuba, Japan.

Taylor Farms is one of the first major California agricultural companies to invest in robotic farming. “We’re going through a generational change … in agriculture,” Taylor Farms California president Mark Borman told me while we drove from the field in his pickup. As older workers leave, younger people aren’t choosing to fill the backbreaking jobs. A worldwide turn toward restrictions on cross-border migration, accelerated by COVID fears, hasn’t helped either. Farming around the world is being roboticized, Borman said. “We’re growing, our workforce is shrinking, so robots present an opportunity that’s good for both of us.”

It was a refrain I heard often last year from employers in farming and construction, manufacturing and health care: We’re giving tasks to robots because we can’t find people to do them.

At the wind farm site in Colorado, executives from the Mortenson Company, a Minneapolis-based construction firm that has hired Built’s robots since 2018, told me about a dire shortage of skilled workers in their industry. Built robots dug 21 foundations at the wind farm.

“Operators will say things like, Oh, hey, here come the job killers,” said Derek Smith, lean innovation manager for Mortenson. “But after they see that the robot takes away a lot of repetitive work and they still have plenty to do, that shifts pretty quickly.”

Once the robot excavator finished the dig we’d watched, a human on a bulldozer smoothed out the work and made ramps. “On this job, we have 229 foundations, and every one is basically the same spec,” Smith said. “We want to take away tasks that are repetitive. Then our operators concentrate on the tasks that involve more art.”

The pandemic’s tsunami of job losses hasn’t changed this outlook, robotmakers and users told me. “Even with a very high unemployment rate, you can’t just snap your fingers and fill jobs that need highly specialized skills, because we don’t have the people that have the training,” said Ben Wolff, chairman and CEO of Sarcos Robotics.

The Utah-based firm makes wearable robots called exoskeletons, which add the strength and precision of a machine to a worker’s movements. Delta Air Lines had just begun to test a Sarcos device with aircraft mechanics when the pandemic decimated air travel.

When I reached Wolff last spring, he was upbeat. “There is a short-term slowdown, but long term we expect more business,” he said.

Most employers are now looking to reduce contact among employees, and a device that lets one do the work of two might help. Since the pandemic began, Wolff told me, Sarcos has seen a jump in inquiries, some from companies he didn’t expect—for example, a major electronics firm, a pharmaceutical company, a meat-packer. The electronics- and pillmakers wanted to move heavy supplies with fewer people. The meat-packer was interested in spreading out its crowded workers.

The RBO Hand 3 uses compressed air in its silicone fingers. When the robot grasps an apple, a flower, or a human hand, the fingers naturally take the shape of the thing grasped. The physics of the situation allows versatility. This “soft robotics” approach to design can create cheaper, more versatile machines—which humans will like. “People are more comfortable with humanlike robot hands,” says roboticist Steffen Puhlmann.

In a world that now fears human contact, it won’t be easy to fill jobs caring for children or the elderly. Maja Matarić, a computer scientist and roboticist at the University of Southern California, develops “socially assistive robots”: machines that do social support rather than physical labor. One of her lab’s projects, for example, is a robot coach that leads an elderly user through an exercise routine, then encourages the human to go outside and walk.

“It says, ‘I can’t go outside, but why don’t you take a walk and tell me about it?’” Matarić told me. The robot is a white plastic head, torso, and arms that sits atop a rolling metal stand. But its sensors and software allow it to do some of what a human coach would do—for example, saying, “Bend your left forearm inward a little,” during exercise, or “Nice job!” afterward.

We walked around her lab—a warren of young people in cubicles, working on the technologies that might let a robot help keep the conversation going in a support group, for example, or respond in a way that makes a human feel like the machine is empathizing. I asked Matarić if people ever got creeped out at the thought of a machine watching over Grandpa.

“We’re not replacing caregivers,” she said. “We’re filling a gap. Grown-up children can’t be there with elderly parents. And the people who take care of other people in this country are underpaid and underappreciated. Until that changes, using robots is what we’ll have to do.”

Days after I visited Matarić’s lab, in a different world 20 miles due south of the university, hundreds of longshoremen were marching against robots. This was in the San Pedro section of Los Angeles, where container cranes tower over a landscape of warehouses and docks and modest residential streets. Generations of people in this tight-knit community have worked as longshoremen on the docks. The current generation didn’t like a plan to bring robot cargo handlers to the port’s largest terminal, even though such machines already are common in ports worldwide, including others in the Los Angeles area.

Designers shape each robot according to its duties—and the needs of people it works with. The five-foot-nine-inch, 222-pound HRP-5P, developed at Japan’s National Institute of Advanced Industrial Science and Technology, has arms, legs, and a head and handles heavy loads in places such as construction sites and shipyards.

In contrast, SQ-2, a security robot, is limbless and quietly unassuming at slightly more than four feet tall and 143 pounds. Its shape accommodates a 360-degree camera, a laser mapping system, and a computer that allows the robot to patrol on its own.

The dockworkers don’t expect the world to stop changing, said Joe Buscaino, who represents San Pedro on the Los Angeles City Council. San Pedro has gone through economic upheavals before, as fishing, canning, and shipbuilding boomed and busted. The problem with robots, Buscaino told me, is the speed with which employers are dropping them into workers’ lives.

“Years ago my dad saw that fishing was coming to an end, so he got a job in a bakery,” he said. “He was able to transition. But automation has the ability to take jobs overnight.”

Economists disagree a great deal about how much and how soon robots will affect future jobs. But many experts do agree on one thing: Some workers will have a much harder time adapting to robots.

“The evidence is fairly clear that we have many, many fewer blue-collar production jobs, assembly jobs, in industries that are adopting robots,” said Daron Acemoglu, an economist at MIT who has studied the effects of robots and other automation. “That doesn’t mean that future technology cannot create jobs. But the notion that we’re going to adopt automation technologies left, right, and center and also create lots of jobs is a purposefully misleading and incorrect fantasy.”

For all the optimism of investors, researchers, and entrepreneurs at start-ups, many people, such as Buscaino, worry about a future full of robots. They fear robots won’t take over just grunt work but the whole job, or at least the parts of it that are challenging, honorable—and well paid. (The latter process is prevalent enough that economists have a name for it: “de-skilling.”) People also fear robots will make work more stressful, perhaps even more dangerous.

Beth Gutelius, an urban planner and economist at the University of Illinois at Chicago who has researched the warehouse industry, told me about one warehouse she visited after it introduced robots. The robots were quickly delivering goods to humans for packing, and this was saving the workers a lot of walking back and forth. It also made them feel rushed and eliminated their chance to speak to one another.

Employers should consider that this kind of stress on employees “is not healthy, and it’s real, and it has impacts on the well-being of the workers,” said Dawn Castillo, an epidemiologist who manages occupational robot research at the National Institute for Occupational Safety and Health at the CDC. The Center for Occupational Robotics Research actually expects robot-related deaths “will likely increase over time,” according to its website. This is because there are more robots in more places with each passing year, but also because robots are working in new settings—where they meet people who don’t know what to expect and situations that their designers didn’t necessarily anticipate.

In San Pedro, after Buscaino won a city council vote to block the automation plan, the International Longshore and Warehouse Union negotiated what the union’s local chapter president called a “bittersweet” deal with Maersk, the Danish conglomerate that operates the container terminal. The dockworkers agreed to end the fight against robots in exchange for 450 mechanics getting “upskilled”: trained to work on the robots. Another 450 workers will be “reskilled”: trained to work at new, tech-friendly jobs.

How effective all that retraining will be, especially for middle-aged workers, remains to be seen, Buscaino said. A friend of his is a mechanic, whose background with cars and trucks leaves him well positioned to add robot maintenance to his skills. On the other hand, “my brother-in-law Dominic, who is a longshoreman today, he has no clue how to work on these robots. And he’s 56.”

The word “robot” is precisely 100 years old this year. It was coined by the Czech writer Karel Čapek, in a play that set the template for a century’s machine dreams and nightmares. The robots in that play, R.U.R., look and act like people, do all the work of humans—and wipe out the human race before the curtain falls.

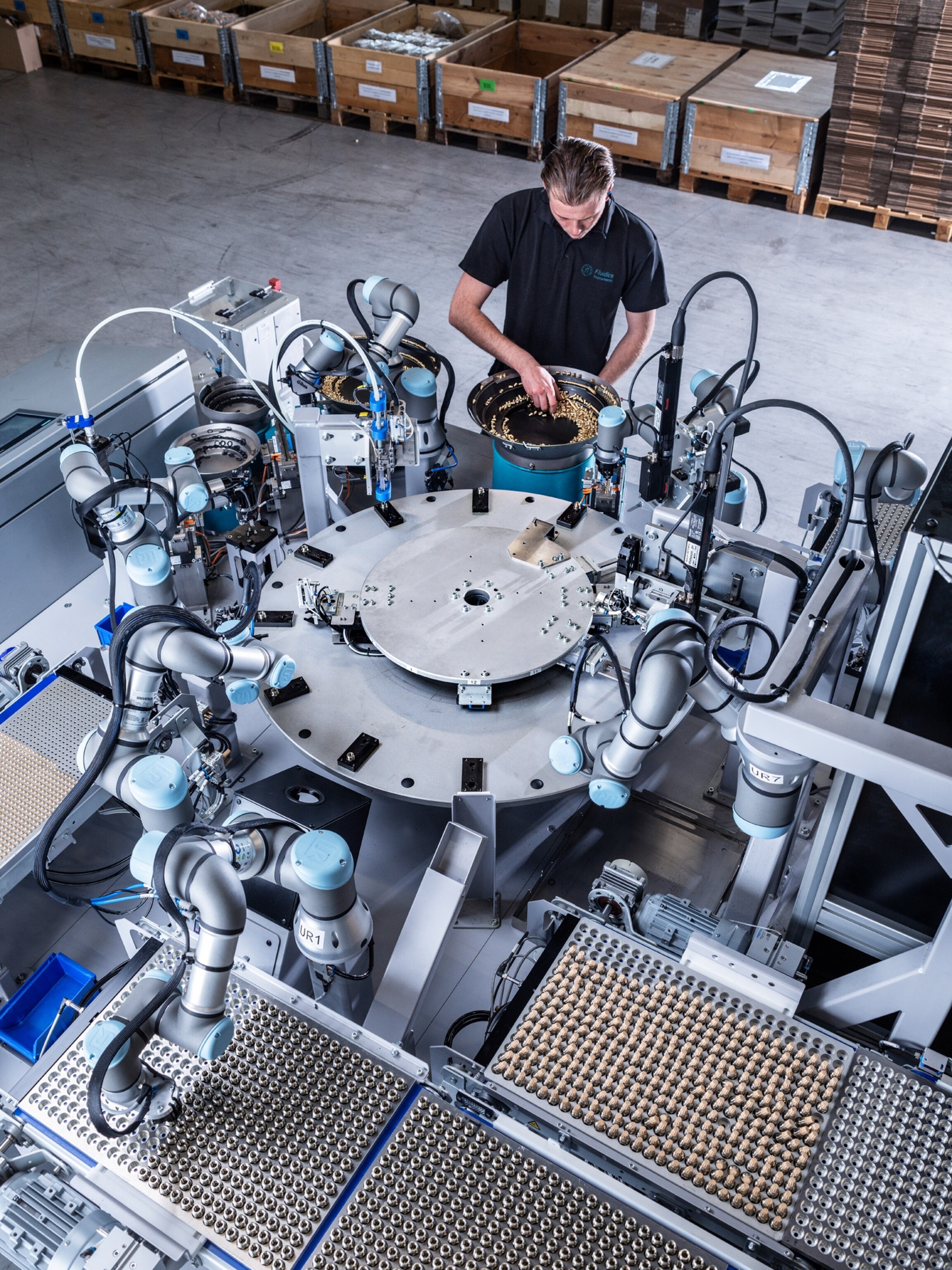

Robot partners come in many forms. At Fluidics Instruments in Eindhoven, Netherlands, an employee works with seven robot arms to assemble parts for oil and gas burners. Like traditional factory robots, these cobots are efficient and precise—able to produce a thousand nozzles an hour. But unlike older machines, they adapt quickly to changed specs or a new task.

At Medical City Heart Hospital in Dallas, nurses work with Moxi, a robot built to learn and then perform tasks that take nurses away from patients, such as fetching supplies, delivering lab samples, and removing bags of soiled linens.

Ever since, imaginary robots from the Terminator to Japan’s Astro Boy to those Star Wars droids have had a huge influence on the plans of robotmakers. They also have shaped the public’s expectations of what robots are and what they can do.

Tensho Goto is a monk in the Rinzai school of Japanese Zen Buddhism. A vigorous, sturdy man with a cheerful manner, Goto met me in a spare, elegant room at Kodai-ji, the 17th-century temple in Kyoto where he is the chief steward. He seemed the picture of tradition. Yet he has been dreaming of robots for many years. It began decades ago, when he read about artificial minds and thought about reproducing the Buddha himself in silicone, plastic, and metal. With android versions of the sages, he said, Buddhists could “hear their words directly.”

Once he began collaborating with roboticists at Osaka University, though, robot reality dampened the robot dream. He learned that “as AI technology exists today, it is impossible to create human intelligence, let alone the personages of those who have attained enlightenment.” But like many roboticists, he didn’t give up, instead settling for what is possible today.

It stands at one end of a white-walled room on the temple grounds: a metal and silicone incarnation of Kannon, the deity who in Japanese Buddhism embodies compassion and mercy. For centuries, temples and shrines have used statues to attract people and get them to focus on Buddhist tenets. “Now, for the first time, a statue moves,” Goto said.

Mindar, as the robot is called, delivers prerecorded sermons in a forceful, not-quite-human female voice, gently gesticulating with her arms and turning her head from side to side to survey the audience. When her eyes fall on you, you feel something, but it isn’t her intelligence. There is no AI in Mindar. Goto hopes that will change over time, and that his moving statue will become capable of holding conversations with people and answering their religious questions.

Robot soccer players have been taking the field since 1996 as part of an international league called RoboCup. Pitting the robot teams against one another in local, regional, and world championships is part fun and part research for roboticists around the world, even though humans will remain better at the game for decades to come. Here, Ishan Durugkar, a Ph.D. student in computer science at the University of Texas, prepares to put his school’s squad, the UT Austin Villa robot soccer team, through some drills.

Across the Pacific, in a nondescript house in a quiet suburb of San Diego, I met a man who seeks to provide a different kind of intimate experience with robots. Artist Matt McMullen is CEO of a company called Abyss Creations, which makes realistic, life-size sex dolls. McMullen leads a team of programmers, robotics specialists, special-effects experts, engineers, and artists who create robot companions that can appeal to hearts and minds as well as sex organs.

The company has made silicone-skin, steel-skeleton RealDolls for more than a decade. They go for about $4,000. But these days, for an additional $8,000, a customer receives a robotic head packed with electronics that power facial expressions, a voice, and an artificial intelligence that can be programmed via a smartphone app.

Like Siri or Alexa, the doll’s AI gets to know the user via the commands and questions he or she gives it. Below the neck, for now, the robot is still a doll—its arms and legs move only when the user manipulates them.

“We don’t today have a real artificial intelligence that resembles a human mind,” McMullen acknowledges. “But I think we will. I think that is inevitable.” He has no doubt the market is there. “I think there are people who can greatly benefit from robots that look like people,” he said.

We are getting attached already to ones that don’t look much like us at all.

Military units have held funerals for bomb-clearing robots blown up in action. Nurses in hospitals tease their robot colleagues. People in experiments have declined to rat out their robot teammates. As robots get more lifelike, people probably will invest them with even more affection and trust—too much, perhaps. The influence of fantasy robots leads people to think that today’s real machines are far more capable than they really are. Adapting well to their presence among us, experts told me, must start with realistic expectations.

Robots can be programmed or trained to do a well-defined task—dig a foundation, harvest lettuce—better or at least more consistently than humans can. But none can equal the human mind’s ability to do a lot of different tasks, especially unexpected ones. None has yet mastered common sense.

Today’s robots can’t match human hands either, said Chico Marks, a manufacturing engineering manager at Subaru’s auto plant in Lafayette, Indiana. The plant, like those of all carmakers, has used standard industrial robots for decades. It’s now gradually adding new types, for tasks such as moving self-guided carts that take parts around the plant. Marks showed me a combination of wires that would snake through a curving section near a future car’s rear door.

“Routing a wiring harness into a vehicle is not something that lends itself well to automation,” Marks said. “It requires a human brain and tactile feedback to know it’s in the right place and connected.”

Robot legs aren’t any better. In 1996 Veloso, the Carnegie Mellon AI roboticist, was part of a challenge to create robots that would play soccer better than humans by 2050. She was one of a group of researchers that year who created the RoboCup tournament to spur progress. Today RoboCup is a well-loved tradition for engineers on several continents, but no one, including Veloso, expects robots to play soccer better than humans anytime soon.

“It’s crazy how sophisticated our bodies are as machines,” she said. “We’re very good at handling gravity, dealing with forces as we walk, being pushed and keeping our balance. It’s going to be many years before a bipedal robot can walk as well as a person.”

Through “tele-operation”—controlling a robot remotely via computer, smartphone, or even just eye movements—robots that navigate human spaces have expanded opportunities for people who are disabled. Though her mobility is limited by a neuromuscular disorder, Nozomi Murata, 34, works as a secretary in a Tokyo office via an OriHime robot created by OryLab. She tele-operates the robot from her home elsewhere in the city.

In the Minato City section of Tokyo, Murata’s tele-operated OriHime robot greets its inventor, Kentaro Yoshifuji, co-founder and CEO of OryLaboratory, which makes the robot. Yoshifuji created the device to alleviate loneliness by giving people a robotic means to connect directly with one another.

Robots are not going to be artificial people. We need to adapt to them, as Veloso said, as to a different species—and most robotmakers are working hard to engineer robots that make allowances for our human feelings. At the wind farm site, I learned that “bouncing” the toothed bucket of a big excavator against the ground is a sign of inexperience in a human operator. (The resulting jolt can actually injure the person in the cab.) To a robot excavator, the bounce makes little difference. Yet Built Robotics changed its robot’s algorithms to avoid bounce, because it looks bad to human professionals, and Mortenson wants workers of all species to get along.

It’s not just people who change as robots come on line. Taylor Farms, Borman told me, is working on a new light bulb–shaped lettuce with a longer stalk. It won’t taste or feel different; that shape is just easier for a robot to cut.

Bossa Nova Robotics makes a robot that roams thousands of stores in North America, including 500 Walmarts, scanning shelves to track inventory. The firm’s engineers asked themselves how friendly and approachable their robot should look. In the end it looks like a portable air conditioner with a six-and-a-half-foot-high periscope attached—no face or eyes.

“It’s a tool,” explained Sarjoun Skaff, Bossa Nova’s co-founder and chief technology officer. He and the other engineers wanted shoppers and workers to like the machine, but not too much. Too industrial or too strange, and shoppers would flee. Too friendly, and people would chat and play with it and slow down its work. In the long run, Skaff told me, robots and people will settle on “a common set of human-robot interaction conventions” that will enable humans to know “how to interpret what the robot is doing and how to behave around it.” But for now, robotmakers and ordinary people are feeling their way there.

Outside Tokyo, at the factory of Glory, a maker of money-handling devices, I stopped at a workstation where a nine-member team was assembling a coin-change machine. A plastic-sheathed sheet of paper displayed photos and names of three women, two men, and four robots.

The gleaming white, two-armed robots, which looked a little like the offspring of a refrigerator and WALL·E, were named after currencies. As I watched the team swiftly add parts to a coin changer, a robot named Dollar needed help a couple of times—once when it couldn’t peel the backing off a sticker. A red light near its station went on, and a human quickly left his own spot on the line to fix the problem.

Dollar has cameras on its “wrists,” but it also has a head with two camera eyes. “Conceptually it is meant to be a human-shaped robot,” explained manager Toshifumi Kobayashi. “So it has a head.”

That little accommodation didn’t immediately convince the real humans, said Shota Akasaka, 32, a boyish and smiling team leader. “I was really not sure that it would be able to do human work, that it would be able to screw in a screw,” he said. “When I saw the screw go in perfectly, I realized we were at the dawn of a new era.”

In a conference room northeast of Tokyo, I learned what it’s like to work with a robot in the closest way: by wearing it.

The exoskeleton, manufactured by a Japanese firm called Cyberdyne, consisted of two connected white tubes that curved across my back, a belt at my waist, and two straps on my thighs. It felt like being strapped into a parachute or an amusement park ride. I bent at the waist to lift a 40-pound container of water, which should have hurt my lower back. Instead, a computer in the tubes used the change in position to deduce that I was lifting an object, and motors kicked in to assist me. (More advanced users would have worn electrodes so the device could read the signals their brain was sending to their muscles.)

The robot was designed to assist only my back muscles; when I squatted and put the effort into my legs, as you’re supposed to, the device didn’t help much. Still, when it worked, it seemed like a magic trick—I felt the weight, then I didn’t.

Cyberdyne sees a large market in medical rehabilitation; it also makes a lower-limb exoskeleton that is being used to help people regain the use of their own legs. For many of its products, “another market will be for workers, so they can work longer and without risking injuries,” Cyberdyne spokesman Yudai Katami said.

Sarcos Robotics, the other maker of exoskeletons, is thinking along similar lines. One purpose of his devices, said CEO Wolff, was “allowing humans to be more productive so they can keep up with the machines that enable automation.”

Will we adapt to the machines more than they adapt to us? We might be asked to. Roboticists dream of machines that make life better, but companies sometimes have incentives to install robots that don’t. Robots, after all, don’t need paid vacations or medical insurance. Beyond that, many nations get a lot of tax revenue from labor, while encouraging automation with tax breaks and other incentives. Companies thus save money by cutting employees and adding robots.

“You get a lot of subsidies for installing equipment, especially digital equipment and robots,” Acemoglu said. “So that encourages firms to go for machines rather than humans, even if machines are no better.” Robots also are just more exciting than mere humans.

There is “a particular zeitgeist among many technologists and managers that humans are troublesome,” Acemoglu said. There’s this feeling of, “You don’t need them. They make mistakes. They make demands. Let’s go for automation.”

After Noah Ready-Campbell decided to go into construction robots, his father, Scott Campbell, spent more than three hours on a car ride gently asking him if this was really such a good idea. The elder Campbell, who used to work in construction himself, now represents the town of St. Johnsbury in Vermont’s general assembly. He quickly came to believe in his son’s work, but his constituents worry about robots, he told me, and it’s not all about economics. Perhaps it will be possible to give all our work to robots someday—even the work of religious ministry, even “sex work.” But Campbell’s constituents want to keep something for humanity: the work that makes humans feel valued.

Mindar—a robotic incarnation of Kannon, the deity of mercy and compassion in Japanese Buddhism—faces Tensho Goto, a monk at the Kodaiji temple in Kyoto, Japan. Mindar, created by a team led by roboticist Hiroshi Ishiguro of Osaka University, can recite Buddhist teachings.

“What is important about work is not what you get for it but what you become by doing it,” Campbell said. “I feel like it’s profoundly true. That’s the most important thing about doing a job.”

A century after they were first dreamed up for the stage, real robots are making life easier and safer for some people. They’re also making it a bit more robot-like. For many companies, that’s part of the attraction.

“Right now every construction site is different, and every operator is an artist,” said Gaurav Kikani, Built Robotics’ vice president for strategy, operations, and finance. Operators like the variety; employers not so much. They save time and money when they know that a task is done the same way every time and doesn’t depend on an individual’s decisions. Though construction sites will always need human adaptability and ingenuity for some tasks, “with robots we see an opportunity to standardize practices and create efficiencies for the tasks where robots are appropriate,” Kikani said.

In the moments when someone has to decide whose preferences ought to prevail, technology itself has no answers. However far they advance, there’s one task that robots won’t help us solve: Deciding how, when, and where to use them.

Related Topics

- ENGINEERING

- ARTIFICIAL INTELLIGENCE

Hidden details from the Battle of the Bulge come to light

This 'Gate to Hell' has burned for decades. Will we ever shut it?

In 1969, the U.S. turned off Niagara Falls. Here’s what happened next.

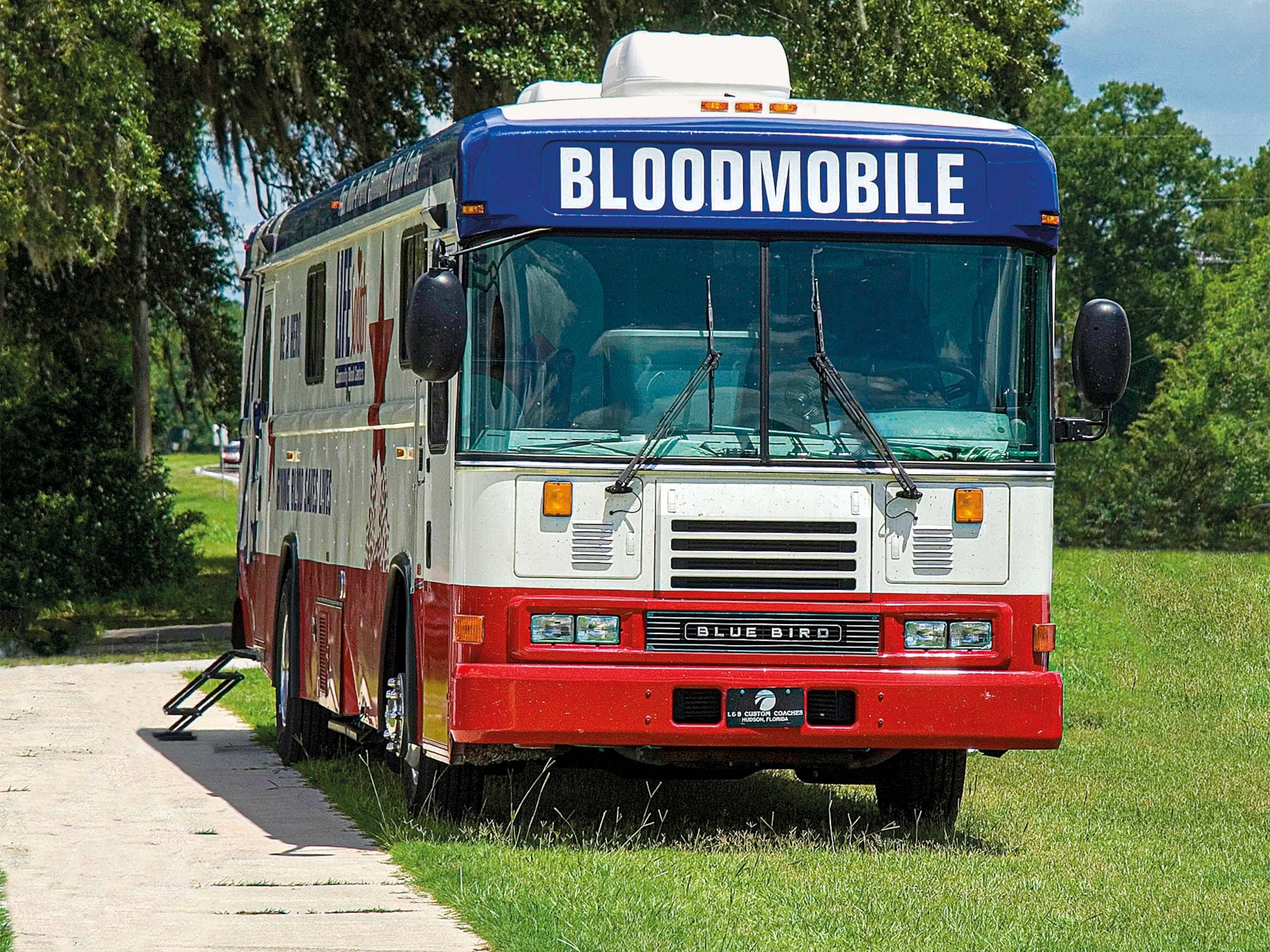

Meet the inventor of the Bloodmobile

IVF revolutionized fertility. Will these new methods do the same?

- History & Culture

- Environment

- Paid Content

History & Culture

- History Magazine

- Mind, Body, Wonder

- Terms of Use

- Privacy Policy

- Your US State Privacy Rights

- Children's Online Privacy Policy

- Interest-Based Ads

- About Nielsen Measurement

- Do Not Sell or Share My Personal Information

- Nat Geo Home

- Attend a Live Event

- Book a Trip

- Inspire Your Kids

- Shop Nat Geo

- Visit the D.C. Museum

- Learn About Our Impact

- Support Our Mission

- Advertise With Us

- Customer Service

- Renew Subscription

- Manage Your Subscription

- Work at Nat Geo

- Sign Up for Our Newsletters

- Contribute to Protect the Planet

Copyright © 1996-2015 National Geographic Society Copyright © 2015-2024 National Geographic Partners, LLC. All rights reserved

How artificial intelligence is transforming the world

Darrell M. West, Senior Fellow, Center for Technology Innovation, and Douglas Dillon Chair in Governmental Studies; John R. Allen

April 24, 2018

Artificial intelligence (AI) is a wide-ranging tool that enables people to rethink how we integrate information, analyze data, and use the resulting insights to improve decision making—and already it is transforming every walk of life. In this report, Darrell West and John Allen discuss AI’s application across a variety of sectors, address issues in its development, and offer recommendations for getting the most out of AI while still protecting important human values.

Table of Contents

- I. Qualities of artificial intelligence

- II. Applications in diverse sectors

- III. Policy, regulatory, and ethical issues

- IV. Recommendations

- V. Conclusion

Most people are not very familiar with the concept of artificial intelligence (AI). As an illustration, when 1,500 senior business leaders in the United States in 2017 were asked about AI, only 17 percent said they were familiar with it. 1 A number of them were not sure what it was or how it would affect their particular companies. They understood there was considerable potential for altering business processes, but were not clear how AI could be deployed within their own organizations.

Despite this widespread lack of familiarity, AI is a technology that is transforming every walk of life. It is a wide-ranging tool that enables people to rethink how we integrate information, analyze data, and use the resulting insights to improve decisionmaking. Our hope through this comprehensive overview is to explain AI to an audience of policymakers, opinion leaders, and interested observers, and demonstrate how AI already is altering the world and raising important questions for society, the economy, and governance.

In this paper, we discuss novel applications in finance, national security, health care, criminal justice, transportation, and smart cities, and address issues such as data access problems, algorithmic bias, AI ethics and transparency, and legal liability for AI decisions. We contrast the regulatory approaches of the U.S. and European Union, and close by making a number of recommendations for getting the most out of AI while still protecting important human values. 2

In order to maximize AI benefits, we recommend nine steps for going forward:

- Encourage greater data access for researchers without compromising users’ personal privacy,

- invest more government funding in unclassified AI research,

- promote new models of digital education and AI workforce development so employees have the skills needed in the 21st-century economy,

- create a federal AI advisory committee to make policy recommendations,

- engage with state and local officials so they enact effective policies,

- regulate broad AI principles rather than specific algorithms,

- take bias complaints seriously so AI does not replicate historic injustice, unfairness, or discrimination in data or algorithms,

- maintain mechanisms for human oversight and control, and

- penalize malicious AI behavior and promote cybersecurity.

Qualities of artificial intelligence

Although there is no uniformly agreed upon definition, AI generally is thought to refer to “machines that respond to stimulation consistent with traditional responses from humans, given the human capacity for contemplation, judgment and intention.” 3 According to researchers Shubhendu and Vijay, these software systems “make decisions which normally require [a] human level of expertise” and help people anticipate problems or deal with issues as they come up. 4 As such, they operate in an intentional, intelligent, and adaptive manner.

Intentionality

Artificial intelligence algorithms are designed to make decisions, often using real-time data. They are unlike passive machines that are capable only of mechanical or predetermined responses. Using sensors, digital data, or remote inputs, they combine information from a variety of different sources, analyze the material instantly, and act on the insights derived from those data. With massive improvements in storage systems, processing speeds, and analytic techniques, they are capable of tremendous sophistication in analysis and decisionmaking.

Intelligence

AI generally is undertaken in conjunction with machine learning and data analytics. 5 Machine learning takes data and looks for underlying trends. If it spots something that is relevant for a practical problem, software designers can take that knowledge and use it to analyze specific issues. All that is required are data that are sufficiently robust that algorithms can discern useful patterns. Data can come in the form of digital information, satellite imagery, visual information, text, or unstructured data.
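As a minimal illustration of what "looking for underlying trends" can mean, the sketch below fits a straight line to a handful of observations using ordinary least squares. The data values and the names `fit_trend`, `months`, and `sales` are hypothetical and are not drawn from the report; real machine learning systems typically use far richer models and much more data.

```python
from statistics import mean


def fit_trend(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical observations: one value per month.
months = [1, 2, 3, 4, 5, 6]
sales = [10.0, 12.1, 13.9, 16.2, 18.0, 19.8]

slope, intercept = fit_trend(months, sales)
forecast_month_7 = slope * 7 + intercept  # extrapolate the learned trend
print(f"trend: {slope:.2f} per month, forecast for month 7: {forecast_month_7:.1f}")
```

The point of the sketch is only that the pattern (a steady upward slope) is learned from the data rather than written in by a programmer.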

Adaptability

AI systems have the ability to learn and adapt as they make decisions. In the transportation area, for example, semi-autonomous vehicles have tools that let drivers and vehicles know about upcoming congestion, potholes, highway construction, or other possible traffic impediments. Vehicles can take advantage of the experience of other vehicles on the road, without human involvement, and the entire corpus of their achieved “experience” is immediately and fully transferable to other similarly configured vehicles. Their advanced algorithms, sensors, and cameras incorporate experience in current operations, and use dashboards and visual displays to present information in real time so human drivers are able to make sense of ongoing traffic and vehicular conditions. And in the case of fully autonomous vehicles, advanced systems can completely control the car or truck, and make all the navigational decisions.

Applications in diverse sectors

AI is not a futuristic vision, but rather something that is here today and being integrated with and deployed into a variety of sectors. This includes fields such as finance, national security, health care, criminal justice, transportation, and smart cities. There are numerous examples where AI already is making an impact on the world and augmenting human capabilities in significant ways. 6

One reason for the growing role of AI is the tremendous opportunity for economic development that it presents. A project undertaken by PricewaterhouseCoopers estimated that “artificial intelligence technologies could increase global GDP by $15.7 trillion, a full 14%, by 2030.” 7 That includes advances of $7 trillion in China, $3.7 trillion in North America, $1.8 trillion in Northern Europe, $1.2 trillion for Africa and Oceania, $0.9 trillion in the rest of Asia outside of China, $0.7 trillion in Southern Europe, and $0.5 trillion in Latin America. China is making rapid strides because it has set a national goal of investing $150 billion in AI and becoming the global leader in this area by 2030.
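The regional figures quoted above can be checked with a few lines of arithmetic. The sketch below simply sums them and reports each region's share; the variable names are illustrative. As quoted, the regions add up to $15.8 trillion, slightly above the $15.7 trillion headline, which may reflect rounding in the regional estimates (an assumption, not something the source states).

```python
# Regional GDP gains by 2030, in trillions of U.S. dollars, as quoted in the text.
regional_gains = {
    "China": 7.0,
    "North America": 3.7,
    "Northern Europe": 1.8,
    "Africa and Oceania": 1.2,
    "Rest of Asia": 0.9,
    "Southern Europe": 0.7,
    "Latin America": 0.5,
}
HEADLINE_TOTAL = 15.7  # global figure quoted in the text

total = round(sum(regional_gains.values()), 1)
gap = round(total - HEADLINE_TOTAL, 1)

# Print regions from largest to smallest gain, with each region's share of the sum.
for region, gain in sorted(regional_gains.items(), key=lambda kv: -kv[1]):
    print(f"{region:<20} ${gain:>4.1f}T  {gain / total:6.1%}")
print(f"Sum of regions: ${total}T (headline: ${HEADLINE_TOTAL}T, gap: ${gap}T)")
```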

Meanwhile, a McKinsey Global Institute study of China found that “AI-led automation can give the Chinese economy a productivity injection that would add 0.8 to 1.4 percentage points to GDP growth annually, depending on the speed of adoption.” 8 Although its authors found that China currently lags the United States and the United Kingdom in AI deployment, the sheer size of its AI market gives that country tremendous opportunities for pilot testing and future development.

Finance

Investments in financial AI in the United States tripled between 2013 and 2014 to a total of $12.2 billion. 9 According to observers in that sector, “Decisions about loans are now being made by software that can take into account a variety of finely parsed data about a borrower, rather than just a credit score and a background check.” 10 In addition, there are so-called robo-advisers that “create personalized investment portfolios, obviating the need for stockbrokers and financial advisers.” 11 These advances are designed to take the emotion out of investing, base decisions on analytical considerations, and make those choices in a matter of minutes.

A prominent example of this is taking place in stock exchanges, where high-frequency trading by machines has replaced much of human decisionmaking. People submit buy and sell orders, and computers match them in the blink of an eye without human intervention. Machines can spot trading inefficiencies or market differentials on a very small scale and execute trades that make money according to investor instructions. 12 Powered in some places by advanced computing, these tools have much greater capacities for storing information because of their emphasis not on a zero or a one, but on “quantum bits” that can store multiple values in each location. 13 That dramatically increases storage capacity and decreases processing times.

Fraud detection represents another way AI is helpful in financial systems. It sometimes is difficult to discern fraudulent activities in large organizations, but AI can identify abnormalities, outliers, or deviant cases requiring additional investigation. That helps managers find problems early in the cycle, before they reach dangerous levels. 14
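One simple way to flag "abnormalities, outliers, or deviant cases" is a robust outlier score. The sketch below uses the modified z-score based on the median absolute deviation, with the commonly used cutoff of 3.5. It is an illustration only: the function name `flag_outliers` and the transaction amounts are hypothetical, and production fraud systems generally combine many more signals than a single amount.

```python
from statistics import median


def flag_outliers(amounts, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])  # median absolute deviation
    if mad == 0:
        return []  # no spread in the data, so nothing can be scored
    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical transaction amounts; one is far outside the usual range.
transactions = [42.0, 38.5, 45.1, 40.0, 39.9, 41.3, 980.0, 43.7]
suspicious = flag_outliers(transactions)
print([transactions[i] for i in suspicious])  # [980.0]
```

Flagged items are candidates for the "additional investigation" described above, not proof of fraud.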

National security

AI plays a substantial role in national defense. Through its Project Maven, the American military is deploying AI “to sift through the massive troves of data and video captured by surveillance and then alert human analysts of patterns or when there is abnormal or suspicious activity.” 15 According to Deputy Secretary of Defense Patrick Shanahan, the goal of emerging technologies in this area is “to meet our warfighters’ needs and to increase [the] speed and agility [of] technology development and procurement.” 16

The big data analytics associated with AI will profoundly affect intelligence analysis, as massive amounts of data are sifted in near real time, if not eventually in real time, thereby providing commanders and their staffs a level of intelligence analysis and productivity heretofore unseen. Command and control will similarly be affected as human commanders delegate certain routine and, in special circumstances, key decisions to AI platforms, reducing dramatically the time associated with the decision and subsequent action. In the end, warfare is a time-competitive process, where the side able to decide the fastest and move most quickly to execution will generally prevail. Indeed, artificially intelligent intelligence systems, tied to AI-assisted command and control systems, can move decision support and decisionmaking to a speed vastly superior to the speeds of the traditional means of waging war. So fast will be this process, especially if coupled to automatic decisions to launch artificially intelligent autonomous weapons systems capable of lethal outcomes, that a new term has been coined specifically to embrace the speed at which war will be waged: hyperwar.

While the ethical and legal debate is raging over whether America will ever wage war with artificially intelligent autonomous lethal systems, the Chinese and Russians are not nearly so mired in this debate, and we should anticipate our need to defend against these systems operating at hyperwar speeds. The challenge in the West of where to position “humans in the loop” in a hyperwar scenario will ultimately dictate the West’s capacity to be competitive in this new form of conflict. 17

Just as AI will profoundly affect the speed of warfare, the proliferation of zero-day or zero-second cyber threats, as well as polymorphic malware, will challenge even the most sophisticated signature-based cyber protection. This will force significant improvements to existing cyber defenses. Vulnerable systems are increasingly migrating, and will need to shift, to a layered approach to cybersecurity built on cloud-based, cognitive AI platforms. This approach moves the community toward a “thinking” defensive capability that can defend networks through constant training on known threats. This capability includes DNA-level analysis of heretofore unknown code, with the possibility of recognizing and stopping inbound malicious code by recognizing a string component of the file. This is how certain key U.S.-based systems stopped the debilitating “WannaCry” and “Petya” viruses.
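To see why polymorphic malware strains signature-based protection, consider a minimal sketch of signature matching: the scanner looks for known byte strings inside a file. The signature names and byte patterns below are invented for illustration and do not correspond to any real threat database or product.

```python
# Hypothetical byte signatures; real products rely on large, curated databases.
KNOWN_SIGNATURES = {
    "demo-threat-a": b"\xde\xad\xbe\xef",
    "demo-threat-b": b"EVIL_PAYLOAD",
}


def scan(data: bytes) -> list[str]:
    """Return the names of all known signatures found in the data."""
    return sorted(name for name, sig in KNOWN_SIGNATURES.items() if sig in data)


original = b"header..." + b"EVIL_PAYLOAD" + b"...tail"
mutated = b"header..." + b"EVIL_PAYL0AD" + b"...tail"  # one byte changed

print(scan(original))  # ['demo-threat-b']
print(scan(mutated))   # []
```

A single changed byte is enough to evade an exact match, which is the gap that learning-based defenses trained on known threats are meant to narrow.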

Preparing for hyperwar and defending critical cyber networks must become a high priority because China, Russia, North Korea, and other countries are putting substantial resources into AI. In 2017, China’s State Council issued a plan for the country to “build a domestic industry worth almost $150 billion” by 2030. 18 As an example of the possibilities, the Chinese search firm Baidu has pioneered a facial recognition application that finds missing people. In addition, cities such as Shenzhen are providing up to $1 million to support AI labs. That country hopes AI will provide security, combat terrorism, and improve speech recognition programs. 19 The dual-use nature of many AI algorithms will mean AI research focused on one sector of society can be rapidly modified for use in the security sector as well. 20

Health care

AI tools are helping designers improve computational sophistication in health care. For example, Merantix is a German company that applies deep learning to medical issues. It has an application in medical imaging that “detects lymph nodes in the human body in Computer Tomography (CT) images.” 21 According to its developers, the key is labeling the nodes and identifying small lesions or growths that could be problematic. Humans can do this, but radiologists charge $100 per hour and may be able to carefully read only four images an hour. If there were 10,000 images, the cost of this process would be $250,000, which is prohibitively expensive if done by humans.
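The cost estimate above follows directly from the quoted rate and reading speed. The short sketch below reproduces the arithmetic; the function and constant names are illustrative.

```python
HOURLY_RATE_USD = 100   # radiologist rate quoted in the text
IMAGES_PER_HOUR = 4     # careful reads per hour quoted in the text
IMAGE_COUNT = 10_000    # size of the image set in the example


def manual_review_cost(image_count, images_per_hour, hourly_rate):
    """Cost of having a person read every image at the given pace and rate."""
    hours = image_count / images_per_hour
    return hours * hourly_rate


cost = manual_review_cost(IMAGE_COUNT, IMAGES_PER_HOUR, HOURLY_RATE_USD)
print(f"${cost:,.0f}")  # $250,000
```

At four images an hour, 10,000 images take 2,500 hours of reading time, which at $100 per hour gives the $250,000 figure.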

What deep learning can do in this situation is train computers on data sets to learn what a normal-looking versus an irregular-appearing lymph node is. After doing that through imaging exercises and honing the accuracy of the labeling, radiological imaging specialists can apply this knowledge to actual patients and determine the extent to which someone is at risk of cancerous lymph nodes. Since only a few are likely to test positive, it is a matter of identifying the unhealthy versus healthy node.

AI has been applied to congestive heart failure as well, an illness that afflicts 10 percent of senior citizens and costs $35 billion each year in the United States. AI tools are helpful because they “predict in advance potential challenges ahead and allocate resources to patient education, sensing, and proactive interventions that keep patients out of the hospital.” 22

Criminal justice

AI is being deployed in the criminal justice area. The city of Chicago has developed an AI-driven “Strategic Subject List” that analyzes people who have been arrested for their risk of becoming future perpetrators. It ranks 400,000 people on a scale of 0 to 500, using items such as age, criminal activity, victimization, drug arrest records, and gang affiliation. In looking at the data, analysts found that youth is a strong predictor of violence, being a shooting victim is associated with becoming a future perpetrator, gang affiliation has little predictive value, and drug arrests are not significantly associated with future criminal activity. 23

Judicial experts claim AI programs reduce human bias in law enforcement and lead to a fairer sentencing system. R Street Institute Associate Caleb Watney writes:

Empirically grounded questions of predictive risk analysis play to the strengths of machine learning, automated reasoning and other forms of AI. One machine-learning policy simulation concluded that such programs could be used to cut crime up to 24.8 percent with no change in jailing rates, or reduce jail populations by up to 42 percent with no increase in crime rates. 24

However, critics worry that AI algorithms represent “a secret system to punish citizens for crimes they haven’t yet committed. The risk scores have been used numerous times to guide large-scale roundups.” 25 The fear is that such tools target people of color unfairly and have not helped Chicago reduce the murder wave that has plagued it in recent years.

Despite these concerns, other countries are moving ahead with rapid deployment in this area. In China, for example, companies already have “considerable resources and access to voices, faces and other biometric data in vast quantities, which would help them develop their technologies.” 26 New technologies make it possible to match images and voices with other types of information, and to use AI on these combined data sets to improve law enforcement and national security. Through its “Sharp Eyes” program, Chinese law enforcement is matching video images, social media activity, online purchases, travel records, and personal identity into a “police cloud.” This integrated database enables authorities to keep track of criminals, potential law-breakers, and terrorists. 27 Put differently, China has become the world’s leading AI-powered surveillance state.

Transportation