7 QC Tools for Process Improvement

Where Did the 7 QC Tools Come From?

Why Use the 7 QC Tools for Process Improvement?

What Are the 7 QC Tools Used For?

The 7 QC Tools:

- Flow Charts

- Cause and Effect Diagram (Fishbone or Ishikawa)

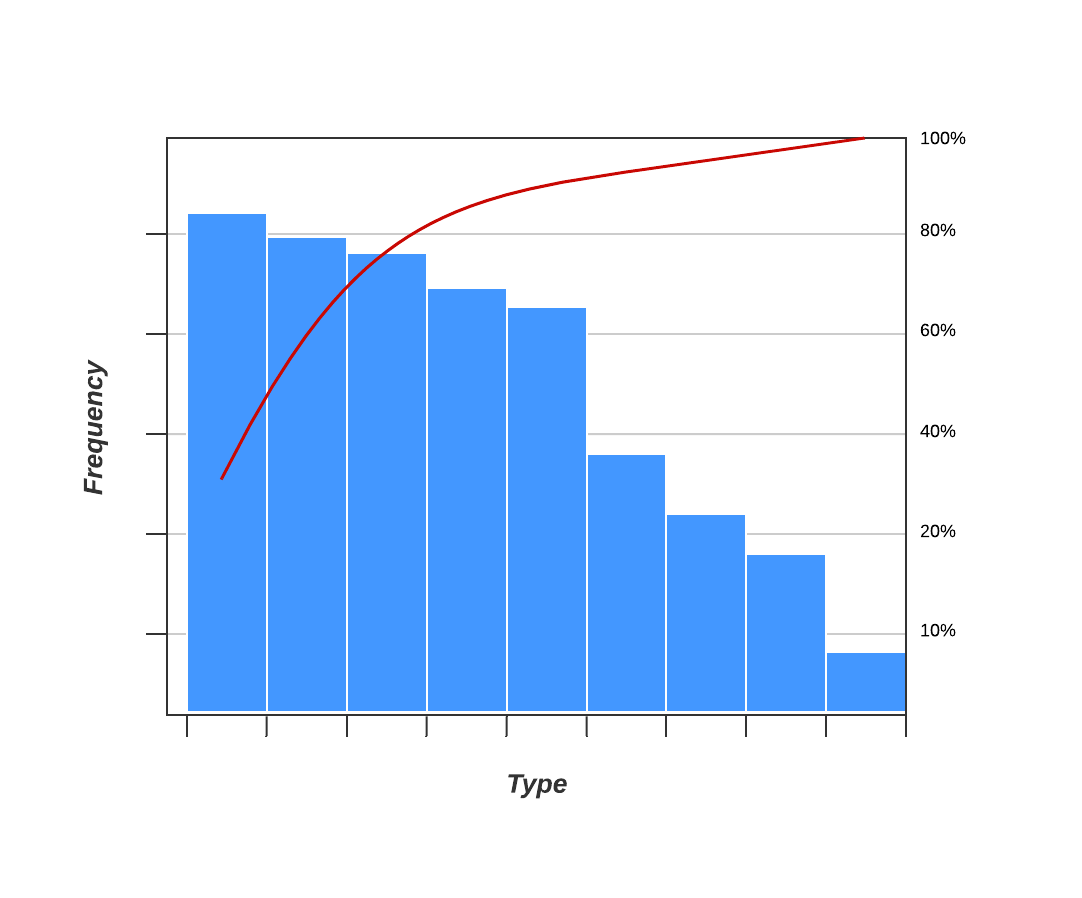

- Pareto Chart

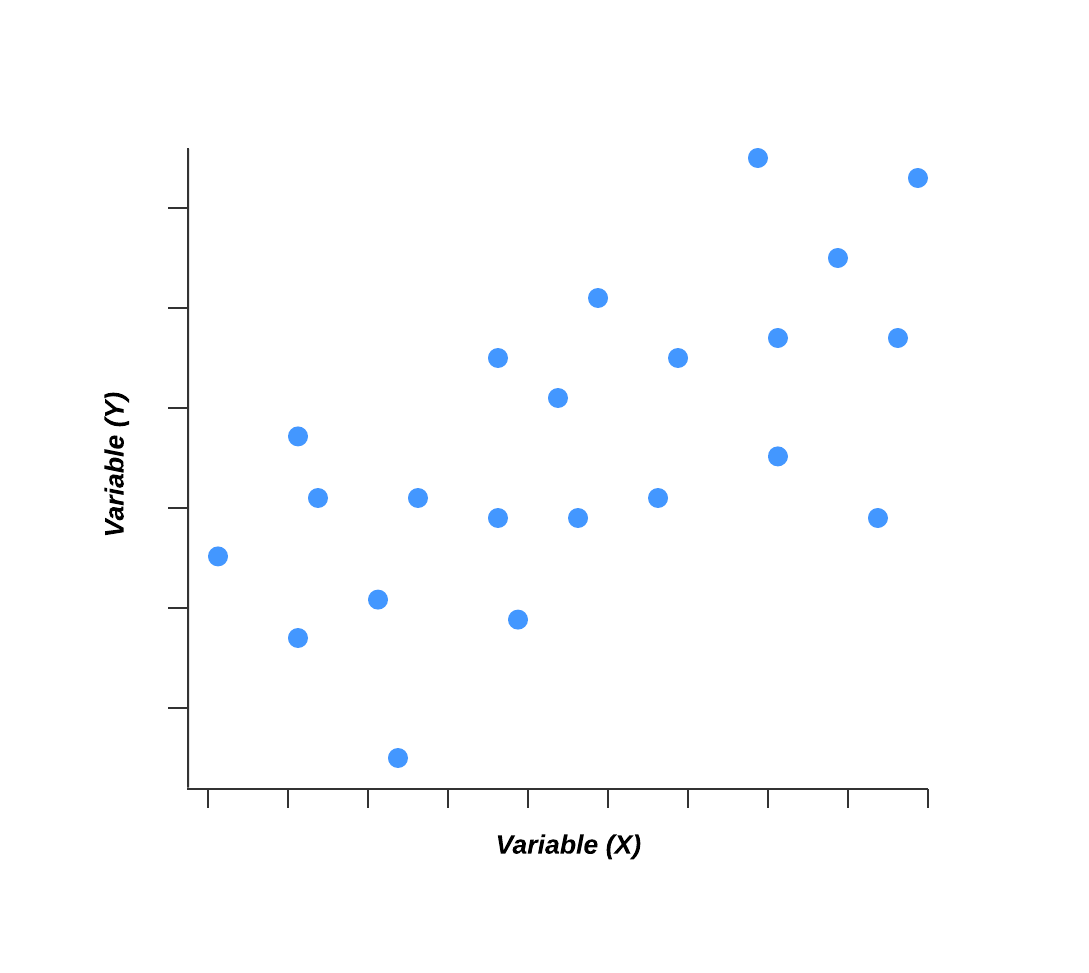

- Scatter Diagram

- Control Chart


[1] flow charts :.

[2] Cause and Effect Diagram :

[3] Check Sheet :

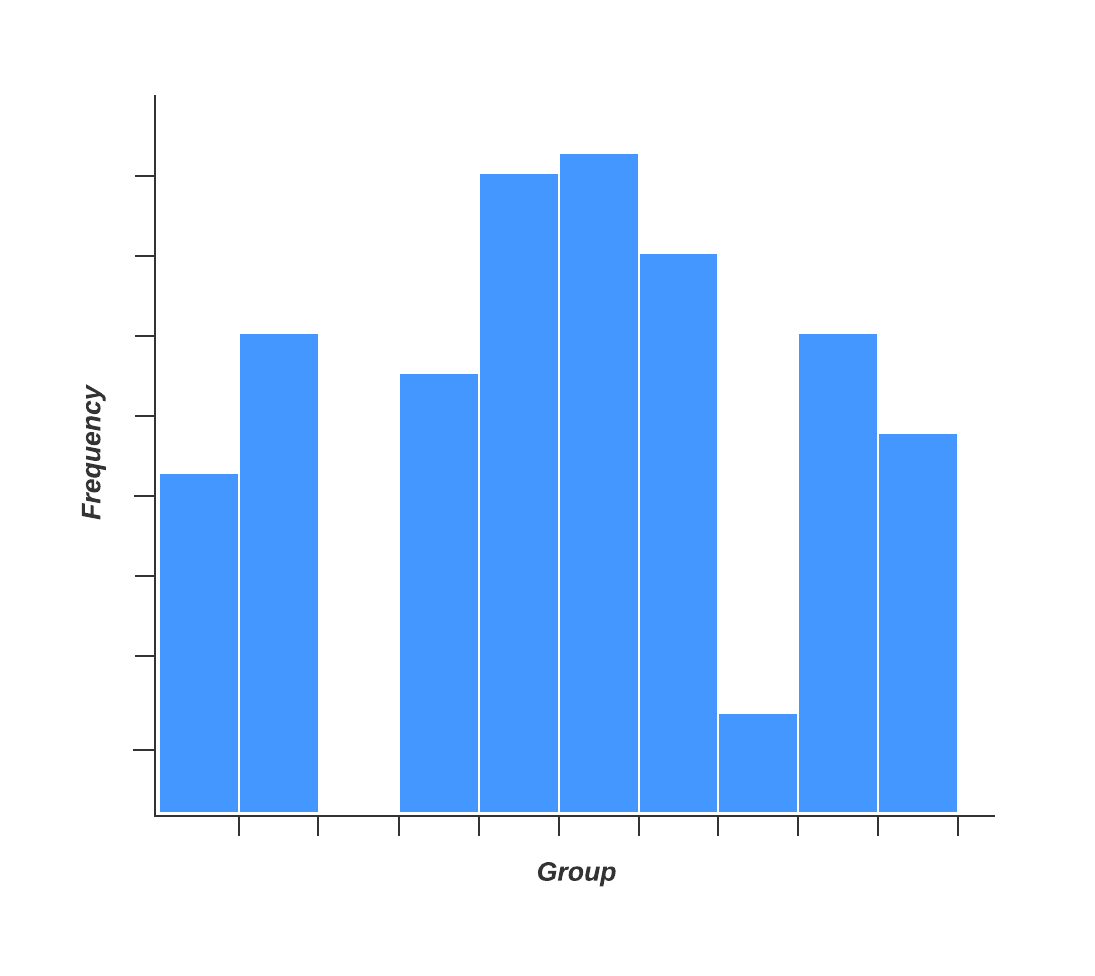

[4] Histogram :

➨ types of histogram:.

[5] Pareto Chart :

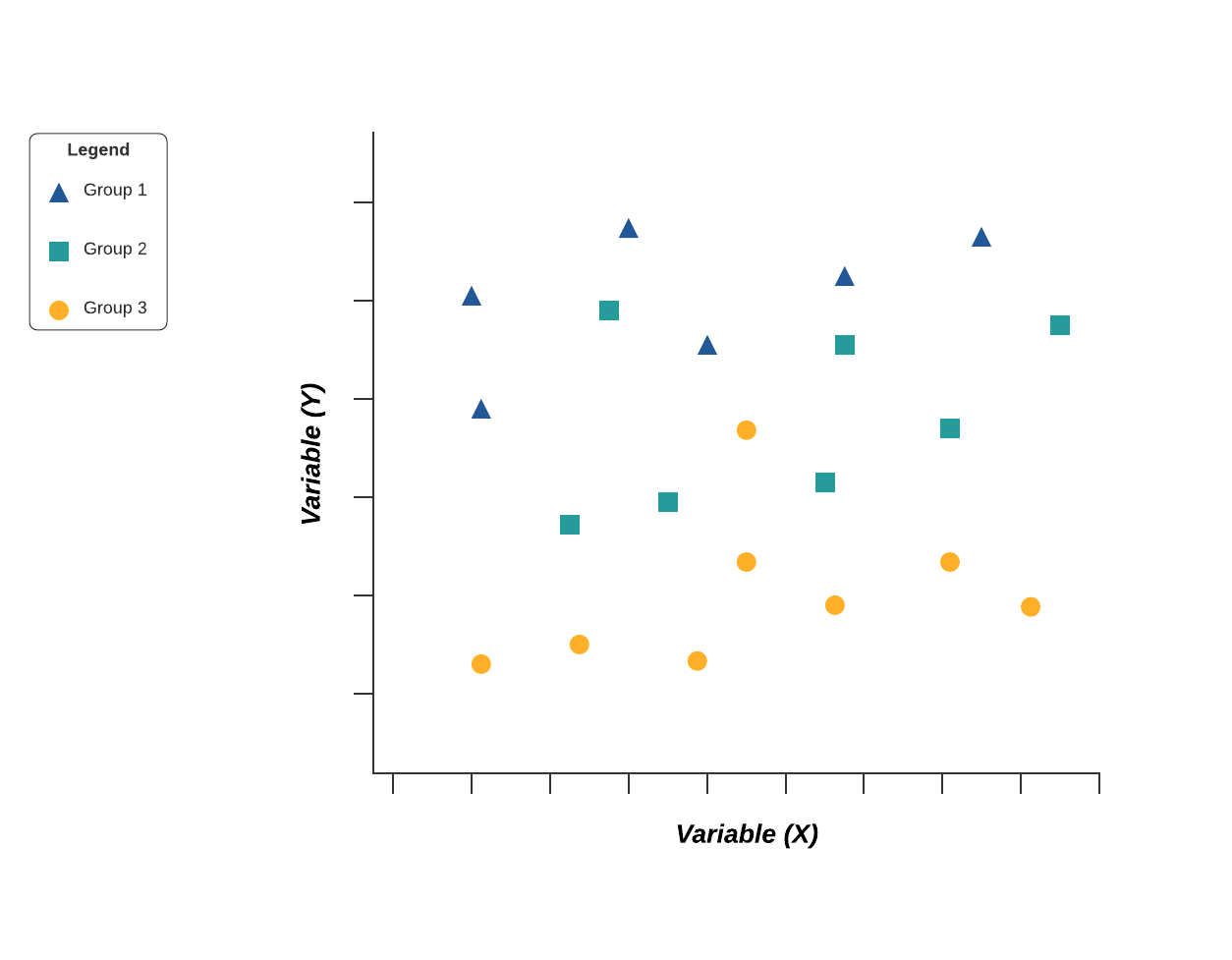

[6] Scatter Diagram :

➨ different names of the scatter diagram:, ➨ different correlation between two variables in the scatter plot:.

[7] Control Chart :



An Empirical Study into the Use of 7 Quality Control Tools in Higher Education Institutions (HEIs)

The TQM Journal

ISSN : 1754-2731

Article publication date: 13 September 2022

Issue publication date: 5 September 2023

Purpose

The main purpose of this study is to revisit Ishikawa's statement: “95% of problems in processes can be accomplished using the original 7 Quality Control (QC) tools”. The paper critically investigates the validity of this statement in higher education institutions (HEIs). It involves analysis of the usage of the 7 QC tools and identifying the barriers, benefits, challenges and critical success factors (CSFs) for the application of the 7 QC tools in a HEI setting.

Design/methodology/approach

An online survey instrument was developed, and, as this is a global study, survey participants were contacted via social networks such as LinkedIn. Target respondents were HEI educators or professionals knowledgeable about the 7 QC tools promulgated by Dr Ishikawa. Professionals working in administrative sectors, such as libraries, information technology and human resources, were included in the study, as were a number of academics who teach the 7 basic QC tools. The survey link was sent to over 200 educators and professionals, and 76 complete responses were obtained.

Findings

The primary finding of this study shows that the diffusion of the 7 QC tools is not widespread in HEIs. Less than 8% of the respondents believe that more than 90% of process problems can be solved by applying the 7 QC tools. These numbers show that modern quality problems may need more than the 7 basic QC tools, and that the role and contribution of these tools in solving problems in the higher education sector may need to be revisited. Tools such as the Pareto chart and the cause-and-effect diagram have been widely used in HEIs. The most important barriers highlighted relate to a lack of knowledge about the benefits of these tools and about how and when to apply them. Among the challenges are the “lack of knowledge of the tools and their applications” and “lack of training in the use of the tools”. The main benefits mentioned by the respondents were “the identification of areas for improvement, problem definition, measurement, and analysis”. According to this study, the most important factors critical for the success of the initiative were “management support”, “widespread training” and “having a continuous improvement program in place”.

Research limitations/implications

This exploratory study provides an initial understanding of how the 7 QC tools are applied in HEIs, along with their benefits, challenges and critical success factors, which can act as guidelines for implementation in HEIs. Surveys alone cannot provide deeper insights into the status of the application of the 7 QC tools in HEIs; therefore, qualitative studies in the form of semi-structured interviews should be carried out in the future.

Originality/value

This article contributes an exploratory empirical study of the extent to which the 7 QC tools are used in university processes. The authors claim that this is the first empirical study looking into the use of the 7 QC tools in the university sector.

- 7 quality control tools

- Higher education institutions (HEIs)

- Quality improvement

Mathur, S., Antony, J., Olivia, M., Fabiane Letícia, L., Shreeranga, B., Raja, J. and Ayon, C. (2023), "An empirical study into the use of 7 quality control tools in higher education institutions (HEIs)", The TQM Journal, Vol. 35 No. 7, pp. 1777-1798. https://doi.org/10.1108/TQM-07-2022-0222

Emerald Publishing Limited

Copyright © 2022, Emerald Publishing Limited


What are the 7 basic quality tools, and how can they change your business for the better?


What are the 7 basic quality tools?

- Stratification

- Histogram

- Check sheet (tally sheet)

- Cause and effect diagram (fishbone or Ishikawa diagram)

- Pareto chart (80-20 rule)

- Scatter diagram

- Control chart (Shewhart chart)

The ability to identify and resolve quality-related issues quickly and efficiently is essential to anyone working in quality assurance or process improvement. But statistical quality control can quickly get complex and unwieldy for the average person, making training and quality assurance more difficult to scale.

Fortunately, quality experts such as Kaoru Ishikawa have argued that most quality control problems can be solved with a few key fundamentals. These fundamentals are called the seven basic tools of quality.

With these basic quality tools in your arsenal, you can manage the quality of your product or process in almost any industry.


Where did the quality tools originate?

Kaoru Ishikawa, a Japanese professor of engineering, brought together and promoted the seven quality tools (sometimes called the 7 QC tools) in the 1950s to help workers of various technical backgrounds implement effective quality control measures. Several of the individual tools are older; the control chart, for example, is named after Walter A. Shewhart.

At the time, training programs in statistical quality control were complex and intimidating to workers with non-technical backgrounds, which made it difficult to standardize effective quality control across operations. Companies found that simplifying the training to a handful of user-friendly fundamentals, the seven quality tools, led to better performance at scale.

7 quality tools

1. Stratification

Stratification analysis is a quality assurance tool used to sort data, objects, and people into separate and distinct groups. Separating your data using stratification can help you determine its meaning, revealing patterns that might not otherwise be visible when it’s been lumped together.

Whether you’re looking at equipment, products, shifts, materials, or even days of the week, stratification analysis lets you make sense of your data before, during, and after its collection.

To get the most out of the stratification process, consider which information about your data’s sources may affect the end results of your data analysis. Make sure to set up your data collection so that that information is included.
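As a rough illustration, the sketch below stratifies a small set of inspection records by shift and compares each group's defect rate with the overall rate. The records, the shift grouping, and the function name `defect_rate_by_stratum` are hypothetical. In practice you would stratify by whatever source information you recorded, such as machine, material lot, or day of the week.

```python
from collections import defaultdict

# Hypothetical inspection records: (shift, units_inspected, defects_found)
records = [
    ("day", 120, 3),
    ("night", 100, 9),
    ("day", 130, 2),
    ("night", 110, 8),
    ("swing", 115, 4),
]

def defect_rate_by_stratum(rows):
    """Group records by stratum (here, the shift) and return each group's defect rate."""
    inspected = defaultdict(int)
    defects = defaultdict(int)
    for stratum, units, found in rows:
        inspected[stratum] += units
        defects[stratum] += found
    return {s: defects[s] / inspected[s] for s in inspected}

# The lumped-together rate that stratification breaks apart
overall = sum(r[2] for r in records) / sum(r[1] for r in records)
print(f"overall defect rate: {overall:.3f}")
for shift, rate in sorted(defect_rate_by_stratum(records).items()):
    print(f"{shift:>6}: {rate:.3f}")
```

With these made-up numbers, the overall rate (about 4.5%) hides the fact that the night shift's rate is roughly four times the day shift's. Stratification is meant to expose exactly this kind of pattern.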

2. Histogram

Quality professionals are often tasked with analyzing and interpreting the behavior of different groups of data in an effort to manage quality. This is where quality control tools like the histogram come into play.

A histogram presents the frequency distribution of a sample clearly and concisely, allowing you to quickly identify areas of improvement within your processes. It is structured like a bar graph. Each bar represents a range of values (a bin), and the height of the bar represents how many observations fall within that range.

Histograms are particularly helpful when breaking down the frequency of your data into categories such as age, days of the week, physical measurements, or any other category that can be listed in chronological or numerical order.
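The counting behind a histogram can be sketched in a few lines. This is a minimal version under stated assumptions: equal-width bins spanning the data's minimum to maximum, with each bin half-open except the last, which also includes the maximum value. The handle-time data and the function name `histogram_counts` are hypothetical.

```python
def histogram_counts(values, num_bins):
    """Split min(values)..max(values) into num_bins equal-width bins
    and count how many observations fall in each one."""
    lo, hi = min(values), max(values)
    if hi == lo:  # every value identical: one bin holds everything
        return [lo, hi], [len(values)]
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for v in values:
        # The maximum value would otherwise land one past the last bin, so clamp it.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] += 1
    edges = [lo + i * width for i in range(num_bins + 1)]
    return edges, counts

# Hypothetical call-handling times in minutes
handle_times = [3, 4, 4, 5, 5, 5, 6, 6, 7, 9]
edges, counts = histogram_counts(handle_times, num_bins=3)
for left, right, count in zip(edges, edges[1:], counts):
    print(f"{left:4.1f}-{right:4.1f} | {'#' * count} ({count})")
```

The number of bins is a judgment call. Too few bins hide the shape of the distribution, and too many make it look noisy.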

3. Check sheet (or tally sheet)

Check sheets can be used to collect quantitative or qualitative data. When used to collect quantitative data, they can be called a tally sheet. A check sheet collects data in the form of check or tally marks that indicate how many times a particular value has occurred, allowing you to quickly zero in on defects or errors within your process or product, defect patterns, and even causes of specific defects.

With their simple setup and easy-to-read layout, check sheets make it easy to record preliminary frequency distribution data when measuring processes. They are often used as a preliminary data collection tool when creating histograms, bar graphs, and other quality tools.
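In software, a check sheet reduces to a tally: you record each observation as it happens, then count occurrences per category. The sketch below does this with Python's `collections.Counter`. The defect categories and observations are hypothetical.

```python
from collections import Counter

# Hypothetical defect observations recorded during one inspection shift
observations = ["scratch", "dent", "scratch", "misalignment", "scratch", "dent", "stain"]

# Each observation adds one tally mark to its category
tally = Counter(observations)

# Print the check sheet, most frequent defect first, with tally marks and totals
for defect, count in tally.most_common():
    print(f"{defect:<13} {'|' * count}  ({count})")
```

The resulting counts can feed directly into a histogram or a Pareto chart.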

4. Cause-and-effect diagram (also known as a fishbone or Ishikawa diagram)

Introduced by Kaoru Ishikawa, the fishbone diagram helps users identify the various factors (or causes) leading to an effect, usually depicted as a problem to be solved. Named for its resemblance to a fishbone, this quality management tool works by defining a quality-related problem on the right-hand side of the diagram, with individual root causes and sub-causes branching off to its left.

A fishbone diagram’s causes and subcauses are usually grouped into six main categories: measurements, materials, personnel, environment, methods, and machines. These categories can help you identify the probable source of your problem while keeping your diagram structured and orderly.
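When a fishbone analysis is captured electronically, one way to keep it orderly is to store it as a mapping from each of the six categories to the causes brainstormed under it. The sketch below uses a plain dictionary and renders it as a text outline. The problem statement and every cause listed are hypothetical examples, and `render` is an illustrative helper, not part of any tool.

```python
# Category names follow the six groups described above; the causes are hypothetical.
fishbone = {
    "problem": "Late order shipments",
    "causes": {
        "Measurements": ["Inaccurate cycle-time data"],
        "Materials": ["Packaging stock-outs"],
        "Personnel": ["New staff not trained on picking system"],
        "Environment": ["Congested loading dock"],
        "Methods": ["No standard pick sequence"],
        "Machines": ["Label printer downtime"],
    },
}

def render(diagram):
    """Return the diagram as an indented outline: the effect first, then each category and its causes."""
    lines = [f"EFFECT: {diagram['problem']}"]
    for category, causes in diagram["causes"].items():
        lines.append(f"  {category}")
        lines.extend(f"    - {cause}" for cause in causes)
    return "\n".join(lines)

print(render(fishbone))
```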

5. Pareto chart (80-20 rule)

As a quality control tool, the Pareto chart is based on the 80-20 rule (the Pareto principle). This rule of thumb holds that roughly 80% of a process’s or system’s problems are caused by about 20% of the contributing factors, often referred to as the “vital few.” The remaining 20% or so of problems come from the many minor factors.

A combination of a bar and line graph, the Pareto chart depicts individual values in descending order using bars, while the cumulative total is represented by the line.

The goal of the Pareto chart is to highlight the relative importance of a variety of parameters, allowing you to identify and focus your efforts on the factors with the biggest impact on a specific part of a process or system.
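The arithmetic behind a Pareto chart can be sketched in three steps. First, sort the categories by count in descending order (the bars). Second, compute the cumulative percentage (the line). Third, read off the smallest group of leading categories whose cumulative share reaches 80%. The complaint categories and counts are hypothetical, and the 80% cut-off is the rule-of-thumb threshold from the text, exposed as a parameter.

```python
def pareto(counts, threshold=0.8):
    """Sort categories by count (descending), attach each one's cumulative share,
    and return the 'vital few': the leading categories needed to reach threshold."""
    total = sum(counts.values())
    ordered = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    rows, running = [], 0
    for category, count in ordered:
        running += count
        rows.append((category, count, running / total))  # bar height and line value
    vital_few = []
    for category, _, cum_share in rows:
        vital_few.append(category)
        if cum_share >= threshold:
            break
    return rows, vital_few

# Hypothetical complaint counts
complaints = {"late delivery": 52, "wrong item": 23, "damaged item": 11, "billing error": 8, "other": 6}
rows, vital = pareto(complaints)
for category, count, cum in rows:
    print(f"{category:<14} {count:>3}  cumulative {cum:.0%}")
print("vital few:", vital)
```

Here three of the five categories account for 86% of complaints. Real data rarely splits exactly 80/20, so treat the threshold as a guide rather than a law.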

6. Scatter diagram

Of the seven quality tools, the scatter diagram is the most useful for depicting the relationship between two variables. This helps quality assurance professionals look for possible cause-and-effect relationships.

The independent variable is plotted on the X-axis and the dependent variable on the Y-axis, so each dot represents one paired observation. Taken together, the pattern of dots shows how the two variables relate. The more tightly the dots cluster around a line, the stronger the correlation. Correlation alone, however, does not prove that one variable causes the other.

Scatter diagrams can prove useful as a quality control tool when used to define relationships between quality defects and possible causes such as environment, activity, personnel, and other variables. Once the relationship between a particular defect and its cause has been established, you can implement focused solutions with (hopefully) better outcomes.
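A scatter diagram is often read alongside a correlation coefficient. The sketch below computes Pearson's r, which ranges from -1 (a perfect negative linear relationship) through 0 (no linear relationship) to +1 (a perfect positive linear relationship). The temperature and defect figures are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences
    (assumes neither sequence is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical paired observations: oven temperature (°C) vs. defects per 1,000 units
temperature = [180, 185, 190, 195, 200, 205]
defects = [12, 10, 9, 7, 6, 4]
r = pearson_r(temperature, defects)
print(f"r = {r:.2f}")
```

For these numbers, r is close to -1, which indicates a strong negative linear association. On its own, that does not establish that temperature drives the defect rate. Python 3.10 and later also provide `statistics.correlation`, which computes the same coefficient.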

7. Control chart (also called a Shewhart chart)

Named after Walter A. Shewhart, this quality improvement tool can help quality assurance professionals determine whether or not a process is stable and predictable, making it easy for you to identify factors that might lead to variations or defects.

Control charts use a central line to depict the average (mean), plus upper and lower lines that mark the upper and lower control limits calculated from historical data. By plotting data from your current process against these limits, you can determine whether the process is in statistical control or is affected by special-cause variation.

Using a control chart can save your organization time and money by predicting process performance, particularly in terms of what your customer or organization expects in your final product.
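The sketch below computes limits for one common kind of control chart, the individuals (X) chart. The centre line is the mean of a baseline period, and the limits sit three estimated standard deviations on either side of it. For individuals charts, a standard way to estimate sigma is to divide the average moving range by the constant d2 = 1.128. The handle-time data and function names are hypothetical, and only the simplest signal (a point outside the limits) is checked.

```python
import statistics

def individuals_chart_limits(baseline):
    """Return (LCL, CL, UCL) for an individuals chart. Sigma is estimated as the
    average moving range of consecutive points divided by d2 = 1.128."""
    center = statistics.mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = statistics.mean(moving_ranges) / 1.128
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat

def out_of_control(points, lcl, ucl):
    """Indices of points outside the control limits, one signal of special-cause variation."""
    return [i for i, x in enumerate(points) if x < lcl or x > ucl]

# Hypothetical daily average handle times (minutes): a baseline period, then new data
baseline = [6.1, 5.9, 6.3, 6.0, 6.2, 5.8, 6.1, 6.0, 6.2, 5.9]
current = [6.0, 6.3, 7.4, 6.1]

lcl, cl, ucl = individuals_chart_limits(baseline)
print(f"LCL={lcl:.2f}  CL={cl:.2f}  UCL={ucl:.2f}")
print("out-of-control points in current data:", out_of_control(current, lcl, ucl))
```

With this made-up data, the limits come out at roughly 5.34 and 6.76 minutes, so the 7.4-minute day (index 2 of the current data) is flagged for investigation. Control limits describe what the process actually does and are distinct from specification limits, which describe what the customer requires.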

Bonus: Flowcharts

Some sources will swap out stratification to instead include flowcharts as one of the seven basic QC tools. Flowcharts are most commonly used to document organizational structures and process flows, making them ideal for identifying bottlenecks and unnecessary steps within your process or system.

Mapping out your current process can help you to more effectively pinpoint which activities are completed when and by whom, how processes flow from one department or task to another, and which steps can be eliminated to streamline your process.


Application of 7 quality control (7 QC) tools for quality management: A case study of a liquid chemical warehousing


7 Quality Tools in BPO: Essentials for a Successful Business

Business Process Outsourcing (BPO) has become an integral part of modern business operations. By delegating specific tasks or processes to third-party service providers, organizations can streamline their operations, reduce costs, and improve efficiency. However, to ensure the success of a BPO initiative, it is crucial to maintain and monitor the quality of outsourced processes. This is where the 7 quality tools come into play. In this guide, we will explore what BPO is, why it matters, and how the 7 quality tools apply to it, using a case study and relevant statistics.

Understanding BPO

What Is BPO?

Business Process Outsourcing, commonly known as BPO, refers to the practice of contracting specific business tasks or processes to external service providers. These processes can encompass a wide range of functions, including customer support services, finance and accounting, human resources, data entry, and more. BPO allows organizations to focus on their core competencies while benefiting from cost savings, operational efficiency, and access to specialized skills.

The Significance of BPO

Now that we have defined BPO, let us look at its significance in today’s business landscape, which can hardly be overstated:

- Cost Efficiency : Outsourcing can significantly reduce labour and operational costs, making it an attractive option for businesses looking to maximize profitability.

- Global Talent Pool : BPO providers often operate in regions with a skilled workforce, providing access to specialized skills that may not be available in-house.

- Scalability : BPO services can be scaled up or down according to business needs, providing flexibility in resource allocation.

- Focus on Core Competencies : Outsourcing non-core functions allows organizations to concentrate on their primary objectives and strategic initiatives.

The 7 Quality Tools in BPO

To ensure the success of BPO partnerships and maintain high-quality standards, organizations should employ the following 7 quality tools in BPO:

1. Flowcharts and Process Maps

Flowcharts and process maps provide a visual representation of the workflow, making it easier to identify bottlenecks, redundancies, and inefficiencies in outsourced processes. They serve as a blueprint for process improvement.

2. Cause-and-Effect Diagrams (Fishbone Diagrams)

Fishbone diagrams help pinpoint the root causes of issues or defects in BPO processes. By identifying these causes, organizations can implement corrective actions and prevent future problems.

3. Pareto Charts

Pareto charts help prioritize issues or problem areas by showing which factors contribute the most to a particular problem. This tool assists in focusing resources on critical improvement areas.

4. Histograms

Histograms provide a graphical representation of data distribution, enabling organizations to understand the variability in their processes. This insight is crucial for maintaining consistency in BPO operations.

5. Control Charts

Control charts monitor process performance over time, helping organizations detect any deviations from established standards. This tool facilitates early intervention and ensures process stability.

6. Scatter Diagrams

Scatter diagrams help identify potential correlations or relationships between different variables. In a BPO context, this can be used to understand how changes in one aspect of the process may affect another.

7. Check Sheets

Check sheets are simple data collection tools that enable organizations to track and record specific data points. They are valuable for ongoing monitoring and data-driven decision-making in BPO operations.

Case Study – Improving Customer Support in an E-commerce BPO

Let’s illustrate the importance of these 7 quality tools in BPO with a real-world case study:

An e-commerce company decided to outsource its customer support operations to a BPO provider to manage the increasing volume of customer inquiries. However, the company faced challenges related to customer satisfaction, response times, and issue resolution rates. Here, the 7 quality tools played a crucial role in overcoming these challenges.

Application of 7 Quality Tools in BPO

Flowcharts and Process Maps:

Application : These visual representations provide a clear overview of the entire BPO process, including its various steps and decision points.

Use : BPO providers use flowcharts and process maps to identify bottlenecks, redundancies, and opportunities for process optimization. These maps help everyone involved understand the workflow, making it easier to discuss and implement improvements.

Cause-and-Effect Diagrams (Fishbone Diagrams):

Application : Fishbone diagrams are used to analyse complex problems and identify their root causes.

Use : BPO teams employ these diagrams to dissect issues within a process, such as increased error rates or delays. By identifying underlying causes, they can develop strategies to address these issues and prevent their recurrence.

Pareto Charts:

Application : Pareto charts help prioritize problems or issues by showing which factors contribute the most to a particular problem.

Use : BPO managers use Pareto charts to focus their resources on the most critical issues affecting the quality of their services. This ensures that efforts are directed toward the areas with the greatest impact.

Histograms:

Application : Histograms visualize the distribution of data, providing insights into data variability.

Use : In BPO, histograms are used to understand how data is spread across a process, helping to identify variations or inconsistencies. This information is crucial for maintaining process consistency and quality.

Control Charts:

Application : Control charts monitor process performance over time by tracking key performance indicators (KPIs).

Use : BPO teams use control charts to ensure that their processes are stable and within acceptable limits. When a data point falls outside these limits, it signals a potential issue that requires investigation and corrective action.

Scatter Diagrams:

Application : Scatter diagrams help identify potential relationships or correlations between two variables.

Use : In BPO, scatter diagrams are used to explore how changes in one variable might affect another. For example, they can assess how changes in response times may impact customer satisfaction scores.

Check Sheets:

Application : Check sheets are simple data collection tools that enable systematic data recording.

Use : BPO providers use check sheets to gather data on specific aspects of their processes. This data is then analysed to make informed decisions, track progress, and identify trends or patterns.

Results

- Improved Response Times : The BPO provider streamlined the response process, reducing average response times by 31%.

- Enhanced Customer Satisfaction : Customer satisfaction scores increased by 20% due to faster responses and accurate information.

- Reduced Complaints : Customer complaints related to response times and incorrect information decreased by 70%.

- Higher Efficiency and Productivity : By eliminating bottlenecks and inefficiencies identified through flowcharts and process maps, the BPO provider achieved higher efficiency levels. This, in turn, translated into increased productivity, allowing the team to handle more inquiries and tasks within the same timeframe.

- Cost Savings : Although the case study does not quantify cost savings directly, they can be associated with the gains in efficiency, the drop in complaints, and the rise in customer satisfaction. A more efficient process requires fewer resources, and satisfied customers are less likely to churn or require costly escalations.

- Data-Driven Decision-Making : The implementation of check sheets and control charts enabled the BPO provider to collect and analyse data systematically. This data-driven approach to decision-making not only facilitated process improvements but also provided valuable insights for ongoing optimization.

- Employee Engagement : As process improvements and increased customer satisfaction became apparent, employee morale and engagement within the BPO team also improved. Employees took pride in their work and were motivated to maintain the higher service quality standards.

Statistics on BPO Quality Improvement

Here are some relevant statistics showcasing the impact of quality improvement efforts in BPO:

- According to a Deloitte survey, 59% of organizations outsource to reduce costs, while 57% do so to focus on their core business functions.

- The International Association of Outsourcing Professionals (IAOP) reports that 78% of organizations believe that outsourcing gives them a competitive advantage.

- A study by Accenture found that 86% of organizations experienced cost savings through outsourcing, with an average cost reduction of 15%.

- Quality improvement efforts in BPO can lead to significant gains. A case study by Six Sigma Daily reported a 28% increase in process efficiency and a 22% reduction in defects after implementing Six Sigma quality tools in a BPO operation.

Business Process Outsourcing offers numerous advantages, but its success relies heavily on maintaining high-quality standards. The 7 Quality Tools in BPO – flowcharts, cause-and-effect diagrams, Pareto charts, histograms, control charts, scatter diagrams, and check sheets – play a vital role in achieving and sustaining this quality. By applying these tools, organizations can optimize their BPO processes, reduce costs, enhance customer satisfaction, and gain a competitive edge in today’s global business landscape.



Study Quality Assessment Tools

In 2013, NHLBI developed a set of tailored quality assessment tools to assist reviewers in focusing on concepts that are key to a study’s internal validity. The tools were specific to certain study designs and tested for potential flaws in study methods or implementation. Experts used the tools during the systematic evidence review process to update existing clinical guidelines, such as those on cholesterol, blood pressure, and obesity. Their findings are outlined in the following reports:

- Assessing Cardiovascular Risk: Systematic Evidence Review from the Risk Assessment Work Group

- Management of Blood Cholesterol in Adults: Systematic Evidence Review from the Cholesterol Expert Panel

- Management of Blood Pressure in Adults: Systematic Evidence Review from the Blood Pressure Expert Panel

- Managing Overweight and Obesity in Adults: Systematic Evidence Review from the Obesity Expert Panel

While these tools have not been independently published and would not be considered standardized, they may be useful to the research community. These reports describe how experts used the tools for the project. Researchers may want to use the tools for their own projects; however, they would need to determine their own parameters for making judgements. Details about the design and application of the tools are included in Appendix A of the reports.

Quality Assessment of Controlled Intervention Studies - Study Quality Assessment Tools

*CD, cannot determine; NA, not applicable; NR, not reported

Guidance for Assessing the Quality of Controlled Intervention Studies

The guidance document below is organized by question number from the tool for quality assessment of controlled intervention studies.

Question 1. Described as randomized

Was the study described as randomized? A study does not satisfy quality criteria as randomized simply because the authors call it randomized; however, it is a first step in determining if a study is randomized.

Questions 2 and 3. Treatment allocation–two interrelated pieces

Adequate randomization: Randomization is adequate if it occurred according to the play of chance (e.g., computer generated sequence in more recent studies, or random number table in older studies). Inadequate randomization: Randomization is inadequate if there is a preset plan (e.g., alternation where every other subject is assigned to treatment arm or another method of allocation is used, such as time or day of hospital admission or clinic visit, ZIP Code, phone number, etc.). In fact, this is not randomization at all–it is another method of assignment to groups. If assignment is not by the play of chance, then the answer to this question is no. There may be some tricky scenarios that will need to be read carefully and considered for the role of chance in assignment. For example, randomization may occur at the site level, where all individuals at a particular site are assigned to receive treatment or no treatment. This scenario is used for group-randomized trials, which can be truly randomized, but often are "quasi-experimental" studies with comparison groups rather than true control groups. (Few, if any, group-randomized trials are anticipated for this evidence review.)
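To make the adequate/inadequate distinction in the paragraph above concrete, the sketch below contrasts a computer-generated allocation sequence with alternation. It uses simple randomization, one draw per participant. Other adequate schemes, such as blocked or stratified randomization, are common but are not shown. The arm labels, seed, and function names are hypothetical.

```python
import random

ARMS = ("treatment", "control")  # hypothetical arm labels

def simple_randomization(n, seed=None):
    """Adequate: each of n participants is assigned by a pseudo-random draw (the play of chance)."""
    rng = random.Random(seed)  # recording the seed lets the sequence be audited later
    return [rng.choice(ARMS) for _ in range(n)]

def alternation(n):
    """Inadequate: every other participant goes to each arm, a preset plan rather than randomization."""
    return [ARMS[i % len(ARMS)] for i in range(n)]

sequence = simple_randomization(10, seed=2024)
print(sequence)
print(alternation(4))
```

Whichever method is used, the generated list must stay hidden from the staff enrolling participants. Otherwise the next assignment can be known in advance, which is the concern addressed by allocation concealment. Simple randomization can also produce unequal group sizes in small trials.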

Allocation concealment: This means that one does not know in advance, or cannot guess accurately, to what group the next person eligible for randomization will be assigned. Methods include sequentially numbered opaque sealed envelopes, numbered or coded containers, central randomization by a coordinating center, computer-generated randomization that is not revealed ahead of time, etc.

Questions 4 and 5. Blinding

Blinding means that one does not know to which group–intervention or control–the participant is assigned. It is also sometimes called "masking." The reviewer assessed whether each of the following was blinded to knowledge of treatment assignment: (1) the person assessing the primary outcome(s) for the study (e.g., taking the measurements such as blood pressure, examining health records for events such as myocardial infarction, reviewing and interpreting test results such as x-ray or cardiac catheterization findings); (2) the person receiving the intervention (e.g., the patient or other study participant); and (3) the person providing the intervention (e.g., the physician, nurse, pharmacist, dietitian, or behavioral interventionist).

Generally, placebo-controlled medication studies are blinded to patients, providers, and outcome assessors; behavioral, lifestyle, and surgical studies are examples of studies that are frequently blinded only to the outcome assessors, because blinding the persons providing and receiving the interventions is difficult in these situations. Sometimes the individual providing the intervention is the same person performing the outcome assessment. This was noted when it occurred.

Question 6. Similarity of groups at baseline

This question relates to whether the intervention and control groups have similar baseline characteristics on average, especially those characteristics that may affect the intervention or outcomes. The point of randomized trials is to create groups that are as similar as possible except for the intervention(s) being studied in order to compare the effects of the interventions between groups. When reviewers abstracted baseline characteristics, they noted when there was a significant difference between groups. Baseline characteristics for intervention groups are usually presented in a table in the article (often Table 1).

Groups can differ at baseline without raising red flags if: (1) the differences would not be expected to have any bearing on the interventions and outcomes; or (2) the differences are not statistically significant. When concerned about baseline differences between groups, reviewers recorded them in the comments section and considered them in their overall determination of the study quality.

Questions 7 and 8. Dropout

"Dropouts" in a clinical trial are individuals for whom there are no end point measurements, often because they dropped out of the study and were lost to followup.

Generally, an acceptable overall dropout rate is considered to be 20 percent or less of participants who were randomized or allocated into each group. An acceptable differential dropout rate is an absolute difference between groups of at most 15 percentage points (calculated by subtracting the dropout rate of one group from the dropout rate of the other group). However, these are general rates. Lower overall dropout rates are expected in shorter studies, whereas higher overall dropout rates may be acceptable for studies of longer duration. For example, a 6-month study of weight loss interventions should be expected to have nearly 100 percent followup (almost no dropouts, since nearly everybody gets their weight measured regardless of whether or not they actually received the intervention), whereas a 20 to 25 percent dropout rate may be acceptable in a 10-year study testing the effects of intensive blood pressure lowering on heart attacks, especially if the dropout rate was similar between groups. The panels for the NHLBI systematic reviews may set different dropout caps.

In contrast, differential dropout rates are not flexible; there should be a 15-percentage-point cap. If the differential dropout rate between arms is 15 percentage points or higher, then there is a serious potential for bias. This constitutes a fatal flaw, resulting in a poor quality rating for the study.
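The dropout screening arithmetic can be sketched in Python. This is a minimal illustration, not an official scoring tool: the function names are hypothetical, the 20 percent and 15-percentage-point defaults come from the guidance above, and the sketch makes two interpretive assumptions. It applies the overall cap to each arm separately, and it treats a differential of exactly 15 points as a fatal flaw, because the guidance describes 15 points both as the acceptable maximum and as the start of the fatal range.

```python
def dropout_rate(randomized, with_endpoint):
    """Percent of randomized participants who have no end point measurement."""
    if randomized <= 0:
        raise ValueError("randomized must be positive")
    if not 0 <= with_endpoint <= randomized:
        raise ValueError("with_endpoint must be between 0 and randomized")
    return 100.0 * (randomized - with_endpoint) / randomized


def assess_dropout(arms, overall_cap=20.0, differential_cap=15.0):
    """Screen a trial's dropout against general caps (panels may set others).

    arms maps an arm name to (number randomized, number with end point data).
    """
    rates = {name: dropout_rate(r, e) for name, (r, e) in arms.items()}
    # Largest absolute between-arm difference in percentage points;
    # for two arms this is simply |rate_1 - rate_2|.
    differential = max(rates.values()) - min(rates.values())
    return {
        "rates": rates,
        # Assumption: the overall cap is applied to each arm separately.
        "overall_ok": all(rate <= overall_cap for rate in rates.values()),
        "differential": differential,
        # Assumption: exactly 15 points counts as a fatal flaw.
        "fatal_flaw": differential >= differential_cap,
    }


if __name__ == "__main__":
    # Hypothetical trial: 200 participants randomized to each arm.
    print(assess_dropout({"intervention": (200, 170), "control": (200, 150)}))
```

In this hypothetical trial the arms lose 15 and 25 percent of participants, so the control arm exceeds the general 20 percent guideline, while the 10-point differential stays below the fatal-flaw cap. For a long study, a review panel might reasonably raise `overall_cap`; the differential cap is the one the guidance treats as fixed.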

Question 9. Adherence

Did participants in each treatment group adhere to the protocols for assigned interventions? For example, if Group 1 was assigned to 10 mg/day of Drug A, did most of them take 10 mg/day of Drug A? Another example is a study evaluating the difference between a 30-pound weight loss and a 10-pound weight loss on specific clinical outcomes (e.g., heart attacks), but the 30-pound weight loss group did not achieve its intended weight loss target (e.g., the group only lost 14 pounds on average). A third example is whether a large percentage of participants assigned to one group "crossed over" and got the intervention provided to the other group. A final example is when one group that was assigned to receive a particular drug at a particular dose had a large percentage of participants who did not end up taking the drug or the dose as designed in the protocol.

Question 10. Avoid other interventions

Changes that occur in the study outcomes being assessed should be attributable to the interventions being compared in the study. If study participants receive interventions that are not part of the study protocol and could affect the outcomes being assessed, and they receive these interventions differentially, then there is cause for concern because these interventions could bias results. The following scenario illustrates how such bias can occur. In a study comparing two different dietary interventions on serum cholesterol, one group had a significantly higher percentage of participants taking statin drugs than the other group. In this situation, it would be impossible to know if a difference in outcome was due to the dietary intervention or the drugs.

Question 11. Outcome measures assessment

What tools or methods were used to measure the outcomes in the study? Were the tools and methods accurate and reliable–for example, have they been validated, or are they objective? This is important as it indicates the confidence you can have in the reported outcomes. Perhaps even more important is ascertaining that outcomes were assessed in the same manner within and between groups. One example of differing methods is self-report of dietary salt intake versus urine testing for sodium content (a more reliable and valid assessment method). Another example is using blood pressure (BP) measurements taken by practitioners who use their usual methods versus BP measurements taken by individuals trained in a standard approach. Such an approach may include using the same instrument each time and taking an individual's BP multiple times. In each of these cases, the answer to this assessment question would be "no" for the former scenario and "yes" for the latter. In addition, a study in which an intervention group was seen more frequently than the control group, enabling more opportunities to report clinical events, would not be considered reliable and valid.

Question 12. Power calculation

Generally, a study's methods section will address the sample size needed to detect differences in primary outcomes. The current standard is at least 80 percent power to detect a clinically relevant difference in an outcome using a two-sided alpha of 0.05. Often, however, older studies will not report on power.
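As a rough check on a reported sample size, the standard normal-approximation formula for comparing two means, $n = 2\,(z_{1-\alpha/2} + z_{1-\beta})^2\,\sigma^2/\delta^2$ per group, can be computed with Python's standard library. This is a sketch under stated assumptions: a continuous outcome, equal allocation, a common standard deviation $\sigma$ in both groups, a clinically relevant difference $\delta$, and a two-sided test. Binary and time-to-event outcomes use different formulas, and `n_per_group` is a hypothetical helper name.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Participants needed per arm to detect a mean difference `delta`.

    Normal approximation for a two-sided test comparing two means with
    equal group sizes and common standard deviation `sd`.
    """
    if delta <= 0 or sd <= 0:
        raise ValueError("delta and sd must be positive")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = std_normal.inv_cdf(power)           # about 0.84 for 80 percent power
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)  # round up to whole participants


if __name__ == "__main__":
    # Hypothetical: detect a 5 mmHg difference in systolic BP when the SD is 10 mmHg.
    print(n_per_group(delta=5, sd=10))  # 63
```

The result is the number to be analyzed in each arm; trials usually inflate it to allow for expected dropout.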

Question 13. Prespecified outcomes

Investigators should prespecify outcomes reported in a study for hypothesis testing–which is the reason for conducting an RCT. Without prespecified outcomes, the study may be reporting ad hoc analyses, simply looking for differences supporting desired findings. Investigators also should prespecify subgroups being examined. Most RCTs conduct numerous post hoc analyses as a way of exploring findings and generating additional hypotheses. The intent of this question is to give more weight to reports that are not simply exploratory in nature.

Question 14. Intention-to-treat analysis

Intention-to-treat (ITT) means everybody who was randomized is analyzed according to the original group to which they were assigned. This is an extremely important concept because conducting an ITT analysis preserves the whole reason for doing a randomized trial; that is, to compare groups that differ only in the intervention being tested. When the ITT philosophy is not followed, groups being compared may no longer be the same. In this situation, the study would likely be rated poor. However, if an investigator used another type of analysis that could be viewed as valid, this would be explained in the "other" box on the quality assessment form. Some researchers use a completers analysis (an analysis of only the participants who completed the intervention and the study), which introduces significant potential for bias. Characteristics of participants who do not complete the study are unlikely to be the same as those who do. The likely impact of participants withdrawing from a study treatment must be considered carefully. ITT analysis provides a more conservative (potentially less biased) estimate of effectiveness.
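The difference between the two approaches can be shown with a small, entirely hypothetical dataset in Python. The sketch assumes outcome data are available for every randomized participant (for example, from registry followup), so the ITT analysis needs no imputation; real trials must also deal with missing outcomes. In the made-up data, the two participants who stopped the intervention both had events, which is exactly the kind of difference between completers and non-completers the guidance warns about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Participant:
    assigned_arm: str  # arm assigned at randomization
    completed: bool    # completed the intervention and the study
    event: bool        # outcome; assumed known for everyone in this sketch


def event_rate(participants, arm, completers_only=False):
    """Event rate in `arm`, grouping people by the arm they were randomized to.

    completers_only=False is an intention-to-treat analysis;
    completers_only=True drops anyone who did not complete (completers analysis).
    """
    group = [p for p in participants
             if p.assigned_arm == arm and (p.completed or not completers_only)]
    if not group:
        raise ValueError(f"no participants to analyze in arm {arm!r}")
    return sum(p.event for p in group) / len(group)


# Hypothetical trial of 20 participants.
trial = (
    [Participant("treatment", True, False)] * 7
    + [Participant("treatment", True, True)]
    + [Participant("treatment", False, True)] * 2  # stopped early, had events
    + [Participant("control", True, True)] * 3
    + [Participant("control", True, False)] * 7
)

if __name__ == "__main__":
    for arm in ("treatment", "control"):
        print(arm,
              "ITT:", event_rate(trial, arm),
              "completers:", event_rate(trial, arm, completers_only=True))
```

Under ITT both arms have a 30 percent event rate. The completers analysis reports 12.5 percent versus 30 percent, an apparent benefit created entirely by excluding the two non-completers.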

General Guidance for Determining the Overall Quality Rating of Controlled Intervention Studies

The questions on the assessment tool were designed to help reviewers focus on the key concepts for evaluating a study's internal validity. They are not intended to create a list that is simply tallied up to arrive at a summary judgment of quality.

Internal validity is the extent to which the results (effects) reported in a study can truly be attributed to the intervention being evaluated and not to flaws in the design or conduct of the study; in other words, it is the ability of the study to support causal conclusions about the effects of the intervention being tested. Such flaws can increase the risk of bias. Critical appraisal involves considering the potential for allocation bias, measurement bias, or confounding (the mixture of exposures that one cannot tease out from each other). Examples of confounding include co-interventions, baseline differences in patient characteristics, and other issues addressed in the questions above. High risk of bias translates to a rating of poor quality. Low risk of bias translates to a rating of good quality.

Fatal flaws: If a study has a "fatal flaw," then the risk of bias is significant, and the study is of poor quality. Examples of fatal flaws in RCTs include high dropout rates, high differential dropout rates, and the absence of an ITT analysis or the use of another unsuitable statistical analysis (e.g., a completers-only analysis).

Generally, when evaluating a study, one will not see a "fatal flaw"; however, one will find some risk of bias. During training, reviewers were instructed to look for the potential for bias in studies by focusing on the concepts underlying the questions in the tool. For any box checked "no," reviewers were told to ask: "What is the potential risk of bias that may be introduced by this flaw?" That is, does this factor cause one to doubt the results that were reported in the study?

NHLBI staff provided reviewers with background reading on critical appraisal, while emphasizing that the best approach to use is to think about the questions in the tool in determining the potential for bias in a study. The staff also emphasized that each study has specific nuances; therefore, reviewers should familiarize themselves with the key concepts.

Quality Assessment of Systematic Reviews and Meta-Analyses - Study Quality Assessment Tools

Guidance for Quality Assessment Tool for Systematic Reviews and Meta-Analyses

A systematic review is a study that attempts to answer a question by synthesizing the results of primary studies while using strategies to limit bias and random error.[424] These strategies include a comprehensive search of all potentially relevant articles and the use of explicit, reproducible criteria in the selection of articles included in the review. Research designs and study characteristics are appraised, data are synthesized, and results are interpreted using a predefined systematic approach that adheres to evidence-based methodological principles.

Systematic reviews can be qualitative or quantitative. A qualitative systematic review summarizes the results of the primary studies but does not combine the results statistically. A quantitative systematic review, or meta-analysis, is a type of systematic review that employs statistical techniques to combine the results of the different studies into a single pooled estimate of effect, often given as an odds ratio. The guidance document below is organized by question number from the tool for quality assessment of systematic reviews and meta-analyses.
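To illustrate what combining results into a single pooled estimate involves, here is a minimal Python sketch of fixed-effect, inverse-variance pooling of odds ratios from 2×2 tables. Fixed-effect inverse-variance weighting is only one pooling method (random-effects and Mantel-Haenszel methods are common alternatives), the 1.96 multiplier gives an approximate 95 percent confidence interval, and the function names and study counts are hypothetical.

```python
import math


def log_odds_ratio(a, b, c, d):
    """Log odds ratio and its variance from one study's 2x2 table.

    a, b: events and non-events in the intervention arm
    c, d: events and non-events in the control arm
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("zero cell: a continuity correction would be needed")
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def pooled_odds_ratio(tables):
    """Fixed-effect inverse-variance pooled OR with an approximate 95% CI."""
    estimates = [log_odds_ratio(*table) for table in tables]
    weights = [1 / variance for _, variance in estimates]  # precise studies count more
    pooled_log = sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return (math.exp(pooled_log),
            math.exp(pooled_log - 1.96 * se),
            math.exp(pooled_log + 1.96 * se))


if __name__ == "__main__":
    # Three hypothetical trials: (a, b, c, d) as described above.
    studies = [(12, 88, 20, 80), (30, 170, 45, 155), (8, 42, 10, 40)]
    print(pooled_odds_ratio(studies))
```

Pooling is done on the log scale because log odds ratios are approximately normally distributed; the result is transformed back to an odds ratio at the end.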

Question 1. Focused question

The review should be based on a question that is clearly stated and well-formulated. An example would be a question that uses the PICO (population, intervention, comparator, outcome) format, with all components clearly described.

Question 2. Eligibility criteria

The eligibility criteria used to determine whether studies were included or excluded should be clearly specified and predefined. It should be clear to the reader why studies were included or excluded.

Question 3. Literature search

The search strategy should employ a comprehensive, systematic approach in order to capture all possible evidence pertaining to the question of interest. At a minimum, a comprehensive review has the following attributes:

- Electronic searches should be conducted using multiple scientific literature databases, such as MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, PsychLit, and others as appropriate for the subject matter.

- Manual searches of references found in articles and textbooks should supplement the electronic searches.

Additional search strategies that may be used to improve the yield include the following:

- Studies published in other countries

- Studies published in languages other than English

- Identification, by experts in the field, of studies and articles that may have been missed

- Search of grey literature, including technical reports and other papers from government agencies or scientific groups or committees; presentations and posters from scientific meetings, conference proceedings, unpublished manuscripts; and others. Searching the grey literature is important (whenever feasible) because sometimes only positive studies with significant findings are published in the peer-reviewed literature, which can bias the results of a review.

In their reviews, researchers should describe the literature search strategy clearly enough that others could reproduce it with similar results.

Question 4. Dual review for determining which studies to include and exclude

Titles, abstracts, and full-text articles (when indicated) should be reviewed by two independent reviewers to determine which studies to include and exclude in the review. Disagreements should be resolved through discussion and consensus or with third parties, and the review process, including the method for settling disagreements, should be clearly stated.

Question 5. Quality appraisal for internal validity

Each included study should be appraised for internal validity (study quality assessment) using a standardized approach for rating the quality of the individual studies. Ideally, at least two independent reviewers should appraise each study for internal validity. However, there is no single commonly accepted, standardized tool for rating the quality of studies. Therefore, reviewers looked in the research papers for an assessment of the quality of each study and a clear description of the process used.

Question 6. List and describe included studies

All included studies should be listed in the review, along with descriptions of their key characteristics, presented in either narrative or table format.

Question 7. Publication bias

Publication bias is a term used when studies with positive results have a higher likelihood of being published, being published rapidly, being published in higher impact journals, being published in English, being published more than once, or being cited by others.[425,426] Publication bias can be linked to favorable or unfavorable treatment of research findings due to investigators, editors, industry, commercial interests, or peer reviewers. To minimize the potential for publication bias, researchers can conduct a comprehensive literature search that includes the strategies discussed in Question 3.

A funnel plot, a scatter plot of each component study's effect estimate in a meta-analysis against a measure of its precision (such as its standard error or sample size), is a commonly used graphical method for detecting publication bias. If there is no significant publication bias, the graph looks like a symmetrical inverted funnel.

Reviewers assessed and clearly described the likelihood of publication bias.

Question 8. Heterogeneity

Heterogeneity describes important differences among the studies included in a meta-analysis that may make it inappropriate to combine them.[427] Heterogeneity can be clinical (e.g., important differences between study participants, baseline disease severity, and interventions); methodological (e.g., important differences in the design and conduct of the study); or statistical (e.g., important differences in the quantitative results or reported effects).

Researchers usually assess clinical or methodological heterogeneity qualitatively by determining whether it makes sense to combine studies. For example:

- Should a study evaluating the effects of an intervention on CVD risk that involves elderly male smokers with hypertension be combined with a study that involves healthy adults ages 18 to 40? (Clinical Heterogeneity)

- Should a study that uses a randomized controlled trial (RCT) design be combined with a study that uses a case-control study design? (Methodological Heterogeneity)

Statistical heterogeneity describes the degree of variation in the effect estimates from a set of studies; it is assessed quantitatively. The two most common methods used to assess statistical heterogeneity are the Q test (also known as the χ² or chi-square test) and the I² statistic.
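Both measures can be computed from each study's effect estimate $y_i$ and variance $v_i$: with inverse-variance weights $w_i = 1/v_i$ and weighted mean $\bar{y}$, Cochran's $Q = \sum_i w_i (y_i - \bar{y})^2$ on $k-1$ degrees of freedom, and $I^2 = \max\{0, (Q - (k-1))/Q\} \times 100\%$. The Python sketch below implements these standard formulas; the function name and example data are hypothetical. A p-value for Q would compare it with a chi-square distribution on $k-1$ degrees of freedom (for example, with SciPy's `scipy.stats.chi2.sf`), which is omitted here to keep the sketch dependency-free.

```python
def heterogeneity(effects, variances):
    """Return (Cochran's Q, degrees of freedom, I-squared in percent)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1 / v for v in variances]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I-squared: share of total variation beyond what chance alone would produce.
    i_squared = 0.0 if q <= 0 else max(0.0, (q - df) / q) * 100
    return q, df, i_squared


if __name__ == "__main__":
    # Hypothetical log odds ratios and their variances from four studies.
    print(heterogeneity([0.10, 0.35, -0.05, 0.60], [0.04, 0.02, 0.05, 0.03]))
```

Because Q grows with the number of studies, I² is often reported alongside it as a measure that is easier to compare across meta-analyses of different sizes.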

Reviewers examined studies to determine if an assessment for heterogeneity was conducted and clearly described. If the studies are found to be heterogeneous, the investigators should explore and explain the causes of the heterogeneity, and determine what influence, if any, the study differences had on overall study results.

Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies - Study Quality Assessment Tools

Guidance for Assessing the Quality of Observational Cohort and Cross-Sectional Studies

The guidance document below is organized by question number from the tool for quality assessment of observational cohort and cross-sectional studies.

Question 1. Research question

Did the authors describe their goal in conducting this research? Is it easy to understand what they were looking to find? This issue is important for any scientific paper of any type. Higher quality scientific research explicitly defines a research question.

Questions 2 and 3. Study population

Did the authors describe the group of people from which the study participants were selected or recruited, using demographics, location, and time period? If you were to conduct this study again, would you know who to recruit, from where, and from what time period? Is the cohort population free of the outcomes of interest at the time they were recruited?

An example would be men over 40 years old with type 2 diabetes who began seeking medical care at Phoenix Good Samaritan Hospital between January 1, 1990 and December 31, 1994. In this example, the population is clearly described as: (1) who (men over 40 years old with type 2 diabetes); (2) where (Phoenix Good Samaritan Hospital); and (3) when (between January 1, 1990 and December 31, 1994). Another example is women aged 34 to 59 years in 1980 who were in the nursing profession and had no known coronary disease, stroke, cancer, hypercholesterolemia, or diabetes, and who were recruited from the 11 most populous States, with contact information obtained from State nursing boards.

In cohort studies, it is crucial that the population at baseline is free of the outcome of interest. For example, the nurses' population above, which had no known coronary disease at recruitment, would be an appropriate group in which to study incident coronary disease. This information is usually found either in descriptions of population recruitment, definitions of variables, or inclusion/exclusion criteria.

You may need to look at prior papers on methods in order to make the assessment for this question. Those papers are usually in the reference list.

If fewer than 50% of eligible persons participated in the study, then there is concern that the study population does not adequately represent the target population. This increases the risk of bias.

Question 4. Groups recruited from the same population and uniform eligibility criteria

Were the inclusion and exclusion criteria developed prior to recruitment or selection of the study population? Were the same underlying criteria used for all of the subjects involved? This issue is related to the description of the study population, above, and you may find the information for both of these questions in the same section of the paper.

Most cohort studies begin with the selection of the cohort; participants in this cohort are then measured or evaluated to determine their exposure status. However, some cohort studies may recruit or select exposed participants at a different time or place than unexposed participants, especially retrospective cohort studies, in which data are obtained from the past (retrospectively) but the analysis still examines exposures prior to outcomes. For example, one research question could be whether diabetic men with clinical depression are at higher risk for cardiovascular disease than those without clinical depression. So, diabetic men with depression might be selected from a mental health clinic, while diabetic men without depression might be selected from an internal medicine or endocrinology clinic. This study recruits groups from different clinic populations, so this example would get a "no."

However, the women nurses described in the question above were selected based on the same inclusion/exclusion criteria, so that example would get a "yes."

Question 5. Sample size justification

Did the authors present their reasons for selecting or recruiting the number of people included or analyzed? Do they note or discuss the statistical power of the study? This question is about whether or not the study had enough participants to detect an association if one truly existed.

A paragraph in the methods section of the article may explain the sample size needed to detect a hypothesized difference in outcomes. You may also find a discussion of power in the discussion section (for example, a statement that the study had 85 percent power to detect a 20 percent increase in the rate of an outcome of interest, with a two-sided alpha of 0.05). Sometimes estimates of variance and/or estimates of effect size are given instead of sample size calculations. In any of these cases, the answer would be "yes."

However, observational cohort studies often do not report anything about power or sample sizes because the analyses are exploratory in nature. In this case, the answer would be "no." This is not a "fatal flaw." It just may indicate that attention was not paid to whether the study was sufficiently sized to answer a prespecified question–i.e., it may have been an exploratory, hypothesis-generating study.

Question 6. Exposure assessed prior to outcome measurement

This question is important because, in order to determine whether an exposure causes an outcome, the exposure must come before the outcome.

For some prospective cohort studies, the investigator enrolls the cohort and then determines the exposure status of various members of the cohort (large epidemiological studies like Framingham used this approach). However, for other cohort studies, the cohort is selected based on its exposure status, as in the example above of depressed diabetic men (the exposure being depression). Other examples include a cohort identified by its exposure to fluoridated drinking water and then compared to a cohort living in an area without fluoridated water, or a cohort of military personnel exposed to combat in the Gulf War compared to a cohort of military personnel not deployed in a combat zone.

With either of these types of cohort studies, the cohort is followed forward in time (i.e., prospectively) to assess the outcomes that occurred in the exposed members compared to nonexposed members of the cohort. Therefore, you begin the study in the present by looking at groups that were exposed (or not) to some biological or behavioral factor, intervention, etc., and then you follow them forward in time to examine outcomes. If a cohort study is conducted properly, the answer to this question should be "yes," since the exposure status of members of the cohort was determined at the beginning of the study before the outcomes occurred.

For retrospective cohort studies, the same principle applies. The difference is that, rather than identifying a cohort in the present and following them forward in time, the investigators go back in time (i.e., retrospectively) and select a cohort based on their exposure status in the past and then follow them forward to assess the outcomes that occurred in the exposed and nonexposed cohort members. Because in retrospective cohort studies the exposure and outcomes may have already occurred (it depends on how long they follow the cohort), it is important to make sure that the exposure preceded the outcome.

Sometimes cross-sectional studies are conducted (or cross-sectional analyses of cohort-study data), where the exposures and outcomes are measured during the same timeframe. As a result, cross-sectional analyses provide weaker evidence than regular cohort studies regarding a potential causal relationship between exposures and outcomes. For cross-sectional analyses, the answer to Question 6 should be "no."

Question 7. Sufficient timeframe to see an effect

Did the study allow enough time for a sufficient number of outcomes to occur or be observed, or enough time for an exposure to have a biological effect on an outcome? In the examples given above, if clinical depression has a biological effect on increasing risk for CVD, such an effect may take years. In the other example, if higher dietary sodium increases BP, a short timeframe may be sufficient to assess its association with BP, but a longer timeframe would be needed to examine its association with heart attacks.

The issue of timeframe is important to enable meaningful analysis of the relationships between exposures and outcomes to be conducted. This often requires at least several years, especially when looking at health outcomes, but it depends on the research question and outcomes being examined.

Cross-sectional analyses allow no time to see an effect, since the exposures and outcomes are assessed at the same time, so those would get a "no" response.

Question 8. Different levels of the exposure of interest

If the exposure can be defined as a range (examples: drug dosage, amount of physical activity, amount of sodium consumed), were multiple categories of that exposure assessed (for example, for drugs: not on the medication, on a low dose, medium dose, or high dose; for dietary sodium: higher than average U.S. consumption, lower than recommended consumption, or between the two)? Sometimes discrete categories of exposure are not used, but instead exposures are measured as continuous variables (for example, mg/day of dietary sodium or BP values).

In any case, studying different levels of exposure (where possible) enables investigators to assess trends or dose-response relationships between exposures and outcomes–e.g., the higher the exposure, the greater the rate of the health outcome. The presence of trends or dose-response relationships lends credibility to the hypothesis of causality between exposure and outcome.

For some exposures, however, this question may not be applicable (e.g., the exposure may be a dichotomous variable like living in a rural setting versus an urban setting, or vaccinated/not vaccinated with a one-time vaccine). If there are only two possible exposures (yes/no), then this question should be given an "NA," and it should not count negatively towards the quality rating.

Question 9. Exposure measures and assessment

Were the exposure measures defined in detail? Were the tools or methods used to measure exposure accurate and reliable–for example, have they been validated or are they objective? This issue is important as it influences confidence in the reported exposures. When exposures are measured with less accuracy or validity, it is harder to see an association between exposure and outcome even if one exists. Also as important is whether the exposures were assessed in the same manner within groups and between groups; if not, bias may result.

For example, retrospective self-report of dietary salt intake is not as valid and reliable as prospectively using a standardized dietary log plus testing participants' urine for sodium content. Another example is measurement of BP, where there may be quite a difference between usual care, where clinicians measure BP however it is done in their practice setting (which can vary considerably), and use of trained BP assessors using standardized equipment (e.g., the same BP device which has been tested and calibrated) and a standardized protocol (e.g., patient is seated for 5 minutes with feet flat on the floor, BP is taken twice in each arm, and all four measurements are averaged). In each of these cases, the former would get a "no" and the latter a "yes."
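Part of what makes a standardized protocol reliable is that it can be written down as an explicit procedure and applied identically to every participant in every group. The Python sketch below encodes the example protocol from the text (seated for at least 5 minutes, two readings in each arm, all four averaged); the data layout and function name are hypothetical.

```python
from statistics import mean


def standardized_bp(readings, seated_minutes):
    """Average BP under the example protocol: 5 minutes seated, 2 readings per arm.

    readings: {"left": [(systolic, diastolic), ...], "right": [...]}
    Returns (mean systolic, mean diastolic) over all four readings.
    """
    if seated_minutes < 5:
        raise ValueError("protocol requires at least 5 minutes seated before measuring")
    if set(readings) != {"left", "right"} or any(len(r) != 2 for r in readings.values()):
        raise ValueError("protocol requires exactly two readings in each arm")
    all_readings = readings["left"] + readings["right"]
    return (mean(s for s, _ in all_readings), mean(d for _, d in all_readings))


if __name__ == "__main__":
    # Hypothetical participant.
    visit = {"left": [(130, 80), (128, 82)], "right": [(134, 84), (132, 78)]}
    print(standardized_bp(visit, seated_minutes=5))  # (131, 81)
```

Rejecting measurements that do not follow the protocol, rather than silently averaging whatever is available, is what keeps the exposure assessment consistent across groups.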

Here is a final example that illustrates why it is important to assess exposures consistently across all groups: If people with higher BP (exposed cohort) are seen by their providers more frequently than those without elevated BP (nonexposed group), this also increases the chances of detecting and documenting changes in health outcomes, including CVD-related events. This may lead to the conclusion that higher BP leads to more CVD events. That conclusion may be true, but the difference could also arise because the subjects with higher BP were seen more often; thus, more CVD-related events were detected and documented simply because they had more encounters with the health care system. Unequal assessment could therefore bias the results and lead to an erroneous conclusion.

Question 10. Repeated exposure assessment

Was the exposure for each person measured more than once during the course of the study period? Multiple measurements with the same result increase our confidence that the exposure status was correctly classified. Also, multiple measurements enable investigators to look at changes in exposure over time, for example, people who consumed high levels of dietary sodium throughout the followup period, compared with those who started out high and then reduced their intake, and with those who consumed low levels throughout. Once again, this may not be applicable in all cases. In many older studies, exposure was measured only at baseline. However, multiple exposure measurements do result in a stronger study design.

Question 11. Outcome measures

Were the outcomes defined in detail? Were the tools or methods for measuring outcomes accurate and reliable–for example, have they been validated or are they objective? This issue is important because it influences confidence in the validity of study results. Also important is whether the outcomes were assessed in the same manner within groups and between groups.

An example of an outcome measure that is objective, accurate, and reliable is death–the outcome measured with more accuracy than any other. But even with a measure as objective as death, there can be differences in the accuracy and reliability of how death was assessed by the investigators. Did they base it on an autopsy report, death certificate, death registry, or report from a family member? Another example is a study of whether dietary fat intake is related to blood cholesterol level (cholesterol level being the outcome), and the cholesterol level is measured from fasting blood samples that are all sent to the same laboratory. These examples would get a "yes." An example of a "no" would be self-report by subjects that they had a heart attack, or self-report of how much they weigh (if body weight is the outcome of interest).

Similar to the example in Question 9, results may be biased if one group (e.g., people with high BP) is seen more frequently than another group (people with normal BP), because more frequent encounters with the health care system increase the chances of outcomes being detected and documented.

Question 12. Blinding of outcome assessors

Blinding means that outcome assessors did not know whether the participant was exposed or unexposed. It is also sometimes called "masking." The objective is to look for evidence in the article that the person(s) assessing the outcome(s) for the study (for example, examining medical records to determine the outcomes that occurred in the exposed and comparison groups) is masked to the exposure status of the participant. Sometimes the person measuring the exposure is the same person conducting the outcome assessment. In this case, the outcome assessor would most likely not be blinded to exposure status because they also took measurements of exposures. If so, make a note of that in the comments section.

As you assess this criterion, think about whether it is likely that the person(s) doing the outcome assessment would know (or be able to figure out) the exposure status of the study participants. If the answer is no, then blinding is adequate. An example of adequate blinding of the outcome assessors is to create a separate committee, whose members were not involved in the care of the patient and had no information about the study participants' exposure status. The committee would then be provided with copies of participants' medical records, which had been stripped of any potential exposure information or personally identifiable information. The committee would then review the records for prespecified outcomes according to the study protocol. If blinding was not possible, which is sometimes the case, mark "NA" and explain the potential for bias.
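The record-stripping step described above might look like the following minimal sketch. The field names and the `blind_record` helper are hypothetical; a real protocol would define which fields reveal exposure status or identity. The sketch replaces identifiers with a neutral study code so the committee can report outcomes back without knowing whose record it reviewed.

```python
from typing import Any

# Hypothetical field names; a real protocol would define which fields reveal
# exposure status or identity.
EXPOSURE_FIELDS = {"systolic_bp", "bp_category", "antihypertensive_use"}
IDENTIFYING_FIELDS = {"name", "date_of_birth", "medical_record_number"}


def blind_record(record: dict[str, Any], study_id: str) -> dict[str, Any]:
    """Copy a record for the outcome committee, dropping exposure and identity fields."""
    hidden = EXPOSURE_FIELDS | IDENTIFYING_FIELDS
    blinded = {key: value for key, value in record.items() if key not in hidden}
    # A neutral code lets the committee report outcomes without knowing who it is.
    blinded["study_id"] = study_id
    return blinded


record = {
    "name": "Jane Doe",
    "medical_record_number": "000123",
    "systolic_bp": 162,
    "discharge_diagnosis": "ischemic stroke",
}
print(blind_record(record, study_id="S-0042"))
```

Removing whole fields does not catch exposure information buried in free text (for example, a clinical note mentioning "poorly controlled hypertension"), which is exactly why the reviewer's question is whether the assessor could know or figure out exposure status, not merely whether certain fields were deleted.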

Question 13. Followup rate

Higher overall followup rates are always better than lower ones, although higher rates are expected in shorter studies and lower rates are common in studies of longer duration. An overall followup rate of 80 percent or more of participants whose exposures were measured at baseline is usually considered acceptable. However, this is just a general guideline. For example, a 6-month cohort study examining the relationship between dietary sodium intake and BP level may have over 90 percent followup, but a 20-year cohort study examining effects of sodium intake on stroke may have only a 65 percent followup rate.
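The followup rate is simply the number of participants followed up divided by the number whose exposures were measured at baseline. The sketch below computes it and compares it with the 80 percent guideline; the participant counts are hypothetical, chosen to match the percentages in the two examples above.

```python
def followup_rate(n_baseline: int, n_followed: int) -> float:
    """Share of participants with baseline exposure data who were followed up."""
    if n_baseline <= 0:
        raise ValueError("n_baseline must be positive")
    if not 0 <= n_followed <= n_baseline:
        raise ValueError("n_followed must be between 0 and n_baseline")
    return n_followed / n_baseline


def meets_guideline(rate: float, threshold: float = 0.80) -> bool:
    """Apply the general (not absolute) 80 percent guideline."""
    return rate >= threshold


# Hypothetical counts for the two example studies described above.
for label, baseline, followed in [("6-month sodium/BP study", 1000, 920),
                                  ("20-year sodium/stroke study", 1000, 650)]:
    rate = followup_rate(baseline, followed)
    print(f"{label}: {rate:.0%} followed up, "
          f"meets 80% guideline: {meets_guideline(rate)}")
```

Falling short of the threshold is a prompt to weigh the study's duration and the potential for bias from loss to followup, not an automatic "poor" rating.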

Question 14. Statistical analyses

Were key potential confounding variables measured and adjusted for, such as by statistical adjustment for baseline differences? Logistic regression or other regression methods are often used to account for the influence of variables not of interest.

This is a key issue in cohort studies, because statistical analyses need to control for potential confounders, in contrast to an RCT, where the randomization process controls for potential confounders. All key factors that may be associated with both the exposure of interest and the outcome, and that are not themselves of interest to the research question, should be controlled for in the analyses.

For example, in a study of the relationship between cardiorespiratory fitness and CVD events (heart attacks and strokes), the study should control for age, BP, blood cholesterol, and body weight, because all of these factors are associated both with low fitness and with CVD events. Well-done cohort studies control for multiple potential confounders.
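The effect of adjustment can be seen with a small stratified example. The guidance mentions logistic regression and other regression methods; this sketch instead swaps in a simpler technique, the Mantel-Haenszel pooled risk ratio, which adjusts for one confounder by stratifying on it. All counts are hypothetical: within each age group low fitness carries no extra risk, but older people are both less fit and more likely to have CVD events, so the crude (unadjusted) estimate is misleading.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    """2x2 counts within one level of a confounder (e.g., one age group)."""
    exposed_cases: int
    exposed_noncases: int
    unexposed_cases: int
    unexposed_noncases: int

    @property
    def total(self) -> int:
        return (self.exposed_cases + self.exposed_noncases
                + self.unexposed_cases + self.unexposed_noncases)


def crude_risk_ratio(strata: list[Stratum]) -> float:
    """Risk ratio after collapsing all strata, ignoring the confounder."""
    a = sum(s.exposed_cases for s in strata)
    n1 = sum(s.exposed_cases + s.exposed_noncases for s in strata)
    c = sum(s.unexposed_cases for s in strata)
    n0 = sum(s.unexposed_cases + s.unexposed_noncases for s in strata)
    return (a / n1) / (c / n0)


def mantel_haenszel_risk_ratio(strata: list[Stratum]) -> float:
    """Risk ratio pooled across strata, i.e., adjusted for the stratifying variable."""
    num = sum(s.exposed_cases * (s.unexposed_cases + s.unexposed_noncases) / s.total
              for s in strata)
    den = sum(s.unexposed_cases * (s.exposed_cases + s.exposed_noncases) / s.total
              for s in strata)
    return num / den


# Hypothetical counts; exposure = low cardiorespiratory fitness.
strata = [
    Stratum(5, 95, 20, 380),   # younger: 5% risk in both exposure groups
    Stratum(60, 340, 15, 85),  # older: 15% risk in both exposure groups
]
print(f"crude RR:        {crude_risk_ratio(strata):.2f}")
print(f"age-adjusted RR: {mantel_haenszel_risk_ratio(strata):.2f}")
```

Here the crude risk ratio is about 1.86, while the age-adjusted estimate is 1.00. Stratifying on a single confounder keeps the arithmetic transparent; once several confounders (age, BP, cholesterol, body weight) must be handled at once, the strata become sparse, which is one reason regression methods are used in practice.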

Some general guidance for determining the overall quality rating of observational cohort and cross-sectional studies

The questions on the form are designed to help you focus on the key concepts for evaluating the internal validity of a study. They are not intended to create a list that you simply tally up to arrive at a summary judgment of quality.

Internal validity for cohort studies is the extent to which the results reported in the study can truly be attributed to the exposure being evaluated and not to flaws in the design or conduct of the study–in other words, the ability of the study to draw associative conclusions about the effects of the exposures being studied on outcomes. Any such flaws can increase the risk of bias.

Critical appraisal involves considering the potential for selection bias, information bias, measurement bias, or confounding (the mixture of exposures that one cannot tease out from each other). Examples of confounding include co-interventions, differences at baseline in patient characteristics, and other issues throughout the questions above. High risk of bias translates to a rating of poor quality. Low risk of bias translates to a rating of good quality. (Thus, the greater the risk of bias, the lower the quality rating of the study.)

In addition, the more attention the study design pays to issues that can help determine whether there is a causal relationship between the exposure and outcome, the higher the quality of the study. These include exposures occurring prior to outcomes, evaluation of a dose-response gradient, accuracy of measurement of both exposure and outcome, sufficient timeframe to see an effect, and appropriate control for confounding, all of which are concepts reflected in the tool.

Generally, when you evaluate a study, you will not see a "fatal flaw," but you will find some risk of bias. By focusing on the concepts underlying the questions in the quality assessment tool, you should ask yourself about the potential for bias in the study you are critically appraising. For any box where you check "no" you should ask, "What is the potential risk of bias resulting from this flaw in study design or execution?" That is, does this factor cause you to doubt the results that are reported in the study or doubt the ability of the study to accurately assess an association between exposure and outcome?

The best approach is to think about the questions in the tool and how each one tells you something about the potential for bias in a study. The more you familiarize yourself with the key concepts, the more comfortable you will be with critical appraisal. Examples of studies rated good, fair, and poor are useful, but each study must be assessed on its own based on the details that are reported and consideration of the concepts for minimizing bias.

Quality Assessment of Case-Control Studies - Study Quality Assessment Tools

Guidance for Assessing the Quality of Case-Control Studies

The guidance document below is organized by question number from the tool for quality assessment of case-control studies.

Question 1. Research question

Did the authors describe their goal in conducting this research? Is it easy to understand what they were looking to find? This issue is important for any scientific paper of any type. High quality scientific research explicitly defines a research question.

Question 2. Study population

Did the authors describe the group of individuals from which the cases and controls were selected or recruited, using demographics, location, and time period? If the investigators conducted this study again, would they know exactly who to recruit, from where, and from what time period?

Investigators identify case-control study populations by location, time period, and inclusion criteria for cases (individuals with the disease, condition, or problem) and controls (individuals without the disease, condition, or problem). For example, the population for a study of lung cancer and chemical exposure would be all incident cases of lung cancer diagnosed in patients ages 35 to 79, from January 1, 2003 to December 31, 2008, living in Texas during that entire time period, as well as controls without lung cancer recruited from the same population during the same time period. The population is clearly described as: (1) who (men and women ages 35 to 79 with (cases) and without (controls) incident lung cancer); (2) where (living in Texas); and (3) when (between January 1, 2003 and December 31, 2008).

Other studies may use disease registries or data from cohort studies to identify cases. In these studies, the populations are individuals who live in the area covered by the disease registry or who are included in a cohort study (i.e., nested case-control or case-cohort). For example, a study of the relationship between vitamin D intake and myocardial infarction might use patients identified via the GRACE registry, a database of heart attack patients.

NHLBI staff encouraged reviewers to examine prior papers on methods (listed in the reference list) to make this assessment, if necessary.

Question 3. Target population and case representation

In order for a study to truly address the research question, the target population–the population from which the study population is drawn and to which study results are believed to apply–should be carefully defined. Some authors may compare characteristics of the study cases to characteristics of cases in the target population, either in text or in a table. When study cases are shown to be representative of cases in the appropriate target population, it increases the likelihood that the study was well-designed per the research question.

However, because these statistics are frequently difficult or impossible to measure, publications should not be penalized if case representation is not shown. For most papers, the response to question 3 will be "NR." The two subquestions (target population and case representation) are combined because the answer to the second subquestion, case representation, determines the response to this item. However, it cannot be determined without considering the response to the first subquestion. For example, if the answer to the first subquestion is "yes," and the second, "CD," then the response for item 3 is "CD."

Question 4. Sample size justification

Did the authors discuss their reasons for selecting or recruiting the number of individuals included? Did they discuss the statistical power of the study and provide a sample size calculation to ensure that the study is adequately powered to detect an association (if one exists)? This question does not refer to a description of the manner in which different groups were included or excluded using the inclusion/exclusion criteria (e.g., "Final study size was 1,378 participants after exclusion of 461 patients with missing data" is not considered a sample size justification for the purposes of this question).
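As a point of reference for what a sample size calculation involves, here is a minimal sketch for an unmatched case-control study with one control per case. It uses the standard normal-approximation formula for comparing two proportions (two-sided test, no continuity correction). The function name and the inputs (30 percent exposure prevalence among controls, a target odds ratio of 2) are hypothetical; published studies may use other formulas, for example with continuity corrections, unequal control-to-case ratios, or matching.

```python
import math
from statistics import NormalDist


def cases_needed(p0: float, odds_ratio: float,
                 alpha: float = 0.05, power: float = 0.80) -> int:
    """Cases (and equally many controls) needed to detect `odds_ratio`.

    p0: assumed exposure prevalence among controls.
    Normal-approximation formula for two proportions, two-sided test.
    """
    if not 0 < p0 < 1:
        raise ValueError("p0 must be between 0 and 1")
    if odds_ratio <= 0 or odds_ratio == 1:
        raise ValueError("odds_ratio must be positive and different from 1")
    # Exposure prevalence among cases implied by p0 and the odds ratio.
    p1 = odds_ratio * p0 / (1 + p0 * (odds_ratio - 1))
    p_bar = (p0 + p1) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p0 * (1 - p0))) ** 2
    return math.ceil(numerator / (p1 - p0) ** 2)


# Hypothetical inputs: 30% of controls exposed, aiming to detect OR = 2.
print(cases_needed(p0=0.30, odds_ratio=2.0))
```

A justification of this kind states the assumed exposure prevalence, the smallest association worth detecting, and the chosen significance level and power, which is what distinguishes it from a simple account of how many participants remained after exclusions.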

An article's methods section usually reports the sample size, the size needed to detect differences in exposure, and the statistical power.

Question 5. Groups recruited from the same population

To determine whether cases and controls were recruited from the same population, one can ask hypothetically, "If a control were to develop the outcome of interest (the condition that was used to select cases), would that person have been eligible to become a case?" Case-control studies begin with the selection of the cases (those with the outcome of interest, e.g., lung cancer) and controls (those in whom the outcome is absent). Cases and controls are then evaluated and categorized by their exposure status. For the lung cancer example, cases and controls were recruited from hospitals in a given region. One may reasonably assume that controls in the catchment area for the hospitals, or those already in the hospitals for a different reason, would attend those hospitals if they became cases; therefore, the controls are drawn from the same population as the cases. If the controls were recruited or selected from a different region (e.g., a State other than Texas) or time period (e.g., 1991-2000), then the cases and controls were recruited from different populations, and the answer to this question would be "no."

The following example further explores selection of controls. In a study, eligible cases were men and women, ages 18 to 39, who were diagnosed with atherosclerosis at hospitals in Perth, Australia, between July 1, 2000 and December 31, 2007. Appropriate controls for these cases might be sampled using voter registration information for men and women ages 18 to 39, living in Perth (population-based controls); they also could be sampled from patients without atherosclerosis at the same hospitals (hospital-based controls). As long as the controls are individuals who would have been eligible to be included in the study as cases (if they had been diagnosed with atherosclerosis), then the controls were selected appropriately from the same source population as cases.
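The hypothetical question "would this control have been eligible as a case?" amounts to applying the case definition (who, where, when) to each control, ignoring disease status. The sketch below encodes the Perth example that way. The `Person` type, the `index_date` attribute (taken to be the diagnosis date for cases and the sampling date for controls), and the sample controls are all hypothetical simplifications; a real protocol would apply its full inclusion and exclusion criteria.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Person:
    age: int
    city: str
    index_date: date  # diagnosis date for cases; sampling date for controls


# Case definition from the Perth example: who, where, and when.
MIN_AGE, MAX_AGE = 18, 39
CITY = "Perth"
START, END = date(2000, 7, 1), date(2007, 12, 31)


def could_have_been_case(p: Person) -> bool:
    """Would this person qualify as a case if diagnosed with atherosclerosis?"""
    return (MIN_AGE <= p.age <= MAX_AGE
            and p.city == CITY
            and START <= p.index_date <= END)


controls = {
    "C1": Person(age=27, city="Perth", index_date=date(2004, 5, 10)),
    "C2": Person(age=33, city="Sydney", index_date=date(2004, 5, 10)),
    "C3": Person(age=52, city="Perth", index_date=date(2006, 1, 3)),
}
for cid, person in controls.items():
    verdict = "same source population" if could_have_been_case(person) else "different population"
    print(cid, verdict)
```

Whether controls come from voter registration lists (population-based) or from the same hospitals (hospital-based), the same check applies.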

In a prospective case-control study, investigators may enroll individuals as cases at the time they are found to have the outcome of interest; the number of cases usually increases as time progresses. At the same time, they may recruit or select controls from the population without the outcome of interest. One way to identify or recruit cases is through a surveillance system. In turn, investigators can select controls from the population covered by that system. This is an example of population-based controls. Investigators also may identify and select cases from a cohort study population and identify controls from outcome-free individuals in the same cohort study. This is known as a nested case-control study.
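The text only requires that controls in a nested case-control study be outcome-free members of the same cohort. One common way to implement that, swapped in here as an illustration, is risk-set (incidence density) sampling: for each case, controls are drawn from cohort members who are still under followup and still outcome-free at the time the case occurs. The `Member` type, the times, and the seed below are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Member:
    member_id: str
    event_time: Optional[float]  # years to outcome; None if outcome-free throughout
    exit_time: float             # years under observation (event or end of followup)


def risk_set_sample(cohort: list[Member], controls_per_case: int,
                    seed: int = 0) -> dict[str, list[str]]:
    """Match each case to controls still at risk at the case's event time."""
    rng = random.Random(seed)  # fixed seed so the sampling is reproducible
    matched: dict[str, list[str]] = {}
    for case in cohort:
        if case.event_time is None:
            continue  # not a case
        t = case.event_time
        # At risk at time t: still under observation and outcome-free at t.
        # Members who become cases later are still eligible controls here.
        risk_set = [m.member_id for m in cohort
                    if m.member_id != case.member_id
                    and m.exit_time >= t
                    and (m.event_time is None or m.event_time > t)]
        k = min(controls_per_case, len(risk_set))
        matched[case.member_id] = rng.sample(risk_set, k)
    return matched


cohort = [
    Member("A", event_time=2.0, exit_time=2.0),
    Member("B", event_time=None, exit_time=5.0),
    Member("C", event_time=4.0, exit_time=4.0),
    Member("D", event_time=None, exit_time=1.0),  # left the study early
]
print(risk_set_sample(cohort, controls_per_case=1))
```

Because controls are drawn from the same cohort and must be outcome-free when each case occurs, the design satisfies the "same source population" question by construction.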

Question 6. Inclusion and exclusion criteria prespecified and applied uniformly