Single-Case Experimental Designs

by S. Andrew Garbacz, Thomas R. Kratochwill

LAST MODIFIED: 29 July 2020

DOI: 10.1093/obo/9780199828340-0265

Single-case experimental designs are a family of experimental designs that are characterized by researcher manipulation of an independent variable and repeated measurement of a dependent variable before (i.e., baseline) and after (i.e., intervention phase) introducing the independent variable. In single-case experimental designs a case is the unit of intervention and analysis (e.g., a child, a school). Because measurement within each case is conducted before and after manipulation of the independent variable, the case typically serves as its own control. Experimental variants of single-case designs provide a basis for determining a causal relation by replication of the intervention through (a) introducing and withdrawing the independent variable, (b) manipulating the independent variable across different phases, and (c) introducing the independent variable in a staggered fashion across different points in time. Due to their economy of resources, single-case designs may be useful during development activities and allow for rapid replication across studies.

Several sources provide overviews of single-case experimental designs. Barlow, et al. 2009 includes an overview for the development of single-case experimental designs, describes key considerations for designing and conducting single-case experimental design research, and reviews procedural elements, assessment strategies, and replication considerations. Kazdin 2011 provides detailed coverage of single-case experimental design variants as well as approaches for evaluating data in single-case experimental designs. Kratochwill and Levin 2014 describes key methodological features that underlie single-case experimental designs, including philosophical and statistical foundations and data evaluation. Ledford and Gast 2018 covers research conceptualization and writing, design variants within single-case experimental design, definitions of variables and associated measurement, and approaches to organize and evaluate data. Riley-Tillman and Burns 2009 provides a practical orientation to single-case experimental designs to facilitate uptake and use in applied settings.

Barlow, D. H., M. K. Nock, and M. Hersen, eds. 2009. Single case experimental designs: Strategies for studying behavior change. 3d ed. New York: Pearson.

A comprehensive reference about the process of designing and conducting single-case experimental design studies. Chapters are integrative but can stand alone.

Kazdin, A. E. 2011. Single-case research designs: Methods for clinical and applied settings. 2d ed. New York: Oxford Univ. Press.

A complete overview and description of single-case experimental design variants as well as information about data evaluation.

Kratochwill, T. R., and J. R. Levin, eds. 2014. Single-case intervention research: Methodological and statistical advances. New York: Routledge.

The authors describe in depth the methodological and analytic considerations necessary for designing and conducting research that uses a single-case experimental design. In addition, the text includes chapters from leaders in psychology and education who provide critical perspectives about the use of single-case experimental designs.

Ledford, J. R., and D. L. Gast, eds. 2018. Single case research methodology: Applications in special education and behavioral sciences. New York: Routledge.

Covers the research process from writing literature reviews, to designing, conducting, and evaluating single-case experimental design studies.

Riley-Tillman, T. C., and M. K. Burns. 2009. Evaluating education interventions: Single-case design for measuring response to intervention. New York: Guilford Press.

Focuses on accelerating uptake and use of single-case experimental designs in applied settings. This book provides a practical, “nuts and bolts” orientation to conducting single-case experimental design research.


Transl Behav Med, v.4(3); 2014 Sep

Optimizing behavioral health interventions with single-case designs: from development to dissemination

Jesse Dallery

Department of Psychology, University of Florida, P.O. Box 112250, Gainesville, FL 32611 USA

Bethany R Raiff

Department of Psychology, Rowan University, Glassboro, USA

Over the past 70 years, single-case design (SCD) research has evolved to include a broad array of methodological and analytic advances. In this article, we describe some of these advances and discuss how SCDs can be used to optimize behavioral health interventions. Specifically, we discuss how parametric analysis, component analysis, and systematic replications can be used to optimize interventions. We also describe how SCDs can address other features of optimization, which include establishing generality and enabling personalized behavioral medicine. Throughout, we highlight how SCDs can be used during both the development and dissemination stages of behavioral health interventions.

Research methods are tools to discover new phenomena, test theories, and evaluate interventions. Many researchers have argued that our research tools have become limited, particularly in the domain of behavioral health interventions [ 1 – 9 ]. The reasons for their arguments vary, but include an overreliance on randomized controlled trials, the slow pace and high cost of such trials, and the lack of attention to individual differences. In addition, advances in mobile and sensor-based data collection now permit real-time, continuous observation of behavior and symptoms over extended durations [ 3 , 10 , 11 ]. Such fine-grained observation can lead to tailoring of treatment based on changes in behavior, which is challenging to evaluate with traditional methods such as a randomized trial.

In light of the limitations of traditional designs and advances in data collection methods, a growing number of researchers have advocated for alternative research designs [ 2 , 7 , 10 ]. Specifically, one family of research designs, known as single-case designs (SCDs), has been proposed as a useful way to establish the preliminary efficacy of health interventions [ 3 ]. In the present article, we recapitulate and expand on this proposal, and argue that they can be used to optimize health interventions.

We begin with a description of what we consider to be a set of criteria, or ideals, for what research designs should accomplish in attempting to optimize an intervention. Admittedly, these criteria are self-serving in the sense that most of them constitute the strengths of SCDs, but they also apply to other research designs discussed in this volume. Next, we introduce SCDs and how they can be used to optimize treatment using parametric and component analyses. We also describe how SCDs can address other features of optimization, which include establishing generality and enabling personalized behavioral medicine. Throughout, we also highlight how these designs can be used during both the development and dissemination of behavioral health interventions. Finally, we evaluate the extent to which SCDs live up to our ideals.

AN OPTIMIZATION IDEAL

During development and testing of a new intervention, our methods should be efficient, flexible, and rigorous. We would like efficient methods to help us establish preliminary efficacy, or “clinically significant patient improvement over the course of treatment” [ 12 ] (p. 137). We also need flexible methods to test different parameters or components of an intervention. Just as different doses of a drug treatment may need to be titrated to optimize effects, different parameters or components of a behavioral treatment may need to be titrated to optimize effects. It should go without saying that we also want our methods to be rigorous, and therefore eliminate or reduce threats to internal validity.

Also, during development, we would like methods that allow us to assess replications of effects to establish the reliability and generality of an intervention. Replications, if done systematically and thoughtfully, can answer questions about for whom and under what conditions an intervention is effective. Answering these questions speaks to the generality of research findings. As Cohen [ 13 ] noted in a seminal article: “For generalization, psychologists must finally rely, as has been done in all the older sciences, on replication” (p. 997). Relying on replications and establishing the conditions under which an intervention works could also lead to more targeted, efficient dissemination efforts.

During dissemination, when an intervention is implemented in clinical practice, we again would like to know if the intervention is producing a reliable change in behavior for a particular individual. (Here, “we” may refer to practitioners in addition to researchers.) With knowledge derived from development and efficacy testing, we may be able to alter components of an intervention that impact its effectiveness. But, ideally, we would like to not only alter but verify whether these components are working. Also, recognizing that behavior change is idiosyncratic and dynamic, we may need methods that allow ongoing tailoring and testing. This may result in a kind of personalized behavioral medicine in which what gets personalized, and when, is determined through experimental analysis.

In addition, during both development and dissemination, we want methods that afford innovation. We should have methods that allow rapid, rigorous testing of new treatments, and which permit incorporating new technologies to assess and treat behavior as they become available. This might be thought of as systematic play. Whatever we call it, it is a hallmark of the experimental attitude in science.

INTRODUCTION TO SINGLE-CASE DESIGNS

SCDs include an array of methods in which each participant, or case, serves as his or her own control. Although these methods are conceptually rooted in the study of cognition and behavior [ 14 ], they are theory-neutral and can be applied to any health intervention. In a typical study, some behavior or symptom is measured repeatedly during all conditions for all participants. The experimenter systematically introduces and withdraws control and intervention conditions, and assesses effects of the intervention on behavior across replications of these conditions within and across participants. Thus, these studies include repeated, frequent assessment of behavior, experimental manipulation of the independent variable (the intervention or components of the intervention), and replication of effects within and across participants.

The main challenge in conducting a single-case experiment is collecting data of the same behavior or symptom repeatedly over time. In other words, a time series must be possible. If behavior or symptoms cannot be assessed frequently (e.g., on a weekly basis, at a minimum, for most health interventions), then SCDs cannot be used. Fortunately, technology is revolutionizing methods to collect data. For example, ecological momentary assessment (EMA) enables frequent input by an end-user into a handheld computer or mobile phone [ 15 ]. Such input occurs in naturalistic settings, and it usually occurs on a daily basis for several weeks to months. EMA can therefore reveal behavioral variation over time and across contexts, and it can document effects of an intervention on an individual’s behavior [ 15 ]. Sensors to record physical activity, medication adherence, and recent drug use also enable the kind of assessment required for single-case research [ 10 , 16 ]. In addition, advances in information technology and mobile phones can permit frequent assessment of behavior or symptoms [ 17 , 18 ]. Thus, SCDs can capitalize on the ability of technology to easily, unobtrusively, and repeatedly assess health behavior [ 3 , 18 , 19 ].

SCDs suffer from several misconceptions that may limit their use [ 20 – 23 ]. First, a single case does not mean “ n of 1.” The number of participants in a typical study is almost always more than 1, usually around 6 but sometimes as many as 20, 40, or more participants [ 24 , 25 ]. Also, the unit of analysis, or “case,” could be individual participants, clinics, group homes, hospitals, health care agencies, or communities [ 1 ]. Given that the unit of analysis is each case (i.e., participant), a single study could be conceptualized as a series of single-case experiments. Perhaps a better label for these designs would be “intrasubject replication designs” [ 26 ]. Second, SCDs are not limited to interventions that produce large, immediate changes in behavior. They can be used to detect small but meaningful changes in behavior and to assess behavior that may change slowly over time (e.g., learning a new skill) [ 27 ]. Third, SCDs are not quasi-experimental designs [ 20 ]. The conventional notions that detecting causal relations requires random assignment and/or random sampling are false [ 26 ]. Single-case experiments are fully experimental and include controls and replications to permit crisp statements about causal relations between independent and dependent variables.

VARIETIES OF SINGLE-CASE DESIGNS

The SCDs most relevant to behavioral health interventions are presented in Table 1. The table also presents some procedural information and advantages and disadvantages for each design. (The material below is adapted from [ 3 ].) There are also a number of variants of these designs, enabling flexibility in tailoring the design based on practical or empirical considerations [ 27 , 28 ]. For example, several variants avoid long periods of baseline assessment before a potentially effective intervention is introduced; long baselines may be problematic if the behavior is dangerous [ 28 ].

Table 1. Several single-case designs, including general procedures, advantages, and disadvantages

Procedural controls must be in place to make inferences about causal relations. These include clear, operational definitions of the dependent variables and reliable and valid techniques to assess the behavior. In addition, the experimental design must be sufficient to rule out alternative hypotheses for the behavior change. Table 2 presents a summary of methodological and assessment standards to permit conclusions about treatment effects [ 29 , 30 ]. These standards were derived from Horner et al. [ 29 ] and from the recently released What Works Clearinghouse (WWC) pilot standards for evaluating single-case research to inform policy and practice (hereafter referred to as the SCD standards) [ 31 ].

Table 2. Quality indicators for single-case research [ 29 ]

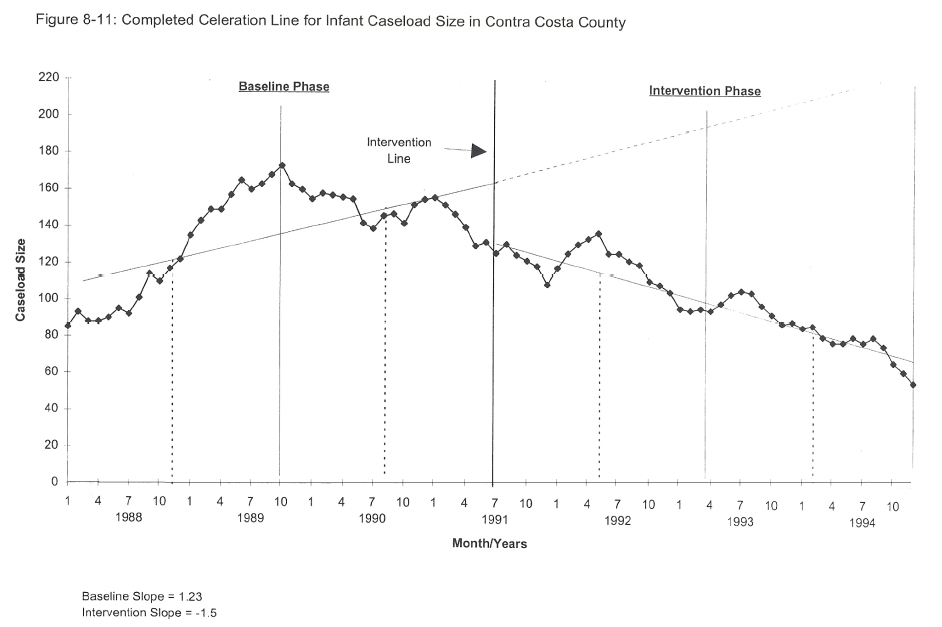

All of the designs listed in Table 1 entail a baseline period of observation. During this period, the dependent variable is measured repeatedly under control conditions. For example, Dallery, Glenn, and Raiff [ 24 ] used a reversal design to assess effects of an internet-based incentive program to promote smoking cessation, and the baseline phase included self-monitoring, carbon monoxide assessment of smoking status via a web camera, and monetary incentives for submitting videos. The active ingredient in the intervention, incentives contingent on objectively verified smoking abstinence, was not introduced until the treatment phase.

The duration of the baseline and the pattern of the data should be sufficient to predict future behavior. That is, the level of the dependent variable should be stable enough to predict its direction if the treatment was not introduced. If there is a trend in the direction of the anticipated treatment effect during baseline, or if there is too much variability, the ability to detect a treatment effect will be compromised. Thus, stability, or in some cases a trend in the direction opposite the predicted treatment effect, is desirable during baseline conditions.
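The baseline check described above can be sketched in a few lines. This is only an illustration, not a procedure given in the article: the function names, the use of a least-squares slope, and the `tolerance` threshold are all assumptions made for the example, and in practice stability is usually judged by visual analysis.

```python
from statistics import mean


def least_squares_slope(values):
    """Ordinary least-squares slope of values against session index 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(values)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


def baseline_trends_toward_effect(values, expected_direction, tolerance=0.1):
    """Return True if the baseline drifts in the direction the treatment is expected to push.

    expected_direction: -1 if treatment should reduce the measure, +1 if it should raise it.
    tolerance: hypothetical slope (units per session) below which drift is ignored.
    """
    return least_squares_slope(values) * expected_direction > tolerance


# Hypothetical cigarettes-per-day data; treatment is expected to reduce smoking (-1).
falling_baseline = [10, 9, 8, 7, 6]
flat_baseline = [8, 8, 9, 8, 8]
print(baseline_trends_toward_effect(falling_baseline, -1))  # baseline already falling
print(baseline_trends_toward_effect(flat_baseline, -1))     # roughly stable
```

A baseline flagged by this kind of check would be continued until the trend disappears, as the reversal-design example in the article illustrates.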

In some cases, the source(s) of variability can be identified and potentially mitigated (e.g., variability could be reduced by automating data collection, standardizing the setting and time for data collection). However, there may be instances when there is too much variability during baseline conditions, and thus, detecting a treatment effect will not be feasible. There are no absolute standards to define what “too much” variability means [ 27 ]. Excessive variability is a relative term, which is typically determined by a comparison of performance within and between conditions (e.g., between baseline and intervention conditions) in a single-case experiment. The mere presence of variability does not mean that a single-case approach should be abandoned, however. Indeed, identifying the sources of variability and/or assessing new measurement strategies can be evaluated using SCDs. Under these conditions, the outcome of interest is not an increase or a decrease in some behavior or symptom but a reduction in variability. Once accomplished, the researcher has not only learned something useful but is also better prepared to evaluate the effects of an intervention to increase or decrease some health behavior.

REVERSAL DESIGNS

In a reversal design, a treatment is introduced after the baseline period, and then a baseline period is re-introduced, hence, the “reversal” in this design (also known as an ABA design, where “A” is baseline and “B” is treatment). Using only two conditions, such as a pre-post design, is not considered sufficient to demonstrate experimental control because other sources of influence on behavior cannot be ruled out [ 31 , 32 ]. For example, a smoking cessation intervention could coincide with a price increase in cigarettes. By returning to baseline conditions, we could assess and possibly rule out the influence of the price increase on smoking. Researchers also often use a reversal to the treatment condition. Thus, the experiment ends during a treatment period (an ABAB design). Not only is this desirable from the participant’s perspective but it also provides a replication of the main variable of interest—the treatment [ 33 ].

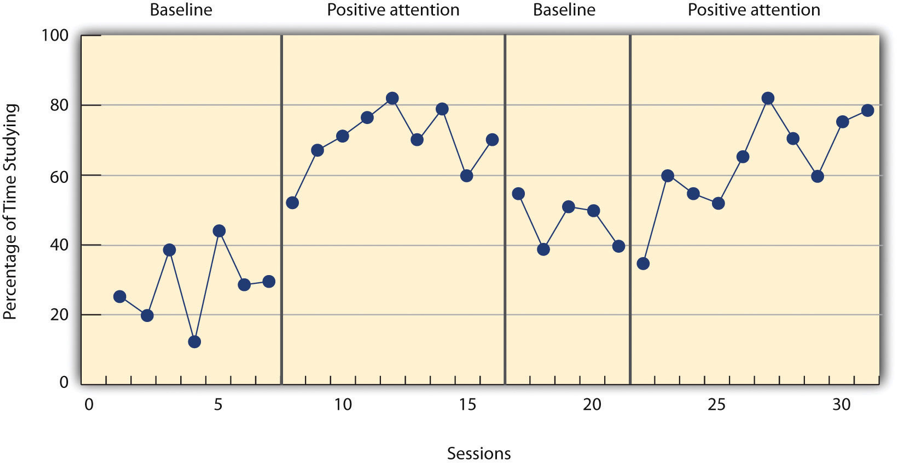

Figure 1 displays an idealized, ABAB reversal design, and each panel shows data from a different participant. Although all participants were exposed to the same four conditions, the duration of the conditions differed because of trends in the conditions. For example, for participant 1, the beginning of the first baseline condition displays a consistent downward trend (in the same direction as the expected text-message treatment effects). If we were to introduce the smoking cessation-related texts after only five or six baseline sessions, it would be unclear if the decrease in smoking was a function of the independent variable. Therefore, continuing the baseline condition until there is no visible trend helps build our confidence about the causal role of the treatment when it is introduced. The immediate decrease in the level of smoking for participant 1 when the treatment is introduced also implicates the treatment. We can also detect, however, an increasing trend in the early portion of the treatment condition. Thus, we need to continue the treatment condition until there is no undesirable trend before returning to the baseline condition. Similar patterns can be seen for participants 2–4. Based on visual analysis of Fig. 1 , we would conclude that treatment is exerting a reliable effect on smoking. But, the meaningfulness of this effect requires additional considerations (see the section below on “ Visual, Statistical, and Social Validity Analysis ”).

Figure 1. Example of a reversal design showing experimental control and replications within and between subjects. Each panel represents a different participant, each of whom experienced two baseline and two treatment conditions

Studies using reversal designs typically include four or more participants. The goal is to generate enough replications, both within participants and across participants, to permit a confident statement about causal relations. For example, several studies on incentive-based treatment to promote drug abstinence have used 20 participants in a reversal design [ 24 , 25 ]. According to the SCD standards, there must be a minimum of three replications to support conclusions about experimental control and thus causation. Also, according to the SCD standards, there must be at least three and preferably five data points per phase to allow the researcher to evaluate stability and experimental effects [ 31 ].
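The two numeric thresholds just described can be turned into a simple screening check. The sketch below is a rough illustration under stated assumptions: the function name and data layout are hypothetical, and counting each change between adjacent phases as one opportunity to replicate the effect is an interpretation made for this example, not a rule quoted from the standards.

```python
def meets_minimum_standards(phases, min_points=3, min_replications=3):
    """Screen one case's phase sequence against the thresholds in the text.

    phases: list of (label, observations) tuples in chronological order,
            e.g. [("A", [...]), ("B", [...]), ("A", [...]), ("B", [...])].
    min_points: minimum data points per phase (three; five is preferred).
    min_replications: minimum number of phase changes (assumed here to be
            how replications are counted).
    """
    enough_points = all(len(observations) >= min_points for _, observations in phases)
    # Count transitions between adjacent phases that carry different labels.
    phase_changes = sum(
        1 for (label, _), (next_label, _) in zip(phases, phases[1:]) if label != next_label
    )
    return enough_points and phase_changes >= min_replications


# Hypothetical ABAB data for one participant.
abab = [("A", [9, 10, 9, 10, 9]), ("B", [4, 3, 3, 2, 3]),
        ("A", [8, 9, 9, 8, 9]), ("B", [3, 2, 2, 3, 2])]
aba = abab[:3]
print(meets_minimum_standards(abab))  # three phase changes, five points per phase
print(meets_minimum_standards(aba))   # only two phase changes
```

Passing `min_points=5` applies the stricter, preferred threshold for data points per phase.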

There are two potential limitations of reversal designs in the context of behavioral health interventions. First, the treatment must be withdrawn to demonstrate causal relations. Some have raised an ethical objection about this practice [ 11 ]. However, we think that the benefits of demonstrating that a treatment works outweigh the risks of temporarily withdrawing treatment (in most cases). The treatment can also be re-instituted in a reversal design (i.e., an ABAB design). Second, if the intervention produces relatively permanent changes in behavior, then a reversal to pre-intervention conditions may not be possible. For example, a treatment that develops new skills may imply that these skills cannot be “reversed.” Some interventions do not produce permanent change and must remain in effect for behavior change to be maintained, such as some medications and incentive-based procedures. Under conditions where behavior may not return to baseline levels when treatment is withdrawn, alternative designs, such as multiple-baseline designs, should be used.

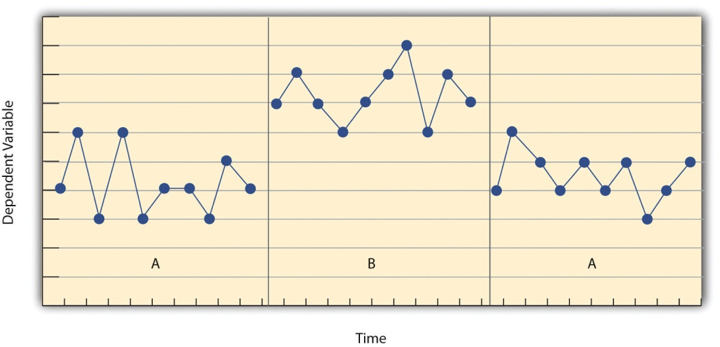

MULTIPLE-BASELINE DESIGNS

In a multiple-baseline design, the durations of the baselines vary systematically for each participant in a so-called staggered fashion. For example, one participant may start treatment after five baseline days, another after seven baseline days, then nine, and so on. After baseline, treatment is introduced, and it remains until the end of the experiment (i.e., there are no reversals). Like all SCDs, this design can be applied to individual participants, clusters of individuals, health care agencies, and communities. These designs are also referred to as interrupted time-series designs [ 1 ] and stepped wedge designs [ 7 ].
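The staggered arrangement can be sketched as a simple schedule generator. This is an illustrative sketch only: the function name `staggered_schedule`, the fixed two-day step, and the 20-day study length are assumptions chosen to match the five, seven, nine example above, and in practice treatment would be introduced only once each baseline appears stable.

```python
from typing import List


def staggered_schedule(n_participants: int,
                       first_baseline: int = 5,
                       step: int = 2,
                       total_days: int = 20) -> List[List[str]]:
    """Return one day-by-day condition list per participant.

    "A" marks a baseline day and "B" a treatment day. Each successive
    participant receives `step` more baseline days than the previous one,
    and treatment then stays in place until the end (no reversals).
    """
    schedule = []
    for i in range(n_participants):
        baseline_days = first_baseline + i * step
        if baseline_days >= total_days:
            raise ValueError("baseline would leave no days for treatment")
        schedule.append(["A"] * baseline_days + ["B"] * (total_days - baseline_days))
    return schedule


for row in staggered_schedule(3):
    print("".join(row))
```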

The utility of these designs is derived from demonstrating that change occurs when, and only when, the intervention is directed at a particular participant (or whatever the unit of analysis happens to be [ 28 ]). The influence of other factors, such as idiosyncratic experiences of the individual or self-monitoring (e.g., reactivity), can be ruled out by replicating the effect across multiple individuals. A key to ruling out extraneous factors is a stable enough baseline phase (either no trends or a trend in the opposite direction to the treatment effect). As replications are observed across individuals, and behavior changes when and only when treatment is introduced, confidence that behavior change was caused by the treatment increases.

As noted above, multiple-baseline designs are useful for interventions that teach new skills, where behavior would not be expected to “reverse” to baseline levels. Multiple-baseline designs also obviate the ethical concern about withdrawing treatment (as in a reversal design) or using a placebo control comparison group (as in randomized trials), as all participants are exposed to the treatment with multiple-baseline designs.

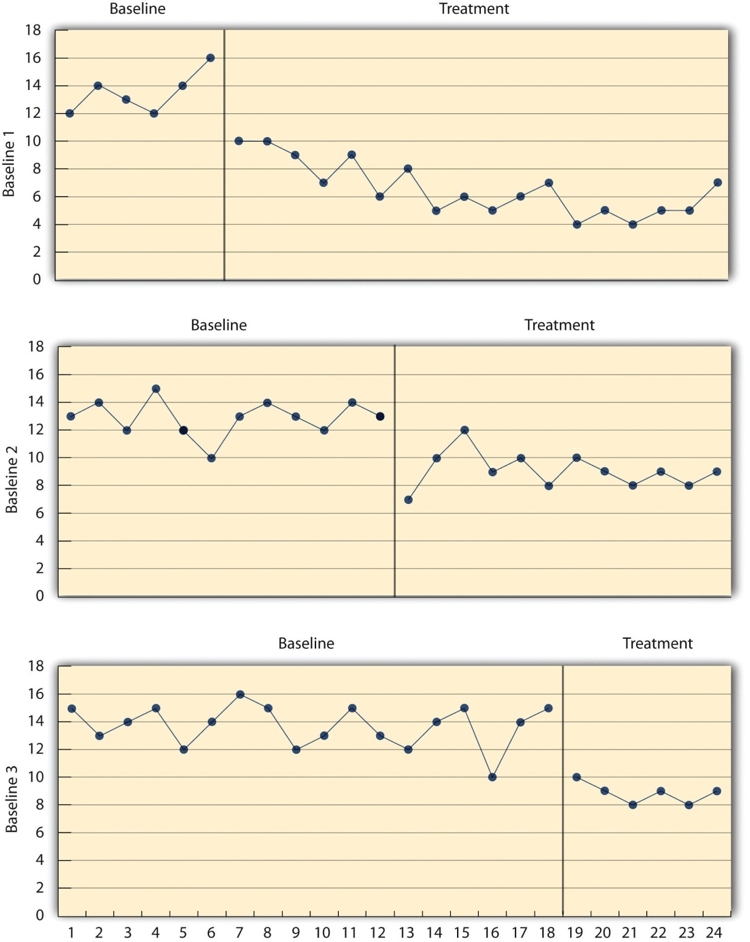

Figure 2 illustrates a simple, two-condition multiple-baseline design replicated across four participants. As noted above, the experimenter should introduce treatment only when the data appear stable during baseline conditions. The durations of the baseline conditions are staggered for each participant, and the dependent variable increases when, and only when, the independent variable is introduced for all participants. The SCD standards require at least six phases (i.e., three baseline and three treatment) with at least five data points per phase [ 31 ]. Figure 2 suggests reliable increases in behavior and that the treatment was responsible for these changes.

Example of a multiple-baseline design showing experimental control and replications between subjects. Each row represents a different participant, each of whom experienced a baseline and treatment. The baseline durations differed across participants

CHANGING CRITERION DESIGN

The changing criterion design is also relevant to optimizing interventions [ 34 ]. In a changing criterion design, a baseline is conducted until stability is attained. Then, a treatment goal is introduced, and goals are made progressively more difficult. Behavior should track the introduction of each goal, thus demonstrating control by the level of the independent variable [ 28 ]. For example, Kurti and Dallery [ 35 ] used a changing criterion design to increase activity in six sedentary adults using an internet-based contingency management program to promote walking. Weekly step count goals were gradually increased across 5-day blocks. The step counts for all six participants increased reliably with each increase in the goals, thereby demonstrating experimental control of the intervention. This design has many of the same benefits of the multiple-baseline design, namely that a reversal is not required for ethical or potentially practical reasons (i.e., irreversible treatment effects).
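Whether behavior tracks each successive goal can be summarized block by block. The sketch below is hypothetical: the function name `criterion_tracking` and the step counts are invented for illustration and are not data from Kurti and Dallery [ 35 ].

```python
from typing import Dict, List, Tuple


def criterion_tracking(blocks: List[Tuple[float, List[float]]]) -> List[Dict[str, float]]:
    """Summarize each criterion block.

    Each block is (goal, observations). For every block the function reports
    the goal, the mean of the observations, and the proportion of
    observations that met or exceeded the goal.
    """
    summary = []
    for goal, obs in blocks:
        met = sum(1 for value in obs if value >= goal)
        summary.append({
            "goal": goal,
            "mean": sum(obs) / len(obs),
            "proportion_met": met / len(obs),
        })
    return summary


# Invented daily step counts for three 5-day blocks with rising goals.
blocks = [
    (5000, [5200, 5100, 4900, 5300, 5400]),
    (6000, [6100, 6200, 5900, 6300, 6050]),
    (7000, [7100, 7300, 7000, 7200, 6900]),
]
summary = criterion_tracking(blocks)
# Behavior "tracks" the criterion here if block means rise with each goal.
means_rise = all(a["mean"] < b["mean"] for a, b in zip(summary, summary[1:]))
print(summary, means_rise)
```

A rising mean alone is a coarse index; the proportion of sessions meeting each goal helps show whether behavior changed when, and only when, the criterion changed.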

VISUAL, STATISTICAL, AND SOCIAL VALIDITY ANALYSIS

Analyzing the data from SCDs involves three questions: (a) Is there a reliable effect of the intervention? (b) What is the magnitude of the effect? and (c) Are the results clinically meaningful and socially valid [ 31 ]? Social validity refers to the extent to which the goals, procedures, and results of an intervention are socially acceptable to the client, the researcher or health care practitioner, and society [ 36 – 39 ]. The first two questions can be answered by visual and statistical analysis, whereas the third question requires additional considerations.

The SCD standards prioritize visual analysis of the time-series data to assess the reliability and magnitude of intervention effects [ 29 , 31 , 40 ]. Clinically significant change in patient behavior should be visible. Visual analysis prioritizes clinically significant change in health-related behavior as opposed to statistically significant change in group behavior [ 13 , 41 , 42 ]. Although several researchers have argued that visual analysis may be prone to elevated rates of type 1 error, such errors may be limited to a narrow range of conditions (e.g., when graphs do not contain contextual information about the nature of the plotted behavioral data) [ 27 , 43 ]. Furthermore, in recent years, training in visual analysis has become more formalized and rigorous [ 44 ]. Perhaps as a result, Kahng and colleagues found high reliability among visual analysts in judging treatment effects based on analysis of 36 ABAB graphs [ 45 ]. The SCD standards recommend four steps and the evaluation of six features of the graphical displays for all participants in a study, which are displayed in Table 3 [ 31 ]. As the visual analyst progresses through the steps, he or she also uses the six features to evaluate effects within and across experimental phases.

Four steps and six outcome measures to evaluate when conducting visual analysis of time-series data
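Level and trend, the two properties emphasized in the discussion of Figure 1, can be described numerically as a supplement to inspecting the graph. The sketch below is an illustrative aid rather than a procedure taken from the SCD standards: the function name `level_and_trend` is hypothetical, level is taken to be the phase mean, and trend is taken to be the ordinary least-squares slope across sessions.

```python
from typing import List, Tuple


def level_and_trend(obs: List[float]) -> Tuple[float, float]:
    """Return (level, trend) for one phase.

    Level is the mean of the observations. Trend is the least-squares slope
    of the observations regressed on session index (0, 1, 2, ...), expressed
    in units of the measure per session.
    """
    n = len(obs)
    if n < 2:
        raise ValueError("at least two observations are needed to estimate trend")
    mean_y = sum(obs) / n
    mean_x = (n - 1) / 2
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(obs))
    return mean_y, sxy / sxx


# Invented baseline data with a steady downward trend.
print(level_and_trend([10, 8, 6, 4]))
```

A clearly nonzero baseline slope in the direction of the expected treatment effect would argue for extending the baseline before introducing treatment.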

In addition to visual analysis, several regression-based approaches are available to analyze time-series data, such as autoregressive models, robust regression, and hierarchical linear modeling (HLM) [ 46 – 49 ]. A variety of non-parametric statistics are also available [ 27 ]. Perhaps because of the proliferation of statistical methods, there is a lack of consensus about which methods are most appropriate in light of different properties of the data (e.g., the presence of trends and autocorrelation [ 43 , 50 ], the number of data points collected, etc.). A discussion of statistical techniques is beyond the scope of this paper. We recommend Kazdin’s [ 27 ] or Barlow and colleagues’ [ 28 ] textbooks as useful resources regarding statistical analysis of time-series data. The SCD standards also include a useful discussion of statistical approaches for data analysis [ 31 ].

A variety of effect size calculations have been proposed for SCDs [ 13 , 51 – 54 ]. Although effect size estimates may allow for rank ordering of most to least effective treatments [ 55 ], most estimates do not provide metrics that are comparable to effect sizes derived from group designs [ 31 ]. However, one estimate that provides metrics comparable to group designs has been developed and tested by Shadish and colleagues [ 56 , 57 ]. They describe a standardized mean difference statistic ( d ) that is equivalent to the more conventional d in between-groups experiments. The d statistic can also be used to compute power based on the number of observations in each condition and the number of cases in an experiment [ 57 ]. In addition, advances in effect size estimates have led to several meta-analyses of results from SCDs [ 48 , 58 – 61 ]. Zucker and associates [ 62 ] explored a Bayesian mixed-model strategy for combining SCDs, which allowed population-level claims about the merits of different intervention strategies.

Determining whether the results are clinically meaningful and socially valid can be informed by visual and most forms of statistical analysis (i.e., not null-hypothesis significance testing) [ 42 , 63 ]. One element in judging social validity concerns the clinical meaningfulness of the magnitude of behavior change. This judgment can be made by the researcher or clinician in light of knowledge of the subject matter, and perhaps by the client being treated. Depending on factors such as the type of behavior and the way in which change is measured, the judgment can also be informed by previous research on a minimal clinically important difference (MCID) for the behavior or symptom under study [ 64 , 65 ]. The procedures used to generate the effect also require consideration. Intrusive procedures may be efficacious yet not acceptable. The social validity of results and procedures should be explicitly assessed when conducting SCD research, and a variety of tools have emerged to facilitate such efforts [ 37 ]. Social validity assessment should also be viewed as a process [ 37 ]. That is, it can and should be assessed at various time points as an intervention is developed, refined, and eventually implemented. Social validity may change as the procedures and results of an intervention are improved and better appreciated in the society at large.

OPTIMIZATION METHODS AND SINGLE-CASE DESIGNS

The SCDs described above provide an efficient way to evaluate the effects of a behavioral intervention. However, in most of the examples above, the interventions were held constant during treatment periods; that is, they were procedurally static (cf. [ 35 ]). This is similar to a randomized trial, in which all components of an intervention are delivered all at once and held constant throughout the study. However, the major difference between the examples above and traditional randomized trials is efficiency: SCDs usually require less time and fewer resources to demonstrate that an intervention can change behavior. Nevertheless, a single, procedurally static single-case experiment does not optimize treatment beyond showing whether or not it works.

One way to make initial efficacy testing more dynamic would be to conduct a series of single-case experiments in which aspects of the treatment are systematically explored. For example, a researcher could assess effects of different frequencies, timings, or tailoring dimensions of a text-based intervention to promote physical activity. Such manipulation could also be conducted in separate experiments conducted by the same or different researchers. Some experiments may reveal larger effects than others, which could then lead to further replications of the effects of the more promising intervention elements. This iterative development process, with a focus on systematic manipulation of treatment elements and replications of effects within and across experiments, could lead to an improved intervention within a few years’ time. Arguably, this process could yield more clinically useful information than a procedurally static randomized trial conducted over the same period [ 5 , 17 ].

To further increase the efficiency of optimizing treatment, different components or parameters of an intervention can be systematically evaluated within and across single-case experiments. There are two ways to optimize treatment using these methods: parametric and component analyses.

PARAMETRIC ANALYSIS

Parametric analysis involves exposing participants to a range of values of the independent variable, as opposed to just one or two values. To qualify as a parametric analysis, at least three values must be evaluated, as this is the minimum number needed to evaluate the functional form relating the independent to the dependent variable. One goal of a parametric analysis is to identify the optimal value that produces a behavioral outcome. Another goal is to identify general patterns of behavior engendered by a range of values of the independent variable [ 26 , 63 ].

Many behavioral health interventions can be delivered at different levels [ 66 ] and are therefore amenable to parametric analysis. For example, text-based prompts can be delivered at different frequencies, incentives can be delivered at different magnitudes and frequencies, physical activity can occur at different frequencies and intensities, engagement in a web-based program can occur at different levels, medications can be administered at different doses and frequencies, and all of the interventions could be delivered for different durations.

The repeated measures, and resulting time-series data, that are inherent to all SCDs (e.g., reversal and multiple-baseline designs) make them useful designs to conduct parametric analyses. For example, two doses of a medication, low versus high, labeled B and C, respectively, could be assessed using a reversal design [ 67 ]. There may be several possible sequences to conduct the assessment such as ABCBCA or ABCABCA. If C is found to be the more effective of the two, it might behoove the researcher to replicate this condition using an ABCBCAC design. A multiple baseline across participants could also be conducted to assess the two doses, one dose for each participant, but this approach may be complicated by individual variability in medication effects. Instead, the multiple-baseline approach could be used on a within-subject basis, where the durations of not just the baselines but of the different dose conditions are varied across participants [ 68 ].

Guyatt and colleagues [ 5 ] provide an excellent discussion about how parametric analysis can be used to optimize an intervention. The intervention was amitriptyline for the treatment of fibrositis. The logic and implications of the research tactics, however, also apply to other interventions that have parametric dimensions. At the time that the research was conducted, a dose of 50 mg/day was the standard recommendation for patients. To determine whether this dose was optimal for a given individual, the researchers first exposed participants to low doses, and if no response was noted relative to placebo, then they systematically increased the dose until a response was observed, or until they reached the maximum of 50 mg/day. In general, their method involved a reversal design in which successively higher doses alternated with placebo. So, for example, if one participant did not respond to a low dose, then doses might be increased to generate an ABCD design, where each successive letter represents a higher dose (other sequences were arranged as well). Parametrically examining doses in this way, and examining individual subject data, the researchers found that some participants responded favorably at lower doses than 50 mg/day (e.g., 10 or 20 mg/day). This was an important finding because the higher doses often produced unwanted side effects. Once optimal doses were identified for individuals, the researchers were able to conduct further analyses using a reversal design, exposing them to either their optimal dose or placebo on different days.
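The dose-finding logic described above, in which doses are raised until a response is observed, can be sketched for a single participant. Everything in this sketch is hypothetical: the function name `lowest_effective_dose`, the improvement scores, and the response threshold are invented for illustration and are not values reported by Guyatt and colleagues [ 5 ].

```python
from typing import Dict, Optional


def lowest_effective_dose(improvement_by_dose: Dict[float, float],
                          threshold: float) -> Optional[float]:
    """Return the smallest dose whose improvement meets the threshold.

    `improvement_by_dose` maps each dose (mg/day) to that participant's mean
    improvement relative to placebo. Returns None if no tested dose
    produced a response of at least `threshold`.
    """
    for dose in sorted(improvement_by_dose):
        if improvement_by_dose[dose] >= threshold:
            return dose
    return None


# Invented data for two participants who respond at different doses.
participant_1 = {10: 0.2, 20: 1.5, 50: 1.8}
participant_2 = {10: 0.1, 20: 0.3, 50: 1.2}
print(lowest_effective_dose(participant_1, threshold=1.0))
print(lowest_effective_dose(participant_2, threshold=1.0))
```

Applying the same rule to each participant separately preserves the individual-level information that a single group mean would obscure.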

Guyatt and colleagues also investigated the minimum duration of treatment necessary to detect an effect [ 5 ]. Initially, all participants were exposed to the medication for 4 weeks. Visual analysis of the time-series data revealed that medication effects were apparent within about 1–2 weeks of exposure, making a 4-week trial unnecessary. This discovery was replicated in a number of subjects and led them to optimize future, larger studies by only conducting a 2-week intervention. Investigating different treatment durations, such as this, is also a parametric analysis.

Parametric analysis can detect effects that may be missed using a standard group design with only one or two values of the independent variable. For example, in the studies conducted by Guyatt and colleagues [ 5 ], if only the lowest dose of amitriptyline had been investigated using a group approach, the researchers may have incorrectly concluded that the intervention was ineffective because this dose only worked for some individuals. Likewise, if only the highest dose had been investigated, it may have been shown to be effective, but potentially more individuals would have experienced unnecessary side effects (i.e., the results would have low social validity for these individuals). Perhaps most importantly, in contrast to what is typically measured in a group design (e.g., means, confidence intervals, etc.), optimizing treatment effects is fundamentally a question about an individual’s behavior.

COMPONENT ANALYSIS

A component analysis is “any experiment designed to identify the active elements of a treatment condition, the relative contributions of different variables in a treatment package, and/or the necessary and sufficient components of an intervention” [ 69 ]. Behavioral health interventions often entail more than one potentially active treatment element. Determining the active elements may be important to increase dissemination potential and decrease cost. Single-case research designs, in particular the reversal and multiple-baseline designs, may be used to perform a component analysis. The essential experimental ingredients, regardless of the method, are that the independent variable(s) are systematically introduced and/or withdrawn, combined with replication of effects within and/or between subjects.

There are two main variants of component analyses: the dropout and add-in analyses. In a dropout analysis, the full treatment package is presented following a baseline phase and then components are systematically withdrawn from the package. A limitation of dropout analyses arises when components produce irreversible behavior change (i.e., learning a new skill). Given that most interventions seek to produce sustained changes in health-related behavior, dropout analyses may have limited applicability. Instead, in add-in analyses, components can be assessed individually and/or in combination before the full treatment package is assessed [ 69 ]. Thus, a researcher could conduct an ABACAD design, where A is baseline, B and C are the individual components, and D is the combination of the B and C components. Other sequences are also possible, and which one is selected will require careful consideration. For example, sequence effects should be considered, and researchers could address these effects through counterbalancing, brief “washout” periods, or explicit investigation of these effects [ 26 ]. If sequence effects cannot be avoided, combined SCD and group designs can be used to perform a component analysis. Thus, different components of a treatment package can be delivered between two groups, and within each group, an SCD can be used to assess effects of each combination of components. Although very few component analyses have assessed health behavior or symptoms per se as the outcome measure, there are a variety of behavioral interventions that have been evaluated using component analysis [ 63 ]. For example, Sanders [ 70 ] conducted a component analysis of an intervention to decrease lower back pain (and increase time standing/walking). The analysis consisted of four components: functional analysis of pain behavior (e.g., self-monitoring of pain and the conditions that precede and follow pain), progressive relaxation training, assertion training, and social reinforcement of increased activity. Sanders concluded that both relaxation training and reinforcement of activity were necessary components (see [ 69 ] for a discussion of some limitations of this study).

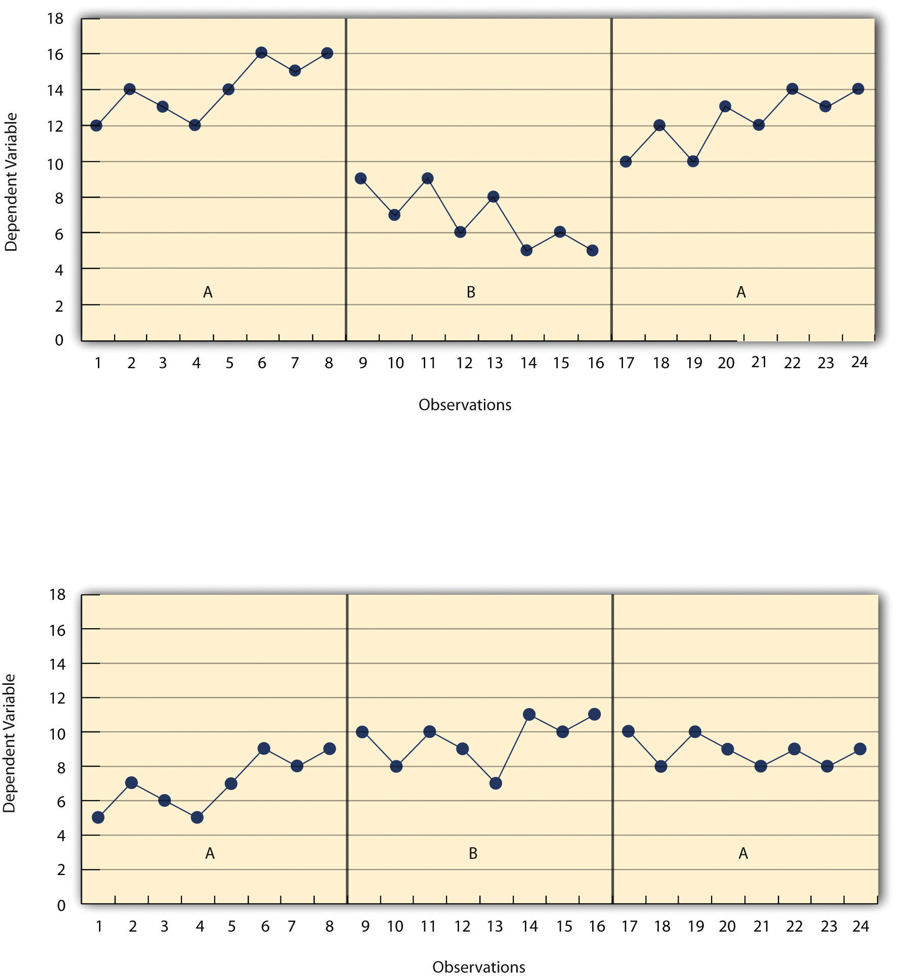

Several conclusions can be drawn about the effects of the various components in changing behavior. The data should first be evaluated to determine the extent to which the effects of individual components are independent of one another. If they are, then the effects of the components are additive. If they are not, then the effects are multiplicative, or the effects of one component depend on the presence of another component. Figure 3 presents simplified examples of these two possibilities using a reversal design and short data streams (adapted from [ 69 ]). The panel on the left shows additive effects, and the panel on the right shows multiplicative effects. The data also can be analyzed to determine whether each component is necessary and sufficient to produce behavior change. For instance, the panel on the right shows that neither the component labeled X (e.g., self-monitoring of health behavior) nor the component labeled Y (e.g., counseling to change health behavior) is sufficient, and both components are necessary. If two components produce equal changes in behavior, and the same amount of change when both are combined, then either component is sufficient but neither is necessary.

Two examples of possible results from a component analysis. BSL baseline, X first component, Y second component. The panel on the left shows an additive effect of components X and Y, and the panel on the right shows a multiplicative effect of components X and Y
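The distinction between additive and multiplicative effects can be expressed as a comparison of phase means. The sketch below is a simplified illustration under stated assumptions: the function name `classify_components`, the tolerance `tol`, and the example means are hypothetical, and real data would require attention to variability and trend within each phase rather than means alone.

```python
def classify_components(bsl: float, x: float, y: float, xy: float,
                        tol: float = 0.5) -> str:
    """Classify the joint effect of two components from phase means.

    `bsl`, `x`, `y`, and `xy` are mean levels of behavior during baseline,
    component X alone, component Y alone, and X and Y combined.
    Effects are changes from baseline. If the combined effect is within
    `tol` of the sum of the individual effects, the effects are treated as
    additive; otherwise one component's effect depends on the other.
    """
    effect_x = x - bsl
    effect_y = y - bsl
    effect_xy = xy - bsl
    if abs(effect_xy - (effect_x + effect_y)) <= tol:
        return "additive"
    return "multiplicative"


# Invented phase means: each component helps alone and the gains sum.
print(classify_components(bsl=2, x=5, y=6, xy=9))
# Invented phase means: neither component alone changes behavior,
# but the combination does (both necessary, neither sufficient).
print(classify_components(bsl=2, x=2, y=2, xy=9))
```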

The logic of the component analyses described here is similar to new methods derived from an engineering framework [ 2 , 9 , 71 ]. During the initial stages of intervention development, researchers use factorial designs to allocate participants to different combinations of treatment components. These designs, called fractional factorials because not all combinations of components are tested, can be used to screen promising components of treatment packages. The components tested may be derived from theory or working assumptions about which components and combinations will be of interest, which is the same process used to guide design choices in SCD research. Just as engineering methods seek to isolate and combine active treatment components to optimize interventions, so too do single-case methods. The main difference between approaches is the focus on the individual as the unit of analysis in SCDs.

OPTIMIZING WITH REPLICATIONS AND ESTABLISHING GENERALITY

Another form of optimization is an understanding of the conditions under which an intervention may be successful. These conditions may relate to particular characteristics of the participant (or whatever the unit of analysis happens to be) or to different situations. In other words, optimizing an intervention means establishing its generality.

In the context of single-case research, generality can be demonstrated experimentally in several ways. The most basic way is via direct replication [ 26 ]. Direct replication means conducting the same experiment on the same behavioral problem across several individuals (i.e., a single-case experiment). For example, Raiff and Dallery [ 72 ] achieved a direct replication of the effects of internet-based contingency management (CM) on adherence to glucose testing in four adolescents. One goal of the study was to establish experimental control by the intervention and to minimize as many extraneous factors as possible. Overall, direct replication can help establish generality across participants. It cannot answer questions about generality across settings, behavior change agents, target behaviors, or participants that differ in some way from the original experiment (e.g., to adults diagnosed with type 1 diabetes). Instead, systematic replication can answer these questions. In a systematic replication, the methods from previous direct replication studies are used in a new setting, target behavior, group of participants, and so on [ 73 ]. The Raiff and Dallery study, therefore, was also a systematic replication of effects of internet-based CM to promote smoking cessation to a new problem and to a new group of participants because the procedure had originally been tested with adult smokers [ 24 ]. Effects of internet-based CM for smoking cessation also were systematically replicated in an application to adolescent smokers using a single-case design [ 74 ].

Systematic replication also occurs with parametric manipulation [ 63 ]. In other words, rather than changing the type of participants or setting, we change the value of the independent variable. In addition to demonstrating an optimal effect, parametric analysis may also reveal boundary conditions. These may be conditions under which an intervention no longer has an effect, or points of diminishing returns in which further increases in some parameter produce no further increases in efficacy. For example, if one study was conducted showing that 30 min of moderate exercise produced a decrease in cigarette cravings, a systematic replication, using parametric analysis, might be conducted to determine the effects of other exercise durations (e.g., 5, 30, 60 min) on cigarette craving to identify the boundary parameters (i.e., the minimum and maximum number of minutes of exercise needed to continue to see changes in cigarette craving). Boundary conditions are critical in establishing generality of an intervention. In most cases, the only way to assess boundary conditions is through experimental, parametric analysis of an individual’s behavior.

By carefully choosing the characteristics of the individuals, settings, or other relevant variables in a systematic replication, the researcher can help identify the conditions under which a treatment works. To be sure, as with any new treatment, failures will occur. However, the failure does not detract from the prior successes: “…a procedure can be quite valuable even though it is effective under a narrow range of conditions, as long as we know what those conditions are” [ 75 ]. Such information is important for treatment recommendations in a clinical setting, and scientifically, it means that the conditions themselves may become the subject of experimental analysis.

This discussion leads to a type of generality called scientific generality [ 63 ], which is at the heart of a scientific understanding of behavioral health interventions (or any intervention for that matter). As described by Branch and Pennypacker [ 63 ], scientific generality is characterized by knowledgeable reproducibility, or knowledge of the factors that are required for a phenomenon to occur. Scientific generality can be attained through parametric and component analysis, and through systematic replication. One advantage of a single-case approach to establishing generality is that a series of strategic studies can be conducted with some degree of efficiency. Moreover, the data intimacy afforded by SCDs can help achieve scientific generality about behavioral health interventions.

PERSONALIZED BEHAVIORAL MEDICINE

Personalized behavioral medicine involves three steps: assessing diagnostic, demographic, and other variables that may influence treatment outcomes; assigning an individual to treatment based on this information; and using SCDs to assess and tailor treatment. The first and second steps may be informed by outcomes using SCDs. In addition, the clinician may be in a better position to personalize treatment with knowledge derived from a body of SCD research about generality, boundary conditions, and the factors that are necessary for an effect to occur. (Of course, this information can come from a variety of sources—we are simply highlighting how SCDs may fit in to this process.)

In addition, with advances in genomics and technology-enabled behavioral assessment prior to treatment (i.e., a baseline phase), the clinician may further target treatment to the unique characteristics of the individual [ 76 ]. Genetic testing is becoming more common before prescribing various medications [ 17 ], and it may become useful to predict responses for treatments targeting health behavior. Baseline assessment of behavior using technology such as EMA may allow the clinician to develop a tailored treatment protocol. For example, assessment could reveal the temporal patterning of risky situations, such as drinking alcohol, having an argument, or long periods of inactivity. A text-based support system could be tailored such that the timings of texts are tied to the temporal pattern of the problem behavior. The baseline assessment may also be useful to simply establish whether a problem exists. Also, the data path during baseline may reveal that behavior or symptoms are already improving prior to treatment, which would suggest that other, non-treatment variables are influencing behavior. Perhaps more importantly, compared to self-report, baseline conditions provide a more objective benchmark to assess effects of treatment on behavior and symptoms.

In addition to greater personalization at the start of treatment, ongoing assessment and treatment tailoring can be achieved with SCDs. Hayes [ 77 ] described how parametric and component analyses can be conducted in clinical practice. For example, reversal designs could be used to conduct a component analysis. Two components, or even different treatments, could be systematically introduced alone and together. If the treatments are different, such comparisons would also yield a kind of comparative effectiveness analysis. For example, contingency contracting and pharmacotherapy for smoking cessation could be presented alone using a BCBC design (where B is contracting and C is pharmacotherapy). A combined treatment could also be added, and depending on results, a return to one or the other treatment could follow (e.g., BCDCB, where D is the combined treatment). Furthermore, if a new treatment becomes available, it could be tested relative to an existing standard treatment in the same fashion. One potential limitation of such designs arises when a reversal to baseline conditions (i.e., no treatment) is necessary to document treatment effects. Such a return to baseline may be challenging for ethical, reimbursement, and other reasons.

Multiple-baseline designs also can be used in clinical contexts. Perhaps the simplest example would be a multiple baseline across individuals with similar problems. Each individual would experience an AB sequence, where the durations of the baseline phases vary. Another possibility is to target different behavior in the same individual in a multiple-baseline across behavior design. For example, a skills training program to improve social behavior could target different aspects of such behavior in a sequential fashion, starting with eye contact, then posture, then speech volume, and so on. If behavior occurs in a variety of distinct settings, the treatment could be sequentially implemented across these settings. Using the same example, treatment could target social behavior at family events, work, and different social settings. It can be problematic if generalization of effects occurs, but it may not necessarily negate the utility of such a design [ 27 ].

Multiple-baseline designs can be used in contexts other than outpatient therapy. Biglan and associates [ 1 ] argued that such designs are particularly useful in community interventions. For example, they described how a multiple baseline across communities and even states could be used to assess effects of changes in drinking age on car crashes. These designs may be especially useful to evaluate technology-based health interventions. A web-based program could be sequentially rolled out to different schools, communities, or other clusters of individuals. Although these research designs are also referred to as interrupted time series and stepped wedge designs, we think it may be more likely for researchers and clinicians to access the rich network of resources, concepts, and analytic tools if these designs are subsumed under the category of multiple-baseline designs.

The systematic comparisons afforded by SCDs can answer several key questions relevant to optimization. The first question a clinician may have is whether a particular intervention will work for his or her client [ 27 ]. The client may have such a unique history and profile of symptoms that the clinician is not confident about the predictive validity of a particular intervention for that client [ 6 ]. SCDs can be used to answer this question. Also, as just described, they can address which of two treatments works better, whether adding two treatments (or components) together works better than either one alone, which level of treatment is optimal (i.e., a parametric analysis), and whether a client prefers one treatment over another (i.e., via social validity assessment). Furthermore, the use of SCDs in practice conforms to the scientist-practitioner ideal espoused by training models in clinical psychology and allied disciplines [ 78 ].

OPTIMIZING FROM DEVELOPMENT TO DISSEMINATION

We are now in a position to evaluate whether SCDs live up to our ideals about optimization. During development, SCDs may obviate some logistical issues in using between-group designs to conduct initial efficacy testing [ 3 , 8 ]. Specifically, the costs and duration needed to conduct an SCD to establish preliminary efficacy would be considerably lower than those of traditional randomized designs. Riley and colleagues [ 8 ] noted that randomized trials take approximately 5.5 years from the initiation of enrollment to publication, and even longer from the time a grant application is submitted. In addition to establishing whether a treatment works, SCDs have the flexibility to efficiently address which parameters and components are necessary or optimal. In light of traditional methods to establish preliminary efficacy and optimize treatments, Riley and colleagues advocated for “rapid learning research systems.” SCDs are one such system.

Although some logistical issues may be mitigated by using SCDs, they do not necessarily represent easy alternatives to traditional group designs. They require a considerable amount of data per participant (as opposed to a large number of individuals in a group), enough participants to reliably demonstrate experimental effects, and systematic manipulation of variables over a long duration. For the vast majority of research questions, however, SCDs can reduce the resource and time burdens associated with between-group designs and allow the investigator to detect important treatment parameters that might otherwise have been missed.

SCDs can minimize or eliminate a number of threats to internal validity. Although a complete discussion of these threats is beyond the scope of this paper (see [ 1 , 27 , 28 ]), the standards listed in Table 1 can provide protection against most threats. For example, the threat known as “testing” refers to the fact that repeated measurement alone may change behavior. To address this, baseline phases need to be sufficiently long, and there must be enough within- and/or between-participant replications to rule out the effect of testing. Such logic applies to a number of other potential threats (e.g., instrumentation, history, regression to the mean). In addition, a plethora of new analytic techniques can supplement experimental techniques to make inferences about causal relations. Combining SCD results in meta-analyses can yield information about comparative effects of different treatments, and combining results using Bayesian methods may yield information about likely effects at the population level.

Because of their efficiency and rigor, SCDs permit systematic replications across types of participants, behavior problems, and settings. This research process has also led to “gold-standard,” evidence-based treatments in applied behavior analysis and education [ 29 , 79 ]. More importantly, in several fields, such research has led to scientific understanding of the conditions under which treatment may be effective or ineffective [ 79 , 80 ]. The field of applied behavior analysis, for example, has matured to the extent that individualized assessment of the causes of problem behavior must occur before treatment recommendations.

Our discussion of personalized behavioral medicine highlighted how SCDs can be used in clinical practice to evaluate and optimize interventions. The advent of technology-based assessment makes SCDs much easier to implement. Technology could propel a “super convergence” of SCDs and clinical practice [ 76 ]. Advances in technology-based assessment can also promote the kind of systematic play central to the experimental attitude. It can also allow testing of new interventions as they become available. Such translational efforts can occur in several ways: from laboratory and other controlled settings to clinical practice, from SCD to SCD within clinical practice, and from randomized efficacy trials to clinical practice.

Over the past 70 years, SCD research has evolved to include a broad array of methodological and analytic advances. It also has generated evidence-based practices in health care and related disciplines such as clinical psychology [ 81 ], substance abuse [ 82 , 83 ], education [ 29 ], medicine [ 4 ], neuropsychology [ 30 ], developmental disabilities [ 27 ], and occupational therapy [ 84 ]. Although different methods are required for different purposes, SCDs are ideally suited to optimize interventions, from development to dissemination.

Acknowledgments

We wish to thank Paul Soto for comments on a previous draft of this manuscript. Preparation of this paper was supported in part by Grants P30DA029926 and R01DA023469 from the National Institute on Drug Abuse.

Conflict of interest

The authors have no conflicts of interest to disclose.

Implications

Practitioners: practitioners can use single-case designs in clinical practice to help ensure that an intervention or component of an intervention is working for an individual client or group of clients.

Policy makers: results from single-case design research can help inform and evaluate policy regarding behavioral health interventions.

Researchers: researchers can use single-case designs to evaluate and optimize behavioral health interventions.

Contributor Information

Jesse Dallery, Phone: +1-352-3920601, Fax: +1-352-392-7985, Email: dallery@ufl.edu.

Bethany R Raiff, Email: raiff@rowan.edu.

Neag School of Education

Educational Research Basics by Del Siegle

Single subject research.

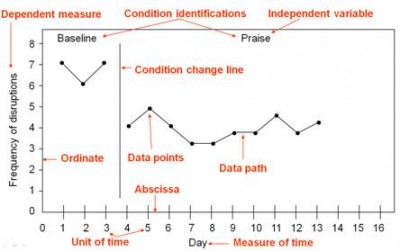

Single subject research (also known as single case experiments) is popular in the fields of special education and counseling. This research design is useful when the researcher is attempting to change the behavior of an individual or a small group of individuals and wishes to document that change. Unlike true experiments where the researcher randomly assigns participants to a control and treatment group, in single subject research the participant serves as both the control and treatment group. The researcher uses line graphs to show the effects of a particular intervention or treatment. An important factor of single subject research is that only one variable is changed at a time. Single subject research designs are “weak when it comes to external validity…. Studies involving single-subject designs that show a particular treatment to be effective in changing behavior must rely on replication–across individuals rather than groups–if such results are to be found worthy of generalization” (Fraenkel & Wallen, 2006, p. 318).

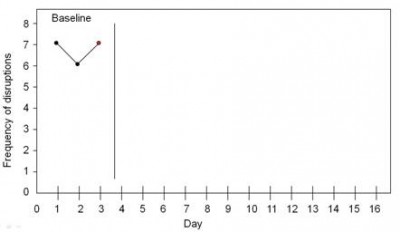

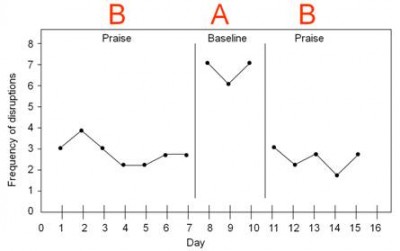

Suppose a researcher wished to investigate the effect of praise on reducing disruptive behavior over many days. First she would need to establish a baseline of how frequently the disruptions occurred. She would measure how many disruptions occurred each day for several days. In the example below, the target student was disruptive seven times on the first day, six times on the second day, and seven times on the third day. Note how the sequence of time is depicted on the x-axis (horizontal axis) and the dependent variable (outcome variable) is depicted on the y-axis (vertical axis).

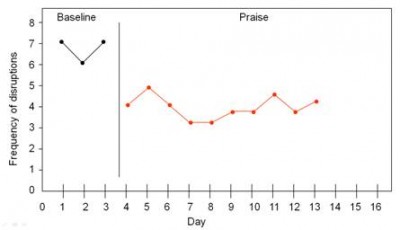

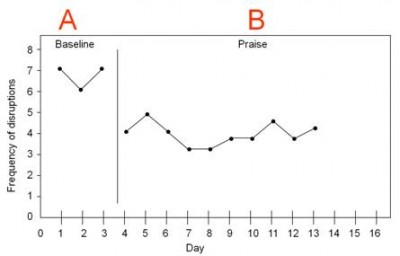

Once a baseline of behavior has been established (when a consistent pattern emerges with at least three data points), the intervention begins. The researcher continues to plot the frequency of behavior while implementing the intervention of praise.

In this example, we can see that the frequency of disruptions decreased once praise began. The design in this example is known as an A-B design. The baseline period is referred to as A and the intervention period is identified as B.

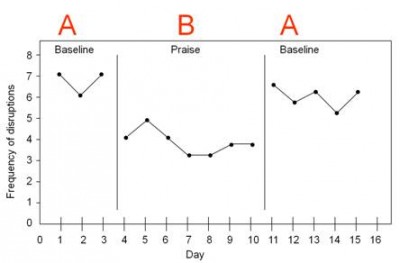
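As a rough numerical companion to the A-B graph, the sketch below summarizes the two phases. The baseline counts (7, 6, 7) come from the example above; the intervention counts are hypothetical values invented purely for illustration, and the function name is likewise an assumption. Phase means are a supplement to, not a replacement for, visual inspection of the line graph.

```python
from statistics import mean

baseline = [7, 6, 7]          # daily disruptions from the example in the text
intervention = [5, 4, 3, 2]   # HYPOTHETICAL counts, for illustration only


def phase_summary(a_phase, b_phase):
    """Compare mean frequency in baseline (A) and intervention (B)."""
    if len(a_phase) < 3:
        # The text asks for at least three baseline data points.
        raise ValueError("baseline needs at least three data points")
    a_mean, b_mean = mean(a_phase), mean(b_phase)
    return {
        "baseline_mean": a_mean,
        "intervention_mean": b_mean,
        "change": b_mean - a_mean,  # negative means fewer disruptions
    }


summary = phase_summary(baseline, intervention)
print(summary)
```

A negative `change` would be consistent with praise reducing disruptions, but an A-B comparison alone cannot rule out other explanations, which is why reversal and multiple-baseline designs exist.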

Another design is the A-B-A design. An A-B-A design (also known as a reversal design) involves discontinuing the intervention and returning to a nontreatment condition.

Sometimes an individual’s behavior is so severe that the researcher cannot wait to establish a baseline and must begin with an intervention. In this case, a B-A-B design is used. The intervention is implemented immediately (before establishing a baseline). This is followed by a measurement without the intervention and then a repeat of the intervention.

Multiple-Baseline Design

Sometimes, a researcher may be interested in addressing several issues for one student or a single issue for several students. In this case, a multiple-baseline design is used.

“In a multiple baseline across subjects design, the researcher introduces the intervention to different persons at different times. The significance of this is that if a behavior changes only after the intervention is presented, and this behavior change is seen successively in each subject’s data, the effects can more likely be credited to the intervention itself as opposed to other variables. Multiple-baseline designs do not require the intervention to be withdrawn. Instead, each subject’s own data are compared between intervention and nonintervention behaviors, resulting in each subject acting as his or her own control (Kazdin, 1982). An added benefit of this design, and all single-case designs, is the immediacy of the data. Instead of waiting until postintervention to take measures on the behavior, single-case research prescribes continuous data collection and visual monitoring of that data displayed graphically, allowing for immediate instructional decision-making. Students, therefore, do not linger in an intervention that is not working for them, making the graphic display of single-case research combined with differentiated instruction responsive to the needs of students.” (Geisler, Hessler, Gardner, & Lovelace, 2009)

Regardless of the research design, the line graphs used to illustrate the data contain a set of common elements.

Generally, in single subject research we count the number of times something occurs in a given time period and see if it occurs more or less often in that time period after implementing an intervention. For example, we might measure how many baskets someone makes while shooting for 2 minutes. We would repeat that at least three times to get our baseline. Next, we would test some intervention. We might play music while shooting, give encouragement while shooting, or video the person while shooting to see if our intervention influenced the number of shots made. After the 3 baseline measurements (3 sets of 2 minute shooting), we would measure several more times (sets of 2 minute shooting) after the intervention and plot the time points (number of baskets made in 2 minutes for each of the measured time points). This works well for behaviors that are distinct and can be counted.

Sometimes behaviors come and go over time (such as being off task in a classroom or not listening during a coaching session). The way we can record these is to select a period of time (say 5 minutes) and mark down every 10 seconds whether our participant is on task. We make a minimum of three sets of 5-minute observations for a baseline, implement an intervention, and then make more sets of 5-minute observations with the intervention in place. We use this method rather than counting how many times someone is off task because a person who is continually off task would receive a count of only 1. Someone who is off task twice for 15 seconds each time would receive a count of 2. However, the second person is certainly not off task twice as much as the first person. Therefore, recording whether the person is off task at 10-second intervals gives a more accurate picture. The person continually off task would have a score of 30 (off task at every one of the thirty 10-second checks in 5 minutes) and the person off task twice for a short time would have a score of 2 (off task during only 2 of the 10-second checks).
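The difference between the two scoring methods can be checked with a short sketch. It assumes that an observer checks the participant at the start of each 10-second interval and that off-task episodes are known as (start, end) times in seconds; both the checking rule and the episode times are assumptions made for illustration, not part of the procedure described above.

```python
def event_count(episodes):
    """Count separate off-task episodes, ignoring how long each lasted."""
    return len(episodes)


def interval_score(episodes, session_seconds=300, step=10):
    """Count the checks (one every `step` seconds) at which the person is off task.

    Checks happen at 0, 10, 20, ... seconds; an episode (start, end)
    covers a check at time t when start <= t < end.
    """
    checks = range(0, session_seconds, step)
    return sum(
        any(start <= t < end for start, end in episodes) for t in checks
    )


continuous = [(0, 300)]           # off task for the whole 5 minutes
brief = [(12, 27), (102, 117)]    # hypothetical: two 15-second lapses

print(event_count(continuous), interval_score(continuous))  # 1 30
print(event_count(brief), interval_score(brief))            # 2 2
```

Counting events ranks the second person as worse (2 versus 1), whereas the interval score reflects how much of the session each person actually spent off task (2 versus 30).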

I also have additional information about how to record single-subject research data.