Creating and Scoring Essay Tests

FatCamera / Getty Images


- M.Ed., Curriculum and Instruction, University of Florida

- B.A., History, University of Florida

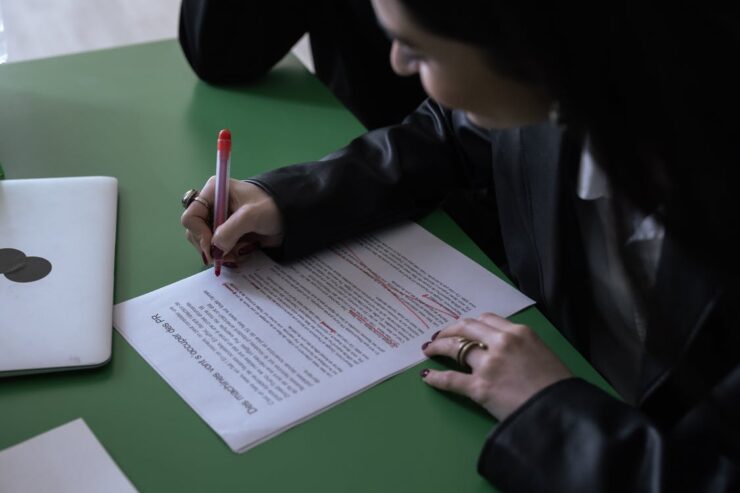

Essay tests are useful for teachers when they want students to select, organize, analyze, synthesize, and/or evaluate information. In other words, they rely on the upper levels of Bloom's Taxonomy. There are two types of essay questions: restricted and extended response.

- Restricted Response - These essay questions limit what the student will discuss in the essay based on the wording of the question. For example, "State the main differences between John Adams' and Thomas Jefferson's beliefs about federalism," is a restricted response. What the student is to write about has been expressed to them within the question.

- Extended Response - These allow students to select what they wish to include in order to answer the question. For example, "In Of Mice and Men, was George's killing of Lennie justified? Explain your answer." The student is given the overall topic, but they are free to use their own judgment and integrate outside information to help support their opinion.

Student Skills Required for Essay Tests

Before expecting students to perform well on either type of essay question, we must make sure that they have the required skills to excel. Following are four skills that students should have learned and practiced before taking essay exams:

- The ability to select appropriate material from the information learned in order to best answer the question.

- The ability to organize that material in an effective manner.

- The ability to show how ideas relate and interact in a specific context.

- The ability to write effectively in both sentences and paragraphs.

Constructing an Effective Essay Question

Following are a few tips to help in the construction of effective essay questions:

- Begin with the lesson objectives in mind. Make sure to know what you wish the student to show by answering the essay question.

- Decide if your goal requires a restricted or extended response. In general, if you wish to see if the student can synthesize and organize the information that they learned, then restricted response is the way to go. However, if you wish them to judge or evaluate something using the information taught during class, then you will want to use the extended response.

- If you are including more than one essay, be cognizant of time constraints. You do not want to punish students because they ran out of time on the test.

- Write the question in a novel or interesting manner to help motivate the student.

- State the number of points that the essay is worth. You can also provide them with a time guideline to help them as they work through the exam.

- If your essay item is part of a larger objective test, make sure that it is the last item on the exam.

Scoring the Essay Item

One of the downfalls of essay tests is that they lack reliability. Even when teachers grade essays with a well-constructed rubric, subjective decisions are made. Therefore, it is important to be as consistent as possible when scoring your essay items. Here are a few tips to help improve reliability in grading:

- Determine whether you will use a holistic or analytic scoring system before you write your rubric. With the holistic grading system, you evaluate the answer as a whole, rating papers against each other. With the analytic system, you list specific pieces of information and award points for their inclusion.

- Prepare the essay rubric in advance. Determine what you are looking for and how many points you will be assigning for each aspect of the question.

- Avoid looking at names. Some teachers have students put numbers on their essays instead of names to help with this.

- Score one item at a time. This helps ensure that you use the same thinking and standards for all students.

- Avoid interruptions when scoring a specific question. Again, consistency will be increased if you grade the same item on all the papers in one sitting.

- If an important decision like an award or scholarship is based on the score for the essay, obtain two or more independent readers.

- Beware of negative influences that can affect essay scoring. These include handwriting and writing style bias, the length of the response, and the inclusion of irrelevant material.

- Review papers that are on the borderline a second time before assigning a final grade.
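The analytic approach and the two-reader tip above can be sketched in a few lines of Python. This is only an illustration: the rubric criteria, the point values, and the two-point disagreement tolerance are all assumptions made for the example, not values taken from any grading standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    max_points: int


# Hypothetical rubric for the Adams/Jefferson restricted-response question.
RUBRIC = (
    Criterion("Identifies Adams' position", 3),
    Criterion("Identifies Jefferson's position", 3),
    Criterion("Contrasts the two positions", 4),
)


def analytic_score(awarded: dict[str, int], rubric: tuple[Criterion, ...] = RUBRIC) -> int:
    """Sum the points awarded per criterion, rejecting out-of-range marks."""
    total = 0
    for criterion in rubric:
        points = awarded.get(criterion.name, 0)  # a missing criterion earns 0
        if not 0 <= points <= criterion.max_points:
            raise ValueError(f"{criterion.name}: {points} outside 0..{criterion.max_points}")
        total += points
    return total


def needs_second_look(reader_a: int, reader_b: int, tolerance: int = 2) -> bool:
    """Flag an essay when two independent readers differ by more than `tolerance` points."""
    return abs(reader_a - reader_b) > tolerance
```

Keying the marks to an anonymous essay number rather than a student name would also support the "avoid looking at names" tip.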



Constructing Test Items (Guidelines & 7 Common Item Types)

Posted by Erika Johnson

December 7, 2023 at 4:16 PM


Introduction

Let's say you have been given the task of building an examination for your organization.

After spending two weeks panicking about how you would do this (and definitely not procrastinating the work that must be done), you are finally ready to begin the test development process.

But you can't help but ask yourself:

- Where in the world do you begin?

- Why do you need to create this exam?

- And while you know you need to construct test items, which item types are the best fit for your exam?

- Who is your audience?

- How do you determine all that?

Luckily for you, Caveon has an amazing team of experts on hand to help with every step of the way: Caveon Secure Exam Development (C-SEDs). Whether working with our team or trying your hand at test development yourself, here's some information on item best practices to help guide you on your way.

Table of Contents

- The Benefits of Identifying Your Exam’s Purpose

- What Is a Minimally Qualified Candidate (MQC)?

- Common Exam Types

- Common Item Types

- General Guidelines for Constructing Test Items

- Conclusion & More Resources

Determine Your Purpose for Testing: Why and Who

First things first.

Before creating your test, you need to determine your purpose:

- Why you are testing your candidates, and

- Who exactly will be taking your exam

Assessing your testing program's purpose (the "why" and "who" of your exam) is the first vital step of the development process. You do not want to test just to test; you want to scope out the reason for your exam. Ask yourself:

- Why is this exam important to your organization?

- What are you trying to achieve with having your test takers sit for it?

Consider the following:

- Is your organization interested in testing to see what was learned at the end of a course presented to students?

- Are you looking to assess if an applicant for a job has the necessary knowledge to perform the role?

- Are candidates trying to obtain certification within a certain field?

The Benefits of Identifying Your Exam's Purpose

Learning the purpose of your exam will help you come up with a plan on how best to set up your exam—which exam type to use, which type of exam items will best measure the skills of your candidates (we will discuss this in a minute), etc.

Determining your test's purpose will also help you identify your testing audience, which will ensure your exam is testing your examinees at the right level.

Whether they are students still in school, individuals looking to qualify for a position, or experts looking to get certification in a certain product or field, it’s important to make sure your exam is actually testing at the appropriate level.

For example, your test scores will not be valid if your items are too easy or too hard, so keeping the minimally qualified candidate (MQC) in mind during all of the steps of the exam development process will ensure you are capturing valid test results overall.

What Is the MQC?

MQC is the acronym for “minimally qualified candidate.”

The MQC is a conceptualization of the assessment candidate who possesses the minimum knowledge, skills, experience, and competence to just meet the expectations of a credentialed individual.

If the credential is entry level, the expectations of the MQC will be less than if the credential is designated at an intermediate or expert level.

Think of an ability continuum that goes from low ability to high ability. Somewhere along that ability continuum, a cut point will be set. Those candidates who score below that cut point are not qualified and will fail the test. Those candidates who score above that cut point are qualified and will pass.

The minimally qualified candidate, though, should just barely make the cut. It’s important to focus on the word “qualified,” because even though this candidate will likely gain more expertise over time, they are still deemed to have the requisite knowledge and abilities to perform the job or understand the subject.
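As a minimal sketch of the cut point idea, the snippet below classifies a few scores against a cut score. The candidate names, the scores, and the cut score of 70 are hypothetical, and treating a score exactly at the cut as a pass is an assumption made here so that the MQC "just barely makes the cut."

```python
def classify(score: float, cut_score: float) -> str:
    """Return 'pass' when the score meets or exceeds the cut score, else 'fail'."""
    # Assumption: a score exactly at the cut point counts as a pass.
    return "pass" if score >= cut_score else "fail"


# Hypothetical candidates on a 100-point exam with a cut score of 70.
scores = {"candidate_a": 58, "candidate_b": 70, "candidate_c": 83}
results = {name: classify(score, cut_score=70) for name, score in scores.items()}
```

Here `candidate_b` plays the role of the minimally qualified candidate: the lowest score that still passes.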

Factors to Consider when Constructing Your Test

Now that you’ve determined the purpose of your exam and identified the audience, it’s time to decide on the exam type and which item types to use that will be most appropriate to measure the skills of your test takers.

First up, your exam type.

The type of exam you choose depends on what you are trying to test and the kind of tool you are using to deliver your exam.

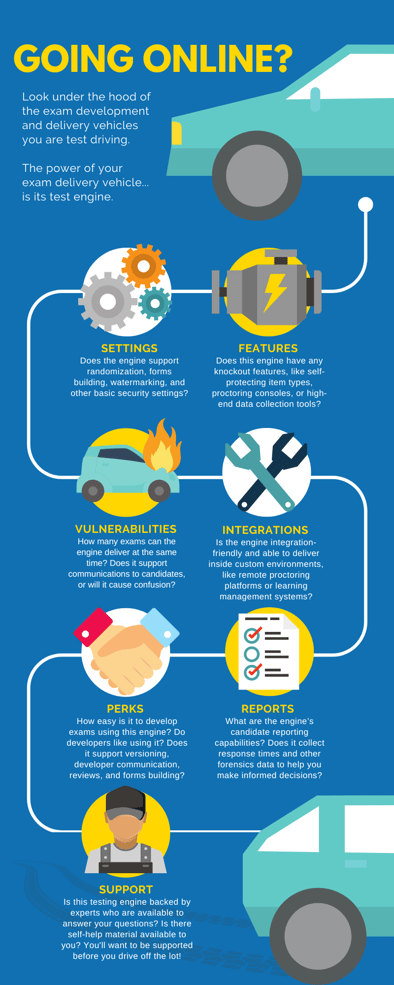

You should always make sure the software you use to develop and deliver your exam is thoroughly vetted—here's an outline of some of the most important things to look for in your testing engine:

Next up, the type of exam and items you choose.

The type of exam and type(s) of items you choose depend on your measurement goals and what you are trying to assess. It is essential to take all of this into consideration before moving forward with development.

Here are some common exam types to consider:

Fixed-Form Exam

Fixed-form delivery is a method of testing where every test taker receives the same items. An organization can have more than one fixed-item form in rotation, using the same items that are randomized on each live form. Additionally, forms can be made using a larger item bank and published with a fixed set of items equated to a comparable difficulty and content area match.

Computer Adaptive Testing (CAT)

A CAT exam is a test that adapts to the candidate's ability in real time by selecting different questions from the bank in order to provide a more accurate measurement of their ability level on a common scale. Every time a test taker answers an item, the computer re-estimates the tester’s ability based on all the previous answers and the difficulty of those items. The computer then selects the next item that the test taker should have a 50% chance of answering correctly.
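The adaptive loop described above can be sketched as follows. This is an illustration under stated assumptions, not how any particular testing engine works: it assumes a Rasch (one-parameter logistic) model, a simple Newton-Raphson ability update clamped to a fixed range, and hypothetical item names and difficulties.

```python
import math


def p_correct(ability: float, difficulty: float) -> float:
    """Rasch-model probability that a test taker answers an item correctly."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def next_item(ability: float, bank: dict[str, float], used: set[str]) -> str:
    """Pick the unused item whose success probability is closest to 50%."""
    candidates = {item: b for item, b in bank.items() if item not in used}
    if not candidates:
        raise ValueError("item bank exhausted")
    return min(candidates, key=lambda item: abs(p_correct(ability, candidates[item]) - 0.5))


def update_ability(ability: float, responses: list[tuple[float, bool]],
                   steps: int = 20, bound: float = 4.0) -> float:
    """Re-estimate ability from all (difficulty, correct) pairs seen so far."""
    for _ in range(steps):
        probs = [p_correct(ability, b) for b, _ in responses]
        gradient = sum((1.0 if ok else 0.0) - p for (_, ok), p in zip(responses, probs))
        information = sum(p * (1.0 - p) for p in probs)
        if information == 0.0:
            break
        # Clamp so all-correct or all-incorrect response patterns stay finite.
        ability = max(-bound, min(bound, ability + gradient / information))
    return ability
```

Under this model, an item has a 50% success probability exactly when its difficulty equals the current ability estimate, which is why the selection step looks for the closest match.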

Linear on the Fly Testing (LOFT)

A LOFT exam is a test where the items are drawn from an item bank and presented so that each person sees a different set of items. The difficulty of the overall test is controlled to be equal for all examinees. LOFT exams can use automated item generation (AIG) to create large item banks.
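One simple way to picture LOFT assembly is to keep drawing random forms until the average difficulty lands near a target. The sketch below does exactly that with rejection sampling. The function name, the difficulty scale, and the tolerance are assumptions for illustration, and real programs typically also balance content areas, which is omitted here.

```python
import random
from statistics import mean


def draw_loft_form(bank: dict[str, float], form_length: int, target_difficulty: float,
                   tolerance: float = 0.1, seed=None, max_tries: int = 10_000) -> list[str]:
    """Draw a random form whose mean item difficulty is within `tolerance` of the target."""
    rng = random.Random(seed)
    item_ids = sorted(bank)  # stable order so a given seed reproduces the same form
    for _ in range(max_tries):
        form = rng.sample(item_ids, form_length)
        if abs(mean(bank[item] for item in form) - target_difficulty) <= tolerance:
            return form
    raise RuntimeError("no form within tolerance; widen the tolerance or enlarge the bank")
```

Passing a different seed per examinee gives each person a different set of items while holding overall difficulty roughly constant.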

The above three exam types can be used with any standard item type.

Before moving on, however, there is another more innovative exam type to consider if your delivery method allows for it:

Performance-Based Testing

A performance-based assessment measures the test taker's ability to apply the skills and knowledge learned beyond typical methods of study and/or learned through research and experience. For example, a test taker in a medical field may be asked to draw blood from a patient to show they can competently perform the task. Or a test taker wanting to become a chef may be asked to prepare a specific dish to ensure they can execute it properly.

Once you've decided on the type of exam you'll use, it's time to choose your item types.

There are many different item types to choose from (you can check out a few of our favorites in this article).

While utilizing more item types on your exam won’t ensure you have more valid test results, it’s important to know what’s available in order to decide on the best item format for your program.

Here are a few of the most common items to consider when constructing your test:

Multiple-Choice

A multiple-choice item is a question where a candidate is asked to select the correct response from a choice of four (or more) options.

Multiple Response

A multiple response item is an item where a candidate is asked to select more than one response from a pool of options (e.g., “choose two” or “choose three”).

Short Answer

Short answer items ask a test taker to synthesize, analyze, and evaluate information, and then to present it coherently in written form.

Matching

A matching item requires test takers to connect a definition/description/scenario to its associated correct keyword or response.

Build List

A build list item challenges a candidate’s ability to identify and order the steps/tasks needed to perform a process or procedure.

Discrete Option Multiple Choice™ (DOMC)

DOMC™ is known as the “multiple-choice item makeover.” Instead of showing all the answer options, DOMC options are randomly presented one at a time. For each option, the test taker chooses “yes” or “no.” When the question is answered correctly or incorrectly, the next question is presented. DOMC has been used by award-winning testing programs to prevent cheating and test theft. You can learn more about the DOMC item type in this white paper.
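The one-option-at-a-time flow can be illustrated with a short simulation. The scoring rule used here is an assumption made for the sketch, not a description of the actual DOMC product: the item ends as correct when the test taker says "yes" to the key, and as incorrect when they say "yes" to a distractor or "no" to the key. The function name and the sample options are hypothetical.

```python
import random
from typing import Callable


def administer_domc(options: dict[str, bool], respond: Callable[[str], bool], seed=None) -> bool:
    """Show options one at a time in random order; return True if the item is answered correctly.

    `options` maps option text to whether it is the key.
    `respond` returns True for a "yes" and False for a "no".
    """
    rng = random.Random(seed)
    order = list(options)
    rng.shuffle(order)
    for text in order:
        said_yes = respond(text)
        is_key = options[text]
        if said_yes != is_key:
            return False  # endorsed a distractor or rejected the key
        if is_key:
            return True   # endorsed the key, so the item ends here
        # otherwise a distractor was correctly rejected; show the next option
    return False  # no key among the options
```

Because the item can end before every option is shown, a test taker often never sees the full option set, which is the exposure-limiting property the description above points to.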

SmartItem™

A self-protecting item, otherwise known as a SmartItem, employs a proprietary technology resistant to cheating and theft. A SmartItem contains multiple variations, all of which work together to cover an entire learning objective completely. Each time the item is administered, the computer generates a random variation. SmartItem technology has numerous benefits, including curbing item development costs and mitigating the effects of testwiseness. You can learn more about the SmartItem in this infographic and this white paper.

What Are the General Guidelines for Constructing Test Items?

Regardless of the exam type and item types you choose, focusing on some best practice guidelines can set up your exam for success in the long run.

There are many guidelines for creating tests (see this handy guide, for example), but this list sticks to the most important points. Little things can really make a difference when developing a valid and reliable exam!

Institute Fairness

Although you want to ensure that your items are difficult enough that not everyone gets them correct, you never want to trick your test takers! Keeping your wording clear and making sure your questions are direct and not ambiguous is very important. For example, asking a question such as “What is the most important ingredient to include when baking chocolate chip cookies?” does not set your test taker up for success. One person may argue that sugar is the most important, while another test taker may say that the chocolate chips are the most necessary ingredient. A better way to ask this question would be “What is an ingredient found in chocolate chip cookies?” or “Place the following steps in the proper order when baking chocolate chip cookies.”

Stick to the Topic at Hand

When creating your items, ensuring that each item aligns with the objective being tested is very important. If the objective asks the test taker to identify genres of music from the 1990s, and your item is asking the test taker to identify different wind instruments, your item is not aligning with the objective.

Ensure Item Relevancy

Your items should be relevant to the task that you are trying to test. Coming up with ideas to write on can be difficult, but avoid asking your test takers to identify trivial facts about your objective just to find something to write about. If your objective asks the test taker to know the main female characters in the popular TV show Friends, asking the test taker what color Rachel’s skirt was in episode 3 is not an essential fact that anyone would need to recall to fully understand the objective.

Gauge Item Difficulty

As discussed above, remembering your audience when writing your test items can make or break your exam. To put it into perspective, if you are writing a math exam for a fourth-grade class, but you write all of your items on advanced trigonometry, you have clearly not met the difficulty level for the test taker.

Inspect Your Options

When writing your options, keep these points in mind:

- Always make sure your correct option is 100% correct, and your incorrect options are 100% incorrect. By using partially correct or partially incorrect options, you will confuse your candidate. Doing this could keep a truly qualified candidate from answering the item correctly.

- Make sure your distractors are plausible. If your correct response logically answers the question being asked, but your distractors are made up or even silly, it will be very easy for any test taker to figure out which option is correct. Thus, your exam will not properly discriminate between qualified and unqualified candidates.

- Try to make your options parallel to one another. Ensuring that your options are all worded similarly and are approximately the same length will keep one from standing out from another, helping to remove that testwiseness effect.
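The "parallel options" point lends itself to a quick automated check. The sketch below flags options whose length stands out from the rest. The 1.5x ratio is an arbitrary threshold chosen for illustration, and length is only a rough proxy for parallel wording, so a human review is still needed.

```python
from statistics import median


def flag_length_outliers(options: list[str], ratio: float = 1.5) -> list[str]:
    """Return options that are much longer or much shorter than the median option."""
    typical = median(len(option) for option in options)
    return [
        option for option in options
        if len(option) > typical * ratio or len(option) < typical / ratio
    ]
```

An option that gets flagged is a candidate for rewording so that it does not give testwise candidates a clue.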

Conclusion & More Resources

Constructing test items and creating entire examinations is no easy undertaking.

This article will hopefully help you identify your specific purpose for testing and determine the exam and item types you can use to best measure the skills of your test takers.

We’ve also gone over general best practices to consider when constructing items, and we’ve sprinkled helpful resources throughout to help you on your exam development journey.

(Note: This article helps you tackle the first step of the 8-step assessment process: Planning & Developing Test Specifications.)

To learn more about creating your exam, including how to increase the usable lifespan of your exam, review our ultimate guide on secure exam creation and also our workbook on evaluating your testing engine, leveraging secure item types, and increasing the number of items on your tests.

And if you need help constructing your exam and/or items, our award-winning exam development team is here to help!

Erika Johnson

Erika is an Exam Development Manager in Caveon’s C-SEDs group. With almost 20 years in the testing industry, nine of which have been with Caveon, Erika is a veteran of both exam development and test security. Erika has extensive experience working with new, innovative test designs, and she knows how to best keep an exam secure and valid.

About Caveon

For more than 18 years, Caveon Test Security has driven the discussion and practice of exam security in the testing industry. Today, as the recognized leader in the field, we have expanded our offerings to encompass innovative solutions and technologies that provide comprehensive protection: Solutions designed to detect, deter, and even prevent test fraud.


elttguide.com


Writing Effective Test Items: The Definitive Guide

Difficult Job

It is not easy to construct a good test that measures what you want. Writing effective test items is a difficult job: it takes much practice and experience, and there are many things to consider.

A Valuable Resource

With this challenge in mind, I’ve created this guide, “Writing Effective Test Items: The Definitive Guide,” a valuable resource for all teachers who are interested in constructing test items that effectively capture what a student knows.

Moreover, with this definitive guide, teachers will be able to avoid pitfalls in the testing of their students.

A Practical Guide

It is a practical guide to constructing effective test questions. It provides teachers with the step-by-step instructions they need to prepare effective classroom exams with ease and comfort.

All Teachers Need It

I’ve created this book because many teachers asked me frequently for it: a definitive guide to help them write test questions in a more effective way.

Gist Of My Experience

In this book, I share my more than 20 years of experience in creating successful exams. It is the comprehensive guide that I wish someone had shared with me when I first started to make classroom exams for my students.

After Reading This Guide, Teachers Will Be Able To:

- Establish the technical quality of a test by achieving certain standards.

- Use and develop effective test items following appropriate guidelines.

- Avoid common pitfalls in testing their students.

- Grade essay tests more effectively and fairly.


Assessment: Test construction basics


Test construction basics


The following information provides some general guidelines to assist with test development and is meant to be applicable across disciplines.

Make sure you are familiar with Bloom’s Taxonomy , as it is referenced frequently.

General Tips

Start with your learning outcomes. Choose objective and subjective assessments that match your learning outcomes and the level of complexity of the learning outcome.

Use a test blueprint. A test blueprint is a rubric, document, or table that lists the learning outcomes to be tested, the level of complexity, and the weight for the learning outcome (see sample). A blueprint will make writing the test easier and contribute immensely to test validity. Note that Bloom’s taxonomy can be very useful with this activity. Share this information with your students, to help them to prepare for the test.
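A test blueprint of the kind described above can be represented as a small table in code. The sketch below is one possible layout, not a standard format: the learning outcomes, Bloom's levels, and weights are hypothetical, and the only rule enforced is that the weights add up to 100 percent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlueprintRow:
    outcome: str         # learning outcome to be tested
    bloom_level: str     # level of complexity
    weight_percent: int  # share of the total marks


def allocate_points(blueprint: list[BlueprintRow], total_points: float) -> dict[str, float]:
    """Split the exam's total points across outcomes according to their weights."""
    if sum(row.weight_percent for row in blueprint) != 100:
        raise ValueError("blueprint weights must sum to 100")
    return {row.outcome: total_points * row.weight_percent / 100 for row in blueprint}


# Hypothetical blueprint for a 40-point unit test.
blueprint = [
    BlueprintRow("Define key terms", "remember", 20),
    BlueprintRow("Apply the procedure to a new case", "apply", 50),
    BlueprintRow("Critique a proposed solution", "evaluate", 30),
]
points = allocate_points(blueprint, total_points=40)
```

Sharing a table like this with students tells them where the marks are concentrated without revealing the questions themselves.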

Let your students know what to expect on the test. Be explicit; otherwise students may make incorrect assumptions about the test.

Word questions clearly and simply. Avoid complex questions, double negatives, and idiomatic language that may be difficult for students, especially multilingual students, to understand.

Have a colleague or instructional assistant read through (or even take) your exam. This will help ensure your questions and exam are clear and unambiguous. This also contributes to the reliability and validity of the test.

Assess the length of the exam. Unless your goal is to assess students’ ability to work within time constraints, design your exam so that students can comfortably complete it in the allocated time. A good guideline is to take the exam yourself and time it, then triple the amount of time it took you to complete the exam, or adjust accordingly.
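The timing guideline is simple arithmetic, sketched here so the multiplier can be adjusted. The function names and the sample times are hypothetical. The default multiplier of 3 comes from the guideline above.

```python
def student_time_allowance(instructor_minutes: float, multiplier: float = 3.0) -> float:
    """Estimate student completion time from the instructor's own timed attempt."""
    return instructor_minutes * multiplier


def fits_in_period(instructor_minutes: float, period_minutes: float,
                   multiplier: float = 3.0) -> bool:
    """Check whether the estimated student time fits within the exam period."""
    return student_time_allowance(instructor_minutes, multiplier) <= period_minutes
```

For example, an exam that takes the instructor 15 minutes is estimated at 45 minutes for students, which fits a 50-minute class period, while a 20-minute instructor attempt does not.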

Write your exam key prior to students taking the exam. The point value you assign to each question should align with the level of difficulty and the importance of the skill being assessed. Writing the exam key enables you to see how the questions align with instructional activities. You should be able to easily answer all the questions. Decide if you will give partial credit to multi-step questions and determine the number of steps that will be assigned credit. Doing this in advance helps make the test reliable and valid.

Design your exam so that students in your class have an equal opportunity to fully demonstrate their learning. Use different types of questions, reduce or eliminate time pressure, allow memory aids when appropriate, and make your questions fair. An exam that is too easy or too demanding will not accurately measure your students’ understanding of the material.

Characteristics of test questions, and how to choose which to use

Including a variety of question types in an exam enables the test designer to better leverage the strengths and overcome the weaknesses of any individual question type. Multiple choice questions are popular for their versatility and efficiency, but many other question types can add value to a test. Some points to consider when deciding which, when, and how often to use a particular question type include:

- Workload: Some questions require more front-end workload (i.e., time-consuming to write), while others require more back-end workload (i.e., time-consuming to mark).

- Depth of knowledge: Some question types are better at tapping higher-order thinking skills, such as analyzing or synthesizing, while others are better for surface level recall.

- Processing speed: Some question types are more easily processed and can be more quickly answered. This can impact the timing of the test and the distribution of students’ effort across different knowledge domains.

All test items should:

- Assess achievement of learning outcomes for the unit and/or course

- Measure important concepts and their relationship to that unit and/or course

- Align with your teaching and learning activities and the emphasis placed on concepts and tasks

- Measure the appropriate level of knowledge

- Vary in levels of difficulty (some factual recall and demonstration of knowledge, some application and analysis, and some evaluation and creation)

Two important characteristics of tests are:

- Reliability – to be reliable, the test needs to be consistent and free from errors.

- Validity – to be valid, the test needs to measure what it is supposed to measure.

There are two general categories for test items:

1. Objective items – students select the correct response from several alternatives or supply a word or short phrase answer. These types of items are easier to create for the lower-order levels of Bloom’s Taxonomy (recall and comprehension), although it is still possible to design them for higher-order thinking (apply and analyze).

Objective test items include:

- Multiple choice

- Completion/Fill-in-the-blank

Objective test items are best used when:

- The group tested is large; objective tests are fast and easy to score.

- The test will be reused (must be stored securely).

- Highly reliable scores on a broad range of learning goals must be obtained as efficiently as possible.

- Fairness and freedom from possible test scoring influences are essential.

2. Subjective or essay items – students present an original answer. These types of items are easier to use for higher order Bloom’s (apply, analyze, synthesize, create, evaluate).

Subjective test items include:

- Short answer essay

- Extended response essay

- Problem solving

- Performance test items (these can be graded as complete/incomplete, performed/not performed)

Subjective test items are best used when:

- The group to be tested is small or there is a method in place to minimize marking load.

- The test is not going to be reused (but could be built upon).

- The development of students’ writing skills is a learning outcome for the course.

- Student attitudes, critical thinking, and perceptions are as, or more, important than measuring achievement.

Objective and subjective test items are both suitable for measuring most learning outcomes and are often used in combination. Both types can be used to test comprehension, application of concepts, problem solving, and ability to think critically. However, certain types of test items are better suited than others to measure learning outcomes. For example, learning outcomes that require a student to ‘demonstrate’ may be better measured by a performance test item, whereas an outcome requiring the student to ‘evaluate’ may be better measured by an essay or short answer test item.

Bloom's Taxonomy

Bloom’s Taxonomy by Vanderbilt University Center for Teaching, licensed under CC-BY 2.0.

Common question types

- Multiple choice

- True/false

- Completion/fill-in-the-blank

- Short answer

Tips to reduce cheating

- Use randomized questions

- Use question pools

- Use calculated formula questions

- Use a range of different types of questions

- Avoid publisher test banks

- Do not re-use old tests

- Minimize use of multiple choice questions

- Have students “show their work” (for online courses they can scan/upload their work)

- Remind students of academic integrity guidelines, policies and consequences

- Have students sign an academic honesty form at the beginning of the assessment
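The first two tips (randomized questions and question pools) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the pool names and question IDs are hypothetical placeholders, and a real learning management system would provide its own pooling feature rather than this function.

```python
import random

# Hypothetical question pools, one per learning outcome; the IDs are placeholders.
POOLS = {
    "outcome_1": ["q1a", "q1b", "q1c", "q1d"],
    "outcome_2": ["q2a", "q2b", "q2c"],
    "outcome_3": ["q3a", "q3b", "q3c", "q3d", "q3e"],
}

def build_test(pools, per_pool, seed):
    """Draw `per_pool` questions from every pool, then shuffle the overall order."""
    rng = random.Random(seed)  # a per-student seed keeps each paper reproducible
    selected = []
    for name in sorted(pools):
        # sample() picks without replacement, so no question repeats on a paper
        selected.extend(rng.sample(pools[name], per_pool))
    rng.shuffle(selected)
    return selected

paper_a = build_test(POOLS, per_pool=2, seed=1)
paper_b = build_test(POOLS, per_pool=2, seed=2)
print(paper_a)
print(paper_b)
```

Because every paper draws the same number of questions from each pool, students see different questions while coverage of each learning outcome stays the same.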

Additional considerations for constructing effective tests

Prepare new or revised tests each time you teach a course. A past test will not reflect the changes in how you presented the material and the topics you emphasized. Writing questions at the end of each unit is one way to make sure your test reflects the learning outcomes and teaching activities for the unit.

Be cautious about using item banks from textbook publishers. The items may be poorly written, may focus on trivial topics, and may not reflect the learning outcomes for your course.

Make your tests cumulative. Cumulative tests require students to review material they have already studied and provide additional opportunity to include higher-order thinking questions, thus improving retention and learning.


- Last Updated: Nov 10, 2023 11:13 AM

- URL: https://camosun.libguides.com/AFL


Constructing Essay Tests

Related Papers

Journal of Basic Writing

Carol Haviland

Current Developments in English for Academic and Occupational Purposes

Kibiwott P Kurgatt

Most literature on writing assessment offers a lot of guidance on the design and validation of measures and items in essays and examination questions (e.g. Alderson and North 1991, Bachman 1990, Hamp-Lyons 1988). Most of this literature, however, seems to be oriented towards language tests and not to how information concerning exam questions is interpreted in the academic contexts in which most students work. Nevertheless, the most extensive analysis of prompts in subject-specific contexts can be rightly attributed to Horowitz (1986a and 1986b), Canseco and Byrd (1989) and Braine (1989). These studies also make suggestions concerning the implications of the analysis for the teaching of English for Academic Purposes. The present paper seeks to build on these earlier studies by looking at the types of prompts found in essay and examination questions in an ESL context in Africa. In particular it attempts to find out: a) the general features of first year undergraduate essay and examination questions; b) what these features tell us about what students need to know; c) what features of format and/or content students need to be aware of; d) whether there is significance in the nature of the distribution of prompts (specific or general) across disciplines or courses. This paper starts by looking at the general features of the questions, including the marks allocated to different types of questions per department. The questions are then classified according to the categories and subcategories of prompts proposed by Horowitz. The nature of these prompts is then discussed, and a summary of the results and conclusions is presented.

karen gabinete

Journal of Research in Science Teaching

Gordon Warren

Assessing Writing

Jennifer Kobrin

Journal of Baltic Science Education

Çetin DOGAR

Putu Febry Valentina Griadhi

Febry Valentina

Assessment is the systematic and ongoing process of acquiring, evaluating, and applying data from measured outcomes to evaluate, quantify, and document many elements of learning, such as academic preparedness, learning progress, skill acquisition, and students' educational needs. It refers to the act of judging or deciding the amount, value, quality, or significance of anything, as well as the judgment or decision made. Assessments in the educational setting can take many different forms and are intended to measure specific aspects of learning, identify individual student shortcomings and strengths, and inform educational programming. There are three types of assessments, which are summative assessment, formative assessment, and diagnostic assessment. Summative assessment is a type of evaluation that is used at the end of a unit or course to assess a student's understanding and learning by comparing performance to a standard. Formative assessment refers to a wide range of approaches used by teachers to assess student comprehension, learning progress, and academic support during a lesson, unit, or course. Diagnostic assessment is a type of pre-assessment or pre-test used by teachers to evaluate students' strengths, weaknesses, knowledge, and skills before instruction, usually at the start of a course, grade level, unit, or lesson. These tests are intended to discover learning gaps, clarify misconceptions, and guide instructional tactics. Diagnostic tests are often low-stakes and do not contribute toward a student's grade. Assessment is important for instruction because it pushes teaching by promoting critical thinking, reasoning, and reflection, providing a high-quality learning environment, and allowing teachers to quantify the efficacy of their instruction by relating student performance to specific learning objectives. 
It also aids in the monitoring of students' progress, gives diagnostic feedback, and informs teaching methods, resulting in greater learning and the enhancement of teaching approaches. Assessment of student learning is an essential component of a good education; it influences grades, placement, advancement, instructional requirements, curriculum, and, in certain situations, decision making. Both cognitive and non-cognitive diagnostic assessments must be used to ensure that students accomplish their learning objectives. A well-rounded assessment strategy also includes the implementation of higher-order thinking skills (HOTS), numeracy literacy, culturally responsive pedagogy, reflection, differentiated assessment, assessment instruments, and technological integration. This essay intends to demonstrate the importance of assessment procedures in understanding student learning and ensuring equitable opportunities for academic success through an observational study of assessment procedures in a Singaraja secondary school.

Education. Innovation. Diversity.

Sandija Gabdulļina

This scientific research explores the potential of using essays as a formative assessment tool in the context of the competencies approach. The competencies approach emphasises the importance of focusing on learning progress and needs to promote successful learning, thus formative assessment plays a pivotal role in facilitating effective learning. The study highlights the significance of essay writing in promoting critical thinking, problem-solving, and self-directed learning. However, students often perceive essays solely as a means of summative assessment, lacking a comprehensive understanding of the assessment criteria. To address this issue, the research emphasizes the importance of involving students in the learning process by collectively defining outcomes, establishing assessment criteria, and providing constructive feedback. Clear objectives and feedback are crucial in fostering self-regulated learning and lifelong learning. The study highlights the need for student-teacher ...

Gazi Medical Journal

CHANDNI GUPTA

Catherine Trapani


Constructing Test Items, pp 1–16

What Is Constructing Test Items?

- Steven J. Osterlind


Part of the book series: Evaluation in Education and Human Services ((EEHS,volume 25))

Constructing test items for standardized tests of achievement, ability, and aptitude is a task of enormous importance—and one fraught with difficulty. The task is important because test items are the foundation of written tests of mental attributes, and the ideas they express must be articulated precisely and succinctly. Being able to draw valid and reliable inferences from a test’s scores rests in great measure upon attention to the construction of test items. If a test’s scores are to yield valid inferences about an examinee’s mental attributes, its items must reflect a specific psychological construct or domain of content. Without a strong association between a test item and a psychological construct or domain of content, the test item lacks meaning and purpose, like a mere free-floating thought on a page with no rhyme or reason for being there at all.

- Reference Source

- Test Developer

- Item Format

- Good Writing


Author information

Authors and affiliations.

University of Missouri, Columbia, USA

Steven J. Osterlind


Copyright information

© 1989 Kluwer Academic Publishers

About this chapter

Cite this chapter.

Osterlind, S.J. (1989). What Is Constructing Test Items?. In: Constructing Test Items. Evaluation in Education and Human Services, vol 25. Springer, Dordrecht. https://doi.org/10.1007/978-94-009-1071-3_1


Publisher Name : Springer, Dordrecht

Print ISBN : 978-94-010-6971-7

Online ISBN : 978-94-009-1071-3

eBook Packages : Springer Book Archive


- J Family Community Med

- v.13(3); Sep-Dec 2006

GUIDELINES FOR THE CONSTRUCTION OF MULTIPLE CHOICE QUESTIONS TESTS

Mohammed O. Al-Rukban

Department of Family & Community Medicine, College of Medicine and King Khalid University Hospital, King Saud University, Riyadh, Saudi Arabia

Multiple Choice Questions (MCQs) are generally recognized as the most widely applicable and useful type of objective test items. They could be used to measure the most important educational outcomes - knowledge, understanding, judgment and problem solving. The objective of this paper is to give guidelines for the construction of MCQs tests. This includes the construction of both “single best option” type, and “extended matching item” type. Some templates for use in the “single best option” type of questions are recommended.

INTRODUCTION

In recent years, there has been much discussion about what should be taught to medical students and how they should be assessed. In addition, highly publicized instances of the poor performance of medical doctors have fuelled the drive to find a way for ensuring that qualified doctors achieve and maintain appropriate knowledge, skills and attitudes throughout their working lives. 1

Selecting an assessment method for measuring students’ performance remains a daunting task for many medical institutions. 2 Assessment should be educational and formative if it is going to promote appropriate learning. It is important that individuals learn from any assessment process and receive feedback on which to build their knowledge and skills. It is also important for an assessment to have a summative function to demonstrate competence. 1

Assessment may act as a trigger, informing examinees what instructors really regard as important 3 and the value they attach to different forms of knowledge and ways of thinking. In fact, assessment has been identified as possibly the single most potent influence on student learning, narrowing students’ focus to the topics to be tested (i.e. what is to be studied) and shaping their learning approaches (i.e. how it is going to be studied). 4 Students have been found to differ in the quality of their learning when instructed to focus either on factual details or on the assessment of evidence. 5 Furthermore, changes in assessment methods have been found to influence medical students to alter their study activities. 4 As methods of assessment drive learning in medicine and other disciplines, 1 it is important that the assessment tools test the attributes required of students or professionals undergoing revalidation. Staff subsequently redesign their methods of assessment to ensure a match between assessment forms and their educational goals. 6

Methods of assessment of medical students and practicing doctors have changed considerably during the last 5 decades. 7 No single method is appropriate, however, for assessing all the skills, knowledge and attitudes needed in medicine, so a combination of assessment techniques will always be required. 8 – 10

When designing assessments of medical competencies, a number of issues need to be addressed; reliability, which refers to the reproducibility or consistency of a test score, validity, which refers to the extent to which a test measures what it purports to measure, 11 , 12 and standard setting which defines the endpoint of the assessment. 1 Sources of the evidence of validity are related to the content, response process, internal structure, relationship to other variables, and consequences of the assessment scores. 13

Validity requires the selection of appropriate test formats for the competencies to be tested. This invariably requires a composite examination. Reliability, however, requires an adequate sample of the necessary knowledge, skills, and attitudes to be tested.

However, measuring students’ performances is not the sole determinant for choosing an assessment method. Other factors such as cost, suitability, and safety have profound influences on the selection of an assessment method and, most probably, constitute the major reason for inter-institutional variations in the selection of assessment methods as well as success rates. 14

Examiners need to use a variety of test formats when organizing test papers; each format being selected on account of its strength as regards to validity, reliability, objectivity and feasibility. 15

For as long as there is a need to test knowledge in the assessment of doctors and medical undergraduates, multiple choice questions (MCQs) will always play a role as a component in the assessment of clinical competence. 16

Multiple choice questions were introduced into medical examinations in the 1950s and have been shown to be more reliable in testing knowledge than the traditional essay questions. They represent one of the most important well-established examination tools widely used in assessment at the undergraduate and postgraduate levels of medical examinations. The MCQ is an objective question for which there is prior agreement on what constitutes the correct answer. This widespread use may have led examiners to use the term MCQ as a synonym for an objective question. 15 Since their introduction, there have been many modifications to MCQs, resulting in a variety of formats. 16 Like other methods of assessment, they have their strengths and weaknesses. Scoring of the questions is easy and reliable, and their use permits a wide sampling of students' knowledge in an examination of reasonable duration. 15 – 19 MCQ-based exams are also reliable because they are time-efficient and a short exam still allows a breadth of sampling of any topic. 19 Well-constructed MCQs can also assess taxonomically higher-order cognitive processing such as interpretation, synthesis and application of knowledge rather than only the recall of isolated facts. 20 They can test a number of skills in addition to the recall of factual knowledge, and are reliable, discriminatory, reproducible and cost-effective. It is generally agreed that MCQs should not be used as a sole assessment method in summative examinations, but alongside other test forms. They are designed to broaden the range of skills to be tested during all phases of medical education, whether undergraduate, postgraduate or continuing. 21

Though writing the questions requires considerable effort, their high objectivity makes it possible for the results to be released immediately after marking by anyone including a machine. 15 , 18 This facilitates the computerized analysis of the raw data and allows the examining body to compare the performance of either the group or an individual with that of past candidates by the use of discriminator questions. 22 Ease of marking by computer makes MCQs an ideal method for assessing the knowledge of a large number of candidates. 16 , 22

However, a notable concern of many health professionals is that they are frequently faced with the task of constructing tests with little or no experience or training on how to perform this task. Examiners need to spend considerable time and effort to produce satisfactory questions. 15

The objective of this paper is to describe guidelines for the construction of two common MCQs types: the “single best option” type, and “extended matching item” type. Available templates for the “single best option” type will be discussed.

Single Best Option

The first step for writing any exam is to have a blueprint (table of specifications). Blueprinting is the planning of the test against the learning objectives of a course or competencies essential to a specialty. 1 A test blueprint is a guide used for creating a balanced examination and consists of a list of the competencies and topics (with specified weight for each) that should be tested on an examination, as in the example presented in Table 1 .

Example of a table of specifications (Blueprint) based on the context, for Internal Medicine examination
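As a rough illustration of how blueprint weights translate into an examination, the sketch below converts topic weights into whole numbers of questions. The topic names, weights and total number of items are hypothetical and are not taken from Table 1; the largest-remainder rounding rule is one reasonable choice, not a method prescribed by the source.

```python
def allocate_items(weights, total_items):
    """Split total_items across topics in proportion to their blueprint weights.

    Largest-remainder rounding is used so the counts always sum to total_items.
    """
    total_weight = sum(weights.values())
    exact = {t: total_items * w / total_weight for t, w in weights.items()}
    counts = {t: int(x) for t, x in exact.items()}  # round every share down first
    leftover = total_items - sum(counts.values())
    # Hand the remaining items to the topics with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda t: exact[t] - counts[t], reverse=True)
    for topic in by_remainder[:leftover]:
        counts[topic] += 1
    return counts

# Hypothetical blueprint: topic -> weight (percent of the examination).
blueprint = {
    "Cardiology": 30,
    "Respiratory": 25,
    "Gastroenterology": 25,
    "Nephrology": 20,
}
plan = allocate_items(blueprint, total_items=50)
print(plan)
```

The same function can be applied per context (diagnosis, therapy, investigation) if the blueprint weights both topics and contexts.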

If there is no blueprint, the examination committee should decide on the system to be tested by brainstorming to produce a list of possible topics/themes for question items. For example, abdominal pain, back pain, chest pain, dizziness, fatigue, fever, etc, 23 and then select one theme (topic) from the list. When choosing a topic for a question, the focus should be on one important concept, typically a common or a serious and treatable clinical problem from the specialty. After choosing the topic, an appropriate context for the question is chosen. The context defines the clinical situation that will test the topic. This is important because it determines the type of information that should be included in the stem and the response options. Consider the following example: (Topic= Hypertension; Context= Therapy).

The basic MCQ model comprises a stem and a lead-in question followed by a number of answers (options). 19 The option which matches the key in a MCQ is best called “the correct answer” 15 and the other options are the “distracters”.

When writing a single best option MCQ, as shown in Appendix 1, it is recommended that the options be written first. 23 A list of possible homogeneous options based on the selected topic and context is then generated. The options should be readily understood and as short as possible. 18 It is best to start with a list of more than five options (although only five options are usually used in the final version). This allows a couple of ‘spares’, which often come in handy! It is important that this list be homogeneous (i.e. all diagnoses, or all therapeutics, laboratory investigations, complications, etc.) 23 and that one of the options be selected as the key answer to the question.

MCQ Preparation Form

A good distracter should be inferior to the correct answer but should also be plausible to a non-competent candidate. 24 All options should be true and contain facts that are acceptable to varying degrees. The examiner would ask for the most appropriate, most common, least harmful or any other feature which is at the uppermost or lowermost point in a range. It needs to be expressed clearly that only one answer is correct. A candidate's response is considered correct if his/her selection matches the examiner's key. 15

When creating a distracter, it helps to predict how an inexperienced examinee might react to the clinical case described in the stem. 24

A question stem is then written with a lead-in statement based on the selected correct option. Well-constructed MCQs should test the application of medical knowledge (context-rich) rather than just the recall of information (context-free). Schuwirth et al. 25 found that context-rich questions lead to thinking processes which represent problem solving ability better than those elicited by context-free questions. The focus should be on problems that would be encountered in clinical practice rather than an assessment of the candidate's knowledge of trivial facts or obscure problems that are seldom encountered. The types of problems that are commonly encountered in one's own practice can provide good examples for the development of questions. To make testing both fair and consequentially valid, MCQs should be used strategically to test important content and clinical competence. 19

The clinical case should begin with the presentation of a problem, followed by relevant signs, symptoms, results of diagnostic studies, initial treatment, subsequent findings, etc. In essence, all the information that is necessary for a competent candidate to answer the question should be provided in the stem. For example:

- Age, sex (e.g., a 45-year-old man).

- Site of care (e.g. comes to the emergency department).

- Presenting complaint (e.g. because of a headache).

- Duration (e.g. that has continued for 2 days).

- Patient history (with family history).

- Physical findings.

- +/− Results of diagnostic studies.

- +/− Initial treatment, subsequent findings, etc.

The lead-in question should give clear directions as to what the candidate should do to answer the question. Ambiguity and the use of imprecise terms should be avoided. 16 , 18 There is no place for trick questions in MCQ examinations. Negative stems should be avoided, as should double negatives. The words “always”, “never” and “only” are obviously contentious in an inexact science like medicine and should not be used. 16 , 18

Consider the following examples of lead-in questions:

Example 1: Regarding myocardial infarction.

Example 2: What is the most likely diagnosis?

Note that for Example 1, no task is presented to the candidate. This type of lead-in statement will often lead to an ambiguous or unfocused question. In the second example, the task is clear and will lead to a more focused question. To ensure that the lead-in question is well constructed, the question should be answerable without looking at the response options. As a check, the response options should be covered and an attempt made to answer the question.

Well-constructed MCQs should be written at a level of difficulty appropriate to the level of the candidates. A reason often given for using difficult questions is that they help the examiner to identify the ‘cream’ of the students. However, most tests would function with greater test reliability when questions of medium difficulty are used. 26 An exception, however, would be the assessment of achievement in topic areas that all students are expected to master. Questions used here will be correctly answered by nearly all the candidates and consequently will have high difficulty index values. On the other hand, if a few candidates are to be selected for honours, scholarships, etc., it is preferable to have an examination of the appropriately high level of difficulty specifically for that purpose. It is important to bear in mind that the level of learning is the only factor that should determine the ability of a candidate to answer a question correctly. 15
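The difficulty index mentioned above can be computed directly from scored responses. The sketch below assumes the common definition of the index as the proportion of candidates who answer an item correctly, which is consistent with the statement that items answered correctly by nearly everyone have high values. The item names and response data are hypothetical.

```python
def difficulty_index(responses):
    """Proportion of candidates who answered the item correctly (0.0 to 1.0)."""
    return sum(responses) / len(responses)

# Hypothetical scored responses: 1 = correct, 0 = incorrect, one entry per candidate.
items = {
    "mastery_item": [1, 1, 1, 1, 1, 1, 1, 1, 1, 0],
    "medium_item": [1, 0, 1, 1, 0, 1, 0, 1, 0, 1],
    "hard_item": [0, 0, 1, 0, 0, 0, 1, 0, 0, 0],
}

indices = {name: difficulty_index(scores) for name, scores in items.items()}
for name, value in indices.items():
    print(f"{name}: {value:.2f}")
```

Under this definition a higher value means an easier item, so a mastery item sits near 1.0 and an item intended to separate the strongest candidates sits much lower.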

The next step is to reduce the list of options to the intended number, which is usually five (including, of course, the correct answer).

Lastly, the option list is to be arranged in a logical order to reduce guessing and to avoid putting the correct answer in a habitual location (e.g. using alphabetical order makes it possible to avoid choosing options B or C as key answers more frequently).
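A minimal sketch of the alphabetical-ordering step follows. The option texts are hypothetical and the key is an arbitrary placeholder chosen only to show the mechanics; it is not clinical guidance.

```python
import string

def order_options(options, key):
    """Sort options alphabetically and report which letter the key lands on."""
    ordered = sorted(options, key=str.lower)  # case-insensitive alphabetical order
    labels = string.ascii_uppercase[:len(ordered)]
    key_label = labels[ordered.index(key)]
    return list(zip(labels, ordered)), key_label

# Hypothetical option list for an item on hypertension therapy.
options = [
    "Thiazide diuretic",
    "ACE inhibitor",
    "Beta blocker",
    "Calcium channel blocker",
    "Alpha blocker",
]
labelled, key_label = order_options(options, key="Thiazide diuretic")  # placeholder key
for label, text in labelled:
    print(f"{label}. {text}")
print("Key:", key_label)
```

Because the position of the key is decided by the alphabet rather than by the item writer, it cannot drift toward a habitual letter across a paper.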

The role of guessing in answering MCQs has been debated extensively and a variety of approaches have been suggested to deal with the candidate who responds to questions without possessing the required level of knowledge. 27 – 29 A number of issues need closer analysis when dealing with this problem. Increasing the number of questions in a test paper will reduce the probability of passing the test by chance. 15
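The claim that more questions reduce the chance of passing by guessing can be checked with a binomial calculation. The sketch assumes five-option items, blind and independent guessing on every item, no negative marking, and a pass mark of 50%; all of these are illustrative assumptions rather than figures from the article.

```python
from math import ceil, comb

def prob_pass_by_guessing(n_questions, n_options=5, pass_fraction=0.5):
    """Probability of reaching the pass mark when every answer is a blind guess."""
    p = 1 / n_options                       # chance of guessing one item correctly
    needed = ceil(pass_fraction * n_questions)
    # Sum the binomial probabilities of getting `needed` or more items right.
    return sum(
        comb(n_questions, k) * p**k * (1 - p) ** (n_questions - k)
        for k in range(needed, n_questions + 1)
    )

for n in (10, 20, 50, 100):
    print(n, prob_pass_by_guessing(n))
```

With these assumptions a 10-item paper can be passed by pure guessing roughly 3% of the time, and the probability falls steeply as items are added.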

Once the MCQs have been written, they should be criticized by as many people as possible and they should be reviewed after their use. 16 , 18 The most common construction error encountered is the use of imprecise terms. Many MCQs used in medical education contain undefined terms. Furthermore, there is a wide range of opinions among the examiners themselves about the meanings of such vague terms. 30 The stem and options should read logically. It is easy to write items that look adequate but do not constitute proper English or do not make sense. 18

When constructing a paper from a bank of MCQs, care should be taken to ensure that there is a balanced spread of questions across the subject matter of the discipline being tested. 16 A fair or defensible MCQ exam should be closely aligned with the syllabus; be combined with practical competence testing; sample taken broadly from important content and be free from construction errors. 19

Extended Matching Items (EMIs)

Several analytic approaches have been used to obtain the optimal number of response options for multiple-choice items. 31 – 35 Focus has shifted from traditional 3–5 branches to larger numbers of branches. This may be 20-30 in the case of extended-matching questions (EMIs), or up to 500 for open-ended or ‘uncued’ formats. 36 However, the use of smaller numbers of options (and more items) results in a more efficient use of testing time. 37

Extended-matching items are multiple choice items organized into sets that use one list of options for all items in the set. There is a theme, an option list, a lead-in statement and at least two item stems. A typical set of EMIs begins with an option list of four to 26 options; more than ten options are usually used. The option list is followed by two or more patient-based items requiring the examinee to indicate a clinical decision for each item. The candidate is asked to match one or more options to each item stem.
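The structure just described (a theme, an option list, a lead-in statement and at least two item stems) can be represented as a small data structure with a basic consistency check. The class and method names are hypothetical. The theme, lead-in and options come from this article's respiratory example, while the vignette texts are placeholders and the pairing of each vignette with a key is arbitrary.

```python
from dataclasses import dataclass

@dataclass
class EMISet:
    theme: str
    lead_in: str
    options: list   # shared option list for every stem in the set
    stems: dict     # stem text -> list of keyed (correct) options

    def problems(self):
        """Return a list of structural problems; an empty list means none found."""
        issues = []
        if not 4 <= len(self.options) <= 26:
            issues.append("option list should contain 4 to 26 options")
        if len(self.stems) < 2:
            issues.append("a set needs at least two item stems")
        for stem, keys in self.stems.items():
            if not keys:
                issues.append(f"no key for stem: {stem}")
            for key in keys:
                if key not in self.options:
                    issues.append(f"key not in option list: {key}")
        return issues

example = EMISet(
    theme="Respiratory Tract Infection",
    lead_in="Which is the best specimen to send to the Microbiology laboratory "
            "for confirmation of the diagnosis?",
    options=[
        "Sputum bacterial culture", "Nasal swab", "Blood for C-reactive protein",
        "Blood culture", "Perinasal swab", "Cough swab", "Throat swab",
        "Bronchioalveolar lavage", "Urine for antigen detection",
        "Single clotted blood specimen",
    ],
    stems={  # placeholder vignettes; pairing with keys is illustrative only
        "Vignette 1 (placeholder)": ["Sputum bacterial culture"],
        "Vignette 2 (placeholder)": ["Blood culture"],
        "Vignette 3 (placeholder)": ["Bronchioalveolar lavage"],
    },
)
print(example.problems())
```

A check of this kind catches mechanical errors, such as a key missing from the shared list, before a set goes to review; it says nothing about the clinical quality of the items.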

Extended matching items have become popular in such specialties as internal and family medicine because they can be used to test diagnostic ability and clinical judgment. 15 Their use is likely to increase in postgraduate examinations as well as in undergraduate assessment. 21 Computer-based extended matching items have been used for in-course continuous assessment. 38

EMIs are more difficult, more reliable, more discriminating, and capable of reducing the testing time. In addition, they are quicker and easier to write than other test formats. 39 , 40 Over the past 20 years, multiple studies have found that EMI-based tests are more reproducible (reliable) than other multiple-choice question (MCQ) formats aimed at the assessment of medical decision making. 20 , 41 , 42 There is a wealth of evidence that EMIs are the fairest format. 19

Another more recent development is uncued questions where answers are picked from a list of several hundred choices. These have been advocated for use in assessing clinical judgment, 43 but extended matching questions have surprisingly been shown to be as statistically reliable and valid as uncued queries. 20 , 41 , 44

Extended matching questions overcome the problem of cueing by increasing the number of options and are a compromise between free-response questions and MCQs. This offers an objective assessment that is both reliable and easy to mark. 45 – 47

Nevertheless, MCQs have strengths and weaknesses and those responsible for setting MCQ papers may consider investigating the viability and value of including some questions in the extended matching format. Item writers should be encouraged to use the EMI format with a large number of options because of the efficiencies this approach affords in item preparation. 20 , 39

For the construction of EMIs the following steps are suggested ( Appendix 2 ):

Preparation of Extended Matching Item (EMI)

Step 1: The selection of the system, the context, and the theme should be based on a blueprint. Otherwise, the following sequence should be followed:

System. Example: Respiratory system.

Context. Example: Laboratory investigations.

Theme. Example: Respiratory Tract Infection.

Step 2: Write the lead-in statement.

Example: Which is the best specimen to send to the Microbiology laboratory for confirmation of the diagnosis?

Step 3: Prepare the options: Make a list of 10-15 homogeneous options. For example, they should all be diagnoses, managements, blood gas values, enzymes, prognoses, etc., and each should be short (normally only one or two words).

Example of options:

- Sputum bacterial culture.

- Nasal swab.

- Blood for C-reactive protein.

- Blood culture.

- Perinasal swab.

- Cough swab.

- Throat swab.

- Bronchioalveolar lavage.

- Urine for antigen detection.

- Single clotted blood specimen.

Step 4: Select two to three of the options as the correct answers (keys).

- Sputum bacterial culture.

- Blood culture.

- Bronchioalveolar lavage.

Step 5: The question stems or scenarios: Write two to three vignettes (case scenarios) that suit the selected options, to form the question stems. The scenarios should not be overly complex and should contain only relevant information. Each should normally be between two and five sentences in length. For the questions:

- Use patient scenarios.

- Include key patient information.

- Structure all similar scenarios in one group (do not mix adult and paediatric scenarios).

- Scenarios should be straightforward.

Scenarios can be written for most of the options, for possible use in future examinations.

- A 21-year-old man severely ill with lobar pneumonia.

- A 68-year-old woman with an exacerbation of COPD.

- A 60-year-old man with strongly suspected TB infection on whom three previous sputum specimens have been film (smear) negative.

N.B: The response options are first written and then the appropriate scenario built for each one.

Step 6: Ensure validity and discrimination:

Look at the other options in the provisional list and delete any that also suits the written case scenario, or that is clearly wrong or implausible.

Step 7: Reduce the option list to the intended number (e.g. 10-15 options for two case scenarios).

Step 8: Review the questions and ensure that there is only one best answer for each question. Ensure that there are at least four reasonable (plausible) distracters for each scenario and ensure that the reasons for matching are clear. It is advisable to ask a colleague to review the EMIs without the answers. If the colleague has difficulty, modify the option list or scenario as appropriate.
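Some of the structural checks in Steps 3 to 8 can be automated before a colleague reviews the set. The following is a minimal sketch, not part of the original procedure: the class `EmiSet`, the function `check_emi`, and the default thresholds are illustrative names and values drawn from the numbers mentioned in the steps above. Code cannot judge whether a distractor is plausible, so this only counts candidates and leaves that judgment to the reviewer.

```python
from dataclasses import dataclass


@dataclass
class EmiSet:
    theme: str
    lead_in: str
    options: list[str]
    stems: dict[str, str]  # scenario text -> keyed (correct) option


def check_emi(emi: EmiSet, min_options: int = 10, max_options: int = 15,
              min_distractors: int = 4) -> list[str]:
    """Return structural problems found; an empty list means none were found."""
    problems = []
    if not min_options <= len(emi.options) <= max_options:
        problems.append(
            f"expected {min_options}-{max_options} options, got {len(emi.options)}")
    if len(set(emi.options)) != len(emi.options):
        problems.append("option list contains duplicates")
    if len(emi.stems) < 2:
        problems.append("an EMI set needs at least two stems")
    for scenario, key in emi.stems.items():
        if key not in emi.options:
            problems.append(f"key {key!r} is not in the option list")
        # Every option other than the key is a candidate distractor;
        # plausibility still has to be judged by a human reviewer.
        distractors = [o for o in emi.options if o != key]
        if len(distractors) < min_distractors:
            problems.append(f"fewer than {min_distractors} distractors for: {scenario}")
    return problems


# The worked example from the steps above, encoded as data.
sample = EmiSet(
    theme="Respiratory Tract Infection",
    lead_in=("Which is the best specimen to send to the Microbiology "
             "laboratory for confirmation of the diagnosis?"),
    options=[
        "Sputum bacterial culture", "Nasal swab", "Blood for C-reactive protein",
        "Blood culture", "Perinasal swab", "Cough swab", "Throat swab",
        "Bronchioalveolar lavage", "Urine for antigen detection",
        "Single clotted blood specimen",
    ],
    stems={
        "A 21-year-old man severely ill with lobar pneumonia": "Blood culture",
        "A 68-year-old woman with an exacerbation of COPD": "Sputum bacterial culture",
        "A 60-year-old man with strongly suspected TB infection on whom three "
        "previous sputum specimens have been film (smear) negative":
            "Bronchioalveolar lavage",
    },
)

print(check_emi(sample))
```

A set that passes these checks is only structurally sound; the colleague review described in Step 8 is still needed to confirm that each scenario has a single best answer.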

The following is a sample:

Respiratory Tract Infection

For the following patients, which is the best specimen to send to the Microbiology laboratory for confirmation of the diagnosis?

A 21-year-old man severely ill with lobar pneumonia. (d)

A 68-year-old woman with an exacerbation of COPD. (a)

A 60-year-old man with strongly suspected TB infection on whom three previous sputum specimens have been film (smear) negative. (h)

- (a) Sputum bacterial culture.

- (b) Nasal swab.

- (c) Blood for C-reactive protein.

- (d) Blood culture.

- (e) Perinasal swab.

- (f) Cough swab.

- (g) Throat swab.

- (h) Bronchioalveolar lavage.

- (i) Urine for antigen detection.

- (j) Single clotted blood specimen.

It is advisable for writers of MCQs to use templates when constructing single-best-option MCQs in both basic sciences and physician (clinical) tasks. 48

Although the topics in basic sciences could be tested by recall-type MCQs, as was discussed earlier, case scenario questions are preferable. Therefore, the focus here will be on this type of question. The components of patient vignettes for possible inclusion were also described earlier.

Patient Vignettes

- A (patient description) has a (type of injury and location). Which of the following structures is most likely to be affected?

- A (patient description) has (history findings) and is taking (medications). Which of the following medications is the most likely cause of his (one history, physical examination or lab finding)?

- A (patient description) has (abnormal findings). Which [additional] finding would suggest/suggests a diagnosis of (disease 1) rather than (disease 2)?

- A (patient description) has (symptoms and signs). These observations suggest that the disease is a result of the (absence or presence) of which of the following (enzymes, mechanisms)?

- A (patient description) follows a (specific dietary regime); which of the following conditions is most likely to occur?

- A (patient description) has (symptoms, signs, or specific disease) and is being treated with (drug or drug class). The drug acts by inhibiting which of the following (functions, processes)?

- (Time period) after a (event such as trip or meal with certain foods), a (patient or group description) became ill with (symptoms and signs). Which of the following (organisms, agents) is most likely to be found on analysis of (food)?

- Following (procedure), a (patient description) develops (symptoms and signs). Laboratory findings show (findings). Which of the following is the most likely cause?
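Templates of this kind lend themselves to simple slot filling. As a rough sketch only, the helper below (`fill_vignette`, a made-up name) replaces each parenthesised slot in the first template with a supplied value. The patient details are invented purely for illustration and are not drawn from the source.

```python
import re

TEMPLATE = ("A (patient description) has a (type of injury and location). "
            "Which of the following structures is most likely to be affected?")


def fill_vignette(template: str, values: dict[str, str]) -> str:
    """Replace every '(slot name)' in the template with its supplied value."""
    def substitute(match: re.Match) -> str:
        slot = match.group(1)
        if slot not in values:
            # Fail loudly so a half-filled stem never reaches an exam paper.
            raise KeyError(f"no value supplied for slot: {slot!r}")
        return values[slot]

    return re.sub(r"\(([^()]+)\)", substitute, template)


# Hypothetical values, for illustration only.
stem = fill_vignette(TEMPLATE, {
    "patient description": "25-year-old man",
    "type of injury and location": "fracture of the midshaft of the humerus",
})
print(stem)
```

Raising an error on a missing slot is a deliberate choice here: an unfilled placeholder in a question stem is easier to catch at writing time than at review.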

Sample Lead-ins and Option Lists

Option sets could include sites of lesions; list of nerves; list of muscles; list of enzymes; list of hormones; types of cells; list of neurotransmitters; list of toxins, molecules, vessels, and spinal segments.

Option sets could include a list of laboratory results; list of additional physical signs; autopsy results; results of microscopic examination of fluids, muscle or joint tissue; DNA analysis results, and serum levels.

Option sets could include a list of underlying mechanisms of the disease; drugs or drug classes that might cause side effects; toxic agents; hemodynamic mechanisms, viruses, and metabolic defects.

Items Related to Physician Tasks 48

The classic diagnosis item begins with a patient description (including age, sex, symptoms and signs and their duration, history, physical findings on exam, findings on diagnostic and lab studies) and ends with a question:

- Which of the following is the most likely diagnosis?

- Which of the following is the most appropriate next step in diagnosis?

- Which of the following is most likely to confirm the diagnosis?

Management:

Questions to ask include:

- Which of the following is the most appropriate initial or next step in patient care?

- Which of the following is the most effective management?

- Which of the following is the most appropriate pharmacotherapy?

- Which of the following is the first priority in caring for this patient (e.g., in the emergency department)?

Health and Health Maintenance:

The following lead-ins are examples of those used in this category:

- Which of the following immunizations should be administered at this time?

- Which of the following is the most appropriate screening test?

- Which of the following tests would have predicted these findings?

- Which of the following is the most appropriate intervention?

- For which of the following conditions is the patient at greatest risk?

- Which of the following is most likely to have prevented this condition?

- Which of the following is the most appropriate next step in the management to prevent [morbidity/mortality/disability]?

- Which of the following should be recommended to prevent disability from this injury/condition?

- Early treatment with which of the following is most likely to have prevented this patient's condition?

- Supplementation with which of the following is most likely to have prevented this condition?

Mechanisms of Disease:

Begin your mechanism items with a clinical vignette of a patient and his/her symptoms, signs, history, laboratory results, etc., then ask a question such as one of these:

- Which of the following is the most likely explanation for these findings?

- Which of the following is the most likely location of the patient's lesion?

- Which of the following is the most likely pathogen?

- Which of the following findings is most likely to be increased/decreased?

- A biopsy is most likely to show which of the following?

ACKNOWLEDGMENT

The author wishes to gratefully acknowledge and express his gratitude to all professors who have provided him with comments, with special gratitude to Professor Eiad A. Al Faris, Professor Ahmed A. Abdel Hameed and Dr. Ibrahim A Alorainy (College of Medicine, King Saud University) for their support.

9 Tips and Rules to Follow when Constructing an Essay

When you’re a college student or a high schooler, knowing how to write a convincing essay will be something that’s expected from you.

However, essay writing is a skill that takes time, practice, and tons of research to be developed. It’s not something you’ll just know how to do by default.

So, in this article, we’ll provide you with some tips and rules that will help you start writing better essays and get higher grades as a result.

Without any further ado, let’s get right to it!

1. Understand the essay topic properly

Before you start writing, make sure you’ve understood the assignment as deeply as you possibly can. This includes looking for relevant sources and references and reading the recommended literature. From then on, you can go on to draft a hypothesis and your abstract.

Try not to stray too far from the topic – it’s one of the most common mistakes people make when writing an academic essay. It’s easy to get caught up in the literature, especially when your topic is something extremely specific.

2. Set the tone for your essay

Is the essay supposed to include your own ideas and opinions, or should it be based on strict academic sources only? Your professors will probably be very specific in that regard. Besides, the topic itself can be indicative of the tone that should be set in your writing.

For example, if you’re writing an essay for your English class, you’ll probably be allowed to “sprinkle in” some of your own thoughts and feelings, while the expectations for your essay about, let’s say, thermodynamics would be completely different.

3. Draft an outline

Once you start exploring the recommended literature, you’ll quickly be able to divide your essay into paragraphs or subtitles. Do this as early in the process as you can – it will help you stay on the right track.

Now, of course, making an outline before you’ve started writing can feel a bit restrictive. Try to look at it from a different point of view: you can still be flexible and change the “flow” of your essay easily, and these sections should be treated as nothing more than rough guidelines.

4. Begin with the body

Writing the introduction can be a daunting task, especially if you’re not entirely sure which direction to take with your essay. There’s nothing wrong with leaving it for later, especially if you’re working on a tight schedule. Once you’re halfway through the body, you’ll be able to finish the introduction in no time.

Keep in mind, though, that your introduction is one of the most important parts of your entire essay. It’s how the reader will decide whether your work is worth reading or not, so make it as convincing as possible.

5. Get professional writing help

As we’ve mentioned before, high school and college students are often expected to simply know how to write an academic essay. This approach is completely wrong: students should be taught proper techniques and rules before they’re expected to write one themselves.

If essay-writing tasks seem too overwhelming for you, don’t hesitate to talk to your professors and teachers and ask for some practical tips. If they’re not able to provide you with those, then you should seek assistance from professional writers such as homeworkhelpglobal.com .