Automated Essay Scoring with Discourse-Aware Neural Models

Farah Nadeem, Huy Nguyen, Yang Liu, Mari Ostendorf

[Automated Essay Scoring with Discourse-Aware Neural Models](https://aclanthology.org/W19-4450) (Nadeem et al., BEA 2019)

- Farah Nadeem, Huy Nguyen, Yang Liu, and Mari Ostendorf. 2019. Automated Essay Scoring with Discourse-Aware Neural Models. In Proceedings of the Fourteenth Workshop on Innovative Use of NLP for Building Educational Applications, pages 484–493, Florence, Italy. Association for Computational Linguistics.

Automatic Essay Scoring

Neural models for essay scoring for TOEFL essays (https://catalog.ldc.upenn.edu/LDC2014T06) and ASAP essays (http://www.kaggle.com/c/asap-aes). The code uses TensorFlow 1.12, CUDA 9.0, and cuDNN 7.1.4.

The models are pretrained using a discourse marker prediction task or a natural language inference task, or use pretrained text representations from BERT (https://arxiv.org/pdf/1810.04805.pdf) or USE (https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/46808.pdf).

Details can be found in the paper “Automated Essay Scoring with Discourse Aware Neural Models” F. Nadeem, H. Nguyen, Y. Liu and M. Ostendorf, Proceedings of the 14th Workshop on Innovative Use of NLP for Building Educational Applications at ACL 2019. Models can be downloaded at https://sites.google.com/site/nadeemf0755/research/automatic-essay-scoring

For both datasets, ASAP and TOEFL (LDC), the first step is to run the corresponding data parsing notebook, either ASAP_dataparse.ipynb or TOEFL_dataparse.ipynb. After that, the training or testing scripts can be run for all models except those that use BERT. For the BERT models, first run the BERT preprocessing notebook (BERT_text_representation.ipynb), which is based on the BERT serving client (https://pypi.org/project/bert-serving-client/).

For BERT_text_representation, please initialize the service with pooling_strategy set to NONE, so that the BERT output is not pooled across tokens.

WS 2019 · Farah Nadeem, Huy Nguyen, Yang Liu, Mari Ostendorf

Automated essay scoring systems typically rely on hand-crafted features to predict essay quality, but such systems are limited by the cost of feature engineering. Neural networks offer an alternative to feature engineering, but they typically require more annotated data. This paper explores network structures, contextualized embeddings and pre-training strategies aimed at capturing discourse characteristics of essays. Experiments on three essay scoring tasks show benefits from all three strategies in different combinations, with simpler architectures being more effective when less training data is available.

Neural Automated Essay Scoring and Coherence Modeling for Adversarially Crafted Input

We demonstrate that current state-of-the-art approaches to Automated Essay Scoring (AES) are not well-suited to capturing adversarially crafted input of grammatical but incoherent sequences of sentences. We develop a neural model of local coherence that can effectively learn connectedness features between sentences, and propose a framework for integrating and jointly training the local coherence model with a state-of-the-art AES model. We evaluate our approach against a number of baselines and experimentally demonstrate its effectiveness on both the AES task and the task of flagging adversarial input, further contributing to the development of an approach that strengthens the validity of neural essay scoring models.

1 Introduction

Automated Essay Scoring (AES) focuses on automatically analyzing the quality of writing and assigning a score to the text. Typically, AES models exploit a wide range of manually-tuned shallow and deep linguistic features (Shermis and Hammer, 2012; Burstein et al., 2003; Rudner et al., 2006; Williamson et al., 2012; Andersen et al., 2013). Recent advances in deep learning have shown that neural approaches to AES achieve state-of-the-art results (Alikaniotis et al., 2016; Taghipour and Ng, 2016), with the additional advantage of utilizing features that are automatically learned from the data. In order to facilitate interpretability of neural models, a number of visualization techniques have been proposed to identify textual (superficial) features that contribute to model performance (Alikaniotis et al., 2016).

To the best of our knowledge, however, no prior work has investigated the robustness of neural AES systems to adversarially crafted input that is designed to trick the model into assigning desired misclassifications; for instance, a high score to a low quality text. Examining and addressing such validity issues is critical and imperative for AES deployment. Previous work has primarily focused on assessing the robustness of “standard” machine learning approaches that rely on manual feature engineering; for example, Powers et al. (2002) and Yannakoudakis et al. (2011) have shown that such AES systems, unless explicitly designed to handle adversarial input, can be susceptible to subversion by writers who understand something of the systems’ workings and can exploit this to maximize their score.

In this paper, we make the following contributions:

- We examine the robustness of state-of-the-art neural AES models to adversarially crafted input, and specifically focus on input related to local coherence; that is, grammatical but incoherent sequences of sentences. (We use the terms ‘adversarially crafted input’ and ‘adversarial input’ to refer to text that is designed with the intention to trick the system. Coherence can be assessed locally in terms of transitions between adjacent sentences.) In addition to the superiority in performance of neural approaches against “standard” machine learning models (Alikaniotis et al., 2016; Taghipour and Ng, 2016), such a setup allows us to investigate their potential superiority / capacity in handling adversarial input without being explicitly designed to do so.

- We demonstrate that state-of-the-art neural AES is not well-suited to capturing adversarial input of grammatical but incoherent sequences of sentences, and develop a neural model of local coherence that can effectively learn connectedness features between sentences.

- A local coherence model is typically evaluated based on its ability to rank coherently ordered sequences of sentences higher than their incoherent / permuted counterparts (e.g., Barzilay and Lapata, 2008). We focus on a stricter evaluation setting in which the model is tested on its ability to rank coherent sequences of sentences higher than any incoherent / permuted set of sentences, and not just its own permuted counterparts. This supports a more rigorous evaluation that facilitates development of more robust models.

- We propose a framework for integrating and jointly training the local coherence model with a state-of-the-art AES model. We evaluate our approach against a number of baselines and experimentally demonstrate its effectiveness on both the AES task and the task of flagging adversarial input, further contributing to the development of an approach that strengthens AES validity.

At the outset, our goal is to develop a framework that strengthens the validity of state-of-the-art neural AES approaches with respect to adversarial input related to local aspects of coherence. For our experiments, we use the Automated Student Assessment Prize (ASAP) dataset (https://www.kaggle.com/c/asap-aes/), which contains essays written by students ranging from Grade 7 to Grade 10 in response to a number of different prompts (see Section 4).

2 Related Work

AES Evaluation against Adversarial Input. One of the earliest attempts at evaluating AES models against adversarial input was by Powers et al. (2002), who asked writing experts who had been briefed on how the e-Rater scoring system works to write essays to trick e-Rater (Burstein et al., 1998). The participants managed to fool the system into assigning higher-than-deserved grades, most notably by simply repeating a few well-written paragraphs several times. Yannakoudakis et al. (2011) and Yannakoudakis and Briscoe (2012) created and used an adversarial dataset of well-written texts and their random sentence permutations, which they released in the public domain, together with the grades assigned by a human expert to each piece of text. Unfortunately, however, the dataset is quite small, consisting of 12 texts in total. Higgins and Heilman (2014) proposed a framework for evaluating the susceptibility of AES systems to gaming behavior.

Neural AES Models. Alikaniotis et al. (2016) developed a deep bidirectional Long Short-Term Memory (LSTM) (Hochreiter and Schmidhuber, 1997) network, augmented with score-specific word embeddings that capture both contextual and usage information for words. Their approach outperformed traditional feature-engineered AES models on the ASAP dataset. Taghipour and Ng (2016) investigated various recurrent and convolutional architectures on the same dataset and found that an LSTM layer followed by a Mean over Time operation achieves state-of-the-art results. Dong and Zhang (2016) showed that a two-layer Convolutional Neural Network (CNN) outperformed other baselines (e.g., Bayesian Linear Ridge Regression) on both in-domain and domain-adaptation experiments on the ASAP dataset.

Neural Coherence Models. A number of approaches have investigated neural models of coherence on news data. Li and Hovy (2014) used a window approach where a sliding kernel of weights was applied over neighboring sentence representations to extract local coherence features. The sentence representations were constructed with recursive and recurrent neural methods. Their approach outperformed previous methods on the task of selecting maximally coherent sentence orderings from sets of candidate permutations (Barzilay and Lapata, 2008). Lin et al. (2015) developed a hierarchical Recurrent Neural Network (RNN) for document modeling. Among others, they looked at capturing coherence between sentences using a sentence-level language model, and evaluated their approach on the sentence ordering task. Tien Nguyen and Joty (2017) built a CNN over entity grid representations, and trained the network in a pairwise ranking fashion. Their model outperformed other graph-based and distributed sentence models.

We note that our goal is not to identify the “best” model of local coherence on randomly permuted grammatical sentences in the domain of AES, but rather to propose a framework that strengthens the validity of AES approaches with respect to adversarial input related to local aspects of coherence.

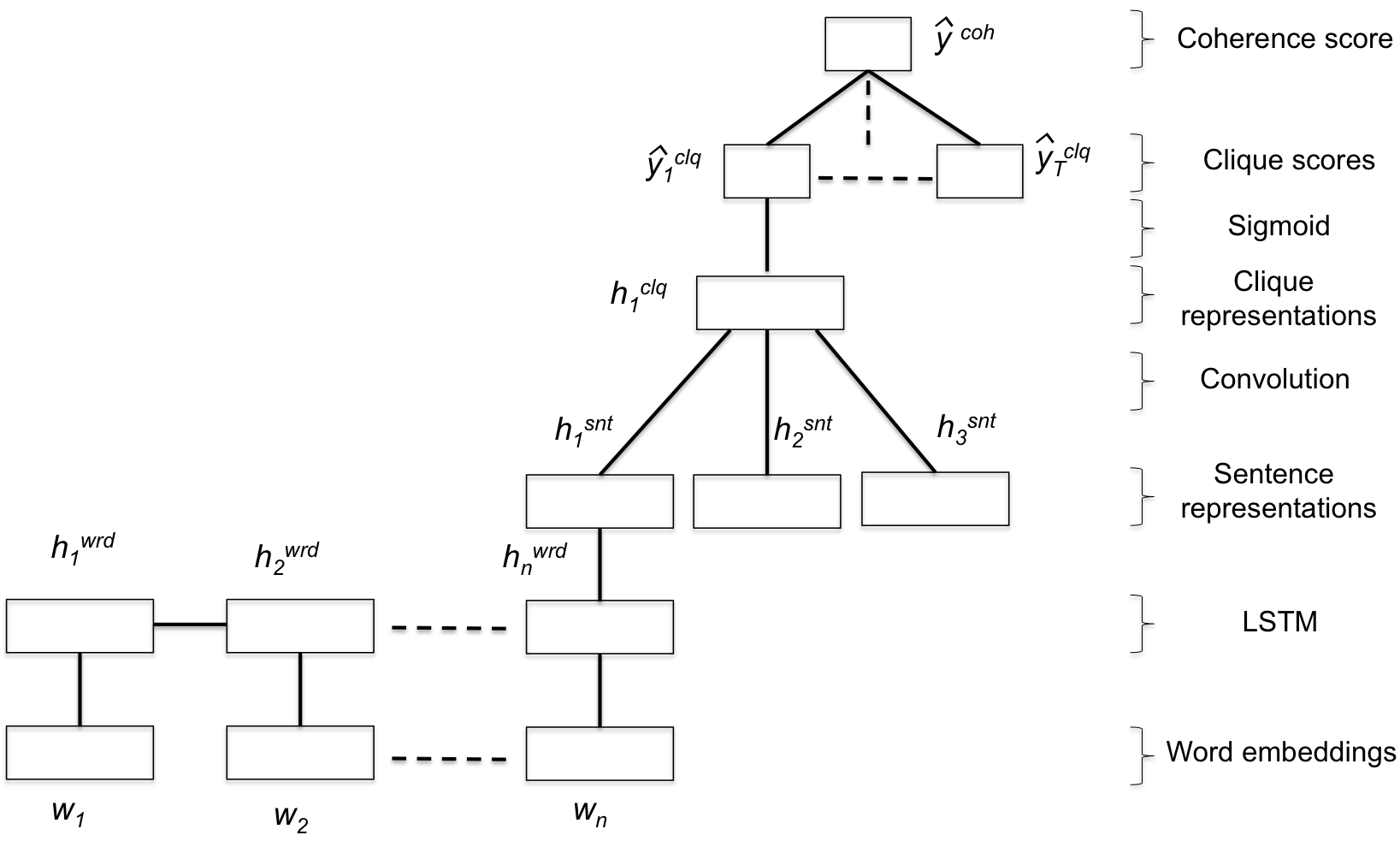

3.1 Local Coherence (LC) Model

Our local coherence model is inspired by the model of Li and Hovy (2014), which uses a window approach to evaluate coherence. (We note that Li and Jurafsky (2017) also present an extended version of the work by Li and Hovy (2014), evaluated on different domains.) Figure 1 presents a visual representation of the network architecture, which is described below in detail.

Sentence Representation. This part of the model composes the sentence representations that can be utilized to learn connectedness features between sentences. Each word in the text is initialized with a $k$-dimensional vector $w$ from a pre-trained word embedding space. Unlike Li and Hovy (2014), we use an LSTM layer to capture sentence compositionality (LSTMs have been shown to produce state-of-the-art results in AES; Alikaniotis et al., 2016; Taghipour and Ng, 2016). The LSTM maps the words in a sentence $s=\{w_{1},w_{2},...,w_{n}\}$ at each time step $t$ ($w_{t}$, where $t\leq n$) onto a fixed-size vector $h_{t}^{wrd}\in\mathbb{R}^{d_{lstm}}$ (where $d_{lstm}$ is a hyperparameter). The sentence representation $h^{snt}$ is then the representation of the last word in the sentence:

$$h^{snt} = h_{n}^{wrd} \qquad (1)$$

Clique Representation. Each window of sentences in a text represents a clique $q=\{s_{1},...,s_{m}\}$, where $m$ is a hyperparameter indicating the window size. A clique is assigned a score of 1 if it is coherent (i.e., the sentences are not shuffled) and 0 if it is incoherent (i.e., the sentences are shuffled). The clique embedding is created by concatenating the representations of the sentences it contains according to Equation 1. A convolutional operation, using a filter $W^{clq}\in\mathbb{R}^{m\times d_{lstm}\times d_{cnn}}$ where $d_{cnn}$ denotes the convolutional output size, is then applied to the clique embedding, followed by a non-linearity in order to extract the clique representation $h^{clq}\in\mathbb{R}^{d_{cnn}}$:

$$h_{j}^{clq} = f\left(\left[h_{j}^{snt};\ldots;h_{j+m-1}^{snt}\right] * W^{clq}\right) \qquad (2)$$

Here, $f$ is the non-linearity, $j\in\{1,...,N-m+1\}$, $N$ is the number of sentences in the text, and $*$ is the linear convolutional operation.

Scoring. The cliques’ predicted scores are calculated via a linear operation followed by a sigmoid function to project the predictions to a $[0,1]$ probability space:

$$\hat{y}_{j}^{clq} = \mathrm{sigmoid}\left(h_{j}^{clq}\cdot V\right) \qquad (3)$$

where $V\in\mathbb{R}^{d_{cnn}}$ is a learned weight. The network optimizes its parameters to minimize the negative log-likelihood of the cliques’ gold scores $y^{clq}$, given the network’s predicted scores:

$$L = -\sum_{j=1}^{T}\left[y_{j}^{clq}\log\hat{y}_{j}^{clq} + \left(1-y_{j}^{clq}\right)\log\left(1-\hat{y}_{j}^{clq}\right)\right] \qquad (4)$$

where $T=N-m+1$ (number of cliques in text). The final prediction of the text’s coherence score is calculated as the average of all of its clique scores:

$$\hat{y}^{coh} = \frac{1}{T}\sum_{j=1}^{T}\hat{y}_{j}^{clq} \qquad (5)$$

This is in contrast to Li and Hovy (2014), who multiply all the estimated clique scores to generate the overall document score. This means that if only one clique is misclassified as incoherent and assigned a score of 0, the whole document is regarded as incoherent. We aim to soften this assumption and use the average instead to allow for a more fine-grained modeling of degrees of coherence. (Our experiments showed that using the multiplicative approach gives poor results, as presented in Section 6.)
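The difference between averaging and multiplying clique scores can be illustrated with a small sketch. It is not the authors' implementation: the function names and the example scores are hypothetical, and the clique scores stand in for the sigmoid outputs of the LC model.

```python
import math


def clique_windows(sentences, m=3):
    """Return the T = N - m + 1 sliding windows (cliques) of m adjacent sentences."""
    n = len(sentences)
    return [sentences[j:j + m] for j in range(n - m + 1)]


def average_score(clique_scores):
    """Document coherence as the mean of the clique scores (the averaged variant)."""
    return sum(clique_scores) / len(clique_scores)


def product_score(clique_scores):
    """Document coherence as the product of the clique scores (the multiplicative variant)."""
    return math.prod(clique_scores)


# Hypothetical five-sentence text, window size m = 3, so T = 3 cliques.
sentences = ["s1", "s2", "s3", "s4", "s5"]
cliques = clique_windows(sentences, m=3)

# Hypothetical predicted clique scores; the last clique is judged incoherent.
scores = [0.9, 0.9, 0.0]
print(len(cliques), average_score(scores), product_score(scores))
```

With these made-up scores, the product collapses to zero because of a single clique, while the average still reflects that most of the text was judged coherent.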

We train the LC model on synthetic data automatically generated by creating random permutations of highly-scored ASAP essays (Section 4 ).
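As a rough sketch of how such synthetic data could be produced, the snippet below shuffles the sentences of an essay to create permuted copies. The helper name, the fixed seed, and the choice to skip duplicates and the original order are assumptions made for illustration; the text only states that sentences are randomly shuffled.

```python
import random


def permute_essay(sentences, n_permutations=10, seed=0, max_attempts=1000):
    """Create up to n_permutations distinct random sentence orderings of an essay.

    Assumption: orderings identical to the original or to an earlier
    permutation are skipped; the source does not say how these are handled.
    """
    rng = random.Random(seed)
    original = list(sentences)
    seen = set()
    permutations = []
    attempts = 0
    while len(permutations) < n_permutations and attempts < max_attempts:
        attempts += 1
        shuffled = original[:]
        rng.shuffle(shuffled)
        key = tuple(shuffled)
        if shuffled != original and key not in seen:
            seen.add(key)
            permutations.append(shuffled)
    return permutations


# Hypothetical essay already split into sentences.
essay = ["First point.", "Second point.", "An example.", "A contrast.", "Conclusion."]
synthetic = permute_essay(essay, n_permutations=10)
print(len(synthetic))
```

Each permuted copy keeps exactly the same sentences as the original, so it stays grammatical at the sentence level while losing the original ordering.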

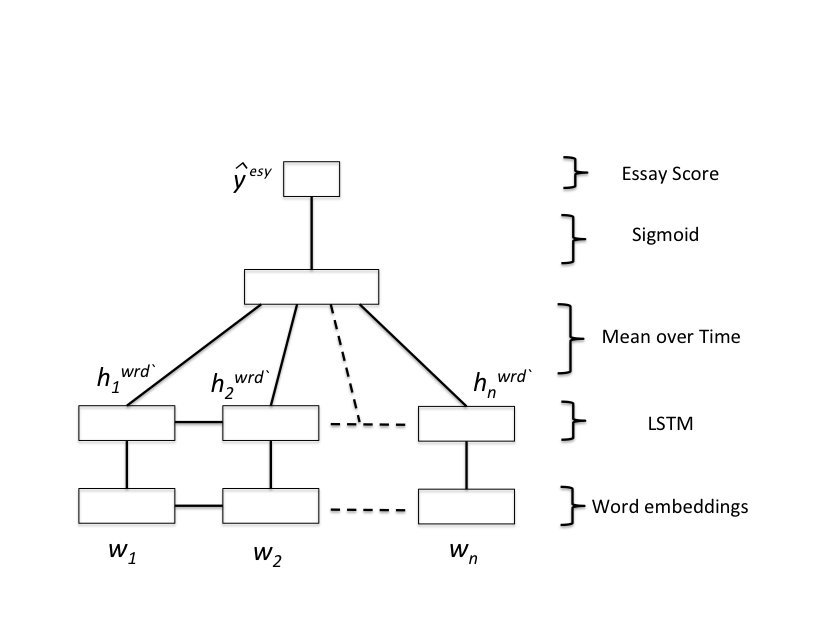

3.2 LSTM AES Model

We utilize the LSTM AES model of Taghipour and Ng (2016) shown in Figure 2 (LSTM T&N), which is trained on the ASAP dataset and yields state-of-the-art results on it. The model is a one-layer LSTM that encodes the sequence of words in an essay, followed by a Mean over Time operation that averages the word representations generated from the LSTM layer. (We note that the authors achieve slightly higher results when averaging ensemble results of their LSTM model together with CNN models. We use their main LSTM model which, for the purposes of our experiments, does not affect our conclusions.)

3.3 Combined Models

We propose a framework for integrating the LSTM T&N model with the Local Coherence (LC) one. Our goal is to have a robust AES system that is able to correctly flag adversarial input while maintaining a high performance on essay scoring.

3.3.1 Baseline: Vector Concatenation (VecConcat)

The baseline model simply concatenates the output representations of the pre-prediction layers of the trained LSTM T&N and LC networks, and feeds the resulting vector to a machine learning algorithm (e.g., Support Vector Machines, SVMs) to predict the final overall score. In the LSTM T&N model, the output representation (hereafter referred to as the essay representation) is the vector produced from the Mean Over Time operation; in the LC model, we use the generated clique representations (Figure 1) aggregated with a max operation (hereafter referred to as the clique representation). We note that max aggregation outperformed other aggregation functions. Although the LC model is trained on permuted ASAP essays (Section 4) and the LSTM T&N model on the original ASAP data, essay and clique representations are generated for both the ASAP and the synthetic essays containing reordered sentences.
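The feature construction for this baseline can be sketched as follows. The function names and the toy vectors are hypothetical, and the max is assumed to be taken element-wise across an essay's clique representations.

```python
def max_aggregate(clique_vectors):
    """Element-wise max over the clique representations of one essay."""
    return [max(dims) for dims in zip(*clique_vectors)]


def vec_concat(essay_vector, clique_vectors):
    """Concatenate the essay representation with the max-aggregated clique representation."""
    return list(essay_vector) + max_aggregate(clique_vectors)


# Toy two-dimensional vectors standing in for the real network outputs.
essay_vector = [1.0, 2.0]
clique_vectors = [[0.1, 0.5], [0.3, 0.2]]
features = vec_concat(essay_vector, clique_vectors)
print(features)
```

The resulting feature vector is what would be passed to the downstream regressor or classifier.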

3.3.2 Joint Learning

Instead of training the LSTM T&N and LC models separately and then concatenating their output representations, we propose a framework where both models are trained jointly, and where the final network then has the capacity to predict AES scores and flag adversarial input (Figure 3).

Specifically, the LSTM T&N and LC networks predict an essay and coherence score respectively (as described earlier), but now they both share the word embedding layer. The training set is the aggregate of both the ASAP and permuted data to allow the final network to learn from both simultaneously. Concretely, during training, for the ASAP essays, we assume that both the gold essay and coherence scores are the same and equal to the gold ASAP scores. This is not too strict an assumption, as overall scores of writing competence tend to correlate highly with overall coherence. For the synthetic essays, we set the “gold” coherence scores to zero, and the “gold” essay scores to those of their original non-permuted counterparts in the ASAP dataset. The intuition is as follows: firstly, setting the “gold” essay scores of synthetic essays to zero would bias the model into over-predicting zeros; secondly, our approach reinforces the LSTM T&N’s inability to detect adversarial input, and forces the overall network to rely on the LC branch to identify such input. We note that, during training, the scores are mapped to a range between 0 and 1 (similarly to Taghipour and Ng, 2016), and then scaled back to their original range during evaluation.
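The target assignment described above can be sketched in a few lines. The function names and the score range (2 to 12) are hypothetical, and min-max scaling is assumed for the mapping to the 0 to 1 range; the zero coherence target for synthetic essays is taken to be zero on that scaled range.

```python
def normalise(score, low, high):
    """Map a raw score to [0, 1] (assumed min-max scaling)."""
    return (score - low) / (high - low)


def rescale(value, low, high):
    """Map a [0, 1] prediction back to the original score range."""
    return value * (high - low) + low


def training_targets(essay_score, is_permuted, low, high):
    """Return (gold essay target, gold coherence target) for one training essay.

    For a permuted essay, essay_score is the score of its original,
    non-permuted counterpart, and the coherence target is zero.
    """
    essay_target = normalise(essay_score, low, high)
    coherence_target = 0.0 if is_permuted else essay_target
    return essay_target, coherence_target


# Hypothetical prompt with scores between 2 and 12.
original_targets = training_targets(10, is_permuted=False, low=2, high=12)
permuted_targets = training_targets(10, is_permuted=True, low=2, high=12)
print(original_targets, permuted_targets)
```

The two branches therefore see identical targets on original essays and diverging targets on permuted ones, which is what later allows the score difference to be used for detection.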

The two sub-networks are trained together and the error gradients are back-propagated to the word embeddings. To detect whether an essay is adversarial, we further augment the system with an adversarial text detection component that simply captures adversarial input based on the difference between the predicted essay and coherence scores. Specifically, we use our development set to learn a threshold for this difference, and flag an essay as adversarial if the difference is larger than the threshold. We experimentally demonstrate that this approach enables the model to perform well on both original ASAP and synthetic essays. During model evaluation, the texts flagged as adversarial by the model are assigned a score of zero, while the rest are assigned the predicted essay score ($\hat{y}^{esy}$ in Figure 3).

4 Data and Evaluation

To create adversarial input, we select high scoring essays per prompt (given a pre-defined score threshold, Table 1) that are assumed coherent, and create 10 permutations per essay by randomly shuffling its sentences. (We note that this threshold is different from the one mentioned in Section 3.3.2.) In the joint learning setup, we augment the original ASAP dataset with a subset of the synthetic essays. Specifically, we randomly select 4 permutations per essay to include in the training set, but include all 10 permutations in the test set. This is primarily done to keep the data balanced: initial experiments showed that training with all 10 permutations per essay harms AES performance, but has negligible effect on adversarial input detection. Table 1 presents the details of the datasets.

We test performance on the ASAP dataset using Quadratic Weighted Kappa (QWK), which was the official evaluation metric in the ASAP competition, while we test performance on the synthetic dataset using pairwise ranking accuracy (PRA) between an original non-permuted essay and its permuted counterparts. PRA is typically used as an evaluation metric on coherence assessment tasks in other domains (Barzilay and Lapata, 2008), and is based on the fraction of correct pairwise rankings in the test data (i.e., a coherent essay should be ranked higher than its permuted counterpart). Herein, we extend this metric and furthermore evaluate the models by comparing each original essay to all adversarial / permuted essays in the test data, and not just its own permuted counterparts. We refer to this metric as total pairwise ranking accuracy (TPRA).
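The two ranking metrics can be made concrete with a minimal sketch. The data layout (one model score per original essay plus a list of scores for its permutations) and the treatment of ties as incorrect are assumptions for illustration, and the scores are made up.

```python
def pra(groups):
    """Pairwise ranking accuracy: each original vs. its own permutations.

    groups is a list of (original_score, [permuted_scores]) pairs.
    """
    correct = total = 0
    for original, permuted in groups:
        for p in permuted:
            correct += original > p  # ties count as incorrect (assumption)
            total += 1
    return correct / total


def tpra(groups):
    """Total pairwise ranking accuracy: each original vs. every permuted essay."""
    all_permuted = [p for _, permuted in groups for p in permuted]
    correct = total = 0
    for original, _ in groups:
        for p in all_permuted:
            correct += original > p
            total += 1
    return correct / total


# Made-up scores: two original essays, two permutations each.
groups = [(0.9, [0.2, 0.4]), (0.3, [0.1, 0.2])]
print(pra(groups), tpra(groups))
```

In this toy case every original outranks its own permutations, so PRA is 1.0, but the second original (0.3) is outranked by a permutation of the first essay (0.4), so TPRA is 7/8. This is the sense in which TPRA is the stricter setting.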

5 Model Parameters and Baselines

Coherence models. We train and test the LC model described in Section 3.1 on the synthetic dataset and evaluate it using PRA and TPRA. During pre-processing, words are lowercased and initialized with pre-trained word embeddings (Zou et al., 2013). Words that occur only once in the training set are mapped to a special UNK embedding. All network weights are initialized to values drawn randomly from a uniform distribution with scale = 0.05, and biases are initialized to zeros. We apply a learning rate of 0.001 and RMSProp (Tieleman and Hinton, 2012) for optimization. A size of 100 is chosen for the hidden layers ($d_{lstm}$ and $d_{cnn}$), and the convolutional window size ($m$) is set to 3. Dropout (Srivastava et al., 2014) is applied for regularization to the output of the convolutional operation with probability 0.3. The network is trained for 60 epochs and performance is monitored on the development sets; we select the model that yields the highest PRA value. Our implementation is available at https://github.com/Youmna-H/Coherence_AES

We use as a baseline the LC model that is based on the multiplication of the clique scores (similarly to Li and Hovy, 2014), and compare the results (LC mul) to our averaged approach. As another baseline, we use the entity grid (EGrid) (Barzilay and Lapata, 2008) that models transitions between sentences based on sequences of entity mentions labeled with their grammatical role. EGrid has been shown to give competitive results on similar coherence tasks in other domains. Using the Brown Coherence Toolkit (Eisner and Charniak, 2011; https://bitbucket.org/melsner/browncoherence), we construct the entity transition probabilities with length = 3 and salience = 2. The transition probabilities are then used as features that are fed as input to an SVM classifier with an RBF kernel and penalty parameter $C=1.5$ to predict a coherence score.

LSTM T&N model. We replicate and evaluate the LSTM model of Taghipour and Ng (2016) (https://github.com/nusnlp/nea) on ASAP and our synthetic data.

Combined models. After training the LC and LSTM T&N models, we concatenate their output vectors to build the Baseline: Vector Concatenation (VecConcat) model as described in Section 3.3.1, and train a Kernel Ridge Regression model. We use scikit-learn with the following parameters: alpha=0.1, coef0=1, degree=3, gamma=0.1, kernel=‘rbf’.

The Joint Learning network is trained on both the ASAP and synthetic dataset as described in Section 3.3.2. Adversarial input is detected based on an estimated threshold on the difference between the predicted essay and coherence scores (Figure 3). The threshold value is empirically calculated on the development sets, and set to be the average difference between the predicted essay and coherence scores in the synthetic data:

$$\mathit{threshold} = \frac{1}{M}\sum_{i=1}^{M}\left(\hat{y}_{i}^{esy} - \hat{y}_{i}^{coh}\right) \qquad (6)$$

where $M$ is the number of synthetic essays in the development set, and $\hat{y}_{i}^{esy}$ and $\hat{y}_{i}^{coh}$ are the predicted essay and coherence scores of the $i$-th synthetic essay.
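The threshold estimation and the flagging rule can be sketched together. The function names and the development-set predictions below are hypothetical; the logic follows the description in the text (mean difference on synthetic development essays, flag when the difference exceeds it, and assign a score of zero to flagged essays).

```python
def estimate_threshold(dev_essay_scores, dev_coherence_scores):
    """Average difference between predicted essay and coherence scores
    over the synthetic essays in the development set."""
    diffs = [e - c for e, c in zip(dev_essay_scores, dev_coherence_scores)]
    return sum(diffs) / len(diffs)


def is_adversarial(essay_score, coherence_score, threshold):
    """Flag an essay when the score difference is larger than the threshold."""
    return essay_score - coherence_score > threshold


def final_score(essay_score, coherence_score, threshold):
    """Flagged essays receive zero; others keep the predicted essay score."""
    if is_adversarial(essay_score, coherence_score, threshold):
        return 0.0
    return essay_score


# Made-up predictions for three synthetic development essays.
threshold = estimate_threshold([0.8, 0.9, 0.7], [0.2, 0.3, 0.4])
print(threshold, final_score(0.9, 0.1, threshold), final_score(0.8, 0.7, threshold))
```

An essay with a high predicted essay score but a low coherence score is flagged, whereas one whose two scores agree passes through unchanged.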

We furthermore evaluate a baseline where the joint model is trained without sharing the word embedding layer between the two sub-models, and report the effect on performance (Joint Learning no_layer_sharing). Finally, we evaluate a baseline where for the joint model we set the “gold” essay scores of synthetic data to zero (Joint Learning zero_score), as opposed to our proposed approach of setting them to be the same as the score of their original non-permuted counterpart in the ASAP dataset.

The state-of-the-art LSTM T&N model, as shown in Table 2, gives the highest performance on the ASAP data, but is not robust to adversarial input and therefore unable to capture aspects of local coherence, with performance on synthetic data that is less than 0.5. On the other hand, both our LC model and the EGrid significantly outperform LSTM T&N on synthetic data. While EGrid is slightly better in terms of TPRA compared to LC (0.706 vs. 0.689), LC is substantially better on PRA (0.946 vs. 0.718). This could be attributed to the fact that LC is optimised using PRA on the development set. The LC mul variation has a performance similar to LC in terms of PRA, but is significantly worse in terms of TPRA, which further supports the use of our proposed LC model.

Our Joint Learning model manages to exploit the best of both the LSTM T&N and LC approaches: performance on synthetic data is significantly better compared to LSTM T&N (and in particular gives the highest TPRA value on synthetic data compared to all models), while maintaining the high performance of LSTM T&N on ASAP data (performance drops slightly from 0.739 to 0.724, though not significantly). When the Joint Learning model is compared against the VecConcat baseline, we can again confirm its superiority on both datasets, giving significant differences on synthetic data.

7 Further Analysis

We furthermore evaluate the performance of the Joint Learning model when trained using different parameters (Table 3). When assigning “gold” essay scores of zero to adversarial essays (Joint Learning zero_score), AES performance on the ASAP data drops to 0.449 QWK, and the difference is statistically significant. This is partly explained by the fact that the model, given the training data gold scores, is biased towards predicting zeros. (Note that we do not report performance of this model on synthetic data. In this case, the thresholding technique cannot be applied as both sub-models are trained with the same “gold” scores and thus have very similar predictions on synthetic data.) The result, however, further supports our hypothesis that forcing the Joint Learning model to rely on the coherence branch for adversarial input detection further improves performance. Importantly, we need something more than just training a state-of-the-art AES model (in our case, LSTM T&N) on both original and synthetic data.

We also compare Joint Learning to Joint Learning no_layer_sharing, in which the two sub-models are trained separately without sharing the first layer of word representations. While the difference in performance on the ASAP test data is small, the differences are much larger on synthetic data, and are significant in terms of TPRA. By examining the false positives of both systems (i.e., the coherent essays that are misclassified as adversarial), we find that when the embeddings are not shared, the system is biased towards flagging long essays as adversarial, while interestingly, this bias is not present when the embeddings are shared. For instance, the average number of words in the false positive cases of Joint Learning no_layer_sharing on the ASAP data is 426, and the average number of sentences is 26; on the other hand, with the Joint Learning model, these numbers are 340 and 19 respectively. (Adversarial texts in the synthetic dataset have an average number of 306 words and an average number of 18 sentences.) A possible explanation for this is that training the words with more contextual information (in our case, via embeddings sharing) is advantageous for longer documents with a large number of sentences.

Ideally, no essays in the ASAP data should be flagged as adversarial as they were not designed to trick the system. We calculate the number of ASAP texts incorrectly detected as adversarial, and find that the average error in the Joint Learning model is quite small (0.382%). This increases with Joint Learning no_layer_sharing (1%), although it still remains relatively small.

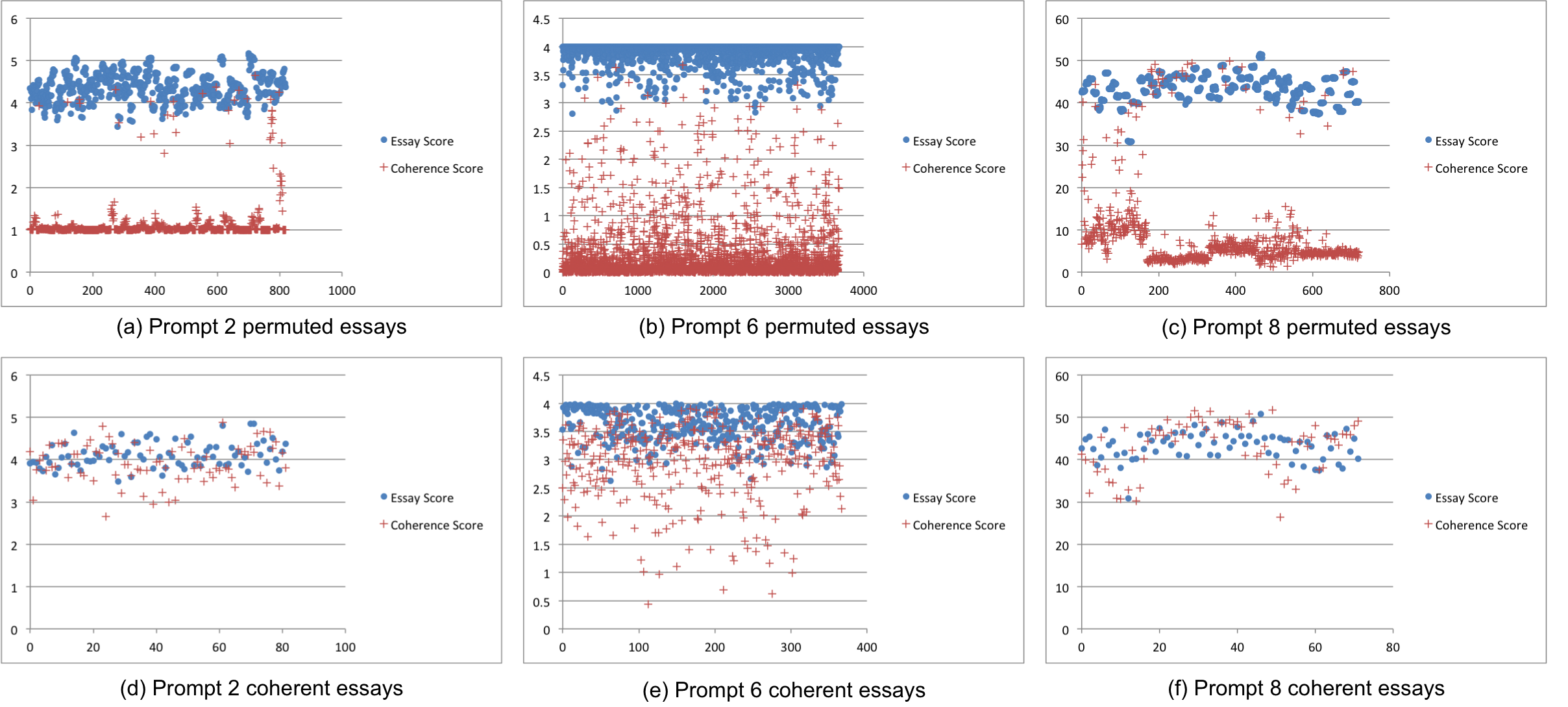

We further investigate the essay and coherence scores predicted by our best model, Joint Learning, for the permuted and original ASAP essays in the synthetic dataset (for which we assume that the selected, highly scored ASAP essays are coherent, Section 4), and present results for 3 randomly selected prompts in Figure 4. The graphs show a large difference between predicted essay and coherence scores on permuted / adversarial data ((a), (b) and (c)), where the system predicts high essay scores for permuted texts (as a result of our training strategy), but low coherence scores (as predicted by the LC model). For highly scored ASAP essays ((d), (e) and (f)), the system predictions are less varied, which contributes positively to the performance of our proposed approach.

8 Conclusion

We evaluated the robustness of state-of-the-art neural AES approaches on adversarial input of grammatical but incoherent sequences of sentences, and demonstrated that they are not well-suited to capturing such cases. We created a synthetic dataset of such adversarial examples and trained a neural local coherence model that is able to discriminate between such cases and their coherent counterparts. We furthermore proposed a framework for jointly training the coherence model with a state-of-the-art neural AES model, and introduced an effective strategy for assigning “gold” scores to adversarial input during training. When compared against a number of baselines, our joint model achieves better performance on randomly permuted sentences, while maintaining a high performance on the AES task. Among others, our results demonstrate that it is not enough to simply (re-)train neural AES models with adversarially crafted input, nor is it sufficient to rely on “simple” approaches that concatenate output representations from different neural models. Finally, our framework strengthens the validity of neural AES approaches with respect to adversarial input designed to trick the system.

Acknowledgements

We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan X Pascal GPU used for this research. We are also grateful to Cambridge Assessment for their support of the ALTA Institute. Special thanks to Christopher Bryant and Marek Rei for their valuable feedback.

- Alikaniotis et al. (2016) Dimitrios Alikaniotis, Helen Yannakoudakis, and Marek Rei. 2016. Automatic text scoring using neural networks. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics, pages 715–725.

- Andersen et al. (2013) Øistein E Andersen, Helen Yannakoudakis, Fiona Barker, and Tim Parish. 2013. Developing and testing a self-assessment and tutoring system. In Proceedings of the Eighth Workshop on Innovative Use of NLP for Building Educational Applications, BEA. Association for Computational Linguistics, pages 32–41.

- Barzilay and Lapata (2008) Regina Barzilay and Mirella Lapata. 2008. Modeling local coherence: An entity-based approach. Computational Linguistics 34(1):1–34.

- Burstein et al. (2003) Jill Burstein, Martin Chodorow, and Claudia Leacock. 2003. Criterion: Online essay evaluation: An application for automated evaluation of student essays. In Proceedings of the fifteenth annual conference on innovative applications of artificial intelligence. American Association for Artificial Intelligence, pages 3–10.

- Burstein et al. (1998) Jill Burstein, Karen Kukich, Susanne Wolff, Chi Lu, Martin Chodorow, Lisa Braden-Harder, and Mary Dee Harris. 1998. Automated scoring using a hybrid feature identification technique. In Proceedings of the 36th Annual Meeting of the Association for Computational Linguistics and 17th International Conference on Computational Linguistics - Volume 1. pages 206–210.

- Dong and Zhang (2016) Fei Dong and Yue Zhang. 2016. Automatic features for essay scoring: an empirical study. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing. pages 1072–1077.

- Eisner and Charniak (2011) Micha Eisner and Eugene Charniak. 2011. Extending the entity grid with entity-specific features. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies: Short Papers - Volume 2. Association for Computational Linguistics, pages 125–129.

- Higgins and Heilman (2014) Derrick Higgins and Michael Heilman. 2014. Managing what we can measure: Quantifying the susceptibility of automated scoring systems to gaming behavior. Educational Measurement: Issues and Practice 33:36–46.

- Hochreiter and Schmidhuber (1997) Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural computation 9(8):1735--1780.

- Li and Hovy (2014) Jiwei Li and Eduard Hovy. 2014. A model of coherence based on distributed sentence representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) . pages 2039--2048.

- Li and Jurafsky (2017) Jiwei Li and Dan Jurafsky. 2017. Neural net models for open-domain discourse coherence. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing . pages 198--209.

- Lin et al. (2015) Rui Lin, Shujie Liu, Muyun Yang, Mu Li, Ming Zhou, and Sheng Li. 2015. Hierarchical recurrent neural network for document modeling. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing . pages 899--907.

- Powers et al. (2002) Donald E. Powers, Jill Burstein, Martin Chodorow, Mary E. Fowles, and Karen Kukich. 2002. Stumping e-rater: challenging the validity of automated essay scoring. Computers in Human Behavior 18(2):103--134.

- Rudner et al. (2006) LM Rudner, Veronica Garcia, and Catherine Welch. 2006. An evaluation of IntelliMetric essay scoring system. The Journal of Technology, Learning, and Assessment 4(4):1 -- 22.

- Shermis and Hammer (2012) M Shermis and B Hammer. 2012. Contrasting state-of-the-art automated scoring of essays: analysis. In Annual National Council on Measurement in Education Meeting . pages 1--54.

- Srivastava et al. (2014) Nitish Srivastava, Geoffrey E Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. 2014. Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research 15(1):1929--1958.

- Taghipour and Ng (2016) Kaveh Taghipour and Hwee Tou Ng. 2016. A neural approach to automated essay scoring. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing . Association for Computational Linguistics, pages 1882--1891.

- Tieleman and Hinton (2012) Tijmen Tieleman and Geoffrey Hinton. 2012. Lecture 6.5 - rmsprop. Technical report .

- Tien Nguyen and Joty (2017) Dat Tien Nguyen and Shafiq Joty. 2017. A neural local coherence model. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) . Association for Computational Linguistics, pages 1320--1330.

- Williamson et al. (2012) DM Williamson, Xiaoming Xi, and FJ Breyer. 2012. A framework for evaluation and use of automated scoring. Educational Measurement: Issues and Practice 31(1):2--13.

- Yannakoudakis and Briscoe (2012) Helen Yannakoudakis and Ted Briscoe. 2012. Modeling coherence in esol learner texts. In Proceedings of the Seventh Workshop on Building Educational Applications Using NLP . Association for Computational Linguistics, pages 33--43.

- Yannakoudakis et al. (2011) Helen Yannakoudakis, Ted Briscoe, and Ben Medlock. 2011. A new dataset and method for automatically grading esol texts. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1 . Association for Computational Linguistics, pages 180--189.

- Zou et al. (2013) Will Y Zou, Richard Socher, Daniel M Cer, and Christopher D Manning. 2013. Bilingual word embeddings for phrase-based machine translation. In EMNLP . pages 1393--1398.

Robust Neural Automated Essay Scoring Using Item Response Theory

- Conference paper

- First Online: 30 June 2020

- Masaki Uto (ORCID: 0000-0002-9330-5158)

- Masashi Okano

Part of the book series: Lecture Notes in Computer Science (LNAI, volume 12163)

Included in the following conference series:

- International Conference on Artificial Intelligence in Education

Automated essay scoring (AES) is the task of automatically assigning scores to essays as an alternative to human grading. Conventional AES methods typically rely on manually tuned features, which are laborious to effectively develop. To obviate the need for feature engineering, many deep neural network (DNN)-based AES models have been proposed and have achieved state-of-the-art accuracy. DNN-AES models require training on a large dataset of graded essays. However, assigned grades in such datasets are known to be strongly biased due to effects of rater bias when grading is conducted by assigning a few raters in a rater set to each essay. Performance of DNN models rapidly drops when such biased data are used for model training. In the fields of educational and psychological measurement, item response theory (IRT) models that can estimate essay scores while considering effects of rater characteristics have recently been proposed. This study therefore proposes a new DNN-AES framework that integrates IRT models to deal with rater bias within training data. To our knowledge, this is a first attempt at addressing rating bias effects in training data, which is a crucial but overlooked problem.

- Deep neural networks

- Item response theory

- Automated essay scoring

1 Introduction

In various assessment fields, essay-writing tests have attracted much attention as a way to measure practical and higher-order abilities such as logical thinking, critical reasoning, and creative thinking [ 1 , 4 , 13 , 18 , 33 , 35 ]. In essay-writing tests, examinees write essays about a given topic, and human raters grade those essays based on a scoring rubric. However, grading can be an expensive and time-consuming process when there are many examinees [ 13 , 16 ]. In addition, human grading is not always sufficiently accurate even when a rubric is used because assigned scores depend strongly on rater characteristics such as strictness and inconsistency [ 9 , 11 , 15 , 26 , 31 , 43 ]. Automated essay scoring (AES), which utilizes natural language processing (NLP) and machine learning techniques to automatically grade essays, is one approach toward resolving this problem.

Many AES methods have been developed over the past decades, and can generally be classified as feature-engineering or automatic feature extraction approaches [ 13 , 16 ].

The feature-engineering approach predicts scores using manually tuned features such as essay length and number of spelling errors (e.g., [ 3 , 5 , 22 , 28 ]). Advantages of this approach include interpretability and explainability. However, these approaches generally require extensive feature redesigns to achieve high prediction accuracy.

To obviate the need for feature engineering, automatic feature extraction based on deep neural networks (DNNs) has recently attracted attention. Many DNN-AES models have been proposed in the last few years (e.g., [ 2 , 6 , 10 , 14 , 23 , 24 , 27 , 37 , 47 ]) and have achieved state-of-the-art accuracy. This approach requires a large dataset of essays graded by human raters as training data. Essay grading tasks are generally shared among many raters, assigning a few raters to each essay to lower assessment burdens. However, assigned scores are known to be strongly biased due to the effects of rater characteristics [ 8 , 15 , 26 , 31 , 34 , 39 , 40 ]. Performance of DNN models rapidly drops when biased data are used for model training, because the resulting model reflects bias effects [ 3 , 12 , 17 ]. This problem has generally been overlooked, but it is a significant issue affecting all AES methods that use supervised machine learning models, including DNNs, because cost concerns make it generally difficult to remove rater bias in practical testing situations.

In the fields of educational and psychological measurement, statistical models for estimating essay scores while considering rater characteristic effects have recently been proposed. Specifically, they are formulated as item response theory (IRT) models that incorporate parameters representing rater characteristics [ 9 , 29 , 30 , 38 , 42 , 43 , 44 , 45 ]. Such models have been applied to various performance tests, including essay writing. Previous studies have reported that they can provide reliable scores by removing adverse effects of rater bias (e.g., [ 38 , 39 , 41 , 42 , 44 ]).

This study therefore proposes a new DNN-AES framework that integrates IRT models to deal with rater bias in training data. Specifically, we propose a two-stage architecture that stacks an IRT model over a conventional DNN-AES model. In our framework, the IRT model is first applied to raw rating data to estimate reliable scores that remove effects of rater bias. Then, the DNN-AES model is trained using the IRT-based scores. Since the IRT-based scores are theoretically free from rater bias, the DNN-AES model will not reflect bias effects. Our framework is simple and easily applied to various conventional AES models. Moreover, this framework is highly suited to educational contexts and to low- and medium-stakes tests, because preparing high-quality training data in such situations is generally difficult. To our knowledge, this study is a first attempt at mitigating rater bias effects in DNN-AES models.

We assume the training dataset consists of essays written by J examinees and essay scores assigned by R raters. Let \(e_{j}\) be an essay by examinee \(j \in \mathcal{J} = \{ 1, \cdots , J \}\) and let \(U_{jr}\) represent a categorical score \(k \in \mathcal{K} = \{1,\cdots ,K\}\) assigned by rater \(r \in \mathcal{R} = \{1,\cdots , R\}\) to \(e_{j}\). The score data can then be defined as \(\varvec{U} = \{ U_{jr} \in \mathcal{K} \cup \{-1\} \mid j \in \mathcal{J},r \in \mathcal{R}\}\), with \(U_{jr} = -1\) denoting missing data. Missing data occur because, to reduce the assessment workload, only a few raters in \(\mathcal{R}\) grade each essay \(e_{j}\) in practice. Furthermore, letting \(\mathcal{V} = \{1, \cdots , V\}\) be a vocabulary list for essay collection \(\varvec{E} = \{ e_{j} \mid j \in \mathcal{J} \}\), essay \(e_{j} \in \varvec{E}\) is definable as a list of vocabulary words \(e_{j} = \{\varvec{w}_{jt} \in \mathcal{V} \mid t \in \{1, \cdots , N_{j} \} \}\), where \(\varvec{w}_{jt}\) is a one-hot representation of the t-th word in \(e_{j}\), and \(N_{j}\) is the number of words in \(e_{j}\). This study aims to train DNN-AES models using these training data.
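The score data \(\varvec{U}\) can be pictured as a sparse examinee-by-rater table. The following is a minimal sketch in plain Python, not the authors' code; the helper name `build_score_matrix`, the 0-based indices, and the toy ratings are assumptions made for illustration.

```python
MISSING = -1  # marks U_jr for raters who did not grade essay j

def build_score_matrix(ratings, num_examinees, num_raters):
    """Build U as a J x R nested list from (examinee, rater, score) triples."""
    U = [[MISSING] * num_raters for _ in range(num_examinees)]
    for j, r, k in ratings:
        U[j][r] = k
    return U

# Hypothetical toy ratings: (examinee index, rater index, score in 1..K).
ratings = [(0, 0, 3), (0, 2, 4), (1, 1, 2), (2, 0, 5), (2, 1, 4)]
U = build_score_matrix(ratings, num_examinees=3, num_raters=3)

# Number of raters who actually graded each essay.
observed_per_essay = [sum(1 for u in row if u != MISSING) for row in U]
```

Each row holds the scores for one essay, so most entries stay at `-1` when only a few raters are assigned per essay.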

3 Neural Automated Essay Scoring Models

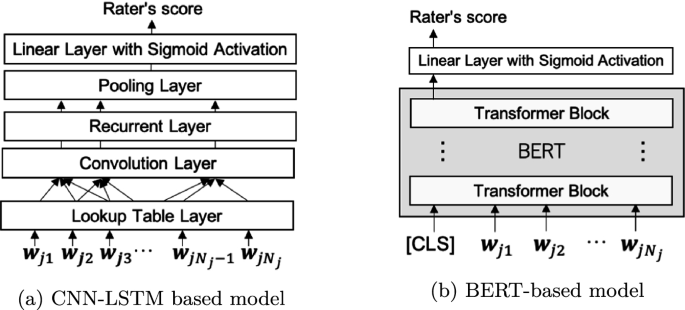

This section briefly introduces the DNN-AES models used in this study. Although many models have been proposed in the last few years, we apply the most popular model, which uses convolutional neural networks (CNN) with long short-term memory (LSTM) [ 2 ], and an advanced model based on bidirectional encoder representations from transformers (BERT) [ 7 ].

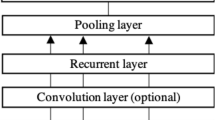

3.1 CNN-LSTM-Based Model

A CNN-LSTM-based model [ 2 ] proposed in 2016 was the first DNN-AES model. Figure 1 (a) shows the model architecture. This model calculates a score for a given essay, which is defined as a sequence of one-hot word vectors, through the following multi-layered neural networks.

Lookup table layer: This layer transforms each word in a given essay into a D -dimensional word-embedding representation, in which words with the same meaning have similar representations. Specifically, letting \(\varvec{A}\) be a \(D \times V\) -dimensional embeddings matrix, the embedding representation corresponding to \(\varvec{w}_{jt} \in e_{j}\) is calculable as the dot-product \(\varvec{A}\cdot \varvec{w}_{jt}\) .

Convolution layer: This layer extracts n-gram level features using CNN from the sequence of word embedding vectors. These features capture local textual dependencies among n-gram words. Zero padding is applied to outputs from this layer to preserve the word length. This is an optional layer, often omitted in current studies.

Recurrent layer: This layer is an LSTM network that outputs a vector at each timestep to capture long-distance dependencies of the words. A single-layer unidirectional LSTM is generally used, but bidirectional or multilayered LSTMs are also often used.

Pooling layer: This layer transforms outputs of the recurrent layer \(\mathcal {H}=\{ \varvec{h}_{j1},\) \(\varvec{h}_{j2},\) \(\cdots ,\) \(\varvec{h}_{jN_{j}}\}\) into a fixed-length vector. Mean-over-time (MoT) pooling, which calculates an average vector \(\varvec{M}_{j}=\frac{1}{N_{j}}\sum _{t=1}^{N_{j}}\varvec{h}_{jt}\) , is generally used because it tends to provide stable accuracy. Other frequently used pooling methods include the last pool, which uses the last output of the recurrent layer \(\varvec{h}_{jN_{j}}\) , and a pooling-with-attention mechanism.

Linear layer with sigmoid activation: This layer projects pooling-layer output to a scalar value in the range [0, 1] by utilizing the sigmoid function as \(\sigma (\varvec{W}\varvec{M}_{j}+\text{ b })\) , where \(\varvec{W}\) is a weight matrix and \(\text{ b }\) is a bias. Model training is conducted by normalizing gold-standard scores to [0, 1], but the predicted scores are rescaled to the original score range in the prediction phase.
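The pooling and output layers above can be illustrated with a small sketch in plain Python. It is not the Keras implementation used in the study; the function names, the toy recurrent outputs, and the layer parameters are hypothetical.

```python
import math

def mean_over_time(hidden_states):
    """MoT pooling: average the recurrent outputs h_j1..h_jN over timesteps."""
    n = len(hidden_states)
    dim = len(hidden_states[0])
    return [sum(h[d] for h in hidden_states) / n for d in range(dim)]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def score_from_pooled(pooled, weights, bias):
    """Linear layer with sigmoid activation: sigma(W M_j + b)."""
    return sigmoid(sum(w * m for w, m in zip(weights, pooled)) + bias)

def rescale_to_original(unit_score, low, high):
    """Map a prediction in [0, 1] back to the original score range."""
    return low + unit_score * (high - low)

# Hypothetical recurrent outputs for a 3-word essay with 2 hidden units.
H = [[0.2, -0.4], [0.6, 0.0], [0.1, 0.4]]
M = mean_over_time(H)
y_hat = score_from_pooled(M, weights=[1.0, -1.0], bias=0.0)
predicted = rescale_to_original(y_hat, low=1, high=5)
```

Last pooling would simply take `H[-1]` in place of `mean_over_time(H)`.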

Fig. 1. Architectures of DNN-AES models.

3.2 BERT-Based Model

BERT, a pretrained language model released by the Google AI Language team, has achieved state-of-the-art results in various NLP tasks [ 7 ]. BERT has been applied to AES [ 32 ] and automated short-answer grading (SAG) [ 19 , 21 , 36 ] since 2019, and provides good accuracy.

BERT is defined as a multilayer bidirectional transformer network [ 46 ]. Transformers are a neural network architecture designed to handle ordered sequences of data using an attention mechanism. Specifically, transformers consist of multiple layers (called transformer blocks ), each containing a multi-head self-attention and a position-wise fully connected feed-forward network. See Ref. [ 46 ] for details of this architecture.

BERT is trained in pretraining and fine-tuning steps. Pretraining is conducted on huge amounts of unlabeled text data over two tasks, masked language modeling and next-sentence prediction , the former predicting the identities of words that have been masked out of the input text and the latter predicting whether two given sentences are adjacent.

Using BERT for a target NLP task, including AES, requires fine-tuning (retraining), which is conducted on a task-specific supervised dataset after initializing model parameters to pretrained values. When using BERT for AES, input essays require preprocessing, namely adding a special token (“CLS”) to the beginning of each input. BERT output corresponding to this token is used as the aggregate sequence representation [ 7 ]. We can thus score an essay by inputting its representation to a linear layer with sigmoid activation, as illustrated in Fig. 1 (b).

3.3 Problems in Model Training

Training of CNN-LSTM-based AES models and fine-tuning of BERT-based AES models are conducted using large datasets of essays graded by human raters. For model training, the mean-squared error (MSE) between predicted and gold-standard scores is used as the loss function. Specifically, letting \(y_{j}\) be the gold-standard score for essay \(e_j\) and letting \(\hat{y}_{j}\) be the predicted score, the MSE loss function is defined as \(\frac{1}{J}\sum _{j=1}^J(y_{j}-\hat{y}_{j})^2\).
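As a small worked example of this loss, the sketch below normalizes gold-standard scores to [0, 1] and computes the MSE in plain Python. The score range of 1 to 5 and the predicted values are assumptions chosen for illustration.

```python
def normalize(score, low, high):
    """Map a raw score in [low, high] to [0, 1]."""
    return (score - low) / (high - low)

def mse(gold, predicted):
    """Mean-squared error between gold-standard and predicted scores."""
    return sum((y - y_hat) ** 2 for y, y_hat in zip(gold, predicted)) / len(gold)

gold_raw = [1, 3, 5]                           # hypothetical raw scores
gold = [normalize(y, 1, 5) for y in gold_raw]  # 0.0, 0.5, 1.0
pred = [0.25, 0.5, 0.75]                       # hypothetical sigmoid outputs
loss = mse(gold, pred)                         # (0.0625 + 0 + 0.0625) / 3
```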

The gold-standard score \(y_{j}\) is a score for essay \(e_j\) assigned by a human rater in a set of raters \(\mathcal{R}\) . When multiple raters grade each essay, the gold-standard score should be determined by selecting one score or by calculating an average or total score. In any case, such scores depend strongly on rater characteristics, as discussed in Sect. 1 . The accuracy of a DNN model drops when such biased data are used for model training, because the trained model inherits bias effects [ 3 , 12 , 17 ]. In educational and psychological measurement research, item response theory (IRT) models that can estimate essay scores while considering effects of rater characteristics have recently been proposed [ 9 , 29 , 30 , 38 , 42 , 43 , 44 ]. The main goal of this study is to train AES models using IRT-based unbiased scores. The next section introduces the IRT models.

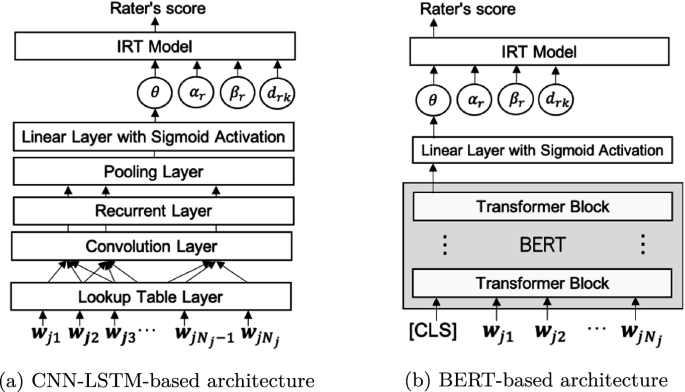

4 Item Response Theory Models with Rater Parameters

IRT [ 20 ] is a test theory based on mathematical models. IRT represents the probability of an examinee response to a test item as a function of latent examinee ability and item characteristics such as difficulty and discrimination. IRT is widely used for educational testing because it offers many benefits. For example, IRT can estimate examinee ability considering effects of item characteristics. Also, the abilities of examinees responding to different test items can be measured on the same scale, and missing response data can be easily handled.

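Traditional IRT models are applicable to two-way data (examinees \(\times \) test items), consisting of examinee test item scores. For example, the generalized partial credit model (GPCM) [ 25 ], a representative polytomous IRT model, defines the probability that examinee j receives score k for test item i as

\[ P_{ijk} = \frac{\exp \sum _{m=1}^{k} \left[ \alpha _i (\theta _j - \beta _i - d_{im}) \right] }{\sum _{l=1}^{K} \exp \sum _{m=1}^{l} \left[ \alpha _i (\theta _j - \beta _i - d_{im}) \right] }, \tag{1} \]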

where \(\theta _j\) is the latent ability of examinee j , \(\alpha _i\) is a discrimination parameter for item i , \(\beta _{i}\) is a difficulty parameter for item i , and \(d_{ik}\) is a step difficulty parameter denoting difficulty of transition between scores \(k-1\) and k in the item. Here, \(d_{i1}=0\) , and \(\sum _{k=2}^{K} d_{ik} = 0\) is given for model identification.

However, conventional GPCM ignores rater factors, so it is not applicable to rating data given by multiple raters as assumed in this study. Extension models that incorporate parameters representing rater characteristics have been proposed to resolve this difficulty [ 29 , 30 , 38 , 42 , 43 , 44 , 45 ]. This study introduces a state-of-the-art model [ 44 , 45 ] that is most robust for a large variety of raters. This model defines the probability that rater r assigns score k to examinee j's essay for a test item (e.g., an essay task) i as

\[ P_{ijrk} = \frac{\exp \sum _{m=1}^{k} \left[ \alpha _r \alpha _i (\theta _j - \beta _i - \beta _r - d_{rm}) \right] }{\sum _{l=1}^{K} \exp \sum _{m=1}^{l} \left[ \alpha _r \alpha _i (\theta _j - \beta _i - \beta _r - d_{rm}) \right] }, \tag{2} \]

where \(\alpha _r\) is the consistency of rater r , \(\beta _{r}\) is the strictness of rater r , and \(d_{rk}\) is the severity of rater r within category k . For model identification, we assume \(\sum _{i=1}^{I} \log \alpha _{i} = 0\) , \(\sum _{i=1}^{I} \beta _{i} = 0\) , \(d_{r1}=0\) , and \(\sum _{k=2}^{K} d_{rk} = 0\) .

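This study applies this IRT model to rating data \(\varvec{U}\) in training data. Note that DNN-AES models are trained for each essay task. Therefore, rating data \(\varvec{U}\) are defined as two-way data (examinees \(\times \) raters). When the number of tasks is fixed to one in the model, the above model identification constraints make \(\alpha _i\) and \(\beta _i\) ignorable, so Eq. ( 2 ) becomes

\[ P_{jrk} = \frac{\exp \sum _{m=1}^{k} \left[ \alpha _r (\theta _j - \beta _r - d_{rm}) \right] }{\sum _{l=1}^{K} \exp \sum _{m=1}^{l} \left[ \alpha _r (\theta _j - \beta _r - d_{rm}) \right] }. \tag{3} \]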

This equation is consistent with conventional GPCM, regarding use of item parameters as the rater parameters. Note that \(\theta _j\) in Eq. ( 3 ) represents not only the ability of examinee j but also the latent unbiased scores for essay \(e_j\) , because only one essay is associated with each examinee. This model thus provides essay scores with rater bias effects removed.
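To make the single-task rater model concrete, the sketch below computes category probabilities for one essay and one rater. It assumes the standard GPCM form with rater parameters \(\alpha _r\), \(\beta _r\), and \(d_{rk}\) in place of item parameters, as the text describes; the function name and the parameter values are hypothetical.

```python
import math

def rater_gpcm_probs(theta, alpha_r, beta_r, d_r):
    """Category probabilities P_jr1..P_jrK for one essay and one rater.

    d_r lists d_r1..d_rK, with d_r1 = 0 and sum(d_r[1:]) = 0.
    """
    cumulative, total = [], 0.0
    for d in d_r:
        total += alpha_r * (theta - beta_r - d)
        cumulative.append(total)
    peak = max(cumulative)  # subtract the maximum for numerical stability
    weights = [math.exp(c - peak) for c in cumulative]
    norm = sum(weights)
    return [w / norm for w in weights]

def mean_category(probs):
    """Expected category under the given probabilities (categories 1..K)."""
    return sum(k * p for k, p in enumerate(probs, start=1))

# Same essay (theta = 0.5) scored by a lenient and a strict hypothetical rater.
d = [0.0, -1.0, 0.0, 1.0]
lenient = rater_gpcm_probs(theta=0.5, alpha_r=1.0, beta_r=-1.0, d_r=d)
strict = rater_gpcm_probs(theta=0.5, alpha_r=1.0, beta_r=1.0, d_r=d)
```

With these toy values the strict rater (larger \(\beta _r\)) places more probability on low categories for the same \(\theta _j\), which is the kind of rater effect the model is meant to separate from essay quality.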

5 Proposed Method

We propose a DNN-AES framework that uses IRT-based unbiased scores \(\varvec{\theta } = \{\theta _j \mid j \in \mathcal{J}\}\) to deal with rater bias in training data.

Fig. 2. Proposed architectures.

Figure 2 shows the architectures of the proposed method. As that figure shows, the proposed method is defined by stacking an IRT model over a conventional DNN-AES model. Training of our models occurs in two steps:

1. Estimate the IRT scores \(\varvec{\theta }\) from the rating data \(\varvec{U}\).

2. Train AES models using the IRT scores \(\varvec{\theta }\) as the gold-standard scores. Specifically, the MSE loss function for training is defined as \(\frac{1}{J}\sum _{j=1}^J(\theta _{j}-\hat{\theta }_{j})^2\), where \(\hat{\theta }_j\) represents the AES’s predicted score for essay \(e_j\). Since scores \(\varvec{\theta }\) are estimated while considering rater bias effects, a trained model will not reflect bias effects. Note that the gold-standard scores must be rescaled to the range [0, 1] for training because sigmoid activation is used in the output layer. In IRT, \(99.7\%\) of \({\theta }_j\) fall within the range [−3, 3] because a standard normal distribution is generally assumed. We therefore apply a linear transformation from the range [−3, 3] to [0, 1] after rounding the scores lower than \(-3\) to \(-3\), and those higher than 3 to 3.
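The rescaling in the second training step, and its inverse used at prediction time, can be sketched as follows. The function names are hypothetical; the bound of 3 follows the range stated above.

```python
def theta_to_unit(theta, bound=3.0):
    """Clip theta to [-bound, bound], then map linearly to [0, 1]."""
    clipped = max(-bound, min(bound, theta))
    return (clipped + bound) / (2.0 * bound)

def unit_to_theta(unit, bound=3.0):
    """Inverse map from [0, 1] back to [-bound, bound]."""
    return unit * 2.0 * bound - bound

targets = [theta_to_unit(t) for t in (-4.0, 0.0, 3.5)]  # 0.0, 0.5, 1.0
```

Values outside [−3, 3] lose information under clipping, so the round trip `unit_to_theta(theta_to_unit(t))` only recovers `t` inside that range.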

Note that the increase in training time for the proposed method compared with a conventional method is the time for IRT parameter estimation.

In the testing phase, the score for new essay \(e_{j'}\) is predicted in two steps:

1. Predict the IRT score \(\theta _{j'}\) from a trained AES model, and rescale it to the range [−3, 3].

2. Calculate the expected score \(\hat{U}_{j'}\), which corresponds to an unbiased original-scaled score of \(e_{j'}\) [ 39 ], as

\[ \hat{U}_{j'} = \frac{1}{R}\sum _{r=1}^{R}\sum _{k=1}^{K} k \cdot P_{j'rk}. \tag{4} \]
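The two testing steps can be sketched in plain Python as below. This is an illustration under stated assumptions rather than the authors' implementation: it assumes the expected score is the average over all R raters of \(\sum _k k \cdot P_{j'rk}\), uses the GPCM form with rater parameters for \(P_{j'rk}\), and all function names and rater parameter values are hypothetical.

```python
import math

def category_probs(theta, alpha_r, beta_r, d_r):
    """GPCM-style category probabilities with rater parameters (assumed form)."""
    cumulative, total = [], 0.0
    for d in d_r:
        total += alpha_r * (theta - beta_r - d)
        cumulative.append(total)
    peak = max(cumulative)
    weights = [math.exp(c - peak) for c in cumulative]
    norm = sum(weights)
    return [w / norm for w in weights]

def unit_to_theta(unit, bound=3.0):
    """Step 1: rescale a sigmoid output in [0, 1] to [-bound, bound]."""
    return unit * 2.0 * bound - bound

def expected_score(theta, raters):
    """Step 2: average over raters of sum_k k * P_jrk (assumed form)."""
    total = 0.0
    for alpha_r, beta_r, d_r in raters:
        probs = category_probs(theta, alpha_r, beta_r, d_r)
        total += sum(k * p for k, p in enumerate(probs, start=1))
    return total / len(raters)

# Hypothetical rater parameters: (alpha_r, beta_r, [d_r1..d_rK]).
raters = [(1.0, -0.5, [0.0, -1.0, 0.0, 1.0]),
          (1.5, 0.5, [0.0, -0.5, 0.0, 0.5])]
low = expected_score(unit_to_theta(0.2), raters)
high = expected_score(unit_to_theta(0.8), raters)
```

Because every rater contributes to the average, the resulting score stays on the original 1 to K scale and does not depend on which rater happened to grade the essay.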

6 Experiments

This section describes evaluation of the effectiveness of the proposed method through actual data experiments.

6.1 Actual Data

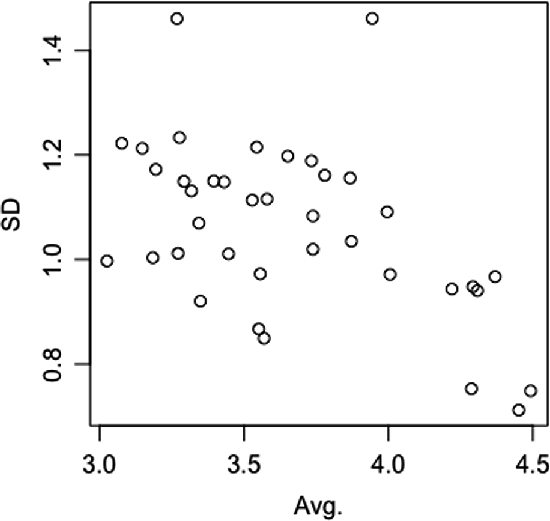

Fig. 3. Score statistics (average and SD) for each rater.

These experiments used the Automated Student Assessment Prize (ASAP) dataset, which is widely used as benchmark data in AES studies. This dataset consists of essays on eight topics, originally written by students from grades 7 to 10. There are 12,978 essays, averaging 1,622 essays per topic. However, this dataset cannot be directly used to evaluate the proposed method, because despite its essays having been graded by multiple raters, it contains no rater identifiers.

We therefore employed other raters and asked them to grade essays in the ASAP dataset. We used essay data for the fifth ASAP topic, because the number of essays in that topic is relatively large ( \(n=1805\) ). We recruited 38 native English speakers as raters through Amazon Mechanical Turk and assigned four raters to each essay. Each rater graded around 195 essays. The assessment rubric used the same five rating categories as ASAP. Average Pearson’s correlation between the collected rating scores and the original ASAP scores was 0.675.

To confirm any differences in rater characteristics, we plotted averaged score values and standard deviations (SD) for each rater, as shown in Fig. 3. In that figure, each point represents a rater, and horizontal and vertical axes respectively show the average and SD values. In addition, Table 1 shows appearance rates in the five rating categories for 10 representative raters. The figure and table show extreme differences in grading characteristics among the raters, suggesting that consideration of rater bias is required.

6.2 Experimental Procedures

This subsection shows that the proposed method can provide more robust scores than can conventional AES models, even when the rater grading each essay in the training data changes. The experimental procedures, which are similar to those used in previous studies examining IRT scoring robustness [ 39 , 40 , 41 , 42 ], were as follows:

1. We estimated IRT parameters by the Markov chain Monte Carlo (MCMC) algorithm [ 30 , 42 ] using all rating data.

2. We created a dataset consisting of (essay, score) pairs by randomly selecting one score for each essay from among the scores assigned by multiple raters. We repeated this data generation 10 times. Hereafter, the m-th generated dataset is represented as \(\varvec{U}^{\prime }_m\).

3. From each dataset \(\varvec{U}^{\prime }_m\), we estimated IRT scores \(\varvec{\theta }\) (referred to as \(\varvec{\theta }_m\)) given the rater parameters obtained in Step 1, and then created a dataset \(\varvec{U}^{\prime \prime }_m\) comprising essays and \(\varvec{\theta }_m\) values.

4. Using each dataset \(\varvec{U}^{\prime \prime }_m\), we conducted five-fold cross validation to train AES models and to obtain predicted scores \(\varvec{\hat{\theta }}_m\) for all essays.

5. We calculated metrics for agreement between the expected scores calculated by Eq. ( 4 ) given \(\varvec{\hat{\theta }}_m\) and those calculated given \(\varvec{\hat{\theta }}_{m^{\prime }}\) for all unique \(m, m^{\prime } \in \{1,\cdots ,10\}\) pairs ( \(_{10} C_2 = 45\) pairs in total). As agreement metrics, we used Cohen’s kappa, weighted kappa, root mean squared error (RMSE), and Pearson correlation coefficient.

6. We calculated average metric values obtained from the 45 pairs.

High kappa and correlation and low RMSE values obtained from the experiment indicate that score predictions are more robust for different raters.
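The pairwise agreement computation in the procedure above can be sketched as follows. This is a plain-Python illustration, not the evaluation code used in the study: it assumes scores are rounded to integer categories before computing kappa, omits weighted kappa for brevity, and uses three hypothetical runs instead of ten.

```python
import math
from itertools import combinations

def cohen_kappa(a, b):
    """Unweighted Cohen's kappa for two lists of categorical scores."""
    n = len(a)
    categories = set(a) | set(b)
    observed = sum(1 for x, y in zip(a, b) if x == y) / n
    expected = sum((a.count(c) / n) * (b.count(c) / n) for c in categories)
    return (observed - expected) / (1.0 - expected)

def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def average_pairwise(runs, metric):
    """Average a metric over all unordered pairs of runs."""
    pairs = list(combinations(runs, 2))
    return sum(metric(a, b) for a, b in pairs) / len(pairs)

# Hypothetical rounded scores for six essays from three generated datasets.
runs = [[1, 2, 3, 4, 5, 3], [1, 2, 3, 4, 4, 3], [2, 2, 3, 4, 5, 3]]
summary = {name: average_pairwise(runs, fn)
           for name, fn in [("kappa", cohen_kappa), ("rmse", rmse), ("pearson", pearson)]}
```

With ten runs, `combinations` yields the 45 pairs mentioned in the procedure. The kappa helper divides by zero if both lists contain a single identical category, which a fuller implementation would need to guard against.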

We conducted a similar experiment using conventional DNN-AES models without the IRT model. Specifically, using each dataset \(\varvec{U}^{\prime }_m\) , we predicted essay scores from a DNN-AES model through five-fold cross validation procedures as in Step 4. We then calculated the four agreement metrics among the predicted scores obtained from different datasets \(\varvec{U}^{\prime }_m\) , and averaged them.

These experiments were conducted with several DNN-AES models. Specifically, we examined CNN-LSTM models using MoT pooling or last pooling, those models without a CNN layer, and the BERT model. These models were implemented in Python with the Keras library. For the BERT model, we used the base-sized pretrained model. The hyperparameters and dropout settings were determined following Refs. [ 2 , 7 , 46 ].

6.3 Experimental Results

Table 2 shows the results, which indicate that the proposed method sufficiently improves agreement metrics as compared to the conventional models in all cases. The results indicate that the proposed method provides stable scores when the rater allocation for each essay in training data is changed, thus demonstrating that it is highly robust against rater bias. Note that the values in Table 2 are not comparable with the results of previous AES studies because our experiment and previous experiments evaluated different aspects of AES performance.

In addition, as in previous AES studies, we evaluated score ( \(\theta \) ) prediction accuracy of the proposed method through five-fold cross-validation. We measured accuracy using mean absolute error (MAE), RMSE, the correlation coefficient, and the coefficient of determination ( \(R^2\) ), because \(\theta \) is a continuous variable. Table 3 shows the results, which indicate that the CNN-LSTM and LSTM models with MoT pooling achieved higher performance than did those with last pooling. The table also shows that the CNN did not effectively improve accuracy. These tendencies are consistent with a previous study [ 2 ]. In addition, the BERT model provided the highest accuracy, which is also consistent with current NLP studies.
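For reference, two of the accuracy measures named above, MAE and \(R^2\), can be computed as in the following sketch. The \(\theta \) values are made up for illustration and are not results from the paper.

```python
def mae(gold, pred):
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(gold, pred)) / len(gold)

def r_squared(gold, pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(gold) / len(gold)
    ss_res = sum((y - p) ** 2 for y, p in zip(gold, pred))
    ss_tot = sum((y - mean) ** 2 for y in gold)
    return 1.0 - ss_res / ss_tot

gold_theta = [-1.0, 0.0, 1.0, 2.0]  # hypothetical IRT scores
pred_theta = [-0.5, 0.0, 0.5, 2.0]  # hypothetical model predictions
error = mae(gold_theta, pred_theta)       # 0.25
fit = r_squared(gold_theta, pred_theta)   # 1 - 0.5 / 5.0 = 0.9
```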

Tables 2 and 3 show that score prediction robustness (Table 2) tends to increase with score prediction accuracy (Table 3). This might be because scores from low-performance DNN-AES models are strongly affected not only by rater characteristics, but also by prediction errors arising from the model itself. As the accuracy of DNN-AES models increases, rater bias accounts for a larger share of the overall error, suggesting that the impact of the proposed method increases.

7 Conclusion

We showed that DNN-AES model performance strongly depends on the characteristics of raters grading essays in training data. To resolve this problem, we proposed a new DNN-AES framework that integrates IRT models. Specifically, we formulated our method as a two-stage architecture that stacks the IRT model over a conventional DNN-AES model. Through experiments using an actual dataset, we demonstrated that the proposed method can provide more robust essay scores than can conventional DNN-AES models. The proposed method is simple but powerful, and is easily applicable to any AES model. As described in the Introduction, our method is also highly suited to situations where high-quality training data are hard to prepare, including educational contexts.

In future studies, we expect to evaluate effectiveness of the proposed method using various datasets. Although this study mainly focused on robustness against rater bias, the proposed method might also improve prediction accuracy for each rater’s score. In future studies, the accuracy should be evaluated. Our method is defined as a two-stage procedure for separately training IRT models and DNN-AES models. However, conducting end-to-end optimization would further improve the performance. This extension is another topic for future study.

Abosalem, Y.: Beyond translation: adapting a performance-task-based assessment of critical thinking ability for use in Rwanda. Int. J. Secondary Educ. 4 (1), 1–11 (2016)

Alikaniotis, D., Yannakoudakis, H., Rei, M.: Automatic text scoring using neural networks. In: Proceedings of the Annual Meeting of the Association for Computational Linguistics, pp. 715–725 (2016)

Amorim, E., Cançado, M., Veloso, A.: Automated essay scoring in the presence of biased ratings. In: Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics, pp. 229–237 (2018)

Bernardin, H.J., Thomason, S., Buckley, M.R., Kane, J.S.: Rater rating-level bias and accuracy in performance appraisals: the impact of rater personality, performance management competence, and rater accountability. Hum. Resour. Manag. 55 (2), 321–340 (2016)

Dascalu, M., Westera, W., Ruseti, S., Trausan-Matu, S., Kurvers, H.: ReaderBench learns Dutch: building a comprehensive automated essay scoring system for Dutch language. In: André, E., Baker, R., Hu, X., Rodrigo, M.M.T., du Boulay, B. (eds.) AIED 2017. LNCS (LNAI), vol. 10331, pp. 52–63. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-61425-0_5

Dasgupta, T., Naskar, A., Dey, L., Saha, R.: Augmenting textual qualitative features in deep convolution recurrent neural network for automatic essay scoring. In: Proceedings of the Workshop on Natural Language Processing Techniques for Educational Applications, Association for Computational Linguistics, pp. 93–102 (2018)

Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 4171–4186 (2019)

Eckes, T.: Examining rater effects in TestDaF writing and speaking performance assessments: a many-facet Rasch analysis. Lang. Assess. Q. 2 (3), 197–221 (2005)

Eckes, T.: Introduction to Many-Facet Rasch Measurement: Analyzing and Evaluating Rater-Mediated Assessments. Peter Lang Publication Inc., New York (2015)

Farag, Y., Yannakoudakis, H., Briscoe, T.: Neural automated essay scoring and coherence modeling for adversarially crafted input. In: Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics, pp. 263–271 (2018)

Hua, C., Wind, S.A.: Exploring the psychometric properties of the mind-map scoring rubric. Behaviormetrika 46 (1), 73–99 (2018). https://doi.org/10.1007/s41237-018-0062-z

Huang, J., Qu, L., Jia, R., Zhao, B.: O2U-Net: a simple noisy label detection approach for deep neural networks. In: Proceedings of the IEEE International Conference on Computer Vision (2019)

Hussein, M.A., Hassan, H.A., Nassef, M.: Automated language essay scoring systems: a literature review. PeerJ Comput. Sci. 5, e208 (2019)

Jin, C., He, B., Hui, K., Sun, L.: TDNN: a two-stage deep neural network for prompt-independent automated essay scoring. In: Proceedings of the Annual Meeting of the Association for Computational Linguistics, pp. 1088–1097 (2018)

Kassim, N.L.A.: Judging behaviour and rater errors: an application of the many-facet Rasch model. GEMA Online J. Lang. Stud. 11 (3), 179–197 (2011)

Ke, Z., Ng, V.: Automated essay scoring: a survey of the state of the art. In: Proceedings of the International Joint Conference on Artificial Intelligence, pp. 6300–6308 (2019)

Li, S., et al.: Coupled-view deep classifier learning from multiple noisy annotators. In: Proceedings of the Association for the Advancement of Artificial Intelligence (2020)

Liu, O.L., Frankel, L., Roohr, K.C.: Assessing critical thinking in higher education: current state and directions for next-generation assessment. ETS Res. Rep. Ser. 1, 1–23 (2014)

Liu, T., Ding, W., Wang, Z., Tang, J., Huang, G.Y., Liu, Z.: Automatic short answer grading via multiway attention networks. In: Isotani, S., Millán, E., Ogan, A., Hastings, P., McLaren, B., Luckin, R. (eds.) AIED 2019. LNCS (LNAI), vol. 11626, pp. 169–173. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-23207-8_32

Lord, F.: Applications of Item Response Theory to Practical Testing Problems. Erlbaum Associates, Mahwah (1980)

Lun, J., Zhu, J., Tang, Y., Yang, M.: Multiple data augmentation strategies for improving performance on automatic short answer scoring. In: Proceedings of the Association for the Advancement of Artificial Intelligence (2020)

Shermis, M.D., Burstein, J.C.: Automated Essay Scoring: A Cross-disciplinary Perspective. Taylor & Francis, Abingdon (2016)

Mesgar, M., Strube, M.: A neural local coherence model for text quality assessment. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing, pp. 4328–4339 (2018)

Mim, F.S., Inoue, N., Reisert, P., Ouchi, H., Inui, K.: Unsupervised learning of discourse-aware text representation for essay scoring. In: Proceedings of the Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, pp. 378–385 (2019)

Muraki, E.: A generalized partial credit model. In: van der Linden, W.J., Hambleton, R.K. (eds.) Handbook of Modern Item Response Theory, pp. 153–164. Springer, Heidelberg (1997). https://doi.org/10.1007/978-1-4757-2691-6_9

Myford, C.M., Wolfe, E.W.: Detecting and measuring rater effects using many-facet Rasch measurement: part I. J. Appl. Measur. 4, 386–422 (2003)

Nadeem, F., Nguyen, H., Liu, Y., Ostendorf, M.: Automated essay scoring with discourse-aware neural models. In: Proceedings of the Workshop on Innovative Use of NLP for Building Educational Applications, Association for Computational Linguistics, pp. 484–493 (2019)

Nguyen, H.V., Litman, D.J.: Argument mining for improving the automated scoring of persuasive essays. In: Proceedings of the Association for the Advancement of Artificial Intelligence, pp. 5892–5899 (2018)

Patz, R.J., Junker, B.W., Johnson, M.S., Mariano, L.T.: The hierarchical rater model for rated test items and its application to large-scale educational assessment data. J. Educ. Behav. Stat. 27 (4), 341–384 (2002)

Patz, R.J., Junker, B.: Applications and extensions of MCMC in IRT: multiple item types, missing data, and rated responses. J. Educ. Behav. Stat. 24 (4), 342–366 (1999)

Rahman, A.A., Ahmad, J., Yasin, R.M., Hanafi, N.M.: Investigating central tendency in competency assessment of design electronic circuit: analysis using many facet Rasch measurement (MFRM). Int. J. Inf. Educ. Technol. 7 (7), 525–528 (2017)

Rodriguez, P.U., Jafari, A., Ormerod, C.M.: Language models and automated essay scoring. arXiv, cs.CL (2019)

Rosen, Y., Tager, M.: Making student thinking visible through a concept map in computer-based assessment of critical thinking. J. Educ. Comput. Res. 50 (2), 249–270 (2014)

Saal, F., Downey, R., Lahey, M.: Rating the ratings: assessing the psychometric quality of rating data. Psychol. Bull. 88 (2), 413–428 (1980)

Schendel, R., Tolmie, A.: Assessment techniques and students’ higher-order thinking skills. Assess. Eval. High. Educ. 42 (5), 673–689 (2017)

Sung, C., Dhamecha, T.I., Mukhi, N.: Improving short answer grading using transformer-based pre-training. In: Isotani, S., Millán, E., Ogan, A., Hastings, P., McLaren, B., Luckin, R. (eds.) AIED 2019. LNCS (LNAI), vol. 11625, pp. 469–481. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-23204-7_39

Taghipour, K., Ng, H.T.: A neural approach to automated essay scoring. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing, pp. 1882–1891 (2016)

Ueno, M., Okamoto, T.: Item response theory for peer assessment. In: Proceedings of the IEEE International Conference on Advanced Learning Technologies, pp. 554–558 (2008)

Uto, M.: Rater-effect IRT model integrating supervised LDA for accurate measurement of essay writing ability. In: Isotani, S., Millán, E., Ogan, A., Hastings, P., McLaren, B., Luckin, R. (eds.) AIED 2019. LNCS (LNAI), vol. 11625, pp. 494–506. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-23204-7_41

Uto, M., Thien, N.D., Ueno, M.: Group optimization to maximize peer assessment accuracy using item response theory. In: André, E., Baker, R., Hu, X., Rodrigo, M.M.T., du Boulay, B. (eds.) AIED 2017. LNCS (LNAI), vol. 10331, pp. 393–405. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-61425-0_33

Uto, M., Duc Thien, N., Ueno, M.: Group optimization to maximize peer assessment accuracy using item response theory and integer programming. IEEE Trans. Learn. Technol. 13 (1), 91–106 (2020)

Uto, M., Ueno, M.: Item response theory for peer assessment. IEEE Trans. Learn. Technol. 9 (2), 157–170 (2016)

Uto, M., Ueno, M.: Empirical comparison of item response theory models with rater’s parameters. Heliyon 4 (5), 1–32 (2018). Elsevier

Uto, M., Ueno, M.: Item response theory without restriction of equal interval scale for rater’s score. In: Penstein Rosé, C., et al. (eds.) AIED 2018. LNCS (LNAI), vol. 10948, pp. 363–368. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93846-2_68

Uto, M., Ueno, M.: A generalized many-facet Rasch model and its Bayesian estimation using Hamiltonian Monte Carlo. Behaviormetrika 47, 1–28 (2020). https://doi.org/10.1007/s41237-020-00115-7

Vaswani, A., et al.: Attention is all you need. In: Proceedings of the International Conference on Advances in Neural Information Processing Systems, pp. 5998–6008 (2017)

Wang, Y., Wei, Z., Zhou, Y., Huang, X.: Automatic essay scoring incorporating rating schema via reinforcement learning. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing, pp. 791–797 (2018)

Acknowledgment

This work was supported by JSPS KAKENHI 17H04726 and 17K20024.

Author information

Authors and affiliations.

The University of Electro-Communications, Tokyo, Japan

Masaki Uto & Masashi Okano

Corresponding author

Correspondence to Masaki Uto.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper.

Uto, M., Okano, M. (2020). Robust Neural Automated Essay Scoring Using Item Response Theory. In: Bittencourt, I., Cukurova, M., Muldner, K., Luckin, R., Millán, E. (eds) Artificial Intelligence in Education. AIED 2020. Lecture Notes in Computer Science, vol 12163. Springer, Cham. https://doi.org/10.1007/978-3-030-52237-7_44

DOI: https://doi.org/10.1007/978-3-030-52237-7_44

Published: 30 June 2020

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-52236-0

Online ISBN: 978-3-030-52237-7

eBook Packages: Computer Science, Computer Science (R0)

- Entropy (Basel)

Improving Automated Essay Scoring by Prompt Prediction and Matching

1 School of Artificial Intelligence, Beijing Normal University, Beijing 100875, China

Tianbao Song

2 School of Computer Science and Engineering, Beijing Technology and Business University, Beijing 100048, China

Weiming Peng

Associated data.

Publicly available datasets were used in this study. These data can be found here: http://hsk.blcu.edu.cn/ (accessed on 6 March 2022).

Automated essay scoring aims to evaluate the quality of an essay automatically. It is one of the main educational applications in the field of natural language processing. Recently, pre-training techniques have been used to improve performance on downstream tasks, and many studies have attempted to use the pre-training and then fine-tuning paradigm in essay scoring systems. However, obtaining better features, such as prompt features, from the pre-trained encoder is critical but has not been fully studied. In this paper, we create a prompt feature fusion method that is better suited for fine-tuning. In addition, we use multi-task learning with two auxiliary tasks, prompt prediction and prompt matching, to obtain better features. The experimental results show that both auxiliary tasks improve model performance, and that the combination of the two auxiliary tasks with the NEZHA pre-trained encoder produces the best results, with Quadratic Weighted Kappa improving by 2.5% and Pearson’s Correlation Coefficient improving by 2% on average across all results on the HSK dataset.
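For readers unfamiliar with the first metric named in the abstract, the following is a minimal sketch of the standard Quadratic Weighted Kappa definition, kappa = 1 - sum(w_ij * O_ij) / sum(w_ij * E_ij) with weights w_ij = (i - j)^2 / (N - 1)^2, where O is the observed confusion matrix between two score lists and E is the matrix expected from their marginal histograms. It is not taken from the authors' code; the function name, its arguments, and the choice to return 1.0 when both score lists are constant and identical are assumptions made for illustration.

```python
def quadratic_weighted_kappa(scores_a, scores_b, min_score, max_score):
    """Quadratic Weighted Kappa between two equal-length lists of integer scores.

    Hypothetical helper for illustration; scores must lie in [min_score, max_score].
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("score lists must be non-empty and of equal length")
    n_levels = max_score - min_score + 1
    if n_levels < 2:
        raise ValueError("need at least two score levels")
    n = len(scores_a)

    # Observed confusion matrix O[i][j]: count of essays scored i by A and j by B.
    observed = [[0] * n_levels for _ in range(n_levels)]
    for a, b in zip(scores_a, scores_b):
        observed[a - min_score][b - min_score] += 1

    # Marginal histograms of each score list.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_levels)) for j in range(n_levels)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_levels):
        for j in range(n_levels):
            weight = (i - j) ** 2 / (n_levels - 1) ** 2
            expected = hist_a[i] * hist_b[j] / n  # chance agreement from marginals
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0.0:
        # Assumption: both lists are constant at the same score, treated as full agreement.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


if __name__ == "__main__":
    human = [1, 2, 3, 4]
    print(quadratic_weighted_kappa(human, human, 1, 4))        # identical scores
    print(quadratic_weighted_kappa(human, human[::-1], 1, 4))  # fully reversed scores
```

Identical score lists give a kappa of 1, while fully reversed scores on this toy example give a value close to -1, which is why the metric is preferred over plain accuracy for ordinal essay scores.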

1. Introduction

Automated essay scoring (AES), which aims to automatically evaluate and score essays, is a typical application of natural language processing (NLP) techniques in the field of education [1]. Earlier studies combined handcrafted features with statistical machine learning [2, 3]; with the development of deep learning, neural network-based approaches have gradually become mainstream [4, 5, 6, 7, 8]. Recently, pre-trained language models have become the foundation module of NLP, and the paradigm of pre-training followed by fine-tuning has been widely adopted. Pre-training is the most common method for transfer learning, in which a model is trained on a surrogate task and then adapted to the desired downstream task by fine-tuning [9]. Some research has attempted to use pre-training modules in AES tasks [10, 11, 12]. Howard et al. [10] utilize the pre-trained encoder as a feature extraction module to obtain a representation of the input text, and update the pre-trained model parameters on the downstream text classification task by adding a linear layer. Rodriguez et al. [11] employ a pre-trained encoder as the essay representation extraction module for the AES task, with inputs at various granularities (sentence, paragraph, whole essay, etc.), and then use regression as the training target for the downstream task to further optimize the representation. In this paper, we fine-tune the pre-trained encoder as a feature extraction module and convert the essay scoring task into regression, as in previous studies [4, 5, 6, 7].
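The regression formulation mentioned at the end of the paragraph can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: the fixed feature vectors stand in for the pooled output of a pre-trained encoder (which is not fine-tuned here), the sigmoid output layer with a mean squared error loss is an assumed choice, and every function name and hyperparameter is hypothetical.

```python
import math


def scale_score(score, lo, hi):
    """Map a raw score in [lo, hi] to a regression target in [0, 1]."""
    return (score - lo) / (hi - lo)


def unscale_score(y, lo, hi):
    """Map a prediction in [0, 1] back to the nearest integer score in [lo, hi]."""
    return int(round(y * (hi - lo) + lo))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w, b):
    """Linear layer followed by a sigmoid, applied to one feature vector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_regression_head(features, scores, lo, hi, lr=0.5, epochs=2000):
    """Fit the head with full-batch gradient descent on mean squared error.

    `features` are assumed to be essay representations from an encoder.
    """
    dim = len(features[0])
    w, b = [0.0] * dim, 0.0
    targets = [scale_score(s, lo, hi) for s in scores]
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, t in zip(features, targets):
            y = forward(x, w, b)
            # Gradient of (y - t)^2 / n with respect to the pre-sigmoid activation.
            g = 2.0 * (y - t) * y * (1.0 - y) / n
            for k in range(dim):
                grad_w[k] += g * x[k]
            grad_b += g
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_score(x, w, b, lo, hi):
    return unscale_score(forward(x, w, b), lo, hi)


if __name__ == "__main__":
    # Toy one-dimensional "essay features" with scores on a 1-6 scale.
    feats = [[0.0], [0.5], [1.0]]
    gold = [1, 3, 6]
    w, b = train_regression_head(feats, gold, lo=1, hi=6)
    print([predict_score(x, w, b, 1, 6) for x in feats])
```

Scaling the targets to [0, 1] lets a single bounded output unit cover any score range, and predictions are mapped back to the original scale before computing agreement metrics. In a fine-tuning setup the encoder parameters would be updated together with this head rather than held fixed.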