Credit: Alejandro Giraldo

Ideas Made to Matter

How to use algorithms to solve everyday problems

Kara Baskin

May 8, 2017

How can I navigate the grocery store quickly? Why doesn’t anyone like my Facebook status? How can I alphabetize my bookshelves in a hurry? Apple data visualizer and MIT System Design and Management graduate Ali Almossawi solves these common dilemmas and more in his new book, “Bad Choices: How Algorithms Can Help You Think Smarter and Live Happier,” a quirky, illustrated guide to algorithmic thinking.

For the uninitiated: What is an algorithm? And how can algorithms help us to think smarter?

An algorithm is a process with unambiguous steps that has a beginning and an end, and does something useful.

Algorithmic thinking is taking a step back and asking, “If it’s the case that algorithms are so useful in computing to achieve predictability, might they also be useful in everyday life, when it comes to, say, deciding between alternative ways of solving a problem or completing a task?” In all cases, we optimize for efficiency: We care about time or space.

Note the mention of “deciding between.” Computer scientists do that all the time, and I was convinced that the tools they use to evaluate competing algorithms would be of interest to a broad audience.

Why did you write this book, and who can benefit from it?

All the books I came across that tried to introduce computer science involved coding. My approach to making algorithms compelling was focusing on comparisons. I take algorithms and put them in a scene from everyday life, such as matching socks from a pile, putting books on a shelf, remembering things, driving from one point to another, or cutting an onion. These activities can be mapped to one or more fundamental algorithms, which form the basis for the field of computing and have far-reaching applications and uses.

I wrote the book with two audiences in mind. One, anyone, be it a learner or an educator, who is interested in computer science and wants an engaging and lighthearted, but not a dumbed-down, introduction to the field. Two, anyone who is already familiar with the field and wants to experience a way of explaining some of the fundamental concepts in computer science differently than how they’re taught.

I’m going to the grocery store and only have 15 minutes. What do I do?

Do you know what the grocery store looks like ahead of time? If you know what it looks like, it determines your list. How do you prioritize things on your list? Order the items in a way that allows you to avoid walking down the same aisles twice.

For me, the intriguing thing is that the grocery store is a scene from everyday life that I can use as a launch pad to talk about various related topics, like priority queues and graphs and hashing. For instance, what is the most efficient way for a machine to store a prioritized list, and what happens when the equivalent of you scratching an item from a list happens in the machine’s list? How is a store analogous to a graph (an abstraction in computer science and mathematics that defines how things are connected), and how is navigating the aisles in a store analogous to traversing a graph?
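
As a rough sketch of the priority-queue idea mentioned above, Python's standard `heapq` module can keep a shopping list ordered so that the lowest aisle number always comes out first. The item names, the aisle numbers, and the helper `shopping_order` are hypothetical, introduced only for illustration; they are not taken from the book.

```python
import heapq


def shopping_order(items):
    """Return item names ordered by aisle number, lowest aisle first.

    `items` is a list of (aisle, name) pairs; the data is made up for
    this example.
    """
    heap = list(items)
    heapq.heapify(heap)  # arrange the list as a binary min-heap
    order = []
    while heap:
        # heappop always removes the pair with the smallest aisle number,
        # the machine's version of scratching the next item off the list.
        aisle, name = heapq.heappop(heap)
        order.append(name)
    return order


groceries = [(7, "milk"), (2, "apples"), (5, "rice"), (2, "bananas")]
print(shopping_order(groceries))
```

Visiting the items in this order means each aisle is walked once, which is the point of the advice about not doubling back.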

Nobody follows me on Instagram. How do I get more followers?

The concept of links and networks, which I cover in Chapter 6, is relevant here. It’s much easier to get to people whom you might be interested in and who might be interested in you if you can start within the ball of links that connects those people, rather than starting at a random spot.

You mention Instagram: There, the hashtag is one way to enter that ball of links. Tag your photos, engage with users who tag their photos with the same hashtags, and you should be on your way to stardom.

What are the secret ingredients of a successful Facebook post?

I’ve posted things on social media that have died a sad death and then posted the same thing at a later date that somehow did great. Again, if we think of it in terms that are relevant to algorithms, we’d say that the challenge with making something go viral is really getting that first spark. And to get that first spark, a person who is connected to the largest number of people likely to engage with that post needs to share it.

With [my first book], “Bad Arguments,” I spent a month pouring close to $5,000 into advertising for that project with moderate results. And then one science journalist with a large audience wrote about it, and the project took off and hasn’t stopped since.

What problems do you wish you could solve via algorithm but can’t?

When we care about efficiency, thinking in terms of algorithms is useful. There are cases when that’s not the quality we want to optimize for — for instance, learning or love. I walk for several miles every day, all throughout the city, as I find it relaxing. I’ve never asked myself, “What’s the most efficient way I can traverse the streets of San Francisco?” It’s not relevant to my objective.

Algorithms are a great way of thinking about efficiency, but the question has to be, “What approach can you optimize for that objective?” That’s what worries me about self-help: Books give you a silver bullet for doing everything “right” but leave out all the nuances that make us different. What works for you might not work for me.

Which companies use algorithms well?

When you read that the overwhelming majority of the shows that users of, say, Netflix, watch are due to Netflix’s recommendation engine, you know they’re doing something right.


DEV Community

Posted on Nov 8, 2023 • Updated on Nov 9, 2023

Introduction to Algorithms: What Every Beginner Should Know

Algorithms are the beating heart of computer science and programming. They are the step-by-step instructions that computers follow to solve problems and perform tasks. Whether you're a beginner or an aspiring programmer, understanding the fundamentals of algorithms is essential. In this blog post, we will introduce you to the world of algorithms, what they are, why they matter, and the key concepts every beginner should know.

What is an Algorithm?

At its core, an algorithm is a finite set of well-defined instructions for solving a specific problem or task. These instructions are executed in a predetermined sequence, often designed to transform input data into a desired output.

Think of algorithms as recipes: just as a recipe tells you how to prepare a meal, an algorithm tells a computer how to perform a particular task. But instead of cooking a delicious dish, you're instructing a computer to perform a specific computation or solve a problem.

Why Do Algorithms Matter?

Algorithms play a pivotal role in the world of computing for several reasons:

Efficiency: Well-designed algorithms can execute tasks quickly and use minimal resources, which is crucial in applications like search engines and real-time systems.

Problem Solving: Algorithms provide structured approaches to problem-solving and help us tackle complex issues more effectively.

Reusability: Once developed, algorithms can be used in various applications, promoting code reuse and reducing redundancy.

Scalability: Algorithms are essential for handling large datasets and growing computational needs in modern software.

Key Concepts in Algorithm Design

1. Input and Output

Input: Algorithms take input data as their starting point. This could be a list of numbers, a text document, or any other data structure.

Output: Algorithms produce a result or output based on the input. This could be a sorted list, a specific calculation, or a solution to a problem.

2. Correctness

An algorithm is considered correct if it produces the desired output for all valid inputs. Ensuring correctness is a fundamental aspect of algorithm design and testing.

3. Efficiency

Efficiency is a critical consideration in algorithm design. It means finding a way to solve a problem that keeps the consumption of time and resources as low as practical.

4. Scalability

Algorithms should be designed to handle input data of varying sizes efficiently. Scalable algorithms can adapt to growing data requirements without a significant decrease in performance.

5. Time Complexity and Space Complexity

Time complexity refers to the amount of time an algorithm takes to complete based on the size of the input. Space complexity concerns the amount of memory an algorithm requires. Understanding these complexities helps assess an algorithm's efficiency.
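
To make the idea of time complexity a little more concrete, here is a minimal sketch that counts how many steps two search strategies take on the same sorted list. The function names `linear_search_steps` and `binary_search_steps` are assumptions made for this illustration, not part of any library.

```python
def linear_search_steps(sorted_items, target):
    """Count the comparisons made by a left-to-right scan."""
    steps = 0
    for value in sorted_items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Count the loop iterations made by binary search on a sorted list."""
    low, high = 0, len(sorted_items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return steps


data = list(range(1_000_000))
print(linear_search_steps(data, 999_999))  # grows in proportion to n
print(binary_search_steps(data, 999_999))  # grows in proportion to log2(n)
```

Both functions use only a few extra variables, so their space complexity is constant; the difference lies entirely in how the step count grows with the input size.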

Examples of Common Algorithms

Sorting Algorithms: Algorithms like "Bubble Sort," "Quick Sort," and "Merge Sort" rearrange a list of items into a specific order.

Search Algorithms: Algorithms like "Binary Search" efficiently find a specific item in a sorted list.

Graph Algorithms: Algorithms like "Breadth-First Search" and "Dijkstra's Algorithm" navigate through networks and find optimal routes.

Dynamic Programming: Algorithms like the "Fibonacci Sequence" solver use a technique called dynamic programming to optimize problem-solving.
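
As one illustration of the dynamic programming entry above, the sketch below computes Fibonacci numbers twice: once with plain recursion and once with cached results. Using `functools.lru_cache` for the caching is a choice made for this example; a hand-written table would work equally well.

```python
from functools import lru_cache


def fib_naive(n):
    """Plain recursion: recomputes the same subproblems many times."""
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Same recurrence, but each result is cached after it is first computed."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


print(fib_naive(20))  # already noticeably more work than fib_memo(20)
print(fib_memo(90))   # finishes quickly because each value is computed once
```

The naive version takes exponentially many calls as `n` grows, while the cached version computes each value from 0 to `n` only once.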

Algorithms are the building blocks of computer science and programming. While they might sound complex, they are at the heart of everything from internet search engines to your favorite social media platforms. As a beginner, understanding the basics of algorithms and their importance is a significant step in your coding journey.

As you dive into the world of algorithms, you'll discover that they can be both fascinating and challenging. Don't be discouraged by complex problems; instead, view them as opportunities to develop your problem-solving skills. Whether you're aiming to become a software developer, data scientist, or just a more proficient programmer, a solid grasp of algorithms is an invaluable asset. Happy coding! 🚀💡

Also, I have to tell you, this article was partially generated with the help of ChatGPT!!!


Hi there. This post reads a lot like it was generated or strongly assisted by AI. If so, please consider amending it to comply with the DEV.to guidelines concerning such content...

From "The DEV Community Guidelines for AI-Assisted and -Generated Articles":

AI-assisted and -generated articles should…

- Be created and published in good faith, meaning with honest, sincere, and harmless intentions.

- Disclose the fact that they were generated or assisted by AI in the post, either upfront using the tag #ABotWroteThis or at any point in the article’s copy (including right at the end). For example, a conclusion that states “Surprise, this article was generated by ChatGPT!” or the disclaimer “This article was created with the help of AI” would be appropriate.

- Ideally add something to the conversation regarding AI and its capabilities. Tell us your story of using the tool to create content, and why!


1: Algorithmic Problem Solving


- Harrison Njoroge

- African Virtual University

Unit Objectives

Upon completion of this unit the learner should be able to:

- describe an algorithm

- explain the relationship between data and algorithm

- outline the characteristics of algorithms

- apply pseudo codes and flowcharts to represent algorithms

Unit Introduction

This unit introduces learners to the data structures and algorithms course. It covers different data structures and the algorithms that implement them on a computer. Their application is presented through example algorithms that are not confined to a particular programming language.

- Data structure: the structural representation of logical relationships between elements of data

- Algorithm: a finite sequence of steps for accomplishing some computational task

- Pseudo code: an informal high-level description of the operating principle of a computer program or other algorithm

- Flow chart: a diagrammatic representation that illustrates a solution model for a given problem

Learning Activities

- 1.1: Activity 1 - Introduction to Algorithms and Problem Solving: In this learning activity, the learner is introduced to algorithms and to writing algorithms that solve familiar, everyday problems. The learner will learn what an algorithm is and how to develop a solution for a given task, and will see examples of algorithms applied to everyday problems.

- 1.2: Activity 2 - The characteristics of an algorithm: This section introduces the characteristics that any algorithm must have in order to qualify as one, and what an algorithm is expected to achieve. Key expectations include that an algorithm must be exact, must terminate, and must be effective and general.

- 1.3: Activity 3 - Using pseudo-codes and flowcharts to represent algorithms: The student will learn how to design an algorithm using either pseudo code or a flowchart. Pseudo code is a mixture of English-like statements, some mathematical notation, and selected keywords from a programming language. It is one of the tools used to design and develop the solution to a task or problem. The same thing can be written in pseudo code in different ways; the emphasis is on clarity, not style.

- 1.4: Unit Summary: In this unit, you have seen what an algorithm is. Based on this knowledge, you should now be able to characterize an algorithm by stating its properties. We have explored different ways of representing an algorithm, such as human language, pseudo code, and flowcharts. You should now be able to present solutions to problems in the form of an algorithm.


Unit 1: Algorithms

About this unit.

We've partnered with Dartmouth College professors Tom Cormen and Devin Balkcom to teach introductory computer science algorithms, including searching, sorting, recursion, and graph theory. Learn with a combination of articles, visualizations, quizzes, and coding challenges.

Intro to algorithms

- What is an algorithm and why should you care?
- A guessing game
- Route-finding
- Discuss: Algorithms in your life

Binary search

- Binary search
- Implementing binary search of an array
- Challenge: Binary search
- Running time of binary search
- Practice: Running time of binary search (5 questions)

Asymptotic notation

- Asymptotic notation
- Big-Θ (Big-Theta) notation
- Functions in asymptotic notation
- Big-O notation
- Big-Ω (Big-Omega) notation
- Practice: Comparing function growth (4 questions)
- Practice: Asymptotic notation (5 questions)

Selection sort

- Sorting
- Challenge: implement swap
- Selection sort pseudocode
- Challenge: Find minimum in subarray
- Challenge: implement selection sort
- Analysis of selection sort
- Project: Selection sort visualizer

Insertion sort

- Insertion sort
- Challenge: implement insert
- Insertion sort pseudocode
- Challenge: Implement insertion sort
- Analysis of insertion sort

Recursive algorithms

- Recursion
- The factorial function
- Challenge: Iterative factorial
- Recursive factorial
- Challenge: Recursive factorial
- Properties of recursive algorithms
- Using recursion to determine whether a word is a palindrome
- Challenge: is a string a palindrome?
- Computing powers of a number
- Challenge: Recursive powers
- Multiple recursion with the Sierpinski gasket
- Improving efficiency of recursive functions
- Project: Recursive art

Towers of Hanoi

- Towers of Hanoi
- Towers of Hanoi, continued
- Challenge: Solve Hanoi recursively
- Practice: Move three disks in Towers of Hanoi (3 questions)

Merge sort

- Divide and conquer algorithms
- Overview of merge sort
- Challenge: Implement merge sort
- Linear-time merging
- Challenge: Implement merge
- Analysis of merge sort

Quick sort

- Overview of quicksort
- Challenge: Implement quicksort
- Linear-time partitioning
- Challenge: Implement partition
- Analysis of quicksort

Graph representation

- Describing graphs
- Representing graphs
- Challenge: Store a graph
- Practice: Describing graphs (6 questions)
- Practice: Representing graphs (5 questions)

Breadth-first search

- Breadth-first search and its uses
- The breadth-first search algorithm
- Challenge: Implement breadth-first search
- Analysis of breadth-first search

Further learning

- Where to go from here


How to Use Algorithms to Solve Problems?


An algorithm is a process or set of rules that must be followed to complete a particular task; in other words, a step-by-step procedure. Every task follows some algorithm, from making a cup of tea to building highly scalable software. An algorithm divides a task into several parts, and writing one out before starting makes the task easier to complete.

Algorithms are used:

- To develop a framework for instructing computers.

- To introduce notation for the basic functions that perform basic tasks.

- To define and describe a big problem in small parts, so that it is easy to execute.

Characteristics of Algorithm

- An algorithm should be defined clearly.

- An algorithm should produce at least one output.

- An algorithm should have zero or more inputs.

- An algorithm should be executed and finished in finite number of steps.

- An algorithm should be basic and easy to perform.

- Each step starts with a specific label, such as “Step-1”.

- There must be “Start” as the first step and “End” as the last step of the algorithm.

Let’s take an example to make a cup of tea,

Step 1: Start

Step 2: Take some water in a bowl.

Step 3: Put the water on a gas burner.

Step 4: Turn on the gas burner.

Step 5: Wait until the water boils.

Step 6: Add some tea leaves to the water according to the requirement.

Step 7: Wait again until the water takes on the color of tea.

Step 8: Add some sugar according to taste.

Step 9: Wait again until the sugar has dissolved.

Step 10: Turn off the gas burner and serve the tea in cups with biscuits.

Step 11: End

That is an algorithm for making a cup of tea. Computer science problems are approached in the same way.

There are some basic steps in making an algorithm:

- Start – Start the algorithm.

- Input – Take the input values on which the algorithm will operate.

- Conditions – Apply conditions and operations to the inputs to get the desired output.

- Output – Print the outputs.

- End – End the execution.

Let’s take some examples of algorithms for computer science problems.

Example 1. Swap two numbers with a third variable

Step 1: Start

Step 2: Take 2 numbers as input.

Step 3: Declare another variable as “temp”.

Step 4: Store the first variable in “temp”.

Step 5: Store the second variable in the first variable.

Step 6: Store the “temp” variable in the second variable.

Step 7: Print the first and second variables.

Step 8: End
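
The swap steps above could be written in Python roughly as follows. The function name `swap_with_temp` is an assumption made for this sketch, and returning the pair stands in for the "print" step so that the result can be checked.

```python
def swap_with_temp(first, second):
    """Swap two values using a third variable, following the steps above."""
    temp = first      # keep a copy of the first value
    first = second    # overwrite the first variable with the second
    second = temp     # restore the saved value into the second variable
    return first, second


print(swap_with_temp(3, 8))  # (8, 3)
```

Python also allows `first, second = second, first`, which hides the temporary variable; the explicit version is shown here because it mirrors the algorithm step by step.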

Example 2. Find the area of a rectangle

Step 1: Start

Step 2: Take the Height and Width of the rectangle as input.

Step 3: Declare a variable named “area”.

Step 4: Multiply Height and Width.

Step 5: Store the product in “area” (that is, area = Height x Width).

Step 6: Print “area”.

Step 7: End
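
A minimal Python version of the rectangle example might look like this. The function name `rectangle_area` is hypothetical, and the inputs are passed as arguments rather than read from the keyboard to keep the sketch short.

```python
def rectangle_area(height, width):
    """Return the area of a rectangle, following the steps above."""
    area = height * width  # multiply and store the result in "area"
    return area


print(rectangle_area(4, 6))  # 24
```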

Example 3. Find the greatest between 3 numbers.

Step 1: Start

Step 2: Take 3 numbers as input, say A, B, and C.

Step 3: Check if (A >= B and A >= C).

Step 4: Then A is the greatest.

Step 5: Print A.

Step 6: Else

Step 7: Check if (B >= A and B >= C).

Step 8: Then B is the greatest.

Step 9: Print B.

Step 10: Else C is the greatest.

Step 11: Print C.

Step 12: End
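
The branching in this example can be sketched in Python as below. The function name `greatest_of_three` is an assumption. The sketch compares with `>=` rather than a strict `>` so that ties are handled: with strict comparisons, an input such as 5, 5, 1 would fall through both checks and report 1.

```python
def greatest_of_three(a, b, c):
    """Return the greatest of three numbers using explicit comparisons."""
    if a >= b and a >= c:
        return a
    elif b >= a and b >= c:
        return b
    else:
        # Neither a nor b is at least as large as both others, so c is.
        return c


print(greatest_of_three(4, 9, 2))  # 9
print(greatest_of_three(5, 5, 1))  # 5
```

In everyday Python the built-in `max(a, b, c)` does the same job; the explicit version is only meant to mirror the step-by-step algorithm.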

Advantages of Algorithm

- An algorithm uses a definite procedure.

- It is easy to understand because it is a step-by-step definition.

- The algorithm is easy to debug if an error occurs.

- It is not dependent on any programming language

- It is easier for a programmer to convert it into an actual program because the algorithm divides a problem into smaller parts.

Disadvantages of Algorithms

- Developing an algorithm can be time-consuming, and each algorithm has its own time complexity.

- Large tasks are difficult to express as algorithms because the time complexity may be high, so programmers have to find an efficient way to solve the task.

- Looping and branching are difficult to express in step-by-step algorithm descriptions.


Problem Solving and Algorithms

Learn a basic process for developing a solution to a problem. Nothing in this chapter is unique to using a computer to solve a problem. This process can be used to solve a wide variety of problems, including ones that have nothing to do with computers.

Problems, Solutions, and Tools

I have a problem! I need to thank Aunt Kay for the birthday present she sent me. I could send a thank you note through the mail. I could call her on the telephone. I could send her an email message. I could drive to her house and thank her in person. In fact, there are many ways I could thank her, but that's not the point. The point is that I must decide how I want to solve the problem, and use the appropriate tool to implement (carry out) my plan. The postal service, the telephone, the internet, and my automobile are tools that I can use, but none of these actually solves my problem. In a similar way, a computer does not solve problems, it's just a tool that I can use to implement my plan for solving the problem.

Knowing that Aunt Kay appreciates creative and unusual things, I have decided to hire a singing messenger to deliver my thanks. In this context, the messenger is a tool, but one that needs instructions from me. I have to tell the messenger where Aunt Kay lives, what time I would like the message to be delivered, and what lyrics I want sung. A computer program is similar to my instructions to the messenger.

The story of Aunt Kay uses a familiar context to set the stage for a useful point of view concerning computers and computer programs. The following list summarizes the key aspects of this point of view.

A computer is a tool that can be used to implement a plan for solving a problem.

A computer program is a set of instructions for a computer. These instructions describe the steps that the computer must follow to implement a plan.

An algorithm is a plan for solving a problem.

A person must design an algorithm.

A person must translate an algorithm into a computer program.

This point of view sets the stage for a process that we will use to develop solutions to Jeroo problems. The basic process is important because it can be used to solve a wide variety of problems, including ones where the solution will be written in some other programming language.

An Algorithm Development Process

Every problem solution starts with a plan. That plan is called an algorithm.

There are many ways to write an algorithm. Some are very informal, some are quite formal and mathematical in nature, and some are quite graphical. The instructions for connecting a DVD player to a television are an algorithm. A mathematical formula such as πR² is a special case of an algorithm. The form is not particularly important as long as it provides a good way to describe and check the logic of the plan.
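
To illustrate how a formula can be read as an algorithm, the sketch below turns πR² into a short Python function. The name `circle_area` is an assumption for this example; Jeroo itself is not involved.

```python
import math


def circle_area(radius):
    """Compute the area of a circle from its radius using the formula pi * R^2."""
    return math.pi * radius ** 2


print(circle_area(2.0))
```

The formula already specifies the steps (square the radius, then multiply by π), so translating it into code adds no new logic.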

The development of an algorithm (a plan) is a key step in solving a problem. Once we have an algorithm, we can translate it into a computer program in some programming language. Our algorithm development process consists of five major steps.

Step 1: Obtain a description of the problem.

Step 2: Analyze the problem.

Step 3: Develop a high-level algorithm.

Step 4: Refine the algorithm by adding more detail.

Step 5: Review the algorithm.

Step 1: Obtain a description of the problem.

This step is much more difficult than it appears. In the following discussion, the word client refers to someone who wants to find a solution to a problem, and the word developer refers to someone who finds a way to solve the problem. The developer must create an algorithm that will solve the client's problem.

The client is responsible for creating a description of the problem, but this is often the weakest part of the process. It's quite common for a problem description to suffer from one or more of the following types of defects: (1) the description relies on unstated assumptions, (2) the description is ambiguous, (3) the description is incomplete, or (4) the description has internal contradictions. These defects are seldom due to carelessness by the client. Instead, they are due to the fact that natural languages (English, French, Korean, etc.) are rather imprecise. Part of the developer's responsibility is to identify defects in the description of a problem, and to work with the client to remedy those defects.

Step 2: Analyze the problem.

The purpose of this step is to determine both the starting and ending points for solving the problem. This process is analogous to a mathematician determining what is given and what must be proven. A good problem description makes it easier to perform this step.

When determining the starting point, we should start by seeking answers to the following questions:

What data are available?

Where is that data?

What formulas pertain to the problem?

What rules exist for working with the data?

What relationships exist among the data values?

When determining the ending point, we need to describe the characteristics of a solution. In other words, how will we know when we're done? Asking the following questions often helps to determine the ending point.

What new facts will we have?

What items will have changed?

What changes will have been made to those items?

What things will no longer exist?

Step 3: Develop a high-level algorithm.

An algorithm is a plan for solving a problem, but plans come in several levels of detail. It's usually better to start with a high-level algorithm that includes the major part of a solution, but leaves the details until later. We can use an everyday example to demonstrate a high-level algorithm.

Problem: I need to send a birthday card to my brother, Mark.

Analysis: I don't have a card. I prefer to buy a card rather than make one myself.

High-level algorithm:

Go to a store that sells greeting cards

Select a card

Purchase a card

Mail the card

This algorithm is satisfactory for daily use, but it lacks details that would have to be added were a computer to carry out the solution. These details include answers to questions such as the following.

"Which store will I visit?"

"How will I get there: walk, drive, ride my bicycle, take the bus?"

"What kind of card does Mark like: humorous, sentimental, risqué?"

These kinds of details are considered in the next step of our process.

A high-level algorithm shows the major steps that need to be followed to solve a problem. Now we need to add details to these steps, but how much detail should we add? Unfortunately, the answer to this question depends on the situation. We have to consider who (or what) is going to implement the algorithm and how much that person (or thing) already knows how to do. If someone is going to purchase Mark's birthday card on my behalf, my instructions have to be adapted to whether or not that person is familiar with the stores in the community and how well the purchaser knows my brother's taste in greeting cards.

When our goal is to develop algorithms that will lead to computer programs, we need to consider the capabilities of the computer and provide enough detail so that someone else could use our algorithm to write a computer program that follows the steps in our algorithm. As with the birthday card problem, we need to adjust the level of detail to match the ability of the programmer. When in doubt, or when you are learning, it is better to have too much detail than to have too little.

Most of our examples will move from a high-level to a detailed algorithm in a single step, but this is not always reasonable. For larger, more complex problems, it is common to go through this process several times, developing intermediate-level algorithms as we go. Each time, we add more detail to the previous algorithm, stopping when we see no benefit to further refinement. This technique of gradually working from a high-level to a detailed algorithm is often called stepwise refinement.

The final step is to review the algorithm. What are we looking for? First, we need to work through the algorithm step by step to determine whether or not it will solve the original problem. Once we are satisfied that the algorithm does provide a solution to the problem, we start to look for other things. The following questions are typical of ones that should be asked whenever we review an algorithm. Asking these questions and seeking their answers is a good way to develop skills that can be applied to the next problem.

Does this algorithm solve a very specific problem or does it solve a more general problem? If it solves a very specific problem, should it be generalized?

For example, an algorithm that computes the area of a circle having radius 5.2 meters (formula π*5.2²) solves a very specific problem, but an algorithm that computes the area of any circle (formula π*R²) solves a more general problem.

Can this algorithm be simplified?
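The difference between the specific and the general version can be sketched in Python. The function names here are illustrative assumptions, not part of the original text.

```python
import math

def area_of_circle_radius_5_2():
    """Specific: only answers the question for a radius of 5.2 meters."""
    return math.pi * 5.2 ** 2

def circle_area(radius):
    """General: answers the question for any non-negative radius."""
    if radius < 0:
        raise ValueError("radius must be non-negative")
    return math.pi * radius ** 2

# The general algorithm reproduces the specific one as a special case.
print(circle_area(5.2) == area_of_circle_radius_5_2())
```

The general version costs one extra parameter and covers every case the specific version does.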

One formula for computing the perimeter of a rectangle is:

length + width + length + width

A simpler formula would be:

2.0 * ( length + width )
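A quick way to gain confidence that a simplification preserves behavior is to compare both versions on a few inputs. This is a minimal sketch with hypothetical function names; it uses whole-number sides so that the comparison is exact (for fractional values, a tolerance-based comparison such as `math.isclose` would be the safer choice).

```python
def perimeter_long(length, width):
    # Original formula: add the four sides one by one.
    return length + width + length + width

def perimeter_simple(length, width):
    # Simplified formula: two additions collapse into one multiplication.
    return 2.0 * (length + width)

for length, width in [(3, 4), (10, 2), (7, 7)]:
    assert perimeter_long(length, width) == perimeter_simple(length, width)
```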

Is this solution similar to the solution to another problem? How are they alike? How are they different?

For example, consider the following two formulae:

Rectangle area = length * width

Triangle area = 0.5 * base * height

Similarities: Each computes an area. Each multiplies two measurements.

Differences: Different measurements are used. The triangle formula contains 0.5.

Hypothesis: Perhaps every area formula involves multiplying two measurements.

Example 4.1: Pick and Plant

This section contains an extended example that demonstrates the algorithm development process. To complete the algorithm, we need to know that every Jeroo can hop forward, turn left and right, pick a flower from its current location, and plant a flower at its current location.

Problem Statement (Step 1)

A Jeroo starts at (0, 0) facing East with no flowers in its pouch. There is a flower at location (3, 0). Write a program that directs the Jeroo to pick the flower and plant it at location (3, 2). After planting the flower, the Jeroo should hop one space East and stop. There are no other nets, flowers, or Jeroos on the island.

Analysis of the Problem (Step 2)

The flower is exactly three spaces ahead of the Jeroo.

The flower is to be planted exactly two spaces South of its current location.

The Jeroo is to finish facing East one space East of the planted flower.

There are no nets to worry about.

High-level Algorithm (Step 3)

Let's name the Jeroo Bobby. Bobby should do the following:

Get the flower

Put the flower

Hop East

Detailed Algorithm (Step 4)

Get the flower

- Hop 3 times

- Pick the flower

Put the flower

- Turn right

- Hop 2 times

- Plant a flower

Hop East

- Turn left

- Hop once
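Before writing the real program, the detailed algorithm can be traced with a small simulation. The sketch below is written in Python and uses a hypothetical `Jeroo` class invented for this illustration; it is not the Jeroo or Greenfoot API. It assumes coordinates are (x, y), with x growing to the East and y growing to the South, which matches the locations given in the problem statement.

```python
# Hypothetical stand-in for a Jeroo; this is not the Jeroo/Greenfoot API.
# Coordinates are (x, y): x grows to the East, y grows to the South.
HEADINGS = ["N", "E", "S", "W"]  # clockwise order
STEP = {"N": (0, -1), "E": (1, 0), "S": (0, 1), "W": (-1, 0)}

class Jeroo:
    def __init__(self, x, y, heading, flowers, island_flowers):
        self.x, self.y, self.heading = x, y, heading
        self.flowers = flowers
        self.island_flowers = island_flowers  # set of (x, y) cells holding a flower

    def hop(self, times=1):
        dx, dy = STEP[self.heading]
        self.x += dx * times
        self.y += dy * times

    def turn_right(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) + 1) % 4]

    def turn_left(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) - 1) % 4]

    def pick(self):
        if (self.x, self.y) in self.island_flowers:
            self.island_flowers.remove((self.x, self.y))
            self.flowers += 1

    def plant(self):
        if self.flowers > 0:
            self.flowers -= 1
            self.island_flowers.add((self.x, self.y))

island_flowers = {(3, 0)}
bobby = Jeroo(0, 0, "E", 0, island_flowers)

# Get the flower
bobby.hop(3)
bobby.pick()

# Put the flower
bobby.turn_right()
bobby.hop(2)
bobby.plant()

# Hop East
bobby.turn_left()
bobby.hop()

print(bobby.x, bobby.y, bobby.heading, island_flowers)  # 4 2 E {(3, 2)}
```

The final state agrees with the analysis: the flower sits at (3, 2) and the Jeroo finishes one space East of it, facing East.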

Review the Algorithm (Step 5)

The high-level algorithm partitioned the problem into three rather easy subproblems. This seems like a good technique.

This algorithm solves a very specific problem because the Jeroo and the flower are in very specific locations.

This algorithm is actually a solution to a slightly more general problem in which the Jeroo starts anywhere, and the flower is 3 spaces directly ahead of the Jeroo.

Java Code for "Pick and Plant"

A good programmer doesn't write a program all at once. Instead, the programmer will write and test the program in a series of builds. Each build adds to the previous one. The high-level algorithm will guide us in this process.

FIRST BUILD

To see this solution in action, create a new Greenfoot4Sofia scenario and use the Edit Palettes Jeroo menu command to make the Jeroo classes visible. Right-click on the Island class and create a new subclass with the name of your choice. This subclass will hold your new code.

The recommended first build contains three things:

The main method (here myProgram() in your island subclass).

Declaration and instantiation of every Jeroo that will be used.

The high-level algorithm in the form of comments.

The instantiation at the beginning of myProgram() places bobby at (0, 0), facing East, with no flowers.

Once the first build is working correctly, we can proceed to the others. In this case, each build will correspond to one step in the high-level algorithm. It may seem like a lot of work to use four builds for such a simple program, but doing so helps establish habits that will become invaluable as the programs become more complex.

SECOND BUILD

This build adds the logic to "get the flower", which in the detailed algorithm (step 4 above) consists of hopping 3 times and then picking the flower. The new code is indicated by comments that wouldn't appear in the original (they are just here to call attention to the additions). The blank lines help show the organization of the logic.

By taking a moment to run the work so far, you can confirm whether or not this step in the planned algorithm works as expected.

THIRD BUILD

This build adds the logic to "put the flower". New code is indicated by the comments that are provided here to mark the additions.

FOURTH BUILD (final)

Example 4.2: Replace Net with Flower

This section contains a second example that demonstrates the algorithm development process.

There are two Jeroos. One Jeroo starts at (0, 0) facing North with one flower in its pouch. The second starts at (0, 2) facing East with one flower in its pouch. There is a net at location (3, 2). Write a program that directs the first Jeroo to give its flower to the second one. After receiving the flower, the second Jeroo must disable the net, and plant a flower in its place. After planting the flower, the Jeroo must turn and face South. There are no other nets, flowers, or Jeroos on the island.

Jeroo_2 is exactly two spaces behind Jeroo_1.

The only net is exactly three spaces ahead of Jeroo_2.

Each Jeroo has exactly one flower.

Jeroo_2 will have two flowers after receiving one from Jeroo_1. One flower must be used to disable the net. The other flower must be planted at the location of the net, i.e. (3, 2).

Jeroo_1 will finish at (0, 1) facing South.

Jeroo_2 is to finish at (3, 2) facing South.

Each Jeroo will finish with 0 flowers in its pouch. One flower was used to disable the net, and the other was planted.

Let's name the first Jeroo Ann and the second one Andy.

Ann should do the following:

Find Andy (but don't collide with him)

Give a flower to Andy (he will be straight ahead)

After receiving the flower, Andy should do the following:

Find the net (but don't hop onto it)

Disable the net

Plant a flower at the location of the net

Face South

Ann should do the following:

Find Andy

- Turn around (either left or right twice)

- Hop (to location (0, 1))

Give a flower to Andy

- Give ahead

Now Andy should do the following:

Find the net

- Hop twice (to location (2, 2))

Disable the net

- Toss

Plant a flower at the location of the net

- Hop (to location (3, 2))

- Plant a flower

Face South

- Turn right
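The detailed algorithm for the two Jeroos can be checked against the analysis with a short simulation. This is a Python sketch built on hypothetical `Island` and `Jeroo` classes written only for this illustration; it is not the Jeroo or Greenfoot API, and it assumes (x, y) coordinates with y growing to the South.

```python
# Hypothetical stand-in for a Jeroo; not the Jeroo/Greenfoot API.
# Coordinates are (x, y): x grows to the East, y grows to the South.
HEADINGS = ["N", "E", "S", "W"]  # clockwise order
STEP = {"N": (0, -1), "E": (1, 0), "S": (0, 1), "W": (-1, 0)}

class Island:
    def __init__(self, nets):
        self.nets = set(nets)
        self.flowers = set()
        self.jeroos = []

class Jeroo:
    def __init__(self, island, x, y, heading, flowers):
        self.island, self.x, self.y = island, x, y
        self.heading, self.flowers = heading, flowers
        island.jeroos.append(self)

    def ahead(self):
        dx, dy = STEP[self.heading]
        return (self.x + dx, self.y + dy)

    def hop(self, times=1):
        for _ in range(times):
            if self.ahead() in self.island.nets:
                raise RuntimeError("hopped into a net")
            self.x, self.y = self.ahead()

    def turn_right(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) + 1) % 4]

    def give_ahead(self):
        # Hand one flower to the Jeroo standing in the cell directly ahead.
        for other in self.island.jeroos:
            if (other.x, other.y) == self.ahead() and self.flowers > 0:
                self.flowers -= 1
                other.flowers += 1
                return

    def toss(self):
        # Spend one flower to disable a net in the cell directly ahead.
        if self.flowers > 0:
            self.flowers -= 1
            self.island.nets.discard(self.ahead())

    def plant(self):
        if self.flowers > 0:
            self.flowers -= 1
            self.island.flowers.add((self.x, self.y))

island = Island(nets=[(3, 2)])
ann = Jeroo(island, 0, 0, "N", 1)
andy = Jeroo(island, 0, 2, "E", 1)

# Ann: find Andy, then give him a flower
ann.turn_right()
ann.turn_right()
ann.hop()
ann.give_ahead()

# Andy: find the net, disable it, plant a flower, face South
andy.hop(2)
andy.toss()
andy.hop()
andy.plant()
andy.turn_right()

print((ann.x, ann.y, ann.heading), (andy.x, andy.y, andy.heading))
```

In this sketch, `hop` raises an error if a Jeroo would move onto a net, so reordering Andy's steps (for example, hopping three times before tossing) fails loudly instead of silently producing the wrong state.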

The high-level algorithm helps manage the details.

This algorithm solves a very specific problem, but the specific locations are not important. The only thing that is important is the starting location of the Jeroos relative to one another and the location of the net relative to the second Jeroo's location and direction.

Java Code for "Replace Net with Flower"

As before, the code should be written incrementally as a series of builds. Four builds will be suitable for this problem. As usual, the first build will contain the main method, the declaration and instantiation of the Jeroo objects, and the high-level algorithm in the form of comments. The second build will have Ann give her flower to Andy. The third build will have Andy locate and disable the net. In the final build, Andy will place the flower and turn South.

This build creates the main method, instantiates the Jeroos, and outlines the high-level algorithm. In this example, the main method would be myProgram() contained within a subclass of Island .

This build adds the logic for Ann to locate Andy and give him a flower.

This build adds the logic for Andy to locate and disable the net.

This build adds the logic for Andy to place a flower at (3, 2) and turn South.


What is Problem Solving Algorithm?, Steps, Representation

- Post author: Disha Singh

- Post published: 6 June 2021

- Post category: Computer Science


Table of Contents

- 1 What is Problem Solving Algorithm?

- 2 Definition of Problem Solving Algorithm

- 3.1 Analysing the Problem

- 3.2 Developing an Algorithm

- 3.4 Testing and Debugging

- 4.1 Flowchart

- 4.2 Pseudo code

What is Problem Solving Algorithm?

Computers are used for solving various day-to-day problems and thus problem solving is an essential skill that a computer science student should know. It is pertinent to mention that computers themselves cannot solve a problem. Precise step-by-step instructions should be given by us to solve the problem.

Thus, the success of a computer in solving a problem depends on how correctly and precisely we define the problem, design a solution (algorithm) and implement the solution (program) using a programming language.

Thus, problem solving is the process of identifying a problem, developing an algorithm for the identified problem and finally implementing the algorithm to develop a computer program.

Definition of Problem Solving Algorithm

Some simple definitions of a problem solving algorithm are given below:

Steps for Problem Solving

When problems are straightforward and easy, we can easily find the solution. But a complex problem requires a methodical approach to find the right solution. In other words, we have to apply problem solving techniques.

Problem solving begins with the precise identification of the problem and ends with a complete working solution in terms of a program or software. The key steps required for solving a problem using a computer are described below.

For example, suppose that while driving, a vehicle starts making a strange noise. We might not know how to solve the problem right away. First, we need to identify where the noise is coming from. If we cannot solve the problem ourselves, we need to take the vehicle to a mechanic.

The mechanic will analyse the problem to identify the source of the noise, make a plan about the work to be done and finally repair the vehicle in order to remove the noise. From the example, it is clear that finding the solution to a problem might consist of multiple steps.

The steps for problem solving are:

Analysing the Problem

Developing an Algorithm

Coding

Testing and Debugging

It is important to clearly understand a problem before we begin to find the solution for it. If we are not clear as to what is to be solved, we may end up developing a program which may not solve our purpose.

Thus, we need to read and analyse the problem statement carefully in order to list the principal components of the problem and decide the core functionalities that our solution should have. By analysing a problem, we can figure out which inputs our program should accept and which outputs it should produce.

It is essential to devise a solution before writing program code for a given problem. The solution is represented in natural language and is called an algorithm. We can imagine an algorithm as a very well-written recipe for a dish, with clearly defined steps that, if followed, result in the prepared dish.

We start with a tentative solution plan and keep on refining the algorithm until the algorithm is able to capture all the aspects of the desired solution. For a given problem, more than one algorithm is possible and we have to select the most suitable solution.

After finalising the algorithm, we need to convert the algorithm into the format which can be understood by the computer to generate the desired solution. Different high level programming languages can be used for writing a program. It is equally important to record the details of the coding procedures followed and document the solution. This is helpful when revisiting the programs at a later stage.

The program created should be tested on various parameters. The program should meet the requirements of the user. It must respond within the expected time. It should generate correct output for all possible inputs. In the presence of syntactical errors, no output will be obtained. In case the output generated is incorrect, then the program should be checked for logical errors, if any.

The software industry follows standardised testing methods like unit or component testing, integration testing, system testing, and acceptance testing while developing complex applications. This is to ensure that the software meets all the business and technical requirements and works as expected.

The errors or defects found in the testing phases are debugged or rectified and the program is tested again. This continues until all the errors are removed from the program. Even after the software application has been developed, tested and delivered to the user, problems in its functioning can still come up and need to be resolved from time to time.

The maintenance of the solution, thus, involves fixing the problems faced by the user, answering the queries of the user and even serving the request for addition or modification of features.

Representation of Algorithms

Using their algorithmic thinking skills, the software designers or programmers analyse the problem and identify the logical steps that need to be followed to reach a solution. Once the steps are identified, the need is to write down these steps along with the required input and desired output.

There are two common methods of representing an algorithm: flowchart and pseudocode. Either of the methods can be used to represent an algorithm while keeping in mind the following:

- It showcases the logic of the problem solution, excluding any implementational details.

- It clearly reveals the flow of control during execution of the program.

A flowchart is a visual representation of an algorithm. It is a diagram made up of boxes, diamonds and other shapes, connected by arrows. Each shape represents a step of the solution process, and each arrow represents the order or link among the steps.

Put another way, a flowchart is a step-by-step diagrammatic representation of the logic paths used to solve a given problem.

Flowcharts are pictorial representations of the methods to be used to solve a given problem, and they help a great deal in analysing the problem and planning its solution in a systematic and orderly manner. A flowchart, when translated into a proper computer language, results in a complete program.

Advantages of Flowcharts:

- The flowchart shows the logic of a problem in pictorial fashion, which facilitates easier checking of an algorithm.

- The flowchart is a good means of communication to other users. It is also a compact means of recording an algorithm solution to a problem.

- The flowchart allows the problem solver to break the problem into parts. These parts can be connected to make a master chart.

- The flowchart is a permanent record of the solution which can be consulted at a later time.

Differences between Algorithm and Flowchart

Pseudo code

Pseudo code is neither an algorithm nor a program. It is an abstract form of a program, consisting of English-like statements that perform specific operations. It is defined for an algorithm and does not use any graphical representation.

In pseudo code, the program is represented in terms of words and phrases, but the syntax of a programming language is not strictly followed.
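As a hedged illustration (the example is not from the original text), the comments below are pseudo code for finding the largest value in a list, and each comment is followed by one possible Python translation. The function name `largest` and the wording of the pseudo code are assumptions made for this sketch, which also assumes the list is not empty.

```python
def largest(numbers):
    # SET largest TO the first number
    result = numbers[0]
    # FOR each remaining number
    for value in numbers[1:]:
        # IF the number is greater than largest THEN SET largest TO that number
        if value > result:
            result = value
    # OUTPUT largest
    return result

print(largest([12, 7, 31, 5]))  # 31
```

Read on their own, the comments describe the logic without committing to any language's syntax; the code lines show how little is left to decide once the pseudo code is clear.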

Advantages of Pseudocode

- Before writing codes in a high level language, a pseudocode of a program helps in representing the basic functionality of the intended program.

- By writing the code first in a human-readable language, the programmer safeguards against leaving out any important step.

- Actual programs are difficult for non-programmers to read and understand, but pseudocode helps them review the steps and confirm that the proposed implementation is going to achieve the desired output.


Overview of the Problem-Solving Mental Process

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Rachel Goldman, PhD FTOS, is a licensed psychologist, clinical assistant professor, speaker, wellness expert specializing in eating behaviors, stress management, and health behavior change.


- Identify the Problem

- Define the Problem

- Form a Strategy

- Organize Information

- Allocate Resources

- Monitor Progress

- Evaluate the Results

Frequently Asked Questions

Problem-solving is a mental process that involves discovering, analyzing, and solving problems. The ultimate goal of problem-solving is to overcome obstacles and find a solution that best resolves the issue.

The best strategy for solving a problem depends largely on the unique situation. In some cases, people are better off learning everything they can about the issue and then using factual knowledge to come up with a solution. In other instances, creativity and insight are the best options.

It is not necessary to follow problem-solving steps sequentially. It is common to skip steps or even go back through steps multiple times until the desired solution is reached.

In order to correctly solve a problem, it is often important to follow a series of steps. Researchers sometimes refer to this as the problem-solving cycle. While this cycle is portrayed sequentially, people rarely follow a rigid series of steps to find a solution.

The following steps include developing strategies and organizing knowledge.

1. Identifying the Problem

While it may seem like an obvious step, identifying the problem is not always as simple as it sounds. In some cases, people might mistakenly identify the wrong source of a problem, which will make attempts to solve it inefficient or even useless.

Some strategies that you might use to figure out the source of a problem include:

- Asking questions about the problem

- Breaking the problem down into smaller pieces

- Looking at the problem from different perspectives

- Conducting research to figure out what relationships exist between different variables

2. Defining the Problem

After the problem has been identified, it is important to fully define the problem so that it can be solved. You can define a problem by operationally defining each aspect of the problem and setting goals for what aspects of the problem you will address.

At this point, you should focus on figuring out which aspects of the problem are facts and which are opinions. State the problem clearly and identify the scope of the solution.

3. Forming a Strategy

After the problem has been identified, it is time to start brainstorming potential solutions. This step usually involves generating as many ideas as possible without judging their quality. Once several possibilities have been generated, they can be evaluated and narrowed down.

The next step is to develop a strategy to solve the problem. The approach used will vary depending upon the situation and the individual's unique preferences. Common problem-solving strategies include heuristics and algorithms.

- Heuristics are mental shortcuts that are often based on solutions that have worked in the past. They can work well if the problem is similar to something you have encountered before and are often the best choice if you need a fast solution.

- Algorithms are step-by-step strategies that are guaranteed to produce a correct result. While this approach is great for accuracy, it can also consume time and resources.

Heuristics are often best used when time is of the essence, while algorithms are a better choice when a decision needs to be as accurate as possible.
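The trade-off can be made concrete with a small computing example that is not from the original article: making change with the fewest coins. In this sketch the heuristic always grabs the largest coin that fits, while the algorithm works through every amount up to the target. The function names and the coin set are assumptions chosen to show a case where the shortcut falls short.

```python
def fewest_coins_heuristic(amount, coins):
    """Heuristic: always take the largest coin that still fits (fast, not always optimal)."""
    count = 0
    for coin in sorted(coins, reverse=True):
        count += amount // coin
        amount %= coin
    return count if amount == 0 else None

def fewest_coins_algorithm(amount, coins):
    """Algorithm: dynamic programming over every amount up to the target (always optimal)."""
    best = [0] + [None] * amount
    for value in range(1, amount + 1):
        options = [best[value - c] for c in coins
                   if c <= value and best[value - c] is not None]
        if options:
            best[value] = min(options) + 1
    return best[amount]

print(fewest_coins_heuristic(6, [1, 3, 4]))  # 3 (4 + 1 + 1)
print(fewest_coins_algorithm(6, [1, 3, 4]))  # 2 (3 + 3)
```

The heuristic does a handful of operations and is often right; the algorithm does more work but returns the minimum whenever the amount can be made at all.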

4. Organizing Information

Before coming up with a solution, you need to first organize the available information. What do you know about the problem? What do you not know? The more information that is available the better prepared you will be to come up with an accurate solution.

When approaching a problem, it is important to make sure that you have all the data you need. Making a decision without adequate information can lead to biased or inaccurate results.

5. Allocating Resources

Of course, we don't always have unlimited money, time, and other resources to solve a problem. Before you begin to solve a problem, you need to determine how high priority it is.

If it is an important problem, it is probably worth allocating more resources to solving it. If, however, it is a fairly unimportant problem, then you do not want to spend too much of your available resources on coming up with a solution.

At this stage, it is important to consider all of the factors that might affect the problem at hand. This includes looking at the available resources, deadlines that need to be met, and any possible risks involved in each solution. After careful evaluation, a decision can be made about which solution to pursue.

6. Monitoring Progress

After selecting a problem-solving strategy, it is time to put the plan into action and see if it works. This step might involve trying out different solutions to see which one is the most effective.

It is also important to monitor the situation after implementing a solution to ensure that the problem has been solved and that no new problems have arisen as a result of the proposed solution.

Effective problem-solvers tend to monitor their progress as they work towards a solution. If they are not making good progress toward reaching their goal, they will reevaluate their approach or look for new strategies.

7. Evaluating the Results

After a solution has been reached, it is important to evaluate the results to determine if it is the best possible solution to the problem. This evaluation might be immediate, such as checking the results of a math problem to ensure the answer is correct, or it can be delayed, such as evaluating the success of a therapy program after several months of treatment.

Once a problem has been solved, it is important to take some time to reflect on the process that was used and evaluate the results. This will help you to improve your problem-solving skills and become more efficient at solving future problems.

A Word From Verywell

It is important to remember that there are many different problem-solving processes with different steps, and this is just one example. Problem-solving in real-world situations requires a great deal of resourcefulness, flexibility, resilience, and continuous interaction with the environment.


You can become a better problem solver by:

- Practicing brainstorming and coming up with multiple potential solutions to problems

- Being open-minded and considering all possible options before making a decision

- Breaking down problems into smaller, more manageable pieces

- Asking for help when needed

- Researching different problem-solving techniques and trying out new ones

- Learning from mistakes and using them as opportunities to grow

It's important to communicate openly and honestly with your partner about what's going on. Try to see things from their perspective as well as your own. Work together to find a resolution that works for both of you. Be willing to compromise and accept that there may not be a perfect solution.

Take breaks if things are getting too heated, and come back to the problem when you feel calm and collected. Don't try to fix every problem on your own—consider asking a therapist or counselor for help and insight.

If you've tried everything and there doesn't seem to be a way to fix the problem, you may have to learn to accept it. This can be difficult, but try to focus on the positive aspects of your life and remember that every situation is temporary. Don't dwell on what's going wrong—instead, think about what's going right. Find support by talking to friends or family. Seek professional help if you're having trouble coping.



Problem Solving with Algorithms and Data Structures using Python

By Brad Miller and David Ranum, Luther College

There is a wonderful collection of YouTube videos recorded by Gerry Jenkins to support all of the chapters in this text.

- 1.1. Objectives

- 1.2. Getting Started

- 1.3. What Is Computer Science?

- 1.4. What Is Programming?

- 1.5. Why Study Data Structures and Abstract Data Types?

- 1.6. Why Study Algorithms?

- 1.7. Review of Basic Python

- 1.8.1. Built-in Atomic Data Types

- 1.8.2. Built-in Collection Data Types

- 1.9.1. String Formatting

- 1.10. Control Structures

- 1.11. Exception Handling

- 1.12. Defining Functions

- 1.13.1. A Fraction Class

- 1.13.2. Inheritance: Logic Gates and Circuits

- 1.14. Summary

- 1.15. Key Terms

- 1.16. Discussion Questions

- 1.17. Programming Exercises

- 2.1.1. A Basic implementation of the MSDie class

- 2.2. Making your Class Comparable

- 3.1. Objectives

- 3.2. What Is Algorithm Analysis?

- 3.3. Big-O Notation

- 3.4.1. Solution 1: Checking Off

- 3.4.2. Solution 2: Sort and Compare

- 3.4.3. Solution 3: Brute Force

- 3.4.4. Solution 4: Count and Compare

- 3.5. Performance of Python Data Structures

- 3.7. Dictionaries

- 3.8. Summary

- 3.9. Key Terms

- 3.10. Discussion Questions

- 3.11. Programming Exercises

- 4.1. Objectives

- 4.2. What Are Linear Structures?

- 4.3. What is a Stack?

- 4.4. The Stack Abstract Data Type

- 4.5. Implementing a Stack in Python

- 4.6. Simple Balanced Parentheses

- 4.7. Balanced Symbols (A General Case)

- 4.8. Converting Decimal Numbers to Binary Numbers

- 4.9.1. Conversion of Infix Expressions to Prefix and Postfix

- 4.9.2. General Infix-to-Postfix Conversion

- 4.9.3. Postfix Evaluation

- 4.10. What Is a Queue?

- 4.11. The Queue Abstract Data Type

- 4.12. Implementing a Queue in Python

- 4.13. Simulation: Hot Potato

- 4.14.1. Main Simulation Steps

- 4.14.2. Python Implementation

- 4.14.3. Discussion

- 4.15. What Is a Deque?

- 4.16. The Deque Abstract Data Type

- 4.17. Implementing a Deque in Python

- 4.18. Palindrome-Checker

- 4.19. Lists

- 4.20. The Unordered List Abstract Data Type

- 4.21.1. The Node Class

- 4.21.2. The Unordered List Class

- 4.22. The Ordered List Abstract Data Type

- 4.23.1. Analysis of Linked Lists

- 4.24. Summary

- 4.25. Key Terms

- 4.26. Discussion Questions

- 4.27. Programming Exercises

- 5.1. Objectives

- 5.2. What Is Recursion?

- 5.3. Calculating the Sum of a List of Numbers

- 5.4. The Three Laws of Recursion

- 5.5. Converting an Integer to a String in Any Base

- 5.6. Stack Frames: Implementing Recursion

- 5.7. Introduction: Visualizing Recursion

- 5.8. Sierpinski Triangle

- 5.9. Complex Recursive Problems

- 5.10. Tower of Hanoi

- 5.11. Exploring a Maze

- 5.12. Dynamic Programming

- 5.13. Summary

- 5.14. Key Terms

- 5.15. Discussion Questions

- 5.16. Glossary

- 5.17. Programming Exercises

- 6.1. Objectives

- 6.2. Searching

- 6.3.1. Analysis of Sequential Search

- 6.4.1. Analysis of Binary Search

- 6.5.1. Hash Functions

- 6.5.2. Collision Resolution

- 6.5.3. Implementing the Map Abstract Data Type

- 6.5.4. Analysis of Hashing

- 6.6. Sorting

- 6.7. The Bubble Sort

- 6.8. The Selection Sort

- 6.9. The Insertion Sort

- 6.10. The Shell Sort

- 6.11. The Merge Sort

- 6.12. The Quick Sort

- 6.13. Summary

- 6.14. Key Terms

- 6.15. Discussion Questions

- 6.16. Programming Exercises

- 7.1. Objectives

- 7.2. Examples of Trees

- 7.3. Vocabulary and Definitions

- 7.4. List of Lists Representation

- 7.5. Nodes and References

- 7.6. Parse Tree

- 7.7. Tree Traversals

- 7.8. Priority Queues with Binary Heaps

- 7.9. Binary Heap Operations

- 7.10.1. The Structure Property

- 7.10.2. The Heap Order Property

- 7.10.3. Heap Operations

- 7.11. Binary Search Trees

- 7.12. Search Tree Operations

- 7.13. Search Tree Implementation

- 7.14. Search Tree Analysis

- 7.15. Balanced Binary Search Trees

- 7.16. AVL Tree Performance

- 7.17. AVL Tree Implementation

- 7.18. Summary of Map ADT Implementations

- 7.19. Summary

- 7.20. Key Terms

- 7.21. Discussion Questions

- 7.22. Programming Exercises

- 8.1. Objectives

- 8.2. Vocabulary and Definitions

- 8.3. The Graph Abstract Data Type

- 8.4. An Adjacency Matrix

- 8.5. An Adjacency List

- 8.6. Implementation

- 8.7. The Word Ladder Problem

- 8.8. Building the Word Ladder Graph

- 8.9. Implementing Breadth First Search

- 8.10. Breadth First Search Analysis

- 8.11. The Knight’s Tour Problem

- 8.12. Building the Knight’s Tour Graph

- 8.13. Implementing Knight’s Tour

- 8.14. Knight’s Tour Analysis

- 8.15. General Depth First Search

- 8.16. Depth First Search Analysis

- 8.17. Topological Sorting

- 8.18. Strongly Connected Components

- 8.19. Shortest Path Problems

- 8.20. Dijkstra’s Algorithm

- 8.21. Analysis of Dijkstra’s Algorithm

- 8.22. Prim’s Spanning Tree Algorithm

- 8.23. Summary

- 8.24. Key Terms

- 8.25. Discussion Questions

- 8.26. Programming Exercises

Acknowledgements

We are very grateful to Franklin Beedle Publishers for allowing us to make this interactive textbook freely available. This online version is dedicated to the memory of our first editor, Jim Leisy, who wanted us to “change the world.”

Why are algorithms called algorithms? A brief history of the Persian polymath you’ve likely never heard of

Debbie Passey, Digital Health Research Fellow, The University of Melbourne

Disclosure statement

Debbie Passey does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

University of Melbourne provides funding as a founding partner of The Conversation AU.

Algorithms have become integral to our lives. From social media apps to Netflix, algorithms learn your preferences and prioritise the content you are shown. Google Maps and artificial intelligence are nothing without algorithms.

So, we’ve all heard of them, but where does the word “algorithm” even come from?

Over 1,000 years before the internet and smartphone apps, Persian scientist and polymath Muhammad ibn Mūsā al-Khwārizmī invented the concept of algorithms.

In fact, the word itself comes from the Latinised version of his name, “algorithmi”. And, as you might suspect, it’s also related to algebra.

Largely lost to time

Al-Khwārizmī lived from 780 to 850 CE, during the Islamic Golden Age. He is considered the “father of algebra”, and for some, the “grandfather of computer science”.

Yet, few details are known about his life. Many of his original works in Arabic have been lost to time.

It is believed al-Khwārizmī was born in the Khwarazm region south of the Aral Sea in present-day Uzbekistan. He lived during the Abbasid Caliphate, which was a time of remarkable scientific progress in the Islamic Empire.

Al-Khwārizmī made important contributions to mathematics, geography, astronomy and trigonometry. To help provide a more accurate world map, he corrected Alexandrian polymath Ptolemy’s classic cartography book, Geographia.

He produced calculations for tracking the movement of the Sun, Moon and planets. He also wrote about trigonometric functions and produced the first table of tangents.

Al-Khwārizmī was a scholar in the House of Wisdom (Bayt al-Hikmah) in Baghdad. At this intellectual hub, scholars were translating knowledge from around the world into Arabic, synthesising it to make meaningful progress in a range of disciplines. This included mathematics, a field deeply connected to Islam.

The ‘father of algebra’

Al-Khwārizmī was a polymath and a religious man. His scientific writings started with dedications to Allah and the Prophet Muhammad. And one of the major projects Islamic mathematicians undertook at the House of Wisdom was to develop algebra.

Around 830 CE, Caliph al-Ma’mun encouraged al-Khwārizmī to write a treatise on algebra, Al-Jabr (or The Compendious Book on Calculation by Completion and Balancing). This became his most important work.

At this point, “algebra” had been around for hundreds of years, but al-Khwārizmī was the first to write a definitive book on it. His work was meant to be a practical teaching tool. Its Latin translation was the basis for algebra textbooks in European universities until the 16th century.

In the first part, he introduced the concepts and rules of algebra, and methods for calculating the volumes and areas of shapes. In the second part he provided real-life problems and worked out solutions, such as inheritance cases, the partition of land and calculations for trade.

Al-Khwārizmī didn’t use modern-day mathematical notation with numbers and symbols. Instead, he wrote in simple prose and employed geometric diagrams:

Four roots are equal to twenty, then one root is equal to five, and the square to be formed of it is twenty-five.

In modern-day notation we’d write that like so:

4x = 20, x = 5, x² = 25

Grandfather of computer science

Al-Khwārizmī’s mathematical writings introduced the Hindu-Arabic numerals to Western mathematicians. These are the ten symbols we all use today: 1, 2, 3, 4, 5, 6, 7, 8, 9, 0.

The Hindu-Arabic numerals are important to the history of computing because they use the number zero and a base-ten decimal system. Importantly, this is the numeral system that underpins modern computing technology.

Al-Khwārizmī’s art of calculating mathematical problems laid the foundation for the concept of algorithms. He provided the first detailed explanations for using decimal notation to perform the four basic operations (addition, subtraction, multiplication, division) and computing fractions.

This was a more efficient computation method than using the abacus. To solve a mathematical equation, al-Khwārizmī systematically moved through a sequence of steps to find the answer. This is the underlying concept of an algorithm.
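To make “a sequence of steps” concrete, here is a minimal Python sketch of schoolbook column addition on decimal digits. It is a modern illustration only, not a reconstruction of al-Khwārizmī’s own procedure, and the function name `add_decimal` is a hypothetical one chosen for this example.

```python
def add_decimal(a: str, b: str) -> str:
    """Add two non-negative integers given as decimal digit strings."""
    # Pad the shorter number with leading zeros so the columns line up.
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)

    digits = []
    carry = 0
    # Work from the rightmost (units) column to the leftmost.
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da) + int(db) + carry
        digits.append(str(total % 10))  # digit written in this column
        carry = total // 10             # amount carried to the next column
    if carry:
        digits.append(str(carry))       # a final carry adds one more digit
    return "".join(reversed(digits))


print(add_decimal("478", "256"))  # 734
```

Each pass through the loop applies the same rule to one column, and the procedure always finishes after a fixed number of columns, which is what makes it an algorithm in the modern sense.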

Algorism, a Medieval Latin term named after al-Khwārizmī, refers to the rules for performing arithmetic using the Hindu-Arabic numeral system. Translated to Latin, al-Khwārizmī’s book on Hindu numerals was titled Algorithmi de Numero Indorum.

In the early 20th century, the word algorithm came into its current definition and usage: “a procedure for solving a mathematical problem in a finite number of steps; a step-by-step procedure for solving a problem”.

Muhammad ibn Mūsā al-Khwārizmī played a central role in the development of mathematics and computer science as we know them today.

The next time you use any digital technology – from your social media feed to your online bank account to your Spotify app – remember that none of it would be possible without the pioneering work of an ancient Persian polymath.

Correction: This article was amended to correct a quote from al-Khwārizmī’s work.

Events and Communications Coordinator

Assistant Editor - 1 year cadetship

Executive Dean, Faculty of Health

Lecturer/Senior Lecturer, Earth System Science (School of Science)

Sydney Horizon Educators (Identified)

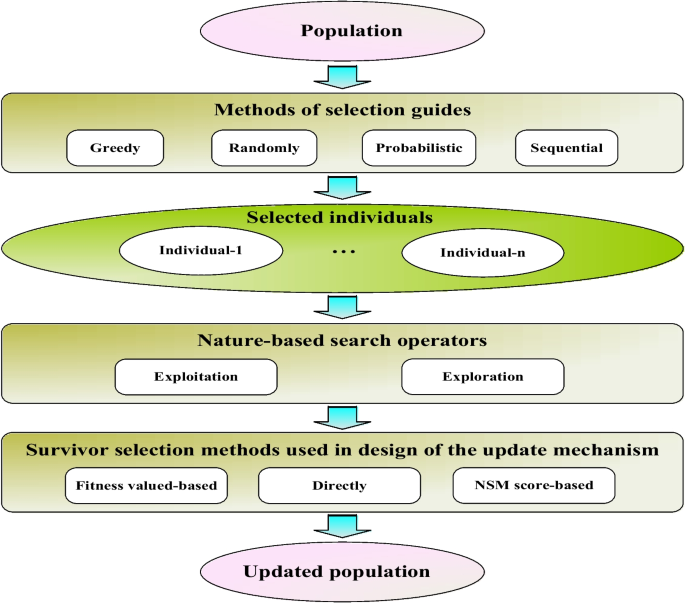

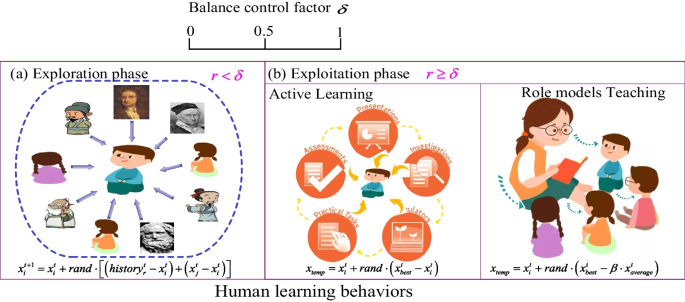

Learning search algorithm: framework and comprehensive performance for solving optimization problems

- Open access

- Published: 09 May 2024

- Volume 57, article number 139 (2024)

- Chiwen Qu

- Xiaoning Peng

- Qilan Zeng

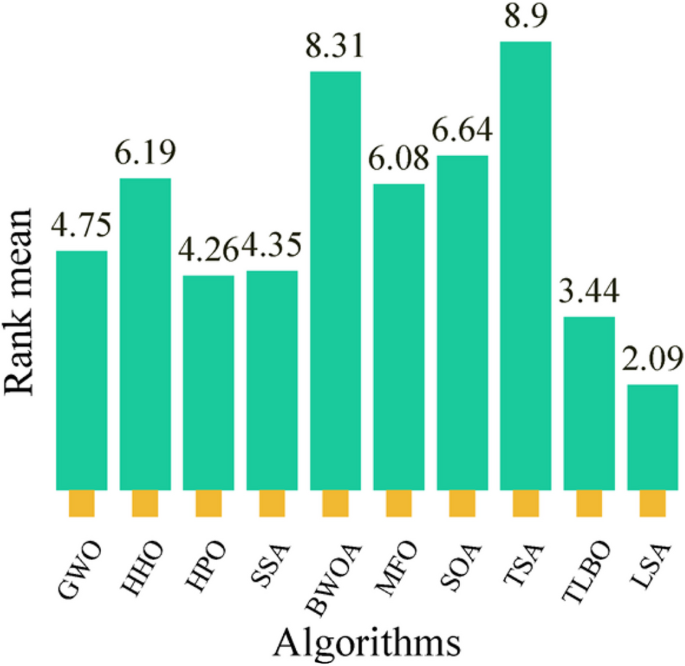

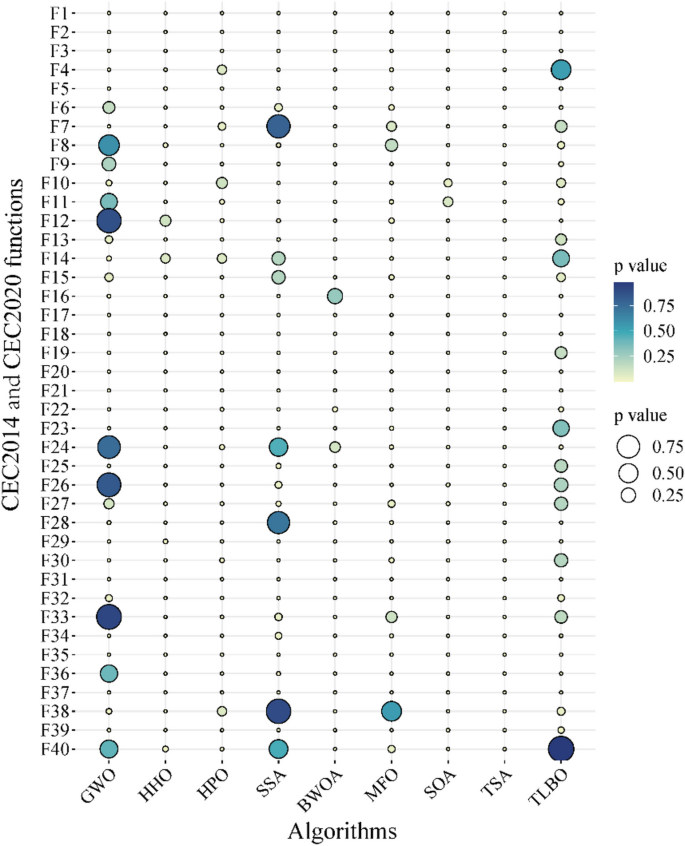

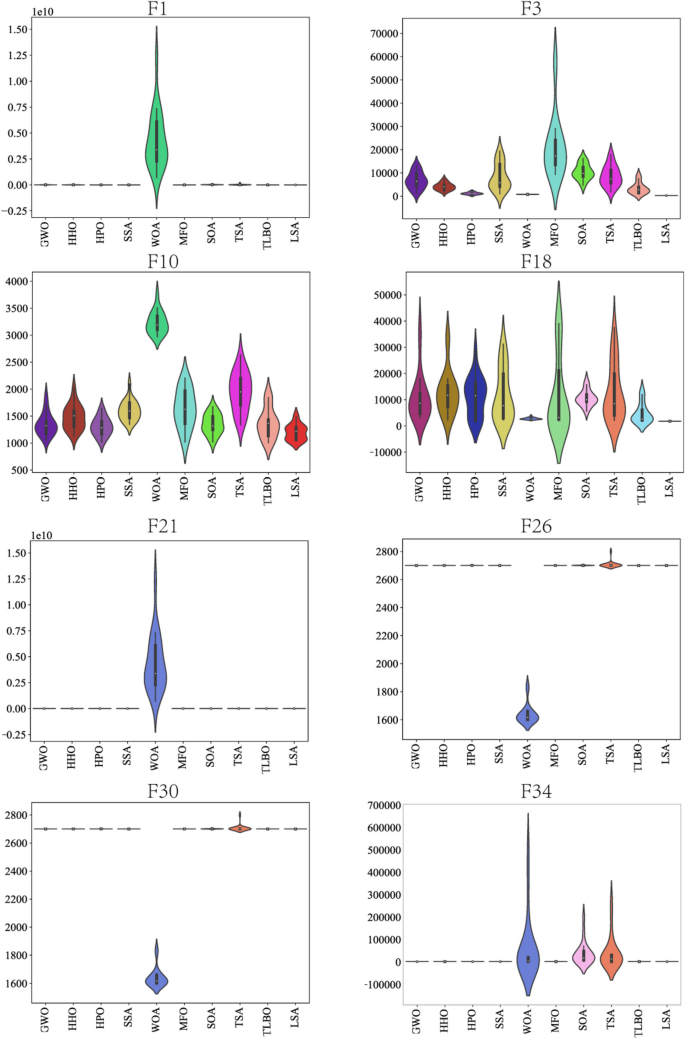

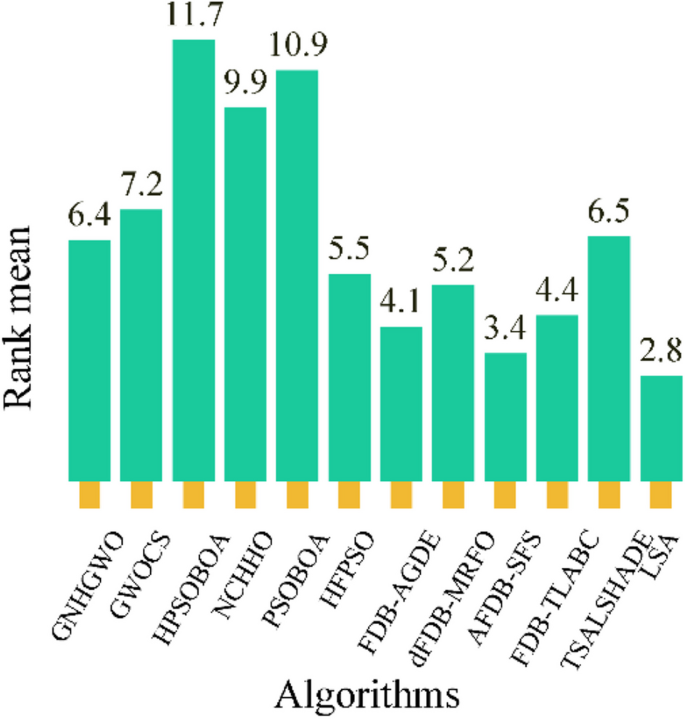

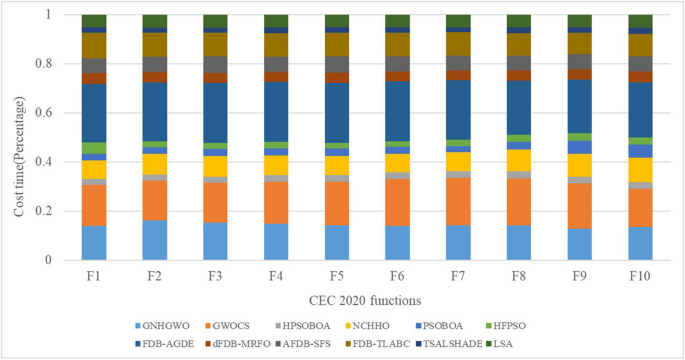

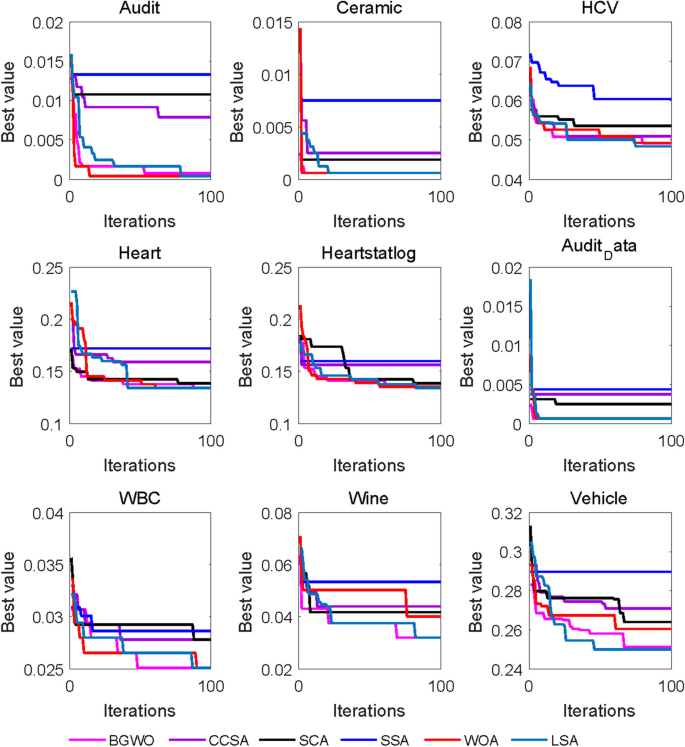

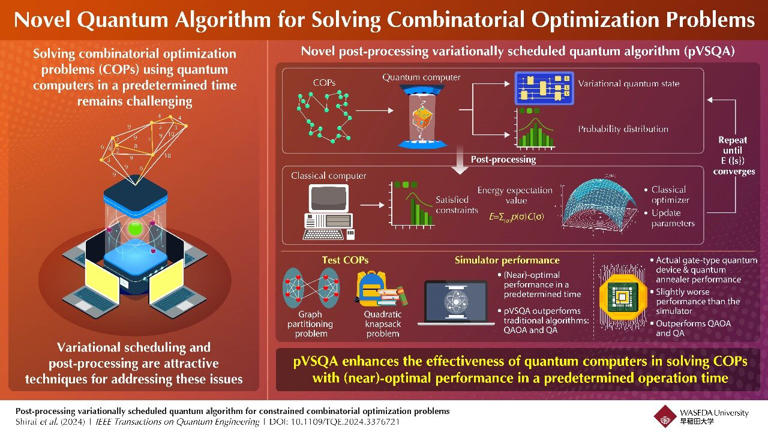

In this study, the Learning Search Algorithm (LSA) is introduced as an innovative optimization algorithm that draws inspiration from swarm intelligence principles and mimics the social learning behavior observed in humans. The LSA optimizes the search process by integrating historical experience and real-time social information, enabling it to navigate complex problem spaces effectively. By doing so, it enhances its global development capability and provides efficient solutions to challenging optimization tasks. Additionally, the algorithm improves collective learning capacity by incorporating teaching and active learning behaviors within the population, leading to improved local development capabilities. Furthermore, a dynamic adaptive control factor is used to regulate the algorithm’s global exploration and local development abilities. The proposed algorithm is evaluated on 40 benchmark test functions from IEEE CEC 2014 and CEC 2020, and compared against nine established evolutionary algorithms as well as 11 recently improved algorithms. The experimental results demonstrate the superiority of the LSA, which achieves the top rank in the Friedman rank-sum test, highlighting its power and competitiveness. Moreover, the LSA is successfully applied to six real-world engineering problems and to feature selection problems on 15 UCI datasets, showcasing its significant advantages and potential for practical applications in both areas.
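For readers unfamiliar with how algorithms are ranked in a Friedman-style comparison, the sketch below shows the usual first step: rank the algorithms on each benchmark (lower error is better, ties share the average rank), then average those ranks per algorithm. This is a generic illustration, not the authors’ evaluation code; the function name `mean_ranks` and the score table are hypothetical and are not results from the paper.

```python
def mean_ranks(scores):
    """Return each algorithm's mean rank across benchmarks.

    scores[i][j] is the error of algorithm j on benchmark i (lower is better).
    """
    n_algs = len(scores[0])
    totals = [0.0] * n_algs
    for row in scores:
        # Algorithm indices ordered from best (lowest error) to worst.
        order = sorted(range(n_algs), key=lambda j: row[j])
        pos = 0
        while pos < n_algs:
            # Extend `end` over any run of tied scores.
            end = pos
            while end + 1 < n_algs and row[order[end + 1]] == row[order[pos]]:
                end += 1
            avg_rank = (pos + end) / 2 + 1  # tied entries share the average rank
            for k in range(pos, end + 1):
                totals[order[k]] += avg_rank
            pos = end + 1
    return [t / len(scores) for t in totals]


# Hypothetical errors for three algorithms (columns) on four benchmarks (rows).
example_scores = [
    [0.10, 0.30, 0.20],
    [0.05, 0.05, 0.40],
    [0.20, 0.50, 0.30],
    [0.01, 0.02, 0.03],
]
print(mean_ranks(example_scores))  # [1.125, 2.375, 2.5]
```

The algorithm with the lowest mean rank is the one reported as ranking first; the Friedman test itself then checks whether the differences between mean ranks are statistically significant.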

1 Introduction