Decision Tree: A Step-by-Step Guide with Examples

Today, in our data-driven world, it’s more important than ever to make well-informed decisions. Whether you work with data, analyze business trends, or make important choices in any field, understanding and utilizing decision trees can greatly improve your decision-making process. In this blog post, we will guide you through the basics of decision trees, covering essential concepts and advanced techniques, to give you a comprehensive understanding of this powerful tool.

What is a Decision Tree?

Let’s start with the definition of a decision tree.

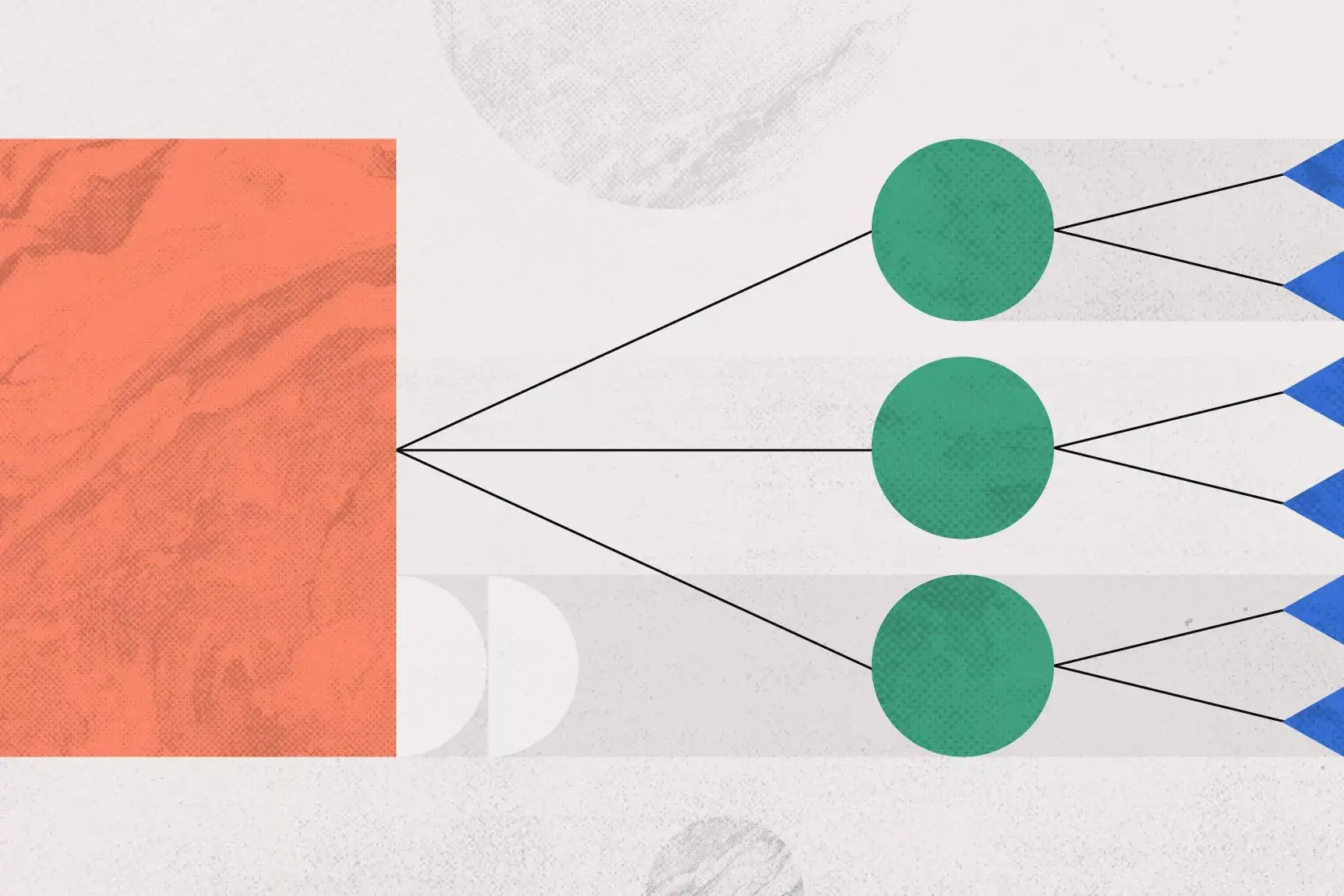

A decision tree is a graphical representation that outlines the various choices available and the potential outcomes of those choices. It begins with a root node, which represents the initial decision or problem. From this root node, branches extend to represent different options or actions that can be taken. Each branch leads to further decision nodes, where additional choices can be made, and these in turn branch out to show the possible consequences of each decision. This continues until the branches reach leaf nodes, which represent the final outcomes or decisions.

The decision tree structure allows for a clear and organized way to visualize the decision-making process, making it easier to understand how different choices lead to different results. This is particularly useful in complex scenarios where multiple factors and potential outcomes need to be considered. By breaking down the decision process into manageable steps and visually mapping them out, decision trees help decision-makers evaluate the potential risks and benefits of each option, leading to more informed and rational decisions.

Decision trees are useful tools in many fields like business, healthcare, and finance. They help analyze things systematically by providing a simple way to compare different strategies and their likely impacts. This helps organizations and individuals make decisions that are not only based on data but also transparent and justifiable. This ensures that the chosen path aligns with their objectives and constraints.

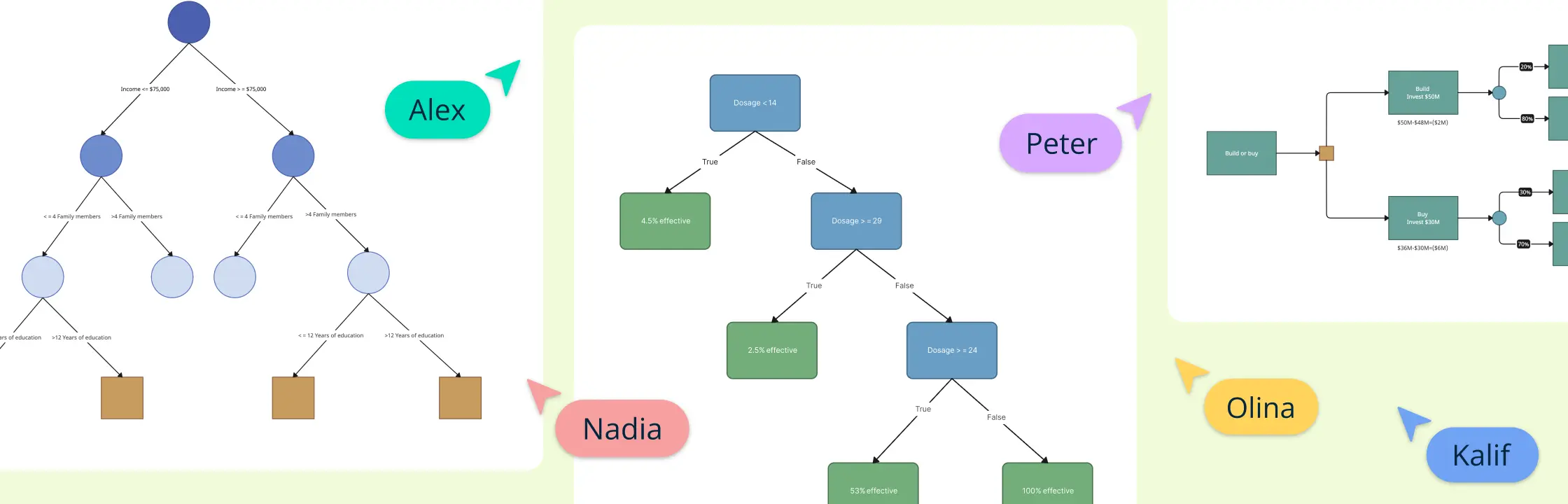

Decision Tree Symbols

Understanding the symbols used in a decision tree is essential for interpreting and creating decision trees effectively. Here are the main symbols and their meanings:

- Decision node: A point in the decision tree where a choice needs to be made. Represents decisions that split the tree into branches, each representing a possible choice.

- Chance node: A point where an outcome is uncertain. Represents different possible outcomes of an event, often associated with probabilities.

- Terminal (or end) node: The end point of a decision path. Represents the final outcome of a series of decisions and chance events, such as success or failure.

- Branches: Lines that connect nodes to show the flow from one decision or chance node to the next. Represent the different paths that can be taken based on decisions and chance events.

- Arrows: Indicate the direction of flow from one node to another. Show the progression from decisions and chance events to outcomes.

Types of Decision Trees

It’s important to remember the different types of decision trees: classification trees and regression trees. Each type has various algorithms, nodes, and branches that make them unique. It’s crucial to select the type that best fits the purpose of your decision tree.

Classification Trees

Classification trees are used when the target variable is categorical. The tree splits the dataset into subsets based on the values of attributes, aiming to classify instances into classes or categories. For example, determining whether an email is spam or not spam.

Regression Trees

Regression trees are employed when the target variable is continuous. They predict outcomes that are real numbers or continuous values by recursively partitioning the data into smaller subsets. For example, predicting the price of a house based on its features.
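To make "recursively partitioning the data" concrete, here is a minimal sketch of a single regression split. It tries every midpoint between neighbouring feature values and keeps the one that minimizes the sum of squared errors on each side. The data (floor areas and prices) and the function names `sse` and `best_split` are illustrative assumptions, not taken from any library. Classification trees follow the same search pattern but score splits with an impurity measure instead of squared error.

```python
def sse(values):
    """Sum of squared errors around the mean; 0.0 for an empty list."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(xs, ys):
    """Return (threshold, total_sse) for the single split on xs that minimizes the SSE of ys."""
    best = (None, float("inf"))
    distinct = sorted(set(xs))
    # Candidate thresholds are midpoints between consecutive distinct feature values.
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        total = sse(left) + sse(right)
        if total < best[1]:
            best = (threshold, total)
    return best


# Hypothetical data: floor area in square metres and sale price in thousands.
areas = [50, 60, 70, 120, 130, 140]
prices = [150, 160, 170, 300, 310, 320]
threshold, error = best_split(areas, prices)
print(threshold, error)
```

A full regression tree would repeat this search inside each resulting subset until a stopping rule is met, then predict the mean of the values in each leaf.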

How to Make a Decision Tree in 7 Steps

Follow these steps and principles to create a robust decision tree that effectively predicts outcomes and aids in making informed decisions based on data.

1. Define the decision objective

- Identify the goal: Clearly articulate what decision you need to make. This could be a choice between different strategic options, such as launching a product, entering a new market, or investing in new technology.

- Scope: Determine the boundaries of your decision. What factors and constraints are relevant? This helps in focusing the decision-making process and avoiding unnecessary complexity.

2. Gather relevant data

- Collect information: Gather all the necessary information related to your decision. This might include historical data, market research, financial reports, and expert opinions.

- Identify key factors: Determine the critical variables that will influence your decision. These could be costs, potential revenues, risks, resource availability, or market conditions.

3. Identify decision points and outcomes

- Decision points: Identify all the points where a decision needs to be made. Each decision point should represent a clear choice between different actions.

- Possible outcomes: List all potential outcomes or actions for each decision point. Consider both positive and negative outcomes, as well as their probabilities and impacts.

4. Structure the decision tree

- Root node: Start with the main decision or question at the root of your tree. This represents the initial decision point.

- Branches: Draw branches from the root to represent each possible decision or action. Each branch leads to a node, which represents subsequent decisions or outcomes.

- Nodes: At the end of each branch, add nodes that represent the next decision point or the final outcome. Continue branching out until all possible decisions and outcomes are covered.

5. Assign probabilities and values

- Probability of outcomes: Assign probabilities to each outcome based on data or expert judgment. This step is crucial for evaluating the likelihood of different scenarios.

- Impact assessment: Evaluate the impact or value of each outcome. This might involve estimating potential costs, revenues, or other metrics relevant to your decision.

6. Calculate expected values

- Weigh each outcome: Multiply the impact of each outcome by its probability, then add the results to get the expected value of a decision path.

- Example: For a decision to launch a product, you might have a 60% chance of success with an impact of $500,000, and a 40% chance of failure with an impact of -$200,000.

- Expected value: (0.6 * $500,000) + (0.4 * -$200,000) = $300,000 - $80,000 = $220,000

- Compare paths: Compare the expected values of different decision paths to identify the most favorable option.
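The calculation in this step can be sketched in a few lines of Python. The launch figures are the ones from the example; the "do nothing" path with a value of zero is an assumption added only so there is something to compare against, and the function name `expected_value` is illustrative.

```python
def expected_value(outcomes):
    """outcomes: list of (probability, value) pairs whose probabilities sum to 1."""
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * v for p, v in outcomes)


# Figures from the product launch example.
launch = [(0.6, 500_000), (0.4, -200_000)]
# Assumed alternative for comparison: not launching has no gain and no loss.
do_nothing = [(1.0, 0)]

paths = {
    "launch": expected_value(launch),
    "do nothing": expected_value(do_nothing),
}
best = max(paths, key=paths.get)
print(paths, best)
```

Checking that the probabilities sum to 1 catches a common mistake when branches are added or removed from a chance node.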

7. Optimize and prune the tree

- Prune irrelevant branches: Remove branches that do not significantly impact the decision. This helps in simplifying the decision tree and focusing on the most critical factors.

- Simplify the tree: Aim to make the decision tree as straightforward as possible while retaining all necessary information. A simpler tree is easier to understand and use.

How to Read a Decision Tree

Reading a decision tree involves starting at the root node and following the branches based on conditions until you reach a leaf node, which represents the final outcome. Each node in the tree represents a decision based on an attribute, and the branches represent the possible conditions or outcomes of that decision.

For example, in a project management decision tree, you might start at the root node, which could represent the choice between two project approaches (A and B). From there, you follow the branch for Approach A or Approach B. Each subsequent node represents another decision, such as cost or time, and each branch represents the conditions of that decision (e.g., high cost vs. low/medium cost).

As you continue following the branches, you eventually reach a leaf node, which gives you the final outcome based on the path you took. For instance, if you followed the path for Approach A with high cost, you might reach a leaf node indicating project failure. Conversely, Approach B with a short time might lead you to a leaf node indicating project success.
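Reading a tree this way is a simple loop: answer the question at the current node, follow the matching branch, and stop at a leaf. The sketch below encodes the project management example as nested dictionaries. The two leaves marked as assumed are not stated in the text and are filled in only so that every branch ends somewhere; the name `read_tree` is illustrative.

```python
# Internal nodes are dicts: {"question": ..., "branches": {answer: subtree}}.
# Leaves are plain strings holding the final outcome.
tree = {
    "question": "approach",
    "branches": {
        "A": {
            "question": "cost",
            "branches": {
                "high": "project failure",
                "low/medium": "project success",  # assumed leaf, not stated in the text
            },
        },
        "B": {
            "question": "time",
            "branches": {
                "short": "project success",
                "long": "project failure",  # assumed leaf, not stated in the text
            },
        },
    },
}


def read_tree(node, answers):
    """Follow branches from the root until a leaf; return (outcome, path taken)."""
    path = []
    while isinstance(node, dict):
        question = node["question"]
        answer = answers[question]
        path.append((question, answer))
        node = node["branches"][answer]
    return node, path


outcome, path = read_tree(tree, {"approach": "A", "cost": "high"})
print(outcome, path)
```

Returning the path alongside the outcome keeps the result explainable, which is the main reason to use a tree in the first place.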

Decision Tree Best Practices

Follow these best practices to develop and deploy decision trees that are reliable and effective tools for making informed decisions across various domains.

- Define clear objectives: Clearly articulate the decision you need to make and its scope. This helps in focusing the analysis and ensuring the decision tree addresses the right questions.

- Gather quality data: Gather relevant and accurate data that will be used to build and validate the decision tree. Ensure the data is representative and covers all necessary factors influencing the decision.

- Keep it simple: Aim for simplicity in the decision tree structure. Avoid unnecessary complexity that can confuse users or obscure key decision points.

- Understand and involve stakeholders: Involve stakeholders who will be impacted by or involved in the decision-making process. Ensure they understand the decision tree’s construction and can provide input on relevant factors and outcomes.

- Validate and verify: Validate the data used to build the decision tree to ensure its accuracy and reliability. Use techniques such as cross-validation or sensitivity analysis to verify the robustness of the tree.

- Interpretability: Use clear and intuitive visual representations of the decision tree. This aids in understanding how decisions are made and allows stakeholders to follow the logic easily.

- Consider uncertainty and risks: Incorporate probabilities of outcomes and consider uncertainties in data or assumptions. This helps in making informed decisions that account for potential risks and variability.
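One simple form of sensitivity analysis is to vary a probability and watch how the expected value responds. The sketch below reuses the product launch figures from the step-by-step guide ($500,000 on success, -$200,000 on failure) and finds the success probability at which launching breaks even. The function names and the choice of a 10-point sweep are illustrative assumptions.

```python
def launch_ev(p_success, gain=500_000, loss=-200_000):
    """Expected value of launching for a given probability of success."""
    return p_success * gain + (1 - p_success) * loss


def break_even_probability(gain=500_000, loss=-200_000):
    """Solve p * gain + (1 - p) * loss = 0 for p."""
    return -loss / (gain - loss)


# Sweep the success probability from 0.0 to 1.0 in steps of 0.1.
sweep = {p / 10: launch_ev(p / 10) for p in range(0, 11)}
for p, ev in sweep.items():
    print(f"p={p:.1f}  EV={ev:,.0f}")

print(f"break-even p = {break_even_probability():.3f}")
```

If the estimated probability sits well above the break-even point, the decision is robust to estimation error; if it sits close to it, the estimate deserves more scrutiny.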

How Can a Decision Tree Help with Decision Making?

A decision tree simplifies the decision-making process in several key ways:

- Visual clarity: It presents decisions and their possible outcomes in a clear, visual format, making complex choices easier to understand at a glance.

- Structured approach: By breaking down decisions into a step-by-step sequence, it guides you through the decision-making process, ensuring that all factors are considered.

- Risk assessment: It incorporates probabilities and potential impacts of different outcomes, helping you evaluate risks and benefits systematically.

- Comparative analysis: Decision trees allow you to compare different choices side by side, making it easier to see which option offers the best expected value or outcome.

- Informed decisions: By organizing information logically, decision trees help you make decisions based on data and clear reasoning rather than intuition or guesswork.

- Flexibility: They can be easily updated with new information or adjusted to reflect changing circumstances, keeping the decision-making process dynamic and relevant.

Decision Tree Examples

Here are some decision tree examples to help you understand them better and get a head start on creating them. Explore more examples with our page on decision tree templates.

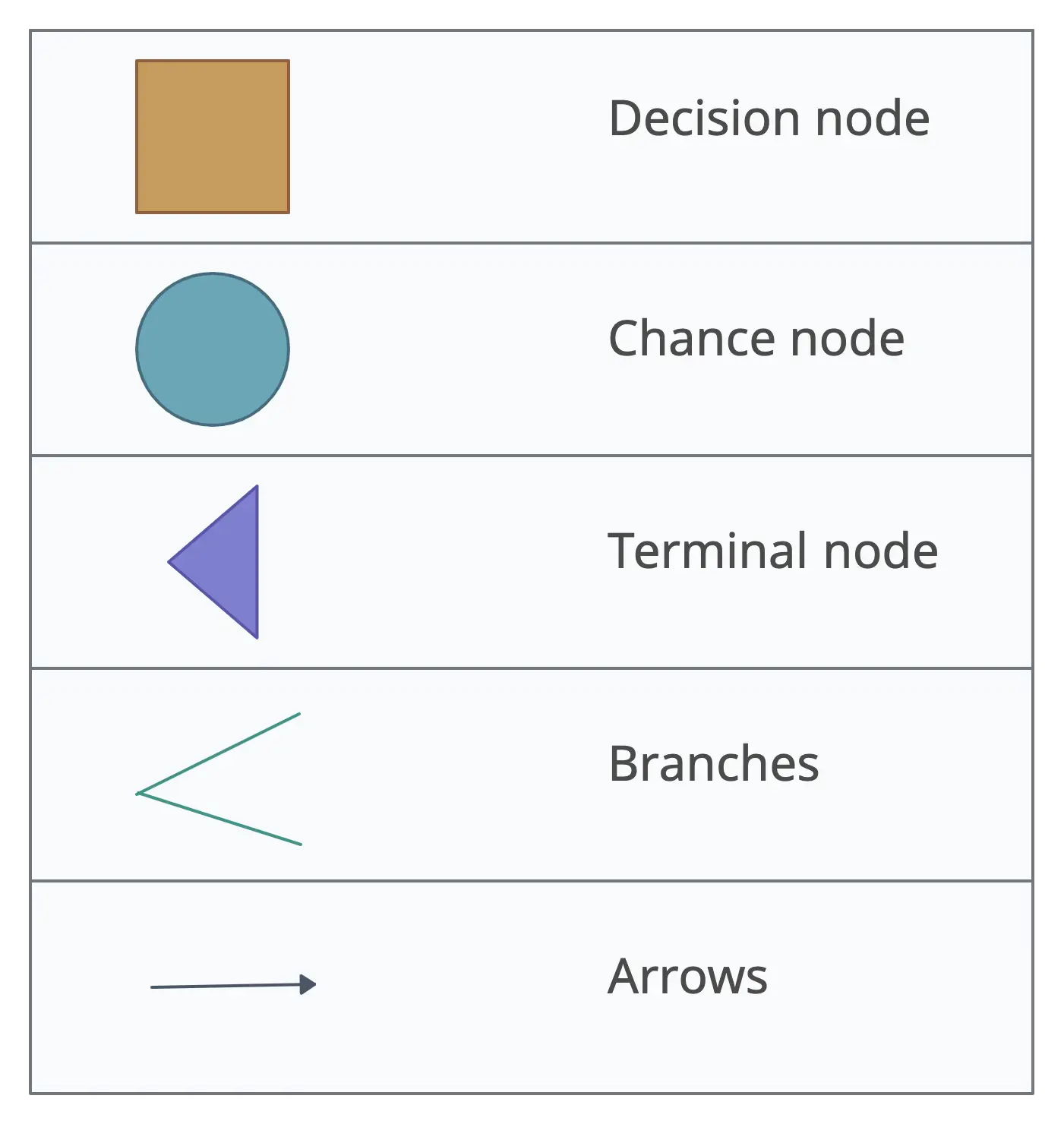

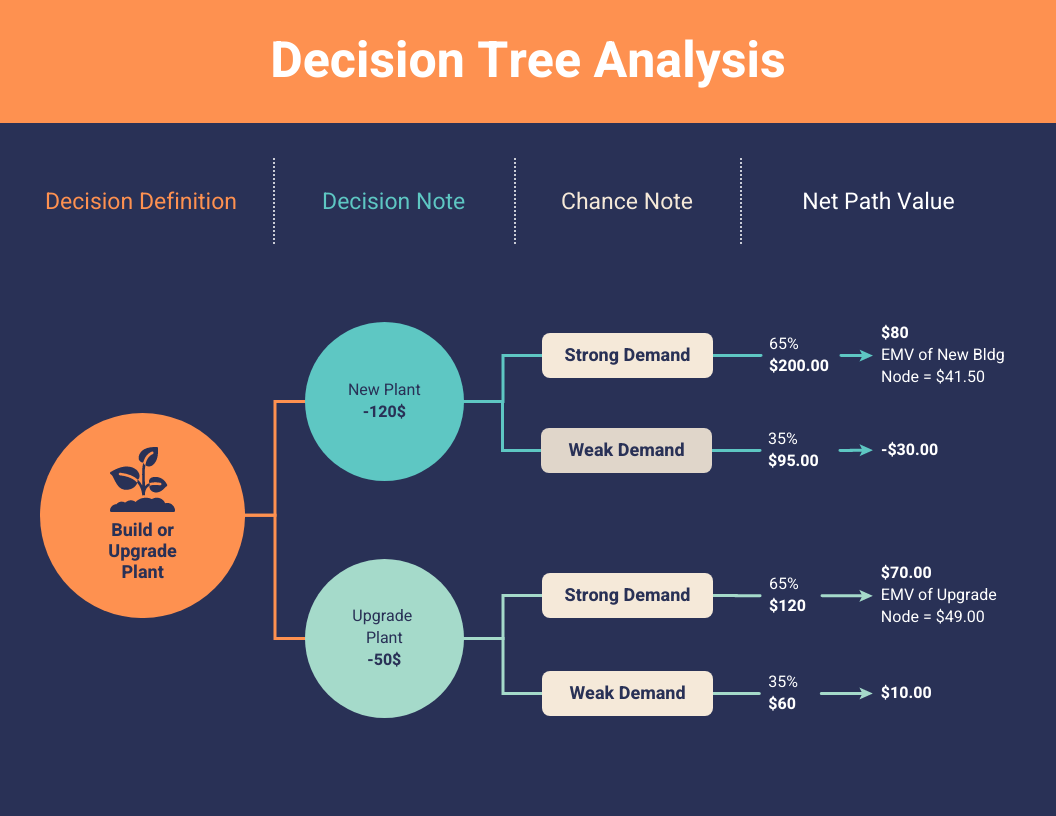

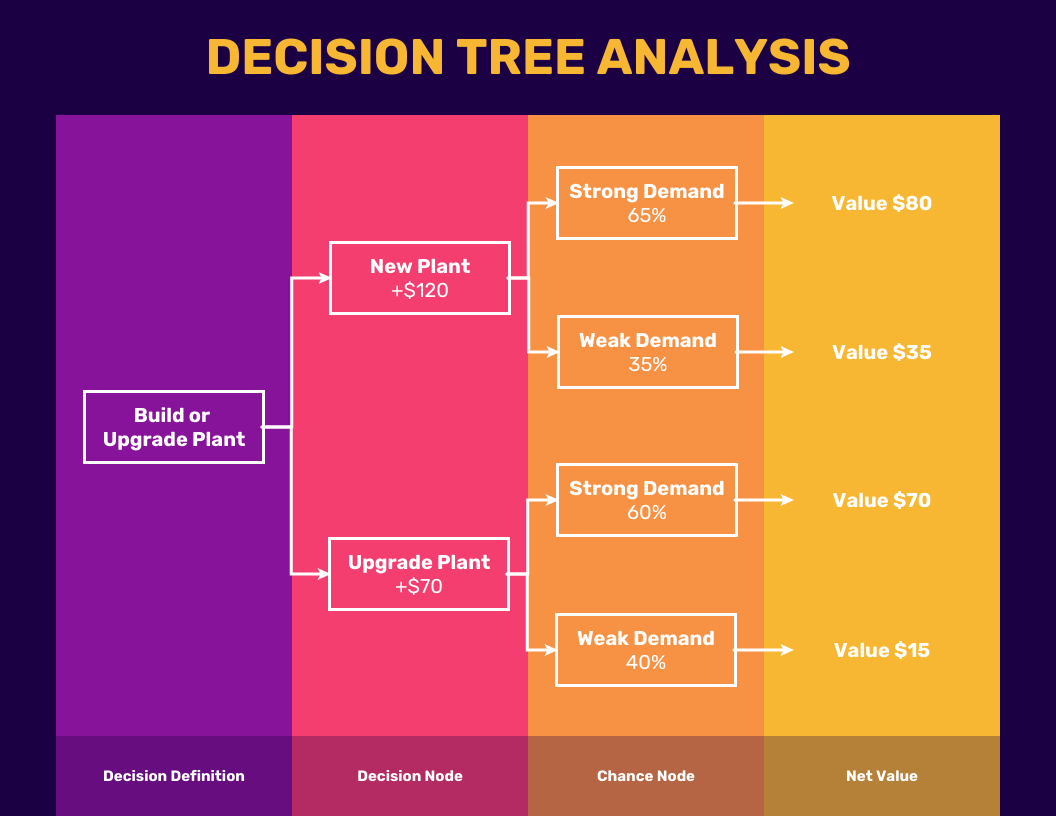

Decision Tree Analysis Example

This template helps make informed decisions by systematically organizing and analyzing complex information, ensuring clarity and consistency in the decision-making process.

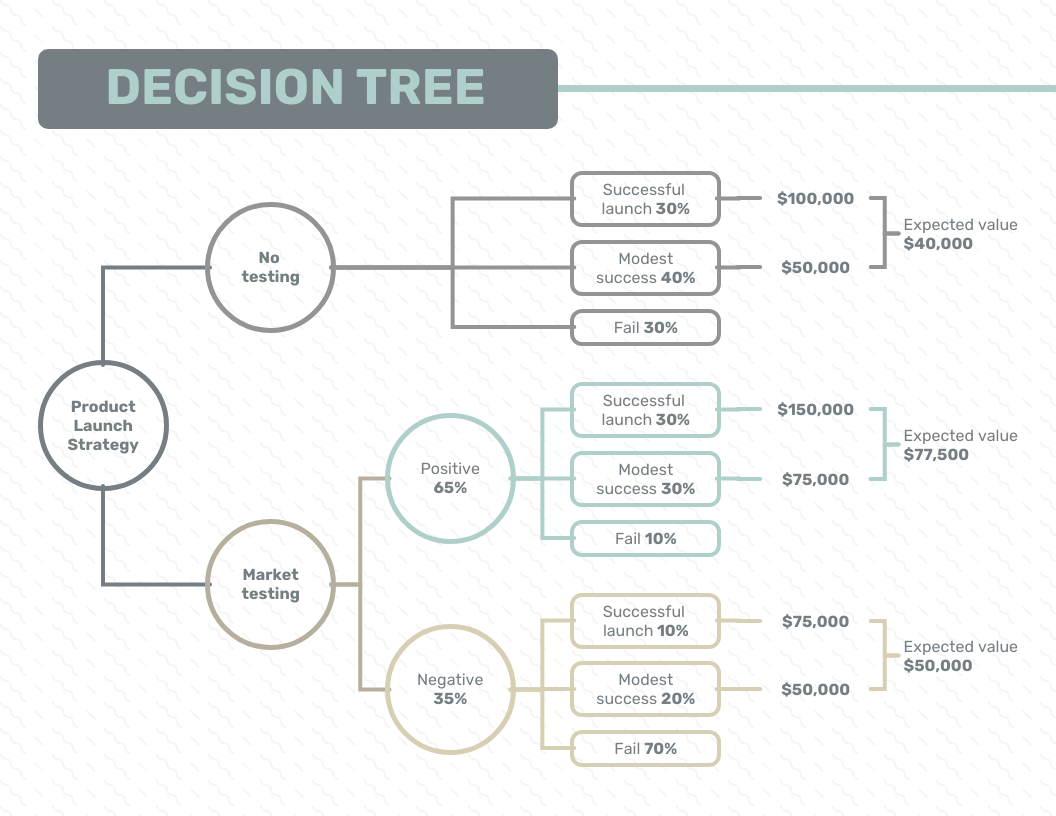

Bank decision tree

This Decision Tree helps banks decide if they should launch a new financial product by predicting outcomes based on market demand and customer interest. It guides banks in assessing risks and making informed decisions to meet market needs effectively.

Risk decision tree for software engineering

The tree shows possible outcomes based on the severity and likelihood of each risk, guiding teams to make informed decisions that keep projects on track and within budget.

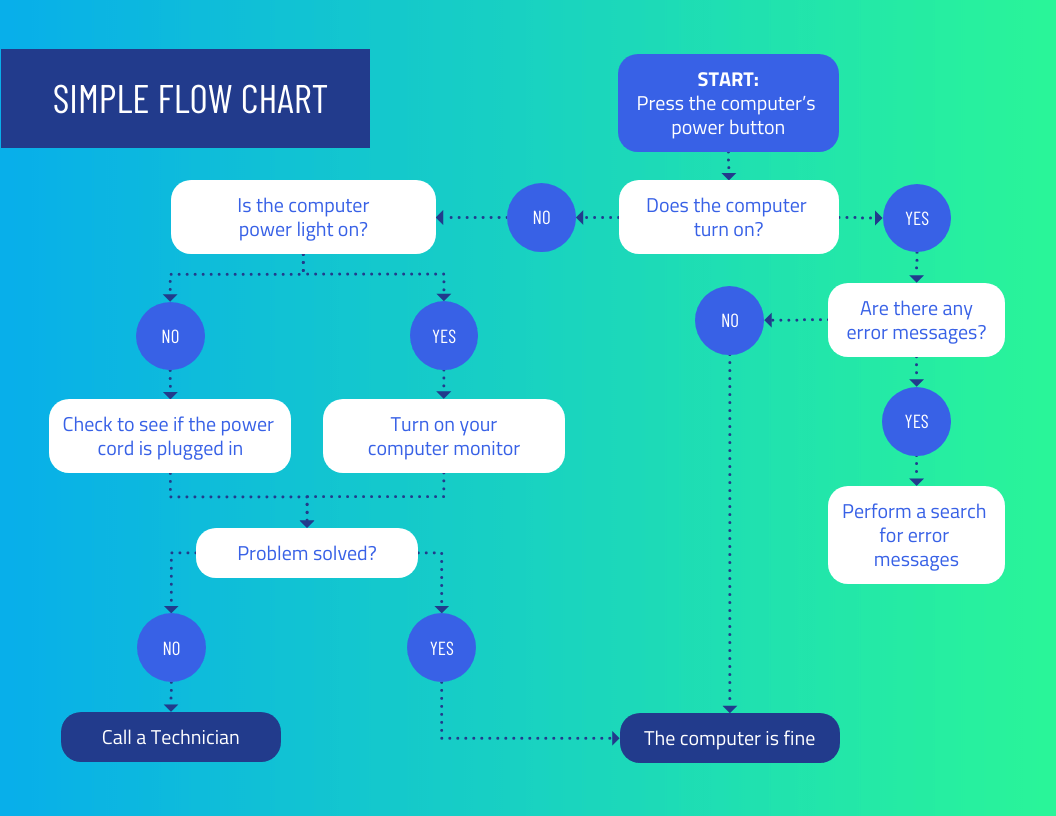

Simple Decision Tree

Use this simple decision tree to analyze choices systematically and clearly before making a final decision.

Advantages and Disadvantages of a Decision Tree

Understanding these advantages and disadvantages helps in determining when to use decision trees and how to mitigate their limitations for effective machine learning applications.

| Advantages | Disadvantages |
| --- | --- |
| Easy to interpret and visualize. | Can overfit complex datasets without proper pruning. |
| Can handle both numerical and categorical data without requiring data normalization. | Small changes in data can lead to different tree structures. |
| Captures non-linear relationships between features and target variables effectively. | Tends to favor dominant classes in imbalanced datasets. |
| Automatically selects significant variables and feature interactions. | May struggle with complex interdependencies between variables. |
| Applicable to both classification and regression tasks across various domains. | Can become memory-intensive with large datasets. |

Using Decision Trees in Data Mining and Machine Learning

Decision tree analysis is a method used in data mining and machine learning to help make decisions based on data. It creates a tree-like model with nodes representing decisions or events, branches showing possible outcomes, and leaves indicating final decisions. This method helps in evaluating options systematically by considering factors like probabilities, costs, and desired outcomes.

The process begins with defining the decision problem and collecting relevant data. Algorithms like ID3 or C4.5 are then used to build the tree, selecting at each node the attribute that best splits the dataset (ID3 maximizes information gain, while C4.5 uses the gain ratio, a normalized variant of it). Once constructed, the tree is analyzed to understand relationships between variables and visualize decision paths.
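As a rough illustration of attribute selection by information gain, the sketch below computes the entropy of a set of labels and the gain from splitting on each attribute. The tiny spam dataset and the attribute names `has_link` and `length` are made up for the example, and the code shows only the scoring step, not a full tree-building algorithm.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """Entropy of the labels minus the weighted entropy after splitting on attribute."""
    total = len(labels)
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attribute], []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Hypothetical training data: two attributes per email and a class label.
rows = [
    {"has_link": "yes", "length": "short"},
    {"has_link": "yes", "length": "long"},
    {"has_link": "no", "length": "short"},
    {"has_link": "no", "length": "long"},
]
labels = ["spam", "spam", "not spam", "not spam"]

gains = {a: information_gain(rows, labels, a) for a in ("has_link", "length")}
best_attribute = max(gains, key=gains.get)
print(gains, best_attribute)
```

In this toy dataset `has_link` separates the classes perfectly, so it has the highest gain and would be chosen for the root node, while `length` tells us nothing about the label.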

Decision tree analysis is commonly used in:

Business decision making:

- Strategic planning: Evaluating different strategic options and outcomes.

- Operational efficiency: Optimizing workflows and processes.

Healthcare:

- Medical diagnosis: Assisting in diagnosing diseases.

- Treatment plans: Determining the best treatment options.

Finance:

- Credit scoring: Evaluating creditworthiness of loan applicants.

- Investment decisions: Assessing risks and returns of investments.

Marketing:

- Customer segmentation: Identifying customer groups for targeted marketing.

- Campaign effectiveness: Predicting success of marketing campaigns.

Environmental science:

- Environmental planning: Analyzing impacts of environmental policies.

- Conservation efforts: Identifying critical areas for conservation.

Risk assessment:

- Project risk analysis: Evaluating project risks and their impacts.

- Operational risk management: Identifying and mitigating operational risks.

Diagnostic reasoning:

- Fault diagnosis: Detecting faults in industrial processes.

- System troubleshooting: Guiding troubleshooting in technical systems.

While decision tree analysis offers clarity and flexibility, it can become too specific if not pruned properly and is influenced by variations in input data. Overall, decision tree analysis is valuable for pulling out useful insights from complicated datasets to help with decision-making.

Wrapping up

A decision tree is an invaluable tool for simplifying decision-making processes by visually mapping out choices and potential outcomes in a structured manner. Despite their straightforward approach, decision trees offer robust support for strategic planning across various domains, including project management. While they excel in clarifying decisions and handling different data types effectively, challenges like overfitting and managing complex datasets should be considered. Nevertheless, mastering decision tree analysis empowers organizations to make well-informed decisions by systematically evaluating options and optimizing outcomes based on defined criteria.

Amanda Athuraliya is the communication specialist/content writer at Creately, an online diagramming and collaboration tool. She is an avid reader, a budding writer and a passionate researcher who loves to write about all kinds of topics.

Decision Tree Examples: Problems With Solutions

On this page:

- What is a decision tree? Definition.

- 5 solved simple examples of decision tree diagram (for business, financial, personal, and project management needs).

- Steps to creating a decision tree.

Let’s define it.

A decision tree is a diagrammatic representation of possible solutions to a decision. It shows different outcomes from a set of decisions. The diagram is a widely used decision-making tool for analysis and planning.

The diagram starts with a box (or root), which branches off into several solutions. That’s why it is called a decision tree.

Decision trees are helpful for a variety of reasons. Not only are they easy-to-understand diagrams that help you ‘see’ your thoughts, but they also provide a framework for weighing all possible alternatives.

In addition, decision trees help you manage the brainstorming process so you are able to consider the potential outcomes of a given choice.

Example 1: The Structure of Decision Tree

Let’s explain the decision tree structure with a simple example.

Each decision tree has 3 key parts:

- a root node

- leaf nodes, and

- branches.

No matter what type of decision tree it is, it starts with a specific decision. This decision is depicted with a box – the root node.

Root and leaf nodes hold questions or some criteria you have to answer. Commonly, nodes appear as squares or circles. Squares depict decisions, while circles represent uncertain outcomes.

As you see in the example above, branches are lines that connect nodes, indicating the flow from question to answer.

Each node normally carries two or more nodes extending from it. If the leaf node results in the solution to the decision, the line is left empty.

How long should the decision trees be?

Now we are going to give more simple decision tree examples.

Example 2: Simple Personal Decision Tree Example

Let’s say you are wondering whether to quit your job or not. You have to consider some important points and questions. Here is an example of a decision tree in this case.

Now, let’s dig deeper and see decision tree examples in business and finance.

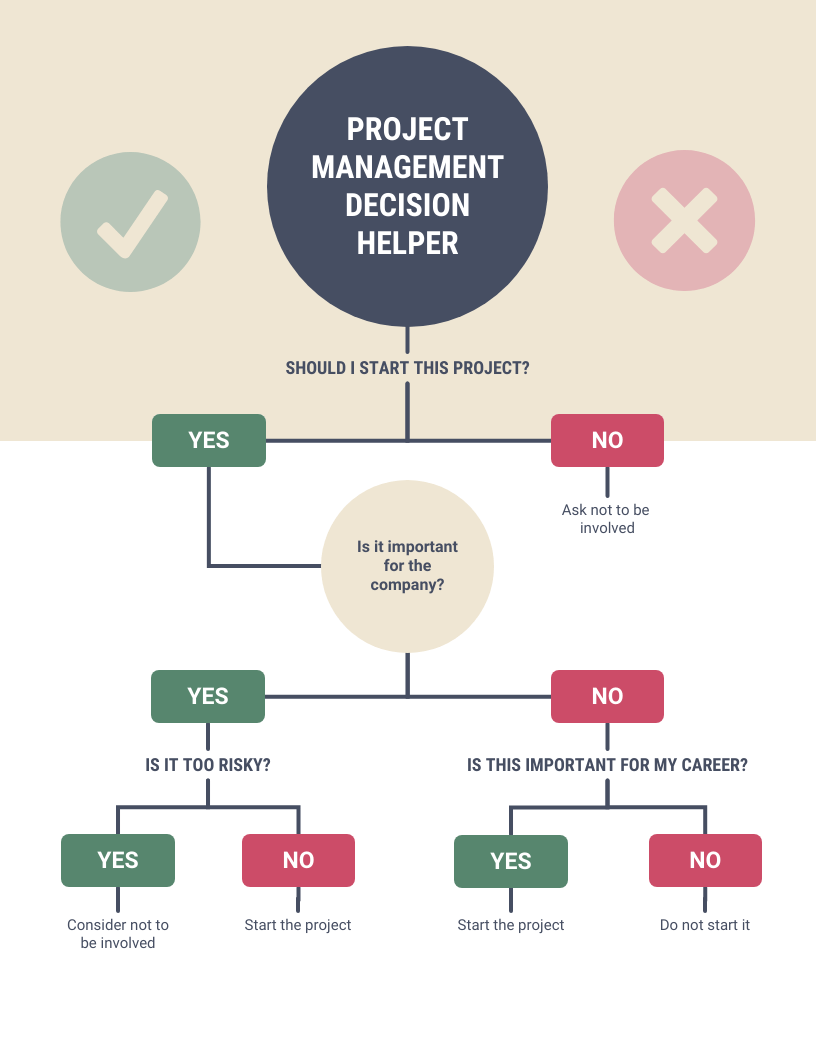

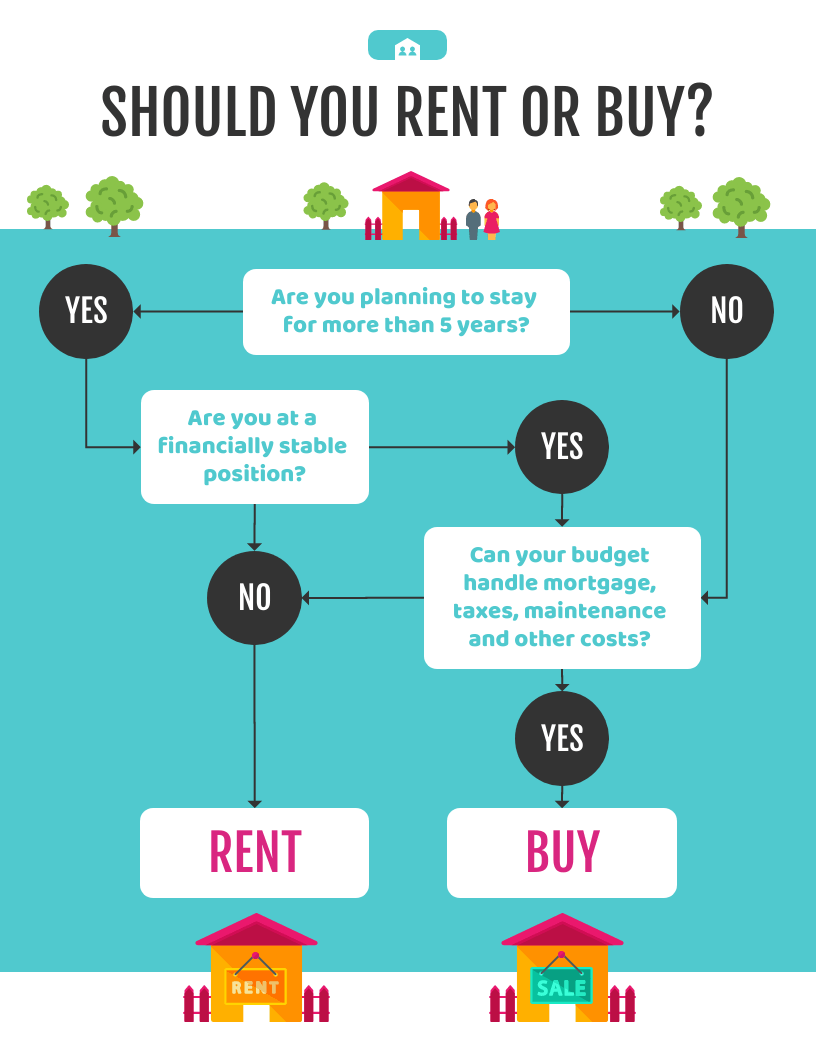

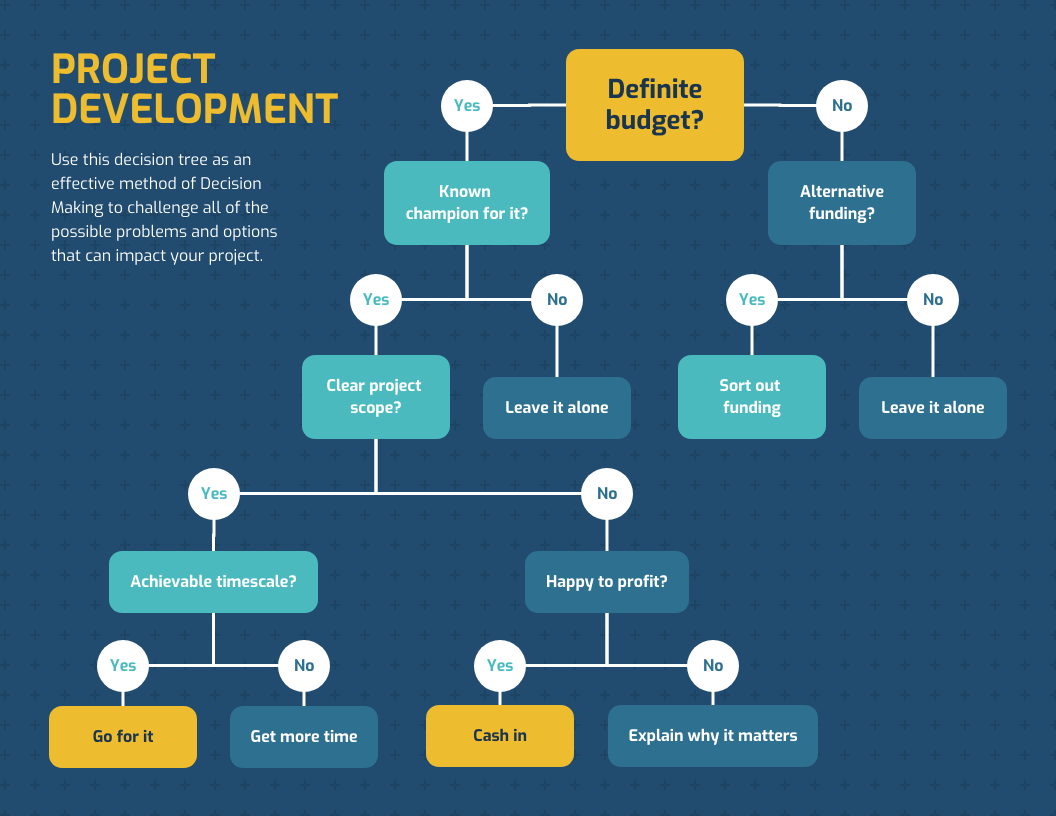

Example 3: Project Management Decision Tree Example

Imagine you are an IT project manager and you need to decide whether to start a particular project or not. You need to take into account important possible outcomes and consequences.

The decision tree, in this case, might look like the diagram below.

Don’t forget that in each decision tree, there is always a choice to do nothing!

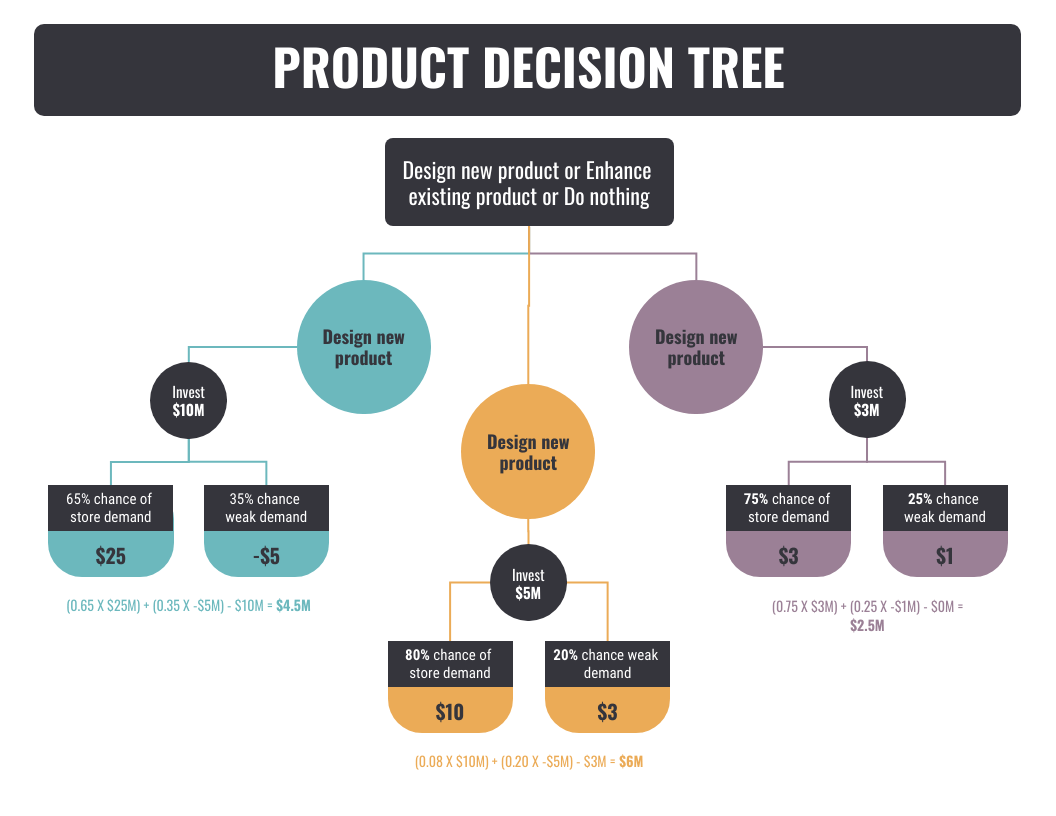

Example 4: Financial Decision Tree Example

When it comes to the finance area, decision trees are a great tool to help you organize your thoughts and to consider different scenarios.

Let’s say you are wondering whether it’s worth investing in new or old expensive machines. This is a classic financial situation. See the decision tree diagram example below.

The above decision tree example represents the financial consequences of investing in old or new machines. It shows that buying new machines brings in much more profit than buying old ones.

Example 5: Very Simple Decision Tree Example

Now that we have the basics, let’s sum up the steps for creating decision tree diagrams.

Steps for Creating Decision Trees:

1. Write the main decision.

Begin the decision tree by drawing a box (the root node) on one edge of your paper. Write the main decision in the box.

2. Draw the lines.

Draw a line leading out from the box for each possible solution or action. Make at least 2, but preferably no more than 4 lines. Keep the lines as far apart as you can so you can enlarge the tree later.

3. Illustrate the outcomes of the solution at the end of each line.

A tip: It is a good practice here to draw a circle if the outcome is uncertain and to draw a square if the outcome leads to another problem.

4. Continue adding boxes and lines.

Continue until there are no more problems, and all lines have either an uncertain outcome or a blank ending.

5. Finish the tree.

The nodes that represent uncertain outcomes remain as they are.

A tip: A very good practice is to assign a score or a percentage chance of an outcome happening. For example, if you know a certain outcome has a 50% chance of happening, place that 50% on the appropriate branch.

When you finish your decision tree, you’re ready to start analyzing the decisions and problems you face.
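One common way to analyze a finished tree is to "roll it back": compute values at the end points, average them at uncertain outcomes (circles) using the percentages on the branches, and pick the best branch at each decision (square). The sketch below applies this to the new versus old machines scenario. All payoffs and the 50% probabilities are made-up numbers for illustration, and the node layout (`type`, `branches`, `value`) is an assumed representation rather than a standard format.

```python
def end(value):
    """Helper that builds an end node with a fixed payoff."""
    return {"type": "end", "value": value}


def rollback(node):
    """Return the value of a node by working from the end points back to the root."""
    kind = node["type"]
    if kind == "end":
        return node["value"]
    if kind == "chance":
        # Probability-weighted average of the child values.
        return sum(p * rollback(child) for p, child in node["branches"])
    if kind == "decision":
        # The decision maker picks the branch with the highest value.
        return max(rollback(child) for _label, child in node["branches"])
    raise ValueError(f"unknown node type: {kind!r}")


def best_option(decision_node):
    """Return the label of the highest-valued branch of a decision node."""
    label, _child = max(decision_node["branches"], key=lambda b: rollback(b[1]))
    return label


# Hypothetical payoffs (in thousands) for the machine investment example.
tree = {
    "type": "decision",
    "branches": [
        ("buy new machines", {"type": "chance", "branches": [(0.5, end(100)), (0.5, end(20))]}),
        ("buy old machines", {"type": "chance", "branches": [(0.5, end(40)), (0.5, end(10))]}),
        ("do nothing", end(0)),
    ],
}

print(best_option(tree), rollback(tree))
```

The "do nothing" branch is included because every decision has that option, and it gives the other branches a baseline to beat.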

How to Create a Decision Tree?

In our IT world, it is a piece of cake to create decision trees. You have plenty of different options. For example, you can use paid or free graphing software or free mind mapping software solutions such as:

- Silverdecisions

The above tools are popular online chart creators that allow you to build almost all types of graphs and diagrams from scratch.

Of course, you might also want to use Microsoft products.

And finally, you can use a piece of paper and a pen or a writing board.

Advantages and Disadvantages of Decision Trees:

Decision trees are powerful tools that can support decision making in different areas such as business, finance, risk management, project management, and healthcare. The trees are also widely used as root cause analysis tools and solutions.

Like anything else, the decision tree has some pros and cons you should know.

Advantages:

- It is very easy to understand and interpret.

- The data for decision trees require minimal preparation.

- They force you to find many possible outcomes of a decision.

- Can be easily used with many other decision tools.

- Helps you to make the best decisions and best guesses on the basis of the information you have.

- Helps you to see the difference between controlled and uncontrolled events.

- Helps you estimate the likely results of one decision against another.

Disadvantages:

- Sometimes decision trees can become too complex.

- The outcomes of decisions may be based mainly on your expectations. This can lead to unrealistic decision trees.

- The diagrams can narrow your focus to critical decisions and objectives.

Conclusion:

The decision tree examples above aim to help you better understand the idea behind the tool. As you can see, the decision tree is a kind of probability tree that helps you to make a personal or business decision.

In addition, they show you a balanced picture of the risks and opportunities related to each possible decision.

If you need more examples, our posts fishbone diagram examples and Venn diagram examples might be of help.

About The Author

Silvia Valcheva

Silvia Valcheva is a digital marketer with over a decade of experience creating content for the tech industry. She has a strong passion for writing about emerging software and technologies such as big data, AI (Artificial Intelligence), IoT (Internet of Things), process automation, etc.

What is decision tree analysis? 5 steps to make better decisions

Decision tree analysis involves visually outlining the potential outcomes, costs, and consequences of a complex decision. These trees are particularly helpful for analyzing quantitative data and making a decision based on numbers. In this article, we’ll explain how to use a decision tree to calculate the expected value of each outcome and assess the best course of action. Plus, get an example of what a finished decision tree will look like.

Have you ever made a decision knowing your choice would have major consequences? If you have, you know that it’s especially difficult to determine the best course of action when you aren’t sure what the outcomes will be.

What is a decision tree?

A decision tree is a flowchart that starts with one main idea and then branches out based on the consequences of your decisions. It’s called a “decision tree” because the model typically looks like a tree with branches.

These trees are used for decision tree analysis, which involves visually outlining the potential outcomes, costs, and consequences of a complex decision. You can use a decision tree to calculate the expected value of each outcome based on the decisions and consequences that led to it. Then, by comparing the outcomes to one another, you can quickly assess the best course of action. You can also use a decision tree to solve problems, manage costs, and reveal opportunities.

Decision tree symbols

A decision tree includes the following symbols:

Alternative branches: Alternative branches are two lines that branch out from one decision on your decision tree. These branches show two outcomes or decisions that stem from the initial decision on your tree.

Decision nodes: Decision nodes are squares and represent a decision being made on your tree. Every decision tree starts with a decision node.

Chance nodes: Chance nodes are circles that show multiple possible outcomes.

End nodes: End nodes are triangles that show a final outcome.

A decision tree analysis combines these symbols with notes explaining your decisions and outcomes, and any relevant values to explain your profits or losses. You can manually draw your decision tree or use a flowchart tool to map out your tree digitally.

What is decision tree analysis used for?

You can use decision tree analysis to make decisions in many areas including operations, budget planning, and project management. Where possible, include quantitative data and numbers to create an effective tree. The more data you have, the easier it will be for you to determine expected values and analyze solutions based on numbers.

For example, if you’re trying to determine which project is most cost-effective, you can use a decision tree to analyze the potential outcomes of each project and choose the project that will most likely result in the highest earnings.

How to create a decision tree

Follow these five steps to create a decision tree diagram to analyze uncertain outcomes and reach the most logical solution.

![problem solving approach decision tree [inline illustration] decision tree analysis in five steps (infographic)](https://assets.asana.biz/transform/06bd6d24-56d2-4550-b13b-f2b3fac01c6a/inline-project-planning-decision-tree-analysis-1-2x?io=transform:fill,width:2560&format=webp)

1. Start with your idea

Begin your diagram with one main idea or decision. You’ll start your tree with a decision node before adding single branches to the various decisions you’re deciding between.

For example, if you want to create an app but can’t decide whether to build a new one or upgrade an existing one, use a decision tree to assess the possible outcomes of each.

In this case, the initial decision node is:

Create an app

The three options—or branches—you’re deciding between are:

Building a new scheduling app

Upgrading an existing scheduling app

Building a team productivity app

2. Add chance and decision nodes

After adding your main idea to the tree, continue adding chance or decision nodes after each decision to expand your tree further. A chance node may need an alternative branch after it because there could be more than one potential outcome for choosing that decision.

For example, if you decide to build a new scheduling app, there’s a chance that your revenue from the app will be large if it’s successful with customers. There’s also a chance the app will be unsuccessful, which could result in a small revenue. Mapping both potential outcomes in your decision tree is key.

3. Expand until you reach end points

Keep adding chance and decision nodes to your decision tree until you can’t expand the tree further. At this point, add end nodes to your tree to signify the completion of the tree creation process.

Once you’ve completed your tree, you can begin analyzing each of the decisions.

4. Calculate tree values

Ideally, your decision tree will have quantitative data associated with it. The most common data used in decision trees is monetary value.

For example, it’ll cost your company a specific amount of money to build or upgrade an app. It’ll also cost more or less money to create one app over another. Writing these values in your tree under each decision can help you in the decision-making process.

You can also try to estimate the expected value you’ll create, whether large or small, for each decision. Once you know the cost of each outcome and the probability it will occur, you can calculate the expected value of each outcome using the following formula:

Expected value (EV) = (First possible outcome x Likelihood of outcome) + (Second possible outcome x Likelihood of outcome) - Cost

Calculate the expected value by multiplying both possible outcomes by the likelihood that each outcome will occur and then adding those values. You’ll also need to subtract any initial costs from your total.
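Here is a minimal sketch of the formula applied to two of the app options. The revenue figures, likelihoods, and costs are invented for illustration only, and the function name `net_expected_value` is an assumption rather than an established term.

```python
def net_expected_value(outcomes, cost):
    """Sum of payoff x likelihood over all outcomes, minus the upfront cost.

    outcomes: list of (payoff, likelihood) pairs for one decision.
    """
    return sum(payoff * likelihood for payoff, likelihood in outcomes) - cost


# Hypothetical figures: (revenue, likelihood) for a large and a small outcome.
new_app = net_expected_value([(200_000, 0.5), (50_000, 0.5)], cost=75_000)
upgrade = net_expected_value([(120_000, 0.5), (40_000, 0.5)], cost=25_000)

print(f"Build new app: {new_app:,.0f}")
print(f"Upgrade existing app: {upgrade:,.0f}")
```

With these made-up numbers the upgrade comes out slightly ahead, because its lower cost outweighs the smaller potential revenue.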

5. Evaluate outcomes

Once you have your expected outcomes for each decision, determine which decision is best for you based on the amount of risk you’re willing to take. The highest expected value may not always be the one you want to go for. That’s because, even though it could result in a high reward, it also means taking on the highest level of project risk.

Keep in mind that the expected value in decision tree analysis comes from a probability algorithm. It’s up to you and your team to determine how to best evaluate the outcomes of the tree.

Pros and cons of decision tree analysis

Used properly, decision tree analysis can help you make better decisions, but it also has its drawbacks. As long as you understand the flaws associated with decision trees, you can reap the benefits of this decision-making tool.

![problem solving approach decision tree [inline illustration] pros and cons of decision tree analysis (infographic)](https://assets.asana.biz/transform/ab6b5d75-1d26-4ec1-a00c-4d657e4dd7be/inline-project-planning-decision-tree-analysis-2-2x?io=transform:fill,width:2560&format=webp)

When you’re struggling with a complex decision and juggling a lot of data, decision trees can help you visualize the possible consequences or payoffs associated with each choice.

Transparent: The best part about decision trees is that they provide a focused approach to decision making for you and your team. When you parse out each decision and calculate their expected value, you’ll have a clear idea about which decision makes the most sense for you to move forward with.

Efficient: Decision trees are efficient because they require little time and few resources to create. Other decision-making tools like surveys, user testing, or prototypes can take months and a lot of money to complete. A decision tree is a simple and efficient way to decide what to do.

Flexible: If you come up with a new idea once you’ve created your tree, you can add that decision into the tree with little work. You can also add branches for possible outcomes if you gain information during your analysis.

There are drawbacks to a decision tree that make it a less-than-perfect decision-making tool. By understanding these drawbacks, you can use your tree as part of a larger forecasting process.

Complex: While decision trees often come to definite end points, they can become complex if you add too many decisions to your tree. If your tree branches off in many directions, you may have a hard time keeping the tree under wraps and calculating your expected values. The best way to use a decision tree is to keep it simple so it doesn’t cause confusion or lose its benefits. This may mean using other decision-making tools to narrow down your options, then using a decision tree once you only have a few options left.

Unstable: It’s important to keep the values within your decision tree stable so that your calculations stay accurate. If you change even a small part of the data, the expected values across the rest of the tree can change significantly.

Risky: Because the expected value is built from estimated probabilities, it is an estimate, not an accurate prediction of each outcome. This means you must take these estimates with a grain of salt. If you don’t sufficiently weigh the probability and payoffs of your outcomes, you could take on a lot of risk with the decision you choose.

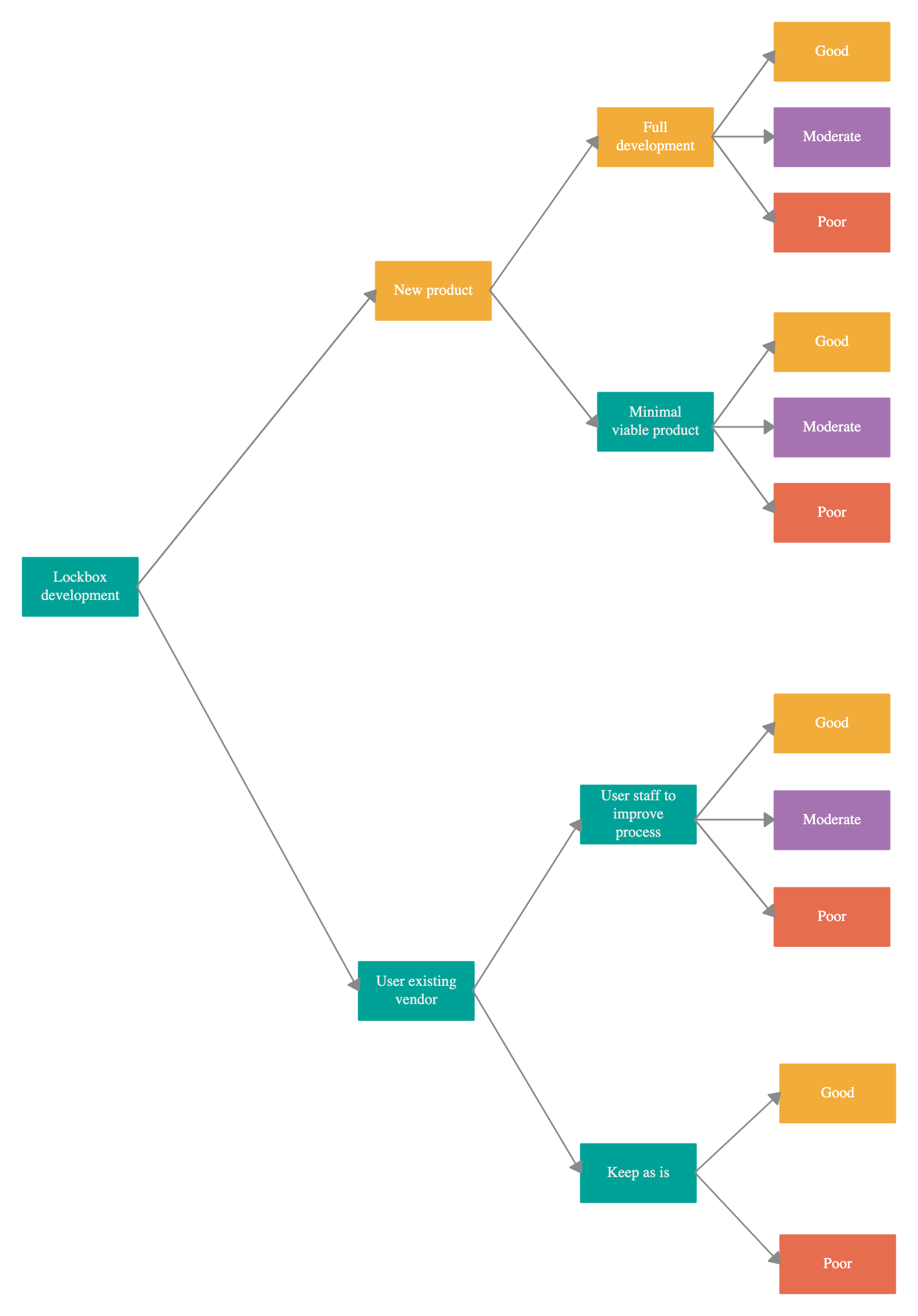

Decision tree analysis example

In the decision tree analysis example below, you can see how you would map out your tree diagram if you were choosing between building or upgrading a new software app.

As the tree branches out, your outcomes involve large and small revenues and your project costs are taken out of your expected values.

Decision nodes from this example:

Build new scheduling app: $50K

Upgrade existing scheduling app: $25K

Build team productivity app: $75K

Chance nodes from this example:

Large and small revenue for decision one: 40% and 55%

Large and small revenue for decision two: 60% and 38%

Large and small revenue for decision three: 55% and 45%

End nodes from this example:

Potential profits for decision one: $200K or $150K

Potential profits for decision two: $100K or $80K

Potential profits for decision three: $250K or $200K

![problem solving approach decision tree [inline illustration] decision tree analysis (example)](https://assets.asana.biz/transform/d3d2161f-6968-4d42-ab08-23a005d1ee0c/inline-project-planning-decision-tree-analysis-3-2x?io=transform:fill,width:2560&format=webp)

Although building a new team productivity app would cost the most money for the team, the decision tree analysis shows that this project would also result in the most expected value for the company.
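The comparison can be reproduced with a short Python sketch that applies the expected value formula to the figures listed for this example. It rests on two assumptions: that the first probability in each pair belongs to the larger profit, and that the probabilities should be used exactly as listed, even though the first two pairs do not sum to 100%. The variable and function names are illustrative, and the results may differ from the figures shown in the illustration.

```python
# Figures in $K, copied from the decision, chance, and end nodes listed above.
# Assumption: the first probability in each pair goes with the larger profit.
options = {
    "Build new scheduling app": {"cost": 50, "outcomes": [(200, 0.40), (150, 0.55)]},
    "Upgrade existing scheduling app": {"cost": 25, "outcomes": [(100, 0.60), (80, 0.38)]},
    "Build team productivity app": {"cost": 75, "outcomes": [(250, 0.55), (200, 0.45)]},
}


def expected_value(option):
    """Probability-weighted profit minus the project cost."""
    weighted = sum(payoff * prob for payoff, prob in option["outcomes"])
    return weighted - option["cost"]


results = {name: expected_value(option) for name, option in options.items()}
for name, ev in results.items():
    print(f"{name}: ${ev:.1f}K")

best = max(results, key=results.get)
print("Highest expected value:", best)
```

Under these assumptions, the expected values work out to roughly $112.5K, $65.4K, and $152.5K, so the team productivity app comes out on top despite its higher cost.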

Use a decision tree to find the best outcome

You can draw a decision tree by hand, but using decision tree software to map out possible solutions will make it easier to add various elements to your flowchart, make changes when needed, and calculate tree values. With Asana’s Lucidchart integration, you can build a detailed diagram and share it with your team in a centralized project management tool.

Decision tree software will make you feel confident in your decision-making skills so you can successfully lead your team and manage projects.

Decision Tree Analysis: Definition, Examples, How to Perform

Written by: Letícia Fonseca May 05, 2022

The purpose of a decision tree analysis is to show how various alternatives can create different possible solutions to solve problems. A decision tree, in contrast to traditional problem-solving methods, gives a “visual” means of recognizing uncertain outcomes that could result from certain choices or decisions.

For those who have never worked with decision trees before, this article will explain how they function and it will also provide some examples to illustrate the ideas. To save you time, use Venngage’s Decision Tree Maker or browse our gallery of decision tree templates to help you get started.

Click to jump ahead:

- What is a decision tree analysis?

- What is the importance of decision tree analysis?

- 4 decision tree analysis examples

- 5 steps to create a decision node analysis

- When do you use or apply a decision tree analysis?

- How to create a decision node diagram with Venngage

- FAQs on decision tree analysis

A decision tree is a diagram that depicts the many options for solving an issue. Given particular criteria, decision trees usually point to the most beneficial option, or a combination of alternatives, for many cases. By employing easy-to-understand axes and graphics, a decision tree makes difficult situations more manageable. Each box or node represents an event, action, decision, or attribute linked with the problem under investigation.

In business planning, a decision tree is used for risk assessment, asset values, manufacturing costs, marketing strategies, investment plans, failure mode and effects analyses (FMEA), and scenario-building. Data from a decision tree can also be used to build predictive models.

There are four basic forms of decision tree analysis, each with its own set of benefits and scenarios for which it is most useful. These subtypes include decision under certainty, decision under risk, decision-making, and decision under uncertainty. In terms of how they are addressed and applied to diverse situations, each type has its unique impact.

Business owners and other decision-makers can use a decision tree to help them consider their alternatives and the potential repercussions of each one. The examination of a decision tree can be used to:

- Determine the level of risk that each option entails. Before making a final decision, you can see how changing one component impacts others, so you can identify where more research or information is needed. Data from decision trees can also be utilized to build predictive models or to analyze an expected value.

- Demonstrate how particular acts or occurrences may unfold in the context of other events. It’s easy to see how different decisions and possible outcomes interact when you’re looking at decision trees.

- Concentrate your efforts. The most effective ways for reaching the desired and final outcome are shown in decision trees. They can be utilized in a multitude of industries, including goal setting, project management, manufacturing, marketing, and more.

Advantages of using a tree diagram as a decision-making tool

Decision tree analysis can make complex decisions easier. By simplifying concepts, decision trees show how changing one factor affects the others. A summary of data can also be included in a decision tree as a reference or as part of a report. They show which methods are most effective in reaching the outcome, but they don’t say what those strategies should be.

Even if new information arises later that contradicts previous assumptions and hypotheses, decision-makers may find it difficult to change their minds once they have made and implemented an initial choice. Decision-makers can use decision-making tools like tree analysis to experiment with different options before reaching a final decision; this can help them gain expertise in making difficult decisions.

When presented with a well-reasoned argument based on facts rather than simply articulating their own opinion, decision-makers may find it easier to persuade others of their preferred solution. A decision tree is very useful when there is any uncertainty regarding which course of action will be most advantageous or when prior data is inadequate or partial.

Before implementing possible solutions, a decision tree analysis can assist business owners and other decision-makers in considering the potential ramifications of different solutions.

Disadvantages of using a tree diagram as a decision-making tool

Rather than displaying real outcomes, decision trees only show patterns connected with decisions. Because decision trees don’t provide information on aspects like implementation, timeliness, and prices, more research may be needed to figure out if a particular plan is viable.

This type of model does not provide insight into why certain events are likely while others are not, but it can be used to develop prediction models that illustrate the chance of an event occurring in certain situations.

Many businesses employ decision tree analysis to establish effective business, marketing, and advertising strategies. Based on the probable consequences of each given course of action, decision trees help marketers evaluate which of their target audiences may respond most favorably to different sorts of advertisements or campaigns.

For example, a marketer might wonder which style of advertising strategy will yield the best results. A decision tree analysis would help them determine the best way to create an ad campaign, whether print or online, by considering how each option could affect sales in specific markets, and then decide which option would deliver the best results while staying within their budget.

As another example, a corporation that wishes to grow sales might start by determining its course of action, including the many marketing methods it can use to create leads. Before making a decision, it may use a decision tree analysis to explore each alternative and assess the probable repercussions.

If a company chooses TV ads as their proposed solution, decision tree analysis might help them figure out what aspects of their TV adverts (e.g. tone of voice and visual style) make consumers more inclined to buy, so they can better target new customers or get more out of their advertising dollars.

Related: 15+ Decision Tree Infographics to Visualize Problems and Make Better Decisions

This style of problem-solving helps people make better decisions by allowing them to better comprehend what they’re entering into before they commit too much money or resources. The five-step decision tree analysis procedure is as follows:

1. Determine your options

List the options that can help deal with an issue or answer a question. For decision tree analysis to be successful, you need a problem to be addressed, a goal to be achieved, and any additional criteria that will influence the outcome, especially when there are multiple options for resolving a problem or a topic.

2. Examine the most effective course of action

Take into account the potential rewards as well as the risks and expenses that each alternative may entail. If you’re starting a new firm, for example, you’ll need to decide what kind of business model or service to offer, how many employees to hire, where to situate your company, and so on.

3. Determine how a specific course will affect your company’s long-term success.

Depending on the data being studied, several criteria are defined for decision tree analysis. For instance, by comparing the cost of a drug or therapy to the effects of other potential therapies, decision tree analysis can be used to determine how effective a drug or treatment will be. When making decisions, a decision tree analysis can also assist in prioritizing the expected values of various factors.

4. Use each alternative course of action to examine multiple possible outcomes

This way you can decide which decision you believe is the best and what criteria it meets (the “branches” of your decision tree). Concentrate on determining which solutions are most likely to bring you closer to attaining your goal of resolving your problem while still meeting any of the earlier specified important requirements or additional considerations.

5. Evaluate which choice will be most effective

Compare the potential outcomes of each branch. Implement your choice and track its effects to confirm that you assessed the benefits and drawbacks of each option appropriately, so that you can concentrate on the options that offer the best return on investment while minimizing the risks and drawbacks.

A decision tree diagram employs symbols to represent the problem’s events, actions, decisions, or qualities. Given particular criteria, decision trees usually point to the most beneficial option, or a combination of alternatives, for many cases.

By employing easy-to-understand axes and drawings, as well as breaking down the critical components involved with each choice or course of action, decision trees help make difficult situations more manageable. This type of analysis seeks to help you make better decisions about your business operations by identifying potential risks and expected consequences.

In this case, the tree can be seen as a metaphor for problem-solving: it has numerous roots that descend into diverse soil types and reflect one’s varied options or courses of action, while each branch represents the possible and uncertain outcomes. To use it, you build the “tree” from specified criteria or an initial set of possible solutions.

You may start with a query like, “What is the best approach for my company to grow sales?” After that, you’d make a list of feasible actions to take, as well as the probable results of each one. The goal of a decision tree analysis is to help you understand the potential repercussions of your decisions before you make them so that you have the best chance of making a good decision.

Regardless of the level of risk involved, decision tree analysis can be a beneficial tool for both people and groups who want to make educated decisions.

Venngage has built-in templates that are already arranged according to various data kinds, which can assist in swiftly building decision nodes and decision branches. Here’s how to create one with Venngage:

1. Sign up for a free account here.

2. From Home or your dashboard, click on Templates.

3. There are hundreds of templates to pick from, but Venngage’s built-in Search engine makes it simple to find what you’re looking for.

4. Once you have chosen the template that’s best for you, click Create to begin editing.

5. Venngage allows you to download your project as a PNG, PNG HD, PDF or PowerPoint with a Business plan.

Venngage also has a business feature called My Brand Kit that enables you to add your company’s logo, color palette, and fonts to all your designs with a single click.

For example, you can make the previous decision tree analysis template reflect your brand design by uploading your brand logo, fonts, and color palette using Venngage’s branding feature.

Not only are Venngage templates free to use and professionally designed, but they are also tailored for various use cases and industries to fit your exact needs and requirements.

A business account also includes the real-time collaboration feature, so you can invite members of your team to work simultaneously on a project.

Venngage allows you to share your decision tree online as well as download it as a PNG or PDF file. That way, your design will always be presentation-ready.

How important is a decision tree in management?

Project managers can utilize decision tree analysis to produce successful solutions, making it a key element of their success process. They can use a decision tree to think about how each decision will affect the company as a whole and make sure that all factors are taken into account before making a decision.

This decision tree can assist you in making smarter investments as well as identifying any dangers or negative outcomes that may arise as a result of certain choices. You will have more information on what works best if you explore all potential outcomes so that you can make better decisions in the future.

What is a decision tree in system analysis?

A decision tree is useful for studying several systems that work together. You can use decision tree analysis to see how each portion of a system interacts with the others, which can help you resolve flaws or restrictions in the system.

Create a professional decision tree with Venngage

Simply defined, a decision tree analysis is a visual representation of the alternative solutions and expected outcomes you have while making a decision. It can help you quickly see all your potential outcomes and how each option might play out.

Venngage makes the process of creating a decision tree simple and offers a variety of templates to help you. It is the most user-friendly platform for building professional-looking decision trees and other data visualizations. Sign up for a free account and give it a shot right now. You might be amazed at how much easier it is to make judgments when you have all of your options in front of you.

Hacking the Case Interview

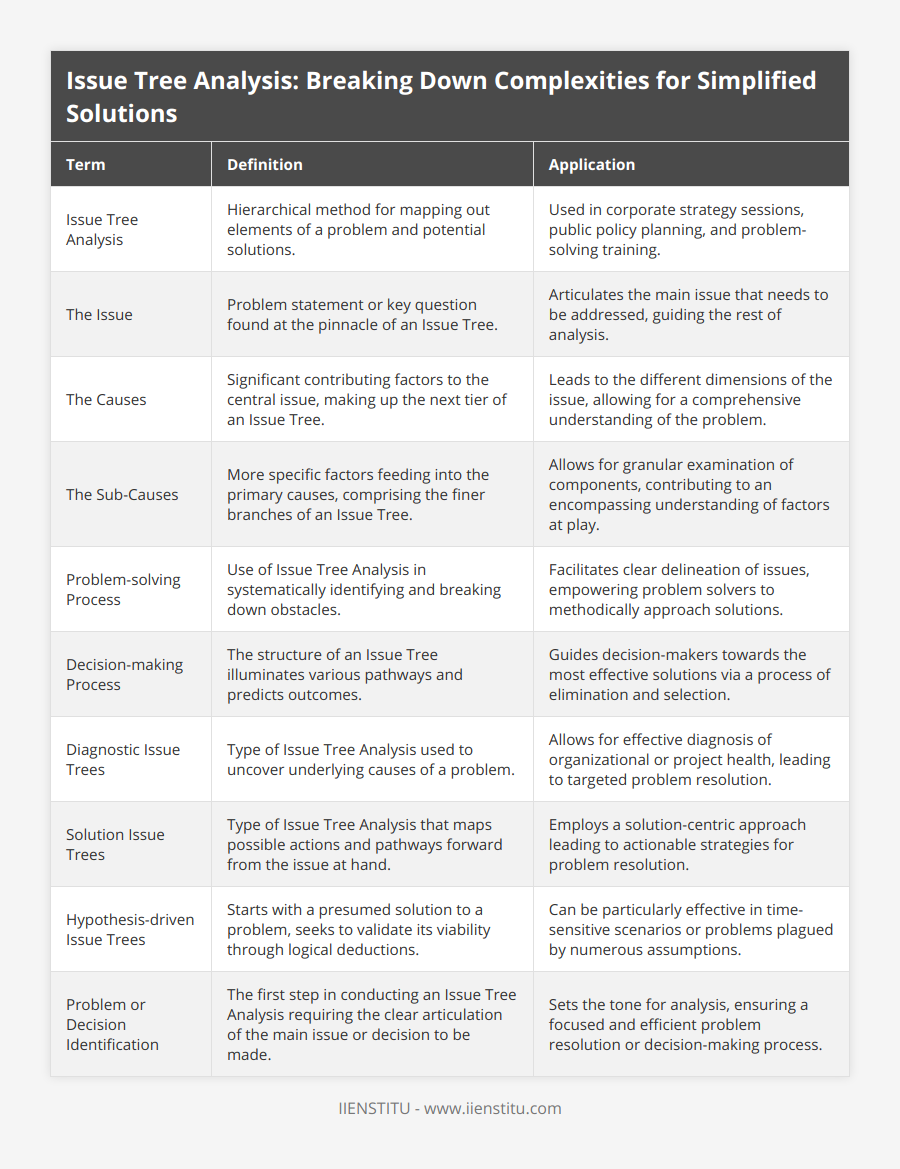

An issue tree is a structured framework used to break down and analyze complex problems or questions into smaller components. It is a visual representation of the various aspects, sub-issues, and potential solutions related to a particular problem.

Issue trees are commonly used in business, consulting, problem-solving, and decision-making processes.

If you’re looking to better understand issue trees and how to use them in consulting case interviews or in business, we have you covered.

In this comprehensive article, we’ll cover:

- What is an issue tree?

- Why are issue trees important?

- How do I create an issue tree?

- How do I use issue trees in consulting case interviews?

- What are examples of issue trees?

- What are tips for making effective issue trees?

If you’re looking for a step-by-step shortcut to learn case interviews quickly, enroll in our case interview course. These insider strategies from a former Bain interviewer helped 30,000+ land consulting offers while saving hundreds of hours of prep time.

What is an Issue Tree?

An issue tree is a visual representation of a complex problem or question broken down into smaller, more manageable components. It consists of a top level issue, visualized as the root question, and sub-issues, visualized as branches and sub-branches.

- Top Level Issue (Root Question): This is the main problem or question that needs to be addressed. It forms the root of the tree.

- Sub-issues (Branches): Underneath the top level issue are branches representing the major categories or dimensions of the problem. These are the high-level areas that contribute to the overall problem.

- Further Sub-issues (Sub-branches): Each branch can be broken down further into more specific sub-issues.

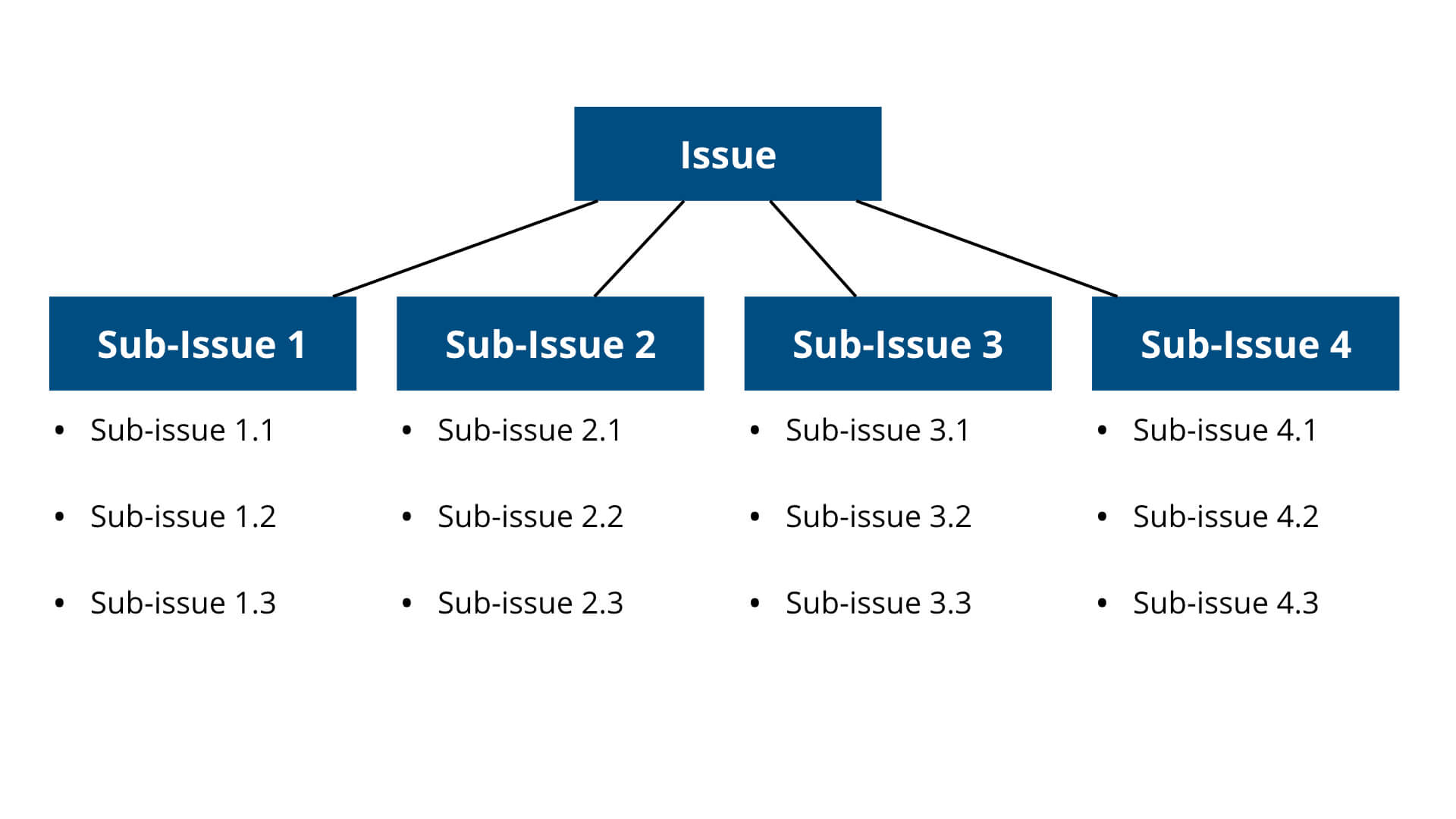

Issue trees generally take on the following structure.

Issue trees get their name because the primary issue that you are solving for can be broken down into smaller issues or branches. These issues can then be further broken down into even smaller issues or branches.

This can be continued until you are left with a long list of small issues that are much simpler and more manageable. No matter how complicated or difficult a problem is, an issue tree can provide a way to structure the problem to make it easier to solve.

As an example, let’s say that we are trying to help a lemonade stand increase their profits. The overall problem is determining how to increase profits.

Since profit is equal to revenue minus costs, we can break this problem down into two smaller problems:

- How can we increase revenues?

- How can we decrease costs?

Since revenue is equal to quantity times price, we can further break this revenue problem down into two even smaller problems:

- How can we increase quantity sold?

- How can we increase price?

Looking at the problem of how to increase quantity sold, we can further break that problem down:

- How can we increase the quantity of lemonade sold?

- How can we increase the quantity of other goods sold?

We can repeat the same procedure for the costs problem since we know that costs equal variable costs plus fixed costs.

- How can we decrease variable costs?

- How can we decrease fixed costs?

Looking at the problem of how to decrease variable costs, we can further break that down by the different variable cost components of lemonade:

- How can we decrease costs of lemons?

- How can we decrease costs of water?

- How can we decrease costs of ice?

- How can we decrease costs of sugar?

- How can we decrease costs of cups?

The overall issue tree for this example would look like the following:

In this example, the issue tree is a special kind of issue tree known as a profit tree.
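The lemonade stand breakdown can also be written as a nested structure, which makes the hierarchy explicit. The sketch below is one possible representation, not a prescribed format: the nested-dictionary layout, the wording of the root question, and the helper names `render` and `leaves` are assumptions made for illustration.

```python
# Each key is an issue; its value holds that issue's sub-issues.
# An empty dict marks an issue that has not been broken down further.
issue_tree = {
    "How can we increase profits?": {
        "How can we increase revenues?": {
            "How can we increase quantity sold?": {
                "How can we increase the quantity of lemonade sold?": {},
                "How can we increase the quantity of other goods sold?": {},
            },
            "How can we increase price?": {},
        },
        "How can we decrease costs?": {
            "How can we decrease variable costs?": {
                "How can we decrease costs of lemons?": {},
                "How can we decrease costs of water?": {},
                "How can we decrease costs of ice?": {},
                "How can we decrease costs of sugar?": {},
                "How can we decrease costs of cups?": {},
            },
            "How can we decrease fixed costs?": {},
        },
    }
}


def render(tree, depth=0):
    """Return the tree as indented outline lines, one issue per line."""
    lines = []
    for issue, children in tree.items():
        lines.append("  " * depth + "- " + issue)
        lines.extend(render(children, depth + 1))
    return lines


def leaves(tree):
    """Return the issues that have no sub-issues, in outline order."""
    result = []
    for issue, children in tree.items():
        if children:
            result.extend(leaves(children))
        else:
            result.append(issue)
    return result


print("\n".join(render(issue_tree)))
print(f"{len(leaves(issue_tree))} smallest problems to investigate")
```

The leaves are the small, manageable problems that the breakdown produces, which is the list you would actually work through.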

Why are Issue Trees Important?

Issue trees are helpful because they facilitate systematic analysis, managing complexity, prioritization, generating solutions, identifying root causes, work subdivision, roadmap generation, and effective communication.

Systematic analysis: Issue trees guide a systematic analysis of the problem. By dissecting the problem into its constituent parts, you can thoroughly examine each aspect and understand its implications.

Managing complexity: Complex problems often involve multiple interrelated factors. Issue trees provide a way to manage this complexity by organizing and visualizing the relationships between different components.

Prioritization: Issue trees help in prioritizing actions. By assessing the importance and impact of each sub-issue, you can determine which aspects of the problem require immediate attention.

Generating solutions: Issue trees facilitate the generation of potential solutions or strategies for each component of the problem. This allows for a more comprehensive approach to problem-solving.

Identifying root causes: Issue trees help in identifying the root causes of a problem. By drilling down through the sub-issues, you can uncover the underlying factors contributing to the main issue.

Work subdivision: Issue trees provide you with a list of smaller, distinct problems or areas to explore. This distinction makes it easy for you to divide up work.

Roadmap generation: Issue trees lay out all of the different areas or issues that you need to focus on in order to solve the overall problem. This gives you a clear idea of where to focus your attention and work.

Effective communication: Issue trees are powerful communication tools. Visualizing the problem in a structured format helps in explaining it to others, including team members, stakeholders, or clients.

How Do I Create An Issue Tree?

Creating an issue tree involves several steps. Here's a step-by-step guide to help you through the process:

Step 1: Define the top-level issue

Start by clearly articulating the main problem or question that you want to address. This will form the root of your issue tree.

Step 2: Identify the branches (sub-issues)

Consider the major sub-issues that contribute to the overall problem. These will become the branches of your issue tree. Brainstorm and list them down.

There are four major ways that you can break down the root problem in an issue tree. You can break down the issue by stakeholder, process, segment, or math.

- Stakeholder: Break the problem down by identifying all stakeholders involved. This may include the company, customers, competitors, suppliers, manufacturers, distributors, and retailers. Each stakeholder becomes a branch for the top-level issue.

- Process: Break the problem down by identifying all of the different steps in the process. Each step becomes a branch for the top-level issue.

- Segment: Break the problem down into smaller segments. This may include breaking down the problem by geography, product, customer segment, market segment, distribution channel, or time horizon. Each segment becomes a branch for the top-level issue.

- Math: Break a problem down by quantifying the problem into an equation or formula. Each term in the equation is a branch for the top-level issue.

Step 3: Break down each branch

For each branch, ask yourself if there are further components that contribute to it. If so, break down each branch into more specific components. Continue this process until you've reached a level of detail that allows for meaningful analysis.

Similar to the previous step, you can break down a branch by stakeholder, process, segment, or by math.

Step 4: Review and refine

Take a step back and review your issue tree. Make sure it accurately represents the problem and its components. Look for any missing or redundant branches or sub-issues.

Step 5: Prioritize and evaluate

Consider assigning priorities to different sub-issues or potential solutions. This will help guide your decision-making process.

How Do I Use Issue Trees in Consulting Case Interviews?

Issue trees are used near the beginning of the consulting case interview to break down the business problem into smaller, more manageable components.

After the interviewer provides the case background information, you’ll be expected to quickly summarize the context of the case and verify the case objective. After asking clarifying questions, you’ll ask for a few minutes of silence to create an issue tree.

After you have created an issue tree, here’s how you would use it:

Step 1: Walk your interviewer through the issue tree

Once you’ve created an issue tree, provide a concise summary of how it's structured and how it addresses the problem at hand. Explain the different branches and sub-branches. They may ask a few follow-up questions.

As you are presenting your issue tree, periodically check in with the interviewer to ensure you're on the right track. Your interviewer may provide some input or guidance on improving your issue tree.

Step 2: Identify an area of your issue tree to start investigating

Afterwards, you’ll use the issue tree to help identify a branch to start investigating. There is generally no wrong answer here as long as you have a reason that supports why you want to start with a particular branch.

To determine which branch to start investigating, ask yourself a few questions. What is the most important sub-issue? Consider factors like urgency, impact, or feasibility. What is your best guess for how the business problem can be solved?

Step 3: Gather data and information

Collect relevant facts, data, and information for the sub-issue that you are investigating. This will provide the necessary context and evidence for your analysis.

Step 4: Record key insights on the issue tree

After diving deeper into each sub-issue or branch on your issue tree, you may find it helpful to write a few bullets on the key takeaways or insights that you’ve gathered through your analysis.

This will help you remember all the work that you have done during the case interview so far. It’ll also help you develop a recommendation at the end of the case interview because you’ll quickly be able to read a summary of all of your analysis.

Step 5: Iterate and adjust as needed

As you work through the problem-solving process, be prepared to adjust and update the issue tree based on new information, insights, or changes in the situation.

Remember, creating an issue tree is not a one-size-fits-all process. It's a dynamic tool that can be adapted to suit the specific needs and complexity of the problem you're addressing.

Step 6: Select the next area of your issue tree to investigate

Once you have finished analyzing a branch or sub-issue on your issue tree and reached a satisfactory insight or conclusion, move onto the next branch or sub-issue.

Again, consider factors like urgency, impact, or feasibility when prioritizing which branch or sub-issue to dive deeper into. Repeat this step until the end of the case interview when you are asked for a final recommendation.

What are Examples of Issue Trees?

Below are five issue tree examples for five common types of business situations and case interviews.

If you want to learn strategies on how to create unique and tailored issue trees for any case interview, check out our comprehensive article on case interview frameworks.

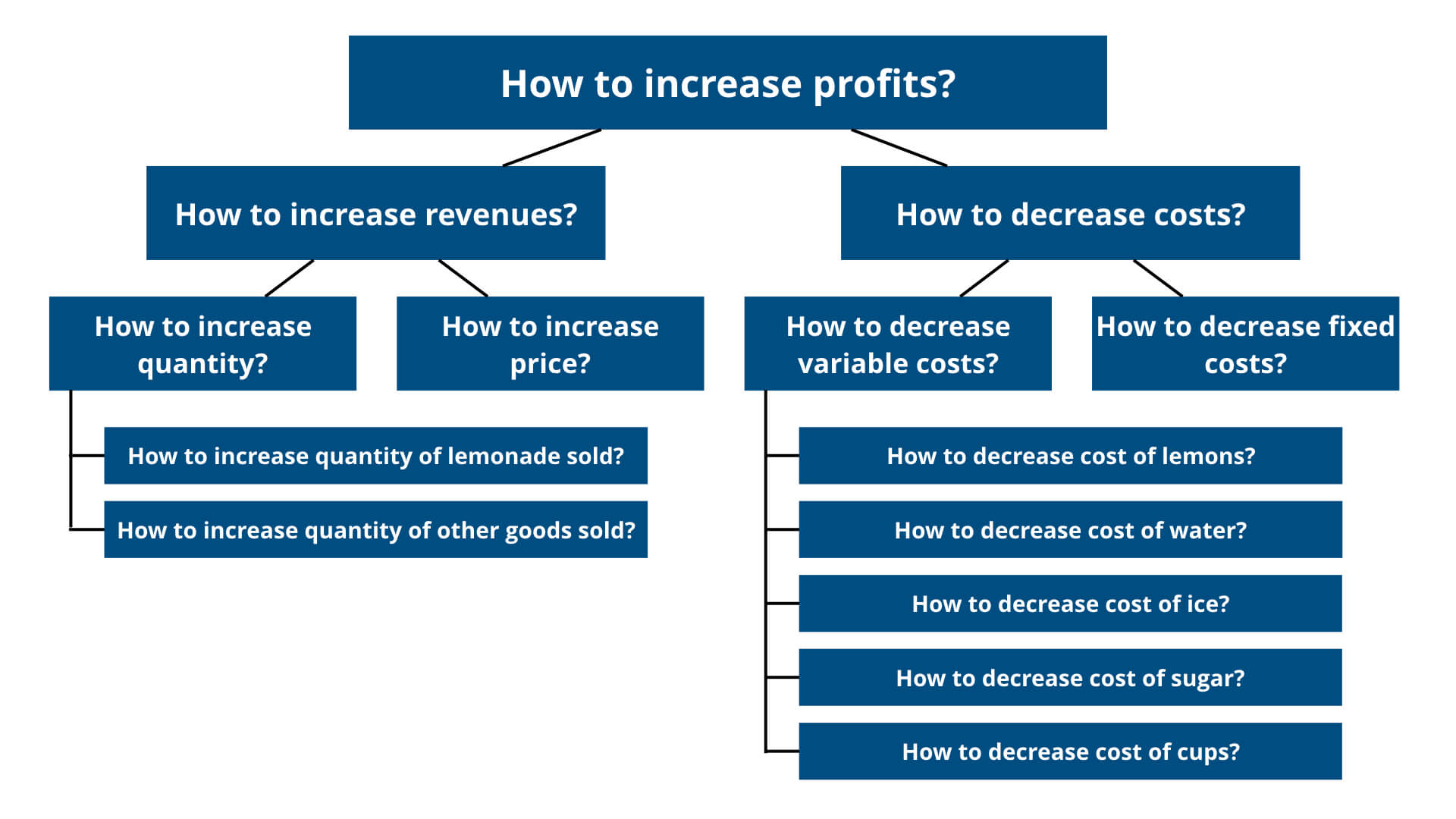

Profitability Issue Tree Example

Profitability cases ask you to identify what is causing a company’s decline in profits and what can be done to address this problem.

A potential issue tree template for this case could explore four major issues:

- What is causing the decline in profitability?

- Is the decline due to changes among customers?

- Is the decline due to changes among competitors?

- Is the decline due to market trends?

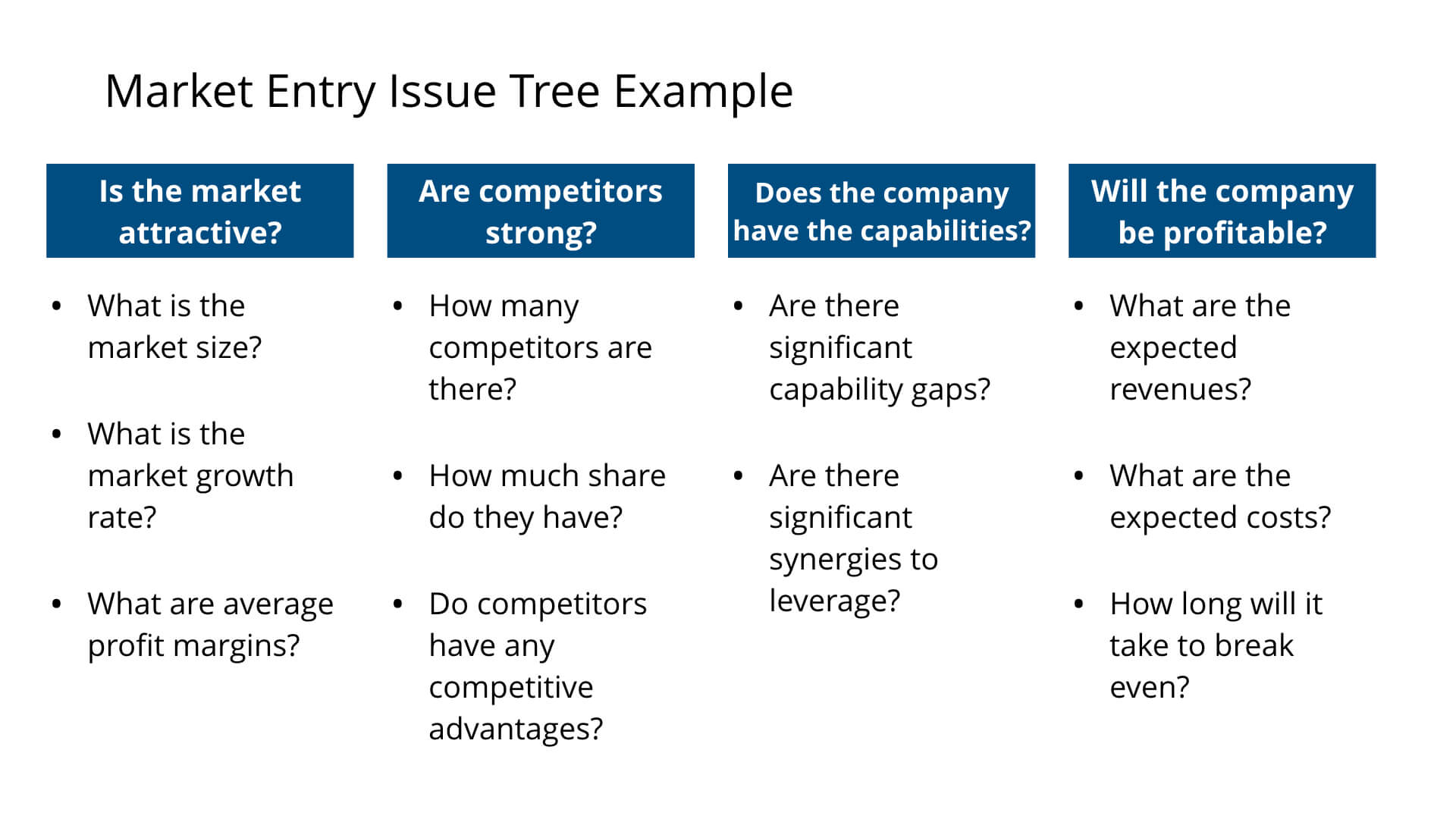

Market Entry Issue Tree Example

Market entry cases ask you to determine whether a company should enter a new market.

- Is the market attractive?

- Are competitors strong?

- Does the company have the capabilities to enter?

- Will the company be profitable from entering the market?

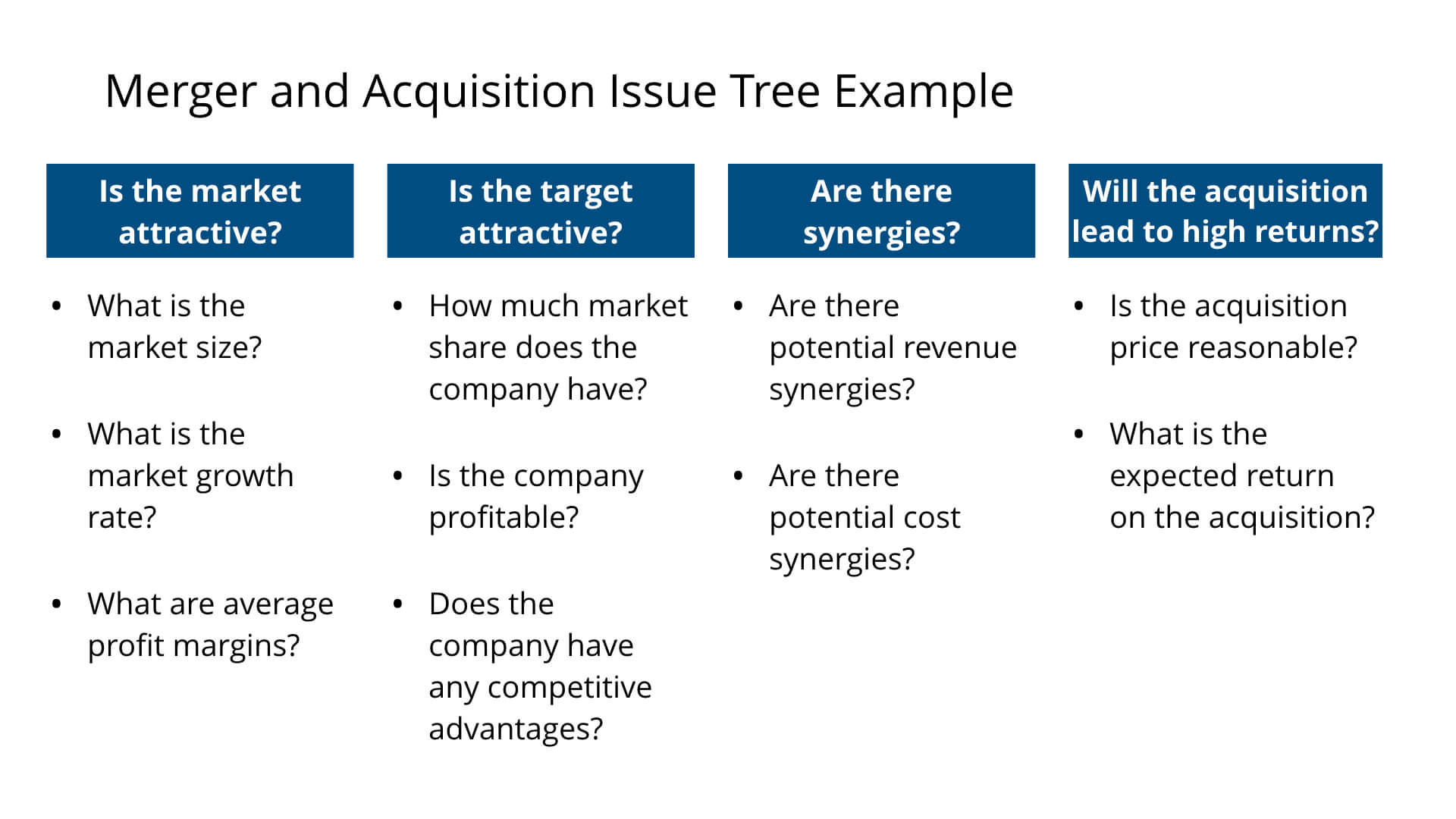

Merger and Acquisition Issue Tree Example

Merger and acquisition cases ask you to determine whether a company or private equity firm should acquire a particular company.

- Is the market that the target is in attractive?

- Is the acquisition target an attractive company?

- Are there any acquisition synergies?

- Will the acquisition lead to high returns?

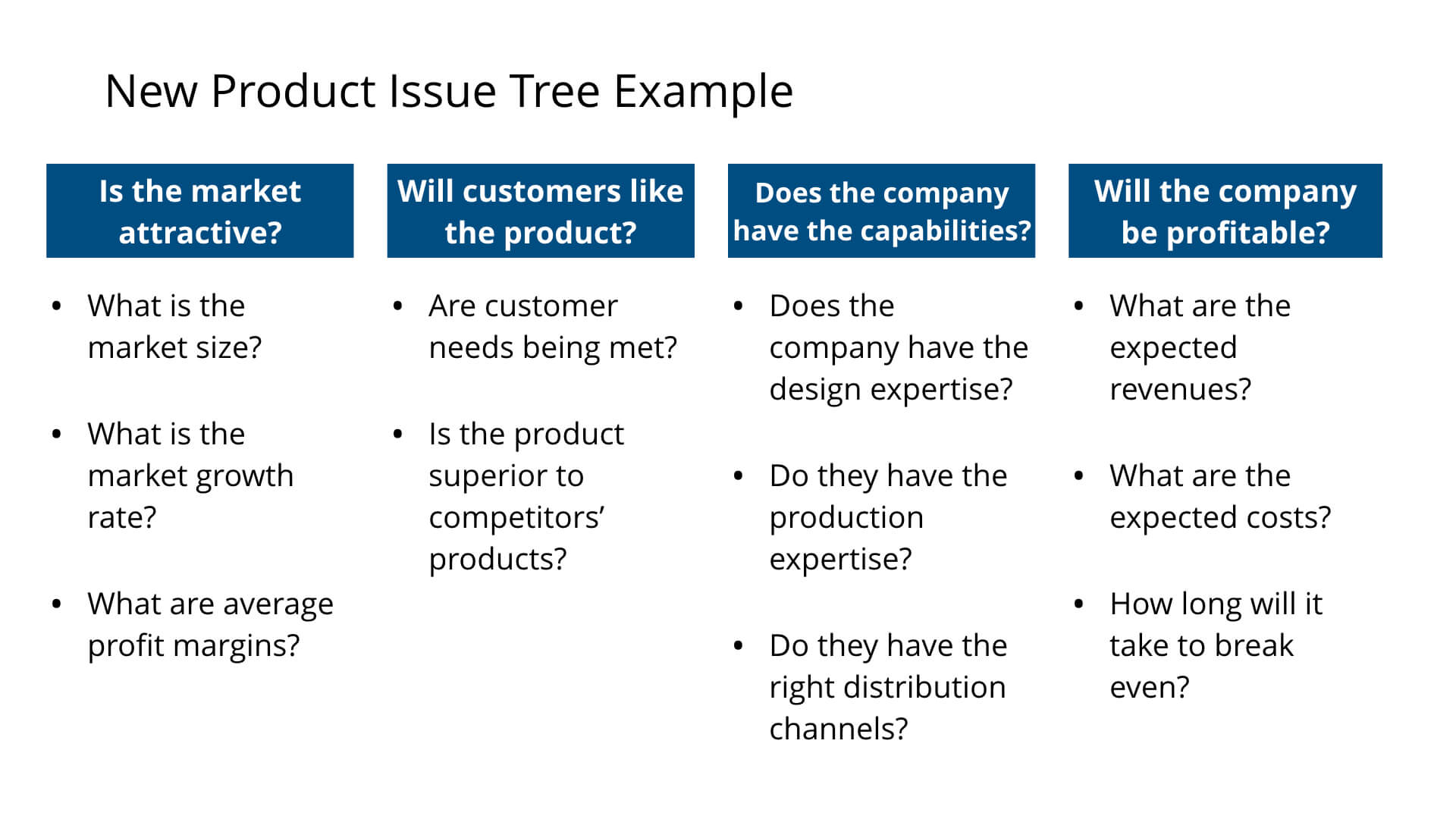

New Product Issue Tree Example

New product cases ask you to determine whether a company should launch a new product or service.

A potential issue tree template for this case could explore three major issues:

- Will customers like the product?

- Does the company have the capabilities to successfully launch the product?

- Will the company be profitable from launching the product?

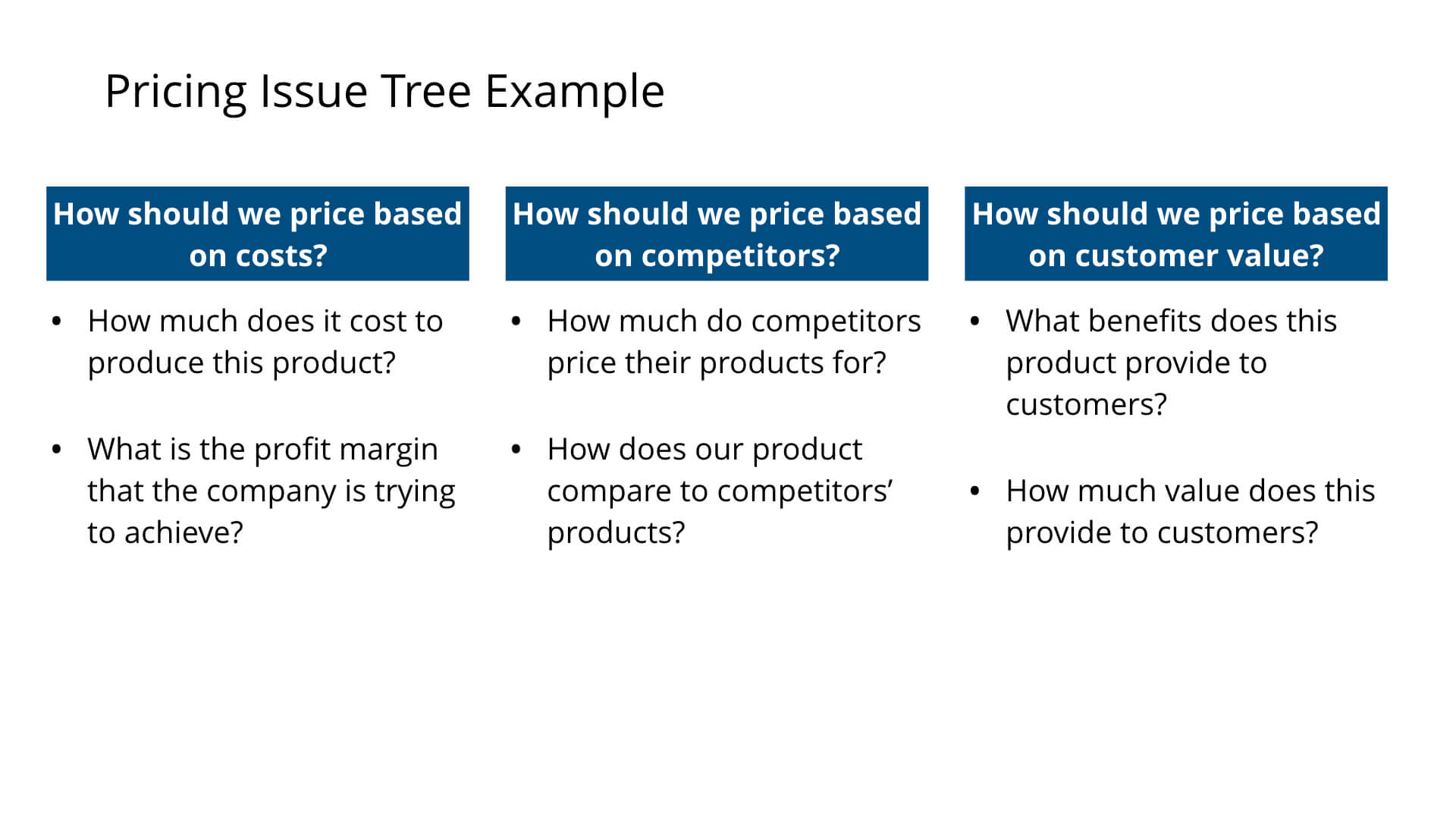

Pricing Issue Tree Example

Pricing cases ask you to determine how to price a particular product or service.

A potential issue tree template for this case could explore three major issues:

- How should we price based on the product cost?

- How should we price based on competitors’ products?

- How should we price based on customer value?

What are Tips for Making Effective Issue Trees?

Issue trees are powerful tools to solve complex business problems, but they are much less effective if they don’t follow these important tips.

Issue tree tip #1: Be MECE

MECE stands for mutually exclusive and collectively exhaustive. When breaking down the overall problem in your issue tree, the final list of smaller problems needs to be mutually exclusive and collectively exhaustive.

Mutually exclusive means that none of the smaller problems in your issue tree overlap with each other. This ensures that you are working efficiently since there will be no duplicated or repeated work.

For example, let’s say that two of the issues in your issue tree are:

- Determine how to increase cups of lemonade sold

- Determine how to partner with local organizations to sell lemonade

These two issues are not mutually exclusive because partnering with local organizations is itself one way to increase cups of lemonade sold, so the first issue already contains the second.

In determining how to increase cups of lemonade sold, you may be duplicating work from determining how to partner with local organizations.

Collectively exhaustive means that the list of smaller problems in your issue tree accounts for all possible ideas and possibilities. This ensures that your issue tree is not missing any critical areas to explore.

For example, let’s say that you break down the issue of determining how to decrease variable costs into the following issues:

This is not collectively exhaustive because you are missing two key variable costs: sugar and cups. These could be important areas that could increase profitability, which are not captured by your issue tree.

You can read a full explanation of this in our article on the MECE principle.
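If it helps to think of each branch as covering a set of ideas, both halves of MECE can be checked with basic set operations. The sketch below is one possible way to do this, not a standard method. The function names and the specific items under each branch (such as "lower price" or "lemons") are hypothetical, chosen only to mirror the lemonade stand example.

```python
from itertools import combinations


def overlapping_pairs(branches: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return pairs of branches that share an item (not mutually exclusive)."""
    return [
        (a, b)
        for a, b in combinations(branches, 2)
        if branches[a] & branches[b]
    ]


def missing_items(branches: dict[str, set[str]], universe: set[str]) -> set[str]:
    """Return items that no branch covers (not collectively exhaustive)."""
    covered = set().union(*branches.values())
    return universe - covered


# Hypothetical ideas under each branch, for illustration only.
sales_branches = {
    "Increase cups of lemonade sold": {"lower price", "local partnerships"},
    "Partner with local organizations": {"local partnerships"},
}
overlaps = overlapping_pairs(sales_branches)
print(overlaps)  # the two branches overlap on "local partnerships"

# Hypothetical breakdown of variable costs that leaves out sugar and cups.
cost_branches = {"Decrease cost of lemons": {"lemons"}}
all_variable_costs = {"lemons", "sugar", "cups"}
gaps = missing_items(cost_branches, all_variable_costs)
print(sorted(gaps))  # ['cups', 'sugar']
```

An empty list from `overlapping_pairs` and an empty set from `missing_items` together suggest the breakdown is MECE, at least for the items you thought to list.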

Issue tree tip #2: Be 80/20

The 80/20 principle states that 80% of the results come from 20% of the effort or time invested.

In other words, it is a much more efficient use of time to spend a day solving 80% of a problem and then move on to the next few problems than to spend five days solving 100% of one problem.

This same principle should be applied to your issue tree. You do not need to solve every single issue that you have identified. Instead, focus on solving the issues that have the greatest impact and require the least amount of work.

Let’s return to our lemonade stand example. If we are focusing on the issue of how to decrease costs, we can consider fixed costs and variable costs.

It may be a better use of time to focus on decreasing variable costs because they are generally easier to lower than fixed costs.

Fixed costs, such as paying for a business permit or purchasing a table and display sign, typically have long purchasing periods, making them more difficult to reduce in the short term.
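One rough way to apply this is to give each issue an impact estimate and an effort estimate, then rank the issues by impact per unit of effort. The sketch below assumes you can put numbers on both. The `prioritize` function and the scores are made up for illustration and are not drawn from a real case.

```python
def prioritize(issues: dict[str, tuple[float, float]]) -> list[str]:
    """Sort issue names by impact-to-effort ratio, highest first.

    Each value is an (impact, effort) pair; effort must be greater than zero.
    """
    return sorted(
        issues,
        key=lambda name: issues[name][0] / issues[name][1],
        reverse=True,
    )


# Hypothetical (impact, effort) scores on a 1-10 scale.
cost_issues = {
    "Decrease fixed costs": (5, 5),
    "Decrease variable costs": (8, 2),
}
ranked = prioritize(cost_issues)
print(ranked)  # ['Decrease variable costs', 'Decrease fixed costs']
```

The scores themselves are judgment calls, so treat the ranking as a starting point for discussion rather than a final answer.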

Issue tree tip #3: Have three to five branches

Your issue tree needs to be comprehensive, yet clear and easy to follow. Therefore, your issue tree should have at least three branches so that it covers enough breadth of the key issue.

Additionally, your issue tree should have no more than five branches. Any more than this will make your issue tree too complicated and difficult to follow. By having more than five branches, you also increase the likelihood that there will be redundancies or overlap among your branches, which is not ideal.

Having three to five branches helps you strike a balance between going deep into specific sub-issues and covering a broad range of aspects.

Issue tree tip #4: Clearly define the top-level issue

Make sure that you clearly articulate the main problem or question. This sets the foundation for the entire issue tree. If you are addressing the wrong problem or question, your entire issue tree will be useless to you.

Issue tree tip #5: Visualize the issue tree clearly

If you're using a visual representation, make sure it's easy to follow. Use clean lines, appropriate spacing, and clear connections between components.

Keep your issue tree organized and neat. A cluttered or disorganized tree can be confusing and difficult to follow.

Ensure that each branch and sub-issue is labeled clearly and concisely. Use language that is easily understandable to your audience.

Issue tree tip #6: Order your branches logically

Whenever possible, try to organize the branches in your issue tree logically.

For example, if the branches in your issue tree are segmented by time, arrange them as short-term, medium-term, and long-term. This is a logical order that is arranged by length of time.

It does not make sense to order the branches as long-term, short-term, and medium-term. This ordering is confusing and will make the entire issue tree harder to follow.

Issue tree tip #7: Branches should be parallel

The branches on your issue tree should all be on the same logical level.

For example, if you decide to segment the branches on your issue tree by geography, your branches could be: North America, South America, Europe, Asia, Africa, and Australia. This segmentation is logical because each segment is a continent.

It would not make sense to segment the branches on your issue tree as United States, South America, China, India, Australia, and rest of the world. This segmentation is not logically consistent because it mixes continents and countries.

Issue tree tip #8: Practice and get feedback

It takes practice to create comprehensive, clear, and concise issue trees. This is a skill that takes time to develop and refine.

When you create your first few issue trees, it may take you a long time and you may miss key sub-issues. However, with enough practice, you’ll be able to create issue trees effortlessly and effectively.

Practice creating issue trees on different problems to improve your skills. Seek feedback from peers or mentors to refine your approach.

How to master the seven-step problem-solving process

In this episode of the McKinsey Podcast, Simon London speaks with Charles Conn, CEO of venture-capital firm Oxford Sciences Innovation, and McKinsey senior partner Hugo Sarrazin about the complexities of different problem-solving strategies.

Podcast transcript

Simon London: Hello, and welcome to this episode of the McKinsey Podcast, with me, Simon London. What’s the number-one skill you need to succeed professionally? Salesmanship, perhaps? Or a facility with statistics? Or maybe the ability to communicate crisply and clearly? Many would argue that at the very top of the list comes problem solving: that is, the ability to think through and come up with an optimal course of action to address any complex challenge—in business, in public policy, or indeed in life.

Looked at this way, it’s no surprise that McKinsey takes problem solving very seriously, testing for it during the recruiting process and then honing it, in McKinsey consultants, through immersion in a structured seven-step method. To discuss the art of problem solving, I sat down in California with McKinsey senior partner Hugo Sarrazin and also with Charles Conn. Charles is a former McKinsey partner, entrepreneur, executive, and coauthor of the book Bulletproof Problem Solving: The One Skill That Changes Everything [John Wiley & Sons, 2018].

Charles and Hugo, welcome to the podcast. Thank you for being here.

Hugo Sarrazin: Our pleasure.

Charles Conn: It’s terrific to be here.

Simon London: Problem solving is a really interesting piece of terminology. It could mean so many different things. I have a son who’s a teenage climber. They talk about solving problems. Climbing is problem solving. Charles, when you talk about problem solving, what are you talking about?

Charles Conn: For me, problem solving is the answer to the question “What should I do?” It’s interesting when there’s uncertainty and complexity, and when it’s meaningful because there are consequences. Your son’s climbing is a perfect example. There are consequences, and it’s complicated, and there’s uncertainty—can he make that grab? I think we can apply that same frame almost at any level. You can think about questions like “What town would I like to live in?” or “Should I put solar panels on my roof?”

You might think that’s a funny thing to apply problem solving to, but in my mind it’s not fundamentally different from business problem solving, which answers the question “What should my strategy be?” Or problem solving at the policy level: “How do we combat climate change?” “Should I support the local school bond?” I think these are all part and parcel of the same type of question, “What should I do?”

I’m a big fan of structured problem solving. By following steps, we can more clearly understand what problem it is we’re solving, what are the components of the problem that we’re solving, which components are the most important ones for us to pay attention to, which analytic techniques we should apply to those, and how we can synthesize what we’ve learned back into a compelling story. That’s all it is, at its heart.

I think sometimes when people think about seven steps, they assume that there’s a rigidity to this. That’s not it at all. It’s actually to give you the scope for creativity, which often doesn’t exist when your problem solving is muddled.

Simon London: You were just talking about the seven-step process. That’s what’s written down in the book, but it’s a very McKinsey process as well. Without getting too deep into the weeds, let’s go through the steps, one by one. You were just talking about problem definition as being a particularly important thing to get right first. That’s the first step. Hugo, tell us about that.

Hugo Sarrazin: It is surprising how often people jump past this step and make a bunch of assumptions. The most powerful thing is to step back and ask the basic questions—“What are we trying to solve? What are the constraints that exist? What are the dependencies?” Let’s make those explicit and really push the thinking and defining. At McKinsey, we spend an enormous amount of time in writing that little statement, and the statement, if you’re a logic purist, is great. You debate. “Is it an ‘or’? Is it an ‘and’? What’s the action verb?” Because all these specific words help you get to the heart of what matters.

Simon London: So this is a concise problem statement.

Hugo Sarrazin: Yeah. It’s not like “Can we grow in Japan?” That’s interesting, but it is “What, specifically, are we trying to uncover in the growth of a product in Japan? Or a segment in Japan? Or a channel in Japan?” When you spend an enormous amount of time, in the first meeting of the different stakeholders, debating this and having different people put forward what they think the problem definition is, you realize that people have completely different views of why they’re here. That, to me, is the most important step.