
Business articles from across Nature Portfolio

Latest research and reviews.

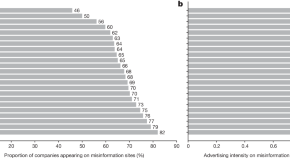

Companies inadvertently fund online misinformation despite consumer backlash

Many companies unknowingly advertise on websites that publish misinformation despite the reputational and financial risks, and increased transparency for consumers and advertisers could counter unintended ad revenue going to misinformation websites.

- Wajeeha Ahmad

- Erik Brynjolfsson

Alternative protein sources: science powered startups to fuel food innovation

Harnessing the potential of considerable food security efforts requires the ability to translate them into commercial applications. In this Perspective, the author explores the alternative protein source start-up landscape.

- Elena Lurie-Luke

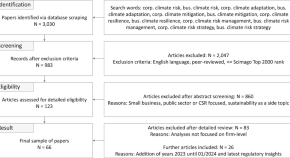

Facing the storm: Developing corporate adaptation and resilience action plans amid climate uncertainty

- Katharina Hennes

- David Bendig

- Andreas Löschel

A new commercial boundary dataset for metropolitan areas in the USA and Canada, built from open data

- Byeonghwa Jeong

- Karen Chapple

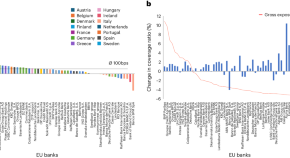

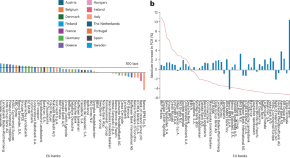

Model-based financial regulations impair the transition to net-zero carbon emissions

As the financial system is increasingly important in catalysing the green transition, it is critical to assess the impediments it may face. This study shows that existing financial regulations may impair the shift of financial resources from high-carbon to low-carbon assets.

- Matteo Gasparini

- Matthew C. Ives

- Eric Beinhocker

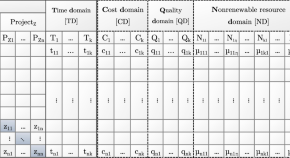

Compound Matrix-Based Project Database (CMPD)

- Zsolt T. Kosztyán

- Gergely L. Novák

News and Comment

Green and greening jobs

Scaling up adoption of green technologies in energy, mobility, construction, manufacturing and agriculture is imperative to set countries on a sustainable development path, but that hinges on having the right workforce, argues Jonatan Pinkse.

- Jonatan Pinkse

I fell out of love with the lab, and in love with business

The COVID-19 pandemic changed Karolina Makovskytė’s career ambitions, propelling her to a business development role in her home nation of Lithuania.

- Jacqui Thornton

Model-based financial regulation challenges for the net-zero transition

Current model-based financial regulations favour carbon-intensive investments. This is likely to disincentivize banks from investing in new low-carbon assets, impairing the transition to net zero. Financial regulators and policymakers should consider how this bias may impact financial system stability and broader societal objectives.

- Matthew Ives

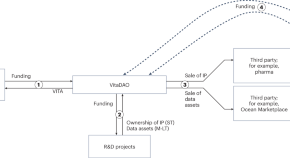

The potential of DAOs for funding and collaborative development in the life sciences

VitaDAO funds longevity research through a blockchain-based decentralized autonomous organization (DAO), showcasing the potential of collaborative, transparent and alternative systems while also highlighting the challenges of coordination, regulation, biases and skepticism in reshaping traditional research financing methods.

- Simone Fantaccini

- Laura Grassi

- Andrea Rampoldi

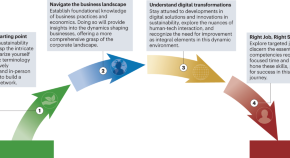

Growing demand for environmental science expertise in the corporate sector

Growing awareness of environmental risks and mounting regulatory and consumer pressure have driven unprecedented demand for environmental science expertise in the corporate sector. Recruiting skilled individuals with academic backgrounds and fostering collaboration among businesses, research institutions, universities and environmental professionals are vital for enhancing environmental knowledge and capability in companies.

- Alexey K. Pavlov

- Daiane G. Faller

- Jane E. Collins

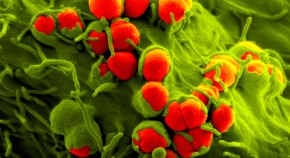

Can non-profits beat antibiotic resistance and soaring drug costs?

Effective, affordable antimicrobial drugs aren’t moneymakers, despite being desperately needed. Can non-profit organizations pick up the slack?

- Maryn McKenna




What Is in the Future of Business Research and Management? Emerging Issues after COVID-19 Time


Special issue information.


A special issue of Administrative Sciences (ISSN 2076-3387).

Deadline for manuscript submissions: closed (1 October 2022) | Viewed by 36306


Dear Colleagues,

The COVID-19 pandemic has permanently marked our experience, perspective, and attitude at the individual and organizational levels. In this new reality, organizations need to reshape, restructure, and continuously adapt in order to face an unpredictable surrounding business environment. To survive under restrictive government policies and challenging market behaviours, business organizations had to respond efficiently and effectively to the pandemic. This required flexibility, reflection, and resilient adaptation, so that businesses remained in equilibrium.

In this situation, management scholars need to reshape and question the theoretical frameworks that have been in place for the past decades, supplementing the existing literature and extending it to address the so-called “new normal” of the business environment.

The aim of this Special Issue is to discuss the most important managerial and organizational implications of the pandemic and the future challenges that public and private organizations will have to face in the coming years; we are interested in future-oriented business implications arising from the pandemic.

Theoretical, conceptual, and empirical contributions in the field of business research and management linked to, but not limited to, the following topics are welcomed: business modeling and planning; change management; big data and business analytics; innovation and technology management; business ethics; corporate governance and accountability; corporate social responsibility; human and intellectual capital management; corporate finance and investments; accounting, auditing, and budgeting; financial analysis and reporting; international management; and public management and governance.

All papers published in this issue will be presented at the “1st Conference in Business Research and Management” organized by the University of Castilla-La Mancha and the University of Rome “Tor Vergata”.

Guest Editors

- Dr. Matteo Cristofaro

- Dr. Pablo Ruiz-Palomino

- Dr. Fiorella Pia Salvatore

- Dr. Pedro Jiménez Estevez

- Dr. Andromahi Kufo

- Dr. Ricardo Martínez-Cañas

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to the website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the special issue website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a double-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Administrative Sciences is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 1400 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

- business research

- organizations

- future challenges

Published Papers (5 papers)


A review of data science in business and industry and a future view

- October 2019

- Applied Stochastic Models in Business and Industry 36(4)

- Politecnico di Torino

- Newcastle University


- Rendi Aprijal

- Iqbal Wiranata Siregar

- Andysah Putera Utama Siahaan

- Leni Marlina

- Dilek Özdemir Yılmaz

- Shirley COLEMAN

- Fitria Wulandari Ramlan

- Syahril Efendi

- Yoshida Sary

- Wayne S. Smith

- J. Bacardit

- STAT PROBABIL LETT

- Gareth James


Business Analytics and Data Science: Once Again?

- Published: 20 December 2016

- Volume 59, pages 77–79 (2017)


- Martin Bichler

- Armin Heinzl

- Wil M. P. van der Aalst

9469 Accesses

30 Citations


1 Introduction

“Everything has already been said, but not yet by everyone”. We wouldn’t be surprised if the title of this editorial reminds you of this famous quote attributed to Karl Valentin. Business Analytics is a relatively new term and there does not seem to be an established academic definition. Holsapple et al. (2014) write, “a crucial observation, on which the paper is based, is that ‘the definition’ of analytics does not exist”. They describe Business Analytics as “evidence-based problem recognition and solving that happen within the context of business situations”, and also highlight that mathematical and statistical techniques have long been studied in business schools under such titles as Operations Research and Management Science, Simulation Analysis, Econometrics, and Financial Analysis. However, their article shows that the availability of large data sets in business has made these techniques much more important in all fields of management.

At the same time, Data Science has become a very popular term describing an interdisciplinary field about processes and systems to extract knowledge or insights from data. Data Science is a broader term, but also closely related to Business Analytics. In a recent book by one of the authors, Data Science is defined as follows: “Data science is an interdisciplinary field aiming to turn data into real value. Data may be structured or unstructured, big or small, static or streaming. Value may be provided in the form of predictions, automated decisions, models learned from data, or any type of data visualization delivering insights. Data science includes data extraction, data preparation, data exploration, data transformation, storage and retrieval, computing infrastructures, various types of mining and learning, presentation of explanations and predictions, and the exploitation of results taking into account ethical, social, legal, and business aspects” (Van der Aalst 2016).

Dhar (2013) starts his article by asking why we need a new term and whether Data Science is different from statistics, and gives an affirmative answer. He mentions new types of data being analyzed, new methods, and new questions being asked. Clearly, the two definitions overlap. Both Business Analytics and Data Science want to “turn data into value”.

It is not surprising that these terms have been adopted quickly by those in our community who are close to Operations Research and the Management Sciences (OR/MS). Data analysis and optimization have always been at the core of INFORMS, and the INFORMS Information Systems Society (ISS) is one of the large INFORMS sub-communities. Many sessions at the INFORMS Annual Meeting or the Conference on Information Systems and Technology (CIST), which is organized by the INFORMS ISS just before the Annual Meeting every year, are devoted to predictive or prescriptive analytics. But the range of topics is much wider than that.

Process mining has become an important direction in Business Process Management (BPM). About half of the papers presented at the International Conference on Business Process Management are about data-driven process management. A successful track on Data Science and Business Analytics has been established at ICIS in recent years, and many papers combining data analysis, information technology, and optimization are submitted to our BISE department “Computational Methods and Decision Support Systems” and the department “IS Engineering and Technology”. For the remainder of this editorial we will only talk about Analytics for brevity, and leave open the question of which term may be adopted in which community in the future.

Like many recent topics in Information Systems, Analytics is multi-disciplinary. Colleagues in Econometrics, Machine Learning, and Operations Research contribute significantly. In this brief editorial, we want to discuss how Analytics contributes to our field, and how our profession can contribute to developments in Analytics. Given the vast amounts of data that we are collecting, this topic will most likely stay with us for a long time and eventually have a significant impact on both our research and teaching.

Internet-based systems generate huge amounts of data, which allow us to better understand how people interact on markets or in social media. Many new types of information systems draw on the availability of data about user behavior or sensor data about the environment. Such information systems adapt to the users and provide better ways to coordinate. Let’s pick a few examples to make the point.

Recommender systems are probably among the most well-known types of analytics-based information systems. They collect data about user preferences and then provide tailor-made recommendations for books, movies, or other products. By now, there is a large body of literature about mathematical methods such as matrix factorization or collaborative filtering, which allow for effective recommendations. At the same time, there is a growing behavioral literature analyzing the impact of these systems on human decision making. Thus, the topic addresses both design and user behavior, a combination that has always been at the core of information systems research.
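To make the idea of collaborative filtering concrete, the following is a minimal sketch of a user-based variant in Python. The ratings, user names, and function names are hypothetical and chosen for illustration only; the editorial does not prescribe a specific algorithm, and production systems typically rely on more elaborate methods such as matrix factorization.

```python
from math import sqrt

# Hypothetical toy ratings (user -> {item: rating}); not data from the editorial.
RATINGS = {
    "ann": {"book_a": 5, "book_b": 4, "book_c": 1},
    "bob": {"book_a": 4, "book_b": 5, "book_d": 5},
    "cat": {"book_c": 5, "book_d": 1, "book_e": 4},
}


def cosine(u, v):
    """Cosine similarity of two rating dicts; unrated items count as 0."""
    dot = sum(u[item] * v[item] for item in set(u) & set(v))
    norm_u = sqrt(sum(r * r for r in u.values()))
    norm_v = sqrt(sum(r * r for r in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(user, ratings, top_n=1):
    """Rank items the user has not rated by similarity-weighted average rating."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], other_ratings)
        if sim <= 0:
            continue  # ignore users with no overlap in taste
        for item, rating in other_ratings.items():
            if item in ratings[user]:
                continue  # only recommend unseen items
            scores[item] = scores.get(item, 0.0) + sim * rating
            weights[item] = weights.get(item, 0.0) + sim
    ranked = sorted(scores, key=lambda i: scores[i] / weights[i], reverse=True)
    return ranked[:top_n]
```

In this toy data set, `recommend("ann", RATINGS)` favours the item rated highly by the user whose past ratings most resemble Ann's, which is the core intuition behind collaborative filtering.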

Interactive marketing is another field which heavily draws on analytics. Real-time bidding (RTB) is a means by which display advertising is bought and sold on a per-impression basis via auction. With real-time bidding, advertising buyers bid on an impression and, if the bid is won, the buyer’s ad is instantly displayed on the publisher’s site. This is the fastest growing segment in the digital advertising market and it combines predictive models to better estimate the preferences and tastes of users and the bidding in a highly automated fashion. The topic addresses a wealth of problems ranging from distributed systems to auction theory, machine learning, and, last but not least, data privacy!
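The per-impression auction described above can be sketched in a few lines of Python. This is an illustrative simplification under stated assumptions: the names `Bid`, `expected_value_bid`, and `run_auction` are hypothetical, the bid is assumed to equal a predicted click probability times a value per click, and the highest bid above an optional floor price is assumed to win. Real exchanges differ in their pricing rules and latency constraints.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    buyer: str
    amount: float  # price offered for this single impression


def expected_value_bid(buyer, click_probability, value_per_click):
    """Assumed bidding rule: bid the expected value of showing the ad.

    `click_probability` stands in for the output of a predictive model.
    """
    return Bid(buyer, click_probability * value_per_click)


def run_auction(bids, floor_price=0.0):
    """Return the highest bid at or above the floor, or None if there is none."""
    eligible = [bid for bid in bids if bid.amount >= floor_price]
    if not eligible:
        return None
    return max(eligible, key=lambda bid: bid.amount)


# One impression, three hypothetical advertisers.
bids = [
    expected_value_bid("shoes", click_probability=0.02, value_per_click=1.5),
    expected_value_bid("travel", click_probability=0.01, value_per_click=4.0),
    expected_value_bid("games", click_probability=0.03, value_per_click=0.5),
]
winner = run_auction(bids)
```

The sketch shows how a predictive model and an auction mechanism interact: a buyer with a lower click probability can still win the impression if each click is worth more to that buyer.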

Also, the Internet-of-Things has led to many new applications which require data analysis, distributed systems, and optimization to go hand in hand in ever-growing applications. We have all seen case studies on smart mobility solutions, intelligent ports and transportation systems, or smart home solutions where sensors communicate and coordinate with humans in real-time, often with a substantial increase in economic efficiency. Consider for example condition-based maintenance making use of the analysis of sensor data: maintenance is performed when analytical techniques suggest that the system is going to fail or that performance is deteriorating.
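As a rough illustration of condition-based maintenance, the Python sketch below flags maintenance when the rolling mean of recent sensor readings exceeds a threshold. The function name, window size, threshold, and readings are all assumptions made for this example; real deployments would typically use richer models of failure and degradation.

```python
from collections import deque


def maintenance_alerts(readings, window=3, threshold=80.0):
    """Return the indices at which the rolling mean exceeds the threshold.

    A rolling mean is used so that a single noisy spike does not
    trigger maintenance on its own.
    """
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    alerts = []
    for index, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > threshold:
            alerts.append(index)
    return alerts


# Hypothetical temperature readings from one machine.
readings = [70, 72, 71, 95, 73, 85, 90, 92]
alerts = maintenance_alerts(readings)
```

Here the isolated spike at index 3 is smoothed out, while the sustained rise at the end of the series produces alerts, which mirrors the idea of acting when performance is deteriorating rather than on a fixed schedule.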

These are just a few examples. Analytics are used in many other domains ranging from customer journeys, call-centers, and credit rating to staffing, e-government, and delivery services. Moreover, there is no reason to assume that the trend towards more data and evidence-based management and analytics-based information systems will end.

In the BISE editorial statement “information systems are understood as socio-technical systems comprising people, tasks, and information technology”. The above examples suggest that Analytics opens up many new and exciting research questions for our community, eventually leading to a new class of analytics-based information systems, which sense their environment and respond to the users that they ultimately serve. This requires an integrated view addressing privacy concerns, engineering challenges, and a thorough “social science” analysis of the impact of new systems. In summary, Analytics provides many new opportunities for our field and describes an almost natural progression of many lines of information systems research.

Information Systems, as it is taught at most universities, is already well-prepared for the design and analysis of this new breed of analytics-based information systems. On the one hand, Information Systems programs typically include topics such as data engineering, data mining, software engineering, distributed systems, and operations research, which are essential for their design.

On the other hand, our field is also a social science and as such most curricula have courses on Econometrics and empirical methods. Techniques for causal inference and discrete choice models have always been important ingredients of a social scientist’s education, and they prove to be incredibly valuable for business analysts. Econometrics and Machine Learning will be essential elements of new curricula in most schools in the future, if this is not already the case. Overall, the fact that we address “design and behavior” in our education can be a significant advantage for our students in the job market and in research.

While our Bachelor and Master programs are well positioned, there are many new developments in education in Analytics. There are so many new Bachelor and Master programs in either Business Analytics or Data Science at various universities that we do not even start to list specific programs. The European Data Science Academy (http://edsa-project.eu/) is an EU Horizon 2020 funded program providing useful information. For our field, it is important to stay abreast of these new developments.

4 Conclusion

Analytics is not just a short-term trend. The availability of more and more data from sensor networks or human–computer interaction leads to new types of information systems in which data analysis plays an important role. Analytics will help us to better understand the environment and to adapt to the needs of users and organizations, when we design new systems. It is important to reflect this development also in our curricula. Analytics is inherently linked with our field, and we are looking forward to a growing number of submissions in this area.

Dhar V (2013) Data science and prediction. Commun ACM 56(12):64–73


Holsapple C, Lee-Post A, Pakath R (2014) A unified foundation for business analytics. Decis Support Syst 64C(August):130–141

Van der Aalst W (2016) Process mining: data science in action. Springer, Heidelberg


Author information

Authors and affiliations.

Department of Informatics, Decision Sciences and Systems, Technical University of Munich (TUM), Boltzmannstr 3, 85748, Munich, Germany

Martin Bichler

Chair of General Management and Information Systems, University of Mannheim, 68161, Mannheim, Germany

Armin Heinzl

Department of Mathematics and Computer Science (MF 7.103), Eindhoven University of Technology, PO Box 513, 5600 MB, Eindhoven, The Netherlands

Wil M. P. van der Aalst


Corresponding author

Correspondence to Martin Bichler.


About this article

Bichler, M., Heinzl, A. & van der Aalst, W.M.P. Business Analytics and Data Science: Once Again?. Bus Inf Syst Eng 59, 77–79 (2017). https://doi.org/10.1007/s12599-016-0461-1


Issue Date: April 2017



Qualitative designs and methodologies for business, management, and organizational research.

- Robert P. Gephart, Alberta School of Business, University of Alberta

- Rohny Saylors, Carson College of Business, Washington State University

- https://doi.org/10.1093/acrefore/9780190224851.013.230

- Published online: 28 September 2020

Qualitative research designs provide future-oriented plans for undertaking research. Designs should describe how to effectively address and answer a specific research question using qualitative data and qualitative analysis techniques. Designs connect research objectives to observations, data, methods, interpretations, and research outcomes. Qualitative research designs focus initially on collecting data to provide a naturalistic view of social phenomena and understand the meaning the social world holds from the point of view of social actors in real settings. The outcomes of qualitative research designs are situated narratives of people’s activities in real settings, reasoned explanations of behavior, discoveries of new phenomena, and the creation and testing of theories.

A three-level framework can be used to describe the layers of qualitative research design and conceptualize its multifaceted nature. Note, however, that qualitative research is a flexible and not fixed process, unlike conventional positivist research designs that are unchanged after data collection commences. Flexibility provides qualitative research with the capacity to alter foci during the research process and make new and emerging discoveries.

The first or methods layer of the research design process uses social science methods to rigorously describe organizational phenomena and provide evidence that is useful for explaining phenomena and developing theory. Description is done using empirical research methods for data collection including case studies, interviews, participant observation, ethnography, and collection of texts, records, and documents.

The second or methodological layer of research design offers three formal logical strategies to analyze data and address research questions: (a) induction to answer descriptive “what” questions; (b) deduction and hypothesis testing to address theory-oriented “why” questions; and (c) abduction to understand questions about what, how, and why phenomena occur.

The third or social science paradigm layer of research design is formed by broad social science traditions and approaches that reflect distinct theoretical epistemologies (theories of knowledge) and diverse empirical research practices. These perspectives include positivism, interpretive induction, and interpretive abduction (interpretive science). There are also scholarly research perspectives that reflect on and challenge or seek to change management thinking and practice, rather than producing rigorous empirical research or evidence-based findings. These perspectives include critical research, postmodern research, and organization development.

Three additional issues are important to future qualitative research designs. First, there is renewed interest in the value of covert research undertaken without the informed consent of participants. Second, there is an ongoing discussion of the best style to use for reporting qualitative research. Third, there are new ways to integrate qualitative and quantitative data. These are needed to better address the interplay of qualitative and quantitative phenomena that are both found in everyday discourse, a phenomenon that has been overlooked.

- qualitative methods

- research design

- methods and methodologies

- interpretive induction

- interpretive science

- critical theory

- postmodernism

- organization development

Introduction

Qualitative research uses linguistic symbols and stories to describe and understand actual behavior in real settings (Denzin & Lincoln, 1994). Understanding requires describing “specific instances of social phenomena” (Van Maanen, 1998, p. xi) to determine what this behavior means to lay participants and to scientific researchers. This process produces “narratives-non-fiction division that link events to events in storied or dramatic fashion” to uncover broad social science principles at work in specific cases (p. xii).

A research design and/or proposal is often created at the outset of research to act as a guide. But qualitative research is not a rule-governed process and “no one knows” the rules to write memorable and publishable qualitative research (Van Maanen, 1998, p. xxv). Thus qualitative research “is anything but standardized, or, more tellingly, impersonal” (p. xi). Design is emergent and is often created as it is being done.

Qualitative research is also complex. This complexity is addressed by providing a framework with three distinct layers of knowledge creation resources that are assembled during qualitative research: the methods layer, the logic layer, and the paradigmatic layer. Research methods are addressed first because “there is no necessary connection between research strategies and methods of data collection and analysis” (Blaikie, 2010, p. 227). Research methods (e.g., interviews) must be adapted for use with the specific logical strategies and paradigmatic assumptions in mind.

The first, or methods, layer uses qualitative methods to “collect data.” That is, to observe phenomena and record written descriptions of observations, often through field notes. Established methods for description include participant and non-participant observation, ethnography, focus groups, individual interviews, and collection of documentary data. The article explains how established methods have been adapted and used to answer a range of qualitative research questions.

The second, or logic, layer involves selecting a research strategy, that is, a “logic, or set of procedures, for answering research questions” (Blaikie, 2010, p. 18). Research strategies link research objectives, data collection methods, and logics of analysis. The three logical strategies used in qualitative organizational research are inductive logic, deductive logic and abductive logic (Blaikie, 2010, p. 79).1 Each logical strategy makes distinct assumptions about the nature of knowledge (epistemology), the nature of being (ontology), and how logical strategies and assumptions are used in data collection and analysis. The task is to describe important methods suitable for each logical strategy and factors to consider when selecting methods (Blaikie, 2010), and to illustrate how data collection and analysis methods are adapted to ensure consistency with specific logics and paradigms.

The third, or paradigms, layer of research design addresses broad frameworks and scholarly traditions for understanding research findings. Commitment to a paradigm or research tradition entails commitments to theories, research strategies, and methods. Three paradigms that do empirical research and seek scientific knowledge are addressed first: positivism, interpretive induction, and interpretive abduction. Then, three scholarly and humanist approaches that critique conventional research and practice to encourage organizational change are discussed: critical theory and research, postmodern perspectives, and organization development (OD). Paradigms or traditions provide broad scholarly contexts that make specific studies comprehensible and meaningful. Lack of grounding in an intellectual tradition limits the ability of research to contribute: contributions always relate to advancing the state of knowledge in specific unfolding research traditions that also set norms for assessing research quality. The six research designs are explained to show how consistency in design levels can be achieved for each of the different paradigms. Further, qualitative research designs must balance the need for a clear plan to achieve goals with the need for adaptability and flexibility to incorporate insights and overcome obstacles that emerge during research.

Our general goal has been to provide a practical guide to inspire and assist readers to better understand, design, implement, and publish qualitative research. We conclude by addressing future challenges and trends in qualitative research.

The Substance of Research Design

A research design is a written text that can be prepared prior to the start of a research project (Blaikie, 2010 , p. 4) and shared or used as “a private working document.” Figure 1 depicts the elements of a qualitative research design and research process. Interest in a topic or problem leads researchers to pose questions and select relevant research methods to fulfill research purposes. Implementation of the methods requires use of logical strategies in conjunction with paradigms of research to specify concepts, theories, and models. The outcomes, depending on decisions made during research, are scientific knowledge, scholarly (non-scientific) knowledge, or applied knowledge useful for practice.

Figure 1. Elements of qualitative research design.

Research designs describe a problem or research question and explain how to use specific qualitative methods to collect and analyze qualitative data that answer a research question. The purposes of design are to describe and justify the decisions made during the research process and to explain how the research outcomes can be produced. Designs are thus future-oriented plans that specify research activities, connect activities to research goals and objectives, and explain how to interpret the research outcomes using paradigms and theories.

In contrast, a research proposal is “a public document that is used to obtain necessary approvals for a research proposal to proceed” (Blaikie, 2010 , p. 4). Research designs are often prepared prior to creating a research proposal, and research proposals often require the inclusion of research designs. Proposals also require greater formality when they are the basis for a legal contract between a researcher and a funding agency. Thus, designs and proposals are mutually relevant and have considerable overlap but are addressed to different audiences. Table 1 provides the specific features of designs and proposals. This discussion focuses on designs.

Table 1. Decisions Necessitated by Research Designs and Proposals

RESEARCH DESIGNS |
|---|
Title or topic of project |
Research problem and rationale for exploring problem |
Research questions to address problem: purpose of study |
Choice of logic of inquiry to investigate each research question |
Statement of ontological and epistemological assumptions made |
Statement or description of research paradigms used |
Explanation of relevant concepts and role in research process |
Statement of hypotheses to be tested (positivist), orienting proposition to be examined (interpretive) or mechanisms investigated (critical realism) |
Description of data sources |
Discussion of methods used to select data from sources |
Description of methods of data collection, summarization, and analysis |
Discussion of problems and limitations |
RESEARCH PROPOSALS: add the items below to items above |
Statement of aims and research significance |
Background on need for research |
Budget and justification for each item |
Timetable or stages of research process |
Specification of expected outcomes and benefits |
Statement of ethical issues and how they can be managed |
Explanation of how new knowledge will be disseminated |

Source: Based on Blaikie ( 2010 ), pp. 12–34.

The “real starting point” for a research design (or proposal) is “the formulation of the research question” (Blaikie, 2010 , p. 17). There are three types of research questions: “what” questions seek descriptions; “why” questions seek answers and understanding; and “how” questions address conditions where certain events occur, underlying mechanisms, and conditions necessary for change interventions (p. 17). It is useful to start with research questions rather than goals, and to explain what the research is intended to achieve (p. 17) in a technical way.

The process of finding a topic and formulating a useful research question requires several considerations (Silverman, 2014, pp. 31–33, 34–40). Researchers must avoid settings where data collection will be difficult (pp. 31–32); specify an appropriate scope for the topic, neither too wide nor too narrow, that can be addressed (pp. 35–36); fit research questions into a relevant theory (p. 39); find the appropriate level of theory to address (p. 42); select appropriate designs and research methods (pp. 42–44); ensure the volume of data can be handled (p. 48); and do an effective literature review (p. 48).

A literature review is an important way to link the proposed research to current knowledge in the field, and to explain what was previously known or what theory suggests to be the case (Blaikie, 2010, p. 17). Research questions can be used to bound and frame the literature review, while the literature review often inspires research questions. The review may also provide bases for creating new hypotheses and for answering some of the initial research questions (Blaikie, 2010, p. 18).

Layers of Research Design

There are three layers of research design. The first layer focuses on research methods for collecting data. The second layer focuses on the logical frameworks used for analyzing data. The third layer focuses on the paradigm used to create a coherent worldview from research methods and logical frameworks.

Layer One: Design as Research Methods

Qualitative research addresses the meanings people have for phenomena. It collects narratives of organizational activity, uses analytical induction to create coherent representations of the truths and meanings in organizational contexts, and then creates explanations of this conduct and its prevalence (Van Maanen, 1998, pp. xi–xii). Thus, qualitative research involves “doing research with words” (Gephart, 2013, title) in order to describe the linguistic symbols and stories that members use in specific settings.

There are four general methods for collecting qualitative data and creating qualitative descriptions (see Table 2 ). The in-depth case study approach provides a history of an event or phenomenon over time using multiple data sources. Observational strategies use the researcher to observe and describe behavior in actual settings. Interview strategies use a format where a researcher asks questions of an informant. And documentary research collects texts, documents, official records, photographs, and videos as data—formally written or visually recorded evidence that can be replayed and reviewed (Creswell, 2014 , p. 190). These methods are adapted to fit the needs of specific projects.

Table 2. Qualitative Data Collection Methods

Type | Brief Description | Key Example(s) and Reference Source(s) |
|---|---|---|
In-Depth Case Study | Provides thick description of a single event or phenomenon unfolding over time | Perlow ( ); Mills, Durepos, and Wiebe ( ); Stake ( ); Piekkari and Welch ( ) |
| ||
Participant Observation | Observe, participate in, and describe actual settings and behaviors | McCall and Simmons ( ); Barker ( ); Graham ( ) |
Ethnography | Insider description of micro-culture developed through active participation in the culture | Van Maanen ( ); Ybema, Yanow, Wels, and Kamsteeg ( ); Cunliffe ( ); Van Maanen ( ) |
Systematic Self-Observation | Strategy for training lay informants to observe and immediately record selected experiences | Rodriguez, Ryave, and Tracewell ( ); Rodriguez and Ryave ( ) |
| ||
Single-Informant Interviews | ||
Traditional structured interview | Pose preset and fixed questions and record answers to produce (factual) information on phenomena, explore concepts, and test theory | Easterby-Smith, Thorpe, and Jackson ( ) |
Unstructured interview | Use interview guide with themes to develop and pose in situ questions that fit unfolding interview | Easterby-Smith et al. ( ) |
Active interview | Unstructured interview with questions and answers co-constructed with informant that reveals the co-construction of meaning | Holstein and Gubrium ( ) |
Ethnographic interview | Meeting where researcher meets informant to pose systematic questions that teach the researcher about the informant’s questions | Spradley ( ); McCurdy, Spradley, and Shandy ( ) |
Long interview | Extended use of structured interview method that includes demographic and open-ended questions. Designed to efficiently uncover the worldview of informants without prolonged field involvement | McCracken ( ); Gephart and Richardson ( ) |
| ||
Focus Group | A group interview used to collect data on a predetermined topic (focus) and mediated by the researcher | Morgan ( ) |
Records and Texts | ||
Photographic and visual methods | Produce accurate visual images of physical phenomena in field settings that can be analyzed or used to elicit informant reports | Ray and Smith ( ); Greenwood, Jack, and Haylock ( ) |
Video methods | Produce “different views” of activity and permanent record that can be repeatedly examined and used to verify accuracy and validity of research claims | LeBaron, Jarzabkowski, Pratt, and Fetzer ( ) |
Textual data and documentary data collection | | Hodder ( ) |

The In-Depth Case Study Method

The in-depth case study is a key strategy for qualitative research (Piekkari & Welch, 2012). It was the most common qualitative method used during the formative years of the field, from 1956 to 1965, when 48% of qualitative papers published in the Administrative Science Quarterly used the case study method (Van Maanen, 1998, p. xix). The case design uses one or more data collection strategies to describe in detail how a single event or phenomenon, selected by a researcher, has changed over time. This provides an understanding of the processes that underlie changes to the phenomenon. In-depth case study methods use observations, documents, records, and interviews to describe how the events in the case unfolded and their implications. Case studies contextualize phenomena by studying them in actual situations. They provide rich insights into multiple dimensions of a single phenomenon (Campbell, 1975); offer empirical insights into what, how, and why questions related to phenomena; and assist in the creation of robust theory by providing diverse data collected over time (Gephart & Richardson, 2008, p. 36).

Maniha and Perrow (1965) provide an example of a case study concerned with organizational goal displacement, an important issue in early organizational theorizing, which proposed that organizations emerge from rational goals. Organizational rationality was coming into question at the time the authors studied a Youth Commission with nine members in a city of 70,000 persons (Maniha & Perrow, 1965). The organization’s activities were reconstructed from interviews with principals and stakeholders of the organization, minutes from Youth Commission meetings, documents, letters, and newspaper accounts (Maniha & Perrow, 1965).

The account that emerged from the data analysis is a history of how a “reluctant organization” with “no goals to guide it” was used by other aggressive organizations for their own ends. It ultimately created its own mission (Maniha & Perrow, 1965 ). Thus, an organization that initially lacked rational goals developed a mission through the irrational process of goal slippage or displacement. This finding challenged prevailing thinking at the time.

Observational Strategies

Observational strategies involve a researcher present in a situation who observes and records the activities and conversations that occur in the setting, usually in written field notes. The three observational strategies in Table 2 (participant observation, ethnography, and systematic self-observation) differ in terms of the role of the researcher and in the data collection approach.

Participant observation. This is one of the earliest qualitative methods (McCall & Simmons, 1969). One gains access to a setting and an informant by holding an appropriate social role, for example, client, customer, volunteer, or researcher. One then observes and records what occurs in the setting using field notes. Many features or topics in a setting can become a focus for participant observers. Observations can be conducted using a continuum of roles, from the complete participant, through observer as participant and participant observer, to the complete observer who observes without participation (Creswell, 2014, Table 9.2, p. 191).

Ethnography. An ethnography is “a written representation of culture” (Van Maanen, 1988) produced after extended participation in a culture. Ethnography is a form of participant observation that focuses on the cultural aspects of the group or organization under study (Van Maanen, 1988, 2010). It involves prolonged and close contact with group members in a role where the observer becomes an apprentice to an informant to learn about a culture (Agar, 1980; McCurdy, Spradley, & Shandy, 2005; Spradley, 1979).

Ethnography produces fine-grained descriptions of a micro-culture, based on in-depth cultural participation (McCurdy et al., 2005 ; Spradley, 1979 , 2016 ). Ethnographic observations seek to capture cultural members’ worldviews (see Perlow, 1997 ; Van Maanen, 1988 ; Watson, 1994 ). Ethnographic techniques for interviewing informants have been refined into an integrated developmental research strategy—“the ethno-semantic method”—for undertaking qualitative research (Spradley, 1979 , 2016 ; Van Maanen, 1981 ). The ethnosemantic method uses a structured approach to uncover and confirm key cultural features, themes, and cultural reasoning processes (McCurdy et al., 2005 , Table 3 ; Spradley, 1979 ).

Systematic Self-Observation. Systematic self-observation (SSO) involves “training informants to observe and record a selected feature of their own everyday experience” (Rodriguez & Ryave, 2002, p. 2; Rodriguez, Ryave, & Tracewell, 1998). Once aware that they are experiencing the target phenomenon, informants “immediately write a field report on their observation” (Rodriguez & Ryave, 2002, p. 2) describing what was said and done, and providing background information on the context, thoughts, emotions, and relationships of people involved. SSO generates high-quality field notes that provide accurate descriptions of informants’ experiences (pp. 4–5). SSO allows informants to directly provide descriptions of their personal experiences, including difficult-to-capture emotions.

Interview Strategies

Interviews are conversations between researchers and research participants, termed “subjects” in positivist research and “informants” in interpretive research. Interviews can be conducted as individual face-to-face interactions (Creswell, 2014, p. 190) or by telephone, email, or through computer-based media. Two broad types of interview strategies are (a) the individual interview and (b) the group interview or focus group (Morgan, 1997). Interviews elicit informants’ insights into their culture and background information, and obtain answers and opinions. Interviews typically address topics and issues that occur outside the interview setting and at previous times. Interview data are thus reconstructions or undocumented descriptions of action in past settings (Creswell, 2014, p. 191) that are less accurate and valid than direct, real-time observations of settings.

Structured and unstructured interviews. Structured interviews pose a standardized set of fixed, closed-ended questions (Easterby-Smith, Thorpe, & Jackson, 2012) to respondents whose responses are recorded as factual information. Responses may be forced choice or open ended. However, most qualitative research uses unstructured or partially structured interviews that pose open-ended questions in a flexible order that can be adapted. Unstructured interviews allow for detailed responses and clarification of statements (Easterby-Smith et al., 2012; McLeod, 2014), and the content and format can be tailored to the needs and assumptions of specific research projects (Gephart & Richardson, 2008, p. 40).

The informant interview (Spradley, 1979 ) poses questions to informants to elicit and clarify background information about their culture, and to validate ethnographic observations. In interviews, informants teach the researcher their culture (Spradley, 1979 , pp. 24–39). The informant interview is part of a developmental research sequence (McCurdy et al., 2005 ; Spradley, 1979 ) that begins with broad “grand tour” questions that ask an informant to describe an important domain in their culture. The questions later narrow to focus on details of cultural domains and members’ folk concepts. This process uncovers semantic relationships among concepts of members and deeper cultural themes (McCurdy et al., 2005 ; Spradley, 1979 ).

The long interview (McCracken, 1988) involves lengthy, quasi-structured interview sessions with informants to acquire rapid and efficient access to cultural themes and issues in a group. Long interviews differ from ethnographic interviews by using a “more efficient and less obtrusive format” (p. 7). This creates a “sharply focused, rapid and highly intense interview process” that avoids indeterminate and redundant questions and pre-empts the need for observation or involvement in a culture. There are four stages in the long interview: (a) review literature to uncover analytical categories and design the interview; (b) review cultural categories to prepare the interview guide; (c) construct the questionnaire; and (d) analyze data to discover analytical categories (p. 30, fig. 1).

The active interview is a dynamic process where the researcher and informant co-construct and negotiate interview responses (Holstein & Gubrium, 1995). The goal is to uncover the subjective meanings that informants hold for phenomena, and to understand how meaning is produced through communication. The active approach is common in interpretive, critical, and postmodern research that assumes a negotiated order. For example, Richardson and McKenna (2000) explored how expatriate British faculty members themselves interpreted and explained their expatriate experience. The researchers viewed the interview setting as one where the researchers and informants negotiated meanings between themselves, rather than a setting where prepared questions and answers were shared.

Documentary, Photographic, and Video Records as Data

Documents, records, artifacts, photographs, and video recordings are physically enduring forms of data that are separable from their producers and provide mute evidence with no inherent meaning until they are read, written about, and discussed (Hodder, 1994 , p. 393). Records (e.g., marriage certificate) attest to a formal transaction, are associated with formal governmental institutions, and may have legally restricted access. In contrast, documents are texts prepared for personal reasons with fewer legal restrictions but greater need for contextual interpretation. Several approaches to documentary and textual data analysis have been developed (see Table 3 ). Documents that researchers have found useful to collect include public documents and minutes of meetings; detailed transcripts of public hearings; corporate and government press releases; annual reports and financial documents; private documents such as diaries of informants; and news media reports.

Photographs and videos are useful for capturing “accurate” visual images of physical phenomena (Ray & Smith, 2012 ) that can be repeatedly reexamined and used as evidence to substantiate research claims (LeBaron, Jarzabkowski, Pratt, & Fetzer, 2018 ). Photos taken from different positions in space may also reveal different features of phenomena. Videos show movement and reveal activities as processes unfolding over time and space. Both photos and videos integrate and display the spatiotemporal contexts of action.

Layer Two: Design as Logical Frameworks

The second research design layer links data collection and analysis methods (Tables 2 and 3) to three logics of inquiry that answer specific questions: inductive, deductive, and abductive logical strategies (see Table 4). Each logical strategy produces a different type of knowledge, follows distinctive research principles and processes, and addresses particular types of research questions.

Table 3. Data Analysis and Integrated Data Collection and Analysis Strategies

Strategy | Brief Explanation | Key References |
|---|---|---|
Compassionate Research Methods | Immersive and experimental approach to using ethnographic understanding to enhance care for others | Dutton, Workman, and Hardin ( ); Hansen and Trank ( ) |
Computer-Aided Interpretive Textual Analysis | Strategy for computer-supported interpretive textual analysis of documents and discourse that capture members’ first-order meanings | Kelle ( ); Gephart ( , ) |
Content Analysis | Establishing categories for a document or text, then counting the occurrences of categories and showing concern with issues of reliability and validity | Sonpar and Golden-Biddle ( ); Duriau, Reger, and Pfarrer ( ); Greckhamer, Misangyi, Elms, and Lacey ( ); Silverman ( ) |
Document, Record and Artifact Analysis | Uses many procedures for contemporary, non-document data analysis | Hodder ( ) |
Dream Analysis | Technique for detecting countertransference of emotions from researcher to informant to uncover how researchers are tacitly and unconsciously embedded in their own observations and interpretations | de Rond and Tuncalp ( ) |
Ethnomethodology | A sociological approach to analysis of sensemaking practices used in face-to-face communication | Coulon ( ); Garfinkel ( , ); Gephart ( , ); Whittle ( ) |
Ethnosemantic Analysis | Systematic approach to uncover first-order concepts and terms of members, verify their meaning, and construct folk taxonomies for meaningful cultural domains | Spradley ( ); McCurdy, Spradley, and Shandy ( ); Akeson ( ); Van Maanen ( ) |
Expansion Analysis | Form of discourse analysis that produces a detailed, line-by-line, data-driven interpretation of a text or transcript | Cicourel ( ); Gephart, Topal, and Zhang ( ) |
Grounded Theorizing | Inductive development of theory from systematically obtained and analyzed observations | Glaser and Strauss ( ); Gephart ( ); Locke ( , ); Smith ( ); Walsh et al. ( ) |
Interpretive Science | A methodology for doing scientific research using abduction that provides discovery-oriented, replicable scientific knowledge that is interpretive and not positivist | Schutz ( , ); Garfinkel ( ); Gephart ( ) |
Pattern matching | Unspecified process of matching/finding patterns in qualitative data, often confirmed by subjects’ verbal reports and quantitative analysis | Lee and Mitchell ( ); Lee, Mitchell, Wise, and Fireman ( ); Yan and Gray ( ) |
Phenomenological Analysis | Methodology/ies for examining individuals’ experiences | Gill ( ) |
Storytelling Inquiry | Six distinct approaches to storytelling useful for eliciting fine-grained and detailed stories from informants | Boje ( ); Rosile, Boje, Carlon, Downs, and Saylors ( ); Boje and Saylors ( ) |
Narrative and Textual Analysis | Analysis of written and spoken verbal behavior and documents using techniques from literary criticism, rhetoric, and sociolinguistic analysis to understand discourse | McCloskey ( ); Boje ( ); Gephart ( , , ); Ganzin, Gephart, and Suddaby ( ); Martin ( ); Calas and Smircich ( ); Pollach ( ) |
Organization Development/Action Research | Approaches to improving organizational structure and functioning through practice-based interventions | Cummings and Worley ( ); Buono and Savall ( ); Worley, Zardet, Bonnet, and Savall ( ) |

Table 4. Logical Strategies for Answering Qualitative Research Questions with Evidence

Feature | Inductive | Deductive | Abductive |
|---|---|---|---|
Ontology | Realist | Realist/Objectivist | Interpretive/Constructionist |
Assumptions | Objective world that is perceived subjectively; hence perceptions of reality can differ | Single objective reality independent of people’s perceptions | |
Questions | What: describe and explain phenomena | Why: explain associations between/among phenomena | What, why, and how: describe and explain conditions for occurrence of phenomena from lay and scientific perspectives |
Aim | | | |
Logic | Linear: Begin with singular statements and conclude via induction with generalizations | Linear: Establish associations via induction or abduction then test them using deductive reasoning | Spiral processes: Analytical process moves from lay actors’ accounts to technical descriptions using scientific accounts. Scientist makes a hypothesis that appears to explain observations then proposes what gave rise to it (Blaikie, , p. 164) |
Primary Focus | Objective features of settings described through subjective, personal perspectives | Objective features of broad realities described from objective, unbiased perspectives | Intersubjective meanings and interpretations used in everyday life to construct objective features and reveal subjective meanings |
Principles | Facts gained by unbiased observations; elimination method; hypotheses are not used to compare facts | Borrow or invent a theory, express it as a deductive argument, deduce a conclusion, test the conclusion. If it passes, treat the conclusion as the explanation. | Construct second-order scientific theories by generalization/induction and inference from observations of actors’ activities, terms, meanings, and theories. Incorporate members’ meanings, phenomena left out of inductive and deductive research. |
Outcomes | Describes features of domain of social action and infers from one set of facts to another: hence can confirm existence of phenomena in initial domain but cannot discover phenomena outside of previously known domain | Scientist has great freedom to propose theory but nature decides on the validity of conclusions: knowledge limited to prior hypotheses, no discovery possible (Blaikie, , p. 144) | , p. 165) |

Based in part on Blaikie ( 1993 ), ch. 5 & 6; Blaikie ( 2010 ), p. 84, table 4.1

The Inductive Strategy

Induction is the scientific method for many scholars (Blaikie, 1993 , p. 134), and an essential logic for qualitative management research (Pratt, 2009 , p. 856). Inductive strategies ask “what” questions to explore a domain to discover unknown features of a phenomenon (Blaikie, 2010 , p. 83). There are four stages to the inductive strategy: (a) observe and record all facts without selection or anticipating their importance; (b) analyze, compare, and classify facts without employing hypotheses; (c) develop generalizations inductively based on the analyses; and (d) subject generalizations to further testing (Blaikie, 1993 , p. 137).

Inductive research assumes a real world outside human thought that can be directly sensed and described (Blaikie, 2010 ). Principles of inductive research reflect a realist and objectivist ontology. The selection, definition, and measurement of characteristics to be studied are developed from an objective, scientific point of view. Facts about organizational features need to be obtained using unbiased measurement. Further, the elimination method is used to find “the characteristics present in all the positive cases, which are absent in all the negative cases, and which vary in appropriate degrees” (Blaikie, 1993 , p. 135). This requires data collection methods that provide unbiased evidence of the objective facts without pre-supposing their importance.
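The first two conditions of the elimination method can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the function name, the coded characteristics, and the cases are hypothetical and are not drawn from Blaikie or from any study cited here, and the third condition (characteristics that “vary in appropriate degrees”) is not modeled.

```python
from typing import List, Set


def find_distinguishing_characteristics(
    positive_cases: List[Set[str]],
    negative_cases: List[Set[str]],
) -> Set[str]:
    """Return characteristics present in every positive case and absent
    from every negative case (hypothetical helper for illustration)."""
    if not positive_cases:
        return set()
    # Characteristics shared by all positive cases.
    shared_by_positives = set.intersection(*positive_cases)
    # Characteristics observed in at least one negative case.
    seen_in_negatives = set().union(*negative_cases)
    return shared_by_positives - seen_in_negatives


# Hypothetical coded observations: each set lists the characteristics
# recorded for one case in which the phenomenon did (or did not) occur.
POSITIVE_CASES = [
    {"vague mandate", "external sponsor", "volunteer staff"},
    {"vague mandate", "external sponsor", "paid staff"},
]
NEGATIVE_CASES = [
    {"clear mandate", "external sponsor", "paid staff"},
]

if __name__ == "__main__":
    print(find_distinguishing_characteristics(POSITIVE_CASES, NEGATIVE_CASES))
```

In this invented example only “vague mandate” survives, because “external sponsor” also appears in a negative case. The result holds only for the cases examined, which is consistent with the limited scope of inductive generalization.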

Induction can establish limited generalizations about phenomena based solely on the observations collected. Generalizations need to be based on the entire sample of data, not on selected observations from large data sets, to establish their validity. The scope of generalization is limited to the sample of data itself. Induction creates evidence to increase our confidence in a conclusion, but the conclusions do not logically follow from premises (Blaikie, 1993 , p. 164). Indeed, inferences from induction cannot be extended beyond the original set of observations and no logical or formal process exists to establish the universality of inferences.

Key data collection methods for inductive designs include observational strategies that allow the researcher to view behavior without making a priori hypotheses, to describe behavior that occurs “naturally” in settings, and to record non-impressionistic descriptions of behavior. Interviews can also elicit descriptions of settings and behavior for inductive qualitative research. Data analysis methods need to describe actual interactions in real settings including discourse among members. These methods include ethnosemantic analysis to uncover key terms and validate actual meanings used by members; analyses of conversational practices that show how meaning is negotiated through sequential turn taking in discourse; and grounded theory-based concept coding and theory development that use the constant comparative method.

Facts or descriptions of events can be compared to one another and generalizations can be made about the world using induction (Blaikie, 2010 ). Outcomes from inductive analysis include descriptions of features in a limited domain of social action that are inferred to exist in other similar settings. Propositions and broader insights can be developed inductively from these descriptions.

The Deductive Strategy

Deductive logic (Blaikie, 1993, 2010) addresses “why” questions to explain associations between concepts that represent phenomena of interest. Researchers can use induction, abduction, or any other means to develop hypotheses, which are then tested to see if they are valid. Hypotheses that are not rejected are temporarily corroborated. The outcomes from deduction are tested hypotheses. Researchers can thus be very creative in hypothesis construction, but they cannot discover new phenomena with deduction, which is based only on phenomena known in advance (Blaikie, 2010). There is also no purely logical or mechanical process to establish “the validity of [inductively constructed] universal statements from a set of singular statements” from which deductive hypotheses were formed (Hempel, 1966, p. 15 cited in Blaikie, 1993, p. 140).

The deductive strategy uses a realist and objectivist ontology and imitates natural science methods. Useful data collection methods include observation, interviewing, and collection of documents that contain facts. Deduction addresses the assumedly objective features of settings and interactions. Appropriate data analysis methods include content coding to identify different types, features, and frequencies of observed phenomena; grounded theory coding and analytical induction to create categories in data, determine how categories are interrelated, and induce theory from observations; and pattern recognition to compare current data to prior models and samples. Content analysis and non-parametric statistics can be used to quantify qualitative data and make it more amenable to analysis, although quantitative analysis of qualitative data is not, strictly speaking, qualitative research (Gephart, 2004 ).
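Content coding for frequencies, as mentioned above, can be sketched in a few lines of Python. This is a simplified illustration and not a procedure from the cited sources: the codebook, its keywords, and the text segments are invented, and matching is by exact word form only, so a real study would need a validated coding scheme and reliability checks.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical codebook: category -> keywords that signal the category.
CODEBOOK: Dict[str, List[str]] = {
    "goals": ["goal", "mission"],
    "conflict": ["dispute", "pressure"],
}

# Hypothetical text segments, e.g., excerpts from meeting minutes.
SEGMENTS: List[str] = [
    "The commission had no clear goal or mission.",
    "Outside agencies applied pressure, and the mission shifted.",
]


def code_frequencies(segments: List[str], codebook: Dict[str, List[str]]) -> Counter:
    """Count how often each category's keywords occur across all segments."""
    counts: Counter = Counter()
    for segment in segments:
        # Lowercase and split into words so punctuation does not block matches.
        words = re.findall(r"[a-z']+", segment.lower())
        for category, keywords in codebook.items():
            counts[category] += sum(words.count(keyword) for keyword in keywords)
    return counts


if __name__ == "__main__":
    for category, count in code_frequencies(SEGMENTS, CODEBOOK).items():
        print(category, count)
```

Counts like these quantify qualitative data; as noted above, analyzing them statistically is not, strictly speaking, qualitative research.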

The Abductive Strategy

Abduction is “the process used to produce social scientific accounts of social life by drawing on the concepts and meanings used by social actors, and the activities in which they engage” (Blaikie, 1993, p. 176). Abductive reasoning assumes that the socially meaningful world is the world experienced by members. The first abductive task is to discover the insider view that is basic to the actions of social actors (p. 176) by uncovering the subjective meanings held by social actors. Subjective meaning (Schutz, 1973a, 1973b) refers to the meaning that actions hold for the actors themselves and that they can express verbally. Subjective meaning does not refer to inexpressible ideas locked in one’s mind. Abduction starts with lay descriptions of social life, then moves to technical, scientific descriptions of social life (Blaikie, 1993, p. 177) (see Table 4). Abduction answers “what” questions with induction, “why” questions with deduction, and “how” questions with hypothesized processes that explain how, and under what conditions, phenomena occur. Abduction involves making a logical leap that infers an explanatory process to explain an outcome in an oscillating logic. Deductive, inductive, and inferential processes move recursively from actors’ accounts to social science accounts and back again in abduction (Gephart, 2018). This process enables all theory and second-order scientific concepts to be grounded in actors’ first-order meanings.

The abductive strategy contains four layers: (a) everyday concepts and meanings of actors, used for (b) social interaction, from which (c) actors provide accounts, from which (d) social scientific descriptions are made, or theories are generated and applied, to interpret phenomena (Blaikie, 1993 , p. 177). The multifaceted research process, described in Table 4 , requires locating and comprehending members’ important everyday concepts and theories before observing or creating disruptions that force members to explain the unstated knowledge behind their action. The researcher then integrates members’ first-order concepts into a general, second-order scientific theory that makes first-order understandings recoverable.

Abduction emerged from Weber’s interpretive sociology ( 1978 ) and Peirce’s ( 1936 ) philosophy. But Alfred Schutz ( 1973a , 1973b ) is the contemporary scholar who did the most to extend our understanding of abduction, although he never used the term “abduction” (Blaikie, 1993 , 2010 ; Gephart, 2018 ). Schutz conceived abduction as an approach to verifiable interpretive knowledge that is scientific and rigorous (Blaikie, 1993 ; Gephart, 2018 ). Abduction is appropriate for research that seeks to go beyond description to explanation and prediction (Blaikie, 1993 , p. 163) and discovery (Gephart, 2018 ). It employs an interpretive ontology (Schutz, 1973a , 1973b ) and social constructionist epistemology (Berger & Luckmann, 1966 ), using qualitative methods to discover “why people do what they do” (Blaikie, 1993 ).

Dynamic data collection methods are needed for abductive research to capture descriptions of interactions in actual settings and their meanings to members. Observational and interview approaches that elicit members’ concepts and theories are particularly relevant to abductive understanding (see Table 2). Data analysis methods must analyze situated, first-order (common sense) discourse as it unfolds in real settings and then systematically develop second-order concepts or theories from data. Relevant approaches to produce and validate findings include ethnography, ethnomethodology, and grounded theorizing (see Table 3). The combination of “what,” “why,” and “how” questions used in abduction produces a broader understanding of phenomena than do the “what” questions of induction and the “why” questions of deduction alone.

Layer Three: Paradigms of Research

Scholarly paradigms integrate methods, logics, and intellectual worldviews into coherent theoretical perspectives and form the most abstract level of research design. Six paradigms are widely used in management research (Burrell & Morgan, 1979 ; Cunliffe, 2011 ; Gephart, 2004 , 2013 ; Gephart & Richardson, 2008 ; Hassard, 1993 ). The first three perspectives—positivism, interpretive induction, and interpretive abduction—build on logics of design and seek to produce rigorous empirical research that constitutes evidence (see Table 5 ). Three additional perspectives pursue philosophical, critical, and practical knowledge: critical theory, postmodernism, and organization development (see Table 6 ). Tables 5 and 6 describe important features of each research design to show similarities and differences in the processes through which theoretical meaning is bestowed on research results in management and organization studies.

Table 5. Paradigms, Logical Strategies, and Methodologies for Empirical Research

| Dimension | Positivism | Interpretive Induction | Interpretive Science |
|---|---|---|---|
| Nature of Reality | Realism: Single objective, durable, knowable reality independent of people | Socially constructed reality with subjective and objective features | Material reality socially constructed through inter-subjective practices that link objective to subjective meanings |
| Goal | Discover facts and causal interrelationships among facts (variables) | Provide descriptive accounts, theories and data-based understandings of members’ practices | Develop second-order scientific theories from lay members’ first-order concepts and everyday understandings |
| Research Questions | Why questions | What questions | What, why, and how questions |
| Methods Foci | Facts: Variables, hypotheses, associations, and correlations | Meanings: Describe language use in real life contexts, communication, meaning during organizational action | Meaning: Describe how members construct and maintain a sense of shared meaning and social structure (intersubjectivity) |
| Methods Orientation | | | |
| Logical strategies | Induction | Abduction; Induction; Deduction | |
| Data Collection Methods | Observation; Interviews; Audio and video records; Field notes; Document collection | Ethnography; Participant observation; Interviewing; Audio or video tape recording; Field notes; Document collection | Ethnography; Participant observation; Informant interviewing; Audio or video with detailed transcriptions of conversation and recording; Field notes; Document collection |
| Data Analysis Methods | Pattern matching; Content analysis; Grounded theory | Analytical induction; Grounded theory coding; Gioia method | Schutz’s abductive method; Expansion analysis; Conversation analysis; Ethnomethodology; Interpretive textual analysis |
| Research Process | , p. 90) | | |
| Research Design Stages | | | |
| Research Outcomes | | | |
| Assessing knowledge | | | |
| Types of Knowledge Sought | Scientific knowledge | Scholarly knowledge that is interpretive and has scientific features | Scientific knowledge that is replicable, reliable and valid; Practice-oriented knowledge of members’ gained based on first-order understandings |

Sources: Adapted and extended from Blaikie (1993, pp. 137, 145, & 152); Blaikie (2010, Table 4.1, p. 84); Gephart (2013, Table 9.1, p. 291); and Gephart (2018, Table 3.1, pp. 38–39).

Table 6. Alternative Paradigms, Logical Strategies, and Methodologies

| Dimension | Critical Research | Postmodern Perspectives | Organization Development Research |
|---|---|---|---|
| | Dialectical reality with objective contradictions and reified structures that produce power-based inequities | | |
| | Uncover, dereify, and challenge taken-for-granted meanings and practices to reduce power inequities, enable emancipation, and motivate social change | Reduce hidden costs; Enhance value added for humans | |
| | | | |
| | Actions and ideologies that create reified, objective social structures that are oppressive, OR disrupt reified structures | Analysis of texts and discourse that shape and bestow power to show their value-laden nature | |
| | Describe and uncover sources of oppression and discord; Produce accounts that enable or encourage social action and change; Emphasis on description, unveiling of reified structure, change | Describe and uncover sources of oppression and discord; Produce accounts that enable or encourage social action and change; Emphasis on description, unveiling of reified structure, change | |
| | Reflection; Critical reflexivity; Dialectical methods | Reflection; Deconstruction; Linguistic play | Deduction; Induction; Abduction |
| | All methods possibly useful; Case descriptions; Document collection | Collect documents and texts | Observations, interviews |
| | All qualitative methods are possibly useful; Dialogical inquiry; Critical ethnography | Storytelling inquiry; Critical discourse analysis; Narrative and rhetorical analysis; Deconstruction | Pattern matching; Storytelling; Qualimetrics; Hidden cost analysis |
| | Unmasking of oppression; Development of political strategies for action; Trigger actions that produce change; Trace the conflictual role of power in organizational life | Create texts that disrupt the readers’ conceptions and viewpoints; Challenge status quo knowledge; Expose hidden knowledge and hidden interests; Motivate action to resist categorizations | Qualitative and quantitative improvements in organizational functioning and performance; Reduction of hidden costs |
| | Quality of theory developed; Positive impacts on management policies and practices to reduce oppression, inequities | Novel research to produce novel insights | Examine performance outcomes |
| | Political knowledge, historical knowledge, change orientation | Disruptive knowledge, change orientation, philosophical, literary, and rhetorical texts | Practical knowledge; Actionable knowledge |

Based in part on Gephart ( 2004 , 2013 , 2018 ).

The Positivist Approach

The qualitative positivist approach makes assumptions equivalent to those of quantitative research (Gephart, 2004, 2018). It assumes the world is objectively describable and comprehensible using inductive and deductive logics. Rigor is important and is achieved through the reliability, validity, and generalizability of findings (Kirk & Miller, 1986; Malterud, 2001). Qualitative positivism mimics natural science logics and methods using data recorded as words and talk rather than numerals.

Positivist research (Bitektine, 2008 ; Su, 2018 ) starts with a hypothesis. This can, but need not, be based in data or inductive theory. The research process, aimed at publication in peer-reviewed journals, requires researchers to (a) identify variables to measure, (b) develop operational definitions of the variables, (c) measure (describe) the variables and their inter-relationships, (d) pose hypotheses to test relationships among variables, then (e) compare observations to hypotheses for testing (Blaikie, 2010 ). When data are consistent with theory, theory passes the test. Otherwise the theory fails. This theory is also assessed for its logical correctness and value for knowledge. The positivist approach can assess deductive and inductive generalizations and provide evidence concerning why something occurs—if proposed hypotheses are not rejected.

Positivists view qualitative research as highly subject to biases that must be prevented to ensure rigor, and 23 methodological steps are recommended to enhance rigor and prevent bias (Gibbert & Ruigrok, 2010 , p. 720). Replicability is another concern because methodology descriptions in qualitative publications “insufficiently describe” how methods are used (Lee, Mitchell, & Sablynski, 1999 , p. 182) and thereby prevent replication. To ensure replicability, a qualitative “article’s description of the method must be sufficiently detailed to allow a reader . . . to replicate that reported study either in a hypothetical or actual manner.”

Qualitative research allows positivists to observe naturally unfolding behavior in real settings and allow “the real world” of work to inform research and theory (Locke & Golden-Biddle, 2004 ). Encounters with the actual world provide insights into meaning construction by members that cannot be captured with outsider (etic) approaches. For example, past quantitative research provided inconsistent findings on the importance of pre- and post-recruitment screening interviews for job choices of recruits. A deeper investigation was thus designed to examine how recruitment impacts job selection (Rynes, Bretz, & Gerhart, 1991 ). To do so, students undergoing recruitment were asked to “tell us in their own words” how their recruiting and decision processes unfolded (Rynes et al., 1991 , p. 399). Using qualitative evidence, the researchers found that, in contrast to quantitative findings, “people do make choices based on how they are treated” (p. 509), and the choices impact recruitment outcomes. Rich descriptions of actual behavior can disconfirm quantitative findings and produce new findings that move the field forward.