ESL Essay Writing Rubric


- TESOL Diploma, Trinity College London

- M.A., Music Performance, Cologne University of Music

- B.A., Vocal Performance, Eastman School of Music

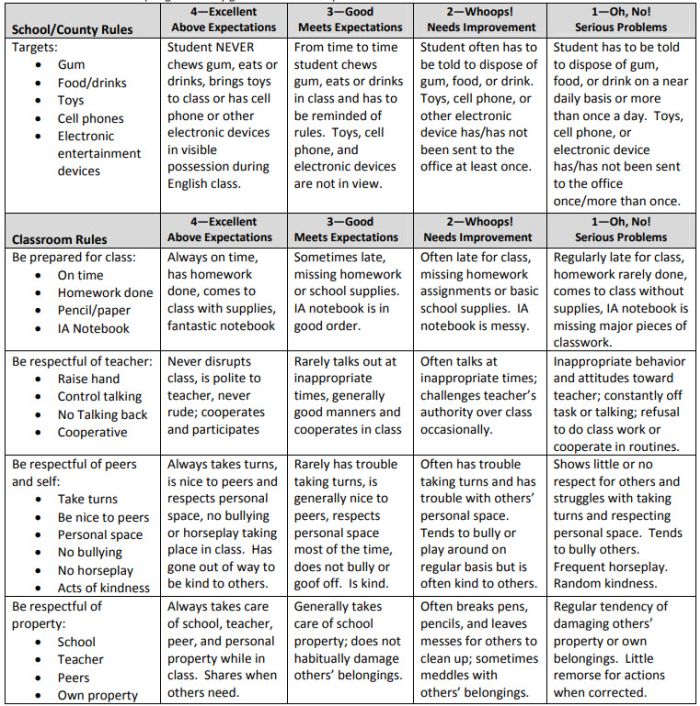

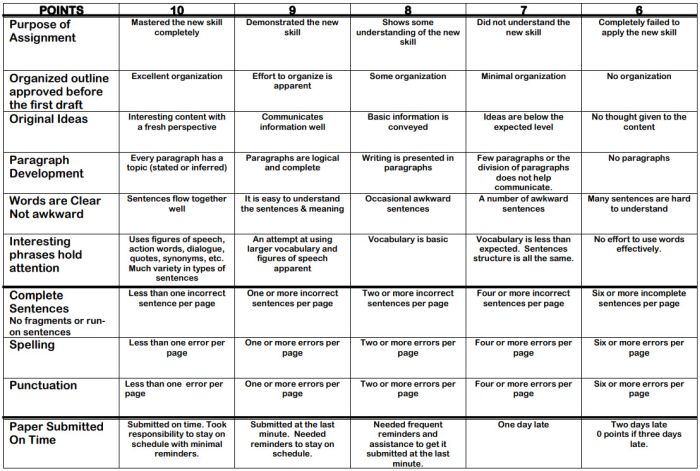

Scoring essays written by English learners can be difficult because writing longer structures in English is itself a challenging task. ESL / EFL teachers should expect errors in each area and make appropriate concessions in their scoring. Rubrics should be based on a keen understanding of English learner communicative levels. This essay writing rubric provides a scoring system that is more appropriate to English learners than standard rubrics. It contains marks not only for organization and structure, but also for important sentence-level skills such as the correct usage of linking language, spelling, and grammar.

Essay Writing Rubric

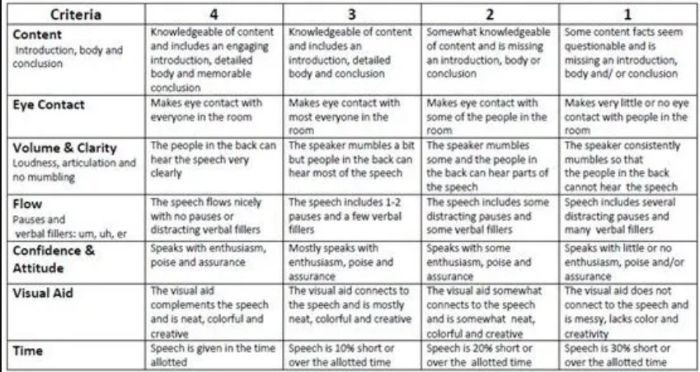


Essay Rubric

About this printout

This rubric delineates specific expectations about an essay assignment to students and provides a means of assessing completed student essays.

Teaching with this printout

Grading rubrics can be of great benefit to both you and your students. For you, a rubric saves time and decreases subjectivity. Specific criteria are explicitly stated, facilitating the grading process and increasing your objectivity. For students, the use of grading rubrics helps them to meet or exceed expectations, to view the grading process as being “fair,” and to set goals for future learning. In order to help your students meet or exceed expectations of the assignment, be sure to discuss the rubric with your students when you assign an essay. It is helpful to show them examples of written pieces that meet and do not meet the expectations. As an added benefit, because the criteria are explicitly stated, the use of the rubric decreases the likelihood that students will argue about the grade they receive. The explicitness of the expectations helps students know exactly why they lost points on the assignment and aids them in setting goals for future improvement.

More ideas to try

- Routinely have students score peers’ essays using the rubric as the assessment tool. This increases their level of awareness of the traits that distinguish successful essays from those that fail to meet the criteria. Have peer editors use the Reviewer’s Comments section to add any praise, constructive criticism, or questions.

- Alter some expectations or add additional traits on the rubric as needed. Students’ needs may necessitate making more rigorous criteria for advanced learners or less stringent guidelines for younger or special needs students. Furthermore, the content area for which the essay is written may require some alterations to the rubric. In social studies, for example, an essay about geographical landforms and their effect on the culture of a region might necessitate additional criteria about the use of specific terminology.

- After you and your students have used the rubric, have them work in groups to make suggested alterations to the rubric to more precisely match their needs or the parameters of a particular writing assignment.


Two ESL Writing Rubric Templates

Providing timely and high-quality feedback to students is key to ensuring they make consistent progress, but grading English as a Second Language (ESL) writing assignments is time-consuming. An ESL writing rubric can make the process significantly easier for you and more transparent for your students.

In this blog post, we will show you two ESL writing rubric templates that you can use to grade your students’ writing. But first, let’s look at what an ESL writing rubric is.

What is an ESL Writing Rubric?

Grading written essays objectively and consistently is difficult without standardized rubrics. With an ESL writing feedback rubric, teachers can provide detailed, personalized, fair and unbiased feedback to their students.

Rubric-based feedback is helpful for students because it clearly communicates their instructor's expectations and how their work measures up against them. Be sure to craft a rubric that clearly captures both your expectations and your feedback for the assignment.

ESL Writing Rubric Template


Thank you for submitting your assignment. You can read my feedback below:

Purpose

{formtoggle: name=Clearly defined; default=yes}Purpose is clearly defined; includes many details that connect talking points and develop the assignment; may include minor irrelevant information.{endformtoggle: trim=yes}
{formtoggle: name=good, not well supported; default=no}Purpose is comprehensible and defined; includes some details, although not all points are adequately supported; some irrelevant information.{endformtoggle: trim=yes}
{formtoggle: name=not comprehensible, loose connections; default=no}Purpose is defined, but may not be comprehensible for readers who don’t know the writer; few details included; loose connections; a lot of irrelevant information.{endformtoggle: trim=yes}
{formtoggle: name=no supporting details; default=no}Attempts to define the purpose; few to no supporting details.{endformtoggle}

Organization

{formtoggle: name=coherent and logical; default=yes}Coherent and logical throughout the assignment; clear beginning, middle and end; smooth transitions used to connect ideas.{endformtoggle: trim=yes}
{formtoggle: name=follows a logical sequence; default=no}Assignment follows a logical sequence; contains a beginning, middle and end.{endformtoggle: trim=yes}
{formtoggle: name=beginning or end is unclear; default=no}Attempts to follow a logical sequence; beginning or ending is unclear or ends abruptly.{endformtoggle: trim=yes}
{formtoggle: name=incoherent, disconnected ideas; default=no}No coherent order; written with sentence fragments or disconnected ideas.{endformtoggle}

Vocabulary

{formtoggle: name=wide vocab; default=yes}Wide variety of vocabulary used; words help expand the topic; minor inaccuracies that do not affect the reader's ability to comprehend the assignment.{endformtoggle: trim=yes}
{formtoggle: name=good vocab, few errors; default=no}Variety of words related to the topic; few errors.{endformtoggle: trim=yes}
{formtoggle: name=basic vocab, unrelated words; default=no}Uses basic vocabulary; some words are not related to the topic.{endformtoggle: trim=yes}
{formtoggle: name=limited vocab, very inaccurate; default=no}Uses a limited vocabulary that is below the expected level; vocabulary is largely inaccurate.{endformtoggle}

Grammar/Punctuation/Conventions

{formtoggle: name=excellent; default=yes}The writer demonstrates an excellent understanding of English conventions; limited errors that do not affect the reader’s ability to comprehend the assignment.{endformtoggle: trim=yes}
{formtoggle: name=average; default=no}Demonstrates an average understanding of English conventions; errors are unlikely to affect reader comprehension; low amount of errors.{endformtoggle: trim=yes}
{formtoggle: name=frequent errors; default=no}Shows some understanding of English conventions; frequent errors; errors hinder reader’s ability to comprehend the assignment.{endformtoggle: trim=yes}
{formtoggle: name=incomprehensible; default=no}Demonstrates lower than expected knowledge of English conventions; the piece is nearly incomprehensible.{endformtoggle}

Fluency

{formtoggle: name=noticeable effort to write; default=yes}The writer does not use translation; puts noticeable effort to write like a native speaker.{endformtoggle: trim=yes}
{formtoggle: name=very few errors; default=no}Very little translation is used in the assignment; effort to write like a native speaker; errors are few.{endformtoggle: trim=yes}
{formtoggle: name=hard to understand; default=no}Heavy translation throughout the document; writing is hard to understand.{endformtoggle: trim=yes}
{formtoggle: name=incomprehensible; default=no}Writing is incomprehensible.{endformtoggle}


eslwriting.org

A panoply of teaching resources.

ESL Writing: A Simple Scoring Rubric

Anyone who teaches ESL writing knows there comes a time when student papers pile up on the desk and they have to be marked. The hard part, when faced with 30 or more regular submissions, is finding a way to give English writing students meaningful input without spending hours generating feedback.

A writing rubric is one possible solution.

Below is a suggested scoring guide. It is simple, yes. Though I hope it provides useful guidance.

This rubric is suitable for one paragraph writing submissions. In addition, it is useful for classes where the emphasis is on three main elements:

- a strong topic sentence (control sentence)

- several sentences of supporting material (reasons, evidence or details)

- a conclusion

ESL Writing Rubric

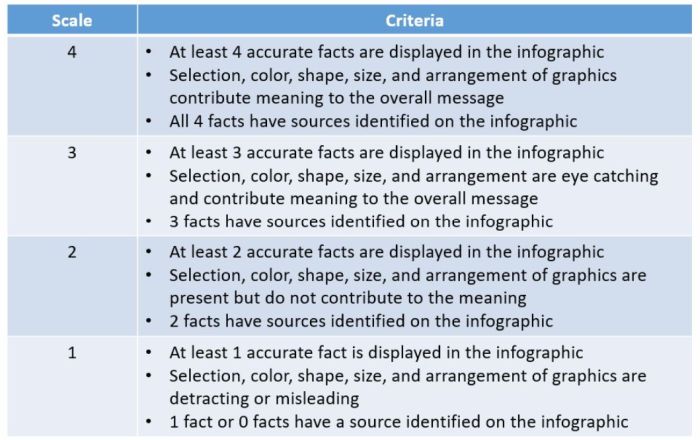

This ESL writing rubric uses a 1 to 4 scoring system, with 4 as the highest score.

- 4: A well-structured paragraph (clear topic sentence, good support and conclusion). Grammar and spelling errors are present though the meaning of the passage is clear.

- 3: A good topic sentence though the paragraph lacks unity (the main idea is not well supported) or there are too many grammar or spelling errors.

- 2: It looks like a rough draft. The topic sentence is not well formulated, the supporting data is unclear and there are too many errors.

- 1: Incomplete. It is not possible to understand the meaning of the paragraph because key sections are missing or they cannot be understood.



Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started


Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e. what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks equally important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Any weighting of criteria cannot be indicated in the rubric

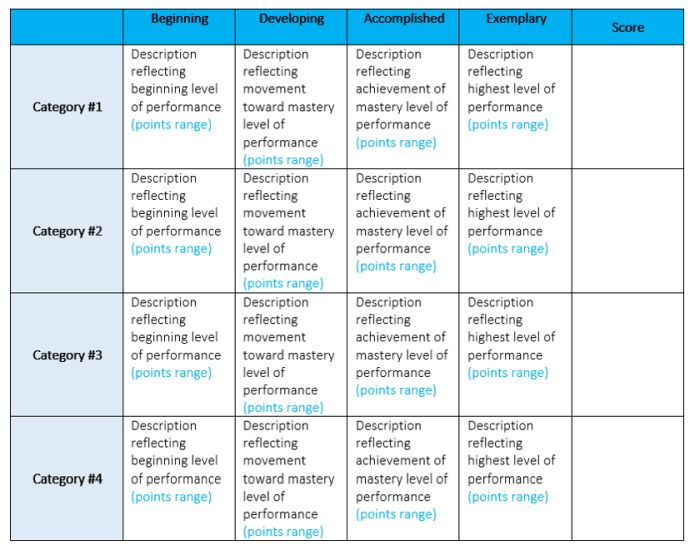

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback
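As a minimal sketch of how per-criterion weighting might work in an analytic rubric, the Python snippet below turns the level awarded on each criterion into a weighted percentage. The function name `weighted_score`, the example criteria, and the weights are all illustrative assumptions, not part of any particular rubric tool.

```python
def weighted_score(levels, weights, max_level):
    """Return a 0-100 score from per-criterion levels and relative weights.

    All names and numbers used with this function are hypothetical.
    """
    if set(levels) != set(weights):
        raise ValueError("levels and weights must cover the same criteria")
    total_weight = sum(weights.values())
    # Each criterion contributes its weight times the fraction of levels earned.
    earned = sum(weights[c] * levels[c] / max_level for c in levels)
    return 100 * earned / total_weight


# Example: three criteria scored on a 1-4 scale; mechanics counts half as much.
weights = {"clarity": 2, "organization": 2, "mechanics": 1}
levels = {"clarity": 4, "organization": 3, "mechanics": 2}
print(weighted_score(levels, weights, max_level=4))  # prints 80.0
```

Because each criterion is scored separately, changing a weight shifts the final score without rewriting any of the cell descriptions.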

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, only the “proficient” level is described. Feedback space is provided for instructors to give individualized comments to help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantage of single-point rubrics: Requires more work for instructors writing feedback

Step 3 (Optional): Look for templates and examples.

You might Google “Rubric for persuasive essay at the college level” and see if there are any publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point for you, but consider steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc. for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Can they be observed and measured?

- Are they important and essential?

- Are they distinct from other criteria?

- Are they phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some are more important than others, and how you will weight them.

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels means more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (for example 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools like ChatGPT can be useful for creating a rubric. Craft the prompt you give the AI assistant carefully to ensure you get what you want. For example, you might provide the assignment description, the criteria you feel are important, and the number of levels of performance you want in your prompt. Use the results as a starting point, and adjust the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient,” i.e. B-level work, and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs absence, complete vs incomplete, many vs none, major vs minor, consistent vs inconsistent, always vs never. If you have an indicator described in one level, it will need to be described in each level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for each/every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each particular criterion of the rubric so that every cell in the table is filled. These descriptions help students understand your expectations and their performance in regard to those expectations.

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it by typing it into Moodle. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: Rubistar, iRubric

Step 8: Pilot-test your rubric

Prior to implementing your rubric on a live course, obtain feedback from:

- Teacher assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

Best practices

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is learning-level appropriate. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying, “uses excellent sources,” you might describe what makes a resource excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

Example of an analytic rubric for a final paper

Example of a holistic rubric for a final paper

Single-point rubric

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form


My Life! Teaching in a Korean University


ESL Writing Grading Rubric | Evaluating English Writing (For English Learners)

Do you teach writing to intermediate or advanced level English students, particularly in a university where you have to give grades? You’ve come to the right place! Keep on reading for all the details about my ESL rubric for writing that you can use to evaluate your students fairly. This ESL writing rubric is super easy to use and I think you’ll love it as much as I do!


Let’s get into all the details about this writing rubric for ESL students. Keep on reading!

ESL Writing Grading Rubric Introduction

For English teachers, grading writing and speaking are not easy when compared to something like grammar or vocabulary because there’s no right or wrong answer. Everything falls on a continuum from terrible to excellent.

The challenge for teachers is to grade in a way that’s fair and that also appears this way to the students. To do this, you’ll need an ESL writing rubric. I generally use the same one with all my writing classes, and for a wide variety of topics. Of course, feel free to adapt it to suit your needs for each individual situation.


ESL Writing Grading Rubric

To grade fairly, and to be seen as fair by my students, I use this ESL Writing Grading Rubric. I use it even though, after reading the first couple of sentences of a 5-paragraph, 500-word essay, I usually know what grade the student will get. This is especially true if it’s going to be an A or a D/F.

The middle ones require a bit of a closer look to separate the B students from the C ones. This ESL essay rubric started off more complicated, but over the years I’ve simplified it. The best ESL rubrics for writing are simple enough that students can clearly understand why they received the grade that they did.

The Categories I Evaluate for English Writing

Each of these five categories has an equal number of possible points (from 0-5), for a total of 25 points. To make your grading life easier, if the essay is worth 30%, you can adjust the rubric to make each category out of 6 points. Or, for 20%, make it out of four points.

If you make each section out of 4 points, you can use something like the following for an ESL writing rubric:

1- Inadequate

2- Needs improvement

3- Meets expectations

4- Exceeds expectations
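The point arithmetic above can be sketched in a few lines of Python. This is only an illustration under the assumption of five equally weighted categories; the function names `points_per_category` and `total_score` are made up for this example.

```python
N_CATEGORIES = 5  # the rubric scores five equally weighted categories


def points_per_category(essay_weight_percent, n_categories=N_CATEGORIES):
    """Points each category is marked out of so the total equals the essay's weight."""
    return essay_weight_percent / n_categories


def total_score(category_scores, max_points):
    """Add up the category scores after checking that each one is in range."""
    if len(category_scores) != N_CATEGORIES:
        raise ValueError(f"expected {N_CATEGORIES} category scores")
    for score in category_scores:
        if not 0 <= score <= max_points:
            raise ValueError(f"score {score} is outside 0-{max_points}")
    return sum(category_scores)


print(points_per_category(30))            # 6.0 points per category
print(points_per_category(20))            # 4.0 points per category
print(total_score([5, 4, 4, 3, 5], 5))    # 21 out of 25
```

Matching the rubric total to the essay's weight means the rubric score can go straight into the gradebook with no further conversion.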

Here are the various categories that I look at when evaluating ESL writing:

- Sentences/paragraphs/format

- Grammar/spelling/punctuation/vocabulary

- Hook/thesis statement/topic sentences

- Task completion/effect on the reader

Here are more details about each of those things.

#1: Sentence Structure, paragraphs, format

The best essays have sentences and paragraphs that are complete and easy to read. A nice variety of conjunctions and transitions are used to join them together.

#2: Grammar, spelling, punctuation, vocabulary

The best pieces of writing may only have 1-2 small errors in this area. There is a good use of higher level grammar and vocabulary.

This section includes pretty much all the formatting and language (vocabulary and grammar) that a student at their level would be expected to know, even if we haven’t explicitly discussed it in class.

#3: Hook, thesis statement, topic sentence

To get full marks, all of these will have to be very well done, and actually almost perfect. This is because I teach this extensively in class and make sure that, if students take away one thing from my writing course, it’s how to do this.

The ideas in the writing are clear, logical and well organized. Good supporting facts and information are used. If it’s a take-home assignment, I expect it to include real stats and facts. If the essay is for a test written in class, then good logic will have to be used.

#5: Task completion, the effect on the reader

The best essays are easy to understand on a first, quick read-through. The student followed the directions for the assignment (word count, etc.)

Learn More about Assessing Writing for ESL/EFL Students

Can I Evaluate ESL/EFL Writing Without a Rubric?

You may be tempted to evaluate student writing without a rubric because you think it will save time. I don’t recommend this unless you’re teaching informally and the grades/feedback you give students doesn’t actually count towards an official grade of some kind.

But, if you’re teaching at a university in a credit class, grades actually matter. Beyond that simple fact, here’s why you should use a rubric to evaluate written work:

- It actually saves time because students will understand exactly how they got the grade that they did. You won’t have a steady stream of students in your office disputing their grade!

- The feedback is more meaningful and students have a better idea about how to improve their writing.

- You have some proof of why a student got the grade they did, if someone were to ask. It happened to me a number of times when teaching in Korea.


Can I use This ESL Writing Grading Rubric for a Paragraph?

I used to teach academic writing to advanced students where they had to write five paragraph essays. However, the above rubric would work equally well for a paragraph. Just about the only thing that would change is that you wouldn’t have a topic sentence because you’d only need a thesis statement for the entire piece.

Quick Tip to Cut Down on Grading Time for ESL Writing

Besides using an ESL writing rubric, I have one other tip for cutting down on grading time. It will reduce the number of complaints by a lot!

Tell the students that they are, of course, free to ask you to re-grade their essay, and that you will do your best to look at it with fresh eyes. However, you’ll spend 3-4 times as long as you did the first time around, get out your red pen, circle every single mistake they’ve made, and not overlook anything. I always mention that I’m very kind and generally overlook almost all the small to medium things.

I mention that it’s possible to get a higher grade but it’s also very possible to get a lower one. Finally, give the example of them getting 20/25, but they think they maybe should have gotten 21 or 22. In this case, it’s a terrible idea to ask me to re-grade it. However maybe I made a serious mistake and gave them 15/25, but their essay was actually quite good and they should have gotten 24/25. Then it would make sense to ask me to check again.

Mean-Yes. Effective-also Yes

Is this mean? Perhaps. But, it’s also quite effective and this past semester in my 3 writing classes, a grand total of zero students asked me to take a second look. I strive for total professionalism at all times, but I’m also not willing to let my job consume every last second of free time that I have.

Teaching ESL writing, the easy way. It’s possible! Use this ESL rubric for writing and you’ll be well on your way!

How Much Feedback Do You Give when using this Rubric?

It’s an excellent question! When I teach English writing, I’m ALL about teaching self-editing instead of having students rely on me to correct all their errors. During the semester, students are free to come to my office during my allotted hours for me to have a quick read-through of their writing. I’ll usually give feedback along the lines of:

“Your thesis statement is kind of weak. Have a look at that and see if you can make it more concise.”

“I noticed that you have very few transitions in your essay. It makes it kind of hard to read.”

“You have many grammar errors. For example, subject-verb agreement.”

“Can you try to use some more complicated grammar or vocabulary? It’s fine, but all the sentences are so similar.”

What about Feedback on Assignments and Tests?

Along with this rubric, I’ll write some comments on my students’ work, usually 3-4 sentences next to their grade. Throughout their essay, I’ll pick out around 5 things to circle as problems or errors.

I’ll put a checkmark as a sign of a good thing like the thesis statement or topic sentences.

Is it necessary to correct every single error? Not really. It’s often more helpful to just point out mistakes that students have made more than once.

Which ESL Writing Textbook Do you Recommend?

Looking for an excellent textbook for teaching academic writing to ESL or EFL students? Stop looking right now and go buy this: Great Writing 4: From Great Paragraphs to Great Essays.

It’s an ideal introduction to writing an essay for high intermediate to advanced level English students. In my case, I used it when teaching 3rd or 4th year English majors at a university in South Korea.


How Do I Prevent Cheating in a Writing Class?

If you teach ESL writing in a for-credit class where you have to assign grades, you will almost certainly have students who try to cheat. There are a few things that I do to combat this and make things fair for the students.

Homework Assignments: Not Worth that Many Marks

During my course, I did have homework assignments. They just weren’t worth that many points, usually a maximum of 20% of the final grade.

The bulk of the grade was things we did in class: journalling and then the midterm and final exam that had to be physically written in class. This gave a better indication of who could actually write, without having the crutch of the Internet to assist them.

The Ultimate Thing to Do on the First Day of Class

On the first day of any writing class, I get students to complete a “Get to know you assignment.” I give them about 20 minutes to write 3 short paragraphs.

- The past (high school days, growing up, etc.)

- The present (university life and their thoughts about it)

- The future (dreams, hopes, etc.)

This shows you very clearly how proficient students are at the past/present/future verb tenses, and it’s often quite obvious who will do well in your class and who will struggle.

Then, keep these papers and in case of a questionable homework assignment, you have something to compare to. For example, I had one student submit something that I myself probably couldn’t have produced. It was actually that good and had advanced level vocabulary that I had to look up.

I pulled out her assignment from the first day and found it riddled with simple mistakes like not using the correct past tense verb form and other comparable mistakes. She clearly could not have done that homework assignment herself and my guess is that she paid someone to do it because I was unable to find it through a Google search.

Midterm/Final Exam: Assign Random Topics

Some teachers assign a single topic for the exams and then allow the students to prepare their essay beforehand. During the exam, they just have to write it out basically. I try to avoid this.

Instead, I give students a list of around 20 possible topics. Then, I give students a slip of paper with two possible choices that they must choose from. Each student gets a random combination.

This allows students to prepare in terms of ideas and main points, but they can’t memorize an essay word for word and I find it a better test of actual writing ability.
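The slips described above could be generated with a short script. This is only a minimal sketch: the topic names are placeholders, and the function name `make_topic_slips`, the class size of 30, and the `seed` parameter are assumptions made for illustration, not part of the original method.

```python
import random
from itertools import combinations


def make_topic_slips(topics, num_students, seed=None):
    """Return one random pair of topics per student.

    Distinct pairs are handed out first, so students only share a
    combination if there are more students than possible pairs.
    """
    rng = random.Random(seed)
    all_pairs = list(combinations(topics, 2))
    rng.shuffle(all_pairs)
    slips = []
    for i in range(num_students):
        # Wrap around only when every distinct pair has been used.
        slips.append(all_pairs[i % len(all_pairs)])
    return slips


# Placeholder topic names; replace with the real list of about 20 topics.
topics = [f"Topic {n}" for n in range(1, 21)]
slips = make_topic_slips(topics, num_students=30, seed=1)
for student_number, (first, second) in enumerate(slips, start=1):
    print(f"Student {student_number}: {first} / {second}")
```

With 20 topics there are 190 possible pairs, so a typical class can be given all-different slips.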

Consider Using the Grammarly Plagiarism Checker

If you’re serious about catching students who plagiarize, then you’ll want to invest in Grammarly. It’s just so much better than searching around on Google and can save you a ton of time when grading assignments. I get students to submit them electronically and then run them through this tool as the first step.


ESL Writing Rubric FAQs

There are a number of common questions that people have about ESL rubrics for writing. Here are the answers to some of the most popular ones.

What is ESL writing?

ESL writing is writing for people who don’t speak English as their first language (ESL = English as a Second Language). These learners are also known as non-native speakers.

How do I teach ESL writing?

Teaching ESL writing starts with forming good sentences. Then, move from sentences to writing good paragraphs with a thesis statement or informal diaries. Finally, students can begin to write academic essays, newspaper articles, blog posts, and more.

How do beginners learn to write ESL?

ESL beginners can learn to write by following a few simple steps to construct good sentences.

- Each sentence starts with a capital (big) letter.

- Finish each sentence with a period, question mark or exclamation point.

- Use a capital letter for proper names and “I.”

- At a minimum, each sentence must contain a subject and verb. Usually, they also contain a complement like a prepositional phrase or direct object.

How do I write a paragraph for ESL?

Writing a paragraph for ESL involves a few simple steps:

- Start with a good thesis statement that explains what your paragraph is about.

- Give some facts, reasons or examples to support your opinion or idea.

- Finish with a concluding sentence.

Have your say about this ESL Writing Grading Rubric

What do you use in your ESL writing classes to evaluate your students in a fair way? Do you like this ESL writing activity rubric, or do you prefer another one? Leave a comment below and share your wisdom with us! We’d love to hear from you.

Also be sure to give this article a share on Facebook, Twitter, or Pinterest. It’ll help other busy teachers, like yourself, find this useful resource.


Last update on 2022-06-17 / Affiliate links / Images from Amazon Product Advertising API

About Jackie

Jackie Bolen has been teaching English for more than 15 years to students in South Korea and Canada. She's taught all ages, levels and kinds of TEFL classes. She holds an MA degree, along with the Celta and Delta English teaching certifications.

Jackie is the author of more than 30 books for English teachers and English learners, including Advanced English Conversation Dialogues and 39 No-Prep/Low-Prep ESL Speaking Activities for Teenagers and Adults. She loves to share her ESL games, activities, teaching tips, and more with other teachers throughout the world.


Center for Excellence in Teaching


Academic essay rubric

This is a grading rubric an instructor uses to assess students’ work on this type of assignment. It is a sample rubric that needs to be edited to reflect the specifics of a particular assignment.


Essay Papers Writing Online

Effective essay writing rubrics to enhance students’ skills and performance.

Essay writing is a fundamental skill that students must master to succeed academically. To help students improve their writing, educators often use rubrics as a tool for assessment and feedback. An essay writing rubric is a set of criteria that outlines what is expected in a well-crafted essay, providing students with clear guidelines for success.

Effective essay writing rubrics not only evaluate the quality of the content but also assess the organization, coherence, language use, and overall structure of an essay. By using a rubric, students can better understand what aspects of their writing need improvement and how to achieve higher grades.

This article will explore the importance of essay writing rubrics in academic success and provide tips on how students can use them to enhance their writing skills. By understanding and mastering the criteria outlined in a rubric, students can not only improve their writing but also excel in their academic endeavors.

Learn the Key Elements

When it comes to effective essay writing, understanding the key elements is essential for academic success. Here are some important elements to keep in mind:

- Thesis Statement: A clear and concise thesis statement that presents the main idea of your essay.

- Structure: A well-organized structure with introduction, body paragraphs, and a conclusion.

- Evidence: Use relevant evidence and examples to support your arguments.

- Analysis: Analyze the evidence provided and draw conclusions based on your analysis.

- Clarity and Coherence: Ensure that your essay is clear, coherent, and easy to follow.

By mastering these key elements, you can improve your essay writing skills and achieve academic success.

Elements of Effective Essay Writing

When it comes to writing a successful essay, there are several key elements that you should keep in mind to ensure your work is of high quality. Here are some essential aspects to consider:

Understand the Importance

Understanding the importance of essay writing rubrics is crucial for academic success. Rubrics provide clear criteria for evaluating and assessing essays, which helps both students and instructors. They help students understand what is expected of them, leading to more focused and organized writing. Rubrics also provide consistency in grading, ensuring that all students are evaluated fairly and objectively. By using rubrics, students can see where they excel and where they need to improve, making the feedback process more constructive and impactful.

of Clear and Concise Rubrics

One key aspect of effective essay writing rubrics is the clarity and conciseness of the criteria they outline. Clear and concise rubrics provide students with a roadmap for success and make grading more efficient for instructors. When creating rubrics, it is essential to clearly define the expectations for each aspect of the essay, including content, organization, style, and grammar.

By using language that is straightforward and easy to understand, students can easily grasp what is expected of them and how they will be evaluated. Additionally, concise rubrics avoid confusion and ambiguity, which can lead to frustration and misunderstanding among students.

Furthermore, clear and concise rubrics help instructors provide more targeted feedback to students, allowing them to focus on specific areas for improvement. This ultimately leads to better learning outcomes and helps students develop their writing skills more effectively.

Implement Structured Guidelines

Structured guidelines are essential for effective essay writing. These guidelines provide a framework for organizing your thoughts, ideas, and arguments in a logical and coherent manner. By implementing structured guidelines, you can ensure that your essay is well-organized, cohesive, and easy to follow.

One important aspect of structured guidelines is creating an outline before you start writing. An outline helps you plan the structure of your essay, including the introduction, body paragraphs, and conclusion. It also helps you organize your ideas and ensure that your arguments flow logically from one point to the next.

Another important aspect of structured guidelines is using transitions to connect your ideas and arguments. Transitions help readers follow the flow of your essay and understand how one point relates to the next. They also help maintain the coherence and clarity of your writing.

Finally, structured guidelines include formatting requirements such as word count, font size, spacing, and citation style. Adhering to these formatting requirements ensures that your essay meets academic standards and is visually appealing to readers.

for Academic Excellence

When aiming for academic excellence in essay writing, it is essential to adhere to rigorous standards and criteria set by the educational institution. The use of effective essay writing rubrics can greatly enhance the quality of academic work by providing clear guidelines and criteria for assessment.

Academic excellence in essay writing involves demonstrating a deep understanding of the subject matter, presenting a well-structured argument, and showcasing critical thinking skills. Rubrics can help students focus on key elements such as thesis development, evidence analysis, organization, and clarity of expression.

By following a well-designed rubric, students can ensure that their essays meet the desired academic standards and expectations. Feedback provided based on the rubric can also help students identify areas for improvement and guide them towards achieving academic success.

Master the Art

Mastering the art of essay writing requires dedication, practice, and attention to detail. To become a proficient essay writer, one must develop strong writing skills, a clear and logical structure, as well as an effective argumentative style. Start by understanding the purpose of your essay and conducting thorough research to gather relevant information and evidence to support your claims.

Furthermore, pay close attention to grammar, punctuation, and spelling to ensure your work is polished and professional. Use essay writing rubrics as a guide to help you stay on track and meet the necessary criteria for academic success. Practice makes perfect, so don’t be afraid to revise and edit your work to improve your writing skills even further.

By mastering the art of essay writing through consistent practice and attention to detail, you can set yourself up for academic success and develop a valuable skill that will benefit you in various aspects of your life.

of Constructing Detailed Evaluations

Constructing detailed evaluations in essay writing rubrics is essential for providing specific feedback to students. When designing a rubric, it is important to consider the criteria that will be assessed and clearly define each level of performance. This involves breaking down the evaluation criteria into smaller, measurable components, which allows for more precise and accurate assessments.

Each criterion in the rubric should have a clear description of what is expected at each level of performance, from basic to proficient to advanced. This helps students understand the expectations and enables them to self-assess their work against the rubric. Additionally, including specific examples or descriptors for each level can further clarify the expectations and provide guidance to students.

Constructing detailed evaluations also involves ensuring that the rubric aligns with the learning objectives of the assignment. By mapping the evaluation criteria to the desired learning outcomes, instructors can ensure that the rubric accurately reflects what students are expected to demonstrate in their essays. This alignment helps students see the relevance of the assessment criteria and encourages them to strive for mastery of the material.


15 Helpful Scoring Rubric Examples for All Grades and Subjects

In the end, they actually make grading easier.

When it comes to student assessment and evaluation, there are a lot of methods to consider. In some cases, testing is the best way to assess a student’s knowledge, and the answers are either right or wrong. But often, assessing a student’s performance is much less clear-cut. In these situations, a scoring rubric is often the way to go, especially if you’re using standards-based grading. Here’s what you need to know about this useful tool, along with lots of rubric examples to get you started.

What is a scoring rubric?

In the United States, a rubric is a guide that lays out the performance expectations for an assignment. It helps students understand what’s required of them, and guides teachers through the evaluation process. (Note that in other countries, the term “rubric” may instead refer to the set of instructions at the beginning of an exam. To avoid confusion, some people use the term “scoring rubric” instead.)

A rubric generally has three parts:

- Performance criteria: These are the various aspects on which the assignment will be evaluated. They should align with the desired learning outcomes for the assignment.

- Rating scale: This could be a number system (often 1 to 4) or words like “exceeds expectations, meets expectations, below expectations,” etc.

- Indicators: These describe the qualities needed to earn a specific rating for each of the performance criteria. The level of detail may vary depending on the assignment and the purpose of the rubric itself.

Rubrics take more time to develop up front, but they help ensure more consistent assessment, especially when the skills being assessed are more subjective. A well-developed rubric can actually save teachers a lot of time when it comes to grading. What’s more, sharing your scoring rubric with students in advance often helps improve performance. This way, students have a clear picture of what’s expected of them and what they need to do to achieve a specific grade or performance rating.

Learn more about why and how to use a rubric here.

Types of Rubric

There are three basic rubric categories, each with its own purpose.

Holistic Rubric

Source: Cambrian College

This type of rubric combines all the scoring criteria in a single scale. They’re quick to create and use, but they have drawbacks. If a student’s work spans different levels, it can be difficult to decide which score to assign. They also make it harder to provide feedback on specific aspects.

Traditional letter grades are a type of holistic rubric. So are the popular “hamburger rubric” and “cupcake rubric” examples. Learn more about holistic rubrics here.

Analytic Rubric

Source: University of Nebraska

Analytic rubrics are much more complex and generally take a great deal more time up front to design. They include specific details of the expected learning outcomes, and descriptions of what criteria are required to meet various performance ratings in each. Each rating is assigned a point value, and the total number of points earned determines the overall grade for the assignment.

Though they’re more time-intensive to create, analytic rubrics actually save time while grading. Teachers can simply circle or highlight any relevant phrases in each rating, and add a comment or two if needed. They also help ensure consistency in grading, and make it much easier for students to understand what’s expected of them.
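The way an analytic rubric adds up to a grade can be sketched in a few lines. The criteria names, rating labels, and point values below are hypothetical, chosen only to illustrate the idea of circling one rating per criterion and totalling the points.

```python
# Hypothetical rating scale and criteria, for illustration only.
RATING_POINTS = {"exceeds": 4, "meets": 3, "approaching": 2, "below": 1}
CRITERIA = ["thesis", "organization", "evidence", "conventions"]


def score_analytic(ratings):
    """Sum the points for the rating circled under each criterion."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"No rating given for: {', '.join(missing)}")
    earned = sum(RATING_POINTS[ratings[c]] for c in CRITERIA)
    possible = max(RATING_POINTS.values()) * len(CRITERIA)
    return earned, possible


ratings = {
    "thesis": "meets",
    "organization": "exceeds",
    "evidence": "approaching",
    "conventions": "meets",
}
earned, possible = score_analytic(ratings)
print(f"{earned} / {possible}")  # prints "12 / 16"
```

Because every criterion keeps its own rating, the same record that produces the total also shows the student which areas cost them points.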

Learn more about analytic rubrics here.

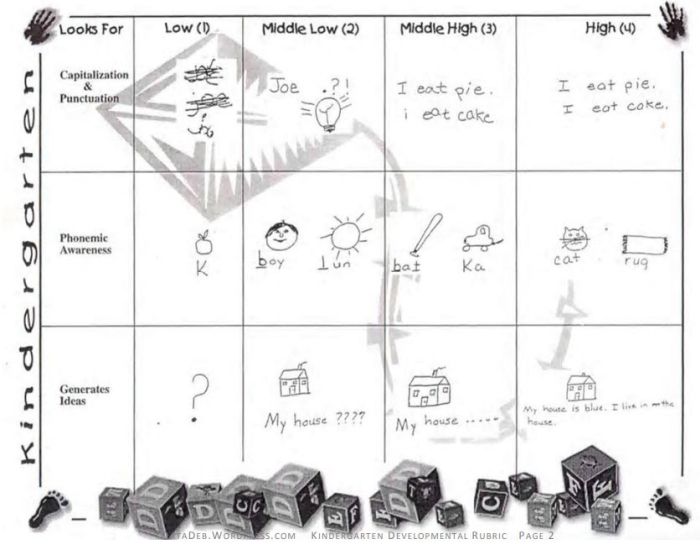

Developmental Rubric

Source: Deb’s Data Digest

A developmental rubric is a type of analytic rubric, but it’s used to assess progress along the way rather than to determine a final score on an assignment. The details in these rubrics help students understand their achievements, as well as highlight the specific skills they still need to improve.

Developmental rubrics leave off the point values and focus instead on giving feedback using the criteria and indicators of performance.

Learn how to use developmental rubrics here.

Ready to create your own rubrics? Find general tips on designing rubrics here. Then, check out these examples across all grades and subjects to inspire you.

Elementary School Rubric Examples

These elementary school rubric examples come from real teachers who use them with their students. Adapt them to fit your needs and grade level.

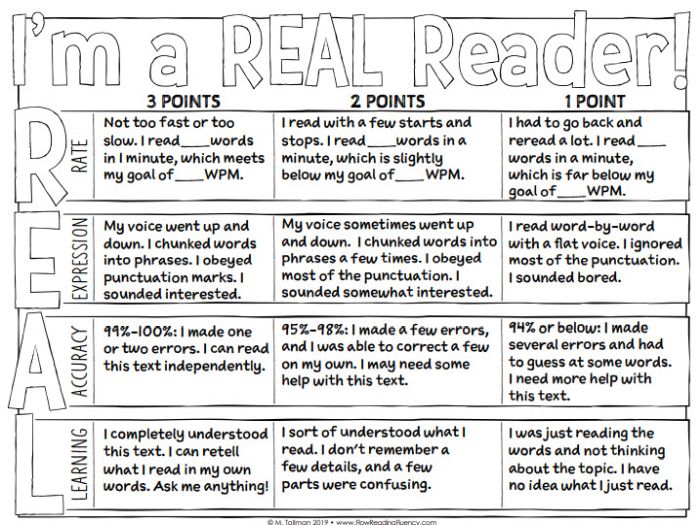

Reading Fluency Rubric

You can use this one as an analytic rubric by counting up points to earn a final score, or just to provide developmental feedback. There’s a second rubric page available specifically to assess prosody (reading with expression).

Learn more: Teacher Thrive

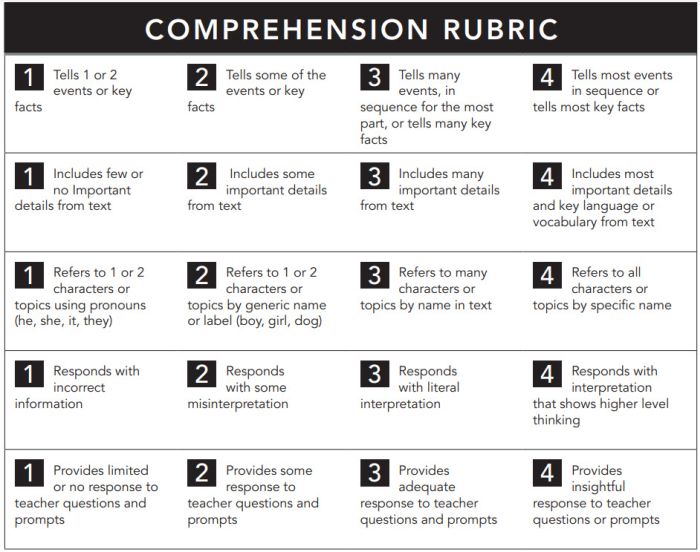

Reading Comprehension Rubric

The nice thing about this rubric is that you can use it at any grade level, for any text. If you like this style, you can get a reading fluency rubric here too.

Learn more: Pawprints Resource Center

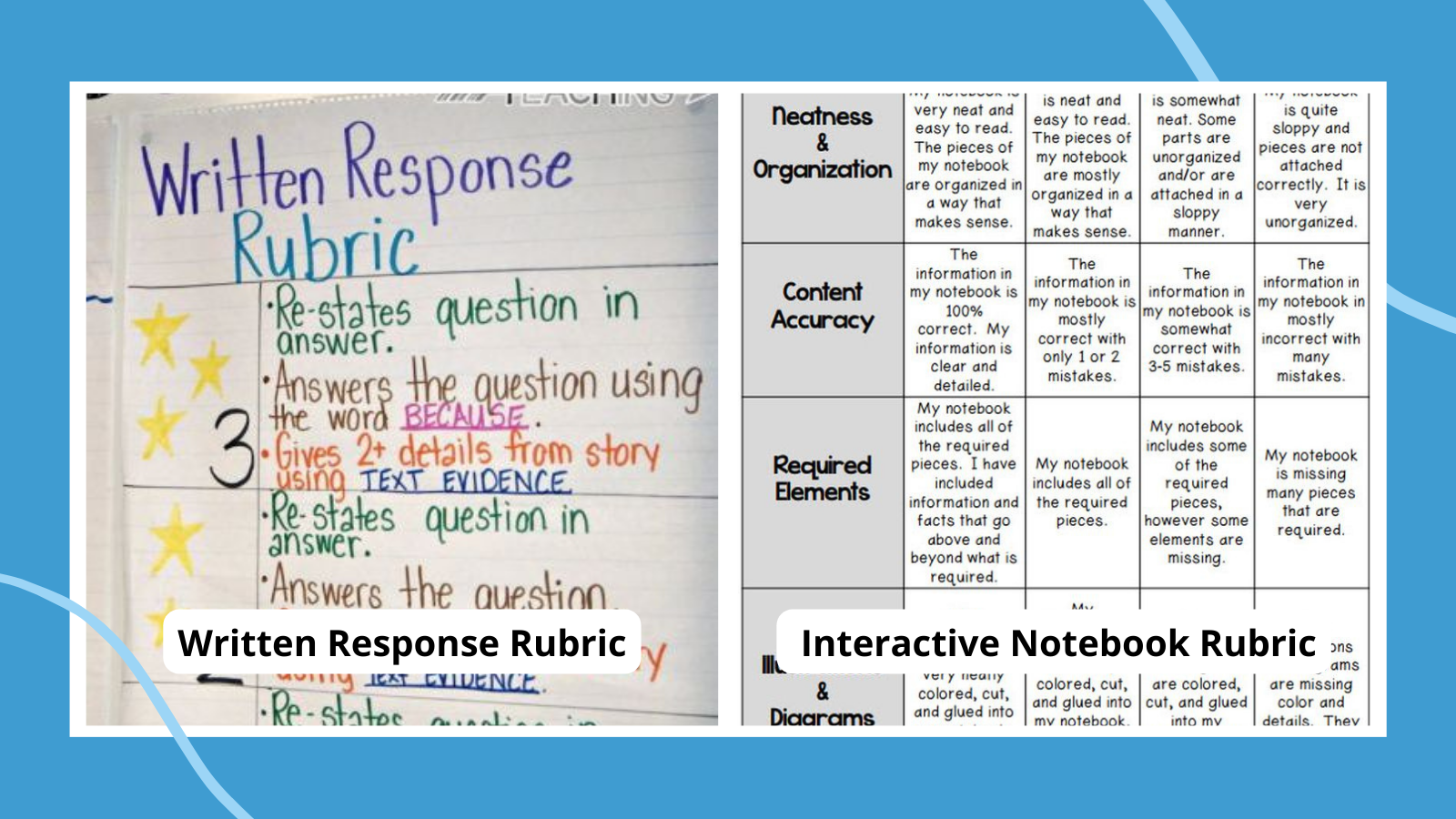

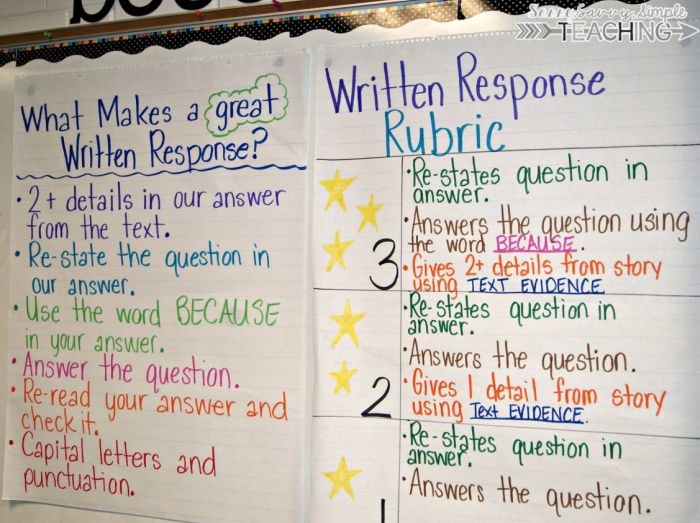

Written Response Rubric

Rubrics aren’t just for huge projects. They can also help kids work on very specific skills, like this one for improving written responses on assessments.

Learn more: Dianna Radcliffe: Teaching Upper Elementary and More

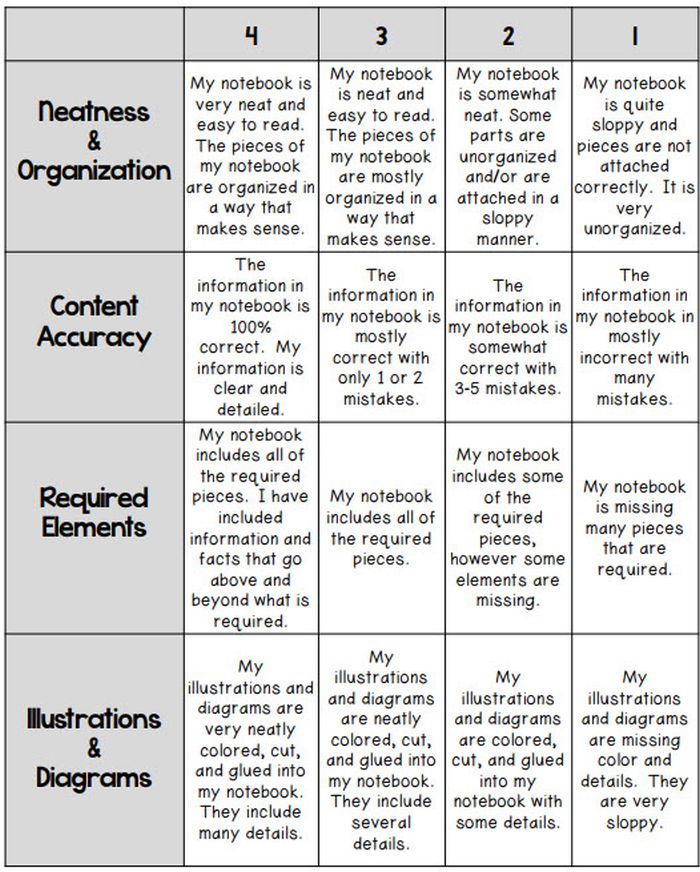

Interactive Notebook Rubric

If you use interactive notebooks as a learning tool, this rubric can help kids stay on track and meet your expectations.

Learn more: Classroom Nook

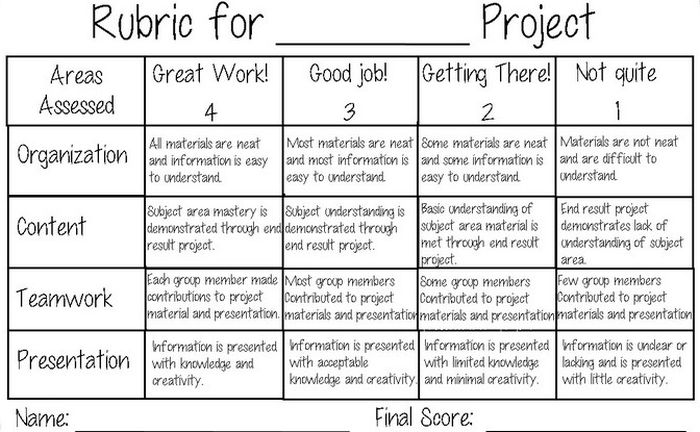

Project Rubric

Use this simple rubric as it is, or tweak it to include more specific indicators for the project you have in mind.

Learn more: Tales of a Title One Teacher

Behavior Rubric

Developmental rubrics are perfect for assessing behavior and helping students identify opportunities for improvement. Send these home regularly to keep parents in the loop.

Learn more: Teachers.net Gazette

Middle School Rubric Examples

In middle school, use rubrics to offer detailed feedback on projects, presentations, and more. Be sure to share them with students in advance, and encourage them to use them as they work so they’ll know if they’re meeting expectations.

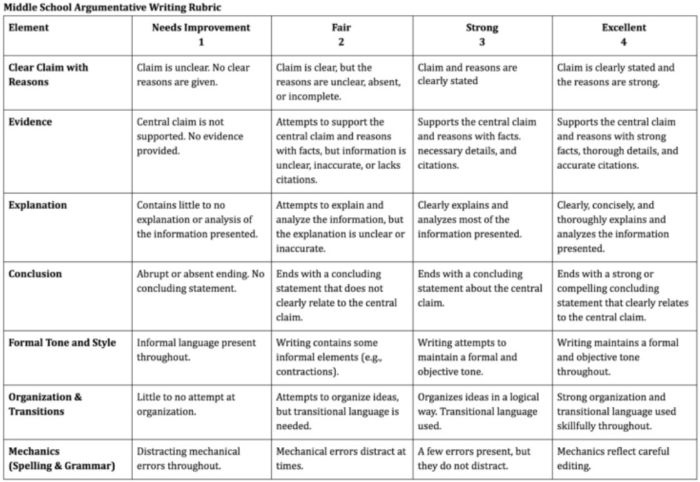

Argumentative Writing Rubric

Argumentative writing is a part of language arts, social studies, science, and more. That makes this rubric especially useful.

Learn more: Dr. Caitlyn Tucker

Role-Play Rubric

Role-plays can be really useful when teaching social and critical thinking skills, but it’s hard to assess them. Try a rubric like this one to evaluate and provide useful feedback.

Learn more: A Question of Influence

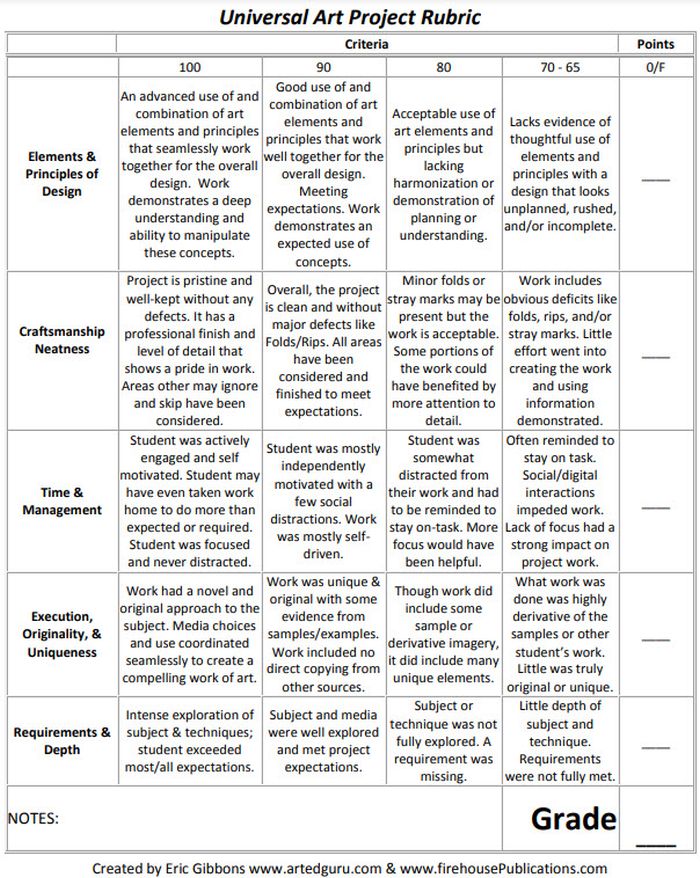

Art Project Rubric

Art is one of those subjects where grading can feel very subjective. Bring some objectivity to the process with a rubric like this.

Source: Art Ed Guru

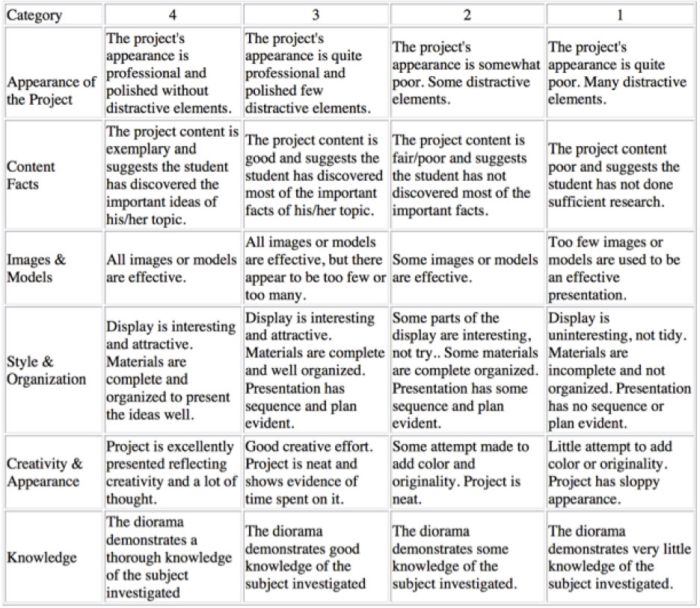

Diorama Project Rubric

You can use diorama projects in almost any subject, and they’re a great chance to encourage creativity. Simplify the grading process and help kids know how to make their projects shine with this scoring rubric.

Learn more: Historyourstory.com

Oral Presentation Rubric

Rubrics are terrific for grading presentations, since you can include a variety of skills and other criteria. Consider letting students use a rubric like this to offer peer feedback too.

Learn more: Bright Hub Education

High School Rubric Examples

In high school, it’s important to include your grading rubrics when you give assignments like presentations, research projects, or essays. Kids who go on to college will definitely encounter rubrics, so helping them become familiar with them now will help in the future.

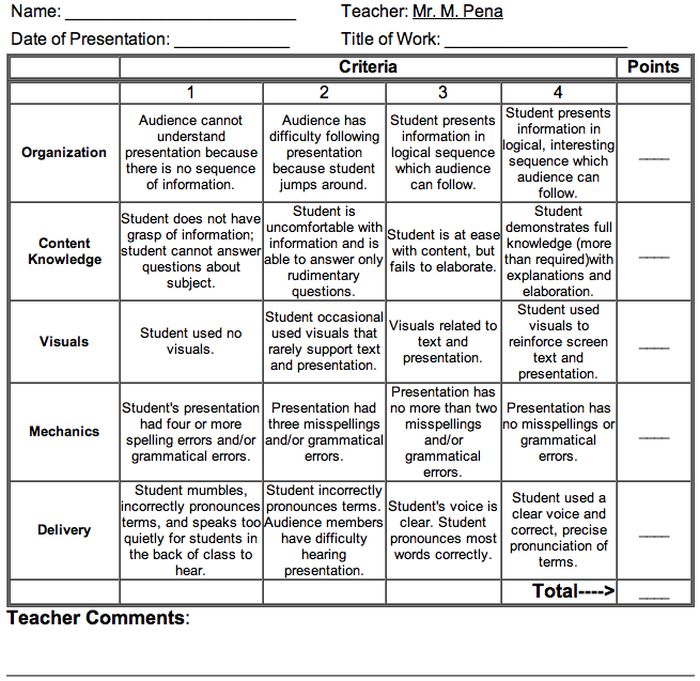

Presentation Rubric

Analyze a student’s presentation both for content and communication skills with a rubric like this one. If needed, create a separate one for content knowledge with even more criteria and indicators.

Learn more: Michael A. Pena Jr.

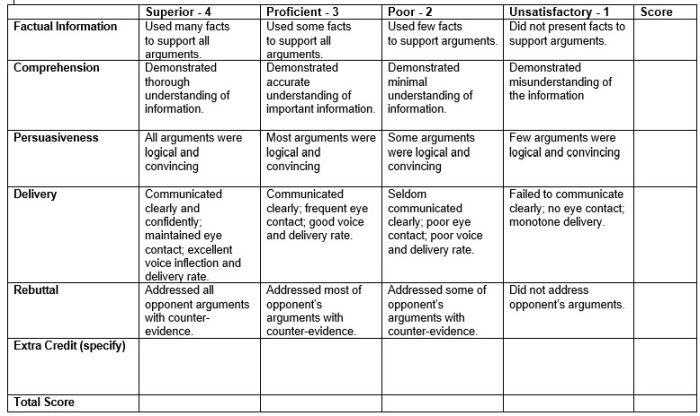

Debate Rubric

Debate is a valuable learning tool that encourages critical thinking and oral communication skills. This rubric can help you assess those skills objectively.

Learn more: Education World

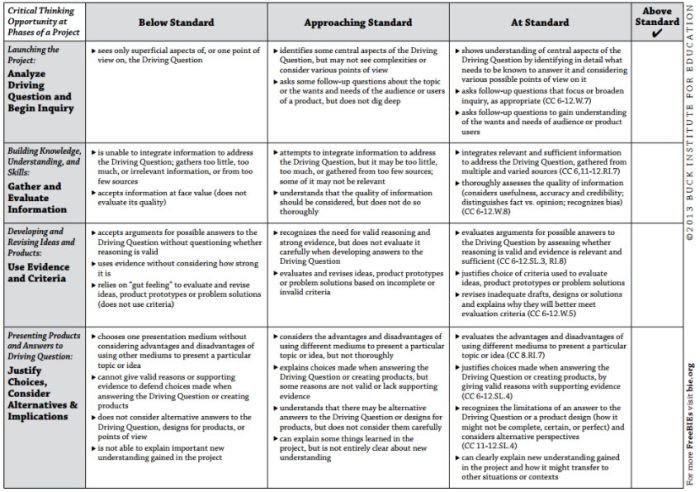

Project-Based Learning Rubric

Implementing project-based learning can be time-intensive, but the payoffs are worth it. Try this rubric to make student expectations clear and end-of-project assessment easier.

Learn more: Free Technology for Teachers

100-Point Essay Rubric

Need an easy way to convert a scoring rubric to a letter grade? This example for essay writing earns students a final score out of 100 points.

Learn more: Learn for Your Life
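Converting a 100-point rubric score to a letter grade is a simple lookup. The sketch below assumes the common 90/80/70/60 cutoffs, which are not taken from the linked rubric; substitute your own school's scale.

```python
def letter_grade(score, cutoffs=((90, "A"), (80, "B"), (70, "C"), (60, "D"))):
    """Map a 0-100 rubric score to a letter grade.

    The default cutoffs are an assumed, commonly used scale.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    # Cutoffs are listed from highest to lowest; return the first one met.
    for minimum, letter in cutoffs:
        if score >= minimum:
            return letter
    return "F"


print(letter_grade(85))  # prints "B"
```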

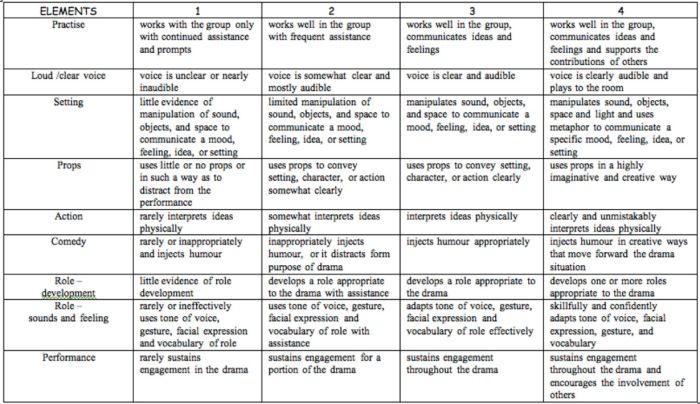

Drama Performance Rubric

If you’re unsure how to grade a student’s participation and performance in drama class, consider this example. It offers lots of objective criteria and indicators to evaluate.

Learn more: Chase March

How do you use rubrics in your classroom? Come share your thoughts and exchange ideas in the WeAreTeachers HELPLINE group on Facebook.

Plus, 25 of the best alternative assessment ideas.


Copyright © 2024. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256


SAT / ACT Prep Online Guides and Tips

ACT Writing Rubric: Full Analysis and Essay Strategies

ACT Writing

What time is it? It's essay time! In this article, I'm going to get into the details of the newly transformed ACT Writing by discussing the ACT essay rubric and how the essay is graded based on that. You'll learn what each item on the rubric means for your essay writing and what you need to do to meet those requirements.

feature image credit: A study in human nature, being an interpretation with character analysis chart of Hoffman's master painting "Christ in the temple"; (1920) by CircaSassy , used under CC BY 2.0 /Resized from original.

ACT Essay Grading: The Basics

If you've chosen to take the ACT Plus Writing, you'll have 40 minutes to write an essay (after completing the English, Math, Reading, and Science sections of the ACT, of course). Your essay will be evaluated by two graders, who each score your essay from 1-6 on each of 4 domains. The two graders' scores are added together, leading to scores out of 12 for each domain. Your Writing score is calculated by averaging your four domain scores, leading to a total ACT Writing score from 2-12.

NOTE: From September 2015 to June 2016, ACT Writing scores were calculated by adding together your domain scores and scaling to a score of 1-36; the change to an averaged 2-12 ACT Writing score was announced June 28, 2016 and put into action September 2016.
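The arithmetic behind the 2-12 score can be sketched as follows. The function name and the example grader scores are made up for illustration, and the rounding rule (averages ending in .5 round up to the nearest whole number) is an assumption about how ACT handles fractional averages.

```python
import math


def act_writing_score(grader_scores):
    """Compute domain totals and the overall ACT Writing score.

    grader_scores: four (grader_one, grader_two) pairs, one per domain,
    with each grader's score between 1 and 6.
    """
    if len(grader_scores) != 4:
        raise ValueError("expected scores for exactly four domains")
    domain_totals = []
    for first, second in grader_scores:
        if not (1 <= first <= 6 and 1 <= second <= 6):
            raise ValueError("each grader score must be from 1 to 6")
        domain_totals.append(first + second)  # each domain is scored 2-12
    average = sum(domain_totals) / 4
    # Assumption: fractional averages round to the nearest whole number,
    # with halves rounding up.
    return domain_totals, math.floor(average + 0.5)


totals, overall = act_writing_score([(4, 4), (3, 4), (4, 5), (4, 4)])
print(totals, overall)  # prints "[8, 7, 9, 8] 8"
```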

The Complete ACT Grading Rubric

Based on ACT, Inc's stated grading criteria, I've gathered all the relevant essay-grading criteria into a chart. The information itself is available on the ACT's website, and there's more general information about each of the domains here. The columns in this rubric are titled as per the ACT's own domain areas, with the addition of another category that I named ("Mastery Level").

ACT Writing Rubric: Item-by-Item Breakdown

Whew. That rubric might be a little overwhelming—there's so much information to process! Below, I've broken down the essay rubric by domain, with examples of what a 3- and a 6-scoring essay might look like.

Ideas and Analysis

The Ideas and Analysis domain is the rubric area most intimately linked with the basic ACT essay task itself. Here's what the ACT website has to say about this domain:

Scores in this domain reflect the ability to generate productive ideas and engage critically with multiple perspectives on the given issue. Competent writers understand the issue they are invited to address, the purpose for writing, and the audience. They generate ideas that are relevant to the situation.

Based on this description, I've extracted the four key things you need to do in your essay to score well in the Ideas and Analysis domain.

#1: Choose a perspective on this issue and state it clearly.

#2: Compare at least one other perspective to the perspective you have chosen.

#3: Demonstrate understanding of the ways the perspectives relate to one another.

#4: Analyze the implications of each perspective you choose to discuss.

There's no cool acronym, sorry. I guess a case could be made for "ACCE," but I wanted to list the points in the order of importance, so "CEAC" it is.

Fortunately, the ACT Writing Test provides you with the three perspectives to analyze and choose from, which will save you some of the time of "generating productive ideas." In addition, "analyzing each perspective" does not mean that you need to argue from each of the points of view. Instead, you need to choose one perspective to argue as your own and explain how your point of view relates to at least one other perspective by evaluating how correct the perspectives you discuss are and analyzing the implications of each perspective.

Note: While it is technically allowable for you to come up with a fourth perspective as your own and to then discuss that point of view in relation to another perspective, we do not recommend it. 40 minutes is already a pretty short time to discuss and compare multiple points of view in a thorough and coherent manner. Coming up with new, clearly articulated perspectives takes time that could be better spent devising a thorough analysis of the relationship between multiple perspectives.

To get deeper into what things fall in the Ideas and Analysis domain, I'll use a sample ACT Writing prompt and the three perspectives provided:

Many of the goods and services we depend on daily are now supplied by intelligent, automated machines rather than human beings. Robots build cars and other goods on assembly lines, where once there were human workers. Many of our phone conversations are now conducted not with people but with sophisticated technologies. We can now buy goods at a variety of stores without the help of a human cashier. Automation is generally seen as a sign of progress, but what is lost when we replace humans with machines? Given the accelerating variety and prevalence of intelligent machines, it is worth examining the implications and meaning of their presence in our lives.

Perspective One: What we lose with the replacement of people by machines is some part of our own humanity. Even our mundane daily encounters no longer require from us basic courtesy, respect, and tolerance for other people.

Perspective Two: Machines are good at low-skill, repetitive jobs, and at high-speed, extremely precise jobs. In both cases they work better than humans. This efficiency leads to a more prosperous and progressive world for everyone.

Perspective Three: Intelligent machines challenge our long-standing ideas about what humans are or can be. This is good because it pushes both humans and machines toward new, unimagined possibilities.

First, in order to "clearly state your own perspective on the issue," you need to figure out what your point of view, or perspective, on this issue is going to be. For the sake of argument, let's say that you agree the most with the second perspective. An essay that scores a 3 in this domain might simply restate this perspective:

I agree that machines are good at low-skill, repetitive jobs, and at high-speed, extremely precise jobs. In both cases they work better than humans. This efficiency leads to a more prosperous and progressive world for everyone.

In contrast, an essay scoring a 6 in this domain would likely have a more complex point of view (with what the rubric calls "nuance and precision in thought and purpose"):

Machines will never be able to replace humans entirely, as creativity is not something that can be mechanized. Because machines can perform delicate and repetitive tasks with precision, however, they are able to take over for humans with regards to low-skill, repetitive jobs and high-skill, extremely precise jobs. This then frees up humans to do what we do best—think, create, and move the world forward.

Next, you must compare at least one other perspective to your perspective throughout your essay, including in your initial argument. Here's what a 3-scoring essay's argument would look like:

I agree that machines are good at low-skill, repetitive jobs, and at high-speed, extremely precise jobs. In both cases they work better than humans. This efficiency leads to a more prosperous and progressive world for everyone. Machines do not cause us to lose our humanity or challenge our long-standing ideas about what humans are or can be.

And here, in contrast, is what a 6-scoring essay's argument (that includes multiple perspectives) would look like:

Machines will never be able to replace humans entirely, as creativity is not something that can be mechanized, which means that our humanity is safe. Because machines can perform delicate and repetitive tasks with precision, however, they are able to take over for humans with regards to low-skill, repetitive jobs and high-skill, extremely precise jobs. Rather than forcing us to challenge our ideas about what humans are or could be, machines simply allow us to BE, without distractions. This then frees up humans to do what we do best—think, create, and move the world forward.

You also need to demonstrate a nuanced understanding of the way in which the two perspectives relate to each other. A 3-scoring essay in this domain would likely be absolute, stating that Perspective Two is completely correct, while the other two perspectives are absolutely incorrect. By contrast, a 6-scoring essay in this domain would provide a more insightful context within which to consider the issue:

In the future, machines might lead us to lose our humanity; alternatively, machines might lead us to unimaginable pinnacles of achievement. I would argue, however, that projecting possible futures does not make them true, and that the evidence we have at present supports the perspective that machines are, above all else, efficient and effective at completing repetitive and precise tasks.

Finally, to analyze the perspectives, you need to consider each aspect of each perspective. In the case of Perspective Two, this means you must discuss that machines are good at two types of jobs, that they're better than humans at both types of jobs, and that their efficiency creates a better world. The analysis in a 3-scoring essay is usually "simplistic or somewhat unclear." By contrast, the analysis of a 6-scoring essay "examines implications, complexities and tensions, and/or underlying values and assumptions."

- Choose a perspective that you can support.

- Compare at least one other perspective to the perspective you have chosen.

- Demonstrate understanding of the ways the perspectives relate to one another.

- Analyze the implications of each perspective you choose to discuss.

To score well on the ACT essay overall, however, it's not enough to just state your opinions about each part of the perspective; you need to actually back up your claims with evidence to develop your own point of view. This leads straight into the next domain: Development and Support.

Development and Support

Another important component of your essay is that you explain your thinking. While it's obviously important to clearly state what your ideas are in the first place, the ACT essay requires you to demonstrate evidence-based reasoning. As per the description on ACT.org [bolding mine]:

Scores in this domain reflect the ability to discuss ideas, offer rationale, and bolster an argument. Competent writers explain and explore their ideas, discuss implications, and illustrate through examples. They help the reader understand their thinking about the issue.

"Machines are good at low-skill, repetitive jobs, and at high-speed, extremely precise jobs. In both cases they work better than humans. This efficiency leads to a more prosperous and progressive world for everyone."

In your essay, you might start out by copying the perspective directly into your essay as your point of view, which is fine for the Ideas and Analysis domain. To score well in the Development and Support domain and develop your point of view with logical reasoning and detailed examples, however, you're going to have to come up with reasons for why you agree with this perspective and examples that support your thinking.

Here's an example from an essay that would score a 3 in this domain:

Machines are good at low-skill, repetitive jobs and at high-speed, extremely precise jobs. In both cases, they work better than humans. For example, machines are better at printing things quickly and clearly than people are. Prior to the invention of the printing press by Gutenberg people had to write everything by hand. The printing press made it faster and easier to get things printed because things didn't have to be written by hand all the time. In the world today we have even better machines like laser printers that print things quickly.

Essays scoring a 3 in this domain tend to have relatively simple development and tend to be overly general, with imprecise or repetitive reasoning or illustration. Contrast this with an example from an essay that would score a 6:

Machines are good at low-skill, repetitive jobs and at high-speed, extremely precise jobs. In both cases, they work better than humans. Take, for instance, the example of printing. As a composer, I need to be able to create many copies of my sheet music to give to my musicians. If I were to copy out each part by hand, it would take days, and would most likely contain inaccuracies. On the other hand, my printer (a machine) is able to print out multiple copies of parts with extreme precision. If it turns out I made an error when I was entering in the sheet music onto the computer (another machine), I can easily correct this error and print out more copies quickly.

The above example of the importance of machines to composers uses "an integrated line of skillful reasoning and illustration" to support my claim ("Machines are good at low-skill, repetitive jobs and at high-speed, extremely precise jobs. In both cases, they work better than humans"). To develop this example further (and incorporate the "This efficiency leads to a more prosperous and progressive world for everyone" facet of the perspective), I would need to expand my example to explain why it's so important that multiple copies of precisely replicated documents be available, and how this affects the world.


Organization

Essay organization has always been integral to doing well on the ACT essay, so it makes sense that the ACT Writing rubric has an entire domain devoted to this. The organization of your essay refers not just to the order in which you present your ideas in the essay, but also to the order in which you present your ideas in each paragraph. Here's the formal description from the ACT website:

Scores in this domain reflect the ability to organize ideas with clarity and purpose. Organizational choices are integral to effective writing. Competent writers arrange their essay in a way that clearly shows the relationship between ideas, and they guide the reader through their discussion.

Making sure your essay is logically organized relates back to the "development" part of the previous domain. As the above description states, you can't just throw examples and information into your essay willy-nilly, without any regard for the order; part of constructing and developing a convincing argument is making sure it flows logically. A lot of this organization should happen while you are in the planning phase, before you even begin to write your essay.

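Let's go back to the machine intelligence essay example again. I've decided to argue for Perspective Two, which is:

"Machines are good at low-skill, repetitive jobs, and at high-speed, extremely precise jobs. In both cases they work better than humans. This efficiency leads to a more prosperous and progressive world for everyone."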

An essay that scores a 3 in this domain would show a "basic organizational structure," which is to say that each perspective analyzed would be discussed in its own paragraph, "with most ideas logically grouped." A possible organization for a 3-scoring essay:

An essay that scores a 6 in this domain, on the other hand, has a lot more to accomplish. The "controlling idea or purpose" behind the essay should be clearly expressed in every paragraph, and ideas should be ordered in a logical fashion so that there is a clear progression from the beginning to the end. Here's a possible organization for a 6-scoring essay:

In this example, the unifying idea is that machines are helpful (and it's mentioned in each paragraph) and the progression of ideas makes more sense. This is certainly not the only way to organize an essay on this particular topic, or even using this particular perspective. Your essay does, however, have to be organized, rather than consist of a bunch of ideas thrown together.

Here are my Top 5 ACT Writing Organization Rules to follow:

#1: Be sure to include an introduction (with your thesis stating your point of view), paragraphs in which you make your case, and a conclusion that sums up your argument.

#2: When planning your essay, make sure to present your ideas in an order that makes sense (and follows a logical progression that will be easy for the grader to follow).

#3: Make sure that you unify your essay with one main idea. Do not switch arguments partway through your essay.

#4: Don't write everything in one huge paragraph. If you're worried you're going to run out of space to write and can't make your handwriting any smaller and still legible, you can try using a paragraph symbol, ¶, at the beginning of each paragraph as a last resort to show the organization of your essay.

#5: Use transitions between paragraphs (usually the last line of one paragraph and the first line of the next) to "strengthen the relationships among ideas" (source). This means going above and beyond "First of all...Second...Lastly" at the beginning of each paragraph. Instead, use the transitions between paragraphs as an opportunity to describe how each paragraph relates to your main argument.

Language Use

The final domain on the ACT Writing rubric is Language Use and Conventions. This is the domain that covers grammar, punctuation, and general sentence structure issues. Here's what the ACT website has to say about Language Use:

Scores in this domain reflect the ability to use written language to convey arguments with clarity. Competent writers make use of the conventions of grammar, syntax, word usage, and mechanics. They are also aware of their audience and adjust the style and tone of their writing to communicate effectively.

I tend to think of this as the "be a good writer" category, since many of the standards covered in the above description are ones that good writers will automatically meet in their writing. On the other hand, this is probably the area non-native English speakers will struggle with the most, as you must have a fairly solid grasp of English to score above a 2 on this domain. The good news is that by reading this article, you're already one step closer to improving your "Language Use" on ACT Writing.

There are three main parts of this domain:

#1: Grammar, Usage, and Mechanics

#2: Sentence Structure

#3: Vocabulary and Word Choice

I've listed them (and will cover them) from lowest to highest level. If you're struggling with multiple areas, I highly recommend starting out with the lowest-level issue, as the components tend to build on each other. For instance, if you're struggling with grammar and usage, you need to focus on fixing that before you start to think about precision of vocabulary/word choice.

Grammar, Usage, and Mechanics

At the most basic level, you need to be able to "effectively communicate your ideas in standard written English" (ACT.org). First and foremost, this means that your grammar and punctuation need to be correct. On ACT Writing, it's all right to make a few minor errors if the meaning is clear, even on essays that score a 6 in the Language Use domain; however, the more errors you make, the more your score will drop.

Here's an example from an essay that scored a 3 in Language Use:

Machines are good at doing there jobs quickly and precisely. Also because machines aren't human or self-aware they don't get bored so they can do the same thing over & over again without getting worse.

While the meaning of the sentences is clear, there are several errors: the first sentence uses "there" instead of "their," the second sentence is a run-on sentence, and the second sentence also uses the abbreviation "&" in place of "and." Now take a look at an example from a 6-scoring essay:

Machines excel at performing their jobs both quickly and precisely. In addition, since machines are not self-aware they are unable to get "bored." This means that they can perform the same task over and over without a decrease in quality.

This example solves the abbreviation and "there/their" issues. The second sentence is missing a comma (after "self-aware"), but the worst of the run-on sentence issue is absent.

Our Complete Guide to ACT Grammar might be helpful if you just need a general refresher on grammar rules. In addition, we have several articles that focus on specific grammar rules as they are tested on ACT English; while the specific ways in which ACT English tests you on these rules aren't something you'll need to know for the essay, the explanations of the grammar rules themselves are quite helpful.

Sentence Structure

Once you've gotten down basic grammar, usage, and mechanics, you can turn your attention to sentence structure. Here's an example of what a 3-scoring essay in Language Use (based on sentence structure alone) might look like:

Machines are more efficient than humans at many tasks. Machines are not causing us to lose our humanity. Instead, machines help us to be human by making things more efficient so that we can, for example, feed the needy with technological advances.

The sentence structures in the above example are not particularly varied (two sentences in a row start with "Machines are"), and the last sentence has a very complicated/convoluted structure, which makes it hard to understand. For comparison, here's a 6-scoring essay:

Machines are more efficient than humans at many tasks, but that does not mean that machines are causing us to lose our humanity. In fact, machines may even assist us in maintaining our humanity by providing more effective and efficient ways to feed the needy.

For whatever reason, I find that when I'm under time pressure, my sentences maintain variety in their structures but end up getting really awkward and strange. A real-life example: once I described a method of counteracting dementia as "supporting persons of the elderly persuasion" in a hastily written psychology paper. I've found the best ways to counteract this are as follows:

#1: Look over what you've written and change any weird wordings that you notice.

#2: If you're just writing a practice essay, get a friend/teacher/relative who is good at writing (in English) to look over what you've written and point out issues (this is how my own awkward wording was caught before I handed in the paper). This point obviously does not apply when you're actually taking the ACT, but it is very helpful to ask someone else to look over any practice essays you write and point out issues you may not notice yourself.

Vocabulary and Word Choice