Data Modeling in Action: Case Studies from Real-World Projects

Data modeling is the art of creating a representation of a complex system, which helps organizations make informed decisions. The practical value of data modeling becomes most evident when it is used to solve real-world problems.

In this article, we present case studies from real-world projects that illustrate the power of data modeling. From modernizing a financial institution's data management to improving a manufacturing production line, these stories show how data modeling can make a difference in the success of your projects. So buckle up and get ready to learn how data modeling can transform your business operations.

Case Study 1: Financial Institution Data Modeling

A financial institution is an excellent place to start when developing a comprehensive approach to data modeling. In this case study, we'll dive into how one financial institution created an effective data modeling strategy.

The primary objective for this financial institution was to modernize its existing data management systems. This entailed identifying key areas that required improvement, such as data structure, integration, and management. The next step was to develop a data modeling strategy that could streamline processes and minimize errors.

The strategy was built around a data modeling tool that could automate much of the work of creating data models. The tool could generate code directly from the data models, which cut development time and helped ensure consistency across the organization.
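
To make "generating code from a data model" concrete, here is a minimal sketch in Python. The case study does not name the tool or show its output, so the entities (`customer`, `account`), their columns, and the `generate_ddl` helper are all hypothetical. Commercial modeling tools generate much richer DDL than this.

```python
import sqlite3

# Hypothetical model: entity name -> list of (column, SQL type, constraints).
MODEL: dict[str, list[tuple[str, str, str]]] = {
    "customer": [
        ("customer_id", "INTEGER", "PRIMARY KEY"),
        ("full_name", "TEXT", "NOT NULL"),
    ],
    "account": [
        ("account_id", "INTEGER", "PRIMARY KEY"),
        ("customer_id", "INTEGER", "NOT NULL REFERENCES customer(customer_id)"),
        ("balance_cents", "INTEGER", "NOT NULL DEFAULT 0"),
    ],
}


def generate_ddl(model: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render one CREATE TABLE statement per entity, in the order the model lists them."""
    statements = []
    for table, columns in model.items():
        column_lines = ",\n".join(
            f"    {name} {sql_type} {constraints}".rstrip()
            for name, sql_type, constraints in columns
        )
        statements.append(f"CREATE TABLE {table} (\n{column_lines}\n);")
    return "\n\n".join(statements)


if __name__ == "__main__":
    ddl = generate_ddl(MODEL)
    print(ddl)
    # Sanity check: the generated DDL should be accepted by a real database engine.
    sqlite3.connect(":memory:").executescript(ddl)
```

Every table is rendered from the same model. A naming or type change made once in the model therefore shows up in every generated script, which is the consistency benefit the case study describes.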

The financial institution also made sure to involve key stakeholders from across the company in the modeling process. This allowed the team to identify and address potential issues and ensure that the final data models fully met the needs of all stakeholders.

Additionally, the institution recognized the need to keep the data models up to date as the needs of the business changed. To accomplish this, they established a process for ongoing maintenance of the models. This involved regular reviews of the models and adjustments as needed.

Overall, the financial institution's data modeling strategy was a success. It helped the institution modernize its data management systems, streamline processes, and minimize errors. By involving key stakeholders and implementing ongoing maintenance processes, the institution created a comprehensive data modeling approach that met its needs.

Case Study 2: E-Commerce Data Modeling

In this case study, we examine a hypothetical e-commerce company and its data modeling needs. The company, which sells various products online, has a constantly growing volume of data that needs to be managed effectively.

To address this issue, the company decides to implement a data warehouse that will serve as a central repository for all its data. The warehouse will consist of several tables, including:

- Product Table: containing all the products being sold by the company, including their name, description, price, and category.

- Customer Table: containing all the customers who have made purchases, including their name, address, and contact information.

- Order Table: containing all the orders placed, including the customer who placed the order, the product(s) ordered, and the order status.

Other tables will contain data related to inventory, shipping, payments, and promotions.
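
The warehouse tables listed above can be sketched as a relational schema. This is a minimal example using Python's built-in `sqlite3` module, not the company's actual design. All column names and sample rows are hypothetical. The `order_item` table is an assumption as well: because the Order Table has to record "the product(s) ordered," a relational model usually stores each product in an order as its own line-item row.

```python
import sqlite3

# Hypothetical column names; the case study only lists what each table contains.
SCHEMA = """
CREATE TABLE product (
    product_id  INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    description TEXT,
    price_cents INTEGER NOT NULL,
    category    TEXT
);
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    address     TEXT,
    email       TEXT
);
-- Named "orders" because ORDER is a reserved word in SQL.
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    status      TEXT NOT NULL
);
-- One row per product in an order, so a single order can hold several products.
CREATE TABLE order_item (
    order_id   INTEGER NOT NULL REFERENCES orders(order_id),
    product_id INTEGER NOT NULL REFERENCES product(product_id),
    quantity   INTEGER NOT NULL CHECK (quantity > 0),
    PRIMARY KEY (order_id, product_id)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO product VALUES (?, ?, ?, ?, ?)",
    [(1, "Mug", "Ceramic mug", 850, "Kitchen"), (2, "Tea", "Loose-leaf tea", 1200, "Grocery")],
)
conn.execute("INSERT INTO customer VALUES (1, 'Ada', '1 Main St', 'ada@example.com')")
conn.execute("INSERT INTO orders VALUES (100, 1, 'shipped')")
conn.executemany("INSERT INTO order_item VALUES (?, ?, ?)", [(100, 1, 2), (100, 2, 1)])

# Reassemble an order from the normalized tables: customer, line items, and prices.
order_totals = conn.execute("""
    SELECT o.order_id, c.name, o.status, SUM(oi.quantity * p.price_cents) AS total_cents
    FROM orders AS o
    JOIN customer AS c ON c.customer_id = o.customer_id
    JOIN order_item AS oi ON oi.order_id = o.order_id
    JOIN product AS p ON p.product_id = oi.product_id
    GROUP BY o.order_id, c.name, o.status
""").fetchall()
print(order_totals)  # [(100, 'Ada', 'shipped', 2900)]
```

Prices are stored as integer cents so that totals are exact. The inventory, shipping, payment, and promotion tables would follow the same pattern, each linking back to these tables through foreign keys.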

One of the key challenges facing the e-commerce company is ensuring that its data is accurate and up-to-date. To address this issue, the company plans to use several data validation and verification techniques, including:

- Regular data scrubbing: removing any duplicate data or incorrect entries.

- Data mining: analyzing the data to identify patterns or trends, including unusual values that may point to errors.

- Automated data quality checks: using software to perform regular checks and alert staff if any anomalies are detected.
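
Two of these techniques, scrubbing and automated quality checks, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the company's tooling. The record layout, the set of valid order statuses, and the helper names `scrub` and `quality_check` are all hypothetical.

```python
from dataclasses import dataclass

# Order statuses we treat as valid; this list is an assumption for the example.
VALID_STATUSES = {"pending", "paid", "shipped", "delivered", "cancelled"}


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: int
    name: str
    email: str


def scrub(records: list[CustomerRecord]) -> list[CustomerRecord]:
    """Data scrubbing: keep the first record per (name, email) after normalizing
    case and whitespace, and drop records whose email has no '@'."""
    seen: set[tuple[str, str]] = set()
    clean = []
    for record in records:
        key = (record.name.strip().lower(), record.email.strip().lower())
        if key in seen or "@" not in record.email:
            continue
        seen.add(key)
        clean.append(record)
    return clean


def quality_check(orders: list[dict], known_customer_ids: set[int]) -> list[str]:
    """Automated check: return one alert message per anomaly found."""
    alerts = []
    for order in orders:
        if order["customer_id"] not in known_customer_ids:
            alerts.append(f"order {order['order_id']}: unknown customer {order['customer_id']}")
        if order["status"] not in VALID_STATUSES:
            alerts.append(f"order {order['order_id']}: unexpected status {order['status']!r}")
    return alerts


customers = [
    CustomerRecord(1, "Ada Lovelace", "ada@example.com"),
    CustomerRecord(2, "ada lovelace ", "ADA@example.com"),  # duplicate once normalized
    CustomerRecord(3, "Bob", "not-an-email"),               # invalid email
]
clean = scrub(customers)
orders = [
    {"order_id": 10, "customer_id": 1, "status": "shipped"},
    {"order_id": 11, "customer_id": 9, "status": "lost"},
]
alerts = quality_check(orders, {c.customer_id for c in clean})
for alert in alerts:
    print(alert)  # in practice, this is where staff would be notified
```

In a real pipeline, checks like these would run on a schedule against the warehouse tables. The alerts would go to a monitoring channel rather than standard output.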

By implementing a robust data modeling strategy, the e-commerce company will be able to effectively manage its growing volume of data and make better-informed decisions based on accurate, up-to-date information.

Case Study 3: Healthcare Data Modeling

Healthcare data modeling is a crucial aspect of healthcare management. It involves creating database structures that accurately represent healthcare data to facilitate data analysis, decision-making, and the management of patient care. Here are the key points of this case study:

- The healthcare industry is data-intensive, which makes an efficient data modeling process essential.

- In this case study, the focus was to develop a data model that captures patient information, hospital procedures, and necessary medical personnel data.

- The team of data modelers collaborated with different healthcare stakeholders to gather diverse healthcare-related information.

- They utilized entities relevant to the medical field to create the data model, such as patients, physicians, procedures, diagnoses, and medications.

- The resulting data model offered an all-encompassing view of the healthcare system and improved communication between physicians and patients.

- The data model's success led to more efficient operations, optimization of healthcare outcomes, improved patient care, and an overall positive impact on the healthcare system.

- Furthermore, the data model's flexibility enabled easy data migration to different health information systems to support healthcare interoperability.

- Healthcare data modeling not only facilitates medical procedures but also contributes to strategic decision-making in the industry's administration and planning.

In conclusion, healthcare data modeling has proven to be an essential component of healthcare management. Its integration into healthcare systems, as in Case Study 3, contributed to smoother operations, better communication, better-informed medical decision-making, and improved healthcare outcomes.

Case Study 4: Manufacturing Data Modeling

Manufacturing data modeling is a technique used by manufacturing companies to improve their operations. A case study of a manufacturing company's data modeling project can be very informative.

Such a case study may include information about the company's goals, its challenges, and the approach taken to improve the manufacturing process. It may also describe the data modeling tools and techniques used, such as entity-relationship modeling and process modeling.

The manufacturing data model may be used to identify inefficiencies in the manufacturing process, such as bottlenecks and supplier delays. By analyzing this data, the company may be able to make changes to its operations and reduce costs.

One possible example of a manufacturing data modeling project might involve the analysis of the production line process. The data model could capture the different processes involved in assembling products, and identify where adjustments could be made to improve efficiency and reduce waste.
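
A production-line data model of this kind can be sketched very simply. The example below is a toy under explicit assumptions, not a model from a real project. The station names, cycle times, and scrap rates are made up. It also assumes a simple serial line with no buffers between stations, so the slowest station caps throughput.

```python
from dataclasses import dataclass


@dataclass
class Station:
    name: str
    cycle_time_s: float  # seconds needed per unit at this step
    scrap_rate: float    # fraction of units scrapped at this step (waste)


# Hypothetical serial production line; all numbers are illustrative.
LINE = [
    Station("cutting", 40.0, 0.01),
    Station("welding", 65.0, 0.03),
    Station("painting", 50.0, 0.02),
    Station("assembly", 55.0, 0.00),
]


def bottleneck(line: list[Station]) -> Station:
    # In a serial line without buffers, the slowest station limits overall throughput.
    return max(line, key=lambda s: s.cycle_time_s)


def units_per_hour(line: list[Station]) -> float:
    return 3600 / bottleneck(line).cycle_time_s


def overall_yield(line: list[Station]) -> float:
    # Fraction of started units that survive every step.
    result = 1.0
    for station in line:
        result *= 1 - station.scrap_rate
    return result


print(f"bottleneck: {bottleneck(LINE).name}")
print(f"throughput: {units_per_hour(LINE):.1f} units/hour")
print(f"yield: {overall_yield(LINE):.1%}")
```

With the line captured as data, you can test adjustments directly. For example, speeding up the bottleneck station raises throughput until the next-slowest station becomes the limit.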

Ultimately, a successful manufacturing data modeling project can lead to improved productivity, better product quality, and cost savings for the company.

Case Study 5: Education Data Modeling

In Case Study 5, we explore data modeling in the realm of education. Here are some key points about this particular case study:

- Data modeling for education involves organizing and analyzing data related to student performance, teacher effectiveness, and institutional program effectiveness.

- In this case study, we will look at a project that involved creating a data model for a higher education institution in order to improve student outcomes and retention rates.

- The project involved collecting and integrating data from a variety of sources, including student records, course evaluations, and surveys of both students and faculty.

- After the data was collected and organized, the team used various modeling techniques to identify patterns and correlations within the data.

- One key finding of the project was that certain courses and instructors were consistently associated with higher student retention rates.

- The team used this information to recommend to the institution which programs and instructors appeared most effective at retaining students.

- Overall, the project highlights the importance of data modeling in education, as it can help institutions improve student outcomes and identify areas for improvement.
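
The retention analysis described above can be sketched as a simple aggregation over integrated student records. This is a minimal Python example. The record layout and the course codes are hypothetical, and so is the `min_students` threshold, which guards against drawing conclusions from tiny classes.

```python
from collections import defaultdict

# Hypothetical integrated records: (student_id, course, retained_next_year)
ENROLLMENTS = [
    ("s1", "MATH101", True),
    ("s2", "MATH101", True),
    ("s3", "MATH101", False),
    ("s1", "HIST110", True),
    ("s4", "HIST110", False),
    ("s5", "HIST110", False),
]


def retention_by_course(enrollments, min_students=1):
    """Return {course: share of enrolled students retained}, skipping small courses."""
    counts = defaultdict(lambda: [0, 0])  # course -> [retained, total]
    for _student, course, retained in enrollments:
        counts[course][0] += int(retained)
        counts[course][1] += 1
    return {
        course: retained / total
        for course, (retained, total) in counts.items()
        if total >= min_students
    }


rates = retention_by_course(ENROLLMENTS)
for course, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{course}: {rate:.0%}")
```

A table like this shows association, not causation. Students who choose certain courses may differ in other ways, which is why such findings are best treated as input to recommendations rather than as proof.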

Final thoughts

This article outlined several case studies showcasing the practical application of data modeling. The first case study showed how a financial institution used data modeling to modernize its data management systems, streamline processes, and minimize errors. The second examined how a hypothetical e-commerce company could use a data warehouse and data validation techniques to manage a growing volume of data.

The third case study described a healthcare data model that connected patient, physician, procedure, diagnosis, and medication data to improve patient care and interoperability. The fourth explored how manufacturing data models can expose inefficiencies such as production-line bottlenecks and supplier delays. The fifth showed how a higher education institution used data modeling to identify the courses and instructors associated with higher student retention.

Overall, these case studies demonstrate the value and effectiveness of data modeling in solving complex business problems.

What Is Data Modeling? Tips, Examples And Use Cases

May 4, 2023

Data modeling can be considered the foundation of data analytics and data science. It gives meaning to the enormous amount of data that organizations produce by creating a well-organized representation of that data. This helps organizations understand and analyze the data and gain better insights.

Data is now used in more domains than any person could track. It powers personalized social media advertising, helps researchers discover treatments for numerous diseases, and much more. Software can read data at scale, but data yields accurate, meaningful results only when it is well organized. Data modeling provides that organization by applying consistent, rational rules to the data.

Data modeling makes it easier for the average user to get the required data, transform it into an understandable representation, and use it as needed. It plays a pivotal role in turning data into valuable analytics that help organizations shape business strategy and make essential decisions in this fast-paced era of transformation.

Despite the complexity of the process, data modeling provides in-depth insights into an organization’s daily data and supports efficient, innovative business growth.

Data Modeling Definition

Let us understand what data modeling is. Data modeling conceptualizes the data and the relationships among data entities in any domain. It describes the data’s structure, organization, storage methods, and constraints.

- Data modeling promotes uniformity in naming, rules, meanings, and security, ultimately improving data analysis. These models represent data conceptually using symbols, text, or diagrams to visualize relationships. The main goal is to make the data available and organized however it is used.

- Data modeling helps store and organize data to fulfill business needs, so that useful information can be processed and retrieved. This makes it a crucial element in designing and developing information systems.

Data modeling starts from the arrangement of the data that already exists. The process then defines the data structure, the relationships between entities, and a data scope that is reusable and can be encrypted.

Data modeling creates a conceptual representation of data and its relationships to other data within a specific domain. It involves defining the structure, relationships, constraints, and rules of data so that information can be understood and organized meaningfully.

Data modeling is essential in software engineering, database design, and other fields that require the organization and analysis of large amounts of data. It enables developers to create accurate, efficient, and scalable systems by ensuring the data is properly structured, normalized, and stored to support the organization’s business requirements.
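
"Properly structured, normalized" data is easiest to see in a small before-and-after. The sketch below is not from the article. It uses hypothetical rows and a hypothetical `normalize` helper to split a denormalized order list so that each customer's details are stored once and orders reference the customer by key. Using the email address as the key is also an assumption; many designs use a surrogate ID instead.

```python
# Denormalized rows: customer details are repeated on every order (hypothetical data).
FLAT_ORDERS = [
    {"order_id": 1, "customer_email": "ada@example.com", "customer_name": "Ada", "total": 30},
    {"order_id": 2, "customer_email": "ada@example.com", "customer_name": "Ada", "total": 12},
    {"order_id": 3, "customer_email": "bob@example.com", "customer_name": "Bob", "total": 7},
]


def normalize(rows):
    """Split flat rows into a customer table (one row per customer) and an order table."""
    customers = {}  # email -> customer row, stored exactly once
    orders = []     # each order references its customer by key instead of copying details
    for row in rows:
        email = row["customer_email"]
        # setdefault keeps the first name seen; conflicting later names would signal bad data.
        customers.setdefault(email, {"email": email, "name": row["customer_name"]})
        orders.append({"order_id": row["order_id"], "customer_email": email, "total": row["total"]})
    return customers, orders


customers, orders = normalize(FLAT_ORDERS)
print(len(customers), "customers,", len(orders), "orders")  # 2 customers, 3 orders
```

After normalization, changing Ada's name means updating one customer row instead of every order she placed. This is the redundancy reduction that good schema design aims for.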

Importance of Data Modeling

Data modeling is the stepping stone of the data management process. It lays the foundation for achieving crucial business objectives and supports decision-making driven by data analysis.

The following points show why data modeling matters:

- Building a data model helps us understand the data’s structure, relationships, and limitations.

- It makes it easier to ensure that everyone working on the project is familiar with the data.

- It helps you avoid uncertainties and inaccuracies.

- Addressing issues during modeling improves data continuity, reliability, and validity.

- It provides a common language and a framework or schema for better data management practices.

- It helps turn raw data into insights by revealing patterns, trends, and relationships.

- It improves storage efficiency by eliminating useless data.

- Organized storage streamlines data retrieval.

- Good database schema designs can significantly reduce data redundancy.

- Reduced, optimized data storage lowers costs and improves system performance.

Steps of the Data Modeling Process

The right approach to building a data model depends mainly on the characteristics of the data and the specific business requirements. The data modeling process for data engineering typically includes the following steps:

Step 1: Requirements gathering

Gather requirements from analysts, developers, and other stakeholders to understand how they need the data, how they plan to use it, and any blockers they face regarding data quality or other specifics.

Step 2: Conceptual data modeling

In this step, you map the entities, their attributes, and the relationships among them to form a generalized, high-level understanding of the data.

Step 3: Logical data modeling

The third step of the data modeling process is to develop a logical representation of the data entities and the relationships among them. The logical rules that govern the data are also defined in this step.

Step 4: Physical data modeling

The database is implemented physically based on the logical rules defined in the previous step. Each entity becomes a table, and its attributes become columns, with primary and foreign keys defined.
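
Steps 2 to 4 can be traced on a tiny example. Conceptually, an employee works in a department. Logically, each employee belongs to exactly one department, and a department has many employees. Physically, that becomes two tables linked by a primary key and a foreign key. The sketch below uses Python's `sqlite3` module, and the `department` and `employee` tables are hypothetical. SQLite enforces foreign keys only when `PRAGMA foreign_keys` is switched on for the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is enabled for the connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE department (
    department_id INTEGER PRIMARY KEY,           -- primary key: identifies each department
    name          TEXT NOT NULL UNIQUE
);
CREATE TABLE employee (
    employee_id   INTEGER PRIMARY KEY,
    name          TEXT NOT NULL,
    department_id INTEGER NOT NULL
        REFERENCES department(department_id)     -- foreign key: many employees -> one department
);
""")
conn.execute("INSERT INTO department VALUES (1, 'Finance')")
conn.execute("INSERT INTO employee VALUES (10, 'Grace', 1)")  # valid reference

try:
    conn.execute("INSERT INTO employee VALUES (11, 'Alan', 99)")  # department 99 does not exist
    fk_rejected = False
except sqlite3.IntegrityError as exc:
    fk_rejected = True
    print("rejected:", exc)
```

The database itself rejects the row that would break the logical rule "every employee belongs to an existing department." This is one practical payoff of carrying the logical model through to the physical one.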

Types of Data Modeling

Below are the main types of data modeling in use:

1. Conceptual Data Modeling

Data entities are modeled as high-level entities with relationships when using this method. Rather than focusing on specific technologies or implementations, it focuses on business needs.

2. Logical Data Modeling

This type of data modeling goes beyond the high-level view of data entities and relationships. It produces comprehensive data models in which entities, relationships, and attributes are specified in detail, along with constraints and implementation rules, while remaining independent of any particular database technology.

3. Physical Data Modeling

In this type of data modeling, the model is defined physically: tables, columns, data types, indexes, and other database objects are defined for a specific database system. It focuses mainly on how data is physically stored, on data access requirements, and on other database management concerns.

4. Dimensional Data Modeling

Dimensional data modeling arranges data into ‘facts’ and ‘dimensions.’ Facts are the metrics of interest, and dimensions are the attributes that give those facts context.
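
A minimal way to see facts and dimensions is with plain Python data. In the sketch below, the sales facts hold measures (quantity, revenue) plus a key into a product dimension that supplies context (name, category). All data and the `revenue_by` helper are hypothetical. A real warehouse would hold these as tables.

```python
from collections import defaultdict

# Dimension: descriptive context, keyed by a surrogate key (hypothetical data).
DIM_PRODUCT = {
    1: {"name": "Mug", "category": "Kitchen"},
    2: {"name": "Kettle", "category": "Kitchen"},
    3: {"name": "Tea", "category": "Grocery"},
}

# Facts: one row per sale, holding measures plus a foreign key to the dimension.
FACT_SALES = [
    {"product_key": 1, "date": "2023-05-01", "quantity": 2, "revenue": 17.0},
    {"product_key": 2, "date": "2023-05-01", "quantity": 1, "revenue": 40.0},
    {"product_key": 3, "date": "2023-05-02", "quantity": 3, "revenue": 36.0},
]


def revenue_by(attribute: str) -> dict:
    """Sum the revenue measure grouped by any attribute of the product dimension."""
    totals = defaultdict(float)
    for fact in FACT_SALES:
        totals[DIM_PRODUCT[fact["product_key"]][attribute]] += fact["revenue"]
    return dict(totals)


print(revenue_by("category"))  # {'Kitchen': 57.0, 'Grocery': 36.0}
```

The same facts can be sliced by any dimension attribute (`revenue_by("name")`) without changing the fact data. That flexibility is the main appeal of the dimensional approach.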

5. Object-Oriented Data Modeling

This data model represents real-world entities as objects with their own attributes, with various relationships between them.

Data Modeling Techniques

Several techniques are used to model data. The following are some of the most common, and together they show what data modeling involves in general:

1. Entity-relationship Modeling

This technique represents entities and the relationships between them to perform conceptual data modeling. It uses several constructs:

- Subtypes and supertypes represent hierarchies of entities that share common attributes but also have distinct properties.

- Cardinality constraints, expressed as symbols, specify how many entities can take part in a relationship.

- Weak entities depend on another entity for their existence.

- Recursive relationships occur when an entity has a relationship with itself.

- Attributes are the properties that describe entities.
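
ER diagrams are drawn rather than coded, but the same constructs can be expressed in Python data classes as a stand-in. The sketch below is illustrative only, and every class and field name is hypothetical. `Person` is a supertype with two subtypes. `Employee.manager_id` is a recursive relationship. `OrderLine` is a weak entity identified only within its order. The comments mark the cardinalities.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Person:                           # supertype: attributes shared by all people
    person_id: int
    name: str


@dataclass
class Employee(Person):                 # subtype with its own attribute
    manager_id: Optional[int] = None    # recursive relationship: Employee -> Employee


@dataclass
class Customer(Person):                 # another subtype of Person
    loyalty_tier: str = "standard"


@dataclass
class OrderLine:                        # weak entity: has no identity outside its order
    line_no: int
    product: str
    quantity: int


@dataclass
class Order:
    order_id: int
    customer_id: int                    # many orders -> one customer (N:1)
    lines: list[OrderLine] = field(default_factory=list)  # one order -> many lines (1:N)

    def line_key(self, line: OrderLine) -> tuple[int, int]:
        # A weak entity's key borrows its owner's key: (order_id, line_no).
        return (self.order_id, line.line_no)


boss = Employee(1, "Grace")
analyst = Employee(2, "Alan", manager_id=boss.person_id)
order = Order(100, customer_id=7, lines=[OrderLine(1, "Mug", 2), OrderLine(2, "Tea", 1)])
print([order.line_key(line) for line in order.lines])  # [(100, 1), (100, 2)]
```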

2. Object-oriented Modeling

Object-oriented data modeling is closely tied to object-oriented programming and is widely used in software development and data engineering; its models are often mapped onto relational databases. It represents data as objects with attributes and behaviors, and relationships between objects are defined by inheritance, composition, or association.
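
Here is a small Python sketch of the three relationship kinds named above, with behavior stored alongside the data. The banking classes are hypothetical and chosen only for illustration. `SavingsAccount` inherits from `Account`. A `Customer` is composed with an `Address`, which has no identity of its own. A customer is associated with accounts, which exist in their own right.

```python
from dataclasses import dataclass, field


@dataclass
class Address:                          # composition: part of a Customer, no separate identity
    street: str
    city: str


@dataclass
class Account:
    account_id: int
    balance_cents: int = 0

    def deposit(self, amount_cents: int) -> None:  # behavior lives with the data
        if amount_cents <= 0:
            raise ValueError("deposit must be positive")
        self.balance_cents += amount_cents


@dataclass
class SavingsAccount(Account):          # inheritance: a SavingsAccount is an Account
    rate_bps: int = 0                   # interest rate in basis points (1/100 of a percent)

    def accrue_interest(self) -> None:
        self.balance_cents += self.balance_cents * self.rate_bps // 10_000


@dataclass
class Customer:
    name: str
    address: Address                                        # composition
    accounts: list[Account] = field(default_factory=list)   # association


savings = SavingsAccount(1, rate_bps=250)
savings.deposit(100_000)
savings.accrue_interest()
ada = Customer("Ada", Address("1 Main St", "London"), accounts=[savings])
print(ada.accounts[0].balance_cents)  # 102500
```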

3. NoSQL Modeling

NoSQL modeling is a technique for non-relational databases, which store flexible, semi-structured or unstructured data, usually as key-value pairs, documents, column families, or graphs. Because the database is non-relational, the modeling techniques differ from those used for relational databases. With column-family modeling, data is stored in columns grouped into column families, each a group of related columns. With graph modeling, data is stored as nodes and edges, which represent entities and the relationships between entities, respectively.
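
The contrast between document and graph modeling can be sketched with plain Python dictionaries and lists standing in for a database. No particular NoSQL product or API is implied, and the data and helper names are hypothetical. The document model embeds an order's items inside one self-contained record. The graph model stores entities as nodes and relationships as labeled edges.

```python
# Document model: one self-contained order document with its items embedded,
# instead of separate order and line-item tables joined at query time.
order_doc = {
    "_id": "order-100",
    "customer": {"id": "c-7", "name": "Ada"},
    "items": [
        {"sku": "MUG", "qty": 2, "price_cents": 850},
        {"sku": "TEA", "qty": 1, "price_cents": 1200},
    ],
    "status": "shipped",
}
order_total = sum(item["qty"] * item["price_cents"] for item in order_doc["items"])

# Graph model: entities are nodes; relationships are labeled (source, label, target) edges.
nodes = {"c-7": {"label": "Customer"}, "c-8": {"label": "Customer"}, "MUG": {"label": "Product"}}
edges = [("c-7", "BOUGHT", "MUG"), ("c-8", "BOUGHT", "MUG"), ("c-7", "FOLLOWS", "c-8")]


def neighbors(node: str, relation: str) -> list[str]:
    """Follow outgoing edges of one relationship type."""
    return [dst for src, rel, dst in edges if src == node and rel == relation]


def co_buyers(product: str) -> list[str]:
    """Traverse BOUGHT edges backwards to find everyone who bought a product."""
    return sorted(src for src, rel, dst in edges if rel == "BOUGHT" and dst == product)


print(order_total, neighbors("c-7", "FOLLOWS"), co_buyers("MUG"))
```

Embedding suits data that is read together, such as an order and its items. Edges suit questions about connections, such as who else bought this product, which would take several joins in a relational model.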

4. Unified Modeling Language (UML) Modeling

A data modeling technique that uses visual modeling to describe software systems with diagrams and models. It is used for modeling complex data flows and for defining relationships between multiple data entities. UML is a standard for visualizing, designing, and documenting systems. It includes structural diagrams, such as class diagrams, and behavioral diagrams, such as sequence and use case diagrams, which together model data and system behavior. For data modeling specifically, UML class diagrams can represent data entities and their attributes.

5. Data Flow Modeling

The data flow modeling technique models how data moves among different processes. Its diagrams show how a process and its sub-processes are interlinked and how data flows between them.

6. Data Warehousing Modeling

This technique is used to design data warehouses and data marts, which are used for business intelligence and reporting. It involves creating dimensional models that organize data into facts and dimensions and creating a star or snowflake schema that supports efficient querying and reporting.
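
The difference between a star and a snowflake schema is easiest to see side by side. The sketch below uses Python's `sqlite3` module with hypothetical tables and data. In the star version, the product dimension carries the category name directly. In the snowflake version, the category is normalized into its own table, which saves repetition but costs an extra join at query time. Both return the same report.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star: the product dimension is denormalized and carries the category name itself.
CREATE TABLE dim_product_star (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);

-- Snowflake: the category is split out into its own dimension table.
CREATE TABLE dim_category (category_key INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_product_snow (
    product_key  INTEGER PRIMARY KEY,
    name         TEXT,
    category_key INTEGER REFERENCES dim_category(category_key)
);

-- One fact table of sales measures, keyed to the product dimension.
CREATE TABLE fact_sales (product_key INTEGER, quantity INTEGER, revenue_cents INTEGER);

INSERT INTO dim_product_star VALUES (1, 'Mug', 'Kitchen'), (2, 'Kettle', 'Kitchen'), (3, 'Tea', 'Grocery');
INSERT INTO dim_category VALUES (10, 'Kitchen'), (20, 'Grocery');
INSERT INTO dim_product_snow VALUES (1, 'Mug', 10), (2, 'Kettle', 10), (3, 'Tea', 20);
INSERT INTO fact_sales VALUES (1, 2, 1700), (2, 1, 4000), (3, 3, 3600);
""")

STAR_QUERY = """
SELECT d.category, SUM(f.revenue_cents)
FROM fact_sales AS f
JOIN dim_product_star AS d ON d.product_key = f.product_key
GROUP BY d.category ORDER BY d.category
"""

SNOWFLAKE_QUERY = """
SELECT c.category, SUM(f.revenue_cents)
FROM fact_sales AS f
JOIN dim_product_snow AS p ON p.product_key = f.product_key
JOIN dim_category AS c ON c.category_key = p.category_key
GROUP BY c.category ORDER BY c.category
"""

star = conn.execute(STAR_QUERY).fetchall()
snowflake = conn.execute(SNOWFLAKE_QUERY).fetchall()
print(star)  # [('Grocery', 3600), ('Kitchen', 5700)]
print("same result:", star == snowflake)
```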

Each technique has its own pros and cons. Make sure the technique you use suits your project’s requirements and the data available.

Data Modeling Use Cases

Data modeling is used in various industries and contexts to support various business objectives. Some everyday use cases of data modeling include:

- Predictive Modeling: Creating a statistical or mathematical model that uses data to predict future outcomes, for sales forecasting, resource allocation, quality control, and demand planning. Identifying new patterns and relationships can lead to new insights and possibly better opportunities.

- Customer Segmentation: A popular data modeling use case that divides customers into groups based on behaviors, preferences, demographics, or other characteristics.

- Fraud Detection: Data models make it possible to identify fraudulent activities by analyzing patterns and data inconsistencies. For example, they can detect an individual filing multiple claims immediately after taking out a policy.

- Recommendation Engines: Recommendation engines for eCommerce, search engines, movies and TV shows, and many other industries use data models that support quick data access, storage, and manipulation. This keeps recommendations up to date without affecting performance or user experience.

- Natural Language Processing: Using topic modeling, which automatically learns word clusters from text, and Named Entity Recognition (NER), which detects and classifies significant information in text, we can perform Natural Language Processing (NLP) on social media, messaging apps, and other data sources.

- Data governance: A process for ensuring that a company’s data is extracted, stored, processed, and discarded according to its data governance policies. It includes a data quality management process to monitor and improve data gathering, tracking data from its original state to its final state, maintaining metadata that provides a record of the data’s accuracy and completeness, and ensuring data security and compliance. Data stewards are responsible for the integrity and accuracy of specific data sets.

- Data integration: When data is ambiguous or inconsistent, the data integration use case helps identify those gaps and model the data entities, attributes, and relationships into a database.

- Application development: Data modeling plays a key role in data management, intelligence reports, data filtering, and other uses when developing web applications, mobile apps, and dynamic user interfaces such as business intelligence applications and data dashboards. Data modeling is a versatile tool supporting various business objectives, from database design to data governance and application development.
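
The fraud-detection example above, an individual filing multiple claims soon after a policy starts, can be expressed as a simple rule over claims data. This Python sketch is a hypothetical illustration, not a production fraud model. The claims layout, the 30-day window, and the two-claim threshold are all assumptions that a real team would tune.

```python
from datetime import date, timedelta

# Hypothetical claims data: (claim_id, policyholder, policy_start, claim_date)
CLAIMS = [
    ("C1", "p1", date(2023, 1, 1), date(2023, 1, 5)),
    ("C2", "p1", date(2023, 1, 1), date(2023, 1, 9)),
    ("C3", "p2", date(2023, 1, 1), date(2023, 3, 20)),
    ("C4", "p3", date(2023, 2, 1), date(2023, 2, 3)),
]


def flag_early_repeat_claims(claims, window_days=30, min_claims=2):
    """Flag policyholders with at least `min_claims` claims filed within
    `window_days` of their policy start date."""
    early: dict[str, list[str]] = {}
    for claim_id, holder, start, claimed in claims:
        if timedelta(0) <= claimed - start <= timedelta(days=window_days):
            early.setdefault(holder, []).append(claim_id)
    return {holder: ids for holder, ids in early.items() if len(ids) >= min_claims}


print(flag_early_repeat_claims(CLAIMS))  # {'p1': ['C1', 'C2']}
```

A flag from a rule like this is a prompt for human review, not proof of fraud. The rule only works because the data model links each claim to its policyholder and policy start date.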

Tips for Effective Data Modeling

Practical data modeling tips are as follows:

1. Identify the purpose and scope of the data model

To build a data model that addresses users’ needs while also being high-performing and scalable, you need to know what problem it solves, the data sources for the model, the type of data it will store, who will use it, the level of detail they require, and the key entities, attributes, and relationships. You also need to address the data quality requirements of all stakeholders.

2. Involve stakeholders and subject matter experts

Involving stakeholders and subject matter experts is crucial when designing a data model as they provide valuable insight into the business needs and can help identify potential issues early on.

3. Follow best practices and standards

There are a few things you need to get right, and up to standard, when creating a data model. First, use industry-accepted standardized modeling notations, such as Entity-Relationship (ER) diagrams, the Unified Modeling Language (UML), or Business Process Model and Notation (BPMN). Apply them consistently so that the model is clear and understandable.

4. Use a collaborative approach

Encourage stakeholders to share their thoughts and opinions so that all perspectives are considered. Make sure all stakeholders, including IT staff, subject matter experts, and end users, are represented to maintain group diversity. Use diagrams and flowcharts to help stakeholders understand the data model and give feedback efficiently. Schedule regular meetings to discuss progress, review blockers or concerns, and update all stakeholders.

5. Document and communicate the data model

Documenting business requirements plays a vital role when a project is initiated. In the first step, when requirements are gathered and analyzed, it is important to record them in official documents. Similarly, documenting the data model is important in a collaborative approach because it gives teammates working on the project coherent guidelines.

Avoid technical jargon and acronyms that not all stakeholders are familiar with. Instead, use clear and concise language to define the data model and its components. Use diagrams and flowcharts with a standardized notation to explain to stakeholders how the data model relates to business processes.

Official data model documents bridge the communication gap between application developers and stakeholders. They give everyone a shared understanding of what has been implemented, including all data entities, attributes, relationships, and the rules defined in the logical layer of the data model. Overall, documenting and communicating the data model is an essential aspect of data modeling and helps ensure its effectiveness and long-term viability.

Data Modeling Tools

A wide range of tools is used for data modeling. Six of them are described below:

1. ERwin Data Modeler:

A popular tool whose API lets developers create custom applications and custom data modeling tools that integrate with ERwin to provide additional functionality. This allows users to customize the tool to their needs.

2. SAP PowerDesigner:

SAP PowerDesigner is meant to be customized to each user’s specific needs. It can run scripts in VBScript, JScript, and PerlScript to automate tasks, apply validation rules, and perform complex calculations. Macros can be added to automate repetitive tasks. Add-ins can be custom-developed using .NET or Java and interact with the tool through its API. Data model templates define entities, attributes, relationships, and other key elements. With model extensions, users can store specific domain concepts and further customize the tool to their needs.

3. Oracle SQL Developer Data Modeler:

Oracle SQL Developer Data Modeler is a powerful tool for designing and managing data models. It lets users create and alter data structures such as ER diagrams, data types, and constraints. Custom plug-ins can be developed in Java to support custom reports, implement specific data modeling conventions, and more. These plug-ins can be shared across teams for easier collaboration and a consistent data model.

4. Toad Data Modeler:

This tool supports relational and NoSQL data modeling, including entity-relationship diagramming, reverse engineering, and database schema generation. It also integrates with other data management tools such as Toad for Oracle. According to the DB-Engines ranking, Oracle is the most popular database management system.

5. Microsoft Visio:

Microsoft Visio is a general-purpose diagramming tool that can be used for data modeling. It includes templates for entity-relationship diagrams, data flow diagrams, and other diagram types commonly used in data modeling.

6. MySQL Workbench:

MySQL Workbench is an open-source tool designed specifically for creating and working with MySQL databases. Its features include Entity-Relationship diagrams, forward and reverse engineering, and database schema generation.

Many other data modeling tools are available, and the choice of tool depends on the project’s specific requirements and the user’s preferences.

Benefits of Data Modeling

Data modeling has several benefits. It helps ensure that the database is designed to accommodate future growth and changes in business requirements quickly, and it helps identify data redundancies, errors, and irregularities for better insights.

It equips data scientists with an in-depth understanding of data structure, attributes of data, relationships, and constraints of the data. Data modeling also helps in data storage optimization, which plays a significant role in minimizing data storage costs.

Final Remarks

In summary, data modeling is the stepping stone of the data management process. It lays the foundation for achieving business objectives and for decision-making driven by data analysis. Building a data model helps us understand the data’s structure, relationships, and limitations, and makes it easier to ensure that everyone working on the project is familiar with the data.

A good data model helps avoid uncertainties and inaccuracies and improves data continuity, reliability, and validity. It also provides a common language and a framework or schema for better data management practices.

The examples and discussion in this article showed how data modeling turns raw data into insights by revealing patterns, trends, and relationships. It also improves storage efficiency by eliminating useless data and streamlines retrieval through organized storage. By adopting best practices and leveraging the right tools and techniques, data professionals can help organizations unlock their data’s full potential, driving business growth and innovation.

The Analytics Setup Guidebook

Modeling Example: A Real-world Use Case

In this section we are going to walk through a real-world data modeling effort that we executed in Holistics, so that you may gain a better understanding of the ideas we’ve presented in the previous two segments. The purpose of this piece is two-fold:

- We want to give you a taste of what it’s like to model data using a data modeling layer tool. Naturally, we will be using Holistics, since that is what we use internally to measure our business. But the general approach we present here is what is important, as the ideas we apply are similar regardless of whether you’re using Holistics or some other data modeling layer tool like dbt or Looker.

- We want to show you how we think about combining the Kimball-style, heavy, dimensional data modeling approach with the more ‘just-in-time’, lightweight, ‘model how you like’ approach. This example will show how we’ve evolved our approach to modeling a particular section of our data over the period of a few months.

By the end of this segment, we hope to convince you that using a data modeling layer-type tool along with the ELT approach is the right way to go.

The Problem

In the middle of 2019, we began to adopt Snowplow as an alternative to Google Analytics for all our front-facing marketing sites. Snowplow is an open-source event analytics tool. It allows us to define and record events for any number of things on https://www.holistics.io/. If you go to the website and click a link, watch a video, or navigate to our blog, a JavaScript event is created and sent to the Snowplow event collector that runs on our servers.

Our Snowplow installation captures and delivers such event data to BigQuery. And our internal Holistics instance sits on top of this BigQuery data warehouse.

Snowplow raw event data is fairly complex. The first thing we did was to take the raw event data and model it, like so:

Note that there are over 130 columns in the underlying table, and about 221 fields in the data model. This is a large fact table by most measures.

Our data team quickly realized two things: first, this data was going to be referenced a lot by the marketing team, as they checked the performance of our various blog posts and landing pages. Second, the cost of processing gigabytes of raw event data was going to be significant given that these reports would be accessed so regularly.

Within a few days of setting up Snowplow, we decided to create a new data model on which to run the majority of our reports. This data model would aggregate raw event data to the grain of the pageview, which is the level that most of our marketers operated at.
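
As a rough illustration of what aggregating to the pageview grain involves, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for BigQuery. The `events` table, its columns and the integer-second timestamps are assumptions made for this example; Snowplow's real event table has well over a hundred columns, and this is not the SQL Holistics actually used.

```python
import sqlite3


def build_pageview_model(conn: sqlite3.Connection) -> None:
    """Rebuild a pageview-grain table from raw events (hypothetical schema)."""
    conn.executescript(
        """
        DROP TABLE IF EXISTS pageviews;
        CREATE TABLE pageviews AS
        SELECT
            pageview_id,
            MIN(session_id)                   AS session_id,
            MIN(page_url)                     AS page_url,
            MIN(event_time)                   AS started_at,
            MAX(event_time) - MIN(event_time) AS seconds_on_page,
            SUM(CASE WHEN event_type = 'link_click' THEN 1 ELSE 0 END) AS link_clicks,
            COUNT(*)                          AS event_count
        FROM events
        GROUP BY pageview_id;
        """
    )


conn = sqlite3.connect(":memory:")
# Raw events: one row per tracked event, several events per pageview.
conn.execute(
    "CREATE TABLE events (pageview_id TEXT, session_id TEXT, page_url TEXT, "
    "event_type TEXT, event_time INTEGER)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?, ?, ?)",
    [
        ("pv1", "s1", "/blog/a", "page_view", 100),
        ("pv1", "s1", "/blog/a", "page_ping", 110),
        ("pv1", "s1", "/blog/a", "link_click", 130),
        ("pv2", "s1", "/pricing", "page_view", 140),
    ],
)
build_pageview_model(conn)

rows = conn.execute(
    "SELECT pageview_id, seconds_on_page, link_clicks, event_count "
    "FROM pageviews ORDER BY pageview_id"
).fetchall()
print(rows)  # [('pv1', 30, 1, 3), ('pv2', 0, 0, 1)]
```

Reports then read a handful of pageview rows instead of re-scanning every raw event each time a dashboard is opened.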

Notice a few things that went into this decision. In the previous section on Kimball data modeling we argued that it wasn’t strictly necessary to write aggregation tables when working with large fact tables on modern data warehouses. Our work with the Snowplow data happened within BigQuery — an extremely powerful MPP data warehouse — so it was actually pretty doable to just run aggregations off the raw event data.

But our reasoning to write a new data model was as follows:

- The series of dashboards to be built on top of the Snowplow data would be used very regularly. We knew this because various members of the sales & marketing teams were already asking questions in the week that we had Snowplow installed. This meant that the time cost of setting up the model would be justified over the course of doing business.

- We took into account the costs from running aggregation queries across hundreds of thousands of rows every time a marketer opened a Snowplow-related report. If this data wasn’t so regularly accessed, we might have let it be (our reasoning: don’t waste employee time to reduce BigQuery compute costs if a report isn’t going to be used much!) but we thought the widespread use of these reports justified the additional work.

Notice how we made the decision to model data by considering multiple factors: the time costs to create a new model, the expected usage rate, and our infrastructure costs. This is very different from a pure Kimball approach, where every data warehousing project necessarily demanded a data modeling effort up-front.

Creating The Pageview Model

So how did we do this? In Holistics, we created this pageview-level data model by writing some custom SQL (don’t read the whole thing, just skim — this is for illustration purposes only):

Within the Holistics user interface, the above query generated a model that looked like this:

We then persisted this model to a new table within BigQuery. The persistence setting below means that the SQL query you saw above would be rerun by the Holistics data modeling layer once every two hours. We could modify this refresh schedule as we saw fit.

We could also sanity check the data lineage of our new model, by peeking at the dependency graph generated by Holistics:

In this particular case, our pageview-level data model was generated from our Snowplow event fact table in BigQuery, along with a dbdocs_orgs dimension table stored in PostgreSQL. (dbdocs is a separate product in our company, but our landing pages and marketing materials on Holistics occasionally link out to dbdocs.io — this meant it was important for the same people to check marketing performance for that asset as well).

Our reports were then switched over to this data model, instead of the raw event fact table that they used earlier. The total time taken for this effort: half a week.

Evolving The Model To A Different Grain

A few months later, members of our marketing team began to ask about funnel fall-off rates. We were running a couple of new campaigns across a handful of new landing pages, and the product side of the business began toying with the idea of freemium pricing for certain early-stage startup customers.

However, running such marketing efforts meant watching the bounce rates (or fall-off rates) of our various funnels very carefully. As it turned out, this information was difficult to query using the pageview model. Our data analysts found that they were writing rather convoluted queries because they had to express all sorts of complicated business logic within the queries themselves. For instance, a ‘bounced session’ at Holistics is defined as any one of the following:

- a session with only one page view, where the visitor had no activity in any other session, or

- a session in which the visitor did not scroll down the page, or

- a session in which the visitor scrolled down but spent less than 20 seconds on the page.
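
Expressed as code, the rule might look like the following sketch. It assumes the three conditions are alternatives (any one of them makes a session a bounce), and the `Session` fields are hypothetical names for values that would first have to be computed from the pageview data. It is meant only to show how much logic an analyst would otherwise have to repeat in every query.

```python
from dataclasses import dataclass

# Threshold from the definition above.
BOUNCE_SECONDS = 20


@dataclass
class Session:
    # Hypothetical session-level fields, pre-computed from pageview data.
    pageview_count: int
    visitor_active_in_other_sessions: bool
    scrolled_down: bool
    seconds_on_page: float


def is_bounced(s: Session) -> bool:
    """True if the session meets any one of the three bounce conditions."""
    single_page_only = s.pageview_count == 1 and not s.visitor_active_in_other_sessions
    never_scrolled = not s.scrolled_down
    scrolled_but_left_quickly = s.scrolled_down and s.seconds_on_page < BOUNCE_SECONDS
    return single_page_only or never_scrolled or scrolled_but_left_quickly


print(is_bounced(Session(1, False, True, 60)))  # True: one page, no other activity
print(is_bounced(Session(3, True, False, 90)))  # True: never scrolled
print(is_bounced(Session(3, True, True, 12)))   # True: scrolled, but under 20 seconds
print(is_bounced(Session(3, True, True, 45)))   # False
```

Writing the rule out makes its edge cases visible, which is exactly the kind of detail that is easy to get subtly wrong when it is retyped in every report query.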

Including complex business logic in one’s SQL queries was a ‘code smell’ if there ever was one.

The solution our data team settled on was to create a new data model, one that operated at a higher grain than the pageview model. We wanted to capture ‘sessions’, and build reports on top of this session data.

So, we created a new model that we named session_aggr. This was a data model that was derived from the pageview data model that we had created earlier. The lineage graph thus looked like this:

And the SQL used to generate this new data model from the pageview model was as follows (again, skim it, but don’t worry if you don’t understand):

And in the Holistics user interface, this is what that query looked like (note how certain fields were annotated by our data analysts; this made it easier for marketing staff to navigate in our self-service UI later):

This session model is regenerated from the pageview model once every 3 hours, and persisted into BigQuery with the table name persisted_models.persisted_session_aggr. The Holistics data modeling layer would take care to regenerate the pageview model first, before regenerating the session model.
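
The text does not describe how Holistics works out this ordering internally. One common way to get the same guarantee is a topological sort of the lineage graph; the sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The model and table names mirror the lineage described above, but the `lineage` mapping and the `refresh_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical lineage: each model maps to the models or tables it reads from.
lineage = {
    "pageview_model": {"snowplow_events", "dbdocs_orgs"},
    "session_aggr": {"pageview_model"},
}


def refresh_order(deps: dict[str, set[str]]) -> list[str]:
    """Order models so that each is rebuilt only after all of its inputs."""
    ordered = TopologicalSorter(deps).static_order()
    # Raw source tables only appear as inputs; they are loaded, not rebuilt.
    return [node for node in ordered if node in deps]


print(refresh_order(lineage))  # ['pageview_model', 'session_aggr']
```

A cycle in the mapping would make `static_order()` raise `graphlib.CycleError`, which is a useful early warning that two models depend on each other.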

With this new session data model, it became relatively easy for our analysts to create new reports for the marketing team. Their queries were now very simple SELECT statements from the session data model, and contained no business logic. This made it a lot easier to create and maintain new marketing dashboards, especially since all the hard work had already been captured at the data modeling layer.
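
A minimal sketch of that division of labour, again using `sqlite3` as a stand-in for BigQuery: the session model rolls pageviews up to one row per session, and the report becomes a plain `SELECT ... GROUP BY` against it. The table layout and column names are assumptions for illustration; the real `session_aggr` model carries many more fields (including the bounce logic), and its SQL is not reproduced here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical stand-in for the persisted pageview model.
conn.execute(
    "CREATE TABLE pageviews (session_id TEXT, page_url TEXT, "
    "started_at INTEGER, seconds_on_page INTEGER)"
)
conn.executemany(
    "INSERT INTO pageviews VALUES (?, ?, ?, ?)",
    [
        ("s1", "/blog/a", 100, 30),
        ("s1", "/pricing", 140, 50),
        ("s2", "/blog/a", 500, 5),
        ("s3", "/pricing", 900, 12),
    ],
)

# Modeling layer: session-grain logic is written once, here.
conn.executescript(
    """
    CREATE TABLE session_aggr AS
    SELECT
        p.session_id            AS session_id,
        COUNT(*)                AS pageview_count,
        SUM(p.seconds_on_page)  AS total_seconds,
        (SELECT p2.page_url
           FROM pageviews AS p2
          WHERE p2.session_id = p.session_id
          ORDER BY p2.started_at
          LIMIT 1)              AS landing_page
    FROM pageviews AS p
    GROUP BY p.session_id;
    """
)

# Reporting layer: a plain SELECT with no business logic in it.
report = conn.execute(
    "SELECT landing_page, COUNT(*) AS sessions FROM session_aggr "
    "GROUP BY landing_page ORDER BY landing_page"
).fetchall()
print(report)  # [('/blog/a', 2), ('/pricing', 1)]
```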

Exposing self-service analytics to business users

It’s worth it to take a quick look at what all of this effort leads to.

In The Data Warehouse Toolkit, Ralph Kimball championed data modeling as a way to help business users navigate data within the data warehouse. In this, he hit on one of the lasting benefits of data modeling.

Data modeling in Kimball’s day really was necessary to help business users make sense of data. When presented with a BI tool, non-technical users could orient themselves using the labels on the dimensional tables.

Data modeling serves a similar purpose for us. We don’t think it’s very smart to have data analysts spend all their time writing new reports for business users. It’s better if their work could become reusable components for business users to help themselves.

In Holistics, the primary way this happens is through Holistics Datasets — a term we use to describe self-service data marts. After model creation, an analyst is able to package a set of data models into a (waitforit) dataset. This dataset is then made available to business users. The user interface for a dataset looks like this:

On the leftmost column are the fields of the models collected within the dataset. These fields are usually self-describing, though analysts take care to add textual descriptions where the field names are ambiguous.

In Holistics, we train business users to help themselves to data. This interface is key to that experience. Our business users drag whatever field they are interested in exploring to the second column, and then generate results or visualizations in the third column.

This allows us to serve measurements throughout the entire organization, despite having a tiny data team.

What are some lessons we may take away from this case study? Here are a few that we want to highlight.

Let Usage Determine Modeling, Not The Reverse

Notice how sparingly we’ve used Kimball-style dimensional data modeling throughout the example above. We only have one dimension table that is related to dbdocs (the aforementioned dbdocs_orgs table). As of right now, most dimensional data is stored within the Snowplow fact table itself.

Is this ideal? No. But is it enough for the reports that our marketing team uses? Yes, it is.

The truth is that if our current data model poses problems for us down the line, we can always spend a day or two splitting out the dimensions into a bunch of new dimension tables according to Kimball’s methodology. Because all of our raw analytical data is captured in the same data warehouse, we need not fear losing the data required for future changes. We can simply redo our models within Holistics’s data modeling layer, set a persistence setting, and then let the data warehouse do the heavy lifting for us.
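
For readers wondering what "splitting out the dimensions" would involve, here is a small sketch of the Kimball-style move, with `sqlite3` standing in for the warehouse. The browser columns are a hypothetical example of descriptive data repeated on every event row; the text does not say which dimensions Holistics would actually extract.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical wide fact table: browser details are repeated on every event row.
conn.execute(
    "CREATE TABLE events_wide (event_id INTEGER, browser_name TEXT, "
    "browser_version TEXT, page_url TEXT)"
)
conn.executemany(
    "INSERT INTO events_wide VALUES (?, ?, ?, ?)",
    [
        (1, "Firefox", "121", "/blog/a"),
        (2, "Chrome", "120", "/pricing"),
        (3, "Firefox", "121", "/pricing"),
    ],
)

conn.executescript(
    """
    -- Dimension table: one row per distinct browser, keyed by a surrogate key.
    CREATE TABLE dim_browser (
        browser_key     INTEGER PRIMARY KEY,
        browser_name    TEXT NOT NULL,
        browser_version TEXT NOT NULL,
        UNIQUE (browser_name, browser_version)
    );
    INSERT INTO dim_browser (browser_name, browser_version)
    SELECT DISTINCT browser_name, browser_version FROM events_wide;

    -- Slimmer fact table that references the dimension by key.
    CREATE TABLE fact_events AS
    SELECT e.event_id AS event_id, d.browser_key AS browser_key, e.page_url AS page_url
    FROM events_wide AS e
    JOIN dim_browser AS d
      ON d.browser_name = e.browser_name
     AND d.browser_version = e.browser_version;
    """
)

dim_rows = conn.execute("SELECT COUNT(*) FROM dim_browser").fetchone()[0]
fact_rows = conn.execute("SELECT COUNT(*) FROM fact_events").fetchone()[0]
print(dim_rows, fact_rows)  # 2 3
```

Because the wide table is still in the warehouse, this can be rerun or revised at any time, which is the point the paragraph above makes.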

Model Just Enough, But No More

Notice how we modeled pageviews first from our event data, and sessions later, only when we were requested to do so by our marketing colleagues. We could have speculatively modeled sessions early on in our Snowplow adoption, but we didn’t. We chose to guard our data team’s time judiciously.

When you are in a fast-moving startup, it is better to do just enough to deliver business insights today, as opposed to crafting beautiful data models for tomorrow. When it came time to create the session data model, it took an analyst only two days to come up with the SQL and to materialize it within Holistics. It then took only another day or so to attach reports to this new data model.

Use such speed to your advantage. Model only what you must.

Embed Business Logic in Data Models, Not Queries

Most of the data modeling layer tools out there encourage you to pre-calculate business metrics within your data model. This allows you to keep your queries simple. It also prevents human errors from occurring.

Let’s take the example of our ‘bounced session’ definition, above. If we had not included it in the sessions model, this would mean that all the data analysts in our company would need to remember exactly how a bounced session is defined by our marketing people. They would write their queries according to this definition, but would risk making subtle errors that might not be caught for months.

Having our bounced sessions defined in our sessions data model meant that our reports could simply SELECT off our model. It also meant that if our marketing team changed their definition of a bounced session, we would only have to update that definition in a single place.

The Goal of Modeling Is Self Service

Like Kimball, we believe that the end goal of modeling is self-service. Self-service is important because it means that your organization is no longer bottlenecked at the data team.

At Holistics, we’ve built our software to shorten the gap between modeling and delivery. But it’s important to note that these ideas aren’t limited to just our software alone. A similar approach using slightly different tools is just as good. For instance, Looker is known for its self-service capabilities. There, the approach is somewhat similar: data analysts model up their raw tables, and then use these models to service business users. The reusability of such models is what gives Looker its power.

Going Forward

We hope this case study has given you a taste of data modeling in this new paradigm.

Use a data modeling layer tool. Use ELT. And what you’ll get from adopting the two is a flexible, easy approach to data modeling. We think this is the future. We hope you’ll agree.

Data modeling is the process of creating a visual representation of either a whole information system or parts of it to communicate connections between data points and structures.

The goal of data modeling is to illustrate the types of data used and stored within the system, the relationships among these data types, the ways the data can be grouped and organized, and its formats and attributes.

Data models are built around business needs. Rules and requirements are defined upfront through feedback from business stakeholders so they can be incorporated into the design of a new system or adapted in the iteration of an existing one.

Data can be modeled at various levels of abstraction. The process begins by collecting information about business requirements from stakeholders and end users. These business rules are then translated into data structures to formulate a concrete database design. A data model can be compared to a roadmap, an architect’s blueprint or any formal diagram that facilitates a deeper understanding of what is being designed.

Data modeling employs standardized schemas and formal techniques. This provides a common, consistent, and predictable way of defining and managing data resources across an organization, or even beyond.

Ideally, data models are living documents that evolve along with changing business needs. They play an important role in supporting business processes and planning IT architecture and strategy. Data models can be shared with vendors, partners, and/or industry peers.

Like any design process, database and information system design begins at a high level of abstraction and becomes increasingly more concrete and specific. Data models can generally be divided into three categories, which vary according to their degree of abstraction. The process will start with a conceptual model, progress to a logical model and conclude with a physical model. Each type of data model is discussed in more detail in subsequent sections:

Conceptual data models: These are also referred to as domain models and offer a big-picture view of what the system will contain, how it will be organized, and which business rules are involved. Conceptual models are usually created as part of the process of gathering initial project requirements. Typically, they include entity classes (defining the types of things that are important for the business to represent in the data model), their characteristics and constraints, the relationships between them, and relevant security and data integrity requirements. Any notation is typically simple.

Logical data models: These are less abstract and provide greater detail about the concepts and relationships in the domain under consideration. They follow one of several formal data modeling notation systems, which indicate data attributes, such as data types and their corresponding lengths, and show the relationships among entities. Logical data models don’t specify any technical system requirements. This stage is frequently omitted in agile or DevOps practices. Logical data models can be useful in highly procedural implementation environments, or for projects that are data-oriented by nature, such as data warehouse design or reporting system development.

Physical data models: These provide a schema for how the data will be physically stored within a database. As such, they’re the least abstract of all. They offer a finalized design that can be implemented as a relational database, including associative tables that illustrate the relationships among entities as well as the primary keys and foreign keys that will be used to maintain those relationships. Physical data models can include database management system (DBMS)-specific properties, including performance tuning.
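
A small physical model, written as SQLite DDL through Python's `sqlite3` module, shows the pieces this paragraph lists: primary keys, foreign keys, an associative table for a many-to-many relationship, and a DBMS-specific tuning choice (an index). The orders-and-products tables are a hypothetical example, not taken from the source.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when this is on
conn.executescript(
    """
    CREATE TABLE products (
        product_id INTEGER PRIMARY KEY,
        name       TEXT    NOT NULL,
        unit_price INTEGER NOT NULL          -- price in cents (assumption)
    );
    CREATE TABLE orders (
        order_id   INTEGER PRIMARY KEY,
        ordered_at TEXT    NOT NULL
    );
    -- Associative table: one row per (order, product) pair.
    CREATE TABLE order_items (
        order_id   INTEGER NOT NULL REFERENCES orders(order_id),
        product_id INTEGER NOT NULL REFERENCES products(product_id),
        quantity   INTEGER NOT NULL CHECK (quantity > 0),
        PRIMARY KEY (order_id, product_id)
    );
    -- DBMS-level tuning: speeds up "which orders contain product X?" lookups.
    CREATE INDEX idx_order_items_product ON order_items(product_id);
    """
)
conn.executemany("INSERT INTO products VALUES (?, ?, ?)", [(1, "Keyboard", 4000), (2, "Mouse", 1500)])
conn.execute("INSERT INTO orders VALUES (1, '2024-01-05')")
conn.executemany("INSERT INTO order_items VALUES (?, ?, ?)", [(1, 1, 1), (1, 2, 2)])

total_cents = conn.execute(
    """
    SELECT SUM(oi.quantity * p.unit_price)
    FROM order_items AS oi
    JOIN products AS p ON p.product_id = oi.product_id
    WHERE oi.order_id = 1
    """
).fetchone()[0]
print(total_cents)  # 7000
```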

As a discipline, data modeling invites stakeholders to evaluate data processing and storage in painstaking detail. Data modeling techniques have different conventions that dictate which symbols are used to represent the data, how models are laid out, and how business requirements are conveyed. All approaches provide formalized workflows that include a sequence of tasks to be performed in an iterative manner. Those workflows generally look like this:

- Identify the entities. The process of data modeling begins with the identification of the things, events or concepts that are represented in the data set that is to be modeled. Each entity should be cohesive and logically discrete from all others.

- Identify key properties of each entity. Each entity type can be differentiated from all others because it has one or more unique properties, called attributes. For instance, an entity called “customer” might possess such attributes as a first name, last name, telephone number and salutation, while an entity called “address” might include a street name and number, a city, state, country and zip code.

- Identify relationships among entities. The earliest draft of a data model will specify the nature of the relationships each entity has with the others. In the above example, each customer “lives at” an address. If that model were expanded to include an entity called “orders,” each order would be shipped to and billed to an address as well. These relationships are usually documented via unified modeling language (UML).

- Map attributes to entities completely. This will ensure the model reflects how the business will use the data. Several formal data modeling patterns are in widespread use. Object-oriented developers often apply analysis patterns or design patterns, while stakeholders from other business domains may turn to other patterns.

- Assign keys as needed, and decide on a degree of normalization that balances the need to reduce redundancy with performance requirements. Normalization is a technique for organizing data models (and the databases they represent) in which numerical identifiers, called keys, are assigned to groups of data to represent relationships between them without repeating the data. For instance, if customers are each assigned a key, that key can be linked to both their address and their order history without having to repeat this information in the table of customer names. Normalization tends to reduce the amount of storage space a database will require, but it can come at a cost to query performance.

- Finalize and validate the data model. Data modeling is an iterative process that should be repeated and refined as business needs change.
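
The keying and normalization steps can be sketched in a few lines of Python, using the customer, address and order entities from the example above. The input rows and field names are hypothetical, and treating the customer name as unique is a simplifying assumption that a real model would replace with a proper natural or surrogate key.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Address:
    address_id: int
    street_city: str


@dataclass(frozen=True)
class Customer:
    customer_id: int
    name: str
    address_id: int   # "lives at" relationship, refers to Address


@dataclass(frozen=True)
class Order:
    order_id: int
    customer_id: int  # refers to Customer; name and address are not repeated
    amount: float


def normalize(rows):
    """Split flat order rows into addresses, customers and orders linked by keys."""
    addresses: dict[str, Address] = {}
    customers: dict[str, Customer] = {}
    orders: list[Order] = []
    for row in rows:
        if row["address"] not in addresses:
            addresses[row["address"]] = Address(len(addresses) + 1, row["address"])
        # Assumption: customer names are unique in this toy data set.
        if row["customer"] not in customers:
            customers[row["customer"]] = Customer(
                len(customers) + 1, row["customer"], addresses[row["address"]].address_id
            )
        orders.append(Order(row["order_id"], customers[row["customer"]].customer_id, row["amount"]))
    return list(addresses.values()), list(customers.values()), orders


# Denormalized input: customer name and address repeated on every order row.
flat_orders = [
    {"order_id": 101, "customer": "Ana Silva", "address": "12 Oak St, Austin", "amount": 30.0},
    {"order_id": 102, "customer": "Ana Silva", "address": "12 Oak St, Austin", "amount": 12.5},
    {"order_id": 103, "customer": "Li Wei", "address": "9 Elm Rd, Reno", "amount": 48.0},
]
addresses, customers, orders = normalize(flat_orders)
print(len(addresses), len(customers), len(orders))  # 2 2 3
```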

Data modeling has evolved alongside database management systems, with model types increasing in complexity as businesses' data storage needs have grown. Here are several model types:

- Hierarchical data models represent one-to-many relationships in a treelike format. In this type of model, each record has a single root or parent which maps to one or more child tables. This model was implemented in the IBM Information Management System (IMS), which was introduced in 1966 and rapidly found widespread use, especially in banking. Though this approach is less efficient than more recently developed database models, it’s still used in Extensible Markup Language (XML) systems and geographic information systems (GISs).
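
XML is a convenient way to see the hierarchical shape in practice. In this sketch, built on the standard library's `xml.etree.ElementTree`, every element has exactly one parent and data is reached by walking down from the root. The bank/customer/account document is a made-up example, and this is not how IMS itself stores records.

```python
import xml.etree.ElementTree as ET

# Hypothetical bank hierarchy: every element has exactly one parent.
doc = """
<bank>
  <customer id="c1" name="Ana">
    <account number="A-100" balance="250"/>
    <account number="A-101" balance="40"/>
  </customer>
  <customer id="c2" name="Li">
    <account number="A-200" balance="900"/>
  </customer>
</bank>
"""

root = ET.fromstring(doc.strip())


def accounts_for(root: ET.Element, customer_id: str) -> list[str]:
    """Walk parent to child: find one customer, then list that customer's accounts."""
    customer = root.find(f"customer[@id='{customer_id}']")
    if customer is None:
        return []
    return [acct.get("number") for acct in customer.findall("account")]


print(accounts_for(root, "c1"))  # ['A-100', 'A-101']
```

In a strict tree like this one, a joint account held by two customers would have to be duplicated under both parents, a well-known limitation of purely hierarchical structures.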

- Relational data models were initially proposed by IBM researcher E.F. Codd in 1970. They are still implemented today in the many different relational databases commonly used in enterprise computing. Relational data modeling doesn’t require a detailed understanding of the physical properties of the data storage being used. In it, data segments are explicitly joined through the use of tables, reducing database complexity.

Relational databases frequently employ structured query language (SQL) for data management. These databases work well for maintaining data integrity and minimizing redundancy. They’re often used in point-of-sale systems, as well as for other types of transaction processing.

- Entity-relationship (ER) data models use formal diagrams to represent the relationships between entities in a database. Several ER modeling tools are used by data architects to create visual maps that convey database design objectives.

- Object-oriented data models gained traction as object-oriented programming became popular in the mid-1990s. The “objects” involved are abstractions of real-world entities. Objects are grouped in class hierarchies and have associated features. Object-oriented databases can incorporate tables, but can also support more complex data relationships. This approach is employed in multimedia and hypertext databases as well as other use cases.

- Dimensional data models were developed by Ralph Kimball, and they were designed to optimize data retrieval speeds for analytic purposes in a data warehouse. While relational and ER models emphasize efficient storage, dimensional models increase redundancy in order to make it easier to locate information for reporting and retrieval. This modeling is typically used across OLAP systems.

Two popular dimensional data models are the star schema and the snowflake schema. In the star schema, data is organized into facts (measurable items) and dimensions (reference information), with each fact surrounded by its associated dimensions in a star-like pattern. The snowflake schema resembles the star schema but includes additional layers of associated dimensions, making the branching pattern more complex.
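
Here is a minimal star schema in SQLite (via Python's `sqlite3`), with one fact table and two dimensions. The table names, columns and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimensions: descriptive reference data.
    CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    -- Fact: one row per sale, holding measures plus a key for each dimension.
    CREATE TABLE fact_sales (
        date_key    INTEGER REFERENCES dim_date(date_key),
        product_key INTEGER REFERENCES dim_product(product_key),
        quantity    INTEGER,
        revenue     REAL
    );
    INSERT INTO dim_date VALUES
        (20240105, '2024-01-05', '2024-01'),
        (20240210, '2024-02-10', '2024-02');
    INSERT INTO dim_product VALUES (1, 'Keyboard', 'Peripherals'), (2, 'Monitor', 'Displays');
    INSERT INTO fact_sales VALUES
        (20240105, 1, 2, 80.0),
        (20240105, 2, 1, 200.0),
        (20240210, 1, 1, 40.0);
    """
)

# Typical star join: group by dimension attributes, aggregate fact measures.
rows = conn.execute(
    """
    SELECT d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_date AS d    ON d.date_key = f.date_key
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
    """
).fetchall()
print(rows)
# [('2024-01', 'Displays', 200.0), ('2024-01', 'Peripherals', 80.0), ('2024-02', 'Peripherals', 40.0)]
```

In a snowflake schema, `category` would typically move out of `dim_product` into its own table referenced by key, adding one more join to the query.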

Data modeling makes it easier for developers, data architects, business analysts, and other stakeholders to view and understand relationships among the data in a database or data warehouse. In addition, it can:

- Reduce errors in software and database development.

- Increase consistency in documentation and system design across the enterprise.

- Improve application and database performance.

- Ease data mapping throughout the organization.

- Improve communication between developers and business intelligence teams.

- Ease and speed the process of database design at the conceptual, logical and physical levels.

Data modeling tools

Numerous commercial and open source computer-aided software engineering (CASE) solutions are widely used today, including multiple data modeling, diagramming and visualization tools. Here are several examples:

- erwin Data Modeler is a data modeling tool based on the Integration DEFinition for information modeling (IDEF1X) data modeling language that now supports other notation methodologies, including a dimensional approach.

- Enterprise Architect is a visual modeling and design tool that supports the modeling of enterprise information systems and architectures as well as software applications and databases. It’s based on object-oriented languages and standards.

- ER/Studio is database design software that’s compatible with several of today’s most popular database management systems. It supports both relational and dimensional data modeling.

- Free data modeling tools include open source solutions such as Open ModelSphere.

Data Modeling Case Studies

Data modeling in databases: exploring case studies.

Database design plays a crucial role in the success of any software application. It involves the process of data modeling, which aims to create a logical representation of the data and its relationships within a database. In this tutorial, we will dive into the world of data modeling, exploring various concepts and techniques through real-life case studies.

What is Data Modeling?

Data modeling is the process of designing a database schema that accurately captures the organization's data requirements. It involves identifying entities, attributes, and relationships to create a structured and organized representation of the system.

Entities and Attributes

Entities represent real-world objects such as customers, products, or orders. Attributes, on the other hand, describe the characteristics of these entities. For instance, a customer entity may have attributes like name, email, and address.

Relationships

Relationships define the associations between entities. They help establish connectivity and dependencies between different objects in the database. Relationships can be one-to-one, one-to-many, or many-to-many, depending on the nature of the data.

Advantages of Data Modeling

Proper data modeling offers numerous advantages to software developers, including:

- Improved Data Integrity: By structuring data and defining relationships, data modeling ensures integrity and consistency within the database.

- Efficient Querying: Well-designed databases optimize query execution, resulting in faster and more efficient retrieval of information.

- Scalability: Data modeling aids in scaling the database as the application grows, accommodating increased data volume and complexity.

- Easier Maintenance: A well-defined data model simplifies maintenance tasks such as updates, modifications, and data migration.

- Collaboration: Data modeling provides a common platform for collaboration between developers, designers, and stakeholders, enhancing understanding and communication.

Common Data Modeling Techniques

Let's explore some commonly used data modeling techniques:

Entity-Relationship Diagrams (ERDs)

ERDs visually represent entities, attributes, and relationships using symbols like rectangles for entities, diamonds for relationships, and ellipses for attributes. They provide a quick overview of the database structure and its components.

Relational Model

The relational model represents data using tables, where each table consists of rows (tuples) and columns (attributes). Primary and foreign keys establish relationships between different tables, ensuring referential integrity.
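
The sketch below shows referential integrity doing its job in SQLite: an order that points at a customer who does not exist is rejected. The schema is a hypothetical example. Note that SQLite only enforces foreign keys when `PRAGMA foreign_keys` is switched on for the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enable FK enforcement for this connection
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, "
    "customer_id INTEGER NOT NULL REFERENCES customers(customer_id))"
)
conn.execute("INSERT INTO customers VALUES (1, 'ana@example.com')")
conn.execute("INSERT INTO orders VALUES (10, 1)")  # accepted: customer 1 exists


def try_insert_order(order_id: int, customer_id: int) -> bool:
    """Return True if the row was accepted, False if a constraint rejected it."""
    try:
        conn.execute("INSERT INTO orders VALUES (?, ?)", (order_id, customer_id))
        return True
    except sqlite3.IntegrityError:
        return False


print(try_insert_order(11, 1))   # True
print(try_insert_order(12, 99))  # False: there is no customer 99
```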

Normalization

Normalization is the process of organizing data to eliminate redundancy and anomalous dependencies. It involves breaking down large tables into smaller, more manageable ones while preserving data integrity.

Real-life Data Modeling Case Studies

Let's dive into real-life case studies to understand how data modeling is applied in practice.

Case Study 1: Social Media Platform

In a social media platform, we would typically have entities like users, posts, comments, and likes. Relationships can be established between users and their posts, between posts and comments, and between users and their followers.
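
One possible schema for this case study, sketched in SQLite through Python's `sqlite3`. Table and column names are assumptions; the interesting parts are the one-to-many links (user to posts, post to comments), the `likes` associative table, and `follows`, a many-to-many relationship from the `users` table to itself.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(
    """
    CREATE TABLE users (user_id INTEGER PRIMARY KEY, handle TEXT UNIQUE NOT NULL);
    CREATE TABLE posts (                     -- one user, many posts
        post_id   INTEGER PRIMARY KEY,
        author_id INTEGER NOT NULL REFERENCES users(user_id),
        body      TEXT NOT NULL
    );
    CREATE TABLE comments (                  -- one post, many comments
        comment_id INTEGER PRIMARY KEY,
        post_id    INTEGER NOT NULL REFERENCES posts(post_id),
        author_id  INTEGER NOT NULL REFERENCES users(user_id),
        body       TEXT NOT NULL
    );
    CREATE TABLE likes (                     -- many-to-many: users and posts
        user_id INTEGER REFERENCES users(user_id),
        post_id INTEGER REFERENCES posts(post_id),
        PRIMARY KEY (user_id, post_id)       -- at most one like per pair
    );
    CREATE TABLE follows (                   -- many-to-many: users and users
        follower_id INTEGER REFERENCES users(user_id),
        followee_id INTEGER REFERENCES users(user_id),
        PRIMARY KEY (follower_id, followee_id),
        CHECK (follower_id <> followee_id)   -- no following yourself
    );
    INSERT INTO users VALUES (1, 'ana'), (2, 'li'), (3, 'sam');
    INSERT INTO posts VALUES (100, 1, 'Hello');
    INSERT INTO comments VALUES (500, 100, 2, 'Welcome!');
    INSERT INTO likes VALUES (2, 100), (3, 100);
    INSERT INTO follows VALUES (2, 1), (3, 1), (1, 2);
    """
)

likes_per_post = conn.execute(
    "SELECT p.post_id, COUNT(l.user_id) FROM posts AS p "
    "LEFT JOIN likes AS l ON l.post_id = p.post_id GROUP BY p.post_id"
).fetchall()
followers_of_ana = conn.execute("SELECT COUNT(*) FROM follows WHERE followee_id = 1").fetchone()[0]
print(likes_per_post, followers_of_ana)  # [(100, 2)] 2
```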

Case Study 2: E-commerce Platform

In an e-commerce platform, entities would include customers, products, orders, and payments. Relationships can be defined between customers and their orders, products and orders, and orders and payments.

Data modeling is an essential aspect of database design, aiding in creating efficient, scalable, and maintainable databases. By understanding the concepts and techniques of data modeling, developers can ensure the integrity and performance of their applications. Remember to analyze real-life case studies and adapt the learned principles to your specific scenarios. Happy data modeling!

Data-Driven Modeling: Concept, Techniques, Challenges and a Case Study

A Comprehensive Guide to Use Case Modeling

- Posted on September 12, 2023

- Under UML, Use Case Analysis

What is Use Case Modeling?

This is a technique used in software development and systems engineering to describe the functional requirements of a system. It focuses on understanding and documenting how a system is supposed to work from the perspective of the end users. In essence, it helps answer the question: “What should the system do to meet the needs and goals of its users?”

Key Concepts of Use Case Modeling

Functional Requirements: Functional requirements are the features, actions, and behaviors a system must have to fulfill its intended purpose. Use case modeling is primarily concerned with defining and capturing these requirements in a structured manner.

End User’s Perspective: Use case modeling starts by looking at the system from the viewpoint of the people or entities (referred to as “actors”) who will interact with the system. It’s essential to understand how these actors will use the system to achieve their objectives or perform their tasks.

Interactions: Use case modeling emphasizes capturing the interactions between these end users (actors) and the system. It’s not just about what the system does in isolation; it’s about how it responds to user actions or requests.

The Basics of Use Cases:

- A use case is a description of how a system interacts with one or more external entities, called actors, to achieve a specific goal.

- A use case can be written in textual or diagrammatic form, depending on the level of detail and complexity required.

- A use case should capture the essential and relevant aspects of the interaction, such as the preconditions, postconditions, main flow, alternative flows, and exceptions.

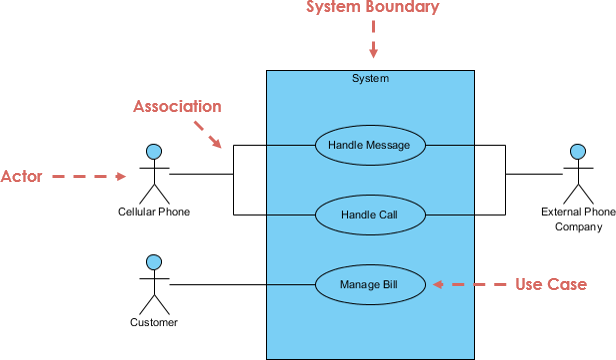

What is a Use Case Diagram?

A use case diagram is a graphical representation used in use case modeling to visualize and communicate these interactions and relationships. In a use case diagram, you’ll typically see actors represented as stick figures, and the use cases (specific functionalities or features) as ovals or rectangles. Lines and arrows connect the actors to the use cases, showing how they interact.

- Actors: These are the entities or users outside the system who interact with it. They can be people, other systems, or even external hardware devices. Each actor has specific roles or responsibilities within the system.

- Use Cases: Use cases represent specific functionalities or processes that the system can perform to meet the needs of the actors. Each use case typically has a name and a description, which helps in understanding what it accomplishes.

- Relationships: The lines and arrows connecting actors and use cases in the diagram depict how the actors interact with the system through these use cases. Different types of relationships, such as associations, extend relationships, and include relationships, can be used to specify the nature of these interactions.

How to Perform Use Case Modeling?

- To model a system with use cases, first identify the actors and the use cases involved. An actor is an external entity that plays a role in the interaction with the system. An actor can be a person, another system, or a time event.

- A use case is a set of scenarios that describe how the system and the actor collaborate to achieve a common goal. A scenario is a sequence of steps that describe what happens in a specific situation.

Actors in Use Case Modeling:

- Actors are represented by stick figures in a use case diagram. Actors can have generalization relationships, which indicate that one actor inherits the characteristics and behaviors of another actor. For example, Undergraduate Student and Graduate Student actors can both specialize a general Student actor, inheriting its associations with use cases.

- Actors can also have association relationships, which indicate that an actor is involved in a use case. For example, an Instructor actor can be associated with a Grade Assignment use case.
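Generalization means a specialized actor takes part in every use case associated with its parent, plus any associated with it directly. The sketch below assumes a simple dictionary-based model; the associations (for example, that only graduate students request materials from other libraries) are hypothetical and chosen only to show how inheritance works.

```python
# Hypothetical generalization hierarchy: child actor -> parent actor.
PARENT = {
    "Undergraduate Student": "Student",
    "Graduate Student": "Student",
}

# Associations drawn directly on the diagram: actor -> use cases.
ASSOCIATIONS = {
    "Student": {"Search for Books", "Reserve Books"},
    "Graduate Student": {"Request Materials from Other Libraries"},
    "Instructor": {"Grade Assignment"},
}


def effective_use_cases(actor: str) -> set[str]:
    """Collect an actor's own use cases plus those inherited from its ancestors."""
    result: set[str] = set()
    current = actor
    while current is not None:  # walk up the generalization chain
        result |= ASSOCIATIONS.get(current, set())
        current = PARENT.get(current)  # None once the root actor is reached
    return result


print(sorted(effective_use_cases("Graduate Student")))
# ['Request Materials from Other Libraries', 'Reserve Books', 'Search for Books']
print(sorted(effective_use_cases("Undergraduate Student")))
# ['Reserve Books', 'Search for Books']
```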

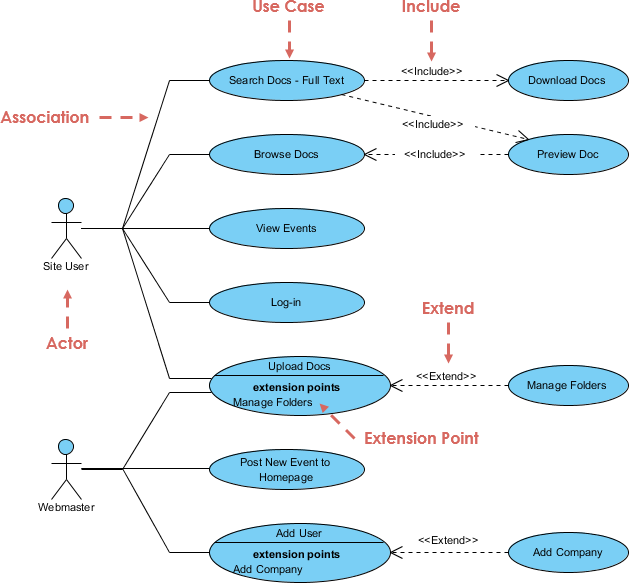

Relationships Between Use Cases:

- An include relationship is a dependency between two use cases, where one use case (the base) incorporates the behavior of another use case (the inclusion) as part of its normal execution.

- An include relationship is represented by a dashed arrow with the stereotype «include» from the base to the inclusion.

- An include relationship can be used to reuse common functionality, simplify complex use cases, or abstract low-level details.

- An extend relationship is a dependency between two use cases, where one use case (the extension) adds some optional or exceptional behavior to another use case (the base) under certain conditions.

- An extend relationship is represented by a dashed arrow with the stereotype «extend» from the extension to the base.

- An extend relationship can have an extension point, which is a location in the base use case where the extension can be inserted.

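- An extension point is identified by name in the base use case, and the extend relationship can carry a condition that determines whether the extension applies when that point is reached.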
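The difference between the two relationships can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not UML tooling: the `include:` and `point:` step markers, the `Extend` record, and the use case names `Authenticate User` and `Pay Late Fee` are hypothetical. Included behavior always runs where the base references it; an extension runs only at its named extension point and only when its condition holds. The extend relationships are kept outside the base flow, mirroring the arrow that points from the extension to the base.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Extend:
    """An extend relationship: the arrow points from `extension` to `base`."""
    extension: str                     # use case adding optional behavior
    base: str                          # use case being extended
    point: str                         # named extension point in the base
    condition: Callable[[dict], bool]  # guard deciding whether it applies


# Hypothetical main flow of a "Borrow Book" base use case.
# "include:X" runs X unconditionally; "point:Y" declares extension point Y.
BORROW_BOOK = [
    "include:Authenticate User",
    "Scan book",
    "point:Check Account Status",
    "Record loan and due date",
]

# Extend relationships live outside the base flow, so the base stays unaware of them.
EXTENDS = [
    Extend("Pay Late Fee", "Borrow Book", "Check Account Status",
           lambda ctx: ctx.get("outstanding_fees", 0) > 0),
]


def run(base: str, flow: list[str], context: dict) -> list[str]:
    """Return the behavior executed, in order, for one scenario of `base`."""
    trace = []
    for step in flow:
        kind, _, name = step.partition(":")
        if kind == "include":
            trace.append(name)  # included behavior is always executed
        elif kind == "point":
            # Only extensions targeting this base and point, with a true guard, run.
            trace += [e.extension for e in EXTENDS
                      if e.base == base and e.point == name and e.condition(context)]
        else:
            trace.append(step)  # ordinary step of the base flow
    return trace


print(run("Borrow Book", BORROW_BOOK, {"outstanding_fees": 0}))
# ['Authenticate User', 'Scan book', 'Record loan and due date']
print(run("Borrow Book", BORROW_BOOK, {"outstanding_fees": 2.5}))
# ['Authenticate User', 'Scan book', 'Pay Late Fee', 'Record loan and due date']
```

Because the base flow never names `Pay Late Fee`, further extensions can be attached without editing the base use case, which is why extend suits optional behavior better than include.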

Creating Effective Use Cases:

- A system boundary is a box that encloses the use cases and shows the scope of the system.

- A system boundary helps to distinguish what is inside the system (the use cases) and what is outside the system (the actors).

- A system boundary should be clearly labeled with the name of the system and its version.

- A use case goal is a statement that summarizes what the use case accomplishes for the actor.

- A use case goal should be specific, measurable, achievable, relevant, and testable.

- A use case scenario is a sequence of steps that describes how the actor and the system interact to achieve the goal.

- A use case scenario should be complete, consistent, realistic, and traceable.

- A use case description is a textual document that provides more details about the use case, such as the preconditions, postconditions, main flow, alternative flows, and exceptions.

- A use case description should be clear and concise, using simple and precise language, avoiding jargon and ambiguity, and following a consistent format.

- A use case description should also be coherent and comprehensive, covering all possible scenarios, outcomes, and variations, and addressing all relevant requirements.

- A use case template is a standardized format that helps to organize and present the use case information in a consistent and structured way.

- A use case template can include various sections, such as the use case name, ID, goal, actors, priority, assumptions, preconditions, postconditions, main flow, alternative flows, exceptions, etc.

- Use case documentation is a collection of use cases that describes the functionality of the system from different perspectives.

- Use case documentation can be used for various purposes, such as communication, validation, verification, testing, maintenance, etc.
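A use case template maps naturally onto a record type. The sketch below assumes Python dataclasses and a hypothetical `UseCaseSpec` whose fields follow the template sections listed above; which sections count as required is an assumption, and the book-borrowing values are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class UseCaseSpec:
    """Hypothetical record mirroring common use case template sections."""
    uc_id: str
    name: str
    goal: str
    actors: list[str]
    priority: str = "Medium"
    assumptions: list[str] = field(default_factory=list)
    preconditions: list[str] = field(default_factory=list)
    postconditions: list[str] = field(default_factory=list)
    main_flow: list[str] = field(default_factory=list)
    alternative_flows: dict[str, list[str]] = field(default_factory=dict)
    exceptions: dict[str, str] = field(default_factory=dict)

    def missing_sections(self) -> list[str]:
        """List required sections that are still empty (a simple completeness check)."""
        required = {
            "goal": self.goal,
            "actors": self.actors,
            "preconditions": self.preconditions,
            "postconditions": self.postconditions,
            "main_flow": self.main_flow,
        }
        return [section for section, value in required.items() if not value]

    def render(self) -> str:
        """Render the specification as plain text with a numbered main flow."""
        lines = [f"{self.uc_id}: {self.name}", f"Goal: {self.goal}",
                 f"Actors: {', '.join(self.actors)}", f"Priority: {self.priority}"]
        lines += [f"Precondition: {p}" for p in self.preconditions]
        lines += [f"{i}. {step}" for i, step in enumerate(self.main_flow, start=1)]
        lines += [f"Postcondition: {p}" for p in self.postconditions]
        return "\n".join(lines)


borrow = UseCaseSpec(
    uc_id="UC-01",
    name="Borrow Book",
    goal="Member leaves with a book recorded as on loan",
    actors=["Library Member", "Librarian"],
    preconditions=["Member has a valid library card"],
    main_flow=["Member presents card and book", "Librarian scans both",
               "System records the loan and due date"],
)
print(borrow.missing_sections())  # ['postconditions']
print(borrow.render())
```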

Use Case Modeling Best Practices:

- Identify the key stakeholders and their goals, and involve them in the use case development process

- Use a top-down approach to identify and prioritize the most important use cases

- Use a naming convention that is consistent, meaningful, and descriptive for the use cases and actors

- Use diagrams and textual descriptions to complement each other and provide different levels of detail

- Use relationships such as extend, include, and generalization to show dependencies and commonalities among use cases

- Review and validate the use cases with the stakeholders and ensure that they are aligned with the system requirements
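A consistent naming convention is easy to check mechanically. This sketch assumes a hypothetical house rule (a name starts with an approved verb and contains at least one more word) and a hypothetical verb list; it also flags names that differ only in letter case. A lexical check cannot judge whether a name is meaningful, so it complements stakeholder review.

```python
# Hypothetical house rule: a use case name starts with an approved verb and
# contains at least one more word, e.g. "Reserve Books" or "Search for Books".
APPROVED_VERBS = {
    "Accept", "Add", "Analyze", "Calculate", "Check", "Create", "Delete",
    "Generate", "Issue", "Manage", "Notify", "Place", "Process", "Remove",
    "Renew", "Request", "Reserve", "Search", "Track", "Update",
}


def naming_problems(names: list[str]) -> list[tuple[str, str]]:
    """Return (name, reason) pairs for names that break the convention."""
    problems = []
    seen = set()
    for name in names:
        words = name.split()
        key = name.casefold()  # names differing only in case count as duplicates
        if key in seen:
            problems.append((name, "duplicate"))
        elif len(words) < 2 or words[0] not in APPROVED_VERBS:
            problems.append((name, "not verb-noun"))
        seen.add(key)
    return problems


candidates = [
    "Create User Account", "Search for Books", "Inventory Reconciliation",
    "Data Security and Privacy", "Reserve Books", "reserve books",
]
print(naming_problems(candidates))
# [('Inventory Reconciliation', 'not verb-noun'),
#  ('Data Security and Privacy', 'not verb-noun'), ('reserve books', 'duplicate')]
```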

Use Case Modeling Using a Use Case Template

Problem Description: University Library System

The University Library System is facing a range of operational challenges that impact its efficiency and the quality of service it provides to students, faculty, and staff. These challenges include:

- Manual Borrowing and Return Processes : The library relies on paper-based processes for book borrowing, return, and tracking of due dates. This manual approach is prone to errors, leading to discrepancies in record-keeping and occasional disputes between library staff and users.

- Inventory Management : The current system for managing the library’s extensive collection of books and materials is outdated. The lack of an efficient inventory management system makes it difficult to locate specific items, leading to frustration among library patrons and unnecessary delays.

- Late Fee Tracking : Tracking and collecting late fees for overdue books are challenging tasks. The library staff lacks an automated system to monitor due dates and assess fines accurately. This results in a loss of revenue and inconvenience for users.

- User Account Management : User accounts, including library card issuance and management, rely on manual processes. This leads to delays in providing access to library resources for new students and difficulties in updating user information for existing members.

- Limited Accessibility : The current library system lacks online access for users to search for books, place holds, or renew checked-out items remotely. This limitation hinders the convenience and accessibility that modern students and faculty expect.

- Inefficient Resource Allocation : The library staff often face challenges in optimizing the allocation of resources, such as books, journals, and study spaces. The lack of real-time data and analytics makes it difficult to make informed decisions about resource distribution.

- Communication Gaps : There is a communication gap between library staff and users. Users are often unaware of library policies, new arrivals, or changes in operating hours, leading to misunderstandings and frustration.

- Security Concerns : The library system lacks adequate security measures to protect user data and prevent theft or unauthorized access to library resources.

These challenges collectively contribute to a suboptimal library experience for both library staff and users. Addressing these issues and modernizing the University Library System is essential to provide efficient services, enhance user satisfaction, and improve the overall academic experience within the university community.

Here’s a list of candidate use cases for the University Library System based on the problem description provided:

- Create User Account

- Update User Information

- Delete User Account

- Issue Library Cards

- Add New Books to Inventory

- Update Book Information

- Remove Books from Inventory

- Search for Books

- Check Book Availability

- Reserve Books

- Renew Borrowed Books

- Process Book Returns

- Catalog and Categorize Books

- Manage Book Copies

- Track Book Location

- Inventory Reconciliation

- Calculate Late Fees

- Notify Users of Overdue Books

- Accept Late Fee Payments

- Search for Books Online

- Place Holds on Books

- Request Book Delivery

- Renew Books Online

- Reserve Study Spaces

- Allocate Study Materials (e.g., Reserve Books)

- Manage Study Space Reservations

- Notify Users of Library Policies

- Announce New Arrivals

- Provide Operating Hours Information

- User Authentication and Authorization

- Data Security and Privacy

- Generate Usage Reports

- Analyze Borrowing Trends

- Predict Demand for Specific Materials

- Request Materials from Other Libraries

- Manage Interlibrary Loan Requests

- Staff Authentication and Authorization

- Training and Onboarding

- Staff Scheduling

- Provide Services for Users with Special Needs (e.g., Braille Materials)

- Assistive Technology Support

- Reserve Audio/Visual Equipment

- Check Out Equipment

- Suggest Books and Resources Based on User Preferences

- Organize and Promote Library Workshops and Events

These candidate use cases cover a wide range of functionalities that address the issues identified in the problem description. They serve as a foundation for further analysis, design, and development of the University Library System to enhance its efficiency and user satisfaction. The specific use cases to prioritize and implement will depend on the system’s requirements and stakeholders’ needs.
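One way to confirm that the candidates address the problem description is a simple traceability check. The problem-to-use-case mapping below is an illustrative assumption for this sketch, and `CANDIDATES` is only an excerpt of the list above. The report lists problems with no supporting use case and candidates that trace to no stated problem; the latter are not necessarily wrong, but they are worth questioning during prioritization.

```python
# Illustrative mapping from each problem area in the description to candidate
# use cases that address it; the assignments are an assumption for this sketch.
TRACE = {
    "Manual Borrowing and Return Processes": ["Process Book Returns", "Renew Borrowed Books"],
    "Inventory Management": ["Track Book Location", "Inventory Reconciliation"],
    "Late Fee Tracking": ["Calculate Late Fees", "Notify Users of Overdue Books",
                          "Accept Late Fee Payments"],
    "User Account Management": ["Create User Account", "Issue Library Cards"],
    "Limited Accessibility": ["Search for Books Online", "Place Holds on Books",
                              "Renew Books Online"],
    "Inefficient Resource Allocation": ["Generate Usage Reports",
                                        "Predict Demand for Specific Materials"],
    "Communication Gaps": ["Notify Users of Library Policies", "Announce New Arrivals"],
    "Security Concerns": ["User Authentication and Authorization",
                          "Data Security and Privacy"],
}

# An excerpt of the candidate list, kept short for the example.
CANDIDATES = [
    "Create User Account", "Calculate Late Fees", "Search for Books Online",
    "Data Security and Privacy", "Staff Scheduling",
    "Organize and Promote Library Workshops and Events",
]


def coverage_report(trace: dict[str, list[str]], candidates: list[str]):
    """Return (problems with no use case, candidates tracing to no problem)."""
    uncovered = [problem for problem, use_cases in trace.items() if not use_cases]
    traced = {uc for use_cases in trace.values() for uc in use_cases}
    untraced = [uc for uc in candidates if uc not in traced]
    return uncovered, untraced


uncovered, untraced = coverage_report(TRACE, CANDIDATES)
print(uncovered)  # []
print(untraced)   # ['Staff Scheduling', 'Organize and Promote Library Workshops and Events']
```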

Use Case Template:

Here’s the use case template and example for borrowing a book from a university library in tabular format:

Example Use Case: Borrowing a Book from University Library

The tables above present the use case template and example in a structured, organized way, making the key elements of the use case easier to read and understand.

Granularity of Use Cases

Use Case Granularity Definition : Use case granularity refers to the degree of detail and organization within use case specifications. It essentially describes how finely you break down the functionality of a system when documenting use cases. In simpler terms, it’s about how much or how little you decompose a use case into smaller parts or steps.

Importance of Use Case Granularity :

- Communication Enhancement : Use case granularity plays a crucial role in improving communication between the stakeholders in a software project, such as business analysts, developers, testers, and end users. When use cases are well defined and scoped at an appropriate level of detail, everyone can better understand the system’s functionality and requirements.

- Project Planning : The level of granularity in use cases impacts project planning. Smaller, more finely grained use cases can make it easier to estimate the time and effort required for development tasks. This aids project managers in creating more accurate project schedules and resource allocation.

- Clarity and Precision : Achieving the right level of granularity ensures that use cases are clear and precise. If use cases are too high-level and abstract, they might lack the necessary detail for effective development. Conversely, overly detailed use cases can become unwieldy and difficult to manage.

Example : Let’s illustrate use case granularity with an example related to a “User Registration” functionality in an e-commerce application:

- Coarse Granularity : A single use case titled “User Registration” covers the entire registration process from start to finish. It includes every step, such as entering personal information, creating a password, confirming the password, and submitting the registration form.

- Medium Granularity : Use cases are divided into smaller, more focused parts. For instance, “Enter Personal Information,” “Create Password,” and “Submit Registration” could be separate use cases. Each of these focuses on a specific aspect of user registration.

- Fine Granularity : The finest level of granularity breaks down actions within a single step. For example, “Enter Personal Information” could be further decomposed into “Enter First Name,” “Enter Last Name,” “Enter Email Address,” and so on.

The appropriate level of granularity depends on project requirements and the specific needs of stakeholders. Finding the right balance is essential to ensure that use cases are understandable, manageable, and effective in conveying system functionality to all involved parties.
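The three levels in the registration example can be read as cuts through a single decomposition tree. The sketch below assumes the tree is stored as nested Python dictionaries (a hypothetical representation) and lists the units obtained at each depth: depth 0 yields the single all-in-one use case, depth 1 the medium split, and depth 2 the finest breakdown.

```python
# Hypothetical decomposition of the registration example as nested dicts:
# each key is a piece of functionality, each value holds its sub-parts.
REGISTRATION = {
    "User Registration": {
        "Enter Personal Information": {
            "Enter First Name": {},
            "Enter Last Name": {},
            "Enter Email Address": {},
        },
        "Create Password": {},
        "Submit Registration": {},
    }
}


def cut(tree: dict, depth: int) -> list[str]:
    """List the units obtained by decomposing the tree `depth` levels deep.

    A branch that cannot be decomposed any further is kept as it is.
    """
    units = []
    for name, children in tree.items():
        if depth == 0 or not children:
            units.append(name)
        else:
            units.extend(cut(children, depth - 1))
    return units


for depth, label in enumerate(["coarse", "medium", "fine"]):
    print(label, cut(REGISTRATION, depth))
```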

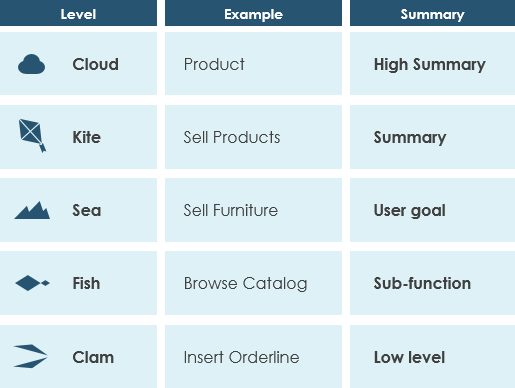

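In his book ‘Writing Effective Use Cases,’ Alastair Cockburn offers a simple way to visualize the different levels of goals, using the sea as an analogy. Summary goals sit above sea level (the cloud and kite levels), user goals sit at sea level, and subfunctions sit below the surface (the fish level, with the clam as the lowest level).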

References:

- What is Use Case Diagram? (visual-paradigm.com)

- What is Use Case Specification?

