What Is Problem Solving? How Software Engineers Approach Complex Challenges

From debugging an existing system to designing an entirely new software application, a day in the life of a software engineer is filled with various challenges and complexities. The one skill that glues these disparate tasks together and makes them manageable? Problem solving.

Throughout this blog post, we’ll explore why problem-solving skills are so critical for software engineers, delve into the techniques they use to address complex challenges, and discuss how hiring managers can identify these skills during the hiring process.

What Is Problem Solving?

But what exactly is problem solving in the context of software engineering? How does it work, and why is it so important?

Problem solving, in the simplest terms, is the process of identifying a problem, analyzing it, and finding the most effective solution to overcome it. For software engineers, this process is deeply embedded in their daily workflow. It could be something as simple as figuring out why a piece of code isn’t working as expected, or something as complex as designing the architecture for a new software system.

In a world where technology is evolving at a blistering pace, the complexity and volume of problems that software engineers face are also growing. As such, the ability to tackle these issues head-on and find innovative solutions is not only a handy skill — it’s a necessity.

The Importance of Problem-Solving Skills for Software Engineers

Problem-solving isn’t just another ability that software engineers pull out of their toolkits when they encounter a bug or a system failure. It’s a constant, ongoing process that’s intrinsic to every aspect of their work. Let’s break down why this skill is so critical.

Driving Development Forward

Without problem solving, software development would hit a standstill. Every new feature, every optimization, and every bug fix is a problem that needs solving. Whether it’s a performance issue that needs diagnosing or a user interface that needs improving, the capacity to tackle and solve these problems is what keeps the wheels of development turning.

It’s estimated that 60% of software development lifecycle costs are related to maintenance tasks, including debugging and problem solving. This highlights how pivotal this skill is to the everyday functioning and advancement of software systems.

Innovation and Optimization

The importance of problem solving isn’t confined to reactive scenarios; it also plays a major role in proactive, innovative initiatives. Software engineers often need to think outside the box to come up with creative solutions, whether it’s optimizing an algorithm to run faster or designing a new feature to meet customer needs. These are all forms of problem solving.

Consider the development of the modern smartphone. It wasn’t born out of a pre-existing issue but was a solution to a problem people didn’t realize they had — a device that combined communication, entertainment, and productivity into one handheld tool.

Increasing Efficiency and Productivity

Good problem-solving skills can save a lot of time and resources. Effective problem-solvers are adept at dissecting an issue to understand its root cause, thus reducing the time spent on trial and error. This efficiency means projects move faster, releases happen sooner, and businesses stay ahead of their competition.

Improving Software Quality

Problem solving also plays a significant role in enhancing the quality of the end product. By tackling the root causes of bugs and system failures, software engineers can deliver reliable, high-performing software. This is critical because, according to the Consortium for Information and Software Quality, poor quality software in the U.S. in 2022 cost at least $2.41 trillion in operational issues, wasted developer time, and other related problems.

Problem-Solving Techniques in Software Engineering

So how do software engineers go about tackling these complex challenges? Let’s explore some of the key problem-solving techniques, theories, and processes they commonly use.

Decomposition

Breaking down a problem into smaller, manageable parts is one of the first steps in the problem-solving process. It’s like dealing with a complicated puzzle. You don’t try to solve it all at once. Instead, you separate the pieces, group them based on similarities, and then start working on the smaller sets. This method allows software engineers to handle complex issues without being overwhelmed and makes it easier to identify where things might be going wrong.
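To make the idea concrete, here is a minimal sketch of decomposition in Python. The task (finding the top customer from raw "customer,amount" lines), the input format, and every function name are assumptions chosen for illustration; a real system would likely split the work differently.

```python
def parse_line(line: str) -> tuple[str, float]:
    """Split one 'customer,amount' line into its parts."""
    customer, amount = line.strip().split(",")
    return customer, float(amount)


def total_per_customer(records: list[tuple[str, float]]) -> dict[str, float]:
    """Sum the amounts for each customer."""
    totals: dict[str, float] = {}
    for customer, amount in records:
        totals[customer] = totals.get(customer, 0.0) + amount
    return totals


def top_customer(totals: dict[str, float]) -> str:
    """Return the customer with the largest total."""
    return max(totals, key=totals.get)


def report(lines: list[str]) -> str:
    """Compose the small steps into the full solution."""
    records = [parse_line(line) for line in lines]
    return top_customer(total_per_customer(records))
```

Each small function can be checked on its own, so when the final answer looks wrong it is easier to see which piece is responsible.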

Abstraction

In the realm of software engineering, abstraction means focusing on the necessary information only and ignoring irrelevant details. It is a way of simplifying complex systems to make them easier to understand and manage. For instance, a software engineer might ignore the details of how a database works to focus on the information it holds and how to retrieve or modify that information.
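The database example above might look something like the following sketch. `UserStore`, `InMemoryUserStore`, and `change_email` are hypothetical names, and the in-memory dictionary simply stands in for whatever storage a real application would use.

```python
from typing import Optional, Protocol


class UserStore(Protocol):
    """What callers need from storage, with no detail about how it works."""

    def get_email(self, user_id: int) -> Optional[str]: ...

    def set_email(self, user_id: int, email: str) -> None: ...


class InMemoryUserStore:
    """One possible backend; a database-backed class could take its place."""

    def __init__(self) -> None:
        self._emails: dict[int, str] = {}

    def get_email(self, user_id: int) -> Optional[str]:
        return self._emails.get(user_id)

    def set_email(self, user_id: int, email: str) -> None:
        self._emails[user_id] = email


def change_email(store: UserStore, user_id: int, new_email: str) -> bool:
    """Business logic written only against the abstraction."""
    if "@" not in new_email:
        return False  # deliberately simplistic validation for the sketch
    store.set_email(user_id, new_email)
    return True
```

Because `change_email` only knows about the two methods it needs, the storage details can change without touching the logic that uses them.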

Algorithmic Thinking

At its core, software engineering is about creating algorithms — step-by-step procedures to solve a problem or accomplish a goal. Algorithmic thinking involves conceiving and expressing these procedures clearly and accurately and viewing every problem through an algorithmic lens. A well-designed algorithm not only solves the problem at hand but also does so efficiently, saving computational resources.
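As a small illustration of how two correct algorithms can differ in efficiency, the sketch below solves the same lookup problem twice. The function names are our own; the second version relies on Python's standard `bisect` module and assumes the input list is already sorted.

```python
from bisect import bisect_left


def linear_search(items: list[int], target: int) -> int:
    """Check every element in turn; up to len(items) comparisons."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return -1


def binary_search(sorted_items: list[int], target: int) -> int:
    """Halve the search range each step; requires sorted input."""
    index = bisect_left(sorted_items, target)
    if index < len(sorted_items) and sorted_items[index] == target:
        return index
    return -1
```

Both functions return the same index, but on a sorted list of a million items the binary version needs roughly twenty comparisons where the linear one may need a million.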

Parallel Thinking

Parallel thinking is a structured process where team members think in the same direction at the same time, allowing for more organized discussion and collaboration. It’s an approach popularized by Edward de Bono with the “Six Thinking Hats” technique, where each “hat” represents a different style of thinking.

In the context of software engineering, parallel thinking can be highly effective for problem solving. For instance, when dealing with a complex issue, the team can use the “White Hat” to focus solely on the data and facts about the problem, then the “Black Hat” to consider potential problems with a proposed solution, and so on. This structured approach can lead to more comprehensive analysis and more effective solutions, and it ensures that everyone’s perspectives are considered.

Debugging

Debugging is the process of identifying and fixing errors in code. It involves carefully reviewing the code, reproducing and analyzing the error, and then making the necessary modifications to rectify the problem. It’s a key part of maintaining and improving software quality.
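The reproduce, analyze, and fix loop described above can be sketched with a deliberately tiny bug. The functions here are hypothetical, and returning `0.0` for an empty list is just one possible fix; the right behavior depends on what callers expect.

```python
def average_buggy(values: list[float]) -> float:
    """Original version: fails on an empty list."""
    return sum(values) / len(values)


def reproduces_bug() -> bool:
    """Step 1: capture the failure in a small, repeatable check."""
    try:
        average_buggy([])
    except ZeroDivisionError:
        return True
    return False


def average_fixed(values: list[float]) -> float:
    """Step 2: handle the root cause (the empty case) explicitly."""
    if not values:
        return 0.0  # assumed convention for this sketch
    return sum(values) / len(values)
```

Once the failure can be triggered on demand, the fix can be verified against the same input instead of relying on a hunch that the problem has gone away.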

Testing and Validation

Testing is an essential part of problem solving in software engineering. Engineers use a variety of tests to verify that their code works as expected and to uncover any potential issues. These range from unit tests that check individual components of the code to integration tests that ensure the pieces work well together. Validation, on the other hand, ensures that the solution not only works but also fulfills the intended requirements and objectives.
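The difference between unit and integration tests can be sketched with Python's built-in `unittest` module. The pricing functions below are hypothetical stand-ins for real application code.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def cart_total(prices: list[float], percent: float) -> float:
    """Sum the cart, then apply the discount to the total."""
    return apply_discount(sum(prices), percent)


class ApplyDiscountUnitTest(unittest.TestCase):
    """Unit tests: one component in isolation."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


class CartIntegrationTest(unittest.TestCase):
    """Integration test: the components working together."""

    def test_cart_total_uses_discount(self):
        self.assertEqual(cart_total([50.0, 30.0, 20.0], 10), 90.0)
```

Saved in a file, these tests can be run with `python -m unittest`. Validation goes one step further and asks whether a 10% cart discount is what the business actually required in the first place.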


Evaluating Problem-Solving Skills

We’ve examined the importance of problem-solving in the work of a software engineer and explored various techniques software engineers employ to approach complex challenges. Now, let’s delve into how hiring teams can identify and evaluate problem-solving skills during the hiring process.

Recognizing Problem-Solving Skills in Candidates

How can you tell if a candidate is a good problem solver? Look for these indicators:

- Previous Experience: A history of dealing with complex, challenging projects is often a good sign. Ask the candidate to discuss a difficult problem they faced in a previous role and how they solved it.

- Problem-Solving Questions: During interviews, pose hypothetical scenarios or present real problems your company has faced. Ask candidates to explain how they would tackle these issues. You’re not just looking for a correct solution but the thought process that led them there.

- Technical Tests: Coding challenges and other technical tests can provide insight into a candidate’s problem-solving abilities. Consider leveraging a platform for assessing these skills in a realistic, job-related context.

Assessing Problem-Solving Skills

Once you’ve identified potential problem solvers, here are a few ways you can assess their skills:

- Solution Effectiveness: Did the candidate solve the problem? How efficient and effective is their solution?

- Approach and Process: Go beyond whether or not they solved the problem and examine how they arrived at their solution. Did they break the problem down into manageable parts? Did they consider different perspectives and possibilities?

- Communication: A good problem solver can explain their thought process clearly. Can the candidate effectively communicate how they arrived at their solution and why they chose it?

- Adaptability: Problem-solving often involves a degree of trial and error. How does the candidate handle roadblocks? Do they adapt their approach based on new information or feedback?

Hiring managers play a crucial role in identifying and fostering problem-solving skills within their teams. By focusing on these abilities during the hiring process, companies can build teams that are more capable, innovative, and resilient.

Key Takeaways

As you can see, problem solving plays a pivotal role in software engineering. Far from being an occasional requirement, it is the lifeblood that drives development forward, catalyzes innovation, and delivers quality software.

By leveraging problem-solving techniques, software engineers employ a powerful suite of strategies to overcome complex challenges. But mastering these techniques isn’t a simple feat. It requires a learning mindset, regular practice, collaboration, reflective thinking, resilience, and a commitment to staying updated with industry trends.

For hiring managers and team leads, recognizing these skills and fostering a culture that values and nurtures problem solving is key. It’s this emphasis on problem solving that can differentiate an average team from a high-performing one and an ordinary product from an industry-leading one.

At the end of the day, software engineering is fundamentally about solving problems — problems that matter to businesses, to users, and to the wider society. And it’s the proficient problem solvers who stand at the forefront of this dynamic field, turning challenges into opportunities, and ideas into reality.

This article was written with the help of AI. Can you tell which parts?


How to assess problem-solving skills

Human beings have been fascinated and motivated by problem-solving for as long as anyone can remember. Let’s start with the classic ancient legend of Oedipus. The Sphinx confronted anyone who dared to enter Thebes by posing a riddle. If the traveler failed to answer the riddle correctly, the result was death. However, the Sphinx would be destroyed once someone finally answered correctly.

Then along came Oedipus. He answered correctly, solving the complex riddle and killing the Sphinx.

Rationality was hardly defined at that time. Today, most people assume that it simply takes raw intelligence to be a great problem solver, but intelligence is not the only crucial element.

Introduction to key problem-solving skills

You’ve surely noticed that many of the skills listed in the problem-solving process are repeated. This is because having these abilities and talents is crucial to the entire course of getting a problem solved. Let’s look at some key problem-solving skills that are essential in the workplace.

Communication, listening, and customer service skills

In all the stages of problem-solving, you need to listen and engage to understand what the problem is and come to a conclusion as to what the solution may be. Another challenge is being able to communicate effectively so that people understand what you’re saying. It further rolls into interpersonal communication and customer service skills, which really are all about listening and responding appropriately.

Data analysis, research, and topic understanding skills

To produce the best solutions, employees must be able to understand the problem thoroughly. This is possible when the workforce studies the topic and the process correctly. In the workplace, this knowledge comes from years of relevant experience.

Dependability, believability, trustworthiness, and follow-through

To make change happen and take the next steps towards problem-solving, the qualities of dependability, trustworthiness, and diligence are a must. For example, if a person is known for breaking their word, being lazy, and committing blunders, that is not someone you’ll depend on when they provide you with a solution, is it?

Leadership, team-building, and decision-making

A true leader can learn and grow from the problems that arise in their jobs and utilize each challenge to hone their leadership skills. Problem-solving is an important skill for leaders who want to eliminate challenges that can otherwise hinder their people’s or their business’ growth. Let’s take a look at some statistics that prove just how important these skills are:

A Harvard Business Review study states that of all the skills that influence a leader’s success, problem-solving ranked third out of 16.

According to a survey by Goremotely.net, only 10% of CEOs are leaders who guide staff by example.

Another study at Harvard Business Review found a direct link between team building as a social activity and employee motivation.


Why is problem solving important in the workplace?

As a business leader, when too much of your time is spent managing escalations, the lack of problem-solving skills may hurt your business. While you may be hiring talented and capable employees and paying them well, it is only when you harness their full potential and translate that into business value that it is considered a successful hire.

The impact of continuing with poor problem-solving skills may show up in your organization as operational inefficiencies that may also manifest in product quality issues, defects, re-work and non-conformance to design specifications. When the product is defective, or the service is not up to the mark, it directly affects your customer’s experience and consequently reflects on the company’s profile.

At times, poor problem-solving skills could lead to missed market opportunities, slow time to market, customer dissatisfaction, regulatory compliance issues, and declining employee morale.

Problem-solving skills are important for individual business leaders as well. Suppose you’re busy responding to frequent incidents that have the same variables. In that case, this prevents you from focusing your time and effort on improving the future success of business outcomes.

Proven methods to assess and improve problem-solving skills

Pre-employment problem-solving skill assessment

Recent research indicates that up to 85% of resumes contain misleading statements. Similarly, interviews are subjective and ultimately serve as poor predictors of job performance.

To provide a reliable and objective means of gathering job-related information on candidates, you must validate and develop pre-employment problem-solving assessments. You can further use the data from pre-employment tests to make informed and defensible hiring decisions.

Depending on the job profile, below are examples of pre-employment problem-solving assessment tests:

Personality tests: The rise of personality testing in the 20th century was an endeavor to maximize employee potential. Personality tests help to identify workplace patterns, relevant characteristics, and traits, and to assess how people may respond to different situations.

Examples of personality tests include the Big Five personality traits test and Mercer | Mettl’s Dark Personality Inventory.

Cognitive ability test: A pre-employment aptitude test assesses individuals’ abilities such as critical thinking, verbal reasoning, numerical ability, problem-solving, decision-making, etc., which are indicators of a person’s intelligence quotient (IQ). The test results provide data about on-the-job performance. It also assesses current and potential employees for different job levels.

The Criteria Cognitive Aptitude Test, McQuaig Mental Agility Test, and Hogan Business Reasoning Inventory are commonly used cognitive ability assessment tests.

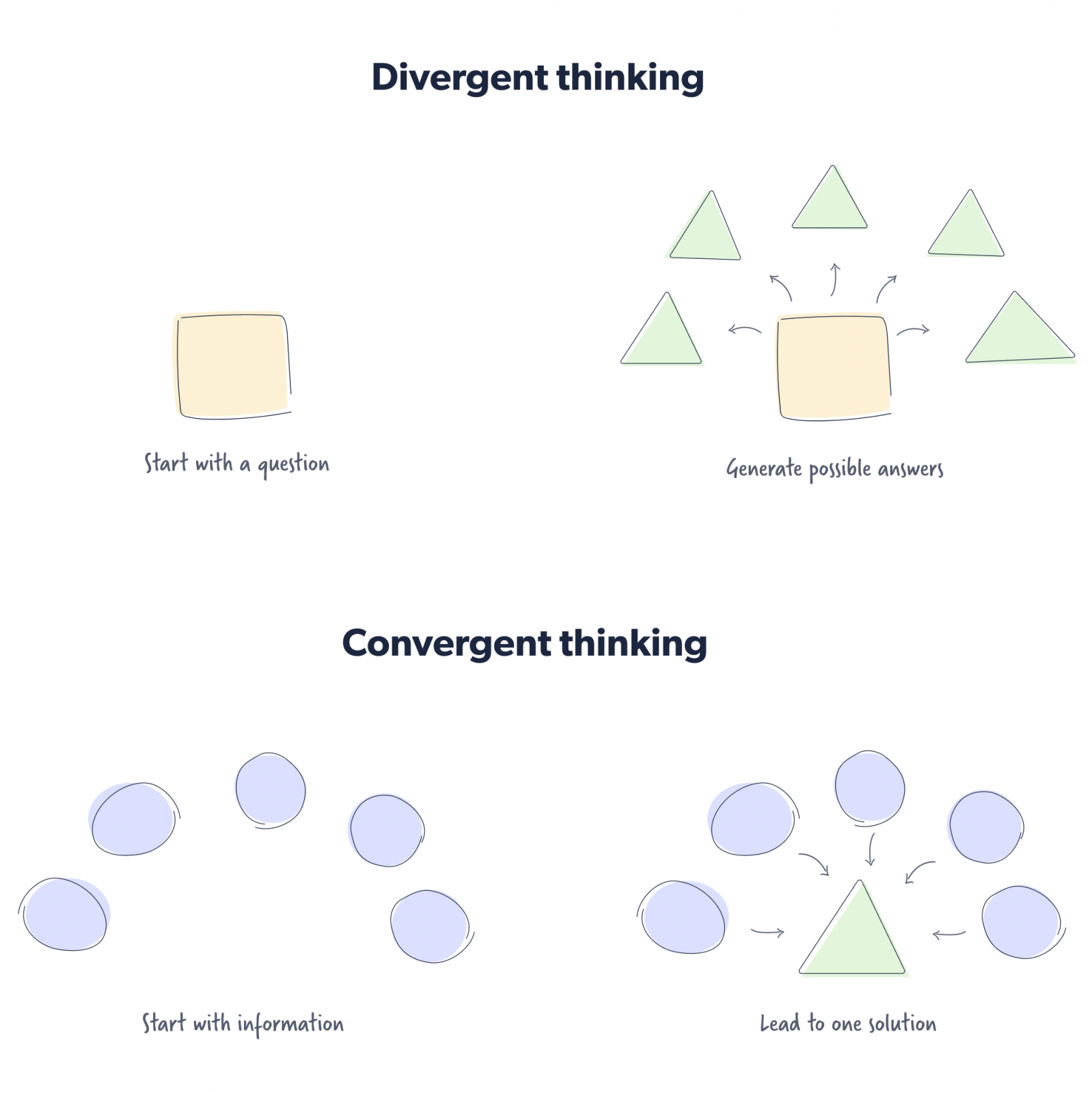

Convergent and divergent thinking methods

American psychologist J. P. Guilford coined the terms “convergent thinking” and “divergent thinking” in the 1950s.

Convergent thinking involves starting with pieces of information and then converging around a solution. An example would be determining the correct answer to a multiple-choice question.

The nature of the question does not demand creativity but rather inherently encourages a person to consider the veracity of each answer provided before selecting the single correct one.

Divergent thinking, on the other hand, starts with a prompt that encourages people to think critically, diverging towards distinct answers. An example of divergent thinking would be asking open-ended questions.

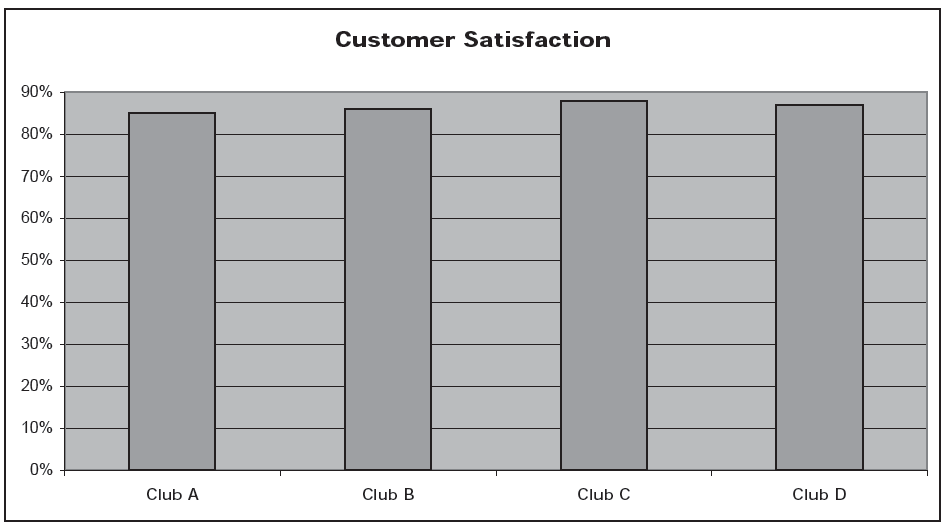

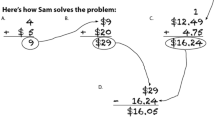

Here’s an example of what convergent thinking and a divergent problem-solving model would look like.

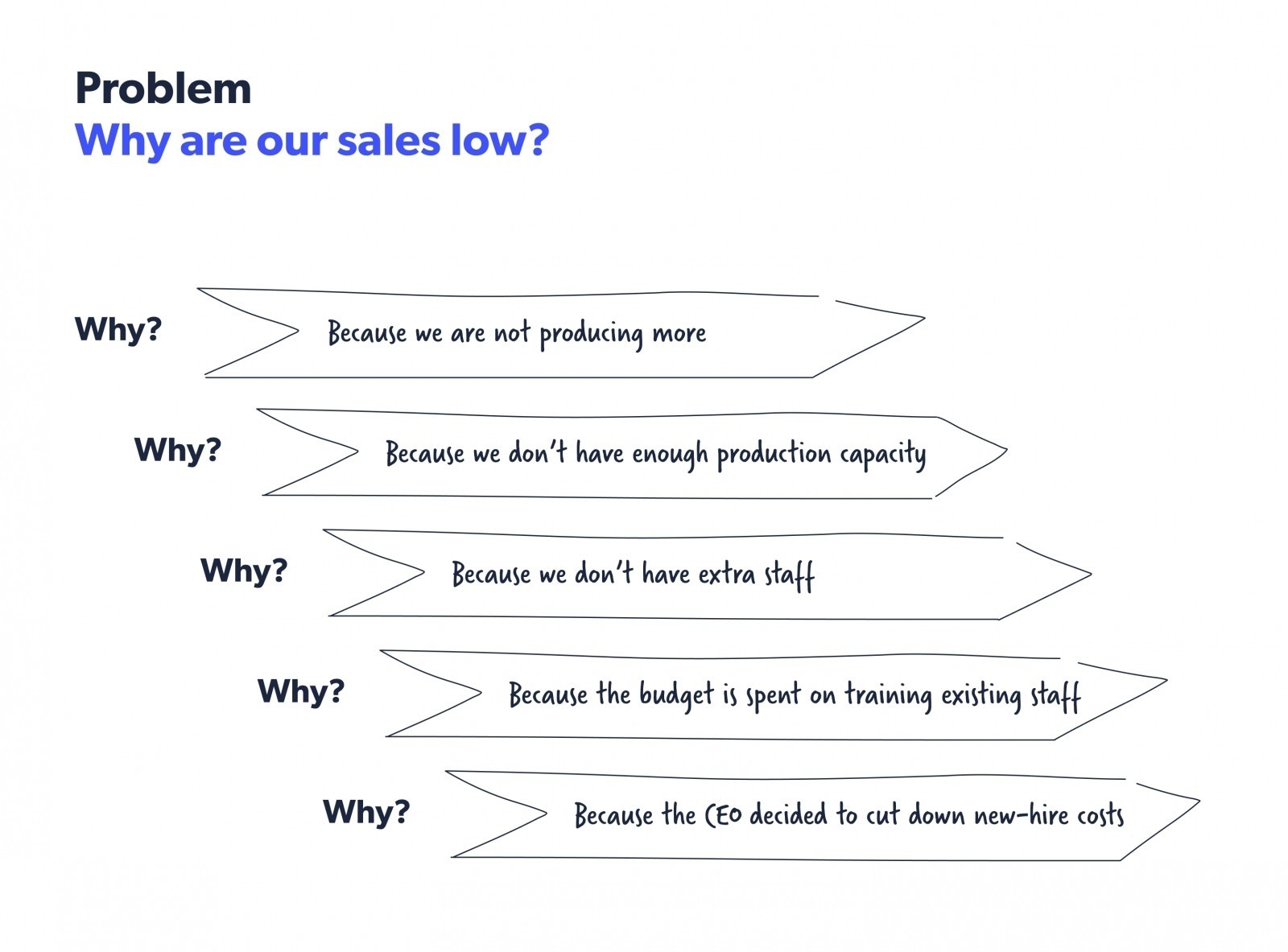

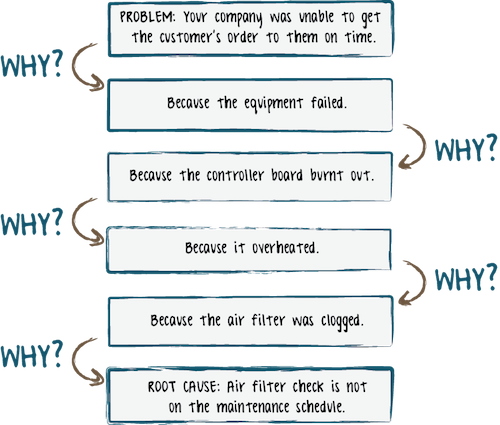

The 5 whys method, developed by Sakichi Toyoda, is part of the Toyota production system. In this method, when you come across a problem, you analyze the root cause by asking “Why?” five times. Once you have recognized the root cause, you can put a countermeasure in place to prevent the problem from recurring. Here’s an example of the 5 whys method.

Source: Kanbanzie

This method is especially useful when you have a problem that keeps recurring despite repeated actions to address it. Recurrence indicates that you are treating the symptoms of the problem and not the actual problem itself.
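For readers who think in code, the chain of “Why?” questions can be sketched as a walk through recorded causes. The `five_whys` function and the example causes in the usage note are hypothetical; in practice each answer comes from investigation, not from a lookup table.

```python
def five_whys(problem: str, causes: dict[str, str], limit: int = 5) -> list[str]:
    """Follow 'why?' answers from a problem toward its root cause."""
    chain = [problem]
    current = problem
    for _ in range(limit):
        if current not in causes:
            break  # no deeper answer has been recorded
        current = causes[current]
        chain.append(current)
    return chain
```

Given a mapping such as `{"Release was late": "Testing took longer than planned", "Testing took longer than planned": "Many bugs were found late"}`, calling `five_whys("Release was late", causes)` returns the problem followed by each successive answer, and the last entry is the candidate root cause to address.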

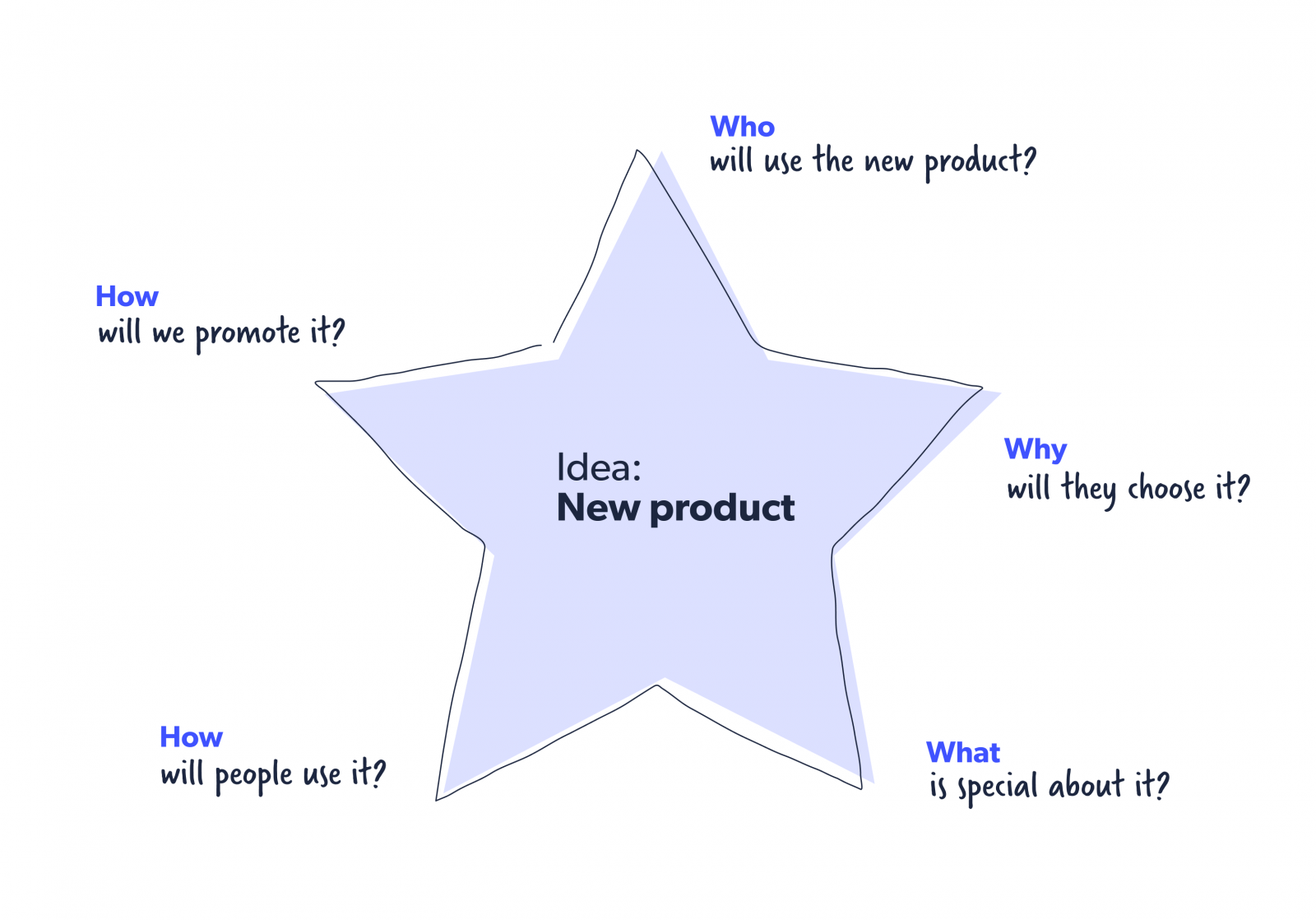

Starbursting

While brainstorming is about the team coming together to try to find answers, starbursting flips it over and asks everyone to think of questions instead. Here’s an example of the starbursting method.

The idea of this method is to go and expand from here, layering more and more questions until you’ve covered every eventuality of the problem.

Use of data analysis to measure improvement in problem-solving skills for your organization

Problem-solving and data analytics are often used together. Supporting data is very handy whenever a particular problem occurs. By using data analytics, you can find the supporting data and analyze it to use for solving a specific problem.

However, you must make sure that the data you’re using to solve the problem is accurate and complete. Otherwise, misleading data may take you off track of the problem at hand or even make it appear more complex than it is. Moreover, the more knowledge you gain about the current problem, the easier it becomes to solve.

Let’s dig deeper into the top 3 reasons data analytics is important in problem-solving.

1. Uncover hidden details

Modern data analytics tools have numerous features that let you analyze the given data thoroughly and find hidden or repeating trends without needing any extra human effort. These automated tools are great at extracting the depths of data, going back way into the past.

2. Automated models

Automation is the future. Businesses don’t have enough time or the budget to encourage manual workforces to go through loads of data to solve business problems. Instead, the tools can collect, combine, clean, and transform the relevant data all by themselves and finally use it to predict the solutions.

3. Explore similar problems

When you use a data analytics approach to solve problems, you can collect all the data available and store it. It can assist you when you find yourself in similar problems, providing references for how such issues were tackled in the past.

If you’re looking for ways to help develop problem-solving skills in the workplace and want to build a team of employees who can solve their own problems, contact us to learn how we can help you achieve it.


Introduction to Problem Solving Skills

What is problem solving and why is it important?

The ability to solve problems is a basic life skill and is essential to our day-to-day lives, at home, at school, and at work. We solve problems every day without really thinking about how we solve them. For example: it’s raining and you need to go to the store. What do you do? There are lots of possible solutions. Take your umbrella and walk. If you don't want to get wet, you can drive, or take the bus. You might decide to call a friend for a ride, or you might decide to go to the store another day. There is no right way to solve this problem and different people will solve it differently.

Problem solving is the process of identifying a problem, developing possible solution paths, and taking the appropriate course of action.

Why is problem solving important? Good problem solving skills empower you not only in your personal life but are critical in your professional life. In the current fast-changing global economy, employers often identify everyday problem solving as crucial to the success of their organizations. For employees, problem solving can be used to develop practical and creative solutions, and to show independence and initiative to employers.

Throughout this case study you will be asked to jot down your thoughts in idea logs. These idea logs are used for reflection on concepts and for answering short questions. When you click on the "Next" button, your responses will be saved for that page. If you happen to close the webpage, you will lose your work on the page you were on, but previous pages will be saved. At the end of the case study, click on the "Finish and Export to PDF" button to acknowledge completion of the case study and receive a PDF document of your idea logs.

What Does Problem Solving Look Like?

The ability to solve problems is a skill, and just like any other skill, the more you practice, the better you get. So how exactly do you practice problem solving? Learning about different problem solving strategies and when to use them will give you a good start. Problem solving is a process. Most strategies provide steps that help you identify the problem and choose the best solution. There are two basic types of strategies: algorithmic and heuristic.

Algorithmic strategies are traditional step-by-step guides to solving problems. They are great for solving math problems (in algebra: multiply and divide, then add or subtract) or for helping us remember the correct order of things (a mnemonic such as “Spring Forward, Fall Back” to remember which way the clock changes for daylight saving time, or “Righty Tighty, Lefty Loosey” to remember what direction to turn bolts and screws). Algorithms are best when there is a single path to the correct solution.

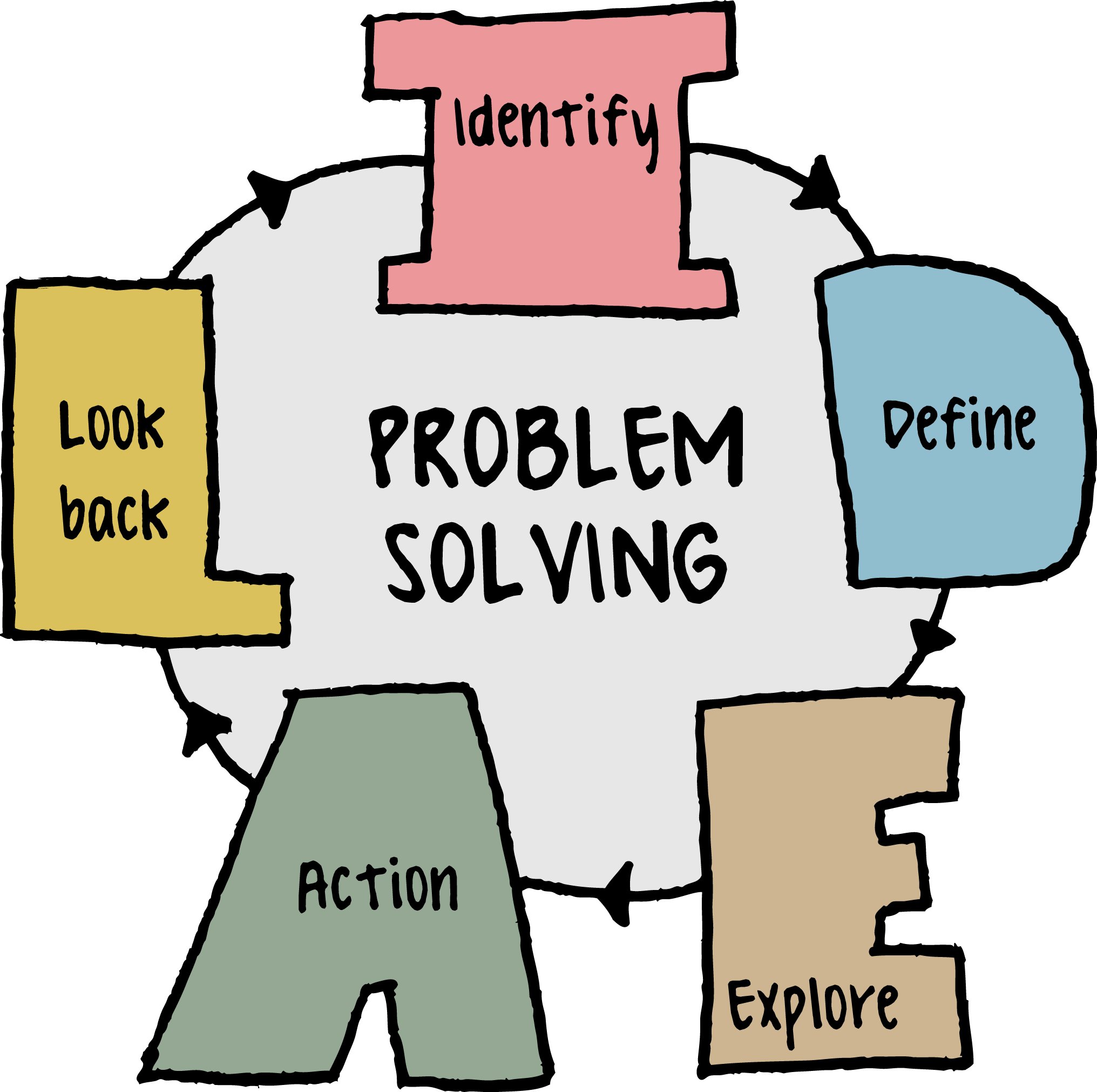

But what do you do when there is no single solution for your problem? Heuristic methods are general guides used to identify possible solutions. A popular one that is easy to remember is IDEAL [Bransford & Stein, 1993]:

- Identify the problem

- Define the context of the problem

- Explore possible strategies

- Act on best solution

- Look back and learn

IDEAL is just one problem solving strategy. Building a toolbox of problem solving strategies will improve your problem solving skills. With practice, you will be able to recognize and use multiple strategies to solve complex problems.

Watch the video

What is the best way to get a peanut out of a tube that cannot be moved? Watch a chimpanzee solve this problem in the video below [Geert Stienissen, 2010].


Describe the series of steps you think the chimpanzee used to solve this problem.


Think of an everyday problem you've encountered recently and describe your steps for solving it.


Developing Problem Solving Processes

Problem solving is a process that uses steps to solve problems. But what does that really mean? Let's break it down and start building our toolbox of problem solving strategies.

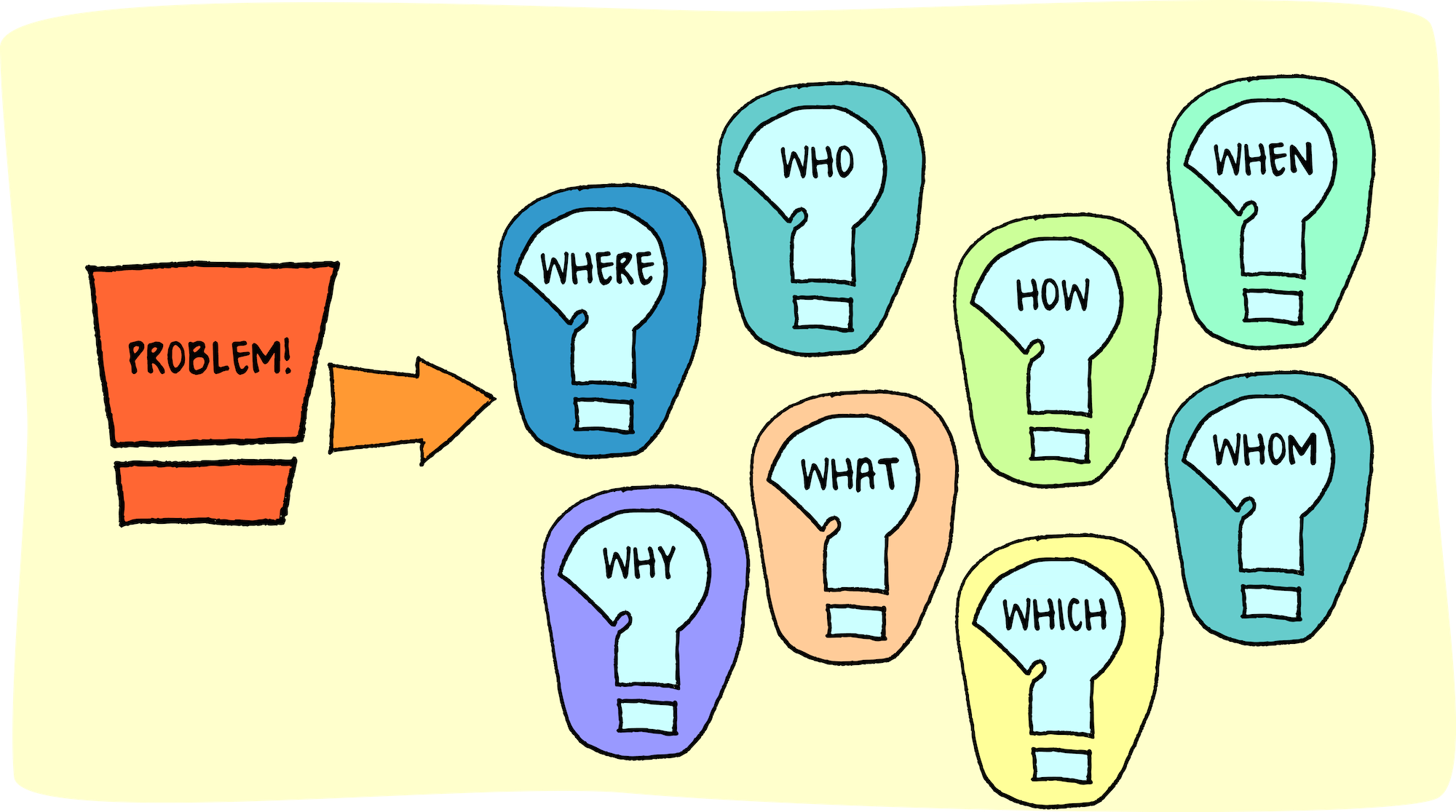

What is the first step of solving any problem? The first step is to recognize that there is a problem and identify the right cause of the problem. This may sound obvious, but similar problems can arise from different events, and the real issue may not always be apparent. To really solve the problem, it's important to find out what started it all. This is called identifying the root cause.

Example: You and your classmates have been working long hours on a project in the school's workshop. The next afternoon, you try to use your student ID card to access the workshop, but discover that your magnetic strip has been demagnetized. Since the card was a couple of years old, you chalk it up to wear and tear and get a new ID card. Later that same week you learn that several of your classmates had the same problem! After a little investigation, you discover that a strong magnet was stored underneath a workbench in the workshop. The magnet was the root cause of the demagnetized student ID cards.

The best way to identify the root cause of the problem is to ask questions and gather information. If you have a vague problem, investigating facts is more productive than guessing a solution. Ask yourself questions about the problem. What do you know about the problem? What do you not know? When was the last time it worked correctly? What has changed since then? Can you diagram the process into separate steps? Where in the process is the problem occurring? Be curious, ask questions, gather facts, and make logical deductions rather than assumptions.

Watch Adam Savage from Mythbusters describe his problem solving process [ForaTv, 2010]. As you watch this section of the video, try to identify the questions he asks and the different strategies he uses.

Adam Savage shared many of his problem solving processes. List the ones you think are the five most important. Your list may be different from other people in your class—that's ok!


“The ability to ask the right question is more than half the battle of finding the answer.” — Thomas J. Watson , founder of IBM

Voices From the Field: Solving Problems

In manufacturing facilities and machine shops, everyone on the floor is expected to know how to identify problems and find solutions. Today's employers look for the following skills in new employees: to analyze a problem logically, formulate a solution, and effectively communicate with others.

In this video, industry professionals share their own problem solving processes, the problem solving expectations of their employees, and an example of how a problem was solved.

Meet the Partners:

- Taconic High School in Pittsfield, Massachusetts, is a comprehensive, fully accredited high school with special programs in Health Technology, Manufacturing Technology, and Work-Based Learning.

- Berkshire Community College in Pittsfield, Massachusetts, prepares its students with applied manufacturing technical skills, providing hands-on experience at industrial laboratories and manufacturing facilities, and instructing them in current technologies.

- H.C. Starck in Newton, Massachusetts, specializes in processing and manufacturing technology metals, such as tungsten, niobium, and tantalum. In almost 100 years of experience, they hold over 900 patents, and continue to innovate and develop new products.

- Nypro Healthcare in Devens, Massachusetts, specializes in precision injection-molded healthcare products. They are committed to good manufacturing processes including lean manufacturing and process validation.

Making Decisions

Now that you have a couple of problem solving strategies in your toolbox, let's practice. In this exercise, you are given a scenario and you will be asked to decide what steps you would take to identify and solve the problem.

Scenario: You are a new employee and have just finished your training. As your first project, you have been assigned the milling of several additional components for a regular customer. Together, you and your trainer, Bill, set up for the first run. Checking your paperwork, you gather the tools and materials on the list. As you are mounting the materials on the table, you notice that you didn't grab everything and hurriedly grab a few more items from one of the bins. Once the material is secured on the CNC table, you load tools into the tool carousel in the order listed on the tool list and set the fixture offsets.

Bill tells you that since this is a rerun of a job several weeks ago, the CAD/CAM model has already been converted to CNC G-code. Bill helps you download the code to the CNC machine. He gives you the go-ahead and leaves to check on another employee. You decide to start your first run.

What problems did you observe in the video?


What do you do next?

- Try to fix it yourself.

- Ask your trainer for help.

As you are cleaning up, you think about what happened and wonder why it happened. You try to create a mental picture of what happened. You are not exactly sure what the end mill hit, but it looked like it might have hit the dowel pin. You wonder if you grabbed the correct dowel pins from the bins earlier.

You can think of two possible next steps. You can recheck the dowel pin length to make sure it is the correct length, or do a dry run using the CNC single step or single block function with the spindle empty to determine what actually happened.

- Check the dowel pins.

- Use the single step/single block function to determine what happened.

You notice that your trainer, Bill, is still on the floor and decide to ask him for help. You describe the problem to him. Bill asks if you know what the end mill ran into. You explain that you are not sure but you think it was the dowel pin. Bill reminds you that it is important to understand what happened so you can fix the correct problem. He suggests that you start all over again and begin with a dry run using the single step/single block function, with the spindle empty, to determine what it hit. Or, since it happened at the end, he mentions that you can also check the G-code to make sure the Z-axis is raised before returning to the home position.

- Run the single step/single block function.

- Edit the G-code to raise the Z-axis.

You finish cleaning up and check the CNC for any damage. Luckily, everything looks good. You check your paperwork and gather the components and materials again. You look at the dowel pins you used earlier, and discover that they are not the right length. As you go to grab the correct dowel pins, you have to search through several bins. For the first time, you are aware of the mess: it looks like the dowel pins and other items have not been put into the correctly labeled bins. You spend 30 minutes straightening up the bins and looking for the correct dowel pins.

Finally finding them, you finish setting up. You load tools into the tool carousel in the order listed on the tool list and set the fixture offsets. Just to make sure, you use the CNC single step/single block function, to do a dry run of the part. Everything looks good! You are ready to create your first part. The first component is done, and, as you admire your success, you notice that the part feels hotter than it should.

You wonder why. You go over the steps of the process to mentally figure out what could be causing the residual heat. You wonder if there is a problem with the CNC's coolant system or if the problem is in the G-code.

- Look at the G-code.

After thinking about the problem, you decide that maybe there's something wrong with the setup. First, you clean up the damaged materials and remove the broken tool. You check the CNC machine carefully for any damage. Luckily, everything looks good. It is time to start over again from the beginning.

You again check your paperwork and gather the tools and materials on the setup sheet. After securing the new materials, you use the CNC single step/single block function with the spindle empty, to do a dry run of the part. You watch carefully to see if you can figure out what happened. It looks to you like the spindle barely misses hitting the dowel pin. You determine that the end mill was broken when it hit the dowel pin while returning to the start position.

After conducting a dry run using the single step/single block function, you determine that the end mill was damaged when it hit the dowel pin on its return to the home position. You discuss your options with Bill. Together, you decide the best thing to do would be to edit the G-code and raise the Z-axis before returning to home. You open the CNC control program and edit the G-code. Just to make sure, you use the CNC single step/single block function to do another dry run of the part. You are ready to create your first part. It works. Your first part is completed. Only four more to go.

As you are cleaning up, you notice that the components are hotter than you expect and the end mill looks more worn than it should be. It dawns on you that while you were milling the component, the coolant didn't turn on. You wonder if it is a software problem in the G-code or hardware problem with the CNC machine.

It's the end of the day and you decide to finish the rest of the components in the morning.

- You decide to look at the G-code in the morning.

- You leave a note on the machine, just in case.

You decide that the best thing to do would be to edit the G-code and raise the Z-axis of the spindle before it returns to home. You open the CNC control program and edit the G-code.

While editing the G-code to raise the Z-axis, you notice that the coolant is turned off at the beginning of the code and at the end of the code. The coolant command error caught your attention because your coworker, Mark, mentioned having a similar issue during lunch. You change the coolant command to turn the mist on.

- You decide to talk with your supervisor.

- You discuss what happened with a coworker over lunch.

As you reflect on the residual heat problem, you think about the machining process and the factors that could have caused the issue. You try to think of anything and everything that could be causing the issue. Are you using the correct tool for the specified material? Are you using the specified material? Is it running at the correct speed? Is there enough coolant? Are there chips getting in the way?

Wait, was the coolant turned on? As you replay what happened in your mind, you wonder why the coolant wasn't turned on. You decide to look at the G-code to find out what is going on.

From the milling machine computer, you open the CNC G-code. You notice that there are no coolant commands. You add them in, and on the next run the coolant mist turns on and the residual heat issue is gone. Now, it's on to creating the rest of the parts.

Have you ever used brainstorming to solve a problem? Chances are you have, even if you didn't realize it.

You notice that your trainer, Bill, is on the floor and decide to ask him for help. You describe the problem with the end mill breaking, and how you discovered that items are not being returned to the correctly labeled bins. You think this caused you to grab the incorrect length dowel pins on your first run. You have sorted the bins and hope that the mess problem is fixed. You then go on to tell Bill about the residual heat issue with the completed part.

Together, you go to the milling machine. Bill shows you how to check the oil and coolant levels. Everything looks good at the machine level. Next, on the CNC computer, you open the CNC G-code. While looking at the code, Bill points out that there are no coolant commands. Bill adds them in and when you rerun the program, it works.

Bill is glad you mentioned the problem to him. You are the third worker to mention G-code issues over the last week. You noticed the coolant problems in your G-code, John noticed a Z-axis issue in his G-code, and Sam had issues with both the Z-axis and the coolant. Chances are, there is a bigger problem and Bill will need to investigate the root cause.

Talking with Bill, you discuss the best way to fix the problem. Bill suggests editing the G-code to raise the Z-axis of the spindle before it returns to its home position. You open the CNC control program and edit the G-code. Following the setup sheet, you re-setup the job and use the CNC single step/single block function, to do another dry run of the part. Everything looks good, so you run the job again and create the first part. It works. Since you need four of each component, you move on to creating the rest of them before cleaning up and leaving for the day.

It's a new day and you have new components to create. As you are setting up, you go in search of some short dowel pins. You discover that the bins are a mess and components have not been put away in the correctly labeled bins. You wonder if this was the cause of yesterday's problem. As you reorganize the bins and straighten up the mess, you decide to mention the mess issue to Bill in your afternoon meeting.

You describe the bin mess and using the incorrect length dowels to Bill. He is glad you mentioned the problem to him. You are not the first person to mention similar issues with tools and parts not being put away correctly. Chances are there is a bigger safety issue here that needs to be addressed in the next staff meeting.

In any workplace, following proper safety and cleanup procedures is always important. This is especially crucial in manufacturing where people are constantly working with heavy, costly and sometimes dangerous equipment. When issues and problems arise, it is important that they are addressed in an efficient and timely manner. Effective communication is an important tool because it can prevent problems from recurring, avoid injury to personnel, reduce rework and scrap, and ultimately, reduce cost, and save money.

You now know that the end mill was damaged when it hit the dowel pin. It seems to you that the easiest thing to do would be to edit the G-code and raise the Z-axis position of the spindle before it returns to the home position. You open the CNC control program and edit the G-code, raising the Z-axis. Starting over, you follow the setup sheet and re-setup the job. This time, you use the CNC single step/single block function, to do another dry run of the part. Everything looks good, so you run the job again and create the first part.
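The kind of check behind this fix can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it assumes absolute positioning, treats `G28` as the return-to-home command (a common convention, but confirm it for your control), and uses a made-up clearance height of 25.0. The function name `unsafe_home_moves` and the sample program are hypothetical, and a script like this does not replace a single step/single block dry run.

```python
import re

# A G-code "word" is a letter followed by a number, e.g. G28, Z-5.0, F100.
WORD = re.compile(r"([A-Z])\s*(-?\d+\.?\d*|-?\.\d+)", re.IGNORECASE)


def unsafe_home_moves(program: str, safe_z: float = 25.0) -> list[int]:
    """Return line numbers where the program heads home with Z below safe_z.

    Assumes absolute coordinates and that G28 means "return to home".
    A home move is also flagged if no Z height has been commanded yet.
    """
    flagged = []
    last_z = None  # last commanded Z height; None until the program sets one
    for number, raw in enumerate(program.splitlines(), start=1):
        # Drop (parenthesized) comments and anything after a semicolon.
        line = re.sub(r"\([^)]*\)", "", raw).split(";", 1)[0]
        words = [(letter.upper(), float(value)) for letter, value in WORD.findall(line)]
        if any(letter == "G" and value == 28 for letter, value in words):
            if last_z is None or last_z < safe_z:
                flagged.append(number)
            continue  # coordinates on a G28 line are not tracked as a cutting height
        for letter, value in words:
            if letter == "Z":
                last_z = value
    return flagged


# Hypothetical program: the tool is still at cutting depth when it heads home.
before = """\
G90 G0 X0 Y0
G1 Z-5.0 F100
G1 X40.0
G28 X0 Y0
M30
"""

# The edit from the scenario: raise the Z-axis before returning to home.
after = before.replace("G28 X0 Y0", "G0 Z50.0\nG28 X0 Y0")

print(unsafe_home_moves(before))  # the home move on line 4 is flagged
print(unsafe_home_moves(after))   # nothing is flagged after the retract is added
```

The sketch flags a home move when the height is unknown as well as when it is too low, on the reasoning that an operator would rather review a false alarm than miss a collision.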

At the end of the day, you are reviewing your progress with your trainer, Bill. After you describe the day's events, he reminds you to always think about safety and the importance of following work procedures. He decides to bring the issue up in the next morning meeting as a reminder to everyone.

In any workplace, following proper procedures (especially those that involve safety) is always important. This is especially crucial in manufacturing where people are constantly working with heavy, costly, and sometimes dangerous equipment. When issues and problems arise, it is important that they are addressed in an efficient and timely manner. Effective communication is an important tool because it can prevent problems from recurring, avoid injury to personnel, reduce rework and scrap, and ultimately, reduce cost, and save money. One tool to improve communication is the morning meeting or huddle.

The next morning, you check the G-code to determine what is wrong with the coolant. You notice that the coolant is turned off at the beginning of the code and also at the end of the code. This is strange. You change the G-code to turn the coolant on at the beginning of the run and off at the end. This works and you create the rest of the parts.
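A quick scan for coolant commands can make this kind of error easier to spot. The Python sketch below is a minimal illustration, assuming the common convention that `M7` turns mist coolant on, `M8` turns flood coolant on, and `M9` turns coolant off; your machine's control may differ, so check its manual. The function name `coolant_events` and the sample program are hypothetical.

```python
import re

# Assumed M-code meanings (common convention; verify against your control's manual).
COOLANT_CODES = {7: "mist on", 8: "flood on", 9: "off"}

# Matches M-words with or without a leading zero, e.g. M8 or M08.
M_WORD = re.compile(r"M0*(\d+)", re.IGNORECASE)


def strip_comments(line: str) -> str:
    """Remove (parenthesized) comments and anything after a semicolon."""
    line = re.sub(r"\([^)]*\)", "", line)
    return line.split(";", 1)[0]


def coolant_events(program: str) -> list[tuple[int, str]]:
    """Return (line_number, action) for every coolant command in the program."""
    events = []
    for number, raw in enumerate(program.splitlines(), start=1):
        for match in M_WORD.finditer(strip_comments(raw)):
            code = int(match.group(1))
            if code in COOLANT_CODES:
                events.append((number, COOLANT_CODES[code]))
    return events


# Hypothetical program with the error from the scenario:
# coolant is switched off at the start and at the end, and never switched on.
sample = """\
O1000 (BRACKET RERUN)
M9 (coolant off at start)
G0 X0 Y0
G1 Z-5.0 F100
G0 Z25.0
M9
M30
"""

events = coolant_events(sample)
for line_number, action in events:
    print(f"line {line_number}: coolant {action}")
if not any(action.endswith("on") for _, action in events):
    print("Warning: coolant is never turned on")
```

Listing every coolant event with its line number, rather than returning a single pass/fail result, keeps the decision about what to change with the person editing the program.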

Throughout the day, you keep wondering what caused the G-code error. At lunch, you mention the G-code error to your coworker, John. John is not surprised. He said that he encountered a similar problem earlier this week. You decide to talk with your supervisor the next time you see him.

You are in luck. You see your supervisor by the door getting ready to leave. You hurry over to talk with him. You start off by telling him about how you asked Bill for help. Then you tell him there was a problem and the end mill was damaged. You describe the coolant problem in the G-code. Oh, and by the way, John has seen a similar problem before.

Your supervisor doesn't seem overly concerned; errors happen. He tells you, "Good job, I am glad you were able to fix the issue." You are not sure whether your supervisor understood your explanation of what happened or that it had happened before.

The challenge of communicating in the workplace is learning how to share your ideas and concerns. If you need to tell your supervisor that something is not going well, it is important to remember that timing, preparation, and attitude are extremely important.

It is the end of your shift, but you want to let the next shift know that the coolant didn't turn on. You do not see your trainer or supervisor around. You decide to leave a note for the next shift so they are aware of the possible coolant problem. You write a sticky note and leave it on the monitor of the CNC control system.

How effective do you think this solution was? Did it address the problem?

In this scenario, you discovered several problems with the G-code that need to be addressed. When issues and problems arise, it is important that they are addressed in an efficient and timely manner. Effective communication is an important tool because it can prevent problems from recurring and avoid injury to personnel. The challenge of communicating in the workplace is learning how and when to share your ideas and concerns. If you need to tell your co-workers or supervisor that there is a problem, it is important to remember that timing and the method of communication are extremely important.

You are able to fix the coolant problem in the G-code. While you are glad that the problem is fixed, you are worried about why it happened in the first place. It is important to remember that if a problem keeps reappearing, you may not be fixing the right problem. You may only be addressing the symptoms.

You decide to talk to your trainer. Bill is glad you mentioned the problem to him. You are the third worker to mention G-code issues over the last week. You noticed the coolant problems in your G-code, John noticed a Z-axis issue in his G-code, and Sam had issues with both the Z-axis and the coolant. Chances are, there is a bigger problem and Bill will need to investigate the root cause.

Over lunch, you ask your coworkers about the G-code problem and what may be causing the error. Several people mention having similar problems but do not know the cause.

You have now talked to three coworkers who have all experienced similar coolant G-code problems. You make a list of who had the problem, when they had the problem, and what each person told you.
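If you keep such a list electronically, a small structured log makes it easy to sort by date and group by issue before you present it. The Python sketch below is one possible layout, not a prescribed format; the `Report` fields, the `group_by_issue` helper, and all of the names, dates, and notes are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Report:
    who: str    # who had the problem
    when: date  # when they had it
    issue: str  # short category, e.g. "coolant"
    notes: str  # what the person told you


def group_by_issue(reports: list[Report]) -> dict[str, list[Report]]:
    """Group reports by issue, oldest first within each group."""
    grouped = defaultdict(list)
    for report in sorted(reports, key=lambda r: r.when):
        grouped[report.issue].append(report)
    return dict(grouped)


# Hypothetical entries (illustrative only).
reports = [
    Report("Coworker A", date(2024, 3, 6), "coolant", "Mist never came on"),
    Report("Coworker B", date(2024, 3, 4), "coolant", "Coolant off at start of program"),
    Report("Coworker C", date(2024, 3, 5), "coolant", "Part came off the table hot"),
]

for issue, entries in group_by_issue(reports).items():
    print(f"{issue}: {len(entries)} report(s)")
    for entry in entries:
        print(f"  {entry.when.isoformat()}  {entry.who}: {entry.notes}")
```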

When you see your supervisor later that afternoon, you are ready to talk with him. You describe the problem you had with your component and the damaged bit. You then go on to tell him about talking with Bill and discovering the G-code issue. You show him your notes on your coworkers' coolant issues, and explain that you think there might be a bigger problem.

Your supervisor thanks you for your initiative in identifying this problem. It sounds like there is a bigger problem and he will need to investigate the root cause. He decides to call a team huddle to discuss the issue, gather more information, and talk with the team about the importance of communication.

Root Cause Analysis

Root cause analysis (RCA) is a method of problem solving that identifies the underlying causes of an issue. Root cause analysis helps people answer the question of why the problem occurred in the first place. RCA uses clear-cut steps and associated tools, like the "5 Whys Analysis" and the "Cause and Effect Diagram," to identify the origin of the problem, so that you can:

- Determine what happened.

- Determine why it happened.

- Fix the problem so it won’t happen again.

RCA works under the idea that systems and events are connected. An action in one area triggers an action in another, and another, and so on. By tracing back these actions, you can discover where the problem started and how it developed into the problem you're now facing. Root cause analysis can prevent problems from recurring, reduce injury to personnel, reduce rework and scrap, and ultimately, reduce cost and save money. There are many different RCA techniques available to determine the root cause of a problem. These are just a few:

- Root Cause Analysis Tools

- 5 Whys Analysis

- Fishbone or Cause and Effect Diagram

- Pareto Analysis
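As one illustration, a Pareto analysis ranks problem categories by how often they occur and tracks the cumulative share, so the few categories behind most of the reports stand out. The Python sketch below is a minimal example under that description; the function name `pareto_table` and the issue counts are made up for illustration and do not come from the case study.

```python
from collections import Counter


def pareto_table(issues: list[str]) -> list[tuple[str, int, float]]:
    """Rank issue categories by frequency with a running cumulative percentage."""
    counts = Counter(issues)
    total = sum(counts.values())
    rows = []
    running = 0
    # most_common() orders categories from most to least frequent.
    for category, count in counts.most_common():
        running += count
        rows.append((category, count, round(100 * running / total, 1)))
    return rows


# Hypothetical log of reported issues (illustrative data only).
reports = (
    ["coolant command"] * 5
    + ["Z-axis retract"] * 3
    + ["wrong dowel pin"] * 1
    + ["tool wear"] * 1
)

for category, count, cumulative in pareto_table(reports):
    print(f"{category:<16} {count:>2} {cumulative:>6.1f}%")
```

With this made-up data, the first two categories account for 80 percent of the reports, which is the kind of concentration a Pareto analysis is meant to reveal.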

How Huddles Work

Communication is a vital part of any setting where people work together. Effective communication helps employees and managers form efficient teams. It builds trust between employees and management, and reduces unnecessary competition because each employee knows how their part fits into the larger goal.

One tool that management can use to promote communication in the workplace is the huddle. Like football players on the field, team members in a huddle stand in a circle for a short meeting. A daily team huddle ensures that team members are aware of changes to the schedule, are reminded of problems and safety issues, and understand how their work impacts one another. When done right, huddles create collaboration, communication, and accountability to results. Impromptu huddles can be used to gather information on a specific issue and get each team member's input.

The most important thing to remember about huddles is that they are short, lasting no more than 10 minutes, and their purpose is to communicate and identify. In essence, a huddle’s purpose is to identify priorities, communicate essential information, and discover roadblocks to productivity.

Who uses huddles? Many industries and companies use daily huddles. At first thought, most people probably think of hospitals and their daily patient update meetings, but lots of managers use daily meetings to engage their employees. Here are a few examples:

- Brian Scudamore, CEO of 1-800-Got-Junk?, uses the daily huddle as an operational tool to take the pulse of his employees and as a motivational tool. Watch a morning huddle meeting.

- Fusion OEM, an outsourced manufacturing and production company. What do employees take away from the daily huddle meeting?

- Biz-Group, a performance consulting group. Tips for a successful huddle.

Brainstorming

One tool that can be useful in problem solving is brainstorming. Brainstorming is a creativity technique designed to generate a large number of ideas for the solution to a problem. The method was first popularized in 1953 by Alex Faickney Osborn in the book Applied Imagination. The goal is to come up with as many ideas as you can in a fixed amount of time. Although brainstorming is best done in a group, it can be done individually. Like most problem solving techniques, brainstorming is a process.

- Define a clear objective.

- Agree on a time limit.

- During the brainstorming session, write down everything that comes to mind, even if the idea sounds crazy.

- If one idea leads to another, write down that idea too.

- Combine and refine ideas into categories of solutions.

- Assess and analyze each idea as a potential solution.

When used during problem solving, brainstorming can offer companies new ways of encouraging staff to think creatively and improve production. Brainstorming relies on team members' diverse experiences, adding to the richness of ideas explored. This means that you often find better solutions to the problems. Team members often welcome the opportunity to contribute ideas and can provide buy-in for the solution chosen—after all, they are more likely to be committed to an approach if they were involved in its development. What's more, because brainstorming is fun, it helps team members bond.

- Watch Peggy Morgan Collins, a marketing executive at Power Curve Communications, discuss How to Stimulate Effective Brainstorming.

- Watch Kim Obbink, CEO of Filter Digital, a digital content company, and her team share their top five rules for How to Effectively Generate Ideas.

Importance of Good Communication and Problem Description

Communication is one of the most frequent activities we engage in on a day-to-day basis. At some point, we have all felt that we did not communicate an idea as effectively as we would have liked. The key to effective communication is preparation. Rather than improvising haphazardly, take a few minutes to think about what you want to say and how you will say it. If necessary, write yourself a note with the key points or ideas in the order you want to discuss them. The notes can act as a reminder or guide when you talk to your supervisor.

Tips for clear communication of an issue:

- Provide a clear summary of your problem. Start at the beginning, give relevant facts, timelines, and examples.

- Avoid including your opinion or personal attacks in your explanation.

- Avoid using words like "always" or "never," which can give the impression that you are exaggerating the problem.

- If this is an ongoing problem and you have collected documentation, give it to your supervisor once you have finished describing the problem.

- Remember to listen to what's said in return; communication is a two-way process.

Not all communication is spoken. Body language is nonverbal communication that includes your posture, your hands and whether you make eye contact. These gestures can be subtle or overt, but most importantly they communicate meaning beyond what is said. When having a conversation, pay attention to how you stand. A stiff position with arms crossed over your chest may imply that you are being defensive even if your words state otherwise. Shoving your hands in your pockets when speaking could imply that you have something to hide. Be wary of using too many hand gestures because this could distract listeners from your message.

The challenge of communicating in the workplace is learning how and when to share your ideas or concerns. If you need to tell your supervisor or co-worker about something that is not going well, keep in mind that good timing and good attitude will go a long way toward helping your case.

Like all skills, effective communication needs to be practiced. Toastmasters International is perhaps the best known public speaking organization in the world. Toastmasters is open to anyone who wishes to improve their speaking skills and is willing to put in the time and effort to do so. To learn more, visit Toastmasters International.

Methods of Communication

Communication of problems and issues in any workplace is important, particularly when safety is involved. It is therefore crucial in manufacturing where people are constantly working with heavy, costly, and sometimes dangerous equipment. As issues and problems arise, they need to be addressed in an efficient and timely manner. Effective communication is an important skill because it can prevent problems from recurring, avoid injury to personnel, reduce rework and scrap, and ultimately, reduce cost and save money.

There are many different ways to communicate: in person, by phone, via email, or in writing. No single method fits all communication needs; each one has its time and place.

In person: In the workplace, face-to-face meetings should be utilized whenever possible. Being able to see the person you need to speak to face-to-face gives you instant feedback and helps you gauge their response through their body language. Be careful of getting sidetracked in conversation when you need to communicate a problem.

Email: Email has become the communication standard for most businesses. It can be accessed from almost anywhere and is great for things that don’t require an immediate response. Email is a great way to communicate non-urgent items to large numbers of people or just your team members. One thing to remember is that most people's inboxes are flooded with emails every day, and unless they are hypervigilant about checking everything, important items could be missed. For issues that are urgent, especially those around safety, email is not always the best solution.

Phone: Phone calls are more personal and direct than email. They allow us to communicate in real time with another person, no matter where they are. Not only can talking prevent miscommunication, it promotes a two-way dialogue. You don’t have to worry about your words being altered or the message arriving on time. However, mobile phone use and the workplace don't always mix. In particular, using mobile phones in a manufacturing setting can lead to a variety of problems, cause distractions, and lead to serious injury.

Written: Written communication is appropriate when detailed instructions are required, when something needs to be documented, or when the person is too far away to easily speak with over the phone or in person.

There is no "right" way to communicate, but you should be aware of how and when to use the appropriate form of communication for your situation. When deciding the best way to communicate with a co-worker or manager, put yourself in their shoes, and think about how you would want to learn about the issue. Also, consider what information you would need to know to better understand the issue. Use your good judgment of the situation and be considerate of your listener's viewpoint.

Did you notice any other potential problems in the previous exercise?


Summary of Strategies

In this exercise, you were given a scenario in which there was a problem with a component you were creating on a CNC machine. You were then asked how you wanted to proceed. Depending on your path through this exercise, you might have found an easy solution and fixed it yourself, asked for help and worked with your trainer, or discovered an ongoing G-code problem that was bigger than you initially thought.

When issues and problems arise, it is important that they are addressed in an efficient and timely manner. Communication is an important tool because it can prevent problems from recurring, avoid injury to personnel, reduce rework and scrap, and ultimately, reduce cost and save money. Although each path in this exercise ended with a description of a problem solving tool for your toolbox, the first step is always to identify the problem and define the context in which it happened.

There are several strategies that can be used to identify the root cause of a problem. Root cause analysis (RCA) is a method of problem solving that helps people answer the question of why the problem occurred. RCA uses a specific set of steps, with associated tools like the "5 Whys Analysis" or the "Cause and Effect Diagram," to identify the origin of the problem, so that you can determine what happened, determine why it happened, and fix the problem so it won't happen again.

Once the underlying cause is identified and the scope of the issue defined, the next step is to explore possible strategies to fix the problem.

If you are not sure how to fix the problem, it is okay to ask for help. Problem solving is a process and a skill that is learned with practice. It is important to remember that everyone makes mistakes and that no one knows everything. Life is about learning. It is okay to ask for help when you don’t have the answer. When you collaborate to solve problems, you improve workplace communication and accelerate finding solutions as similar problems arise.

One tool that can be useful for generating possible solutions is brainstorming. Brainstorming is a technique designed to generate a large number of ideas for the solution to a problem. The method was first popularized in 1953 by Alex Faickney Osborn in the book Applied Imagination. The goal is to come up with as many ideas as you can in a fixed amount of time. Although brainstorming is best done in a group, it can be done individually.

Depending on your path through the exercise, you may have discovered that a couple of your coworkers had experienced similar problems. This should have been an indicator that there was a larger problem that needed to be addressed.

In any workplace, communication of problems and issues (especially those that involve safety) is always important. This is especially crucial in manufacturing where people are constantly working with heavy, costly, and sometimes dangerous equipment. When issues and problems arise, it is important that they be addressed in an efficient and timely manner. Effective communication is an important tool because it can prevent problems from recurring, avoid injury to personnel, reduce rework and scrap, and ultimately, reduce cost and save money.

One strategy for improving communication is the huddle. Like football players on the field, team members in a huddle stand in a circle for a short meeting. A daily team huddle is a great way to ensure that team members are aware of changes to the schedule, that any problems or safety issues are identified, and that team members are aware of how their work impacts one another. When done right, huddles create collaboration, communication, and accountability to results. Impromptu huddles can be used to gather information on a specific issue and get each team member's input.

To learn more about different problem solving strategies, choose an option below. These strategies accompany the outcomes of different decision paths in the problem solving exercise.

- Root Cause Analysis

- How Huddles Work

- Brainstorming

- Importance of Good Problem Description

- Methods of Communication


"Never try to solve all the problems at once — make them line up for you one-by-one.” — Richard Sloma

Problem Solving: An Important Job Skill

Problem solving improves efficiency and communication on the shop floor. It increases a company's efficiency and profitability, so it's one of the top skills employers look for when hiring new employees. Recent industry surveys show that employers consider soft skills, such as problem solving, as critical to their business’s success.

The 2011 survey, "Boiling Point? The skills gap in U.S. manufacturing," polled over a thousand manufacturing executives who reported that the number one skill deficiency among their current employees is problem solving, which makes it difficult for their companies to adapt to the changing needs of the industry.

In this video, industry professionals discuss their expectations and present tips for new employees joining the manufacturing workforce.

Quick Summary

- What are two things you learned in this case study?

- What question(s) do you still have about the case study?


- Is there anything you would like to learn more about with respect to this case study?



How Good Is Your Problem Solving?


Use a systematic approach.

Good problem solving skills are fundamentally important if you're going to be successful in your career.

But problems are something that we don't particularly like.

They're time-consuming.

They muscle their way into already packed schedules.

They force us to think about an uncertain future.

And they never seem to go away!

That's why, when faced with problems, most of us try to eliminate them as quickly as possible. But have you ever chosen the easiest or most obvious solution – and then realized that you have entirely missed a much better solution? Or have you found yourself fixing just the symptoms of a problem, only for the situation to get much worse?

To be an effective problem-solver, you need to be systematic and logical in your approach. This quiz helps you assess your current approach to problem solving. By improving this, you'll make better overall decisions. And as you increase your confidence with solving problems, you'll be less likely to rush to the first solution – which may not necessarily be the best one.

Once you've completed the quiz, we'll direct you to tools and resources that can help you make the most of your problem-solving skills.

How Good Are You at Solving Problems?

Instructions.

For each statement, click the button in the column that best describes you. Please answer questions as you actually are (rather than how you think you should be), and don't worry if some questions seem to score in the 'wrong direction'. When you are finished, please click the 'Calculate My Total' button at the bottom of the test.

Score Interpretation

Answering these questions should have helped you recognize the key steps associated with effective problem solving.

This quiz is based on Dr Min Basadur's Simplexity Thinking problem-solving model. This eight-step process follows the circular pattern shown below, within which current problems are solved and new problems are identified on an ongoing basis. This assessment has not been validated and is intended for illustrative purposes only.

Figure 1 – The Simplexity Thinking Process

Reproduced with permission from Dr Min Basadur from "The Power of Innovation: How to Make Innovation a Part of Life & How to Put Creative Solutions to Work" Copyright ©1995

Below, we outline the tools and strategies you can use for each stage of the problem-solving process. Enjoy exploring these stages!

Step 1: Find the Problem

(Questions 7, 12)

Some problems are obvious, but others are not so easily identified. As part of an effective problem-solving process, you need to look actively for problems – even when things seem to be running fine. Proactive problem solving helps you avoid emergencies and allows you to be calm and in control when issues arise.

These techniques can help you do this:

- PEST Analysis helps you pick up changes to your environment that you should be paying attention to. Make sure too that you're watching changes in customer needs and market dynamics, and that you're monitoring trends that are relevant to your industry.

- Risk Analysis helps you identify significant business risks.

- Failure Modes and Effects Analysis helps you identify possible points of failure in your business process, so that you can fix these before problems arise.

- After Action Reviews help you scan recent performance to identify things that can be done better in the future.

- Where you have several problems to solve, our articles on Prioritization and Pareto Analysis help you think about which ones you should focus on first.
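As a rough illustration of the Pareto Analysis mentioned above, the sketch below ranks problems by impact and keeps the "vital few" that together account for most of it. The function name `pareto_rank`, the 80 percent threshold, and the ticket counts are all assumptions made up for this example, not figures from the article.

```python
from typing import Dict, List, Tuple


def pareto_rank(impact_by_problem: Dict[str, float],
                threshold: float = 0.8) -> List[Tuple[str, float]]:
    """Return the highest-impact problems whose combined share first reaches `threshold`.

    Each result is (problem, cumulative_share), ordered from largest impact down.
    """
    total = sum(impact_by_problem.values())
    if total <= 0:
        return []
    # Sort problems from largest impact to smallest.
    ranked = sorted(impact_by_problem.items(), key=lambda item: item[1], reverse=True)
    vital_few = []
    running = 0.0
    for problem, impact in ranked:
        running += impact
        vital_few.append((problem, running / total))
        if running / total >= threshold:
            break
    return vital_few


# Hypothetical data: support tickets logged against each problem last quarter.
tickets = {
    "slow checkout": 120,
    "login failures": 45,
    "broken search": 20,
    "typos in emails": 10,
    "misaligned footer": 5,
}
vital_few = pareto_rank(tickets)
for problem, cumulative_share in vital_few:
    print(f"{problem}: {cumulative_share:.0%} of tickets covered so far")
```

With this made-up data, the first two problems already cover more than 80 percent of the tickets, so they would be the ones to focus on first.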

Step 2: Find the Facts

(Questions 10, 14)

After identifying a potential problem, you need information. What factors contribute to the problem? Who is involved with it? What solutions have been tried before? What do others think about the problem?

If you move forward to find a solution too quickly, you risk relying on imperfect information that's based on assumptions and limited perspectives, so make sure that you research the problem thoroughly.

Step 3: Define the Problem

(Questions 3, 9)

Now that you understand the problem, define it clearly and completely. Writing a clear problem definition forces you to establish specific boundaries for the problem. This keeps the scope from growing too large, and it helps you stay focused on the main issues.

A great tool to use at this stage is CATWOE. With this process, you analyze potential problems by looking at them from six perspectives: those of its Customers; its Actors (people within the organization); the Transformation, or business process; the World-view, or top-down view of what's going on; the Owner; and the wider organizational Environment. By looking at a situation from these perspectives, you can open your mind and come to a much sharper and more comprehensive definition of the problem.

Cause and Effect Analysis is another good tool to use here, as it helps you think about the many different factors that can contribute to a problem. This helps you separate the symptoms of a problem from its fundamental causes.

Step 4: Find Ideas

(Questions 4, 13)

With a clear problem definition, start generating ideas for a solution. The key here is to be flexible in the way you approach a problem. You want to be able to see it from as many perspectives as possible. Looking for patterns or common elements in different parts of the problem can sometimes help. You can also use metaphors and analogies to help analyze the problem, discover similarities to other issues, and think of solutions based on those similarities.

Traditional brainstorming and reverse brainstorming are very useful here. By taking the time to generate a range of creative solutions to the problem, you'll significantly increase the likelihood that you'll find the best possible solution, not just a semi-adequate one. Where appropriate, involve people with different viewpoints to expand the volume of ideas generated.

Don't evaluate your ideas until step 5. If you do, this will limit your creativity at too early a stage.

Step 5: Select and Evaluate

(Questions 6, 15)

After finding ideas, you'll have many options that must be evaluated. It's tempting at this stage to charge in and start discarding ideas immediately. However, if you do this without first determining the criteria for a good solution, you risk rejecting an alternative that has real potential.

Decide what elements are needed for a realistic and practical solution, and think about the criteria you'll use to choose between potential solutions.

Paired Comparison Analysis, Decision Matrix Analysis and Risk Analysis are useful techniques here, as are many of the specialist resources available within our Decision-Making section. Enjoy exploring these!
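To make the idea of setting criteria before judging options more concrete, here is a minimal sketch of a weighted decision matrix. The criteria, weights, options, and scores are hypothetical, and the scoring convention (0 to 5, where a higher score is always better, so a high "risk" score means low risk) is an assumption chosen for this example.

```python
from typing import Dict


def decision_matrix(scores: Dict[str, Dict[str, float]],
                    weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted total per option: sum of (score on criterion * weight of criterion)."""
    return {
        option: sum(weights[criterion] * score
                    for criterion, score in by_criterion.items())
        for option, by_criterion in scores.items()
    }


# Hypothetical weights: a higher weight marks a more important criterion.
weights = {"cost": 3, "speed": 2, "risk": 4}

# Hypothetical 0-5 scores; higher is better on every criterion.
scores = {
    "patch existing system": {"cost": 4, "speed": 5, "risk": 2},
    "rewrite module": {"cost": 2, "speed": 2, "risk": 4},
    "buy off-the-shelf tool": {"cost": 3, "speed": 4, "risk": 3},
}

totals = decision_matrix(scores, weights)
best_option = max(totals, key=totals.get)
print(totals)
print("Highest weighted score:", best_option)
```

Because the weights are fixed before any option is scored, a promising alternative is less likely to be rejected on a first impression alone.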

Step 6: Plan

(Questions 1, 16)

You might think that choosing a solution is the end of a problem-solving process. In fact, it's simply the start of the next phase in problem solving: implementation. This involves lots of planning and preparation. If you haven't already developed a full Risk Analysis in the evaluation phase, do so now. It's important to know what to be prepared for as you begin to roll out your proposed solution.

The type of planning that you need to do depends on the size of the implementation project that you need to set up. For small projects, all you'll often need are Action Plans that outline who will do what, when, and how. Larger projects need more sophisticated approaches – you'll find out more about these in the Mind Tools Project Management section. And for projects that affect many other people, you'll need to think about Change Management as well.

Here, it can be useful to conduct an Impact Analysis to help you identify potential resistance as well as alert you to problems you may not have anticipated. Force Field Analysis will also help you uncover the various pressures for and against your proposed solution. Once you've done the detailed planning, it can also be useful at this stage to make a final Go/No-Go Decision, making sure that it's actually worth going ahead with the selected option.
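One simple way to picture a Force Field Analysis is to give each pressure a strength score and compare the totals on each side. The sketch below assumes a 1 to 5 scale and uses invented forces; the helper name `force_field_balance` is hypothetical.

```python
from typing import Dict, Tuple


def force_field_balance(forces_for: Dict[str, int],
                        forces_against: Dict[str, int]) -> Tuple[int, int, int]:
    """Return (total for, total against, net balance) for a proposed change."""
    total_for = sum(forces_for.values())
    total_against = sum(forces_against.values())
    return total_for, total_against, total_for - total_against


# Hypothetical forces, each scored from 1 (weak) to 5 (strong).
forces_for = {"faster releases": 4, "fewer outages": 5, "team enthusiasm": 2}
forces_against = {"migration cost": 4, "retraining time": 3, "short-term disruption": 3}

total_for, total_against, net = force_field_balance(forces_for, forces_against)
print(f"For: {total_for}, against: {total_against}, net: {net}")
```

A narrow margin like the one in this invented example suggests looking for ways to weaken the opposing forces before committing to a Go/No-Go Decision.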

Step 7: Sell the Idea

(Questions 5, 8)

As part of the planning process, you must convince other stakeholders that your solution is the best one. You'll likely meet with resistance, so before you try to “sell” your idea, make sure you've considered all the consequences.

As you begin communicating your plan, listen to what people say, and make changes as necessary. The better the overall solution meets everyone's needs, the greater its positive impact will be! For more tips on selling your idea, read our article on Creating a Value Proposition and use our Sell Your Idea Bite-Sized Training session.

Step 8: Act

(Questions 2, 11)

Finally, once you've convinced your key stakeholders that your proposed solution is worth running with, you can move on to the implementation stage. This is the exciting and rewarding part of problem solving, which makes the whole process seem worthwhile.

This action stage is an end, but it's also a beginning: once you've completed your implementation, it's time to move into the next cycle of problem solving by returning to the scanning stage. By doing this, you'll continue improving your organization as you move into the future.
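The circular nature of the process can be sketched in a few lines: the eight step names below come from the headings above, while the `next_step` helper is a hypothetical illustration of how the last step leads back to the first.

```python
# The eight steps, in the order they appear in the headings above.
STEPS = [
    "Find the Problem",
    "Find the Facts",
    "Define the Problem",
    "Find Ideas",
    "Select and Evaluate",
    "Plan",
    "Sell the Idea",
    "Act",
]


def next_step(current: str) -> str:
    """Return the step that follows `current`, wrapping from the last step to the first."""
    index = STEPS.index(current)
    return STEPS[(index + 1) % len(STEPS)]


print(next_step("Plan"))
print(next_step("Act"))
```

The modulo operation is what closes the loop, so finishing "Act" leads straight back to "Find the Problem".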

Problem solving is an exceptionally important workplace skill.

Being a competent and confident problem solver will create many opportunities for you. By using a well-developed model like Simplexity Thinking for solving problems, you can approach the process systematically, and be comfortable that the decisions you make are solid.

Given the unpredictable nature of problems, it's very reassuring to know that, by following a structured plan, you've done everything you can to resolve the problem to the best of your ability.

By the Mind Tools Content Team
