The Asch Conformity Experiments

What These Experiments Say About Group Behavior

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Emily is a board-certified science editor who has worked with top digital publishing brands like Voices for Biodiversity, Study.com, GoodTherapy, Vox, and Verywell.

The Asch conformity experiments were a series of psychological experiments conducted by Solomon Asch in the 1950s. The experiments revealed the degree to which a person's own opinions are influenced by those of a group. Asch found that people were willing to ignore reality and give an incorrect answer in order to conform to the rest of the group.

At a Glance

The Asch conformity experiments are among the most famous in psychology's history and have inspired a wealth of additional research on conformity and group behavior. This research has provided important insight into how, why, and when people conform and the effects of social pressure on behavior.

Do you think of yourself as a conformist or a non-conformist? Most people believe that they are non-conformist enough to stand up to a group when they know they are right, but conformist enough to blend in with the rest of their peers.

Research suggests that people are often much more prone to conform than they believe they might be.

Imagine yourself in this situation: You've signed up to participate in a psychology experiment in which you are asked to complete a vision test.

Seated in a room with the other participants, you are shown a line segment and then asked to choose the matching line from a group of three segments of different lengths.

The experimenter asks each participant individually to select the matching line segment. On some occasions, everyone in the group chooses the correct line, but occasionally, the other participants unanimously declare that a different line is actually the correct match.

So what do you do when the experimenter asks you which line is the right match? Do you go with your initial response, or do you choose to conform to the rest of the group?

Conformity in Psychology

In psychological terms, conformity refers to an individual's tendency to follow the unspoken rules or behaviors of the social group to which they belong. Researchers have long been curious about the degree to which people follow or rebel against social norms.

Asch was interested in looking at how pressure from a group could lead people to conform, even when they knew that the rest of the group was wrong. The purpose of the Asch conformity experiment was to demonstrate the power of conformity in groups.

Methodology of Asch's Experiments

Asch's experiments involved having people who were in on the experiment, known as confederates, pretend to be regular participants alongside those who were actual, unaware subjects of the study. The confederates would behave in certain ways to see if their actions had an influence on the actual experimental participants.

In each experiment, a naive student participant was placed in a room with several confederates. The subjects were told that they were taking part in a "vision test." In total, 50 students were part of Asch’s experimental condition.

The confederates were all told what their responses would be when the line task was presented. The naive participant, however, had no inkling that the other students were not real participants. After the line task was presented, each student verbally announced which line (either 1, 2, or 3) matched the target line.

Critical Trials

There were 18 different trials in the experimental condition, and the confederates gave incorrect responses in 12 of them, which Asch referred to as the "critical trials." The purpose of these critical trials was to see if the participants would change their answer in order to conform to how the others in the group responded.

During the first part of the procedure, the confederates answered the questions correctly. However, they eventually began providing incorrect answers based on how they had been instructed by the experimenters.

Control Condition

The study also included 37 participants in a control condition. To ensure that the average person could accurately gauge the length of the lines, the control group was asked to individually write down the correct match. According to these results, participants were very accurate in their line judgments, choosing the correct answer 99% of the time.

Results of the Asch Conformity Experiments

Nearly 75% of the participants in the conformity experiments went along with the rest of the group at least one time.

After combining the trials, the results indicated that participants conformed to the incorrect group answer approximately one-third of the time.
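The two headline figures above measure different things: the first counts participants who conformed on at least one critical trial, while the second averages over every critical response. A minimal Python sketch of the distinction, using made-up counts for eight hypothetical participants (not Asch's data) and assuming 12 critical trials per participant as described earlier:

```python
# Hypothetical illustration only: these counts are invented, not Asch's data.
# Each entry is the number of critical trials (out of 12) on which one
# imaginary participant went along with the incorrect group answer.
CRITICAL_TRIALS = 12
conforming_counts = [0, 0, 3, 12, 1, 6, 0, 2]


def share_conforming_at_least_once(counts):
    """Fraction of participants who conformed on one or more critical trials."""
    return sum(1 for c in counts if c > 0) / len(counts)


def overall_conformity_rate(counts, trials_per_participant=CRITICAL_TRIALS):
    """Fraction of all critical-trial responses that matched the wrong answer."""
    return sum(counts) / (len(counts) * trials_per_participant)


at_least_once = share_conforming_at_least_once(conforming_counts)
overall = overall_conformity_rate(conforming_counts)

print(f"Conformed at least once: {at_least_once:.1%}")
print(f"Overall conformity rate: {overall:.1%}")
```

With these invented counts, most participants conform at least once even though only a quarter of all critical responses are conforming, which is why the two percentages reported for the real experiments can differ so widely.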

The experiments also looked at the effect that the number of people present in the group had on conformity. When just one confederate was present, there was virtually no impact on participants' answers. The presence of two confederates had only a tiny effect. The level of conformity seen with three or more confederates was far more significant.

Asch also found that having one of the confederates give the correct answer while the rest of the confederates gave the incorrect answer dramatically lowered conformity. In this situation, just 5% to 10% of the participants conformed to the rest of the group (depending on how often the ally answered correctly). Later studies have also supported this finding, suggesting that having social support is an important tool in combating conformity.

At the conclusion of the Asch experiments, participants were asked why they had gone along with the rest of the group. In most cases, the students stated that while they knew the rest of the group was wrong, they did not want to risk facing ridicule. A few of the participants suggested that they actually believed the other members of the group were correct in their answers.

These results suggest that conformity can be influenced both by a need to fit in and a belief that other people are smarter or better informed.

Given the level of conformity seen in Asch's experiments, conformity may be even stronger in real-life situations where stimuli are more ambiguous or more difficult to judge.

Asch went on to conduct further experiments in order to determine which factors influenced how and when people conform. He found that:

- Conformity tends to increase when more people are present. However, there is little change once the group size goes beyond four or five people.

- Conformity also increases when the task becomes more difficult. In the face of uncertainty, people turn to others for information about how to respond.

- Conformity increases when other members of the group are of a higher social status. When people view the others in the group as more powerful, influential, or knowledgeable than themselves, they are more likely to go along with the group.

- Conformity tends to decrease, however, when people are able to respond privately. Research has also shown that conformity decreases if people have support from at least one other individual in a group.

Criticisms of the Asch Conformity Experiments

One of the major criticisms of Asch's conformity experiments centers on the reasons why participants chose to conform. According to some critics, individuals may have been motivated by a wish to avoid conflict rather than by an actual desire to conform to the rest of the group.

Another criticism is that the results of the experiment in the lab may not generalize to real-world situations.

Many social psychology experts believe that while real-world situations may not be as clear-cut as they are in the lab, the actual social pressure to conform is probably much greater, which can dramatically increase conformist behaviors.

6.6C: The Asch Experiment: The Power of Peer Pressure

The Asch conformity experiments were a series of studies conducted in the 1950s that demonstrated the power of conformity in groups.

Learning Objectives

- Explain how the Asch experiment sought to measure conformity in groups

- The Asch conformity experiments consisted of a group “vision test”, where study participants were found to be more likely to conform to obviously wrong answers if first given by other “participants”, who were actually working for the experimenter.

- The experiment found that over a third of subjects conformed to giving a wrong answer.

In terms of gender, males show around half the effect of females (tested in same-sex groups). Conformity is also higher among members of an in-group.

- conformity : the ideology of adhering to one standard or social uniformity

Conducted by social psychologist Solomon Asch of Swarthmore College, the Asch conformity experiments were a series of studies published in the 1950s that demonstrated the power of conformity in groups. They are also known as the Asch paradigm. In the experiment, students were asked to participate in a group “vision test.” In reality, all but one of the participants were working for Asch (i.e., confederates), and the study was really about how the remaining student would react to their behavior.

The original experiment was conducted with 123 male participants. Each participant was put into a group with five to seven confederates. The participants were shown a card with a line on it (the reference line), followed by another card with three lines on it labeled a, b, and c. The participants were then asked to say out loud which of the three lines matched the reference line in length, as well as to give other responses, such as comparing the length of the reference line to an everyday object or stating which lines were the same length.

Each line question was called a “trial.” The “real” participant answered last or next to last. For the first two trials, the subject would feel at ease in the experiment, as he and the other “participants” gave the obvious, correct answer. On the third trial, all the confederates would start giving the same wrong answer. There were 18 trials in total and the confederates answered incorrectly for 12 of them. These 12 were known as the “critical trials.”

The aim was to see whether the real participants would conform to the wrong answers of the confederates and change their answer to respond in the same way, despite it being the wrong answer.

Dr. Asch thought that the majority of people would not conform to something obviously wrong, but the results showed that only 24% of the participants did not conform on any trial. Seventy-five percent conformed at least once, and 5% conformed every time. When surrounded by individuals all voicing an incorrect answer, participants provided incorrect responses on a high proportion of the questions (32%). Overall, there was a 37% conformity rate by subjects averaged across all critical trials. In a control group, with no pressure to conform to an erroneous answer, only one subject out of 35 ever gave an incorrect answer.

Study Variations

Variations of the basic paradigm tested how many confederates were necessary to induce conformity, examining the influence of just one confederate and as many as fifteen. Results indicated that one confederate has virtually no influence and two confederates have only a small influence. When three or more confederates are present, the tendency to conform increases only modestly. The maximum effect occurs with four confederates. Adding additional confederates does not produce a stronger effect.

The unanimity of the confederates has also been varied. When the confederates are not unanimous in their judgment, even if only one confederate voices a different opinion, participants are much more likely to resist the urge to conform (only 5% to 10% conform) than when the confederates all agree. This result holds whether or not the dissenting confederate gives the correct answer. As long as the dissenting confederate gives an answer that is different from the majority, participants are more likely to give the correct answer.

This finding illuminates the power that even a small dissenting minority can have upon a larger group. This demonstrates the importance of privacy in answering important and life-changing questions, so that people do not feel pressured to conform. For example, anonymous surveys can allow people to fully express how they feel about a particular subject without fear of retribution or retaliation from others in the group or the larger society. Having a witness or ally (someone who agrees with the point of view) also makes it less likely that conformity will occur.

Interpretations

Asch suggested that this reflected poorly on factors such as education, which he thought must over-train conformity. Other researchers have argued that it is rational to use other people’s judgments as evidence. Others have suggested that the high conformity rate was due to social norms regarding politeness, which is consistent with subjects’ own claims that they did not actually believe the others’ judgments and were indeed merely conforming.

12.4 Conformity, Compliance, and Obedience

Learning Objectives

By the end of this section, you will be able to:

- Explain the Asch effect

- Define conformity and types of social influence

- Describe Stanley Milgram’s experiment and its implications

- Define groupthink, social facilitation, and social loafing

In this section, we discuss additional ways in which people influence others. The topics of conformity, social influence, obedience, and group processes demonstrate the power of the social situation to change our thoughts, feelings, and behaviors. We begin this section with a discussion of a famous social psychology experiment that demonstrated how susceptible humans are to outside social pressures.

Solomon Asch conducted several experiments in the 1950s to determine how people are affected by the thoughts and behaviors of other people. In one study, a group of participants was shown a series of printed line segments of different lengths: a, b, and c (Figure 12.17). Participants were then shown a fourth line segment: x. They were asked to identify which line segment from the first group (a, b, or c) most closely resembled the fourth line segment in length.

Each group of participants had only one true, naïve subject. The remaining members of the group were confederates of the researcher. A confederate is a person who is aware of the experiment and works for the researcher. Confederates are used to manipulate social situations as part of the research design, and the true, naïve participants believe that confederates are, like them, uninformed participants in the experiment. In Asch’s study, the confederates identified a line segment that was obviously shorter than the target line—a wrong answer. The naïve participant then had to identify aloud the line segment that best matched the target line segment.

How often do you think the true participant aligned with the confederates’ response? That is, how often do you think the group influenced the participant, and the participant gave the wrong answer? Asch (1955) found that 76% of participants conformed to group pressure at least once by indicating the incorrect line. Conformity is the change in a person’s behavior to go along with the group, even if they do not agree with the group. Why would people give the wrong answer? What factors would increase or decrease someone giving in or conforming to group pressure?

The Asch effect is the influence of the group majority on an individual’s judgment.

What factors make a person more likely to yield to group pressure? Research shows that the size of the majority, the presence of another dissenter, and the public or relatively private nature of responses are key influences on conformity.

- The size of the majority: The greater the number of people in the majority, the more likely an individual will conform. There is, however, an upper limit: a point where adding more members does not increase conformity. In Asch’s study, conformity increased with the number of people in the majority—up to seven individuals. At numbers beyond seven, conformity leveled off and decreased slightly (Asch, 1955).

- The presence of another dissenter: If there is at least one dissenter, conformity rates drop to near zero (Asch, 1955).

- The public or private nature of the responses: When responses are made publicly (in front of others), conformity is more likely; however, when responses are made privately (e.g., writing down the response), conformity is less likely (Deutsch & Gerard, 1955).

The finding that conformity is more likely to occur when responses are public than when they are private is the reason government elections require voting in secret, so we are not coerced by others (Figure 12.18). The Asch effect can be easily seen in children when they have to publicly vote for something. For example, if the teacher asks whether the children would rather have extra recess, no homework, or candy, once a few children vote, the rest will comply and go with the majority. In a different classroom, the majority might vote differently, and most of the children would comply with that majority. When someone’s vote changes if it is made in public versus private, this is known as compliance. Compliance can be a form of conformity. Compliance is going along with a request or demand, even if you do not agree with the request. In Asch’s studies, the participants complied by giving the wrong answers, but privately did not accept that the obvious wrong answers were correct.

Now that you have learned about the Asch line experiments, why do you think the participants conformed? The correct answer to the line segment question was obvious, and it was an easy task. Researchers have categorized the motivation to conform into two types: normative social influence and informational social influence (Deutsch & Gerard, 1955).

In normative social influence, people conform to the group norm to fit in, to feel good, and to be accepted by the group. However, with informational social influence, people conform because they believe the group is competent and has the correct information, particularly when the task or situation is ambiguous. What type of social influence was operating in the Asch conformity studies? Since the line judgment task was unambiguous, participants did not need to rely on the group for information. Instead, participants complied to fit in and avoid ridicule, an instance of normative social influence.

An example of informational social influence may be what to do in an emergency situation. Imagine that you are in a movie theater watching a film and what seems to be smoke comes in the theater from under the emergency exit door. You are not certain that it is smoke—it might be a special effect for the movie, such as a fog machine. When you are uncertain, you will tend to look at the behavior of others in the theater. If other people show concern and get up to leave, you are likely to do the same. However, if others seem unconcerned, you are likely to stay put and continue watching the movie (Figure 12.19).

How would you have behaved if you were a participant in Asch’s study? Many students say they would not conform, that the study is outdated, and that people nowadays are more independent. To some extent this may be true. Research suggests that overall rates of conformity may have reduced since the time of Asch’s research. Furthermore, efforts to replicate Asch’s study have made it clear that many factors determine how likely it is that someone will demonstrate conformity to the group. These factors include the participant’s age, gender, and socio-cultural background (Bond & Smith, 1996; Larsen, 1990; Walker & Andrade, 1996).

Stanley Milgram’s Experiment

Conformity is one effect of the influence of others on our thoughts, feelings, and behaviors. Another form of social influence is obedience to authority. Obedience is the change of an individual’s behavior to comply with a demand by an authority figure. People often comply with the request because they are concerned about a consequence if they do not comply. To demonstrate this phenomenon, we review another classic social psychology experiment.

Stanley Milgram was a social psychology professor at Yale who was influenced by the trial of Adolf Eichmann, a Nazi war criminal. Eichmann’s defense for the atrocities he committed was that he was “just following orders.” Milgram (1963) wanted to test the validity of this defense, so he designed an experiment and initially recruited 40 men for his experiment. The volunteer participants were led to believe that they were participating in a study to improve learning and memory. The participants were told that they were to teach other students (learners) correct answers to a series of test items. The participants were shown how to use a device that they were told delivered electric shocks of different intensities to the learners. The participants were told to shock the learners if they gave a wrong answer to a test item—that the shock would help them to learn. The participants believed they gave the learners shocks, which increased in 15-volt increments, all the way up to 450 volts. The participants did not know that the learners were confederates and that the confederates did not actually receive shocks.

In response to a string of incorrect answers from the learners, the participants obediently and repeatedly shocked them. The confederate learners cried out for help, begged the participant teachers to stop, and even complained of heart trouble. Yet, when the researcher told the participant-teachers to continue the shock, 65% of the participants continued the shock to the maximum voltage and to the point that the learner became unresponsive (Figure 12.20). What makes someone obey authority to the point of potentially causing serious harm to another person?
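The figures in this description can be checked with a little arithmetic. The Python sketch below is illustrative only: it assumes the shock scale started at 15 volts (the text states only the increment and the maximum) and that the 65% figure applies to the 40 men initially recruited.

```python
# Values taken from the description above.
STEP_VOLTS = 15          # size of each increase
MAX_VOLTS = 450          # highest shock level
PARTICIPANTS = 40        # men initially recruited
FULLY_OBEDIENT_SHARE = 0.65

# Assumption: the scale begins at one step (15 volts) and rises by one
# step at a time until it reaches the maximum.
shock_levels = list(range(STEP_VOLTS, MAX_VOLTS + 1, STEP_VOLTS))

# Assumption: the 65% figure refers to the 40 initial participants.
fully_obedient = round(FULLY_OBEDIENT_SHARE * PARTICIPANTS)

print(f"Number of shock levels: {len(shock_levels)}")
print(f"Participants reaching the maximum: {fully_obedient} of {PARTICIPANTS}")
```

Under these assumptions, a participant who went all the way would have had to press on through every step of the scale, which gives a sense of how many separate opportunities there were to stop.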

Several variations of the original Milgram experiment were conducted to test the boundaries of obedience. When certain features of the situation were changed, participants were less likely to continue to deliver shocks (Milgram, 1965). For example, when the setting of the experiment was moved to an off-campus office building, the percentage of participants who delivered the highest shock dropped to 48%. When the learner was in the same room as the teacher, the highest shock rate dropped to 40%. When the teachers’ and learners’ hands were touching, the highest shock rate dropped to 30%. When the researcher gave the orders by phone, the rate dropped to 23%. These variations show that when the humanity of the person being shocked was increased, obedience decreased. Similarly, when the authority of the experimenter decreased, so did obedience.

This case is still very applicable today. What does a person do if an authority figure orders something done? What if the person believes it is incorrect, or worse, unethical? In a study by Martin and Bull (2008), midwives privately filled out a questionnaire regarding best practices and expectations in delivering a baby. Then, a more senior midwife and supervisor asked the junior midwives to do something they had previously stated they were opposed to. Most of the junior midwives were obedient to authority, going against their own beliefs. Burger (2009) partially replicated this study. He found among a multicultural sample of women and men that their levels of obedience matched Milgram's research. Doliński et al. (2017) performed a replication of Burger's work in Poland and controlled for the gender of both participants and learners, and once again, results that were consistent with Milgram's original work were observed.

When in group settings, we are often influenced by the thoughts, feelings, and behaviors of people around us. Whether it is due to normative or informational social influence, groups have power to influence individuals. Another phenomenon of group conformity is groupthink. Groupthink is the modification of the opinions of members of a group to align with what they believe is the group consensus (Janis, 1972). In group situations, the group often takes action that individuals would not perform outside the group setting because groups make more extreme decisions than individuals do. Moreover, groupthink can hinder opposing trains of thought. This elimination of diverse opinions contributes to faulty decisions by the group.

Groupthink in the U.S. Government

There have been several instances of groupthink in the U.S. government. One example occurred when the United States led a small coalition of nations to invade Iraq in March 2003. This invasion occurred because a small group of advisors and former President George W. Bush were convinced that Iraq represented a significant terrorism threat with a large stockpile of weapons of mass destruction at its disposal. Although some of these individuals may have had some doubts about the credibility of the information available to them at the time, in the end, the group arrived at a consensus that Iraq had weapons of mass destruction and represented a significant threat to national security. It later came to light that Iraq did not have weapons of mass destruction, but not until the invasion was well underway. As a result, 6000 American soldiers were killed and many more civilians died. How did the Bush administration arrive at their conclusions? View this video of Colin Powell, 10 years after his famous United Nations speech, discussing the information he had at the time that his decisions were based on. ("CNN Official Interview: Colin Powell now regrets UN speech about WMDs," 2010).

Do you see evidence of groupthink?

Why does groupthink occur? There are several causes of groupthink, which makes it preventable. When the group is highly cohesive, or has a strong sense of connection, maintaining group harmony may become more important to the group than making sound decisions. If the group leader is directive and makes his opinions known, this may discourage group members from disagreeing with the leader. If the group is isolated from hearing alternative or new viewpoints, groupthink may be more likely. How do you know when groupthink is occurring?

There are several symptoms of groupthink including the following:

- perceiving the group as invulnerable or invincible—believing it can do no wrong

- believing the group is morally correct

- self-censorship by group members, such as withholding information to avoid disrupting the group consensus

- the quashing of dissenting group members’ opinions

- the shielding of the group leader from dissenting views

- perceiving an illusion of unanimity among group members

- holding stereotypes or negative attitudes toward the out-group or others with differing viewpoints (Janis, 1972)

Given the causes and symptoms of groupthink, how can it be avoided? There are several strategies that can improve group decision making including seeking outside opinions, voting in private, having the leader withhold position statements until all group members have voiced their views, conducting research on all viewpoints, weighing the costs and benefits of all options, and developing a contingency plan (Janis, 1972; Mitchell & Eckstein, 2009).

Group Polarization

Another phenomenon that occurs within group settings is group polarization. Group polarization (Teger & Pruitt, 1967) is the strengthening of an original group attitude after the discussion of views within a group. That is, if a group initially favors a viewpoint, after discussion the group consensus is likely a stronger endorsement of the viewpoint. Conversely, if the group was initially opposed to a viewpoint, group discussion would likely lead to stronger opposition. Group polarization explains many actions taken by groups that would not be undertaken by individuals. Group polarization can be observed at political conventions, when platforms of the party are supported by individuals who, when not in a group, would decline to support them. Recently, some theorists have argued that group polarization may be partly responsible for the extreme political partisanship that seems ubiquitous in modern society. Given that people can self-select media outlets that are most consistent with their own political views, they are less likely to encounter opposing viewpoints. Over time, this leads to a strengthening of their own perspective and of hostile attitudes and behaviors towards those with different political ideals. Remarkably, political polarization leads to open levels of discrimination that are on par with, or perhaps exceed, racial discrimination (Iyengar & Westwood, 2015). A more everyday example is a group’s discussion of how attractive someone is. Does your opinion change if you find someone attractive, but your friends do not agree? If your friends vociferously agree, might you then find this person even more attractive?

Social traps refer to situations that arise when individuals or groups of individuals behave in ways that are not in their best interest and that may have negative, long-term consequences. However, once established, a social trap is very difficult to escape. For example, following World War II, the United States and the former Soviet Union engaged in a nuclear arms race. While the presence of nuclear weapons is not in either party's best interest, once the arms race began, each country felt the need to continue producing nuclear weapons to protect itself from the other.

Social Loafing

Imagine you were just assigned a group project with other students whom you barely know. Everyone in your group will get the same grade. Are you the type who will do most of the work, even though the final grade will be shared? Or are you more likely to do less work because you know others will pick up the slack? Social loafing involves a reduction in individual output on tasks where contributions are pooled. Because each individual's efforts are not evaluated, individuals can become less motivated to perform well. Karau and Williams (1993) and Simms and Nichols (2014) reviewed the research on social loafing and discerned when it was least likely to happen. The researchers noted that social loafing could be alleviated if, among other situations, individuals knew their work would be assessed by a manager (in a workplace setting) or instructor (in a classroom setting), or if a manager or instructor required group members to complete self-evaluations.

The likelihood of social loafing in student work groups increases as the size of the group increases (Shepperd & Taylor, 1999). According to Karau and Williams (1993), college students were the population most likely to engage in social loafing. Their study also found that women and participants from collectivistic cultures were less likely to engage in social loafing, explaining that their group orientation may account for this.

College students could work around social loafing or “free-riding” by suggesting that their professors use a flocking method to form groups. Harding (2018) compared students who had self-selected into class groups with students whose groups had been formed by flocking, which involves grouping together students who have similar schedules and motivations. She found not only that students in flocked groups reported less “free riding,” but also that they did better on the group assignments than students in self-selected groups.

Interestingly, the opposite of social loafing occurs when the task is complex and difficult (Bond & Titus, 1983; Geen, 1989). In a group setting, such as the student work group, if your individual performance cannot be evaluated, there is less pressure for you to do well, and thus less anxiety or physiological arousal (Latané, Williams, & Harkins, 1979). This puts you in a relaxed state in which you can perform your best, if you choose (Zajonc, 1965). If the task is a difficult one, many people feel motivated and believe that their group needs their input to do well on a challenging project (Jackson & Williams, 1985).

Deindividuation

Another way that being part of a group can affect behavior is through deindividuation. Deindividuation refers to situations in which a person feels a sense of anonymity when among others, and therefore a reduced sense of accountability and of self. Deindividuation is often invoked to explain mob or riot-like behaviors (Zimbardo, 1969), but research on the role it plays in such behaviors has produced inconsistent results (as discussed in Granström, Guvå, Hylander, & Rosander, 2009).

Table 12.2 summarizes the types of social influence you have learned about in this chapter.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/psychology-2e/pages/1-introduction

- Authors: Rose M. Spielman, William J. Jenkins, Marilyn D. Lovett

- Publisher/website: OpenStax

- Book title: Psychology 2e

- Publication date: Apr 22, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/psychology-2e/pages/1-introduction

- Section URL: https://openstax.org/books/psychology-2e/pages/12-4-conformity-compliance-and-obedience

© Jan 6, 2024 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

The Asch Conformity Experiments

What Solomon Asch Demonstrated About Social Pressure

The Asch Conformity Experiments, conducted by psychologist Solomon Asch in the 1950s, demonstrated the power of conformity in groups and showed that even simple objective facts cannot withstand the distorting pressure of group influence.

The Experiment

In the experiments, groups of male university students were asked to participate in a perception test. In reality, all but one of the participants were "confederates" (collaborators with the experimenter who only pretended to be participants). The study was about how the remaining student would react to the behavior of the other "participants."

The participants of the experiment (the subject as well as the confederates) were seated in a classroom and were presented with a card with a simple vertical black line drawn on it. Then, they were given a second card with three lines of varying length labeled "A," "B," and "C." One line on the second card was the same length as that on the first, and the other two lines were obviously longer and shorter.

Participants were asked to state out loud in front of each other which line, A, B, or C, matched the length of the line on the first card. In each experimental case, the confederates answered first, and the real participant was seated so that he would answer last. In some cases, the confederates answered correctly, while in others, they answered incorrectly.

Asch's goal was to see if the real participant would be pressured to answer incorrectly in the instances when the confederates did so, or whether their belief in their own perception and correctness would outweigh the social pressure provided by the responses of the other group members.

Asch found that one-third of real participants gave the same wrong answers as the confederates at least half the time. Forty percent gave some wrong answers, and only one-fourth gave correct answers in defiance of the pressure to conform to the wrong answers provided by the group.

In interviews he conducted following the trials, Asch found that those who answered incorrectly, in conformance with the group, gave different explanations. Some believed that the answers given by the confederates were correct. Some thought that they were suffering a lapse in perception for originally thinking an answer that differed from the group. Others admitted that they knew that they had the correct answer, but conformed to the incorrect answer because they didn't want to break from the majority.

The Asch experiments have been repeated many times over the years with students and non-students, old and young, and in groups of different sizes and different settings. The results are consistently the same, with one-third to one-half of the participants making a judgment contrary to fact yet in conformity with the group, demonstrating the strong power of social influence.

Connection to Sociology

The results of Asch's experiment resonate with what we know to be true about the nature of social forces and norms in our lives. The behavior and expectations of others shape how we think and act on a daily basis because what we observe among others teaches us what is normal and expected of us. The results of the study also raise interesting questions and concerns about how knowledge is constructed and disseminated, and how we can address social problems that stem from conformity, among others.

Updated by Nicki Lisa Cole, Ph.D.

Asch: social influence, conforming in groups.

The Asch conformity experiments were a series of studies that starkly demonstrated the power of conformity in groups.

Experimenters led by Solomon Asch asked students to participate in a "vision test." In reality, all but one of the participants were shills of the experimenter, and the study was really about how the remaining student would react to the confederates' behavior.

Asch's Experiments

The participants -- the real subjects and the confederates -- were all seated in a classroom where they were told to announce their judgment of the length of several lines drawn on a series of displays. They were asked which line was longer than the other, which were the same length, etc. The confederates had been prearranged to all give an incorrect answer to the tests.

Many subjects showed extreme discomfort, but most conformed to the majority view of the others in the room, even when the majority said that two lines different in length by several inches were the same length. Control subjects with no exposure to a majority view had no trouble giving the correct answer.

One difference between the Asch conformity experiments and the Milgram experiment (also famous in social psychology), noted by Milgram, is that subjects in the Asch studies attributed their behavior to themselves and their own poor eyesight and judgment, while those in the Milgram experiment blamed the experimenter. Conformity may be much less salient than authority pressure.

The Asch experiments may provide some vivid empirical evidence relevant to some of the ideas raised in George Orwell's Nineteen Eighty-Four.

Learn More:

- https://en.wikipedia.org/wiki/Asch_conformity_experiments

© 2024 Psychologist World. Parts licensed under GNU FDL.

Contesting the “Nature” Of Conformity: What Milgram and Zimbardo's Studies Really Show

Affiliation School of Psychology, University of Queensland, St. Lucia, Australia

Affiliation School of Psychology, University of St. Andrews, St Andrews, Scotland

- S. Alexander Haslam

- Stephen D. Reicher

Published: November 20, 2012

- https://doi.org/10.1371/journal.pbio.1001426

Understanding of the psychology of tyranny is dominated by classic studies from the 1960s and 1970s: Milgram's research on obedience to authority and Zimbardo's Stanford Prison Experiment. Supporting popular notions of the banality of evil, this research has been taken to show that people conform passively and unthinkingly to both the instructions and the roles that authorities provide, however malevolent these may be. Recently, though, this consensus has been challenged by empirical work informed by social identity theorizing. This suggests that individuals' willingness to follow authorities is conditional on identification with the authority in question and an associated belief that the authority is right.

Citation: Haslam SA, Reicher SD (2012) Contesting the “Nature” Of Conformity: What Milgram and Zimbardo's Studies Really Show. PLoS Biol 10(11): e1001426. https://doi.org/10.1371/journal.pbio.1001426

Copyright: © 2012 Haslam, Reicher. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: The authors received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

If men make war in slavish obedience to rules, they will fail. Ulysses S. Grant [1]

Conformity is often criticized on grounds of morality. Many, if not all, of the greatest human atrocities have been described as “crimes of obedience” [2]. However, as the victorious American Civil War General and later President Grant makes clear, conformity is equally problematic on grounds of efficacy. Success requires leaders and followers who do not adhere rigidly to a pre-determined script. Rigidity cannot steel them for the challenges of their task or for the creativity of their opponents.

Given these problems, it would seem even more unfortunate if human beings were somehow programmed for conformity. Yet this is a view that has become dominant over the last half-century. Its influence can be traced to two landmark empirical programs led by social psychologists in the 1960s and early 1970s: Milgram's Obedience to Authority research and Zimbardo's Stanford Prison Experiment. These studies have not only had influence in academic spheres. They have spilled over into our general culture and shaped popular understanding, such that “everyone knows” that people inevitably succumb to the demands of authority, however immoral the consequences [3], [4]. As Parker puts it, “the hopeless moral of the [studies'] story is that resistance is futile” [5]. What is more, this work has shaped our understanding not only of conformity but of human nature more broadly [6].

Building on an established body of theorizing in the social identity tradition, which sees group-based influence as meaningful and conditional [7], [8], we argue, however, that these understandings are mistaken. Moreover, we contend that evidence from the studies themselves (as well as from subsequent research) supports a very different analysis of the psychology of conformity.

The Classic Studies: Conformity, Obedience, and the Banality Of Evil

In Milgram's work [9], [10], members of the general public (predominantly men) volunteered to take part in a scientific study of memory. They found themselves cast in the role of a “Teacher” with the task of administering shocks of increasing magnitude (from 15 V to 450 V in 15-V increments) to another man (the “Learner”) every time he failed to recall the correct word in a previously learned pair. Unbeknown to the Teacher, the Learner was Milgram's confederate, and the shocks were not real. Moreover, rather than being interested in memory, Milgram was actually interested in seeing how far the men would go in carrying out the task. To his (and everyone else's [11]) shock, the answer was “very far.” In what came to be termed the “baseline” study [12], all participants proved willing to administer shocks of 300 V and 65% went all the way to 450 V. This appeared to provide compelling evidence that normal well-adjusted men would be willing to kill a complete stranger simply because they were ordered to do so by an authority.

Zimbardo's Stanford Prison Experiment took these ideas further by exploring the destructive behaviour of groups of men over an extended period [13], [14]. Students were randomly assigned to be either guards or prisoners within a mock prison that had been constructed in the Stanford Psychology Department. In contrast to Milgram's studies, the objective was to observe the interaction within and between the two groups in the absence of an obviously malevolent authority. Here, again, the results proved shocking. Such was the abuse meted out to the prisoners by the guards that the study had to be terminated after just 6 days. Zimbardo's conclusion from this was even more alarming than Milgram's. People descend into tyranny, he suggested, because they conform unthinkingly to the toxic roles that authorities prescribe without the need for specific orders: brutality was “a ‘natural’ consequence of being in the uniform of a ‘guard’ and asserting the power inherent in that role” [15].

Within psychology, Milgram and Zimbardo helped consolidate a growing “conformity bias” [16] in which the focus on compliance is so strong as to obscure evidence of resistance and disobedience [17]. However, their arguments proved particularly potent because they seemed to mesh with real-world examples, particularly evidence of the “banality of evil.” This term was coined in Hannah Arendt's account of the trial of Adolf Eichmann [18], a chief architect of the Nazis' “final solution to the Jewish question” [19]. Although Eichmann was responsible for the transportation of millions of people to their death, Arendt suggested that he was no psychopathic monster. Instead, his trial revealed him to be a diligent and efficient bureaucrat: a man more concerned with following orders than with asking deep questions about their morality or consequence.

Much of the power of Milgram and Zimbardo's research derives from the fact that it appears to give empirical substance to this claim that evil is banal [3]. It seems to show that tyranny is a natural and unavoidable consequence of humans' inherent motivation to bend to the wishes of those in authority, whoever they may be and whatever it is that they want us to do. Put slightly differently, it operationalizes an apparent tragedy of the human condition: our desire to be good subjects is stronger than our desire to be subjects who do good.

Questioning the Consensus: Conformity Isn't Natural and It Doesn't Explain Tyranny

The banality of evil thesis appears to be a truth almost universally acknowledged. Not only is it given prominence in social psychology textbooks [20], but so too it informs the thinking of historians [21], [22], political scientists [23], economists [24], and neuroscientists [25]. Indeed, via a range of social commentators, it has shaped the public consciousness much more broadly [26], and, in this respect, can lay claim to being the most influential data-driven thesis in the whole of psychology [27], [28].

Yet despite the breadth of this consensus, in recent years, we and others have reinterrogated its two principal underpinnings (the archival evidence pertaining to Eichmann and his ilk, and the specifics of Milgram and Zimbardo's empirical demonstrations) in ways that tell a very different story [29].

First, a series of thoroughgoing historical examinations have challenged the idea that Nazi bureaucrats were ever simply following orders [19], [26], [30]. This may have been the defense they relied upon when seeking to minimize their culpability [31], but evidence suggests that functionaries like Eichmann had a very good understanding of what they were doing and took pride in the energy and application that they brought to their work. Typically too, roles and orders were vague, and hence for those who wanted to advance the Nazi cause (and not all did), creativity and imagination were required in order to work towards the regime's assumed goals and to overcome the challenges associated with any given task [32]. Emblematic of this, the practical details of “the final solution” were not handed down from on high, but had to be elaborated by Eichmann himself. He then felt compelled to confront and disobey his superiors, most particularly Himmler, when he believed that they were not sufficiently faithful to eliminationist Nazi principles [19].

Second, much the same analysis can be used to account for behavior in the Stanford Prison Experiment. So while it may be true that Zimbardo gave his guards no direct orders, he certainly gave them a general sense of how he expected them to behave [33]. During the orientation session he told them, amongst other things, “You can create in the prisoners feelings of boredom, a sense of fear to some degree, you can create a notion of arbitrariness that their life is totally controlled by us, by the system, you, me… We're going to take away their individuality in various ways. In general what all this leads to is a sense of powerlessness” [34]. This contradicts Zimbardo's assertion that “behavioral scripts associated with the oppositional roles of prisoner and guard [were] the sole source of guidance” [35] and leads us to question the claim that conformity to these role-related scripts was the primary cause of guard brutality.

But even with such guidance, not all guards acted brutally. And those who did used ingenuity and initiative in responding to Zimbardo's brief. Accordingly, after the experiment was over, one prisoner confronted his chief tormentor with the observation that “If I had been a guard I don't think it would have been such a masterpiece” [34]. Contrary to the banality of evil thesis, the Zimbardo-inspired tyranny was made possible by the active engagement of enthusiasts rather than the leaden conformity of automatons.

Turning, third, to the specifics of Milgram's studies, the first point to note is that the primary dependent measure (flicking a switch) offers few opportunities for creativity in carrying out the task. Nevertheless, several of Milgram's findings typically escape standard reviews in which the paradigm is portrayed as only yielding up evidence of obedience. Initially, it is clear that the “baseline study” is not especially typical of the 30 or so variants of the paradigm that Milgram conducted. Here the percentage of participants going to 450 V varied from 0% to nearly 100%, but across the studies as a whole, a majority of participants chose not to go this far [10], [36], [37].

Furthermore, close analysis of the experimental sessions shows that participants are attentive to the demands made on them by the Learner as well as the Experimenter [38]. They are torn between two voices confronting them with irreconcilable moral imperatives, and the fact that they have to choose between them is a source of considerable anguish. They sweat, they laugh, they try to talk and argue their way out of the situation. But the experimental set-up does not allow them to do so. Ultimately, they tend to go along with the Experimenter if he justifies their actions in terms of the scientific benefits of the study (as he does with the prod “The experiment requires that you continue”) [39]. But if he gives them a direct order (“You have no other choice, you must go on”) participants typically refuse. Once again, received wisdom proves questionable. The Milgram studies seem to be less about people blindly conforming to orders than about getting people to believe in the importance of what they are doing [40].

Tyranny as a Product of Identification-Based Followership

Our suspicions about the plausibility of the banality of evil thesis and its various empirical substrates were first raised through our work on the BBC Prison Study (BPS [41]). Like the Stanford study, this study randomly assigned men to groups as guards and prisoners and examined their behaviour within a specially created “prison.” Unlike Zimbardo, however, we took no leadership role in the study. Without this, would participants conform to a hierarchical script or resist it?

The study generated three clear findings. First, participants did not conform automatically to their assigned role. Second, they only acted in terms of group membership to the extent that they actively identified with the group (such that they took on a social identification) [42]. Third, group identity did not mean that people simply accepted their assigned position; instead, it empowered them to resist it. Early in the study, the Prisoners' identification as a group allowed them successfully to challenge the authority of the Guards and create a more egalitarian system. Later on, though, a highly committed group emerged out of dissatisfaction with this system and conspired to create a new hierarchy that was far more draconian.

Ultimately, then, the BBC Prison Study came close to recreating the tyranny of the Stanford Prison Experiment. However, it was neither passive conformity to roles nor blind obedience to rules that brought the study to this point. On the contrary, it was only when they had internalized roles and rules as aspects of a system with which they identified that participants used them as a guide to action. Moreover, on the basis of this shared identification, the hallmark of the tyrannical regime was not conformity but creative leadership and engaged followership within a group of true believers (see also [43], [44]). As we have seen, this analysis mirrors recent conclusions about the Nazi tyranny. To complete the argument, we suggest that it is also applicable to Milgram's paradigm.

The evidence, noted above, about the efficacy of different “prods” already points to the fact that compliance is bound up with a sense of commitment to the experiment and the experimenter over and above commitment to the learner (S. Haslam, SD Reicher, M. Birney, unpublished data) [39]. This use of prods is but one aspect of Milgram's careful management of the paradigm [13] that is aimed at securing participants' identification with the scientific enterprise.

Significantly, though, the degree of identification is not constant across all variants of the study. For instance, when the study is conducted in commercial premises as opposed to prestigious Yale University labs one might expect the identification to diminish and (as our argument implies) compliance to decrease. It does. More systematically, we have examined variations in participants' identification with the Experimenter and the science that he represents as opposed to their identification with the Learner and the general community. They always identify with both to some degree; hence the drama and the tension of the paradigm. But the degree matters, and greater identification with the Experimenter is highly predictive of a greater willingness among Milgram's participants to administer the maximum shock across the paradigm's many variants [37].

However, some of the most compelling evidence that participants' administration of shocks results from their identification with Milgram's scientific goals comes from what happened after the study had ended. In his debriefing, Milgram praised participants for their commitment to the advancement of science, especially as it had come at the cost of personal discomfort. This inoculated them against doubts concerning their own punitive actions, but it also led them to support more of such actions in the future. “I am happy to have been of service,” one typical participant responded, “Continue your experiments by all means as long as good can come of them. In this crazy mixed up world of ours, every bit of goodness is needed” (S. Haslam, SD Reicher, K Millward, R MacDonald, unpublished data).

The banality of evil thesis shocks us by claiming that decent people can be transformed into oppressors as a result of their “natural” conformity to the roles and rules handed down by authorities. More particularly, the inclination to conform is thought to suppress oppressors' ability to engage intellectually with the fact that what they are doing is wrong.

Although it remains highly influential, this thesis loses credibility under close empirical scrutiny. On the one hand, it ignores copious evidence of resistance even in studies held up as demonstrating that conformity is inevitable [17]. On the other hand, it ignores the evidence that those who do heed authority in doing evil do so knowingly not blindly, actively not passively, creatively not automatically. They do so out of belief not by nature, out of choice not by necessity. In short, they should be seen, and judged, as engaged followers not as blind conformists [45].

What was truly frightening about Eichmann was not that he was unaware of what he was doing, but rather that he knew what he was doing and believed it to be right. Indeed, his one regret, expressed prior to his trial, was that he had not killed more Jews [19]. Equally, what is shocking about Milgram's experiments is that rather than being distressed by their actions [46], participants could be led to construe them as “service” in the cause of “goodness.”

To understand tyranny, then, we need to transcend the prevailing orthodoxy that this derives from something for which humans have a natural inclination—a “Lucifer effect” to which they succumb thoughtlessly and helplessly (and for which, therefore, they cannot be held accountable). Instead, we need to understand two sets of inter-related processes: those by which authorities advocate oppression of others and those that lead followers to identify with these authorities. How did Milgram and Zimbardo justify the harmful acts they required of their participants and why did participants identify with them—some more than others?

These questions are complex and full answers fall beyond the scope of this essay. Yet, regarding advocacy, it is striking how destructive acts were presented as constructive, particularly in Milgram's case, where scientific progress was the warrant for abuse. Regarding identification, this reflects several elements: the personal histories of individuals that render some group memberships more plausible than others as a source of self-definition; the relationship between the identities on offer in the immediate context and other identities that are held and valued in other contexts; and the structure of the local context that makes certain ways of orienting oneself to the social world seem more “fitting” than others [41], [47], [48].

At root, the fundamental point is that tyranny does not flourish because perpetrators are helpless and ignorant of their actions. It flourishes because they actively identify with those who promote vicious acts as virtuous [49]. It is this conviction that steels participants to do their dirty work and that makes them work energetically and creatively to ensure its success. Moreover, this work is something for which they actively wish to be held accountable, so long as it secures the approbation of those in power.

- 1. Strachan H (1983) European armies and the conduct of war. London: Unwin Hyman (p.3).

- 2. Kelman HC, Hamilton VL (1990) Crimes of obedience. New Haven: Yale University Press.

- 3. Novick P (1999) The Holocaust in American life. Boston: Houghton Mifflin.

- 4. Jetten J, Hornsey MJ (Eds.) (2011) Rebels in groups: dissent, deviance, difference and defiance. Chichester, UK: Wiley-Blackwell.

- 5. Parker I (2007) Revolution in social psychology: alienation to emancipation. London: Pluto Press. (p.84)

- 6. Smith JR, Haslam SA (Eds.) (2012) Social psychology: revisiting the classic studies. London: Sage.

- 7. Turner JC (1991) Social influence. Buckingham, UK: Open University Press.

- 8. Turner JC, Hogg MA, Oakes PJ, Reicher SD, Wetherell MS (1987) Rediscovering the social group: A self-categorization theory. Oxford: Blackwell.

- 10. Milgram S (1974) Obedience to authority: an experimental view. New York: Harper & Row.

- 11. Blass T (2004) The man who shocked the world: the life and legacy of Stanley Milgram. New York, NY: Basic Books.

- 14. Zimbardo P (2007) The Lucifer effect: how good people turn evil. London, UK: Random House.

- 16. Moscovici S (1976) Social influence and social change. London, UK: Academic Press.

- 18. Arendt H (1963) Eichmann in Jerusalem: a report on the banality of evil. New York: Penguin.

- 19. Cesarani D (2004) Eichmann: his life and crimes. London: Heinemann.

- 20. Miller A (2004) What can the Milgram obedience experiments tell us about the Holocaust? Generalizing from the social psychology laboratory. Miller A, editor. The social psychology of good and evil. New York: Guilford. pp. 193–239.

- 21. Browning C (1992) Ordinary men: Reserve Police Battalion 101 and the Final Solution in Poland. London: Penguin Books.

- 25. Harris LT (2009) The influence of social group and context on punishment decisions: insights from social neuroscience. Gruter Institute Squaw Valley Conference May 21, 2009. Law, Behavior & the Brain. Available at SSRN: http://ssrn.com/abstract=1405319.

- 26. Lozowick Y (2002) Hitler's bureaucrats: the Nazi Security Police and the banality of evil. H. Watzman, translator. London: Continuum.

- 27. Blass T (Ed.) (2000) Obedience to authority. Current perspectives on the Milgram Paradigm. Mahwah (New Jersey): Erlbaum.

- 30. Vetlesen AJ (2005) Evil and human agency: understanding collective evildoing. Cambridge: Cambridge University Press.

- 34. Zimbardo P (1989) Quiet rage: The Stanford Prison Study [video]. Stanford: Stanford University.

- 35. Zimbardo P (2004) A situationist perspective on the psychology of evil: understanding how good people are transformed into perpetrators. Miller A, editor. The social psychology of good and evil. New York: Guilford. pp. 21–50.

- 42. Tajfel H, Turner JC (1979) An integrative theory of intergroup conflict. Austin WG, Worchel S, editors. The social psychology of intergroup relations. Monterey (California): Brooks/Cole. pp.33–47.

- 45. Haslam SA, Reicher SD, Platow MJ (2008) The new psychology of leadership: identity, influence and power. Hove, UK: Psychology Press.

- 48. Oakes PJ, Haslam SA, Turner JC (1994) Stereotyping and social reality. Oxford: Blackwell.

February 19, 2016

How Nazi's Defense of "Just Following Orders" Plays Out in the Mind

Modern-day Milgram experiment shows that people obeying commands feel less responsible for their actions

By Joshua Barajas & PBS NewsHour

In a 1962 letter, as a last-ditch effort for clemency, Holocaust organizer Adolf Eichmann wrote that he and other low-level officers were “forced to serve as mere instruments,” shifting the responsibility for the deaths of millions of Jews to his superiors. The “just following orders” defense, made famous in the post-WWII Nuremberg trials, featured heavily in Eichmann’s court hearings.

But that same year Stanley Milgram, a Yale University psychologist, conducted a series of famous experiments that tested whether “ordinary” folks would inflict harm on another person after following orders from an authoritative figure. Shockingly, the results suggested any human was capable of a heart of darkness.

Milgram’s research tackled whether a person could be coerced into behaving heinously, but new research released Thursday offers one explanation as to why.

“In particular, acting under orders caused participants to perceive a distance from outcomes that they themselves caused,” said study co-author Patrick Haggard, a cognitive neuroscientist at University College London, in an email.

In other words, people actually feel disconnected from their actions when they comply with orders, even though they’re the ones committing the act.

The study, published in the journal Current Biology, described this distance as people experiencing their actions more as “passive movements than fully voluntary actions” when they follow orders.

Researchers at University College London and Université libre de Bruxelles in Belgium arrived at this conclusion by investigating how coercion could change someone’s “sense of agency,” a psychological phenomenon that refers to one’s awareness of their actions causing some external outcome.

More simply, Haggard described the phenomenon as flipping a switch (action) to turn on a light (external outcome). The action and its outcome are typically experienced as nearly simultaneous. Through two experiments, however, Haggard and the other researchers showed that people acting under orders experienced a longer lapse in time between the action and outcome, even if the outcome was unpleasant. It’s like you flip the switch, but it takes a beat or two for the light to appear.

“This [disconnect] suggests a reduced sense of agency, as if the participants’ actions under coercion began to feel more passive,” Haggard said.

Unlike Milgram’s classic research, Haggard’s team introduced a shocking element that was missing in the original 1960s experiments: actual shocks. Haggard said they used “moderately painful, but tolerable, shocks.” Milgram feigned shocks up to 450 volts.

According to Milgram’s experiments, 65 percent of his volunteers, described as “teachers,” were willing (sometimes reluctantly) to press a button that delivered shocks up to 450 volts to an unseen person, a “learner” in another room. Although pleas from the unknown person could be heard, including mentions of a heart condition, Milgram’s study said his volunteers continued to shock the “learner” when ordered to do so. At no point, however, did someone truly experience an electric shock.

“Milgram’s studies rested on a deception: Participants were instructed to administer ‘severe shocks’ to an actor, who in fact merely feigned being shocked,” Haggard said. “It’s difficult to ascertain whether participants are really deceived or not in such situations.”

After Yale received reams of Milgram’s documents in the 2000s, other psychologists sifted through the notes more closely and started to criticize the famous electric-shock study.

Gina Perry, author of “Shock Machine: The Untold Story of the Notorious Milgram Psychology Experiments,” found a litany of methodological problems with the study. Perry said Milgram’s experiments were far less controlled than originally thought and introduced variables that appeared to goose the numbers.

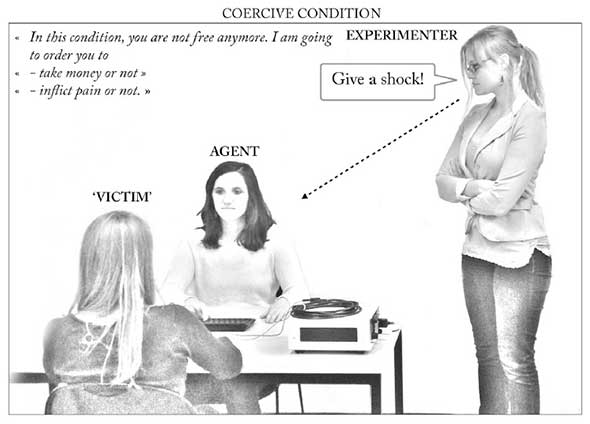

Haggard said his team’s study was more transparent. In the first experiment, he said, two participants (an “agent” and a “victim”) took turns delivering mild shocks or inflicting a financial penalty on each other. In some cases, a third person, an “experimenter,” sat in the room and gave orders on whether to inflict harm. In other cases, the experimenter looked away, while the agent acted of their own volition.

In this test, the “agent” can shock or take money from the “victim,” either acting on orders or by their own choice. Image courtesy of Caspar et al., Current Biology (2016)

The result? Researchers measured a “small, but significant” increase in the perceived time between a person’s action and outcome when coercion was involved. That is, when people act “under orders,” they seem to experience less agency over their actions and outcomes than when they choose for themselves, Haggard said.

In this test, the “agent” is not under the watchful gaze of an authority figure. They’re free to shock or take money from the “victim,” if they want. Image courtesy of Caspar et al., Current Biology (2016)

In a second experiment, the team explored whether the loss of agency could also be seen in the brain activity of subjects. Prior work had found that brain activity is dampened when people are forced to follow orders.

As before, subjects had to decide whether to shock a person with or without coercion, but now they heard an audible tone while making the choice. This tone elicited a brain response that could be measured by an electroencephalogram (EEG) cap.

Haggard’s team found that brain activity in response to this tone is indeed dampened when participants are coerced. The team also used a questionnaire in the second experiment to get explicit judgments from the volunteers, who reported feeling less responsible when they acted under orders.

Haggard said his team’s findings do not legitimate the Nuremberg defense and that anyone who claims they were “just following orders” ought to be viewed with skepticism.

But, “our study does suggest that this claim might potentially correspond to the basic experience that the person had of their action at the time,” Haggard said.

“If people acting under orders really do feel reduced responsibility, this seems important to understand. For a start, people who give orders should perhaps be held more responsible for the actions and outcomes of those they coerce,” he said.

This article is reprinted with permission from PBS NewsHour. It was first published on February 18, 2016.

Academia’s Response to Milgram’s Findings and Explanation

- First Online: 18 September 2018

- Nestar Russell

In this chapter, Russell provides a brief overview of the key issues that Stanley Milgram’s academic peers debated after the publication of his Obedience to Authority research. More specifically, Russell presents and assesses the prominent ethical and methodological critiques of Milgram’s research. Then with a focus on the Holocaust, Russell explores the debate over the generalizability of Milgram’s results beyond the laboratory walls. Finally, Russell examines the scholarly reaction to Milgram’s agentic state theory, particularly with reference to its application to the Milgram-Holocaust linkage.

Ancona, L., & Pareyson, R. (1968). Contributo allo studio della aggressione: La dinamica della obbedienza distruttiva [Contribution to the study of aggression: The dynamics of destructive obedience]. Archivio di Psicologia, Neurologia e Psichiatria, 29 (4), 340–372.

Askenasy, H. (1978). Are we all Nazis? Secaucus, NJ: Lyle Stuart Inc.

Bandura, A. (1999). Moral disengagement in the perpetration of inhumanities. Personality and Social Psychology Review, 3 (3), 193–209.

Bandura, A., Underwood, B., & Fromson, M. E. (1975). Disinhibition of aggression through diffusion of responsibility and dehumanization of victims. Journal of Research in Personality, 9 (4), 253–269.

Bartov, O. (2001). The Eastern Front, 1941–45, German troops and the barbarization of warfare (2nd ed.). New York: Palgrave.

Bauman, Z. (1989). Modernity and the Holocaust. Ithaca, NY: Cornell University Press.

Baumrind, D. (1964). Some thoughts on ethics of research: After reading Milgram’s ‘behavioral study of obedience’. American Psychologist, 19 (6), 421–423.

Baumrind, D. (2013). Is Milgram’s deceptive research ethically acceptable? Theoretical and Applied Ethics, 2 (2), 1–18.

Baumrind, D. (2015). When subjects become objects: The lies behind the Milgram legend. Theory and Psychology, 25 (5), 690–696.

Beauvois, J. L., Courbet, D., & Oberlé, D. (2012). The prescriptive power of the television host. A transposition of Milgram’s obedience paradigm to the context of TV game show. European Review of Applied Psychology, 62 (3), 111–119.

Berger, L. (1983). A psychological perspective on the Holocaust: Is mass murder part of human behavior? In R. L. Braham (Ed.), Perspectives on the Holocaust (pp. 19–32). Boston, MA: Kluwer-Nijhoff Publishing.

Berkowitz, L. (1999). Evil is more than banal: Situationism and the concept of evil. Personality and Social Psychology Review, 3 (3), 246–253.

Blass, T. (1991). Understanding behavior in the Milgram obedience experiment: The role of personality, situations, and their interactions. Journal of Personality and Social Psychology, 60 (3), 398–413.

Blass, T. (1993). Psychological perspectives on the perpetrators of the Holocaust: The role of situational pressures, personal dispositions, and their interactions. Holocaust and Genocide Studies, 7 (1), 30–50.

Blass, T. (2004). The man who shocked the world: The life and legacy of Stanley Milgram. New York: Basic Books.