6 Usability Testing Examples & Case Studies

Interested in analyzing real-world examples of successful usability tests?

In this article, we’ll be examining six examples of usability testing being conducted with substantial results.

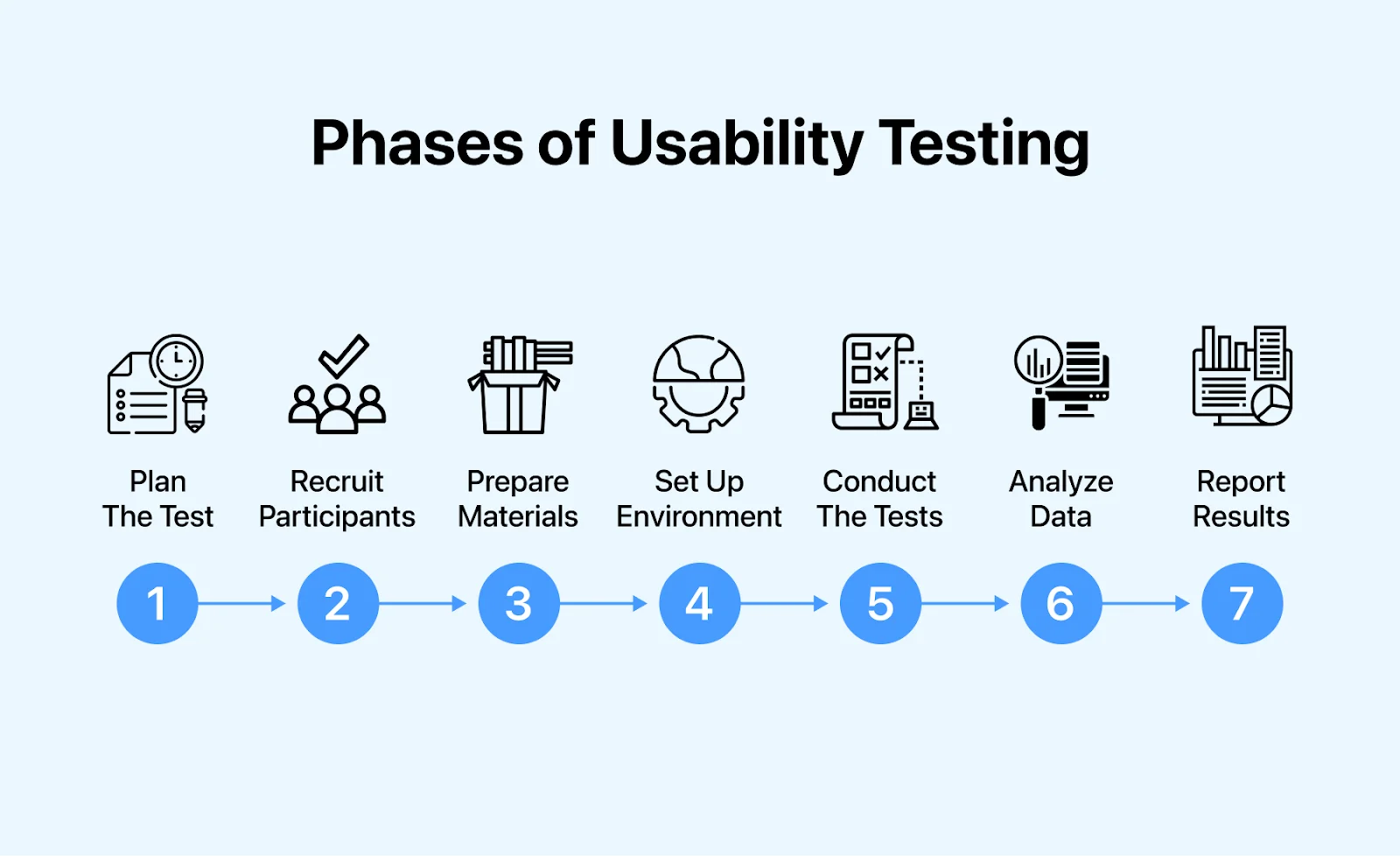

Conducting usability testing takes only seven simple steps and does not have to require a massive budget. Yet it can achieve remarkable results for companies across all industries.

If you’re someone who cannot be convinced by theory alone, this is the guide for you. These are tried-and-tested case studies from well-known companies that showcase the true power of a successful usability test.

Here are the usability testing examples and case studies we’ll be covering in this article:

- Ryanair

- McDonald’s

- SoundCloud

- AutoTrader.com

- Udemy

- Halo: Combat Evolved

Example #1: Ryanair

Ryanair is one of the world’s largest airline groups, carrying 152 million passengers each year. In 2014, the company launched Ryanair Labs, a digital innovation hub seeking to “reinvent online traveling”. To make this dream a reality, they went on a recruiting spree that resulted in a team of 200+ members. This team included user experience specialists, data analysts, software developers, and digital marketers – all working towards a common goal of improving the user experience of the Ryanair website.

What made matters more complicated, however, is that Ryanair’s website and app together received 1 billion visits per year. Working with a website this large, combined with the paper-thin profit margins of around 5% for the airline industry, Ryanair had no room for errors. To make matters even more stressful, one of the first missions for the new team included launching an entirely new website with a superior user experience.

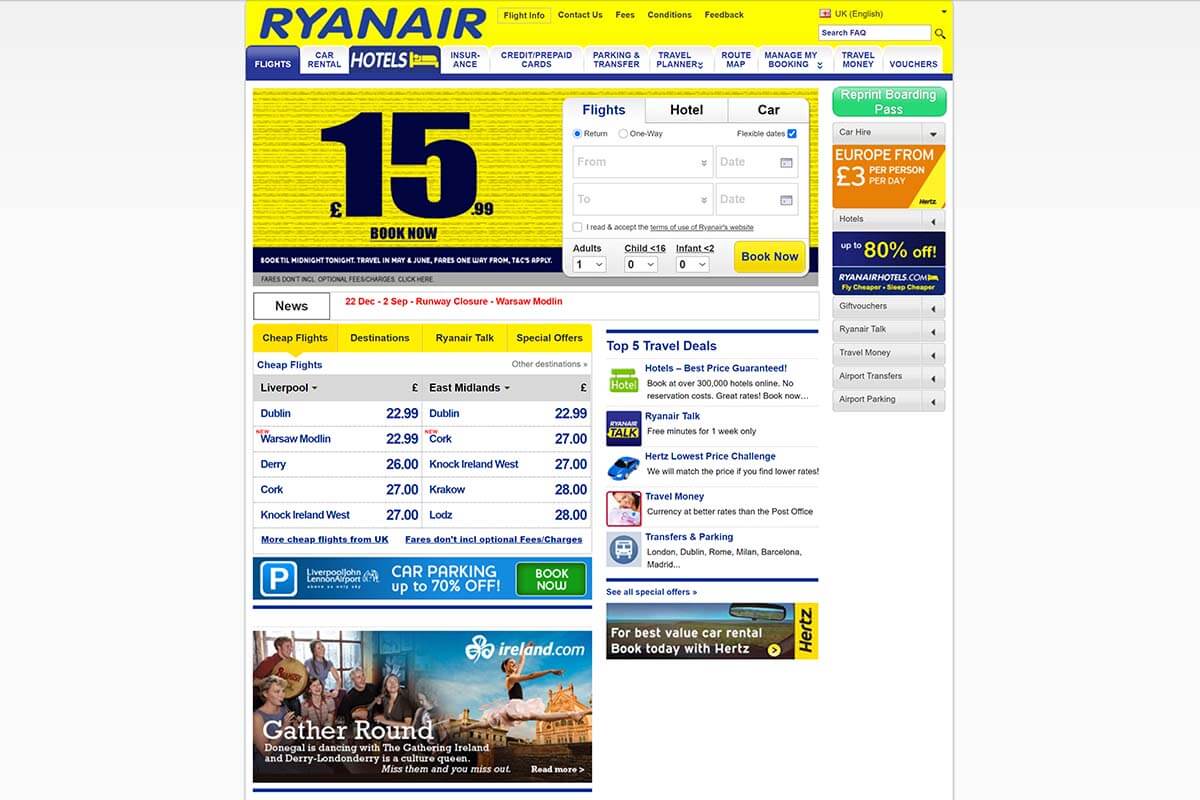

To give you a visual idea of what they were up against, take a look at their old website design:

Not great, not terrible. But the website undoubtedly needed a redesign for the 21st century.

This is what the Ryanair team set out to accomplish:

- Reducing the number of steps needed to book a flight on the website;

- Allowing customers to store their travel documents and payment cards on the website;

- Delivering a better mobile device user experience for both the website and app.

With these goals in mind, they chose remote and unmoderated usability testing types for their user tests. This by itself was a change for the team, as the Ryanair team had relied on in-lab, face-to-face testing until that point.

By collaborating with the UX agency UserZoom, however, new opportunities opened up for Ryanair. With UserZoom’s massive roster of user testers, Ryanair could access large amounts of qualitative and quantitative usability data, which they badly needed during the design process of the new website.

By going with remote unmoderated usability testing, the Ryanair team managed to:

- Reduce the time spent on usability testing;

- Conduct simultaneous usability tests with hundreds of users and without geographical barriers;

- Increase the overall reach and scale of the tests;

- Carry out tests across many devices, operating systems, and multiple focus groups.

With continuous user testing, the new website was taken through alpha and beta testing in 2015. The end result of all this work was the vastly improved look, functionality, and user experience of the new website:

Even before launch, Ryanair knew that the new website was superior. Usability tests had shown that to be the case and they had no need to rely on “educated guesses”. This usability testing example demonstrates that a well-executed testing plan can give remarkable results.

Source: Ryanair case study by UserZoom

Example #2: McDonald’s

McDonald’s is one of the world’s largest fast-food restaurant chains, with a staggering 62 million daily customers. Yet McDonald’s was late to embrace the mobile revolution, as their smartphone app launched rather recently – in August 2015. In comparison, Starbucks’ smartphone app was already a booming success and accounted for 20% of its overall revenue in 2015.

Considering the competition, McDonald’s had some catching up to do. Before the launch of their app in the UK, they decided to hire UK-based SimpleUsability to identify any usability problems before release. The test plan involved conducting 20 usability tests, where the task scenarios covered the entire customer journey from end-to-end. In addition to that, the test plan included 225 end-user interviews.

Not exactly a large-scale usability study considering the massive size of McDonald’s, but it turned out to be valuable nonetheless. A number of usability issues were detected during the study:

- Poor visibility and interactivity of the call-to-action buttons;

- Communication problems between restaurants and the smartphone app;

- Lack of order customization and favoriting impaired the overall user experience.

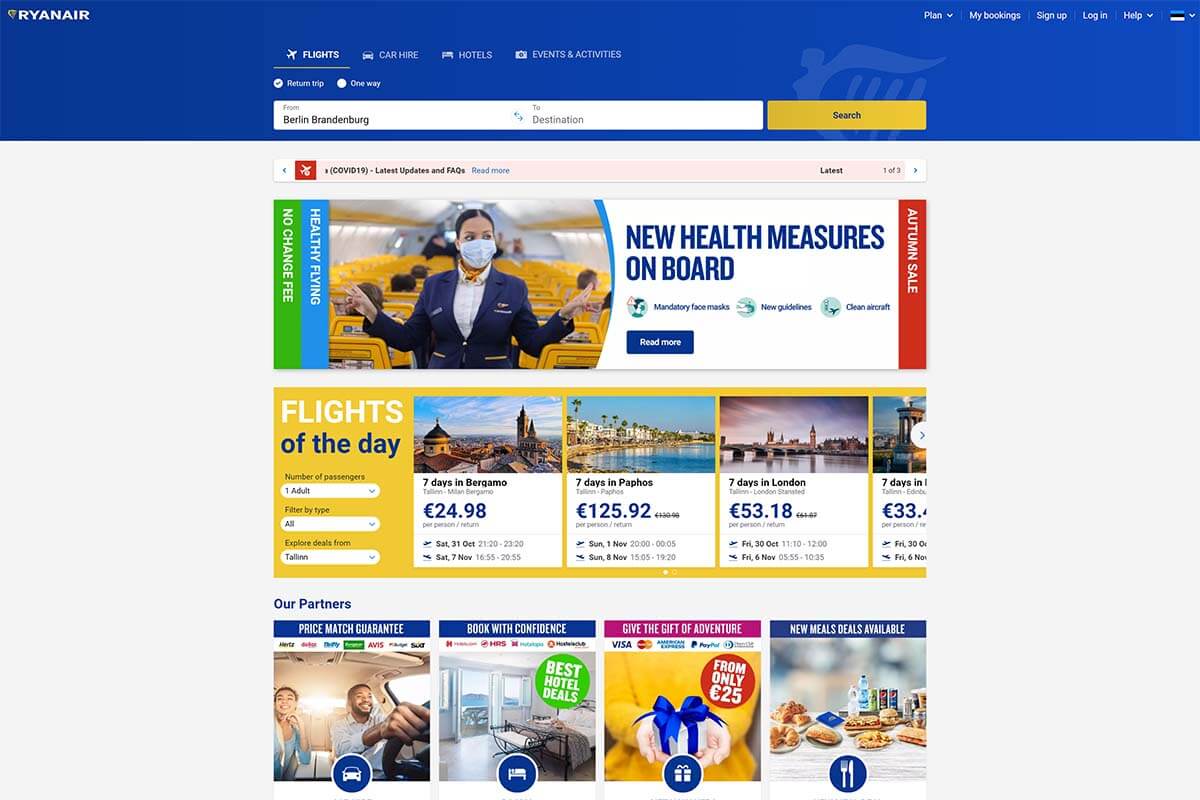

Here’s what the McDonald’s mobile app looks like today:

This case study demonstrates that investing even a tiny percentage of a company’s resources into usability testing can result in meaningful insights.

Source: McDonald’s case study by SimpleUsability

Example #3: SoundCloud

SoundCloud is the world’s largest music and audio distribution platform, with over 175 million unique monthly listeners. In 2019, SoundCloud hired test IO, a Berlin-based usability testing agency, to conduct continuous usability testing for the SoundCloud mobile app. With SoundCloud’s rigorous development schedule, the company needed regular human user testers to make sure that all new updates work across all devices and OS versions.

The key research objectives for SoundCloud’s regular usability studies were to:

- Provide a user-friendly listening experience for mobile app users;

- Identify and fix software bugs before wide release;

- Improve the mobile app development cycle.

In the very first usability tests, more than 150 usability issues (including 11 critical issues) were discovered. These issues likely wouldn’t have been discovered through internal bug testing. That is because the user testers experimented on the app from a plethora of devices and geographical locations (144 devices and 22 countries). Without remote usability testing, a testing scale as large as this would have been very difficult and expensive to achieve.

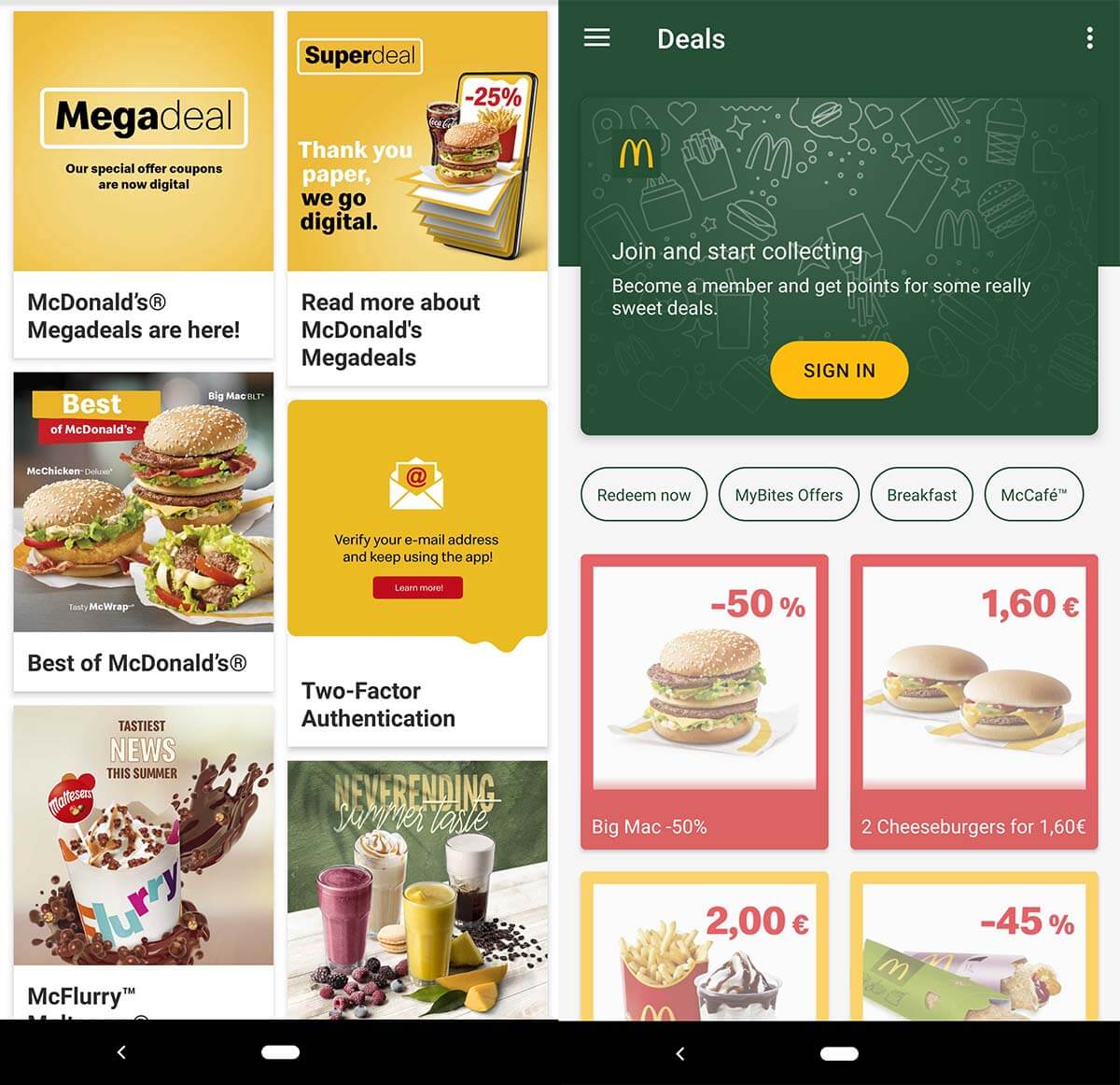

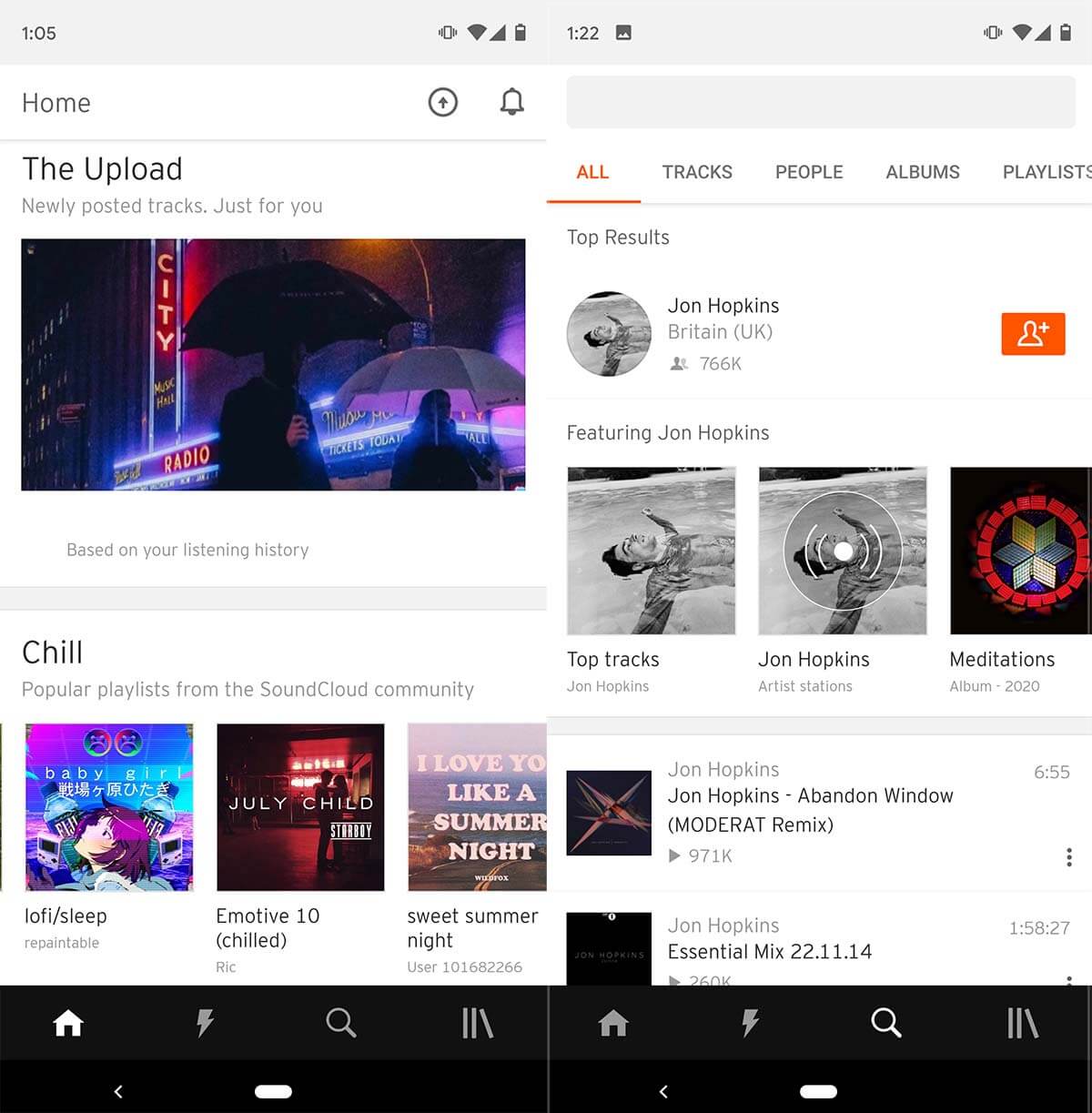

Today, SoundCloud’s mobile app looks like this:

This case study demonstrates the power of regular usability testing in products with frequent updates.

Source: SoundCloud case study (.pdf) by test IO

Example #4: AutoTrader.com

AutoTrader.com is one of the world’s largest online marketplaces for buying and selling used cars, with over 28 million monthly visitors. The mission of AutoTrader’s website is to empower car shoppers in the researching process by giving them all the tools necessary to make informed decisions about vehicle purchases.

Sounds fantastic.

However, with competitors such as CarGurus gaining increasing amounts of market share in the online car shopping industry, AutoTrader had to reinvent itself to stay competitive.

In e-commerce, competitors with a superior website can gain massive followings in an instant. Fifty years ago this was not the case – well-established car marketplaces had massive car parks all over the country, and a newcomer would have few ways to compete.

Nowadays, however, it’s all about user experience. Digital shoppers will flock to whichever site offers a better user experience. Websites unwilling or unable to improve their user experience over time will get left in the dust. No matter how big or small they are.

Going back to AutoTrader, the majority of its website traffic comes from organic Google search, meaning that in addition to website usability, search engine optimization (SEO) is a major priority for the company. According to John Mueller from Google, changing the layout of a website can affect rankings, and that is why AutoTrader had to be careful with making any large-scale changes to their website.

AutoTrader did not have a large team of user researchers nor a massive budget dedicated to usability testing. But they did have Bradley Miller – Senior User Experience Researcher at the company. To test the usability of AutoTrader, Miller decided to partner with UserTesting.com to conduct live user interviews with AutoTrader users.

Through these live user interviews, Miller was able to:

- Find and connect with target personas;

- Communicate with car buyers from across the country;

- Reduce the costs of conducting usability tests while increasing the insights gained.

From these remote live user interviews, Miller learned that the customer journey almost always begins from a single source: search engines. Here, it’s important to note that search engines rarely direct users to the homepage. Instead, they drive traffic to the inner pages of websites. In the case of AutoTrader, for example, only around 20% of search engine traffic goes to the homepage (data from SEMrush).

These insights helped AutoTrader redesign their inner pages to better match the customer journey. They no longer assumed that any inner page visitor already has a greater contextual knowledge of the website. Instead, they started to treat each page as if it’s the initial point of entry by providing more contextual information right then and there inside the inner page.

This usability testing example demonstrates not only the power of user interviews but also the importance of understanding your customer journey and SEO.

Source: AutoTrader case study by UserTesting.com

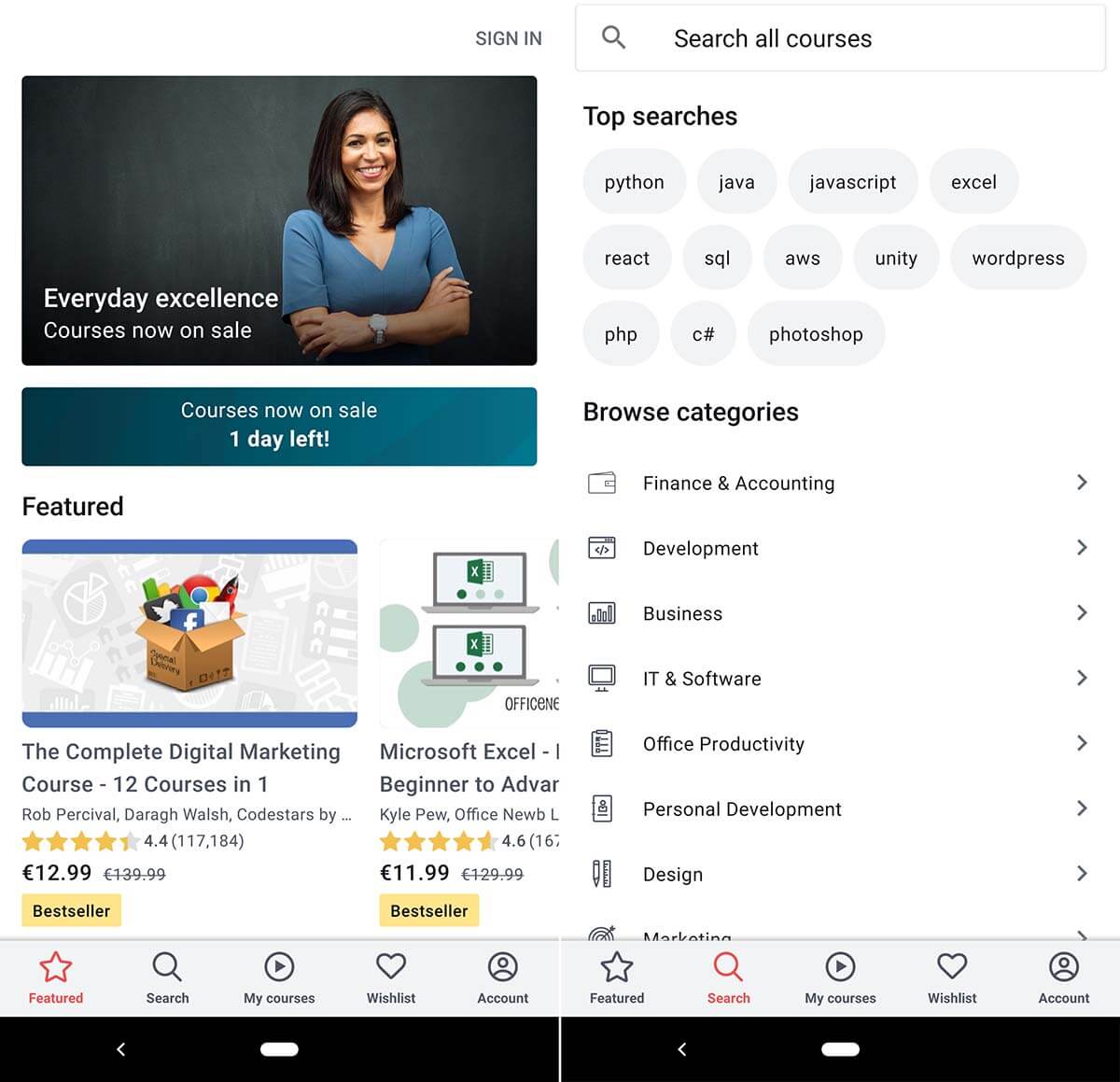

Example #5: Udemy

Udemy is one of the world’s largest online learning platforms with over 40 million students across the world. The e-learning giant also has a massively popular smartphone app, and the usability testing example in question was aimed at the smartphone users of Udemy.

To find out when, where, and why Udemy users chose to opt for the mobile app rather than the desktop version, Udemy conducted user tests. As Udemy is a 100% digital company, they chose fully remote unmoderated user testing as their testing method.

Test participants were asked to take small videos showing where they were located and what tasks they were focused on at the time of learning and recording.

What the user researchers found was that their initial theory of “users prefer using the mobile app while on the go” was false. Instead, what they found was that the majority of mobile app users were stationary. Udemy users, for various reasons, used the mobile app at home on the couch, or in a cafeteria. The key findings of this user test were utilized for the next year’s product and feature development.

This is what Udemy’s mobile app looks like today:

This usability testing case study demonstrates that a company’s perception of target audience behavior does not always match the behavior of the real end-users. And, that is why user testing is crucial.

Source: Udemy case study by UserTesting.com

Example #6: Halo: Combat Evolved

“Halo: Combat Evolved” was the first video game in the massively popular Halo franchise. It was developed by Bungie and published by Microsoft Game Studios in 2001. Within 10 years after its release, the Halo games sold more than 46 million copies worldwide and generated Microsoft more than $5 billion in video game and hardware sales. Owing it all to the usability test we’re about to discuss may be a bit of a stretch, but usability testing the game during development was undeniably one of the factors that helped the franchise take off like a rocket.

In this usability study, the Halo team gathered a focus group of console gamers to try out their game’s prototype to see if they had fun playing the game. And, if they did not have fun – they wanted to find out what prevented them from doing so.

In the usability sessions, the researchers placed test subjects (players) in a large outdoor environment with enemies waiting for them across the open space.

The designers of the game expected the players to sprint closer towards the enemies, sparking a massive battle full of action and excitement. But the test participants had a different plan in mind. Instead of putting themselves in danger by sprinting closer, they would stay at a maximum distance from the enemies and shoot from far across the outdoor space. While this was a safe and effective strategy, it proved to be rather uneventful and boring for the players.

To entice players to enjoy combat up close, the user researchers decided that changes would have to be made. Their solution – changing the size and color of the aiming indicator in the center of the screen to notify players when they were too far away from enemies.

Here, you can see the finalized aiming indicator in action:

Subsequent usability tests proved these changes to be effective, as the majority of user testers now engaged in combat from a closer distance.

User testing is not restricted to any particular industry, OS, or platform. Testing user experience is an invaluable tool for any product – not just for websites or mobile apps.

This example of usability testing from the video game industry shows that players (users) will optimize the fun out of a game if given the chance. It’s up to the designers to bring the fun back through well-designed game mechanics and notifications.

Source: “Designing for Fun – User-Testing Case Studies” by Randy J. Pagulayan

The Beginner’s Guide to Usability Testing [+ Sample Questions]

Published: July 28, 2021

In practically any discipline, it's a good idea to have others evaluate your work with fresh eyes, and this is especially true in user experience and web design. Otherwise, your partiality for your own work can skew your perception of it. Learning directly from the people that your work is actually for — your users — is what enables you to craft the best user experience possible.

UX and design professionals leverage usability testing to get user feedback on their product or website’s user experience all the time. In this post, you'll learn:

- What usability testing is

- Its purpose and goals

- Scenarios where it can work

- Real-life examples and case studies

- How to conduct one of your own

- Scripted questions you can use along the way

What is usability testing?

Usability testing is a method of evaluating a product or website’s user experience. By testing the usability of their product or website with a representative group of their users or customers, UX researchers can determine if their actual users can easily and intuitively use their product or website.

UX researchers will usually conduct usability studies on each iteration of their product from its early development to its release.

During a usability study, the moderator asks participants in their individual user session to complete a series of tasks while the rest of the team observes and takes notes. By watching their actual users navigate their product or website and listening to their praises and concerns about it, they can see when the participants can quickly and successfully complete tasks and where they’re enjoying the user experience, encountering problems, and experiencing confusion.

After conducting their study, they’ll analyze the results and report any interesting insights to the project lead.


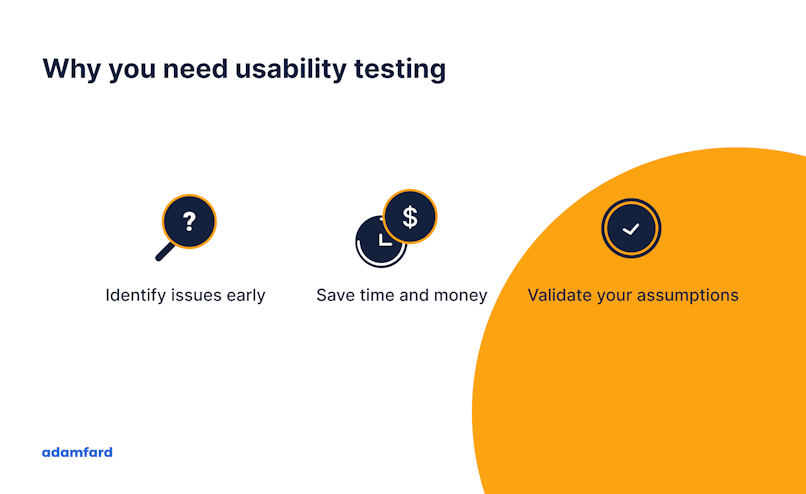

What is the purpose of usability testing?

Usability testing allows researchers to uncover any problems with their product's user experience, decide how to fix these problems, and ultimately determine if the product is usable enough.

Identifying and fixing these early issues saves the company both time and money: Developers don’t have to overhaul the code of a poorly designed product that’s already built, and the product team is more likely to release it on schedule.

Benefits of Usability Testing

Usability testing has five major advantages over the other methods of examining a product's user experience (such as questionnaires or surveys):

- Usability testing provides an unbiased, accurate, and direct examination of your product or website’s user experience. By testing its usability on a sample of actual users who are detached from the amount of emotional investment your team has put into creating and designing the product or website, their feedback can resolve most of your team’s internal debates.

- Usability testing is convenient. To conduct your study, all you have to do is find a quiet room and bring in portable recording equipment. If you don’t have recording equipment, someone on your team can just take notes.

- Usability testing can tell you what your users do on your site or product and why they take these actions.

- Usability testing lets you address your product’s or website’s issues before you spend a ton of money creating something that ends up having a poor design.

- For your business, intuitive design boosts customer usage and their results, driving demand for your product.

Usability Testing Scenario Examples

Usability testing sounds great in theory, but what value does it provide in practice? Here's what it can do to actually make a difference for your product:

1. Identify points of friction in the usability of your product.

As Brian Halligan said at INBOUND 2019, "Dollars flow where friction is low." This is just as true in UX as it is in sales or customer service. The more friction your product has, the more reason your users will have to find something that's easier to use.

Usability testing can uncover points of friction from customer feedback.

For example: "My process begins in Google Drive. I keep switching between windows and making multiple clicks just to copy and paste from Drive into this interface."

Even though the product team may have had that task in mind when they created the tool, seeing it in action and hearing the user's frustration uncovered a use case that the tool didn't compensate for. It might lead the team to solve for this problem by creating an easy import feature or way to access Drive within the interface to reduce the number of clicks the user needs to make to accomplish their task.

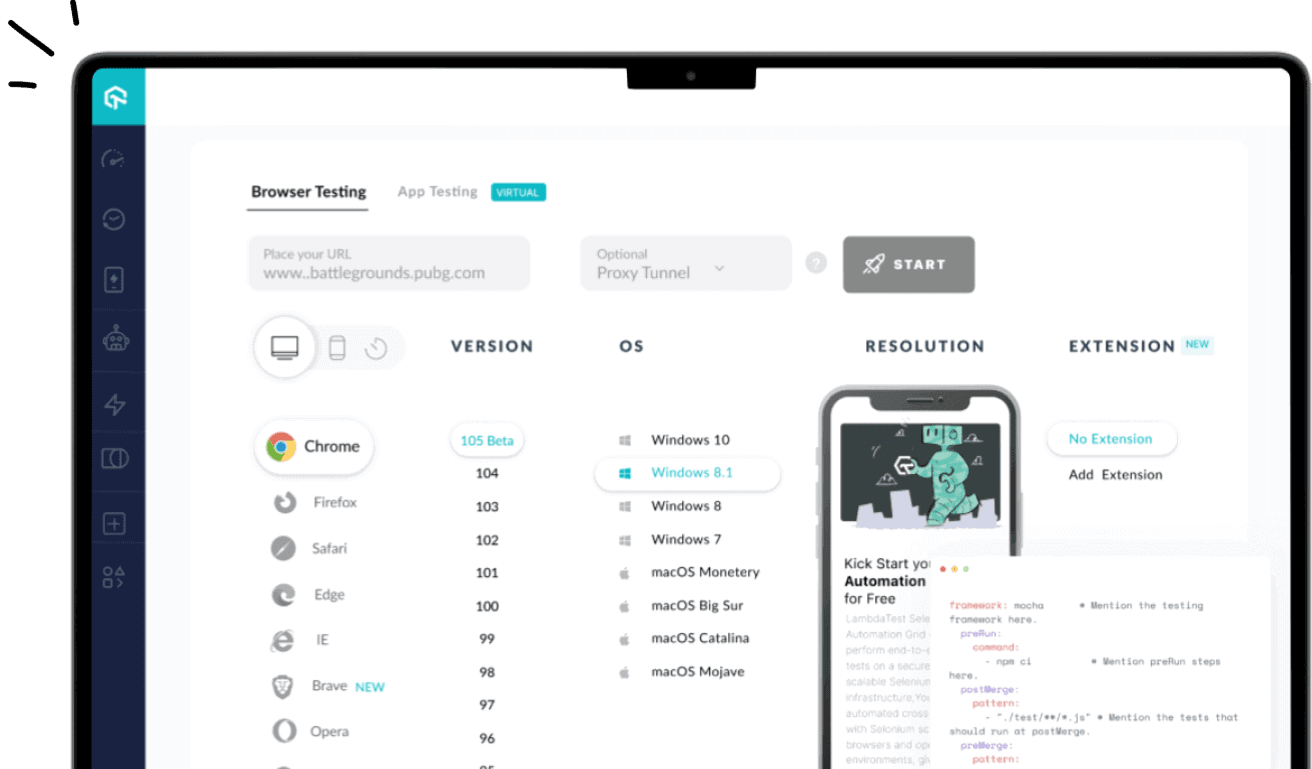

2. Stress test across many environments and use cases.

Our products don't exist in a vacuum, and sometimes development environments are unable to compensate for all the variables. Getting the product out and tested by users can uncover bugs that you may not have noticed while testing internally.

For example: "The check boxes disappear when I click on them."

Let's say that the team investigates why this might be, and they discover that the user is on a browser that's not commonly used (or a browser version that's outdated).

If the developers only tested across the browsers used in-house, they may have missed this bug, and it could have resulted in customer frustration.

3. Provide diverse perspectives from your user base.

While individuals in our customer bases have a lot in common (in particular, the things that led them to need and use our products), each individual is unique and brings a different perspective to the table. These perspectives are invaluable in uncovering issues that may not have occurred to your team.

For example: "I can't find where I'm supposed to click."

Upon further investigation, it's possible that this feedback came from a user who is color blind, leading your team to realize that the color choices did not create enough contrast for this user to navigate properly.

Insights from diverse perspectives can lead to design, architectural, copy, and accessibility improvements.

4. Give you clear insights into your product's strengths and weaknesses.

You likely have competitors in your industry whose products are better than yours in some areas and worse than yours in others. These variations in the market lead to competitive differences and opportunities. User feedback can help you close the gap on critical issues and identify what positioning is working.

For example: "This interface is so much easier to use and more attractive than [competitor product]. I just wish that I could also do [task] with it."

Two scenarios are possible based on that feedback:

- Your product can already accomplish the task the user wants. You just have to make it clear that the feature exists by improving copy or navigation.

- You have a really good opportunity to incorporate such a feature in future iterations of the product.

5. Inspire you with potential future additions or enhancements.

Speaking of future iterations, that brings us to the next example of how usability testing can make a difference for your product: The feedback that you gather can inspire future improvements to your tool.

It's not just about rooting out issues but also envisioning where you can go next that will make the most difference for your customers. And who better to ask than your prospective and current customers themselves?

Usability Testing Examples & Case Studies

Now that you have an idea of the scenarios in which usability testing can help, here are some real-life examples of it in action:

1. User Fountain + Satchel

Satchel is a developer of education software, and their goal was to improve the experience of the site for their users. Consulting agency User Fountain conducted a usability test focusing on one question: "If you were interested in Satchel's product, how would you progress with getting more information about the product and its pricing?"

During the test, User Fountain noted significant frustration as users attempted to complete the task, particularly when it came to locating pricing information. Only 80% of users were successful.


This led User Fountain to create the hypothesis that a "Get Pricing" link would make the process clearer for users. From there, they tested a new variation with such a link against a control version. The variant won, resulting in a 34% increase in demo requests.

By testing a hypothesis based on real feedback, friction was eliminated for the user, bringing real value to Satchel.

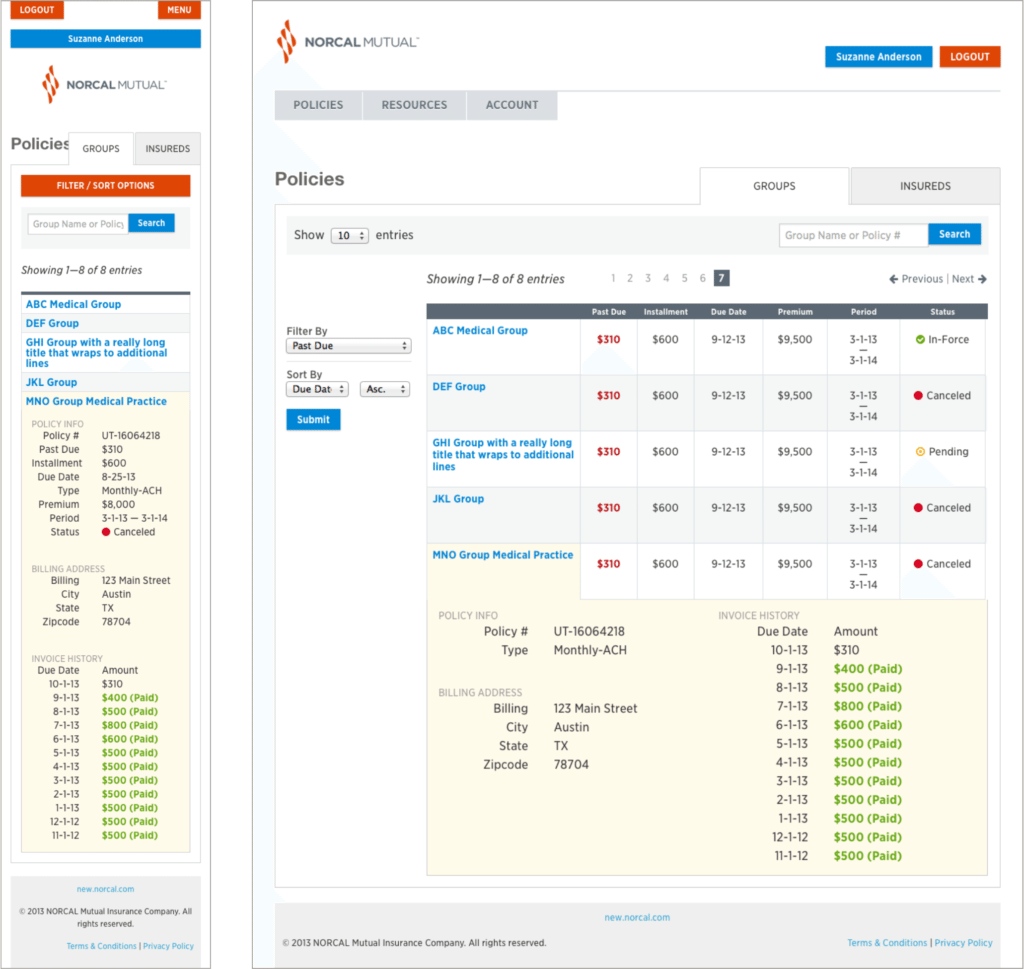

2. Kylie.Design + Digi-Key

Ecommerce site Digi-Key approached consultant Kylie.Design to uncover which site interactions had the highest success rates and what features those interactions had in common.

They conducted more than 120 tests and recorded:

- Click paths from each user

- Which actions were most common

- The success rates for each

This as well as the written and verbal feedback provided by participants informed the new design, which resulted in increasing purchaser success rates from 68.2% to 83.3%.

In essence, Digi-Key was able to identify their most successful features and double-down on them, improving the experience and their bottom line.
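A before-and-after success rate like the one quoted above can be read as a percentage-point change or as a relative change, and the two are easy to confuse in a report. The sketch below shows the arithmetic for both; the function name `describe_change` is a hypothetical helper for illustration, not something taken from the case study.

```python
def describe_change(before_pct, after_pct):
    """Return (percentage-point change, relative change in percent)."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive to compute a relative change")
    points = after_pct - before_pct        # absolute difference in points
    relative = points / before_pct * 100   # change relative to the starting rate
    return round(points, 1), round(relative, 1)


# Success rates quoted for the Digi-Key redesign.
points, relative = describe_change(68.2, 83.3)
print(f"{points} percentage points, {relative}% relative increase")
```

For the Digi-Key figures this works out to roughly 15 percentage points, or about a 22% relative increase. Stating which of the two you mean keeps a findings report unambiguous.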

3. Sparkbox + An Academic Medical Center

An academic medical center in the Midwest partnered with consulting agency Sparkbox to improve the patient experience on their homepage, where some features were suffering from low engagement.

Sparkbox conducted a usability study to determine what users wanted from the homepage and what didn't meet their expectations. From there, they were able to propose solutions to increase engagement.

For example, one key action was the ability to access electronic medical records. The new design based on user feedback increased the success rate from 45% to 94%.

This is a great example of putting the user's pains and desires front-and-center in a design.

The 9 Phases of a Usability Study

1. Decide which part of your product or website you want to test.

Do you have any pressing questions about how your users will interact with certain parts of your design, like a particular interaction or workflow? Or are you wondering what users will do first when they land on your product page? Gather your thoughts about your product or website’s pros, cons, and areas of improvement, so you can create a solid hypothesis for your study.

2. Pick your study’s tasks.

Your participants' tasks should be your user’s most common goals when they interact with your product or website, like making a purchase.

3. Set a standard for success.

Once you know what to test and how to test it, make sure to set clear criteria to determine success for each task. For instance, when I was in a usability study for HubSpot’s Content Strategy tool, I had to add a blog post to a cluster and report exactly what I did. Setting a threshold of success and failure for each task lets you determine if your product's user experience is intuitive enough or not.
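One way to make a success threshold concrete is to write it down as a check you can run over the session records. The following is a minimal sketch, assuming each attempt is logged with a completion flag and a time on task; the record shape, the task name, and the thresholds (80% completion, 120-second median) are illustrative assumptions, not values from any real study.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskResult:
    """One participant's attempt at one task (hypothetical record shape)."""
    task: str
    completed: bool
    seconds: float


def task_passes(results, task, min_success_rate=0.8, max_median_seconds=120.0):
    """Return True if the task meets both illustrative thresholds."""
    attempts = [r for r in results if r.task == task]
    if not attempts:
        raise ValueError(f"no attempts recorded for task {task!r}")
    success_rate = sum(r.completed for r in attempts) / len(attempts)
    completed_times = [r.seconds for r in attempts if r.completed]
    if not completed_times:
        return False  # nobody finished, so the task cannot pass
    return (success_rate >= min_success_rate
            and median(completed_times) <= max_median_seconds)


# Made-up session data for a five-participant study.
results = [
    TaskResult("add post to cluster", True, 60),
    TaskResult("add post to cluster", True, 90),
    TaskResult("add post to cluster", True, 100),
    TaskResult("add post to cluster", True, 150),
    TaskResult("add post to cluster", False, 240),
]
print(task_passes(results, "add post to cluster"))
```

Agreeing on the thresholds before the sessions start matters more than the exact numbers, because it stops the team from moving the goalposts after seeing the results.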

4. Write a study plan and script.

At the beginning of your script, you should include the purpose of the study, if you’ll be recording, some background on the product or website, questions to learn about the participants’ current knowledge of the product or website, and, finally, their tasks. To make your study consistent, unbiased, and scientific, moderators should follow the same script in each user session.

5. Delegate roles.

During your usability study, the moderator has to remain neutral, carefully guiding the participants through the tasks while strictly following the script. Whoever on your team is best at staying neutral, not giving into social pressure, and making participants feel comfortable while pushing them to complete the tasks should be your moderator.

Note-taking during the study is also just as important. If there’s no recorded data, you can’t extract any insights that’ll prove or disprove your hypothesis. Your team’s most attentive listener should be your note-taker during the study.

6. Find your participants.

Screening and recruiting the right participants is the hardest part of usability testing. Most usability experts suggest you should only test five participants during each study, but your participants should also closely resemble your actual user base. With such a small sample size, it’s hard to replicate your actual user base in your study.

To recruit the ideal participants for your study, create as detailed and specific a persona as you possibly can and incentivize them to participate with a gift card or another monetary reward.

Recruiting colleagues from other departments who would potentially use your product is also another option. But you don’t want any of your team members to know the participants because their personal relationship can create bias -- since they want to be nice to each other, the researcher might help a user complete a task or the user might not want to constructively criticize the researcher’s product design.

7. Conduct the study.

During the actual study, you should ask your participants to complete one task at a time, without your help or guidance. If the participant asks you how to do something, don’t say anything. You want to see how long it takes users to figure out your interface.

Asking participants to “think out loud” is also an effective tactic -- you’ll know what’s going through a user’s head when they interact with your product or website.

After they complete each task, ask for their feedback, like if they expected to see what they just saw, if they would’ve completed the task if it wasn’t a test, if they would recommend your product to a friend, and what they would change about it. This qualitative data can pinpoint more pros and cons of your design.

8. Analyze your data.

You’ll collect a ton of qualitative data after your study. Analyzing it will help you discover patterns of problems, gauge the severity of each usability issue, and provide design recommendations to the engineering team.

When you analyze your data, make sure to pay attention to both the users’ performance and their feelings about the product. It’s not unusual for a participant to quickly and successfully achieve your goal but still feel negatively about the product experience.
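Finding patterns and gauging severity can start with a simple tally of the notes. The sketch below groups observations by issue, counts how many distinct participants hit each one, and sorts by severity and then by reach. The three severity labels and the sample observations are assumptions for illustration; use whatever scale your team has agreed on.

```python
from collections import defaultdict

# Illustrative severity scale; lower rank sorts first.
SEVERITY_RANK = {"critical": 0, "major": 1, "minor": 2}


def rank_issues(observations):
    """Rank issues from (participant_id, issue, severity) note-taker records."""
    affected = defaultdict(set)
    worst = {}
    for participant, issue, level in observations:
        if level not in SEVERITY_RANK:
            raise ValueError(f"unknown severity {level!r}")
        affected[issue].add(participant)
        # Keep the most severe rating any participant's session produced.
        if issue not in worst or SEVERITY_RANK[level] < SEVERITY_RANK[worst[issue]]:
            worst[issue] = level
    rows = [(issue, worst[issue], len(people)) for issue, people in affected.items()]
    # Most severe first, then most participants affected, then by name.
    return sorted(rows, key=lambda row: (SEVERITY_RANK[row[1]], -row[2], row[0]))


# Made-up observations from a four-participant study.
observations = [
    ("P1", "pricing link hard to find", "major"),
    ("P2", "pricing link hard to find", "major"),
    ("P3", "pricing link hard to find", "critical"),
    ("P1", "checkbox disappears on click", "critical"),
    ("P4", "low contrast on button", "minor"),
    ("P2", "low contrast on button", "minor"),
]
for issue, severity, count in rank_issues(observations):
    print(f"{severity:8} {count} participant(s): {issue}")
```

A ranking like this covers only the performance side. Participants' comments and feelings about the product still need to be read alongside it.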

9. Report your findings.

After extracting insights from your data, report the main takeaways and lay out the next steps for improving your product or website’s design and the enhancements you expect to see during the next round of testing.

The 3 Most Common Types of Usability Tests

1. Hallway/Guerilla Usability Testing

This is where you set up your study somewhere with a lot of foot traffic. It allows you to ask randomly selected people who have most likely never even heard of your product or website -- like passers-by -- to evaluate its user experience.

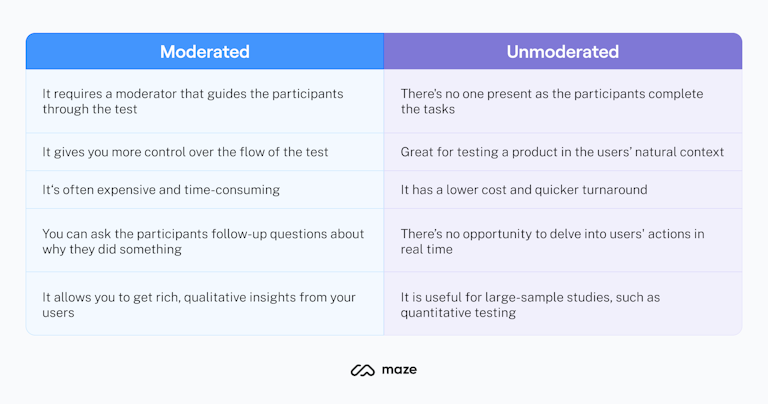

2. Remote/Unmoderated Usability Testing

Remote/unmoderated usability testing has two main advantages: it uses third-party software to recruit target participants for your study, so you can spend less time recruiting and more time researching. It also allows your participants to interact with your interface by themselves and in their natural environment -- the software can record video and audio of your user completing tasks.

Letting participants interact with your design in their natural environment with no one breathing down their neck can give you more realistic, objective feedback. When you’re in the same room as your participants, it can prompt them to put more effort into completing your tasks since they don’t want to seem incompetent around an expert. Your perceived expertise can also lead them to try to please you instead of being honest when you ask for their opinion, skewing their reactions and feedback on your user experience.

3. Moderated Usability Testing

Moderated usability testing also has two main advantages: interacting with participants in person or through a video call lets you ask them to elaborate on their comments if you don’t understand them, which is impossible to do in an unmoderated usability study. You’ll also be able to help your users understand the task and keep them on track if your instructions don’t initially register with them.

Usability Testing Script & Questions

Following one script or even a template of questions for every one of your usability studies wouldn't make any sense -- each study's subject matter is different. You'll need to tailor your questions to the things you want to learn, but most importantly, you'll need to know how to ask good questions.

1. When you [action], what's the first thing you do to [goal]?

Questions such as this one give insight into how users are inclined to interact with the tool and what their natural behavior is.

Julie Fischer, one of HubSpot's Senior UX researchers, gives this advice: "Don't ask leading questions that insert your own bias or opinion into the participants' mind. They'll end up doing what you want them to do instead of what they would do by themselves."

For example, "Find [x]" is better than "Are you able to easily find [x]?" The latter inserts connotation that may affect how they use the product or answer the question.

2. How satisfied are you with the [attribute] of [feature]?

Avoid leading the participants by asking questions like "Is this feature too complicated?" Instead, gauge their satisfaction on a Likert scale that provides a number range from highly unsatisfied to highly satisfied. This will provide a less biased result than leading them to a negative answer they may not otherwise have had.
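Once the ratings are collected, a small summary makes them easier to compare across features. This is a minimal sketch assuming a five-point scale coded 1 to 5; the intermediate labels, the sample responses, and the `satisfied_share` measure (the share of 4s and 5s) are illustrative choices rather than a fixed standard.

```python
from collections import Counter

# Assumed five-point coding; only the two end labels come from the text above.
LABELS = {
    1: "highly unsatisfied",
    2: "unsatisfied",
    3: "neutral",
    4: "satisfied",
    5: "highly satisfied",
}


def summarize_likert(responses):
    """Summarize 1-5 satisfaction ratings for one [attribute] of one [feature]."""
    if not responses:
        raise ValueError("no responses to summarize")
    invalid = [r for r in responses if r not in LABELS]
    if invalid:
        raise ValueError(f"ratings outside the 1-5 scale: {invalid}")
    counts = Counter(responses)
    total = len(responses)
    return {
        "mean": sum(responses) / total,
        "distribution": {LABELS[score]: counts.get(score, 0) for score in LABELS},
        "satisfied_share": (counts[4] + counts[5]) / total,
    }


# Made-up ratings from five participants.
summary = summarize_likert([4, 5, 3, 4, 2])
print(summary)
```

With only a handful of participants, the distribution is usually more informative than the mean, so it is worth reporting both.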

3. How do you use [feature]?

There may be multiple ways to achieve the same goal or utilize the same feature. This question will help uncover how users interact with a specific aspect of the product and what they find valuable.

4. What parts of [the product] do you use the most? Why?

This question is meant to help you understand the strengths of the product and what about it creates raving fans. This will indicate what you should absolutely keep and perhaps even lead to insights into what you can improve for other features.

5. What parts of [the product] do you use the least? Why?

This question is meant to uncover the weaknesses of the product or the friction in its use. That way, you can rectify any issues or plan future improvements to close the gap between user expectations and reality.

6. If you could change one thing about [feature], what would it be?

Because it's so similar to #5, you may get some of the same answers. However, you'd be surprised about the aspirational things that your users might say here.

7. What do you expect [action/feature] to do?

Here's another tip from Julie Fischer:

"When participants ask 'What will this do?' it's best to reply with the question 'What do you expect it to do?' rather than telling them the answer."

Doing this can uncover user expectations as well as clarity issues with the copy.
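Answers to a Likert-scale question such as #2 are easiest to compare once they are summarized. The following is a minimal sketch, assuming a 5-point scale coded 1 to 5; the labels, the function name `summarize_likert`, and the sample responses are illustrative assumptions, not part of any HubSpot template.

```python
from collections import Counter
from statistics import median

# Hypothetical 5-point scale labels; adjust to match your own survey.
LIKERT_LABELS = {
    1: "Highly unsatisfied",
    2: "Unsatisfied",
    3: "Neutral",
    4: "Satisfied",
    5: "Highly satisfied",
}


def summarize_likert(responses):
    """Return the distribution, median, and share of satisfied answers (4 or 5)."""
    if not responses:
        raise ValueError("need at least one response")
    if any(r not in LIKERT_LABELS for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    counts = Counter(responses)
    distribution = {
        label: counts.get(score, 0) for score, label in LIKERT_LABELS.items()
    }
    satisfied_share = (counts[4] + counts[5]) / len(responses)
    return {
        "distribution": distribution,
        "median": median(responses),
        "satisfied_share": satisfied_share,
    }


# Made-up responses from eight participants, for illustration only.
example_responses = [4, 5, 3, 2, 4, 4, 1, 5]
summary = summarize_likert(example_responses)
print(summary)
```

Reporting the median and the full distribution, rather than only an average, keeps a few extreme answers from hiding how most participants responded.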

Your Work Could Always Use a Fresh Perspective

Letting another person review and possibly criticize your work takes courage -- no one wants a bruised ego. But most of the time, when you allow people to constructively criticize or even rip apart your article or product design, especially when your work is intended to help these people, your final result will be better than you could've ever imagined.

Editor's note: This post was originally published in August 2018 and has been updated for comprehensiveness.


You’re on a business trip in Oakland, CA. You've been working late in downtown and now you're looking for a place nearby to grab a late dinner. You decided to check Zomato to try and find somewhere to eat. (Don't begin searching yet).

- Look around on the home page. Does anything seem interesting to you?

- How would you go about finding a place to eat near you in Downtown Oakland? You want something kind of quick, open late, not too expensive, and with a good rating.

- What do the reviews say about the restaurant you've chosen?

- What was the most important factor for you in choosing this spot?

- You're currently close to the 19th St BART station, and it's 9PM. How would you get to this restaurant? Do you think you'll be able to make it before closing time?

- Your friend recommended you to check out a place called Belly while you're in Oakland. Try to find where it is, when it's open, and what kind of food options they have.

- Now go to any restaurant's page and try to leave a review (don't actually submit it).

What was the worst thing about your experience?

It was hard to find the bart station. The collections not being able to be sorted was a bit of a bummer

What other aspects of the experience could be improved?

Feedback from the owners would be nice

What did you like about the website?

The flow was good, lots of bright photos

What other comments do you have for the owner of the website?

I like that you can sort by what you are looking for and i like the idea of collections

You're going on a vacation to Italy next month, and you want to learn some basic Italian for getting around while there. You decided to try Duolingo.

- Please begin by downloading the app to your device.

- Choose Italian and get started with the first lesson (stop once you reach the first question).

- Now go all the way through the rest of the first lesson, describing your thoughts as you go.

- Get your profile set up, then view your account page. What information and options are there? Do you feel that these are useful? Why or why not?

- After a week in Italy, you're going to spend a few days in Austria. How would you take German lessons on Duolingo?

- What other languages does the app offer? Do any of them interest you?

I felt like there could have been a little more of an instructional component to the lesson.

It would be cool if there were some feature that could allow two learners studying the same language to take lessons together. I imagine that their screens would be synced and they could go through lessons together and chat along the way.

Overall, the app was very intuitive to use and visually appealing. I also liked the option to connect with others.

Overall, the app seemed very helpful and easy to use. I feel like it makes learning a new language fun and almost like a game. It would be nice, however, if it contained more of an instructional portion.


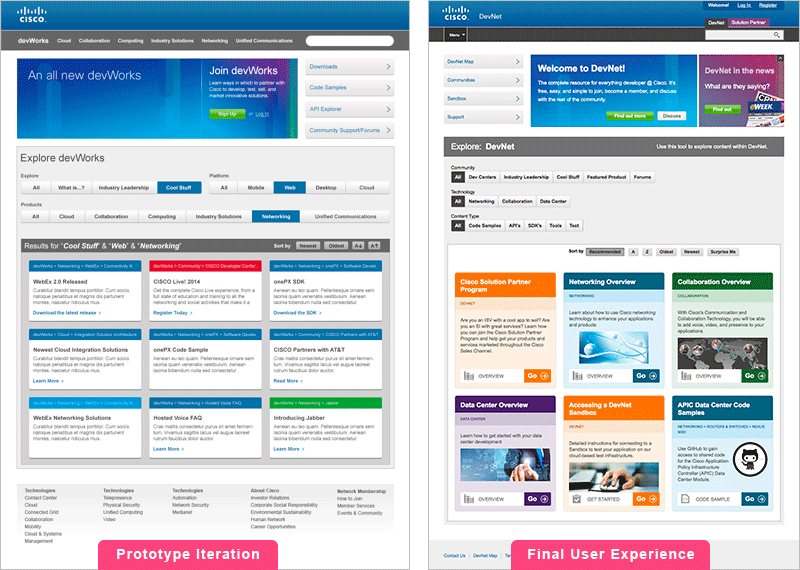

A case study in competitive usability testing (Part 1)

This post is Part 1 of a 2-part competitive usability study. In Part 1, we deal with how to set up a competitive usability testing study. In Part 2, we showcase results from the study we ran, with insights on how to approach your data and what to look for in a competitive UX study.

There are many good reasons to do competitive usability testing. Watching users try out a competitor’s website or app can show you what their designs are doing well, and where they’re lacking; which features competitors have that users really like; how they display and organize information and options, and how well it works.

A less obvious, but perhaps even more valuable reason, is that competitive usability testing improves the quality of feedback on your own website or app. By giving users something to compare your interface to, it sharpens their critiques and increases their awareness.

Read more: 5 secrets to comparative usability testing

If a user has only experienced your website’s way of doing something, for example, it’s easy for them to take it for granted. As long as they were able to complete what was asked of them, they may have relatively little to say about how it could be improved. But send them to a competitor’s site and have them complete the same tasks, and they’ll almost certainly have a lot more to say about whose way was better, in what ways, and why they liked it more.

Thanks to this effect alone, the feedback you collect about your own designs will be much more useful and insight-dense.

Quantifying the differences

Not only can competitive user testing get you more incisive feedback on what and how users think, it’s also a great opportunity to quantitatively measure the effectiveness of different pages, flows, and features on your site or app, and to quantify users’ attitudes towards them.

Quantitative metrics and hard data provide landmarks of objectivity as you plan your roadmap and make decisions about your designs. They deepen your understanding of user preferences, and strengthen your ability to gauge the efficacy of different design choices.

When doing competitive UX testing – whether between your products and a competitor’s, or between multiple versions of your own products – quantitative metrics are a valuable baseline that provides quick, unambiguous answers and lays the groundwork for a thorough qualitative analysis.

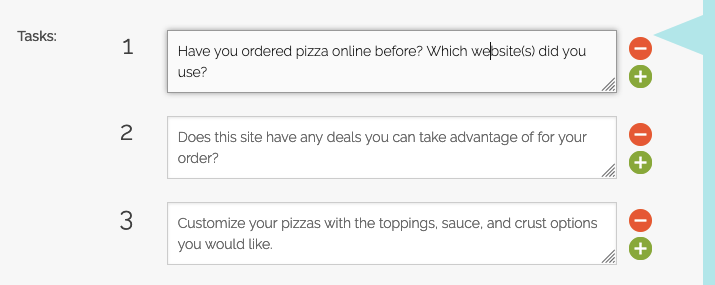

Domino’s vs Pizza Hut: A competitive user testing case study

We revisited our old Domino’s vs Pizza Hut UX faceoff, this time with 20 test participants, to see what we would find – not just about the UX of ordering pizza online, but also about how to run competitive usability tests, and how to use quantitative data in your competitive study.

Why 20 users? It’s the minimum sample size to get statistically reliable quantitative data, as NNGroup and other UX research experts have demonstrated. In our post-test survey, we included a number of new multiple choice, checkbox-style, and slider rating questions to get some statistically sound quantitative data points.

Read more: How many users should I test with?

Setup of the study

The first choice you need to make when setting up a competitive UX study is whether to test each interface with separate groups of users, or send the same users to each one.

As described above, we prefer sending the same users to both if possible, so that they can directly compare their experiences with a sharp and keenly aware eye. We recommend trying this method if it’s feasible for your situation, but there are a few things to consider:

1. Time: How long will it take users to go through both (or all) of the interfaces you’re testing? If the flows aren’t too long and the tasks aren’t too complicated, you can safely fit 2 or even 3 different sites or apps into a single session.

The default session duration for TryMyUI tests is 30 minutes, which we’ve found to be a good upper limit. The longer the session goes, the more your results could degrade due to tester fatigue, so keep this in mind and make sure you’re not asking too much of your participants.

2. Depth: There will necessarily be a trade-off between how many different sites or apps users visit in a single session, and how deeply they interact with each one. If you need users to go into serious depth, it may be better to use separate groups for each different interface.

3. Scale: To get statistically reliable quantitative data, at least 20 users should be reviewing each interface. If every tester tries out both sites during their session, you only need 20 in all. If you use different batches of testers per site, you would need 40 total users to compare two sites.

So if you don’t have the ability or bandwidth to recruit and test with lots of users, you may want to simplify each flow such that they can fit into a single session; but if your team can handle larger numbers, you can have 20 visit each site separately (or even have some users visit multiple sites, and others go deeper into a single one).
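The time and scale considerations above reduce to simple arithmetic. Here is a rough sketch, assuming the 20-users-per-interface guideline and the 30-minute session limit mentioned in this post; the function names and the 12-minute estimate are hypothetical.

```python
def participants_needed(num_sites, per_site=20, same_users=True):
    """Total testers to recruit so that each site is reviewed by `per_site` users."""
    if num_sites < 1 or per_site < 1:
        raise ValueError("num_sites and per_site must be positive")
    # If every tester visits every site, one group covers all of them.
    return per_site if same_users else per_site * num_sites


def fits_in_session(minutes_per_site, num_sites, session_limit=30):
    """Check whether visiting every site fits within one session."""
    return minutes_per_site * num_sites <= session_limit


# Two sites, with an assumed 12 minutes needed per site.
print(participants_needed(2, same_users=True))   # same users visit both sites
print(participants_needed(2, same_users=False))  # separate group per site
print(fits_in_session(12, 2))
```

If `fits_in_session` returns `False`, that is a signal either to simplify each flow or to switch to separate groups and recruit the larger total.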

For our Domino’s vs Pizza Hut test, we chose to send the same users to both sites so they could directly compare their experience on each. This wasn’t too much of a challenge, as ordering pizza is a relatively simple flow that doesn’t require intense or deep interaction, and the experience of both sites could fit easily into a 30-minute window.


Accounting for bias

As with any kind of usability testing, it’s critical to be aware of potential sources of bias in your test setup. In addition to the typical sources, competitive testing can also be biased by the order of the websites.

There are several ways that this bias can play out: in many cases, users are biased in favor of the first site they use, as this site gets to set their expectations of how things will look and work, and where different options or features might be found. When the user moves on to the next website, they may have a harder time simply because it’s different from the first one.

On the other hand, users may end up finding the second site easier if they had to struggle through a learning curve on the first one. In such cases, the extra effort they put in to understand key functions or concepts on the first site might make it seem harder, while simultaneously giving them a jump-start on understanding the second site.

Lastly, due to simple recency effects, the last interface might be more salient in users’ minds and therefore viewed more favorably (or perhaps just more extremely).

To account for bias, we set up 2 tests: one going from A→B, and one from B→A, with 10 users per flow. This way, both sites would get 20 total pairs of eyes checking them out, but half of the users would see each site first and half would see it second.

No matter whether the site order would bias users in favor of the second platform or the first, the 10/10 split would balance these effects out as much as possible.

The other benefit of setting up the study this way is that we would get to observe how brand new visitors and visitors with prior expectations would view and interact with each site. Both Domino’s and Pizza Hut would get their share of open-minded new orderers and judging, sharp-eyed pizza veterans.
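The A→B / B→A split described above is a form of counterbalancing. A minimal sketch of how participants could be assigned to the two flows follows; the participant IDs, the function name `assign_orders`, and the use of a random shuffle are assumptions for illustration, not a description of how the study's tooling assigned testers.

```python
import random


def assign_orders(participant_ids, orders=(("A", "B"), ("B", "A")), seed=None):
    """Randomly split participants evenly across the given site orders."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    # Deal participants out round-robin so group sizes differ by at most one.
    return {pid: orders[i % len(orders)] for i, pid in enumerate(shuffled)}


# Twenty hypothetical participant IDs: P01 ... P20.
participants = [f"P{n:02d}" for n in range(1, 21)]
assignment = assign_orders(participants, seed=42)

a_first = [pid for pid, order in assignment.items() if order == ("A", "B")]
b_first = [pid for pid, order in assignment.items() if order == ("B", "A")]
print(len(a_first), len(b_first))
```

Shuffling before dealing keeps the split even while avoiding any systematic link between recruitment order and which site a participant sees first.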

Writing the task script

We re-used the same task script from our previous Domino’s vs Pizza Hut test, which has been dissected and explained in an old blog post. You can read all about how we chose the wording for those tasks in that post.

You can do a quick skim of the task list below:

Scenario: You’re having a late night in with a few friends and people are starting to get hungry, so you decide to order a couple of pizzas for delivery.

- Have you ordered pizza online before? Which website(s) did you use?

- Does this site have any deals you can take advantage of for your order?

- Customize your pizzas with the toppings, sauce, and crust options you would like.

- Finalize your order with any other items you want besides your pizzas.

- Go through the checkout until you are asked to enter billing information.

- Please now go to [link to second site] and go through the pizza ordering process there too. Compare your experience as you go.

- Which site was easier to use, and why? Which would you use next time you order pizza online?

We also could have broken down Task 6 into several more discrete steps – for example, mirroring the exact same steps we wrote for the first website. This would have allowed us to collect task usability ratings, time on task, and other user testing metrics that could be compared between the sites.

However, we decided to keep the flow more free-form and let users chart their own course through the second site. You can choose between a looser task script and a more structured one based on the kinds of data you want to collect for your study.
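With a more structured script, per-task data such as completion and time on task can be compared between sites. The sketch below assumes each task attempt is recorded as a small dictionary; the field names, the function `task_metrics`, and every number in the example are made up for illustration and are not results from this study.

```python
from statistics import median


def task_metrics(records):
    """Compute completion rate and median time on task for each site.

    `records` is a list of dicts with keys 'site', 'completed' (bool),
    and 'seconds' (time spent on the task).
    """
    by_site = {}
    for record in records:
        by_site.setdefault(record["site"], []).append(record)

    result = {}
    for site, rows in by_site.items():
        # Only completed attempts count towards time on task.
        times = [row["seconds"] for row in rows if row["completed"]]
        result[site] = {
            "completion_rate": sum(row["completed"] for row in rows) / len(rows),
            "median_seconds": median(times) if times else None,
        }
    return result


# Hypothetical attempts at the same task on two sites.
attempts = [
    {"site": "Site A", "completed": True, "seconds": 95},
    {"site": "Site A", "completed": True, "seconds": 120},
    {"site": "Site A", "completed": False, "seconds": 200},
    {"site": "Site A", "completed": True, "seconds": 110},
    {"site": "Site B", "completed": True, "seconds": 80},
    {"site": "Site B", "completed": True, "seconds": 100},
]
metrics = task_metrics(attempts)
print(metrics)
```

The median is used for time on task because a single participant who gets stuck can skew an average considerably in small samples.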

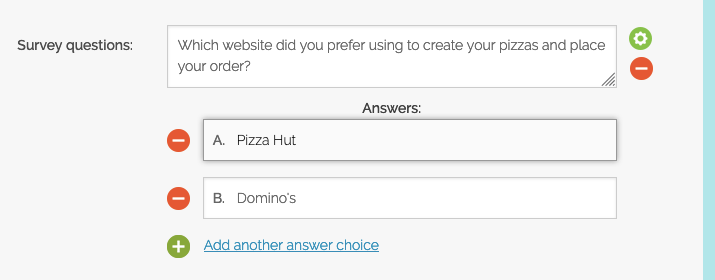

The post-test survey

After users complete the tasks during their video session, we have them respond to a post-test survey. This is where we posed a number of different rating-style and multiple-choice type questions to try and quantify users’ attitudes and determine which site performed better in which areas.

Our post-test survey:

After completing both flows and giving feedback on each step, we wanted the users to unequivocally choose one of the websites. This way we could instantly see the final outcome from each of the tests, without trying to parse unemphatic verbal responses from the videos.

For each test, we listed the sites in the order they were experienced, to avoid creating any additional variables between the tests.

- How would you rate your experience on the Domino’s website, on a scale of 1 (Hated it!) to 10 (Loved it!)? (slider rating, 1-10)

- How would you rate your experience on the Pizza Hut website, on a scale of 1 (Hated it!) to 10 (Loved it!)? (slider rating, 1-10)

Here again we showed the questions in an order corresponding to the order from the video session. First users rated the site they started on, then they rated the site they finished on.
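Because each site is rated both by users who saw it first and by users who saw it second, the slider ratings can be split by position to look for order effects. The following is a rough sketch under assumed data structures; the session format, the function `mean_ratings_by_position`, and all ratings shown are invented for illustration and are not the study's results.

```python
from statistics import mean


def mean_ratings_by_position(sessions):
    """Average each site's 1-10 rating, split by whether it was seen first or second.

    Each session is a dict with 'order' (tuple of site names in the order
    visited) and 'ratings' (dict mapping site name to its slider rating).
    """
    buckets = {}
    for session in sessions:
        for position, site in enumerate(session["order"]):
            key = (site, "first" if position == 0 else "second")
            buckets.setdefault(key, []).append(session["ratings"][site])
    return {key: mean(values) for key, values in buckets.items()}


# Four made-up sessions, two per site order.
sessions = [
    {"order": ("Domino's", "Pizza Hut"), "ratings": {"Domino's": 8, "Pizza Hut": 6}},
    {"order": ("Domino's", "Pizza Hut"), "ratings": {"Domino's": 9, "Pizza Hut": 7}},
    {"order": ("Pizza Hut", "Domino's"), "ratings": {"Domino's": 7, "Pizza Hut": 8}},
    {"order": ("Pizza Hut", "Domino's"), "ratings": {"Domino's": 6, "Pizza Hut": 6}},
]
by_position = mean_ratings_by_position(sessions)
for key, value in sorted(by_position.items()):
    print(key, value)
```

A large gap between a site's "first" and "second" averages would suggest that site order, rather than the design itself, is driving part of the rating.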

- Overall mood/feel of the site

- Attractive pictures, images, and illustrations

- Ease of navigating around the site

- Clarity of information provided by the site

- None of the above

For the fourth question, we listed several different aspects of the user experience to see which site held the edge in each. Users could check any number of options, and we also included a “none of the above” option.

In this case, we asked users to select areas in which the second site they had tested was superior to the first. We felt that since users might tend to favor the first site they experienced, it would be most illuminating to see where they felt the second site had been better.

If we were to run this test again, we would include more options to pick from that later came up in our results, such as the availability of appealing promotions/deals, and the choices of pizza toppings and customizations and other food options.

- What is the #1 most important thing about a pizza website, to you? (free response)

Since we knew that we probably wouldn’t think of every possible area of the experience that users cared about, we followed up by asking a free-response question about what users prioritized the most in their online ordering experience. This allowed us to get more insight into the previous question, and build a deeper understanding of each user’s mindset while viewing their videos and other responses.

- Several times a week

- About once a week

- Once or twice a month

- Less than once a month

- Domino’s

- Papa John’s

- Little Caesars

The final 2 questions were just general information-gathering questions. We were interested to see what kind of backgrounds the testers had (and were maybe also a little excited to try out more of the new post-test question types).

Besides expanding the options in question 4, the other thing we would change about the post-test survey if we ran this study again would be to ask more free-response type questions. We found that with so many quantitative type questions, we actually missed out on some qualitative answers that would have been useful (especially in conjunction with the data we did get).

Some example questions we would add, which we thought of after getting the results in, are:

- What did you like the best about the [Domino’s/Pizza Hut] website?

- What did you like the least about the [Domino’s/Pizza Hut] website?

- Do you feel that your experience on the two sites would influence your choice between Domino’s and Pizza Hut if you were going to order online from one of them in the future?

Wrapping up Part 1

Besides the task script and post-test survey, the rest of the setup just consisted of choosing a target demographic – we selected users in the US, ages 18-34, making under $50,000. Once the tests were finalized, we launched them and collected the 20 results in less than a day.

In Part 2 of this series, we’ll go over the results of the study, including the quantitative data we got, the contents of the videos, and what we learned about doing competitive usability testing.

Part 2: Results

By Tim Rotolo

Tim Rotolo is a co-founder at Trymata, and the company's Chief Growth Officer. He is a born researcher whose diverse interests include design, architecture, history, psychology, biology, and more. Tim holds a Bachelor's Degree in International Relations from Claremont McKenna College in southern California. You can reach him on LinkedIn at linkedin.com/in/trotolo/ or on Twitter at @timoroto


Usability Testing: Everything You Need to Know (Methods, Tools, and Examples)

As you crack into the world of UX design, there’s one thing you absolutely must understand and learn to practice like a pro: usability testing.

Precisely because it’s such a critical skill to master, it can be a lot to wrap your head around. What is it exactly, and how do you do it? How is it different from user testing? What are some actual methods that you can employ?

In this guide, we’ll give you everything you need to know about usability testing—the what, the why, and the how.

Here’s what we’ll cover:

- What is usability testing and why does it matter?

- Usability testing vs. user testing

- Formative vs. summative usability testing

- Attitudinal vs. behavioral research

- Five essential usability testing methods: performance testing, card sorting, tree testing, 5-second test, and eye tracking

- How to learn more about usability testing

Ready? Let’s dive in.

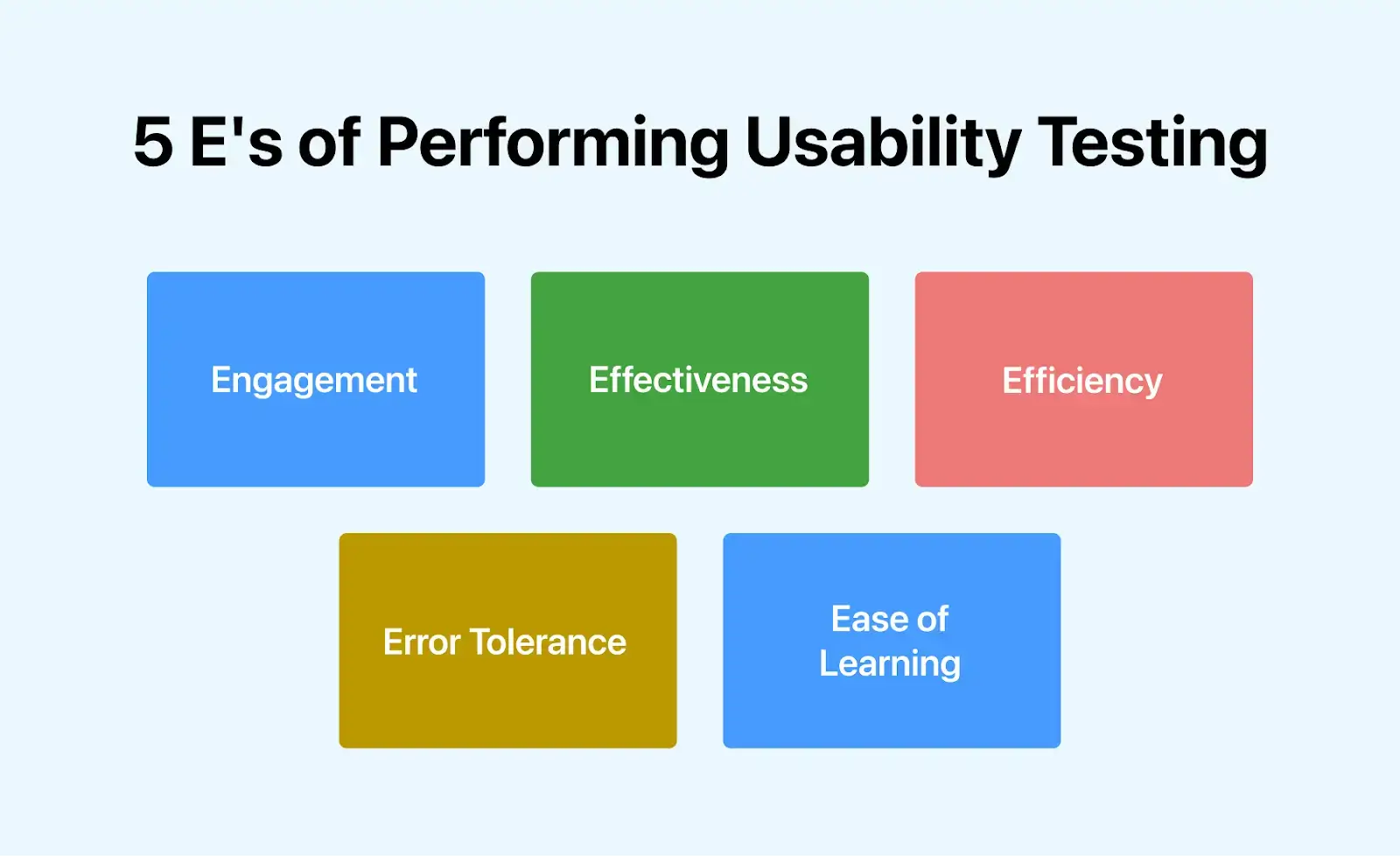

1. What is usability testing and why does it matter?

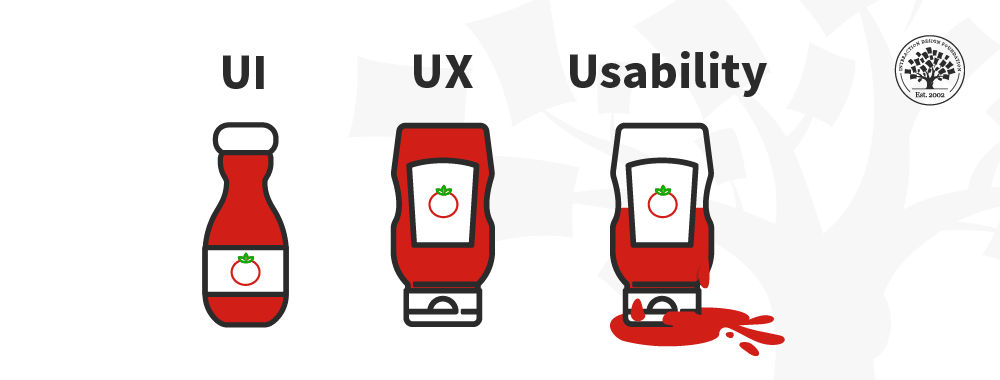

Simply put, usability testing is the process of discovering ways to improve your product by observing users as they engage with the product itself (or a prototype of the product). It’s a UX research method specifically trained on, you guessed it, the usability of your products. And what is usability? Usability is a measure of how easily users can accomplish a given task with your product.

Usability testing, when executed well, uncovers pain points in the user journey and highlights barriers to good usability. It will also help you learn about your users’ behaviors and preferences as these relate to your product, and to discover opportunities to design for needs that you may have overlooked.

You can conduct usability testing at any point in the design process when you’ve turned initial ideas into design solutions, but the earlier the better. Test early and test often! You can conduct some kind of usability testing with low- and high-fidelity prototypes alike, and testing should continue after you’ve got a live, out-in-the-world product.

2. Usability testing vs. user testing

Though they sound similar and share a somewhat similar end goal, usability testing and user testing are two different things. We’ll look at the differences in a moment, but first, here’s what they have in common:

- Both share the end goal of creating a design solution to meet real user needs

- Both take the time to observe and listen to the user to hear from them what needs/pain points they experience

- Both look for feasible ways of meeting those needs or addressing those pain points

User testing essentially asks if this particular kind of user would want this particular kind of product—or what kind of product would benefit them in the first place. It is entirely user-focused.

Usability testing, on the other hand, is more product-focused and looks at users’ needs in the context of an existing product (even if that product is still in prototype stages of development). Usability testing takes your existing product and places it in the hands of your users (or potential users) to see how the product actually works for them—how they’re able to accomplish what they need to do with the product.

3. Formative vs. summative usability testing

Alright! Now that you understand what usability testing is, and what it isn’t, let’s get into the various types of usability testing out there.

There are two broad categories of usability testing that are important to understand: formative and summative. These have to do with when you conduct the testing and what your broad objectives are, that is, the overarching impact the testing should have on your product.

Formative usability testing:

- Is a qualitative research process

- Happens earlier in the design, development, or iteration process

- Seeks to understand what about the product needs to be improved

- Results in qualitative findings and ideation that you can incorporate into prototypes and wireframes

Summative usability testing:

- Is a research process that’s more quantitative in nature

- Happens later in the design, development, or iteration process

- Seeks to understand whether the solutions you are implementing (or have implemented) are effective

- Results in quantitative findings that can help determine broad areas for improvement or specific areas to fine-tune (this can go hand in hand with competitive analysis)

4. Attitudinal vs. behavioral research

Alongside the timing and purpose of the testing (formative vs. summative), it’s important to understand two broad categories that your research (both your objectives and your findings) will fall into: behavioral and attitudinal.

Attitudinal research is all about what people say: what they think and communicate about your product and how it works. Behavioral research focuses on what people do: how they actually interact with your product and the feelings that surface as a result.

What people say and what people do are often two very different things. These two categories help define those differences, choose our testing methods more intentionally, and categorize our findings more effectively.

5. Five essential usability testing methods

Some usability testing methods are geared more towards uncovering either behavioral or attitudinal findings; but many have the potential to result in both.

Of the methods you’ll learn about in this section, performance testing has the greatest potential for targeting both—and will perhaps require the greatest amount of thoughtfulness regarding how you approach it.

Naturally, then, we’ll spend a little more time on that method than the other four, though that in no way diminishes their usefulness! Here are the methods we’ll cover:

- Performance testing

- Card sorting

- Tree testing

- 5-second test

- Eye tracking

These are merely five common and/or interesting methods—it is not a comprehensive list of every method you can use to get inside the hearts and minds of your users. But it’s a place to start. So here we go!

Performance testing

In performance testing, you sit down with a user and give them a task (or set of tasks) to complete with the product.

This is often a combination of methods and approaches that will allow you to interview users, see how they use your product, and find out how they feel about the experience afterward. Depending on your approach, you’ll observe them, take notes, and/or ask usability testing questions before, after, or along the way.

Performance testing is by far the most talked-about form of usability testing—especially as it’s often combined with other methods. Performance testing is what most commonly comes to mind in discussions of usability testing as a whole, and it’s what many UX design certification programs focus on—because it’s so broadly useful and adaptive.

While there’s no one right way to conduct performance testing, there are a number of approaches and combinations of methods you can use, and you’ll want to be intentional about it.

It’s a method that you can adapt to your objectives—so make sure you do! Ask yourself what kind of attitudinal or behavioral findings you’re really looking for, how much time you’ll have for each testing session, and what methods or approaches will help you reach your objectives most efficiently.

Performance testing is often combined with user interviews.

Even if you choose not to combine performance testing with user interviews, good performance testing will still involve some degree of questioning and moderating.

Performance testing typically results in a pretty massive chunk of qualitative insights, so you’ll need to devote a fair amount of intention and planning before you jump in.

Maximize the usefulness of your research by being thoughtful about the task(s) you assign and what approach you take to moderating the sessions. As your test participants go about the task(s) you assign, you’ll watch, take notes, and ask questions either during or after the test—depending on your approach.

Four approaches to performance testing

There are four ways you can go about moderating a performance test, and it’s worth understanding and choosing your approach (or combination of approaches) carefully and intentionally. As you choose, take time to consider:

- How much guidance the participant will actually need

- How intently participants will need to focus

- How guidance or prompting from you might affect results or observations

With these things in mind, let’s look at the four approaches.

Concurrent Think Aloud (CTA)

With this approach, you’ll encourage participants to externalize their thought process—to think out loud. Your job during the session will be to keep them talking through what they’re looking for, what they’re doing and why, and what they think about the results of their actions.

A CTA approach often uncovers a lot of nuanced details in the user journey, but if your objectives include anything related to the accuracy or time for task completion, you might be better off with a Retrospective Think Aloud.

Retrospective Think Aloud (RTA)

Here, you’ll allow participants to complete their tasks and recount the journey afterward. They can complete tasks in a more realistic time frame and degree of accuracy, though there will certainly be nuanced details of participants’ thoughts and feelings you’ll miss out on.

Concurrent Probing (CP)

With Concurrent Probing, you ask participants about their experience as they’re having it. You prompt them for details on their expectations, reasons for particular actions, and feelings about results.

This approach can be distracting, but used in combination with CTA, you can allow participants to complete the tasks and prompt only when you see a particularly interesting aspect of their experience, and you’d like to know more. Again, if accuracy and timing are critical objectives, you might be better off with Retrospective Probing.

Retrospective Probing (RP)

If you note that a participant says or does something interesting as they complete their task(s), you can note it and ask them about it later—this is Retrospective Probing. This is an approach very often combined with CTA or RTA to ensure that you’re not missing out on those nuanced details of their experience without distracting them from actually completing the task.

Whew! There’s your quick overview of performance testing. To learn more about it, read to the final section of this article: How to learn more about usability testing.

With this under our belts, let’s move on to our other four essential usability testing methods.

Card sorting

Card sorting is a way of testing the usability of your information architecture. You give users cards labeled with the names and short descriptions of the main items/sections of the product, then ask them to sort the cards into piles according to which items seem to go best together. In open card sorting, users create and name the piles themselves; in closed card sorting, they sort the cards into categories you have defined in advance. You can go even further by asking them to sort the piles into larger groups and to name those groups.

Rather than structuring your site or app according to your understanding of the product, card sorting allows the information architecture to mirror the way your users are thinking.

This is a great technique to employ very early in the design process as it is inexpensive and will save the time and expense of making structural adjustments later in the process. And there’s no technology required! If you want to conduct it remotely, though, there are tools like OptimalSort that do this effectively.
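Once the cards are sorted, one common way to read the results is to count how often each pair of cards ends up in the same pile. The sketch below is a minimal illustration of that idea, not a prescribed method: the card names, the `sorts` data, and the `co_occurrence` helper are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants placed each pair of cards in the same pile."""
    counts = Counter()
    for piles in sorts:
        for pile in piles:
            # Sort card names so each pair is always stored in the same order
            for a, b in combinations(sorted(pile), 2):
                counts[(a, b)] += 1
    return counts


# Hypothetical results: one entry per participant, each a list of piles
sorts = [
    [["Pricing", "Plans"], ["Login", "Profile", "Settings"]],
    [["Pricing", "Plans", "Settings"], ["Login", "Profile"]],
    [["Pricing", "Plans"], ["Login", "Profile"], ["Settings"]],
]

pairs = co_occurrence(sorts)
for (a, b), n in pairs.most_common():
    print(f"{a} + {b}: grouped together by {n} of {len(sorts)} participants")
```

Pairs that most participants group together are good candidates for sharing a section of the site, while a card that pairs weakly with everything (here, "Settings") may need a clearer label or a home of its own.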


Tree testing is a great follow-up to card sorting, but it can be conducted on its own as well. In tree testing, you create a visual information hierarchy (or “tree”) and ask users to complete a task using the tree. For example, you might ask users, “You want to accomplish X with this product. Where do you go to do that?” Then you observe how easily users are able to find what they’re looking for.

This is another great technique to employ early in the design process. It can be conducted with paper prototypes or spreadsheets, but you can also use tools such as TreeJack to accomplish this digitally and remotely.
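If you record the path each participant takes through the tree, two simple numbers summarize a task: how many participants ended at the right place, and how many got there without backtracking. The following is a rough sketch under assumed data; the node names, the `TreeTestResult` class, and the definition of "direct" (no node visited twice) are illustrative choices rather than a standard.

```python
from dataclasses import dataclass


@dataclass
class TreeTestResult:
    path: list[str]  # nodes the participant clicked, in order


def summarize(results, correct_node):
    """Return (success rate, directness rate) for one tree-testing task."""
    if not results:
        raise ValueError("no results to summarize")
    total = len(results)
    # A success is a path that ends on the correct node
    successes = [r for r in results if r.path and r.path[-1] == correct_node]
    # "Direct" here means a successful path that never revisits a node
    direct = [r for r in successes if len(set(r.path)) == len(r.path)]
    return len(successes) / total, len(direct) / total


# Hypothetical task: "Where would you go to update your payment details?"
results = [
    TreeTestResult(["Home", "Account", "Billing"]),
    TreeTestResult(["Home", "Products", "Home", "Account", "Billing"]),
    TreeTestResult(["Home", "Support", "Contact"]),
    TreeTestResult(["Home", "Account", "Billing"]),
]

success_rate, directness = summarize(results, correct_node="Billing")
print(f"Success: {success_rate:.0%}, direct success: {directness:.0%}")
```

A high success rate with low directness suggests users can find the item but the labels along the way are misleading them first.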

In the 5-second test, you expose your users to one portion of your product (one screen, probably the top half of it) for five seconds and then interview them to see what they took away regarding:

- The product/page’s purpose and main features or elements

- The intended audience and trustworthiness of the brand

- Their impression of the usability and design of the product

You can conduct this kind of testing in person rather simply, or remotely with tools like UsabilityHub.

Eye tracking may seem somewhat new, but it’s been around for a while, though the tools and technology around it have evolved. Eye tracking on its own isn’t enough to determine usability, but it’s a great complement to your other usability testing measures.

In eye tracking, you literally track where most users’ eyes land on the screen you’re designing. This matters because you want to make sure that the elements users’ eyes are drawn to are the ones that communicate the most important information. This is a difficult one to conduct in any kind of analog fashion, but there are a lot of tools out there that make it simple: CrazyEgg and HotJar are both great places to start.

6. How to learn more about usability testing

There you have it: your 15-minute overview of the what, why, and how of usability testing. But don’t stop here! Usability testing and UX research as a whole have a deeply humanizing impact on the design process. It’s a fascinating field to discover, and the result of this kind of work has the power to keep companies, design teams, and even the lone designer accountable to what matters most: the needs of the end user.

If you’d like to learn more about usability testing and UX research, take the free UX Research for Beginners Course with CareerFoundry. This tutorial is jam-packed with information that will give you a deeper understanding of the value of this kind of testing as well as a number of other UX research methods.

You can also enroll in a UX design course or bootcamp to get a comprehensive understanding of the entire UX design process (of which usability testing and UX research are an integral part). For guidance on the best programs, check out our list of the 10 best UX design certification programs. And if you’ve already started your learning process, and you’re thinking about the job hunt, here are the top 5 UX research interview questions to be ready for.

For further reading about usability testing and UX research, check out these other articles:

- How to conduct usability testing: a step-by-step guide

- What does a UX researcher actually do? The ultimate career guide

- 11 usability heuristics every designer should know

- How to conduct a UX audit


Usability Testing

What is usability testing?

Usability testing is the practice of testing how easy a design is to use with a group of representative users. It usually involves observing users as they attempt to complete tasks and can be done for different types of designs. It is often conducted repeatedly, from early development until a product’s release.

“It’s about catching customers in the act, and providing highly relevant and highly contextual information.”

— Paul Maritz, CEO at Pivotal


Usability Testing Leads to the Right Products

Through usability testing, you can find design flaws you might otherwise overlook. When you watch how test users behave while they try to execute tasks, you’ll get vital insights into how well your design/product works. Then, you can leverage these insights to make improvements. Whenever you run a usability test, your chief objectives are to:

1) Determine whether testers can complete tasks successfully and independently.

2) Assess their performance and mental state as they try to complete tasks, to see how well your design works.

3) See how much users enjoy using it.

4) Identify problems and their severity.

5) Find solutions.

While usability tests can help you create the right products, they shouldn’t be the only tool in your UX research toolbox. If you just focus on the evaluation activity, you won’t improve the usability overall.

There are different methods for usability testing. Which one you choose depends on your product and where you are in your design process.

Usability Testing is an Iterative Process

To make usability testing work best, you should:

1) Plan –

a. Define what you want to test. Ask yourself questions about your design/product. Which aspects of it do you want to test? You can make a hypothesis from each answer. With a clear hypothesis, you’ll have the exact aspect you want to test.

b. Decide how to conduct your test – e.g., remotely. Define the scope of what to test (e.g., navigation) and stick to it throughout the test. When you test aspects individually, you’ll eventually build a broader view of how well your design works overall.

2) Set user tasks –

a. Prioritize the most important tasks to meet objectives (e.g., complete checkout), no more than 5 per participant. Allow a 60-minute timeframe.

b. Clearly define tasks with realistic goals.

c. Create scenarios where users can try to use the design naturally. That means you let them get to grips with it on their own rather than direct them with instructions.

3) Recruit testers – Know who your users are as a target group. Use screening questionnaires (e.g., Google Forms) to find suitable candidates. You can advertise and offer incentives. You can also find contacts through community groups, etc. If you test with only 5 users, you can still reveal 85% of core issues.

4) Facilitate/Moderate testing – Set up testing in a suitable environment. Observe and interview users. Notice issues. See if users fail to see things, go in the wrong direction or misinterpret rules. When you record usability sessions, you can more easily count the number of times users become confused. Ask users to think aloud and tell you how they feel as they go through the test. From this, you can check whether your designer’s mental model is accurate: Does what you think users can do with your design match what these test users show?

If you choose remote testing, you can moderate via Google Hangouts, etc., or use unmoderated testing. Dedicated remote-testing software lets you carry out both moderated and unmoderated testing and gives you the benefit of tools such as heatmaps.

Keep usability tests smooth by following these guidelines.

1) Assess user behavior – Use these metrics:

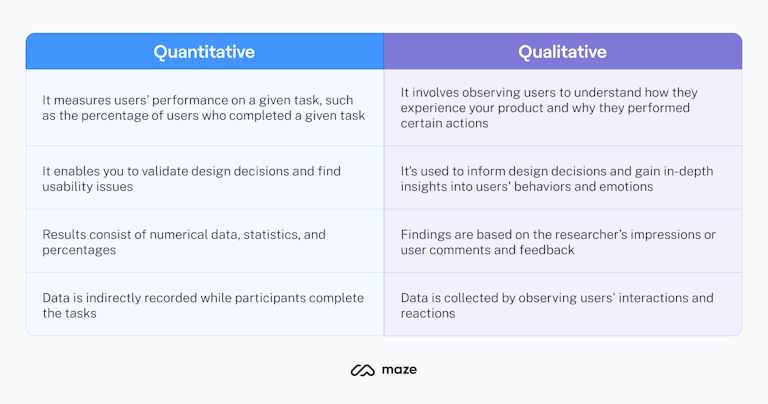

Quantitative – time users take on a task, success and failure rates, effort (how many clicks users take, instances of confusion, etc.)

Qualitative – users’ stress responses (facial reactions, body-language changes, squinting, etc.), subjective satisfaction (which they give through a post-test questionnaire) and perceived level of effort/difficulty

2) Create a test report – Review video footage and analyzed data. Clearly define design issues and best practices. Involve the entire team.
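The quantitative metrics listed above (time on task, success rate, and effort in clicks) are straightforward to compute once each session is logged. Here is a minimal sketch, assuming a hypothetical record format with `completed`, `seconds`, and `clicks` fields; the numbers are made up for illustration.

```python
from statistics import mean, median

# Hypothetical session records for a single task (e.g., "complete checkout")
sessions = [
    {"participant": "P1", "completed": True, "seconds": 74, "clicks": 9},
    {"participant": "P2", "completed": True, "seconds": 120, "clicks": 14},
    {"participant": "P3", "completed": False, "seconds": 200, "clicks": 22},
    {"participant": "P4", "completed": True, "seconds": 95, "clicks": 11},
    {"participant": "P5", "completed": True, "seconds": 88, "clicks": 9},
]


def summarize_task(sessions):
    """Compute success rate, median time on task, and mean clicks."""
    completed = [s for s in sessions if s["completed"]]
    return {
        "success_rate": len(completed) / len(sessions),
        # Time on task is reported for successful attempts only
        "median_seconds": median([s["seconds"] for s in completed]),
        # Effort is averaged over every attempt, successful or not
        "mean_clicks": mean([s["clicks"] for s in sessions]),
    }


summary = summarize_task(sessions)
print(summary)
```

The median is used for time on task because a single very slow participant can distort a mean in a small sample; whether to include failed attempts in each metric is a choice to state clearly in the test report.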

Overall, you should test not your design’s functionality, but users’ experience of it. Some users may be too polite to be entirely honest about problems. So, always examine all data carefully.

Learn More about Usability Testing

Take our course on usability testing.

Here’s a quick-fire method to conduct usability testing.

See some real-world examples of usability testing.

Take some helpful usability testing tips.

Questions related to Usability Testing

To conduct usability testing effectively:

Start by defining clear, objective goals and recruit representative users.

Develop realistic tasks for participants to perform and set up a controlled, neutral environment for testing.

Observe user interactions, noting difficulties and successes, and gather qualitative and quantitative data.

After testing, analyze the results to identify areas for improvement.

For a comprehensive understanding and step-by-step guidance on conducting usability testing, refer to our specialized course on Conducting Usability Testing.

Conduct usability testing early and often, from the design phase to development and beyond. Early design testing uncovers issues when they are easier and less costly to fix. Regular assessments throughout the project lifecycle ensure continued alignment with user needs and preferences. Usability testing is crucial for new products and when redesigning existing ones to verify improvements and discover new problem areas. Dive deeper into optimal timing and methods for usability testing in our detailed article “Usability: A part of the User Experience.”

Incorporate insights from William Hudson, CEO of Syntagm, to enhance usability testing strategies. William recommends techniques like tree testing and first-click testing for early design phases to scrutinize navigation frameworks. These methods are exceptionally suitable for isolating and evaluating specific components without visual distractions, focusing strictly on user understanding of navigation. They're advantageous for their quantitative nature, producing actionable numbers and statistics rapidly, and being applicable at any project stage. Ideal for both new and existing solutions, they help identify problem areas and assess design elements effectively.

To conduct usability testing for a mobile application:

Start by identifying the target users and creating realistic tasks for them.

Collect data on their interactions and experiences to uncover issues and areas for improvement.

For instance, consider the concept of ‘tappability’ as explained by Frank Spillers, CEO: focusing on creating task-oriented, clear, and easily tappable elements is crucial.

Employing correct affordances and signifiers, like animations, can clarify interactions and enhance user experience, avoiding user frustration and errors. Dive deeper into mobile usability testing techniques and insights by watching our insightful video with Frank Spillers.

For most usability tests, the ideal number of participants depends on your project’s scope and goals. Our video featuring William Hudson, CEO of Syntagm, emphasizes the importance of quality in choosing participants as it significantly impacts the usability test's results.

He shares insightful experiences and stresses the importance of carefully selecting and recruiting participants to ensure constructive and reliable feedback. The process involves meticulous planning and execution to identify and discard data from non-contributive participants and to ensure that meaningful and trustworthy insights are gathered to improve the interactive solution, be it an app or a website. Remember the emphasis on participants’ attentiveness and consistency while performing tasks to avoid compromising the results. Watch the full video for a more comprehensive understanding of participant recruitment and usability testing.
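The claim made earlier in this article that 5 users reveal about 85% of core issues is commonly traced to a simple model from Nielsen and Landauer: the share of problems found by n users is 1 - (1 - p)^n, where p is the chance that a single user hits a given problem. The sketch below assumes their often-quoted average of p = 0.31; your own product may have a very different value, so treat the output as a rough guide rather than a guarantee.

```python
import math


def share_of_problems_found(n_users, p=0.31):
    """Expected share of usability problems found by n_users.

    p is the assumed probability that one user encounters a given problem.
    """
    return 1 - (1 - p) ** n_users


def users_needed(target_share, p=0.31):
    """Smallest number of users expected to reveal target_share of problems."""
    return math.ceil(math.log(1 - target_share) / math.log(1 - p))


for n in (1, 3, 5, 10):
    print(f"{n:>2} users: {share_of_problems_found(n):.1%} of problems")

print("Users needed for 80%:", users_needed(0.80))
```

With p = 0.31, five users give roughly 84%, which is usually rounded to the familiar 85%. If problems are harder to stumble on (a lower p), the same coverage takes noticeably more participants.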

To analyze usability test results effectively, first collate the data meticulously. Next, identify patterns and recurrent issues that indicate areas needing improvement. Utilize quantitative data for measurable insights and qualitative data for understanding user behavior and experience. Prioritize findings based on their impact on user experience and the feasibility of implementation. For a deeper understanding of analysis methods and to ensure thorough interpretation, refer to our comprehensive guides on Analyzing Qualitative Data and Usability Testing. These resources provide detailed insights, aiding in systematically evaluating and optimizing user interaction and interface design.

Usability testing is predominantly qualitative, focusing on understanding users' thoughts and experiences, as highlighted in our video featuring William Hudson, CEO of Syntagm.

It enables insights into users' minds, asking why things didn't work and what's going through their heads during the testing phase. However, specific methods, like tree testing and first-click testing, present quantitative aspects, providing hard numbers and statistics on user performance. These methods can be executed at any design stage, providing actionable feedback and revealing navigation and visual design efficacy.