Quantitative Data Analysis: A Comprehensive Guide

By: Ofem Eteng Published: May 18, 2022


A healthcare giant successfully introduces the most effective drug dosage through rigorous statistical modeling, saving countless lives. A marketing team predicts consumer trends with uncanny accuracy, tailoring campaigns for maximum impact.


These trends and dosages are not just any numbers but are a result of meticulous quantitative data analysis. Quantitative data analysis offers a robust framework for understanding complex phenomena, evaluating hypotheses, and predicting future outcomes.

In this blog, we’ll walk through the concept of quantitative data analysis, the steps required, its advantages, and the methods and techniques that are used in this analysis. Read on!

What is Quantitative Data Analysis?

Quantitative data analysis is a systematic process of examining, interpreting, and drawing meaningful conclusions from numerical data. It involves the application of statistical methods, mathematical models, and computational techniques to understand patterns, relationships, and trends within datasets.

Quantitative data analysis methods typically rely on algorithms, mathematical analysis tools, and software to gain insights from the data, answering questions such as how many, how often, and how much. Data for quantitative data analysis is usually collected from closed-ended surveys, questionnaires, polls, and similar instruments. It can also come from sales figures, email click-through rates, the number of website visitors, and percentage increases in revenue.

Quantitative Data Analysis vs Qualitative Data Analysis

When we talk about data, we usually think about analyzing it: finding the patterns, relationships, and connections within and between datasets. Broadly, there are two types of data analysis: quantitative data analysis and qualitative data analysis.

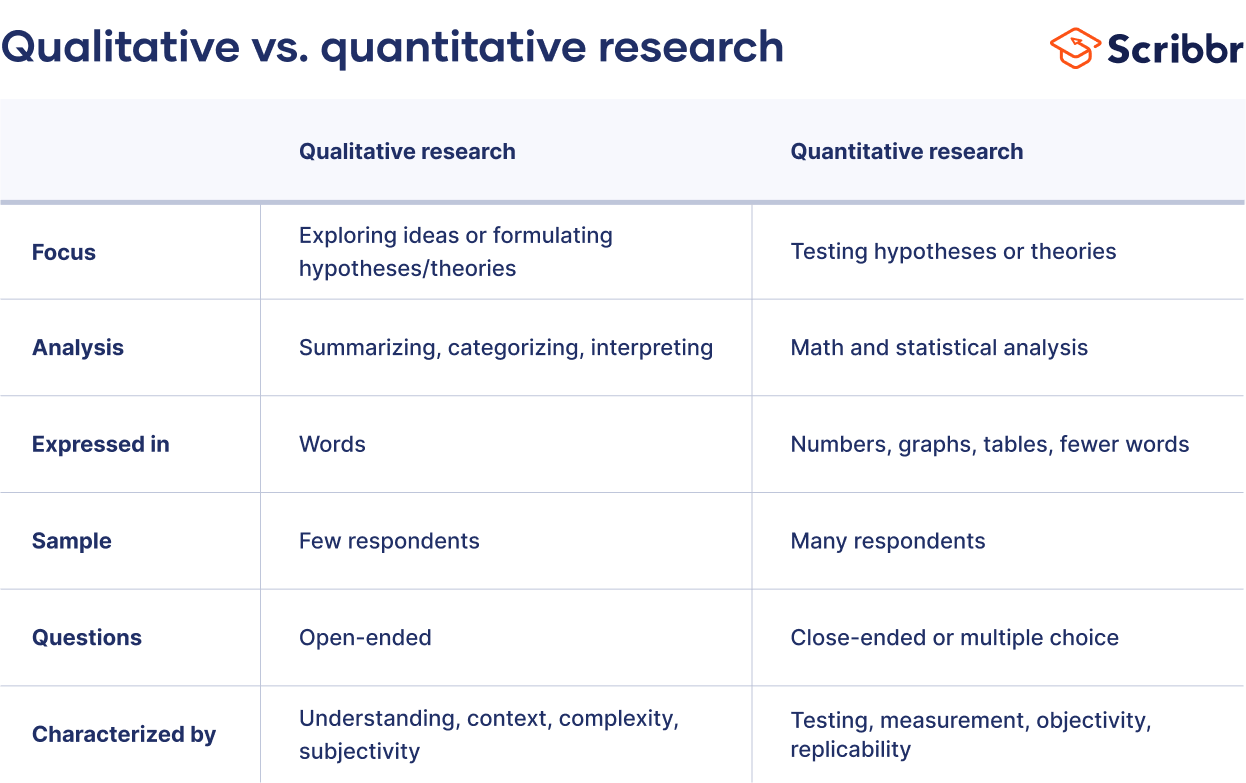

Quantitative data analysis revolves around numerical data and statistics, which suit things that can be counted or measured. In contrast, qualitative data analysis deals with descriptive and subjective information about things that can be observed but not measured.

Let us differentiate between quantitative data analysis and qualitative data analysis for a better understanding.

Data Preparation Steps for Quantitative Data Analysis

Quantitative data has to be gathered and cleaned before it can be analyzed. Below are the steps to prepare data for quantitative analysis:

- Step 1: Data Collection

Before beginning the analysis process, you need data. Data can be collected through rigorous quantitative research methods such as surveys, questionnaires, and structured interviews.

- Step 2: Data Cleaning

Once the data is collected, begin the data cleaning process by scanning the entire dataset for duplicates, errors, and omissions. Keep a close eye on outliers (data points that differ significantly from the majority of the dataset), because they can skew your analysis results if they are not investigated and, where they turn out to be errors, corrected or removed.

This data-cleaning process ensures data accuracy, consistency and relevancy before analysis.
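To make the cleaning step concrete, here is a minimal Python sketch using only the standard library. The survey records, the `clean_records` and `iqr_outliers` helpers, and the 1.5 × IQR cut-off (Tukey's fences, a common convention) are illustrative assumptions rather than a prescribed procedure. Note that it flags outliers for review instead of deleting them automatically, since an extreme value can be genuine.

```python
from statistics import quantiles

# Hypothetical survey records: (respondent_id, age). None marks a missing answer.
records = [
    (1, 34), (2, 29), (2, 29),   # respondent 2 was entered twice
    (3, None),                   # missing value
    (4, 41), (5, 38), (6, 27), (7, 33),
    (8, 240),                    # almost certainly a data-entry typo
]

def clean_records(rows):
    """Drop repeated respondent IDs and rows with missing values."""
    seen = set()
    cleaned = []
    for rid, value in rows:
        if rid in seen or value is None:
            continue
        seen.add(rid)
        cleaned.append((rid, value))
    return cleaned

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

cleaned = clean_records(records)
ages = [age for _, age in cleaned]
print(cleaned)
print("Possible outliers to investigate:", iqr_outliers(ages))
```

Whether a flagged value is then corrected, removed, or kept is a judgement call that depends on why it is extreme.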

- Step 3: Data Analysis and Interpretation

Now that you have collected and cleaned your data, it is time to carry out the quantitative analysis. There are two broad methods of quantitative data analysis, which we discuss in the next section.

However, if you have data from multiple sources, collecting and cleaning it can be a cumbersome task. This is where Hevo Data steps in. With Hevo, extracting, transforming, and loading data from source to destination becomes a seamless task, eliminating the need for manual coding. This not only saves valuable time but also enhances the overall efficiency of data analysis and visualization, empowering users to derive insights quickly and with precision.

Hevo is the only real-time ELT No-code Data Pipeline platform that cost-effectively automates data pipelines that are flexible to your needs. With integration with 150+ Data Sources (40+ free sources), we help you not only export data from sources & load data to the destinations but also transform & enrich your data, & make it analysis-ready.


Now that you are familiar with what quantitative data analysis is and how to prepare your data for analysis, the focus will shift to the purpose of this article, which is to describe the methods and techniques of quantitative data analysis.

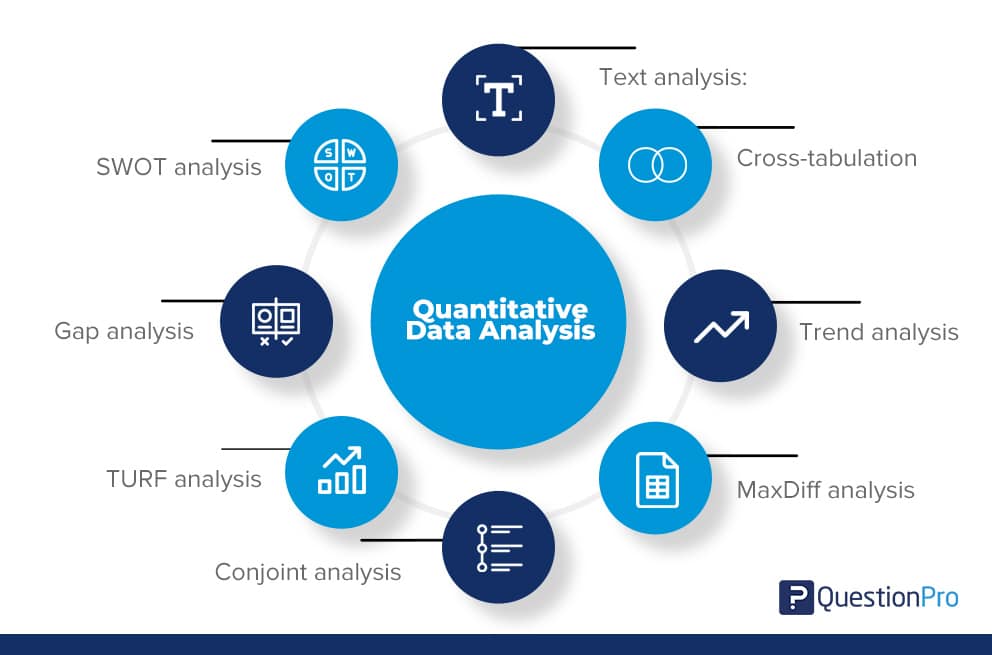

Methods and Techniques of Quantitative Data Analysis

Broadly, quantitative data analysis employs two techniques to extract meaningful insights from datasets. The first, descriptive statistics, summarizes and portrays the essential features of a dataset, such as its mean, median, and standard deviation.

The second, inferential statistics, uses a sample dataset to draw broader inferences and predictions about an entire population, through techniques such as hypothesis testing and regression analysis.

An in-depth explanation of both the methods is provided below:

- Descriptive Statistics

- Inferential Statistics

1) Descriptive Statistics

Descriptive statistics, as the name implies, are used to describe a dataset. They help you understand the details of your data by summarizing it and finding patterns within the specific data sample. They provide absolute numbers obtained from a sample but do not necessarily explain the rationale behind those numbers, and they are mostly used for analyzing single variables. The methods used in descriptive statistics include:

- Mean: This calculates the numerical average of a set of values.

- Median: This is used to get the midpoint of a set of values when the numbers are arranged in numerical order.

- Mode: This is used to find the most commonly occurring value in a dataset.

- Percentage: This is used to express how a value or group of respondents within the data relates to a larger group of respondents.

- Frequency: This indicates the number of times a value is found.

- Range: This is the difference between the highest and lowest values in a dataset.

- Standard Deviation: This indicates how dispersed a set of numbers is; in other words, how close the numbers typically are to the mean.

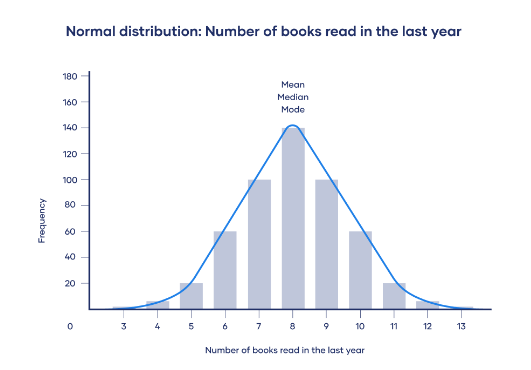

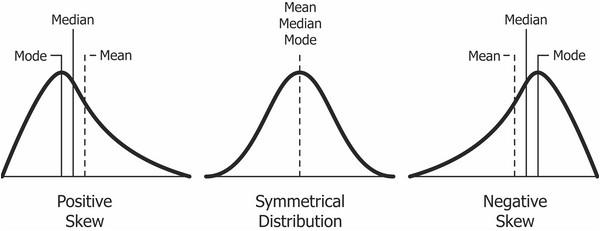

- Skewness: This indicates how symmetrical a set of numbers is, showing whether they cluster into a smooth bell-curve shape in the middle of the graph or skew towards the left or right.
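The measures listed above can be computed with Python's built-in `statistics` module. This is a sketch on a small, made-up set of 1 to 5 satisfaction ratings; the `sample_skewness` helper uses the adjusted Fisher-Pearson formula, which is one of several skewness definitions in use, so other tools may report slightly different values.

```python
from collections import Counter
from statistics import mean, median, multimode, stdev

# Hypothetical 1-5 satisfaction ratings from 12 survey respondents.
ratings = [4, 5, 3, 4, 2, 5, 4, 3, 4, 5, 1, 4]

def sample_skewness(xs):
    """Adjusted Fisher-Pearson skewness (negative = longer left tail)."""
    n = len(xs)
    m, s = mean(xs), stdev(xs)
    return n / ((n - 1) * (n - 2)) * sum(((x - m) / s) ** 3 for x in xs)

freq = Counter(ratings)  # frequency: how often each value appears
summary = {
    "mean": mean(ratings),
    "median": median(ratings),
    "mode": multimode(ratings),  # a list, since a dataset can have several modes
    "frequency": dict(sorted(freq.items())),
    # percentage: share of respondents who rated 4 or 5
    "pct_satisfied": 100 * sum(freq[v] for v in (4, 5)) / len(ratings),
    "range": max(ratings) - min(ratings),
    "std_dev": stdev(ratings),   # sample standard deviation (n - 1 denominator)
    "skewness": sample_skewness(ratings),
}
for name, value in summary.items():
    print(f"{name:>13}: {value}")
```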

2) Inferential Statistics

In quantitative analysis, the goal is to turn raw numbers into meaningful insight. Descriptive statistics explain the details of a specific dataset using numbers, but they do not explain the reasons behind those numbers or whether the patterns hold beyond the sample; hence the need for further analysis using inferential statistics.

Inferential statistics aim to make predictions or highlight possible outcomes for a wider population, based on the sample data already summarized with descriptive statistics. They are used to generalize results and make predictions about differences between groups, to show relationships that exist between multiple variables, and to test hypotheses that predict changes or differences.

There are various statistical analysis methods used within inferential statistics; a few are discussed below.

- Cross Tabulations: Cross tabulation, or crosstab, shows the relationship between two (usually categorical) variables and is often used to compare results across demographic groups. It arranges the data in a simple table, with one variable's categories as rows and the other's as columns, so that each cell counts the records that fall into both categories. Crosstabs help reveal the nuances of a dataset and the factors that may influence a data point.

- Regression Analysis: Regression analysis estimates the relationship between a set of variables. It shows the correlation between a dependent variable (the variable or outcome you want to measure or predict) and any number of independent variables (factors that may impact the dependent variable). Therefore, the purpose of the regression analysis is to estimate how one or more variables might affect a dependent variable to identify trends and patterns to make predictions and forecast possible future trends. There are many types of regression analysis, and the model you choose will be determined by the type of data you have for the dependent variable. The types of regression analysis include linear regression, non-linear regression, binary logistic regression, etc.

- Monte Carlo Simulation: Monte Carlo simulation, also known as the Monte Carlo method, is a computerized technique of generating models of possible outcomes and showing their probability distributions. It considers a range of possible outcomes and then tries to calculate how likely each outcome will occur. Data analysts use it to perform advanced risk analyses to help forecast future events and make decisions accordingly.

- Analysis of Variance (ANOVA): This tests whether the means of two or more groups differ from each other. Unlike a t-test, which compares only two groups, it can compare the means of several groups at once.

- Factor Analysis: Factor analysis reduces a large number of variables into a smaller number of factors. It works on the principle that several separate observable variables correlate with each other because they are all associated with an underlying construct. It helps reduce large datasets to a smaller, more manageable set of variables.

- Cohort Analysis: Cohort analysis is a subset of behavioral analytics. Rather than looking at all users as one unit, it breaks the data down into related groups, or cohorts, whose members share common characteristics or experiences within a defined period (for example, all customers who signed up in the same month).

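- MaxDiff Analysis: MaxDiff (maximum difference scaling) is a quantitative method for gauging customers' preferences: respondents repeatedly pick the most and least important items from small sets of options, revealing which attributes rank higher than others in their purchase decisions.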

- Cluster Analysis: Cluster analysis is a technique used to identify structures within a dataset. Cluster analysis aims to be able to sort different data points into groups that are internally similar and externally different; that is, data points within a cluster will look like each other and different from data points in other clusters.

- Time Series Analysis: This is a statistical analytic technique used to identify trends and cycles over time. It is simply the measurement of the same variables at different times, like weekly and monthly email sign-ups, to uncover trends, seasonality, and cyclic patterns. By doing this, the data analyst can forecast how variables of interest may fluctuate in the future.

- SWOT analysis: When scored numerically, SWOT analysis assigns values to the strengths, weaknesses, opportunities, and threats of an organization, product, or service, giving a clearer picture of the competition to support better business strategies.
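Of the techniques above, Monte Carlo simulation is perhaps the easiest to see in code. The sketch below assumes a hypothetical project whose three cost components are uncertain, models each with a triangular distribution (low, high, most likely), and repeats the random draw many times to build up a distribution of total costs. The cost ranges, the budget, and the `simulate_total_cost` function are invented for illustration.

```python
import random

def simulate_total_cost(n_trials=100_000, budget=120.0, seed=42):
    """Monte Carlo estimate of a total project cost (hypothetical $ thousands).

    Each component is drawn from a made-up triangular distribution:
    rng.triangular(low, high, most_likely).
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    totals = []
    for _ in range(n_trials):
        design = rng.triangular(20, 40, 25)
        build = rng.triangular(50, 90, 60)
        testing = rng.triangular(10, 30, 15)
        totals.append(design + build + testing)
    totals.sort()
    return {
        "mean": sum(totals) / n_trials,
        "p90": totals[int(0.9 * n_trials)],  # 90% of simulated outcomes fall below this
        "p_over_budget": sum(t > budget for t in totals) / n_trials,
    }

print(simulate_total_cost())
```

The result is not a single forecast but a probability distribution, which is what makes the method useful for risk analysis: you can read off both a typical outcome and how likely an unfavourable one is.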

How to Choose the Right Method for your Analysis?

Choosing between descriptive statistics and inferential statistics can often be confusing. Consider the following factors before choosing the right method for your quantitative data analysis:

1. Type of Data

The first consideration in data analysis is understanding the type of data you have, such as its level of measurement (nominal, ordinal, interval, or ratio). Different statistical methods have specific requirements based on these data types, and using the wrong method can render results meaningless. The choice of statistical method should align with the nature and distribution of your data to ensure meaningful and accurate analysis.

2. Your Research Questions

When deciding on statistical methods, it’s crucial to align them with your specific research questions and hypotheses. The nature of your questions will influence whether descriptive statistics alone, which reveal sample attributes, are sufficient or if you need both descriptive and inferential statistics to understand group differences or relationships between variables and make population inferences.

Pros and Cons of Quantitative Data Analysis

Pros:

1. Objectivity and Generalizability:

- Quantitative data analysis offers objective, numerical measurements, minimizing bias and personal interpretation.

- Results can often be generalized to larger populations, making them applicable to broader contexts.

Example: A study using quantitative data analysis to measure student test scores can objectively compare performance across different schools and demographics, leading to generalizable insights about educational strategies.

2. Precision and Efficiency:

- Statistical methods provide precise numerical results, allowing for accurate comparisons and predictions.

- Large datasets can be analyzed efficiently with the help of computer software, saving time and resources.

Example: A marketing team can use quantitative data analysis to precisely track click-through rates and conversion rates on different ad campaigns, quickly identifying the most effective strategies for maximizing customer engagement.

3. Identification of Patterns and Relationships:

- Statistical techniques reveal hidden patterns and relationships between variables that might not be apparent through observation alone.

- This can lead to new insights and understanding of complex phenomena.

Example: A medical researcher can use quantitative analysis to pinpoint correlations between lifestyle factors and disease risk, aiding in the development of prevention strategies.

Cons:

1. Limited Scope:

- Quantitative analysis focuses on quantifiable aspects of a phenomenon, potentially overlooking important qualitative nuances, such as emotions, motivations, or cultural contexts.

Example: A survey measuring customer satisfaction with numerical ratings might miss key insights about the underlying reasons for their satisfaction or dissatisfaction, which could be better captured through open-ended feedback.

2. Oversimplification:

- Reducing complex phenomena to numerical data can lead to oversimplification and a loss of richness in understanding.

Example: Analyzing employee productivity solely through quantitative metrics like hours worked or tasks completed might not account for factors like creativity, collaboration, or problem-solving skills, which are crucial for overall performance.

3. Potential for Misinterpretation:

- Statistical results can be misinterpreted if not analyzed carefully and with appropriate expertise.

- The choice of statistical methods and assumptions can significantly influence results.

This blog discusses the steps, methods, and techniques of quantitative data analysis. It also gives insights into the methods of data collection, the type of data one should work with, and the pros and cons of such analysis.


Carrying out successful data analysis requires prepping the data and making it analysis-ready. That is where Hevo steps in.



Ofem is a freelance writer specializing in data-related topics, with expertise in translating complex concepts and a focus on data science, analytics, and emerging technologies.


Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo, like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

  - Descriptive statistics 101

  - Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning: English could equal 1, French 2, and so on (here the numbers act as labels, not quantities).

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our separate post and video on the topic.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- And thirdly, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. Importantly, you don’t need to be a statistician or math whiz to pull off a good quantitative analysis. We’ll break down all the technical mumbo jumbo in this post.

The two “branches” of quantitative analysis

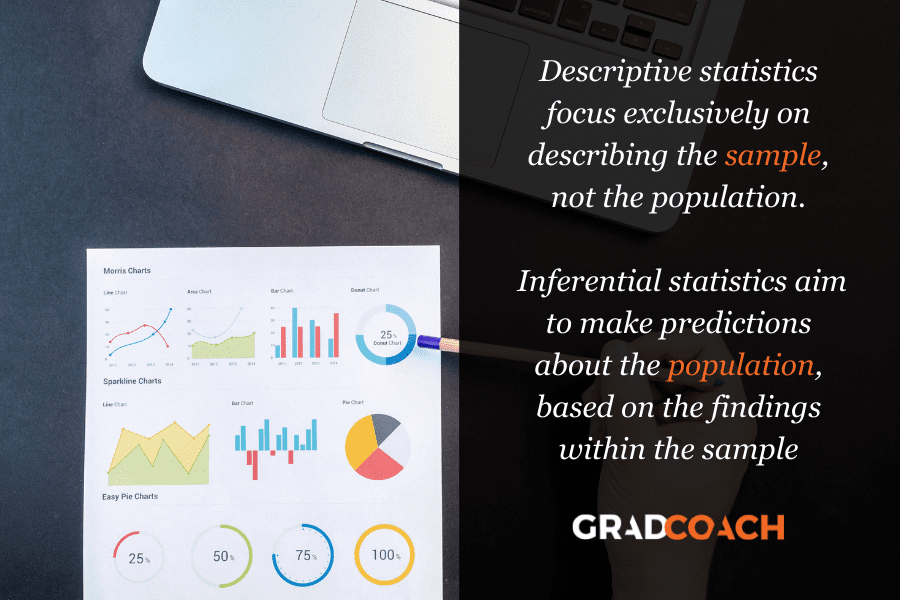

As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.

So, why is this sample-population thing important?

Well, descriptive statistics focus on describing the sample, while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…
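The slice-versus-cake idea can be sketched in a few lines of Python. This example assumes a synthetic, normally distributed population (so we can peek at the true answer, which real research never can), draws a simple random sample, describes it, and then uses an approximate 95% confidence interval (normal approximation) as one simple example of an inferential step. The numbers and sizes are arbitrary.

```python
import math
import random
from statistics import mean, stdev

rng = random.Random(7)  # fixed seed for reproducibility

# The whole cake: a synthetic population of 50,000 hypothetical measurements.
population = [rng.gauss(45, 12) for _ in range(50_000)]

# The slice: a simple random sample of 500, drawn without replacement.
sample = rng.sample(population, 500)

# Descriptive: summarise the slice itself.
sample_mean = mean(sample)

# Inferential: estimate the population mean from the slice, with an
# approximate 95% confidence interval (normal approximation, z = 1.96).
se = stdev(sample) / math.sqrt(len(sample))
ci = (sample_mean - 1.96 * se, sample_mean + 1.96 * se)

print(f"sample mean     = {sample_mean:.2f}")
print(f"95% CI estimate = ({ci[0]:.2f}, {ci[1]:.2f})")
print(f"true pop. mean  = {mean(population):.2f}  (unknown in real research)")
```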

With that out of the way, let’s take a closer look at each of these branches.

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample. Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample.

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. But, that said, depending on your research objectives and research questions, they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common statistical measures used in this branch include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set contains an odd number of values, then the median is the value right in the middle of the set. If it contains an even number of values, then the median is the midpoint (average) of the two middle values.

- Mode – this is simply the most commonly occurring number in the data set.

- Standard deviation – this indicates how dispersed a range of numbers is. In other words, how close all the numbers are to the mean (the average).

  - In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low.

  - Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.

- Skewness – as the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?
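A quick way to check the median rule for odd and even counts, and to see how the standard deviation reacts to spread, is with Python's `statistics` module. The lists here are made-up examples, and `stdev` is the sample standard deviation.

```python
from statistics import median, stdev

# Median: odd count -> the middle value; even count -> average of the two middle values.
print(median([3, 9, 1, 7, 5]))  # sorted: 1 3 5 7 9 -> 5
print(median([3, 9, 1, 7]))     # sorted: 1 3 7 9 -> (3 + 7) / 2 = 5.0

# Standard deviation: both lists have a mean of 50, but very different spreads.
tight = [48, 49, 50, 51, 52]
scattered = [10, 30, 50, 70, 90]
print(stdev(tight), stdev(scattered))  # about 1.58 vs about 31.62
```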

Feeling a bit confused? Let’s look at a practical example using a small data set.

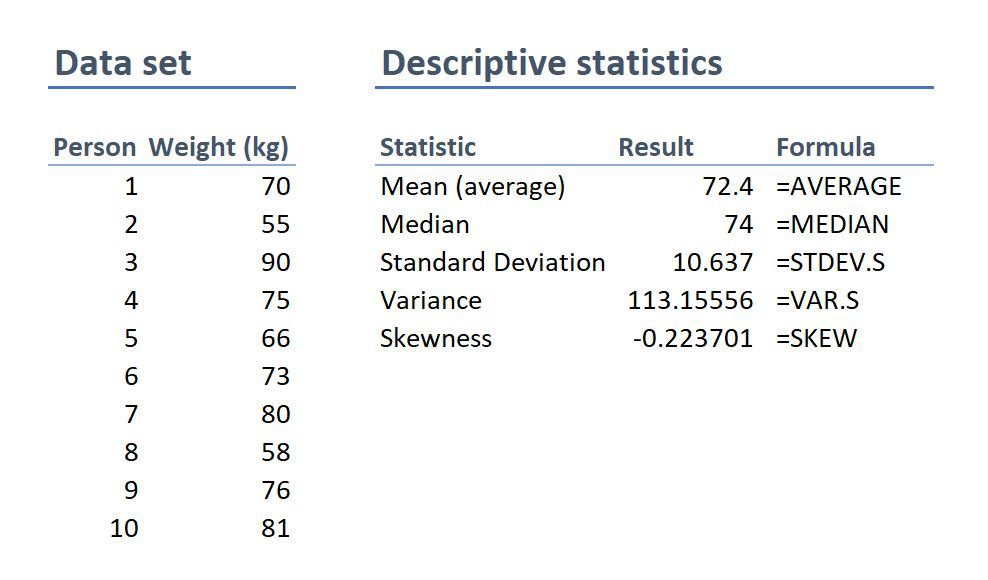

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth, centred distribution of weights, clustered towards the centre).

In terms of the mode , there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation. A value of 10.6 indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90, which is quite a stretch from the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed. This is consistent with the mean and the median being close but not identical; a skewness this near zero still points to a roughly symmetrical distribution.

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as many of those techniques depend on the shape (in other words, the symmetry and normality) of the data.

Simply put, descriptive statistics are really important, even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then landing up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population. In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.

In other words, inferential statistics (when done correctly) allow you to connect the dots and make predictions about what you expect to see in the real world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.

For example, if your population of interest is a mix of 50% male and 50% female, but your sample is 80% male, you can’t make inferences about the population based on your sample, since it’s not representative. This area of statistics is called sampling, but we won’t go down that rabbit hole here (it’s a deep one!) – we’ll save that for another post.

What statistics are usually used in this branch?

There are many, many different statistical analysis methods within the inferential branch and it’d be impossible for us to discuss them all here. So we’ll just take a look at some of the most common inferential statistical methods so that you have a solid starting point.

First up are t-tests. T-tests compare the means (the averages) of two groups of data to assess whether they’re statistically significantly different. In other words, is the difference between the two group means larger than you’d expect from random variation alone?

This type of testing is very useful for understanding just how similar or different two groups of data are. For example, you might want to compare the mean blood pressure between two groups of people – one that has taken a new medication and one that hasn’t – to assess whether they are significantly different.
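As a rough illustration, the sketch below computes Welch's t statistic (a common variant of the two-sample t-test that doesn't assume equal variances) for two groups of hypothetical blood pressure readings. It stops at the t statistic and degrees of freedom; turning those into a p-value needs the t distribution, which a library such as SciPy provides (for example, `scipy.stats.ttest_ind(treated, control, equal_var=False)`). The `welch_t` helper and the data are illustrative assumptions.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's two-sample t statistic and (Welch-Satterthwaite) degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of group a's mean
    vb = variance(b) / len(b)  # squared standard error of group b's mean
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical systolic blood pressure readings (mmHg).
treated = [118, 122, 115, 120, 117, 121, 119, 116]
control = [128, 131, 125, 130, 127, 133, 126, 129]

t, df = welch_t(treated, control)
print(f"t = {t:.2f}, df = {df:.1f}")
```

A t value far from zero (relative to the degrees of freedom) suggests the difference in means is unlikely to be due to chance alone.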

Kicking things up a level, we have ANOVA, which stands for “analysis of variance”. This test is similar to a t-test in that it compares the means of various groups, but ANOVA allows you to analyse multiple groups, not just two. So it’s basically a t-test on steroids…
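Here is a sketch of the one-way ANOVA calculation in plain Python, assuming three hypothetical groups of test scores (say, students taught with three different methods). A large F statistic means the group means differ more than the variation within each group would suggest; for a p-value you would compare F against the F distribution, for instance with `scipy.stats.f_oneway`. The `one_way_anova` helper and the scores are made up for illustration.

```python
from statistics import mean

def one_way_anova(*groups):
    """Return the one-way ANOVA F statistic and its two degrees of freedom."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    # Between-group variability: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group variability: spread of values around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical exam scores under three teaching methods.
method_a = [70, 72, 68, 75, 71]
method_b = [78, 80, 76, 79, 82]
method_c = [65, 63, 67, 66, 64]

f, df_b, df_w = one_way_anova(method_a, method_b, method_c)
print(f"F({df_b}, {df_w}) = {f:.2f}")
```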

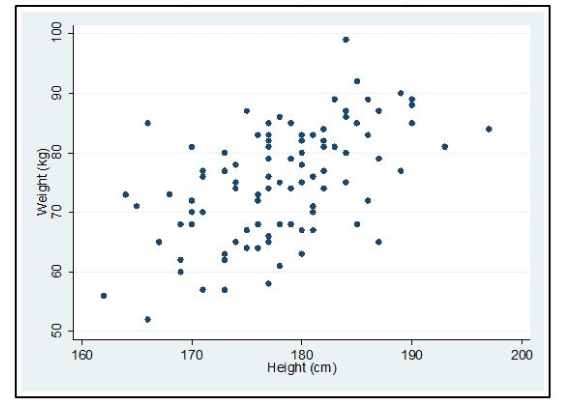

Next, we have correlation analysis. This type of analysis assesses the relationship between two variables. In other words, if one variable increases, does the other variable also increase, decrease or stay the same? For example, if the average temperature goes up, do average ice cream sales increase too? We’d expect some sort of relationship between these two variables intuitively, but correlation analysis allows us to measure that relationship scientifically.
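To put a number on the temperature and ice cream example, the Pearson correlation coefficient (r, ranging from -1 to +1) is one common measure of a linear relationship. The sketch below implements it directly; the temperature and sales figures are invented. Python 3.10 and later also ship `statistics.correlation`, which computes the same coefficient.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length (at least 2)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical daily average temperature (°C) and ice cream sales (units).
temperature = [18, 21, 24, 26, 29, 31, 33]
sales = [120, 135, 160, 170, 195, 210, 230]

print(round(pearson_r(temperature, sales), 3))  # close to +1: a strong positive relationship
```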

Lastly, we have regression analysis. This is quite similar to correlation in that it assesses the relationship between variables, but it goes a step further by modelling how much one variable is expected to change when another changes, which lets you make predictions. Regression is often used to explore cause and effect, but on its own it can’t prove that one variable actually causes the other to move, rather than both moving together thanks to another force; that depends on how the research is designed. Just because two variables correlate doesn’t necessarily mean that one causes the other.
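A minimal sketch of simple linear regression (ordinary least squares with one independent variable) follows, reusing the yoga-hours and body-weight idea from earlier with invented numbers. The data and the `fit_line` helper are illustrative assumptions. The fitted slope describes how weight tends to change with yoga hours in this data; it doesn't by itself show that yoga causes the change. Python 3.10 and later also offer `statistics.linear_regression`.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length (at least 2)")
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return intercept, slope

# Hypothetical data: yoga hours per week (x) and body weight in kg (y).
yoga_hours = [0, 1, 2, 3, 4, 5, 6]
weight_kg = [82, 80, 79, 76, 75, 74, 71]

intercept, slope = fit_line(yoga_hours, weight_kg)
print(f"weight ≈ {intercept:.1f} + ({slope:.2f}) × hours")
# Use the fitted line to predict weight for someone doing 3.5 hours a week.
print(f"predicted at 3.5 h: {intercept + slope * 3.5:.1f} kg")
```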

Stats overload…

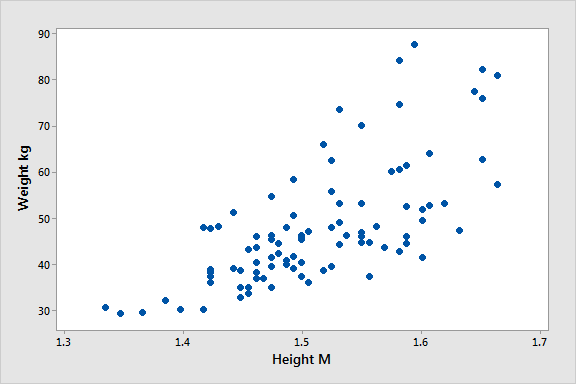

I hear you. To make this all a little more tangible, let’s take a look at an example of a correlation in action.

Here’s a scatter plot demonstrating the correlation (relationship) between weight and height. Intuitively, we’d expect there to be some relationship between these two variables, which is what we see in this scatter plot. In other words, the results tend to cluster together in a diagonal line from bottom left to top right.

As I mentioned, these are just a handful of inferential techniques – there are many, many more. Importantly, each statistical method has its own assumptions and limitations.

For example, some methods (parametric methods) only work with normally distributed data, while non-parametric methods are designed for data that doesn’t meet that assumption. And that’s exactly why descriptive statistics are so important – they’re the first step to knowing which inferential techniques you can and can’t use.

How to choose the right analysis method

To choose the right statistical methods, you need to think about two important factors:

- The type of quantitative data you have (specifically, level of measurement and the shape of the data). And,

- Your research questions and hypotheses

Let’s take a closer look at each of these.

Factor 1 – Data type

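The first thing you need to consider is the type of data you’ve collected (or the type of data you will collect). By data types, I’m referring to the four levels of measurement – namely, nominal, ordinal, interval and ratio. In brief, nominal data are categories with no natural order (for example, native language), ordinal data are categories with a meaningful order (for example, satisfaction rated from “poor” to “excellent”), interval data have equal gaps between values but no true zero (for example, temperature in Celsius), and ratio data have equal gaps and a true zero (for example, weight or income).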

Why does this matter?

Well, because different statistical methods and techniques require different types of data. This is one of the “assumptions” I mentioned earlier – every method has its assumptions regarding the type of data.

For example, some techniques work with categorical data (for example, yes/no type questions, or gender or ethnicity), while others work with continuous numerical data (for example, age, weight or income) – and, of course, some work with multiple data types.

If you try to use a statistical method that doesn’t support the data type you have, your results will be largely meaningless. So, make sure that you have a clear understanding of what types of data you’ve collected (or will collect). Once you have this, you can then check which statistical methods would support your data types.
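As a rough illustration of matching methods to data types, here’s a hypothetical rule-of-thumb lookup in Python. The mapping is a simplified assumption, not an exhaustive or authoritative list: parametric techniques such as t-tests and Pearson correlation also assume roughly normal data, so the shape of your data still matters.

```python
# Hypothetical rule-of-thumb lookup (not exhaustive): level of measurement -> common techniques.
_INTERVAL = ["mean", "standard deviation", "t-test", "ANOVA",
             "Pearson correlation", "linear regression"]

SUGGESTED_METHODS = {
    "nominal": ["frequency counts", "mode", "chi-square test"],
    "ordinal": ["frequency counts", "median", "percentiles",
                "Spearman correlation", "Mann-Whitney U test"],
    "interval": _INTERVAL,
    # Ratio data have a true zero, so ratio-based measures such as the
    # coefficient of variation become meaningful.
    "ratio": _INTERVAL + ["coefficient of variation"],
}

def suggest_methods(level: str) -> list[str]:
    """Return candidate techniques for a level of measurement (case-insensitive)."""
    key = level.strip().lower()
    if key not in SUGGESTED_METHODS:
        raise ValueError(f"unknown level of measurement: {level!r}")
    return SUGGESTED_METHODS[key]

print(suggest_methods("Ordinal"))
```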

If you haven’t collected your data yet, you can work in reverse and look at which statistical method would give you the most useful insights, and then design your data collection strategy to collect the correct data types.

Another important factor to consider is the shape of your data. Specifically, does it have a normal distribution (in other words, is it a bell-shaped curve, centred in the middle) or is it very skewed to the left or the right? Again, different statistical techniques work for different shapes of data – some are designed for symmetrical data while others are designed for skewed data.

This is another reminder of why descriptive statistics are so important – they tell you all about the shape of your data.
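One common descriptive measure of shape is skewness. The sketch below computes the adjusted Fisher-Pearson sample skewness using only Python’s standard library. Values near 0 suggest a roughly symmetrical distribution, positive values a longer right tail, and negative values a longer left tail. Treating |skewness| > 1 as "highly skewed" is a common rule of thumb, not a strict cut-off, and the example numbers are hypothetical.

```python
# Adjusted Fisher-Pearson sample skewness, standard library only.
from statistics import mean, stdev

def sample_skewness(values):
    """Return the adjusted Fisher-Pearson skewness of a sample (needs >= 3 values)."""
    n = len(values)
    if n < 3:
        raise ValueError("need at least 3 values")
    m = mean(values)
    s = stdev(values)  # sample standard deviation (n - 1 denominator)
    return n / ((n - 1) * (n - 2)) * sum(((x - m) / s) ** 3 for x in values)

symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 2, 2, 3, 10]  # one large value stretches the right tail

print(f"symmetric:    {sample_skewness(symmetric):+.2f}")
print(f"right-skewed: {sample_skewness(right_skewed):+.2f}")
```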

Factor 2: Your research questions

The next thing you need to consider is your specific research questions, as well as your hypotheses (if you have some). The nature of your research questions and research hypotheses will heavily influence which statistical methods and techniques you should use.

If you’re just interested in understanding the attributes of your sample (as opposed to the entire population), then descriptive statistics are probably all you need. For example, if you just want to assess the means (averages) and medians (centre points) of variables in a group of people.

On the other hand, if you aim to understand differences between groups or relationships between variables and to infer or predict outcomes in the population, then you’ll likely need both descriptive statistics and inferential statistics.
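As an example of an inferential technique for comparing two groups, here’s a sketch of Welch’s t-test (a variant of the t-test that doesn’t assume equal variances), computing the t statistic and degrees of freedom with the standard library. The scores are hypothetical. Turning t into a p-value needs the t distribution, which the standard library doesn’t provide; if SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic along with a p-value.

```python
# Welch's t statistic and Welch-Satterthwaite degrees of freedom (standard library only).
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Return (t, df) for the difference between the means of samples a and b."""
    se2_a = variance(a) / len(a)  # squared standard error of a's mean
    se2_b = variance(b) / len(b)  # squared standard error of b's mean
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df

# Hypothetical satisfaction scores (1-10 scale) for two groups.
group_a = [7.1, 6.8, 7.6, 7.0, 7.3]
group_b = [6.2, 6.5, 6.0, 6.6, 6.3]

t, df = welch_t(group_a, group_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```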

So, it’s really important to get very clear about your research aims and research questions, as well as your hypotheses – before you start looking at which statistical techniques to use.

Never shoehorn a specific statistical technique into your research just because you like it or have some experience with it. Your choice of methods must align with all the factors we’ve covered here.

Time to recap…

You’re still with me? That’s impressive. We’ve covered a lot of ground here, so let’s recap the key points:

- Quantitative data analysis is all about analysing number-based data (which includes categorical and numerical data) using various statistical techniques.

- The two main branches of statistics are descriptive statistics and inferential statistics. Descriptives describe your sample, whereas inferentials make predictions about what you’ll find in the population.

- Common descriptive statistical methods include mean (average), median, standard deviation and skewness.

- Common inferential statistical methods include t-tests, ANOVA, correlation and regression analysis.

- To choose the right statistical methods and techniques, you need to consider the type of data you’re working with, as well as your research questions and hypotheses.

Psst… there’s more (for free)

This post is part of our dissertation mini-course, which covers everything you need to get started with your dissertation, thesis or research project.




8 Types of Data Analysis

Data analysis is an aspect of data science and data analytics concerned with examining data for a range of purposes. The data analysis process involves inspecting, cleaning, transforming and modeling data to draw useful insights from it.

What Are the Different Types of Data Analysis?

- Descriptive analysis

- Diagnostic analysis

- Exploratory analysis

- Inferential analysis

- Predictive analysis

- Causal analysis

- Mechanistic analysis

- Prescriptive analysis

With its multiple facets, methodologies and techniques, data analysis is used in a variety of fields, including business, science and social science, among others. As businesses thrive under the influence of technological advancements in data analytics, data analysis plays a huge role in decision-making, providing a better, faster and more efficacious system that minimizes risks and reduces human biases.

That said, there are different kinds of data analysis, each catering to different goals. We’ll examine each one below.

Two Camps of Data Analysis

Data analysis can be divided into two camps, according to the book R for Data Science:

- Hypothesis Generation — This involves looking deeply at the data and combining your domain knowledge to generate hypotheses about why the data behaves the way it does.

- Hypothesis Confirmation — This involves using a precise mathematical model to generate falsifiable predictions with statistical sophistication to confirm your prior hypotheses.

Types of Data Analysis

Data analysis can be separated and organized into types, arranged in increasing order of complexity.

1. Descriptive Analysis

The goal of descriptive analysis is to describe or summarize a set of data. Here’s what you need to know:

- Descriptive analysis is the very first analysis performed in the data analysis process.

- It generates simple summaries about samples and measurements.

- It involves common, descriptive statistics like measures of central tendency, variability, frequency and position.
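The four kinds of descriptive statistics listed above can be sketched in a few lines of Python using only the standard library. The daily order counts are hypothetical; the point is that each summary answers a different question about the same data.

```python
# Descriptive summary of a hypothetical dataset: daily order counts over 10 days.
from collections import Counter
from statistics import mean, median, mode, stdev, quantiles

daily_orders = [12, 15, 15, 18, 20, 22, 15, 30, 17, 19]

summary = {
    # Central tendency
    "mean": mean(daily_orders),
    "median": median(daily_orders),
    "mode": mode(daily_orders),
    # Variability
    "stdev": stdev(daily_orders),
    # Position: the three cut points that split the data into quartiles
    "quartiles": quantiles(daily_orders, n=4),
    # Frequency: how often each value occurs
    "frequency": Counter(daily_orders),
}

for name, value in summary.items():
    print(f"{name}: {value}")
```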

Descriptive Analysis Example

Take the Covid-19 statistics page on Google, for example. The line graph is a pure summary of the cases/deaths, a presentation and description of the population of a particular country infected by the virus.

Descriptive analysis is the first step in analysis where you summarize and describe the data you have using descriptive statistics, and the result is a simple presentation of your data.


2. Diagnostic Analysis

Diagnostic analysis seeks to answer the question “Why did this happen?” by taking a more in-depth look at data to uncover subtle patterns. Here’s what you need to know:

- Diagnostic analysis typically comes after descriptive analysis, taking initial findings and investigating why certain patterns in data happen.

- Diagnostic analysis may involve analyzing other related data sources, including past data, to reveal more insights into current data trends.

- Diagnostic analysis is ideal for further exploring patterns in data to explain anomalies.

Diagnostic Analysis Example

A footwear store wants to review its website traffic levels over the previous 12 months. Upon compiling and assessing the data, the company’s marketing team finds that June experienced above-average levels of traffic while July and August witnessed slightly lower levels of traffic.

To find out why this difference occurred, the marketing team takes a deeper look. Team members break down the data to focus on specific categories of footwear. They discover that in June, pages featuring sandals and other beach-related footwear received a high number of views, while these numbers dropped in July and August.

Marketers may also review other factors like seasonal changes and company sales events to see if other variables could have contributed to this trend.
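The drill-down in this example can be sketched in Python: total the traffic per month, then break it down by category to see which category drives the change. The page-view figures and category names are hypothetical, invented to mirror the scenario.

```python
# Diagnostic drill-down: monthly totals, then each month's share from one category.
from collections import defaultdict

# Hypothetical page views: (month, footwear category, views)
page_views = [
    ("Jun", "sandals", 9_000), ("Jun", "sneakers", 4_000),
    ("Jul", "sandals", 3_500), ("Jul", "sneakers", 4_100),
    ("Aug", "sandals", 3_000), ("Aug", "sneakers", 4_200),
]

totals = defaultdict(int)                            # month -> total views
by_category = defaultdict(lambda: defaultdict(int))  # month -> category -> views
for month, category, views in page_views:
    totals[month] += views
    by_category[month][category] += views

# How much of each month's traffic came from sandal pages?
for month in ("Jun", "Jul", "Aug"):
    sandal_share = by_category[month]["sandals"] / totals[month]
    print(f"{month}: {totals[month]:,} views, {sandal_share:.0%} from sandal pages")
```

In this made-up data, sneaker traffic stays roughly flat while sandal traffic falls, which points the team toward a seasonal explanation worth checking against other factors.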

3. Exploratory Analysis (EDA)

Exploratory analysis involves examining or exploring data and finding relationships between variables that were previously unknown. Here’s what you need to know:

- EDA helps you discover relationships between measures in your data. These relationships are not evidence of causation, as the phrase “Correlation doesn’t imply causation” reminds us.

- It’s useful for discovering new connections and forming hypotheses. It drives design planning and data collection.

Exploratory Analysis Example

Climate change is an increasingly important topic as the global temperature has gradually risen over the years. One example of an exploratory data analysis on climate change involves taking the rise in temperature from 1950 to 2020 alongside the increase in human activities and industrialization to find relationships in the data. For example, you might track the growth in the number of factories, cars on the road and airplane flights to see how it correlates with the rise in temperature.

Exploratory analysis explores data to find relationships between measures without identifying the cause. It’s most useful when formulating hypotheses.

4. Inferential Analysis

Inferential analysis involves using a small sample of data to infer information about a larger population of data.

The goal of statistical modeling itself is all about using a small amount of information to extrapolate and generalize information to a larger group. Here’s what you need to know:

- Inferential analysis involves using sample data that is representative of a population to produce estimates, along with a measure of uncertainty (such as a standard error or confidence interval) for those estimates.

- The accuracy of inference depends heavily on your sampling scheme. If the sample isn’t representative of the population, the generalization will be inaccurate. This problem is known as sampling bias.

Inferential Analysis Example

The idea of drawing an inference about the population at large from a smaller sample is intuitive. Many statistics you see in the media and on the internet are inferential: a prediction of an event based on a small sample. For example, a psychological study on the benefits of sleep might involve a total of 500 people. When the researchers followed up with the participants, those who slept seven to nine hours reported better overall attention spans and well-being, while those who slept less or more than that range suffered from reduced attention spans and energy. This study of 500 people covers just a tiny portion of the 7 billion people in the world, so its conclusions are an inference about the larger population.

Inferential analysis uses a smaller sample to extrapolate and generalize information about a larger group, generating analysis and predictions.
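A basic inferential calculation is a confidence interval for a population mean, estimated from a sample. The sketch below uses Python’s standard library and a hypothetical sample of attention scores. To keep it dependency-free, it assumes a normal approximation (the familiar 1.96 multiplier for 95%). For a sample this small, a t-distribution critical value would give a somewhat wider, more appropriate interval.

```python
# 95% confidence interval for a mean using a normal approximation (standard library only).
from math import sqrt
from statistics import NormalDist, mean, stdev

# Hypothetical attention scores from a small sample of participants.
sample = [72, 68, 75, 80, 71, 69, 77, 74, 73, 70]

n = len(sample)
m = mean(sample)
se = stdev(sample) / sqrt(n)     # standard error of the mean
z = NormalDist().inv_cdf(0.975)  # about 1.96 for a two-sided 95% interval
low, high = m - z * se, m + z * se
print(f"sample mean = {m:.1f}, 95% CI ≈ ({low:.1f}, {high:.1f})")
```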

5. Predictive Analysis

Predictive analysis involves using historical or current data to find patterns and make predictions about the future. Here’s what you need to know:

- The accuracy of the predictions depends on the input variables.

- Accuracy also depends on the types of models. A linear model might work well in some cases, and in other cases it might not.

- Using a variable to predict another one doesn’t denote a causal relationship.

Predictive Analysis Example

The 2020 US election is a popular topic, and many prediction models were built to predict the winning candidate. FiveThirtyEight did this to forecast the 2016 and 2020 elections. Predictive analysis for an election requires input variables such as historical polling data, trends and current polling data in order to return a good prediction. Something as large as an election wouldn’t rely on a simple linear model alone, but on a complex model tuned to best serve its purpose.

Predictive analysis takes data from the past and present to make predictions about the future.


6. Causal Analysis

Causal analysis looks at cause-and-effect relationships between variables and is focused on finding the cause of a correlation. Here’s what you need to know:

- To find the cause, you have to question whether the observed correlations driving your conclusion are valid. Just looking at the surface data won’t help you discover the hidden mechanisms underlying the correlations.

- Causal analysis is applied in randomized studies focused on identifying causation.

- Causal analysis is the gold standard in data analysis and scientific studies where the cause of a phenomenon is to be extracted and singled out, like separating wheat from chaff.

- Good data is hard to find and requires expensive research and studies. These studies are analyzed in aggregate (multiple groups), and the observed relationships are just average effects (mean) of the whole population. This means the results might not apply to everyone.

Causal Analysis Example

Say you want to test whether a new drug improves human strength and focus. To do that, you run a randomized controlled trial: participants are randomly assigned to receive either the new drug or a placebo, and both groups complete a few tests focused on strength and overall focus and attention. Comparing the two groups allows you to observe how the drug affects the outcome.

Causal analysis is about finding out the causal relationship between variables, and examining how a change in one variable affects another.
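The core mechanics of a randomized trial can be sketched in Python: randomly assign participants to treatment and control, then estimate the effect as the difference in group means. The outcomes below are simulated with an assumed +4 point drug effect purely for illustration; in a real trial they would come from the strength and focus tests.

```python
# Randomized assignment plus a difference-in-means effect estimate (simulated data).
import random
from statistics import mean

def randomize(participants, seed=42):
    """Randomly split participants into a treatment group and a control group."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

participants = [f"p{i:02d}" for i in range(20)]
treatment, control = randomize(participants)
treated = set(treatment)

# Simulated outcomes: baseline focus score around 70, plus an ASSUMED +4 effect for the drug.
outcome_rng = random.Random(0)
focus = {p: outcome_rng.gauss(70, 5) + (4 if p in treated else 0) for p in participants}

# Because assignment was random, the difference in means estimates the average effect.
effect = mean(focus[p] for p in treatment) - mean(focus[p] for p in control)
print(f"estimated effect on focus: {effect:+.1f} points")
```

Random assignment is what does the heavy lifting: it makes the groups comparable on average, so a difference in outcomes can be attributed to the drug rather than to who chose to take it.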

7. Mechanistic Analysis

Mechanistic analysis is used to understand the exact changes in variables that lead to changes in other variables. Here’s what you need to know:

- It’s applied in the physical and engineering sciences, in situations that require high precision and leave little room for error, where the only noise in the data is measurement error.

- It’s designed to understand a biological or behavioral process, the pathophysiology of a disease or the mechanism of action of an intervention.

Mechanistic Analysis Example

Many graduate-level research projects and complex topics are suitable examples, but to put it in simple terms, let’s say an experiment is done to simulate safe and effective nuclear fusion to power the world. A mechanistic analysis of the study would entail a precise balance of controlling and manipulating variables with highly accurate measures of both variables and the desired outcomes. It’s this intricate and meticulous modus operandi toward these big topics that allows for scientific breakthroughs and advancement of society.

Mechanistic analysis is in some ways a predictive analysis, but modified to tackle studies that require high precision and meticulous methodologies for physical or engineering science.

8. Prescriptive Analysis

Prescriptive analysis compiles insights from previous data analyses and determines actions that teams or companies can take to prepare for predicted trends. Here’s what you need to know:

- Prescriptive analysis may come right after predictive analysis, but it may involve combining many different data analyses.

- Companies need advanced technology and plenty of resources to conduct prescriptive analysis. AI systems that process data and adjust automated tasks are an example of the technology required to perform prescriptive analysis.

Prescriptive Analysis Example

Prescriptive analysis is pervasive in everyday life, driving the curated content users consume on social media. On platforms like TikTok and Instagram, algorithms can apply prescriptive analysis to review past content a user has engaged with and the kinds of behaviors they exhibited with specific posts. Based on these factors, an algorithm seeks out similar content that is likely to elicit the same response and recommends it on a user’s personal feed.
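The "find similar content and recommend it" step can be illustrated with a toy content-based recommender. This is a hypothetical sketch, not how TikTok or Instagram actually work (their systems are proprietary and far more complex): each post is described by topic tags, a user profile is built from posts they engaged with, and unseen posts are ranked by cosine similarity to that profile.

```python
# Toy content-based recommender using cosine similarity over topic tags (hypothetical data).
from math import sqrt

posts = {
    "post_a": {"cooking": 1, "vegan": 1},
    "post_b": {"cooking": 1, "baking": 1},
    "post_c": {"football": 1, "highlights": 1},
    "post_d": {"vegan": 1, "fitness": 1},
}

def cosine(u, v):
    """Cosine similarity between two sparse tag vectors stored as dicts."""
    dot = sum(weight * v.get(tag, 0) for tag, weight in u.items())
    norm = sqrt(sum(w * w for w in u.values())) * sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0

def recommend(engaged, k=2):
    """Rank posts the user hasn't seen by similarity to their engagement profile."""
    profile = {}
    for post_id in engaged:
        for tag, weight in posts[post_id].items():
            profile[tag] = profile.get(tag, 0) + weight
    unseen = [p for p in posts if p not in engaged]
    return sorted(unseen, key=lambda p: cosine(profile, posts[p]), reverse=True)[:k]

print(recommend(["post_a"]))
```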

When to Use the Different Types of Data Analysis

- Descriptive analysis summarizes the data at hand and presents your data in a comprehensible way.

- Diagnostic analysis takes a more detailed look at data to reveal why certain patterns occur, making it a good method for explaining anomalies.

- Exploratory data analysis helps you discover correlations and relationships between variables in your data.

- Inferential analysis is for generalizing to a larger population from a smaller sample of data.

- Predictive analysis helps you make predictions about the future with data.

- Causal analysis emphasizes finding the cause of a correlation between variables.

- Mechanistic analysis is for measuring the exact changes in variables that lead to changes in other variables.

- Prescriptive analysis combines insights from different data analyses to develop a course of action teams and companies can take to capitalize on predicted outcomes.

A few important tips to remember about data analysis include:

- Correlation doesn’t imply causation.

- EDA helps discover new connections and form hypotheses.

- Accuracy of inference depends on the sampling scheme.

- A good prediction depends on the right input variables.

- A simple linear model with enough data usually does the trick.

- Using a variable to predict another doesn’t denote causal relationships.

- Good data is hard to find, and to produce it requires expensive research.

- Study results are computed in aggregate, reflect average effects and might not apply to everyone.

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.



8 quantitative data analysis methods to turn numbers into insights

Setting up a few new customer surveys or creating a fresh Google Analytics dashboard feels exciting…until the numbers start rolling in. You want to turn responses into a plan to present to your team and leaders—but which quantitative data analysis method do you use to make sense of the facts and figures?


This guide lists eight quantitative research data analysis techniques to help you turn numeric feedback into actionable insights to share with your team and make customer-centric decisions.

To pick the right technique that helps you bridge the gap between data and decision-making, you first need to collect quantitative data from sources like:

Google Analytics

Survey results

On-page feedback scores


Then, choose an analysis method based on the type of data and how you want to use it.

Descriptive data analysis summarizes results—like measuring website traffic—that help you learn about a problem or opportunity. The descriptive analysis methods we’ll review are:

Multiple choice response rates

Response volume over time

Net Promoter Score®

Inferential data analysis examines relationships between data points, like which customer segment has the highest average order value, to help you form hypotheses about product decisions. Inferential analysis methods include:

Cross-tabulation

Weighted customer feedback

You don’t need to worry too much about these specific terms since each quantitative data analysis method listed below explains when and how to use them. Let’s dive in!

1. Compare multiple-choice response rates

The simplest way to analyze survey data is by comparing the percentage of your users who chose each response, which summarizes opinions within your audience.

To do this, divide the number of people who chose a specific response by the total number of respondents to your multiple-choice survey. Imagine 100 customers respond to a survey about what product category they want to see. If 25 people said ‘snacks’, 25% of your audience favors that category, which suggests that adding a snacks category to your list of filters or drop-down menu could make the purchasing process easier for them.
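Here’s a minimal Python sketch of that calculation using `collections.Counter`. The 100 responses are hypothetical, chosen to match the ‘snacks’ example.

```python
# Response rate for each option in a multiple-choice question.
from collections import Counter

# Hypothetical answers to "Which product category would you like to see?"
responses = ["snacks"] * 25 + ["drinks"] * 40 + ["household"] * 35

counts = Counter(responses)
total = len(responses)
rates = {option: count / total for option, count in counts.items()}

# Print options from most to least popular.
for option, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{option}: {rate:.0%}")
```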

💡Pro tip: ask open-ended survey questions to dig deeper into customer motivations.

A multiple-choice survey measures your audience’s opinions, but numbers don’t tell you why they think the way they do—you need to combine quantitative and qualitative data to learn that.

One research method to learn about customer motivations is through an open-ended survey question. Giving customers space to express their thoughts in their own words—unrestricted by your pre-written multiple-choice questions—prevents you from making assumptions.

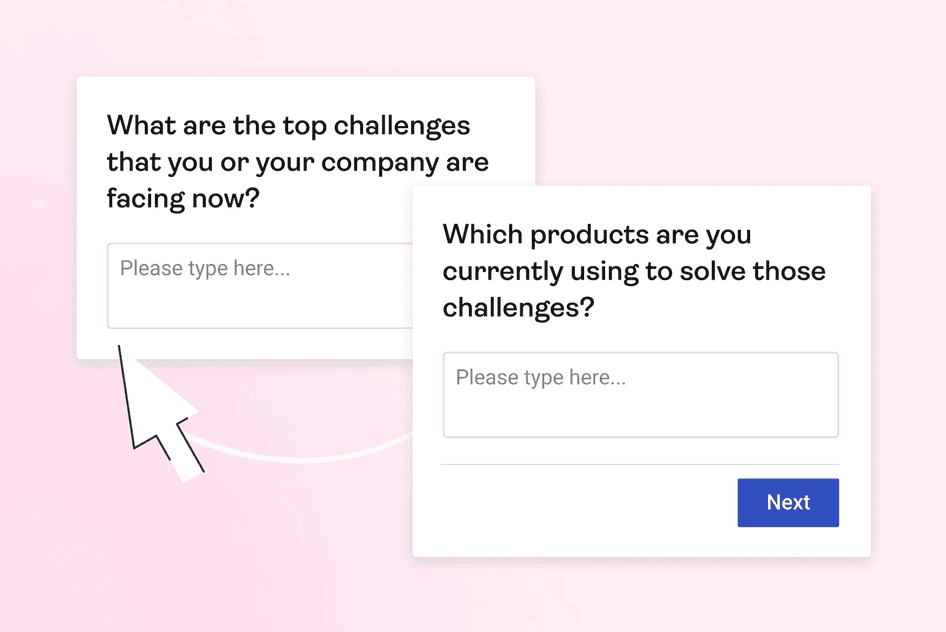

Hotjar’s open-ended surveys have a text box for customers to type a response

2. Cross-tabulate to compare responses between groups

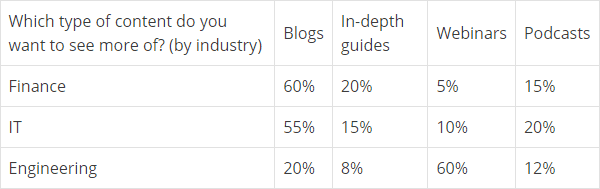

To understand how responses and behavior vary within your audience, compare your quantitative data by group. Use raw numbers, like the number of website visitors, or percentages, like questionnaire responses, across categories like traffic sources or customer segments.

Let’s say you ask your audience what their most-used feature is because you want to know what to highlight on your pricing page. Comparing the most common response for free trial users vs. established customers lets you strategically introduce features at the right point in the customer journey.
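A cross-tabulation is just a count of responses for each combination of group and answer. The sketch below builds one with the standard library; the segments and feature names are hypothetical. If you already use pandas, `pandas.crosstab` produces the same kind of table.

```python
# Cross-tabulate survey answers by customer segment.
from collections import Counter, defaultdict

# Hypothetical (segment, most-used feature) responses.
responses = [
    ("free trial", "templates"), ("free trial", "templates"), ("free trial", "reports"),
    ("customer", "reports"), ("customer", "integrations"), ("customer", "reports"),
]

crosstab = defaultdict(Counter)  # segment -> feature -> count
for segment, feature in responses:
    crosstab[segment][feature] += 1

for segment, counts in crosstab.items():
    total = sum(counts.values())
    top_feature, top_count = counts.most_common(1)[0]
    print(f"{segment}: most used = {top_feature} ({top_count / total:.0%} of {total} responses)")
```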

💡Pro tip: get some face-to-face time to discover nuances in customer feedback.

Rather than treating your customers as a monolith, use Hotjar to conduct interviews to learn about individuals and subgroups. If you aren’t sure what to ask, start with your quantitative data results. If you notice competing trends between customer segments, have a few conversations with individuals from each group to dig into their unique motivations.

Hotjar Engage lets you identify specific customer segments you want to talk to

3. Mode

Mode is the most common answer in a data set, so you use it to discover the most popular response for questions with numeric answer options. Mode and median (that's next on the list) are useful to compare to the average in case responses on extreme ends of the scale (outliers) skew the outcome.

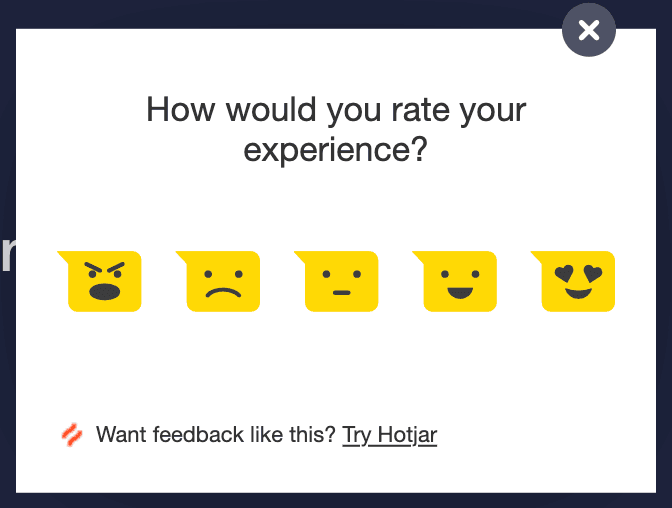

Let’s say you want to know how most customers feel about your website, so you use an on-page feedback widget to collect ratings on a scale of one to five.

If the mode, or most common response, is a three, you can assume the most common sentiment is middling to somewhat positive. But suppose the second-most common response is a one (which would bring the average down). In that case, you need to investigate why so many customers are unhappy.
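The scenario above can be checked in a few lines of Python: `Counter.most_common` gives the mode and the runner-up, and comparing them with the mean shows how a cluster of low ratings drags the average down. The ratings are hypothetical.

```python
# Mode, runner-up and mean of 1-5 feedback ratings.
from collections import Counter
from statistics import mean

ratings = [3, 3, 3, 3, 1, 1, 1, 4, 5, 2]  # hypothetical on-page feedback ratings

counts = Counter(ratings)
(most, most_n), (second, second_n) = counts.most_common(2)
print(f"mode = {most} ({most_n} votes), runner-up = {second} ({second_n} votes)")
print(f"mean = {mean(ratings):.1f}")
```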

💡Pro tip: watch recordings to understand how customers interact with your website.

So you used on-page feedback to learn how customers feel about your website, and the mode was two out of five. Ouch. Use Hotjar Recordings to see how customers move around on and interact with your pages to find the source of frustration.

Hotjar Recordings lets you watch individual visitors interact with your site, like how they scroll, hover, and click

4. Median

Median reveals the middle of the road of your quantitative data: line up all numeric values in ascending order and take the data point in the middle (or, if there’s an even number of values, the average of the two middle points). Use the median method when you notice a few outliers that bring the average up or down, and compare the analysis outcomes.

For example, if your price sensitivity survey has outlandish responses and you want to identify a reasonable middle ground of what customers are willing to pay—calculate the median.
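This short Python sketch shows why the median is more robust than the mean when a few answers are outlandish. The willingness-to-pay figures are hypothetical and include two extreme responses.

```python
# Mean vs. median when a few responses are extreme.
from statistics import mean, median

# Hypothetical answers to "What would you pay per month?" (USD), with two outliers.
willing_to_pay = [15, 20, 18, 22, 25, 19, 21, 500, 1000]

print(f"mean   = {mean(willing_to_pay):.2f}")    # pulled up sharply by 500 and 1000
print(f"median = {median(willing_to_pay):.2f}")  # middle value once sorted

# With an even number of values, the median averages the two middle values.
print(median([10, 20, 30, 40]))
```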

💡Pro tip: review and clean your data before analysis.

Take a few minutes to familiarize yourself with quantitative data results before you push them through analysis methods. Inaccurate or missing information can complicate your calculations, and it’s less frustrating to resolve issues at the start instead of problem-solving later.

Here are a few data-cleaning tips to keep in mind:

Remove or separate irrelevant data, like responses from a customer segment or time frame you aren’t reviewing right now

Standardize data from multiple sources, like a survey that let customers indicate they use your product ‘daily’ vs. on-page feedback that used the phrasing ‘more than once a week’

Acknowledge missing data, like some customers not answering every question. Just note that your totals between research questions might not match.

Ensure you have enough responses to draw statistically reliable conclusions

Decide if you want to keep or remove outlying data. For example, maybe there’s evidence to support a high-price tier, and you shouldn’t dismiss less price-sensitive respondents. Other times, you might want to get rid of obviously trolling responses.
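A few of these cleaning steps can be sketched in Python: set aside irrelevant rows, standardize wording from different sources, and count missing answers rather than silently dropping them. The rows and the `USAGE_MAP` categories are hypothetical; how you map wording like ‘daily’ and ‘more than once a week’ onto one scale is a judgment call for your own data.

```python
# Hypothetical raw survey rows; None marks a question the respondent skipped.
raw_rows = [
    {"segment": "customer", "usage": "daily", "rating": 4},
    {"segment": "customer", "usage": "more than once a week", "rating": None},
    {"segment": "trial", "usage": "Daily", "rating": 5},
    {"segment": "customer", "usage": " weekly", "rating": 3},
]

# Hypothetical mapping that puts answers from different sources on one scale.
USAGE_MAP = {"daily": "frequent", "more than once a week": "frequent", "weekly": "regular"}

def clean(rows, segment):
    """Keep one segment, standardize usage labels, and count (not hide) missing ratings."""
    kept, missing = [], 0
    for row in rows:
        if row["segment"] != segment:
            continue  # set aside data you aren't reviewing right now
        usage = USAGE_MAP.get(row["usage"].strip().lower(), "other")
        if row["rating"] is None:
            missing += 1  # note it, so totals that don't match are explained
        kept.append({**row, "usage": usage})
    return kept, missing

rows, missing = clean(raw_rows, "customer")
print(f"{len(rows)} rows kept, {missing} missing rating(s)")
```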

5. Mean (AKA average)

Finding the average of a dataset is an essential quantitative data analysis method and an easy task. First, add all your quantitative data points, like numeric survey responses or daily sales revenue. Then, divide the sum of your data points by the number of responses to get a single number representing the entire dataset.

Use the average of your quant data when you want a summary, like the average order value of your transactions between different sales pages. Then, use your average to benchmark performance, compare over time, or uncover winners across segments—like which sales page design produces the most value.
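As a rough sketch of the average order value comparison described above, the Python below groups hypothetical transactions by sales page, averages each group, and picks the page with the highest value. The page names and order values are made up for illustration.

```python
from collections import defaultdict


def mean(values):
    """Add all data points, then divide by how many there are."""
    if not values:
        raise ValueError("mean requires at least one value")
    return sum(values) / len(values)


# Hypothetical transactions: (sales page, order value in dollars)
orders = [
    ("page_a", 40.0), ("page_a", 60.0),
    ("page_b", 55.0), ("page_b", 75.0), ("page_b", 65.0),
]

# Group order values by the sales page that produced them
by_page = defaultdict(list)
for page, value in orders:
    by_page[page].append(value)

average_order_value = {page: mean(values) for page, values in by_page.items()}
best_page = max(average_order_value, key=average_order_value.get)
print(average_order_value, best_page)  # {'page_a': 50.0, 'page_b': 65.0} page_b
```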

💡Pro tip: use heatmaps to find attention-catching details numbers can’t give you.

Calculating the average of your quant data set reveals the outcome of customer interactions. However, you need qualitative data like a heatmap to learn about everything that led to that moment. A heatmap uses colors to illustrate where most customers look and click on a page to reveal what drives (or drops) momentum.

Hotjar Heatmaps uses color to visualize what most visitors see, ignore, and click on

6. Measure the volume of responses over time

Some quantitative data analysis methods are an ongoing project, like comparing top website referral sources by month to gauge the effectiveness of new channels. Analyzing the same metric at regular intervals lets you compare trends and changes.

Look at quantitative survey results, website sessions, sales, cart abandons, or clicks regularly to spot trouble early or monitor the impact of a new initiative.

Here are a few areas you can measure over time (and how to use qualitative research methods listed above to add context to your results):

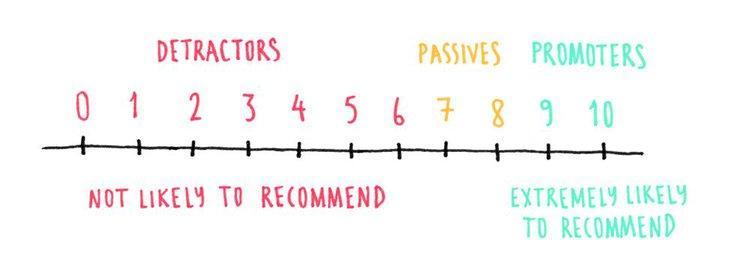
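To make the month-by-month comparison concrete, here is a small Python sketch that counts website sessions per referral source per month and reports the percentage change. The session log, month labels, and source names are hypothetical; in practice the counts would come from your analytics export.

```python
from collections import Counter

# Hypothetical session log: (month, referral source) for each website session
sessions = [
    ("2024-01", "search"), ("2024-01", "search"), ("2024-01", "newsletter"),
    ("2024-02", "search"), ("2024-02", "newsletter"),
    ("2024-02", "newsletter"), ("2024-02", "newsletter"),
]

volume = Counter(sessions)  # number of sessions per (month, source) pair


def percent_change(volume, source, earlier, later):
    """Month-over-month change in sessions for one source, in percent."""
    before = volume[(earlier, source)]  # Counter returns 0 for unseen keys
    after = volume[(later, source)]
    if before == 0:
        return None  # no baseline to compare against
    return 100 * (after - before) / before


for source in ("search", "newsletter"):
    print(source, percent_change(volume, source, "2024-01", "2024-02"))
# search -50.0
# newsletter 200.0
```

Running the same calculation at a regular interval is what turns a one-off count into a trend you can monitor.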

7. Net Promoter Score®

Net Promoter Score® (NPS®) is a popular customer loyalty and satisfaction measurement that also serves as a quantitative data analysis method.

NPS surveys ask customers to rate how likely they are to recommend you on a scale of zero to ten. Calculate it by subtracting the percentage of customers who answer the NPS question with a six or lower (known as ‘detractors’) from the percentage who respond with a nine or ten (known as ‘promoters’). Customers who answer seven or eight (‘passives’) count toward the total number of responses but toward neither group. Your NPS will fall between -100 and 100, and you want a positive number, which indicates more promoters than detractors.
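The NPS calculation above fits in a few lines of Python. This is a minimal sketch using a hypothetical set of ten ratings; the function name is illustrative, not part of any survey tool's API.

```python
def net_promoter_score(scores):
    """Return NPS (-100 to 100) from 0-10 'likely to recommend' ratings."""
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # 0 through 6
    # Passives (7 or 8) count in the total but in neither group.
    return 100 * (promoters - detractors) / len(scores)


ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]  # hypothetical survey answers
print(net_promoter_score(ratings))  # 20.0
```

Here five promoters (50%) minus three detractors (30%) gives an NPS of 20.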

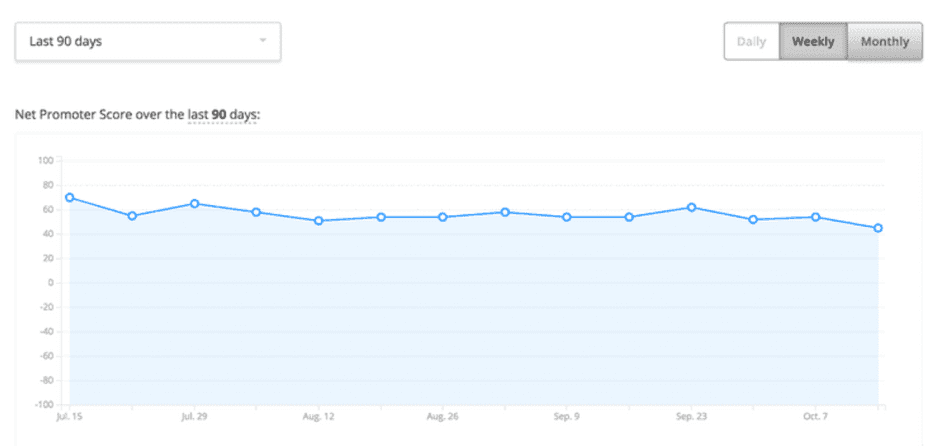

💡Pro tip: like other quantitative data analysis methods, you can review NPS scores over time as a satisfaction benchmark. You can also use it to understand which customer segment is most satisfied or which customers may be willing to share their stories for promotional materials.

Review NPS score trends with Hotjar to spot any sudden spikes and benchmark performance over time

8. Weight customer feedback

So far, the quantitative data analysis methods on this list have leveraged numeric data only. However, there are ways to turn qualitative data into quantifiable feedback and to mix and match data sources. For example, you might need to analyze user feedback from multiple surveys.

To leverage multiple data points, create a prioritization matrix that assigns ‘weight’ to customer feedback data and company priorities and then multiply them to reveal the highest-scoring option.

Let’s say you identify the top four responses to your churn survey. Rate the most common issue as a four and work down the list to one; these are your customer priorities. Then, rate the ease of fixing each problem, from a maximum of four for easy wins down to one for difficult tasks; these are your company priorities. Finally, multiply each customer priority score by the company priority score for the same issue, and lead with the highest-scoring idea.
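The prioritization matrix can be sketched in Python like this. The four churn issues and their ease-of-fix ratings are hypothetical; the function simply assigns customer priorities from four down to one in order of frequency, multiplies each by its company priority, and sorts the results.

```python
def prioritize(issues, ease_of_fix):
    """Score issues by customer priority x company priority, highest first.

    issues: list ordered from most to least common (most common scores highest).
    ease_of_fix: dict mapping each issue to 1 (hard) up to len(issues) (easy win).
    """
    n = len(issues)
    scores = {}
    for rank, issue in enumerate(issues):
        customer_priority = n - rank  # 4, 3, 2, 1 for four issues
        company_priority = ease_of_fix[issue]
        scores[issue] = customer_priority * company_priority
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical top four churn-survey answers, most common first
churn_issues = ["pricing", "missing integrations", "slow support", "confusing onboarding"]
ease = {"pricing": 1, "missing integrations": 2, "slow support": 4, "confusing onboarding": 3}

for issue, score in prioritize(churn_issues, ease):
    print(f"{score:>2}  {issue}")
```

In this made-up example, "slow support" (2 × 4 = 8) beats the most common complaint, "pricing" (4 × 1 = 4), because it is much easier to fix.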

💡Pro-tip: use a product prioritization framework to make decisions.

Try a product prioritization framework when the pressure is on to make high-impact decisions with limited time and budget. These repeatable decision-making tools take the guesswork out of balancing goals, customer priorities, and team resources. Four popular frameworks are:

RICE: weighs four factors (reach, impact, confidence, and effort) to score and compare initiatives

MoSCoW: considers stakeholder opinions on 'must-have', 'should-have', 'could-have', and 'won't-have' criteria

Kano: ranks ideas based on how likely they are to satisfy customer needs

Cost of delay analysis: determines potential revenue loss by not working on a product or initiative
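Of these frameworks, RICE is the most formula-driven: it is commonly calculated as reach × impact × confidence ÷ effort. The Python sketch below applies that formula to two hypothetical initiatives. The units and scales in the comments (customers per quarter, an impact scale from 0.25 to 3, person-months) are assumptions you would adapt to your own team.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    reach: float       # e.g. customers affected per quarter (assumed unit)
    impact: float      # e.g. 0.25 (minimal) up to 3 (massive) (assumed scale)
    confidence: float  # 0.0 to 1.0
    effort: float      # e.g. person-months; must be positive

    def rice_score(self) -> float:
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical initiatives with made-up estimates
ideas = [
    Initiative("dark mode", reach=500, impact=1, confidence=1.0, effort=1),
    Initiative("faster checkout", reach=2000, impact=2, confidence=0.8, effort=4),
]

for idea in sorted(ideas, key=Initiative.rice_score, reverse=True):
    print(f"{idea.name}: {idea.rice_score():.0f}")
# faster checkout: 800
# dark mode: 500
```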

Share what you learn with data visuals

Data visualization through charts and graphs gives you a new perspective on your results. Plus, removing the clutter of the analysis process helps you and stakeholders focus on the insight over the method.

Data visualization helps you:

Get buy-in with impactful charts that summarize your results

Increase customer empathy and awareness across your company with digestible insights

Use these four data visualization types to illustrate what you learned from your quantitative data analysis:

Bar charts reveal response distribution across multiple options

Line graphs compare data points over time

Scatter plots showcase how two variables interact

Matrices contrast data between categories like customer segments, product types, or traffic sources

Use a variety of customer feedback types to get the whole picture

Quantitative data analysis pulls the story out of raw numbers—but you shouldn’t take a single result from your data collection and run with it. Instead, combine numbers-based quantitative data with descriptive qualitative research to learn the what, why, and how of customer experiences.

Looking at an opportunity from multiple angles helps you make more customer-centric decisions with less guesswork.

Stay close to customers with Hotjar

Hotjar’s tools offer quantitative and qualitative insights you can use to make customer-centric decisions, get buy-in, and highlight your team’s impact.

Frequently asked questions about quantitative data analysis

What is quantitative data?

Quantitative data is numeric feedback and information that you can count and measure. For example, you can calculate multiple-choice response rates, but you can’t tally a customer’s open-ended product feedback response. You have to use qualitative data analysis methods for non-numeric feedback.

What are quantitative data analysis methods?

Quantitative data analysis either summarizes or finds connections between numerical data feedback. Here are eight ways to analyze your online business’s quantitative data:

Compare multiple-choice response rates

Cross-tabulate to compare responses between groups

Median

Mean (AKA average)

Measure the volume of responses over time

Net Promoter Score

Weight customer feedback

How do you visualize quantitative data?

Data visualization makes it easier to spot trends and share your analysis with stakeholders. Bar charts, line graphs, scatter plots, and matrices are ways to visualize quantitative data.

What are the two types of statistical analysis for online businesses?

Quantitative data analysis is broken down into two analysis technique types:

Descriptive statistics summarize your collected data, like the number of website visitors this month

Inferential statistics use a sample of data to draw conclusions about a wider population, like testing whether survey responses differ between customer segments


Quantitative Data – Types, Methods and Examples


Quantitative Data

Definition:

Quantitative data refers to numerical data that can be measured or counted. This type of data is often used in scientific research and is typically collected through methods such as surveys, experiments, and observational studies, then examined with statistical analysis.

Quantitative Data Types

There are two main types of quantitative data: discrete and continuous.

- Discrete data: Discrete data refers to numerical values that can only take on specific, distinct values. This type of data is typically represented as whole numbers and cannot be broken down into smaller units. Examples of discrete data include the number of students in a class, the number of cars in a parking lot, and the number of children in a family.

- Continuous data: Continuous data refers to numerical values that can take on any value within a certain range or interval. This type of data is typically represented as decimal or fractional values and can be broken down into smaller units. Examples of continuous data include measurements of height, weight, temperature, and time.

Quantitative Data Collection Methods

There are several common methods for collecting quantitative data. Some of these methods include:

- Surveys: Surveys involve asking a set of standardized questions to a large number of people. Surveys can be conducted in person, over the phone, via email or online, and can be used to collect data on a wide range of topics.

- Experiments: Experiments involve manipulating one or more variables and observing the effects on a specific outcome. Experiments can be conducted in a controlled laboratory setting or in the real world.

- Observational studies: Observational studies involve observing and collecting data on a specific phenomenon without intervening or manipulating any variables. Observational studies can be conducted in a natural setting or in a laboratory.

- Secondary data analysis: Secondary data analysis involves using existing data that was collected for a different purpose to answer a new research question. This method can be cost-effective and efficient, but it is important to ensure that the data is appropriate for the research question being studied.

- Physiological measures: Physiological measures involve collecting data on biological or physiological processes, such as heart rate, blood pressure, or brain activity.

- Computerized tracking: Computerized tracking involves collecting data automatically from electronic sources, such as social media, online purchases, or website analytics.

Quantitative Data Analysis Methods

There are several methods for analyzing quantitative data, including:

- Descriptive statistics: Descriptive statistics are used to summarize and describe the basic features of the data, such as the mean, median, mode, standard deviation, and range.

- Inferential statistics: Inferential statistics are used to make generalizations about a population based on a sample of data. These methods include hypothesis testing, confidence intervals, and regression analysis.

- Data visualization: Data visualization involves creating charts, graphs, and other visual representations of the data to help identify patterns and trends. Common types of data visualization include histograms, scatterplots, and bar charts.

- Time series analysis: Time series analysis involves analyzing data that is collected over time to identify patterns and trends in the data.

- Multivariate analysis: Multivariate analysis involves analyzing data with multiple variables to identify relationships between the variables.

- Factor analysis: Factor analysis involves identifying underlying factors or dimensions that explain the variation in the data.

- Cluster analysis: Cluster analysis involves identifying groups or clusters of observations that are similar to each other based on multiple variables.
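The difference between the first two methods can be sketched with Python's standard `statistics` module. Descriptive statistics summarize the sample you have; inferential statistics use it to estimate something about the wider population, here a 95% confidence interval for the population mean. The ratings are hypothetical, and the interval uses a normal approximation, which assumes a reasonably large random sample; with a sample this small, a t-distribution interval would be more appropriate.

```python
import statistics
from statistics import NormalDist


def describe(data):
    """Descriptive statistics: summarize the sample itself."""
    return {
        "mean": statistics.mean(data),
        "median": statistics.median(data),
        "mode": statistics.mode(data),
        "stdev": statistics.stdev(data),  # sample standard deviation
        "range": max(data) - min(data),
    }


def mean_confidence_interval(data, confidence=0.95):
    """Inferential statistics: estimate the population mean from a sample.

    Uses a normal approximation, which assumes a reasonably large random sample;
    for small samples a t-distribution interval is more appropriate.
    """
    n = len(data)
    center = statistics.mean(data)
    standard_error = statistics.stdev(data) / n ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return center - z * standard_error, center + z * standard_error


ratings = [4, 5, 5, 6, 7, 8, 9]  # hypothetical 1-10 satisfaction ratings
print(describe(ratings))
print(mean_confidence_interval(ratings))
```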

Quantitative Data Formats

Quantitative data can be represented in different formats, depending on the nature of the data and the purpose of the analysis. Here are some common formats:

- Tables: Tables are a common way to present quantitative data, particularly when the data involves multiple variables. Tables can be used to show the frequency or percentage of data in different categories or to display summary statistics.

- Charts and graphs: Charts and graphs are useful for visualizing quantitative data and can be used to highlight patterns and trends in the data. Some common types of charts and graphs include line charts, bar charts, scatterplots, and pie charts.

- Databases: Quantitative data can be stored in databases, which allow for easy sorting, filtering, and analysis of large amounts of data.

- Spreadsheets: Spreadsheets can be used to organize and analyze quantitative data, particularly when the data is relatively small in size. Spreadsheets allow for calculations and data manipulation, as well as the creation of charts and graphs.

- Statistical software: Statistical software, such as SPSS, R, and SAS, can be used to analyze quantitative data. These programs allow for more advanced statistical analyses and data modeling, as well as the creation of charts and graphs.

Quantitative Data Gathering Guide

Here is a basic guide for gathering quantitative data:

- Define the research question: The first step in gathering quantitative data is to clearly define the research question. This will help determine the type of data to be collected, the sample size, and the methods of data analysis.

- Choose the data collection method: Select the appropriate method for collecting data based on the research question and available resources. This could include surveys, experiments, observational studies, or other methods.

- Determine the sample size: Determine the appropriate sample size for the research question. This will depend on the level of precision needed and the variability of the population being studied.

- Develop the data collection instrument: Develop a questionnaire or survey instrument that will be used to collect the data. The instrument should be designed to gather the specific information needed to answer the research question.

- Pilot test the data collection instrument: Before collecting data from the entire sample, pilot test the instrument on a small group to identify any potential problems or issues.