8 case studies and real-world examples of how Big Data has helped companies stay ahead of the competition

Fast, data-informed decision-making can drive business success. Faced with high customer expectations, marketing challenges, and global competition, many organizations look to data analytics and business intelligence (BI) for a competitive advantage.

Serving personalized ads based on browsing history, giving all employees contextual access to KPI data, and centralizing data from across the business into one digital ecosystem so processes can be reviewed more thoroughly are all examples of business intelligence in action.

Organizations invest in data science because it promises to bring competitive advantages.

Data is becoming an actionable asset, and new tools are using machine learning (ML) to put it to work. As a result, organizations are on the brink of mobilizing data not only to predict the future but also to increase the likelihood of certain outcomes through prescriptive analytics.

Here are some case studies that show how BI is making a difference for companies around the world:

1) Starbucks:

With 90 million transactions a week in 25,000 stores worldwide, the coffee giant is in many ways on the cutting edge of using big data and artificial intelligence to direct its marketing, sales, and business decisions.

Through its popular loyalty card program and mobile app, Starbucks owns individual purchase data from millions of customers. Using this information and BI tools, the company predicts purchases and sends customers individual offers for items they are likely to prefer through the app and email. This system draws existing customers into its stores more often and increases sales volumes.

The same intel that helps Starbucks suggest new products to try also helps the company send personalized offers and discounts that go far beyond a special birthday discount. Additionally, a customized email goes out to any customer who hasn’t visited a Starbucks recently with enticing offers—built from that individual’s purchase history—to re-engage them.
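The offer logic described above can be sketched in a few lines. This is a minimal, hypothetical illustration and not Starbucks’ actual system. It assumes a simple purchase log of (customer, product, date) tuples, treats a customer’s most-purchased item as their likely preference, and counts anyone with no purchase in the last 30 days as lapsed. All names, data, and the 30-day window are made up for illustration.

```python
from collections import Counter
from datetime import date, timedelta

# Hypothetical purchase log: (customer_id, product, purchase_date).
PURCHASES = [
    ("c1", "latte", date(2024, 5, 1)),
    ("c1", "latte", date(2024, 5, 3)),
    ("c1", "croissant", date(2024, 5, 3)),
    ("c2", "cold brew", date(2024, 3, 10)),
    ("c2", "cold brew", date(2024, 3, 12)),
]


def favorite_product(purchases, customer_id):
    """Return the product this customer has bought most often, or None."""
    counts = Counter(product for cust, product, _ in purchases if cust == customer_id)
    return counts.most_common(1)[0][0] if counts else None


def lapsed_customers(purchases, today, max_gap_days=30):
    """Return customers whose most recent purchase is older than max_gap_days."""
    last_visit = {}
    for cust, _, day in purchases:
        last_visit[cust] = max(day, last_visit.get(cust, day))
    cutoff = today - timedelta(days=max_gap_days)
    return sorted(cust for cust, day in last_visit.items() if day < cutoff)


def reengagement_offers(purchases, today):
    """Build a re-engagement message for each lapsed customer from their own history."""
    return {
        cust: f"We miss you! Here's a discount on your next {favorite_product(purchases, cust)}."
        for cust in lapsed_customers(purchases, today)
    }
```

For example, `reengagement_offers(PURCHASES, date(2024, 5, 10))` targets only `c2`, whose last visit was in March, with an offer built around cold brew. A real system would replace the "most frequent item" rule with a predictive model.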

2) Netflix:

The online entertainment company’s roughly 150 million subscribers give it a massive BI advantage.

Netflix has digitized its interactions with its subscribers. It collects data from each user and, with the help of data analytics, understands subscribers’ behavior and viewing patterns. It then uses that information to recommend movies and TV shows tailored to each subscriber’s preferences.

According to Netflix, around 80% of viewer activity is driven by personalized algorithmic recommendations. Netflix gains an edge over its peers by collecting many different data points to build detailed subscriber profiles, which help it engage with subscribers more effectively.

This recommendation engine, which drives the bulk of what subscribers stream, has been credited with generating an estimated $1 billion a year in value for Netflix through customer retention. As a result, Netflix doesn’t have to invest as heavily in advertising and marketing its shows, because it can estimate fairly precisely how many people will be interested in watching a given show.
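To make "recommendations from viewing patterns" concrete, here is a deliberately simple item co-occurrence recommender ("people who watched X also watched Y"). Netflix’s production system is far more sophisticated. This sketch uses made-up viewing data and only illustrates the basic principle: score each unseen title by how often it is watched alongside titles the subscriber has already seen.

```python
from collections import Counter
from itertools import combinations

# Hypothetical viewing histories: subscriber -> set of titles watched.
HISTORY = {
    "ana": {"Dark", "Ozark", "Narcos"},
    "ben": {"Dark", "Ozark"},
    "cho": {"Ozark", "Narcos", "The Crown"},
    "dev": {"The Crown", "Bridgerton"},
}


def co_occurrence(history):
    """Count, for every ordered pair of titles, how many subscribers watched both."""
    pairs = Counter()
    for titles in history.values():
        for a, b in combinations(sorted(titles), 2):
            pairs[(a, b)] += 1
            pairs[(b, a)] += 1
    return pairs


def recommend(history, user, k=2):
    """Rank titles the user hasn't seen by co-occurrence with titles they have seen."""
    pairs = co_occurrence(history)
    seen = history[user]
    scores = Counter()
    for (watched, candidate), count in pairs.items():
        if watched in seen and candidate not in seen:
            scores[candidate] += count
    # Highest score first; ties broken alphabetically so results are deterministic.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [title for title, _ in ranked[:k]]
```

Here `recommend(HISTORY, "ben")` returns `["Narcos", "The Crown"]`. Narcos scores highest because two other subscribers who watched ben’s titles also watched it.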

3) Coca-Cola:

Coca-Cola is the world’s largest beverage company, with over 500 soft drink brands sold in more than 200 countries. Given the size of its operations, Coca-Cola generates a substantial amount of data across its value chain, including sourcing, production, distribution, sales, and customer feedback, which it can leverage to drive successful business decisions.

Coca-Cola has been investing extensively in research and development, especially in AI, to better leverage the mountain of data it collects from customers around the world. This initiative has helped it better understand consumer trends in price, flavors, and packaging, as well as consumers’ preference for healthier options in certain regions.

With 35 million Twitter followers and a whopping 105 million Facebook fans, Coca-Cola benefits from its social media data. Using AI-powered image-recognition technology, the company can track when photographs of its drinks are posted online. This data, paired with the power of BI, gives the company important insights into who is drinking its beverages, where they are, and why they mention the brand online. The information helps Coca-Cola serve consumers more targeted advertising, which is four times more likely than a regular ad to result in a click.

Coca-Cola is increasingly betting on BI, data analytics, and AI to drive its strategic business decisions. From its innovative Freestyle fountain machine to new ways of engaging with customers, Coca-Cola is well-equipped to stay ahead of the competition. In an increasingly dynamic digital world with changing customer behavior, Coca-Cola is relying on Big Data to gain and maintain its competitive advantage.

4) American Express GBT:

The American Express Global Business Travel company, popularly known as Amex GBT, is an American multinational travel and meetings program management company that operates in over 120 countries and has over 14,000 employees.

Challenges:

Scalability – Creating a single portal for around 945 separate data files from internal and customer systems would have taken more than six months with the existing BI tool. That tool had been built for internal use, and scaling it to such a large user population while keeping costs down was a major challenge.

Performance – The existing system could not easily be moved to the cloud, and the time and manual effort required were immense.

Data Governance – Maintaining user data security and privacy was of the utmost importance for Amex GBT.

The company wanted to protect and grow its market share by differentiating its core services, and it was seeking a resource to manage its online travel program capabilities and drive them forward. Amex GBT decided to make a strategic investment in building smart analytics around its booking software.

The solution, Premier Insights, let users view their travel ROI across three categories: cost, time, and value. Each category has its own KPIs, which are measured to evaluate the performance of a travel plan.
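The cost/time/value breakdown described above maps naturally onto a small data model. The sketch below is hypothetical. The KPI names, the targets, and the rule that a category’s score is the share of its KPIs that met target are all illustrative assumptions. They do not describe how Premier Insights actually computes travel ROI.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    COST = "cost"
    TIME = "time"
    VALUE = "value"


@dataclass
class Kpi:
    name: str
    category: Category
    actual: float
    target: float
    higher_is_better: bool

    def met(self) -> bool:
        """True if the actual value reaches the target in the desired direction."""
        if self.higher_is_better:
            return self.actual >= self.target
        return self.actual <= self.target


def scorecard(kpis):
    """Return, per category, the fraction of its KPIs that met their target."""
    result = {}
    for category in Category:
        group = [k for k in kpis if k.category is category]
        if group:  # skip categories with no KPIs to avoid dividing by zero
            result[category.value] = sum(k.met() for k in group) / len(group)
    return result


# Hypothetical KPIs for one travel program.
KPIS = [
    Kpi("average airfare (USD)", Category.COST, 420, 450, higher_is_better=False),
    Kpi("hotel spend per night (USD)", Category.COST, 180, 200, higher_is_better=False),
    Kpi("days booked in advance", Category.TIME, 10, 14, higher_is_better=True),
    Kpi("travel policy compliance rate", Category.VALUE, 0.92, 0.90, higher_is_better=True),
]
```

With these sample values, `scorecard(KPIS)` reports that all cost and value KPIs are on target and the single time KPI (booking lead time) is not. That is the kind of signal a dashboard could surface as a savings opportunity.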

Results: travel expenses reduced by 30%

Time to Value – Initially, it took a week to onboard new users onto the platform. With Premier Insights, that time was reduced to a single day, and the process became much simpler and more effective.

Savings on Spends – The product notifies users of any available booking offers that can help them reduce their expenditure. It also points out potential savings based on factors such as flight times, booking date, and travel date.

Adoption – The product’s ease of use, quick scale-up, real-time reports, and interactive dashboards increased global online adoption for Amex GBT.

5) Airline Solutions Company: BI Accelerates Business Insights

Airline Solutions provides booking tools, revenue management, web and mobile itinerary tools, and other technology for airlines, hotels, and other companies in the travel industry.

Challenge: The travel industry is remarkably dynamic and fast-paced, and the airline solution provider’s clients needed advanced tools that could provide real-time data on customer behavior and actions.

The company developed an enterprise travel data warehouse (ETDW) to hold its enormous volumes of data. Its executive dashboards provide near-real-time insights in a user-friendly environment, with a 360-degree overview of business health, reservations, operational performance, and ticketing.

Results: The scalable infrastructure, graphical user interface, data aggregation, and ability to work collaboratively have led to more revenue and increased client satisfaction.

6) A specialty US Retail Provider: Leveraging prescriptive analytics

Challenge/Objective: A specialty US Retail provider wanted to modernize its data platform so the business could make real-time decisions while also leveraging prescriptive analytics. It wanted to discover the true value of the data generated by its multiple systems and understand the patterns (both known and unknown) in its sales, operations, and omni-channel retail performance.

We helped build a modern data solution that consolidated their data in a data lake and a data warehouse, making it easier to extract value in real time. We integrated our solution with their OMS, CRM, Google Analytics, Salesforce, and inventory management system. The data was modeled so that it could be fed into machine learning algorithms, making it easy to apply ML in the future.

The customer had visibility into its data from day one, something it had wanted for some time. It was also able to build more reports, dashboards, and charts to understand and interpret the data. In some cases, it gained real-time visibility into, and analysis of, in-store purchases by geography!

7) Logistics startup with an objective to become the “Uber of the Trucking Sector” with the help of data analytics

Challenge: A startup specializing in analyzing vehicle and/or driver performance, using data from in-vehicle sensors (a.k.a. vehicle telemetry) and order patterns, wanted to become the “Uber of the Trucking Sector.”

Solution: We developed a customized backend for the client’s trucking platform that lets it monetize transporters’ empty return trips by creating a marketplace for them. The approach used a combination of AWS Data Lake, AWS microservices, machine learning, and analytics.

- Reduced fuel costs

- Optimized Reloads

- More accurate driver / truck schedule planning

- Smarter Routing

- Fewer empty return trips

- Deeper analysis of driver patterns, breaks, routes, etc.
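At its core, monetizing empty return trips is a matching problem: pair trucks heading back on a lane with loads waiting on that same lane. The sketch below is a simplified, hypothetical greedy matcher that allows one load per truck and requires an exact origin and destination match. The client’s actual platform, built on AWS and machine learning, is not described here in enough detail to reproduce, and all truck IDs, cities, and dates are invented.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ReturnTrip:
    truck_id: str
    origin: str
    destination: str
    departs: date
    capacity_tons: float


@dataclass(frozen=True)
class Load:
    load_id: str
    origin: str
    destination: str
    ready_by: date
    weight_tons: float


def match_loads(trips, loads):
    """Greedily assign loads to empty return trips on the same lane (one load per truck)."""
    assignments = {}
    used_trucks = set()
    # Place the heaviest loads first so they are not crowded out by small ones.
    for load in sorted(loads, key=lambda item: item.weight_tons, reverse=True):
        for trip in trips:
            fits = (
                trip.truck_id not in used_trucks
                and trip.origin == load.origin
                and trip.destination == load.destination
                and trip.departs >= load.ready_by  # load must be ready by departure
                and trip.capacity_tons >= load.weight_tons
            )
            if fits:
                assignments[load.load_id] = trip.truck_id
                used_trucks.add(trip.truck_id)
                break
    return assignments


# Hypothetical trucks returning empty, and loads waiting to be moved.
TRIPS = [
    ReturnTrip("T1", "Pune", "Mumbai", date(2024, 5, 2), 10),
    ReturnTrip("T2", "Pune", "Mumbai", date(2024, 5, 1), 20),
    ReturnTrip("T3", "Delhi", "Jaipur", date(2024, 5, 3), 15),
]
LOADS = [
    Load("L1", "Pune", "Mumbai", date(2024, 5, 1), 15),
    Load("L2", "Pune", "Mumbai", date(2024, 5, 2), 8),
    Load("L3", "Delhi", "Agra", date(2024, 5, 1), 5),
]
```

Here `match_loads(TRIPS, LOADS)` puts L1 on T2 and L2 on T1. L3 stays unmatched because no truck is returning on that lane. Partial-route matching and demand forecasting would be natural next steps beyond this greedy baseline.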

8) Challenge/Objective: A customer in a niche segment, competing against market behemoths, wanted to become a “Niche Segment Leader.”

Solution: We developed a customized analytics and AI platform that ingests CRM, OMS, e-commerce, and inventory data and produces both real-time and batch-driven analytics. The approach used a combination of AWS microservices, machine learning, and analytics.

- Reduced Customer Churn

- Optimized Order Fulfillment

- More accurate demand schedule planning

- Improved Product Recommendations

- Improved Last Mile Delivery

How can we help you harness the power of data?

At Systems Plus, our BI and analytics specialists help you leverage data to understand trends and derive insights by streamlining the searching, merging, and querying of data. From improving your CX and employee performance to predicting new revenue streams, our BI and analytics expertise helps you make data-driven decisions that save costs and take your growth to the next level.

How companies are using big data and analytics

Few dispute that organizations have more data than ever at their disposal. But actually deriving meaningful insights from that data—and converting knowledge into action—is easier said than done. We spoke with six senior leaders from major organizations and asked them about the challenges and opportunities involved in adopting advanced analytics: Murli Buluswar, chief science officer at AIG; Vince Campisi, chief information officer at GE Software; Ash Gupta, chief risk officer at American Express; Zoher Karu, vice president of global customer optimization and data at eBay; Victor Nilson, senior vice president of big data at AT&T; and Ruben Sigala, chief analytics officer at Caesars Entertainment. An edited transcript of their comments follows.

Interview transcript

Challenges organizations face in adopting analytics

Murli Buluswar, chief science officer, AIG: The biggest challenge of making the evolution from a knowing culture to a learning culture—from a culture that largely depends on heuristics in decision making to a culture that is much more objective and data driven and embraces the power of data and technology—is really not the cost. Initially, it largely ends up being imagination and inertia.

What I have learned in my last few years is that the power of fear is quite tremendous in evolving oneself to think and act differently today, and to ask questions today that we weren’t asking about our roles before. And it’s that mind-set change—from an expert-based mind-set to one that is much more dynamic and much more learning oriented, as opposed to a fixed mind-set—that I think is fundamental to the sustainable health of any company, large, small, or medium.

Ruben Sigala, chief analytics officer, Caesars Entertainment: What we found challenging, and what I find in my discussions with a lot of my counterparts that is still a challenge, is finding the set of tools that enable organizations to efficiently generate value through the process. I hear about individual wins in certain applications, but having a more sort of cohesive ecosystem in which this is fully integrated is something that I think we are all struggling with, in part because it’s still very early days. Although we’ve been talking about it seemingly quite a bit over the past few years, the technology is still changing; the sources are still evolving.

Zoher Karu, vice president, global customer optimization and data, eBay: One of the biggest challenges is around data privacy and what is shared versus what is not shared. And my perspective on that is consumers are willing to share if there’s value returned. One-way sharing is not going to fly anymore. So how do we protect and how do we harness that information and become a partner with our consumers rather than kind of just a vendor for them?

Capturing impact from analytics

Ruben Sigala: You have to start with the charter of the organization. You have to be very specific about the aim of the function within the organization and how it’s intended to interact with the broader business. There are some organizations that start with a fairly focused view around support on traditional functions like marketing, pricing, and other specific areas. And then there are other organizations that take a much broader view of the business. I think you have to define that element first.

That helps best inform the appropriate structure, the forums, and then ultimately it sets the more granular levels of operation such as training, recruitment, and so forth. But alignment around how you’re going to drive the business and the way you’re going to interact with the broader organization is absolutely critical. From there, everything else should fall in line. That’s how we started with our path.

Vince Campisi, chief information officer, GE Software: One of the things we’ve learned is when we start and focus on an outcome, it’s a great way to deliver value quickly and get people excited about the opportunity. And it’s taken us to places we haven’t expected to go before. So we may go after a particular outcome and try and organize a data set to accomplish that outcome. Once you do that, people start to bring other sources of data and other things that they want to connect. And it really takes you in a place where you go after a next outcome that you didn’t anticipate going after before. You have to be willing to be a little agile and fluid in how you think about things. But if you start with one outcome and deliver it, you’ll be surprised as to where it takes you next.

The need to lead in data and analytics

Ash Gupta, chief risk officer, American Express: The first change we had to make was just to make our data of higher quality. We have a lot of data, and sometimes we just weren’t using that data and we weren’t paying as much attention to its quality as we now need to. That was, one, to make sure that the data has the right lineage, that the data has the right permissible purpose to serve the customers. This, in my mind, is a journey. We made good progress and we expect to continue to make this progress across our system.

The second area is working with our people and making certain that we are centralizing some aspects of our business. We are centralizing our capabilities and we are democratizing its use. I think the other aspect is that we recognize as a team and as a company that we ourselves do not have sufficient skills, and we require collaboration across all sorts of entities outside of American Express. This collaboration comes from technology innovators, it comes from data providers, it comes from analytical companies. We need to put a full package together for our business colleagues and partners so that it’s a convincing argument that we are developing things together, that we are colearning, and that we are building on top of each other.

Examples of impact

Victor Nilson, senior vice president, big data, AT&T: We always start with the customer experience. That’s what matters most. In our customer care centers now, we have a large number of very complex products. Even the simple products sometimes have very complex potential problems or solutions, so the workflow is very complex. So how do we simplify the process for both the customer-care agent and the customer at the same time, whenever there’s an interaction?

We’ve used big data techniques to analyze all the different permutations to augment that experience to more quickly resolve or enhance a particular situation. We take the complexity out and turn it into something simple and actionable. Simultaneously, we can then analyze that data and then go back and say, “Are we optimizing the network proactively in this particular case?” So, we take the optimization not only for the customer care but also for the network, and then tie that together as well.

Vince Campisi: I’ll give you one internal perspective and one external perspective. One is we are doing a lot in what we call enabling a digital thread: how you can connect innovation through engineering, manufacturing, and all the way out to servicing a product. [For more on the company’s “digital thread” approach, see “GE’s Jeff Immelt on digitizing in the industrial space.”] And, within that, we’ve got a focus around brilliant factory. So, take driving supply-chain optimization as an example. We’ve been able to take over 60 different silos of information related to direct-material purchasing, leverage analytics to look at new relationships, and use machine learning to identify tremendous amounts of efficiency in how we procure direct materials that go into our product.

An external example is how we leverage analytics to really make assets perform better. We call it asset performance management. And we’re starting to enable digital industries, like a digital wind farm, where you can leverage analytics to help the machines optimize themselves. So you can help a power-generating provider who uses the same wind that’s come through and, by having the turbines pitch themselves properly and understand how they can optimize that level of wind, we’ve demonstrated the ability to produce up to 10 percent more production of energy off the same amount of wind. It’s an example of using analytics to help a customer generate more yield and more productivity out of their existing capital investment.

Winning the talent war

Ruben Sigala: Competition for analytical talent is extreme. And preserving and maintaining a base of talent within an organization is difficult, particularly if you view this as a core competency. What we’ve focused on mostly is developing a platform that speaks to what we think is a value proposition that is important to the individuals who are looking to begin a career or to sustain a career within this field.

When we talk about the value proposition, we use terms like having an opportunity to truly affect the outcomes of the business, to have a wide range of analytical exercises that you’ll be challenged with on a regular basis. But, by and large, to be part of an organization that views this as a critical part of how it competes in the marketplace—and then to execute against that regularly. In part, and to do that well, you have to have good training programs, you have to have very specific forms of interaction with the senior team. And you also have to be a part of the organization that actually drives the strategy for the company.

Murli Buluswar: I have found that focusing on the fundamentals of why science was created, what our aspirations are, and how being part of this team will shape the professional evolution of the team members has been pretty profound in attracting the caliber of talent that we care about. And then, of course, comes the even harder part of living that promise on a day-in, day-out basis.

Yes, money is important. My philosophy on money is I want to be in the 75th percentile range; I don’t want to be in the 99th percentile. Because no matter where you are, most people—especially people in the data-science function—have the ability to get a 20 to 30 percent increase in their compensation, should they choose to make a move. My intent is not to try and reduce that gap. My intent is to create an environment and a culture where they see that they’re learning; they see that they’re working on problems that have a broader impact on the company, on the industry, and, through that, on society; and they’re part of a vibrant team that is inspired by why it exists and how it defines success. Focusing on that, to me, is an absolutely critical enabler to attracting the caliber of talent that I need and, for that matter, anyone else would need.

Developing the right expertise

Victor Nilson: Talent is everything, right? You have to have the data, and, clearly, AT&T has a rich wealth of data. But without talent, it’s meaningless. Talent is the differentiator. The right talent will go find the right technologies; the right talent will go solve the problems out there.

We’ve helped contribute in part to the development of many of the new technologies that are emerging in the open-source community. We have the legacy advanced techniques from the labs, we have the emerging Silicon Valley. But we also have mainstream talent across the country, where we have very advanced engineers, we have managers of all levels, and we want to develop their talent even further.

So we’ve delivered over 50,000 big data related training courses just this year alone. And we’re continuing to move forward on that. It’s a whole continuum. It might be just a one-week boot camp, or it might be advanced, PhD-level data science. But we want to continue to develop that talent for those who have the aptitude and interest in it. We want to make sure that they can develop their skills and then tie that together with the tools to maximize their productivity.

Zoher Karu: Talent is critical along any data and analytics journey. And analytics talent by itself is no longer sufficient, in my opinion. We cannot have people with singular skills. And the way I build out my organization is I look for people with a major and a minor. You can major in analytics, but you can minor in marketing strategy. Because if you don’t have a minor, how are you going to communicate with other parts of the organization? Otherwise, the pure data scientist will not be able to talk to the database administrator, who will not be able to talk to the market-research person, who will not be able to talk to the email-channel owner, for example. You need to make sound business decisions, based on analytics, that can scale.

Datamation content and product recommendations are editorially independent. We may make money when you click on links to our partners.

A growing number of enterprises are pooling terabytes and petabytes of data, but many of them are grappling with ways to apply their big data as it grows.

How can companies determine what big data solutions will work best for their industry, business model, and specific data science goals?

Check out these big data enterprise case studies from some of the top big data companies and their clients to learn about the types of solutions that exist for big data management.

Enterprise case studies

- Netflix on AWS

- AccuWeather on Microsoft Azure

- China Eastern Airlines on Oracle Cloud

- Etsy on Google Cloud

- mLogica on SAP HANA Cloud

Netflix on AWS

Netflix is one of the largest media and technology enterprises in the world, with thousands of shows that it hosts for streaming as well as a growing media production division. Netflix stores billions of data sets in its systems related to audiovisual data, consumer metrics, and recommendation engines. The company required a solution that would allow it to store, manage, and optimize viewers’ data. As its studio has grown, Netflix also needed a platform that would enable quicker and more efficient collaboration on projects.

“Amazon Kinesis Streams processes multiple terabytes of log data each day. Yet, events show up in our analytics in seconds,” says John Bennett, senior software engineer at Netflix.

“We can discover and respond to issues in real-time, ensuring high availability and a great customer experience.”

Industries: Entertainment, media streaming

Use cases: Computing power, storage scaling, database and analytics management, recommendation engines powered through AI/ML, video transcoding, cloud collaboration space for production, traffic flow processing, scaled email and communication capabilities

- Now using over 100,000 server instances on AWS for different operational functions

- Used AWS to build a studio in the cloud for content production that improves collaborative capabilities

- Produced entire seasons of shows via the cloud during COVID-19 lockdowns

- Scaled and optimized mass email capabilities with Amazon Simple Email Service (Amazon SES)

- Netflix’s Amazon Kinesis Streams-based solution now processes billions of traffic flows daily

Read the full Netflix on AWS case study.

AccuWeather on Microsoft Azure

AccuWeather is one of the oldest and most trusted providers of weather forecast data. The company provides an API that other companies can use to embed AccuWeather’s weather content into their own systems. AccuWeather wanted to move its data processes to the cloud. However, GRIB 2, the traditional data format for weather data, is not supported by most data management platforms. With Microsoft Azure, Azure Data Lake Storage, and Azure Databricks (AI), AccuWeather found a solution that converts the GRIB 2 data, analyzes it in more depth than before, and stores it in a scalable way.

“With some types of severe weather forecasts, it can be a life-or-death scenario,” says Christopher Patti, CTO at AccuWeather.

“With Azure, we’re agile enough to process and deliver severe weather warnings rapidly and offer customers more time to respond, which is important when seconds count and lives are on the line.”

Industries: Media, weather forecasting, professional services

Use cases: Making legacy and traditional data formats usable for AI-powered analysis, API migration to Azure, data lakes for storage, more precise reporting and scaling

- GRIB 2 weather data made operational for AI-powered next-generation forecasting engine, via Azure Databricks

- Delta Lake storage layer helps create data pipelines and improves accessibility

- Improved speed, accuracy, and localization of forecasts via machine learning

- Real-time measurement of API key usage and performance

- Ability to extract weather-related data from smart-city systems and self-driving vehicles

Read the full AccuWeather on Microsoft Azure case study.

China Eastern Airlines on Oracle Cloud

China Eastern Airlines is one of the world’s largest airlines, and it is working to improve safety, efficiency, and overall customer experience through big data analytics. With Oracle’s cloud setup and a large portfolio of analytics tools, it now has access to more in-flight, aircraft, and customer metrics.

“By processing and analyzing over 100 TB of complex daily flight data with Oracle Big Data Appliance, we gained the ability to easily identify and predict potential faults and enhanced flight safety,” says Wang Xuewu, head of China Eastern Airlines’ data lab.

“The solution also helped to cut fuel consumption and increase customer experience.”

Industries: Airline, travel, transportation

Use cases: Increased flight safety and fuel efficiency, reduced operational costs, big data analytics

- Optimized big data analysis to analyze flight angle, take-off speed, and landing speed, maximizing predictive analytics for engine and flight safety

- Multi-dimensional analysis on over 60 attributes provides advanced metrics and recommendations to improve aircraft fuel use

- Advanced spatial analytics on the travelers’ experience, with metrics covering in-flight cabin service, baggage, ground service, marketing, flight operation, website, and call center

- Using Oracle Big Data Appliance to integrate Hadoop data from aircraft sensors, unifying and simplifying the process for evaluating device health across an aircraft

- Central interface for daily management of real-time flight data
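Flagging potential faults in flight data can start with something as simple as spotting readings that deviate sharply from the norm. The sketch below applies a basic z-score outlier test to hypothetical landing-speed readings. It is a stand-in technique chosen for illustration, not the predictive method China Eastern Airlines or Oracle used, and the data and the 2.0 threshold are assumptions.

```python
from statistics import mean, stdev


def flag_outliers(readings, threshold=2.0):
    """Return indices of readings more than `threshold` sample standard deviations from the mean."""
    if len(readings) < 2:
        return []  # stdev needs at least two data points
    mu = mean(readings)
    sigma = stdev(readings)
    if sigma == 0:
        return []  # all readings identical: nothing stands out
    return [i for i, x in enumerate(readings) if abs(x - mu) / sigma > threshold]


# Hypothetical landing speeds (knots) for ten landings of one aircraft.
LANDING_SPEEDS_KT = [138, 140, 137, 141, 139, 138, 140, 165, 139, 138]
```

Here `flag_outliers(LANDING_SPEEDS_KT)` flags only the 165-knot landing at index 7. A single z-score test is fragile because one extreme value also inflates the standard deviation. Fault prediction at the scale described above would combine many attributes and models.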

Read the full China Eastern Airlines on Oracle Cloud case study.

Etsy on Google Cloud

Etsy is an e-commerce site for independent artisan sellers. With its goal of creating a buying and selling space that puts the individual first, Etsy wanted to move its platform to the cloud to keep up with needed innovations, without losing the personal touches and values that drew customers in the first place. Etsy chose Google for cloud migration and big data management for three main reasons: Google’s advanced features supporting scalability, its commitment to sustainability, and the collaborative spirit of the Google team.

Mike Fisher, CTO at Etsy, explains how Google’s problem-solving approach won them over.

“We found that Google would come into meetings, pull their chairs up, meet us halfway, and say, ‘We don’t do that, but let’s figure out a way that we can do that for you.'”

Industries: Retail, E-commerce

Use cases: Data center migration to the cloud, accessing collaboration tools, leveraging machine learning (ML) and artificial intelligence (AI), sustainability efforts

- 5.5 petabytes of data migrated from existing data center to Google Cloud

- >50% savings in compute energy, minimizing total carbon footprint and energy usage

- 42% reduced compute costs and improved cost predictability through virtual machine (VM), solid state drive (SSD), and storage optimizations

- Democratization of cost data for Etsy engineers

- 15% of Etsy engineers moved from system infrastructure management to customer experience, search, and recommendation optimization


mLogica is a technology and product consulting firm that wanted to move to the cloud to better support its customers’ big data storage and analytics needs. Although it held on to its existing data analytics platform, CAP*M, mLogica relied on SAP HANA Cloud to move from on-premises infrastructure to a more scalable cloud structure.

“More and more of our clients are moving to the cloud, and our solutions need to keep pace with this trend,” says Michael Kane, VP of strategic alliances and marketing at mLogica. “With CAP*M on SAP HANA Cloud, we can future-proof clients’ data setups.”

Industry: Professional services

Use cases: Manage growing pools of data from multiple client accounts, improve slow upload speeds for customers, move to the cloud to avoid maintenance of on-premises infrastructure, integrate the company’s existing big data analytics platform into the cloud

- SAP HANA Cloud launched as the cloud platform for CAP*M, mLogica’s big data analytics tool, to improve scalability

- Data analysis now enabled on a petabyte scale

- Simplified database administration and eliminated additional hardware and maintenance needs

- Increased control over total cost of ownership

- Migrated existing customer data setups through SAP IQ into SAP HANA, without having to adjust those setups for a successful migration



A new initiative at UPS will use real-time data, advanced analytics and artificial intelligence to help employees make better decisions.

As chief information and engineering officer for logistics giant UPS, Juan Perez is placing analytics and insight at the heart of business operations.


"Big data at UPS takes many forms because of all the types of information we collect," he says. "We're excited about the opportunity of using big data to solve practical business problems. We've already had some good experience of using data and analytics and we're very keen to do more."

Perez says UPS is using technology to improve its flexibility, capability, and efficiency, and that the right insight at the right time helps line-of-business managers to improve performance.

The aim for UPS, says Perez, is to use the data it collects to optimise processes, to enable automation and autonomy, and to continue to learn how to improve its global delivery network.

Leading data-fed projects that change the business for the better

Perez says one of his firm's key initiatives, known as Network Planning Tools, will help UPS to optimise its logistics network through the effective use of data. The system will use real-time data, advanced analytics and artificial intelligence to help employees make better decisions. The company expects to begin rolling out the initiative from the first quarter of 2018.

"That will help all our business units to make smart use of our assets and it's just one key project that's being supported in the organisation as part of the smart logistics network," says Perez, who also points to related and continuing developments in Orion (On-road Integrated Optimization and Navigation), which is the firm's fleet management system.

Orion uses telematics and advanced algorithms to create optimal routes for delivery drivers. The IT team is currently working on the third version of the technology, and Perez says this latest update to Orion will provide two key benefits to UPS.

First, the technology will include higher levels of route optimisation, which will be sent as navigation advice to delivery drivers. "That will help to boost efficiency," says Perez.

Second, Orion will use big data to optimise delivery routes dynamically.

"Today, Orion creates delivery routes before drivers leave the facility and they stay with that static route throughout the day," he says. "In the future, our system will continually look at the work that's been completed, and that still needs to be completed, and will then dynamically optimise the route as drivers complete their deliveries. That approach will ensure we meet our service commitments and reduce overall delivery miles."
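UPS has not published how Orion computes its routes, so the following is only an illustration of the idea Perez describes: re-planning the remaining stops each time a delivery is completed. It is a minimal Python sketch that swaps in a simple greedy nearest-neighbour heuristic and straight-line distances between hypothetical (x, y) stop coordinates. The function names `reoptimize_route` and `route_length` are illustrative; a production system would use road-network travel times and far stronger optimisation.

```python
import math

Point = tuple[float, float]


def distance(a: Point, b: Point) -> float:
    """Straight-line distance; a real router would use road travel times."""
    return math.dist(a, b)


def reoptimize_route(current: Point, remaining: list[Point]) -> list[Point]:
    """Order the undelivered stops with a greedy nearest-neighbour heuristic.

    Re-running this after each completed delivery means the plan adapts
    to the work still outstanding instead of staying fixed all day.
    """
    pending = list(remaining)  # copy so the caller's list is not modified
    route: list[Point] = []
    position = current
    while pending:
        nearest = min(pending, key=lambda stop: distance(position, stop))
        route.append(nearest)
        pending.remove(nearest)
        position = nearest
    return route


def route_length(start: Point, route: list[Point]) -> float:
    """Total distance driven when visiting `route` in order from `start`."""
    total, position = 0.0, start
    for stop in route:
        total += distance(position, stop)
        position = stop
    return total


if __name__ == "__main__":
    stops = [(5.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
    plan = reoptimize_route((0.0, 0.0), stops)
    print(plan, route_length((0.0, 0.0), plan))
```

Calling `reoptimize_route` again with the driver's current position and the stops still outstanding yields an updated order, which is the sense in which the route becomes dynamic rather than static.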

Once Orion is fully operational for more than 55,000 drivers this year, it will lead to a reduction of about 100 million delivery miles -- and 100,000 metric tons of carbon emissions. Perez says these reductions represent a key measure of business efficiency and effectiveness, particularly in terms of sustainability.

Projects such as Orion and Network Planning Tools form part of a collective of initiatives that UPS is using to improve decision making across the package delivery network. The firm, for example, recently launched the third iteration of its chatbot that uses artificial intelligence to help customers find rates and tracking information across a series of platforms, including Facebook and Amazon Echo.

"That project will continue to evolve, as will all our innovations across the smart logistics network," says Perez. "Everything runs well today but we also recognise there are opportunities for continuous improvement."

Overcoming business challenges to make the most of big data

"Big data is all about the business case -- how effective are we as an IT team in defining a good business case, which includes how to improve our service to our customers, what is the return on investment and how will the use of data improve other aspects of the business," says Perez.

These alternative use cases are not always at the forefront of executive thinking. Consultancy McKinsey says too many organisations drill down on a single data set in isolation and fail to consider what different data sets mean for other parts of the business.

However, Perez says the re-use of information can have a significant impact at UPS. Perez talks, for example, about using delivery data to help understand what types of distribution solutions work better in different geographical locations.

"Should we have more access points? Should we introduce lockers? Should we allow drivers to release shipments without signatures? Data, technology, and analytics will improve our ability to answer those questions in individual locations -- and those benefits can come from using the information we collect from our customers in a different way," says Perez.

Perez says this fresh, open approach creates new opportunities for other data-savvy CIOs. "The conversation in the past used to be about buying technology, creating a data repository and discovering information," he says. "Now the conversation is changing and it's exciting. Every time we talk about a new project, the start of the conversation includes data."

By way of an example, Perez says senior individuals across the organisation now talk as a matter of course about the potential use of data in their line-of-business and how that application of insight might be related to other models across the organisation.

These senior executives, he says, also ask about the availability of information and whether the existence of data in other parts of the business will allow the firm to avoid a duplication of effort.

"The conversation about data is now much more active," says Perez. "That higher level of collaboration provides benefits for everyone because the awareness across the organisation means we'll have better repositories, less duplication and much more effective data models for new business cases in the future."


26 Big Data Use Cases and Examples for Business

Importance of Big Data


Big Data is a term that refers to the large volume of data, both structured and unstructured, that inundates a business on a day-to-day basis. The use of Big Data has become a vital component of business strategy as organizations seek to harness the enormous amount of data generated by various sources. Big Data has become essential for businesses to gain a competitive advantage, make better-informed decisions, and create new products and services.

Big Data has become more essential in today's world than ever due to the explosion of data from various sources, including social media, sensors, and devices. The ability to collect and analyze large volumes of data has become a critical factor in decision-making, innovation, and growth. Big Data helps businesses to:

- Understand customer needs and preferences

- Improve operational efficiency

- Reduce costs

- Identify new market opportunities

- Manage risks

- Create new revenue streams

Big Data use cases provide several benefits for organizations. These include:

- Improved decision-making: Big Data provides businesses with insights to help them make better decisions.

- Enhanced customer experiences: Big Data helps organizations to understand customer needs and preferences, enabling them to offer more personalized experiences.

- Improved operational efficiency: Big Data helps organizations to streamline their operations, optimize supply chains, and reduce costs.

- Increased revenue: Big Data enables organizations to identify new market opportunities, create new products and services, and drive revenue growth.

Businesses are among the most prominent users of Big Data. There are several ways in which organizations use Big Data to achieve their business objectives. Some of the most common Big Data use cases in business include:

Big Data helps organizations to analyze customer behavior, preferences, and purchasing patterns. This enables businesses to offer personalized marketing and sales campaigns that increase customer engagement and loyalty. With Big Data, companies can:

- Identify high-value customers

- Improve customer retention

- Increase sales

- Enhance customer experiences

Big Data is an essential tool for detecting and preventing fraud. Businesses can detect patterns and anomalies that indicate fraudulent activities by analyzing large volumes of data. Big Data helps organizations to:

- Identify fraudulent transactions

- Monitor suspicious activities

- Prevent financial losses

- Protect brand reputation
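As a minimal illustration of anomaly-based fraud detection, the Python sketch below flags a new transaction whose amount lies more than a chosen number of standard deviations from a customer's past amounts (a z-score test). The function name, the default threshold of 3, and the use of a single amount feature are assumptions for illustration; real fraud systems combine many more signals, such as location, device, merchant, and timing.

```python
from statistics import mean, stdev


def flag_anomalies(history: list[float], new_amounts: list[float],
                   threshold: float = 3.0) -> list[float]:
    """Return new amounts lying more than `threshold` standard deviations
    from the mean of a customer's past transaction amounts (a z-score test)."""
    if len(history) < 2:
        return []  # too little history to estimate the typical spread
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # Every past amount was identical: treat any different amount as unusual.
        return [amount for amount in new_amounts if amount != mu]
    return [amount for amount in new_amounts
            if abs(amount - mu) / sigma > threshold]


if __name__ == "__main__":
    past = [20.0, 25.0, 22.0, 18.0, 24.0, 21.0]
    print(flag_anomalies(past, [23.0, 480.0]))
```

With the sample history above, an amount of 23.0 is treated as normal while 480.0 is flagged for review.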

Big Data helps organizations to optimize their supply chain operations. By analyzing data from various sources, including sensors and logistics systems, businesses can streamline their supply chain processes and reduce costs. With Big Data, businesses can:

- Optimize inventory management

- Improve delivery times

- Reduce transportation costs

- Improve supplier performance

Big Data enables organizations to predict equipment failures before they occur, reducing downtime and maintenance costs. Businesses can identify patterns that indicate potential equipment failures by analyzing sensor data and other sources. With Big Data, companies can:

- Reduce maintenance costs

- Improve equipment uptime

- Increase operational efficiency

- Enhance safety and compliance
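A simple way to turn sensor data into an early warning is to watch a rolling average rather than individual readings, so that a single spike is ignored but a sustained upward drift raises an alert. The Python sketch below assumes a hypothetical stream of vibration readings, a window size, and an alert limit; it illustrates the idea only and is not a complete predictive maintenance model.

```python
from collections import deque


def rolling_mean_alerts(readings: list[float], window: int,
                        limit: float) -> list[int]:
    """Indices at which the mean of the last `window` readings exceeds `limit`.

    Averaging over a window damps one-off spikes, so alerts reflect a
    sustained drift of the kind that can precede equipment failure.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    recent: deque = deque(maxlen=window)
    running_sum = 0.0
    alerts: list[int] = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            running_sum -= recent[0]  # oldest reading, evicted by the append below
        recent.append(value)
        running_sum += value
        if len(recent) == window and running_sum / window > limit:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    vibration = [1.0, 1.1, 0.9, 3.5, 1.0, 1.2, 2.4, 2.6, 2.8]
    print(rolling_mean_alerts(vibration, window=3, limit=2.0))
```

With `window=3` and `limit=2.0`, these readings produce alerts at indices 7 and 8: the isolated spike at index 3 is averaged away, while the steady climb at the end is caught.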

Big Data helps organizations to improve their operational efficiency by providing insights into their business processes. By analyzing data from various sources, businesses can identify areas of inefficiency and optimize their operations. With Big Data, businesses can:

- Reduce waste

- Increase productivity

- Improve quality

Big Data helps organizations to manage and mitigate risks by providing insights into potential risks and their impact on the business. By analyzing data from various sources, including financial and operational data, businesses can identify potential risks and take proactive measures to mitigate them. With Big Data, businesses can:

- Reduce financial losses

- Enhance business continuity

- Improve regulatory compliance


The healthcare industry has also embraced Big Data to improve patient outcomes, enhance clinical decision-making, and drive operational efficiency. Some of the most common Big Data use cases in healthcare include:

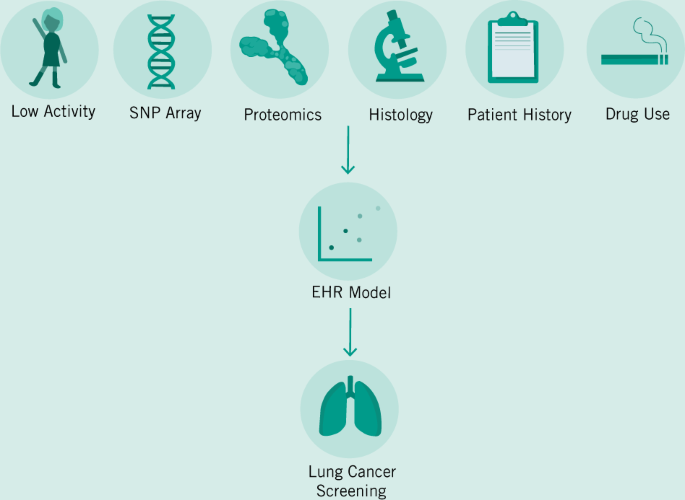

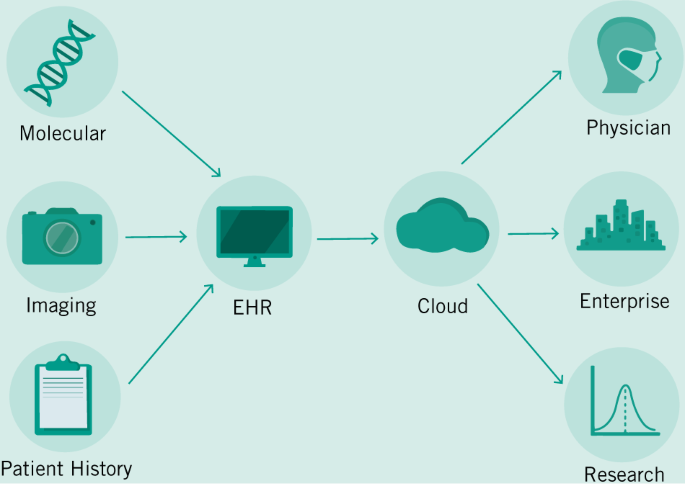

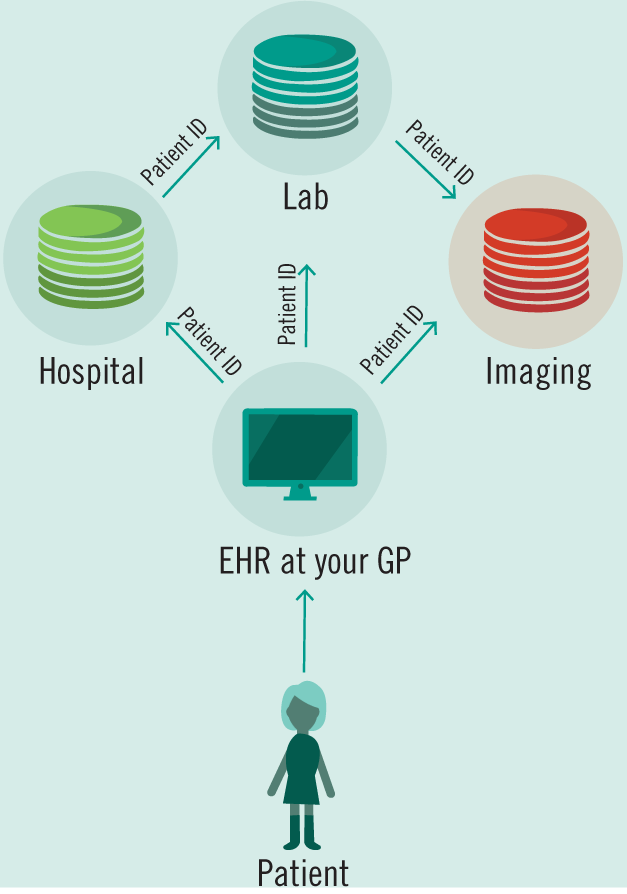

Big Data helps healthcare providers to manage and analyze electronic health records, providing insights that can improve patient care and outcomes. With Big Data, healthcare providers can:

- Monitor patient health status

- Identify potential health risks

- Improve disease management

- Enhance clinical decision-making

Big Data helps healthcare providers to make more informed clinical decisions by providing insights into patient health status, treatment effectiveness, and outcomes. With Big Data, healthcare providers can:

- Improve diagnosis accuracy

- Personalize treatment plans

- Enhance patient outcomes

- Reduce healthcare costs

Big Data helps public health officials to monitor and track disease outbreaks, enabling them to take proactive measures to prevent the spread of diseases. With Big Data, public health officials can:

- Monitor disease incidence and prevalence

- Predict disease outbreaks

- Plan and implement disease prevention and control strategies

- Improve public health outcomes

Big Data helps healthcare providers to develop personalized treatment plans based on patient characteristics, including genetic makeup, lifestyle, and health status. With Big Data, healthcare providers can:

- Improve treatment effectiveness

- Reduce side effects

- Personalize healthcare delivery

Big Data helps pharmaceutical companies to identify new drug targets, develop more effective drugs, and reduce drug development costs. With Big Data, pharmaceutical companies can:

- Identify disease biomarkers

- Improve drug safety and efficacy

- Optimize clinical trial design

- Reduce drug development time and costs

The finance industry has adopted Big Data to improve risk management, reduce fraud, and enhance customer experiences. Some of the most common Big Data use cases in finance include:

Big Data helps financial institutions to detect and prevent fraud by analyzing transaction data and identifying patterns that indicate fraudulent activities. With Big Data, financial institutions can:

- Monitor transaction activities

- Identify suspicious transactions

Big Data helps financial institutions to analyze customer credit risk and make informed lending decisions. With Big Data, financial institutions can:

- Evaluate creditworthiness

- Identify potential credit risks

- Optimize credit risk management

- Reduce loan defaults
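Credit scoring is often framed as estimating a probability of default from applicant features. The Python sketch below uses a logistic function over a weighted sum of features. The feature names, coefficients, intercept, and the 0.2 review cutoff are hypothetical values chosen for illustration; a lender would fit such weights on its own historical loan data and validate them before use.

```python
import math

# Hypothetical coefficients for illustration only. A lender would estimate
# these from its own historical loan outcomes (e.g. with logistic regression).
WEIGHTS = {"debt_to_income": 4.0, "late_payments": 0.8, "years_of_history": -0.15}
INTERCEPT = -3.0


def default_probability(applicant: dict[str, float]) -> float:
    """Logistic model: map a weighted sum of features to a probability in (0, 1)."""
    score = INTERCEPT + sum(weight * applicant[name]
                            for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-score))


def decide(applicant: dict[str, float], cutoff: float = 0.2) -> str:
    """Approve low-risk applicants; send the rest for manual review."""
    return "approve" if default_probability(applicant) < cutoff else "review"


if __name__ == "__main__":
    low_risk = {"debt_to_income": 0.2, "late_payments": 0, "years_of_history": 10}
    high_risk = {"debt_to_income": 0.6, "late_payments": 3, "years_of_history": 1}
    for applicant in (low_risk, high_risk):
        print(round(default_probability(applicant), 3), decide(applicant))
```

Under these made-up weights, the low-risk applicant gets a default probability of about 0.02 and is approved, while the high-risk applicant scores about 0.84 and is routed to review.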

Big Data helps financial institutions to analyze market trends and identify profitable investment opportunities. With Big Data, financial institutions can:

- Analyze market trends

- Identify profitable investment opportunities

- Optimize trading and portfolio management

- Improve investment returns

Big Data also helps financial institutions analyze customer behavior and preferences, enabling them to offer personalized marketing and sales campaigns that increase customer engagement and loyalty.

Big Data helps financial institutions to comply with regulatory requirements by providing insights into compliance risks and potential violations. With Big Data, financial institutions can:

- Monitor regulatory compliance

- Identify potential compliance risks

- Improve regulatory reporting

- Reduce regulatory penalties

Government agencies have also adopted Big Data to improve public services, enhance public safety, and drive economic growth. Some of the most common Big Data use cases in government include:

Big Data helps law enforcement agencies to prevent and solve crimes by analyzing crime data and identifying patterns that indicate criminal activities. With Big Data, law enforcement agencies can:

- Monitor criminal activities

- Identify potential criminal activities

- Predict criminal behavior

- Improve public safety outcomes

Big Data helps government agencies to monitor and manage the environment, enabling them to identify potential environmental risks and take proactive measures to mitigate them. With Big Data, government agencies can:

- Monitor air and water quality

- Identify potential environmental risks

- Plan and implement environmental mitigation strategies

- Enhance public health and environmental outcomes

Big Data helps government agencies to respond to and recover from disasters by providing real-time data and analytics that enable them to make informed decisions. With Big Data, government agencies can:

- Monitor disaster events

- Assess damage and impact

- Plan and implement disaster response and recovery strategies

- Enhance public safety and disaster recovery outcomes

Big Data helps government agencies to manage and optimize social programs, enabling them to improve service delivery and outcomes for citizens. With Big Data, government agencies can:

- Monitor program effectiveness

- Identify potential program improvements

- Optimize program management

- Enhance program outcomes and citizen satisfaction

Big Data helps government agencies manage transportation and traffic systems, improving safety, reducing congestion, and optimizing transportation infrastructure. With Big Data, government agencies can:

- Monitor traffic flows

- Optimize transportation infrastructure

- Improve safety and reduce congestion

- Enhance transportation outcomes and citizen satisfaction


Scientists and researchers have also adopted Big Data to advance knowledge and discovery in various fields. Some of the most common Big Data use cases in science and research include:

Big Data helps astronomers and cosmologists to analyze large volumes of data from telescopes and other sources, enabling them to gain insights into the universe's origin and evolution. With Big Data, astronomers and cosmologists can:

- Identify new celestial objects

- Understand the structure and evolution of the universe

- Test cosmological theories

- Discover new phenomena

Big Data helps geneticists and bioinformaticians analyze large volumes of genetic data, enabling them to identify genetic variations contributing to diseases and develop personalized treatment plans. With Big Data, geneticists and bioinformaticians can:

- Analyze genetic data

- Identify genetic variations that contribute to diseases

- Develop personalized treatment plans

- Improve patient outcomes

Big Data helps climate scientists to monitor and analyze large volumes of climate data, enabling them to understand climate patterns, predict weather events, and develop mitigation strategies. With Big Data, climate scientists can:

- Monitor climate data

- Analyze climate patterns

- Predict weather events

- Develop climate mitigation strategies

Big Data helps particle physicists to analyze large volumes of data from particle accelerators and other sources, enabling them to gain insights into the fundamental nature of matter and the universe. With Big Data, particle physicists can:

- Analyze particle accelerator data

- Test fundamental physics theories

- Discover new particles and phenomena

- Understand the fundamental nature of matter and the universe

Big Data helps social scientists and humanists analyze large volumes of data from various sources, including social media and archives, to gain insights into human behavior, culture, and history. With Big Data, social scientists and humanists can:

- Analyze social media data

- Study cultural trends and patterns

- Understand historical events and processes

- Develop new theories and insights

Despite the numerous benefits of Big Data use cases, several challenges and limitations exist. These include:

- Data Quality and Reliability: Big Data is only useful if the data is accurate, complete, and reliable. Poor data quality can lead to erroneous insights and decisions.

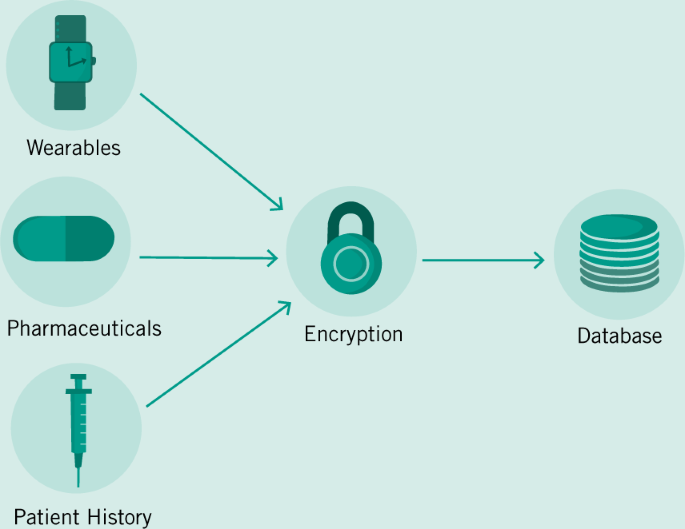

- Privacy and Security: Big Data contains sensitive information, and its analysis and storage raise privacy and security concerns. Organizations must implement robust security measures to protect against data breaches and unauthorized access.

- Technical Infrastructure and Expertise: Big Data analysis requires specialized technical infrastructure, tools, and expertise. Organizations must invest in the proper hardware, software, and personnel to ensure successful Big Data analysis.

- Data Integration and Interoperability: Big Data comes from various sources and formats, making data integration and interoperability a significant challenge. Organizations must develop robust data integration and management strategies to ensure data consistency and usability.

- Ethical and Social Issues: Big Data analysis raises ethical and social issues, including privacy, fairness, bias, and transparency. Organizations must ensure that their Big Data analysis practices align with moral and social standards.
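A practical first step toward the data quality concern above is to measure it. The Python sketch below counts records that are missing required fields and records that exactly duplicate an earlier one. It assumes flat records (dictionaries with hashable values such as strings and numbers); the function name and report keys are illustrative, not part of any particular tool.

```python
def quality_report(records: list[dict], required: list[str]) -> dict[str, int]:
    """Count records missing a required field and exact duplicate records.

    Assumes flat records whose values are hashable (str, int, float, None).
    """
    missing = 0
    duplicates = 0
    seen: set[tuple] = set()
    for record in records:
        # A field counts as missing if it is absent, None, or an empty string.
        if any(record.get(field) in (None, "") for field in required):
            missing += 1
        # Sorting the items gives the same fingerprint regardless of key order.
        fingerprint = tuple(sorted(record.items()))
        if fingerprint in seen:
            duplicates += 1
        else:
            seen.add(fingerprint)
    return {"total": len(records), "missing_required": missing,
            "duplicates": duplicates}


if __name__ == "__main__":
    rows = [
        {"id": 1, "email": "a@example.com"},
        {"id": 2, "email": ""},
        {"id": 1, "email": "a@example.com"},
    ]
    print(quality_report(rows, required=["id", "email"]))
```

For the three sample rows, the report is `{"total": 3, "missing_required": 1, "duplicates": 1}`; tracking such counts over time shows whether data quality is improving.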

Organizations must follow best practices to realize the full benefits of Big Data use cases. These include:

- Define Clear Objectives and Goals: Organizations must define clear objectives and goals for their Big Data use cases, ensuring they align with their overall business strategy.

- Develop a Comprehensive Data Strategy: Organizations must develop a comprehensive data strategy that outlines data collection, storage, analysis, and governance processes.

- Choose the Right Tools and Technologies: Organizations must choose the right tools and technologies for their Big Data use cases, ensuring they meet their data processing and analysis needs.

- Ensure Data Quality and Governance: Organizations must ensure data quality and governance, ensuring that their data is accurate, complete, and reliable and that their data practices align with regulatory and ethical standards.

- Foster a Culture of Data-Driven Decision-Making: Organizations must foster a culture of data-driven decision-making, ensuring that decision-makers have the necessary data and analysis to make informed decisions.

Big Data is a powerful tool that provides organizations with insights and opportunities to improve their operations, drive innovation, and enhance customer experiences. Big Data use cases are numerous and span various industries, including business, healthcare, finance, government, science, and research.

Despite the countless benefits of Big Data and practical Big Data use cases, several challenges and limitations exist, including data quality and reliability, privacy and security, technical infrastructure and expertise, data integration and interoperability, and ethical and social issues. Organizations must follow best practices to ensure successful Big Data use cases, including defining clear objectives and goals, developing a comprehensive data strategy, choosing the right tools and technologies, ensuring data quality and governance, and fostering a culture of data-driven decision-making.

As Big Data continues to grow and evolve, organizations embracing and using it effectively will gain a competitive advantage and drive growth and innovation.

Hady has a passion for tech, marketing, and spreadsheets. Besides his Computer Science degree, he has vast experience in developing, launching, and scaling content marketing processes at SaaS startups.


Data and Analytics Case Study

Made possible by EY, exclusive Global Insights case study sponsor.

GE’s Big Bet on Data and Analytics

Seeking opportunities in the Internet of Things, GE expands into industrial analytics.

By Laura Winig, February 18, 2016

If software experts truly knew what Jeff Immelt and GE Digital were doing, there’s no other software company on the planet where they would rather be. –Bill Ruh, CEO of GE Digital and CDO for GE

In September 2015, multinational conglomerate General Electric (GE) launched an ad campaign featuring a recent college graduate, Owen, excitedly breaking the news to his parents and friends that he has just landed a computer programming job — with GE. Owen tries to tell them that he will be writing code to help machines communicate, but they’re puzzled; after all, GE isn’t exactly known for its software. In one ad, his friends feign excitement, while in another, his father implies Owen may not be macho enough to work at the storied industrial manufacturing company.

Owen's Hammer: GE's ad campaign aimed at Millennials emphasizes its new digital direction.

The campaign was designed to recruit Millennials to join GE as Industrial Internet developers and remind them — using GE’s new watchwords, “The digital company. That’s also an industrial company.” — of GE’s massive digital transformation effort. GE has bet big on the Industrial Internet — the convergence of industrial machines, data, and the Internet (also referred to as the Internet of Things) — committing $1 billion to put sensors on gas turbines, jet engines, and other machines; connect them to the cloud; and analyze the resulting flow of data to identify ways to improve machine productivity and reliability. “GE has made significant investment in the Industrial Internet,” says Matthias Heilmann, Chief Digital Officer of GE Oil & Gas Digital Solutions. “It signals this is real, this is our future.”

While many software companies like SAP, Oracle, and Microsoft have traditionally been focused on providing technology for the back office, GE is leading the development of a new breed of operational technology (OT) that literally sits on top of industrial machinery.

About the Author

Laura Winig is a contributing editor to MIT Sloan Management Review .



Big Data Use Case: How Amazon uses Big Data to drive eCommerce revenue

Amazon is no stranger to big data. In this big data use case, we’ll look at how Amazon is leveraging data analytic technologies to improve products and services and drive overall revenue.

Big data has changed how we interact with the world and continues to strengthen its hold on businesses worldwide. New data sets can be mined, managed, and analyzed using a combination of technologies.

These applications combine the error-prone human brain with the power of computers. Any use of machine learning to predict outcomes, optimize systems or processes, or automatically sequence tasks is relevant to big data.

Amazon’s algorithm is another secret to its success. The online shop has not only made it possible to order products with just one mouse click, but it also uses personalization data combined with big data to achieve excellent conversion rates.

Amazon and Big Data

Big Data can help you gain a competitive edge. The data collected by networks of sensors, smart meters, and other sources can provide insights into customer spending behavior and help retailers better target their services and products.


Machine Learning (a type of artificial intelligence) processes data through a learning algorithm to spot trends and patterns while continually refining the algorithms.
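To make "continually refining" concrete, the Python sketch below fits a one-feature linear model with stochastic gradient descent: each example nudges the weight and bias to reduce that example's prediction error. This is a generic textbook technique chosen for illustration, not a description of Amazon's systems; the learning rate and number of passes are assumptions.

```python
def sgd_fit(samples: list[tuple[float, float]], learning_rate: float = 0.1,
            epochs: int = 500) -> tuple[float, float]:
    """Fit the line y = w * x + b by stochastic gradient descent on squared error.

    The model is updated after every single example, so each new data
    point refines the current estimate rather than waiting for a full batch.
    """
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in samples:
            error = (w * x + b) - y         # prediction minus target
            w -= learning_rate * error * x  # gradient of error**2 / 2 w.r.t. w
            b -= learning_rate * error      # gradient of error**2 / 2 w.r.t. b
    return w, b


if __name__ == "__main__":
    data = [(i / 10, 2 * (i / 10) + 1) for i in range(11)]  # points on y = 2x + 1
    print(sgd_fit(data))
```

Trained on points that lie on y = 2x + 1, the learned weight and bias converge toward 2 and 1; with noisy real-world data they would instead settle near the best-fitting line.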

Amazon is one of the world’s largest businesses, with an estimated 310 million-plus active customers worldwide. It recently processed transactions worth $90 billion, which shows the popularity of online shopping across continents. It provides its customers with services such as payments and shipping, and keeps introducing new ideas for them.

Amazon is a giant with its own cloud. Amazon Web Services (AWS) offers cloud computing platforms to individuals, companies, and governments. Amazon’s move into cloud computing dates back to the early 2000s, when AWS was first launched.

Amazon Web Services has expanded its business lines since then. Amazon has also hired leading experts in analytics and predictive modeling to help mine the massive volume of data it has accumulated. Amazon innovates by introducing new products and strategies based on customer experience and feedback.

Big Data has assisted Amazon in ascending to the top of the e-commerce heap.

Amazon uses an anticipatory delivery model that predicts the products most likely to be purchased by its customers based on vast amounts of data.

In practice, Amazon assesses your purchase patterns and ships items you are likely to buy to the warehouse closest to you, before you have ordered them.
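Amazon has not published the model behind its anticipatory delivery. As a much-simplified illustration of the idea, the Python sketch below forecasts next week's demand in each region as the average of its most recent weekly orders, then rounds up to get a number of units to pre-position in that region's warehouse. The region names, the four-week window, and the moving-average forecast are all assumptions.

```python
import math


def forecast_next_week(weekly_orders: list[int], window: int = 4) -> float:
    """Moving-average forecast: the mean of the last `window` weeks of orders."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent = weekly_orders[-window:]
    return sum(recent) / len(recent)


def stock_plan(orders_by_region: dict[str, list[int]],
               window: int = 4) -> dict[str, int]:
    """Units to pre-position in each region's warehouse, rounded up so the
    forecast demand is covered. Regions with no order history are skipped."""
    return {region: math.ceil(forecast_next_week(history, window))
            for region, history in orders_by_region.items() if history}


if __name__ == "__main__":
    history = {"north": [10, 12, 11, 13, 14], "south": [3, 2, 4, 3]}
    print(stock_plan(history))
```

For the sample history, the plan is `{"north": 13, "south": 3}`. A real system would forecast per product and draw on far richer signals such as browsing, wish lists, and seasonality.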

Amazon stores and processes as much customer and product information as possible – collecting specific information on every customer who visits its website. It also monitors the products a customer views, their shipping address, and whether or not they post reviews.

Amazon optimizes the prices on its website by weighing factors such as user activity, order history, rival prices, and product availability. Using this strategy, it offers discounts on popular items while earning a profit on less popular ones. This is one way Amazon puts big data to work in its business operations.
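The pricing logic described above can be sketched as a simple rule set. This is a hypothetical illustration, not Amazon's algorithm: the popularity threshold (page views in the last hour), the 1% undercut, the 10% scarcity markup, and the 5% minimum margin are all assumed values chosen only to show how activity, rival prices, and availability might combine.

```python
def dynamic_price(list_price: float, competitor_price: float, units_in_stock: int,
                  views_last_hour: int, unit_cost: float,
                  popular_threshold: int = 100) -> float:
    """Hypothetical rule-based price adjustment (all thresholds are assumptions)."""
    if views_last_hour >= popular_threshold:
        # Popular item: discount to slightly undercut the rival
        price = min(list_price, competitor_price * 0.99)
    else:
        # Less popular item: keep the margin, and add a markup when stock is scarce
        price = list_price * (1.10 if units_in_stock < 10 else 1.0)
    # Never go below cost plus an assumed 5% margin
    return round(max(price, unit_cost * 1.05), 2)


print(dynamic_price(20.0, 19.0, units_in_stock=50, views_last_hour=500, unit_cost=12.0))
print(dynamic_price(20.0, 25.0, units_in_stock=5, views_last_hour=10, unit_cost=12.0))
```

The cost floor is there so that aggressive undercutting on popular items can never turn into selling at a loss.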

Data science has earned a prominent place across industries and has contributed to their growth and improvement.


Ever wonder how Amazon knows what you want before you even order it? The answer is mathematics, but you know that.

What you may not know is that the company has been running a data-gathering program for almost 15 years, reaching back to the site’s earliest days.

To make every interaction between buyers and sellers as efficient as possible, Amazon has captured data down to the most minute details. That data comes from a variety of sources, from sellers themselves to customers using apps on their phones, giving Amazon insight into every step along the way.

Voice recording by Alexa

Alexa is a voice interaction service developed by Amazon.com. It runs in the cloud and powers voice-controlled smart devices. Through voice commands, Alexa can answer questions, play music, read the news, and control smart home devices such as lights and appliances.

Device makers can use the Alexa Voice Service (AVS) to build Alexa into their own hardware and software, and developers can use AWS Lambda to host custom Alexa skills.

You could spend all day with a microphone, smartphone, or barcode scanner recording every interaction, receipt, and voice note. With tools like Amazon Echo, you don’t have to.

With the always-on Alexa Voice Service, you can add items to your shopping list just by saying them when you need them. It’s fast and straightforward.

Single click order

Competition among companies that use big data is fierce. Amazon's data showed that customers might switch to alternative vendors if placing an order took too long, so Amazon created single-click ordering.

With this method, you save your default shipping address and payment method once. After you click, you have 30 minutes to change your mind and cancel the order; after that, the order is processed automatically.
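The 30-minute window amounts to a small state machine: an order stays pending and cancellable until the window closes, then moves to processing. The sketch below is a hypothetical model of that behavior only; the class, field names, and status strings are illustrative and do not reflect Amazon's internal systems.

```python
from datetime import datetime, timedelta

CANCEL_WINDOW = timedelta(minutes=30)  # grace period described above


class OneClickOrder:
    """Hypothetical one-click order using the customer's saved address and payment."""

    def __init__(self, item, address, payment, placed_at):
        self.item, self.address, self.payment = item, address, payment
        self.placed_at = placed_at
        self.cancelled = False

    def cancel(self, now):
        # Cancellation only succeeds inside the grace window
        if now - self.placed_at <= CANCEL_WINDOW:
            self.cancelled = True
        return self.cancelled

    def status(self, now):
        if self.cancelled:
            return "cancelled"
        if now - self.placed_at > CANCEL_WINDOW:
            return "processing"  # window closed: order goes through automatically
        return "pending"


t0 = datetime(2024, 1, 1, 12, 0)
order = OneClickOrder("book", "saved address", "saved card", placed_at=t0)
print(order.status(t0 + timedelta(minutes=10)))
print(order.cancel(t0 + timedelta(minutes=40)), order.status(t0 + timedelta(minutes=40)))
```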

Persuade Customers

Persuasive technology is a new area at Amazon. It’s an intersection of AI, UX, and the business goal of getting customers to take action at any point in the shopping journey.

One of the most significant ways Amazon utilizes data is through its recommendation engine. When a client searches for a specific item, Amazon can better anticipate other items the buyer may be interested in.

Consequently, Amazon can expedite the process of convincing a buyer to purchase the product. It is estimated that its personalized recommendation system accounts for 35 percent of the company’s annual sales.
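A common way to build "customers who bought this also bought" suggestions is to count how often items appear in the same basket. The sketch below is a simplified co-occurrence recommender under the assumption that order history is available as lists of item IDs; it is an illustration of the general technique, not Amazon's production recommendation engine, and the item names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(baskets):
    """Count, for every item, how often each other item was bought alongside it."""
    co = defaultdict(Counter)
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            co[a][b] += 1
    return co


def recommend(co, item, k=2):
    """Return up to k items most often bought together with `item`."""
    return [other for other, _ in co.get(item, Counter()).most_common(k)]


# Hypothetical order history
baskets = [
    ["camera", "sd_card", "tripod"],
    ["camera", "sd_card", "tripod"],
    ["camera", "sd_card", "camera_bag"],
    ["tripod", "sd_card"],
]
co = build_cooccurrence(baskets)
print(recommend(co, "camera"))
```

Real systems typically normalize these counts (so that universally popular items do not dominate every list), but the raw count already captures the core idea.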

The Amazon Assistant helps you discover new products, browse best sellers, and shop by department across Amazon's large selection. It also notifies you automatically when prices drop or when items you’ve been watching are marked down, so you get the best deal possible.
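A price-drop notification boils down to comparing each watched item's current price with the price recorded when the customer started watching it. The sketch below assumes a simple in-memory watchlist; the function name and message format are hypothetical and not part of any Amazon API.

```python
def price_drop_alerts(watchlist, current_prices):
    """Return alert messages for watched items whose price has fallen.

    watchlist: {item: price when the customer started watching it}
    current_prices: {item: latest price}
    """
    alerts = []
    for item, watched_price in watchlist.items():
        now = current_prices.get(item)
        if now is not None and now < watched_price:
            pct = 100 * (watched_price - now) / watched_price
            alerts.append(f"{item}: {watched_price:.2f} -> {now:.2f} ({pct:.0f}% off)")
    return alerts


watchlist = {"headphones": 100.0, "kettle": 40.0}
print(price_drop_alerts(watchlist, {"headphones": 80.0, "kettle": 42.0}))
```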

Price dropping

Amazon constantly adjusts the prices of its products based on big data trends, whereas on many competitor sites a product's price stays the same.

These frequent price changes give Amazon another way to attract customers: prices are continually updated to offer the best deals.

Knowing that the price of a product they want could drop at any time, customers check the site often so they can buy it when the price is low.

Shipping optimization

Shipping optimization by Amazon lets you choose your preferred carrier, service options, and expected delivery time for millions of items on Amazon.com. It helps you avoid surprises such as an unexpected carrier, unnecessary service fees, or delays that can occur even with standard shipping.
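Choosing among carriers and service levels can be framed as a small optimization: among the options that arrive within the customer's deadline, pick the cheapest. The sketch below assumes that simple objective (ties broken by speed); the carrier names, prices, and transit times are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ShippingOption:
    carrier: str   # hypothetical carrier name
    service: str
    days: int      # expected transit time
    cost: float


def choose_option(options: List[ShippingOption], max_days: int) -> Optional[ShippingOption]:
    """Cheapest option that meets the deadline; faster wins on equal cost."""
    eligible = [o for o in options if o.days <= max_days]
    if not eligible:
        return None  # no option can meet the requested delivery time
    return min(eligible, key=lambda o: (o.cost, o.days))


options = [
    ShippingOption("CarrierA", "ground", 5, 4.99),
    ShippingOption("CarrierB", "two_day", 2, 8.99),
    ShippingOption("CarrierC", "next_day", 1, 14.99),
    ShippingOption("CarrierA", "two_day", 2, 7.49),
]
print(choose_option(options, max_days=2))
```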

Today, Amazon offers customers the choice to pick up their packages at over 400 U.S. locations. Whether you need one-day delivery or same-day pickup in select metro areas, Prime members can choose how fast they want to get their goods in an easy-to-use mobile app.


Using shipping partners makes this selection possible, allowing Amazon to offer the most comprehensive selection in the industry and provide customers with multiple options for picking up their orders.

To better serve customers, Amazon has adopted technology that gathers information from shoppers’ web browsing habits and uses it to improve existing products and introduce new ones.

Amazon is only one example of a company that uses big data; Airbnb is another industry leader that relies on big data in its operations. Below are four ways big data plays a significant role in any organization.

1. Helps you understand market conditions: Through data analysis, big data helps you understand market conditions, trends, and customer wants, as well as your competitors.

It helps you to research customer interests and behaviors so that you may adjust your products and services to their requirements.

2. It helps you increase customer satisfaction: Using big data analytics, you may determine the demographics of your target audience, the products and services they want, and much more.

This information enables you to design business plans and strategies around customers’ needs and demands. When your business strategy is based on consumer requirements, customer satisfaction tends to rise.

3. Increase sales: Once you thoroughly understand the market environment and client needs, you can develop products, services, and marketing tactics accordingly, which can significantly boost your sales.

4. Optimize costs: By analyzing the data acquired from client databases, services, and internet resources, you may determine what prices benefit customers, how cost increases or decreases will impact your business, etc.

You can determine the optimal price for your items and services, which will benefit your customers and your company.
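One way to "determine the optimal price" from sales data is to fit a demand curve and then pick the price that maximizes profit. The sketch below assumes demand is linear in price (quantity = a + b * price), fits it with ordinary least squares, and evaluates a list of candidate prices. The sales figures and unit cost are hypothetical, and real demand is rarely this clean.

```python
def fit_linear_demand(prices, quantities):
    """Least-squares fit of quantity = a + b * price."""
    n = len(prices)
    mean_p = sum(prices) / n
    mean_q = sum(quantities) / n
    cov = sum((p - mean_p) * (q - mean_q) for p, q in zip(prices, quantities))
    var = sum((p - mean_p) ** 2 for p in prices)
    b = cov / var
    a = mean_q - b * mean_p
    return a, b


def best_price(prices, quantities, unit_cost, candidates):
    """Candidate price with the highest predicted profit (price - cost) * quantity."""
    a, b = fit_linear_demand(prices, quantities)

    def profit(p):
        q = max(a + b * p, 0.0)  # predicted demand cannot be negative
        return (p - unit_cost) * q

    return max(candidates, key=profit)


# Hypothetical history: units sold at each price point
past_prices = [8.0, 10.0, 12.0, 14.0]
units_sold = [120.0, 100.0, 80.0, 60.0]
candidates = [x / 2 for x in range(16, 31)]  # 8.0, 8.5, ..., 15.0
print(best_price(past_prices, units_sold, unit_cost=6.0, candidates=candidates))
```

With a linear demand curve and a fixed unit cost, profit is a downward-opening parabola in price, so checking a grid of candidates is enough to find its peak.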

Businesses need to adapt to the ever-changing needs of their customers. Within this dynamic online marketplace, competitive advantage is often gained by those players who can adapt to market changes faster than others. Big data analytics provides that advantage.


However, the sheer volume of data generated at all levels — from individual consumer click streams to the aggregate public opinions of millions of individuals — provides a considerable barrier to companies that would like to customize their offerings or efficiently interact with customers.

James joined BusinessTechWeekly.com in 2018, following a 19-year career in IT where he covered a wide range of support, management and consultancy roles across a wide variety of industry sectors. He has a broad technical knowledge base backed with an impressive list of technical certifications, with a focus on applications, cloud and infrastructure.


Effective Big Data Analytics Use Cases in 20+ Industries

Arya Bharti

January 06, 2022

Among the modern technologies and industry disruptions that can benefit every industry and every business organization, big data analytics fits the bill perfectly.

The big data analytics market is projected to hit USD 103 billion by 2023, and 70% of large enterprises already use big data.

Organizations continue to generate heaps of data every year, and the global amount of data created, stored, and consumed by 2025 is slated to surpass 180 zettabytes.

However, many organizations fail to put this huge amount of data to the right use because they don’t know how to make their big data work for them.

Here, we are discussing the top big data analytics use cases for a wide range of industries. So, take a thorough read and get started with your big data journey.

Let us begin with understanding the term Big Data Analytics.

What is Big Data Analytics?

Big data analytics is the process of applying advanced analytical techniques to extremely large and diverse data sets that contain structured, semi-structured, and unstructured data. In this complex process, data is processed and parsed to uncover hidden patterns, market trends, and correlations, and to draw actionable insights from them.

The following image shows some benefits of big data analytics:

Big data analytics enables business organizations to make sense of the data they are accumulating and leverage the insights drawn from it for various business activities.

The following visual shows some of the direct benefits of using big data analytics:

Before we move on to discuss the use cases of big data analytics, it is important to address one more thing – What makes big data analytics so versatile?

Core Strengths of Big Data Analytics

Big data analytics combines multiple advanced technologies that work together to help business organizations get the most value out of their data.

Some of these technologies are machine learning, data mining, data management, Hadoop, etc.
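Hadoop's MapReduce model splits a job into a map phase (emit key/value pairs from each record), a shuffle (group values by key), and a reduce phase (combine each group). The single-process sketch below walks through those three phases on a few hypothetical customer reviews; it does not use Hadoop itself, where the map and reduce steps would run in parallel across many machines.

```python
from collections import defaultdict
from itertools import chain


def map_phase(record):
    # Emit (word, 1) for every word in one record
    return [(word.lower(), 1) for word in record.split()]


def shuffle(pairs):
    # Group all emitted values by key
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine the grouped values for one key
    return key, sum(values)


def map_reduce(records):
    mapped = chain.from_iterable(map(map_phase, records))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


reviews = ["great coffee", "Great service", "coffee was cold"]
print(map_reduce(reviews))
```

Because each map call sees only one record and each reduce call sees only one key, both phases can be distributed, which is what lets this pattern scale to data sets far larger than one machine's memory.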

Below, we discuss the core strengths of big data analytics.

1. Cost Reduction

Big data analytics offers data-driven insights that help business stakeholders make better strategic decisions, streamline and optimize operational processes, and understand their customers better. All of this cuts costs and makes the business model more efficient.

Big data analytics also streamlines supply chains to reduce time, effort, and resource consumption.

Studies also reveal that big data analytics solutions can help companies reduce the cost of failure by 35% via:

- Real-time monitoring

- Real-time visualization

- In-memory Analytics

- Product Monitoring

- Effective Fleet Management
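Real-time monitoring often comes down to flagging readings that stray far from recent behavior. The sketch below uses one common technique, a rolling z-score, which the studies cited above do not specifically name; the window size, warm-up length, threshold, and sensor readings are all assumed values for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window=20, threshold=3.0, warmup=5):
        self.values = deque(maxlen=window)  # only the most recent readings are kept
        self.threshold = threshold
        self.warmup = warmup  # readings needed before judging anything (assumed)

    def observe(self, x):
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(x - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(x)
        return is_anomaly


# Hypothetical stream of machine temperature readings
readings = [50, 51, 49, 50, 52, 48, 50, 51, 49, 50, 90]
detector = RollingAnomalyDetector()
flags = [detector.observe(r) for r in readings]
print(flags)
```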

2. Reliable and Continuous Data

Because big data analytics lets business enterprises make use of their own organizational data, they don’t have to rely on third-party market research or tools for these insights. And as organizational data expands continually, a reliable and robust big data analytics platform ensures reliable, continuous data streams.

3. New Products and Services

Because big data analytics brings together a diverse set of advanced technologies, you can make better decisions about developing new products and services.

You also have strong market and customer (or end-user) insights to steer development in the right direction.

Hence, big data analytics also facilitates faster decision-making stemming from data-driven actionable insights.

4. Improved Efficiency

Big data analytics improves accuracy, efficiency, and overall decision-making in business organizations. You can analyze customer behavior via shopping data and use predictive analytics to estimate quantities such as checkout wait times. Stats reveal that 38% of companies use big data for organizational efficiency.
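As a worked illustration of estimating checkout wait times, the sketch below forecasts the arrival rate as the average of recent intervals and plugs it into the standard M/M/1 queueing formula for mean time in queue, Wq = λ / (μ(μ − λ)), where λ is the arrival rate and μ the service rate. Treating one checkout lane as an M/M/1 queue, and the arrival and service figures themselves, are assumptions made for this example.

```python
def expected_wait_minutes(arrivals_per_min: float, service_per_min: float) -> float:
    """Mean time in queue for an M/M/1 checkout lane: Wq = lam / (mu * (mu - lam))."""
    lam, mu = arrivals_per_min, service_per_min
    if lam >= mu:
        return float("inf")  # arrivals outpace service, so the queue grows without bound
    return lam / (mu * (mu - lam))


# Hypothetical customers-per-minute counts from the last four intervals
recent_arrivals = [1.5, 1.8, 2.1, 1.6]
forecast = sum(recent_arrivals) / len(recent_arrivals)  # naive forecast: recent average
print(round(expected_wait_minutes(forecast, service_per_min=2.5), 2))
```

The formula also shows why wait times climb sharply as a lane nears capacity: the (μ − λ) term in the denominator shrinks toward zero.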


5. Better Monitoring and Tracking

Big data analytics also gives organizations real-time monitoring and tracking capabilities, and it builds on them by suggesting appropriate actions or strategic nudges based on predictive analytics.

These tracking and monitoring capabilities are of extreme importance in:

- Security posture management

- Mitigating cybersecurity attacks and minimizing the damage

- Database backup

- IT infrastructure management

6. Better Remote Resource Management

Be it hiring or remote team management and monitoring, big data analytics offers a wide range of capabilities to enterprises. Big data analytics can empower business owners with core insights to make better decisions regarding employee tracking, employee hiring, performance management, etc.

This remote resource management capability works well for IT infrastructure management as well.

7. Making the Right Organizational Decisions

Take a look at the following visual, which shows how big data analytics can help companies make better, data-driven organizational decisions.

Now, we discuss the top big data analytics use cases in various industries.

Big Data Analytics Use Cases in Various Industries

1. Banking and Finance (Fraud Detection, Risk & Insurance, and Asset Management)