
Editors-in-Chief

Kristen Olson

Katherine Jenny Thompson

Editorial board

Why Submit to JSSAM?

The Journal of Survey Statistics and Methodology is an international, high-impact journal sponsored by the American Association for Public Opinion Research (AAPOR) and the American Statistical Association. Published since 2013, the journal has quickly become a trusted source for a wide range of high quality research in the field.


Virtual Issues

These specially curated, themed collections from JSSAM feature a selection of papers previously published in the journal.


Publish in JSSAM

Want to publish in the Journal of Survey Statistics and Methodology? The journal publishes articles on statistical and methodological issues for sample surveys, censuses, administrative record systems, and other related data.


- Online ISSN 2325-0992

- Copyright © 2024 American Association for Public Opinion Research


Oxford University Press is a department of the University of Oxford. It furthers the University's objective of excellence in research, scholarship, and education by publishing worldwide.

- Copyright © 2024 Oxford University Press



Doing Survey Research | A Step-by-Step Guide & Examples

Published on 6 May 2022 by Shona McCombes. Revised on 10 October 2022.

Survey research means collecting information about a group of people by asking them questions and analysing the results. To conduct an effective survey, follow these six steps:

- Determine who will participate in the survey

- Decide the type of survey (mail, online, or in-person)

- Design the survey questions and layout

- Distribute the survey

- Analyse the responses

- Write up the results

Surveys are a flexible method of data collection that can be used in many different types of research.

Table of contents

- What are surveys used for?

- Step 1: Define the population and sample

- Step 2: Decide on the type of survey

- Step 3: Design the survey questions

- Step 4: Distribute the survey and collect responses

- Step 5: Analyse the survey results

- Step 6: Write up the survey results

- Frequently asked questions about surveys

Surveys are used as a method of gathering data in many different fields. They are a good choice when you want to find out about the characteristics, preferences, opinions, or beliefs of a group of people.

Common uses of survey research include:

- Social research: Investigating the experiences and characteristics of different social groups

- Market research: Finding out what customers think about products, services, and companies

- Health research: Collecting data from patients about symptoms and treatments

- Politics: Measuring public opinion about parties and policies

- Psychology: Researching personality traits, preferences, and behaviours

Surveys can be used in both cross-sectional studies, where you collect data just once, and longitudinal studies, where you survey the same sample several times over an extended period.


Before you start conducting survey research, you should already have a clear research question that defines what you want to find out. Based on this question, you need to determine exactly who you will target to participate in the survey.

Populations

The target population is the specific group of people that you want to find out about. This group can be very broad or relatively narrow. For example:

- The population of Brazil

- University students in the UK

- Second-generation immigrants in the Netherlands

- Customers of a specific company aged 18 to 24

- British transgender women over the age of 50

Your survey should aim to produce results that can be generalised to the whole population. That means you need to carefully define exactly who you want to draw conclusions about.

It’s rarely possible to survey the entire population of your research – it would be very difficult to get a response from every person in Brazil or every university student in the UK. Instead, you will usually survey a sample from the population.

The sample size you need depends on how big the population is, as well as on the margin of error and confidence level you are willing to accept. You can use an online sample calculator to work out how many responses you need.

There are many sampling methods that allow you to generalise to broad populations. In general, though, the sample should aim to be representative of the population as a whole. The larger and more representative your sample, the more valid your conclusions.
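As a rough illustration of what a sample calculator does, the sketch below uses Cochran's formula with a finite population correction. This is one common approach and not necessarily the one any particular online calculator uses. The function name and the default values (95% confidence, 5% margin of error, an assumed proportion of 0.5) are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def required_sample_size(population, margin_of_error=0.05,
                         confidence=0.95, proportion=0.5):
    """Estimate how many completed responses are needed (Cochran's formula)."""
    # z-score for a two-sided confidence level, about 1.96 for 95%
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for a very large (effectively infinite) population
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    # Finite population correction shrinks n0 for small populations
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


if __name__ == "__main__":
    for size in (500, 10_000, 1_000_000):
        print(size, required_sample_size(size))
```

With these defaults, the required number of responses rises from 218 for a population of 500 to 384 for a population of one million, which shows that the sample size levels off as the population grows.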

There are two main types of survey:

- A questionnaire, where a list of questions is distributed by post, online, or in person, and respondents fill it out themselves

- An interview, where the researcher asks a set of questions by phone or in person and records the responses

Which type you choose depends on the sample size and location, as well as the focus of the research.

Questionnaires

Sending out a paper survey by post is a common method of gathering demographic information (for example, in a government census of the population).

- You can easily access a large sample.

- You have some control over who is included in the sample (e.g., residents of a specific region).

- The response rate is often low.

Online surveys are a popular choice for students doing dissertation research, due to the low cost and flexibility of this method. There are many online tools available for constructing surveys, such as SurveyMonkey and Google Forms.

- You can quickly access a large sample without constraints on time or location.

- The data is easy to process and analyse.

- The anonymity and accessibility of online surveys mean you have less control over who responds.

If your research focuses on a specific location, you can distribute a written questionnaire to be completed by respondents on the spot. For example, you could approach the customers of a shopping centre or ask all students to complete a questionnaire at the end of a class.

- You can screen respondents to make sure only people in the target population are included in the sample.

- You can collect time- and location-specific data (e.g., the opinions of a shop’s weekday customers).

- The sample size will be smaller, so this method is less suitable for collecting data on broad populations.

Oral interviews are a useful method for smaller sample sizes. They allow you to gather more in-depth information on people’s opinions and preferences. You can conduct interviews by phone or in person.

- You have personal contact with respondents, so you know exactly who will be included in the sample in advance.

- You can clarify questions and ask for follow-up information when necessary.

- The lack of anonymity may cause respondents to answer less honestly, and there is more risk of researcher bias.

Like questionnaires, interviews can be used to collect quantitative data: the researcher records each response as a category or rating and statistically analyses the results. But they are more commonly used to collect qualitative data: the interviewees’ full responses are transcribed and analysed individually to gain a richer understanding of their opinions and feelings.

Next, you need to decide which questions you will ask and how you will ask them. It’s important to consider:

- The type of questions

- The content of the questions

- The phrasing of the questions

- The ordering and layout of the survey

Open-ended vs closed-ended questions

There are two main forms of survey questions: open-ended and closed-ended. Many surveys use a combination of both.

Closed-ended questions give the respondent a predetermined set of answers to choose from. A closed-ended question can include:

- A binary answer (e.g., yes/no or agree/disagree)

- A scale (e.g., a Likert scale with five points ranging from strongly agree to strongly disagree)

- A list of options with a single answer possible (e.g., age categories)

- A list of options with multiple answers possible (e.g., leisure interests)

Closed-ended questions are best for quantitative research. They provide you with numerical data that can be statistically analysed to find patterns, trends, and correlations.

Open-ended questions are best for qualitative research. This type of question has no predetermined answers to choose from. Instead, the respondent answers in their own words.

Open questions are most common in interviews, but you can also use them in questionnaires. They are often useful as follow-up questions to ask for more detailed explanations of responses to the closed questions.

The content of the survey questions

To ensure the validity and reliability of your results, you need to carefully consider each question in the survey. All questions should be narrowly focused with enough context for the respondent to answer accurately. Avoid questions that are not directly relevant to the survey’s purpose.

When constructing closed-ended questions, ensure that the options cover all possibilities. If you include a list of options that isn’t exhaustive, you can add an ‘other’ field.

Phrasing the survey questions

In terms of language, the survey questions should be as clear and precise as possible. Tailor the questions to your target population, keeping in mind their level of knowledge of the topic.

Use language that respondents will easily understand, and avoid words with vague or ambiguous meanings. Make sure your questions are phrased neutrally, with no bias towards one answer or another.

Ordering the survey questions

The questions should be arranged in a logical order. Start with easy, non-sensitive, closed-ended questions that will encourage the respondent to continue.

If the survey covers several different topics or themes, group together related questions. You can divide a questionnaire into sections to help respondents understand what is being asked in each part.

If a question refers back to or depends on the answer to a previous question, they should be placed directly next to one another.

Before you start, create a clear plan for where, when, how, and with whom you will conduct the survey. Determine in advance how many responses you require and how you will gain access to the sample.

When you are satisfied that you have created a strong research design suitable for answering your research questions, you can conduct the survey through your method of choice – by post, online, or in person.

There are many methods of analysing the results of your survey. First you have to process the data, usually with the help of a computer program to sort all the responses. You should also cleanse the data by removing incomplete or incorrectly completed responses.
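The cleansing step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the field names (`age_group`, `satisfaction`) and the 1 to 5 rating range are hypothetical, and real surveys will need their own rules for what counts as incomplete or incorrectly completed.

```python
REQUIRED_FIELDS = ("age_group", "satisfaction")   # hypothetical question keys
VALID_SATISFACTION = {1, 2, 3, 4, 5}              # assumed 5-point rating


def is_usable(response):
    """Return True if a response is complete and within the allowed range."""
    # Incomplete: a required answer is missing or left blank
    if any(response.get(field) in (None, "") for field in REQUIRED_FIELDS):
        return False
    # Incorrectly completed: the rating falls outside the scale
    return response["satisfaction"] in VALID_SATISFACTION


def clean(responses):
    """Split responses into usable and discarded lists."""
    kept = [r for r in responses if is_usable(r)]
    dropped = [r for r in responses if not is_usable(r)]
    return kept, dropped


raw = [
    {"age_group": "18-24", "satisfaction": 4},
    {"age_group": "", "satisfaction": 5},        # blank answer
    {"age_group": "25-34", "satisfaction": 9},   # outside the 1-5 scale
    {"age_group": "35-44"},                      # question skipped
]
kept, dropped = clean(raw)
print(f"kept {len(kept)} of {len(raw)} responses")
```

Keeping the discarded responses in a separate list, rather than deleting them, makes it possible to report how many were removed and why.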

If you asked open-ended questions, you will have to code the responses by assigning labels to each response and organising them into categories or themes. You can also use more qualitative methods, such as thematic analysis, which is especially suitable for analysing interviews.
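To make the idea of coding concrete, here is a small sketch that assigns theme labels to free-text answers using keyword matching. Coding is normally done by a researcher reading each response, so treat this only as a first pass. The codebook, its themes, and its keywords are all hypothetical.

```python
from collections import Counter

# Hypothetical codebook: theme label -> keywords that signal the theme
CODEBOOK = {
    "price": ("expensive", "cheap", "cost", "price"),
    "service": ("staff", "support", "helpful", "rude"),
    "quality": ("broke", "durable", "quality"),
}


def code_response(text):
    """Return the sorted theme labels whose keywords appear in the text."""
    lowered = text.lower()
    labels = [theme for theme, keywords in CODEBOOK.items()
              if any(word in lowered for word in keywords)]
    # Responses that match no theme are flagged for manual review
    return sorted(labels) or ["uncoded"]


answers = [
    "Too expensive for what you get.",
    "The staff were helpful, but the product broke after a week.",
    "No complaints.",
]
coded = [code_response(a) for a in answers]
theme_counts = Counter(label for labels in coded for label in labels)
print(coded)
print(theme_counts)
```

Simple substring matching will produce false matches (for example, "cost" inside "costume"), so any automated labels should be checked by hand.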

Statistical analysis is usually conducted using programs like SPSS or Stata. The same set of survey data can be subject to many analyses.

Finally, when you have collected and analysed all the necessary data, you will write it up as part of your thesis, dissertation, or research paper.

In the methodology section, you describe exactly how you conducted the survey. You should explain the types of questions you used, the sampling method, when and where the survey took place, and the response rate. You can include the full questionnaire as an appendix and refer to it in the text if relevant.

Then introduce the analysis by describing how you prepared the data and the statistical methods you used to analyse it. In the results section, you summarise the key results from your analysis.

A Likert scale is a rating scale that quantitatively assesses opinions, attitudes, or behaviours. It is made up of four or more questions that measure a single attitude or trait when response scores are combined.

To use a Likert scale in a survey, you present participants with Likert-type questions or statements, and a continuum of items, usually with five or seven possible responses, to capture their degree of agreement.

Individual Likert-type questions are generally considered ordinal data, because the items have clear rank order, but don’t have an even distribution.

Overall Likert scale scores are sometimes treated as interval data. These scores are considered to have directionality and even spacing between them.

The type of data determines what statistical tests you should use to analyse your data.
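The difference between a single Likert-type item and a combined scale score can be sketched as follows. The item names, the five-point format, and the choice of `q3` as a negatively worded (reverse-scored) item are assumptions made for the example.

```python
from statistics import median

SCALE_MAX = 5                      # assumed five-point response format
REVERSED_ITEMS = {"q3"}            # hypothetical negatively worded item


def scale_score(answers):
    """Sum item scores into one scale score, flipping reversed items."""
    total = 0
    for item, value in answers.items():
        if item in REVERSED_ITEMS:
            value = SCALE_MAX + 1 - value   # 1<->5, 2<->4, 3 stays 3
        total += value
    return total


respondents = [
    {"q1": 4, "q2": 5, "q3": 2, "q4": 4},
    {"q1": 2, "q2": 1, "q3": 5, "q4": 2},
    {"q1": 3, "q2": 3, "q3": 3, "q4": 4},
]

# Single item: ordinal, so summarise with the median rather than the mean
q1_median = median(r["q1"] for r in respondents)
# Combined scale: sometimes treated as interval, so a mean is often reported
scores = [scale_score(r) for r in respondents]
mean_score = sum(scores) / len(scores)
print(q1_median, scores, mean_score)
```

Here the single item is summarised with a median, while the summed scale scores are averaged, reflecting the ordinal versus interval distinction described above.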

A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analysing data from people using questionnaires.

Cite this Scribbr article


McCombes, S. (2022, October 10). Doing Survey Research | A Step-by-Step Guide & Examples. Scribbr. Retrieved 29 April 2024, from https://www.scribbr.co.uk/research-methods/surveys/



Survey Research: Definition, Examples and Methods

Survey Research is a quantitative research method used for collecting data from a set of respondents. It has been perhaps one of the most used methodologies in the industry for several years due to the multiple benefits and advantages that it has when collecting and analyzing data.


In this article, you will learn everything about survey research, such as types, methods, and examples.

Survey Research Definition

Survey research is defined as the process of conducting research using surveys that researchers send to survey respondents. The data collected from surveys is then statistically analyzed to draw meaningful research conclusions. In the 21st century, every organization is eager to understand what its customers think about its products or services so that it can make better business decisions. Researchers can conduct research in multiple ways, but surveys are among the most widely used and trusted research methods. An online survey is a method for extracting information about a significant business matter from an individual or a group of individuals. It consists of structured survey questions that motivate the participants to respond. Credible survey research can give these businesses access to a vast information bank. Media organizations, other companies, and even governments rely on survey research to obtain accurate data.

The traditional definition of survey research is a quantitative method for collecting information from a pool of respondents by asking multiple survey questions. This research type includes the recruitment of individuals and the collection and analysis of data. It’s useful for researchers who aim to communicate new features or trends to their respondents.

Generally, it’s the primary step towards obtaining quick information about mainstream topics. More rigorous and detailed quantitative research methods, like surveys/polls, or qualitative research methods, like focus groups/on-call interviews, can follow. There are many situations where researchers can conduct research using a blend of both qualitative and quantitative strategies.


Survey Research Methods

Survey research methods can be classified based on two critical factors: the survey research tool and the time involved in conducting research. There are three main survey research methods, divided based on the medium of conducting survey research:

- Online/Email: Online survey research is one of the most popular survey research methods today. The cost involved in online survey research is minimal, and the responses gathered can be highly accurate.

- Phone: Survey research conducted over the telephone (CATI survey) can be useful in collecting data from a more extensive section of the target population. Phone surveys are likely to cost more than other mediums and to take more time.

- Face-to-face: Researchers conduct face-to-face in-depth interviews in situations where there is a complicated problem to solve. The response rate for this method is the highest, but it can be costly.

Further, based on the time taken, survey research can be classified into two methods:

- Longitudinal survey research: Longitudinal survey research involves conducting survey research over a continuum of time, which can be spread across years and decades. The data collected using this survey research method from one time period to another can be qualitative or quantitative. Respondent behavior, preferences, and attitudes are continuously observed over time to analyze reasons for a change in behavior or preferences. For example, suppose a researcher intends to learn about the eating habits of teenagers. In that case, they will follow a sample of teenagers over a considerable period to ensure that the collected information is reliable. Often, a longitudinal study follows cross-sectional survey research.

- Cross-sectional survey research: Researchers conduct a cross-sectional survey to collect insights from a target audience at a particular time interval. This survey research method is implemented in various sectors such as retail, education, healthcare, SME businesses, etc. Cross-sectional studies can either be descriptive or analytical. It is quick and helps researchers collect information in a brief period. Researchers rely on the cross-sectional survey research method in situations where descriptive analysis of a subject is required.

Survey research is also divided according to the sampling methods used to form samples for research: probability and non-probability sampling. Ideally, every individual in a population should have an equal chance of being part of the survey research sample. Probability sampling is a sampling method in which the researcher chooses the elements based on probability theory. There are various probability sampling methods, such as simple random sampling, systematic sampling, cluster sampling, stratified random sampling, etc. Non-probability sampling is a sampling method where the researcher uses their knowledge and experience to form samples.

The various non-probability sampling techniques are:

- Convenience sampling

- Snowball sampling

- Consecutive sampling

- Judgemental sampling

- Quota sampling
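By contrast with the non-probability techniques just listed, three of the probability sampling methods named earlier (simple random, systematic, and stratified random sampling) can be sketched with Python's standard library. The sampling frame, the `region` attribute, and the 10% sampling fraction are hypothetical values chosen for illustration.

```python
import random
from collections import defaultdict


def simple_random_sample(frame, n, rng):
    """Every unit has the same chance of selection."""
    return rng.sample(frame, n)


def systematic_sample(frame, n, rng):
    """Pick a random start, then take every k-th unit."""
    k = len(frame) // n
    start = rng.randrange(k)
    return [frame[start + i * k] for i in range(n)]


def stratified_sample(frame, stratum_of, fraction, rng):
    """Draw the same fraction at random from each stratum."""
    strata = defaultdict(list)
    for unit in frame:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, round(len(members) * fraction)))
    return sample


rng = random.Random(42)  # fixed seed so the sketch is repeatable
# Hypothetical sampling frame: 100 people, 60 urban and 40 rural
frame = [{"id": i, "region": "urban" if i < 60 else "rural"} for i in range(100)]

srs = simple_random_sample(frame, 10, rng)
sys_sample = systematic_sample(frame, 10, rng)
strat = stratified_sample(frame, lambda u: u["region"], 0.1, rng)
print(len(srs), len(sys_sample), len(strat))
```

The stratified draw guarantees that the sample mirrors the 60/40 urban to rural split of the frame, which a simple random sample of the same size achieves only on average.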

Process of implementing survey research methods:

- Decide survey questions: Brainstorm and put together valid survey questions that are grammatically and logically appropriate. Understanding the objective and expected outcomes of the survey helps a lot. There are many surveys where details of responses are not as important as gaining insights about what customers prefer from the provided options. In such situations, a researcher can include multiple-choice questions or closed-ended questions. If researchers need to obtain details about specific issues, they can include open-ended questions in the questionnaire. Ideally, surveys should include a smart balance of open-ended and closed-ended questions. Use survey questions like the Likert Scale, Semantic Scale, Net Promoter Score question, etc., to avoid fence-sitting.

- Finalize a target audience: Send out relevant surveys as per the target audience and filter out irrelevant questions as per the requirement. Survey research is most useful when a sample is drawn from the target population. This way, results reflect the desired market and can be generalized to the entire population.

- Send out surveys via decided mediums: Distribute the surveys to the target audience and patiently wait for the feedback and comments. This is the most crucial step of the survey research. The survey needs to be scheduled, keeping in mind the nature of the target audience and its regions. Surveys can be conducted via email, embedded in a website, shared via social media, etc., to gain maximum responses.

- Analyze survey results: Analyze the feedback in real time and identify patterns in the responses which might lead to a much-needed breakthrough for your organization. GAP, TURF analysis, conjoint analysis, cross tabulation, and many other survey feedback analysis methods can be used to spot and shed light on respondent behavior. Researchers can use the results to implement corrective measures to improve customer/employee satisfaction.
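Of the analysis methods mentioned in the last step, cross tabulation is the simplest to show. The sketch below counts how often each combination of two answers occurs. The customer segments and the recommend question are hypothetical data invented for the example.

```python
from collections import Counter

# Hypothetical responses: (customer segment, would recommend?)
responses = [
    ("new", "yes"), ("new", "no"), ("new", "yes"),
    ("returning", "yes"), ("returning", "yes"), ("returning", "no"),
    ("returning", "yes"),
]


def cross_tabulate(pairs):
    """Count how often each (row, column) combination occurs."""
    counts = Counter(pairs)
    rows = sorted({row for row, _ in pairs})
    cols = sorted({col for _, col in pairs})
    return {row: {col: counts[(row, col)] for col in cols} for row in rows}


table = cross_tabulate(responses)
for segment, cells in table.items():
    total = sum(cells.values())
    share_yes = cells["yes"] / total
    print(f"{segment:<10} {cells}  yes share: {share_yes:.0%}")
```

Comparing the share of "yes" answers across rows is what lets a researcher see whether one group of respondents behaves differently from another.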

Reasons to conduct survey research

The most crucial and integral reason for conducting market research using surveys is that you can collect answers regarding specific, essential questions. You can ask these questions in multiple survey formats as per the target audience and the intent of the survey. Before designing a study, every organization must figure out the objective of carrying this out so that the study can be structured, planned, and executed to perfection.


Questions that need to be on your mind while designing a survey are:

- What is the primary aim of conducting the survey?

- How do you plan to utilize the collected survey data?

- What type of decisions do you plan to take based on the points mentioned above?

There are three critical reasons why an organization must conduct survey research.

- Understand respondent behavior to get solutions to your queries: If you’ve carefully curated a survey, the respondents will provide insights about what they like about your organization as well as suggestions for improvement. To motivate them to respond, be explicit about how securely their responses will be stored and how you will use the answers. This encourages them to be honest in their feedback, opinions, and comments. Online and mobile surveys can protect respondents’ privacy, and because of this, more and more respondents feel free to put forth their feedback through these mediums.

- Present a medium for discussion: A survey can be the perfect platform for respondents to provide criticism or applause for an organization. Important topics like product quality or quality of customer service etc., can be put on the table for discussion. A way you can do it is by including open-ended questions where the respondents can write their thoughts. This will make it easy for you to correlate your survey to what you intend to do with your product or service.

- Strategy for never-ending improvements: An organization can establish the target audience’s attributes from the pilot phase of survey research. Researchers can use the criticism and feedback received from this survey to improve the product/services. Once the company successfully makes the improvements, it can send out another survey to measure the change in feedback, keeping the pilot phase as the benchmark. By doing this activity, the organization can track what was effectively improved and what still needs improvement.

Survey Research Scales

There are four main scales for the measurement of variables:

- Nominal Scale: A nominal scale associates numbers with variables for mere naming or labeling, and the numbers usually have no other relevance. It is the most basic of the four levels of measurement.

- Ordinal Scale: The ordinal scale has an innate order within the variables along with labels. It establishes the rank between the variables of a scale but not the difference value between the variables.

- Interval Scale: The interval scale is a step ahead in comparison to the other two scales. Along with establishing a rank and name of variables, the scale also makes known the difference between the two variables. The only drawback is that there is no fixed start point of the scale, i.e., the actual zero value is absent.

- Ratio Scale: The ratio scale is the most advanced measurement scale, which has variables that are labeled in order and have a calculated difference between variables. In addition to what interval scale orders, this scale has a fixed starting point, i.e., the actual zero value is present.
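One practical consequence of the four scales is that each one supports a different set of summary statistics. The sketch below shows one meaningful statistic per scale. The variables and their values are hypothetical, and the `PERMITTED` table is a common rule of thumb rather than a definitive list.

```python
from statistics import mean, median, mode

# Which summary statistics are meaningful at each level of measurement.
# Each level also allows everything permitted at the levels before it.
PERMITTED = {
    "nominal": ["mode"],
    "ordinal": ["mode", "median"],
    "interval": ["mode", "median", "mean"],
    "ratio": ["mode", "median", "mean", "ratio of two values"],
}

# Hypothetical survey variables, one per scale
eye_colour = ["brown", "blue", "brown", "green"]   # nominal: labels only
satisfaction = [1, 3, 3, 4, 5]                     # ordinal: ranked 1-5 rating
temperature_c = [18.0, 21.0, 24.0]                 # interval: no true zero
monthly_spend = [20.0, 40.0, 60.0]                 # ratio: true zero exists

most_common_colour = mode(eye_colour)              # most frequent label
middle_rating = median(satisfaction)               # middle rank
average_temperature = mean(temperature_c)          # differences are meaningful
spend_ratio = monthly_spend[1] / monthly_spend[0]  # "twice as much" is meaningful

print(most_common_colour, middle_rating, average_temperature, spend_ratio)
```

Note that a ratio such as "twice as much" is only meaningful for the ratio-scale variable, because it is the only one with a true zero.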

Benefits of survey research

When survey research is used for the right purposes and implemented properly, marketers can gain useful, trustworthy data that they can use to improve the ROI of the organization.

Other benefits of survey research are:

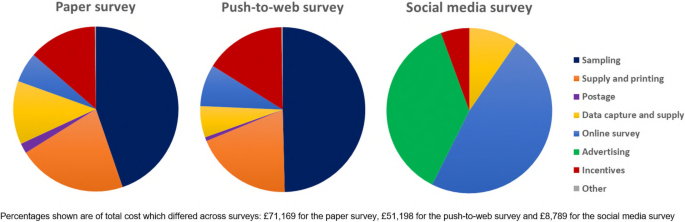

- Minimum investment: Mobile surveys and online surveys have minimal finance invested per respondent. Even with the gifts and other incentives provided to the people who participate in the study, online surveys are extremely economical compared to paper-based surveys.

- Versatile sources for response collection: You can conduct surveys via various mediums like online and mobile surveys. You can further classify them into qualitative mediums like focus groups and interviews, and quantitative mediums like customer-centric surveys. Due to the offline survey response collection option, researchers can conduct surveys in remote areas with limited internet connectivity. This can make data collection and analysis more convenient and extensive.

- Reliable for respondents: Surveys are extremely secure as the respondent details and responses are kept safeguarded. This anonymity makes respondents answer the survey questions candidly and with absolute honesty. An organization seeking to receive explicit responses for its survey research must mention that it will be confidential.

Survey research design

Researchers implement a survey research design in cases where there is a limited cost involved and there is a need to access details easily. This method is often used by small and large organizations to understand and analyze new trends, market demands, and opinions. Collecting information through tactfully designed survey research can be much more effective and productive than a casually conducted survey.

There are five stages of survey research design:

- Decide an aim of the research: There can be multiple reasons for a researcher to conduct a survey, but they need to decide a purpose for the research. This is the primary stage of survey research as it can mold the entire path of a survey, impacting its results.

- Filter the sample from target population: “Who to target?” is an essential question that a researcher should answer and keep in mind while conducting research. The precision of the results is driven by who the members of a sample are and how useful their opinions are. The quality of the respondents in a sample matters more to the results than their quantity. If a researcher seeks to understand whether a product feature will work well with their target market, they can conduct survey research with a group of market experts for that product or technology.

- Zero-in on a survey method: Many qualitative and quantitative research methods can be discussed and decided. Focus groups, online interviews, surveys, polls, questionnaires, etc. can be carried out with a pre-decided sample of individuals.

- Design the questionnaire: What will the content of the survey be? A researcher is required to answer this question to be able to design it effectively. What will the content of the cover letter be? Or what are the survey questions of this questionnaire? Understand the target market thoroughly to create a questionnaire that targets a sample to gain insights about a survey research topic.

- Send out surveys and analyze results: Once the researcher decides on which questions to include in a study, they can send it to the selected sample. Answers obtained from this survey can be analyzed to make product-related or marketing-related decisions.

Survey examples: 10 tips to design the perfect research survey

Picking the right survey design can be the key to gaining the information you need to make crucial decisions for all your research. It is essential to choose the right topic, choose the right question types, and pick a corresponding design. If this is your first time creating a survey, it can seem like an intimidating task. But with QuestionPro, each step of the process is made simple and easy.

Below are tips to design the perfect research survey:

- Set your SMART goals: Before conducting any market research or creating a particular plan, set your SMART goals. What is it that you want to achieve with the survey? How will you measure it promptly, and what are the results you are expecting?

- Choose the right questions: Designing a survey can be a tricky task. Asking the right questions may help you get the answers you are looking for and ease the task of analyzing. So, always choose those specific questions – relevant to your research.

- Begin your survey with a generalized question: Preferably, start your survey with a general question to understand whether the respondent uses the product or not. That also provides an excellent base and intro for your survey.

- Enhance your survey: Choose the best, most relevant, 15-20 questions. Frame each question as a different question type based on the kind of answer you would like to gather from each. Create a survey using different types of questions such as multiple-choice, rating scale, open-ended, etc. Look at more survey examples and four measurement scales every researcher should remember.

- Prepare yes/no questions: You may also want to use yes/no questions to separate people or branch them into groups of those who “have purchased” and those who “have not yet purchased” your products or services. Once you separate them, you can ask them different questions.

- Test all electronic devices: It becomes effortless to distribute your surveys if respondents can answer them on different electronic devices like mobiles, tablets, etc. Once you have created your survey, it’s time to TEST. You can also make any corrections if needed at this stage.

- Distribute your survey: Once your survey is ready, it is time to distribute it to the right audience. You can give out handouts or share the survey via email, social media, and other industry-related offline/online communities.

- Collect and analyze responses: After distributing your survey, it is time to gather all responses. Make sure you store your results in a particular document or an Excel sheet with all the necessary categories mentioned so that you don’t lose your data. Remember, this is the most crucial stage. Segregate your responses based on demographics, psychographics, and behavior. This is because, as a researcher, you must know where your responses are coming from. It will help you to analyze, predict decisions, and help write the summary report.

- Prepare your summary report: Now is the time to share your analysis. At this stage, you should mention all the responses gathered from a survey in a fixed format. Also, the reader/customer must get clarity about your goal, which you were trying to gain from the study. Questions such as – whether the product or service has been used/preferred or not. Do respondents prefer some other product to another? Any recommendations?
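
The "collect and analyze responses" tip above can be sketched in a few lines of Python. This is a minimal illustration, not a QuestionPro feature: the field names (`age_group`, `purchased`, `rating`) and the response rows are made up, and a real project would load exported survey data instead.

```python
from collections import defaultdict

# Hypothetical responses (made-up data): one dict per respondent.
responses = [
    {"age_group": "18-24", "purchased": "yes", "rating": 4},
    {"age_group": "18-24", "purchased": "no", "rating": 2},
    {"age_group": "25-34", "purchased": "yes", "rating": 5},
    {"age_group": "25-34", "purchased": "yes", "rating": 3},
]


def segment(rows, key):
    """Group response rows by the value of one field, e.g. a demographic."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    return dict(groups)


def summarize(rows):
    """Return respondent count, share who purchased, and mean rating."""
    n = len(rows)
    purchased = sum(1 for r in rows if r["purchased"] == "yes")
    mean_rating = sum(r["rating"] for r in rows) / n
    return {"n": n, "purchased_share": purchased / n, "mean_rating": mean_rating}


# One summary per demographic segment, ready to feed a summary report.
summary = {
    group: summarize(rows)
    for group, rows in segment(responses, "age_group").items()
}
```

The same `segment` helper works for any other field, such as a psychographic or behavioral category, as long as every response row carries that field.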

Having a tool that helps you carry out all the necessary steps to carry out this type of study is a vital part of any project. At QuestionPro, we have helped more than 10,000 clients around the world to carry out data collection in a simple and effective way, in addition to offering a wide range of solutions to take advantage of this data in the best possible way.

From dashboards, advanced analysis tools, automation, and dedicated functions, in QuestionPro, you will find everything you need to execute your research projects effectively. Uncover insights that matter the most!

- Research article

- Open access

- Published: 12 September 2013

A survey study of the association between mobile phone use and daytime sleepiness in California high school students

- Nila Nathan 1 &

- Jamie Zeitzer 2 , 3

BMC Public Health volume 13, Article number: 840 (2013)

Abstract

Background

Mobile phone use is near ubiquitous in teenagers. Paralleling the rise in mobile phone use is an equally rapid decline in the amount of time teenagers are spending asleep at night. Prior research indicates that there might be a relationship between daytime sleepiness and nocturnal mobile phone use in teenagers in a variety of countries. As such, the aim of this study was to see if there was an association between mobile phone use, especially at night, and sleepiness in a group of U.S. teenagers.

Methods

A questionnaire containing an Epworth Sleepiness Scale (ESS) modified for use in teens and questions about qualitative and quantitative use of the mobile phone was completed by students attending Mountain View High School in Mountain View, California (n = 211).

Results

Multivariate regression analysis indicated that ESS score was significantly associated with being female, feeling a need to be accessible by mobile phone all of the time, and a past attempt to reduce mobile phone use. The number of daily texts or phone calls was not directly associated with ESS. Those individuals who felt they needed to be accessible and those who had attempted to reduce mobile phone use were also ones who stayed up later to use the mobile phone and were awakened more often at night by the mobile phone.

Conclusions

The relationship between daytime sleepiness and mobile phone use was not directly related to the volume of texting but may be related to the temporal pattern of mobile phone use.

Mobile phone use has drastically increased in recent years, fueled by new technology such as ‘smart phones’. In 2012, it was estimated that 78% of all Americans aged 12–17 years had a mobile phone and 37% had a smart phone [ 1 ]. Despite the growing number of adolescent mobile phone users, there has been limited examination of the behavioral effects of mobile phone usage on adolescents and their sleep and subsequent daytime sleepiness.

Mobile phone use in teens likely compounds the biological causes of sleep loss. With the onset of puberty, there are changes in innate circadian rhythms that lead to a delay in the habitual timing of sleep onset [ 2 ]. As school start times are not correspondingly later, this leads to a reduction in the time available for sleep and is consequently thought to contribute to the endemic sleepiness of teenagers. The use of mobile phones may compound this sleepiness by extending the waking hours further into the night. Munezawa and colleagues [ 3 ] analyzed 94,777 responses to questionnaires sent out to junior and senior high school students in Japan and found that the use of mobile phones for calling or sending text messages after they went to bed was associated with sleep disturbances such as short sleep duration, subjective poor sleep quality, excessive daytime sleepiness and insomnia symptoms. Soderqvist et al., in their study of Swedish adolescents aged 15–19 years, found that regular users of mobile phones reported health symptoms such as tiredness, stress, headache, anxiety, concentration difficulties and sleep disturbances more often than less frequent users [ 4 ]. Van den Bulck studied 1,656 school children in Belgium and found that prevalent mobile phone use in adolescents was related to increased levels of daytime tiredness [ 5 ]. Punamaki et al. studied Finnish teens and found that intensive mobile phone use led to more health complaints and musculoskeletal symptoms in girls both directly and through deteriorated sleep, as well as increased daytime tiredness [ 6 ]. In one prospective study of young Swedish adults, aged 20–24, those who were high volume mobile phone users and male, but not female, were at greater risk for developing sleep disturbances a year later [ 7 ]. The association of mobile phone utilization and either sleep or sleepiness in teens in the United States has only been described by a telephone poll. In the 2011 National Sleep Foundation poll, 20% of those under the age of 30 reported that they were awakened by a phone call, text or e-mail message at least a few nights a week [ 8 ]. This type of nocturnal awakening was self-reported more frequently by those who also reported that they drove while drowsy.

As there has been limited examination of how mobile phone usage affects the behavior of young children and adolescents, none of which have addressed the effects of such usage on daytime sleepiness in U.S. teens, it seemed worthwhile to attempt a cross-sectional study of sleep and mobile phone utilization in a U.S. high school. As such, it was the purpose of this study to examine the association of mobile phone utilization and sleepiness patterns in a sample of U.S. teens. We hypothesized that an increased number of calls would be associated with increased sleepiness.

We designed a survey that contained questions concerning sleepiness and mobile phone use (see Additional file 1 ). Sleepiness was assessed using a version of the Epworth Sleepiness Scale (ESS) [ 9 ] modified for use in adolescents [ 10 ]. The modified ESS consists of eight questions that assessed the likelihood of dozing in the following circumstances: sitting and reading, watching TV, sitting inactive in a public place, as a passenger in a car for an hour without a break, lying down to rest in the afternoon when circumstances permit, sitting and talking to someone, sitting quietly after a lunch, in a car while stopped for a few minutes in traffic. Responses were limited to a Likert-like scale using the following: no chance of dozing (0), slight chance of dozing (1), moderate chance of dozing (2), or high chance of dozing (3). This yielded total ESS scores ranging from 0 to 24, with scores over 10 being associated with clinically-significant sleepiness [ 9 ]. We also included a set of modified questions, originally designed by Thomée et al., that assess the subjective impact of mobile phone use [ 7 ]. These included the number of mobile calls made or received each day, the number of texts made or received each day, being awakened by the mobile phone at night (never/occasionally/monthly/weekly/daily), staying up late to use the mobile phone (never/occasionally/monthly/weekly/daily), expectations of accessibility by mobile phone (never/occasionally/daily/all day/around-the-clock), stressfulness of accessibility (not at all/a little bit/rather/very), use mobile phone too much (yes/no), and tried and failed to reduce mobile phone use (yes/no).
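
The scoring rule described above (eight items, each scored 0 to 3, summed to a total between 0 and 24) can be written out as a short Python sketch. The function names are illustrative and are not taken from the study's materials; the cutoff is kept as a parameter because it is only a screening threshold, with the default following the "scores over 10" wording above.

```python
ESS_ITEM_COUNT = 8             # eight dozing situations on the modified ESS
VALID_RESPONSES = (0, 1, 2, 3)  # no / slight / moderate / high chance of dozing


def ess_total(item_scores):
    """Sum the eight item scores into a total ESS score (0 to 24)."""
    if len(item_scores) != ESS_ITEM_COUNT:
        raise ValueError("expected exactly 8 item scores")
    for score in item_scores:
        if score not in VALID_RESPONSES:
            raise ValueError("each item score must be 0, 1, 2 or 3")
    return sum(item_scores)


def is_clinically_sleepy(total, cutoff=10):
    """Flag totals strictly above the cutoff (default: scores over 10)."""
    return total > cutoff
```

For example, a respondent answering `[1, 2, 0, 3, 1, 0, 2, 1]` has a total of 10, which this sketch does not flag because the default rule requires a score over 10.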

An email invitation to complete an electronic form of the survey (http://www.surveymonkey.com) was sent to the entire student body of the Mountain View High School, located in Mountain View, California, USA, on April 5, 2012. Out of the approximately 2,000 students attending the school, a total of 211 responded by the collection date of April 23, 2012. Data analyses are described below (OriginPro 8, OriginLab, Northampton MA). Summary data are provided as mean ± SD for age and ESS and as median (range) for the number of texts and/or phone calls made or received per day as these were non-normally distributed (p’s < 0.001, Kolmogorov–Smirnov test). To examine the relationship between sleepiness and predictor variables, stepwise multivariate regression analyses were performed. Collinearity in the data was examined by calculating the Variance Inflation Factor (VIF). Post hoc t-tests, ANOVA, Mann–Whitney U tests, and Spearman correlations were used, as appropriate, to examine specific components of the model and their relationship to sleepiness. χ² tests were used to examine categorical variables. The study was done within the regulations codified by the Declaration of Helsinki and approved by the administration of Mountain View High School.
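
As an aside on the collinearity check, the VIF for predictor j is 1 / (1 − R²ⱼ), where R²ⱼ comes from regressing predictor j on the remaining predictors. The Python sketch below covers only the simplest case of two predictors, where R²ⱼ equals the squared Pearson correlation between them. It is an illustration under that assumption and not the procedure run in OriginPro for this study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors in a two-predictor model: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)
```

A VIF of 1 means the two predictors are uncorrelated, and the value grows without bound as the correlation approaches ±1. With more than two predictors, each R²ⱼ has to come from a full auxiliary regression.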

Sixty-eight males and 143 females responded to the survey. Most (96.7%) respondents owned a mobile phone. The remainder of the analyses presented herein is on the 202 respondents (64 male, 138 female) who indicated that they owned a mobile phone (Tables 1 and 2). The youngest participant in the survey was 14 years old and the oldest was 19 years old (16 ± 1.2 years), representative of the age range of this school. The median number of mobile phone calls made or received per day was 2 and ranged from 0 to 60. The median number of text messages sent or received per day was 22.5 and ranged from 0 to 700. While about half of the respondents (53%) had never been awakened by the mobile phone at night, 35% were occasionally awakened, 5.9% were awakened a few times a month, 5.0% were awakened a few times a week, and 1.0% were awakened almost every night. About one-quarter (27%) of respondents had never stayed awake later than a target bedtime in order to use the mobile phone; however, 36% occasionally stayed awake, 19% stayed awake a few times a month, 8.5% stayed awake a few times a week, and 10% stayed awake almost every night in order to use the mobile phone. With regard to feeling an expectation of accessibility, 7.5% reported that they needed to be accessible around the clock, 26% reported that they needed to be accessible all day, 52% reported they needed to be accessible daily, 13% reported that they only needed to be accessible now and then, and 1.0% reported they never needed to be accessible. Nearly half (49%) of the survey participants viewed accessibility via mobile phones to be not at all stressful, 45% found it to be a little bit stressful, 4.5% found it rather stressful, and 1.0% found it very stressful. More than one-third (36%) reported that they or someone close to them thought that they used the mobile phone too much. Few (17%) had tried but were unable to reduce their mobile phone use.

Subjective sleepiness on the ESS ranged from 0 to 18 (6.8 ± 3.5, with higher numbers indicating greater sleepiness), with 25% of participants having ESS scores in the excessively sleepy range (ESS ≥ 10). We examined predictors of subjective sleepiness (ESS score) using stepwise multivariate regression analysis with the following independent variables: age, sex, frequency of nocturnal awakening by the phone, frequency of staying up too late to use the phone, self-perceived accessibility by phone, stressfulness of this accessibility, attempted and failed to reduce phone use, excessive phone use determined by others, number of texts per day, and number of phone calls per day. Only subjects with complete data sets were used in our modeling (n = 191 of 202). Our final model (Table 3) indicated that sex, frequency of accessibility, and a failed attempt to reduce mobile phone use were all predictive of daytime sleepiness (F(6,194) = 4.35, p < 0.001, r² = 0.12). These model variables lacked collinearity (VIF’s < 3.9), indicating that they were not likely to represent the same source of variance. Despite the lack of significance in the multivariate model, given previously published data [ 4 – 6 ], we independently tested if there was a relationship between the number of estimated texts and sleepiness, but found no such correlation (r = 0.13, p = 0.07; Spearman correlation). In examining the final model, it appears that those who felt that they needed to be accessible “around the clock” (ESS = 9.2 ± 2.9) were sleepier than all others (ESS = 6.7 ± 3.4) (p < 0.01, post hoc t-test). The relationship between sleepiness and reporting having tried, but failed, to reduce mobile phone use was such that those who had tried to reduce phone use were more sleepy (ESS = 8.3 ± 3.6) than those who had not (ESS = 6.5 ± 3.4) (p < 0.01, post hoc t-test). While more females had tried to reduce their mobile phone use, sex did not modify the relationship between the attempt to reduce mobile phone use and sleepiness (p = 0.32, two-way ANOVA), thus retaining attempt and failure to reduce mobile phone use as an independent modifier of ESS scores.
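
For readers unfamiliar with the Spearman correlation used above for texts per day versus ESS, the Python sketch below shows how the coefficient is computed: both variables are converted to ranks (tied values share the mean of their ranks) and the Pearson correlation is taken on those ranks. The helper names are illustrative, no study data are reproduced here, and the p-value calculation is omitted.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x = sum(rx) / n
    mean_y = sum(ry) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sxx = sum((a - mean_x) ** 2 for a in rx)
    syy = sum((b - mean_y) ** 2 for b in ry)
    return sxy / sqrt(sxx * syy)
```

Because only ranks are used, the coefficient is insensitive to extreme values such as the 700 texts per day at the top of the reported range, which is a common reason to prefer it for non-normally distributed counts.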

In an attempt to better understand the relationship between ESS and accessibility, we parsed the population into those who felt that they needed to be accessible around the clock (7.4%) and those who did not (92.6%). The most accessible group, as compared to the less accessible group, had a numerically though not statistically significantly higher texting rate (50 vs. 20 per day; p = 0.07, Mann–Whitney U test), but were awakened more at night by the phone (27% vs. 4%, weekly or daily; p < 0.05, χ² test), and stayed awake later than desired more often (40% vs. 17%, weekly or daily; p < 0.05, χ² test). We did a similar analysis, parsing the population into those who had attempted but failed to reduce their use of their mobile phone (17%) with those who had not (83%). Those who had attempted to reduce their mobile phone use had a higher texting rate (60 vs. 20 per day; p < 0.01, Mann–Whitney U test) and stayed awake later than desired more often (53% vs. 11%, weekly or daily; p < 0.01, χ² test), but were not awakened more at night by the phone (12% vs. 5%, weekly or daily; p = 0.26, χ² test).
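
The group comparisons above rest on χ² tests of 2×2 tables (group membership by "weekly or daily" versus less often). A minimal Python sketch of the Pearson χ² statistic for such a table follows. The cell counts in the usage note are hypothetical and are not the study's counts, no continuity correction is applied, and the article does not say which variant of the test was used.

```python
# Critical value of the chi-square distribution, 1 degree of freedom, alpha = 0.05.
CRITICAL_005_DF1 = 3.841


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (uncorrected) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column must have a non-zero total")
    return n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)


def is_significant(statistic, critical=CRITICAL_005_DF1):
    """True when the statistic exceeds the critical value (p < 0.05 at 1 df)."""
    return statistic > critical
```

With hypothetical counts of 12 and 8 in one group against 6 and 14 in the other, `chi_square_2x2(12, 8, 6, 14)` is about 3.64, just under the 3.841 critical value, so that difference would not reach p < 0.05.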

Given previous research on the topic, our a priori hypothesis was that teenagers who use their phone more often at night are likely to be more prone to daytime sleepiness. We did not, however, observe this simple relationship in this sample of U.S. teens. We did find that being female, perceived need to be accessible by mobile phone, and having tried but failed to reduce mobile phone usage were all predictive of daytime sleepiness, with the latter two likely being moderated by increased use of the phone at night. Previous work has shown that being female was associated with higher ESS scores [ 11 ]. It may be that adolescent females score higher on the ESS without being objectively sleepier, though this remains to be tested. Our analyses revealed that staying up late to use the mobile phone and being awakened by the mobile phone may be involved in the relationship between increased ESS scores and perceived need to be accessible by mobile phone and a past attempt to decrease mobile phone use. These analyses reveal some of the complexity of assessing daytime sleepiness, which is undoubtedly multifactorial. If the sheer number of text messages being sent per day is directly associated with daytime sleepiness, it is likely with a small effect size. Our work, of course, is not without its limitations. Data were collected from a sample of convenience at a single, public high school in California. Only 10% of students responded to the survey and this may have introduced some response bias to the data. The data collected were cross-sectional; a longitudinal collection would have enabled a more precise analysis of moderators and mediators as well as a more accurate interpretation of causal relationships. Also, we did not objectively record the number of texts, so there may be a certain degree of bias or uncertainty associated with self-report of number of texts and calls. Several variables that might influence sleepiness both directly and indirectly through mobile phone use (e.g., socioeconomic status, comorbid sleep disorders, medication use) were not assessed. Future studies on the impact of mobile phone use on sleep and sleepiness should take into account the multifactorial and temporal nature of these behaviors.

The endemic sleepiness found in adolescents is multifactorial with both intrinsic and extrinsic factors. Mobile phone use has been assumed to be one source of increased daytime sleepiness in adolescents. Our analyses revealed that use or perceived need of use of the mobile phone during normal sleeping hours may contribute to daytime sleepiness. As overall number of text messages did not significantly contribute to daytime sleepiness, it is possible that a temporal rearrangement of phone use (e.g., limiting phone use during prescribed sleeping hours) might help in alleviating some degree of daytime sleepiness.

Abbreviations

ESS: Epworth sleepiness scale

SD: Standard deviation

ANOVA: Analysis of variance

References

1. Madden M, Lenhart A, Duggan M, Cortesi S, Gasser U: Teens and Technology. 2013, http://www.pewinternet.org/Reports/2013/Teens-and-Tech/Summary-of-Findings.aspx

2. Crowley SJ, Acebo C, Carskadon MA: Sleep, circadian rhythms, and delayed phase in adolescence. Sleep Med. 2007, 8: 602-612. 10.1016/j.sleep.2006.12.002.

3. Munezawa T, Kaneita Y, Osaki Y, Kanda H, Minowa M, Suzuki K, Higuchi S, Mori J, Yamamoto R, Ohida T: The association between use of mobile phones after lights out and sleep disturbances among Japanese adolescents: a nationwide cross-sectional survey. Sleep. 2011, 34: 1013-1020.

4. Soderqvist F, Carlberg M, Hardell L: Use of wireless telephones and self-reported health symptoms: a population-based study among Swedish adolescents aged 15–19 years. Environ Health. 2008, 7: 18. 10.1186/1476-069X-7-18.

5. Van den Bulck J: Adolescent use of mobile phones for calling and for sending text messages after lights out: results from a prospective cohort study with a one-year follow-up. Sleep. 2007, 30: 1220-1223.

6. Punamaki RL, Wallenius M, Nygård CH, Saarni L, Rimpelä A: Use of information and communication technology (ICT) and perceived health in adolescence: the role of sleeping habits and waking-time tiredness. J Adolescence. 2007, 30: 95-103.

7. Thomée S, Harenstam A, Hagberg M: Mobile phone use and stress, sleep disturbances and symptoms of depression among young adults – a prospective cohort study. BMC Publ Health. 2011, 11: 66. 10.1186/1471-2458-11-66.

8. The National Sleep Foundation: Sleep in America poll. 2011, http://www.sleepfoundation.org/article/sleep-america-polls/2011-communications-technology-use-and-sleep

9. Johns MW: A new method of measuring daytime sleepiness: the Epworth sleepiness scale. Sleep. 1991, 14: 540-545.

10. Melendres MC, Lutz JM, Rubin ED, Marcus CL: Daytime sleepiness and hyperactivity in children with suspected sleep-disordered breathing. Pediatrics. 2004, 114: 768-775. 10.1542/peds.2004-0730.

11. Gibson ES, Powles ACP, Thabane L, O’Brien S, Molnar DS, Trajanovic N, Ogilvie R, Shapiro C, Yan M, Chilcott-Tanser L: “Sleepiness” is serious in adolescence: two surveys of 3235 Canadian students. BMC Publ Health. 2006, 6: 116. 10.1186/1471-2458-6-116.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2458/13/840/prepub

Acknowledgements

The authors wish to thank the students of Mountain View High School (Mountain View, California) for participating in this study.

Author information

Authors and affiliations.

Mountain View High School, Mountain View, 3535 Truman Avenue, Mountain View, CA, 94040, USA

Nila Nathan

Department of Psychiatry and Behavioral Sciences, Stanford University, 3801 Miranda Avenue (151Y), Stanford CA 94305, Palo Alto, CA, 94304, USA

Jamie Zeitzer

Mental Illness Research, Education, and Clinical Center, VA Palo Alto Health Care System, 3801 Miranda Avenue (151Y), Palo Alto, CA, 94304, USA

Corresponding author

Correspondence to Jamie Zeitzer.

Additional information

Competing interests.

The authors declare that they have no competing interests.

Authors’ contributions

JMZ and NN designed the study, analyzed the data, and drafted the manuscript. Both authors have read and approved the final manuscript.

Electronic supplementary material

Additional file 1: Questionnaire. (DOC 34 kb)

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article.

Nathan, N., Zeitzer, J. A survey study of the association between mobile phone use and daytime sleepiness in California high school students. BMC Public Health 13, 840 (2013). https://doi.org/10.1186/1471-2458-13-840

Received: 10 November 2012

Accepted: 10 September 2013

Published: 12 September 2013

DOI: https://doi.org/10.1186/1471-2458-13-840

- Sleep deprivation

- Mobile phone

BMC Public Health

ISSN: 1471-2458

How to Write a Survey Paper: Brief Overview

Every student wishes there was a shortcut to learning about a subject. Writing a survey paper can be an effective tool for synthesizing and consolidating information on a particular topic to gain mastery over it.

There are several techniques and best practices for writing a successful survey paper. Our team is ready to guide you through the writing process and teach you how to write a paper that will benefit your academic and professional career.

What is a Survey Paper

A survey paper is a type of academic writing that aims to give readers a comprehensive understanding of the current state of research on a particular topic. By synthesizing and analyzing already existing research, a survey paper provides good shortcuts highlighting meaningful achievements and recent advances in the field and shows the gaps where further research might be needed.

The survey paper format includes an introduction that defines the scope of the research domain, followed by a thorough literature review section that summarizes and critiques existing research while showcasing areas for further research. A good survey paper must also provide an overview of commonly used methodologies, approaches, key terms, and recent trends in the field and a clear summary that synthesizes the main findings presented.

Our essay writing service team not only provides the best survey paper example but can also write a custom academic paper based on your specific requirements and needs.

How to Write a Survey Paper: Important Steps

If you have your head in your hands, wondering how to write a survey paper, you must be new here. Luckily, our team of experts has got you covered! Below you will find the steps that will guide you to the best approach to writing a successful survey paper. No more worries about how to research a topic. Let's dive in!

The first step is to choose a topic that is both interesting to you and relevant to a large audience. If you are struggling with topic selection, favor topics with enough published literature to support a comprehensive survey paper.

Once you have selected your topic, define the scope of your survey paper and the specific research questions that will guide your literature review. This will help you establish boundaries and ensure that your paper is focused and well-structured.

Next, start collecting existing research on your topic through various academic databases and literature reviews. Make sure you are up to date with recent discoveries and advances. Before selecting any work for the survey, make sure the database is credible. Determine what sources are considered trustworthy and reputable within the specific domain.

Continue survey paper writing by selecting the most relevant and significant research pieces to include in your literature overview. Make sure to methodically analyze each source and critically evaluate its relevance, rigor, validity, and contribution to the field.

At this point, you have already undertaken half of the job. Maybe even more since collecting and analyzing the literature is often the most challenging part of writing a survey paper. Now it's time to organize and structure your paper. Follow the well-established outline, give a thorough review, and compose compelling body paragraphs. Don't forget to include detailed methodology and highlight key findings and revolutionary ideas.

Finish off your writing with a powerful conclusion that not only summarizes the key arguments but also indicates future research directions.

Survey Paper Outline

The following is a general outline of a survey paper.

- Introduction - with background information on the topic and research questions

- Literature Overview - including relevant research studies and their analysis

- Methodologies and Approaches - detailing the methods used to collect and analyze data in the literature overview

- Findings and Trends - summarizing the key findings and trends from the literature review

- Challenges and Gaps - highlighting the limitations of studies reviewed

- Future Research Direction - exploring future research opportunities and recommendations

- Conclusion - a summary of the research conducted and its significance, along with suggestions for further work in this area.

- References - a list of all the sources cited in the paper, including academic articles and reports.

You can always customize this outline to fit your paper's specific requirements, but none of the components can be eliminated.

Further, we can explore survey paper example formats to get a better understanding of what a well-written survey paper looks like. Our custom essay writer can assist in crafting a plagiarism-free essay tailored to meet your unique needs.

Survey Paper Format

Having a basic understanding of an outline for a survey paper is just the beginning. To excel in survey paper writing, it's important to become proficient in academic essay formatting techniques. Have the following as a rule of thumb: make sure each section relates to the others and that the flow of your paper is logical and readable.

Title - You need to come up with a clear and concise title that reflects the main objective of your research question.

Survey paper example title: 'The analysis of recommender systems in E-commerce.'

Abstract - Here, you should state the purpose of your research and summarize key findings in a brief paragraph. The abstract is a shortcut to the paper, so make sure it's informative.

Introduction - This section is a crucial element of an academic essay and should be intriguing and provide background information on the topic, feeding the readers' curiosity.

Literature with benefits and limitations - This section dives into the existing literature on the research question, including relevant studies and their analyses. When reviewing the literature, it is important to highlight both benefits and limitations of existing studies to identify gaps for future research.

Result analysis - In this section, you should present and analyze the results of your survey paper. Make sure to include statistical data, graphs, and charts to support your conclusions.

Conclusion - Just like in any other thesis writing, here you need to sum up the key findings of your survey paper. Explain how it helped advance the research topic, which limitations need to be addressed, and what the implications are for future research.

Future Research Direction - You can either give this a separate section or include it in a conclusion, but you can never overlook the importance of a future research direction. Distinctly point out areas of limitations and suggest possible avenues for future research.

References - Finally, be sure to include a list of all the sources/references you've used in your research. Without a list of references, your work will lose all its credibility and can no longer be beneficial to other researchers.

Writing a Good Survey Paper: Helpful Tips

After mastering the basics of how to write a good survey paper, there are a few tips to keep in mind. They will help you advance your writing and ensure your survey paper stands out among others.

Select Only Relevant Literature

When conducting research, one can easily get carried away and start hoarding all available literature, which may not necessarily be relevant to your research question. Make sure to stay within the scope of your topic. Clearly articulate your research question, and then select only literature that directly addresses the research question. A few initial readings might not reveal the relevance, so you need a systematic review and filter of the literature that is directly related to the research question.

Use Various Sources and Be Up-to-Date

Our team suggests only using up-to-date material that was published within the last 5 years. Additional sources may be used if they contribute significantly to the research question, but it is important to prioritize current literature.

Use more than 10 research papers. Though narrowing your pool of references to only relevant literature is important, it's also crucial that you have a sufficient number of sources.
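The two rules of thumb above (prefer sources from the last 5 years, and use more than 10 of them) can be sketched as a small filter. This is only an illustrative sketch: the `Source` record, the `key_contribution` flag for older but significant work, and the `select_sources` function are hypothetical names introduced here, not part of any reference manager.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """Hypothetical record for one candidate reference."""
    title: str
    year: int
    key_contribution: bool = False  # assumption: marks older work that still matters


def select_sources(sources, current_year, max_age=5, min_count=10):
    """Keep recent sources plus older ones flagged as significant.

    Returns the kept sources and whether there are more than `min_count` of them.
    """
    cutoff = current_year - max_age
    kept = [s for s in sources if s.year >= cutoff or s.key_contribution]
    has_enough = len(kept) > min_count
    return kept, has_enough


if __name__ == "__main__":
    candidates = [
        Source("Recent study", 2023),
        Source("Outdated study", 2010),
        Source("Foundational study", 2012, key_contribution=True),
    ]
    kept, has_enough = select_sources(candidates, current_year=2024)
    for s in kept:
        print(s.year, s.title)
    print("Enough sources:", has_enough)
```

The thresholds are parameters because the right values depend on the field: fast-moving areas may need a shorter window than 5 years, and slower ones a longer one.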

Rely on Reputable Sources

Writing a survey paper is a challenge. Don't forget that it is quality over quantity. Be sure to choose reputable sources that have been peer-reviewed and are recognized within your field of research. Having a large number of various research papers does not mean that your survey paper is of high quality.

Construct a Concise Research Question

Having a short and to-the-point research question not only helps the audience understand the direction of your paper but also helps you stay focused on a clear goal. With a clear research question, you will have an easier time selecting the relevant literature, avoiding unnecessary information, and maintaining the structure of your paper.

Use an Appropriate Format

The scholarly world appreciates when researchers follow a standard format when presenting their survey papers. Therefore, it is important to use a suitable and consistent format that adheres to the guidelines provided by your academic institution or field.

Our survey paper template offers a clear structure that can aid in organizing your thoughts and sources, as well as ensuring that you cover all the necessary components of a survey paper.

Don't forget to use appropriate headings, font, spacing, margins, and referencing style. If there is a strict word limit, be sure to adhere to it and use concise wording.

Use Logical Sequence

A survey paper is different from a regular research paper. Every element of the paper needs to relate to the research question and tie into the overall objective, so arrange the sections in an order where each one builds on the one before it.

Writing research papers takes a lot of effort and attention to detail. You will have to revise, edit, and proofread your work several times. If you are struggling with any aspect of the writing process, just say, 'Write my research paper for me,' and our team of tireless writers will be happy to assist you.

Starting Point: Survey Paper Example Topics

Learning how to write a survey paper is important, but it is only one aspect of the process.

Now you need a powerful research question. To help get you started, we have compiled a list of survey paper example topics that may inspire you.

- Survey of Evolution and Challenges of Electronic Search Engines

- A Comprehensive Survey Paper on Machine Learning Algorithms

- Survey of Leaf Image Analysis for Plant Species Recognition

- Advances in Natural Language Processing for Sentiment Analysis

- Emerging Trends in Cybersecurity Threat Detection

- A Comprehensive Survey of Techniques in Big Data Analytics in Healthcare

- A Survey of Advances in Digital Art and Virtual Reality

- A Systematic Review of the Impact of Social Media Marketing Strategies on Consumer Behavior

- A Survey of AI Systems in Artistic Expression

- Exploring New Research Methods and Ethical Considerations in Anthropology

- Exploring Data-driven Approaches for Performance Analysis and Decision Making in Sports

- A Survey of Benefits of Optimizing Performance through Diet and Supplementation

- A Critical Review of Existing Research on The Impact of Climate Change on Biodiversity Conservation Strategies

- Investigating the Future of Blockchain Technology for Secure Data Sharing

- A Critical Review of the Literature on Mental Health and Innovation in the Workplace

Final Thoughts

Next time you are asked to write a survey paper, remember that it is not just a matter of gathering and summarizing existing research; it requires a deep understanding of the subject matter as well as critical analysis skills. Creative thinking and innovative approaches also play a key role in producing high-quality survey papers.

Our expert writers can help you navigate the complex process of writing a survey paper, from topic selection to data analysis and interpretation.

Are you looking for advice on how to create an engaging and informative survey paper? This frequently asked questions (FAQ) section answers common questions that researchers come across when writing a survey paper. Let's delve into it!

What Is a Survey Paper in a Ph.D.?

What Is the Difference Between a Survey Paper and a Literature Review Paper?

Survey Research – Types, Methods, Examples

Survey Research

Definition:

Survey Research is a quantitative research method that involves collecting standardized data from a sample of individuals or groups through the use of structured questionnaires or interviews. The data collected is then analyzed statistically to identify patterns and relationships between variables, and to draw conclusions about the population being studied.

Survey research can be used to answer a variety of questions, including:

- What are people’s opinions about a certain topic?

- What are people’s experiences with a certain product or service?

- What are people’s beliefs about a certain issue?

Survey Research Methods

The main survey research methods are as follows:

- Telephone surveys: A survey research method where questions are administered to respondents over the phone, often used in market research or political polling.

- Face-to-face surveys: A survey research method where questions are administered to respondents in person, often used in social or health research.

- Mail surveys: A survey research method where questionnaires are sent to respondents through mail, often used in customer satisfaction or opinion surveys.

- Online surveys: A survey research method where questions are administered to respondents through online platforms, often used in market research or customer feedback.

- Email surveys: A survey research method where questionnaires are sent to respondents through email, often used in customer satisfaction or opinion surveys.

- Mixed-mode surveys: A survey research method that combines two or more survey modes, often used to increase response rates or reach diverse populations.

- Computer-assisted surveys: A survey research method that uses computer technology to administer or collect survey data, often used in large-scale surveys or data collection.

- Interactive voice response surveys: A survey research method where respondents answer questions through a touch-tone telephone system, often used in automated customer satisfaction or opinion surveys.

- Mobile surveys: A survey research method where questions are administered to respondents through mobile devices, often used in market research or customer feedback.

- Group-administered surveys: A survey research method where questions are administered to a group of respondents simultaneously, often used in education or training evaluation.

- Web-intercept surveys: A survey research method where questions are administered to website visitors, often used in website or user experience research.

- In-app surveys: A survey research method where questions are administered to users of a mobile application, often used in mobile app or user experience research.

- Social media surveys: A survey research method where questions are administered to respondents through social media platforms, often used in social media or brand awareness research.

- SMS surveys: A survey research method where questions are administered to respondents through text messaging, often used in customer feedback or opinion surveys.

- IVR surveys: A survey research method where questions are administered to respondents through an interactive voice response system, often used in automated customer feedback or opinion surveys.

- Mixed-method surveys: A survey research method that combines both qualitative and quantitative data collection methods, often used in exploratory or mixed-method research.

- Drop-off surveys: A survey research method where respondents are provided with a survey questionnaire and asked to return it at a later time or through a designated drop-off location.

- Intercept surveys: A survey research method where respondents are approached in public places and asked to participate in a survey, often used in market research or customer feedback.

- Hybrid surveys: A survey research method that combines two or more survey modes, data sources, or research methods, often used in complex or multi-dimensional research questions.

Types of Survey Research

There are several types of survey research that can be used to collect data from a sample of individuals or groups. The following are the main types of survey research:

- Cross-sectional survey: A type of survey research that gathers data from a sample of individuals at a specific point in time, providing a snapshot of the population being studied.

- Longitudinal survey: A type of survey research that gathers data from the same sample of individuals over an extended period of time, allowing researchers to track changes or trends in the population being studied.

- Panel survey: A type of longitudinal survey research that tracks the same sample of individuals over time, typically collecting data at multiple points in time.

- Epidemiological survey: A type of survey research that studies the distribution and determinants of health and disease in a population, often used to identify risk factors and inform public health interventions.

- Observational survey: A type of survey research that collects data through direct observation of individuals or groups, often used in behavioral or social research.

- Correlational survey: A type of survey research that measures the degree of association or relationship between two or more variables, often used to identify patterns or trends in data.

- Experimental survey: A type of survey research that involves manipulating one or more variables to observe the effect on an outcome, often used to test causal hypotheses.

- Descriptive survey: A type of survey research that describes the characteristics or attributes of a population or phenomenon, often used in exploratory research or to summarize existing data.

- Diagnostic survey: A type of survey research that assesses the current state or condition of an individual or system, often used in health or organizational research.

- Explanatory survey: A type of survey research that seeks to explain or understand the causes or mechanisms behind a phenomenon, often used in social or psychological research.

- Process evaluation survey: A type of survey research that measures the implementation and outcomes of a program or intervention, often used in program evaluation or quality improvement.

- Impact evaluation survey: A type of survey research that assesses the effectiveness or impact of a program or intervention, often used to inform policy or decision-making.

- Customer satisfaction survey: A type of survey research that measures the satisfaction or dissatisfaction of customers with a product, service, or experience, often used in marketing or customer service research.

- Market research survey: A type of survey research that collects data on consumer preferences, behaviors, or attitudes, often used in market research or product development.

- Public opinion survey: A type of survey research that measures the attitudes, beliefs, or opinions of a population on a specific issue or topic, often used in political or social research.

- Behavioral survey: A type of survey research that measures actual behavior or actions of individuals, often used in health or social research.

- Attitude survey: A type of survey research that measures the attitudes, beliefs, or opinions of individuals, often used in social or psychological research.

- Opinion poll: A type of survey research that measures the opinions or preferences of a population on a specific issue or topic, often used in political or media research.

- Ad hoc survey: A type of survey research that is conducted for a specific, one-off purpose, often used in exploratory research or to answer a particular research question.
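As one illustration of the correlational survey type in the list above, the degree of association between two survey variables is often summarized with Pearson's correlation coefficient. The sketch below computes it from scratch with the standard library. The function name `pearson_r` and the sample responses are made up for illustration and do not come from any real survey.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of products of deviations (numerator of r).
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the sums of squared deviations (denominator of r).
    dev_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    dev_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if dev_x == 0 or dev_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (dev_x * dev_y)


if __name__ == "__main__":
    # Hypothetical responses: weekly hours of product use and a 1-5 satisfaction rating.
    hours_of_use = [1, 3, 4, 6, 8]
    satisfaction = [2, 3, 3, 4, 5]
    print(round(pearson_r(hours_of_use, satisfaction), 3))
```

A value near +1 or -1 indicates a strong linear association and a value near 0 a weak one. As with any correlational design, the coefficient describes association only and does not by itself establish cause and effect.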

Types Based on Methodology

Based on methodology, surveys are divided into two types:

- Quantitative survey research

- Qualitative survey research

Quantitative survey research is a method of collecting numerical data from a sample of participants through the use of standardized surveys or questionnaires. The purpose of quantitative survey research is to gather empirical evidence that can be analyzed statistically to draw conclusions about a particular population or phenomenon.
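A minimal sketch of the kind of statistical analysis described above: estimating the share of a population that holds some view from the number of respondents who chose that answer, with an approximate 95% confidence interval. It assumes a simple random sample and uses the normal approximation, which may not be appropriate for complex sample designs, small samples, or proportions near 0 or 1. The function name `proportion_ci` is hypothetical.

```python
from math import sqrt


def proportion_ci(successes, n, z=1.96):
    """Sample proportion with a normal-approximation confidence interval.

    `z=1.96` corresponds to an approximate 95% confidence level.
    Returns (estimate, lower bound, upper bound).
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    standard_error = sqrt(p * (1 - p) / n)
    margin = z * standard_error
    # Clip the interval to the valid range for a proportion.
    return p, max(0.0, p - margin), min(1.0, p + margin)


if __name__ == "__main__":
    # Hypothetical result: 50 of 100 respondents agree with a statement.
    estimate, lower, upper = proportion_ci(50, 100)
    print(f"{estimate:.3f} ({lower:.3f} to {upper:.3f})")
```

The width of the interval shrinks as the sample grows, which is one reason sample size is planned before a quantitative survey is fielded.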