Specialty Grand Challenge Article: Grand Challenges in Image Processing

- Université Paris-Saclay, CNRS, CentraleSupélec, Laboratoire des signaux et Systèmes, Gif-sur-Yvette, France

Introduction

The field of image processing has been the subject of intensive research and development activities for several decades. This broad area encompasses topics such as image/video processing, image/video analysis, image/video communications, image/video sensing, modeling and representation, computational imaging, electronic imaging, information forensics and security, 3D imaging, medical imaging, and machine learning applied to these respective topics. Hereafter, we will consider both image and video content (i.e., sequences of images), and more generally all forms of visual information.

Rapid technological advances, especially in terms of computing power and network transmission bandwidth, have resulted in many remarkable and successful applications. Nowadays, images are ubiquitous in our daily life. Entertainment is one class of applications that has greatly benefited, including digital TV (e.g., broadcast, cable, and satellite TV), Internet video streaming, digital cinema, and video games. Beyond entertainment, imaging technologies are central in many other applications, including digital photography, video conferencing, video monitoring and surveillance, satellite imaging, but also in more distant domains such as healthcare and medicine, distance learning, digital archiving, cultural heritage or the automotive industry.

In this paper, we highlight a few research grand challenges for future imaging and video systems, in order to achieve breakthroughs to meet the growing expectations of end users. Given the vastness of the field, this list is by no means exhaustive.

A Brief Historical Perspective

We first briefly discuss a few key milestones in the field of image processing. Key inventions in the development of photography and motion pictures can be traced to the 19th century. The earliest surviving photograph of a real-world scene was made by Nicéphore Niépce in 1827 ( Hirsch, 1999 ). The Lumière brothers made the first cinematographic film in 1895, with a public screening the same year ( Lumiere, 1996 ). After decades of remarkable developments, the second half of the 20th century saw the emergence of new technologies launching the digital revolution. While the first prototype digital camera using a Charge-Coupled Device (CCD) was demonstrated in 1975, the first commercial consumer digital cameras started appearing in the early 1990s. These digital cameras quickly surpassed film cameras, and the digital revolution in the field of imaging was underway. As a key consequence, the digital process enabled computational imaging, in other words the use of sophisticated processing algorithms to produce high quality images.

In 1992, the Joint Photographic Experts Group (JPEG) released the JPEG standard for still image coding ( Wallace, 1992 ). In parallel, in 1993, the Moving Picture Experts Group (MPEG) published its first standard for coding of moving pictures and associated audio, MPEG-1 ( Le Gall, 1991 ), and a few years later MPEG-2 ( Haskell et al., 1996 ). By guaranteeing interoperability, these standards have been essential in many successful applications and services, for both the consumer and business markets. In particular, it is remarkable that, almost 30 years later, JPEG remains the dominant format for still images and photographs.

In the late 2000s and early 2010s, we could observe a paradigm shift with the appearance of smartphones integrating a camera. Thanks to advances in computational photography, these new smartphones soon became capable of rivaling the quality of consumer digital cameras at the time. Moreover, these smartphones were also capable of acquiring video sequences. Almost concurrently, another key evolution was the development of high bandwidth networks. In particular, the launch of 4G wireless services circa 2010 enabled users to quickly and efficiently exchange multimedia content. Since then, most of us carry a camera anywhere and anytime, allowing us to capture images and videos at will and to seamlessly exchange them with our contacts.

As a direct consequence of the above developments, we are currently observing a boom in the usage of multimedia content. It is estimated that today 3.2 billion images are shared each day on social media platforms, and 300 hours of video are uploaded every minute on YouTube (see footnote 1). In a 2019 report, Cisco estimated that video content represented 75% of all Internet traffic in 2017, and this share is forecast to grow to 82% in 2022 ( Cisco, 2019 ). While Internet video streaming and Over-The-Top (OTT) media services account for a significant bulk of this traffic, other applications are also expected to see significant increases, including video surveillance and Virtual Reality (VR)/Augmented Reality (AR).

Hyper-Realistic and Immersive Imaging

A major direction and key driver of research and development activities over the years has been the objective of delivering ever-improving image quality and user experience.

For instance, in the realm of video, we have observed constantly increasing spatial and temporal resolutions, with the emergence nowadays of Ultra High Definition (UHD). Another aim has been to provide a sense of depth in the scene. For this purpose, various 3D video representations have been explored, including stereoscopic 3D and multi-view ( Dufaux et al., 2013 ).

In this context, the ultimate goal is to be able to faithfully represent the physical world and to deliver an immersive and perceptually hyper-realistic experience. For this purpose, we discuss hereafter some emerging innovations. These developments are also very relevant in VR and AR applications ( Slater, 2014 ). Finally, while this paper focuses only on the visual information processing aspects, it is obvious that emerging display technologies ( Masia et al., 2013 ) and audio also play key roles in many application scenarios.

Light Fields, Point Clouds, Volumetric Imaging

In order to wholly represent a scene, the light information coming from all the directions has to be represented. For this purpose, the 7D plenoptic function is a key concept ( Adelson and Bergen, 1991 ), although it is unmanageable in practice.

By introducing additional constraints, the light field representation collects radiance from rays in all directions. Therefore, it contains much richer information than traditional 2D imaging, which captures a 2D projection of the light in the scene by integrating over the angular domain. For instance, this allows post-capture processing such as refocusing and changing the viewpoint. However, it also entails several technical challenges, in terms of acquisition and calibration, as well as computational image processing steps including depth estimation, super-resolution, compression and image synthesis ( Ihrke et al., 2016 ; Wu et al., 2017 ). The trade-off between spatial and angular resolutions is a fundamental issue. With a significant fraction of the earlier work focusing on static light fields, it is also expected that dynamic light field videos will stimulate more interest in the future. In particular, dense multi-camera arrays are becoming more tractable. Finally, the development of efficient light field compression and streaming techniques is a key enabler in many applications ( Conti et al., 2020 ).
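To make post-capture refocusing concrete, the following minimal sketch applies the classical shift-and-add idea to a simplified light field with one angular and one spatial dimension. The function name refocus, the slope parameter and the nearest-pixel shifting are illustrative assumptions, not a method taken from the cited works.

```python
def refocus(views, slope):
    """Shift-and-add refocusing on a 1D-angular, 1D-spatial light field slice.

    views: list of equally long rows, one per viewpoint (hypothetical layout).
    slope: disparity in pixels per viewpoint step of the plane to bring into focus.
    """
    n_views = len(views)
    width = len(views[0])
    center = (n_views - 1) / 2.0
    out = [0.0] * width
    for u, view in enumerate(views):
        # Each view is shifted in proportion to its distance from the central view.
        shift = int(round(slope * (u - center)))
        for x in range(width):
            src = x + shift
            if 0 <= src < width:
                out[x] += view[src]
    # Samples falling outside the view contribute zero but still count in the average.
    return [value / n_views for value in out]
```

A scene point whose disparity matches slope is summed coherently and appears sharp, whereas with a mismatched slope its energy is spread over several pixels, which mimics defocus blur.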

Another promising direction is to consider a point cloud representation. A point cloud is a set of points in the 3D space represented by their spatial coordinates and additional attributes, including color pixel values, normals, or reflectance. Point clouds are often very large, easily ranging in the millions of points, and are typically sparse. One major distinguishing feature of point clouds is that, unlike images, they do not have a regular structure, calling for new algorithms. To remove the noise often present in acquired data, while preserving the intrinsic characteristics, effective 3D point cloud filtering approaches are needed ( Han et al., 2017 ). It is also important to develop efficient techniques for Point Cloud Compression (PCC). For this purpose, MPEG is developing two standards: Geometry-based PCC (G-PCC) and Video-based PCC (V-PCC) ( Graziosi et al., 2020 ). G-PCC considers the point cloud in its native form and compresses it using 3D data structures such as octrees. Conversely, V-PCC projects the point cloud onto 2D planes and then applies existing video coding schemes. More recently, deep learning-based approaches for PCC have been shown to be effective ( Guarda et al., 2020 ). Another challenge is to develop generic and robust solutions able to handle potentially widely varying characteristics of point clouds, e.g. in terms of size and non-uniform density. Efficient solutions for dynamic point clouds are also needed. Finally, while many techniques focus on the geometric information or the attributes independently, it is paramount to process them jointly.
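The octree idea behind geometry-based coding can be illustrated with a small sketch that turns integer point coordinates into one 8-bit occupancy code per occupied node, visited breadth-first. This is only a toy under stated assumptions: the function names, the child ordering and the absence of entropy coding are choices made here for illustration and do not reproduce the G-PCC bitstream.

```python
def _child_origin(origin, half, idx):
    """Origin of child idx (bit 2 = x, bit 1 = y, bit 0 = z) of a node."""
    ox, oy, oz = origin
    return (ox + half * ((idx >> 2) & 1),
            oy + half * ((idx >> 1) & 1),
            oz + half * (idx & 1))


def octree_encode(points, depth):
    """Breadth-first occupancy codes for integer points in [0, 2**depth)^3."""
    codes = []
    nodes = [((0, 0, 0), sorted(set(points)))]
    for level in range(depth):
        half = 1 << (depth - level - 1)
        next_nodes = []
        for origin, pts in nodes:
            ox, oy, oz = origin
            children = {}
            for (x, y, z) in pts:
                idx = ((int(x - ox >= half) << 2)
                       | (int(y - oy >= half) << 1)
                       | int(z - oz >= half))
                children.setdefault(idx, []).append((x, y, z))
            # One bit per child octant: set if the octant contains a point.
            codes.append(sum(1 << idx for idx in children))
            for idx in sorted(children):
                next_nodes.append((_child_origin(origin, half, idx), children[idx]))
        nodes = next_nodes
    return codes


def octree_decode(codes, depth):
    """Rebuild the set of occupied voxels from the occupancy codes."""
    nodes = [(0, 0, 0)]
    stream = iter(codes)
    for level in range(depth):
        half = 1 << (depth - level - 1)
        next_nodes = []
        for origin in nodes:
            code = next(stream)
            for idx in range(8):
                if code & (1 << idx):
                    next_nodes.append(_child_origin(origin, half, idx))
        nodes = next_nodes
    return nodes
```

Only occupied nodes are subdivided, so the number of codes grows with the number of occupied voxels rather than with the volume, which is why such a structure suits sparse point clouds.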

High Dynamic Range and Wide Color Gamut

The human visual system is able to perceive, using various adaptation mechanisms, a broad range of luminous intensities, from very bright to very dark, as experienced every day in the real world. Nonetheless, current imaging technologies are still limited in terms of capturing or rendering such a wide range of conditions. High Dynamic Range (HDR) imaging aims at addressing this issue. Wide Color Gamut (WCG) is also often associated with HDR in order to provide a wider colorimetry.

HDR has reached some level of maturity in the context of photography. However, extending HDR to video sequences raises scientific challenges in order to provide high quality and cost-effective solutions, impacting the whole image processing pipeline, including content acquisition, tone reproduction, color management, coding, and display ( Dufaux et al., 2016 ; Chalmers and Debattista, 2017 ). Backward compatibility with legacy content and traditional systems is another issue. Despite recent progress, the potential of HDR has not been fully exploited yet.
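As an illustration of the tone reproduction step, the sketch below compresses scene luminance into the displayable range [0, 1) with a simple global operator of the form L / (1 + L), often attributed to Reinhard and colleagues. The operator choice, the function name and the key value of 0.18 are assumptions made for this example and are not prescribed by the text.

```python
import math


def reinhard_tone_map(luminance, key=0.18):
    """Global tone mapping of a flat list of positive luminance values.

    key is the (assumed) target mid-grey level after exposure scaling.
    """
    eps = 1e-6  # avoids log(0) for black pixels
    log_avg = math.exp(sum(math.log(eps + v) for v in luminance) / len(luminance))
    # Scale the scene so that its log-average luminance maps to the key value.
    scaled = [key * v / log_avg for v in luminance]
    # Compress: small values stay nearly linear, large values saturate toward 1.
    return [s / (1.0 + s) for s in scaled]
```

Because a single curve is applied to every pixel, the mapping preserves luminance ordering. Applying it independently to each frame of a video can however cause flicker when the log-average changes over time, which is one reason HDR video is harder than HDR photography.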

Coding and Transmission

Three decades of standardization activities have continuously improved the hybrid video coding scheme based on the principles of transform coding and predictive coding. The Versatile Video Coding (VVC) standard was finalized in 2020 ( Bross et al., 2021 ), achieving approximately 50% bit rate reduction for the same subjective quality when compared to its predecessor, High Efficiency Video Coding (HEVC). While substantially outperforming VVC in the short term may be difficult, one encouraging direction is to rely on improved perceptual models to further optimize compression in terms of visual quality. Another direction, which has already shown promising results, is to apply deep learning-based approaches ( Ding et al., 2021 ). Here, one key issue is the ability to generalize these deep models to a wide diversity of video content. The second key issue is the implementation complexity, both in terms of computation and memory requirements, which is a significant obstacle to widespread deployment. Besides, the emergence of new video formats targeting immersive communications is also calling for new coding schemes ( Wien et al., 2019 ).
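The hybrid principle of combining predictive coding with transform coding can be sketched on a single 1D block: the encoder subtracts a prediction, transforms the residual with an orthonormal DCT and quantizes the coefficients, and the decoder inverts these steps. The block size, the uniform quantizer and all function names are simplifying assumptions; real codecs such as HEVC or VVC use 2D integer transforms, rate-distortion optimized decisions and entropy coding.

```python
import math


def dct(block):
    """Orthonormal DCT-II of a 1D block."""
    n = len(block)
    out = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * sum(block[i] * math.cos(math.pi * (i + 0.5) * k / n)
                               for i in range(n)))
    return out


def idct(coeffs):
    """Inverse of dct (orthonormal DCT-III)."""
    n = len(coeffs)
    out = []
    for i in range(n):
        total = 0.0
        for k in range(n):
            scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
            total += scale * coeffs[k] * math.cos(math.pi * (i + 0.5) * k / n)
        out.append(total)
    return out


def encode_block(current, prediction, step):
    """Predict, transform the residual, then quantize with a uniform step."""
    residual = [c - p for c, p in zip(current, prediction)]
    return [int(round(c / step)) for c in dct(residual)]


def decode_block(levels, prediction, step):
    """Dequantize, inverse transform, and add the prediction back."""
    residual = idct([level * step for level in levels])
    return [p + r for p, r in zip(prediction, residual)]
```

The better the prediction, the smaller the residual and the more quantized levels are zero, which is where the bit rate saving comes from. The quantization step controls the trade-off between rate and distortion.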

Considering that in many application scenarios, videos are processed by intelligent analytic algorithms rather than viewed by users, another interesting track is the development of video coding for machines ( Duan et al., 2020 ). In this context, the compression is optimized taking into account the performance of video analysis tasks.

The push toward hyper-realistic and immersive visual communications most often entails an increasing raw data rate. Despite improved compression schemes, more transmission bandwidth is needed. Moreover, some emerging applications, such as VR/AR, autonomous driving, and Industry 4.0, bring a strong requirement for low latency transmission, with implications on both the image processing pipeline and the transmission channel. In this context, the emergence of 5G wireless networks will positively contribute to the deployment of new multimedia applications, and the development of future wireless communication technologies points toward promising advances ( Da Costa and Yang, 2020 ).

Human Perception and Visual Quality Assessment

It is important to develop effective models of human perception. On the one hand, such models can contribute to the development of perceptually inspired algorithms. On the other hand, perceptual quality assessment methods are needed in order to optimize and validate new imaging solutions.

The notion of Quality of Experience (QoE) relates to the degree of delight or annoyance of the user of an application or service ( Le Callet et al., 2012 ). QoE is strongly linked to subjective and objective quality assessment methods. Many years of research have resulted in the successful development of perceptual visual quality metrics based on models of human perception ( Lin and Kuo, 2011 ; Bovik, 2013 ). More recently, deep learning-based approaches have also been successfully applied to this problem ( Bosse et al., 2017 ). While these perceptual quality metrics have achieved good performance, several significant challenges remain. First, when applied to video sequences, most current perceptual metrics are computed on individual images, neglecting temporal modeling. Second, whereas color is a key attribute, there are currently no widely accepted perceptual quality metrics explicitly considering color. Finally, new modalities, such as 360° videos, light fields, point clouds, and HDR, require new approaches.
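The limitation of frame-by-frame evaluation can be seen with a small sketch. PSNR is used here only as a simple stand-in for an image quality metric (it is not one of the perceptual metrics cited above), and the function names and the flat-list frame layout are assumptions made for the example.

```python
import math


def psnr(reference, distorted, peak=255.0):
    """Peak signal-to-noise ratio in dB between two flat lists of pixel values."""
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return float("inf")
    return 10.0 * math.log10(peak * peak / mse)


def mean_frame_psnr(ref_frames, dist_frames, peak=255.0):
    """Video score obtained by averaging per-frame PSNR, ignoring time."""
    scores = [psnr(r, d, peak) for r, d in zip(ref_frames, dist_frames)]
    return sum(scores) / len(scores)


# A constant brightness offset and a flickering offset of the same magnitude
# receive the same score, although flicker is typically far more annoying.
reference = [[100.0] * 16 for _ in range(4)]
steady = [[102.0] * 16 for _ in range(4)]
flicker = [[100.0 + (2.0 if t % 2 == 0 else -2.0)] * 16 for t in range(4)]
steady_score = mean_frame_psnr(reference, steady)
flicker_score = mean_frame_psnr(reference, flicker)
```

Any metric that pools independent per-frame scores shares this blind spot, whatever the per-frame model is, which is why explicit temporal modeling is needed.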

Another closely related topic is image esthetic assessment ( Deng et al., 2017 ). The esthetic quality of an image is affected by numerous factors, such as lighting, color, contrast, and composition. Predicting it is useful in different application scenarios such as image retrieval and ranking, recommendation, and photo enhancement. While earlier attempts have used handcrafted features, most recent techniques to predict esthetic quality are data driven and based on deep learning approaches, leveraging the availability of large annotated datasets for training ( Murray et al., 2012 ). One key challenge is the inherently subjective nature of esthetic assessment, resulting in ambiguity in the ground-truth labels. Another important issue is to explain the behavior of deep esthetic prediction models.

Analysis, Interpretation and Understanding

Another major research direction has been the objective to efficiently analyze, interpret and understand visual data. This goal is challenging, due to the high diversity and complexity of visual data. This has led to many research activities, involving both low-level and high-level analysis, addressing topics such as image classification and segmentation, optical flow, image indexing and retrieval, object detection and tracking, and scene interpretation and understanding. Hereafter, we discuss some trends and challenges.

Keypoints Detection and Local Descriptors

Local image matching has been the cornerstone of many analysis tasks. It involves the detection of keypoints, i.e. salient visual points that can be robustly and repeatedly detected, and the computation of descriptors, i.e. compact signatures locally describing the visual features at each keypoint. Pairwise matching between the features can then be computed to reveal local correspondences. In this context, several frameworks have been proposed, including Scale Invariant Feature Transform (SIFT) ( Lowe, 2004 ) and Speeded Up Robust Features (SURF) ( Bay et al., 2008 ), and later binary variants including Binary Robust Independent Elementary Features (BRIEF) ( Calonder et al., 2010 ), Oriented FAST and Rotated BRIEF (ORB) ( Rublee et al., 2011 ) and Binary Robust Invariant Scalable Keypoints (BRISK) ( Leutenegger et al., 2011 ). Although these approaches exhibit scale and rotation invariance, they are less suited to deal with large 3D distortions such as perspective deformations, out-of-plane rotations, and significant viewpoint changes. Besides, they tend to fail under significantly varying and challenging illumination conditions.
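For binary descriptors such as those listed above, pairwise matching is commonly done with the Hamming distance. The sketch below shows a brute-force nearest-neighbor matcher, assuming each descriptor is stored as a Python integer whose bits are the descriptor bits. The function names and the max_distance threshold are hypothetical, and descriptor extraction itself is not shown.

```python
def hamming(a, b):
    """Number of differing bits between two descriptors stored as integers."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, max_distance=64):
    """Brute-force nearest-neighbor matching of binary descriptors.

    Returns (query_index, train_index, distance) for every query descriptor
    whose closest train descriptor lies within max_distance bits.
    """
    matches = []
    for qi, q in enumerate(query):
        best = min(range(len(train)), key=lambda ti: hamming(q, train[ti]))
        distance = hamming(q, train[best])
        if distance <= max_distance:
            matches.append((qi, best, distance))
    return matches
```

The XOR-and-count operation is very cheap, which is the main practical appeal of binary descriptors over floating-point ones such as SIFT. A real pipeline would typically add a ratio or cross-check test to reject ambiguous matches.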

These traditional approaches based on handcrafted features have been successfully applied to problems such as image and video retrieval, object detection, visual Simultaneous Localization And Mapping (SLAM), and visual odometry. Besides, the emergence of new imaging modalities as introduced above can also be beneficial for image analysis tasks, including light fields ( Galdi et al., 2019 ), point clouds ( Guo et al., 2020 ), and HDR ( Rana et al., 2018 ). However, when applied to high-dimensional visual data for semantic analysis and understanding, these approaches based on handcrafted features have been supplanted in recent years by approaches based on deep learning.

Deep Learning-Based Methods

Data-driven deep learning-based approaches ( LeCun et al., 2015 ), and in particular the Convolutional Neural Network (CNN) architecture, now represent the state of the art in terms of performance for complex pattern recognition tasks in scene analysis and understanding. By combining multiple processing layers, deep models are able to learn data representations with different levels of abstraction.

Supervised learning is the most common form of deep learning. It requires a large and fully labeled training dataset, whose creation is typically a time-consuming and expensive process that must be repeated whenever a new application scenario is tackled. Moreover, in some specialized domains, e.g. medical data, it can be very difficult to obtain annotations. To alleviate this major burden, methods such as transfer learning and weakly supervised learning have been proposed.

In another direction, deep models have been shown to be vulnerable to adversarial attacks ( Akhtar and Mian, 2018 ). Those attacks consist of introducing subtle perturbations to the input, such that the model predicts an incorrect output. For instance, in the case of images, imperceptible pixel differences are able to fool deep learning models. Such adversarial attacks are definitely an important obstacle to the successful deployment of deep learning, especially in applications where safety and security are critical. While some early solutions have been proposed, a significant challenge is to develop effective defense mechanisms against those attacks.
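The mechanism can be illustrated on a toy model. The sketch below applies the fast gradient sign method, a well-known attack that is not named in the text, to a logistic regression classifier standing in for a deep network. The model, the function names and the value of epsilon are assumptions chosen for illustration.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(weights, bias, x, label, epsilon):
    """Fast gradient sign perturbation of input x for true label 0 or 1.

    For the cross-entropy loss of a logistic model, the gradient with
    respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    # Move every input component by epsilon in the direction that increases the loss.
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]
```

Each component changes by at most epsilon, yet the effects add up across all dimensions. With image-sized inputs, this is why a perturbation that is imperceptible per pixel can still change the decision.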

Finally, another challenge is to enable low complexity and efficient implementations. This is especially important for mobile or embedded applications. For this purpose, further interactions between signal processing and machine learning can potentially bring additional benefits. For instance, one direction is to compress deep neural networks in order to enable their more efficient handling. Moreover, by combining traditional processing techniques with deep learning models, it is possible to develop low complexity solutions while preserving high performance.
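One simple way to compress a network, among many, is to store its weights with fewer bits. The following sketch applies uniform 8-bit quantization to a flat list of weights. It is a minimal illustration under stated assumptions (the function names and the per-tensor min/max range are choices made here), not a description of any specific published scheme.

```python
def quantize_weights(weights, bits=8):
    """Uniformly quantize floats to integer codes in [0, 2**bits - 1]."""
    lo, hi = min(weights), max(weights)
    levels = (1 << bits) - 1
    # A constant tensor has zero range; any positive scale then works.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [int(round((w - lo) / scale)) for w in weights]
    return codes, scale, lo


def dequantize_weights(codes, scale, lo):
    """Map integer codes back to approximate float weights."""
    return [lo + c * scale for c in codes]
```

With 8 bits, storage drops by a factor of four relative to 32-bit floats, at the cost of a reconstruction error of at most half a quantization step per weight. Whether the resulting accuracy loss is acceptable depends on the model and the task.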

Explainability in Deep Learning

While data-driven deep learning models often achieve impressive performance on many visual analysis tasks, their black-box nature often makes it inherently very difficult to understand how they reach a predicted output and how it relates to particular characteristics of the input data. This is a major impediment in many decision-critical application scenarios. Moreover, it is important not only to have confidence in the proposed solution, but also to gain further insights from it. Based on these considerations, some deep learning systems aim at promoting explainability ( Adadi and Berrada, 2018 ; Xie et al., 2020 ). This can be achieved by exhibiting traits related to confidence, trust, safety, and ethics.

However, explainable deep learning is still in its early phase. More developments are needed, in particular to develop a systematic theory of model explanation. Important aspects include the need to understand and quantify risk, to comprehend how the model makes predictions for transparency and trustworthiness, and to quantify the uncertainty in the model prediction. This challenge is key in order to deploy and use deep learning-based solutions in an accountable way, for instance in application domains such as healthcare or autonomous driving.
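As one concrete example of quantifying uncertainty in a prediction, the sketch below computes the entropy of the class probabilities averaged over several models (or several stochastic forward passes). The ensemble setting and the function name are assumptions made for illustration, and this is only one of several possible uncertainty measures.

```python
import math


def predictive_entropy(member_probs):
    """Entropy (in nats) of the class distribution averaged over ensemble members.

    member_probs: one list of class probabilities per ensemble member.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean = [sum(member[c] for member in member_probs) / n_members
            for c in range(n_classes)]
    # Terms with zero probability contribute nothing to the entropy.
    return -sum(p * math.log(p) for p in mean if p > 0.0)
```

The value is zero when all members agree on a single class with full confidence, and it reaches the logarithm of the number of classes when the averaged prediction is uniform, for instance when the members disagree completely.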

Self-Supervised Learning

Self-supervised learning refers to methods that learn general visual features from large-scale unlabeled data, without the need for manual annotations. Self-supervised learning is therefore very appealing, as it allows exploiting the vast amount of unlabeled images and videos available. Moreover, it is widely believed that it is closer to how humans actually learn. One common approach is to use the data to provide the supervision, leveraging its structure. More generally, a pretext task can be defined, e.g. image inpainting, colorizing grayscale images, or predicting future frames in videos, by withholding some parts of the data and by training the neural network to predict them ( Jing and Tian, 2020 ). By learning an objective function corresponding to the pretext task, the network is forced to learn relevant visual features in order to solve the problem. Self-supervised learning has also been successfully applied to autonomous vehicle perception. More specifically, the complementarity between analytical and learning methods can be exploited to address various autonomous driving perception tasks, without the prerequisite of an annotated data set ( Chiaroni et al., 2021 ).
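The way a pretext task derives supervision from the data itself can be sketched for image inpainting: a square region is withheld from an unlabeled image, and the hidden pixels become the training target. The function name, the list-of-rows image layout and the fill value are illustrative assumptions, and the network and training loop are deliberately left out.

```python
def make_inpainting_pair(image, top, left, size, fill=0):
    """Build one (input, target) training pair from an unlabeled image.

    image: list of rows of pixel values; the caller must keep the square
    region [top, top + size) x [left, left + size) inside the image.
    """
    masked = [row[:] for row in image]  # copy so the original stays intact
    target = []
    for y in range(top, top + size):
        target.append(image[y][left:left + size])
        for x in range(left, left + size):
            masked[y][x] = fill
    return masked, target
```

No manual annotation is involved: every image yields as many training pairs as there are mask positions, and a network trained to predict target from masked must capture the structure of the surrounding content.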

While good performance has already been obtained using self-supervised learning, further work is still needed. A few promising directions are outlined hereafter. Combining self-supervised learning with other learning methods is a first interesting path. For instance, semi-supervised learning ( Van Engelen and Hoos, 2020 ) and few-shot learning ( Fei-Fei et al., 2006 ) methods have been proposed for scenarios where limited labeled data is available. The performance of these methods can potentially be boosted by incorporating self-supervised pre-training. The pretext task can also serve to add regularization. Another interesting trend in self-supervised learning is to train neural networks with synthetic data. The challenge here is to bridge the domain gap between the synthetic and real data. Finally, another compelling direction is to exploit data from different modalities. A simple example is to consider both the video and audio signals in a video sequence. In another example in the context of autonomous driving, vehicles are typically equipped with multiple sensors, including cameras, LIght Detection And Ranging (LIDAR), Global Positioning System (GPS), and Inertial Measurement Units (IMU). In such cases, it is easy to acquire large unlabeled multimodal datasets, where the different modalities can be effectively exploited in self-supervised learning methods.

Reproducible Research and Large Public Datasets

The reproducible research initiative is another way to further ensure high-quality research for the benefit of our community ( Vandewalle et al., 2009 ). Reproducibility, referring to the ability of someone else working independently to accurately reproduce the results of an experiment, is a key principle of the scientific method. In the context of image and video processing, it is usually not sufficient to provide a detailed description of the proposed algorithm. Most often, it is essential to also provide access to the code and data. This is even more imperative in the case of deep learning-based models.

In parallel, the availability of large public datasets is also highly desirable in order to support research activities. This is especially critical for new emerging modalities or specific application scenarios, where it is difficult to get access to relevant data. Moreover, with the emergence of deep learning, large datasets, along with labels, are often needed for training, which can be another burden.

Conclusion and Perspectives

The field of image processing is very broad and rich, with many successful applications in both the consumer and business markets. However, many technical challenges remain in order to further push the limits of imaging technologies. Two main trends are, on the one hand, to keep improving the quality and realism of image and video content and, on the other hand, to be able to effectively interpret and understand this vast and complex amount of visual data. However, the list is certainly not exhaustive and there are many other interesting problems, e.g. related to computational imaging, information security and forensics, or medical imaging. Key innovations will be found at the crossroads of image processing, optics, psychophysics, communication, computer vision, artificial intelligence, and computer graphics. Multi-disciplinary collaborations are therefore critical moving forward, involving actors from both academia and industry, in order to drive these breakthroughs.

The “Image Processing” section of Frontiers in Signal Processing aims at giving the research community a forum to exchange, discuss and improve new ideas, with the goal of contributing to the further advancement of the field of image processing and bringing exciting innovations in the foreseeable future.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

1 https://www.brandwatch.com/blog/amazing-social-media-statistics-and-facts/ (accessed on Feb. 23, 2021).

Adadi, A., and Berrada, M. (2018). Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE access 6, 52138–52160. doi:10.1109/access.2018.2870052

Adelson, E. H., and Bergen, J. R. (1991). “The plenoptic function and the elements of early vision,” in Computational models of visual processing. Cambridge, MA: MIT Press, 3–20.

Akhtar, N., and Mian, A. (2018). Threat of adversarial attacks on deep learning in computer vision: a survey. IEEE Access 6, 14410–14430. doi:10.1109/access.2018.2807385

Bay, H., Ess, A., Tuytelaars, T., and Van Gool, L. (2008). Speeded-up robust features (SURF). Computer Vis. image understanding 110 (3), 346–359. doi:10.1016/j.cviu.2007.09.014

Bosse, S., Maniry, D., Müller, K. R., Wiegand, T., and Samek, W. (2017). Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans. Image Process. 27 (1), 206–219. doi:10.1109/TIP.2017.2760518

Bovik, A. C. (2013). Automatic prediction of perceptual image and video quality. Proc. IEEE 101 (9), 2008–2024. doi:10.1109/JPROC.2013.2257632

Bross, B., Chen, J., Ohm, J. R., Sullivan, G. J., and Wang, Y. K. (2021). Developments in international video coding standardization after AVC, with an overview of Versatile Video Coding (VVC). Proc. IEEE. doi:10.1109/JPROC.2020.3043399

Calonder, M., Lepetit, V., Strecha, C., and Fua, P. (2010). BRIEF: binary robust independent elementary features. In K. Daniilidis, P. Maragos, and N. Paragios (eds) European conference on computer vision. Berlin, Heidelberg: Springer, 778–792. doi:10.1007/978-3-642-15561-1_56

Chalmers, A., and Debattista, K. (2017). HDR video past, present and future: a perspective. Signal. Processing: Image Commun. 54, 49–55. doi:10.1016/j.image.2017.02.003

Chiaroni, F., Rahal, M.-C., Hueber, N., and Dufaux, F. (2021). Self-supervised learning for autonomous vehicles perception: a conciliation between analytical and learning methods. IEEE Signal. Process. Mag. 38 (1), 31–41. doi:10.1109/msp.2020.2977269

Cisco (2019). Cisco visual networking index: forecast and trends, 2017–2022 (white paper). Indianapolis, Indiana: Cisco Press.

Conti, C., Soares, L. D., and Nunes, P. (2020). Dense light field coding: a survey. IEEE Access 8, 49244–49284. doi:10.1109/ACCESS.2020.2977767

Da Costa, D. B., and Yang, H.-C. (2020). Grand challenges in wireless communications. Front. Commun. Networks 1 (1), 1–5. doi:10.3389/frcmn.2020.00001

Deng, Y., Loy, C. C., and Tang, X. (2017). Image aesthetic assessment: an experimental survey. IEEE Signal. Process. Mag. 34 (4), 80–106. doi:10.1109/msp.2017.2696576

Ding, D., Ma, Z., Chen, D., Chen, Q., Liu, Z., and Zhu, F. (2021). Advances in video compression system using deep neural network: a review and case studies. Ithaca, NY: Cornell University.

Duan, L., Liu, J., Yang, W., Huang, T., and Gao, W. (2020). Video coding for machines: a paradigm of collaborative compression and intelligent analytics. IEEE Trans. Image Process. 29, 8680–8695. doi:10.1109/tip.2020.3016485

Dufaux, F., Le Callet, P., Mantiuk, R., and Mrak, M. (2016). High dynamic range video - from acquisition, to display and applications. Cambridge, Massachusetts: Academic Press.

Dufaux, F., Pesquet-Popescu, B., and Cagnazzo, M. (2013). Emerging technologies for 3D video: creation, coding, transmission and rendering. Hoboken, NJ: Wiley.

Fei-Fei, L., Fergus, R., and Perona, P. (2006). One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach Intell. 28 (4), 594–611. doi:10.1109/TPAMI.2006.79

Galdi, C., Chiesa, V., Busch, C., Lobato Correia, P., Dugelay, J.-L., and Guillemot, C. (2019). Light fields for face analysis. Sensors 19 (12), 2687. doi:10.3390/s19122687

Graziosi, D., Nakagami, O., Kuma, S., Zaghetto, A., Suzuki, T., and Tabatabai, A. (2020). An overview of ongoing point cloud compression standardization activities: video-based (V-PCC) and geometry-based (G-PCC). APSIPA Trans. Signal Inf. Process. 9, 2020. doi:10.1017/ATSIP.2020.12

Guarda, A., Rodrigues, N., and Pereira, F. (2020). Adaptive deep learning-based point cloud geometry coding. IEEE J. Selected Top. Signal Process. 15, 415-430. doi:10.1109/mmsp48831.2020.9287060

Guo, Y., Wang, H., Hu, Q., Liu, H., Liu, L., and Bennamoun, M. (2020). Deep learning for 3D point clouds: a survey. IEEE Trans. Pattern Anal. Mach. Intell. doi:10.1109/TPAMI.2020.3005434

Han, X.-F., Jin, J. S., Wang, M.-J., Jiang, W., Gao, L., and Xiao, L. (2017). A review of algorithms for filtering the 3D point cloud. Signal. Processing: Image Commun. 57, 103–112. doi:10.1016/j.image.2017.05.009

Haskell, B. G., Puri, A., and Netravali, A. N. (1996). Digital video: an introduction to MPEG-2. Berlin, Germany: Springer Science and Business Media.

Hirsch, R. (1999). Seizing the light: a history of photography. New York, NY: McGraw-Hill.

Ihrke, I., Restrepo, J., and Mignard-Debise, L. (2016). Principles of light field imaging: briefly revisiting 25 years of research. IEEE Signal. Process. Mag. 33 (5), 59–69. doi:10.1109/MSP.2016.2582220

Jing, L., and Tian, Y. (2020). “Self-supervised visual feature learning with deep neural networks: a survey,” IEEE transactions on pattern analysis and machine intelligence , Ithaca, NY: Cornell University .

Le Callet, P., Möller, S., and Perkis, A. (2012). Qualinet white paper on definitions of quality of experience. European network on quality of experience in multimedia systems and services (COST Action IC 1003), 3(2012) .

Le Gall, D. (1991). MPEG: a video compression standard for multimedia applications. Commun. ACM 34, 46–58. doi:10.1145/103085.103090

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. nature 521 (7553), 436–444. doi:10.1038/nature14539

Leutenegger, S., Chli, M., and Siegwart, R. Y. (2011). “BRISK: binary robust invariant scalable keypoints,” IEEE International conference on computer vision, Barcelona, Spain, November, 2011 (IEEE), 2548–2555.

Lin, W., and Jay Kuo, C.-C. (2011). Perceptual visual quality metrics: a survey. J. Vis. Commun. image representation 22 (4), 297–312. doi:10.1016/j.jvcir.2011.01.005

Lowe, D. G. (2004). Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60 (2), 91–110. doi:10.1023/b:visi.0000029664.99615.94

Lumiere, L. (1996). 1936 the lumière cinematograph. J. Smpte 105 (10), 608–611. doi:10.5594/j17187

Masia, B., Wetzstein, G., Didyk, P., and Gutierrez, D. (2013). A survey on computational displays: pushing the boundaries of optics, computation, and perception. Comput. & Graphics 37 (8), 1012–1038. doi:10.1016/j.cag.2013.10.003

Murray, N., Marchesotti, L., and Perronnin, F. (2012). “AVA: a large-scale database for aesthetic visual analysis,” IEEE conference on computer vision and pattern recognition , Providence, RI , June, 2012 . ( IEEE ), 2408–2415. doi:10.1109/CVPR.2012.6247954

Rana, A., Valenzise, G., and Dufaux, F. (2018). Learning-based tone mapping operator for efficient image matching. IEEE Trans. Multimedia 21 (1), 256–268. doi:10.1109/TMM.2018.2839885

Rublee, E., Rabaud, V., Konolige, K., and Bradski, G. (2011). “ORB: an efficient alternative to SIFT or SURF,” IEEE International conference on computer vision , Barcelona, Spain , November, 2011 ( IEEE ), 2564–2571. doi:10.1109/ICCV.2011.6126544

Slater, M. (2014). Grand challenges in virtual environments. Front. Robotics AI 1, 3. doi:10.3389/frobt.2014.00003

Van Engelen, J. E., and Hoos, H. H. (2020). A survey on semi-supervised learning. Mach Learn. 109 (2), 373–440. doi:10.1007/s10994-019-05855-6

Vandewalle, P., Kovacevic, J., and Vetterli, M. (2009). Reproducible research in signal processing. IEEE Signal. Process. Mag. 26 (3), 37–47. doi:10.1109/msp.2009.932122

Wallace, G. K. (1992). The JPEG still picture compression standard. IEEE Trans. Consumer Electron. 38 (1), xviii-xxxiv. doi:10.1109/30.125072

Wien, M., Boyce, J. M., Stockhammer, T., and Peng, W.-H. (2019). Standardization status of immersive video coding. IEEE J. Emerg. Sel. Top. Circuits Syst. 9 (1), 5–17. doi:10.1109/JETCAS.2019.2898948

Wu, G., Masia, B., Jarabo, A., Zhang, Y., Wang, L., Dai, Q., et al. (2017). Light field image processing: an overview. IEEE J. Sel. Top. Signal. Process. 11 (7), 926–954. doi:10.1109/JSTSP.2017.2747126

Xie, N., Ras, G., van Gerven, M., and Doran, D. (2020). Explainable deep learning: a field guide for the uninitiated , Ithaca, NY: Cornell University .

Keywords: image processing, immersive, image analysis, image understanding, deep learning, video processing

Citation: Dufaux F (2021) Grand Challenges in Image Processing. Front. Sig. Proc. 1:675547. doi: 10.3389/frsip.2021.675547

Received: 03 March 2021; Accepted: 10 March 2021; Published: 12 April 2021.

Copyright © 2021 Dufaux. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frédéric Dufaux, [email protected]

- Open access

- Published: 05 December 2018

Application research of digital media image processing technology based on wavelet transform

- Lina Zhang 1 ,

- Lijuan Zhang 2 &

- Liduo Zhang 3

EURASIP Journal on Image and Video Processing volume 2018, Article number: 138 (2018)

With the development of information technology, people increasingly rely on networks to access information, and more than 80% of the information on networks is carried by multimedia, chiefly images. Research on image processing technology is therefore very important, but most studies focus on a single aspect, and results that model several aspects of image processing in a unified way remain rare. To this end, this paper builds a unified model covering image denoising, watermarking, encryption and decryption, and image compression, using the wavelet transform as the common method, and runs simulations on 300 everyday photographs. The results show that the unified model achieves good results in all of these aspects of image processing.

1 Introduction

With the increase of computer processing power, the objects that people process with computers have slowly shifted from characters to images. According to statistics, images account for more than 80% of the information transmitted and stored today, especially on the Internet. Image information is much more complex than character information, so processing images on a computer is more complicated than processing characters. Therefore, in order to make the use of image information safer and more convenient, application research on digital image media is particularly important. Digital media image processing technology mainly includes denoising, encryption, compression, storage, and many other aspects.

The purpose of image denoising is to remove noise from the image so that the content of the image itself stands out. Image acquisition, processing, and similar operations damage the original image signal, and noise is an important factor that degrades the clarity of an image. Noise has various sources and arises mainly in the transmission process and the quantization process. According to the relationship between noise and signal, noise can be divided into additive noise, multiplicative noise, and quantization noise. Commonly used noise removal methods include the mean filter, the adaptive Wiener filter, the median filter, and the wavelet transform. For example, the neighborhood averaging method used in the literature [ 1 , 2 , 3 ] is a mean filtering method suited to removing grain noise in scanned images. Neighborhood averaging strongly suppresses noise but also blurs the image because of the averaging, and the degree of blur is proportional to the radius of the neighborhood. The Wiener filter adjusts its output based on the local variance of the image and gives the best filtering effect on images with white noise; in the literature [ 4 , 5 ], this method is used for image denoising and good results are obtained. Median filtering is a commonly used nonlinear smoothing filter that is very effective at removing salt-and-pepper noise. It can both remove noise and protect the edges of the image, giving a satisfactory restoration, and it does not require the statistical characteristics of the image, which is convenient in practice. The literature [ 6 , 7 , 8 ] reports successful cases of image denoising using median filtering. Wavelet analysis denoises the image using the coefficients of the wavelet decomposition layers, so image details can be well preserved, as in the literature [ 9 , 10 ].

Image encryption is another important application area of digital image processing technology and mainly covers two aspects: digital watermarking and image encryption proper. Digital watermarking directly embeds identification information (the digital watermark) into a digital carrier (multimedia, documents, software, etc.) without affecting the use value of the original carrier and without being easily perceived by the human perceptual system (such as the visual or auditory system). The information hidden in the carrier makes it possible to identify the content creator or the purchaser, to transmit secret information, or to determine whether the carrier has been tampered with. Digital watermarking is an important research direction of information hiding technology; for example, the literature [ 11 , 12 ] studies image digital watermarking methods. Some researchers have applied wavelet methods to digital watermarking. For example, A. H. Paquet et al. [ 13 ] used wavelet packets for digital watermark-based personal authentication in 2003, successfully introducing wavelet theory into digital watermark research and opening up a new direction for image-based digital watermarking. To keep digital images secret, in practice the two-dimensional image is generally converted into one-dimensional data and then encrypted with a conventional encryption algorithm. Unlike ordinary text, images and videos have temporal and spatial structure, are visually perceptible, and tolerate lossy compression. These features make it possible to design more efficient and secure encryption algorithms for images. For example, Z. Wen et al. [ 14 ] use the key value to generate real-valued chaotic sequences and then encrypt the image by scrambling it in the spatial domain; their experimental results show that the technique is effective and safe. Y. Y. Wang et al. [ 15 ] proposed a new optical image encryption method using a binary Fourier transform computer-generated hologram (CGH) and pixel scrambling. In this method, the order of pixel scrambling and the encrypted image are used as keys for decrypting the original image. X. Y. Zhang et al. [ 16 ] combined the mathematical principle of two-dimensional cellular automata (CA) with image encryption and proposed a new image encryption algorithm that is convenient to implement and offers good security, a large key space, a good avalanche effect, a high degree of confusion and diffusion, simple operation, low computational complexity, and high speed.

To transmit image information quickly, image compression is another research direction of image application technology. The information age has brought an “information explosion” and a rapidly growing amount of data, so data must be compressed effectively for both transmission and storage. For example, in remote sensing, space probes use compression coding to send huge amounts of information back to the ground. Image compression is the application of data compression to digital images; its purpose is to reduce redundant information in the image data so that the data can be stored and transmitted in a more efficient format. Through the sustained efforts of researchers, image compression technology is now maturing. For example, A. S. Lewis [ 17 ] hierarchically encodes the transformed coefficients and designs a new image compression method based on a local estimate of the noise sensitivity of the human visual system (HVS). The algorithm maps easily onto the 2-D orthogonal wavelet transform, which decomposes the image into spatially and spectrally local coefficients. R. A. DeVore [ 18 ] introduced a novel theory for analyzing image compression methods based on wavelet decompositions. R. W. Buccigrossi [ 19 ] developed a probabilistic model of natural images based on empirical observations of statistics in the wavelet transform domain. Pairs of wavelet coefficients corresponding to basis functions at adjacent spatial locations, orientations, and scales are found to be non-Gaussian in both their marginal and joint statistical properties. They proposed a Markov model that explains these dependencies using a linear predictor of magnitude combined with multiplicative and additive uncertainties, and showed that it accounts for the statistics of a wide variety of images, including photographic images, graphic images, and medical images. To demonstrate the power of the model directly, they constructed an image coder called the Embedded Predictive Wavelet Image Coder (EPWIC), in which subband coefficients are encoded one bit plane at a time using a non-adaptive arithmetic coder. The encoder uses conditional probabilities calculated from the model to order the bit planes with a greedy algorithm that considers the MSE reduction per coded bit, and the decoder uses the statistical model to predict coefficient values from the bits it has received. Although the model is simple, the rate-distortion performance of the coder is roughly comparable to the best image coders in the literature.

From the existing research, we find that digital image application research has achieved fruitful results. However, these results mainly focus on individual methods, such as deep learning [ 20 , 21 ], genetic algorithms [ 22 , 23 ], fuzzy theory, etc. [ 24 , 25 ], as well as wavelet analysis. The biggest problem in existing image application research is that digital multimedia processing is an organic whole: denoising, compression, storage, encryption, decryption, and retrieval should be treated together, yet current research mostly studies one part of this whole. Therefore, although a method may be superior in one of the links, it is not known whether it is suitable for the others. To address this problem, this paper takes the digital image as the research object, builds a unified model of three main steps of image processing (encryption, compression, and retrieval), and studies the image processing capability of a single method across multiple steps.

Wavelet transform is a commonly used digital signal processing method. Most digital signals are composed of several frequency components, so a signal contains noise, secondary components, and main components. Many research teams have adopted the wavelet transform as a processing method in image processing and achieved good results. So, can the wavelet transform be used to build a model suitable for a variety of image processing applications?

In this paper, the wavelet transform is used to establish denoising, encryption, and compression models for the image processing pipeline, and the captured images are used for simulation. The results show that the same wavelet transform parameters achieve good results for different image processing applications.

2.1 Image binarization processing method

The gray value of each pixel in the image ranges from 0 to 255. To facilitate further processing, the outline of the image is first highlighted by binarization, which maps the gray value of each pixel from the range 0–255 to either 0 or 255. Threshold selection is the key step in binarization. The threshold used in this paper is obtained by the maximum between-class variance method (OTSU). For an image in which t is the segmentation threshold between foreground and background, let w0 be the proportion of foreground pixels in the image and u0 their mean gray value, and let w1 be the proportion of background pixels and u1 their mean gray value. Then the mean of the entire image is:

\[ u = w_0 u_0 + w_1 u_1 \tag{1} \]

The objective function can be established according to formula 1:

\[ g(t) = w_0 (u_0 - u)^2 + w_1 (u_1 - u)^2 \]

The OTSU algorithm makes g ( t ) take the global maximum, and the corresponding t when g ( t ) is maximum is called the optimal threshold.
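As an illustration, the threshold search can be sketched in a few lines of Python. This is a minimal sketch rather than the code used in the paper: the function names `otsu_threshold` and `binarize` are hypothetical, the image is assumed to be a flat list of integer gray values, and the class of pixels with value at most t is treated as class 0 (which of the two classes is the foreground depends on the image).

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximises the between-class variance g(t).

    `pixels` is a flat iterable of integer gray values in [0, levels - 1].
    Pixels with value <= t form class 0, the remaining pixels form class 1.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    total_sum = sum(i * h for i, h in enumerate(hist))

    best_t, best_g = 0, -1.0
    count0 = 0  # number of pixels in class 0 so far
    sum0 = 0    # sum of gray values in class 0 so far
    for t in range(levels - 1):
        count0 += hist[t]
        sum0 += t * hist[t]
        count1 = total - count0
        if count0 == 0 or count1 == 0:
            continue  # one class is empty, g(t) is undefined
        w0, w1 = count0 / total, count1 / total
        u0, u1 = sum0 / count0, (total_sum - sum0) / count1
        u = w0 * u0 + w1 * u1                      # mean of the whole image
        g = w0 * (u0 - u) ** 2 + w1 * (u1 - u) ** 2  # between-class variance
        if g > best_g:
            best_t, best_g = t, g
    return best_t


def binarize(pixels, t):
    """Map every gray value to 0 or 255 using threshold t."""
    return [255 if p > t else 0 for p in pixels]
```

For a two-dimensional image, the rows can simply be concatenated before calling `otsu_threshold`, since the criterion depends only on the gray-level histogram.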

2.2 Wavelet transform method

Wavelet transform (WT) grew out of the development of Fourier transform technology. The Fourier transform only decomposes a signal into different frequencies, whereas the wavelet transform also localizes those frequencies: it retains the local analysis capability of the windowed Fourier transform while overcoming the drawback that the window size does not change with frequency. Compared with the Fourier transform, the wavelet transform is therefore better suited to time-frequency analysis. Its most notable characteristic is that it represents local features of a signal in both time and frequency, and its scale can be varied, so that the signal is divided into low-frequency and high-frequency parts and its features become more focused. This paper mainly uses the wavelet transform to analyze the image in different frequency bands. The wavelet transform of a signal f ( t ) can be expressed as follows:

\[ WT_f(a, \tau) = \frac{1}{\sqrt{a}} \int_{-\infty}^{+\infty} f(t)\, \overline{\psi\left(\frac{t - \tau}{a}\right)}\, dt \]

Where ψ ( t ) is the mother wavelet, a is the scale factor, and τ is the translation factor.

Because the image signal is two-dimensional, the wavelet transform must be generalized to a two-dimensional wavelet transform for image analysis. Suppose the image signal is represented by f ( x , y ), ψ ( x , y ) is a two-dimensional basic wavelet, and ψ a , b , c ( x , y ) is the scaled and displaced version of the basic wavelet, which can be calculated by the following formula:

\[ \psi_{a,b,c}(x, y) = \frac{1}{|a|}\, \psi\left(\frac{x - b}{a}, \frac{y - c}{a}\right) \]

According to the above definition of continuous wavelet, the two-dimensional continuous wavelet transform can be calculated by the following formula:

\[ WT_f(a, b, c) = \frac{1}{|a|} \iint f(x, y)\, \overline{\psi\left(\frac{x - b}{a}, \frac{y - c}{a}\right)}\, dx\, dy \]

Where \( \overline{\psi \left(x,y\right)} \) is the conjugate of ψ ( x , y ).
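In practice the continuous transform is replaced by a discrete, multi-level decomposition of the image. The following sketch shows what one such decomposition looks like, under simplifying assumptions: it uses the Haar wavelet (db1) because its filters fit in a few lines, not the db3 wavelet used later in this paper, and the function names `haar_dwt2` and `haar_wavedec2` are hypothetical. Each level splits the image into one low-frequency approximation and three detail sub-bands, so L levels give 3L detail sub-bands plus one approximation.

```python
def haar_dwt2(image):
    """One level of an orthonormal 2-D Haar decomposition.

    `image` is a list of rows with an even number of rows and columns.
    Returns (approx, detail_cols, detail_rows, detail_diag), each half
    the size of the input in both directions.
    """
    approx, d_cols, d_rows, d_diag = [], [], [], []
    for r in range(0, len(image), 2):
        row_a, row_c, row_r, row_d = [], [], [], []
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            row_a.append((a + b + d + e) / 2)  # low-pass in both directions
            row_c.append((a - b + d - e) / 2)  # difference between columns
            row_r.append((a + b - d - e) / 2)  # difference between rows
            row_d.append((a - b - d + e) / 2)  # diagonal difference
        approx.append(row_a)
        d_cols.append(row_c)
        d_rows.append(row_r)
        d_diag.append(row_d)
    return approx, d_cols, d_rows, d_diag


def haar_wavedec2(image, levels):
    """Repeat the decomposition on the approximation `levels` times."""
    details = []
    approx = image
    for _ in range(levels):
        approx, d_cols, d_rows, d_diag = haar_dwt2(approx)
        details.append((d_cols, d_rows, d_diag))
    return approx, details
```

Which detail sub-band is called "horizontal" or "vertical" varies between toolboxes, so the sketch names them by the direction in which the difference is taken.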

2.3 Digital watermark

According to different methods of use, digital watermarking technology can be divided into the following types:

Spatial domain approach: a typical algorithm of this type embeds information into the least significant bits (LSB) of randomly selected image pixels, which ensures that the embedded watermark is invisible. However, because it uses the least important bits of the pixels, the algorithm has poor robustness, and the watermark information is easily destroyed by filtering, image quantization, and geometric deformation. Another common method uses the statistical characteristics of the pixels to embed the information in the luminance values of the pixels.

Transform domain approach: first compute the discrete cosine transform (DCT) of the image, and then superimpose the watermark on the k coefficients with the largest amplitude in the DCT domain (excluding the DC component), which are usually low-frequency components of the image. If the k largest DCT coefficients are represented as D = { d i }, i = 1, ..., k, and the watermark is a random real sequence W = { w i }, i = 1, ..., k, obeying the Gaussian distribution, then the watermark embedding rule is d i ' = d i (1 + a w i ), where the constant a is a scale factor that controls the embedding strength. The watermarked image I * is then obtained by the inverse transform with the new coefficients. The decoding function computes the discrete cosine transform of the original image I and of the watermarked image I * , extracts the embedded watermark W * , and then performs a correlation test to determine the presence or absence of the watermark.

Compressed domain approach: compressed-domain digital watermarking systems based on the JPEG and MPEG standards avoid much of the complete decoding and re-encoding process and have great practical value in digital TV broadcasting and video on demand (VOD). Correspondingly, watermark detection and extraction can also be performed directly on the compressed-domain data.
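The multiplicative embedding rule of the transform domain approach can be illustrated on the coefficients alone. The sketch below is a hedged example, not an implementation from the literature cited here: the DCT itself is omitted, the list `coeffs` is a random stand-in for the k largest DCT coefficients, and the names `embed`, `extract`, and `similarity` as well as the strength a = 0.1 are assumptions chosen for illustration.

```python
import math
import random


def embed(coeffs, watermark, a=0.1):
    """Apply d_i' = d_i * (1 + a * w_i) to the selected coefficients."""
    return [d * (1 + a * w) for d, w in zip(coeffs, watermark)]


def extract(original, marked, a=0.1):
    """Invert the embedding rule, using the original coefficients."""
    return [(m / d - 1) / a for d, m in zip(original, marked)]


def similarity(w, w_star):
    """Normalised correlation between two watermark sequences."""
    dot = sum(x * y for x, y in zip(w, w_star))
    norm = math.sqrt(sum(x * x for x in w) * sum(y * y for y in w_star))
    return dot / norm


rng = random.Random(0)
k = 100
coeffs = [rng.uniform(50, 500) for _ in range(k)]  # stand-in for DCT coefficients
watermark = [rng.gauss(0, 1) for _ in range(k)]    # Gaussian watermark W
marked = embed(coeffs, watermark)
recovered = extract(coeffs, marked)                # extracted watermark W*
other = [rng.gauss(0, 1) for _ in range(k)]        # an unrelated watermark
```

The correlation test then compares `similarity(watermark, recovered)`, which is close to 1, with the value obtained for an unrelated sequence such as `other`, which stays near 0.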

The wavelet transform used in this paper is a transform domain method. The main process is as follows. Assume that x ( m , n ) is a grayscale image of size M × N with \( 2^a \) gray levels, where M , N , and a are positive integers and 1 ≤ m ≤ M , 1 ≤ n ≤ N . If this image is decomposed by wavelets into L layers ( L is a positive integer), then 3 L high-frequency detail subbands and one low-frequency approximation subband are obtained. The wavelet coefficients are denoted X K , L , where L is the decomposition layer and K is one of H , V , and D , representing the horizontal, vertical, and diagonal subbands, respectively. Because modifying the low-frequency subband distorts the image strongly, the watermark is embedded in the subbands other than the low-frequency one.

To embed the digital watermark, X K , L ( m i , n j ) is first divided into blocks of a fixed size, and B ( s , t ) denotes a coefficient block of size s × t in X K , L ( m i , n j ). The average value of a block can then be expressed by the following formula:

\[ AVG = \frac{\sum B(s, t)}{s \times t} \]

Where ∑ B ( s , t ) is the cumulative sum of the magnitudes of the coefficients within the block.

The embedding of the watermark sequence w is achieved by the quantization of AVG.

The quantization interval, denoted Δ l , is chosen by weighing robustness against concealment. For the L th layer, the coefficient amplitudes are large, so a larger interval can be set; for the other layers, starting from layer L − 1, the interval is decreased successively.

According to w i ∈ {0, 1}, AVG is quantized to the nearest odd or even quantization point. D ( i , j ) denotes the wavelet coefficients in the block and D ( i , j ) ' the quantized coefficients, where i = 1, 2, ..., s ; j = 1, 2, ..., t . Let T = AVG /Δ l and TD = rem(| T |, 2), where | · | denotes rounding and rem( · , 2) denotes the remainder after division by 2.

According to whether TD and w i are the same, the calculation of the quantized wavelet coefficient D ( i , j ) ' can be as follows:

Using the same wavelet base, an image containing the watermark is generated by inverse wavelet transform, and the wavelet base, the wavelet decomposition layer number, the selected coefficient region, the blocking method, the quantization interval, and the parity correspondence are recorded to form a key.

Watermark extraction is the inverse of the embedding procedure. First, the wavelet transform is applied to the image to be detected, the positions of the embedded watermark are determined according to the key, and the inverse of the scrambling operation is applied to the extracted watermark.
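The parity idea behind this embedding can be sketched as follows. The exact update formula for D ( i , j ) ' is not reproduced in this copy of the paper, so the sketch makes its own assumption: the block is rescaled so that its mean magnitude AVG lands exactly on the nearest multiple of the quantization interval whose index has the same parity as the watermark bit. The function names and the scaling rule are hypothetical.

```python
def block_average(block):
    """AVG: mean magnitude of the wavelet coefficients in a block."""
    return sum(abs(c) for c in block) / len(block)


def embed_bit(block, bit, delta):
    """Move AVG to the nearest multiple of delta whose index parity is `bit`.

    Assumption: the coefficients are rescaled by a common factor, which
    keeps their signs and moves the mean magnitude exactly to the target.
    """
    avg = block_average(block)
    q = avg / delta
    t = round(q)
    if t % 2 != bit:
        # Step to the neighbouring index on the side where AVG already lies.
        t = t + 1 if q >= t else t - 1
    target = t * delta
    if avg == 0:
        return [target] * len(block)
    scale = target / avg
    return [c * scale for c in block]


def extract_bit(block, delta):
    """Recover the bit as the parity of the rounded quantization index."""
    return round(block_average(block) / delta) % 2
```

With this rule, a perturbation that changes the block average by less than half the quantization interval leaves the extracted bit unchanged, which is why a larger interval gives more robustness at the cost of more visible distortion.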

2.4 Evaluation method

Normalized mean square error

In order to measure the effect of filtering, this paper uses the normalized mean square error M . The calculation method of M is as follows:

where N 1 and N 2 are the pixels before and after normalization.

Normalized cross-correlation function

The normalized cross-correlation function is a classic image matching measure that represents the similarity of images. It is determined by calculating the cross-correlation metric between the reference image and the template image, generally denoted NC( i , j ). The larger the NC value, the greater the similarity between the two. The calculation formula for the cross-correlation metric is as follows:

where T ( m , n ) is the pixel value in the n th row and m th column of the template image, S ( i , j ) is the part of the reference image covered by the template, and ( i , j ) is the coordinate of the lower left corner of this sub-image in the reference image S.

Normalize the above formula NC according to the following formula:
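As an illustration of template matching with a normalized cross-correlation, the following sketch assumes one common normalization: the sum of products of template and window pixels, divided by the square root of the product of their energies. The paper's own expression may differ. The function names are hypothetical, and for simplicity the window is anchored at its top-left corner (row i, column j) rather than the lower left corner.

```python
import math


def normalized_cross_correlation(reference, template, i, j):
    """NC between `template` and the same-sized window of `reference`
    whose top-left corner is at row i, column j."""
    num = energy_s = energy_t = 0.0
    for m, t_row in enumerate(template):
        for n, t_val in enumerate(t_row):
            s_val = reference[i + m][j + n]
            num += s_val * t_val
            energy_s += s_val * s_val
            energy_t += t_val * t_val
    if energy_s == 0 or energy_t == 0:
        return 0.0  # an all-zero window or template carries no information
    return num / math.sqrt(energy_s * energy_t)


def best_match(reference, template):
    """Return the (row, column) position with the largest NC value."""
    rows = len(reference) - len(template) + 1
    cols = len(reference[0]) - len(template[0]) + 1
    positions = [(i, j) for i in range(rows) for j in range(cols)]
    return max(
        positions,
        key=lambda p: normalized_cross_correlation(reference, template, *p),
    )
```

For non-negative pixel values this NC lies between 0 and 1 and equals 1 only when the window is proportional to the template.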

Peak signal-to-noise ratio

Peak signal-to-noise ratio (PSNR) is often used as a measure of signal reconstruction quality in areas such as image compression, and it is usually defined through the mean square error (MSE). For two m × n monochrome images I and K , one of which is a noisy approximation of the other, the mean square error is defined as:

\[ MSE = \frac{1}{mn} \sum_{i=0}^{m-1} \sum_{j=0}^{n-1} \left[ I(i, j) - K(i, j) \right]^2 \]

Then the peak signal-to-noise ratio PSNR is calculated as:

\[ PSNR = 10 \log_{10}\left(\frac{Max^2}{MSE}\right) \]

Where Max is the maximum possible pixel value of the image.
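A minimal sketch of both measures, assuming the usual definitions (MSE as the mean of the squared pixel differences and PSNR as 10 log10(Max²/MSE)), 8-bit images with Max = 255, and images stored as lists of rows. The function names are illustrative.

```python
import math


def mse(image_i, image_k):
    """Mean square error between two images of identical size."""
    rows, cols = len(image_i), len(image_i[0])
    total = sum(
        (image_i[r][c] - image_k[r][c]) ** 2
        for r in range(rows)
        for c in range(cols)
    )
    return total / (rows * cols)


def psnr(image_i, image_k, max_value=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(image_i, image_k)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)
```

Because the MSE sits in the denominator, a higher PSNR means the two images are closer to each other.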

Information entropy

For the digital signal of an image, each pixel value occurs with a different frequency, so the image signal can be regarded as an uncertain signal. For image encryption, the higher the uncertainty of the image, the closer the image is to random and the more difficult it is to crack; the lower the uncertainty, the more regular the image and the more likely it is to be cracked. For a grayscale image with 256 levels, the maximum value of the information entropy is 8, so the closer the result is to 8, the better.

The calculation method of information entropy is as follows:

\[ H = -\sum_{i=0}^{255} p(i) \log_2 p(i) \]

where p ( i ) is the probability of occurrence of gray level i in the image.
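The entropy of an image can be estimated directly from its gray-level histogram. The sketch below assumes the Shannon entropy in bits, with the probability of each gray level estimated by its relative frequency; the function name is illustrative.

```python
import math
from collections import Counter


def information_entropy(pixels):
    """Shannon entropy, in bits, of a flat list of gray values."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())
```

An image in which all 256 gray levels are equally frequent reaches the maximum of 8 bits, while an image with a single gray level has entropy 0.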

Correlation

Correlation is a parameter describing the relationship between two vectors. This paper uses correlation to describe the relationship between two images before and after image encryption. Let p ( x , y ) denote the correlation between the pixels before and after encryption; p ( x , y ) can be calculated by the following formula:

\[ p(x, y) = \frac{\mathrm{cov}(x, y)}{\sqrt{D(x)}\,\sqrt{D(y)}} \]

where cov( x , y ) is the covariance of the two pixel sequences and D ( x ) and D ( y ) are their variances.
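A short sketch of this measure, assuming the standard (Pearson) correlation coefficient computed over two equally long pixel sequences, for example the flattened images before and after encryption. The function name is illustrative.

```python
import math


def correlation(x, y):
    """Correlation coefficient of two equally long pixel sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    return cov / math.sqrt(var_x * var_y)
```

Values near 1 indicate that the two images have nearly the same structure, and values near 0 indicate that they are unrelated, which is the desired outcome for an encrypted image compared with its original. The sketch does not handle constant sequences, for which the variance is zero.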

3 Experiment

3.1 Image parameters

The images used in this article are all everyday photographs taken with a Huawei Mate 10. The picture size is 1440 × 1920, the resolution is 96 dpi, the bit depth is 24, and no flash was used. A total of 300 pictures are used for simulation; all are ordinary everyday photographs, with no special photographs.

3.2 System environment

The operating system used in this simulation is Windows 10, and the simulation software is MATLAB R2014b.

3.3 Wavelet transform-related parameters

For unified modeling, this paper uses a three-layer wavelet decomposition with a Daubechies wavelet as the wavelet base. Daubechies wavelets are wavelet functions constructed by the world-famous wavelet analyst Ingrid Daubechies and are generally abbreviated as dbN, where N is the order of the wavelet. The support of the wavelet function Ψ( t ) and of the scale function ϕ ( t ) has length 2 N − 1, and Ψ( t ) has N vanishing moments. The dbN wavelet has good regularity, that is, the smoothing error introduced by using the wavelet as a sparse basis is not easily detected, which makes signal reconstruction smoother. The number of vanishing moments increases with the order N ; the more vanishing moments, the better the smoothness, the stronger the localization ability in the frequency domain, and the better the band division. However, the compact support in the time domain is weakened, the amount of calculation increases greatly, and the real-time performance deteriorates. In addition, except for N = 1, the dbN wavelet is not symmetric (i.e., it has nonlinear phase), so a certain phase distortion is generated when the signal is analyzed and reconstructed. This paper uses N = 3.

4 Results and discussion

4.1 Results 1: image filtering using wavelet transform

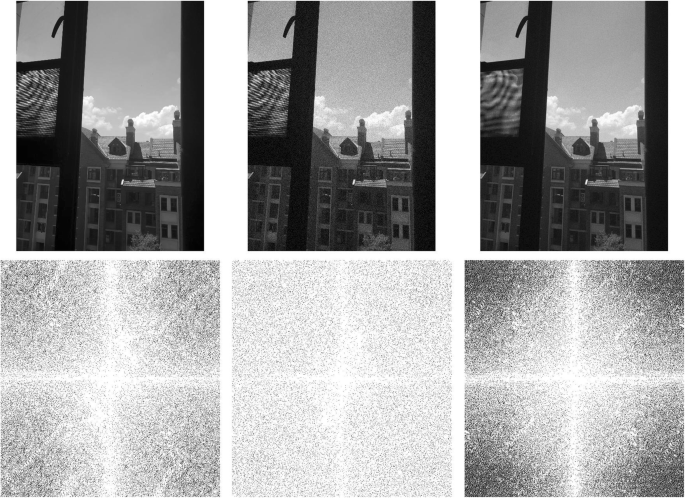

During image recording, transmission, storage, and processing, the image signal may be contaminated, and the contamination appears in the digital image as noise. Noise often takes the form of isolated pixels. Although these isolated points do not destroy the overall outline of the image, they tend to be high in frequency and appear on the image as bright spots, which greatly affects viewing quality. To ensure the effect of image processing, the image must therefore be denoised. An effective way of denoising is to filter out the noise in a certain frequency range, but the denoising must remove the noise without destroying the image. Figure 1 shows the result of filtering an image with the wavelet transform method. To test the wavelet filtering effect, Gaussian white noise is added to the original image. Comparing the frequency analysis of the noisy image with that of the original image shows that, after the noise is added, the main frequency band of the original image is disturbed by the noise, but after filtering with the wavelet transform the main frequency band of the original image appears again, and the filtered image does not differ significantly from the original image. The normalized mean square error M between the images before and after filtering is 0.0071. The wavelet transform thus protects the image details well while removing the noise effectively (the white noise level is 20%).

Image denoising results comparison. (First row, from left to right: the original image, the image with added noise, and the filtered image. Second row, from left to right: the frequency distribution of the original image, of the noisy image, and of the filtered image)
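The principle of wavelet denoising can be sketched on a one-dimensional signal. The paper does not state which thresholding rule it applies, so the example below is an assumption-laden illustration: it uses a single-level Haar transform instead of the three-level db3 decomposition, applies soft thresholding to the detail coefficients, and uses hypothetical function names.

```python
import math


def haar_forward(signal):
    """One level of the orthonormal Haar transform (even-length input)."""
    s = math.sqrt(2)
    pairs = range(0, len(signal), 2)
    approx = [(signal[i] + signal[i + 1]) / s for i in pairs]
    detail = [(signal[i] - signal[i + 1]) / s for i in pairs]
    return approx, detail


def haar_inverse(approx, detail):
    """Rebuild the signal from approximation and detail coefficients."""
    s = math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out


def soft_threshold(values, threshold):
    """Shrink each value towards zero by `threshold`, keeping its sign."""
    return [math.copysign(max(abs(v) - threshold, 0.0), v) for v in values]


def denoise(signal, threshold):
    """Keep the approximation, shrink the details, and reconstruct."""
    approx, detail = haar_forward(signal)
    return haar_inverse(approx, soft_threshold(detail, threshold))
```

Small detail coefficients are mostly noise and are suppressed, while the approximation, which carries the main structure of the signal, is left untouched; with a threshold of 0 the signal is reconstructed unchanged.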

4.2 Results 2: digital watermark encryption based on wavelet transform

Fig. 2 shows the watermark encryption process based on the wavelet transform. Watermarking the image by wavelet transform does not affect the structure of the original image. The added noise is salt-and-pepper noise at 40%. The wavelet transform method extracts the watermark well from both the original image and the noisy image.

Comparison of digital watermark before and after. (First row, from left to right: the original image, the image with noise and watermark, and the image after noise removal. Second row, from left to right: the original watermark, the watermark extracted from the noisy watermarked image, and the watermark extracted after denoising)

According to the method described in this paper, the image correlation coefficient and the peak signal-to-noise ratio of the image after watermarking are calculated. The correlation coefficient between the original image and the watermarked image is 0.9871 (the first and third columns of the first row in the figure), so the watermark does not destroy the structure of the original image. The signal-to-noise ratio of the original image is 33.5 dB and that of the watermarked image is 31.58 dB, which shows that the wavelet transform hides the watermark well. From the second row of watermarking results, the correlation coefficients between the original watermark and the watermarks extracted from the noisy image and from the denoised image are 0.9745 and 0.9652, respectively. This shows that the watermark signal can be extracted well after being hidden by the wavelet transform.

4.3 Results 3: image encryption based on wavelet transform

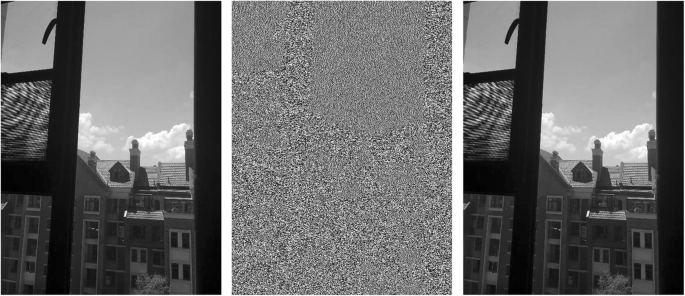

In image transmission, the most common way to protect image content is to encrypt the image. Figure 3 shows the process of encrypting and decrypting an image using wavelet transform. It can be seen from the figure that after the image is encrypted, there is no correlation with the original image at all, but the decrypted image of the encrypted image reproduces the original image.

Image encryption and decryption process diagram comparison. (Left: the original image; middle: the encrypted image; right: the decrypted image)

The information entropy of the images in Fig. 3 is calculated. The information entropy of the original image is 3.05, that of the decrypted image is 3.07, and that of the encrypted image is 7.88. The entropy of the decrypted image is therefore essentially the same as that of the original image, whereas the entropy of the encrypted image, 7.88, is close to the maximum of 8, indicating that the encrypted image is close to a random signal and has good confidentiality.

4.4 Results 4: image compression

Image data can be compressed because the data are redundant. The redundancy of image data mainly takes three forms: spatial redundancy, caused by correlation between adjacent pixels in an image; temporal redundancy, caused by correlation between different frames in an image sequence; and spectral redundancy, caused by correlation between different color planes or spectral bands. The purpose of data compression is to reduce the number of bits required to represent the data by removing these redundancies. Since the amount of image data is huge and therefore difficult to store, transfer, and process, the compression of image data is very important. Figure 4 shows the result of compressing the original image twice. Although the image is compressed, its main outline does not change, but its sharpness is significantly reduced. Table 1 shows the properties of the compressed images.

Image comparison before and after compression (left: the original image; middle: after the first compression; right: after the second compression)
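How a wavelet transform exploits spatial redundancy can be sketched on a single row of pixels. The example below is an illustration under stated assumptions, not the authors' implementation: the paper does not say which wavelet or thresholding rule was used, so a one-level unnormalized Haar transform and a hard threshold are assumed, and the names `haar_forward`, `haar_inverse`, and `compress` are hypothetical.

```python
def haar_forward(row):
    """One level of the unnormalized Haar transform: pairwise averages and half-differences."""
    pairs = list(zip(row[0::2], row[1::2]))
    averages = [(a + b) / 2 for a, b in pairs]
    details = [(a - b) / 2 for a, b in pairs]
    return averages, details


def haar_inverse(averages, details):
    """Invert haar_forward: each (average, detail) pair restores two samples."""
    row = []
    for s, d in zip(averages, details):
        row.extend([s + d, s - d])
    return row


def compress(row, threshold):
    """Lossy step: zero every detail coefficient whose magnitude is below the threshold."""
    averages, details = haar_forward(row)
    kept = [d if abs(d) >= threshold else 0 for d in details]
    return averages, kept


# Neighbouring pixels are mostly similar, except for one sharp edge (180 -> 60).
row = [100, 102, 101, 103, 180, 60, 104, 105]
averages, kept = compress(row, threshold=2)
restored = haar_inverse(averages, kept)
zeroed = sum(1 for d in kept if d == 0)

print("kept details:", kept)
print("restored row:", restored)
print("zeroed coefficients:", zeroed, "of", len(kept))
```

Because adjacent pixels are correlated, most detail coefficients are small and can be discarded, while the edge survives. The discarded details are also what costs sharpness in the reconstructed row, in line with the loss of sharpness noted for Figure 4.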

The results in Table 1 show that the size of the image is significantly reduced with each compression. The original image needs 2,764,800 bytes, which is reduced to 703,009 bytes after the first compression, a reduction of about 74.6%. After the second compression only 182,161 bytes remain, a further reduction of 74.1%. The wavelet transform can therefore compress images effectively.
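The size reductions can be checked with a few lines of arithmetic. The byte counts below are the ones reported in the text; the helper name `reduction_percent` is hypothetical.

```python
# Byte counts as reported in the text: original, first and second compression.
sizes = [2_764_800, 703_009, 182_161]


def reduction_percent(before, after):
    """Percentage by which the size shrinks going from `before` to `after`."""
    return 100 * (1 - after / before)


for before, after in zip(sizes, sizes[1:]):
    print(f"{before} -> {after} bytes: {reduction_percent(before, after):.1f}% smaller")

overall = reduction_percent(sizes[0], sizes[-1])
print(f"overall: {overall:.1f}% smaller")
```

With these figures, each step removes roughly three quarters of the bytes (about 74.6% and 74.1%), and the two steps together remove about 93.4% of the original size.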

5 Conclusion

With the development of informatization, ours is an era full of information. As the visual basis of human perception of the world, the image is an important means for humans to obtain, express, and transmit information. Digital image processing, that is, processing images with a computer, has a long history of development. Digital image processing technology originated in the 1920s, when a photo was transmitted from London, England, to New York via a submarine cable using digital compression technology. Digital image processing technology can help people understand the world more objectively and accurately. The human visual system provides humans with more than 3/4 of the information they receive from the outside world, and images and graphics are the carriers of all visual information. Although the human eye has powerful discrimination ability and can recognize thousands of colors, in many cases an image appears blurred or is even invisible to it. Image enhancement technology can make such blurred or even invisible images clear and bright. Existing research results in this area indicate that this line of work is feasible [ 26 , 27 ].

It is precisely because of the importance of image processing technology that many researchers have studied it and achieved fruitful results. As this research has deepened, however, it has tended to focus on depth within individual aspects of image processing. The application of image processing technology is a systems engineering problem: in addition to depth, it also requires a systematic view. Research on a unified model covering multiple aspects of image applications will therefore undoubtedly promote the application of image processing technology. The wavelet transform has been successfully applied in many fields of image processing. This paper therefore uses the wavelet transform to establish a unified model, and carries out simulation studies on image filtering, watermark hiding, encryption and decryption, and image compression. The results show that the model achieves good results.

Abbreviations

CA: Cellular automata

CGH: Computer generated hologram

DCT: Discrete cosine transform

EPWIC: Embedded Prediction Wavelet Image Coder

HVS: Human visual system

LSB: Least significant bits

VOD: Video on demand

WT: Wavelet transform

References

1. H.W. Zhang, The research and implementation of image denoising method based on Matlab. Journal of Daqing Normal University 36 (3), 1–4 (2016)

2. J.H. Hou, J.W. Tian, J. Liu, Analysis of the errors in locally adaptive wavelet domain Wiener filter and image denoising. Acta Photonica Sinica 36 (1), 188–191 (2007)

3. M. Lebrun, An analysis and implementation of the BM3D image denoising method. Image Processing On Line 2 (25), 175–213 (2012)

4. A. Fathi, A.R. Naghsh-Nilchi, Efficient image denoising method based on a new adaptive wavelet packet thresholding function. IEEE Transactions on Image Processing 21 (9), 3981 (2012)

5. X. Zhang, X. Feng, W. Wang, et al., Gradient-based Wiener filter for image denoising. Comput. Electr. Eng. 39 (3), 934–944 (2013)

6. T. Chen, K.K. Ma, L.H. Chen, Tri-state median filter for image denoising. IEEE Transactions on Image Processing 8 (12), 1834 (1999)

7. S.M.M. Rahman, M.K. Hasan, Wavelet-domain iterative center weighted median filter for image denoising. Signal Process. 83 (5), 1001–1012 (2003)

8. H.L. Eng, K.K. Ma, Noise adaptive soft-switching median filter for image denoising, in IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '00), Proceedings, vol. 4 (IEEE, 2000), pp. 2175–2178

9. S.G. Chang, B. Yu, M. Vetterli, Adaptive wavelet thresholding for image denoising and compression. IEEE Transactions on Image Processing 9 (9), 1532 (2000)

10. M. Kivanc Mihcak, I. Kozintsev, K. Ramchandran, et al., Low-complexity image denoising based on statistical modeling of wavelet coefficients. IEEE Signal Processing Letters 6 (12), 300–303 (1999)

11. J.H. Wu, F.Z. Lin, Image authentication based on digital watermarking. Chinese Journal of Computers 9 , 1153–1161 (2004)

12. A. Wakatani, Digital watermarking for ROI medical images by using compressed signature image, in Hawaii International Conference on System Sciences. IEEE (2002), pp. 2043–2048

13. A.H. Paquet, R.K. Ward, I. Pitas, Wavelet packets-based digital watermarking for image verification and authentication. Signal Process. 83 (10), 2117–2132 (2003)

14. Z. Wen, L.I. Taoshen, Z. Zhang, An image encryption technology based on chaotic sequences. Comput. Eng. 31 (10), 130–132 (2005)

15. Y.Y. Wang, Y.R. Wang, Y. Wang, et al., Optical image encryption based on binary Fourier transform computer-generated hologram and pixel scrambling technology. Optics & Lasers in Engineering 45 (7), 761–765 (2007)

16. X.Y. Zhang, C. Wang, S.M. Li, et al., Image encryption technology on two-dimensional cellular automata. Journal of Optoelectronics Laser 19 (2), 242–245 (2008)

17. A.S. Lewis, G. Knowles, Image compression using the 2-D wavelet transform. IEEE Trans. Image Process. 1 (2), 244–250 (2002)

18. R.A. Devore, B. Jawerth, B.J. Lucier, Image compression through wavelet transform coding. IEEE Trans. Inf. Theory 38 (2), 719–746 (1992)

19. R.W. Buccigrossi, E.P. Simoncelli, Image compression via joint statistical characterization in the wavelet domain. IEEE Transactions on Image Processing 8 (12), 1688–1701 (1999)

20. A.A. Cruzroa, J.E. Arevalo Ovalle, A. Madabhushi, et al., A deep learning architecture for image representation, visual interpretability and automated basal-cell carcinoma cancer detection. Med Image Comput Comput Assist Interv. 16 , 403–410 (2013)

21. S.P. Mohanty, D.P. Hughes, M. Salathé, Using deep learning for image-based plant disease detection. Front. Plant Sci. 7 , 1419 (2016)

22. B. Sahiner, H. Chan, D. Wei, et al., Image feature selection by a genetic algorithm: application to classification of mass and normal breast tissue. Med. Phys. 23 (10), 1671 (1996)

23. B. Bhanu, S. Lee, J. Ming, Adaptive image segmentation using a genetic algorithm. IEEE Transactions on Systems Man & Cybernetics 25 (12), 1543–1567 (2002)

24. Y. Egusa, H. Akahori, A. Morimura, et al., An application of fuzzy set theory for an electronic video camera image stabilizer. IEEE Trans. Fuzzy Syst. 3 (3), 351–356 (1995)

25. K. Hasikin, N.A.M. Isa, Enhancement of the low contrast image using fuzzy set theory, in UKSim International Conference on Computer Modelling and Simulation. IEEE (2012), pp. 371–376

26. P. Yang, Q. Li, Wavelet transform-based feature extraction for ultrasonic flaw signal classification. Neural Comput. & Applic. 24 (3–4), 817–826 (2014)

27. R.K. Lama, M.-R. Choi, G.-R. Kwon, Image interpolation for high-resolution display based on the complex dual-tree wavelet transform and hidden Markov model. Multimedia Tools Appl. 75 (23), 16487–16498 (2016)

Acknowledgements

The authors thank the editor and anonymous reviewers for their helpful comments and valuable suggestions.

This work was supported by:

- Shandong social science planning research project in 2018. Topic: The Application of Shandong Folk Culture in Animation in The View of Digital Media (No. 18CCYJ14).

- Shandong education science 12th five-year plan 2015. Topic: Innovative Research on Stop-motion Animation in The Digital Media Age (No. YB15068).

- Shandong education science 13th five-year plan 2016–2017. Approval of “Ports and Arts Education Special Fund”: BCA2017017. Topic: Reform of Teaching Methods of Hand Drawn Presentation Techniques (No. BCA2017017).

- National Research Youth Project of state ethnic affairs commission in 2018. Topic: Protection and Development of Villages with Ethnic Characteristics Under the Background of Rural Revitalization Strategy (No. 2018-GMC-020).

Availability of data and materials

Authors can provide the data.

About the authors

Zaozhuang University, No. 1 Beian Road., Shizhong District, Zaozhuang City, Shandong, P.R. China.

Lina Zhang was born in Jining, Shandong, P.R. China, in 1983. She received a Master's degree from Bohai University, P.R. China. She now works in the School of Media, Zaozhuang University, P.R. China. Her research interests include animation and digital media art.

Lijuan Zhang was born in Jining, Shandong, P.R. China, in 1983. She received a Master's degree from Jingdezhen Ceramic Institute, P.R. China. She now works in the School of Fine Arts and Design, Zaozhuang University, P.R. China. Her research interests include interior design and digital media art.

Liduo Zhang was born in Zaozhuang, Shandong, P.R. China, in 1982. He received a Master's degree from Monash University, Australia. He now works in the School of Economics and Management, Zaozhuang University. His research interests include Internet finance and digital media.

Author information

Authors and affiliations.

School of Media, Zaozhuang University, Zaozhuang, Shandong, China

Lina Zhang

School of Fine Arts and Design, Zaozhuang University, Zaozhuang, Shandong, China

Lijuan Zhang

School of Economics and Management, Zaozhuang University, Zaozhuang, Shandong, China

Liduo Zhang

Contributions

All authors took part in the discussion of the work described in this paper. LZ wrote the first version of the paper. LZ and LZ performed part of the experiments, and LZ revised successive versions of the paper. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Lijuan Zhang.

Ethics declarations

Competing interests.

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article.

Zhang, L., Zhang, L. & Zhang, L. Application research of digital media image processing technology based on wavelet transform. J Image Video Proc. 2018 , 138 (2018). https://doi.org/10.1186/s13640-018-0383-6

Received: 28 September 2018

Accepted: 23 November 2018

Published: 05 December 2018

DOI: https://doi.org/10.1186/s13640-018-0383-6

Keywords

- Image processing

- Digital watermark

- Image denoising

- Image encryption

- Image compression

digital image processing Recently Published Documents

Developing Digital Photomicroscopy

(1) The need for efficient ways of recording and presenting multicolour immunohistochemistry images in a pioneering laboratory developing new techniques motivated a move away from photography to electronic and ultimately digital photomicroscopy. (2) Initially broadcast quality analogue cameras were used in the absence of practical digital cameras. This allowed the development of digital image processing, storage and presentation. (3) As early adopters of digital cameras, their advantages and limitations were recognised in implementation. (4) The adoption of immunofluorescence for multiprobe detection prompted further developments, particularly a critical approach to probe colocalization. (5) Subsequently, whole-slide scanning was implemented, greatly enhancing histology for diagnosis, research and teaching.

Parallel Algorithm of Digital Image Processing Based on GPU

Quantitative identification cracks of heritage rock based on digital image technology.