Hypothesis in Machine Learning

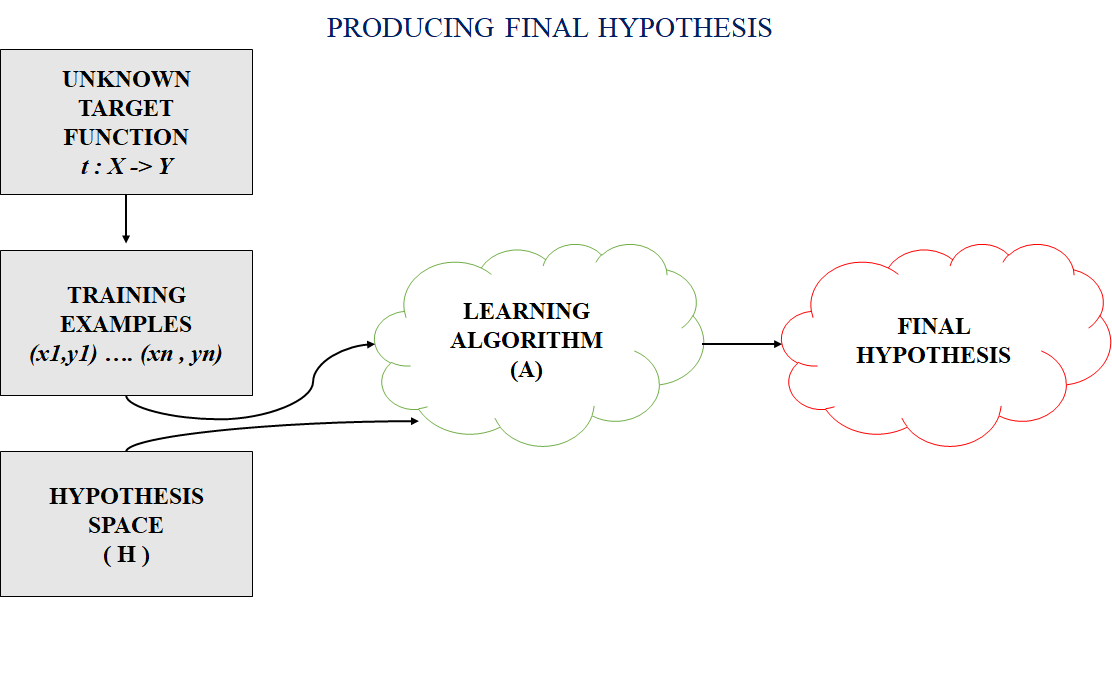

The concept of a hypothesis is fundamental in Machine Learning and data science endeavours. In the realm of machine learning, a hypothesis serves as an initial assumption made by data scientists and ML professionals when attempting to address a problem. Machine learning involves conducting experiments based on past experiences, and these hypotheses are crucial in formulating potential solutions.

It’s important to note that in machine learning discussions, the terms “hypothesis” and “model” are sometimes used interchangeably. However, a hypothesis represents an assumption, while a model is a mathematical representation employed to test that hypothesis. This section on “Hypothesis in Machine Learning” explores key aspects related to hypotheses in machine learning and their significance.

Table of Content

- How does a Hypothesis work?

- Hypothesis Space and Representation in Machine Learning

- Hypothesis in Statistics

- FAQs on Hypothesis in Machine Learning

A hypothesis in machine learning is the model’s assumption about the relationship between the input features and the output. It represents the mapping function that the algorithm is trying to learn from the training set. To minimize the discrepancy between the predicted and actual outputs, the learning process adjusts the weights that parameterize the hypothesis. The objective is to optimize the model’s parameters to achieve the best predictive performance on new, unseen data, and a cost function is used to assess the hypothesis’ accuracy.

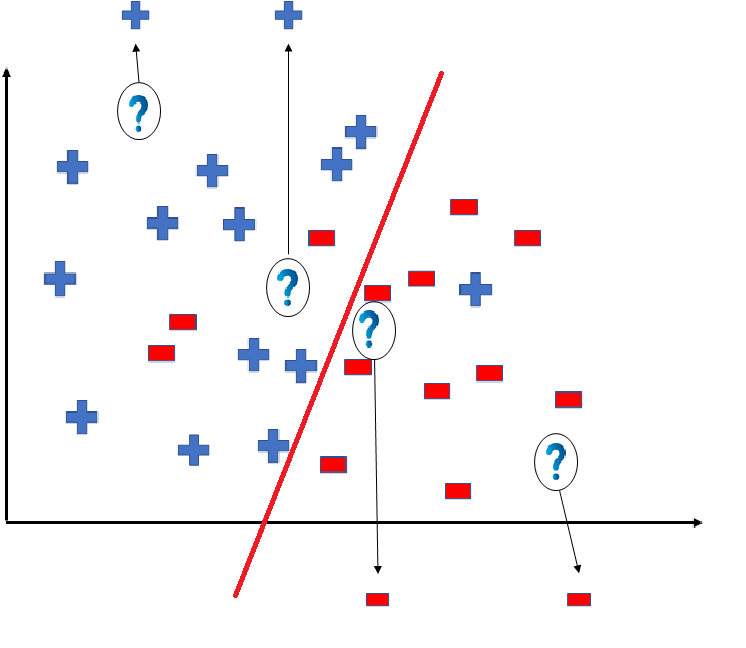

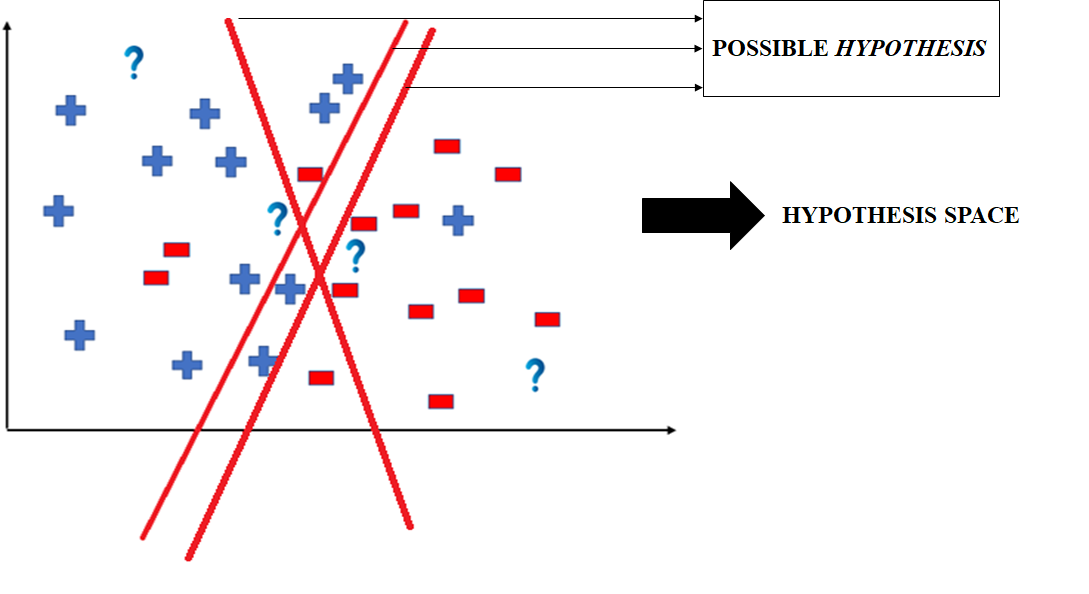

In most supervised machine learning algorithms, our main goal is to find a hypothesis from the hypothesis space that maps the inputs to the proper outputs. The figure shows the common method for finding such a hypothesis in the hypothesis space:

Hypothesis Space (H)

Hypothesis space is the set of all possible legal hypotheses. This is the set from which the machine learning algorithm selects the single hypothesis that best describes the target function or the outputs.

Hypothesis (h)

A hypothesis is a function that best describes the target in supervised machine learning. The hypothesis that an algorithm comes up with depends on the data and also on the restrictions and bias that we have imposed on the data.

The Hypothesis can be calculated as:

[Tex]y = mx + b [/Tex]

- m = slope of the line

- b = intercept
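As a minimal sketch of the line hypothesis above, the following Python snippet evaluates y = mx + b for given parameters. The function name `linear_hypothesis` and the sample values are illustrative assumptions and do not come from the original article.

```python
def linear_hypothesis(x, m, b):
    """Evaluate the hypothesis h(x) = m * x + b for a single input x."""
    return m * x + b

# Two different (m, b) pairs are two different hypotheses from the same space.
h1 = linear_hypothesis(3.0, m=2.0, b=1.0)    # 2 * 3 + 1
h2 = linear_hypothesis(3.0, m=-1.0, b=0.5)   # -1 * 3 + 0.5
print(h1, h2)
```

Each choice of slope and intercept gives one hypothesis; the set of all such choices is the hypothesis space for this model.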

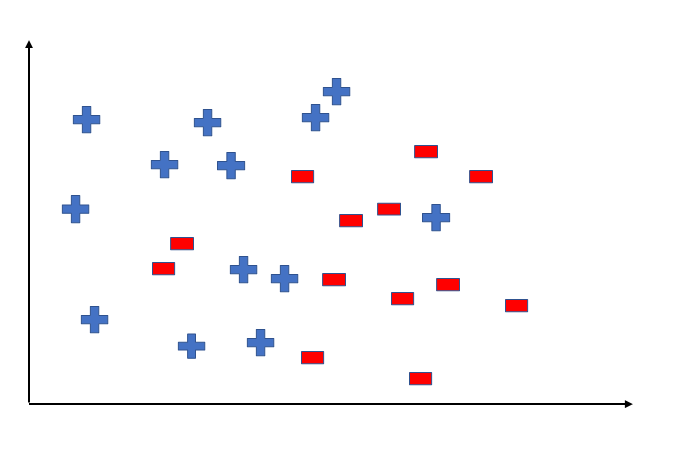

To better understand the Hypothesis Space and Hypothesis, consider the following coordinate plane that shows the distribution of some data:

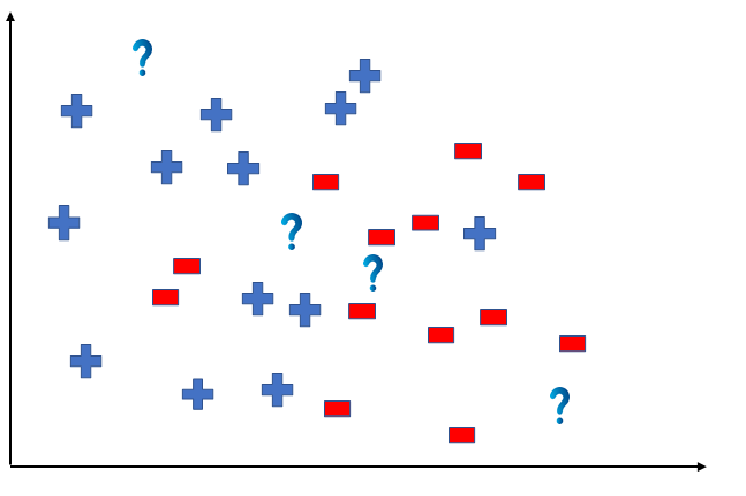

Suppose we have test data for which we have to determine the outputs or results. The test data is shown below:

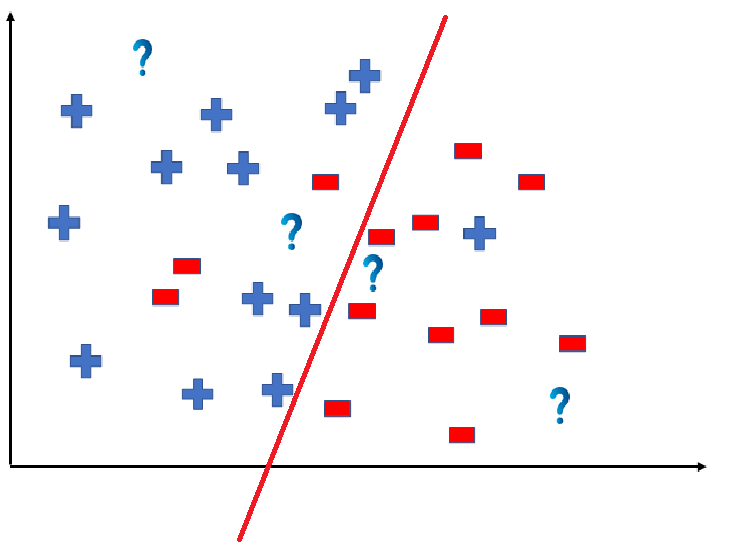

We can predict the outcomes by dividing the coordinate plane as shown below:

So the test data would yield the following result:

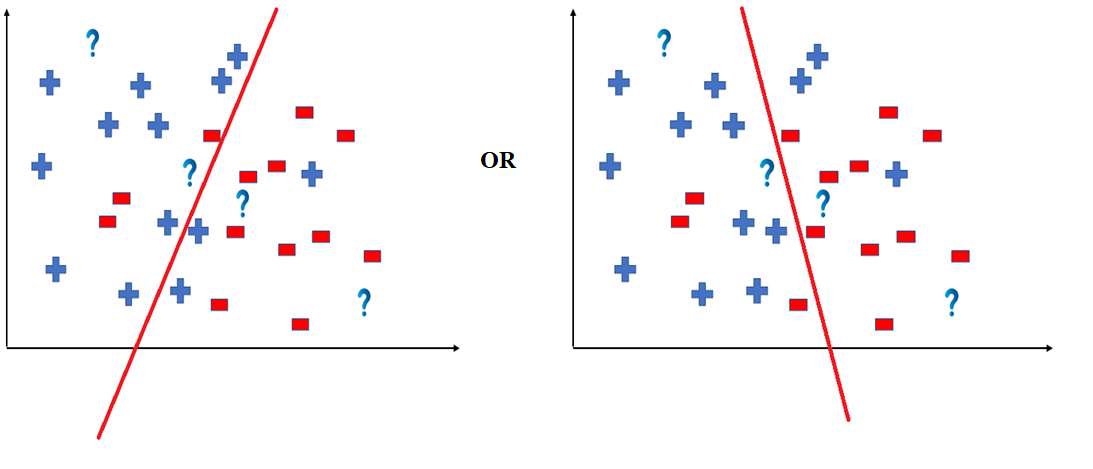

But note here that we could have divided the coordinate plane as:

The way in which the coordinate plane is divided depends on the data, the algorithm, and the constraints.

- All the legal ways in which we can divide the coordinate plane to predict the outcome of the test data together make up the Hypothesis Space.

- Each individual possible way is known as the hypothesis.

Hence, in this example the hypothesis space would be like:

The hypothesis space comprises all possible legal hypotheses that a machine learning algorithm can consider. Hypotheses are formulated based on various algorithms and techniques, including linear regression, decision trees, and neural networks. These hypotheses capture the mapping function transforming input data into predictions.

Hypothesis Formulation and Representation in Machine Learning

Hypotheses in machine learning are formulated based on various algorithms and techniques, each with its representation. For example:

- Linear Regression : [Tex]h(X) = \theta_0 + \theta_1 X_1 + \theta_2 X_2 + \dots + \theta_n X_n[/Tex]

- Decision Trees : [Tex]h(X) = \text{Tree}(X)[/Tex]

- Neural Networks : [Tex]h(X) = \text{NN}(X)[/Tex]

In the case of complex models like neural networks, the hypothesis may involve multiple layers of interconnected nodes, each performing a specific computation.

Hypothesis Evaluation:

The process of machine learning involves not only formulating hypotheses but also evaluating their performance. This evaluation is typically done using a loss function or an evaluation metric that quantifies the disparity between predicted outputs and ground truth labels. Common evaluation metrics include mean squared error (MSE), accuracy, precision, recall, F1-score, and others. By comparing the predictions of the hypothesis with the actual outcomes on a validation or test dataset, one can assess the effectiveness of the model.
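To make two of the metrics mentioned above concrete, here is a small sketch that computes mean squared error and accuracy from scratch. The helper names and the toy values are assumptions chosen for illustration only.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Toy regression targets and predictions (made-up values).
mse = mean_squared_error([3.0, 5.0, 7.0], [2.5, 5.0, 8.0])

# Toy classification labels and predictions (made-up values).
acc = accuracy([1, 0, 1, 1], [1, 0, 0, 1])

print(mse, acc)
```

In practice these would be evaluated on a validation or test set rather than on the training data, as the paragraph above notes.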

Hypothesis Testing and Generalization:

Once a hypothesis is formulated and evaluated, the next step is to test its generalization capabilities. Generalization refers to the ability of a model to make accurate predictions on unseen data. A hypothesis that performs well on the training dataset but fails to generalize to new instances is said to suffer from overfitting. Conversely, a hypothesis that generalizes well to unseen data is deemed robust and reliable.

The process of hypothesis formulation, evaluation, testing, and generalization is often iterative in nature. It involves refining the hypothesis based on insights gained from model performance, feature importance, and domain knowledge. Techniques such as hyperparameter tuning, feature engineering, and model selection play a crucial role in this iterative refinement process.

In statistics, a hypothesis refers to a statement or assumption about a population parameter. It is a proposition or educated guess that helps guide statistical analyses. There are two types of hypotheses: the null hypothesis (H0) and the alternative hypothesis (H1 or Ha).

- Null Hypothesis (H0): This hypothesis suggests that there is no significant difference or effect, and any observed results are due to chance. It often represents the status quo or a baseline assumption.

- Alternative Hypothesis (H1 or Ha): This hypothesis contradicts the null hypothesis, proposing that there is a significant difference or effect in the population. It is what researchers aim to support with evidence.

Q. How does the training process use the hypothesis?

The learning algorithm uses the hypothesis as a guide to minimise the discrepancy between expected and actual outputs by adjusting its parameters during training.

Q. How is the hypothesis’s accuracy assessed?

Usually, a cost function that calculates the difference between predicted and actual values is used to assess accuracy. The aim is to optimise the model so that this cost is reduced.

Q. What is Hypothesis testing?

Hypothesis testing is a statistical method for deciding whether the data provide enough evidence to reject a hypothesis. The hypothesis can be about two variables in a dataset, about an association between two groups, or about a situation.

Q. What distinguishes the null hypothesis from the alternative hypothesis in machine learning experiments?

The null hypothesis (H0) assumes no significant effect, while the alternative hypothesis (H1 or Ha) contradicts H0, suggesting a meaningful impact. Statistical testing is employed to decide between these hypotheses.

Machine Learning Theory - Part 2: Generalization Bounds

Last time we concluded by noticing that minimizing the empirical risk (or the training error) is not in itself a solution to the learning problem; it can only be considered a solution if we can guarantee that the difference between the training error and the generalization error (which is also called the generalization gap) is small enough. We formalized this requirement using the probability:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R(h) - R_\text{emp}(h)| > \epsilon\right]\]

That is if this probability is small, we can guarantee that the difference between the errors is not much, and hence the learning problem can be solved.

In this part we’ll start investigating that probability in depth and see if it indeed can be small, but before starting you should note that I skipped a lot of the mathematical proofs here. You’ll often see phrases like “It can be proved that …”, “One can prove …”, “It can be shown that …”, etc. without the actual proof being given. This is to make the post easier to read and to focus all the effort on the conceptual understanding of the subject. In case you wish to get your hands dirty with proofs, you can find all of them in the additional readings, or on the Internet of course!

Independently, and Identically Distributed

The world can be a very messy place! This is a problem that faces any theoretical analysis of a real-world phenomenon, because usually we can’t really capture all the messiness in mathematical terms, and even if we’re able to, we usually don’t have the tools to get any results from such a messy mathematical model.

So in order for theoretical analysis to move forward, some assumptions must be made to simplify the situation at hand; we can then use the theoretical results from that simplification to infer about reality.

Assumptions are common practice in theoretical work. Assumptions are not bad in themselves, only bad assumptions are bad! As long as our assumptions are reasonable and not crazy, they’ll hold significant truth about reality.

A reasonable assumption we can make about the problem we have at hand is that our training dataset samples are independently, and identically distributed (or i.i.d. for short), that means that all the samples are drawn from the same probability distribution and that each sample is independent from the others.

This assumption is essential for us. We need it to start using the tools from probability theory to investigate our generalization probability, and it’s a very reasonable assumption because:

- It’s more likely for a dataset used for inferring about an underlying probability distribution to be all sampled from that same distribution. If this is not the case, then the statistics we get from the dataset will be noisy and won’t correctly reflect the target underlying distribution.

- It’s more likely that each sample in the dataset is chosen without considering any other sample that has been chosen before or will be chosen after. If that’s not the case and the samples are dependent, then the dataset will suffer from a bias towards a specific direction in the distribution, and hence will fail to reflect the underlying distribution correctly.

So we can build upon that assumption with no fear.

The Law of Large Numbers

Most of us, since we were kids, know that if we toss a fair coin a large number of times, roughly half of the time we’re gonna get heads. This is an instance of a widely known fact about probability: if we repeat an experiment a sufficiently large number of times, the average outcome of these experiments (or, more formally, the sample mean) will be very close to the true mean of the underlying distribution. This fact is formally captured in what we call the law of large numbers:

If $x_1, x_2, \dots, x_m$ are $m$ i.i.d. samples of a random variable $X$ distributed by $P$, then for a small positive non-zero value $\epsilon$: \[\lim_{m \rightarrow \infty} \mathbb{P}\left[\left|\mathop{\mathbb{E}}_{X \sim P}[X] - \frac{1}{m}\sum_{i=1}^{m}x_i \right| > \epsilon\right] = 0\]

This version of the law is called the weak law of large numbers. It’s weak because it only guarantees that as the sample size grows larger, the sample and true means will likely be very close to each other, within a non-zero distance no greater than $\epsilon$. On the other hand, the strong version says that with a very large sample size, the sample mean is almost surely equal to the true mean.
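The coin-toss intuition can be checked with a short simulation. This is only an illustrative sketch: the function name, the seed, and the sample sizes are arbitrary assumptions, and the printed deviations will vary with the seed.

```python
import random

def sample_mean_of_coin(m, rng):
    """Toss a fair coin m times and return the fraction of heads."""
    return sum(rng.random() < 0.5 for _ in range(m)) / m

rng = random.Random(0)  # fixed seed so the run is repeatable
for m in (10, 100, 10_000, 100_000):
    # Distance between the sample mean and the true mean 0.5.
    print(m, abs(sample_mean_of_coin(m, rng) - 0.5))
```

The deviation from 0.5 typically shrinks as $m$ grows, which is exactly what the weak law describes, although any single run can fluctuate.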

The formulation of the weak law lends itself naturally to use with our generalization probability. By recalling that the empirical risk is actually the sample mean of the errors and the risk is the true mean, for a single hypothesis $h$ we can say that:

\[\lim_{m \rightarrow \infty} \mathbb{P}\left[\left|R(h) - R_\text{emp}(h)\right| > \epsilon\right] = 0\]

Well, that’s progress. A pretty small step, but still progress! Can we do any better?

Hoeffding’s inequality

The law of large numbers is like someone pointing the directions to you when you’re lost, they tell you that by following that road you’ll eventually reach your destination, but they provide no information about how fast you’re gonna reach your destination, what is the most convenient vehicle, should you walk or take a cab, and so on.

To reach our destination of ensuring that the training and generalization errors do not differ much, we need to know more about what the road down the law of large numbers looks like. This information is provided by what we call the concentration inequalities. This is a set of inequalities that quantify how much random variables (or functions of them) deviate from their expected values (or, also, functions of them). One of those inequalities is Hoeffding’s inequality:

If $x_1, x_2, \dots, x_m$ are $m$ i.i.d. samples of a random variable $X$ distributed by $P$, and $a \leq x_i \leq b$ for every $i$, then for a small positive non-zero value $\epsilon$: \[\mathbb{P}\left[\left|\mathop{\mathbb{E}}_{X \sim P}[X] - \frac{1}{m}\sum_{i=1}^{m}x_i\right| > \epsilon\right] \leq 2\exp\left(\frac{-2m\epsilon^2}{(b -a)^2}\right)\]

You probably see why we specifically chose Hoeffding’s inequality from among the others. We can naturally apply this inequality to our generalization probability, assuming that our errors are bounded between 0 and 1 (which is a reasonable assumption, as we can get that using a 0/1 loss function or by squashing any other loss between 0 and 1) and get for a single hypothesis $h$:

\[\mathbb{P}\left[|R(h) - R_\text{emp}(h)| > \epsilon\right] \leq 2\exp(-2m\epsilon^2)\]

This means that the probability of the difference between the training and the generalization errors exceeding $\epsilon$ decays exponentially as the dataset size grows larger. This should align well with our practical experience that the bigger the dataset gets, the better the results become.
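As a rough numerical check, the sketch below compares the Hoeffding bound $2\exp(-2m\epsilon^2/(b-a)^2)$ with the observed frequency of large deviations for fair coin tosses (values in $[0, 1]$). The function names, the seed, $\epsilon = 0.1$ and the trial counts are assumptions picked for illustration.

```python
import math
import random

def hoeffding_bound(m, eps, a=0.0, b=1.0):
    """Right-hand side of Hoeffding's inequality for values in [a, b]."""
    return 2.0 * math.exp(-2.0 * m * eps ** 2 / (b - a) ** 2)

def empirical_deviation_rate(m, eps, trials, rng, p=0.5):
    """Fraction of trials where |sample mean - p| > eps for Bernoulli(p) draws."""
    exceed = 0
    for _ in range(trials):
        mean = sum(rng.random() < p for _ in range(m)) / m
        if abs(mean - p) > eps:
            exceed += 1
    return exceed / trials

rng = random.Random(0)
for m in (50, 200, 800):
    # Observed deviation frequency next to the theoretical upper bound.
    print(m, empirical_deviation_rate(m, 0.1, 2000, rng), hoeffding_bound(m, 0.1))
```

The observed frequency should sit below the bound for each $m$, and both shrink quickly as $m$ increases. The bound is usually far from tight, which is expected since it has to hold for any bounded distribution.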

If you noticed, all our analysis up till now was focusing on a single hypothesis $h$. But the learning problem doesn’t know that single hypothesis beforehand, it needs to pick one out of an entire hypothesis space $\mathcal{H}$, so we need a generalization bound that reflects the challenge of choosing the right hypothesis.

Generalization Bound: 1st Attempt

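In order for the entire hypothesis space to have a generalization gap bigger than $\epsilon$, at least one of its hypotheses ($h_1$ or $h_2$ or $h_3$ or … etc) should have such a gap. This can be expressed formally by stating that:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R(h) - R_\text{emp}(h)| > \epsilon\right] = \mathbb{P}\left[\bigcup_{h \in \mathcal{H}} |R(h) - R_\text{emp}(h)| > \epsilon\right]\]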

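Where $\bigcup$ denotes the union of the events, which also corresponds to the logical OR operator. Using the union bound inequality, we get:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R(h) - R_\text{emp}(h)| > \epsilon\right] \leq \sum_{h \in \mathcal{H}} \mathbb{P}\left[|R(h) - R_\text{emp}(h)| > \epsilon\right]\]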

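We know exactly the bound on the probability under the summation from our analysis using Hoeffding’s inequality, so we end up with:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R(h) - R_\text{emp}(h)| > \epsilon\right] \leq 2|\mathcal{H}|\exp(-2m\epsilon^2)\]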

Where $|\mathcal{H}|$ is the size of the hypothesis space. By denoting the right hand side of the above inequality by $\delta$, we can say that with a confidence $1 - \delta$:

\[|R(h) - R_\text{emp}(h)| \leq \epsilon \Rightarrow R(h) \leq R_\text{emp}(h) + \epsilon\]

And with some basic algebra, we can express $\epsilon$ in terms of $\delta$ and get:

\[R(h) \leq R_\text{emp}(h) + \sqrt{\frac{\ln|\mathcal{H}| + \ln\frac{2}{\delta}}{2m}}\]

This is our first generalization bound; it states that the generalization error is bounded by the training error plus a function of the hypothesis space size and the dataset size. We can also see that the bigger the hypothesis space gets, the bigger the bound on the generalization error becomes. This explains why the memorization hypothesis from last time, which theoretically has $|\mathcal{H}| = \infty$, fails miserably as a solution to the learning problem despite having $R_\text{emp} = 0$; because for the memorization hypothesis $h_\text{mem}$:

\[R(h_\text{mem}) \leq 0 + \infty \leq \infty\]

But wait a second! For a linear hypothesis of the form $h(x) = wx + b$, we also have $|\mathcal{H}| = \infty$ as there are infinitely many lines that can be drawn. So the generalization error of the linear hypothesis space should be unbounded just as the memorization hypothesis! If that’s true, why do perceptrons, logistic regression, support vector machines and essentially any ML model that uses a linear hypothesis work?

Our theoretical result was able to account for some phenomena (the memorization hypothesis, and any finite hypothesis space) but not for others (the linear hypothesis, or other infinite hypothesis spaces that empirically work). This means that there’s still something missing from our theoretical model, and it’s time for us to revise our steps. A good starting point is from the source of the problem itself, which is the infinity in $|\mathcal{H}|$.
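To see how the size of the hypothesis space drives this first bound, the sketch below evaluates the gap term obtained by setting $\delta = 2|\mathcal{H}|\exp(-2m\epsilon^2)$ and solving for $\epsilon$, which gives $\epsilon = \sqrt{(\ln|\mathcal{H}| + \ln(2/\delta))/(2m)}$. The function name and the sample values are assumptions for illustration.

```python
import math

def finite_class_gap(h_size, m, delta):
    """Gap term sqrt((ln|H| + ln(2/delta)) / (2m)) for a finite hypothesis space."""
    return math.sqrt((math.log(h_size) + math.log(2.0 / delta)) / (2.0 * m))

# The gap grows (slowly) with |H| and shrinks with the dataset size m.
for h_size in (10, 10 ** 3, 10 ** 6, 10 ** 12):
    print(h_size, finite_class_gap(h_size, m=10_000, delta=0.05))
```

The dependence on $|\mathcal{H}|$ is only logarithmic, but it still diverges as $|\mathcal{H}| \rightarrow \infty$, which is the problem described above.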

Notice that the term $|\mathcal{H}|$ resulted from our use of the union bound. The basic idea of the union bound is that it bounds the probability by the worst case possible, which is when the events under the union never occur together (strictly speaking, when they are mutually exclusive; we’ll loosely call such unrelated events independent in what follows). The bound gets tighter as the events under consideration overlap less. In our case, for the bound to be tight and reasonable, we need the following to be true:

For every two hypotheses $h_1, h_2 \in \mathcal{H}$ the two events $|R(h_1) - R_\text{emp}(h_1)| > \epsilon$ and $|R(h_2) - R_\text{emp}(h_2)| > \epsilon$ are likely to be independent. This means that the event that $h_1$ has a generalization gap bigger than $\epsilon$ should be independent of the event that $h_2$ also has a generalization gap bigger than $\epsilon$, no matter how close or related $h_1$ and $h_2$ are; the events should be coincidental.

But is that true?

Examining the Independence Assumption

The first question we need to ask here is why do we need to consider every possible hypothesis in $\mathcal{H}$? This may seem like a trivial question, as the answer is simply that the learning algorithm can search the entire hypothesis space looking for its optimal solution. While this answer is correct, we need a more formal answer in light of the generalization inequality we’re studying.

The formulation of the generalization inequality reveals a main reason why we need to consider all the hypotheses in $\mathcal{H}$. It has to do with the existence of $\sup_{h \in \mathcal{H}}$. The supremum in the inequality guarantees that there’s very little chance that the biggest generalization gap possible is greater than $\epsilon$; this is a strong claim and if we omit a single hypothesis out of $\mathcal{H}$, we might miss that “biggest generalization gap possible” and lose that strength, and that’s something we cannot afford to lose. We need to be able to make that claim to ensure that the learning algorithm would never land on a hypothesis with a bigger generalization gap than $\epsilon$.

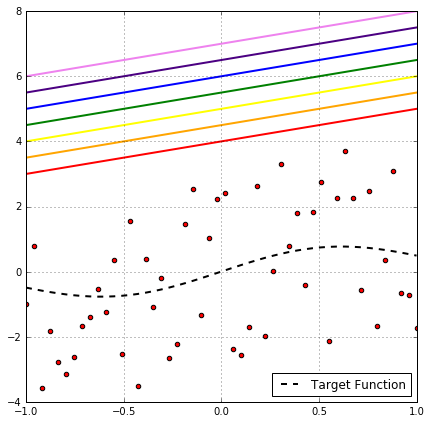

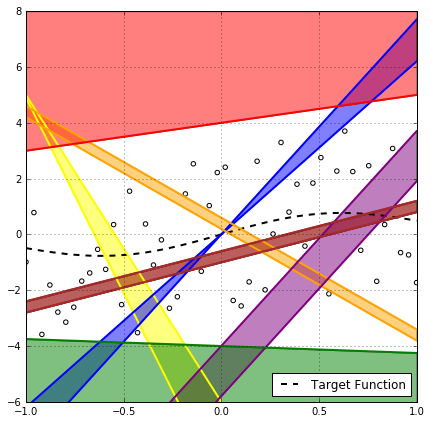

Looking at the above plot of a binary classification problem, it’s clear that this rainbow of hypotheses produces the same classification on the data points, so all of them have the same empirical risk. So one might think, as they all have the same $R_\text{emp}$, why not choose one and omit the others?!

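This would be a very good solution if we’re only interested in the empirical risk, but our inequality takes into its consideration the out-of-sample risk as well, which, for a loss function $L$ and a joint distribution $P(x, y)$ over inputs and outputs, is expressed as:

\[R(h) = \mathop{\mathbb{E}}_{(x,y) \sim P}[L(y, h(x))] = \int_{\mathcal{Y}}\int_{\mathcal{X}} L(y, h(x))\,P(x, y)\,\mathrm{d}x\,\mathrm{d}y\]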

This is an integration over every possible combination of the whole input and output spaces $\mathcal{X, Y}$. So in order to ensure our supremum claim, we need the hypothesis to cover the whole of $\mathcal{X \times Y}$, hence we need all the possible hypotheses in $\mathcal{H}$.

Now that we’ve established that we do need to consider every single hypothesis in $\mathcal{H}$, we can ask ourselves: are the events of each hypothesis having a big generalization gap likely to be independent?

Well, not even close! Take for example the rainbow of hypotheses in the above plot: it’s very clear that if the red hypothesis has a generalization gap greater than $\epsilon$, then, with 100% certainty, every hypothesis with the same slope in the region above it will also have that. The same argument can be made for many different regions in the $\mathcal{X \times Y}$ space with different degrees of certainty as in the following figure.

But this is not helpful for our mathematical analysis, as the regions seem to be dependent on the distribution of the sample points and there is no way we can precisely capture these dependencies mathematically, and we cannot make assumptions about them without risking compromising the supremum claim.

So the union bound and the independence assumption seem like the best approximation we can make, but it highly overestimates the probability and makes the bound very loose, and very pessimistic!

However, what if somehow we can get a very good estimate of the risk $R(h)$ without needing to go over the whole of the $\mathcal{X \times Y}$ space, would there be any hope to get a better bound?

The Symmetrization Lemma

Let’s think for a moment about something we do usually in machine learning practice. In order to measure the accuracy of our model, we hold out a part of the training set to evaluate the model on after training, and we consider the model’s accuracy on this left out portion as an estimate for the generalization error. This works because we assume that this test set is drawn i.i.d. from the same distribution of the training set (this is why we usually shuffle the whole dataset beforehand to break any correlation between the samples).

It turns out that we can do a similar thing mathematically, but instead of taking out a portion of our dataset $S$, we imagine that we have another dataset $S'$, also of size $m$; we call this the ghost dataset. Note that this has no practical implications, we don’t need to have another dataset at training, it’s just a mathematical trick we’re gonna use to get rid of the restrictions of $R(h)$ in the inequality.

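We’re not gonna go over the proof here, but using that ghost dataset one can actually prove that:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R(h) - R_\text{emp}(h)| > \epsilon\right] \leq 2\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R_\text{emp}(h) - R_\text{emp}'(h)| > \frac{\epsilon}{2}\right] \hspace{2em} (1)\]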

where $R_\text{emp}'(h)$ is the empirical risk of hypothesis $h$ on the ghost dataset. This means that the probability of the largest generalization gap being bigger than $\epsilon$ is at most twice the probability that the empirical risk difference between $S, S'$ is larger than $\frac{\epsilon}{2}$. Now that the right hand side is expressed only in terms of empirical risks, we can bound it without needing to consider the whole of $\mathcal{X \times Y}$, and hence we can bound the term with the risk $R(h)$ without considering the whole of the input and output spaces!

This, which is called the symmetrization lemma , was one of the two key parts in the work of Vapnik-Chervonenkis (1971).

The Growth Function

Now that we are bounding only the empirical risk, if we have many hypotheses that have the same empirical risk (a.k.a. producing the same labels/values on the data points), we can safely choose one of them as a representative of the whole group, we’ll call that an effective hypothesis, and discard all the others.

By only choosing the distinct effective hypotheses on the dataset $S$, we restrict the hypothesis space $\mathcal{H}$ to a smaller subspace that depends on the dataset $\mathcal{H}_{|S}$.

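We can assume the independence of the hypotheses in $\mathcal{H}_{|S}$ like we did before with $\mathcal{H}$ (but it’s more plausible now), and use the union bound to get that:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}_{|S \cup S'}}|R_\text{emp}(h) - R_\text{emp}'(h)| > \frac{\epsilon}{2}\right] \leq \left|\mathcal{H}_{|S \cup S'}\right| \mathbb{P}\left[|R_\text{emp}(h) - R_\text{emp}'(h)| > \frac{\epsilon}{2}\right]\]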

Notice that the hypothesis space is restricted by $S \cup S'$ because we’re using the empirical risk on both the original dataset $S$ and the ghost $S'$. The question now is what is the maximum size of a restricted hypothesis space? The answer is very simple; we consider a hypothesis to be a new effective one if it produces new labels/values on the dataset samples, so the maximum number of distinct hypotheses (a.k.a. the maximum size of the restricted space) is the maximum number of distinct labels/values the dataset points can take. A cool feature of that maximum size is that it’s a combinatorial measure, so we don’t need to worry about how the samples are distributed!

For simplicity, we’ll focus now on the case of binary classification, in which $\mathcal{Y}=\{-1, +1\}$. Later we’ll show that the same concepts can be extended to both multiclass classification and regression. In that case, for a dataset with $m$ samples, each of which can take one of two labels: either -1 or +1, the maximum number of distinct labellings is $2^m$.

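We’ll define the maximum number of distinct labellings/values on a dataset $S$ of size $m$ by a hypothesis space $\mathcal{H}$ as the growth function of $\mathcal{H}$ given $m$, and we’ll denote that by $\Delta_\mathcal{H}(m)$. It’s called the growth function because its value for a single hypothesis space $\mathcal{H}$ (a.k.a. the size of the restricted subspace $\mathcal{H}_{|S}$) grows as the size of the dataset grows. Now we can say that:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}_{|S \cup S'}}|R_\text{emp}(h) - R_\text{emp}'(h)| > \frac{\epsilon}{2}\right] \leq \Delta_\mathcal{H}(2m)\, \mathbb{P}\left[|R_\text{emp}(h) - R_\text{emp}'(h)| > \frac{\epsilon}{2}\right] \hspace{2em} (2)\]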

Notice that we used $2m$ because we have two datasets $S, S'$ each with size $m$.

For the binary classification case, we can say that:

\[\Delta_\mathcal{H}(m) \leq 2^m\]

But $2^m$ is exponential in $m$ and would grow too fast for large datasets, which makes the odds in our inequality go too bad too fast! Is that the best bound we can get on that growth function?

The VC-Dimension

The $2^m$ bound is based on the fact that the hypothesis space $\mathcal{H}$ can produce all the possible labellings on the $m$ data points. If a hypothesis space can indeed produce all the possible labels on a set of data points, we say that the hypothesis space shatters that set.

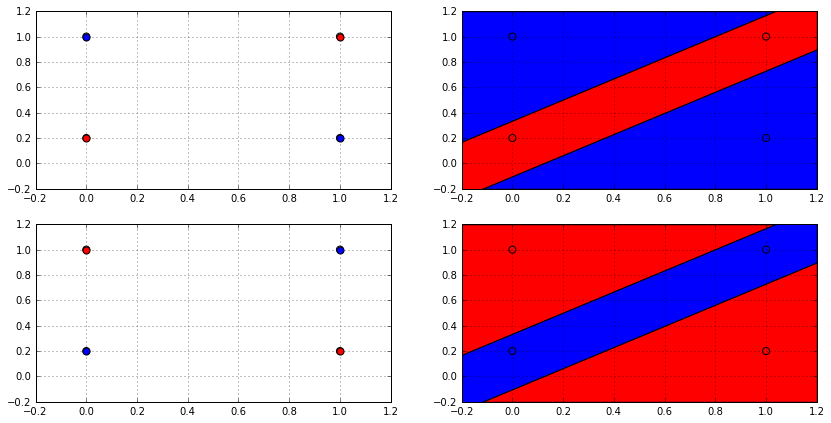

But can any hypothesis space shatter any dataset of any size? Let’s investigate that with the binary classification case and the $\mathcal{H}$ of linear classifiers $\mathrm{sign}(wx + b)$. The following animation shows how many ways a linear classifier in 2D can label 3 points (on the left) and 4 points (on the right).

In the animation, the whole space of possible effective hypotheses is swept. For the three points, the hypothesis space shattered the set of points and produced all the possible $2^3 = 8$ labellings. However, for the four points, the hypothesis space couldn’t get more than 14 and never reached $2^4 = 16$, so it failed to shatter this set of points. Actually, no linear classifier in 2D can shatter any set of 4 points, not just that set, because there will always be two labellings that cannot be produced by a linear classifier, which is depicted in the following figure.

From the decision boundary plot (on the right), it’s clear why no linear classifier can produce such labellings; as no linear classifier can divide the space in this way. So it’s possible for a hypothesis space $\mathcal{H}$ to be unable to shatter all sizes. This fact can be used to get a better bound on the growth function, and this is done using Sauer’s lemma :

If a hypothesis space $\mathcal{H}$ cannot shatter any dataset with size more than $k$, then: \[\Delta_{\mathcal{H}}(m) \leq \sum_{i=0}^{k}\binom{m}{i}\]

This was the other key part of Vapnik-Chervonenkis work (1971), but it’s named after another mathematician, Norbert Sauer; because it was independently proved by him around the same time (1972). However, Vapnik and Chervonenkis weren’t completely left out from this contribution; as that $k$, which is the maximum number of points that can be shattered by $\mathcal{H}$, is now called the Vapnik-Chervonenkis-dimension or the VC-dimension $d_{\mathrm{vc}}$ of $\mathcal{H}$.

For the case of the linear classifier in 2D, $d_\mathrm{vc} = 3$. In general, it can be proved that hyperplane classifiers (the higher-dimensional generalization of line classifiers) in $\mathbb{R}^n$ space have $d_\mathrm{vc} = n + 1$.
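The counts quoted earlier (8 labellings for three points, 14 for four) can be reproduced by brute force. The sketch below is one possible way to enumerate the labellings a 2D linear classifier $\mathrm{sign}(w \cdot x + b)$ can produce; the enumeration strategy, the function name `linear_dichotomies`, and the specific point sets are assumptions of this example rather than anything taken from the original animation. It relies on the observation that the ordering of the points along a direction $w$ only changes when $w$ is perpendicular to a segment joining two points, so it is enough to test one direction between each pair of consecutive "critical" angles.

```python
import itertools
import math

def linear_dichotomies(points):
    """Return the set of labellings of 2D `points` realizable by sign(w.x + b)."""
    # Critical angles: directions w perpendicular to a segment between two points.
    critical = set()
    for (x1, y1), (x2, y2) in itertools.combinations(points, 2):
        normal_angle = math.atan2(y2 - y1, x2 - x1) + math.pi / 2
        critical.add(normal_angle % math.pi)
    critical = sorted(critical)

    labellings = set()
    for k, low in enumerate(critical):
        # Pick a direction strictly between two consecutive critical angles.
        high = critical[k + 1] if k + 1 < len(critical) else critical[0] + math.pi
        theta = (low + high) / 2
        w = (math.cos(theta), math.sin(theta))
        order = sorted(range(len(points)),
                       key=lambda i: w[0] * points[i][0] + w[1] * points[i][1])
        # Sliding the threshold b along w labels a prefix of `order` as -1.
        for cut in range(len(points) + 1):
            labels = [1] * len(points)
            for i in order[:cut]:
                labels[i] = -1
            labellings.add(tuple(labels))
            labellings.add(tuple(-v for v in labels))  # same line, flipped sign
    return labellings

three = [(0, 0), (1, 0), (0, 1)]
four = [(0, 0), (1, 0), (0, 1), (1, 1)]
print(len(linear_dichotomies(three)), "of", 2 ** 3)
print(len(linear_dichotomies(four)), "of", 2 ** 4)
```

For the four corner points, the two labellings that never appear are the XOR-style ones in which diagonally opposite points share a label.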

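The bound on the growth function provided by Sauer’s lemma is indeed much better than the exponential one we already have; it’s actually polynomial! Using algebraic manipulation, we can prove that:

\[\Delta_\mathcal{H}(m) \leq \sum_{i=0}^{k}\binom{m}{i} \leq \left(\frac{me}{k}\right)^k \leq O(m^k)\]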

Where $O$ refers to the Big-O notation for a function’s asymptotic (near the limits) behavior, and $e$ is the mathematical constant.
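To get a feel for how much smaller the polynomial bound is, the following sketch compares the Sauer's lemma sum $\sum_{i=0}^{k}\binom{m}{i}$ with $2^m$ and with the standard closed-form upper bound $(me/k)^k$, which holds for $m \geq k \geq 1$. The function names and the choice $k = 3$ are illustrative assumptions.

```python
import math

def sauer_bound(m, k):
    """Sum of binomial coefficients C(m, 0) + ... + C(m, k)."""
    return sum(math.comb(m, i) for i in range(k + 1))

def polynomial_bound(m, k):
    """Closed-form bound (m * e / k) ** k, valid for m >= k >= 1."""
    return (m * math.e / k) ** k

k = 3  # e.g. the VC-dimension of a linear classifier in 2D
for m in (5, 10, 50, 100):
    print(m, sauer_bound(m, k), polynomial_bound(m, k), 2 ** m)
```

Note that for $m = 4$ and $k = 3$ the sum gives 15, slightly above the 14 labellings actually achievable by a 2D linear classifier on four points: the lemma is an upper bound, not an exact count.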

Thus we can use the VC-dimension as a proxy for the growth function and, hence, for the size of the restricted space $\mathcal{H}_{|S}$. In that case, $d_\mathrm{vc}$ would be a measure of the complexity or richness of the hypothesis space.

The VC Generalization Bound

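With a little change in the constants, it can be shown that Hoeffding’s inequality is applicable to the probability $\mathbb{P}\left[|R_\mathrm{emp}(h) - R_\mathrm{emp}'(h)| > \frac{\epsilon}{2}\right]$. With that, and by combining inequalities (1) and (2), the Vapnik-Chervonenkis theory follows:

\[\mathbb{P}\left[\sup_{h \in \mathcal{H}}|R(h) - R_\text{emp}(h)| > \epsilon\right] \leq 4\Delta_\mathcal{H}(2m)\exp\left(-\frac{m\epsilon^2}{8}\right)\]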

This can be re-expressed as a bound on the generalization error, just as we did earlier with the previous bound, to get the VC generalization bound:

\[R(h) \leq R_\text{emp}(h) + \sqrt{\frac{8\ln\Delta_\mathcal{H}(2m) + 8\ln\frac{4}{\delta}}{m}}\]

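or, by using the bound on the growth function in terms of $d_\mathrm{vc}$, as:

\[R(h) \leq R_\text{emp}(h) + \sqrt{\frac{8d_\mathrm{vc}\left(\ln\frac{2m}{d_\mathrm{vc}} + 1\right) + 8\ln\frac{4}{\delta}}{m}}\]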

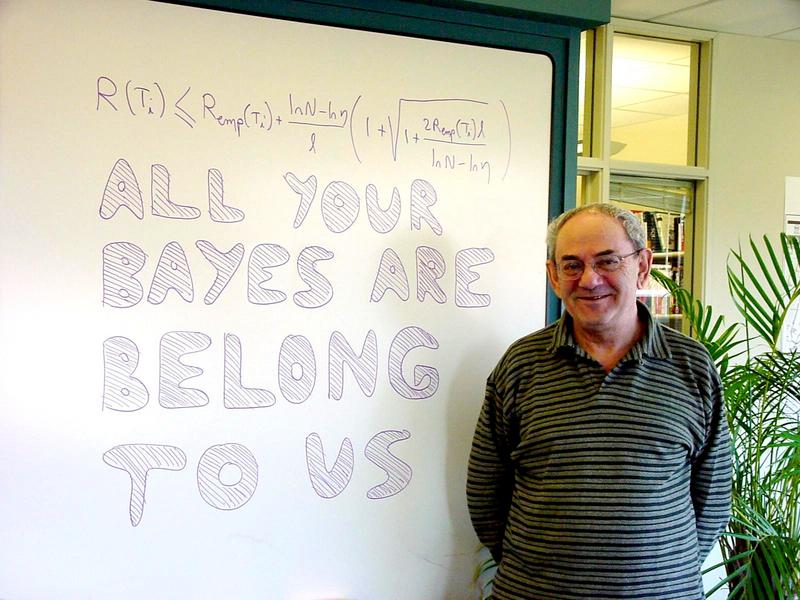

Professor Vapnik standing in front of a white board that has a form of the VC-bound and the phrase “All your bayes are belong to us”, which is a play on the broken English phrase found in the classic video game Zero Wing, in a claim that the VC framework of inference is superior to that of Bayesian inference. [Courtesy of Yann LeCun].

This is a significant result! It’s a clear and concise mathematical statement that the learning problem is solvable, and that for infinite hypothesis spaces there is a finite bound on their generalization error! Furthermore, this bound can be described in terms of a quantity ($d_\mathrm{vc}$) that solely depends on the hypothesis space and not on the distribution of the data points!
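To see how such a bound behaves numerically, here is a small sketch that evaluates a gap term of the commonly quoted form $\sqrt{\left(8 d_\mathrm{vc}(\ln(2m/d_\mathrm{vc}) + 1) + 8\ln(4/\delta)\right)/m}$. The exact constants are an assumption of this example (different texts state the VC bound with slightly different constants), as are the function name and the sample values.

```python
import math

def vc_gap(m, d_vc, delta):
    """Gap term of a VC-style bound; the constants 8 and 4 are assumed here."""
    complexity = 8 * d_vc * (math.log(2 * m / d_vc) + 1)
    confidence = 8 * math.log(4 / delta)
    return math.sqrt((complexity + confidence) / m)

# A linear classifier in 2D has d_vc = 3; watch the gap shrink as m grows.
for m in (1_000, 10_000, 100_000, 1_000_000):
    print(m, vc_gap(m, d_vc=3, delta=0.05))
```

Unlike the first bound, this quantity stays finite for an infinite hypothesis space as long as $d_\mathrm{vc}$ is finite, though it is typically quite loose for small datasets.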

Now, in light of these results, is there any hope for the memorization hypothesis?

It turns out that there’s still no hope! The memorization hypothesis can shatter any dataset no matter how big it is, that means that its $d_\mathrm{vc}$ is infinite, yielding an infinite bound on $R(h_\mathrm{mem})$ as before. However, the success of linear hypothesis can now be explained by the fact that they have a finite $d_\mathrm{vc} = n + 1$ in $\mathbb{R}^n$. The theory is now consistent with the empirical observations.

Distribution-Based Bounds

The fact that $d_\mathrm{vc}$ is distribution-free comes with a price: by not exploiting the structure and the distribution of the data samples, the bound tends to get loose. Consider for example the case of linear binary classifiers in a very high $n$-dimensional feature space: using the distribution-free $d_\mathrm{vc} = n + 1$ means that the bound on the generalization error would be poor unless the size of the dataset $m$ is also very large to balance the effect of the large $d_\mathrm{vc}$. This is the good old curse of dimensionality we all know and endure.

However, a careful investigation into the distribution of the data samples can bring more hope to the situation. For example, for data points that are linearly separable, contained in a ball of radius $R$, with a margin $\rho$ between the closest points in the two classes, one can prove that for a hyperplane classifier:

\[d_\mathrm{vc} \leq \left\lceil \frac{R^2}{\rho^2} \right\rceil\]

It follows that the larger the margin, the lower the $d_\mathrm{vc}$ of the hypothesis. This is theoretical motivation behind Support Vector Machines (SVMs) which attempts to classify data using the maximum margin hyperplane. This was also proved by Vapnik and Chervonenkis.

One Inequality to Rule Them All

Up until this point, all our analysis was for the case of binary classification. And it’s indeed true that the form of the VC bound we arrived at here only works for the binary classification case. However, the conceptual framework of VC (that is: shattering, growth function and dimension) generalizes very well to both multi-class classification and regression.

Due to the work of Natarajan (1989), the Natarajan dimension is defined as a generalization of the VC-dimension for multi-class classification, and a bound similar to the VC-Bound is derived in terms of it. Also, through the work of Pollard (1984), the pseudo-dimension generalizes the VC-dimension for the regression case with a bound on the generalization error also similar to VC’s.

There is also Rademacher’s complexity, which is a relatively new tool (devised in the 2000s) that measures the richness of a hypothesis space by measuring how well it can fit random noise. The cool thing about Rademacher’s complexity is that it’s flexible enough to be adapted to any learning problem, and it yields very similar generalization bounds to the other methods mentioned.
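The idea of "fitting random noise" can be sketched numerically. The snippet below is a rough Monte Carlo estimate of the empirical Rademacher complexity, $\mathbb{E}_\sigma\left[\sup_{h} \frac{1}{N}\sum_i \sigma_i h(x_i)\right]$, for two small hypothesis sets on $N = 8$ points. The function name, the choice of threshold classifiers and the number of trials are assumptions made for illustration only.

```python
import random
from itertools import product

def empirical_rademacher(hypothesis_outputs, n_trials=500, seed=0):
    """Monte Carlo estimate of the empirical Rademacher complexity.

    hypothesis_outputs: one tuple of +1/-1 predictions per hypothesis,
    all evaluated on the same N sample points.
    """
    rng = random.Random(seed)
    n = len(hypothesis_outputs[0])
    total = 0.0
    for _ in range(n_trials):
        sigma = [rng.choice((-1, 1)) for _ in range(n)]  # random noise labels
        # Best correlation that any hypothesis achieves with the noise.
        total += max(sum(s * p for s, p in zip(sigma, h)) for h in hypothesis_outputs) / n
    return total / n_trials

N = 8
# Threshold classifiers on N sorted points: -1 before position k, +1 after,
# together with their sign-flipped versions.
thresholds = [tuple(-1 if i < k else 1 for i in range(N)) for k in range(N + 1)]
thresholds += [tuple(-v for v in h) for h in thresholds]
# A memorizing hypothesis space realises every possible labeling.
memorizer = list(product((-1, 1), repeat=N))

r_thresholds = empirical_rademacher(thresholds)
r_memorizer = empirical_rademacher(memorizer)
print(r_thresholds, r_memorizer)
```

The memorizer matches every noise vector perfectly, so its estimate is the maximum possible value of 1, while the much poorer threshold class stays strictly below it.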

However, no matter what the exact form of the bound produced by any of these methods is, it always takes the form:

$$R(h) \leq R_\mathrm{emp}(h) + C(|\mathcal{H}|, N, \delta)$$

where $C$ is a function of the hypothesis space complexity (or size, or richness), $N$ the size of the dataset, and the confidence $1 - \delta$ about the bound. This inequality basically says the generalization error can be decomposed into two parts: the empirical training error, and the complexity of the learning model.

This form of the inequality holds for any learning problem no matter the exact form of the bound, and this is the one we’re gonna use throughout the rest of the series to guide us through the process of machine learning.


Mostafa Samir


ID3 Algorithm and Hypothesis space in Decision Tree Learning

The collection of potential decision trees is the hypothesis space searched by ID3. ID3 searches this hypothesis space in a hill-climbing fashion, starting with the empty tree and moving on to increasingly detailed hypotheses in pursuit of a decision tree that properly classifies the training data.

In this blog, we’ll have a look at the Hypothesis space in Decision Trees and the ID3 Algorithm.

ID3 Algorithm:

The ID3 algorithm (Iterative Dichotomiser 3) is a classification technique that uses a greedy approach to create a decision tree by picking the optimal attribute that delivers the most Information Gain (IG) or the lowest Entropy (H).

What is Information Gain and Entropy?

Information gain:

Information gain measures the change in entropy after a dataset is split on an attribute.

It establishes how much information a feature provides about a class.

We split the node and build the decision tree based on the value of information gain.

A decision tree algorithm always tries to maximize information gain, so the node/attribute with the highest information gain is split first.

The formula for Information Gain:

$$\mathrm{IG}(S, A) = H(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|} H(S_v)$$

where $S_v$ is the subset of samples in which attribute $A$ takes the value $v$.

Entropy is a metric for the degree of impurity in a set of samples. It denotes the unpredictability of the data. For a yes/no target, the following formula may be used to compute entropy:

$$H(S) = -P(\text{yes}) \log_2 P(\text{yes}) - P(\text{no}) \log_2 P(\text{no})$$

S stands for the set of all samples.

P(yes) denotes the probability of a yes answer.

P(no) denotes the probability of a no answer.

- Calculate the dataset’s entropy.

- For each feature/attribute:

  - Determine the entropy for each of the category values.

  - Calculate the feature’s information gain.

- Find the feature that provides the most information gain.

- Repeat until we get the tree we want.
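The first three steps can be sketched in a few lines of Python. This is only an illustration: the tiny `rows`/`labels` dataset and the function names are made up for the example and do not come from the original article.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())

def information_gain(rows, labels, attribute):
    """Entropy of the whole set minus the weighted entropy after splitting on `attribute`."""
    total = len(labels)
    remainder = 0.0
    for value in set(row[attribute] for row in rows):
        subset = [lab for row, lab in zip(rows, labels) if row[attribute] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder

def best_attribute(rows, labels, attributes):
    """The attribute with the highest information gain (the greedy ID3 choice)."""
    return max(attributes, key=lambda a: information_gain(rows, labels, a))

# Hypothetical toy data: the label depends only on "outlook".
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["yes", "yes", "no", "no"]

print(entropy(labels))
print(information_gain(rows, labels, "outlook"))
print(information_gain(rows, labels, "windy"))
print(best_attribute(rows, labels, ["outlook", "windy"]))
```

Here splitting on `outlook` removes all uncertainty (a gain of one bit), while splitting on `windy` removes none, so `outlook` is chosen first.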

Characteristics of ID3:

- ID3 takes a greedy approach, which means it might become caught in local optima and hence cannot guarantee an optimal result.

- ID3 has the potential to overfit the training data (to avoid overfitting, smaller decision trees should be preferred over larger ones).

- This method creates small trees most of the time; however, it does not always yield the smallest possible tree.

- On continuous data, ID3 is not easy to use (if the values of any given attribute are continuous, then there are many more places to split the data on this attribute, and searching for the best value to split by takes a lot of time).

Overfitting:

Good generalization is the desired property in our decision trees (and, indeed, in all classification problems), as we noted before.

This implies we want the model fit on the labeled training data to make predictions on new, unseen observations that are as accurate as its predictions on the training data.

Capabilities and Limitations of ID3:

- Relative to the given attributes, ID3’s hypothesis space of all decision trees is a complete space of finite discrete-valued functions.

- As it searches the space of decision trees, ID3 maintains only a single current hypothesis. This differs from the earlier version-space Candidate-Elimination approach, which maintains the set of all hypotheses consistent with the training examples seen so far.

- By committing to a single hypothesis, ID3 loses the capabilities that come with explicitly representing all consistent hypotheses. For example, it cannot determine how many alternative decision trees are consistent with the available training data.

- One benefit of using statistical properties of all the examples (e.g., information gain) is that the resulting search is less sensitive to errors in individual training examples.

- ID3 can easily be extended to handle noisy training data by modifying its termination criterion to accept hypotheses that imperfectly fit the training data.

- In its pure form, ID3 performs no backtracking in its search. Once it has selected an attribute to test at a particular level of the tree, it never reconsiders that choice. As a result, it is susceptible to the usual risk of hill-climbing search without backtracking: converging to a locally optimal solution that is not globally optimal.

- At each step of the search, ID3 uses all training examples to make statistically based decisions about how to refine its current hypothesis. This contrasts with methods that make decisions incrementally, based on individual training examples (e.g., FIND-S or CANDIDATE-ELIMINATION).

Hypothesis Space Search by ID3:

- ID3 performs a hill-climbing search through the space of possible decision trees, guided by information gain.

- It searches the complete space of finite discrete-valued functions. Every such function is represented by at least one tree.

- It maintains only one hypothesis (unlike Candidate-Elimination), so it cannot tell us how many other consistent trees exist.

- It can get stuck in local optima.

- At each step, all training examples are used, so errors in individual examples have a lower impact on the outcome.
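The search described above can be sketched as a short recursive function: at each node the attribute with the highest information gain is chosen greedily, using all examples that reach that node, and the choice is never revisited. This is a simplified illustration rather than a full ID3 implementation (no pruning, no continuous attributes), and the toy dataset is hypothetical.

```python
from collections import Counter
from math import log2

def entropy(labels):
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())

def gain(rows, labels, attr):
    """Information gain of splitting (rows, labels) on `attr`."""
    n = len(labels)
    groups = {}
    for row, lab in zip(rows, labels):
        groups.setdefault(row[attr], []).append(lab)
    return entropy(labels) - sum(len(g) / n * entropy(g) for g in groups.values())

def id3(rows, labels, attrs):
    """Return a nested dict {attribute: {value: subtree_or_label}}."""
    if len(set(labels)) == 1:              # pure node: stop
        return labels[0]
    if not attrs:                          # nothing left to test: majority vote
        return Counter(labels).most_common(1)[0][0]
    # Greedy hill-climbing step: pick the best attribute and never backtrack.
    best = max(attrs, key=lambda a: gain(rows, labels, a))
    tree = {best: {}}
    for value in sorted(set(row[best] for row in rows)):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        tree[best][value] = id3([rows[i] for i in idx],
                                [labels[i] for i in idx],
                                [a for a in attrs if a != best])
    return tree

# Hypothetical toy data.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "overcast", "windy": "yes"},
]
labels = ["yes", "no", "no", "no", "yes", "yes"]

tree = id3(rows, labels, ["outlook", "windy"])
print(tree)
```

Only one tree is ever held in memory, which mirrors the single-hypothesis behaviour listed above.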


Title: Hypothesis Spaces for Deep Learning

Abstract: This paper introduces a hypothesis space for deep learning that employs deep neural networks (DNNs). By treating a DNN as a function of two variables, the physical variable and parameter variable, we consider the primitive set of the DNNs for the parameter variable located in a set of the weight matrices and biases determined by a prescribed depth and widths of the DNNs. We then complete the linear span of the primitive DNN set in a weak* topology to construct a Banach space of functions of the physical variable. We prove that the Banach space so constructed is a reproducing kernel Banach space (RKBS) and construct its reproducing kernel. We investigate two learning models, regularized learning and minimum interpolation problem in the resulting RKBS, by establishing representer theorems for solutions of the learning models. The representer theorems unfold that solutions of these learning models can be expressed as linear combination of a finite number of kernel sessions determined by given data and the reproducing kernel.


Candidate Elimination Algorithm Program in Python

What is the Candidate Elimination Algorithm in Machine Learning?

- Before implementing the Candidate Elimination Algorithm in Python, we shall discuss it briefly.

- The Candidate Elimination Algorithm is a machine learning algorithm used for concept learning and hypothesis space search in the context of classification.

- This algorithm incrementally builds the version space given a hypothesis space H and a set E of examples.

- The examples are added one by one; each example possibly shrinks the version space by removing the hypotheses that are inconsistent with the example.

- It does this by updating the general and specific boundary for each new example.

The primary goal is to generate a set of hypotheses, known as the “Version Space”, that represents a range of possible concepts based on the observed training data.

Version Space: It lies between the general hypothesis and the specific hypothesis. Rather than a single hypothesis, it contains the set of all hypotheses consistent with the training dataset.

How Does It Update the Version Space?

- Version Space Update: The version space represents the set of all hypotheses that are consistent with the training data encountered so far. It consists of every hypothesis that lies between the specific boundary S and the general boundary G. As more examples are processed, the version space narrows down to a smaller set of consistent hypotheses.

- Termination: The algorithm continues processing examples until convergence, meaning that the version space becomes a single hypothesis or remains unchanged. At this point, the algorithm terminates.

- The Candidate Elimination Algorithm is used in concept learning tasks where there is uncertainty about the true concept being learned. It efficiently explores the hypothesis space and helps maintain a range of potential concepts, making it a valuable tool in machine learning and artificial intelligence, particularly in the early days of concept learning research.

Steps to Follow for the Candidate Elimination Algorithm:

- Start with two hypotheses:

  - The most specific hypothesis, denoted as S, initially assumes that no attribute value is acceptable (usually initialized with the most specific value, ‘Φ’, for each attribute).

  - The most general hypothesis, denoted as G, initially assumes that every attribute value is acceptable (initialized with the least specific value, ‘?’, for each attribute).

- For each training example:

  - When a positive example is encountered, the algorithm generalizes S and prunes G as follows:

    - If the value in S is ‘Φ’, replace it with the attribute value from the example.

    - If the attribute value in the example matches the value in S, keep it unchanged.

    - If the attribute value in the example is different from the value in S, replace the value in S with ‘?’ (indicating that any value is acceptable).

    - Remove from G any hypothesis that does not cover the example.

    - This process makes S more general; G is only pruned, never generalized.

  - When a negative example is encountered, the algorithm specializes G (S is left unchanged as long as it does not cover the example):

    - If a hypothesis in G does not cover the example, keep it unchanged.

    - If a hypothesis in G covers the example, replace it with its minimal specializations: for an attribute where G has ‘?’ and S holds a specific value that differs from the example’s value, set that attribute in G to the value from S.

    - This process makes G more specific.

- Continue iterating through all training examples until no more updates can be made to S and G, or until the version space collapses to a single consistent hypothesis.

- The final result is the version space bounded by the most specific hypothesis S and the most general hypotheses G, both of which are updated throughout the process.

The Candidate Elimination Algorithm maintains a set of hypotheses that are consistent with the training data seen so far. It gradually narrows down the set of possible hypotheses as it processes more examples.

The specific hypothesis S represents the current “best guess” of the target concept, while the general hypothesis G maintains a set of potential generalizations of the concept.

It’s important to note that the Candidate Elimination Algorithm works well for learning simple concepts, but it may struggle with more complex concepts or noisy data.

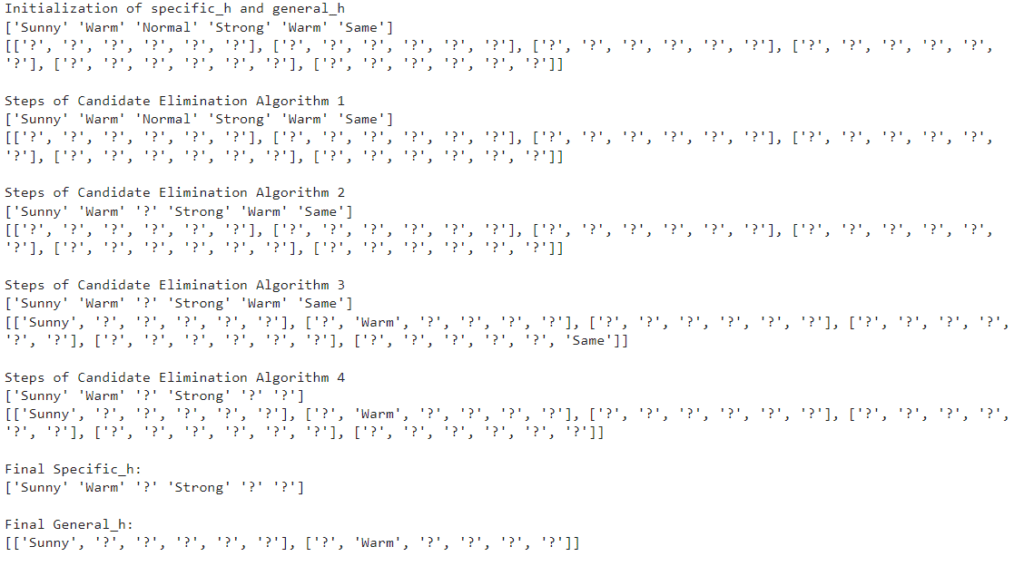

Step 1: Load the dataset. (The EnjoySport dataset is taken from the Kaggle website as a .csv file.)

Step 2: Initialize the General Hypothesis ‘G’ and the Specific Hypothesis ‘S’.

Step 3: For each training example, if the example is positive, make the specific hypothesis more general:

    if attribute_value == hypothesis_value:
        do nothing
    else:
        replace the attribute value with ‘?’ (basically generalizing it)

Step 4: If the example is negative, make the general hypothesis more specific.

Step 1: Importing the dataset

The Dataset can be downloaded from the given link :

EnjoySport Dataset :

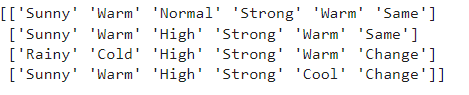

Step 2: Initialize the Given General Hypothesis ‘G’ and Specific Hypothesis ‘S’.

S = {Φ, Φ, Φ, Φ, Φ, Φ} because each EnjoySport instance in the dataset is described by six attributes.

G = {?, ?, ?, ?, ?, ?} for the same reason: one entry per attribute.

Step 3: Python Source code

    # import the packages
    import numpy as np
    import pandas as pd

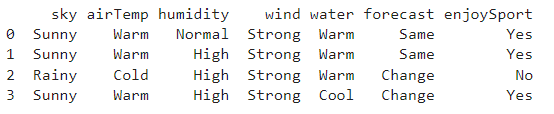

    # Loading data from a CSV file
    data = pd.read_csv('training data.csv')
    print(data)

    # Separating concept features from the target
    concepts = np.array(data.iloc[:, 0:-1])
    print(concepts)

    # Copying the last column into a separate target array
    target = np.array(data.iloc[:, -1])
    print(target)

    ['Yes' 'Yes' 'No' 'Yes']

# building the algorithm
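The body of the algorithm is not reproduced at this point, so what follows is only a minimal sketch of Candidate Elimination for conjunctive hypotheses, not the author's code. It assumes noise-free data, a single specific hypothesis S, and that a positive example arrives before the first negative one. The four rows are the standard EnjoySport examples from Mitchell's textbook, written in lowercase and hard-coded here as an assumption about what the CSV contains. The names `covers`, `more_general` and `candidate_elimination` are illustrative.

```python
def covers(hypothesis, example):
    """True if the conjunctive hypothesis accepts the example ('?' matches anything)."""
    return all(h == "?" or h == x for h, x in zip(hypothesis, example))

def more_general(h1, h2):
    """True if h1 is at least as general as h2."""
    return all(a == "?" or a == b for a, b in zip(h1, h2))

def candidate_elimination(examples, labels, positive="Yes"):
    n = len(examples[0])
    S = None                  # stands for the all-'Φ' hypothesis (covers nothing)
    G = [("?",) * n]          # the most general hypothesis
    for x, y in zip(examples, labels):
        if y == positive:
            # Minimally generalize S so it covers x; drop members of G that reject x.
            S = tuple(x) if S is None else tuple(s if s == v else "?" for s, v in zip(S, x))
            G = [g for g in G if covers(g, x)]
        else:
            if S is None:
                raise ValueError("this sketch expects a positive example before the first negative")
            specialized = []
            for g in G:
                if not covers(g, x):
                    specialized.append(g)   # already excludes the negative example
                    continue
                # Minimal specializations: pin one '?' to S's value where that excludes x.
                for i in range(n):
                    if g[i] == "?" and S[i] != "?" and S[i] != x[i]:
                        specialized.append(g[:i] + (S[i],) + g[i + 1:])
            specialized = list(dict.fromkeys(specialized))  # drop duplicates, keep order
            # Keep only the maximally general members.
            G = [g for g in specialized
                 if not any(h != g and more_general(h, g) for h in specialized)]
    return S, G

# Assumed contents of the training CSV (standard EnjoySport examples).
examples = [
    ("sunny", "warm", "normal", "strong", "warm", "same"),
    ("sunny", "warm", "high", "strong", "warm", "same"),
    ("rainy", "cold", "high", "strong", "warm", "change"),
    ("sunny", "warm", "high", "strong", "cool", "change"),
]
labels = ["Yes", "Yes", "No", "Yes"]

S, G = candidate_elimination(examples, labels)
print("S =", S)
print("G =", G)
```

On these four rows the sketch ends with the same S and G boundaries that are reported as the final hypothesis in Step 4.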

Step 4: The Final Hypothesis For S and G

    G = [['sunny', '?', '?', '?', '?', '?'], ['?', 'warm', '?', '?', '?', '?']]
    S = ['sunny', 'warm', '?', 'strong', '?', '?']


- Published: 08 May 2024

Predicting equilibrium distributions for molecular systems with deep learning

- Shuxin Zheng (ORCID: 0000-0001-7828-5374)

- Jiyan He (ORCID: 0009-0003-4539-1826)

- Chang Liu (ORCID: 0000-0001-5207-5440)

- Yu Shi

- Ziheng Lu

- Weitao Feng

- Fusong Ju (ORCID: 0000-0002-0467-7858)

- Jiaxi Wang

- Jianwei Zhu (ORCID: 0000-0002-8272-9190)

- Yaosen Min

- He Zhang

- Shidi Tang (ORCID: 0000-0001-5493-7411)

- Hongxia Hao (ORCID: 0000-0002-4382-200X)

- Peiran Jin

- Chi Chen (ORCID: 0000-0001-8008-7043)

- Frank Noé

- Haiguang Liu (ORCID: 0000-0001-7324-6632)

- Tie-Yan Liu (ORCID: 0000-0002-0476-8020)

Nature Machine Intelligence (2024)


- Computational methods

- Computational science

- Molecular modelling

Advances in deep learning have greatly improved structure prediction of molecules. However, many macroscopic observations that are important for real-world applications are not functions of a single molecular structure but rather determined from the equilibrium distribution of structures. Conventional methods for obtaining these distributions, such as molecular dynamics simulation, are computationally expensive and often intractable. Here we introduce a deep learning framework, called Distributional Graphormer (DiG), in an attempt to predict the equilibrium distribution of molecular systems. Inspired by the annealing process in thermodynamics, DiG uses deep neural networks to transform a simple distribution towards the equilibrium distribution, conditioned on a descriptor of a molecular system such as a chemical graph or a protein sequence. This framework enables the efficient generation of diverse conformations and provides estimations of state densities, orders of magnitude faster than conventional methods. We demonstrate applications of DiG on several molecular tasks, including protein conformation sampling, ligand structure sampling, catalyst–adsorbate sampling and property-guided structure generation. DiG presents a substantial advancement in methodology for statistically understanding molecular systems, opening up new research opportunities in the molecular sciences.


Deep learning methods excel at predicting molecular structures with high efficiency. For example, AlphaFold predicts protein structures with atomic accuracy [1], enabling new structural biology applications [2, 3, 4]; neural network-based docking methods predict ligand binding structures [5, 6], supporting drug discovery virtual screening [7, 8]; and deep learning models predict adsorbate structures on catalyst surfaces [9, 10, 11, 12]. These developments demonstrate the potential of deep learning in modelling molecular structures and states.

However, predicting the most probable structure only reveals a fraction of the information about a molecular system in equilibrium. Molecules can be very flexible, and the equilibrium distribution is essential for the accurate calculation of macroscopic properties. For example, biomolecule functions can be inferred from structure probabilities to identify metastable states; and thermodynamic properties, such as entropy and free energies, can be computed from probabilistic densities in the structure space using statistical mechanics.

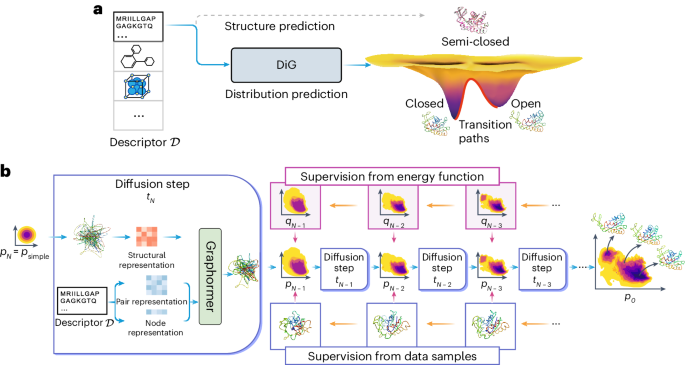

Figure 1a shows the difference between conventional structure prediction and distribution prediction of molecular systems. Adenylate kinase has two distinct functional conformations (open and closed states), both experimentally determined, but a predicted structure usually corresponds to a highly probable metastable state or an intermediate state (as shown in this figure). A method is desired to sample the equilibrium distribution of proteins with multiple functional states, such as adenylate kinase.

a, DiG takes the basic descriptor $\mathcal{D}$ of a target molecular system as input (for example, an amino acid sequence) to generate a probability distribution of structures that aims at approximating the equilibrium distribution and sampling different metastable or intermediate states. In contrast, static structure prediction methods, such as AlphaFold [1], aim at predicting one single high-probability structure of a molecule. b, The DiG framework for predicting distributions of molecular structures. A deep learning model (Graphormer [10]) is used as modules to predict a diffusion process (→) that gradually transforms a simple distribution towards the target distribution. The model is trained so that the derived distribution $p_i$ in each intermediate diffusion time step $i$ matches the corresponding distribution $q_i$ in a predefined diffusion process (←) that is set to transform the target distribution to the simple distribution. Supervision can be obtained from both samples (workflow in the top row) and a molecular energy function (workflow shown in the bottom row).

Unlike single structure prediction, equilibrium distribution research still depends on classical and costly simulation methods, while deep learning methods are underdeveloped. Commonly, equilibrium distributions are sampled with molecular dynamics (MD) simulations, which are expensive or infeasible [13]. Enhanced sampling simulations [14, 15] and Markov state modelling [16] can accelerate rare event sampling but need system-specific collective variables and are not easily generalized. Another approach is coarse-grained MD [17, 18], where deep learning approaches have been proposed [19, 20]. These deep learning coarse-grained methods have worked well for individual molecular systems but have not yet demonstrated generalization. Boltzmann generators [21] are a deep learning approach to generate equilibrium distributions by creating a probability flow from a simple reference state, but this is also hard to generalize to different molecules. Generalization has been demonstrated for flows generating simulations with longer time steps for small peptides but has not yet been scaled to large proteins [22].

In this Article, we develop DiG, a deep learning approach to approximately predict the equilibrium distribution and efficiently sample diverse and function-relevant structures of molecular systems. We show that DiG can generalize across molecular systems and propose diverse structures that resemble observations in experiments. DiG draws inspiration from simulated annealing [23, 24, 25, 26], which transforms a uniform distribution to a complex one through a simulated annealing process. DiG simulates a diffusion process that gradually transforms a simple distribution to the target one, approximating the equilibrium distribution of the given molecular system [27, 28] (Fig. 1b, right arrow symbol). As the simple distribution is chosen to enable independent sampling and have a closed-form density function, DiG enables independent sampling of the equilibrium distribution and also provides a density function for the distribution by tracking the process. The diffusion process can also be biased towards a desired property for inverse design and allows interpolation between structures that passes through high-probability regions. This diffusion process is implemented by a deep learning model based upon the Graphormer architecture [10] (Fig. 1b), conditioned on a descriptor of the target molecule, such as a chemical graph or a protein sequence. DiG can be trained with structure data from experiments and MD simulations. For data-scarce cases, we develop a physics-informed diffusion pre-training (PIDP) method to train DiG with energy functions (such as force fields) of the systems. In both data-based and energy-supervised modes, the model gets a training signal in each diffusion step independently (Fig. 1b, left arrow symbol), enabling efficient training that avoids long-chain back-propagation.
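As a rough intuition for the predefined process that carries the target distribution to the simple one (the left arrow in Fig. 1b), the toy sketch below pushes a bimodal one-dimensional sample towards a standard normal distribution. It is not DiG: the Gaussian variance-preserving update, the noise level `beta`, the number of steps and the 1D "target" are all assumptions chosen for illustration, and the learned process in the opposite direction is not sketched at all.

```python
import random
from statistics import mean, pvariance

def forward_diffusion(samples, n_steps=100, beta=0.1, seed=0):
    """Repeatedly shrink each sample slightly and add a little Gaussian noise.

    With this update the distribution drifts towards N(0, 1) regardless of
    where it started (an assumed, generic diffusion schedule).
    """
    rng = random.Random(seed)
    keep, noise = (1 - beta) ** 0.5, beta ** 0.5
    xs = list(samples)
    for _ in range(n_steps):
        xs = [keep * x + noise * rng.gauss(0.0, 1.0) for x in xs]
    return xs

# Hypothetical "target" distribution: two narrow modes around -2 and +2.
rng = random.Random(1)
target = [rng.choice((-2.0, 2.0)) + 0.1 * rng.gauss(0.0, 1.0) for _ in range(2000)]

noised = forward_diffusion(target)
print("target variance:", pvariance(target))
print("noised mean, variance:", mean(noised), pvariance(noised))
```

After enough steps the two modes are no longer visible and the sample looks like draws from a simple distribution with a closed-form density, which is the kind of starting point the text describes for generating new samples.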

We evaluate DiG on three predictive tasks: protein structure distribution, the ligand conformation distribution in binding pockets and the molecular adsorption distribution on catalyst surfaces. DiG generates realistic and diverse molecular structures in these tasks. For the proteins in this Article, DiG efficiently generated structures resembling major functional states. We further demonstrate that DiG can facilitate the inverse design of molecular structures by applying biased distributions that favour structures with desired properties. This capability can expand molecular design for properties that lack enough data. These results indicate that DiG advances deep learning for molecules from predicting a single structure towards predicting structure distributions, paving the way for efficient prediction of the thermodynamic properties of molecules.

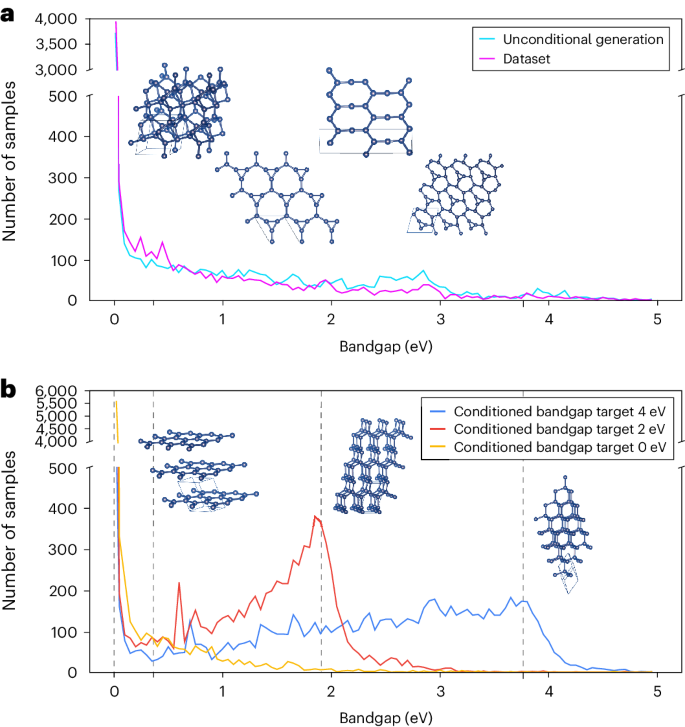

Here, we demonstrate that DiG can be applied to study protein conformations, protein–ligand interactions and molecule adsorption on catalyst surfaces. In addition, we investigate the inverse design capability of DiG through its application to carbon allotrope generation for desired electronic band gaps.

Protein conformation sampling

At physiological conditions, most protein molecules exhibit multiple functional states that are linked via dynamical processes. Sampling of these conformations is crucial for the understanding of protein properties and their interactions with other molecules. Recently, it was reported that AlphaFold [1] can generate alternative conformations for certain proteins by manipulating input information such as multiple sequence alignments (MSAs) [29]. However, this approach is developed on the basis of varying the depth of MSAs, and it is hard to generalize to all proteins (especially those with a small number of homologous sequences). Therefore, it is highly desirable to develop advanced artificial intelligence (AI) models that can sample diverse structures consistent with the energy landscape in the conformational space [29]. Here, we show that DiG is capable of generating diverse and functionally relevant protein structures, which is a key capability for being able to efficiently sample equilibrium distributions.

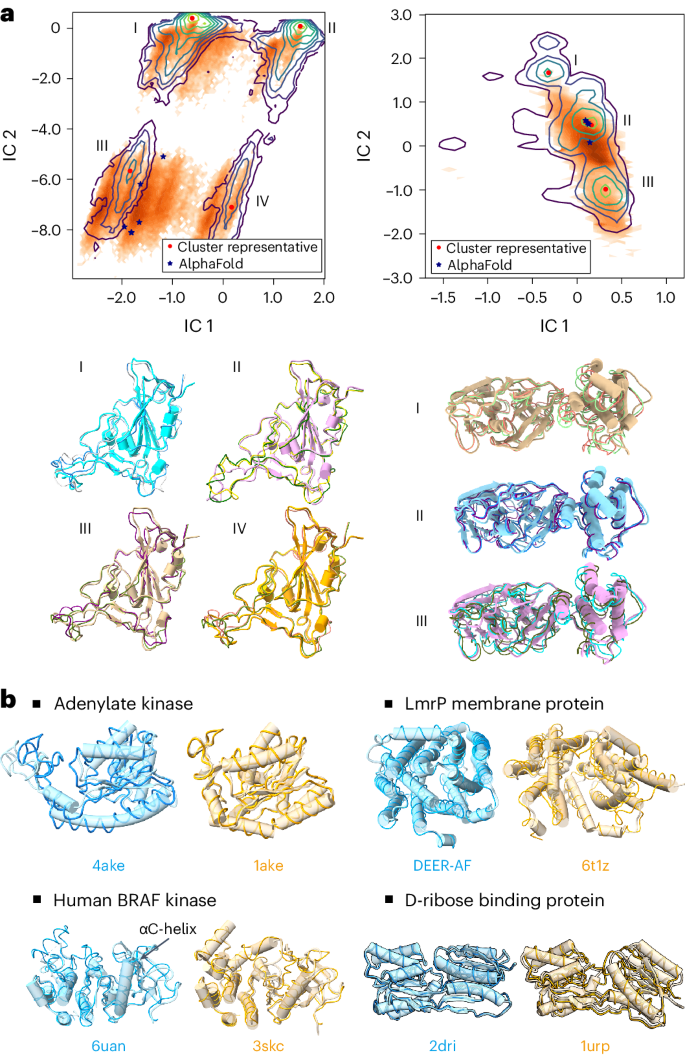

Because the equilibrium distribution of protein conformations is difficult to obtain experimentally or computationally, there is a lack of high-quality data for training or benchmarking. To train this model, we collect experimental and simulated structures from public databases. To mitigate the data scarcity, we generated an MD simulation dataset and developed the PIDP training method (see Supplementary Information sections A.1.1 and D.1 for the training procedure and the dataset). The performance of DiG was assessed at two levels: (1) by comparing the conformational distributions against those obtained from extensive (millisecond timescale) atomistic MD simulations and (2) by validating on proteins with multiple conformations. As shown in Fig. 2a, the conformational distributions are obtained from MD simulations for two proteins from the SARS-CoV-2 virus [30] (the receptor-binding domain (RBD) of the spike protein and the main protease, also known as 3CL protease; see Supplementary Information section A.7 for details on the MD simulation data). These two proteins are crucial components of SARS-CoV-2 and key targets for drug development in treating COVID-19 [31, 32]. The millisecond-timescale MD simulations extensively sample conformation space, and we therefore regard the resulting distribution as a proxy for the equilibrium distribution.

a, Structures generated by DiG resemble the diverse conformations of millisecond MD simulations. MD-simulated structures are projected onto the reduced space spanned by two time-lagged independent component analysis (TICA) coordinates (that is, independent component (IC) 1 and 2), and the probability densities are depicted using contour lines. Left: for the RBD protein, MD simulation reveals four highly populated regions in the 2D space spanned by TICA coordinates. DiG-generated structures are mapped to this 2D space (shown as orange dots), with a distribution reflected by the colour intensity. Under the distribution plot, structures generated by DiG (thin ribbons) are superposed on representative structures. AlphaFold-predicted structures (stars) are shown in the plot. Right: the results for the SARS-CoV-2 main protease, compared with MD simulation and AlphaFold prediction results. The contour map reveals three clusters: DiG-generated structures overlap with clusters II and III, whereas structures in cluster I are underrepresented. b, The performance of DiG on generating multiple conformations of proteins. Structures generated by DiG (thin ribbons) are compared with the experimentally determined structures (each structure is labelled by its PDB ID, except DEER-AF, which is an AlphaFold predicted model, shown as cylindrical cartoons). For the four proteins (adenylate kinase, LmrP membrane protein, human BRAF kinase and D-ribose binding protein), structures in two functional states (distinguished by cyan and brown) are well reproduced by DiG (ribbons).

Taking protein sequences as the descriptor inputs for DiG, structures were generated and compared with simulation data. Although simulation data of RBD and the main protease were not used for DiG training, generated structures resemble the conformational distributions (Fig. 2a). In the two-dimensional (2D) projection space of RBD conformations, MD simulations populate four regions, which are all sampled by DiG (Fig. 2a, left). Four representative structures are well reproduced by DiG. Similarly, three representative structures from main protease simulations are predicted by DiG (Fig. 2a). We noticed that conformations in cluster I are not well recovered by DiG, indicating room for improvement. In terms of conformational coverage, we compared the regions sampled by DiG with those from simulations in the 2D manifold (Fig. 2a), observing that about 70% of the RBD conformations sampled by simulations can be covered with just 10,000 DiG-generated structures (Supplementary Fig. 1).
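One simple way to quantify this kind of conformational coverage is to bin the 2D projection and count how many of the bins populated by the simulation also contain generated samples. The sketch below is illustrative only: the function name `coverage_2d`, the bin count and the occupancy threshold are assumptions, and the exact coverage metric behind the reported figure is defined in the Supplementary Information rather than here.

```python
import numpy as np

def coverage_2d(reference, generated, bins=20, min_count=1):
    """Fraction of reference-populated 2D bins that contain generated samples.

    reference, generated: arrays of shape (n_samples, 2), for example TICA
    coordinates (IC1, IC2). The binning scheme is an illustrative assumption.
    """
    reference = np.asarray(reference, dtype=float)
    generated = np.asarray(generated, dtype=float)

    # Use the same bin edges for both sets, spanning the reference range.
    x_edges = np.linspace(reference[:, 0].min(), reference[:, 0].max(), bins + 1)
    y_edges = np.linspace(reference[:, 1].min(), reference[:, 1].max(), bins + 1)

    ref_hist, _, _ = np.histogram2d(
        reference[:, 0], reference[:, 1], bins=[x_edges, y_edges]
    )
    gen_hist, _, _ = np.histogram2d(
        generated[:, 0], generated[:, 1], bins=[x_edges, y_edges]
    )

    populated = ref_hist >= min_count      # bins visited by the reference
    covered = populated & (gen_hist > 0)   # of those, bins hit by the model
    return float(covered.sum() / populated.sum())

# Toy example: the reference occupies four bins, the generated set hits two.
ref = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
gen = np.array([[0.1, 0.1], [0.9, 0.9]])
toy_coverage = coverage_2d(ref, gen, bins=2)
```

Generated points that fall outside the reference range are ignored by the histogram, so the value measures recall of the simulated regions and says nothing about samples placed in unphysical regions.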

Atomistic MD simulations are computationally expensive; millisecond-timescale simulations of proteins are therefore rarely executed, except for simulations on special-purpose hardware such as the Anton supercomputer 13 or extensive distributed simulations combined in Markov state models 16. To obtain an additional assessment of the diverse structures generated by DiG, we turn to proteins with multiple structures that have been experimentally determined. In Fig. 2b, we show the capability of DiG in generating multiple conformations for four proteins. Experimental structures are shown in cylinder cartoons, each aligned with two structures generated by DiG (thin ribbons). For example, DiG generated structures similar to either open or closed states of the adenylate kinase protein (for example, backbone root mean square difference (r.m.s.d.) < 1.0 Å to the closed state, 1ake). Similarly, for the drug transport protein LmrP, DiG generated structures covering both states (r.m.s.d. < 2.0 Å): one structure is experimentally determined, and the other (denoted as DEER-AF) is the AlphaFold prediction 29 supported by double electron–electron resonance (DEER) experiments 33. For human BRAF kinase, the overall structural difference between the two states is less pronounced. The major difference is in the A-loop region and a nearby helix (the αC-helix, indicated in the figure) 34. Structures generated by DiG accurately capture such regional structural differences. For D-ribose binding protein, the packing of two domains is the major source of structural difference. DiG correctly generates structures corresponding to both the straight-up conformation (cylinder cartoon) and the twisted or tilted conformation. If we align one domain of D-ribose binding protein, the other domain only partially matches the twisted conformation as an ‘intermediate’ state. Furthermore, DiG can generate plausible conformation transition pathways by latent space interpolations (see demonstration cases in Supplementary Videos 1 and 2). In summary, beyond static structure prediction for proteins, DiG generates diverse structures corresponding to different functional states.
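The backbone r.m.s.d. values quoted above compare a generated structure with an experimental one after optimal superposition. A minimal sketch of such a calculation using the Kabsch algorithm is given below; it assumes the two coordinate sets already list the same atoms in the same order, and the function name `kabsch_rmsd` is hypothetical rather than part of any DiG code.

```python
import numpy as np

def kabsch_rmsd(P, Q):
    """r.m.s.d. between two (n_atoms, 3) coordinate sets after optimal
    rigid-body superposition of P onto Q (Kabsch algorithm)."""
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)

    # Remove translation by centring both sets on their centroids.
    P_c = P - P.mean(axis=0)
    Q_c = Q - Q.mean(axis=0)

    # Covariance matrix and its singular value decomposition.
    H = P_c.T @ Q_c
    U, _, Vt = np.linalg.svd(H)

    # Guard against an improper rotation (reflection).
    d = -1.0 if np.linalg.det(Vt.T @ U.T) < 0 else 1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Rotate P onto Q and measure the remaining deviation.
    P_rot = P_c @ R.T
    return float(np.sqrt(((P_rot - Q_c) ** 2).sum(axis=1).mean()))

# Toy check: a rotated and translated copy should give an r.m.s.d. near zero.
rng = np.random.default_rng(0)
coords = rng.normal(size=(10, 3))
c, s = np.cos(np.pi / 6), np.sin(np.pi / 6)
Rz = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
moved = coords @ Rz.T + np.array([1.0, -2.0, 0.5])
rmsd_moved = kabsch_rmsd(coords, moved)
```

For a domain-wise comparison such as the D-ribose binding protein case, the same rotation would be fitted on one domain only and then applied to the whole structure before measuring the deviation of the other domain.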

Ligand structure sampling around binding sites

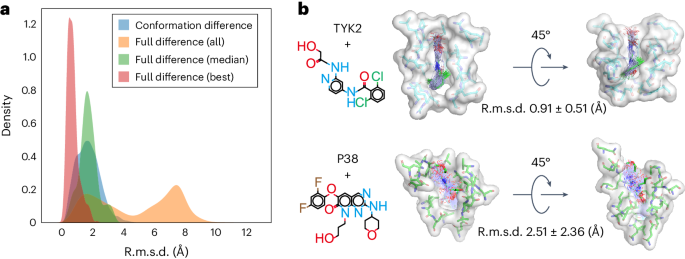

An immediate extension of protein conformational sampling is to predict ligand structures in druggable pockets. To model the interactions between proteins and ligands, we conducted MD simulations for 1,500 complexes to train the DiG model (see Supplementary Information section D.1 for the dataset). We evaluated the performance of DiG with 409 protein–ligand systems 35, 36 that are not in the training dataset. The inputs of DiG include protein pocket information (atomic type and position) and the ligand descriptor (a SMILES string). We pad the input node and pair representations with zeros to handle the different numbers of atoms surrounding a pocket and the different lengths of SMILES strings. The predicted results are the atomic coordinate distributions of both the ligand and the protein pocket. For protein pockets, changes in atomic positions are up to 1.0 Å in terms of r.m.s.d. compared with the input values, reflecting pocket flexibility during ligand structure generation. For the ligand structures, the deviation comes from two sources: (1) the conformational difference between generated and experimental structures, and (2) the difference in the binding pose due to ligand translation and rotation. Among all the tested cases, the conformational differences are small, with an r.m.s.d. value of 1.74 Å on average, indicating that generated structures are highly similar to the ligands resolved in crystal structures (Fig. 3a). When including the binding pose deviations, larger discrepancies are observed. Yet DiG predicts structures that are very similar to the experimental structure for each system. The best matched structure among 50 generated structures for each system is within 2.0 Å r.m.s.d. compared with the experimental data for nearly all 409 testing systems (see Fig. 3a for the r.m.s.d. distribution, with more cases shown in Supplementary Fig. 3). The accuracy of generated ligand structures is related to the characteristics of the binding pocket. For example, in the case of the TYK2 kinase protein, the ligand shown in Fig. 3b (top) deviated from the crystal structure by 0.91 Å (r.m.s.d.) on average. For target P38, the ligand exhibited more diverse binding poses, probably owing to the relatively shallow binding pocket, making the most stable binding pose less dominant compared with other poses (Fig. 3b, bottom). MD simulations reveal trends similar to those of the DiG-generated structures, with the ligand binding to TYK2 more tightly than in the case of P38 (Supplementary Fig. 2). Overall, we observed that the generated structures resemble experimentally observed poses.
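The per-system statistics discussed above (best match, median, and average with standard deviation over the generated poses) can be sketched as follows. This is an illustration under stated assumptions: poses are compared in place, without superposition, so that translation and rotation of the ligand in the pocket count towards the deviation; atom ordering is assumed identical and symmetry-equivalent atoms are not handled. The names `pose_rmsd` and `summarize_poses` are hypothetical.

```python
import numpy as np

def pose_rmsd(generated, reference):
    """In-place r.m.s.d. of each generated pose to the reference pose.

    generated: (n_samples, n_atoms, 3); reference: (n_atoms, 3).
    No superposition is applied, so binding-pose shifts are included.
    """
    generated = np.asarray(generated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    diff = generated - reference[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1).mean(axis=-1))

def summarize_poses(generated, reference):
    """Best, median, mean and standard deviation of the per-pose r.m.s.d."""
    r = pose_rmsd(generated, reference)
    return {
        "best": float(r.min()),
        "median": float(np.median(r)),
        "mean": float(r.mean()),
        "std": float(r.std()),
    }

# Toy example: a two-atom "ligand" and three rigidly shifted poses.
reference_pose = np.zeros((2, 3))
poses = np.stack([
    reference_pose + np.array([1.0, 0.0, 0.0]),  # shifted by 1 Å
    reference_pose + np.array([0.0, 2.0, 0.0]),  # shifted by 2 Å
    reference_pose + np.array([0.0, 0.0, 3.0]),  # shifted by 3 Å
])
stats = summarize_poses(poses, reference_pose)
```

A small spread in these values corresponds to a dominant binding pose, as described for TYK2, whereas a large spread corresponds to the more diverse poses described for P38.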

a, The results of DiG on poses of ligands bound to protein pockets. DiG generates ligand structures and binding poses with good accuracy compared with the crystal structures (reflected by the r.m.s.d. statistics shown in the red histogram for the best matching cases and the green histogram for the median r.m.s.d. statistics). When considering all 50 predicted structures for each system, diversity is observed, as reflected in the r.m.s.d. histogram (yellow colour, normalized). All r.m.s.d. values are calculated for ligands with respect to their coordinates in complex structures. b, Representative systems show diversity in ligand structures, and such predicted diversity is related to the properties of the binding pocket. For a deep and narrow binding pocket such as for the TYK2 protein (shown in the surface representation, top panel), DiG predicts highly similar binding poses for the ligand (in atom bond representations, top panel). For the P38 protein, the binding pocket is relatively flat and shallow and predicted ligand poses are more diverse and have large conformational flexibility (bottom panel, following the same representations as in the TYK2 case). The average r.m.s.d. values and the associated standard deviations are indicated next to the complex structures.

Catalyst–adsorbate sampling

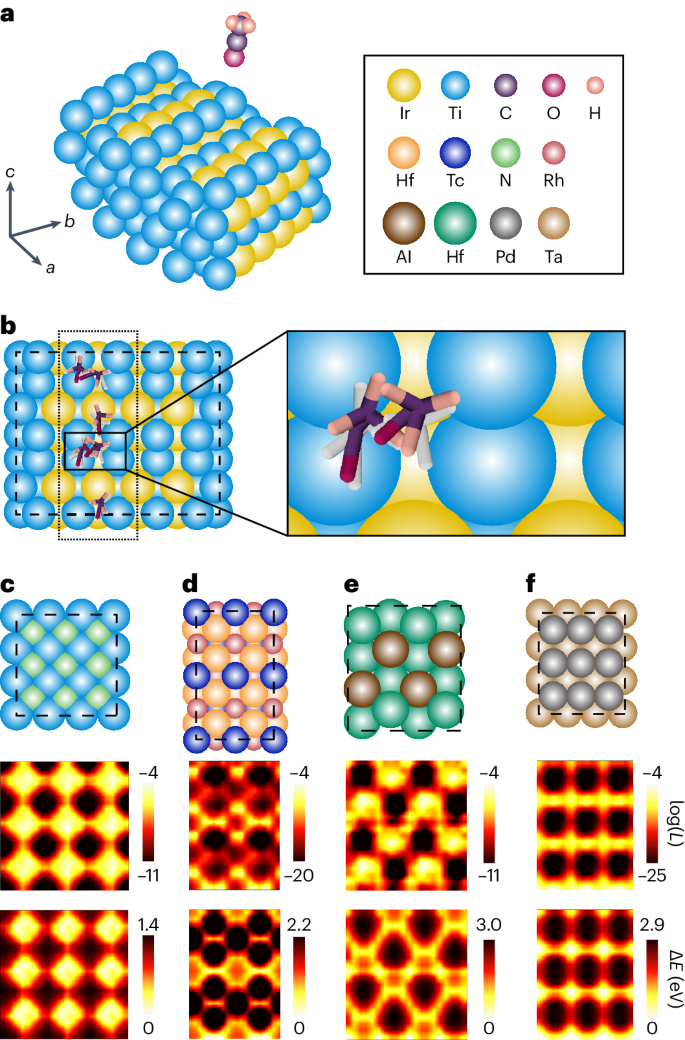

Identifying active adsorption sites is a central task in heterogeneous catalysis. Owing to the complex surface–molecule interactions, such tasks rely heavily on a combination of quantum chemistry methods such as density functional theory (DFT) and sampling techniques such as MD simulations and grid search. These lead to large and sometimes intractable computational costs. We evaluate the capability of DiG for this task by training it on the MD trajectories of catalyst–adsorbate systems from the Open Catalyst Project and carrying out further evaluations on random combinations of adsorbates and surfaces that are not included in the training set 9. DiG takes the atomic types, the initial positions of atoms in the substrate and the lattice vectors of the substrate, together with an initial structure of the molecular adsorbate, as joint inputs. In addition, we use a cross-attention sub-layer to handle the periodic boundary conditions, as detailed in Supplementary Information section B.5. On feeding the model with a substrate and a molecular adsorbate, DiG can predict adsorption sites and stable adsorbate configurations, along with the probability for each configuration (see Supplementary Information sections A.4 and A.7 for training and evaluation details). Figure 4a,b shows the adsorption configurations of an acyl group on a stepped TiIr alloy surface. Multiple adsorption sites are predicted by DiG. To test the plausibility of these predicted configurations and evaluate the coverage of the predictions, we carry out a grid search using DFT methods. The results confirm that DiG predicts all stable sites found by the grid search and that the adsorption configurations are in close agreement, with an r.m.s.d. of 0.5–0.8 Å (Fig. 4b). It should be noted that the combinations of substrate and adsorbate shown in Fig. 4b are not included in the training dataset. Therefore, the result demonstrates the cross-system generalization capability of DiG in catalyst adsorption predictions. Here we show only the top view; Supplementary Fig. 4 additionally shows the front view of the adsorption configurations.
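To relate a predicted probability for each configuration to an energy landscape, one common reference point is the Boltzmann distribution, in which the probability of a configuration with energy E is proportional to exp(−E / k_B T). The sketch below converts a set of energies into such reference probabilities. It is an assumption-laden illustration: the temperature of 300 K, the use of eV units and the function name `boltzmann_probabilities` are choices made here, and the sketch does not describe how DiG itself computes or evaluates probabilities.

```python
import numpy as np

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K

def boltzmann_probabilities(energies_ev, temperature_k=300.0):
    """Normalized Boltzmann weights for a discrete set of configurations.

    energies_ev: energies in eV (for example, interaction energies at
    candidate adsorption sites). The temperature is an illustrative choice.
    """
    e = np.asarray(energies_ev, dtype=float)
    beta = 1.0 / (K_B_EV * temperature_k)
    # Subtract the minimum energy for numerical stability; the constant
    # offset cancels on normalization.
    weights = np.exp(-beta * (e - e.min()))
    return weights / weights.sum()

# Toy example: two sites separated by k_B * T * ln(2) give a 2:1 ratio.
delta = K_B_EV * 300.0 * np.log(2.0)
site_probs = boltzmann_probabilities([0.0, delta], temperature_k=300.0)
```

Comparing the logarithm of model probabilities with energies, as in the log-scale panels of the figure, is convenient because under a Boltzmann distribution the log probability is linear in the energy.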

a, The problem setting: the prediction of the adsorption configuration distribution of an adsorbate on a catalyst surface. b, The adsorption sites and corresponding configurations of the adsorbate found by DiG (in colour) compared with DFT results (in white). DiG finds all adsorption sites, with adsorbate structures close to the DFT calculation results (see Supplementary Information for details of the adsorption sites and configurations). c–f, Adsorption prediction results of single N or O atoms on TiN (c), RhTcHf (d), AlHf (e) and TaPd (f) catalyst surfaces compared with DFT calculation results. Top: the catalyst surface. Middle: the probability distribution of adsorbate molecules on the corresponding catalyst surfaces on log scale. Bottom: the interaction energies between the adsorbate molecule and the catalyst calculated using DFT methods. The adsorption sites and predicted probabilities are highly consistent with the energy landscape obtained by DFT.