
Understanding Algorithms: The Key to Problem-Solving Mastery

The world of computer science is a fascinating realm, where intricate concepts and technologies continuously shape the way we interact with machines. Among the vast array of ideas and principles, few are as fundamental and essential as algorithms. These powerful tools serve as the building blocks of computation, enabling computers to solve problems, make decisions, and process vast amounts of data efficiently.

An algorithm can be thought of as a step-by-step procedure or a set of instructions designed to solve a specific problem or accomplish a particular task. It represents a systematic approach to finding solutions and provides a structured way to tackle complex computational challenges. Algorithms are at the heart of various applications, from simple calculations to sophisticated machine learning models and complex data analysis.

Understanding algorithms and their inner workings is crucial for anyone interested in computer science. They serve as the backbone of software development, powering the creation of innovative applications across numerous domains. By comprehending the concept of algorithms, aspiring computer science enthusiasts gain a powerful toolset to approach problem-solving and gain insight into the efficiency and performance of different computational methods.

In this article, we aim to provide a clear and accessible introduction to algorithms, focusing on their importance in problem-solving and exploring common types such as searching, sorting, and recursion. By delving into these topics, readers will gain a solid foundation in algorithmic thinking and discover the underlying principles that drive the functioning of modern computing systems. Whether you’re a beginner in the world of computer science or seeking to deepen your understanding, this article will equip you with the knowledge to navigate the fascinating world of algorithms.

What are Algorithms?

At its core, an algorithm is a systematic, step-by-step procedure or set of rules designed to solve a problem or perform a specific task. It provides clear instructions that, when followed meticulously, lead to the desired outcome.

Consider an algorithm to be akin to a recipe for your favorite dish. When you decide to cook, the recipe is your go-to guide. It lists out the ingredients you need, their exact quantities, and a detailed, step-by-step explanation of the process, from how to prepare the ingredients to how to mix them, and finally, the cooking process. It even provides an order for adding the ingredients and specific times for cooking to ensure the dish turns out perfect.

In the same vein, an algorithm, within the realm of computer science, provides an explicit series of instructions to accomplish a goal. This could be a simple goal like sorting a list of numbers in ascending order, a more complex task such as searching for a specific data point in a massive dataset, or even a highly complicated task like determining the shortest path between two points on a map (think Google Maps). No matter the complexity of the problem at hand, there’s always an algorithm working tirelessly behind the scenes to solve it.

Furthermore, algorithms aren’t limited to specific programming languages. They are universal and can be implemented in any language. This is why understanding the fundamental concept of algorithms can empower you to solve problems across various programming languages.

The Importance of Algorithms

Algorithms are the backbone of computational operations and a fundamental part of the digital world that we interact with daily. When you search for something on the web, an algorithm works behind the scenes to sift through millions, possibly billions, of web pages to bring you the most relevant results. When you use a GPS to find the fastest route to a location, an algorithm evaluates candidate paths, factoring in variables like traffic and road conditions, to provide you with a good route without having to enumerate every possible one.

Consider the world of social media, where algorithms curate personalized feeds based on our previous interactions, or in streaming platforms where they recommend shows and movies based on our viewing habits. Every click, every like, every search, and every interaction is processed by algorithms to serve you a seamless digital experience.

In the realm of computer science and beyond, everything revolves around problem-solving, and algorithms are our most reliable problem-solving tools. They provide a structured approach to problem-solving, breaking down complex problems into manageable steps and ensuring that every eventuality is accounted for.

Moreover, an algorithm’s efficiency is not just a matter of preference but a necessity. Given that computers have finite resources — time, memory, and computational power — the algorithms we use need to be optimized to make the best possible use of these resources. Efficient algorithms are the ones that can perform tasks more quickly, using less memory, and provide solutions to complex problems that might be infeasible with less efficient alternatives.

In the context of massive datasets (the likes of which are common in our data-driven world), the difference between a poorly designed algorithm and an efficient one could be the difference between a solution that takes years to compute and one that takes mere seconds. Therefore, understanding, designing, and implementing efficient algorithms is a critical skill for any computer scientist or software engineer.

Hence, as a computer science beginner, you are starting a journey where algorithms will be your best allies — universal keys capable of unlocking solutions to a myriad of problems, big or small.

Common Types of Algorithms: Searching and Sorting

Two of the most ubiquitous types of algorithms that beginners often encounter are searching and sorting algorithms.

Searching algorithms are designed to retrieve specific information from a data structure, like an array or a database. A simple example is the linear search, which works by checking each element in the array until it finds the one it’s looking for. Although easy to understand, this method isn’t efficient for large datasets, which is where more complex algorithms like binary search come in.
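As a minimal sketch of the linear search just described, the function below checks each element in turn. The name `linear_search` and the convention of returning -1 when nothing is found are illustrative choices, not something the article prescribes.

```python
def linear_search(items, target):
    """Return the index of the first element equal to target, or -1 if absent."""
    for index, value in enumerate(items):
        # Compare every element, one by one, until a match is found.
        if value == target:
            return index
    return -1


print(linear_search([7, 3, 9, 4], 9))  # prints 2
```

In the worst case the loop visits every element, which is why the running time grows in proportion to the size of the list.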

Binary search, on the other hand, is like looking up a word in the dictionary. Instead of checking each word from beginning to end, you open the dictionary in the middle and see whether the word you’re looking for falls in the left or right half, thereby cutting the search space in half with each step. This only works because the dictionary is already in order: binary search requires sorted input.
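The halving idea can be sketched in a few lines. This is an illustrative version that assumes the input list is already sorted in ascending order; the function name and the -1 return value for a missing item are assumptions made for the example.

```python
def binary_search(sorted_items, target):
    """Return the index of target in an ascending sorted list, or -1 if absent."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the right half
        else:
            high = mid - 1  # target can only be in the left half
    return -1


print(binary_search([1, 3, 5, 7, 9, 11], 7))  # prints 3
```

Each pass through the loop discards half of the remaining candidates, so a list of a million items needs at most about twenty comparisons.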

Sorting algorithms, meanwhile, are designed to arrange elements in a particular order. A simple sorting algorithm is bubble sort, which works by repeatedly swapping adjacent elements if they’re in the wrong order. Again, while straightforward, it’s not efficient for larger datasets, because the number of comparisons grows roughly with the square of the number of elements. More advanced sorting algorithms, such as quicksort or mergesort, have been designed to sort large data collections more efficiently.
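Here is a small sketch of bubble sort as described above. Returning a sorted copy and stopping early when a pass makes no swaps are choices made for this example, not requirements of the algorithm.

```python
def bubble_sort(items):
    """Return a new list with the elements of items in ascending order."""
    result = list(items)  # work on a copy so the caller's list is untouched
    for end in range(len(result) - 1, 0, -1):
        swapped = False
        for i in range(end):
            # Swap adjacent elements that are in the wrong order.
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # no swaps in this pass means the list is already sorted
    return result


print(bubble_sort([5, 2, 9, 1]))  # prints [1, 2, 5, 9]
```

The nested loops are what make bubble sort slow on large inputs: doubling the list length roughly quadruples the work.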

Diving Deeper: Graph and Dynamic Programming Algorithms

Building upon our understanding of searching and sorting algorithms, let’s delve into two other families of algorithms often encountered in computer science: graph algorithms and dynamic programming algorithms.

A graph is a mathematical structure that models the relationship between pairs of objects. Graphs consist of vertices (or nodes) and edges (where each edge connects a pair of vertices). Graphs are commonly used to represent real-world systems such as social networks, web pages, biological networks, and more.

Graph algorithms are designed to solve problems centered around these structures. Some common graph algorithms include:
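One widely taught graph algorithm, offered here as an illustration rather than as part of the article's own list, is breadth-first search. The sketch below assumes the graph is stored as a dictionary that maps each vertex to a list of its neighbors; the function name and the sample graph are hypothetical.

```python
from collections import deque


def bfs_shortest_path(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    if start == goal:
        return [start]
    parents = {start: None}  # remembers how each vertex was first reached
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor in parents:
                continue  # already visited
            parents[neighbor] = node
            if neighbor == goal:
                # Walk back through the parents to rebuild the path.
                path = [goal]
                while parents[path[-1]] is not None:
                    path.append(parents[path[-1]])
                return path[::-1]
            queue.append(neighbor)
    return None


roads = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
print(bfs_shortest_path(roads, "A", "E"))  # prints ['A', 'B', 'D', 'E']
```

Because vertices are explored in order of their distance from the start, the first time the goal is reached is guaranteed to be by a path with the fewest edges.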

Dynamic programming is a powerful method used in optimization problems, where the main problem is broken down into simpler, overlapping subproblems. The solutions to these subproblems are stored and reused to build up the solution to the main problem, saving computational effort.
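To make the idea of storing and reusing subproblem solutions concrete, here is a sketch based on the classic coin change problem: finding the fewest coins that add up to a given amount. The problem choice and the name `min_coins` are assumptions made for illustration and are not taken from the article.

```python
def min_coins(coins, amount):
    """Return the fewest coins needed to make amount, or None if impossible."""
    unreachable = float("inf")
    # best[t] stores the answer to the subproblem "fewest coins to make t".
    best = [0] + [unreachable] * amount
    for total in range(1, amount + 1):
        for coin in coins:
            # Reuse the stored answer for the smaller amount total - coin.
            if coin <= total and best[total - coin] + 1 < best[total]:
                best[total] = best[total - coin] + 1
    return best[amount] if best[amount] != unreachable else None


print(min_coins([1, 3, 4], 6))  # prints 2
```

For the amount 6 with coins 1, 3, and 4, always grabbing the largest coin first would use three coins (4 + 1 + 1), while the table finds the two-coin answer (3 + 3) by building on the stored result for the amount 3.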

Here are two common dynamic programming problems:

Understanding these algorithm families — searching, sorting, graph, and dynamic programming algorithms — not only equips you with powerful tools to solve a variety of complex problems but also serves as a springboard to dive deeper into the rich ocean of algorithms and computer science.

Recursion: A Powerful Technique

While searching and sorting represent specific problem domains, recursion is a broad technique used in a wide range of algorithms. Recursion involves breaking down a problem into smaller, more manageable parts, and a function calling itself to solve these smaller parts.

To visualize recursion, consider the task of calculating the factorial of a number. The factorial of a number n (denoted n!) is the product of all positive integers less than or equal to n. For instance, the factorial of 5 (5!) is 5 x 4 x 3 x 2 x 1 = 120. A recursive algorithm for finding the factorial of n multiplies n by the factorial of n-1. The function keeps calling itself with a smaller value of n each time until it reaches the base case, where n is equal to 1, at which point it starts returning values back up the chain.
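The factorial description translates almost directly into code. In this sketch, treating 0 like 1 as a base case and rejecting negative numbers are additions made for the example.

```python
def factorial(n):
    """Return n! for a non-negative integer n, computed recursively."""
    if n < 0:
        raise ValueError("factorial is not defined for negative numbers")
    if n <= 1:
        return 1  # base case: stops the chain of recursive calls
    return n * factorial(n - 1)  # recursive case: a smaller version of the same problem


print(factorial(5))  # prints 120
```

Calling `factorial(5)` sets up the chain 5 x factorial(4), then 4 x factorial(3), and so on down to the base case, after which the pending multiplications are completed in reverse order.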

Algorithms are truly the heart of computer science, transforming raw data into valuable information and insight. Understanding their functionality and purpose is key to progressing in your computer science journey. As you continue your exploration, remember that each algorithm you encounter, no matter how complex it may seem, is simply a step-by-step procedure to solve a problem.

We’ve just scratched the surface of the fascinating world of algorithms. With time, patience, and practice, you will learn to create your own algorithms and start solving problems with confidence and efficiency.


Credit: Alejandro Giraldo

Ideas Made to Matter

How to use algorithms to solve everyday problems

Kara Baskin

May 8, 2017

How can I navigate the grocery store quickly? Why doesn’t anyone like my Facebook status? How can I alphabetize my bookshelves in a hurry? Apple data visualizer and MIT System Design and Management graduate Ali Almossawi solves these common dilemmas and more in his new book, “Bad Choices: How Algorithms Can Help You Think Smarter and Live Happier,” a quirky, illustrated guide to algorithmic thinking.

For the uninitiated: What is an algorithm? And how can algorithms help us to think smarter?

An algorithm is a process with unambiguous steps that has a beginning and an end, and does something useful.

Algorithmic thinking is taking a step back and asking, “If it’s the case that algorithms are so useful in computing to achieve predictability, might they also be useful in everyday life, when it comes to, say, deciding between alternative ways of solving a problem or completing a task?” In all cases, we optimize for efficiency: We care about time or space.

Note the mention of “deciding between.” Computer scientists do that all the time, and I was convinced that the tools they use to evaluate competing algorithms would be of interest to a broad audience.

Why did you write this book, and who can benefit from it?

All the books I came across that tried to introduce computer science involved coding. My approach to making algorithms compelling was focusing on comparisons. I take algorithms and put them in a scene from everyday life, such as matching socks from a pile, putting books on a shelf, remembering things, driving from one point to another, or cutting an onion. These activities can be mapped to one or more fundamental algorithms, which form the basis for the field of computing and have far-reaching applications and uses.

I wrote the book with two audiences in mind. One, anyone, be it a learner or an educator, who is interested in computer science and wants an engaging and lighthearted, but not a dumbed-down, introduction to the field. Two, anyone who is already familiar with the field and wants to experience a way of explaining some of the fundamental concepts in computer science differently than how they’re taught.

I’m going to the grocery store and only have 15 minutes. What do I do?

Do you know what the grocery store looks like ahead of time? If you know what it looks like, it determines your list. How do you prioritize things on your list? Order the items in a way that allows you to avoid walking down the same aisles twice.

For me, the intriguing thing is that the grocery store is a scene from everyday life that I can use as a launch pad to talk about various related topics, like priority queues and graphs and hashing. For instance, what is the most efficient way for a machine to store a prioritized list, and what happens when the equivalent of you scratching an item from a list happens in the machine’s list? How is a store analogous to a graph (an abstraction in computer science and mathematics that defines how things are connected), and how is navigating the aisles in a store analogous to traversing a graph?

Nobody follows me on Instagram. How do I get more followers?

The concept of links and networks, which I cover in Chapter 6, is relevant here. It’s much easier to get to people whom you might be interested in and who might be interested in you if you can start within the ball of links that connects those people, rather than starting at a random spot.

You mention Instagram: There, the hashtag is one way to enter that ball of links. Tag your photos, engage with users who tag their photos with the same hashtags, and you should be on your way to stardom.

What are the secret ingredients of a successful Facebook post?

I’ve posted things on social media that have died a sad death and then posted the same thing at a later date that somehow did great. Again, if we think of it in terms that are relevant to algorithms, we’d say that the challenge with making something go viral is really getting that first spark. And to get that first spark, a person who is connected to the largest number of people who are likely to engage with that post, needs to share it.

With [my first book], “Bad Arguments,” I spent a month pouring close to $5,000 into advertising for that project with moderate results. And then one science journalist with a large audience wrote about it, and the project took off and hasn’t stopped since.

What problems do you wish you could solve via algorithm but can’t?

When we care about efficiency, thinking in terms of algorithms is useful. There are cases when that’s not the quality we want to optimize for — for instance, learning or love. I walk for several miles every day, all throughout the city, as I find it relaxing. I’ve never asked myself, “What’s the most efficient way I can traverse the streets of San Francisco?” It’s not relevant to my objective.

Algorithms are a great way of thinking about efficiency, but the question has to be, “What approach can you optimize for that objective?” That’s what worries me about self-help: Books give you a silver bullet for doing everything “right” but leave out all the nuances that make us different. What works for you might not work for me.

Which companies use algorithms well?

When you read that the overwhelming majority of the shows that users of, say, Netflix, watch are due to Netflix’s recommendation engine, you know they’re doing something right.


6. Sorting and Searching

- 6.1. Objectives

- 6.2. Searching

- 6.3.1. Analysis of Sequential Search

- 6.4.1. Analysis of Binary Search

- 6.5.1. Hash Functions

- 6.5.2. Collision Resolution

- 6.5.3. Implementing the Map Abstract Data Type

- 6.5.4. Analysis of Hashing

- 6.6. Sorting

- 6.7. The Bubble Sort

- 6.8. The Selection Sort

- 6.9. The Insertion Sort

- 6.10. The Shell Sort

- 6.11. The Merge Sort

- 6.12. The Quick Sort

- 6.13. Summary

- 6.14. Key Terms

- 6.15. Discussion Questions

- 6.16. Programming Exercises




Computer Science > Neural and Evolutionary Computing

Title: Learning from Offline and Online Experiences: A Hybrid Adaptive Operator Selection Framework

Abstract: In many practical applications, usually, similar optimisation problems or scenarios repeatedly appear. Learning from previous problem-solving experiences can help adjust algorithm components of meta-heuristics, e.g., adaptively selecting promising search operators, to achieve better optimisation performance. However, those experiences obtained from previously solved problems, namely offline experiences, may sometimes provide misleading perceptions when solving a new problem, if the characteristics of previous problems and the new one are relatively different. Learning from online experiences obtained during the ongoing problem-solving process is more instructive but highly restricted by limited computational resources. This paper focuses on the effective combination of offline and online experiences. A novel hybrid framework that learns to dynamically and adaptively select promising search operators is proposed. Two adaptive operator selection modules with complementary paradigms cooperate in the framework to learn from offline and online experiences and make decisions. An adaptive decision policy is maintained to balance the use of those two modules in an online manner. Extensive experiments on 170 widely studied real-value benchmark optimisation problems and a benchmark set with 34 instances for combinatorial optimisation show that the proposed hybrid framework outperforms the state-of-the-art methods. Ablation study verifies the effectiveness of each component of the framework.


- Open access

- Published: 10 April 2024

A hybrid particle swarm optimization algorithm for solving engineering problem

- Jinwei Qiao 1 , 2 ,

- Guangyuan Wang 1 , 2 ,

- Zhi Yang 1 , 2 ,

- Xiaochuan Luo 3 ,

- Jun Chen 1 , 2 ,

- Kan Li 4 &

- Pengbo Liu 1 , 2

Scientific Reports volume 14, Article number: 8357 (2024)


- Computational science

- Mechanical engineering

To overcome the disadvantages of premature convergence and easy trapping in local optimum solutions, this paper proposes an improved particle swarm optimization algorithm (named the NDWPSO algorithm) based on multiple hybrid strategies. Firstly, the elite opposition-based learning method is utilized to initialize the particle position matrix. Secondly, dynamic inertial weight parameters are introduced to improve the global search speed in the early iterative phase. Thirdly, a new local optimal jump-out strategy is proposed to overcome the "premature" problem. Finally, the algorithm applies the spiral shrinkage search strategy from the whale optimization algorithm (WOA) and the Differential Evolution (DE) mutation strategy in the later iterations to accelerate convergence. The NDWPSO is further compared with 8 other well-known nature-inspired algorithms (3 PSO variants and 5 other intelligent algorithms) on 23 benchmark test functions and three practical engineering problems. Simulation results show that the NDWPSO algorithm obtains better results than the other 3 PSO variants for all 49 sets of data. Compared with the 5 other intelligent algorithms, the NDWPSO obtains 69.2%, 84.6%, and 84.6% of the best results for the benchmark functions \(f_{1}\) to \(f_{13}\) in 3 dimensional settings (Dim = 30, 50, 100) and 80% of the best optimal solutions for 10 fixed-multimodal benchmark functions. Also, the best design solutions are obtained by NDWPSO for all 3 classical practical engineering problems.


Introduction

In an ever-changing society, new optimization problems arise constantly across various fields, such as automation control 1, statistical physics 2, security prevention and temperature prediction 3, artificial intelligence 4, and telecommunication technology 5. Faced with a constant stream of practical engineering optimization problems, traditional solution methods gradually lose their efficiency and convenience, making the problems increasingly expensive to solve. Therefore, researchers have developed many metaheuristic algorithms and successfully applied them to optimization problems. Among them, the particle swarm optimization (PSO) algorithm 6 is one of the most widely used swarm intelligence algorithms.

The basic PSO has a simple operating principle and solves problems with high efficiency and good computational performance, but it is easily trapped in local optima and tends to converge prematurely. To improve the overall performance of the particle swarm algorithm, this paper proposes an improved particle swarm optimization algorithm based on a multiple hybrid strategy. The improved PSO incorporates the search ideas of other intelligent algorithms (DE, WOA), so the improved algorithm proposed in this paper is named NDWPSO. The main improvement schemes are divided into the following 4 points: Firstly, a strategy of elite opposition-based learning is introduced into the particle population position initialization. A high-quality initialization matrix of population position can improve the convergence speed of the algorithm. Secondly, a dynamic weight methodology is adopted for the acceleration coefficients by combining the iterative map and linearly transformed method. This method utilizes the chaotic nature of the mapping function, the fast convergence capability of the dynamic weighting scheme, and the time-varying property of the acceleration coefficients. Thus, the global search and local search of the algorithm are balanced and the global search speed of the population is improved. Thirdly, a determination mechanism is set up to detect whether the algorithm has fallen into a local optimum. When the algorithm is “premature”, the population resets 40% of the position information to escape the local optimum. Finally, the spiral shrinking mechanism combined with the DE/best/2 position mutation is used in the later iterations, which further improves the solution accuracy.
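For orientation, the baseline that such strategies modify can be sketched as follows. This is the commonly taught inertia-weight form of PSO, not the NDWPSO algorithm; the function names, the sphere test function, and the parameter values (w = 0.7, c1 = c2 = 1.5, 20 particles, 200 iterations) are assumptions chosen for illustration and are not taken from the paper.

```python
import random


def sphere(position):
    """Illustrative test objective: sum of squares, minimized at the origin."""
    return sum(x * x for x in position)


def basic_pso(objective, dim, bounds, n_particles=20, n_iters=200,
              w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize objective with a plain inertia-weight PSO (hypothetical settings)."""
    rng = random.Random(seed)
    low, high = bounds
    # Uniform random initialization; the paper's elite opposition-based
    # initialization is not reproduced here.
    positions = [[rng.uniform(low, high) for _ in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[0.0] * dim for _ in range(n_particles)]
    personal_best = [p[:] for p in positions]
    personal_val = [objective(p) for p in positions]
    best_index = min(range(n_particles), key=personal_val.__getitem__)
    global_best = personal_best[best_index][:]
    global_val = personal_val[best_index]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull toward personal best + pull toward global best.
                velocities[i][d] = (w * velocities[i][d]
                                    + c1 * r1 * (personal_best[i][d] - positions[i][d])
                                    + c2 * r2 * (global_best[d] - positions[i][d]))
                # Move the particle and clamp it to the search bounds.
                positions[i][d] = min(high, max(low, positions[i][d] + velocities[i][d]))
            value = objective(positions[i])
            if value < personal_val[i]:
                personal_best[i], personal_val[i] = positions[i][:], value
                if value < global_val:
                    global_best, global_val = positions[i][:], value
    return global_best, global_val


best_position, best_value = basic_pso(sphere, dim=2, bounds=(-5.0, 5.0))
print(best_position, best_value)
```

Every particle is pulled toward the same global best, so on multimodal functions the swarm can collapse onto a local optimum early. That is the weakness the reset and mutation strategies described above are meant to address.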

The structure of the paper is as follows: Section “Particle swarm optimization (PSO)” describes the principle of the particle swarm algorithm. Section “Improved particle swarm optimization algorithm” presents the detailed improvement strategy and sets up a comparison experiment on inertia weight for the proposed NDWPSO. Section “Experiment and discussion” contains the experiments and a discussion of the results on the performance of the improved algorithm. Section “Conclusions and future works” summarizes the main findings of this study.

Literature review

This section reviews some metaheuristic algorithms and other improved PSO algorithms. A simple discussion about recently proposed research studies is given.

Metaheuristic algorithms

A series of metaheuristic algorithms have been proposed in recent years by using various innovative approaches. For instance, Lin et al. 7 proposed a novel artificial bee colony algorithm (ABCLGII) in 2018 and compared ABCLGII with other outstanding ABC variants on 52 frequently used test functions. Abed-alguni et al. 8 proposed an exploratory cuckoo search (ECS) algorithm in 2021 and carried out several experiments to investigate the performance of ECS by 14 benchmark functions. Brajević 9 presented a novel shuffle-based artificial bee colony (SB-ABC) algorithm for solving integer programming and minimax problems in 2021. The experiments are tested on 7 integer programming problems and 10 minimax problems. In 2022, Khan et al. 10 proposed a non-deterministic meta-heuristic algorithm called Non-linear Activated Beetle Antennae Search (NABAS) for a non-convex tax-aware portfolio selection problem. Brajević et al. 11 proposed a hybridization of the sine cosine algorithm (HSCA) in 2022 to solve 15 complex structural and mechanical engineering design optimization problems. Abed-Alguni et al. 12 proposed an improved Salp Swarm Algorithm (ISSA) in 2022 for single-objective continuous optimization problems. A set of 14 standard benchmark functions was used to evaluate the performance of ISSA. In 2023, Nadimi et al. 13 proposed a binary starling murmuration optimization (BSMO) to select the effective features from different important diseases. In the same year, Nadimi et al. 14 systematically reviewed the last 5 years' developments of WOA and made a critical analysis of those WOA variants. In 2024, Fatahi et al. 15 proposed an Improved Binary Quantum-based Avian Navigation Optimizer Algorithm (IBQANA) for the Feature Subset Selection problem in the medical area. Experimental evaluation on 12 medical datasets demonstrates that IBQANA outperforms 7 established algorithms. Abed-alguni et al. 16 proposed an Improved Binary DJaya Algorithm (IBJA) to solve the Feature Selection problem in 2024. The IBJA’s performance was compared against 4 ML classifiers and 10 efficient optimization algorithms.

Improved PSO algorithms

Many researchers have proposed improved PSO algorithms to solve engineering problems in different fields. For instance, Yeh 17 proposed an improved particle swarm algorithm, which combines a new self-boundary search and a bivariate update mechanism, to solve the reliability redundancy allocation problem (RRAP). Solomon et al. 18 designed a collaborative multi-group particle swarm algorithm with high parallelism that was used to test the adaptability of Graphics Processing Units (GPUs) in distributed computing environments. Mukhopadhyay and Banerjee 19 proposed a chaotic multi-group particle swarm optimization (CMS-PSO) to estimate the unknown parameters of an autonomous chaotic laser system. Duan et al. 20 designed an improved particle swarm algorithm with nonlinear adjustment of inertia weights to improve the coupling accuracy between laser diodes and single-mode fibers. Sun et al. 21 proposed a particle swarm optimization algorithm combined with non-Gaussian stochastic distribution for the optimal design of wind turbine blades. Based on a multiple swarm scheme, Liu et al. 22 proposed an improved particle swarm optimization algorithm to predict the temperatures of steel billets for the reheating furnace. In 2022, Gad 23 analyzed 2140 existing papers on Swarm Intelligence published between 2017 and 2019 and pointed out that the PSO algorithm still needs further research. In general, the improved methods can be classified into four categories:

Adjusting the distribution of algorithm parameters. Feng et al. 24 used a nonlinear adaptive method on inertia weights to balance local and global search and introduced asynchronously varying acceleration coefficients.

Changing the updating formula of the particle swarm position. Both papers 25 and 26 used chaotic mapping functions to update the inertia weight parameters and combined them with a dynamic weighting strategy to update the particle swarm positions. This approach gives the particle swarm algorithm fast convergence.

The initialization of the swarm. Alsaidy and Abbood 27 proposed a hybrid task scheduling algorithm that replaced the random initialization of the meta-heuristic algorithm with the heuristic algorithms MCT-PSO and LJFP-PSO.

Combining with other intelligent algorithms. Liu et al. 28 introduced the differential evolution (DE) algorithm into PSO to increase the diversity of the particle swarm and reduce the probability of the population falling into a local optimum.

Particle swarm optimization (PSO)

The particle swarm optimization algorithm is a swarm intelligence algorithm for solving continuous and discrete optimization problems. It originated from the social behavior of individuals in bird flocks and fish schools 6 . The core idea of PSO is that each particle explores potential solutions by flying through a bounded search space, adjusts its search direction toward the global optimal solution based on the information shared within the group, and thereby solves the optimization problem. Each particle \(i\) has two attributes: a velocity vector \({V}_{i}=[{v}_{i1},{v}_{i2},{v}_{i3},...,{v}_{ij},...,{v}_{iD}]\) and a position vector \({X}_{i}=[{x}_{i1},{x}_{i2},{x}_{i3},...,{x}_{ij},...,{x}_{iD}]\) . The velocity vector is used to modify the motion path of the particle; the position vector represents a potential solution of the optimization problem. Here, \(j=\mathrm{1,2},\dots ,D\) , where \(D\) represents the dimension of the search space. The equations for updating the velocity and position of the particle swarm are shown in Eqs. ( 1 ) and ( 2 ).
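In the standard inertia-weight formulation of PSO, written with the symbols used in this section, the two updates take the following form. This is the textbook form rather than a transcription of the typeset equations, and the per-dimension indexing of \(Pbest\) and \(Gbest\) is an assumption made here for clarity.

\[
v_{ij}^{k+1}=\omega v_{ij}^{k}+c_{1}r_{1}\left(Pbest_{ij}^{k}-x_{ij}^{k}\right)+c_{2}r_{2}\left(Gbest_{j}-x_{ij}^{k}\right) \quad (1)
\]

\[
x_{ij}^{k+1}=x_{ij}^{k}+v_{ij}^{k+1} \quad (2)
\]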

Here \({Pbest}_{i}^{k}\) represents the best position found so far by particle \(i\) , and \({Gbest}\) is the best position discovered by the whole population. \(i=\mathrm{1,2},\dots ,n\) , where \(n\) denotes the size of the particle swarm. \({c}_{1}\) and \({c}_{2}\) are the acceleration constants, which are used to adjust the search step of the particle 29 . \({r}_{1}\) and \({r}_{2}\) are two random values uniformly distributed in the range \([\mathrm{0,1}]\) , which are used to improve the randomness of the particle search. \(\omega\) is the inertia weight parameter, which is used to adjust the scale of the search range of the particle swarm 30 . The basic PSO treats the inertia weight as a time-varying parameter to balance global exploration and local exploitation. The update equation of the inertia weight parameter is given as follows:
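Assuming the linearly decreasing schedule that is conventional for the basic PSO (the improvement strategies later replace "a linear decreasing strategy"), this update takes the form:

\[
\omega ={\omega }_{max}-\left({\omega }_{max}-{\omega }_{min}\right)\frac{k}{Mk} \quad (3)
\]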

where \({\omega }_{max}\) and \({\omega }_{min}\) represent the upper and lower limits of the inertia weight parameter. \(k\) and \(Mk\) are the current iteration number and the maximum number of iterations, respectively.
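The basic PSO iteration described above can be sketched in Python as follows. This is a minimal illustration assuming the standard velocity and position updates and a linearly decreasing inertia weight; the function names, the defaults \(c_1=c_2=2\), and the optional velocity clamp are illustrative choices and are not taken from the original MATLAB implementation.

```python
import random


def linear_inertia(k, max_iter, w_min=0.4, w_max=0.9):
    """Inertia weight that decreases linearly from w_max (k = 0) to w_min (k = max_iter)."""
    return w_max - (w_max - w_min) * k / max_iter


def pso_step(positions, velocities, pbest, gbest, w,
             c1=2.0, c2=2.0, v_max=None, rng=random):
    """Apply one velocity and position update to every particle, in place."""
    for i, (x, v) in enumerate(zip(positions, velocities)):
        for j in range(len(x)):
            r1, r2 = rng.random(), rng.random()
            # Inertia term + pull toward the personal best + pull toward the global best.
            v[j] = (w * v[j]
                    + c1 * r1 * (pbest[i][j] - x[j])
                    + c2 * r2 * (gbest[j] - x[j]))
            if v_max is not None:
                # Optional clamp (an assumption) that keeps the step size bounded.
                v[j] = max(-v_max, min(v_max, v[j]))
            x[j] += v[j]
```

Updating the personal and global bests after each call is left to the caller, since it only requires evaluating the fitness function at the new positions.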

Improved particle swarm optimization algorithm

According to the no free lunch theorem 31 , no algorithm can solve every practical problem with high quality and efficiency, particularly as optimization problems become increasingly complex and diverse. In this section, several improvement strategies are proposed to improve the search efficiency and overcome the shortcomings of the basic PSO algorithm.

Improvement strategies

The optimization strategies of the improved PSO algorithm are shown as follows:

The inertia weight parameter is updated by an improved chaotic-variable method instead of a linearly decreasing strategy. Chaotic mapping performs the whole search at a higher speed and is more resistant to falling into local optima than probability-dependent random search 32 . However, chaotic weights can easily make particles fly out of the region that contains the global optimum. To ensure that the population can converge to the global optimum, an improved Iterative mapping is adopted and shown as follows:

Here \({\omega }_{k}\) is the inertia weight parameter in the iteration \(k\) , \(b\) is the control parameter in the range \([\mathrm{0,1}]\) .

The acceleration coefficients are updated by a linear transformation. \({c}_{1}\) and \({c}_{2}\) weight the influence of a particle's own information and of the population's information, respectively. To improve the search performance of the population, \({c}_{1}\) and \({c}_{2}\) are changed from fixed values to time-varying parameters that are updated linearly with the number of iterations:

where \({c}_{max}\) and \({c}_{min}\) are the maximum and minimum values of acceleration coefficients, respectively.
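A hedged sketch of one such linear schedule is given below. The direction of change is an assumption that follows common practice for time-varying coefficients: \(c_1\) decays from \(c_{max}\) to \(c_{min}\) while \(c_2\) grows from \(c_{min}\) to \(c_{max}\), so that particles rely on their own experience early and on the swarm's experience late. The function name is hypothetical, and the default limits are the values listed in the experimental settings.

```python
def acceleration_coefficients(k, max_iter, c_min=1.5, c_max=2.5):
    """Return (c1, c2) for iteration k under a linear schedule.

    Assumed direction: c1 (own experience) decreases, c2 (swarm experience) increases.
    """
    t = k / max_iter
    c1 = c_max - (c_max - c_min) * t
    c2 = c_min + (c_max - c_min) * t
    return c1, c2
```

Under this assumption the sum \(c_1+c_2\) stays constant over the run, so only the balance between the two attraction terms changes.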

The initialization scheme is determined by elite opposition-based learning. A high-quality initial population accelerates the solution speed of the algorithm and improves the accuracy of the optimal solution. Thus, the elite opposition-based learning strategy 33 is introduced to generate the position matrix of the initial population. Suppose the elite individual of the population is \({X}=[{x}_{1},{x}_{2},{x}_{3},...,{x}_{j},...,{x}_{D}]\) , and the elite opposition-based solution of \(X\) is \({X}_{o}=[{x}_{{\text{o}}1},{x}_{{\text{o}}2},{x}_{{\text{o}}3},...,{x}_{oj},...,{x}_{oD}]\) . The formula for the elite opposition-based solution is as follows:

where \({k}_{r}\) is a random value in the range \((\mathrm{0,1})\) . \({ux}_{oij}\) and \({lx}_{oij}\) are the dynamic boundaries of the elite opposition-based solution in the \(j\)-th dimension. The advantage of dynamic boundaries is that they reduce the exploration space of the particles, which benefits the convergence of the algorithm. When the elite opposition-based solution is out of bounds, out-of-bounds processing is performed. The equation is given as follows:

After the fitness function values of the elite solutions and the elite opposition-based solutions are calculated, the \(n\) highest-quality solutions are selected to form the new initial population position matrix.
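The initialization procedure can be sketched as follows. The sketch rests on several assumptions that are not confirmed by the text: every randomly generated individual is treated as an elite candidate, the opposite point is computed with the common elite opposition-based rule \(k_r(lx_j+ux_j)-x_j\), the dynamic bounds are the per-dimension minimum and maximum of the current swarm, and an out-of-bounds coordinate is resampled uniformly inside those bounds. All names are hypothetical.

```python
import random


def elite_opposition_init(fitness, n, dim, lower, upper, rng=random):
    """Build an initial swarm of n positions from random and opposition-based candidates."""
    swarm = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]
    # Dynamic bounds: per-dimension minimum and maximum over the current swarm.
    lx = [min(p[j] for p in swarm) for j in range(dim)]
    ux = [max(p[j] for p in swarm) for j in range(dim)]
    opposite = []
    for p in swarm:
        k_r = rng.random()
        q = []
        for j in range(dim):
            xo = k_r * (lx[j] + ux[j]) - p[j]
            if xo < lx[j] or xo > ux[j]:
                # Out-of-bounds handling (assumed): resample inside the dynamic bounds.
                xo = rng.uniform(lx[j], ux[j])
            q.append(xo)
        opposite.append(q)
    # Keep the n fittest candidates (minimization) from both sets.
    return sorted(swarm + opposite, key=fitness)[:n]
```

The extra cost of this scheme is one additional fitness evaluation per candidate before the first iteration.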

The position updating Eq. ( 2 ) is modified based on the strategy of dynamic weight. To improve the speed of the global search of the population, the strategy of dynamic weight from the artificial bee colony algorithm 34 is introduced to enhance the computational performance. The new position updating equation is shown as follows:

Here \(\rho\) is the random value in the range \((\mathrm{0,1})\) . \(\psi\) represents the acceleration coefficient and \({\omega }{\prime}\) is the dynamic weight coefficient. The updated equations of the above parameters are as follows:

where \(f(i)\) denotes the fitness function value of individual particle \(i\) and \(u\) is the average of the population fitness function values in the current iteration. Eqs. ( 11 ) and ( 12 ) are introduced into the position updating equation, where they attract the particle towards the position of the best-so-far solution in the search space.

A new local optimal jump-out strategy is added for escaping from local optima. When the fitness function value of the best particle in the population does not change in M iterations, the algorithm determines that the population has fallen into a local optimum. The population then jumps out of the local optimum by resetting the position information of 40% of the individuals within the population, in other words, by randomly regenerating their position vectors in the search space. M is set to 5% of the maximum number of iterations.
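A minimal sketch of this stagnation check is shown below. The text fixes the reset fraction (40%) and the window M (5% of the maximum number of iterations); how the reset particles are chosen is not stated, so uniform random selection is assumed here, and the function and argument names are hypothetical.

```python
import random


def jump_out_if_stagnant(positions, best_history, lower, upper,
                         max_iter, reset_fraction=0.4, rng=random):
    """Re-randomize part of the swarm when the best fitness has stalled.

    best_history holds the best fitness value recorded after each iteration.
    Returns the indices of the particles that were reset (empty if none).
    """
    m = max(1, int(0.05 * max_iter))  # stagnation window M
    if len(best_history) <= m:
        return []
    recent = best_history[-(m + 1):]
    if any(value != recent[0] for value in recent):
        return []  # the best value changed within the last M iterations
    count = int(reset_fraction * len(positions))
    chosen = rng.sample(range(len(positions)), count)  # assumed: uniform choice
    for i in chosen:
        positions[i] = [rng.uniform(lower, upper) for _ in positions[i]]
    return chosen
```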

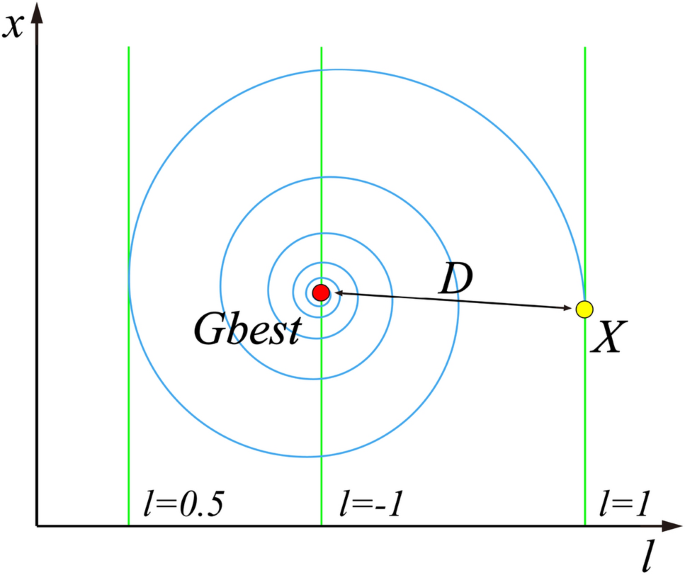

A new spiral update search strategy is added after the local optimal jump-out strategy. Since the whale optimization algorithm (WOA) is good at exploring the local search space 35 , the spiral update search strategy of the WOA 36 is introduced to update the position of the particles after the swarm jumps out of a local optimum. The equation for the spiral update is as follows:

Here \(D=\left|{x}_{i}\left(k\right)-Gbest\right|\) denotes the distance between the particle itself and the global optimal solution so far. \(B\) is the constant that defines the shape of the logarithmic spiral. \(l\) is a random value in \([-\mathrm{1,1}]\) that controls the distance between the newly generated particle and the global optimal position: \(l=-1\) means the closest distance, while \(l=1\) means the farthest distance. The meaning of this parameter can be seen directly in Fig. 1 .
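The spiral move can be sketched as below, assuming the usual WOA form \(D\,e^{Bl}\cos(2\pi l)+Gbest\) with \(D\) taken per dimension. The function name is hypothetical, and the default \(B=1\) is the value listed in the experimental settings.

```python
import math
import random


def spiral_update(x, gbest, b=1.0, rng=random):
    """Move x along a logarithmic spiral centred on gbest (WOA-style, assumed form)."""
    l_rand = rng.uniform(-1.0, 1.0)
    factor = math.exp(b * l_rand) * math.cos(2.0 * math.pi * l_rand)
    # D is taken per dimension as |x_j - gbest_j|.
    return [abs(xj - gj) * factor + gj for xj, gj in zip(x, gbest)]
```

A particle that already sits on the global best is left unchanged by this update because its distance \(D\) is zero.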

Spiral updating position.

The DE/best/2 mutation strategy is introduced to form the mutant particle. Four individuals that differ from the current particle are randomly selected from the population; the vector differences between them are rescaled and combined with the global optimal position to form the mutant particle. The equation for mutation of particle position is shown as follows:

where \({x}^{*}\) is the mutated particle, \(F\) is the scale factor of mutation, and \({r}_{1}\) , \({r}_{2}\) , \({r}_{3}\) , \({r}_{4}\) are random integers in \((0,n]\) that are not equal to \(i\) . Specific particles are selected for mutation with the screening conditions as follows:

where \(Cr\) represents the probability of mutation, \(rand\left(\mathrm{0,1}\right)\) is a random number in \(\left(\mathrm{0,1}\right)\) , and \({i}_{rand}\) is a random integer value in \((0,n]\) .
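The mutation and screening steps can be sketched as follows, assuming the standard DE/best/2 rule \(Gbest+F(x_{r1}-x_{r2})+F(x_{r3}-x_{r4})\) and a screening condition of the form "mutate particle \(i\) if \(rand(0,1)<Cr\) or \(i=i_{rand}\)". Both forms are assumptions based on the definitions above, as is the requirement that the four indices be mutually distinct; the function names are hypothetical, and the defaults are the \(F\) and \(Cr\) values from the experimental settings.

```python
import random


def de_best_2_mutant(positions, gbest, i, f=0.7, rng=random):
    """Return gbest + F*(x_r1 - x_r2) + F*(x_r3 - x_r4) with r1..r4 all different from i."""
    candidates = [r for r in range(len(positions)) if r != i]
    r1, r2, r3, r4 = rng.sample(candidates, 4)
    return [g + f * (a - b) + f * (c - d)
            for g, a, b, c, d in zip(gbest, positions[r1], positions[r2],
                                     positions[r3], positions[r4])]


def mutate_swarm(positions, gbest, f=0.7, cr=0.9, rng=random):
    """Return a new swarm in which the screened particles are replaced by mutants."""
    i_rand = rng.randrange(len(positions))
    result = []
    for i, x in enumerate(positions):
        if rng.random() < cr or i == i_rand:
            result.append(de_best_2_mutant(positions, gbest, i, f, rng))
        else:
            result.append(list(x))
    return result
```

Whether a mutant replaces its parent unconditionally or only when it improves the fitness is not stated in this section; the sketch replaces unconditionally.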

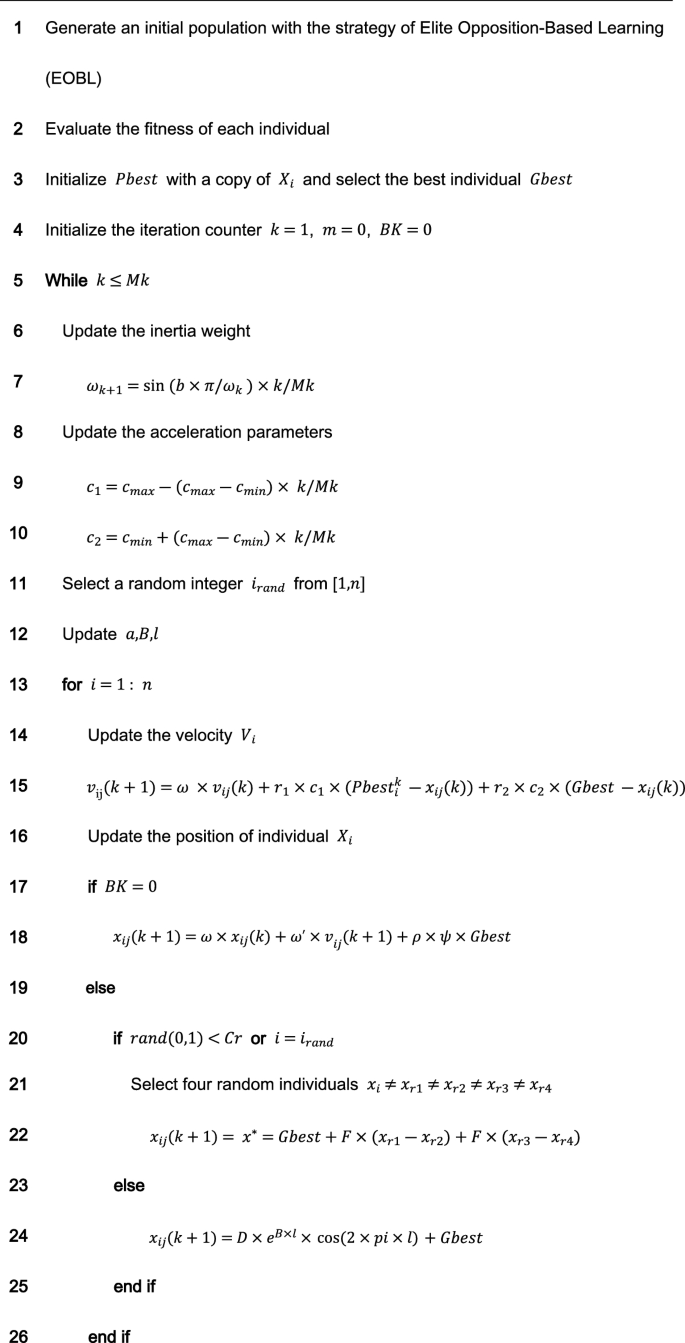

The improved PSO incorporates the search ideas of other intelligent algorithms (DE, WOA), so the improved algorithm proposed in this paper is named NDWPSO. The pseudo-code for the NDWPSO algorithm is given as follows:

The main procedure of NDWPSO.

Comparing the distribution of inertia weight parameters

Several improved PSO algorithms (such as CDWPSO 25 and SDWPSO 26 ) adopt the dynamic weighted particle position update strategy as their improvement strategy. The update equations of the CDWPSO and SDWPSO algorithms for the inertia weight parameters are given as follows:

where \({\text{A}}\) is a value in \((\mathrm{0,1}]\) . \({r}_{max}\) and \({r}_{min}\) are the upper and lower limits of the fluctuation range of the inertia weight parameters, \(k\) is the current number of algorithm iterations, and \(Mk\) denotes the maximum number of iterations.

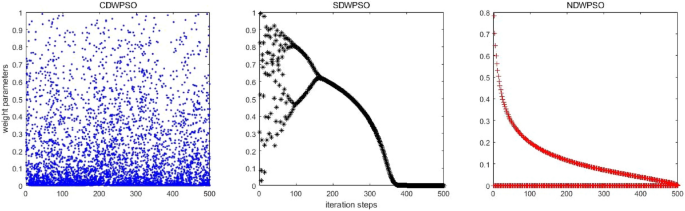

Because the inertia weight update method of the proposed NDWPSO is comparable to those of CDWPSO and SDWPSO, a comparison experiment on the distribution of inertia weight parameters is set up in this section. The maximum number of iterations in the experiment is \(Mk=500\) . The distributions of the CDWPSO, SDWPSO, and NDWPSO inertia weights are shown sequentially in Fig. 2 .

The inertial weight distribution of CDWPSO, SDWPSO, and NDWPSO.

In Fig. 2 , the inertia weight value of CDWPSO is a random value in (0,1]. It may make individual particles fly out of the range in the late iterations of the algorithm. Similarly, the inertia weight value of SDWPSO tends to zero, so that the swarm can no longer fly in the search space, which makes the algorithm extremely prone to falling into a local optimum. On the other hand, the distribution of the inertia weights of NDWPSO forms a gentle slope bounded by two curves. Thus, the swarm can lock onto the range of the global optimum faster in the early iterations and locate the global optimum more precisely in the late iterations. The reason is that the inertia weight values of two adjacent iterations are inversely proportional to each other. Besides, the time-varying part of the inertia weight within NDWPSO is designed to reduce the chaotic character of the parameter. The inertia weight of NDWPSO thus avoids the disadvantages of the above two schemes, so its design is more reasonable.

Experiment and discussion

In this section, three experiments are set up to evaluate the performance of NDWPSO: (1) a comparison on 23 classical functions 37 between NDWPSO and three particle swarm algorithms (PSO 6 , CDWPSO 25 , SDWPSO 26 ); (2) a comparison on the benchmark test functions between NDWPSO and other intelligent algorithms (Whale Optimization Algorithm (WOA) 36 , Harris Hawk Algorithm (HHO) 38 , Gray Wolf Optimization Algorithm (GWO) 39 , Archimedes Algorithm (AOA) 40 , Equilibrium Optimizer (EO) 41 and Differential Evolution (DE) 42 ); (3) the solution of three real engineering problems (welded beam design 43 , pressure vessel design 44 , and three-bar truss design 38 ). All experiments are run on a computer with an Intel i5-11400F CPU (2.60 GHz) and 16 GB RAM, and the code is written in MATLAB R2017b.

The benchmark test functions are 23 classical functions, which consist of indefinite-dimensional unimodal functions (F1–F7), indefinite-dimensional multimodal functions (F8–F13), and fixed-dimensional multimodal functions (F14–F23). The unimodal benchmark functions are used to evaluate the global search performance of different algorithms, while the multimodal benchmark functions reflect the ability of an algorithm to escape from local optima. The mathematical equations of the benchmark functions are given in Supplementary Tables S1 – S3 online.
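To make the unimodal and multimodal distinction concrete, the sketch below implements two widely used functions of this kind: the sphere function and the Rastrigin function. They are commonly numbered F1 and F9 in the classical 23-function suite, but that mapping is an assumption here; the authoritative definitions are those in the Supplementary Tables.

```python
import math


def sphere(x):
    """Unimodal: sum of squares, with a single minimum of 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: a grid of local minima, with the global minimum of 0 at the origin."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)
```

Both functions accept a position vector of any length, which is what allows the same definition to be evaluated at 30, 50, and 100 dimensions.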

Experiments on benchmark functions between NDWPSO and other PSO variants

The purpose of the experiment is to show the performance advantages of the NDWPSO algorithm. Here, the dimensions and corresponding population sizes of the 13 benchmark functions (7 unimodal and 6 multimodal) are set to (30, 40), (50, 70), and (100, 130). The population size for the 10 fixed-dimensional multimodal functions is set to 40. Each algorithm is run 30 times independently, and the maximum number of iterations is 200. The performance of each algorithm is measured by the mean and the standard deviation (SD) of the results for the different benchmark functions. The parameters of NDWPSO are set as: \([{\omega }_{min},{\omega }_{max}]=[0.4,0.9]\) , \([{c}_{max},{c}_{min}]=[2.5,1.5]\) , \({V}_{max}=0.1\) , \(b={e}^{-50}\) , \(M=0.05\times Mk\) , \(B=1\) , \(F=0.7\) , \(Cr=0.9\) . In addition, \(A={\omega }_{max}\) for CDWPSO and \([{r}_{max},{r}_{min}]=[4,0]\) for SDWPSO.

Besides, the experimental data are retained to two decimal places; for some data, more digits are retained to allow a more accurate comparison. The best results in each group of experiments are displayed in bold font. A value is reported as 0 if it is below \(10^{-323}\) . The experimental parameter settings in this paper are different from those in the references (PSO 6 , CDWPSO 25 , SDWPSO 26 ), so the final experimental data differ from those reported in the references.
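The reporting protocol (30 independent runs, mean and SD, tiny values reported as 0) can be sketched as follows. The helper name, the use of the sample standard deviation, and the decision to apply the zero threshold to the magnitude of a value are assumptions; the text does not specify them.

```python
import random
import statistics


def summarize_runs(run_once, runs=30, zero_threshold=1e-323, seed=0):
    """Run a stochastic optimizer `runs` times and return (mean, sd) of its best values.

    run_once is any callable that takes a random.Random instance and returns
    the best fitness value found in one independent run.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(runs):
        value = run_once(rng)
        # Magnitudes below the reporting threshold are recorded as exactly 0.
        results.append(0.0 if abs(value) < zero_threshold else value)
    return statistics.mean(results), statistics.stdev(results)
```

For example, `summarize_runs(lambda rng: rng.random())` summarizes 30 draws from a dummy "optimizer" that simply returns a random number.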

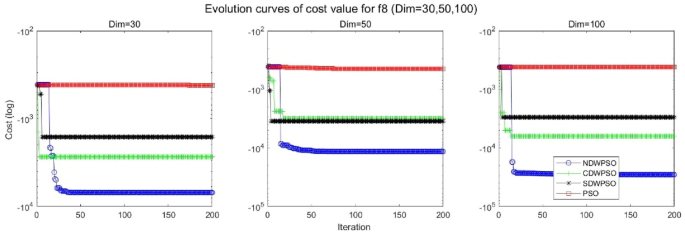

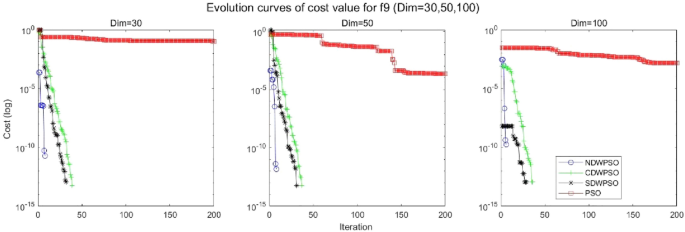

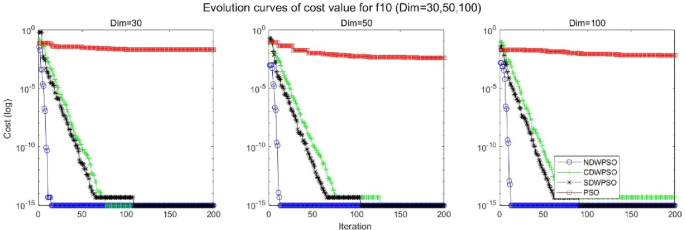

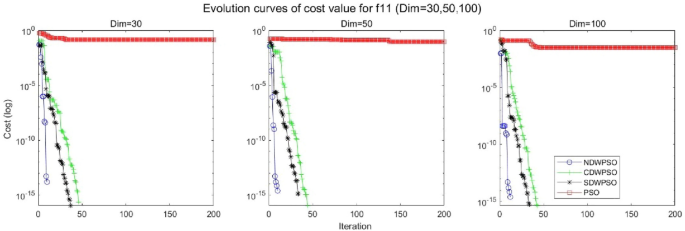

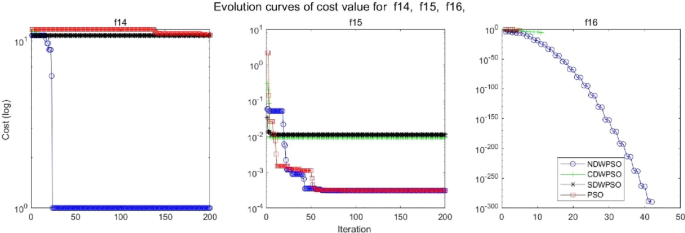

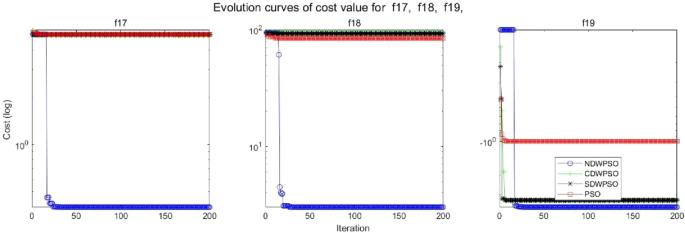

As shown in Tables 1 and 2 , the NDWPSO algorithm obtains the best results among the PSO variants on all 49 sets of data, which cover both the 13 indefinite-dimensional benchmark functions (each at three dimensions) and the 10 fixed-dimensional multimodal benchmark functions. Notably, the SDWPSO algorithm obtains the same accuracy as NDWPSO for the unimodal functions f1–f4 and the multimodal functions f9–f11. The solution accuracy of NDWPSO is higher than that of the other PSO variants for the fixed-dimensional multimodal benchmark functions f14–f23. It can be concluded that NDWPSO has excellent global search capability, local search capability, and capability for escaping local optima.

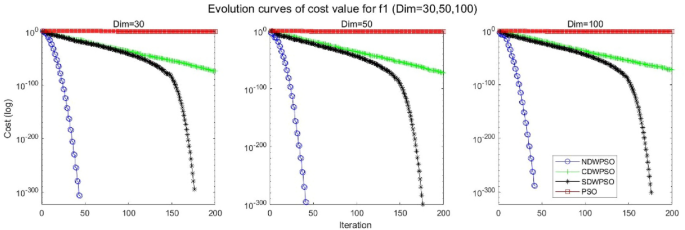

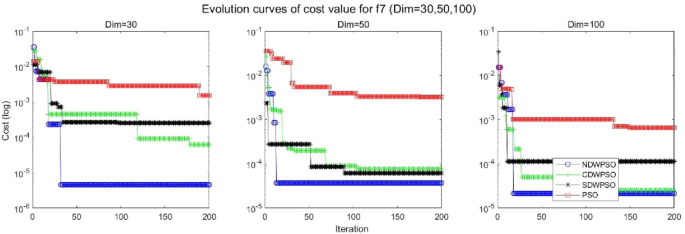

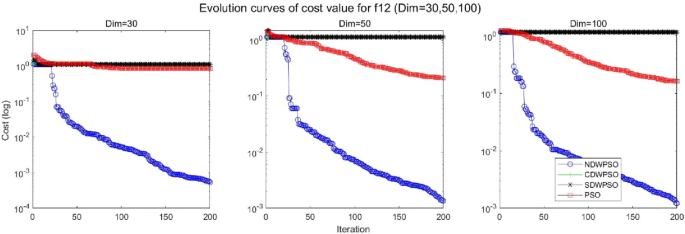

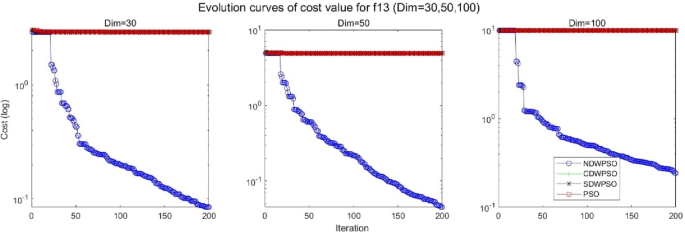

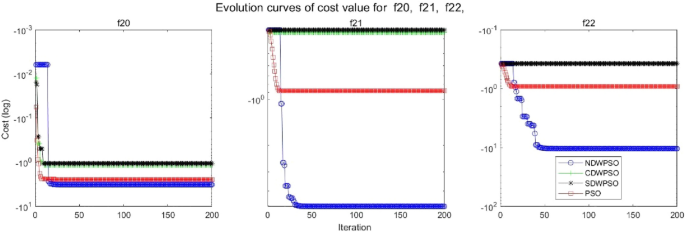

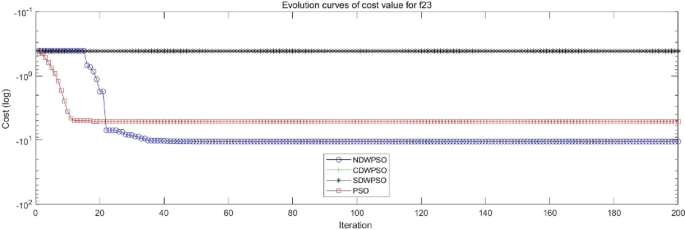

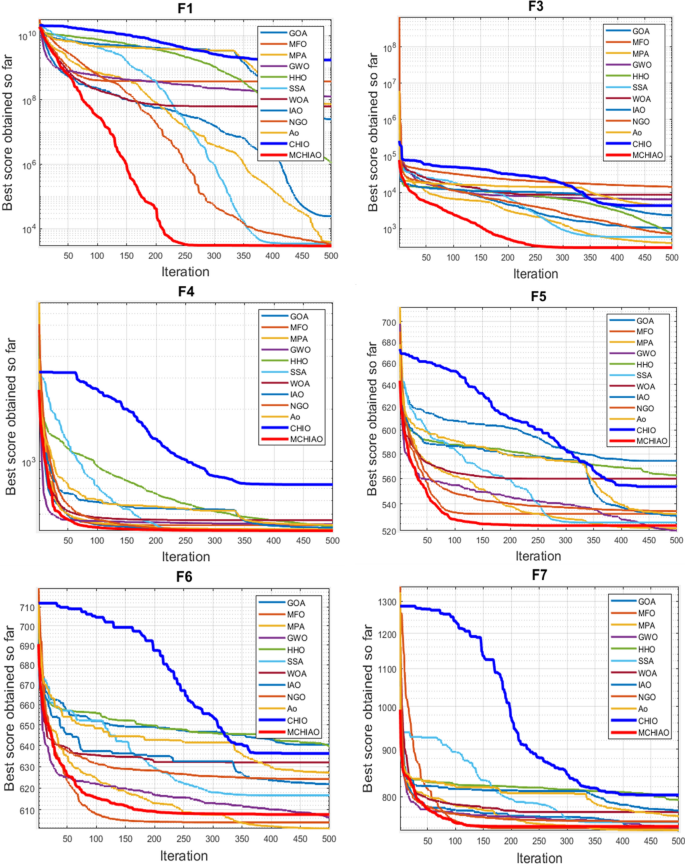

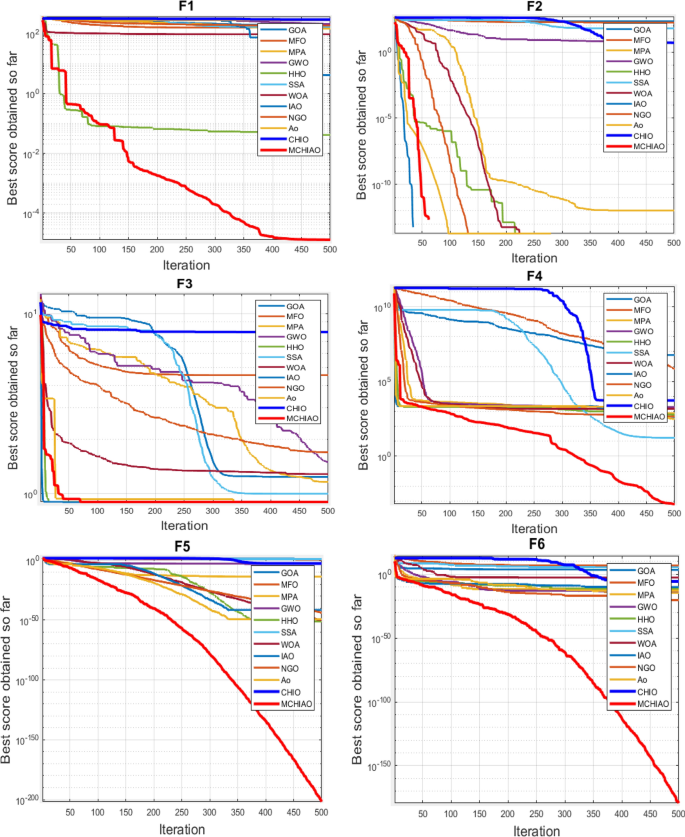

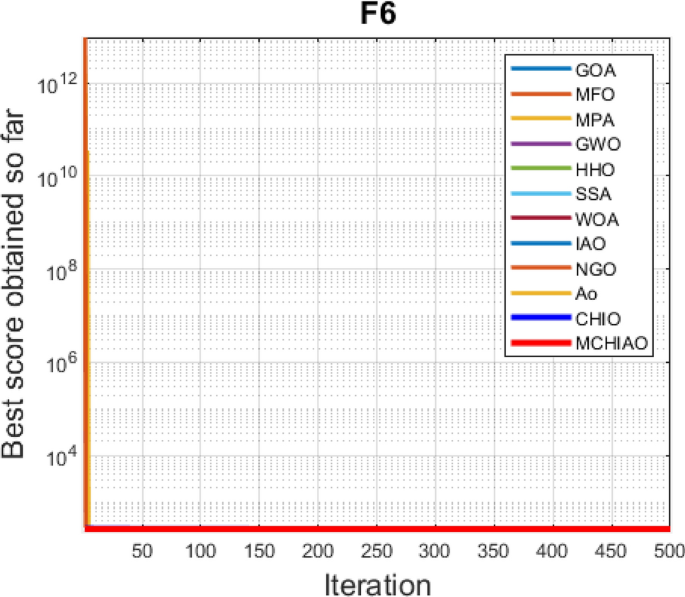

In addition, the convergence curves of the 23 benchmark functions are shown in Figs. 3–19. The NDWPSO algorithm converges faster in the early stage of the search for functions f1–f6, f8–f14, f16, and f17, and finds the global optimal solution in fewer iterations. For the remaining benchmark functions, the NDWPSO algorithm shows no outstanding convergence speed in the early iterations, for two reasons. On the one hand, the fixed-dimensional multimodal benchmark functions have many disturbances and local optimal solutions throughout the search space. On the other hand, the initialization scheme based on elite opposition-based learning is still stochastic, so the initial positions can be far from the global optimal solution. The inertia weight based on chaotic mapping and the spiral updating strategy significantly improve the convergence speed and computational accuracy of the algorithm in the late search stage. As a result, the NDWPSO algorithm finds better solutions than the other algorithms in the middle and late stages of the search.

Evolution curve of NDWPSO and other PSO algorithms for f1 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f2 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f3 (Dim = 30,50,100).

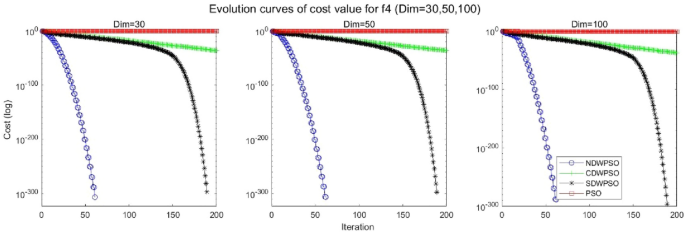

Evolution curve of NDWPSO and other PSO algorithms for f4 (Dim = 30,50,100).

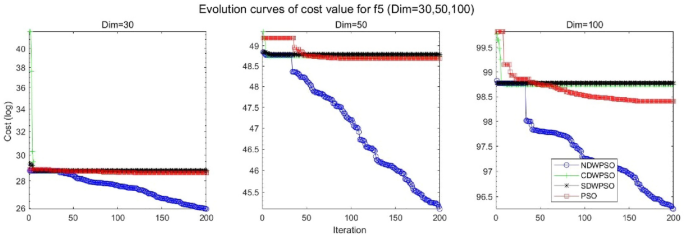

Evolution curve of NDWPSO and other PSO algorithms for f5 (Dim = 30,50,100).

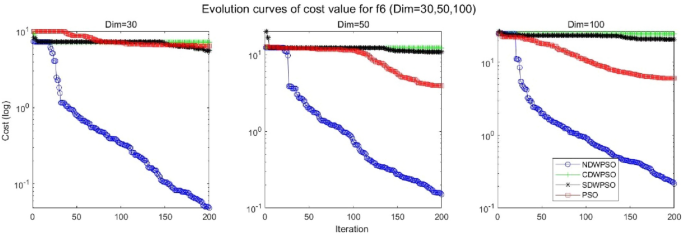

Evolution curve of NDWPSO and other PSO algorithms for f6 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f7 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f8 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f9 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f10 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f11 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f12 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f13 (Dim = 30,50,100).

Evolution curve of NDWPSO and other PSO algorithms for f14, f15, f16.

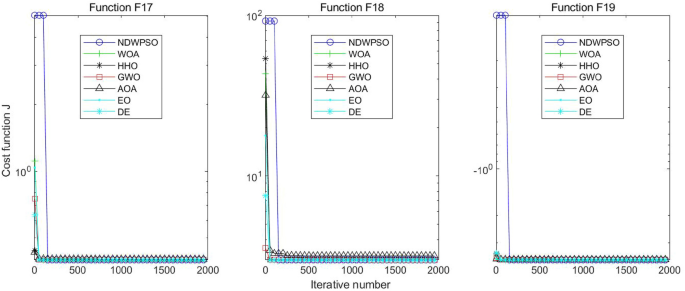

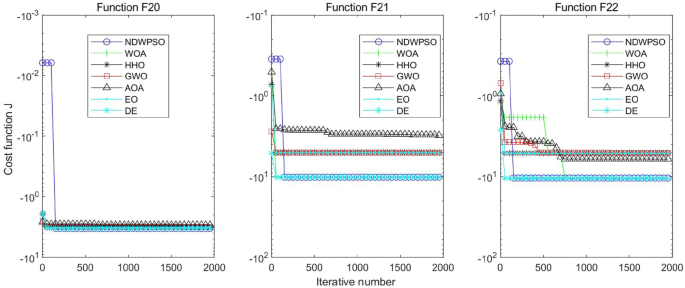

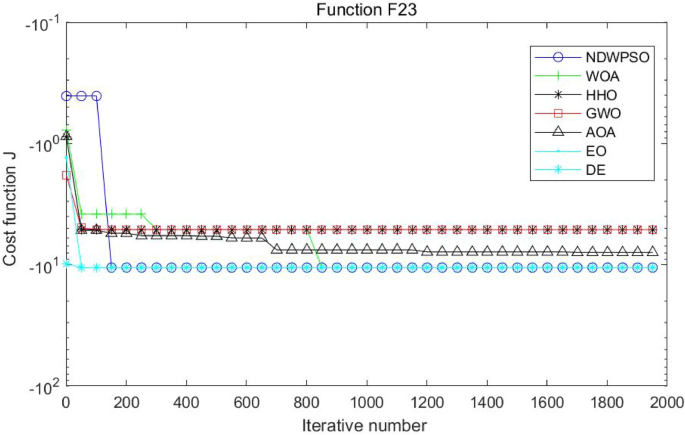

Evolution curve of NDWPSO and other PSO algorithms for f17, f18, f19.

Evolution curve of NDWPSO and other PSO algorithms for f20, f21, f22.

Evolution curve of NDWPSO and other PSO algorithms for f23.

To evaluate the performance of the different PSO algorithms, a statistical test is conducted. Due to the stochastic nature of meta-heuristics, it is not enough to compare algorithms based only on the mean and standard deviation values. The optimization results cannot be assumed to obey a normal distribution; thus, a non-parametric test is needed to judge whether the results of the algorithms differ from each other in a statistically significant way. Here, the Wilcoxon rank-sum test 45 is used to obtain a p -value that indicates whether two sets of solutions differ to a statistically significant extent. Conventionally, p ≤ 0.05 is regarded as evidence of a statistically significant difference. The p -values calculated in Wilcoxon's rank-sum test comparing NDWPSO and the other PSO algorithms are listed in Table 3 for all benchmark functions. The p -values in Table 3 further support the superiority of NDWPSO because all of them are much smaller than 0.05.
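For illustration, a two-sided rank-sum p-value can be computed with the large-sample normal approximation as sketched below. This is a simplified stand-in for a statistics package: it assigns average ranks to ties but applies neither a tie correction to the variance nor a continuity correction, and the function name is hypothetical.

```python
import math


def rank_sum_p_value(a, b):
    """Two-sided Wilcoxon rank-sum p-value using the normal approximation."""
    pooled = sorted((value, group)
                    for group, sample in enumerate((a, b))
                    for value in sample)
    n1, n2 = len(a), len(b)
    rank_sum_a = 0.0
    idx = 0
    while idx < len(pooled):
        # Find the run of tied values starting at idx and give each the average rank.
        end = idx
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[idx][0]:
            end += 1
        avg_rank = (idx + end) / 2.0 + 1.0
        rank_sum_a += avg_rank * sum(1 for t in range(idx, end + 1)
                                     if pooled[t][1] == 0)
        idx = end + 1
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (rank_sum_a - mean) / sd
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2.0))
```

With 30 runs per algorithm, two result sets that do not overlap at all give a p-value many orders of magnitude below 0.05, while identical result sets give a p-value of 1.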

In general, Figs. 3–19 show that NDWPSO has the fastest convergence rate when finding the global optimum, and thus we can conclude that NDWPSO is superior to the other PSO variants during the optimization process.

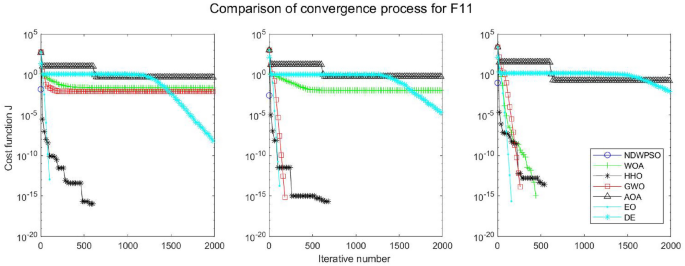

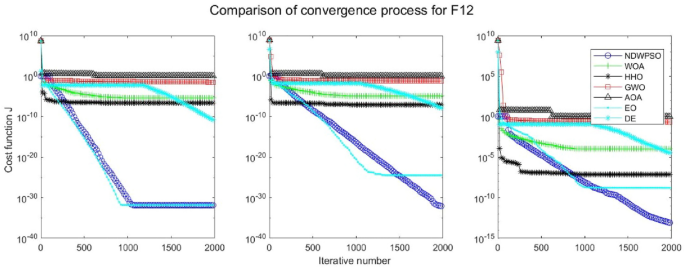

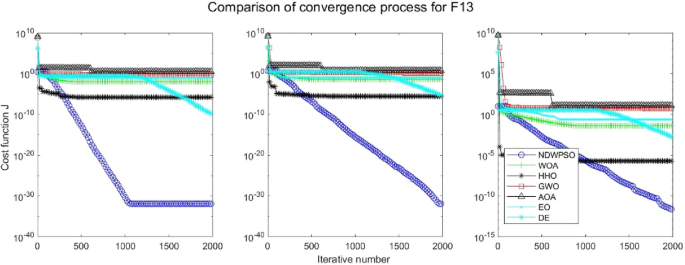

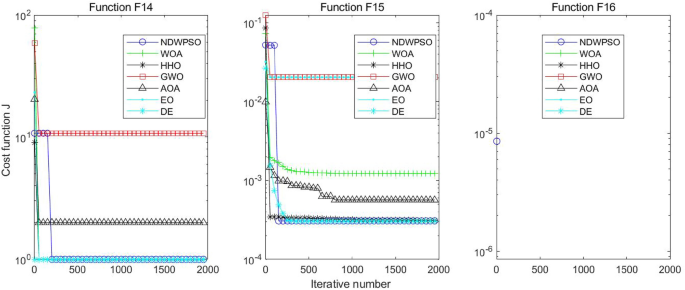

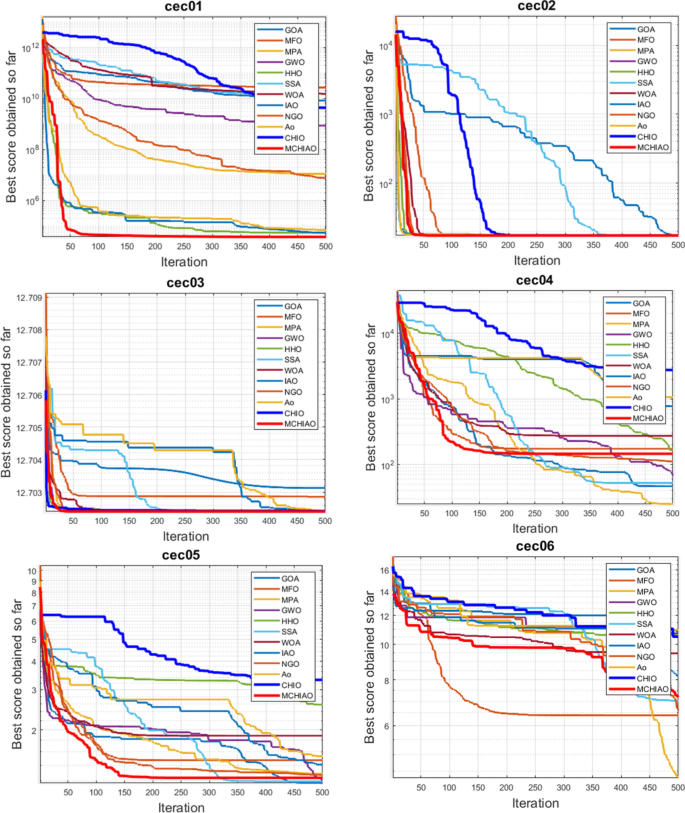

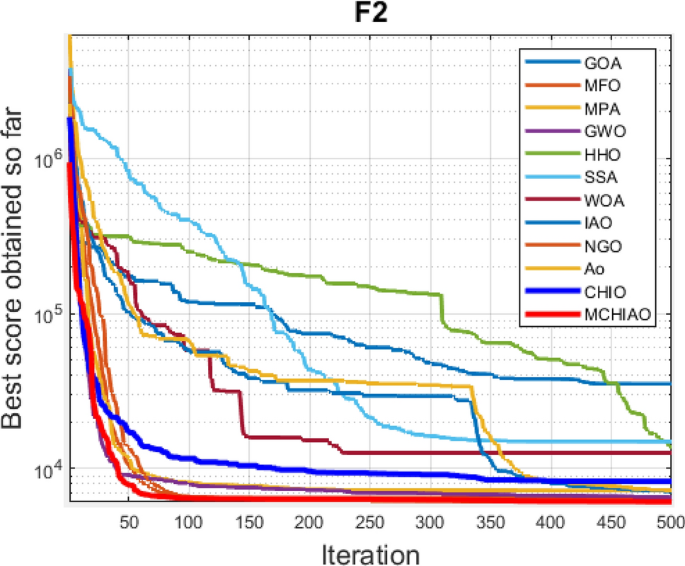

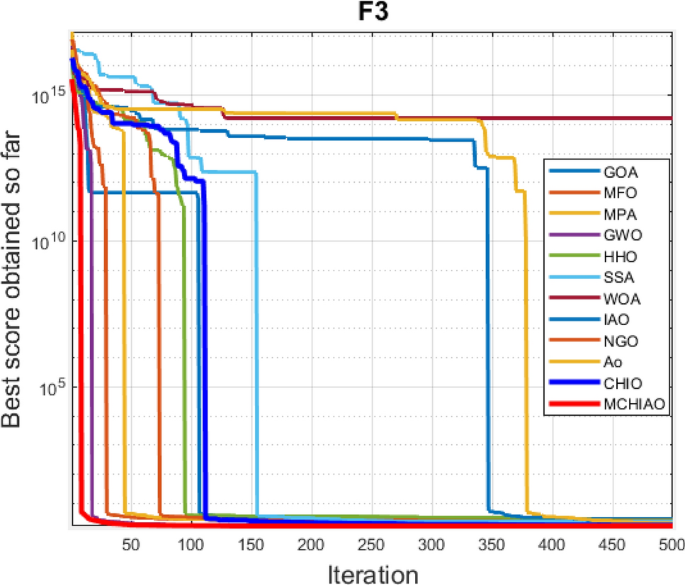

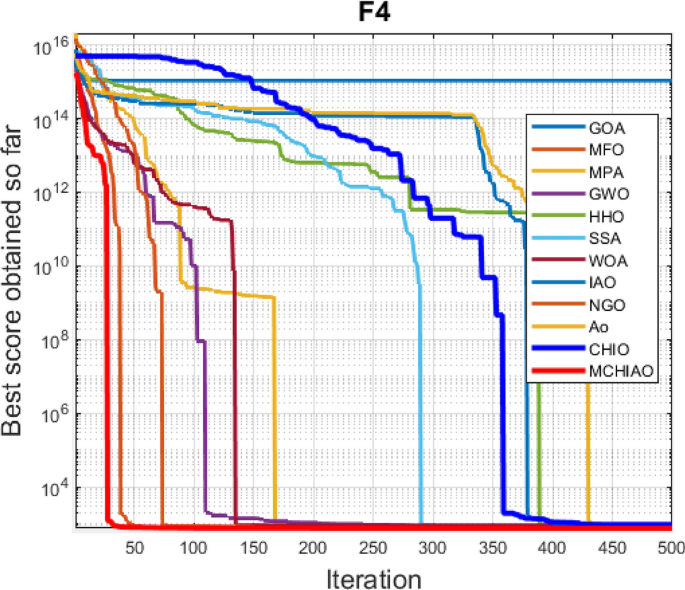

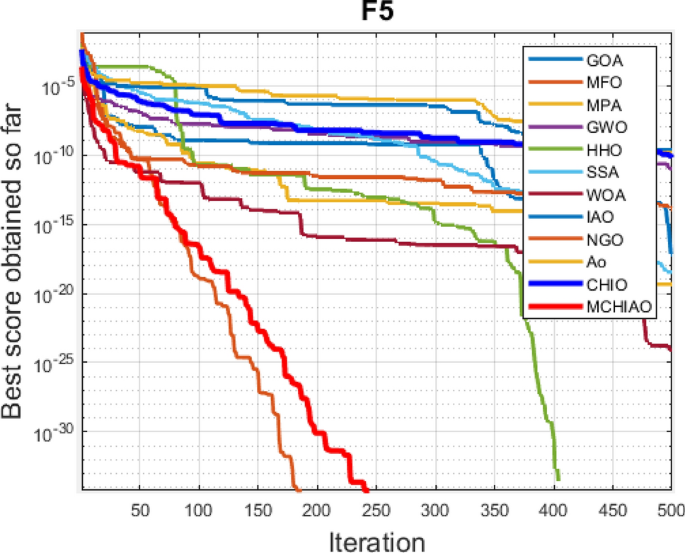

Comparison experiments between NDWPSO and other intelligent algorithms

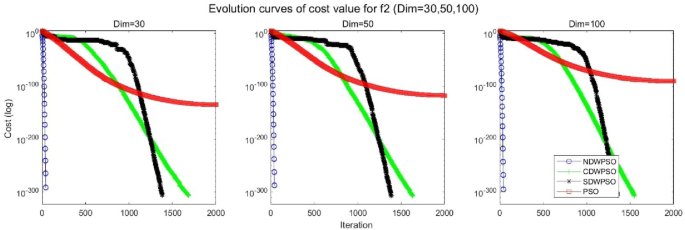

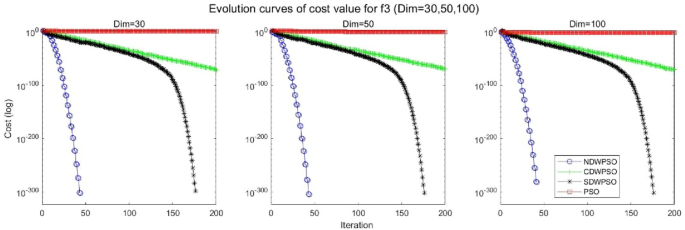

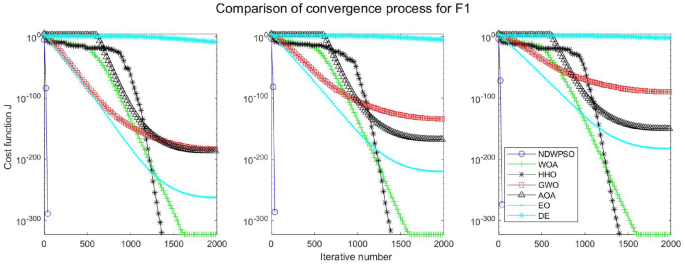

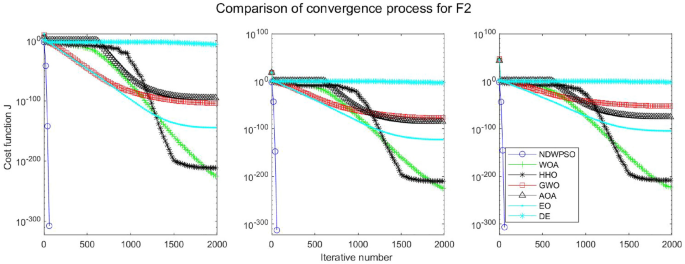

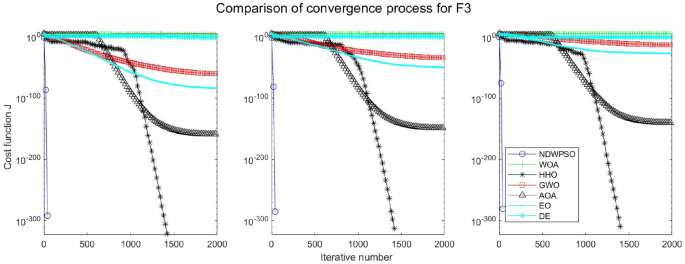

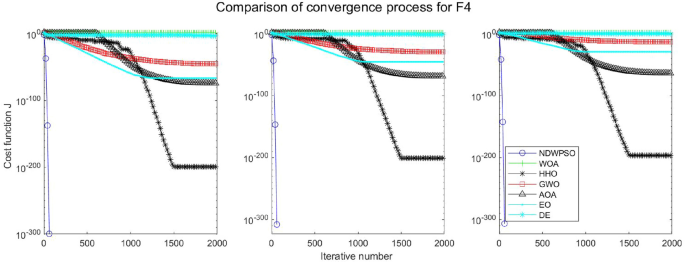

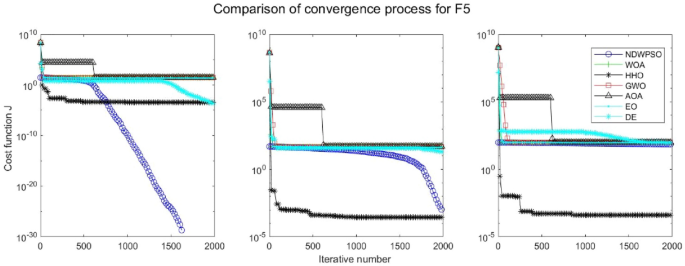

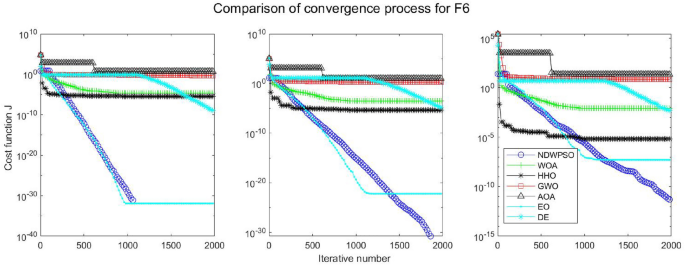

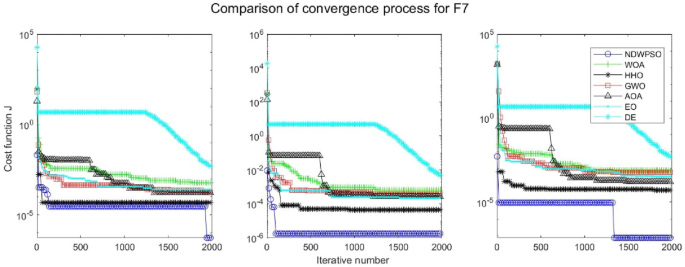

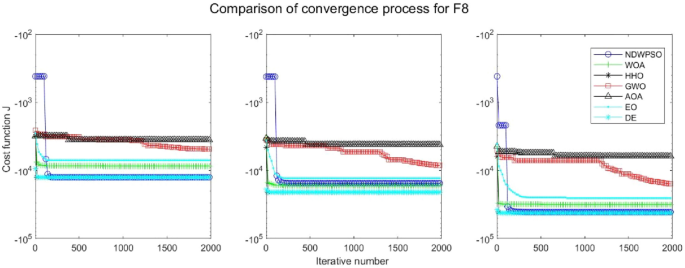

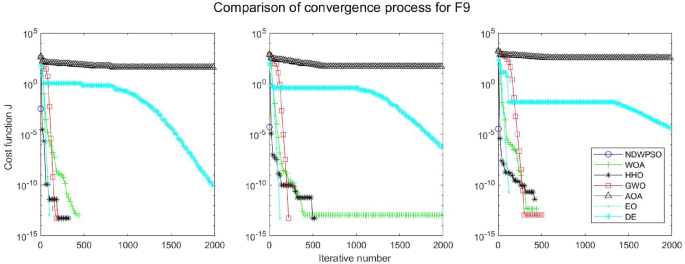

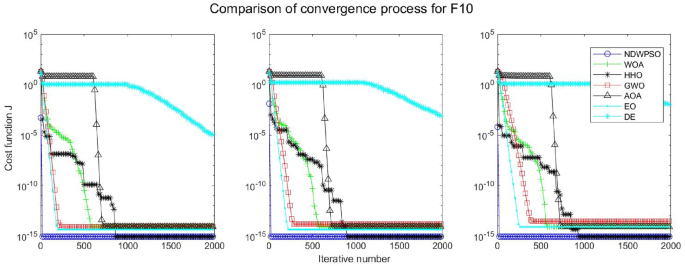

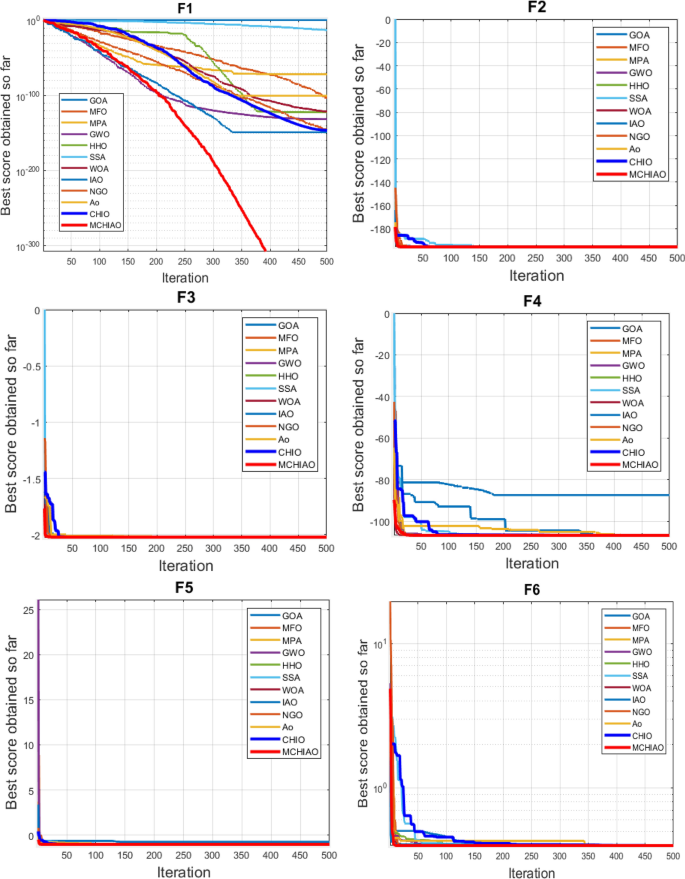

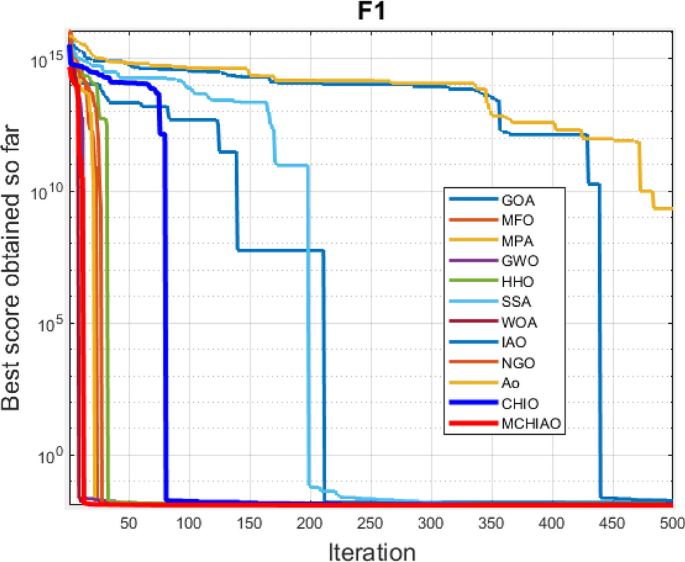

Experiments are conducted to compare NDWPSO with several other intelligent algorithms (WOA, HHO, GWO, AOA, EO, and DE). The test objects are the 23 benchmark functions, and the parameters of the NDWPSO algorithm are set the same as in the previous experiment. The maximum number of iterations is increased to 2000 to fully demonstrate the performance of each algorithm. Each algorithm is run 30 times independently. The parameters of the other intelligent algorithms are set as shown in Table 4 . To ensure a fair comparison, all parameters follow the original settings in the relevant algorithm literature. The experimental results are shown in Tables 5–8 and Figs. 20–36.

Evolution curve of NDWPSO and other algorithms for f1 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f2 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f3 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f4 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f5 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f6 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f7 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f8 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f9 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f10 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f11 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f12 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f13 (Dim = 30,50,100).

Evolution curve of NDWPSO and other algorithms for f14, f15, f16.

Evolution curve of NDWPSO and other algorithms for f17, f18, f19.

Evolution curve of NDWPSO and other algorithms for f20, f21, f22.

Evolution curve of NDWPSO and other algorithms for f23.

The experimental data of NDWPSO and other intelligent algorithms for handling 30, 50, and 100-dimensional benchmark functions ( \({f}_{1}-{f}_{13}\) ) are recorded in Tables 5 , 6 and 7 , respectively. The comparison data of the fixed-dimensional multimodal benchmark tests ( \({f}_{14}-{f}_{23}\) ) are recorded in Table 8 . According to the data in Tables 5 , 6 and 7 , the NDWPSO algorithm obtains 69.2%, 84.6%, and 84.6% of the best results for the benchmark functions ( \({f}_{1}-{f}_{13}\) ) in the three search-space dimensions (Dim = 30, 50, 100), respectively. In Table 8 , the NDWPSO algorithm obtains 80% of the optimal solutions for the 10 fixed-dimensional multimodal benchmark functions.

The convergence curves of each algorithm are shown in Figs. 20–36. The NDWPSO algorithm demonstrates two convergence behaviors when calculating the benchmark functions in 30, 50, and 100-dimensional search spaces. The first behavior is fast convergence within a small number of iterations at the beginning of the search. The reason is that the Iterative-mapping strategy and the dynamic-weight position update scheme are used in the NDWPSO algorithm. This scheme can quickly target the region of the search space where the global optimum is located and then precisely lock onto the optimal solution. This behavior is reflected in the convergence trends of the curves for functions \({f}_{1}-{f}_{4}\) and \({f}_{9}-{f}_{11}\) . The second behavior is that NDWPSO gradually improves the convergence accuracy and rapidly approaches the global optimum in the middle and late stages of the iteration. In this case the NDWPSO algorithm does not converge quickly in the early iterations, which may prevent the swarm from falling into a local optimum. This behavior is demonstrated by the convergence trends of the curves for functions \({f}_{6}\) , \({f}_{12}\) , and \({f}_{13}\) , and it also shows that the NDWPSO algorithm has an excellent local search ability.

Combining the experimental data with the convergence curves, it is concluded that the NDWPSO algorithm converges faster and that its effectiveness and global convergence are better than those of the other intelligent algorithms.

Experiments on classical engineering problems

Three constrained classical engineering design problems (welded beam design, pressure vessel design 43 , and three-bar truss design 38 ) are used to evaluate the NDWPSO algorithm. The experiments compare the NDWPSO algorithm with 5 other intelligent algorithms (WOA 36 , HHO, GWO, AOA, EO 41 ). Each algorithm is given the same maximum number of iterations and population size ( \({\text{Mk}}=500,\mathrm{ n}=40\) ) and is run 30 times independently. The parameters of the algorithms are set the same as in Table 4 . The experimental results of the three engineering design problems are recorded in Tables 9 , 10 and 11 in turn. The reported results are averages over the runs.

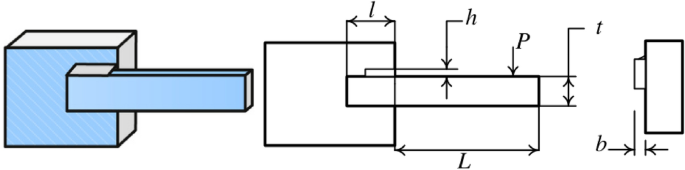

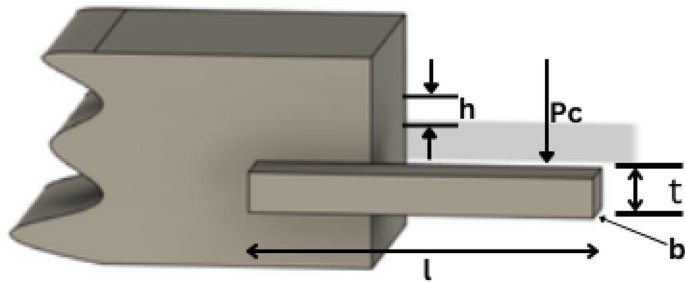

Welded beam design

The target of the welded beam design problem is to find the minimum manufacturing cost of the welded beam subject to the constraints, as shown in Fig. 37 . The design variables are the thickness of the weld seam ( \({\text{h}}\) ), the length of the clamped bar ( \({\text{l}}\) ), the height of the bar ( \({\text{t}}\) ) and the thickness of the bar ( \({\text{b}}\) ). The mathematical formulation of the optimization problem is given as follows:

Welded beam design.

In Table 9 , the NDWPSO, GWO, and EO algorithms obtain the best optimal cost. Besides, the standard deviation (SD) of NDWPSO is the lowest, which means that its results on the welded beam design problem are the most stable.

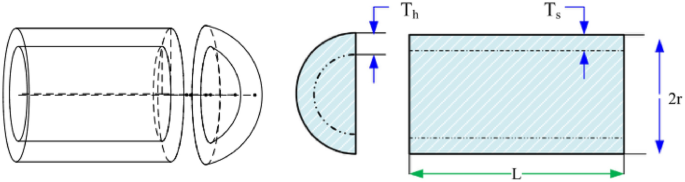

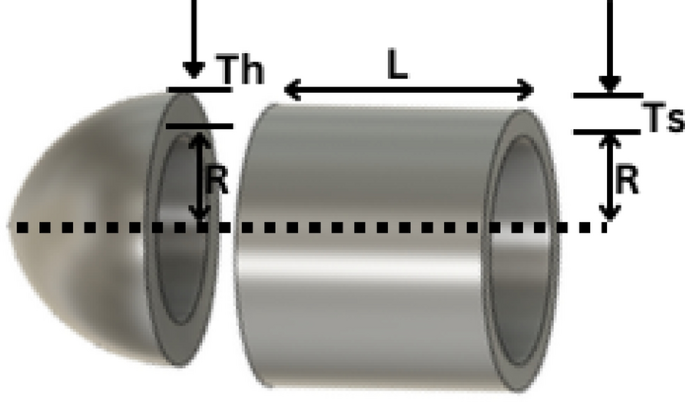

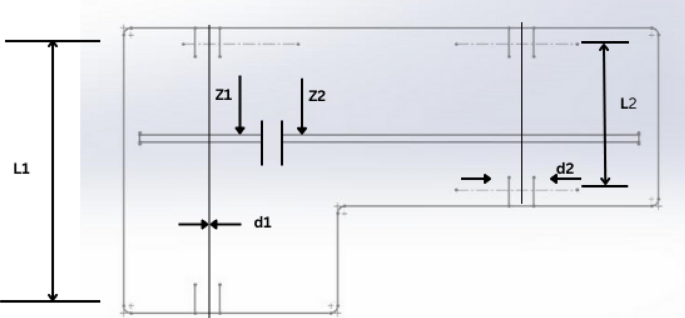

Pressure vessel design

Kannan and Kramer 43 proposed the pressure vessel design problem, shown in Fig. 38 , to minimize the total cost, including the cost of material, forming, and welding. There are four design variables: the thickness of the shell \({T}_{s}\) ; the thickness of the head \({T}_{h}\) ; the inner radius \({\text{R}}\) ; and the length of the cylindrical section without considering the head \({\text{L}}\) . The problem includes the objective function and constraints as follows:
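As a point of reference, the sketch below evaluates the objective and constraints of the pressure vessel problem in the form that is widely reproduced in the optimization literature. The coefficients are those of that commonly used statement and are assumed, not confirmed, to match the formulation used here; the function names are hypothetical.

```python
import math


def pressure_vessel_cost(ts, th, r, l):
    """Total cost (material, forming, welding) in the commonly used formulation."""
    return (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(ts, th, r, l):
    """Return [g1, g2, g3, g4]; a design is feasible when every value is <= 0."""
    return [
        -ts + 0.0193 * r,      # minimum shell thickness
        -th + 0.00954 * r,     # minimum head thickness
        -math.pi * r ** 2 * l - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0,  # volume
        l - 240.0,             # maximum length
    ]
```

An optimizer such as NDWPSO would typically combine the two, for example by adding a penalty to the cost whenever a constraint value is positive; the constraint-handling method is not described in this section.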

Pressure vessel design.

The results in Table 10 show that the NDWPSO algorithm obtains the lowest optimal cost with the same constraints and has the lowest standard deviation compared with other algorithms, which again proves the good performance of NDWPSO in terms of solution accuracy.

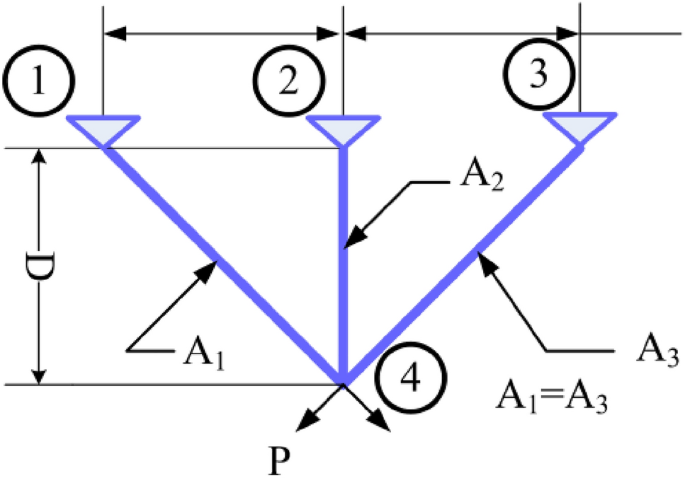

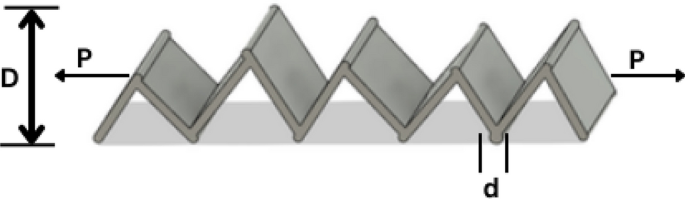

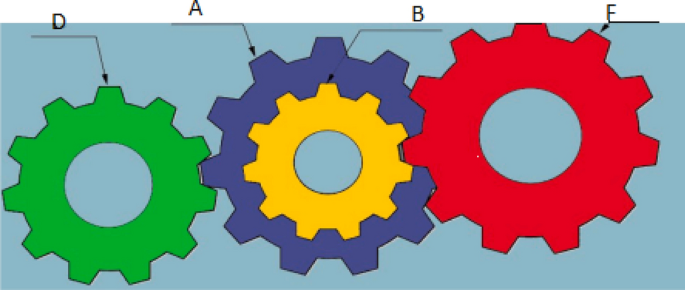

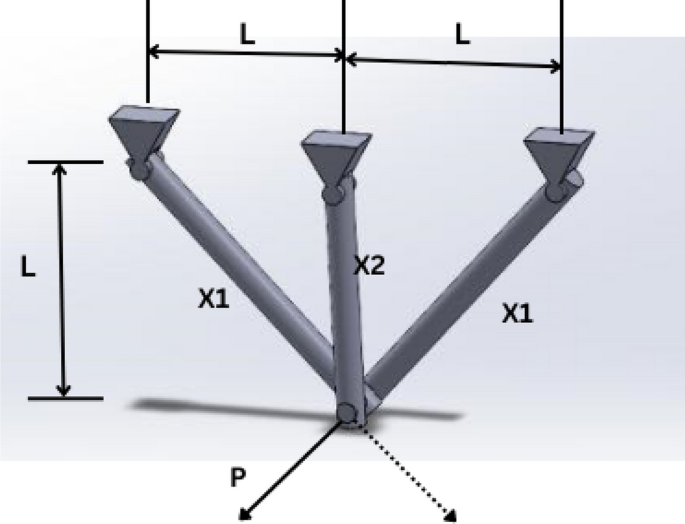

Three-bar truss design

This structural design problem 44 is one of the most widely used case studies, as shown in Fig. 39 . There are two main design parameters: the area of bars 1 and 3 ( \({A}_{1}={A}_{3}\) ) and the area of bar 2 ( \({A}_{2}\) ). The objective is to minimize the weight of the truss. This problem is subject to several constraints as well: stress, deflection, and buckling constraints. The problem is formulated as follows:
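The commonly used statement of the three-bar truss problem can be sketched as below. It uses three inequality constraints with bar length 100, load 2, and stress limit 2; these values and the function names are assumptions drawn from the standard benchmark rather than from this text.

```python
import math


def truss_weight(a1, a2, length=100.0):
    """Weight of the truss: two diagonal bars of area a1 and one vertical bar of area a2."""
    return (2.0 * math.sqrt(2.0) * a1 + a2) * length


def truss_constraints(a1, a2, load=2.0, stress_limit=2.0):
    """Return [g1, g2, g3]; a design is feasible when every value is <= 0."""
    root2 = math.sqrt(2.0)
    denom = root2 * a1 ** 2 + 2.0 * a1 * a2
    return [
        (root2 * a1 + a2) / denom * load - stress_limit,
        a2 / denom * load - stress_limit,
        1.0 / (a1 + root2 * a2) * load - stress_limit,
    ]
```

In this formulation both areas are usually restricted to the interval [0, 1], and the best known weight is roughly 263.9, attained where the first constraint is active.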

Three-bar truss design.

From Table 11 , NDWPSO obtains the best design solution for this engineering problem and has the smallest standard deviation of the results. In summary, NDWPSO achieves very competitive results compared to other intelligent algorithms.

Conclusions and future works

An improved algorithm named NDWPSO is proposed to increase the solving speed and improve the computational accuracy at the same time. The improved NDWPSO algorithm incorporates the search ideas of other intelligent algorithms (DE, WOA). In addition, several new hybrid strategies are proposed to adjust the components of the algorithm (such as the inertia weight parameter, the acceleration coefficients, the initialization scheme, the position updating equation, and so on).

Twenty-three classical benchmark functions, comprising indefinite-dimensional unimodal (f1–f7), indefinite-dimensional multimodal (f8–f13), and fixed-dimensional multimodal (f14–f23) functions, are applied to evaluate the effectiveness and feasibility of the NDWPSO algorithm. Firstly, NDWPSO is compared with PSO, CDWPSO, and SDWPSO. The simulation results demonstrate the exploitation, exploration, and local optima avoidance abilities of NDWPSO. Secondly, the NDWPSO algorithm is compared with 6 other intelligent algorithms (WOA, HHO, GWO, AOA, EO, and DE) and again shows better performance. Finally, 3 classical engineering problems are applied to show that the NDWPSO algorithm yields superior results compared to other algorithms on constrained engineering optimization problems.

Although the proposed NDWPSO is superior in many computational aspects, it still has some limitations, and further improvements are needed. NDWPSO performs a bounded initialization of each particle through the “elite opposition-based learning” strategy, which takes additional computation time before the velocity update. Besides, the “local optimal jump-out” strategy also introduces additional randomness. How to reduce this randomness and how to improve the efficiency of the initialization are issues that need further study. In future work, researchers will try to apply the NDWPSO algorithm to wider fields to solve more complex and diverse optimization problems.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Acknowledgements

This work was supported by Key R&D plan of Shandong Province, China (2021CXGC010207, 2023CXGC01020); First batch of talent research projects of Qilu University of Technology in 2023 (2023RCKY116); Introduction of urgently needed talent projects in Key Supported Regions of Shandong Province; Key Projects of Natural Science Foundation of Shandong Province (ZR2020ME116); the Innovation Ability Improvement Project for Technology-based Small- and Medium-sized Enterprises of Shandong Province (2022TSGC2051, 2023TSGC0024, 2023TSGC0931); National Key R&D Program of China (2019YFB1705002), LiaoNing Revitalization Talents Program (XLYC2002041) and Young Innovative Talents Introduction & Cultivation Program for Colleges and Universities of Shandong Province (Granted by Department of Education of Shandong Province, Sub-Title: Innovative Research Team of High Performance Integrated Device).

Author information

Authors and affiliations.

School of Mechanical and Automotive Engineering, Qilu University of Technology (Shandong Academy of Sciences), Jinan, 250353, China

Jinwei Qiao, Guangyuan Wang, Zhi Yang, Jun Chen & Pengbo Liu

Shandong Institute of Mechanical Design and Research, Jinan, 250353, China

School of Information Science and Engineering, Northeastern University, Shenyang, 110819, China

Xiaochuan Luo

Fushun Supervision Inspection Institute for Special Equipment, Fushun, 113000, China

Contributions

Z.Y., J.Q., and G.W. wrote the main manuscript text and prepared all figures and tables. J.C., P.L., K.L., and X.L. were responsible for the data curation and software. All authors reviewed the manuscript.

Corresponding author

Correspondence to Zhi Yang .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Qiao, J., Wang, G., Yang, Z. et al. A hybrid particle swarm optimization algorithm for solving engineering problem. Sci Rep 14 , 8357 (2024). https://doi.org/10.1038/s41598-024-59034-2

Received : 11 January 2024

Accepted : 05 April 2024

Published : 10 April 2024

DOI : https://doi.org/10.1038/s41598-024-59034-2

- Particle swarm optimization

- Elite opposition-based learning

- Iterative mapping

- Convergence analysis

MCHIAO: a modified coronavirus herd immunity-Aquila optimization algorithm based on chaotic behavior for solving engineering problems

- Original Article

- Open access

- Published: 20 April 2024

- Heba Selim 1 ,

- Amira Y. Haikal 1 ,

- Labib M. Labib 1 &

- Mahmoud M. Saafan ORCID: orcid.org/0000-0002-9279-1537 1

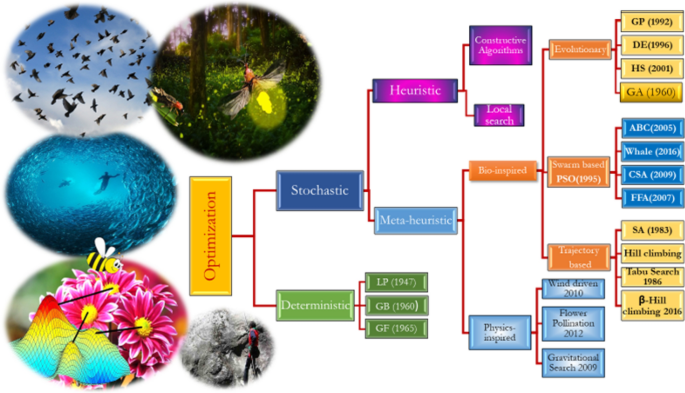

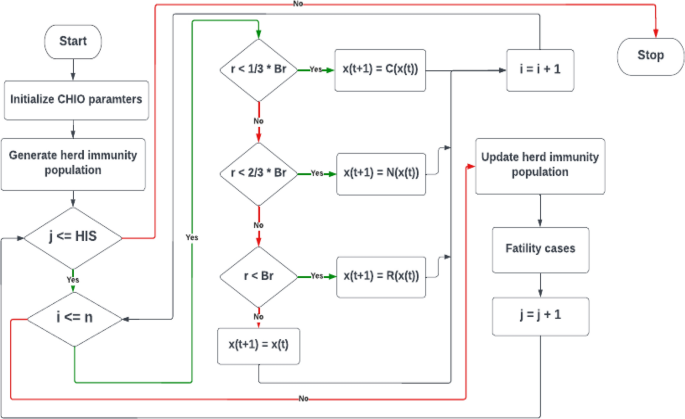

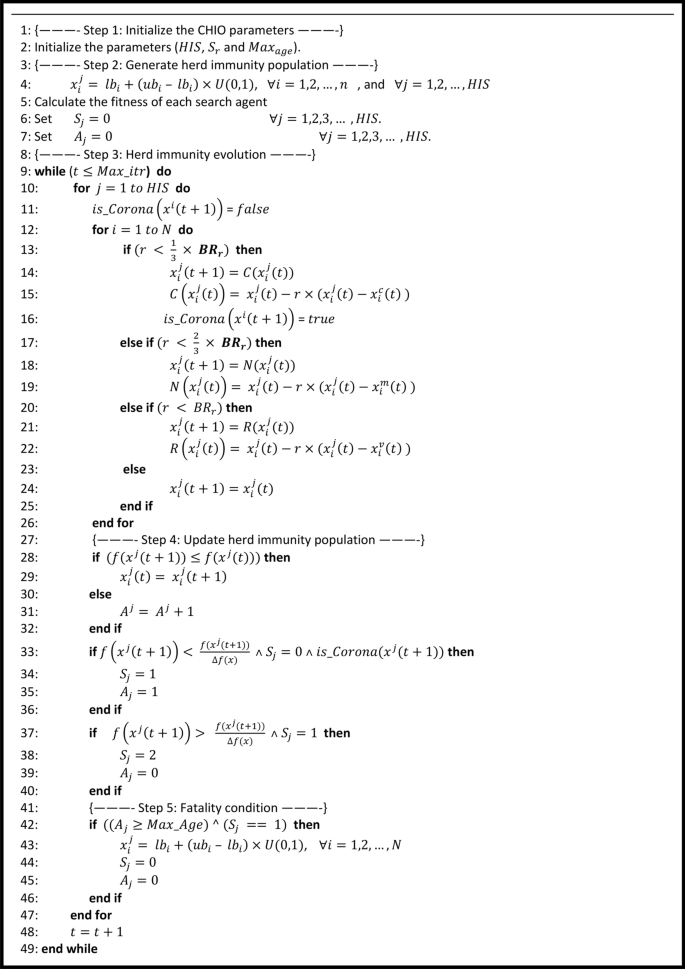

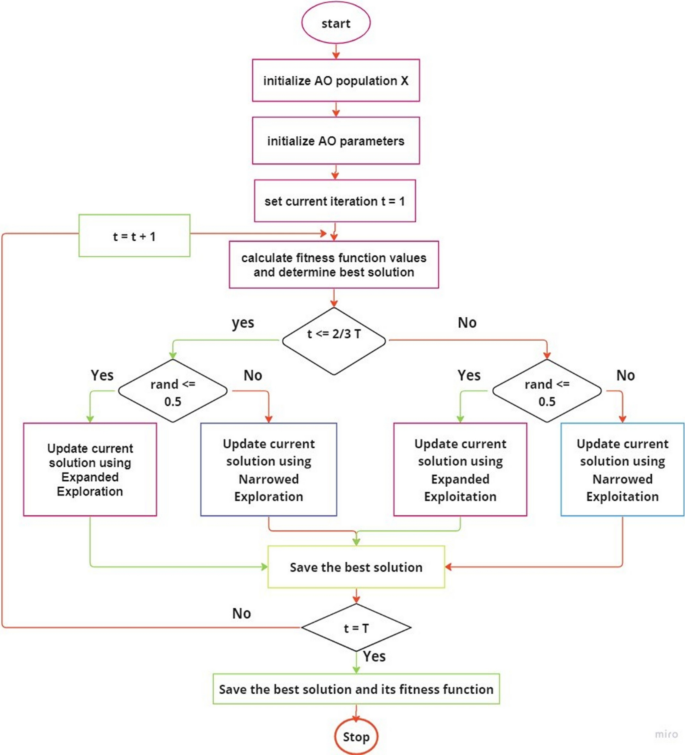

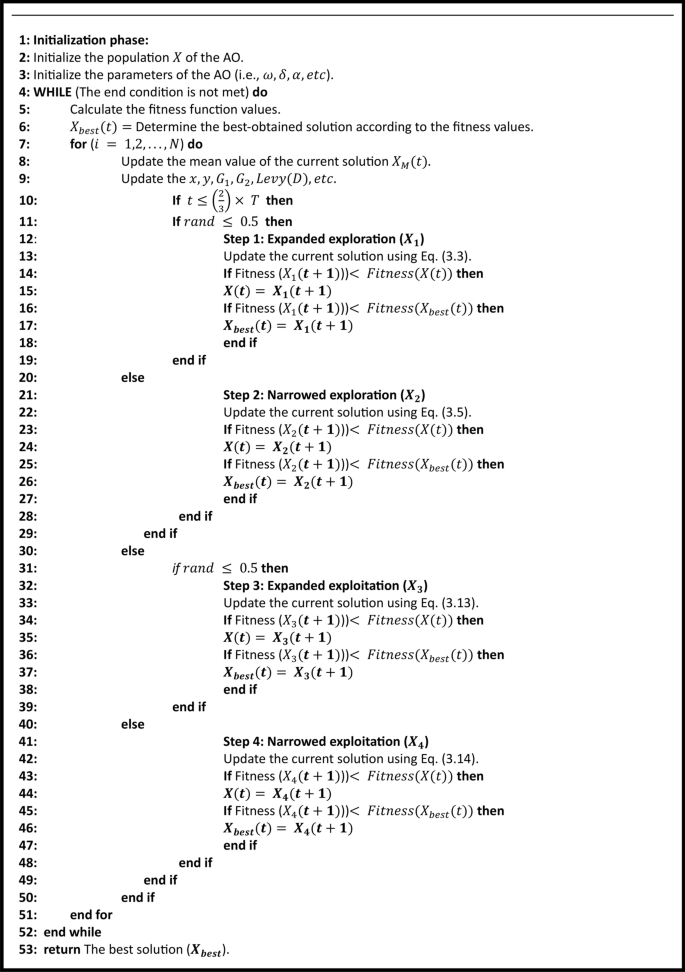

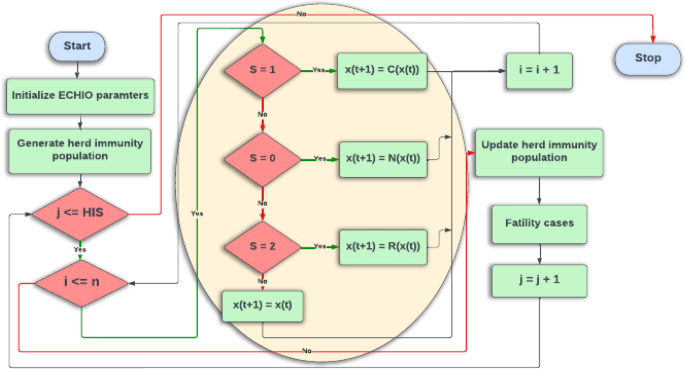

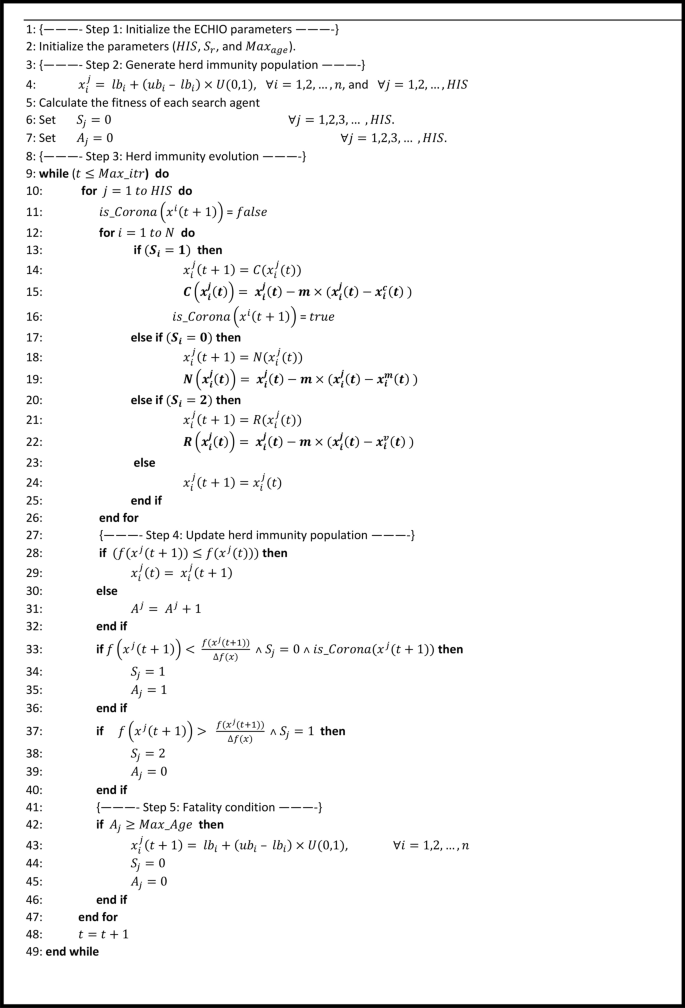

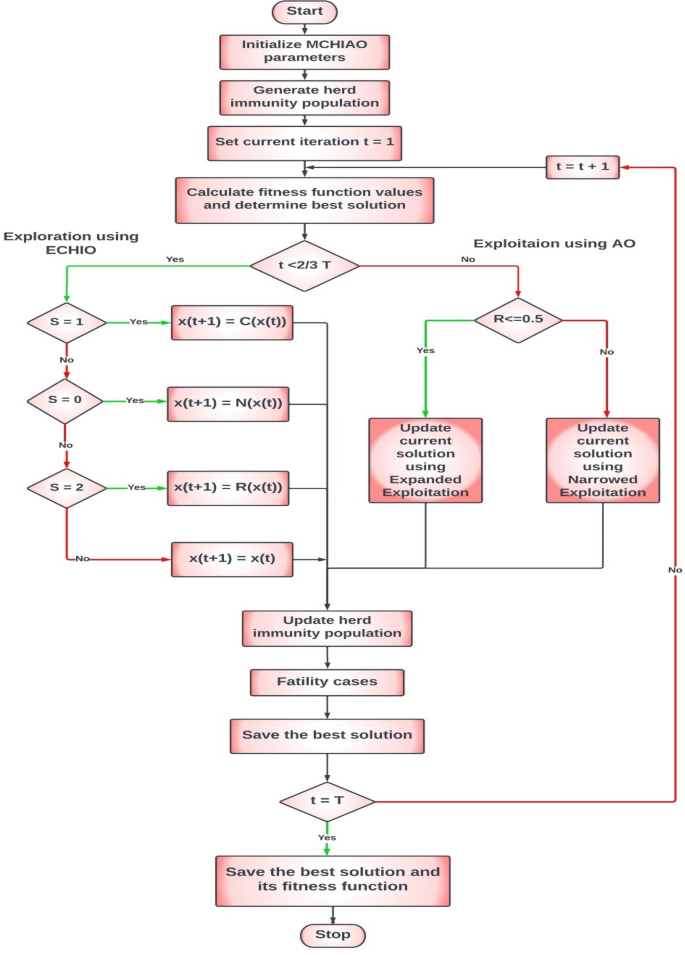

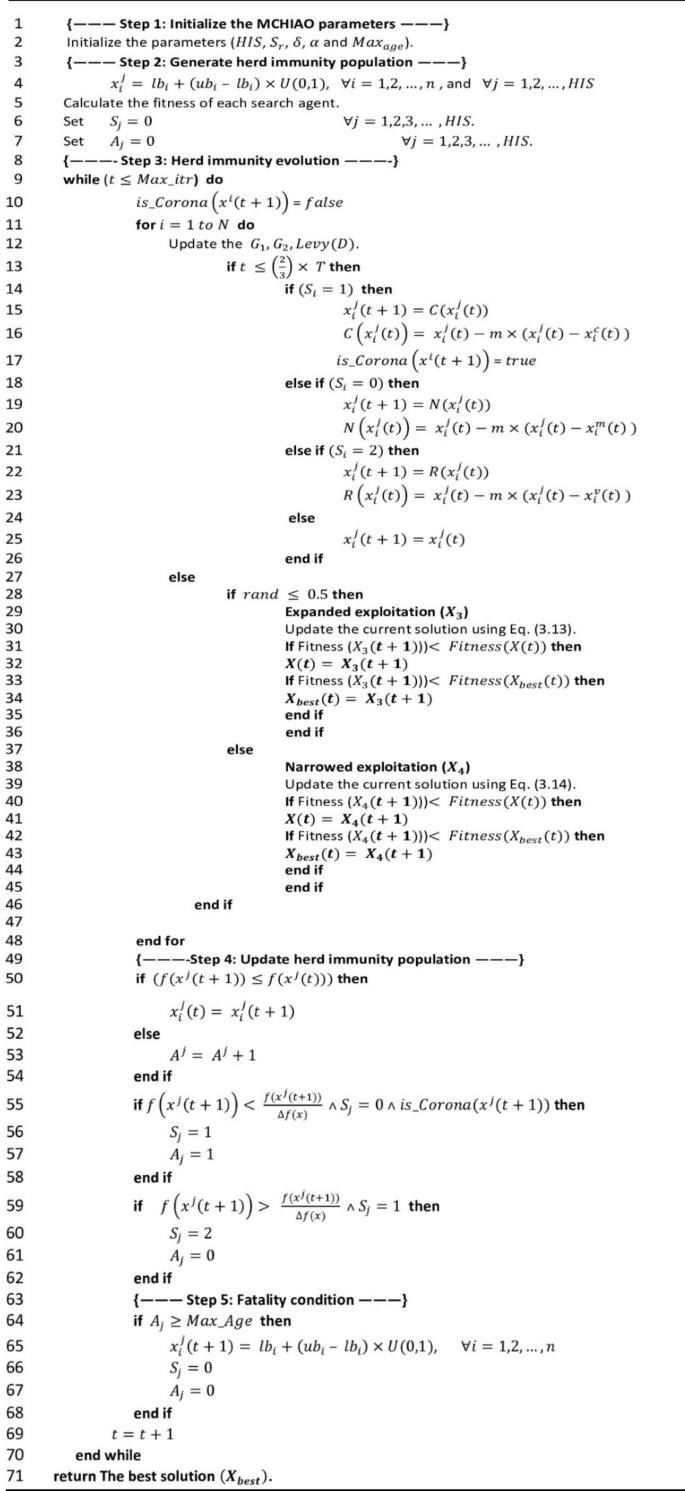

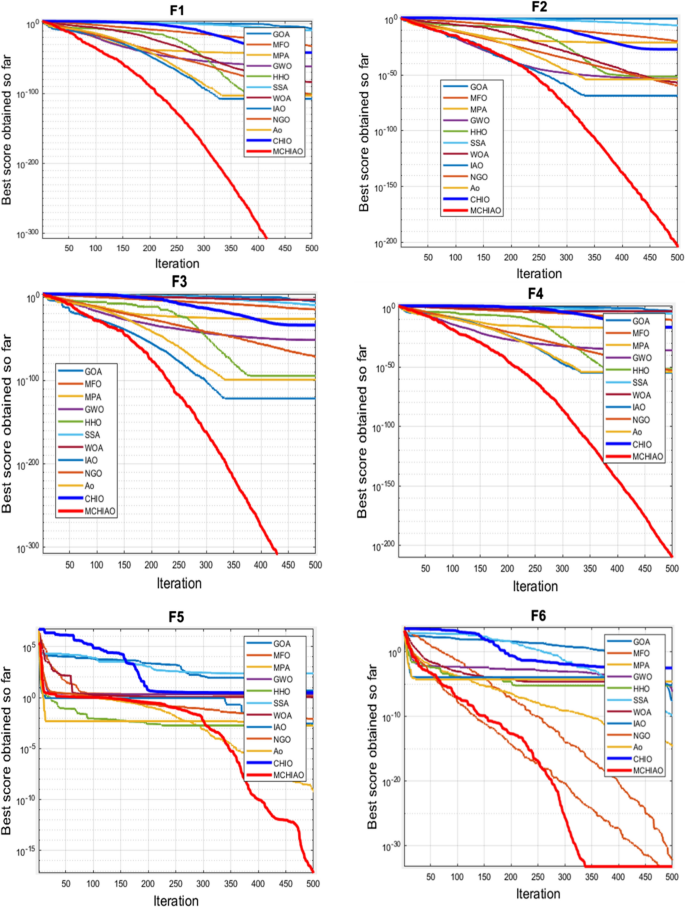

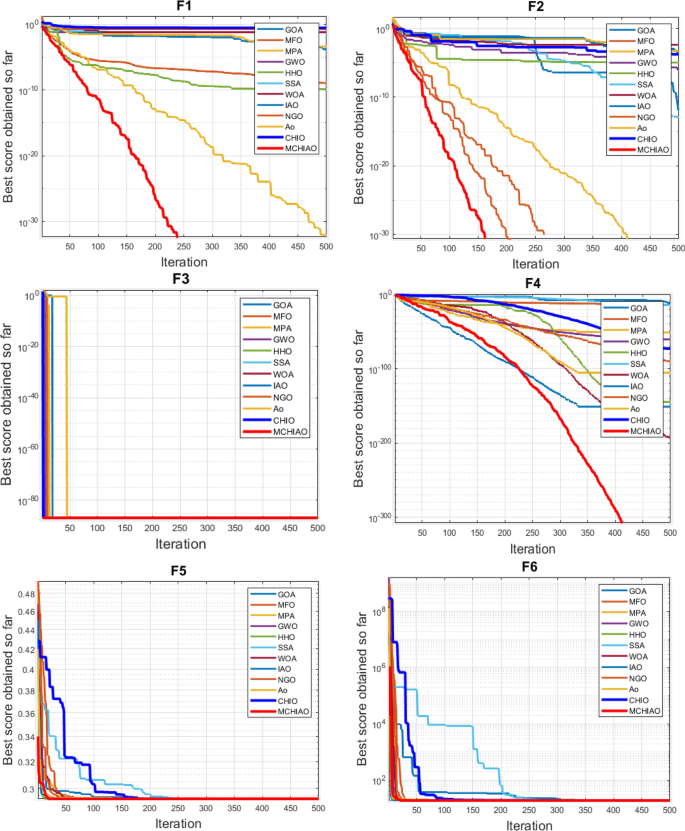

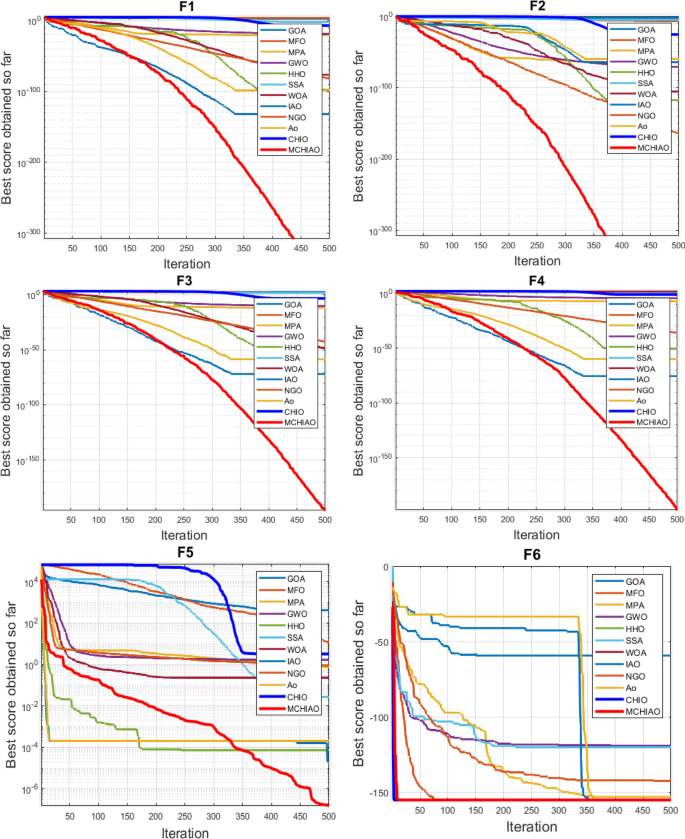

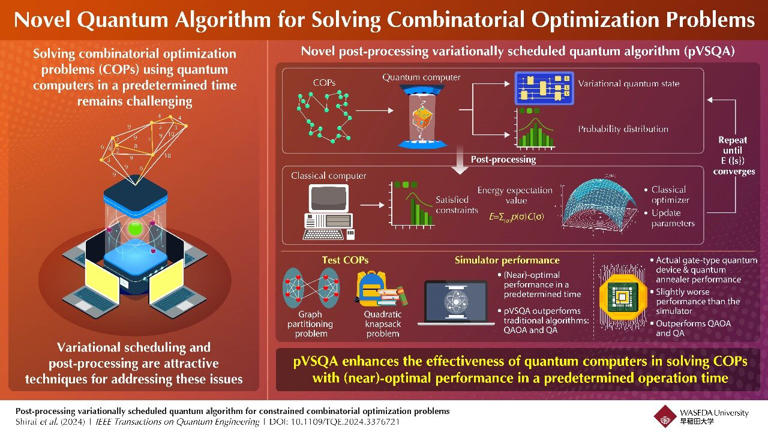

This paper proposes a hybrid Modified Coronavirus Herd Immunity Aquila Optimization Algorithm (MCHIAO) that combines the Enhanced Coronavirus Herd Immunity Optimizer (ECHIO) algorithm and the Aquila Optimizer (AO). As one of the competitive human-based optimization algorithms, the Coronavirus Herd Immunity Optimizer (CHIO) outperforms some other biologically inspired algorithms. However, CHIO gets trapped in local optima, and its accuracy decreases on large-scale global optimization problems. AO, on the other hand, has significant local exploitation capabilities but insufficient global exploration capabilities. The novel metaheuristic optimizer MCHIAO is therefore presented to overcome these restrictions and is adapted to solve feature selection challenges. It introduces three main enhancements to reach better results: categorizing cases, enhancing the new genes' value equation using a chaotic system inspired by the chaotic behavior of the coronavirus, and generating a new formula to switch between expanded and narrowed exploitation. MCHIAO demonstrates its worth against ten well-known state-of-the-art optimization algorithms (GOA, MFO, MPA, GWO, HHO, SSA, WOA, IAO, NOA, NGO) in addition to AO and CHIO. Friedman average rank and Wilcoxon statistical analysis (p-value) are conducted on all state-of-the-art algorithms over 23 benchmark functions. The Wilcoxon and Friedman tests are also conducted on the 29 CEC2017 functions, and some statistical tests are conducted on the 10 CEC2019 benchmark functions. Six real-world problems are used to validate the proposed MCHIAO against the same twelve state-of-the-art algorithms. The exploitative and explorative behavior of the hybrid algorithm is evaluated on classical functions, including 24 unimodal and 44 multimodal functions, respectively. The statistical significance of the proposed technique for all functions is demonstrated by the p-values calculated using the Wilcoxon rank-sum test, which are found to be less than 0.05.
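To show how a Wilcoxon rank-sum p-value is compared against the 0.05 threshold, here is a minimal sketch of the two-sided test using the normal approximation. The function name `rank_sum_test` is hypothetical, the variance has no tie correction (a simplifying assumption), and in practice a statistics library would normally be used instead.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (z, p). No tie correction is applied to the variance.
    """
    # Pool both samples, remembering which group each value came from.
    combined = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    # Assign 1-based ranks, giving tied values their average rank.
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    # Rank sum of the first sample and its mean and spread under the null.
    w = sum(r for r, (_, g) in zip(ranks, combined) if g == 0)
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean) / sd
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p
```

In a comparison of two optimizers, `a` and `b` would hold the final objective values from independent runs of each algorithm on one benchmark function; a p-value below 0.05 is then read as a statistically significant difference between the two sets of results.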

1 Introduction

Engineering presents a large number of optimization challenges, for which optimization methods are required [1]. Optimization algorithms help us in a variety of ways in our daily lives: they can be used to reduce expenses and faults in any system or to increase profits in a financial firm. They can be classified into two main categories: deterministic algorithms and stochastic algorithms.