Research LLM

Osgoode’s Research LLM is a full-time, research-intensive program that is ideal for students who want to pursue a specific area of legal study in depth, including those who are considering a PhD. Students conduct their research under the supervision of an Osgoode faculty member .

The Research LLM does not qualify students to practise law in Canada. Students interested in practising law should review the licencing rules of the Law Society of the province in which they intend to practice.

Program Requirements

Graduate seminar i: legal research (gs law 6610).

- One study group

- Elective courses

- A major written research work (thesis or major research paper)

The Graduate Seminar is the core course for the Graduate Program in Law. Designed to complement other courses, the seminar provides a venue for developing critical assessments of the law and facilitating students’ progress on their own research, papers and dissertation proposals. The seminar also provides students with an intellectual community and introduces them to Osgoode research resources.

One Study Group

Students participating in study groups read and discuss a significant number of articles with their groups each week. The groups are not structured as courses but as venues for reflection and discourse. LLM students must participate in one study group. They can choose among five options, depending on their research interests:

- Regulation and Governance

- Law and Economic Relations

- Theoretical Perspectives in Legal Research

- Law and Social Justice

- Law in a Global Context

Elective Courses

Research LLM students can fulfil their elective courses through:

- a variety of graduate courses in law

- integrated courses with the JD program

- independent study

- courses in other programs

Major Written Research Work

A major paper is at the core of the Research LLM program. Most students complete a thesis, but students may also choose to submit a major research paper and complete additional coursework.

All theses and major research papers should contain an analysis of scholarship on the student’s chosen topic and the results of the student’s research – based on primary sources – in the form of a sustained argument. They should have standard scholarly apparatus, footnotes and a bibliography, prepared in accordance with the McGill Guide to Legal Citations.

Thesis Option

Major Research Paper (MRP) Option

100-125 pages

60-70 pages

Additional elective courses required to complete the LLM

Evaluation and defence

Students must succeed in an oral defence of their thesis before an examination committee.

MRPs are evaluated by the student’s supervisor and by one other member of the Graduate Program chosen by the supervisor in consultation with the Graduate Program Director. In exceptional circumstances, the second examiner may be a member of another Graduate Program at York University or another university.

Additional notes

Some students choose to fulfill the program’s thesis requirement with a Portfolio Thesis: one or two published articles (depending on length and scope) developed during their time in the Osgoode graduate degree, submitted in lieu of a traditional thesis.

The MRP is an original piece of scholarly work equivalent to an article of publishable quality for a reputable law journal. It’s typically more substantial than a research paper for a regular course, but less substantial than a thesis.

Additional Courses

Students entering the Research LLM without an LLB or JD may be required to take additional courses on the advice of their supervisor. Completing this extra coursework during their program can be helpful to students whose research relates to fields of law in which they do not have extensive background. The Graduate Program Director determines whether students must pursue additional courses in order to fulfill the requirements of the LLM.

Time to Completion

Both the Thesis and MRP options should be completed in three or four terms. Generally, students take courses in the fall and winter terms, conduct their research in the winter term and write the Thesis or MRP in the summer term. Graduate students must register in each term (fall, winter, summer) from the start of their program to completion.

Residency Requirement

Students must be located such that they are able to progress on all program requirements requiring geographical availability on campus.

More Detail:

Faculty research advisors, related topics:, funding and fees, intellectual life, meet our current doctoral students.

LL.M. Program

5005 Wasserstein Hall (WCC) 1585 Massachusetts Avenue Cambridge , MA 02138

The LL.M. (Master of Laws) program is a one-year degree program that typically includes 180 students from some 65 countries. The Graduate Program is interested in attracting intellectually curious and thoughtful candidates from a variety of legal systems and backgrounds and with various career plans. Harvard’s LL.M. students include lawyers working in firms, government officials, law professors, judges, diplomats, human rights activists, doctoral students, business people, and others. The diversity of the participants in the LL.M. program contributes significantly to the educational experience of all students at the School.

LL.M. Degree Overview

Ll.m. degree requirements, academic resources, ll.m. class profile, modal gallery, gallery block modal gallery.

Support NYU Law

- Graduate Affairs

- Research & Writing

LLM Research and Writing Options

Working as a research assistant for a law school professor.

Faculty members may offer students the opportunity to work as research assistants (RAs) for monetary compensation or, if the professor deems it appropriate based on the nature of the work, for academic credit. For details, review information on serving as a research assistant for faculty .

Directed Research

To undertake Directed Research, students contact individual instructors and agree on a research project. To register, a written proposal must be approved and signed by the instructor, and then submitted to the Office of Graduate Affairs. The written proposal should be at least 1000 words and describe the subject matter of the Directed Research and the issues the student intends to explore in the paper. While any full-time faculty member or visiting faculty member may supervise the research, Adjunct Professors may supervise only with the permission of Vice Dean Hertz.

Directed Research credit may be added through Monday, October 2 for Fall 2023, and Monday, February 5 for Spring 2024.

The usual allocation for Directed Research is two credits. A student may write a one-credit Directed Research. A two-credit Directed Research project should conform to the r equirements for an Option A paper ; a one-credit Directed Research paper should be at least 5,000 words, exclusive of footnotes. A three-credit Directed Research project is highly unusual and requires the approval of Vice Dean Randy Hertz. Students considering a 3-credit Directed Research should contact the Office of Graduate Affairs to discuss.

For non-tax students no more than four of a student's 24 credits may consist of directed research. Tax students may take a maximum of two credits of directed research. Regardless of the type of project involved, students are, of course, expected to submit original, non-duplicative work. When in doubt about proper use of a citation or quotation, discuss the issue with the instructor. Plagiarism is a serious offense that may merit severe discipline. Requests to add Directed Research after the deadline stated above require approval of Vice Dean Hertz. Such requests should be initiated by contacting the Office of Graduate Affairs and will only be considered if your credit load (not including the Directed Research credits) does not drop below minimum requirements after the add/drop period. Students who are granted permission to late-add Directed Research will not be permitted to drop courses if the result is inconsistent with the above; please plan your schedule accordingly. After March 15, the Vice Dean may allow a student to add Directed Research only in exceptional circumstances. No more than two credits can be earned in this manner.

Read further about Requirements for Directed Research

Directed Research During the Summer Semester

Students may register for Directed Research during the summer semester. The summer registration deadlines is July 1, unless there is approval by the Vice Dean to add at a later date. Please note that full-time students will be charged per credit for Directed Research during the summer. All work must be submitted by September 1 or by an earlier deadline established by the supervising faculty member.

Writing Credit

In seminars, colloquia, and courses that offer the option to add an additional writing credit, students may earn one credit for writing a substantial paper (at least 10,000 words in length exclusive of footnotes). To earn the additional credit, students must register for the writing credit section of the course within the same semester the course is offered. The deadline for registering is Monday, October 2 for Fall 2023, and Monday, February 5 for Spring 2024.

LLM Thesis Option

LLM students have the option to write a substantial research paper, in conjunction with a seminar or Directed Research that may be recorded as a "thesis" on their transcript. At the onset of the seminar or Directed Research, the student must obtain approval from the professor that the paper will be completed for a "thesis" designation.

It should be substantial in length (at least 10,000 words exclusive of footnotes) and, like the substantial writing requirement for JD students, must be analytical rather than descriptive in nature, showing original thought and analysis. Please note the thesis designation is for a single research paper agreed upon in advance.

The student is required to submit an outline and at least one FULL PRE-FINAL draft to the faculty member in order to receive the thesis notation. When submitting a final draft of the thesis to the faculty member, the student must give the faculty member an LLM Thesis Certification form . The faculty member is required to return the signed form to the Office of Records and Registration when submitting a grade for the course.

Please note that the student will not receive additional credit for writing the thesis, but will only receive credit for the seminar or Directed Research for which he or she is registered.

International Legal Studies Students should review their program requirements for further information about writing an LLM thesis within their program.

Writing Assistance

Writing resources.

- Guide to Writing

- (excellent guide to legal writing generally)

- So You Want to Write a Research Paper...

- (Recording with Prof. Jose Alvarez)

- So You Want to Write About International Law...

- Some Thoughts on Writing by Barry Friedman (PDF: 106 KB)

- NYU Law Library Guide: Researching and Writing a Law Review Note or Seminar Paper

- NYU Law Library Research Guides

- Why Write a Student Note

© 2024 New York University School of Law. 40 Washington Sq. South, New York, NY 10012. Tel. (212) 998-6100

- Schools & departments

Law LLM by Research

Awards: LLM by Research

Study modes: Full-time, Part-time

Funding opportunities

Programme website: Law

Upcoming Introduction to Postgraduate Study and Research events

Join us online on the 19th June or 26th June to learn more about studying and researching at Edinburgh.

Choose your event and register

Research profile

The Edinburgh Law School is a vibrant, collegial and enriching community of legal, sociolegal and criminology researchers and offers an excellent setting for doctoral research.

We are ranked 3rd in the UK for law for the quality and breadth of our research by Research Professional, based on the 2021 Research Excellence Framework (REF2021).

Our doctoral researchers are key to the School’s research activities and we work hard to ensure that they are fully engaged with staff and projects across all of our legal disciplines.

You will find opportunities in the following fields:

- company and commercial law

- comparative law

- constitutional and administrative law

- criminal law

- criminology and criminal justice

- environmental law

- European law, policy and institutions

- European private law

- evidence and procedure

- gender and sexuality

- human rights law

- information technology law

- intellectual property law

- international law

- legal theory

- medical law and ethics

- obligations

- contract delict

- unjustified enrichment

- property, trusts and successions

- Roman law and legal history

- socio-legal studies

Programme structure

The framework of the LLM by Research allows you time and intellectual space to work in your chosen field, and to refine and develop this initial phase of the project for future doctoral work.

The programme does not have formal coursework elements, other than initial training seminars alongside PhD students.

This makes the LLM by Research a particularly attractive option for those wishing to undertake postgraduate research on a part-time basis, while pursuing legal practice or other employment.

Training and support

Postgraduate researchers enjoy full access to the University’s research skills training which the Law School complements with a tailored research and wider skills programme.

The training programme in Semester One (six seminars) includes workshops on research design, writing and research ethics.

- Find out more about training and support on the LLM by Research

Postgraduate researchers are able to draw upon a fantastic range of resources and facilities to support their research.

The Law School has one of the most significant academic law libraries in the UK which offers outstanding digital resources alongside a world-leading print collection (almost 60,000 items including a unique collection for Scots law research).

You will also have access to the University’s Main Library which has one of the largest and most important collections in Britain, as well as the legal collection of the National Library of Scotland.

Entry requirements

These entry requirements are for the 2024/25 academic year and requirements for future academic years may differ. Entry requirements for the 2025/26 academic year will be published on 1 Oct 2024.

A UK 2:1 honours degree, or its international equivalent, in law, or a social science subject.

Entry to this programme is competitive. Meeting minimum requirements for consideration does not guarantee an offer of study.

International qualifications

Check whether your international qualifications meet our general entry requirements:

- Entry requirements by country

- English language requirements

Regardless of your nationality or country of residence, you must demonstrate a level of English language competency at a level that will enable you to succeed in your studies.

English language tests

We accept the following English language qualifications at the grades specified:

- IELTS Academic: total 7.0 with at least 7.0 in writing and 6.5 in all other components. We do not accept IELTS One Skill Retake to meet our English language requirements.

- TOEFL-iBT (including Home Edition): total 100 with at least 25 in writing and 23 in all other components.

- C1 Advanced ( CAE ) / C2 Proficiency ( CPE ): total 185 with at least 185 in writing and 176 in all other components.

- Trinity ISE : ISE III with passes in all four components.

- PTE Academic: total 70 with at least 70 in writing and 62 in all other components.

Your English language qualification must be no more than three and a half years old from the start date of the programme you are applying to study, unless you are using IELTS , TOEFL, Trinity ISE or PTE , in which case it must be no more than two years old.

Degrees taught and assessed in English

We also accept an undergraduate or postgraduate degree that has been taught and assessed in English in a majority English speaking country, as defined by UK Visas and Immigration:

- UKVI list of majority English speaking countries

We also accept a degree that has been taught and assessed in English from a university on our list of approved universities in non-majority English speaking countries (non-MESC).

- Approved universities in non-MESC

If you are not a national of a majority English speaking country, then your degree must be no more than five years old* at the beginning of your programme of study. (*Revised 05 March 2024 to extend degree validity to five years.)

Find out more about our language requirements:

Fees and costs

Scholarships and funding, featured funding.

* School of Law funding opportunities

Other funding opportunities

Search for scholarships and funding opportunities:

- Search for funding

Further information

- Postgraduate Research Office

- Phone: +44 (0)131 650 2022

- Contact: [email protected]

- School of Law (Postgraduate Research Office)

- Old College

- South Bridge

- Central Campus

- Programme: Law

- School: Law

- College: Arts, Humanities & Social Sciences

Select your programme and preferred start date to begin your application.

LLM by Research Law - 1 Year (Full-time)

Llm by research law - 2 years (part-time), application deadlines.

We encourage you to apply at least one month prior to entry so that we have enough time to process your application. If you are also applying for funding or will require a visa then we strongly recommend you apply as early as possible.

- How to apply

You must submit two references with your application.

Find out more about the general application process for postgraduate programmes:

What’s next in large language model (LLM) research? Here’s what’s coming down the ML pike

- Share on Facebook

- Share on LinkedIn

Join us in returning to NYC on June 5th to collaborate with executive leaders in exploring comprehensive methods for auditing AI models regarding bias, performance, and ethical compliance across diverse organizations. Find out how you can attend here .

There is a lot of excitement around the potential applications of large language models ( LLM ). We’re already seeing LLMs used in several applications, including composing emails and generating software code.

But as interest in LLMs grows, so do concerns about their limits; this can make it difficult to use them in different applications. Some of these include hallucinating false facts, failing at tasks that require commonsense and consuming large amounts of energy.

Here are some of the research areas that can help address these problems and make LLMs available to more domains in the future.

Knowledge retrieval

One of the key problems with LLMs such as ChatGPT and GPT-3 is their tendency to “hallucinate.” These models are trained to generate text that is plausible, not grounded in real facts. This is why they can make up stuff that never happened. Since the release of ChatGPT, many users have pointed out how the model can be prodded into generating text that sounds convincing but is factually incorrect.

The AI Impact Tour: The AI Audit

Join us as we return to NYC on June 5th to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

One method that can help address this problem is a class of techniques known as “knowledge retrieval.” The basic idea behind knowledge retrieval is to provide the LLM with extra context from an external knowledge source such as Wikipedia or a domain-specific knowledge base.

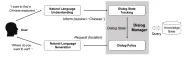

Google introduced “retrieval-augmented language model pre-training” ( REALM ) in 2020. When a user provides a prompt to the model, a “neural retriever” module uses the prompt to retrieve relevant documents from a knowledge corpus. The documents and the original prompt are then passed to the LLM, which generates the final output within the context of the knowledge documents.

Work on knowledge retrieval continues to make progress. Recently, AI21 Labs presented “in-context retrieval augmented language modeling,” a technique that makes it easy to implement knowledge retrieval in different black-box and open-source LLMs.

You can also see knowledge retrieval at work in You.com and the version of ChatGPT used in Bing. After receiving the prompt, the LLM first creates a search query, then retrieves documents and generates its output using those sources. It also provides links to the sources, which is very useful for verifying the information that the model produces. Knowledge retrieval is not a perfect solution and still makes mistakes. But it seems to be one step in the right direction.

Better prompt engineering techniques

Despite their impressive results, LLMs do not understand language and the world — at least not in the way that humans do. Therefore, there will always be instances where they will behave unexpectedly and make mistakes that seem dumb to humans.

One way to address this challenge is “prompt engineering,” a set of techniques for crafting prompts that guide LLMs to produce more reliable output. Some prompt engineering methods involve creating “few-shot learning” examples, where you prepend your prompt with a few similar examples and the desired output. The model uses these examples as guides when producing its output. By creating datasets of few-shot examples, companies can improve the performance of LLMs without the need to retrain or fine-tune them.

Another interesting line of work is “chain-of-thought (COT) prompting,” a series of prompt engineering techniques that enable the model to produce not just an answer but also the steps it uses to reach it. CoT prompting is especially useful for applications that require logical reasoning or step-by-step computation.

There are different CoT methods, including a few-shot technique that prepends the prompt with a few examples of step-by-step solutions. Another method, zero-shot CoT , uses a trigger phrase to force the LLM to produce the steps it reaches the result. And a more recent technique called “ faithful chain-of-thought reasoning ” uses multiple steps and tools to ensure that the LLM’s output is an accurate reflection of the steps it uses to reach the results.

Reasoning and logic are among the fundamental challenges of deep learning that might require new architectures and approaches to AI. But for the moment, better prompting techniques can help reduce the logical errors LLMs make and help troubleshoot their mistakes.

Alignment and fine-tuning techniques

Fine-tuning LLMs with application-specific datasets will improve their robustness and performance in those domains. Fine-tuning is especially useful when an LLM like GPT-3 is deployed in a specialized domain where a general-purpose model would perform poorly.

New fine-tuning techniques can further improve the accuracy of models. Of note is “reinforcement learning from human feedback” ( RLHF ), the technique used to train ChatGPT. In RLHF, human annotators vote on the answers of a pre-trained LLM. Their feedback is then used to train a reward system that further fine-tunes the LLM to become better aligned with user intents. RLHF worked very well for ChatGPT and is the reason that it is so much better than its predecessors in following user instructions.

The next step for the field will be for OpenAI, Microsoft and other providers of LLM platforms to create tools that enable companies to create their own RLHF pipelines and customize models for their applications.

Optimized LLMs

One of the big problems with LLMs is their prohibitive costs. Training and running a model the size of GPT-3 and ChatGPT can be so expensive that it will make them unavailable for certain companies and applications.

There are several efforts to reduce the costs of LLMs. Some of them are centered around creating more efficient hardware, such as special AI processors designed for LLMs.

Another interesting direction is the development of new LLMs that can match the performance of larger models with fewer parameters. One example is LLaMA , a family of small, high-performance LLMs developed by Facebook. LLaMa models are accessible for research labs and organizations that don’t have the infrastructure to run very large models.

According to Facebook, the 13-billion parameter version of LLaMa outperforms the 175-billion parameter version of GPT-3 on major benchmarks, and the 65-billion variant matches the performance of the largest models, including the 540-billion parameter PaLM.

While LLMs have many more challenges to overcome, it will be interesting how these developments will help make them more reliable and accessible to the developer and research community.

Stay in the know! Get the latest news in your inbox daily

By subscribing, you agree to VentureBeat's Terms of Service.

Thanks for subscribing. Check out more VB newsletters here .

An error occured.

Study Postgraduate

Llm by research (law) (2024 entry).

Course code

30 September 2024

1 year full-time; 2 years part-time

Qualification

- LLM by Research

University of Warwick

Find out more about our Law LLM by Research degree.

The University of Warwick's Law School offers a comprehensive LLM by Research. Pursue an extended research project in a wide range of areas, with careful supervision from a specialist.

Course overview

In this programme you will be carefully supervised by an individual specialist in your chosen area of study and supported to generate a research question and produce a thesis. For this degree you are required to write a thesis of up to 40,000 words.

Our Research Degrees attempt to achieve a balance between individual study, academic supervision, and participation in a communal, scholarly learning environment. As a research student, you will be a vital part of our research culture and we will encourage you to fully participate in the life of the Law School.

Teaching and learning

You will attend a research methods and theory course and meet with your supervisor at least once a month throughout your degree.

Each year postgraduate research students get the benefit of, feedback and presentation opportunities, skills workshops as well as a series of ‘masterclass’ events led by world-leading researchers. These workshops and events support a self-critical assessment of research methods and techniques and allow you to learn from others working in your field. In addition, you will be invited to attend research seminars, public lectures and other training opportunities with the Law School and across the University.

General entry requirements

Minimum requirements.

2:1 undergraduate degree (or equivalent) in Law or a related social sciences discipline with significant legal content.

English language requirements

You can find out more about our English language requirements Link opens in a new window . This course requires the following:

- Overall IELTS (Academic) score of 7.0 and component scores.

International qualifications

We welcome applications from students with other internationally recognised qualifications.

For more information, please visit the international entry requirements page Link opens in a new window .

Additional requirements

There are no additional entry requirements for this course.

Our research

Eleven research clusters:

- Contract, Business and Commercial Law

- Comparative Law and Culture

- Development and Human Rights

- Gender and the Law

- International and European Law

- Law and Humanities

- Legal Theory

- Governance and Regulation

- Empirical Approaches

- Arts, Culture and Law

The Law School’s research is rooted in the twin themes of law in context and the international character of law.

Explore our research areas on our Law web pages. Link opens in a new window

Find a supervisor

Find your supervisor using the link below and discuss with them the area you'd like to research.

Explore our School of Law Staff Directory where you will be able see the academic interests and expertise of our staff.

You are welcome to contact our staff directly to see if they can provide any advice on your proposed research, but will still need to submit an application and meet the selection criteria set by the University before any offer is made.

You can also see our general University guidance about finding a supervisor.

Tuition fees

Tuition fees are payable for each year of your course at the start of the academic year, or at the start of your course, if later. Academic fees cover the cost of tuition, examinations and registration and some student amenities.

Find your research course fees

Fee Status Guidance

The University carries out an initial fee status assessment based on information provided in the application and according to the guidance published by UKCISA. Students are classified as either Home or Overseas Fee status and this can determine the tuition fee and eligibility of certain scholarships and financial support.

If you receive an offer, your fee status will be stated with the tuition fee information. If you believe your fee status has been incorrectly classified you can complete a fee status assessment questionnaire (follow the instructions in your offer) and provide the required documentation for this to be reassessed.

The UK Council for International Student Affairs (UKCISA) provides guidance to UK universities on fees status criteria, you can find the latest guidance on the impact of Brexit on fees and student support on the UKCISA website .

Additional course costs

Please contact your academic department for information about department specific costs, which should be considered in conjunction with the more general costs below, such as:

- Core text books

- Printer credits

- Dissertation binding

- Robe hire for your degree ceremony

Scholarships and bursaries

Scholarships and financial support

Find out about the different funding routes available, including; postgraduate loans, scholarships, fee awards and academic department bursaries.

Living costs

Find out more about the cost of living as a postgraduate student at the University of Warwick.

School of Law

From the first intake of students back in 1968, Warwick Law School has developed a reputation for innovative, quality research and consistently highly rated teaching. Study with us is exciting, challenging and rewarding. Pioneers of the 'Law in Context' approach to legal education, and welcoming students and staff from around the world, we offer a friendly, international and enriching environment in which to study law in its many contexts.

Get to know us better by exploring our departmental website. Link opens in a new window

Our Postgraduate courses

- Advanced Legal Studies (LLM)

- International Commercial Law (LLM)

- International Corporate Governance and Financial Regulation (LLM)

- International Development Law and Human Rights (LLM)

- International Economic Law (LLM)

- MPhil/PhD in Law

How to apply

The application process for courses that start in September and October 2024 will open on 2 October 2023.

For research courses that start in September and October 2024 the application deadline for students who require a visa to study in the UK is 2 August 2024. This should allow sufficient time to complete the admissions process and to obtain a visa to study in the UK.

How to apply for a postgraduate research course

After you’ve applied

Find out how we process your application.

Applicant Portal

Track your application and update your details.

Admissions statement

See Warwick’s postgraduate admissions policy.

Join a live chat

Ask questions and engage with Warwick.

Warwick Hosted Events Link opens in a new window

Postgraduate fairs.

Throughout the year we attend exhibitions and fairs online and in-person around the UK. These events give you the chance to explore our range of postgraduate courses, and find out what it’s like studying at Warwick. You’ll also be able to speak directly with our student recruitment team, who will be able to help answer your questions.

Join a live chat with our staff and students, who are here to answer your questions and help you learn more about postgraduate life at Warwick. You can join our general drop-in sessions or talk to your prospective department and student services.

Departmental events

Some academic departments hold events for specific postgraduate programmes, these are fantastic opportunities to learn more about Warwick and your chosen department and course.

See our online departmental events

Warwick Talk and Tours

A Warwick talk and tour lasts around two hours and consists of an overview presentation from one of our Recruitment Officers covering the key features, facilities and activities that make Warwick a leading institution. The talk is followed by a campus tour which is the perfect way to view campus, with a current student guiding you around the key areas on campus.

Connect with us

Learn more about Postgraduate study at the University of Warwick.

We may have revised the information on this page since publication. See the edits we have made and content history .

Why Warwick

Discover why Warwick is one of the best universities in the UK and renowned globally.

9th in the UK (The Guardian University Guide 2024) Link opens in a new window

67th in the world (QS World University Rankings 2024) Link opens in a new window

6th most targeted university by the UK's top 100 graduate employers Link opens in a new window

(The Graduate Market in 2024, High Fliers Research Ltd. Link opens in a new window )

About the information on this page

This information is applicable for 2024 entry. Given the interval between the publication of courses and enrolment, some of the information may change. It is important to check our website before you apply. Please read our terms and conditions to find out more.

Committed to your wellbeing

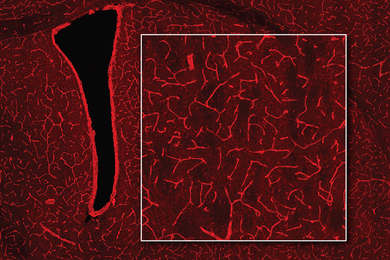

LLM Research has conducted a variety of clinical studies in adult and pediatric patients and has contributed to the development of new therapies for healthy participants and patients struggling with diseases in multiple therapeutic areas including but not limited to pulmonology, gastroenterology, gynecology, oncology, hematology, hepatology, endocrinology, dermatology, and psychiatry. LLM Research conducts Phase I, II, III, and IV clinical trials for both large and small pharmaceutical companies and Contract Research Organizations. Our research center has earned a reputation for excellence. Our physician investigators are Board Certified in Internal Medicine, Pediatrics, Dermatology, Gynecology, and Psychiatry.

Community Partnerships

At LLM we are serious about bringing options home to you and your family! LLM has partnership with local home health organizations capable of performing research activities in your own home no matter where that is. Let nothing stop you from the treatment you and your loved ones deserve

Top Enrolling Research Site

In 2021, LLM was recognized as top enrolling research site for COVID-19 vaccine. Not only do we plan on continuing to fight covid-19 we at LLM Research, look forward to bringing other vaccine options to our community.

Do you have a family member or friend that needs treatment options? Let us know, we have them covered too!

Connect With Us

Copyright © 2024 LLM Research - All Rights Reserved.

Powered by GoDaddy

This website uses cookies.

We use cookies to analyze website traffic and optimize your website experience. By accepting our use of cookies, your data will be aggregated with all other user data.

Guidance for researchers and peer-reviewers on the ethical use of Large Language Models (LLMs) in scientific research workflows

- Opinion Paper

- Published: 16 May 2023

Cite this article

- Ryan Watkins ORCID: orcid.org/0000-0003-0488-4424 1

5070 Accesses

5 Citations

1 Altmetric

Explore all metrics

For researchers interested in exploring the exciting applications of Large Language Models (LLMs) in their scientific investigations, there is currently limited guidance and few norms for them to consult. Similarly, those providing peer-reviews on research articles where LLMs were used are without conventions or standards to apply or guidelines to follow. This situation is understandable given the rapid and recent development of LLMs that are capable of valuable contributions to research workflows (such as OpenAI’s ChatGPT). Nevertheless, now is the time to begin the development of norms, conventions, and standards that can be applied by researchers and peer-reviewers. By applying the principles of Artificial Intelligence (AI) ethics, we can better ensure that the use of LLMs in scientific research aligns with ethical principles and best practices. This editorial hopes to inspire further dialogue and research in this crucial area of scientific investigation.

Avoid common mistakes on your manuscript.

1 Introduction

Recent advancements in Large Language Models (LLMs), such as OpenAI’s ChatGPT 4 [ 1 ] and Google’s LaMDA [ 2 ], have inspired developers and researchers alike to find new applications and uses for these groundbreaking tools. [ 3 ] From applications that summarize one, or one thousand, research papers, to those that let users "chat" with a research publication, many innovative techniques and creative products have been developed in the past few months. Most recently, the first wave of research articles that use LLMs in their scientific research workflows have started to show up – primarily as preprints at this stage (for instance, [ 4 , 5 , 6 , 7 ]). As with many new research methods, statistical techniques, or technologies, the use of new tools "in the wild" routinely precedes agreement on the norms, conventions, and standards that guide their application. LLMs are no exception, with many researchers exploring their possible applications at numerous phases of scientific research workflows. Therefore, now is the time to start establishing norms, conventions, and standards [ 8 , 9 ] for the use of LLMs in scientific research, both as guidance for researchers and peer-reviewers, and as a starting place to guide future research into establishing these as foundations for applying the principles of Artificial Intelligence (AI) ethics in research practice.

The ethical use of LLMs in scientific research requires the development of norms, conventions, and standards. Just as researchers apply norms, conventions, and/or standards to hypothesis testing, regression, or CRISPR applications, researchers can benefit from guidance on how to both use, and report on their use, of LLMs in their research. Footnote 1 Similarly, for those providing peer-reviews of scientific research papers that use LLMs in their methods, guidance on current conventions and standards will be valuable. The implementation of norms, conventions, and standards plays a critical role in ensuring the ethical use of artificial intelligence (AI) in scientific research, bridging the gap between theoretical frameworks and their practical application. This is particularly relevant in research involving Large Language Models (LLMs)..

The creation and study of LLMs is a rapidly advancing field [ 3 ]. With the growing use of LLMs it is expected that the norms, conventions, and standards will evolve as new tools and techniques are introduced. Nevertheless, it is important to begin the foundation building process so that initial guidance can be systematically improved over time. In this editorial I propose an initial set of considerations that can (i) be applied by researchers to guide their use of LLMs in their workflows, and (ii) be utilized by peer-reviewers to assess the quality and ethical implications of LLMs use in the articles they review. These initial norms, conventions, and standards for what should be considered during the research process, and included in reports or articles on research that used LLMs, are a starting place with the goal of providing an ethical foundation for future dialogue on this topic. Footnote 2 The proposed foundation should ideally identify key research questions that will be explored in the coming months, such as determining the appropriate conventions for setting LLM temperature parameters and assessing potential disciplinary and field-specific variations in these conventions.

2 Framework

The following is an initial framework of proposed norms that researchers and peer-reviewers should consider when using LLMs in scientific research. While this framework is not intended to be comprehensive, it provides a foundation on which researchers can build and develop conventions and standards.

The proposed framework (which includes, context, embeddings, fine tuning, agents, ethics) was derived from the key considerations of researchers using LLMs. These considerations range from determining if LLMs are going to used in combination with other research tools and deciding when to customize LLMs with embedding models, to fine tuning the performance of LLMs and ensuring that research retains ethical rigor. As such, the proposed framework captures many unique considerations to using LLMs in the workflows of scientific research. Described first are the up-front considerations for researchers who plan to use LLMs in their workflows, followed by a checklist of questions (within the same framework) peer-reviewers should consider when reviewing articles or reports that apply LLMs in their methods.

2.1 Context

The context in which LLMs are used in research workflows is important to their appropriate and ethical application. Initial considerations of researchers should include:

Are LLMs appropriate for the research questions and data?

Will LLMs be used along with other methods or tools?

Will the study be pre registered?

LLMs are not, of course, appropriate for all research questions or data types. Researchers should begin with their research question(s) and then determine if/how LLMs might be applied. LLMs may, for instance, be an appropriate component of data collection (e.g., writing interview questions), data preparation (e.g., fuzzy joining of data sets), and/or data analysis (e.g., sentiment analysis, optimizing code). For example, in analyzing qualitative data a researcher may choose to use traditional qualitative data analysis software and techniques (such as, coding or word counts with Nvivo or Atlas TI) along with a LLM for comparing semantics across samples. Within this context, the use of the LLM complements other analysis techniques, allowing the researcher to explore more diverse questions of interest. Whereas in other contexts all of the research questions may be best explored with just LLMs or another traditional method. In their reporting, researchers should describe and justify the complete methods applied in their research and the full list of LLM tools selected since each may be specialized for a different task. Likewise, if the research study was pre registered, any subsequent articles or reports should include both the pre registration URL and discussion of any changes made from the original pre registered research plan—especially when those changes are based on the testing and fine tuning of LLMs.

2.2 Embedding Models

Adding a custom embedding model(s) to complement the base LLM (such as OpenAI’s ChatGPT) can enhance the value of LLMs for specific research task(s). Initial considerations of researchers should include:

Will a custom embedding model(s) help meet the goals of the research?

What tool(s) will be used to create the embedding model(s)?

Will multiple embedding models created and tested (i.e., chained)?

What size of chunks will be used in preparing the data for the embedding(s)?

Will overlap across chunks be permitted?

What tool will be used for similarity matching (i.e., vector database)?

Will the code for creating embedding model(s) be made publicly available?

While the web interface for some LLMs (such as ChatGPT) can be valuable for some research questions, many times supplemental content (in addition to a base LLM, such as GPT-3.5 or GPT-4) is important to the research. Custom embedding models allow researchers to extent the base LLM with content of their choosing. Technically, "Embeddings are vectors or arrays of numbers that represent the meaning and the context of the tokens that the model processes and generates. Embeddings are derived from the parameters or the weights of the model, and are used to encode and decode the input and output texts. Embeddings can help the model to understand the semantic and syntactic relationships between the tokens, and to generate more relevant and coherent texts" [ 13 ]. While LLMs use embeddings to create their base models (such as, GPT-4), researchers can also create embeddings with specialized content (such as a corpus of research articles on a topic, a drive of interview transcripts, or a database of automobile descriptors) to expand the inputs used by the LLM. Researchers can also chain together multiple embedding models in improve LLM performance [ 14 ].

There are numerous embedding models [algorithms] that can be used by researchers to create an embeddings file for use in their research [ 15 ]. Embedding models use a variety of algorithms to create the custom embeddings file, and therefore it is important for researchers to be transparent about their procedures in selecting and creating embeddings for use in their workflow. The preparation of data for creating the embedding model(s) can also influence the resulting embeddings and thereby the outputs of the LLMs when used in the workflow. For example, text has be divided into chunks in preparation for creating the embeddings and the size of chunks used will define the cut-off points for creating vectors. Researchers can, for instance, divide the text data into chunks of 1000 tokens, or 500 tokens. Depending on the context of the research, one dividing point for chunking may be more valuable than another. Chunking can also be done using sentence splitting in order to keep sentences together, or not. Likewise, researchers can allow for some overlap between chunks in order to maintain semantic context [ 16 ]. Each of these decisions can influence the output of the LLM when using additional embeddings, and thus should be considered in the research procedures and included in subsequent reporting.

After a embeddings are created for the additional content to be used in conjunction with the base LLM, the embeddings have to be stored in a database so that the data can be managed and searched. Vector databases (or vectorestores) are used, and there are many options researchers can choose amongst [ 17 ]. Vector databases use different heuristics and algorithms to index and search vectors, and can perform differently. Vector databases may use different neural search frameworks, such as FAISS, Jina.AI, or Haystack, and custom algorithms [ 18 ]. While the selection of a vector database mostly influences performance (i.e., speed, more than LLM outputs) it is useful for researchers to be transparent on their selection. In the future, differences in neural search frameworks, algorithms, and vector database technologies may lead to substantive differences in LLM outputs as well.

2.3 Fine Tuning

There are many Large Language Models (LLMs) available to researchers [ 19 ] and the selection of which LLM to use in a specific research workflow requires several decisions, including:

Which language model will used (e.g., OpenAI’s GPT-3.5, GPT-4, open source alternative)?

Will multiple language models be tested for performance in the research task(s)?

Will completion parameters be applied (e.g., temperature, presence penalty, frequency penalty, max tokens, logit bias, stops)?

Will multiple combinations of completion parameters be tested before or during the research?

Will systematic “prompt engineering” be done as part of the research?

What quality review and validation checks will be performed on LLM-generated results?

Will the LLM’s performance be compared with benchmarks or standards for the field or discipline?

Will the code for fine tuning the LLM be made publicly available?

Beyond the standard user interface and default settings offered by many LLMs (such as the ChatGPT website), by using an Application Programming Interface (API) researchers can fine tune LLMs for their research. Fine tuning can be done with or without using a embedding model(s), and is currently done primarily through setting the completion parameters (e.g., temperature) and by conducting “prompt engineering” (i.e., systematically improving LLM prompts to provide outputs with desired characteristics). Additional fine tuning options should however be expected as LLMs evolve and more competing LLMs become available to researchers.

Currently there are no conventions or standards for setting completion parameters when using LLMs in scientific research. For instance, two common parameters used to influence the outputs of LLMs are tokens and temperature.

2.3.1 Tokens

Tokens are unit of analysis of LLMs, and they are roughly equivalent to about a word, but not always. Researchers can select the number of tokens to be returned to complete a request, and the LLM will complete the request within that constraint [ 20 ]. Depending on the size of the LLM there may be limits on the total number of tokens that can be requested. There are no conventions or standards at this time for the ideal maximum number of tokens a researcher should request in order to get results, and this will routinely be dependent on the research context in which they are using the LLM. In general however, LLMs have been observed to ramble on at time (i.e., filling the maximum number of tokens) and to provide less accurate outputs toward the end when the maximum token parameter is set too high.

2.3.2 Temperature

Temperature [ 20 ] is used to provide the LLM with additional flexibility in how it completes a request. At the lowest temperature setting (e.g., 0) then the LLM is limited to selecting the next word/token that has the highest probability in the model (also see, “top p” parameter [ 20 ]). As the researcher increases the temperature ( \(\le\) 2 with OpenAI’s LLMs), the LLM may select from an increasing range of probabilities for the next word/token. Setting an appropriate temperature for the unique research context is therefore important, and in the future we will hopefully have conventions (by field and/or disciplines) on appropriate temperature parameters for research.

Other completion parameters can also influence the outputs of LLMs (e.g., “presence penalty”, “frequency penalty”, “logit bias”) and we should expect that new LLMs will expand the range of completion parameters that researchers can apply. It should be the norm, therefore, for researchers to clearly state the applied completion parameters used in their research, and describe any testing of different parameter settings done in evaluating and selecting the final parameter settings.

Prompts are the inputs provided by researchers to request a LLM response. Prompts are converted to tokens and used to inform predictions about what the following words/tokens should be in the output. Behind the curtain, LLMs are using probabilities for the various permutations and combinations of tokens/words that could follow. Changing the prompt, for instancing changing the wording of the prompt or including more prior prompts from the history of a conversation, can substantially influence the LLM’s outputs [ 21 , 22 ]. Prompt engineering is the systematic manipulation of prompts in order to improve outputs, and researchers should be transparent about both their prompt engineering procedures and the final prompts used to in the research.

At this time, however, “There are no reliable techniques for steering the behavior of LLMs” [ 3 ]. While transparency of research “prompt engineering” practices is essential, when using LLMs in research transparency may not lead to reproducability—and therefore limit generalizability.

The automation of LLM tasks can be important in some research contexts. If using automated LLM tools (i.e., agents) researcher considerations should include:

Will LLM agent(s) used in the research?

How many and in what sequence will LLM agent(s) used?

Will the code for creating the agents be made publicly available?

Many research workflows can utilize a predetermined sequence of prompts or chains of LLMs. Other workflows, however, can’t rely on predetermined sequences and/or decisions to achieve their goals. In these later cases, LLM agents can be used to make decisions about which LLMs and tools (including, for instance, internet searches [ 23 ]) to use in achieving a goal [ 24 ]. A LLM agent utilizes prompts, or LLM responses, as inputs to their (the agent’s) reasoning and decisions about which LLMs or tools to utilize next. Further, LLM agents can learn from their past performance (i.e., successes or failures) leading to improved performance [ 25 , 26 ]. If researchers apply LLM agents in their workflow, details on the agents and tools used in the research should be described. Any intermediate steps, and the sequence of those steps, should also be described since these are essential to how the final outputs of the LLM were achieved.

The use of LLMs in scientific research workflows is a new area of AI ethics that requires emerging considerations for researchers, including:

Is the organization (e.g., company, open source community) that created the LLM transparent about the choices they made in its development and fine tuning?

How will training data for additional embedding model(s) be acquired in a transparent and ethical manner?

What steps for data privacy and protections will be taken?

What will be done to identify and mitigate potential biases in LLM-generated results?

Are there any potential conflicts of interest related to the use of LLMs?

Are there any applicable institutional and/or regulatory guidelines that will be followed?

What steps will be taken for the research to be reproducible and transparent?

Will LLM outputs be described in a non-anthropomorphic manner?

The ethical use of LLMs in research workflows is a crucial consideration that cuts across multiple disciplines. From sociology and psychology to engineering management and business, LLMs have diverse applications in research, and this necessitates attention to a range of issues. These issues include technical concerns such as data privacy and bias, as well as philosophical considerations such as anthropomorphism and the epistemological challenges posed by machine-generated knowledge. Therefore, it is essential to address ethical considerations when using LLMs in research workflows to ensure that the research remains unbiased, transparent, and scientifically rigorous. While researchers may have little control, for example, over the ethical collection of data for the initial training of an LLM (such as OpenAI’s GPT-3.5), they do have choices in which LLMs to utilize in their research and the ethical collection of data used in creating any custom embedding models used in their workflows. Likewise, while there are currently limited institutional and/or regulatory policies guiding the use of LLMs in scientific research, researchers will be responsible for adhering to those AI policies (such as the EU AI Act [ 27 ]) when they are established. In the interim, researchers must be detailed and transparent about their practices, provide proper citations and credit, and disclose any conflicts of interest.

3 Conclusions

As LLMs continue to advance, their potential uses, benefits, and limitations in scientific research workflows are emerging. This presents an opportune moment to establish norms, conventions, and standards for their application in research and reporting their use in scientific publications. In this editorial, I have proposed an initial framework and set of norms for researchers to consider, including a peer-reviewer checklist (see Table 1 ) for assessing research reports and articles that employ LLMs in their methods. These proposals are not meant to be definitive, as we are still in the early stages of learning about the potential uses and limitations of LLMs. Rather, it is hoped that this foundation will stimulate research questions and inform future decisions about the norms, conventions, and standards that should be applied when using LLMs in scientific research workflows.

For example, a norm in international economics research is comparability (i.e.,the desire to compare statistics across countries) [ 10 ], where as a long-standing convention in the social sciences is to use a value of \(\alpha\) = 0.05 to define a statistically significant finding [ 11 ]. While IEEE’s P11073-10426 is a standard that defines a communication framework for interoperability with personal respiratory equipment [ 12 ].

Research and updated guidance for using LLMs in scientific research workflows are available on the clearinghouse website: https://LLMinScience.com .

OpenAI: GPT-4 Technical Report. https://cdn.openai.com/papers/gpt-4.pdf (2023)

Romal Thoppilan, E.A.: LaMDA: language models for dialog applications. arXiv preprint arXiv:2201.08239 (2022)

Bowman, S.R.: Eight things to know about large language models. arXiv preprint arXiv:2304.00612 (2023)

Crokidakis, N., de Menezes, M.A., Cajueiro, D.O.: Questions of science: chatting with ChatGPT about complex systems arXic preprint arXiv:2303.16870 (2023)

Wang, Z., Xie, Q., Ding, Z., Feng, Y., Xia, R.: Is ChatGPT a good sentiment analyzer? A preliminary study. arXiv preprint arXiv:2304.04339 (2023)

Qi, Y., Zhao, X., Huang, X.: safety analysis in the era of large language models: a case study of STPA using ChatGPT. arXiv preprint arXiv:2304.04339 (2023)

Khademi, A.: Can ChatGPT and bard generate aligned assessment items? A reliability analysis against human performance. arXiv preprint arXiv:2304.05372 (2023)

Southwood, N., Eriksson, L.: Norms and conventions. Philos. Explor. 14 (2), 195–217 (2011). https://doi.org/10.1080/13869795.2011.569748

Article Google Scholar

Bowdery, G.J.: Conventions and norms. Philos. Sci. 8 (4), 493–505 (1941). https://doi.org/10.1086/286731

Mügge, D., Linsi, L.: The national accounting paradox: how statistical norms corrode international economic data. Eur. J. Int. Relat. 27 (2), 403–427 (2021). https://doi.org/10.1177/1354066120936339 . ( PMID: 34040493 )

Johnson, V.E.: Revised standards for statistical evidence. Proc. Natl. Acad. Sci. U.S.A. 110 (48), 19313–19317 (2013)

Article MATH Google Scholar

Chang, M.: IEEE standards used in your everyday life-IEEE SA—standards.ieee.org. https://standards.ieee.org/beyond-standards/ieee-standards-used-in-your-everyday-life . Accessed 16 Apr 2023

Maeda, J.: LLM Ai Embeddings. https://learn.microsoft.com/en-us/semantic-kernel/concepts-ai/embeddings

Wu, T., Terry, M., Cai, C.J.: AI chains: transparent and controllable human-AI interaction by chaining large language model prompts. arXiv preprint arXiv:2110.01691 (2022)

Chase, H.: Text embedding models (2023). https://python.langchain.com/en/latest/modules/models/text_embedding.html?highlight=embedding

Chunking Strategies for LLM Applications. https://www.pinecone.io/learn/chunking-strategies/

Chase, H.: Vectorstores (2023). https://python.langchain.com/en/latest/modules/indexes/vectorstores.html

Kan, D.: Not all vector databases are made equal. Towards Data Science (2022). https://towardsdatascience.com/milvus-pinecone-vespa-weaviate-vald-gsi-what-unites-these-buzz-words-and-what-makes-each-9c65a3bd0696

Hannibal046: Hannibal046/Awesome-LLM: Awesome-LLM: a curated list of large language model. https://github.com/Hannibal046/Awesome-LLM

OpenAI: OpenAI API—platform.openai.com. https://platform.openai.com/docs/api-reference/completions/create . Accessed 16 Apr 2023

Si, C.: Prompting gpt-3 to be reliable. In: ICLR 2023 Proceedings. https://openreview.net/pdf?id=98p5x51L5af (2023)

White, J., Fu, Q., Hays, S., Sandborn, M., Olea, C., Gilbert, H., Elnashar, A., Spencer-Smith, J., Schmidt, D.C.: A prompt pattern catalog to enhance prompt engineering with ChatGPT. arXiv preprint arXiv:2302.11382 (2023)

Significant-Gravitas: GitHub-Significant-Gravitas/Auto-GPT: An experimental open-source attempt to make GPT-4 fully autonomous.—github.com. https://github.com/Significant-Gravitas/Auto-GPT . Accessed 16 Apr 2023 (2023)

Chase, H.: Agents. https://python.langchain.com/en/latest/modules/agents.html (2023)

Shinn, N., Labash, B., Gopinath, A.: Reflexion: an autonomous agent with dynamic memory and self-reflection. arXiv preprint arXiv:2303.11366 (2023)

Schick, T., Dwivedi-Yu, J., Dessì, R., Raileanu, R., Lomeli, M., Zettlemoyer, L., Cancedda, N., Scialom, T.: Toolformer: language models can teach themselves to use tools. arXiv preprint arXiv:2302.04761 (2023)

Union, E.: Artificial Intelligence Act (2023). https://artificialintelligenceact.eu/

Download references

Author information

Authors and affiliations.

Educational Technology Leadership, George Washington University, Washington, DC, USA

Ryan Watkins

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Ryan Watkins .

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Reprints and permissions

About this article

Watkins, R. Guidance for researchers and peer-reviewers on the ethical use of Large Language Models (LLMs) in scientific research workflows. AI Ethics (2023). https://doi.org/10.1007/s43681-023-00294-5

Download citation

Received : 18 April 2023

Accepted : 02 May 2023

Published : 16 May 2023

DOI : https://doi.org/10.1007/s43681-023-00294-5

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Large Language Model

- Conventions

- Find a journal

- Publish with us

- Track your research

The Future of Large Language Models in 2024

Cem is the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per Similarweb) including 60% of Fortune 500 every month.

Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE, NGOs like World Economic Forum and supranational organizations like European Commission. You can see more reputable companies and media that referenced AIMultiple.

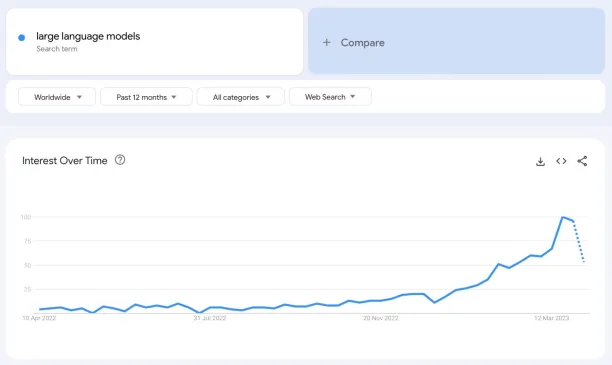

Interest in large language models (LLMs) is on the rise especially after the release of ChatGPT in November 2022 (see Figure 1). In recent years, LLMs have transformed various industries, generating human-like text and addressing a wide range of applications. However, their effectiveness is hindered by concerns surrounding bias, inaccuracy, and toxicity, which limit their broader adoption and raise ethical concerns .

Figure 1. Google search trend for large language models over a year

Source: Google Trends

This article explores the future of large language models by delving into promising approaches, such as self-training, fact-checking, and sparse expertise, to mitigate these issues and unlock the full potential of these models.

What is a large language model?

A large language model is a type of artificial intelligence model designed to generate and understand human-like text by analyzing vast amounts of data. These foundational models are based on deep learning techniques and typically involve neural networks with many layers and a large number of parameters, allowing them to capture complex patterns in the data they are trained on.

The primary goal of a large language model is to understand the structure, syntax, semantics, and context of natural language, so it can generate coherent and contextually appropriate responses or complete given text inputs with relevant information.

These models are trained on diverse sources of text data, including books, articles, websites, and other textual content, which enables them to generate responses to a wide range of topics.

What are the popular large language models?

Bert (google).

BERT, an acronym for Bidirectional Encoder Representations from Transformers, is a foundational model developed by Google in 2018. Based on the Transformer Neural Network architecture introduced by Google in 2017, BERT marked a departure from the prevalent natural language processing ( NLP ) approach that relied on recurrent neural networks ( RNNs ).

Before BERT, RNNs typically processed text in a left-to-right manner or combined both left-to-right and right-to-left analyses. In contrast, BERT is trained bidirectionally, allowing it to gain a more comprehensive understanding of language context and flow compared to its unidirectional predecessors.

GPT-3 & GPT-4 (OpenAI)

OpenAI’s GPT-3 , or Generative Pre-trained Transformer 3, is a large language model that has garnered significant attention for its remarkable capabilities in natural language understanding and generation. Released in June 2020, GPT-3 is the third iteration in the GPT series, building on the success of its predecessors, GPT and GPT-2.

GPT-3 became publicly used when developed into GPT-3.5 for the creation of the conversational AI tool ChatGPT which was released in November 2022.

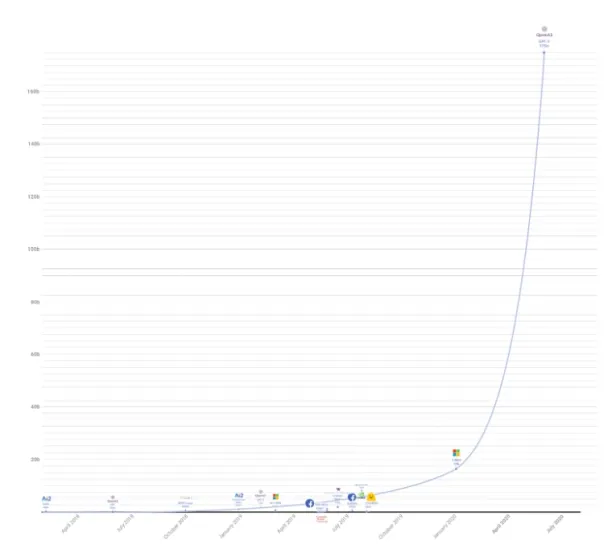

GPT-3 uses billions of parameters, dwarfing its competitors in comparison (Figure 2). This made it the most complex large language model until its successor GPT-4.

Figure 2. The image shows how GPT-3 has a greater parameter analysis capacity than other giant NLP models

The largest language model is now OpenAI’s GPT-4 , released in March 2023. Although the model is more complex than the others in terms of its size, OpenAI didn’t share the technical details of the model.

GPT-4 is a multimodal large language model of significant size that can handle inputs of both images and text and provide outputs of text. Although it may not perform as well as humans in many real-world situations, the new model has demonstrated performance levels on several professional and academic benchmarks that are comparable to those of humans. 1

The model has various distinctive features compared to other LLMs, including:

- Visual input option

- Higher word limit

- Advanced reasoning capability

- Steerability, etc.

For a more detailed account of these capabilities of GPT-4, check our in-depth guide .

BLOOM (BigScience)

BLOOM, an autoregressive large language model, is trained using massive amounts of text data and extensive computational resources to extend text prompts. Released in July 2022, it is built on 176 parameters as a competitor of GPT-3. As a result, it can generate coherent text across 46 languages and 13 programming languages.

For a comparative analysis of the current LLMs, check our large language models examples article .

What is the current stage of large language models?

The current stage of large language models is marked by their impressive ability to understand and generate human-like text across a wide range of topics and applications. Built using advanced deep learning techniques and trained on vast amounts of data, these models, such as OpenAI’s GPT-3 and Google’s BERT, have significantly impacted the field of natural language processing.

Current LLMs have achieved state-of-the-art performance on various tasks like:

- Sentiment analysis

- Text summarization

- Translation

- Question-answering

- Code generation

Despite these achievements, language models still have various limitations that need to be addressed and fixed in the future models.

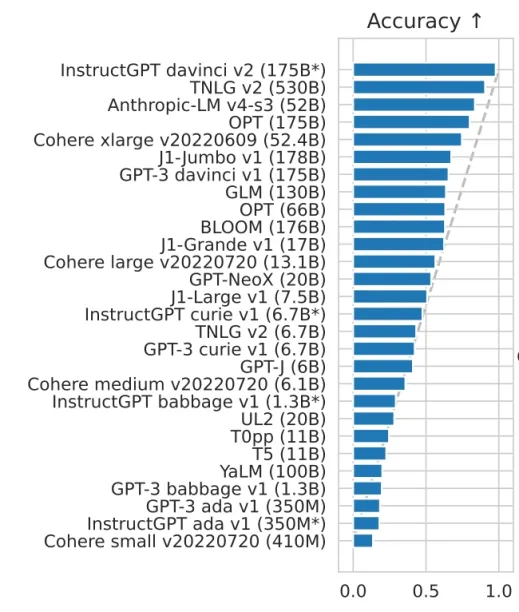

1- Accuracy

Large language models employ machine learning to deduce information, which raises concerns about potential inaccuracies. Additionally, pre-trained large language models struggle to adapt to new information dynamically, leading to potentially erroneous responses that warrant further scrutiny and improvement in future developments. Figure 3 shows the accuracy comparison of some LLMs.

Figure 3. Results for a wide variety of language models on the 5-shot HELM benchmark for accuracy

Source : “BLOOM: A 176B-Parameter Open-Access Multilingual Language Model”

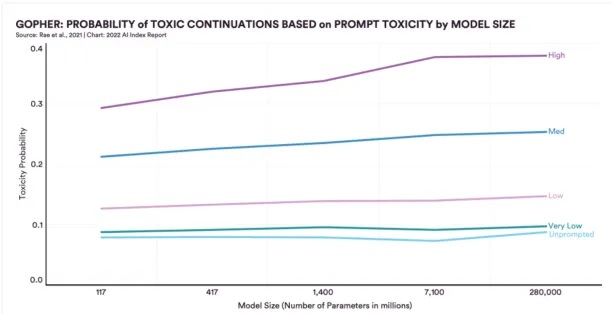

Large language models facilitate human-like communication through speech and text. However, recent findings indicate that more advanced and sizable systems tend to assimilate social biases present in their training data, resulting in sexist, racist, or ableist tendencies within online communities (Figure 4).

Figure 4. Large language models’ toxicity index

Source : Stanford University Artificial Intelligence Index Report 2022

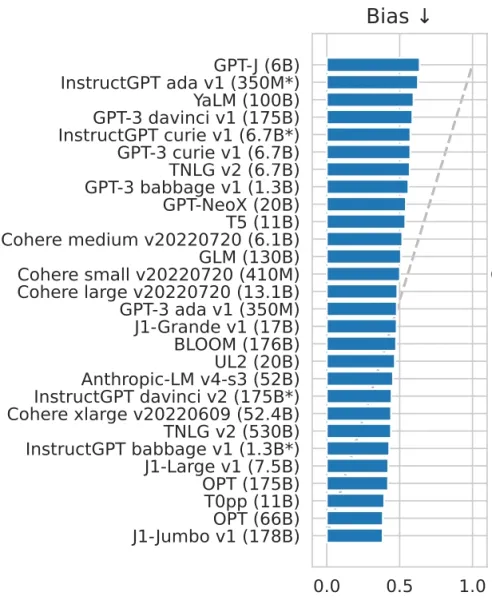

For instance, a recent 280 billion-parameter model exhibited a substantial 29% increase in toxicity levels compared to a 117 million-parameter model from 2018. As these systems continue to advance and become more powerful tools for AI research and development, the potential for escalating bias risks also grows. Figure 5 compares the bias potential of some LLMs.

Figure 5. Results for a wide variety of language models on the 5-shot HELM benchmark for bias

3- Toxicity

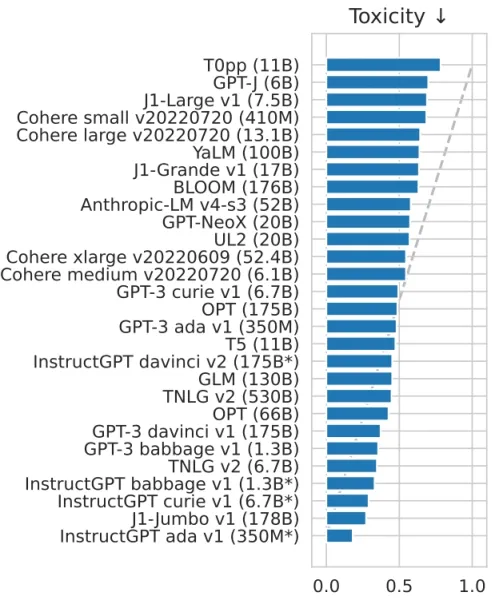

The toxicity problem of large language models refers to the issue where these models inadvertently generate harmful, offensive, or inappropriate content in their responses. This problem arises because these models are trained on vast amounts of text data from the internet, which may contain biases, offensive language, or controversial opinions.

Figure 6. Results for a wide variety of language models on the 5-shot HELM benchmark for toxicity

Addressing the toxicity problem in future large language models requires a multifaceted approach involving research, collaboration, and continuous improvement. Some potential strategies to mitigate toxicity in future models can include:

- Curating and improving training data

- Developing better fine-tuning techniques

- Incorporating user feedback

- Content moderation strategies

4- Capacity limitations

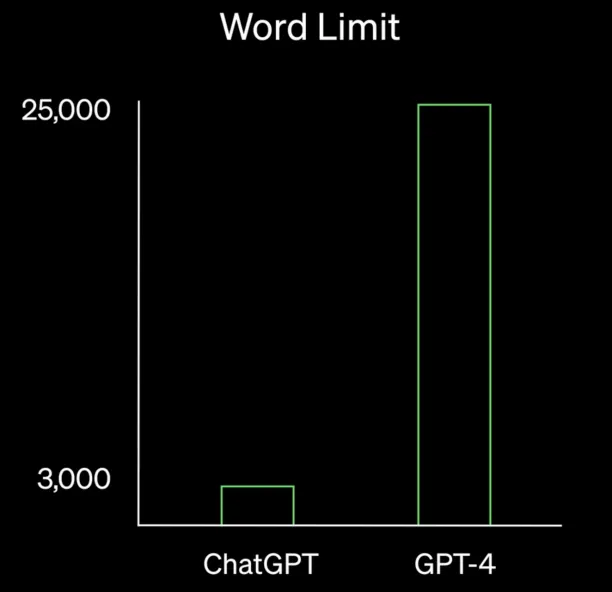

Every large language model has a specific memory capacity, which restricts the number of tokens it can process as input. For example, ChatGPT has a 2048-token limit (approximately 1500 words), preventing it from comprehending and producing outputs for inputs that surpass this token threshold.

GPT-4 extended the capacity to 25,000 words, far exceeding the ChatGPT model depending on GPT-3.5 (Figure 7).

Figure 7. Word limit comparison between ChatGPT and GPT-4

Source: OpenAI

5- Pre-trained knowledge set

Language models are trained on a fixed set of data that represents a snapshot of knowledge at a certain point in time. Once the training is complete, the model’s knowledge is frozen and cannot access up-to-date information. This means that any information or changes that occur after the training data was collected won’t be reflected in how large language models respond.

This leads to several problems regarding such as:

- Outdated or incorrect information

- Inability to handle recent events

- Less relevance in dynamic domains like technology, finance or medicine

What is the future of large language models?

It is not possible to foresee how the future language models will evolve. However, there is promising research on LLMs, focusing on the common problems we explained above. We can pinpoint 3 radical and substantial changes for future language models.

1- Fact-checking themselves

A collection of promising advancements aims to alleviate the factual unreliability and static knowledge limitations of large language models. These novel techniques are crucial for preparing LLMs for extensive real-world implementation. Doing this requires two abilities:

- The ability to access external sources

- The ability to provide citations and references for answers

Significant preliminary research in this domain features models such as Google’s REALM and Facebook’s RAG, both introduced in 2020.

In June 2022, OpenAI introduced a fine-tuned version of its GPT model called WebGPT, which utilizes Microsoft Bing to browse the internet and generate more precise and comprehensive answers to prompts. WebGPT operates similarly to a human user:

- Submitting search queries to Bing

- Clicking on links

- Scrolling web pages

- Employing functions like Ctrl+F to locate terms

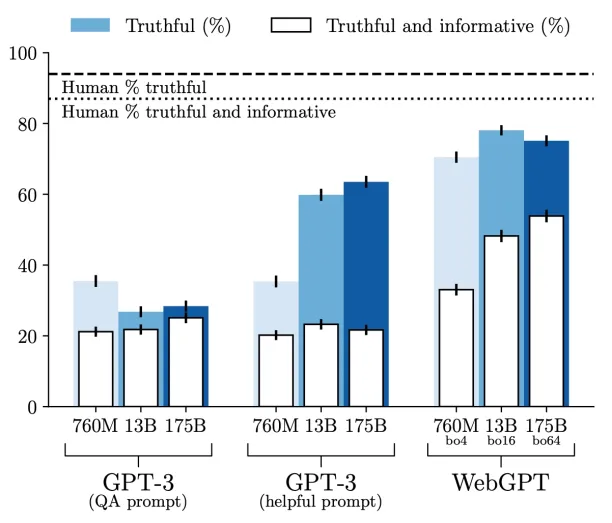

When the model incorporates relevant information from the internet into its output, it includes citations, allowing users to verify the source of the information. The research results show that All WebGPT models surpass every GPT-3 model in terms of the proportion of accurate responses and the percentage of truthful and informative answers provided.

Figure 8. TruthfulQA results comparing GPT-3 and WebGPT models

Source : “WebGPT: Browser-assisted question-answering with human feedback”

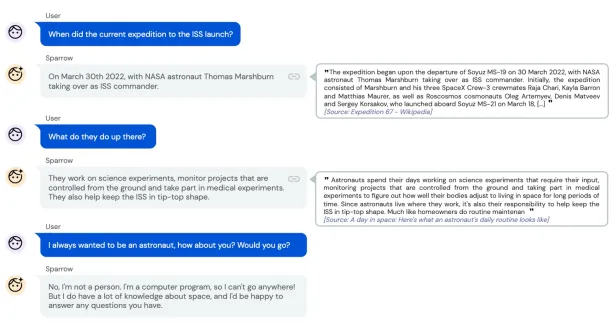

DeepMind is actively exploring similar research avenues. A few months back, they introduced a new model called Sparrow. Like ChatGPT, Sparrow operates in a dialogue-based manner, and akin to WebGPT, it can search the internet for new information and offer citations to support its claims.

Figure 9. Sparrow provides up-to-date answers and evidence for factual claims

Source : “Improving alignment of dialogue agents via targeted human judgements”

Although it is still early to conclude that accuracy, fact-checking and static knowledge base problems can be overcome in the near-future models, current research results are promising for the future. This may reduce the need for using prompt engineering to cross check model output since model will already have cross-checked its results.

2- Synthetic training data

For fixing some of the limitations we mentioned above, such as those resulting from the training data, researchers are working on large language models that can generate their own training data sets (i.e. generating synthetic training data sets).

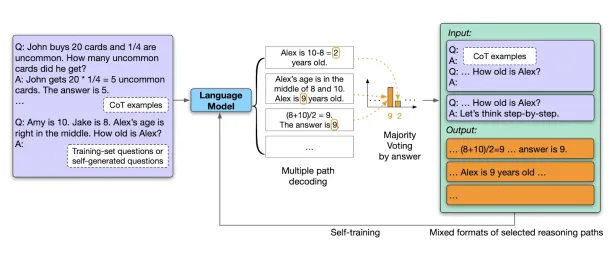

In a recent study, Google researchers developed a large language model capable of creating questions, generating comprehensive answers, filtering its responses for the highest quality output, and fine-tuning itself using the curated answers. Impressively, this resulted in new state-of-the-art performance across multiple language tasks.

Figure 10. Overview of the Google’s self-improving model

Source : “Large Language Models Can Self-Improve”

For example, the model’s performance improved from 74.2% to 82.1% on GSM8K and from 78.2% to 83.0% on DROP, which are two widely used benchmarks for evaluating LLM performance.

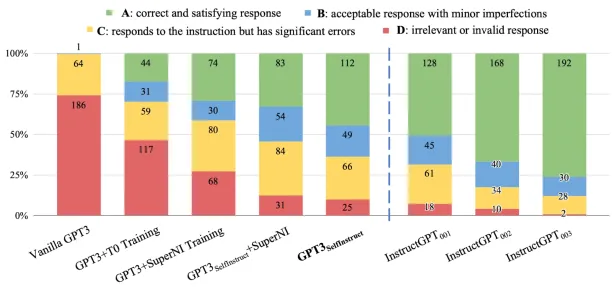

A recent study focuses on enhancing a crucial LLM technique called “instruction fine-tuning,” which forms the foundation of products like ChatGPT. While ChatGPT and similar instruction fine-tuned models depend on human-crafted instructions, the research team developed a model capable of generating its own natural language instructions and subsequently fine-tuning itself using those instructions.

The performance improvements are substantial, as this method boosts the base GPT-3 model’s performance by 33%, nearly equaling the performance of OpenAI’s own instruction-tuned model (Figure 11).

Figure 11. Performance of GPT3 model and its instruction-tuned variants, evaluated by human experts

Source : “Self-Instruct: Aligning Language Model with Self Generated Instructions”

With such models in the future, it is possible to reduce biases and toxicity of the model outputs and increase the efficiency of fine-tuning with desired data sets, meaning that models learn to optimize themselves.

3- Sparse expertise

Although each model’s parameters, training data, algorithms etc. cause performance differences, all of the widely recognized language models today—such as OpenAI’s GPT-3, Nvidia/Microsoft’s Megatron-Turing, Google’s BERT—share a fundamental design in the end. They are:

- Autoregressive

- Self-supervised

- Pre-trained

- Employ densely activated transformer-based architectures

A dense language model means that each of these models use all of their parameters to create a response to a prompt. As you may guess, this is not very effective and troublesome.

A sparse expert model is the idea that a model can be able to activate only a relevant set of its parameters to answer a given prompt. Currently developed LLMs with more than 1 trillion parameters are assumed to be sparse models. 2 An example to these models is Google’s GLam with 1.2 trillion parameters.

According to Forbes, Google’s GLaM is seven times bigger than GPT-3 but consumes two-thirds less energy for training. It demands only half the computing resources for inference and exceeds GPT-3’s performance on numerous natural language tasks.