Specialty Grand Challenge Article: Grand Challenges in Image Processing

- Université Paris-Saclay, CNRS, CentraleSupélec, Laboratoire des signaux et Systèmes, Gif-sur-Yvette, France

Introduction

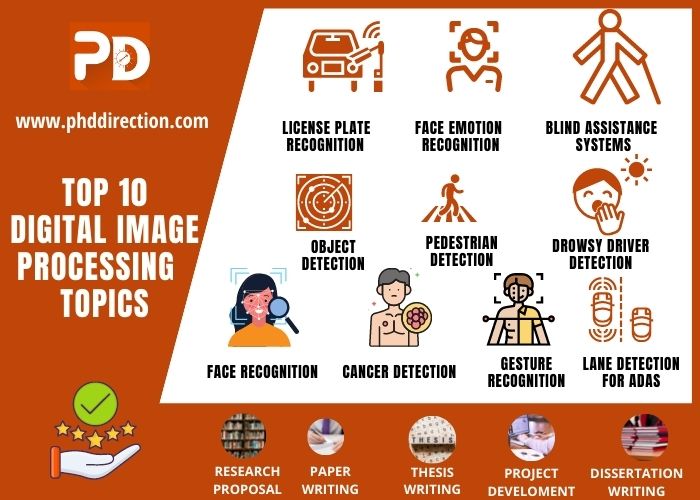

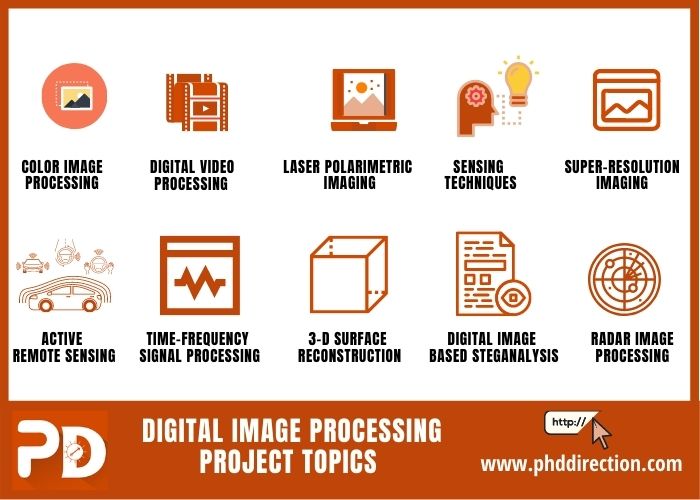

The field of image processing has been the subject of intensive research and development activities for several decades. This broad area encompasses topics such as image/video processing, image/video analysis, image/video communications, image/video sensing, modeling and representation, computational imaging, electronic imaging, information forensics and security, 3D imaging, medical imaging, and machine learning applied to these respective topics. Hereafter, we will consider both image and video content (i.e., sequences of images), and more generally all forms of visual information.

Rapid technological advances, especially in terms of computing power and network transmission bandwidth, have resulted in many remarkable and successful applications. Nowadays, images are ubiquitous in our daily life. Entertainment is one class of applications that has greatly benefited, including digital TV (e.g., broadcast, cable, and satellite TV), Internet video streaming, digital cinema, and video games. Beyond entertainment, imaging technologies are central in many other applications, including digital photography, video conferencing, video monitoring and surveillance, satellite imaging, but also in more distant domains such as healthcare and medicine, distance learning, digital archiving, cultural heritage or the automotive industry.

In this paper, we highlight a few research grand challenges for future imaging and video systems, in order to achieve breakthroughs to meet the growing expectations of end users. Given the vastness of the field, this list is by no means exhaustive.

A Brief Historical Perspective

We first briefly discuss a few key milestones in the field of image processing. Key inventions in the development of photography and motion pictures can be traced to the 19th century. The earliest surviving photograph of a real-world scene was made by Nicéphore Niépce in 1827 ( Hirsch, 1999 ). The Lumière brothers made the first cinematographic film in 1895, with a public screening the same year ( Lumiere, 1996 ). After decades of remarkable developments, the second half of the 20th century saw the emergence of new technologies launching the digital revolution. While the first prototype digital camera using a Charge-Coupled Device (CCD) was demonstrated in 1975, the first commercial consumer digital cameras started appearing in the early 1990s. These digital cameras quickly surpassed cameras using films and the digital revolution in the field of imaging was underway. As a key consequence, the digital process enabled computational imaging, in other words the use of sophisticated processing algorithms in order to produce high quality images.

In 1992, the Joint Photographic Experts Group (JPEG) released the JPEG standard for still image coding ( Wallace, 1992 ). In parallel, in 1993, the Moving Picture Experts Group (MPEG) published its first standard for coding of moving pictures and associated audio, MPEG-1 ( Le Gall, 1991 ), and a few years later MPEG-2 ( Haskell et al., 1996 ). By guaranteeing interoperability, these standards have been essential in many successful applications and services, for both the consumer and business markets. In particular, it is remarkable that, almost 30 years later, JPEG remains the dominant format for still images and photographs.

In the late 2000s and early 2010s, we could observe a paradigm shift with the appearance of smartphones integrating a camera. Thanks to advances in computational photography, these new smartphones soon became capable of rivaling the quality of consumer digital cameras at the time. Moreover, these smartphones were also capable of acquiring video sequences. Almost concurrently, another key evolution was the development of high bandwidth networks. In particular, the launch of 4G wireless services circa 2010 enabled users to quickly and efficiently exchange multimedia content. From this point on, most of us have been carrying a camera, anywhere and anytime, allowing us to capture images and videos at will and to seamlessly exchange them with our contacts.

As a direct consequence of the above developments, we are currently observing a boom in the usage of multimedia content. It is estimated that today 3.2 billion images are shared each day on social media platforms, and 300 h of video are uploaded every minute on YouTube 1 . In a 2019 report, Cisco estimated that video content represented 75% of all Internet traffic in 2017, and this share is forecasted to grow to 82% in 2022 ( Cisco, 2019 ). While Internet video streaming and Over-The-Top (OTT) media services account for a significant bulk of this traffic, other applications are also expected to see significant increases, including video surveillance and Virtual Reality (VR)/Augmented Reality (AR).

Hyper-Realistic and Immersive Imaging

A major direction and key driver of research and development activities over the years has been the objective of delivering ever-improving image quality and user experience.

For instance, in the realm of video, we have observed constantly increasing spatial and temporal resolutions, with the emergence nowadays of Ultra High Definition (UHD). Another aim has been to provide a sense of the depth in the scene. For this purpose, various 3D video representations have been explored, including stereoscopic 3D and multi-view ( Dufaux et al., 2013 ).

In this context, the ultimate goal is to be able to faithfully represent the physical world and to deliver an immersive and perceptually hyper-realistic experience. For this purpose, we discuss hereafter some emerging innovations. These developments are also very relevant in VR and AR applications (Slater, 2014). Finally, while this paper focuses only on the visual information processing aspects, it is obvious that emerging display technologies (Masia et al., 2013) and audio also play key roles in many application scenarios.

Light Fields, Point Clouds, Volumetric Imaging

In order to wholly represent a scene, the light information coming from all the directions has to be represented. For this purpose, the 7D plenoptic function is a key concept ( Adelson and Bergen, 1991 ), although it is unmanageable in practice.
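As a notational sketch of why the plenoptic function is unmanageable and how a light field reduces it, the two representations can be written side by side. The symbol names below are assumptions chosen for illustration: $(V_x, V_y, V_z)$ is the viewing position, $(\theta, \phi)$ the viewing direction, $\lambda$ the wavelength and $t$ the time, while $(u, v)$ and $(x, y)$ denote the coordinates of a ray on two parallel planes.

```latex
\begin{align}
  % 7D plenoptic function: radiance for every position, direction, wavelength and time
  P &= P(\theta, \phi, \lambda, t, V_x, V_y, V_z) \\
  % 4D light field: static scene (no t), fixed spectral sampling (no \lambda),
  % and radiance assumed constant along a ray in free space (one spatial dimension dropped)
  L &= L(u, v, x, y)
\end{align}
```

Under these assumptions, a conventional 2D image corresponds to integrating $L$ over the angular coordinates $(u, v)$.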

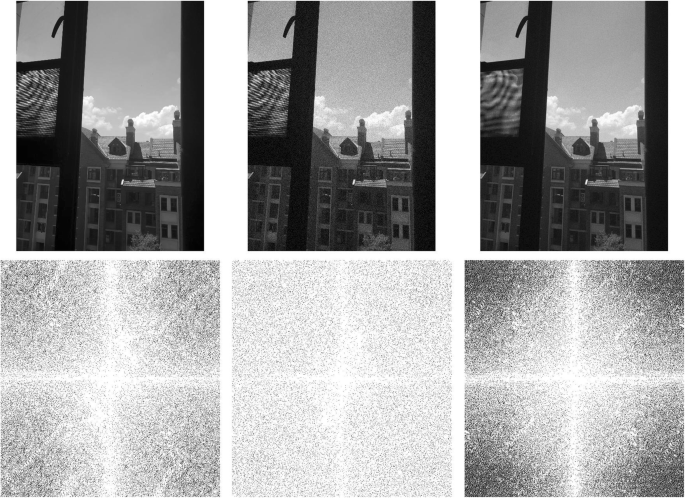

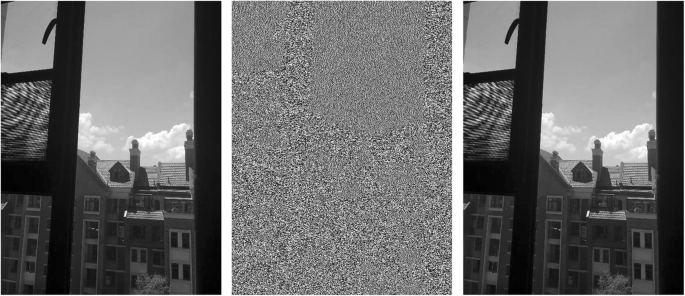

By introducing additional constraints, the light field representation collects radiance from rays in all directions. Therefore, it contains much richer information than traditional 2D imaging, which captures a 2D projection of the light in the scene by integrating over the angular domain. For instance, this allows post-capture processing such as refocusing and changing the viewpoint. However, it also entails several technical challenges, in terms of acquisition and calibration, as well as computational image processing steps including depth estimation, super-resolution, compression and image synthesis (Ihrke et al., 2016; Wu et al., 2017). The trade-off between spatial and angular resolutions is a fundamental issue. With a significant fraction of the earlier work focusing on static light fields, it is also expected that dynamic light field videos will stimulate more interest in the future. In particular, dense multi-camera arrays are becoming more tractable. Finally, the development of efficient light field compression and streaming techniques is a key enabler in many applications (Conti et al., 2020).
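To make post-capture refocusing concrete, the following is a minimal sketch of the classical shift-and-sum idea, assuming the light field is stored as a small set of sub-aperture views indexed by angular offsets. The function name `refocus`, the dictionary layout and the `slope` parameter are hypothetical choices for illustration, not an established API; integer shifts and border clamping are simplifications.

```python
def refocus(views, slope):
    """Shift-and-sum refocusing over sub-aperture views.

    views: dict mapping angular offsets (u, v) to 2D lists of equal size.
    slope: pixels of shift per unit of angular offset; it selects the
           depth plane that ends up in focus (illustrative parameter).
    """
    first = next(iter(views.values()))
    height, width = len(first), len(first[0])
    out = [[0.0] * width for _ in range(height)]
    for (u, v), img in views.items():
        dy, dx = round(slope * v), round(slope * u)
        for y in range(height):
            for x in range(width):
                # Clamp at the borders instead of wrapping around.
                sy = min(max(y + dy, 0), height - 1)
                sx = min(max(x + dx, 0), width - 1)
                out[y][x] += img[sy][sx]
    n = len(views)
    return [[value / n for value in row] for row in out]


# Toy light field: one bright point whose position moves by one pixel per view.
views = {}
for u in (-1, 0, 1):
    img = [[0.0] * 7]
    img[0][3 + u] = 1.0
    views[(u, 0)] = img

in_focus = refocus(views, slope=1)   # shifts align the point across views
out_of_focus = refocus(views, slope=0)  # plain averaging blurs the point
```

When the slope matches the disparity of the point, all views agree and the point stays sharp; with any other slope its energy is spread over neighboring pixels.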

Another promising direction is to consider a point cloud representation. A point cloud is a set of points in 3D space represented by their spatial coordinates and additional attributes, including color values, normals, or reflectance. Point clouds are often very large, easily ranging in the millions of points, and are typically sparse. One major distinguishing feature of point clouds is that, unlike images, they do not have a regular structure, calling for new algorithms. To remove the noise often present in acquired data, while preserving the intrinsic characteristics, effective 3D point cloud filtering approaches are needed (Han et al., 2017). It is also important to develop efficient techniques for Point Cloud Compression (PCC). For this purpose, MPEG is developing two standards: Geometry-based PCC (G-PCC) and Video-based PCC (V-PCC) (Graziosi et al., 2020). G-PCC considers the point cloud in its native form and compresses it using 3D data structures such as octrees. Conversely, V-PCC projects the point cloud onto 2D planes and then applies existing video coding schemes. More recently, deep learning-based approaches for PCC have been shown to be effective (Guarda et al., 2020). Another challenge is to develop generic and robust solutions able to handle potentially widely varying characteristics of point clouds, e.g., in terms of size and non-uniform density. Efficient solutions for dynamic point clouds are also needed. Finally, while many techniques focus on the geometric information or the attributes independently, it is paramount to process them jointly.
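The octree idea mentioned above can be illustrated with a small sketch that serializes point positions as occupancy bytes, one byte per occupied node, where each bit tells whether a child cube contains at least one point. This only illustrates the data structure: it is not the G-PCC codec, which adds context modeling, entropy coding and attribute coding. The function name `encode_octree` and its parameters are hypothetical.

```python
def encode_octree(points, origin=(0, 0, 0), size=8, min_size=1):
    """Serialize integer 3D points as depth-first octree occupancy bytes.

    points: list of (x, y, z) integer tuples inside the cube that starts
            at `origin` and has edge length `size` (a power of two).
    """
    if size == min_size or not points:
        return []
    half = size // 2
    children = [[] for _ in range(8)]
    for x, y, z in points:
        # One bit per axis selects the child cube containing the point.
        index = (
            (int(x - origin[0] >= half) << 2)
            | (int(y - origin[1] >= half) << 1)
            | int(z - origin[2] >= half)
        )
        children[index].append((x, y, z))
    occupancy = sum(1 << i for i, child in enumerate(children) if child)
    stream = [occupancy]
    for i, child in enumerate(children):
        if child:
            child_origin = (
                origin[0] + half * ((i >> 2) & 1),
                origin[1] + half * ((i >> 1) & 1),
                origin[2] + half * (i & 1),
            )
            stream += encode_octree(child, child_origin, half, min_size)
    return stream


# Two opposite corners of an 8x8x8 grid need only five occupancy bytes.
stream = encode_octree([(0, 0, 0), (7, 7, 7)])
```

Empty regions cost nothing beyond a zero bit in the parent byte, which is why such structures suit sparse, irregular point sets.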

High Dynamic Range and Wide Color Gamut

The human visual system is able to perceive, using various adaptation mechanisms, a broad range of luminous intensities, from very bright to very dark, as experienced every day in the real world. Nonetheless, current imaging technologies are still limited in terms of capturing or rendering such a wide range of conditions. High Dynamic Range (HDR) imaging aims at addressing this issue. Wide Color Gamut (WCG) is also often associated with HDR in order to provide a wider colorimetry.

HDR has reached a degree of maturity in the context of photography. However, extending HDR to video sequences raises scientific challenges in order to provide high quality and cost-effective solutions, impacting the whole image processing pipeline, including content acquisition, tone reproduction, color management, coding, and display (Dufaux et al., 2016; Chalmers and Debattista, 2017). Backward compatibility with legacy content and traditional systems is another issue. Despite recent progress, the potential of HDR has not been fully exploited yet.
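As one illustration of the tone reproduction step, the sketch below applies a simple global tone mapping curve of the form L / (1 + L), in the spirit of the well-known Reinhard global operator, to compress a wide range of luminance values into the displayable interval [0, 1). The choice of operator, the `key` value of 0.18 and the function name `tone_map` are assumptions for illustration; the article does not prescribe any particular operator, and HDR video additionally requires temporal consistency that is not handled here.

```python
import math


def tone_map(luminance, key=0.18):
    """Compress HDR luminance values into [0, 1) with a global curve."""
    flat = [v for row in luminance for v in row]
    # The log-average luminance summarizes the overall scene brightness.
    log_avg = math.exp(sum(math.log(v + 1e-6) for v in flat) / len(flat))
    mapped = []
    for row in luminance:
        scaled = [key * v / log_avg for v in row]
        # s / (1 + s) compresses highlights while keeping the order of values.
        mapped.append([s / (1.0 + s) for s in scaled])
    return mapped


# Six orders of magnitude of scene luminance in a single row.
hdr = [[0.01, 1.0, 100.0, 10000.0]]
ldr = tone_map(hdr)
```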

Coding and Transmission

Three decades of standardization activities have continuously improved the hybrid video coding scheme based on the principles of transform coding and predictive coding. The Versatile Video Coding (VVC) standard was finalized in 2020 (Bross et al., 2021), achieving approximately 50% bit rate reduction for the same subjective quality when compared to its predecessor, High Efficiency Video Coding (HEVC). While substantially outperforming VVC in the short term may be difficult, one encouraging direction is to rely on improved perceptual models to further optimize compression in terms of visual quality. Another direction, which has already shown promising results, is to apply deep learning-based approaches (Ding et al., 2021). Here, one key issue is the ability to generalize these deep models to a wide diversity of video content. The second key issue is the implementation complexity, both in terms of computation and memory requirements, which is a significant obstacle to widespread deployment. Besides, the emergence of new video formats targeting immersive communications is also calling for new coding schemes (Wien et al., 2019).
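The two principles behind the hybrid scheme can be sketched on a single row of eight samples: a prediction is subtracted, the residual is transformed with an orthonormal DCT, and the coefficients are quantized. This is a deliberately simplified illustration under stated assumptions: real codecs such as HEVC or VVC use 2D integer transforms, motion-compensated or intra prediction, rate-distortion optimization and entropy coding. The helper names and the quantization step are hypothetical.

```python
import math


def dct(block):
    """Orthonormal 1D DCT-II."""
    n = len(block)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * sum(
            x * math.cos(math.pi * (i + 0.5) * k / n)
            for i, x in enumerate(block)))
    return coeffs


def idct(coeffs):
    """Inverse of dct() (orthonormal 1D DCT-III)."""
    n = len(coeffs)
    samples = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
            total += scale * c * math.cos(math.pi * (i + 0.5) * k / n)
        samples.append(total)
    return samples


def code_block(block, prediction, step):
    """Predict, transform and quantize; return levels and reconstruction."""
    residual = [b - p for b, p in zip(block, prediction)]
    levels = [round(c / step) for c in dct(residual)]   # lossy step
    decoded_residual = idct([level * step for level in levels])
    reconstruction = [p + r for p, r in zip(prediction, decoded_residual)]
    return levels, reconstruction


block = [108] * 8        # samples to encode
prediction = [100] * 8   # e.g., taken from a previously decoded frame
levels, reconstruction = code_block(block, prediction, step=4)
```

A good prediction leaves a small, smooth residual, so after the transform only one coefficient is non-zero here, and that is the quantity an entropy coder would then have to represent.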

Considering that in many application scenarios, videos are processed by intelligent analytic algorithms rather than viewed by users, another interesting track is the development of video coding for machines ( Duan et al., 2020 ). In this context, the compression is optimized taking into account the performance of video analysis tasks.

The push toward hyper-realistic and immersive visual communications most often entails an increasing raw data rate. Despite improved compression schemes, more transmission bandwidth is needed. Moreover, some emerging applications, such as VR/AR, autonomous driving, and Industry 4.0, bring a strong requirement for low latency transmission, with implications for both the image processing pipeline and the transmission channel. In this context, the emergence of 5G wireless networks will positively contribute to the deployment of new multimedia applications, and the development of future wireless communication technologies points toward promising advances (Da Costa and Yang, 2020).

Human Perception and Visual Quality Assessment

It is important to develop effective models of human perception. On the one hand, it can contribute to the development of perceptually inspired algorithms. On the other hand, perceptual quality assessment methods are needed in order to optimize and validate new imaging solutions.

The notion of Quality of Experience (QoE) relates to the degree of delight or annoyance of the user of an application or service ( Le Callet et al., 2012 ). QoE is strongly linked to subjective and objective quality assessment methods. Many years of research have resulted in the successful development of perceptual visual quality metrics based on models of human perception ( Lin and Kuo, 2011 ; Bovik, 2013 ). More recently, deep learning-based approaches have also been successfully applied to this problem ( Bosse et al., 2017 ). While these perceptual quality metrics have achieved good performances, several significant challenges remain. First, when applied to video sequences, most current perceptual metrics are applied on individual images, neglecting temporal modeling. Second, whereas color is a key attribute, there are currently no widely accepted perceptual quality metrics explicitly considering color. Finally, new modalities, such as 360° videos, light fields, point clouds, and HDR, require new approaches.

Another closely related topic is image esthetic assessment (Deng et al., 2017). The esthetic quality of an image is affected by numerous factors, such as lighting, color, contrast, and composition. Esthetic assessment is useful in different application scenarios such as image retrieval and ranking, recommendation, and photo enhancement. While earlier attempts have used handcrafted features, most recent techniques to predict esthetic quality are data driven and based on deep learning approaches, leveraging the availability of large annotated datasets for training (Murray et al., 2012). One key challenge is the inherently subjective nature of esthetic assessment, resulting in ambiguity in the ground-truth labels. Another important issue is to explain the behavior of deep esthetic prediction models.

Analysis, Interpretation and Understanding

Another major research direction has been the objective to efficiently analyze, interpret and understand visual data. This goal is challenging, due to the high diversity and complexity of visual data. This has led to many research activities, involving both low-level and high-level analysis, addressing topics such as image classification and segmentation, optical flow, image indexing and retrieval, object detection and tracking, and scene interpretation and understanding. Hereafter, we discuss some trends and challenges.

Keypoints Detection and Local Descriptors

Local image matching has been the cornerstone of many analysis tasks. It involves the detection of keypoints, i.e., salient visual points that can be robustly and repeatedly detected, and the computation of descriptors, i.e., compact signatures locally describing the visual features at each keypoint. Pairwise matching between these features can subsequently be computed to reveal local correspondences. In this context, several frameworks have been proposed, including Scale Invariant Feature Transform (SIFT) (Lowe, 2004) and Speeded Up Robust Features (SURF) (Bay et al., 2008), and later binary variants including Binary Robust Independent Elementary Features (BRIEF) (Calonder et al., 2010), Oriented FAST and Rotated BRIEF (ORB) (Rublee et al., 2011) and Binary Robust Invariant Scalable Keypoints (BRISK) (Leutenegger et al., 2011). Although these approaches exhibit scale and rotation invariance, they are less suited to deal with large 3D distortions such as perspective deformations, out-of-plane rotations, and significant viewpoint changes. Besides, they tend to fail under significantly varying and challenging illumination conditions.
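The pairwise matching step is particularly simple for binary descriptors, which are compared with the Hamming distance. The sketch below assumes descriptors have already been extracted and are stored as Python integers (8 bits here for readability, whereas BRIEF, ORB and BRISK use much longer bit strings). It performs brute-force nearest-neighbor search with a mutual consistency check; the function names and the `max_distance` threshold are hypothetical.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match(desc_a, desc_b, max_distance=2):
    """Brute-force matching with cross-check between two descriptor sets."""
    matches = []
    for i, da in enumerate(desc_a):
        # Nearest neighbor of descriptor i in the second image.
        j = min(range(len(desc_b)), key=lambda k: hamming(da, desc_b[k]))
        # Cross-check: i must also be the nearest neighbor of j.
        back = min(range(len(desc_a)),
                   key=lambda k: hamming(desc_a[k], desc_b[j]))
        distance = hamming(da, desc_b[j])
        if back == i and distance <= max_distance:
            matches.append((i, j, distance))
    return matches


image_a = [0b10110010, 0b01001101, 0b11110000]
image_b = [0b01001111, 0b10110011, 0b00001111]
correspondences = match(image_a, image_b)
```

The third descriptor of the first image has no mutually consistent partner and is discarded, which is the kind of filtering needed before estimating geometry from the correspondences.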

These traditional approaches based on handcrafted features have been successfully applied to problems such as image and video retrieval, object detection, visual Simultaneous Localization And Mapping (SLAM), and visual odometry. Besides, the emergence of new imaging modalities as introduced above can also be beneficial for image analysis tasks, including light fields ( Galdi et al., 2019 ), point clouds ( Guo et al., 2020 ), and HDR ( Rana et al., 2018 ). However, when applied to high-dimensional visual data for semantic analysis and understanding, these approaches based on handcrafted features have been supplanted in recent years by approaches based on deep learning.

Deep Learning-Based Methods

Data-driven deep learning-based approaches ( LeCun et al., 2015 ), and in particular the Convolutional Neural Network (CNN) architecture, represent nowadays the state-of-the-art in terms of performances for complex pattern recognition tasks in scene analysis and understanding. By combining multiple processing layers, deep models are able to learn data representations with different levels of abstraction.

Supervised learning is the most common form of deep learning. It requires a large and fully labeled training dataset, a typically time-consuming and expensive process needed whenever tackling a new application scenario. Moreover, in some specialized domains, e.g. medical data, it can be very difficult to obtain annotations. To alleviate this major burden, methods such as transfer learning and weakly supervised learning have been proposed.

In another direction, deep models have been shown to be vulnerable to adversarial attacks (Akhtar and Mian, 2018). Those attacks consist of introducing subtle perturbations to the input, such that the model predicts an incorrect output. For instance, in the case of images, imperceptible pixel differences are able to fool deep learning models. Such adversarial attacks are definitely an important obstacle to the successful deployment of deep learning, especially in applications where safety and security are critical. While some early solutions have been proposed, a significant challenge is to develop effective defense mechanisms against those attacks.
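To show how a small, bounded perturbation can change a prediction, the sketch below applies the fast gradient sign method (FGSM), a well-known attack that is used here as an example and is not discussed in the text above. For brevity the "model" is a hand-written logistic classifier rather than a deep network, so the input gradient can be computed in closed form; the weights, input and `epsilon` are made-up values.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic model."""
    z = sum(w * v for w, v in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(weights, bias, x, label, epsilon):
    """Move each input component by epsilon in the direction that
    increases the cross-entropy loss for the true label."""
    p = predict(weights, bias, x)
    # Gradient of the cross-entropy loss with respect to the input.
    grad = [(p - label) * w for w in weights]
    return [v + epsilon * ((g > 0) - (g < 0)) for v, g in zip(x, grad)]


weights, bias = [1.0, -2.0, 0.5], 0.0
x = [0.2, -0.1, 0.2]                      # correctly classified as class 1
x_adv = fgsm(weights, bias, x, label=1, epsilon=0.2)

clean_score = predict(weights, bias, x)
adversarial_score = predict(weights, bias, x_adv)
```

No component moves by more than `epsilon`, yet the score crosses the 0.5 decision threshold. In high-dimensional images the same mechanism works with far smaller per-pixel changes.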

Finally, another challenge is to enable low complexity and efficient implementations. This is especially important for mobile or embedded applications. For this purpose, further interactions between signal processing and machine learning can potentially bring additional benefits. For instance, one direction is to compress deep neural networks in order to enable their more efficient handling. Moreover, by combining traditional processing techniques with deep learning models, it is possible to develop low complexity solutions while preserving high performance.

Explainability in Deep Learning

While data-driven deep learning models often achieve impressive performances on many visual analysis tasks, their black-box nature often makes it inherently very difficult to understand how they reach a predicted output and how it relates to particular characteristics of the input data. However, this is a major impediment in many decision-critical application scenarios. Moreover, it is important not only to have confidence in the proposed solution, but also to gain further insights from it. Based on these considerations, some deep learning systems aim at promoting explainability ( Adadi and Berrada, 2018 ; Xie et al., 2020 ). This can be achieved by exhibiting traits related to confidence, trust, safety, and ethics.

However, explainable deep learning is still in its early phase. More developments are needed, in particular to develop a systematic theory of model explanation. Important aspects include the need to understand and quantify risk, to comprehend how the model makes predictions for transparency and trustworthiness, and to quantify the uncertainty in the model prediction. This challenge is key in order to deploy and use deep learning-based solutions in an accountable way, for instance in application domains such as healthcare or autonomous driving.

Self-Supervised Learning

Self-supervised learning refers to methods that learn general visual features from large-scale unlabeled data, without the need for manual annotations. Self-supervised learning is therefore very appealing, as it allows exploiting the vast amount of unlabeled images and videos available. Moreover, it is widely believed that it is closer to how humans actually learn. One common approach is to use the data to provide the supervision, leveraging its structure. More generally, a pretext task can be defined, e.g. image inpainting, colorizing grayscale images, predicting future frames in videos, by withholding some parts of the data and by training the neural network to predict it ( Jing and Tian, 2020 ). By learning an objective function corresponding to the pretext task, the network is forced to learn relevant visual features in order to solve the problem. Self-supervised learning has also been successfully applied to autonomous vehicles perception. More specifically, the complementarity between analytical and learning methods can be exploited to address various autonomous driving perception tasks, without the prerequisite of an annotated data set ( Chiaroni et al., 2021 ).
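The pretext-task idea of withholding part of the data can be sketched for image inpainting: a training pair is produced from an unlabeled image alone by masking a random patch and keeping the hidden pixels as the prediction target. The function name `make_inpainting_pair`, the zero fill value and the square patch are assumptions for illustration; the network and training loop are omitted.

```python
import random


def make_inpainting_pair(image, patch, rng):
    """Build (masked input, target patch, patch position) from one image.

    image: 2D list of pixel values; patch: edge length of the hidden square;
    rng: a random.Random instance, passed in for reproducibility.
    """
    height, width = len(image), len(image[0])
    top = rng.randrange(height - patch + 1)
    left = rng.randrange(width - patch + 1)
    # The withheld pixels become the supervision signal.
    target = [row[left:left + patch] for row in image[top:top + patch]]
    masked = [row[:] for row in image]  # copy so the original stays intact
    for y in range(top, top + patch):
        for x in range(left, left + patch):
            masked[y][x] = 0
    return masked, target, (top, left)


image = [[y * 4 + x + 1 for x in range(4)] for y in range(4)]
masked, target, (top, left) = make_inpainting_pair(image, 2, random.Random(0))
```

No human annotation is involved: the label is cut out of the data itself, so arbitrarily many such pairs can be generated from an unlabeled collection.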

While good performance has already been obtained using self-supervised learning, further work is still needed. A few promising directions are outlined hereafter. Combining self-supervised learning with other learning methods is a first interesting path. For instance, semi-supervised learning (Van Engelen and Hoos, 2020) and few-shot learning (Fei-Fei et al., 2006) methods have been proposed for scenarios where limited labeled data is available. The performance of these methods can potentially be boosted by incorporating self-supervised pre-training. The pretext task can also serve to add regularization. Another interesting trend in self-supervised learning is to train neural networks with synthetic data. The challenge here is to bridge the domain gap between the synthetic and real data. Finally, another compelling direction is to exploit data from different modalities. A simple example is to consider both the video and audio signals in a video sequence. In another example in the context of autonomous driving, vehicles are typically equipped with multiple sensors, including cameras, LIght Detection And Ranging (LIDAR), Global Positioning System (GPS), and Inertial Measurement Units (IMU). In such cases, it is easy to acquire large unlabeled multimodal datasets, where the different modalities can be effectively exploited in self-supervised learning methods.

Reproducible Research and Large Public Datasets

The reproducible research initiative is another way to further ensure high-quality research for the benefit of our community ( Vandewalle et al., 2009 ). Reproducibility, referring to the ability by someone else working independently to accurately reproduce the results of an experiment, is a key principle of the scientific method. In the context of image and video processing, it is usually not sufficient to provide a detailed description of the proposed algorithm. Most often, it is essential to also provide access to the code and data. This is even more imperative in the case of deep learning-based models.

In parallel, the availability of large public datasets is also highly desirable in order to support research activities. This is especially critical for new emerging modalities or specific application scenarios, where it is difficult to get access to relevant data. Moreover, with the emergence of deep learning, large datasets, along with labels, are often needed for training, which can be another burden.

Conclusion and Perspectives

The field of image processing is very broad and rich, with many successful applications in both the consumer and business markets. However, many technical challenges remain in order to further push the limits in imaging technologies. Two main trends are, on the one hand, to keep improving the quality and realism of image and video content, and on the other hand, to be able to effectively interpret and understand this vast and complex amount of visual data. However, the list is certainly not exhaustive and there are many other interesting problems, e.g., related to computational imaging, information security and forensics, or medical imaging. Key innovations will be found at the crossroads of image processing, optics, psychophysics, communication, computer vision, artificial intelligence, and computer graphics. Multi-disciplinary collaborations are therefore critical moving forward, involving actors from both academia and industry, in order to drive these breakthroughs.

The “Image Processing” section of Frontiers in Signal Processing aims at giving the research community a forum to exchange, discuss and improve new ideas, with the goal of contributing to the further advancement of the field of image processing and bringing exciting innovations in the foreseeable future.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

1 https://www.brandwatch.com/blog/amazing-social-media-statistics-and-facts/ (accessed on Feb. 23, 2021).

Adadi, A., and Berrada, M. (2018). Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE access 6, 52138–52160. doi:10.1109/access.2018.2870052

Adelson, E. H., and Bergen, J. R. (1991). “The plenoptic function and the elements of early vision,” in Computational models of visual processing. Cambridge, MA: MIT Press, 3–20.

Akhtar, N., and Mian, A. (2018). Threat of adversarial attacks on deep learning in computer vision: a survey. IEEE Access 6, 14410–14430. doi:10.1109/access.2018.2807385

Bay, H., Ess, A., Tuytelaars, T., and Van Gool, L. (2008). Speeded-up robust features (SURF). Computer Vis. image understanding 110 (3), 346–359. doi:10.1016/j.cviu.2007.09.014

Bosse, S., Maniry, D., Müller, K. R., Wiegand, T., and Samek, W. (2017). Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans. Image Process. 27 (1), 206–219. doi:10.1109/TIP.2017.2760518

Bovik, A. C. (2013). Automatic prediction of perceptual image and video quality. Proc. IEEE 101 (9), 2008–2024. doi:10.1109/JPROC.2013.2257632

Bross, B., Chen, J., Ohm, J. R., Sullivan, G. J., and Wang, Y. K. (2021). Developments in international video coding standardization after AVC, with an overview of Versatile Video Coding (VVC). Proc. IEEE . doi:10.1109/JPROC.2020.3043399

Calonder, M., Lepetit, V., Strecha, C., and Fua, P. (2010). Brief: binary robust independent elementary features. In K. Daniilidis, P. Maragos, and N. Paragios (eds) European conference on computer vision . Berlin, Heidelberg: Springer , 778–792. doi:10.1007/978-3-642-15561-1_56

Chalmers, A., and Debattista, K. (2017). HDR video past, present and future: a perspective. Signal. Processing: Image Commun. 54, 49–55. doi:10.1016/j.image.2017.02.003

Chiaroni, F., Rahal, M.-C., Hueber, N., and Dufaux, F. (2021). Self-supervised learning for autonomous vehicles perception: a conciliation between analytical and learning methods. IEEE Signal. Process. Mag. 38 (1), 31–41. doi:10.1109/msp.2020.2977269

Cisco (2019). Cisco visual networking index: forecast and trends, 2017-2022 (white paper). Indianapolis, Indiana: Cisco Press.

Conti, C., Soares, L. D., and Nunes, P. (2020). Dense light field coding: a survey. IEEE Access 8, 49244–49284. doi:10.1109/ACCESS.2020.2977767

Da Costa, D. B., and Yang, H.-C. (2020). Grand challenges in wireless communications. Front. Commun. Networks 1 (1), 1–5. doi:10.3389/frcmn.2020.00001

Deng, Y., Loy, C. C., and Tang, X. (2017). Image aesthetic assessment: an experimental survey. IEEE Signal. Process. Mag. 34 (4), 80–106. doi:10.1109/msp.2017.2696576

Ding, D., Ma, Z., Chen, D., Chen, Q., Liu, Z., and Zhu, F. (2021). Advances in video compression system using deep neural network: a review and case studies . Ithaca, NY: Cornell university .

Duan, L., Liu, J., Yang, W., Huang, T., and Gao, W. (2020). Video coding for machines: a paradigm of collaborative compression and intelligent analytics. IEEE Trans. Image Process. 29, 8680–8695. doi:10.1109/tip.2020.3016485

Dufaux, F., Le Callet, P., Mantiuk, R., and Mrak, M. (2016). High dynamic range video - from acquisition, to display and applications . Cambridge, Massachusetts: Academic Press .

Dufaux, F., Pesquet-Popescu, B., and Cagnazzo, M. (2013). Emerging technologies for 3D video: creation, coding, transmission and rendering . Hoboken, NJ: Wiley .

Fei-Fei, L., Fergus, R., and Perona, P. (2006). One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach Intell. 28 (4), 594–611. doi:10.1109/TPAMI.2006.79

Galdi, C., Chiesa, V., Busch, C., Lobato Correia, P., Dugelay, J.-L., and Guillemot, C. (2019). Light fields for face analysis. Sensors 19 (12), 2687. doi:10.3390/s19122687

Graziosi, D., Nakagami, O., Kuma, S., Zaghetto, A., Suzuki, T., and Tabatabai, A. (2020). An overview of ongoing point cloud compression standardization activities: video-based (V-PCC) and geometry-based (G-PCC). APSIPA Trans. Signal Inf. Process. 9, 2020. doi:10.1017/ATSIP.2020.12

Guarda, A., Rodrigues, N., and Pereira, F. (2020). Adaptive deep learning-based point cloud geometry coding. IEEE J. Selected Top. Signal Process. 15, 415-430. doi:10.1109/mmsp48831.2020.9287060

Guo, Y., Wang, H., Hu, Q., Liu, H., Liu, L., and Bennamoun, M. (2020). Deep learning for 3D point clouds: a survey. IEEE transactions on pattern analysis and machine intelligence . doi:10.1109/TPAMI.2020.3005434

Han, X.-F., Jin, J. S., Wang, M.-J., Jiang, W., Gao, L., and Xiao, L. (2017). A review of algorithms for filtering the 3D point cloud. Signal. Processing: Image Commun. 57, 103–112. doi:10.1016/j.image.2017.05.009

Haskell, B. G., Puri, A., and Netravali, A. N. (1996). Digital video: an introduction to MPEG-2 . Berlin, Germany: Springer Science and Business Media .

Hirsch, R. (1999). Seizing the light: a history of photography . New York, NY: McGraw-Hill .

Ihrke, I., Restrepo, J., and Mignard-Debise, L. (2016). Principles of light field imaging: briefly revisiting 25 years of research. IEEE Signal. Process. Mag. 33 (5), 59–69. doi:10.1109/MSP.2016.2582220

Jing, L., and Tian, Y. (2020). “Self-supervised visual feature learning with deep neural networks: a survey,” IEEE transactions on pattern analysis and machine intelligence , Ithaca, NY: Cornell University .

Le Callet, P., Möller, S., and Perkis, A. (2012). Qualinet white paper on definitions of quality of experience. European network on quality of experience in multimedia systems and services (COST Action IC 1003), 3(2012) .

Le Gall, D. (1991). Mpeg: A Video Compression Standard for Multimedia Applications. Commun. ACM 34, 46–58. doi:10.1145/103085.103090

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. nature 521 (7553), 436–444. doi:10.1038/nature14539

Leutenegger, S., Chli, M., and Siegwart, R. Y. (2011). “BRISK: binary robust invariant scalable keypoints,” IEEE International conference on computer vision , Barcelona, Spain , 6-13 Nov, 2011 ( IEEE ), 2548–2555.

Lin, W., and Jay Kuo, C.-C. (2011). Perceptual visual quality metrics: a survey. J. Vis. Commun. image representation 22 (4), 297–312. doi:10.1016/j.jvcir.2011.01.005

Lowe, D. G. (2004). Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60 (2), 91–110. doi:10.1023/b:visi.0000029664.99615.94

Lumiere, L. (1996). 1936 the Lumière cinematograph. J. SMPTE 105 (10), 608–611. doi:10.5594/j17187

Masia, B., Wetzstein, G., Didyk, P., and Gutierrez, D. (2013). A survey on computational displays: pushing the boundaries of optics, computation, and perception. Comput. & Graphics 37 (8), 1012–1038. doi:10.1016/j.cag.2013.10.003

Murray, N., Marchesotti, L., and Perronnin, F. (2012). “AVA: a large-scale database for aesthetic visual analysis,” IEEE conference on computer vision and pattern recognition , Providence, RI , June, 2012 . ( IEEE ), 2408–2415. doi:10.1109/CVPR.2012.6247954

Rana, A., Valenzise, G., and Dufaux, F. (2018). Learning-based tone mapping operator for efficient image matching. IEEE Trans. Multimedia 21 (1), 256–268. doi:10.1109/TMM.2018.2839885

Rublee, E., Rabaud, V., Konolige, K., and Bradski, G. (2011). “ORB: an efficient alternative to SIFT or SURF,” IEEE International conference on computer vision , Barcelona, Spain , November, 2011 ( IEEE ), 2564–2571. doi:10.1109/ICCV.2011.6126544

Slater, M. (2014). Grand challenges in virtual environments. Front. Robotics AI 1, 3. doi:10.3389/frobt.2014.00003

Van Engelen, J. E., and Hoos, H. H. (2020). A survey on semi-supervised learning. Mach. Learn. 109 (2), 373–440. doi:10.1007/s10994-019-05855-6

Vandewalle, P., Kovacevic, J., and Vetterli, M. (2009). Reproducible research in signal processing. IEEE Signal. Process. Mag. 26 (3), 37–47. doi:10.1109/msp.2009.932122

Wallace, G. K. (1992). The JPEG still picture compression standard. IEEE Trans. Consumer Electron. 38 (1), xviii-xxxiv. doi:10.1109/30.125072

Wien, M., Boyce, J. M., Stockhammer, T., and Peng, W.-H. (2019). Standardization status of immersive video coding. IEEE J. Emerg. Sel. Top. Circuits Syst. 9 (1), 5–17. doi:10.1109/JETCAS.2019.2898948

Wu, G., Masia, B., Jarabo, A., Zhang, Y., Wang, L., Dai, Q., et al. (2017). Light field image processing: an overview. IEEE J. Sel. Top. Signal. Process. 11 (7), 926–954. doi:10.1109/JSTSP.2017.2747126

Xie, N., Ras, G., van Gerven, M., and Doran, D. (2020). Explainable deep learning: a field guide for the uninitiated. Ithaca, NY: Cornell University.

Keywords: image processing, immersive, image analysis, image understanding, deep learning, video processing

Citation: Dufaux F (2021) Grand Challenges in Image Processing. Front. Sig. Proc. 1:675547. doi: 10.3389/frsip.2021.675547

Received: 03 March 2021; Accepted: 10 March 2021; Published: 12 April 2021.

Copyright © 2021 Dufaux. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frédéric Dufaux, [email protected]

digital image processing Recently Published Documents

Developing Digital Photomicroscopy

(1) The need for efficient ways of recording and presenting multicolour immunohistochemistry images in a pioneering laboratory developing new techniques motivated a move away from photography to electronic and ultimately digital photomicroscopy. (2) Initially broadcast quality analogue cameras were used in the absence of practical digital cameras. This allowed the development of digital image processing, storage and presentation. (3) As early adopters of digital cameras, their advantages and limitations were recognised in implementation. (4) The adoption of immunofluorescence for multiprobe detection prompted further developments, particularly a critical approach to probe colocalization. (5) Subsequently, whole-slide scanning was implemented, greatly enhancing histology for diagnosis, research and teaching.

Parallel Algorithm of Digital Image Processing Based on GPU

Quantitative identification cracks of heritage rock based on digital image technology.

Abstract Digital image processing technologies are used to extract and evaluate the cracks of heritage rock in this paper. Firstly, the image needs to go through a series of image preprocessing operations such as graying, enhancement, filtering and binaryzation to filter out a large part of the noise. Then, in order to achieve the requirements of accurately extracting the crack area, the image is again divided into the crack area and morphological filtering. After evaluation, the obtained fracture area can provide data support for the restoration and protection of heritage rock. In this paper, the cracks of heritage rock are extracted in three different locations.The results show that the three groups of rock fractures have different effects on the rocks, but they all need to be repaired to maintain the appearance of the heritage rock.

Determination of Optical Rotation Based on Liquid Crystal Polymer Vortex Retarder and Digital Image Processing

Discussion on curriculum reform of digital image processing under the certification of engineering education

Influence and application of digital image processing technology on oil painting creation in the era of big data

Geometric correction analysis of highly distortion of near equatorial satellite images using remote sensing and digital image processing techniques

Color enhancement of low illumination garden landscape images

The unfavorable shooting environment severely hinders the acquisition of actual landscape information in garden landscape design. Low quality, low illumination garden landscape images (GLIs) can be enhanced through advanced digital image processing. However, the current color enhancement models have poor applicability. When the environment changes, these models are easy to lose image details, and perform with a low robustness. Therefore, this paper tries to enhance the color of low illumination GLIs. Specifically, the color restoration of GLIs was realized based on modified dynamic threshold. After color correction, the low illumination GLI were restored and enhanced by a self-designed convolutional neural network (CNN). In this way, the authors achieved ideal effects of color restoration and clarity enhancement, while solving the difficulty of manual feature design in landscape design renderings. Finally, experiments were carried out to verify the feasibility and effectiveness of the proposed image color enhancement approach.

Discovery of EDA-Complex Photocatalyzed Reactions Using Multidimensional Image Processing: Iminophosphorane Synthesis as a Case Study

Abstract Herein, we report a multidimensional screening strategy for the discovery of EDA-complex photocatalyzed reactions using only photographic devices (webcam, cellphone) and TLC analysis. An algorithm was designed to identify automatically EDA-complex reactive mixtures in solution from digital image processing in a 96-wells microplate and by TLC-analysis. The code highlights the region of absorption of the mixture in the visible spectrum, and the quantity of the color change through grayscale values. Furthermore, the code identifies automatically the blurs on the TLC plate and classifies the mixture as colorimetric reactions, non-reactive or potentially reactive EDA mixtures. This strategy allowed us to discover and then optimize a new EDA-mediated approach for obtaining iminophosphoranes in up to 90% yield.

Mangosteen Quality Grading for Export Markets Using Digital Image Processing Techniques

Image processing articles from across Nature Portfolio

Image processing is manipulation of an image that has been digitised and uploaded into a computer. Software programs modify the image to make it more useful, and can for example be used to enable image recognition.

Creating a universal cell segmentation algorithm

Cell segmentation currently involves the use of various bespoke algorithms designed for specific cell types, tissues, staining methods and microscopy technologies. We present a universal algorithm that can segment all kinds of microscopy images and cell types across diverse imaging protocols.

Latest Research and Reviews

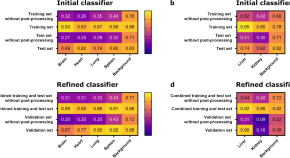

Identification of CT radiomic features robust to acquisition and segmentation variations for improved prediction of radiotherapy-treated lung cancer patient recurrence

- Thomas Louis

- François Lucia

- Roland Hustinx

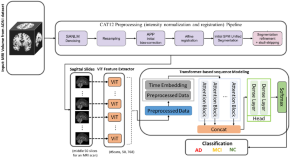

Joint transformer architecture in brain 3D MRI classification: its application in Alzheimer’s disease classification

- Taymaz Akan

- Mohammad A. N. Bhuiyan

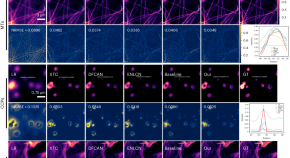

Automated quantification of avian influenza virus antigen in different organs

- Maria Landmann

- David Scheibner

- Reiner Ulrich

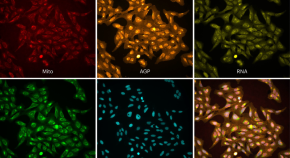

Microenvironmental reorganization in brain tumors following radiotherapy and recurrence revealed by hyperplexed immunofluorescence imaging

Improved imaging techniques are required to help advance our understanding of the complex role of the tumour microenvironment (TME). Here, the authors develop a high-throughput, highly multiplexed tissue visualisation workflow and demonstrate its utility by characterising the response of the TME to radiotherapy in preclinical models of glioblastoma.

- Spencer S. Watson

- Johanna A. Joyce

Pretraining a foundation model for generalizable fluorescence microscopy-based image restoration

A pretrained foundation model (UniFMIR) enables versatile and generalizable performance across diverse fluorescence microscopy image reconstruction tasks.

Three million images and morphological profiles of cells treated with matched chemical and genetic perturbations

The CPJUMP1 Resource comprises Cell Painting images and profiles of 75 million cells treated with hundreds of chemical and genetic perturbations. The dataset enables exploration of their relationships and lays the foundation for the development of advanced methods to match perturbations.

- Srinivas Niranj Chandrasekaran

- Beth A. Cimini

- Anne E. Carpenter

News and Comment

Where imaging and metrics meet.

When it comes to bioimaging and image analysis, details matter. Papers in this issue offer guidance for improved robustness and reproducibility.

EfficientBioAI: making bioimaging AI models efficient in energy and latency

- Jianxu Chen

JDLL: a library to run deep learning models on Java bioimage informatics platforms

- Carlos García López de Haro

- Stéphane Dallongeville

- Jean-Christophe Olivo-Marin

Moving towards a generalized denoising network for microscopy

The visualization and analysis of biological events using fluorescence microscopy is limited by the noise inherent in the images obtained. Now, a self-supervised spatial redundancy denoising transformer is proposed to address this challenge.

- Lachlan Whitehead

Imaging across scales

New twists on established methods and multimodal imaging are poised to bridge gaps between cellular and organismal imaging.

- Rita Strack

- PMC10607786

Developments in Image Processing Using Deep Learning and Reinforcement Learning

Jorge Valente

1 Techframe-Information Systems, SA, 2785-338 São Domingos de Rana, Portugal; [email protected] (J.V.); [email protected] (J.A.)

João António

Carlos Mora

2 Smart Cities Research Center, Polytechnic Institute of Tomar, 2300-313 Tomar, Portugal; [email protected]

Sandra Jardim

Associated Data

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to company privacy matters; however, all data contained in the dataset mentioned in the manuscript is publicly available.

The growth in the volume of data generated, consumed, and stored, which is estimated to exceed 180 zettabytes in 2025, represents a major challenge both for organizations and for society in general. In addition to being larger, datasets are increasingly complex, bringing new theoretical and computational challenges. Alongside this evolution, data science tools have exploded in popularity over the past two decades due to their myriad of applications when dealing with complex data, their high accuracy, flexible customization, and excellent adaptability. When it comes to images, data analysis presents additional challenges because as the quality of an image increases, which is desirable, so does the volume of data to be processed. Although classic machine learning (ML) techniques are still widely used in different research fields and industries, there has been great interest from the scientific community in the development of new artificial intelligence (AI) techniques. The resurgence of neural networks has boosted remarkable advances in areas such as the understanding and processing of images. In this study, we conducted a comprehensive survey regarding advances in AI design and the optimization solutions proposed to deal with image processing challenges. Despite the good results that have been achieved, there are still many challenges to face in this field of study. In this work, we discuss the main and more recent improvements, applications, and developments when targeting image processing applications, and we propose future research directions in this field of constant and fast evolution.

1. Introduction

Images constitute one of the most important forms of communication used by society and contain a large amount of important information. The human vision system is usually the first form of contact with media and has the ability to naturally extract important, and sometimes subtle, information, enabling the execution of different tasks, from the simplest, such as identifying objects, to the more complex, such as the creation and integration of knowledge. However, this system is limited to the visible range of the electromagnetic spectrum. On the contrary, computer systems have a more comprehensive coverage capacity, ranging from gamma to radio waves, which makes it possible to process a wide spectrum of images, covering a wide and varied field of applications. On the other hand, the exponential growth in the volume of images created and stored daily makes their analysis and processing a difficult task to implement outside the technological sphere. In this way, image processing through computational systems plays a fundamental role in extracting necessary and relevant information for carrying out different tasks in different contexts and application areas.

Image processing originated in 1964 with the processing of images of the lunar surface. Put simply, image processing is an area of signal processing dedicated to the development of computational techniques for the analysis, improvement, compression, restoration, and extraction of information from digital images. With a wide range of applications, image processing has been a subject of great interest both from the scientific community and from industry. This interest, combined with the technological evolution of computer systems and the need for systems with ever better performance, both in terms of precision and reliability and in terms of processing speed, has enabled a great evolution of image processing techniques, moving from the use of nonlearning-based methods to the application of machine learning techniques.

Having emerged in the mid-twentieth century, machine learning (ML) is a subset of artificial intelligence (AI), a field of computer science that focuses on designing machines and computational solutions capable of executing, ideally automatically, tasks that include, among others, natural language understanding, speech understanding, and image recognition [ 1 ]. In providing new ways to design AI models [ 2 ], ML, like other scientific computing applications, commonly uses linear algebra operations on multidimensional arrays, which are computational data structures for representing vectors, matrices, and tensors of a higher order. ML is a data analysis method that automates the construction of analytical models and computer algorithms, which are used on a large range of data types [ 1 ] and are particularly useful for analyzing data and establishing potential patterns to try and predict new information [ 3 ]. This suite of techniques has exploded in use and as a topic of research over the past decade, to the point where almost everyone interacts with modern AI models many times every day [ 4 ].
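As a rough illustration of the multidimensional arrays mentioned above, the following sketch represents a batch of images as a fourth-order tensor and applies two linear algebra operations to it. The array sizes, the random data, the function names `to_grayscale` and `linear_scores`, and the choice of NumPy are all assumptions made for illustration; none of them come from the reviewed works.

```python
import numpy as np

# Hypothetical batch of 8 RGB images of 32x32 pixels: a 4th-order tensor
# with axes (batch, height, width, channel). Values are random placeholders.
rng = np.random.default_rng(0)
images = rng.random((8, 32, 32, 3))


def to_grayscale(batch):
    """Contract the channel axis with fixed luminance weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return batch @ weights  # result has shape (batch, height, width)


def linear_scores(batch, weight_matrix, bias):
    """Flatten each image to a vector and apply one matrix multiplication."""
    flat = batch.reshape(batch.shape[0], -1)  # (batch, height*width*channels)
    return flat @ weight_matrix + bias


gray = to_grayscale(images)
W = rng.normal(size=(32 * 32 * 3, 10))  # illustrative weights for 10 classes
b = np.zeros(10)
scores = linear_scores(images, W, b)
print(gray.shape, scores.shape)
```

Both steps reduce to products between arrays, which is the kind of operation that array libraries and GPUs are designed to execute efficiently.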

AI, in particular ML, has revolutionized many areas of technology. One of the areas where the impact of such techniques is noticeable is image processing. The advancement of algorithms and computational capabilities has driven and enabled the performance of complex tasks in the field of image processing, such as facial recognition, object detection and classification, generation of synthetic images, semantic segmentation, image restoration, and image retrieval. The application of ML techniques in image processing brings a set of benefits that impact different sectors of society. This technology has the potential to optimize processes, improve the accuracy of data analysis, and provide new possibilities in different areas. With ML techniques, it is possible to analyze and interpret images with high precision. The advances that have been made in the use of neural networks have made it possible to identify objects, recognize patterns, and carry out complex analyses on images with a high accuracy rate. Pursuing ever-increasing precision is essential in areas such as medicine, where accurate diagnosis can make a difference in patients’ lives.

By applying ML techniques and models to image processing, it is possible to automate tasks that were previously performed manually. One example is quality control in production lines, where ML allows defects in products to be identified quickly and accurately, eliminating the need for human inspection. This leads to an increase in process efficiency, as well as to a reduction in costs and in errors inherent to the human factor.

The recognized ability of ML models to extract valuable information from images enables advanced analysis in several areas, namely public safety, where facial recognition algorithms can be used to identify individuals, and scientific research, such as the inspection of astronomical images, the classification of tissues or tumor cells, and the detection of patterns in large volumes of data.

With so much new research and proposed approaches being published with high frequency, it is a daunting task to keep up with current trends and new research topics, especially if they occur in a research field one is not familiar with. For this purpose, we propose to explore and review publications discussing the new techniques available and the current challenges and point out some of the possible directions for the future. We believe this research can prove helpful for future researchers and provide a modern vision of this fascinating and vast research subject.

On the other hand, and as far as it was possible to verify from the analysis of works published in recent years, there is a lack of studies that highlight machine learning techniques applied to image processing in different areas. There are several works that focus on reviewing the work that has been developed in a given area, and the one that seems to arouse the most interest is the area of medical imaging [ 5 , 6 , 7 , 8 , 9 ]. Therefore, this paper also contributes to presenting an analysis and discussion of ML techniques in a broad context of application.

This document is divided into sections, subsections, and details. Section 2 (Methodology) describes the research methodology used to carry out this review manuscript. Section 3 (Technical Background) presents an overview of the AI models most used in image processing. Section 4 (Image Processing Developments) describes related work and different state-of-the-art approaches used by researchers to solve modern-day challenges. Section 5 (Discussion and Future Directions) presents the main challenges and limitations that still exist in the area covered by this manuscript, pointing out some possible directions for the evolution of the models proposed to date. Finally, Section 6 provides a brief concluding remark with the main conclusions that can be taken from our study.

2. Methodology

In order to carry out this review, we considered a vast number of scientific publications in the scope of ML, particularly those involving image processing methods using DL and RL techniques and applied to real-world problems.

2.1. Search Process and Sources of Information

To guarantee the reliability of the documents, the information sources were validated, and only reputable journals and university repositories were considered. From the selected sources, we attempted to include research from multiple areas and topics to provide a general and detailed representation of the ways image processing research has developed and can be used. Nevertheless, some areas appear to have developed a greater interest in some of the ML methods previously described. The search process involved using a selection of keywords that are closely related to image processing on popular scientific search engines such as Springer, Science Direct, and Core. These search engines were selected since they allowed us to make comparable searches, targeting specific terms and filtering results by research area. In order to cover a broad range of topics and journals, the only search filter that we used was chosen to ensure that the subjects were related to data science and/or artificial intelligence.

As of February 2023, a search using the prompt “image processing AI” returns manuscripts related mostly to “Medicine”, “Computer science”, and “Engineering”. In fact, while searching in the three different research aggregators, the results stayed somewhat consistent. A summary of the results obtained can be observed in Figure 1 .

Figure 1. Main research areas for the tested search inputs for three different academic engines.

Since there is more research available on some topics, the cases described ahead can also have a higher prevalence when compared to others.

2.2. Inclusion and Exclusion Criteria for Article Selection

The research carried out in the different repositories resulted in a large number of research works proposed by different authors. By considering the constant advances made in this subject and the amount of research developed, we opted to mainly focus on research developed in the last 5 years. We analyzed and selected the research sources that provided novel and/or interesting applications of ML in image processing. The objective was to present a broad representation of the recent trends in ML research and provide the information in a more concise form.

3. Technical Background

The growing use of the internet in general, and social networks in particular, has led to a large increase in the availability of digital images, which are a privileged means of expressing emotions and sharing information and which enable many diverse applications [ 10 ]. Identifying the interesting parts of a scene is a fundamental step for recognizing and interpreting an image [ 11 ]. To explain how the different techniques are applied in processing images and extracting their features, and to introduce the main concepts and technicalities of the different types of AI models, we provide a general technical background review of machine learning and image processing. This review gives relevant context for the scope of this work and guides the reader through the covered topics.

3.1. Graphics Processing Units

Many advances covered in this paper, along with classical ML and scalable general-purpose graphics processing unit (GPU) computing, have become critical components of AI [ 1 , 10 ], enabling the processing of massive amounts of data generated each day and lowering the barrier to adoption [ 1 ]. In particular, the usage of GPUs revolutionized the landscape of classical ML and DL models. From the 1990s to the late 2000s, ML research was predominantly focused on SVM, which was considered state-of-the-art [ 1 ]. In the following decade, starting in 2010, GPUs brought new life into the field of DL, jumpstarting a high amount of research and development [ 1 ]. State-of-the-art DL algorithms tend to have higher computational complexity, requiring several iterations to make the parameters converge to an optimal value [ 12 , 13 ]. However, the relevance of DL has only become greater over the years, as this technology has gradually become one of the main focuses of ML research [ 14 ].

While research into the use of ML on GPUs predates the recent resurgence of DL, the usage of general-purpose GPUs for computing (GPGPU) became widespread when CUDA was released in 2007 [ 1 ]. Shortly after, CNNs started to be implemented on top of GPUs, demonstrating dramatic end-to-end speedup, even over highly optimized CPU implementations. CNNs are a subset of methods that can be used, for example, for image restoration, where they have demonstrated outstanding performance [ 1 ]. Some studies have shown that, when compared with traditional neural networks and SVM, the accuracy of recognition using CNNs is notably higher [ 12 ].

Some of these performance gains were accomplished even before the existence of dedicated GPU-accelerated BLAS libraries. The release of the first CUDA Toolkit brought new life to general-purpose parallel computing with GPUs. One of the main benefits of this approach is that GPUs support a single-instruction, multiple-thread (SIMT) programming paradigm, which offers higher throughput and more parallel models when compared to SIMD. A GPU makes several blocks of multiprocessors available, each with many parallel cores (threads) and access to high-speed memory [ 1 ].

3.2. Image Processing

For humans, an image is a visual and meaningful arrangement of regions and objects [ 11 ]. Recent advances in image processing methods find application in different contexts of our daily lives, both as citizens and in the professional field, such as compression, enhancement, and noise removal from images [ 10 , 15 ]. In classification tasks, an image can be transformed into millions of pixels, which makes data processing very difficult [ 2 ]. As a complex and difficult image-processing task, segmentation has high importance and application in several areas, namely in automatic visual systems, where precision affects not only the segmentation results but also the results of the following tasks, which, directly or indirectly, depend on it [ 11 ]. In segmentation, the goal is to divide an image into its constituent parts (or objects)—sometimes referred to as regions of interest (ROI)—without overlapping [ 16 , 17 ], which can be achieved through different feature descriptors, such as the texture, color, and edges, as well as a histogram of oriented gradients (HOG) and a global image descriptor (GIST) [ 11 , 17 ]. While the human vision system segments images on a natural basis, without special effort, automatic segmentation is one of the most complex tasks in image processing and computer vision [ 16 ].
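To make the idea of dividing an image into non-overlapping regions concrete, here is a minimal sketch of intensity-based segmentation. It uses Otsu's thresholding, a classical technique chosen here purely for illustration and not a method taken from the cited works; the synthetic test image and the function names `otsu_threshold` and `segment` are likewise assumptions.

```python
import numpy as np


def otsu_threshold(gray):
    """Return the 8-bit threshold that maximizes between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)               # weight of the class "<= t"
    w1 = 1.0 - w0                      # weight of the class "> t"
    cum_mean = np.cumsum(prob * levels)
    total_mean = cum_mean[-1]
    denom = w0 * w1
    safe = np.where(denom > 0, denom, 1.0)  # avoid division by zero
    between = np.where(denom > 0, (total_mean * w0 - cum_mean) ** 2 / safe, 0.0)
    return int(np.argmax(between))


def segment(gray):
    """Split a uint8 image into foreground (True) and background (False)."""
    return gray > otsu_threshold(gray)


# Synthetic image (an assumption): dark background with one bright square.
image = np.full((64, 64), 50, dtype=np.uint8)
image[20:36, 20:36] = 200
mask = segment(image)
print(mask.sum(), "foreground pixels")
```

Every pixel receives exactly one label, so the two regions do not overlap. Real images usually need richer descriptors such as texture, color, or edges, as noted above, because a single global threshold fails under uneven illumination.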

Given its high applicability and importance, object detection has been a subject of high interest in the scientific community. Depending on the objective, it may be necessary to detect objects with a significant size compared to the image where they are located or to detect several objects of different sizes. The results of object detection in images vary depending on their dimensions and are generally better for large objects [ 18 ]. Image processing techniques and algorithms find application in the most diverse areas. In the medical field, image processing has grown in many directions, including computer vision, pattern recognition, image mining, and ML [ 19 ].

In order to use some ML models when problems in image processing occur, it is often necessary to reduce the number of data entries to quickly extract valuable information from the data [ 10 ]. In order to facilitate this process, the image can be transformed into a reduced set of features in an operation that selects and measures the representative data properties in a reduced form, representing the original data up to a certain degree of precision, and mimicking the high-level features of the source [ 2 ]. While deep neural networks (DNNs) are often used for processing images, some traditional ML techniques can be applied to improve the data obtained. For example, in Zeng et al. [ 20 ], a deep convolutional neural network (CNN) was used to extract image features, and principal component analysis (PCA) was applied to reduce the dimensionality of the data.
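The dimensionality reduction step described above can be sketched as follows. This is only an illustration of PCA computed through a singular value decomposition: the random matrix stands in for CNN-extracted features, and the sizes, the noise level, and the function name `pca_reduce` are assumptions rather than details from Zeng et al. [ 20 ].

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project the rows of `features` onto their top principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal directions, ordered by singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained = singular_values**2 / np.sum(singular_values**2)
    return centered @ components.T, explained[:n_components]


rng = np.random.default_rng(0)
# Stand-in for CNN features: 200 images with 512 values each, constructed
# to lie close to a 5-dimensional subspace (purely synthetic data).
latent = rng.normal(size=(200, 5))
mixing = rng.normal(size=(5, 512))
features = latent @ mixing + 0.01 * rng.normal(size=(200, 512))

reduced, ratio = pca_reduce(features, n_components=5)
print(reduced.shape, ratio.sum())
```

The returned variance ratios indicate how much of the original variation survives the projection, which is one way to quantify the "certain degree of precision" with which the reduced features represent the source data.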

3.3. Machine Learning Overview

ML draws inspiration from a conceptual understanding of how the human brain works, focusing on performing specific tasks that often involve pattern recognition, including image processing [ 1 ], targeted marketing, guiding business decisions, or finding anomalies in business processes [ 4 ]. Its flexibility has allowed it to be used in many fields owing to its high precision, flexible customization, and excellent adaptability, being increasingly more common in the fields of environmental science and engineering, especially in recent years [ 3 ]. When learning from data, deep learning systems acquire the ability to identify and classify patterns, making decisions with minimal human intervention [ 2 ]. Classical techniques are still fairly widespread across different research fields and industries, particularly when working with datasets not appropriate for modern deep learning (DL) methods and architectures [ 1 ]. In fact, some data scientists like to reinforce that no single ML algorithm fits all data, with proper model selection being dependent on the problem being solved [ 21 , 22 ]. In diagnosis modeling that uses the classification paradigm, the learning process is based on observing data as examples. In these situations, the model is constructed by learning from data along with its annotated labels [ 2 ].

While ML models are an important part of data handling, other steps need to be taken in preparation, like data acquisition, the selection of the appropriate algorithm, model training, and model validation [ 3 ]. The selection of relevant features is one of the key prerequisites to designing an efficient classifier, which allows for robust and focused learning models [ 23 ].

There are two main classes of methods in ML: supervised and unsupervised learning, with the primary difference being the presence of labels in the datasets.

- In supervised learning, predictive functions are determined from labeled training datasets, meaning each data instance must include both the input values and the expected labels or output values [ 21 ]. This class of algorithms tries to identify the relationships between input and output values and generate a predictive model able to determine the result based only on the corresponding input data [ 3 , 21 ]. Supervised learning methods are suitable for regression and data classification and include algorithms such as linear regression, artificial neural networks (ANNs), decision trees (DTs), support vector machines (SVMs), k-nearest neighbors (KNNs), random forest (RF), and others [ 3 ]. As an example, systems using RF and DT algorithms have had a huge impact on areas such as computational biology and disease prediction, while SVM has also been used to study drug–target interactions and to predict several life-threatening diseases, such as cancer or diabetes [ 23 ].

- Unsupervised learning is typically used to solve problems in pattern recognition based on unlabeled training datasets. Unsupervised learning algorithms group the training data into different categories according to their characteristics [ 21 , 24 ], mainly through clustering algorithms [ 24 ]. The number of categories is unknown, and the meaning of each category is unclear; therefore, unsupervised learning is usually used for clustering problems and for association mining. A commonly employed algorithm is K-means [ 3 ]; SVM and DT classifiers, by contrast, are normally trained on labeled data and belong to the supervised class above. Data processing tools like PCA, which is used for dimensionality reduction, are often necessary prerequisites before attempting to cluster a set of data.
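The difference between the two classes can be made concrete with a small sketch using only the Python standard library. The toy 2D points, the helper names `knn_predict` and `kmeans`, and the choice of initial centroids are all illustrative assumptions: the first function needs labels (supervised), while the second groups the same points without them (unsupervised).

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Supervised: classify `query` by majority vote among its k nearest labeled points."""
    by_distance = sorted(
        zip(train_points, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

def kmeans(points, centroids, iterations=10):
    """Unsupervised: alternate point assignment and centroid update (Lloyd's algorithm)."""
    centroids = [tuple(c) for c in centroids]
    assignments = []
    for _ in range(iterations):
        assignments = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        for j in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[j] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, assignments

# Toy data: two well-separated groups of 2D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]  # only the supervised method sees these

print(knn_predict(points, labels, query=(1.1, 1.0)))  # a
centroids, assignments = kmeans(points, centroids=[points[0], points[3]])
print(assignments)  # [0, 0, 0, 1, 1, 1]
```

Note that K-means returns anonymous cluster indices rather than named classes, which reflects the point made above that the meaning of each category is not known in advance.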

Some studies make reference to semi-supervised learning, in which a combination of unsupervised and supervised learning methods is used. In theory, a mixture of labeled and unlabeled data is used to help reduce the costs of labeling a large amount of data. The advantage is that the existence of some labeled data should make these models perform better than strictly unsupervised learning [ 21 ].

In addition to the previously mentioned classes of methods, reinforcement learning (RL) can be regarded as another class of ML algorithms, in which an agent learns from reward signals obtained by interacting with its environment. This class has been described as referring to the generalization ability of a machine to correctly answer unlearned problems [ 3 ].

The current availability of large amounts of data has revolutionized data processing and statistical modeling techniques but, in turn, has brought new theoretical and computational challenges. Some problems have complex solutions due to scale, high dimensions, or other factors, which might require the application of multiple ML models [ 4 ] and large datasets [ 25 ]. ML has also drawn attention as a tool in resource management to dynamically manage resource scaling. It can provide data-driven methods for future insights and has been regarded as a promising approach for predicting workload quickly and accurately [ 26 ]. As an example, ML applications in biological fields are growing rapidly in several areas, such as genome annotation, protein binding, and recognizing the key factors of cancer disease prediction [ 23 ]. The deployment of ML algorithms on cloud servers has also offered opportunities for more efficient resource management [ 26 ].

Most classical ML techniques were developed to target structured data, meaning data in a tabular form with data objects stored as rows and the features stored as columns. In contrast, DL is specifically useful when working with larger, unstructured datasets, such as text and images [ 1 ]. Additional hindrances may apply in certain situations, as, for example, in some engineering design applications, heterogeneous data sources can lead to sparsity in the training data [ 25 ]. Since modern problems often require libraries that can scale for larger data sizes, a handful of ML algorithms can be parallelized through multiprocessing. Nevertheless, the final scale of these algorithms is still limited by the amount of memory and number of processing cores available on a single machine [ 1 ].

Some of the limitations in using ML algorithms come from the size and quality of the data. Real datasets are a challenge for ML algorithms since the user may face skewed label distributions [ 1 ]. Such class imbalances can lead to strong predictive biases, as models can optimize the training objective by learning to predict the majority label most of the time. The term “ensemble techniques” in ML is used for combinations of multiple ML algorithms or models. These are known and widely used for providing stability, increasing model performance, and controlling the bias-variance trade-off [ 1 ]. Hyperparameter tuning is also a fundamental use case in ML, which requires the training and testing of a model over many different configurations to be able to find the model with the best predictive performance. The ability to train multiple smaller models in parallel, especially in a distributed environment, becomes important when multiple models are being combined [ 1 ].
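The effect of skewed label distributions can be illustrated with a short standard-library sketch. The 95/5 label split is a made-up example, and balanced accuracy (the mean of per-class recall) is used here only as one illustrative metric that exposes the problem; it is not taken from the cited sources.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    recalls = []
    for cls in set(y_true):
        indices = [i for i, t in enumerate(y_true) if t == cls]
        recalls.append(sum(y_pred[i] == cls for i in indices) / len(indices))
    return sum(recalls) / len(recalls)

# Hypothetical skewed dataset: 95 negatives and 5 positives.
y_true = [0] * 95 + [1] * 5

# A "model" that always predicts the majority label.
majority_label = Counter(y_true).most_common(1)[0][0]
y_pred = [majority_label] * len(y_true)

print(accuracy(y_true, y_pred))           # 0.95
print(balanced_accuracy(y_true, y_pred))  # 0.5
```

The constant predictor looks strong under plain accuracy even though it never detects the minority class, which is the predictive bias described above.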

Over the past few years, frequent advances have occurred in AI research, driven by a resurgence in neural network methods that has fueled breakthroughs in areas like image understanding, natural language processing, and others [ 27 ]. One area of AI research that appears particularly inviting from this perspective is deep reinforcement learning (DRL), which marries neural network modeling with RL techniques. This technique has exploded within the last 5 years into one of the most intense areas of AI research, generating very promising results in mimicking human-level performance in tasks ranging from playing poker [ 28 ] and video games [ 29 ] to multiplayer contests and complex board games, including Go and Chess [ 27 ]. Beyond its inherent interest as an AI topic, DRL might hold special interest for research in psychology and neuroscience since the mechanisms that drive learning in DRL were partly inspired by animal conditioning research and are believed to relate closely to neural mechanisms for reward-based learning centering on dopamine [ 27 ].

3.3.1. Deep Learning Concepts

DL is a heuristic learning framework and a sub-area of ML that involves learning patterns in data structures using neural networks with many nodes of artificial neurons called perceptrons [ 10 , 19 , 30 ] (see Figure 2 ). Artificial neurons can take several inputs and work according to a mathematical calculation, returning a result in a process similar to a biological neuron [ 19 ]. The simplest neural network, known as a single-layer perceptron [ 30 ], is composed of at least one input, one output, and a processor [ 31 ]. Three different types of DL algorithms can be differentiated: multilayered perceptron (MLP) with more than one hidden layer, CNN, and recurrent neural networks (RNNs) [ 32 ].

Figure 2. Differences in the progress stages between traditional ML methods and DL methods.
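The single-layer perceptron mentioned above (inputs, a processor, and one output) can be sketched as follows. This is a minimal illustration using the classic perceptron learning rule with a step activation; the function names, the learning rate of 1.0, and the logical-AND toy task are assumptions chosen for the example.

```python
def step(value):
    """Threshold activation: fire (1) when the weighted sum is non-negative."""
    return 1 if value >= 0 else 0

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus bias, passed through the step activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_perceptron(samples, targets, learning_rate=1.0, epochs=20):
    """Perceptron rule: nudge weights and bias by the error on each sample."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, targets):
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 0, 0, 1]  # logical AND, a linearly separable toy problem

weights, bias = train_perceptron(samples, targets)
print([predict(weights, bias, s) for s in samples])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is one motivation for the multilayer architectures discussed in this section.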

One important consideration regarding generic neural networks is that they are extremely low-bias learning systems. As dictated by the bias–variance trade-off, this means that neural networks, in the most generic form employed in the first DRL models, tend to be sample-inefficient and require large amounts of data to learn. A narrow hypothesis set can speed the learning process if it contains the correct hypothesis or if the specific biases the learner adopts happen to fit with the material to be learned [ 27 ]. Several proposals for algorithms and models have emerged, some of which have been extensively used in different contexts, such as CNNs, autoencoders, and multilayer feedback RNNs [ 10 ]. For datasets of images, speech, and text, among others, it is necessary to use different network models in order to maximize system performance [ 33 ]. DL models are often used for image feature extraction and recognition, given their higher performance when dealing with some of the traditional ML problems [ 10 ].

DL techniques differ from traditional ML in some notable ways (see also Figure 2 ):

- Training a DNN implies the definition of a loss function, which calculates the error made by the network as the difference between the expected output value and the value the network produces. One of the most used loss functions in regression problems is the mean squared error (MSE) [ 30 ]. In the training phase, the weight vector is adjusted so as to minimize the loss function; since analytical solutions generally cannot be obtained effectively, an iterative method is needed. The loss function minimization method usually used is gradient descent [ 30 ].

- Activation functions are fundamental to the learning process of neural network models, as well as to the representation of complex nonlinear functions. The activation function adds nonlinear features to the model; without it, the network could represent only a linear function, no matter how many layers it had. The sigmoid function is the most commonly used activation function in the early stages of studying neural networks [ 30 ].

- As their capacity to learn and adjust to data is greater than that of traditional ML models, DL models are more prone to overfitting. For this reason, regularization represents a crucial and highly effective set of techniques used to reduce generalization error. Other techniques that can contribute to this goal are increasing the size of the training dataset, stopping at an early point in the training phase (early stopping), or randomly discarding a portion of the neuron outputs during the training phase (dropout) [ 30 ].

- Optimizers are used to increase stability and reduce convergence times in DL algorithms; they also make the hyperparameter adjustment process more efficient [ 30 ].
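The interplay of loss function, gradient descent, and regularization listed above can be sketched on a deliberately small problem. As a simplifying assumption, a one-variable linear model is swapped in for a DNN so that the gradients can be written by hand; the function names, learning rate, epoch count, and the optional L2 penalty `l2` are all illustrative choices.

```python
def mse(predictions, targets):
    """Mean squared error between predicted and expected outputs."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def fit_linear(xs, ys, learning_rate=0.05, epochs=2000, l2=0.0):
    """Minimize MSE (plus an optional L2 penalty on the weight) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE + l2 * w**2 with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n + 2 * l2 * w
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # noiseless targets generated from y = 2x + 1

w, b = fit_linear(xs, ys)
print(round(w, 3), round(b, 3))                # 2.0 1.0
print(mse([w * x + b for x in xs], ys) < 1e-9)  # True

w_reg, _ = fit_linear(xs, ys, l2=0.1)
print(w_reg < w)  # True: the L2 penalty shrinks the weight
```

In a real DNN the same loop is applied to many weights at once, with gradients obtained by backpropagation and the plain update step usually replaced by an optimizer.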

In the last decades, three main mathematical tools have been studied for image modeling and representation, mainly because of their proven modeling flexibility and adaptability: probability and statistics, wavelet analysis, and partial differential equations [ 34 , 35 ]. In image processing procedures, it is sometimes necessary to reduce the amount of input data. An image can comprise millions of pixels, so feeding every pixel directly into a task such as classification would make the processing very difficult. To overcome this, the image can be transformed into a reduced set of features, selecting and measuring some representative properties of the raw input data in a more compact form [ 2 ]. Since DL technologies can automatically mine and analyze the data characteristics of labeled data [ 13 , 14 ], DL is very suitable for image processing and segmentation applications [ 14 ]. Several approaches use autoencoders, a set of unsupervised algorithms, for feature selection and data dimensionality reduction [ 31 ].

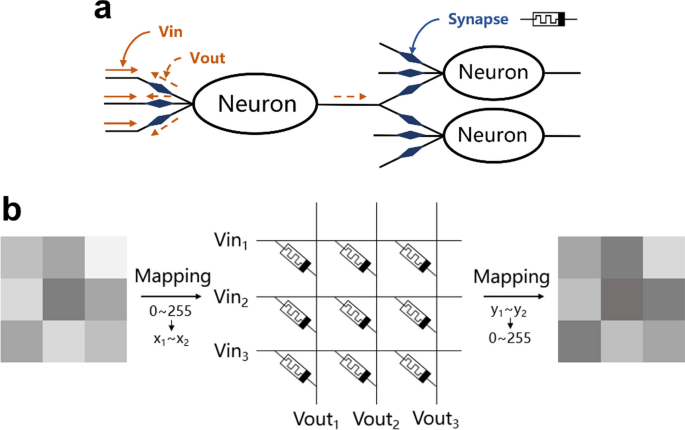

Among the many DL models, CNNs have been widely used in image processing problems, showing more powerful capabilities than traditional algorithms [ 36 ]. As shown in Figure 3 , a CNN, like a typical neural network, comprises an input layer, an output layer, and several hidden layers [ 37 ]. The hidden layers of a CNN typically include convolutional layers, pooling layers, fully connected layers [ 38 ], and normalization layers.

Figure 3. Illustration of the structure of a CNN.
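The convolutional and pooling stages of a CNN can be sketched with NumPy as below. This is only an illustration of the two operations, assuming a single-channel image, a hand-picked (not learned) vertical-edge kernel, no padding, and stride 1; the function names are hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), as a convolutional layer does."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            output[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return output

def relu(x):
    """Rectified linear activation: negative responses are set to zero."""
    return np.maximum(x, 0.0)

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

image = np.zeros((6, 6))
image[:, 3:] = 1.0  # dark left half, bright right half: one vertical edge

kernel = np.array([[-1.0, 0.0, 1.0]] * 3)  # hand-picked vertical-edge filter

features = relu(conv2d_valid(image, kernel))
pooled = max_pool2d(features)
print(features.shape, pooled.shape)  # (4, 4) (2, 2)
print(features[0])                   # [0. 3. 3. 0.]
```

The filter responds only where the window straddles the edge, and pooling halves the spatial resolution while keeping the strongest response in each window. In a trained CNN the kernel values are learned rather than fixed by hand.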

The number of image-processing applications based on CNNs is also increasing daily [ 10 ]. Among the different DL structures, CNNs have proven to be more efficient in image recognition problems [ 20 ]. They can also be used to improve image resolution, enhancing their applicability in real problems, such as the transmission or storage of images or videos [ 39 ].

DL models are frequently used in image segmentation and classification problems, as well as in object recognition, and they have also shown good results in natural language processing problems. As an example, face recognition has been extensively used in real-life settings, such as airport and bank security and surveillance systems, as well as mobile phone functionalities [ 10 ].

There are several possible applications for image-processing techniques. Surveillance tools like CCTV cameras have developed rapidly, making it more difficult for a human operator to inspect and analyze the footage. Several studies show that human operators can miss a significant portion of the screen action after 20 to 40 minutes of intensive monitoring [ 18 ]. In fact, object detection has become a demanding study field in the last decade. The proliferation of high-powered computers and the availability of high-speed internet have allowed for new computer vision-based detection, which has been frequently used, for example, in human activity recognition [ 18 ], marine surveillance [ 40 ], pedestrian identification [ 18 ], and weapon detection [ 41 ].

Another application of ML in image-processing problems is image super-resolution (SR), a family of technologies that involve recovering a super-resolved image from a single image or a sequence of images of the same scene. ML-based approaches have become the mainstream in the single-image SR field, being effective at generating a high-resolution image from a single low-resolution input. The quality of training data and the computational demand remain the two major obstacles in this process [ 42 ].

3.3.2. Reinforcement Learning Concepts