
Data Collection | Definition, Methods & Examples

Published on June 5, 2020 by Pritha Bhandari . Revised on June 21, 2023.

Data collection is a systematic process of gathering observations or measurements. Whether you are performing research for business, governmental or academic purposes, data collection allows you to gain first-hand knowledge and original insights into your research problem.

While methods and aims may differ between fields, the overall process of data collection remains largely the same. Before you begin collecting data, you need to consider:

- The aim of the research

- The type of data that you will collect

- The methods and procedures you will use to collect, store, and process the data

To collect high-quality data that is relevant to your purposes, follow these four steps.

Table of contents

- Step 1: Define the aim of your research

- Step 2: Choose your data collection method

- Step 3: Plan your data collection procedures

- Step 4: Collect the data

- Frequently asked questions about data collection

Step 1: Define the aim of your research

Before you start the process of data collection, you need to identify exactly what you want to achieve. You can start by writing a problem statement: what is the practical or scientific issue that you want to address and why does it matter?

Next, formulate one or more research questions that precisely define what you want to find out. Depending on your research questions, you might need to collect quantitative or qualitative data:

- Quantitative data is expressed in numbers and graphs and is analyzed through statistical methods.

- Qualitative data is expressed in words and analyzed through interpretations and categorizations.

If your aim is to test a hypothesis, measure something precisely, or gain large-scale statistical insights, collect quantitative data. If your aim is to explore ideas, understand experiences, or gain detailed insights into a specific context, collect qualitative data. If you have several aims, you can use a mixed methods approach that collects both types of data.

Example: suppose you are researching how employees perceive their managers, and you have two aims.

- Your first aim is to assess whether there are significant differences in perceptions of managers across different departments and office locations.

- Your second aim is to gather meaningful feedback from employees to explore new ideas for how managers can improve.


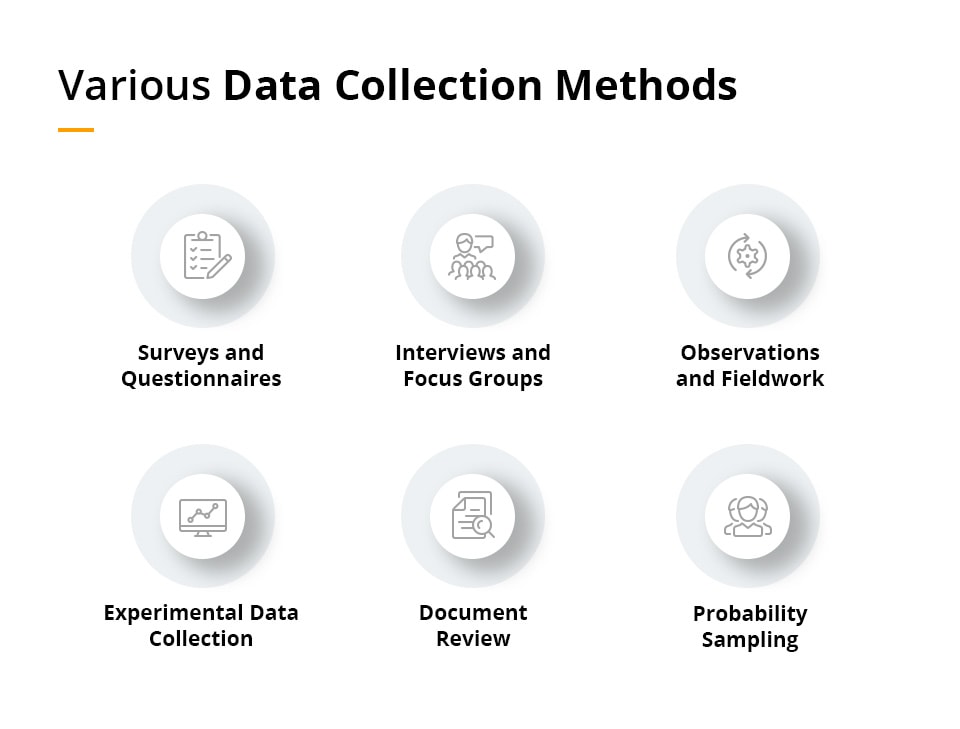

Step 2: Choose your data collection method

Based on the data you want to collect, decide which method is best suited for your research.

- Experimental research is primarily a quantitative method.

- Interviews, focus groups, and ethnographies are qualitative methods.

- Surveys, observations, archival research and secondary data collection can be quantitative or qualitative methods.

Carefully consider what method you will use to gather data that helps you directly answer your research questions.

| Method | When to use | How to collect data |
|---|---|---|
| Experiment | To test a causal relationship. | Manipulate variables and measure their effects on others. |
| Survey | To understand the general characteristics or opinions of a group of people. | Distribute a list of questions to a sample online, in person or over the phone. |
| Interview/focus group | To gain an in-depth understanding of perceptions or opinions on a topic. | Verbally ask participants open-ended questions in individual interviews or focus group discussions. |
| Observation | To understand something in its natural setting. | Measure or survey a sample without trying to affect them. |
| Ethnography | To study the culture of a community or organization first-hand. | Join and participate in a community and record your observations and reflections. |
| Archival research | To understand current or historical events, conditions or practices. | Access manuscripts, documents or records from libraries, depositories or the internet. |
| Secondary data collection | To analyze data from populations that you can’t access first-hand. | Find existing datasets that have already been collected, from sources such as government agencies or research organizations. |

Step 3: Plan your data collection procedures

When you know which method(s) you are using, you need to plan exactly how you will implement them. What procedures will you follow to make accurate observations or measurements of the variables you are interested in?

For instance, if you’re conducting surveys or interviews, decide what form the questions will take; if you’re conducting an experiment, make decisions about your experimental design (e.g., determine inclusion and exclusion criteria).

Operationalization

Sometimes your variables can be measured directly: for example, you can collect data on the average age of employees simply by asking for dates of birth. However, often you’ll be interested in collecting data on more abstract concepts or variables that can’t be directly observed.

Operationalization means turning abstract conceptual ideas into measurable observations. When planning how you will collect data, you need to translate the conceptual definition of what you want to study into the operational definition of what you will actually measure.

Example: to operationalize the abstract concept of leadership, you could measure it in two ways.

- You ask managers to rate their own leadership skills on 5-point scales assessing the ability to delegate, decisiveness and dependability.

- You ask their direct employees to provide anonymous feedback on the managers regarding the same topics.
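As a rough illustration of what an operational definition can look like in practice, the sketch below turns the three 5-point ratings into one number. Averaging the items into a composite score is an assumption made here for illustration (the text does not say how the ratings are combined), and the item names and the `leadership_score` function are hypothetical.

```python
from statistics import mean

# Hypothetical item names for the three rated abilities mentioned in the text.
ITEMS = ("delegation", "decisiveness", "dependability")


def leadership_score(ratings):
    """Combine three 1-5 item ratings into one composite score.

    Assumption: the composite is the plain average of the items.
    """
    for item in ITEMS:
        value = ratings[item]
        if not 1 <= value <= 5:
            raise ValueError(f"{item} rating {value} is outside the 1-5 scale")
    return mean(ratings[item] for item in ITEMS)


# Made-up self-rating from one manager.
self_rating = {"delegation": 4, "decisiveness": 5, "dependability": 3}
print(leadership_score(self_rating))
```

The same function could be applied to the employees' anonymous ratings, which would let you compare self-assessments with outside assessments on an identical scale.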

Sampling

You may need to develop a sampling plan to obtain data systematically. This involves defining a population, the group you want to draw conclusions about, and a sample, the group you will actually collect data from.

Your sampling method will determine how you recruit participants or obtain measurements for your study. To decide on a sampling method you will need to consider factors like the required sample size, accessibility of the sample, and timeframe of the data collection.
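To make the population/sample distinction concrete, here is a minimal sketch of two common sampling methods, simple random sampling and stratified sampling, using only Python's standard library. The employee records, the 20% sampling fraction, and both function names are made up for illustration; a real sampling plan would also need to justify the sample size.

```python
import random


def simple_random_sample(population, n, seed=0):
    """Draw n units so that every unit has the same chance of selection."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    return rng.sample(population, n)


def stratified_sample(population, stratum_of, fraction, seed=0):
    """Draw the same fraction from every stratum (at least one unit each)."""
    rng = random.Random(seed)
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical population: 30 employees in two departments.
employees = [
    {"id": i, "department": "engineering" if i % 3 == 0 else "sales"}
    for i in range(30)
]

sample = stratified_sample(employees, lambda e: e["department"], fraction=0.2)
print(len(sample), "employees sampled")
```

Stratifying by department keeps each department represented in proportion to its size, which matters if you plan to compare departments later.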

Standardizing procedures

If multiple researchers are involved, write a detailed manual to standardize data collection procedures in your study.

This means laying out specific step-by-step instructions so that everyone in your research team collects data in a consistent way, for example by conducting experiments under the same conditions and using objective criteria to record and categorize observations. This helps you avoid common research biases like omitted variable bias or information bias.

This helps ensure the reliability of your data, and you can also use it to replicate the study in the future.

Creating a data management plan

Before beginning data collection, you should also decide how you will organize and store your data.

- If you are collecting data from people, you will likely need to anonymize and safeguard the data to prevent leaks of sensitive information (e.g. names or identity numbers).

- If you are collecting data via interviews or pencil-and-paper formats, you will need to perform transcriptions or data entry in systematic ways to minimize distortion.

- You can prevent loss of data by having an organization system that is routinely backed up.
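One way to approach the first point is to replace direct identifiers with a keyed hash before the data is stored for analysis. The sketch below is an assumption-laden illustration, not a complete privacy solution: the field names, the `pseudonymize` and `strip_identifiers` helpers, and the key handling are all hypothetical, and this kind of pseudonymization is weaker than full anonymization because anyone holding the key can re-link records.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Map an identifier to a stable code using a keyed SHA-256 hash."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def strip_identifiers(record, secret_key):
    """Return a copy of the record without name or identity number."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in ("name", "id_number")
    }
    cleaned["participant_code"] = pseudonymize(record["id_number"], secret_key)
    return cleaned


# Made-up record; in practice the key would be stored separately from the data.
key = b"example-key-kept-outside-the-dataset"
raw = {"name": "Jane Doe", "id_number": "A12345", "rating": 4}
print(strip_identifiers(raw, key))
```

Because the code is stable for a given key, repeated responses from the same participant can still be linked without storing the name or identity number alongside the answers.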

Step 4: Collect the data

Finally, you can implement your chosen methods to measure or observe the variables you are interested in.

Example: in a survey on managers’ leadership, the closed-ended questions ask participants to rate their manager’s leadership skills on scales from 1–5. The data produced is numerical and can be statistically analyzed for averages and patterns.
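A minimal sketch of that kind of analysis is shown below: it groups 1–5 ratings by department and averages them. The responses are invented for illustration, and `mean_rating_by_group` is a hypothetical helper, not part of any survey tool.

```python
from collections import defaultdict
from statistics import mean

# Made-up survey responses on a 1-5 scale.
responses = [
    {"department": "sales", "rating": 4},
    {"department": "sales", "rating": 5},
    {"department": "sales", "rating": 3},
    {"department": "engineering", "rating": 2},
    {"department": "engineering", "rating": 4},
]


def mean_rating_by_group(rows, key):
    """Average the 'rating' field separately for each value of `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["rating"])
    return {group: mean(values) for group, values in groups.items()}


print(mean_rating_by_group(responses, "department"))
```

Averages alone do not show whether a difference between groups is statistically significant; that requires a separate test.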

To ensure that high-quality data is recorded in a systematic way, here are some best practices:

- Record all relevant information as and when you obtain data. For example, note down whether or how lab equipment is recalibrated during an experimental study.

- Double-check manual data entry for errors.

- If you collect quantitative data, you can assess the reliability and validity to get an indication of your data quality.
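The text does not name a specific reliability statistic. One widely used indicator of internal consistency for multi-item rating scales is Cronbach's alpha, sketched below as an assumption about what such a check might look like. The function name and the sample scores are illustrative only.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for rows of respondents and columns of items.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    columns = list(zip(*item_scores))  # one tuple of scores per item
    item_variance_sum = sum(variance(column) for column in columns)
    total_variance = variance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary, so alpha is undefined")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Made-up ratings: four respondents, three items on a 1-5 scale.
scores = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
print(round(cronbach_alpha(scores), 3))
```

Values closer to 1 suggest the items move together. Alpha says nothing about validity, so a scale can be internally consistent and still measure the wrong thing.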


Frequently asked questions about data collection

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

When conducting research, collecting original data has significant advantages:

- You can tailor data collection to your specific research aims (e.g. understanding the needs of your consumers or user testing your website)

- You can control and standardize the process for high reliability and validity (e.g. choosing appropriate measurements and sampling methods)

However, there are also some drawbacks: data collection can be time-consuming, labor-intensive and expensive. In some cases, it’s more efficient to use secondary data that has already been collected by someone else, but the data might be less reliable.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

Operationalization means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioral avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalize the variables that you want to measure.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

Cite this Scribbr article


Bhandari, P. (2023, June 21). Data Collection | Definition, Methods & Examples. Scribbr. Retrieved June 10, 2024, from https://www.scribbr.com/methodology/data-collection/


What are the Data Collection Tools and How to Use Them?

Ever wondered how researchers are able to collect enormous amounts of data and use it effectively while ensuring accuracy and reliability? With the explosion of AI research over the past decade, data collection has become even more critical. Data collection tools are vital components in both qualitative and quantitative research, as they help in collecting and analyzing data effectively.

The process involves the use of different techniques and tools that vary depending on the type of research being conducted. But with so many different tools available, it can sometimes be overwhelming to know which ones to use.

So, in this article, we’ll discuss some of the commonly used data collection tools, how they work, and their application in qualitative and quantitative research. By the end of the article, you’ll be confident about which data collection method is the one for you. Let’s get going!


Data collection – qualitative vs. quantitative.

When researchers conduct studies or experiments, they need to collect data to answer their research questions, which is where data collection tools come in. Data collection tools are methods or instruments that researchers use to gather and analyze data.

Importance of Data Collection Tools

Data collection tools are essential for conducting reliable and accurate research. They provide a structured way of gathering information, which helps ensure that data is collected in a consistent and organized manner. This is important because it helps reduce errors and bias in the data, which can impact the validity and reliability of research findings. Moreover, using data collection tools can also help you analyze and interpret data more accurately and confidently.

For example, if you’re conducting a survey, using a standardized questionnaire will make it easier to compare responses and identify trends, leading to more meaningful insights and better-informed decisions. Hence, data collection tools are a vital part of the research process, and help ensure that your research is credible and trustworthy.

5 Types of Data Collection Tools

Let’s explore these methods in detail below, along with their real-life examples.

Interviews are among the most common data collection tools in qualitative research. They involve a one-on-one conversation between the researcher and the participant and can be either structured or unstructured, depending on the nature of the research. Structured interviews have a predetermined set of questions, while unstructured interviews are more open-ended and allow the researcher to explore the participant’s perspective in depth.

Interviews are useful in collecting rich and detailed data about a specific topic or experience, and they provide an opportunity for the researcher to understand the participant’s perspective in-depth.

Example : A researcher conducting a study on the experiences of cancer patients can use interviews to collect data about the patients’ experiences with their disease, including their emotional responses, coping strategies, and interactions with healthcare providers.

Observations involve watching and recording the behavior of individuals or groups in a natural or controlled setting. They are commonly used in qualitative research and are useful in collecting data about social interactions and behaviors. Observations can be structured or unstructured and can be conducted overtly or covertly, depending on the needs of the research.

Example : A researcher studying the attitudes of consumers towards a new product can use focus groups to collect data about how consumers perceive the product, what they like and dislike about it, and how they would use it in their daily lives.

Case studies involve an in-depth analysis of a specific individual, group, or situation and are useful in collecting detailed data about a specific phenomenon. Case studies can involve interviews, observations, and document analysis and can provide a rich understanding of the topic being studied.

How to Use Data Collection Tools

Once the research plan is completed, the next step is to choose the right data collection tool. It’s essential to select a tool or method that aligns with the research question, methodology, and target population. You should also pay close attention to the strengths and limitations of each method and choose the one that is most appropriate for your research.

Creating a protocol that outlines the steps involved in data collection and data recording is important to ensure consistency in the process. Additionally, training data collectors and testing the tool can help in identifying and addressing any potential issues.

This step is where you actually collect the data. It involves administering the tool to the target population. Ensuring that the data collection process is ethical and that all participants give informed consent is essential. The data collection process should be done systematically, and all data should be recorded accurately to ensure reliability and validity.

For example, if the research objective is to compare the means of two groups, a t-test may be used for statistical analysis. On the other hand, if the research objective is to explore a phenomenon, qualitative analysis may be more appropriate.
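As a sketch of the first case, the code below computes the test statistic for comparing two group means. The text only says "a t-test". Using Welch's variant, which does not assume equal variances, is a choice made here for illustration, and the group data is invented. The sketch stops at the statistic and degrees of freedom, because turning them into a p-value needs the t-distribution, which Python's standard library does not provide.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard error contributed by each group.
    se2_a = variance(group_a) / n_a
    se2_b = variance(group_b) / n_b
    t = (mean(group_a) - mean(group_b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t, df


# Made-up ratings from two groups of respondents.
group_a = [4, 5, 3, 4, 5]
group_b = [2, 3, 3, 2, 4]
t_stat, dof = welch_t(group_a, group_b)
print(f"t = {t_stat:.3f}, df = {dof:.1f}")
```

The larger the absolute value of t relative to the degrees of freedom, the less plausible it is that the two groups share the same mean.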

Data collection tools are critical in both qualitative and quantitative research, and they help in collecting accurate and reliable data to build a solid foundation for any research project. Selecting the appropriate tool depends on several factors, including the research question, methodology, and target population. Therefore, careful planning, proper preparation, systematic data collection, and accurate data analysis are essential for successful research outcomes.

Lastly, let’s discuss some of the most frequently asked questions along with their answers so you can jump straight to them if you want to.

Qualitative data collection tools are used to collect non-numerical data, such as attitudes, beliefs, and experiences, while quantitative data collection tools are used to collect numerical data, such as measurements and statistics.

Examples of quantitative data collection tools include surveys, experiments, and statistical analysis.

Choosing the right data collection tool is crucial as it can have a significant impact on the accuracy and validity of the data collected. Using an inappropriate tool can lead to biased or incomplete data, making it difficult to draw valid conclusions or make informed decisions.


7 Data Collection Methods & Tools For Research

The underlying need for data collection is to capture quality evidence that answers the questions that have been posed. Through data collection, businesses or management can obtain the quality information that is a prerequisite for making informed decisions.

To improve the quality of information, data must be collected carefully so that you can draw inferences and make informed decisions based on what is factual.

By the end of this article, you will understand why picking the best data collection method is necessary for achieving your set objective.


What is Data Collection?

Data collection is a methodical process of gathering and analyzing specific information to proffer solutions to relevant questions and evaluate the results. It focuses on finding out all there is to a particular subject matter. Data is collected to be further subjected to hypothesis testing which seeks to explain a phenomenon.

Hypothesis testing eliminates assumptions while making a proposition from the basis of reason.

For collectors of data, there is a range of outcomes for which the data is collected. But the key purpose for which data is collected is to put a researcher in a vantage position to make predictions about future probabilities and trends.

The core forms in which data can be collected are primary and secondary data. While the former is collected by a researcher through first-hand sources, the latter was originally collected by someone other than the person now using it.

Types of Data Collection

Before broaching the subject of the various types of data collection, it is pertinent to note that data collection itself falls under two broad categories: primary data collection and secondary data collection.

Primary Data Collection

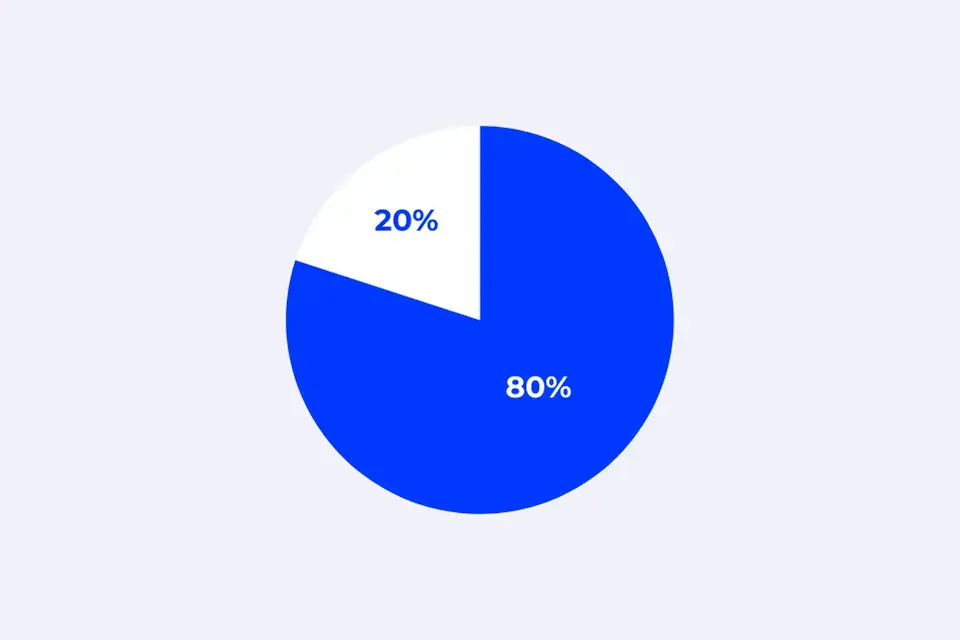

Primary data collection is, by definition, the gathering of raw data at the source. It is the process by which a researcher collects original data for a specific research purpose. It can be further divided into two segments: qualitative and quantitative data collection methods.

- Qualitative Research Method

Qualitative research methods do not collect numerical data or data that must be derived through mathematical calculation. Instead, they capture non-quantifiable elements such as the feelings, opinions, or emotions of the people being studied. An example of such a method is an open-ended questionnaire.

- Quantitative Method

Quantitative methods produce data in numerical form that is analyzed through mathematical calculation. An example would be a questionnaire with close-ended questions whose answers can be counted and analyzed mathematically, for instance through correlation and regression or through the mean, mode, and median.


Secondary Data Collection

Secondary data collection, on the other hand, is the gathering of second-hand data that was originally collected by someone other than the current user. It is the process of collecting data that already exists, whether in published books, journals, or online portals. Compared with primary data, it is much less expensive and easier to collect.

Your choice between Primary data collection and secondary data collection depends on the nature, scope, and area of your research as well as its aims and objectives.

Importance of Data Collection

There are several underlying reasons for collecting data, especially for a researcher. Here are a few of them:

- Integrity of the Research

A key reason for collecting data, be it through quantitative or qualitative methods is to ensure that the integrity of the research question is indeed maintained.

- Reduce the likelihood of errors

The correct use of appropriate data collection methods reduces the likelihood of errors in the results.

- Decision Making

To minimize the risk of errors in decision-making, it is important that accurate data is collected so that the researcher doesn’t make uninformed decisions.

- Save Cost and Time

Data collection saves the researcher time and funds that would otherwise be misspent without a deeper understanding of the topic or subject matter.

- To support a need for a new idea, change, and/or innovation

To prove the need for a change in the norm or the introduction of new information that will be widely accepted, it is important to collect data as evidence to support these claims.

What is a Data Collection Tool?

Data collection tools are the devices or instruments used to collect data, such as a paper questionnaire or a computer-assisted interviewing system. Case studies, checklists, interviews, surveys or questionnaires, and, in some cases, observation are all tools used to collect data.

It is important to decide on the tools for data collection because research is carried out in different ways and for different purposes. The objective behind data collection is to capture quality evidence that allows analysis to lead to the formulation of convincing and credible answers to the posed questions.



Top Data Collection Methods and Tools for Academic, Opinion, or Product Research

The following are the top 7 data collection methods for Academic, Opinion-based, or product research. Also discussed in detail are the nature, pros, and cons of each one. At the end of this segment, you will be best informed about which method best suits your research.

- INTERVIEW

An interview is a face-to-face conversation between two individuals with the sole purpose of collecting relevant information to satisfy a research purpose. Interviews come in three types: structured, semi-structured, and unstructured, each differing slightly from the others.


- Structured Interviews – Simply put, a structured interview is a verbally administered questionnaire. It stays at surface level and is usually completed within a short period. It is recommended for speed and efficiency, but it lacks depth.

- Semi-structured Interviews – In this method, several key questions cover the scope of the areas to be explored. It allows a little more leeway for the researcher to explore the subject matter.

- Unstructured Interviews – This is an in-depth interview that allows the researcher to collect a wide range of information with a purpose. An advantage of this method is the freedom it gives a researcher to combine structure with flexibility, even though it is more time-consuming.

Pros

- In-depth information

- Freedom of flexibility

- Accurate data.

Cons

- Time-consuming

- Expensive to collect.

What are The Best Data Collection Tools for Interviews?

For collecting data through interviews, here are a few tools you can use to easily collect data.

- Audio Recorder

An audio recorder is used for recording sound on disc, tape, or film. Audio information can meet the needs of a wide range of people, as well as provide alternatives to print data collection tools.

- Digital Camera

An advantage of a digital camera is that the images it captures can be transmitted to a monitor screen when the need arises.

- Camcorder

A camcorder is used for collecting data through interviews. It combines the functions of an audio recorder and a video camera. The data provided is qualitative in nature and allows the respondents to answer questions exhaustively. If you need to collect sensitive information during an interview, a camcorder might not work for you, as you would need to maintain your subject’s privacy.


- QUESTIONNAIRES

This is the process of collecting data through an instrument consisting of a series of questions and prompts to receive a response from the individuals it is administered to. Questionnaires are designed to collect data from a group.

For clarity, it is important to note that a questionnaire isn’t a survey, rather it forms a part of it. A survey is a process of data gathering involving a variety of data collection methods, including a questionnaire.

A questionnaire uses three kinds of questions: fixed-alternative, scale, and open-ended. Each question is tailored to the nature and scope of the research.
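The three question kinds can be represented as a small data structure that also checks whether an answer fits the question. This is only an illustrative sketch: the `Question` class, its field names, and the default 1 to 5 scale are assumptions, not the format of any particular survey tool.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    text: str
    kind: str  # "fixed", "scale", or "open"
    options: list = field(default_factory=list)  # used by "fixed" questions
    scale: tuple = (1, 5)  # inclusive bounds used by "scale" questions

    def is_valid(self, answer):
        """Check that an answer matches the kind of question asked."""
        if self.kind == "fixed":
            return answer in self.options
        if self.kind == "scale":
            low, high = self.scale
            return isinstance(answer, int) and low <= answer <= high
        if self.kind == "open":
            return isinstance(answer, str) and answer.strip() != ""
        raise ValueError(f"unknown question kind: {self.kind}")


# Made-up questions, one of each kind.
q_fixed = Question("Do you work remotely?", "fixed", options=["yes", "no"])
q_scale = Question("Rate your manager's decisiveness.", "scale")
q_open = Question("How could your manager improve?", "open")

print(q_fixed.is_valid("yes"), q_scale.is_valid(6), q_open.is_valid("More feedback"))
```

Validating answers at entry time is one way to catch out-of-range or empty responses before they reach the analysis stage.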

Pros

- Can be administered in large numbers and is cost-effective.

- It can be used to compare and contrast previous research to measure change.

- Easy to visualize and analyze.

- Questionnaires offer actionable data.

- Respondent identity is protected.

- Questionnaires can cover all areas of a topic.

- Relatively inexpensive.

Cons

- Answers may be dishonest, or respondents may lose interest midway.

- Questionnaires made up only of closed-ended questions produce little qualitative data.

- Questions might be left unanswered.

- Respondents may have a hidden agenda.

- Not all questions can be analyzed easily.

What are the Best Data Collection Tools for Questionnaires?

- Formplus Online Questionnaire

Formplus lets you create online forms to collect the information you need. Its online questionnaire form template is designed to capture actionable trends and measurable responses, whether you want to conduct research, build knowledge of your brand, or just get to know an audience. The form template is fast, free and fully customizable.

- Paper Questionnaire

A paper questionnaire is a data collection tool consisting of a series of questions and/or prompts for the purpose of gathering information from respondents. Mostly designed for statistical analysis of the responses, they can also be used as a form of data collection.

- REPORTING

By definition, data reporting is the process of gathering and submitting data to be further subjected to analysis. The key aspect of data reporting is reporting accurate data because inaccurate data reporting leads to uninformed decision-making.

Pros

- Informed decision-making.

- Easily accessible.

Cons

- Self-reported answers may be exaggerated.

- The results may be affected by bias.

- Respondents may be too shy to give out all the details.

- Inaccurate reports will lead to uninformed decisions.

What are the Best Data Collection Tools for Reporting?

Reporting tools enable you to extract and present data in charts, tables, and other visualizations so users can find useful information. You could source data for reporting from Non-Governmental Organizations (NGO) reports, newspapers, website articles, and hospital records.

- NGO Reports

An NGO report is an in-depth and comprehensive account of the activities carried out by the NGO, covering areas such as business and human rights. The information contained in these reports is research-specific and forms an acceptable academic base for collecting data. NGOs often focus on development projects which are organized to promote particular causes.

- Newspapers

Newspaper data are relatively easy to collect and are sometimes the only continuously available source of event data. Even though there is a problem of bias in newspaper data, it is still a valid tool for collecting data for reporting.

- Website Articles

Gathering and using data contained in website articles is another tool for data collection. Collecting data from web articles is a quicker and less expensive data collection method. Two major disadvantages of using this data reporting method are biases inherent in the data collection process and possible security/confidentiality concerns.

- Hospital Care records

Health care involves a diverse set of public and private data collection systems, including health surveys, administrative enrollment and billing records, and medical records, used by various entities, including hospitals, CHCs, physicians, and health plans. These records tend to be detailed and accurate, but they must be obtained by legal means, as medical data is subject to strict regulations.

- EXISTING DATA

This is the introduction of new investigative questions in addition to/other than the ones originally used when the data was initially gathered. It involves adding measurement to a study or research. An example would be sourcing data from an archive.

- Accuracy is very high.

- Easily accessible information.

- Problems with evaluation.

- Difficulty in understanding.

What are the Best Data Collection Tools for Existing Data?

The concept of Existing data means that data is collected from existing sources to investigate research questions other than those for which the data were originally gathered. Tools to collect existing data include:

- Research Journals – Unlike newspapers and magazines, research journals are intended for an academic or technical audience, not general readers. A journal is a scholarly publication containing articles written by researchers, professors, and other experts.

- Surveys – A survey is a data collection tool for gathering information from a sample population, with the intention of generalizing the results to a larger population. Surveys have a variety of purposes and can be carried out in many ways depending on the objectives to be achieved.

- OBSERVATION

This is a data collection method by which information on a phenomenon is gathered through observation. The nature of the observation could be accomplished either as a complete observer, an observer as a participant, a participant as an observer, or as a complete participant. This method is a key base for formulating a hypothesis.

- Easy to administer.

- Results tend to be more accurate.

- It is a universally accepted practice.

- It gets around respondents' unwillingness to give a report themselves.

- It is appropriate for certain situations.

- Some phenomena aren’t open to observation.

- It cannot be relied upon.

- Bias may arise.

- It is expensive to administer.

- Its validity cannot be predicted accurately.

What are the Best Data Collection Tools for Observation?

Observation involves the active acquisition of information from a primary source. Observation can also involve the perception and recording of data via the use of scientific instruments. The best tools for Observation are:

- Checklists – These state specific criteria that allow users to gather information and make judgments about what they should know in relation to the outcomes. They offer systematic ways of collecting data about specific behaviors, knowledge, and skills.

- Direct observation – This is an observational study method of collecting evaluative information. The evaluator watches the subject in his or her usual environment without altering that environment.

FOCUS GROUPS

The opposite of quantitative research which involves numerical-based data, this data collection method focuses more on qualitative research. It falls under the primary category of data based on the feelings and opinions of the respondents. This research involves asking open-ended questions to a group of individuals usually ranging from 6-10 people, to provide feedback.

- Information obtained is usually very detailed.

- Cost-effective when compared to one-on-one interviews.

- It reflects speed and efficiency in the supply of results.

- Lacking depth in covering the nitty-gritty of a subject matter.

- Bias might still be evident.

- Requires interviewer training

- The researcher has very little control over the outcome.

- A few vocal voices can drown out the rest.

- Difficulty in assembling an all-inclusive group.

What are the Best Data Collection Tools for Focus Groups?

A focus group is a data collection method that is tightly facilitated and structured around a set of questions. The purpose of the meeting is to extract detailed responses to these questions from the participants. The best tools for tackling focus groups are:

- Two-Way – One group watches another group answer the questions posed by the moderator. After listening to what the other group has to offer, the listening group is able to facilitate more discussion and could potentially draw different conclusions.

- Dueling-Moderator – There are two moderators who play the devil’s advocate. The main positive of the dueling-moderator focus group is to facilitate new ideas by introducing new ways of thinking and varying viewpoints.

- COMBINATION RESEARCH

This method of data collection encompasses the use of innovative methods to enhance participation in both individuals and groups. Also under the primary category, it is a combination of Interviews and Focus Groups while collecting qualitative data . This method is key when addressing sensitive subjects.

- Encourage participants to give responses.

- It stimulates a deeper connection between participants.

- The relative anonymity of respondents increases participation.

- It improves the richness of the data collected.

- It costs the most out of all the top 7.

- It’s the most time-consuming.

What are the Best Data Collection Tools for Combination Research?

The Combination Research method involves two or more data collection methods, for instance, interviews as well as questionnaires or a combination of semi-structured telephone interviews and focus groups. The best tools for combination research are:

- Online Survey – The two tools combined here are online interviews and the use of questionnaires. This is a questionnaire that the target audience can complete over the Internet. It is timely, effective, and efficient, especially since the data to be collected is quantitative in nature.

- Dual-Moderator – The two tools combined here are focus groups and structured questionnaires. The structured questionnaires give a direction as to where the research is headed while two moderators take charge of the proceedings. Whilst one ensures the focus group session progresses smoothly, the other makes sure that the topics in question are all covered. Dual-moderator focus groups typically result in a more productive session and essentially lead to an optimum collection of data.

Why Formplus is the Best Data Collection Tool

- Vast Options for Form Customization

With Formplus, you can create your unique survey form. With options to change themes, font color, font, font type, layout, width, and more, you can create an attractive survey form. The builder also gives you as many features as possible to choose from and you do not need to be a graphic designer to create a form.

- Extensive Analytics

Form Analytics, a feature in Formplus, helps you view the number of respondents, unique visits, total visits, abandonment rate, and average time spent before submission. This tool eliminates the need to manually calculate the received data and responses, as well as the conversion rate for your poll.

- Embed Survey Form on Your Website

Copy the link to your form and embed it as an iframe which will automatically load as your website loads, or as a popup that opens once the respondent clicks on the link. Embed the link on your Twitter page to give instant access to your followers.

- Geolocation Support

The geolocation feature on Formplus lets you ascertain where individual responses are coming from. It uses Google Maps to pinpoint the longitude and latitude of the respondent, as accurately as possible, along with the responses.

- Multi-Select feature

This feature helps to conserve horizontal space as it allows you to put multiple options in one field. This translates to including more information on the survey form.

How to Use Formplus to collect online data in 8 simple steps.

1. Register or sign up on Formplus builder: Start creating your preferred questionnaire or survey by signing up with either your Google, Facebook, or Email account.

Formplus gives you a free plan with basic features you can use to collect online data. Pricing plans with more extensive features start at $20 monthly, with reasonable discounts for Education and Non-Profit Organizations.

2. Input your survey title and use the form builder choice options to start creating your surveys.

Use the choice option fields like single select, multiple select, checkbox, radio, and image choices to create your preferred multi-choice surveys online.

3. Do you want customers to rate any of your products or services delivery?

Use the rating field to allow survey respondents to rate your products or services. This is an ideal quantitative research method of collecting data.

4. Beautify your online questionnaire with Formplus Customisation features.

- Change the theme color

- Add your brand’s logo and image to the forms

- Change the form width and layout

- Edit the submission button if you want

- Change text font color and sizes

- Do you already have custom CSS to beautify your questionnaire? If yes, just copy and paste it into the CSS option.

5. Edit your survey questionnaire settings for your specific needs

Choose where to store your files and responses. Select a submission deadline, choose a timezone, limit respondents’ responses, enable Captcha to prevent spam, and collect location data of customers.

Set an introductory message to respondents before they begin the survey, toggle the “start button”, and set a final post-submission message or redirect respondents to another page when they submit their questionnaires.

Change the Email Notifications inventory and initiate an autoresponder message to all your survey questionnaire respondents. You can also transfer your forms to other users who can become form administrators.

6. Share links to your survey questionnaire page with customers.

There’s an option to copy and share the link as “Popup” or “Embed code”. The data collection tool automatically creates a QR code for the survey questionnaire, which you can download and share as appropriate.

Congratulations if you’ve made it to this stage. You can start sharing the link to your survey questionnaire with your customers.

7. View your Responses to the Survey Questionnaire

Toggle the presentation of your summary between the available options: single, table, or cards.

8. Allow Formplus Analytics to interpret your Survey Questionnaire Data

With online form builder analytics, a business can determine:

- The number of times the survey questionnaire was filled

- The number of customers reached

- Abandonment Rate: The rate at which customers exit the form without submitting it.

- Conversion Rate: The percentage of customers who completed the online form

- Average time spent per visit

- Location of customers/respondents.

- The type of device used by the customer to complete the survey questionnaire.
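The abandonment and conversion rates listed above can be worked out by hand from two counts. The following is a minimal sketch, not Formplus's own calculation: it assumes that every visit ends either in a submission or in an abandonment, and the function name `form_rates` and its parameters are hypothetical.

```python
def form_rates(total_visits: int, submissions: int) -> dict:
    """Return conversion and abandonment rates as percentages.

    Assumption (not from Formplus): each visit either ends in a
    submission or counts as an abandonment, so the two rates sum to 100.
    """
    if total_visits <= 0:
        raise ValueError("total_visits must be positive")
    if not 0 <= submissions <= total_visits:
        raise ValueError("submissions must be between 0 and total_visits")

    conversion = submissions / total_visits * 100
    return {
        "conversion_rate": round(conversion, 1),
        "abandonment_rate": round(100 - conversion, 1),
    }


# Example: 200 visits to the form, 50 completed submissions.
rates = form_rates(total_visits=200, submissions=50)
print(rates)
```

A tool may define the denominator differently (for example, unique visits rather than total visits), so check which count your analytics dashboard uses before comparing figures.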

7 Tips to Create The Best Surveys For Data Collections

- Define the goal of your survey – Once the goal of your survey is outlined, it will aid in deciding which questions are the top priority. A clear attainable goal would, for example, mirror a clear reason as to why something is happening. e.g. “The goal of this survey is to understand why Employees are leaving an establishment.”

- Use close-ended clearly defined questions – Avoid open-ended questions and ensure you’re not suggesting your preferred answer to the respondent. If possible offer a range of answers with choice options and ratings.

- Survey outlook should be attractive and Inviting – An attractive-looking survey encourages a higher number of recipients to respond to the survey. Check out Formplus Builder for colorful options to integrate into your survey design. You could use images and videos to keep participants glued to their screens.

- Assure Respondents about the safety of their data – You want your respondents to be assured whilst disclosing details of their personal information to you. It’s your duty to inform the respondents that the data they provide is confidential and only collected for the purpose of research.

- Ensure your survey can be completed in record time – Ideally, in a typical survey, users should be able to respond in 100 seconds. It is pertinent to note that they, the respondents, are doing you a favor. Don’t stress them. Be brief and get straight to the point.

- Do a trial survey – Preview your survey before sending out your surveys to the intended respondents. Make a trial version which you’ll send to a few individuals. Based on their responses, you can draw inferences and decide whether or not your survey is ready for the big time.

- Attach a reward upon completion for users – Give your respondents something to look forward to at the end of the survey. Think of it as a penny for their troubles. It could well be the encouragement they need to not abandon the survey midway.

Try out Formplus today . You can start making your own surveys with the Formplus online survey builder. By applying these tips, you will definitely get the most out of your online surveys.

Top Survey Templates For Data Collection

- Customer Satisfaction Survey Template

On the template, you can collect data to measure customer satisfaction over key areas like the commodity purchase and the level of service they received. It also gives insight as to which products the customer enjoyed, how often they buy such a product, and whether or not the customer is likely to recommend the product to a friend or acquaintance.

- Demographic Survey Template

With this template, you would be able to measure, with accuracy, the ratio of male to female, age range, and the number of unemployed persons in a particular country as well as obtain their personal details such as names and addresses.

Respondents are also able to state their religious and political views about the country under review.

- Feedback Form Template

The online feedback form template captures the details of the product and/or service used, identifying the product or service and documenting how long the customer has used it.

The overall satisfaction is measured as well as the delivery of the services. The likelihood that the customer also recommends said product is also measured.

- Online Questionnaire Template

The online questionnaire template houses the respondent’s data as well as educational qualifications to collect information to be used for academic research.

Respondents can also provide their gender, race, and field of study as well as present living conditions as prerequisite data for the research study.

- Student Data Sheet Form Template

The template is a data sheet containing all the relevant information of a student. The student’s name, home address, guardian’s name, record of attendance as well as performance in school is well represented on this template. This is a perfect data collection method to deploy for a school or an education organization.

Also included is a record for interaction with others as well as a space for a short comment on the overall performance and attitude of the student.

- Interview Consent Form Template

This online interview consent form template allows the interviewee to sign off their consent to use the interview data for research or report to journalists. With premium features like short text fields, upload, e-signature, etc., Formplus Builder is the perfect tool to create your preferred online consent forms without coding experience.

What is the Best Data Collection Method for Qualitative Data?

Answer: Combination Research

The best data collection method for a researcher for gathering qualitative data which generally is data relying on the feelings, opinions, and beliefs of the respondents would be Combination Research.

Combination research is the best fit because it encompasses the attributes of Interviews and Focus Groups. It is also useful when gathering data that is sensitive in nature. It can be described as an all-purpose qualitative data collection method.

Above all, combination research improves the richness of data collected when compared with other data collection methods for qualitative data.

What is the Best Data Collection Method for Quantitative Research Data?

Answer: Questionnaire

The best data collection method for gathering quantitative data, that is, data that can be represented in numbers and figures and analyzed mathematically, is the Questionnaire.

These can be administered to a large number of respondents while saving costs. For quantitative data that may be bulky or voluminous in nature, the use of a Questionnaire makes such data easy to visualize and analyze.

Another key advantage of the Questionnaire is that it can be used to compare and contrast previous research work done to measure changes.

Technology-Enabled Data Collection Methods

Many diverse methods are now available because technology has revolutionized the way data is collected. It has provided efficient and innovative methods that anyone, especially researchers and organizations, can use. Below are some technology-enabled data collection methods:

- Online Surveys: Online surveys have gained popularity due to their ease of use and wide reach. You can distribute them through email or social media, or embed them on websites. Online surveys allow quick data collection, automated data capture, and real-time analysis. They also offer features like skip logic, validation checks, and multimedia integration.

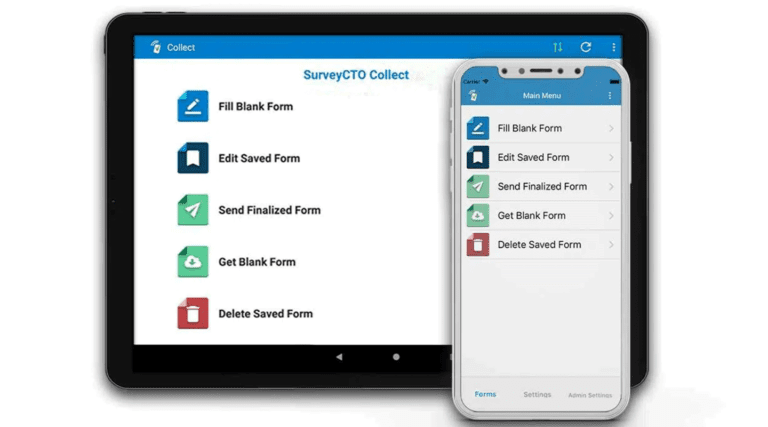

- Mobile Surveys: With the widespread use of smartphones, mobile surveys’ popularity is also on the rise. Mobile surveys leverage the capabilities of mobile devices, and this allows respondents to participate at their convenience. This includes multimedia elements, location-based information, and real-time feedback. Mobile surveys are the best for capturing in-the-moment experiences or opinions.

- Social Media Listening: Social media platforms are a good source of unstructured data that you can analyze to gain insights into customer sentiment and trends. Social media listening involves monitoring and analyzing social media conversations, mentions, and hashtags to understand public opinion, identify emerging topics, and assess brand reputation.

- Wearable Devices and Sensors: You can embed wearable devices, such as fitness trackers or smartwatches, and sensors in everyday objects to capture continuous data on various physiological and environmental variables. This data can provide you with insights into health behaviors, activity patterns, sleep quality, and environmental conditions, among others.

- Big Data Analytics: Big data analytics leverages large volumes of structured and unstructured data from various sources, such as transaction records, social media, and internet browsing. Advanced analytics techniques, like machine learning and natural language processing, can extract meaningful insights and patterns from this data, enabling organizations to make data-driven decisions.
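The skip logic and validation checks mentioned under online surveys can be illustrated in a few lines. This is a minimal sketch under stated assumptions, not any survey tool's actual API: the question schema, the `show_if` key, and the functions `visible_questions` and `is_valid` are all hypothetical.

```python
# Hypothetical survey definition: "show_if" holds (question_id, required_answer).
QUESTIONS = [
    {"id": "owns_phone", "type": "yes_no"},
    {"id": "phone_brand", "type": "text", "show_if": ("owns_phone", "yes")},
    {"id": "age", "type": "int", "min": 18, "max": 120},
]


def visible_questions(answers: dict) -> list:
    """Skip logic: return ids of questions to show for the answers so far."""
    visible = []
    for question in QUESTIONS:
        condition = question.get("show_if")
        # A question without a condition is always shown.
        if condition is None or answers.get(condition[0]) == condition[1]:
            visible.append(question["id"])
    return visible


def is_valid(question: dict, raw: str) -> bool:
    """Validation check: accept or reject a raw text answer."""
    text = raw.strip()
    if question["type"] == "yes_no":
        return text.lower() in {"yes", "no"}
    if question["type"] == "int":
        try:
            value = int(text)
        except ValueError:
            return False
        return question["min"] <= value <= question["max"]
    return bool(text)  # free text only needs to be non-empty


print(visible_questions({"owns_phone": "no"}))
print(is_valid(QUESTIONS[2], "17"))
```

Here a respondent who answers "no" to the first question never sees the brand question, and an age outside the allowed range is rejected before it reaches the dataset.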

Faulty Data Collection Practices – Common Mistakes & Sources of Error

While technology-enabled data collection methods offer numerous advantages, there are some pitfalls and sources of error that you should be aware of. Here are some common mistakes and sources of error in data collection:

- Population Specification Error: Population specification error occurs when the target population is not clearly defined or misidentified. This error leads to a mismatch between the research objectives and the actual population being studied, resulting in biased or inaccurate findings.

- Sample Frame Error: Sample frame error occurs when the sampling frame, the list or source from which the sample is drawn, does not adequately represent the target population. This error can introduce selection bias and affect the generalizability of the findings.

- Selection Error: Selection error occurs when the process of selecting participants or units for the study introduces bias. It can happen due to nonrandom sampling methods, inadequate sampling techniques, or self-selection bias. Selection error compromises the representativeness of the sample and affects the validity of the results.

- Nonresponse Error: Nonresponse error occurs when selected participants choose not to participate or fail to respond to the data collection effort. Nonresponse bias can result in an unrepresentative sample if those who choose not to respond differ systematically from those who do respond. Efforts should be made to mitigate nonresponse and encourage participation to minimize this error.

- Measurement Error: Measurement error arises from inaccuracies or inconsistencies in the measurement process. It can happen due to poorly designed survey instruments, ambiguous questions, respondent bias, or errors in data entry or coding. Measurement errors can lead to distorted or unreliable data, affecting the validity and reliability of the findings.

To mitigate these errors and ensure high-quality data collection, plan your data collection procedures carefully and validate your measurement tools. Use appropriate sampling techniques, employ randomization where possible, and minimize nonresponse through effective communication and incentives. Conduct regular checks, and implement validation and data cleaning procedures to identify and rectify errors during data analysis.
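To see why nonresponse matters, consider a toy illustration. The sketch below uses made-up satisfaction scores and assumes, unrealistically, that the scores of nonrespondents are known; in a real study they are not, which is exactly what makes this error hard to detect. The function names are hypothetical.

```python
# Made-up data: (satisfaction score from 1-10, whether the person responded).
# Assumption for illustration only: we can see nonrespondents' true scores.
sample = [
    (9, True), (8, True), (9, True), (7, True), (3, False),
    (2, False), (4, False), (8, True), (3, False), (7, True),
]


def response_rate(rows) -> float:
    """Share of the selected sample that actually responded."""
    return sum(1 for _, responded in rows if responded) / len(rows)


def nonresponse_bias(rows) -> float:
    """Mean among respondents minus the mean of the full selected sample."""
    all_scores = [score for score, _ in rows]
    respondent_scores = [score for score, responded in rows if responded]
    full_mean = sum(all_scores) / len(all_scores)
    respondent_mean = sum(respondent_scores) / len(respondent_scores)
    return respondent_mean - full_mean


print(response_rate(sample))     # 0.6
print(nonresponse_bias(sample))  # 2.0
```

In this example the dissatisfied people are the ones who did not respond, so the survey overstates average satisfaction by two points even though 60% of the sample took part.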

Best Practices for Data Collection

- Clearly Define Objectives: Clearly define the research objectives and questions to guide the data collection process. This helps ensure that the collected data aligns with the research goals and provides relevant insights.

- Plan Ahead: Develop a detailed data collection plan that includes the timeline, resources needed, and specific procedures to follow. This helps maintain consistency and efficiency throughout the data collection process.

- Choose the Right Method: Select data collection methods that are appropriate for the research objectives and target population. Consider factors such as feasibility, cost-effectiveness, and the ability to capture the required data accurately.

- Pilot Test : Before full-scale data collection, conduct a pilot test to identify any issues with the data collection instruments or procedures. This allows for refinement and improvement before data collection with the actual sample.

- Train Data Collectors: If data collection involves human interaction, ensure that data collectors are properly trained on the data collection protocols, instruments, and ethical considerations. Consistent training helps minimize errors and maintain data quality.

- Maintain Consistency: Follow standardized procedures throughout the data collection process to ensure consistency across data collectors and time. This includes using consistent measurement scales, instructions, and data recording methods.

- Minimize Bias: Be aware of potential sources of bias in data collection and take steps to minimize their impact. Use randomization techniques, employ diverse data collectors, and implement strategies to mitigate response biases.

- Ensure Data Quality: Implement quality control measures to ensure the accuracy, completeness, and reliability of the collected data. Conduct regular checks for data entry errors, inconsistencies, and missing values.

- Maintain Data Confidentiality: Protect the privacy and confidentiality of participants’ data by implementing appropriate security measures. Ensure compliance with data protection regulations and obtain informed consent from participants.

- Document the Process: Keep detailed documentation of the data collection process, including any deviations from the original plan, challenges encountered, and decisions made. This documentation facilitates transparency, replicability, and future analysis.
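The quality checks described under "Ensure Data Quality" can be partly automated. The following is a minimal sketch, assuming responses are stored as a list of dictionaries; the function `quality_report`, the field names, and the allowed ranges are hypothetical and should be adapted to your own dataset.

```python
def quality_report(records, required, ranges):
    """Count missing values, out-of-range values, and exact duplicate rows.

    records:  list of dicts, one per response (values must be hashable)
    required: field names that must not be empty
    ranges:   {field: (low, high)} inclusive bounds for numeric fields
    """
    missing = {field: 0 for field in required}
    out_of_range = {field: 0 for field in ranges}
    seen = set()
    duplicates = 0

    for row in records:
        key = tuple(sorted(row.items()))  # order-independent row fingerprint
        if key in seen:
            duplicates += 1
        seen.add(key)

        for field in required:
            if row.get(field) in (None, ""):
                missing[field] += 1

        for field, (low, high) in ranges.items():
            value = row.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                out_of_range[field] += 1

    return {"missing": missing, "out_of_range": out_of_range, "duplicates": duplicates}


responses = [
    {"id": 1, "age": 34, "email": "a@example.com"},
    {"id": 2, "age": 210, "email": ""},               # implausible age, no email
    {"id": 3, "age": None, "email": "c@example.com"},  # missing age
    {"id": 1, "age": 34, "email": "a@example.com"},    # duplicate of the first row
]
print(quality_report(responses, required=["age", "email"], ranges={"age": (18, 120)}))
```

Running a report like this at regular intervals during collection, rather than only at the end, makes it easier to trace an error back to a specific collector or form version.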

FAQs about Data Collection

- What are secondary sources of data collection? Secondary sources of data collection are defined as the data that has been previously gathered and is available for your use as a researcher. These sources can include published research papers, government reports, statistical databases, and other existing datasets.

- What are the primary sources of data collection? Primary sources of data collection involve collecting data directly from the original source also known as the firsthand sources. You can do this through surveys, interviews, observations, experiments, or other direct interactions with individuals or subjects of study.

- How many types of data are there? There are two main types of data: qualitative and quantitative. Qualitative data is non-numeric and it includes information in the form of words, images, or descriptions. Quantitative data, on the other hand, is numeric and you can measure and analyze it statistically.

Sign up on Formplus Builder to create your preferred online surveys or questionnaire for data collection. You don’t need to be tech-savvy!

- busayo.longe

Data Collection: What It Is, Methods & Tools + Examples

Let’s face it, no one wants to make decisions based on guesswork or gut feelings. The most important objective of data collection is to ensure that the data gathered is reliable and rich in insights that can be analyzed and turned into data-driven decisions. There’s nothing better than good statistical analysis.

Collecting high-quality data is essential for conducting market research, analyzing user behavior, or just trying to get a handle on business operations. With the right approach and a few handy tools, you can gather reliable and informative data.

So, let’s get ready to collect some data because when it comes to data collection, it’s all about the details.

Content Index

What is Data Collection?

Data collection methods, data collection examples, reasons to conduct online research and data collection, conducting customer surveys for data collection to multiply sales, steps to effectively conduct an online survey for data collection, survey design for data collection.

Data collection is the procedure of collecting, measuring, and analyzing accurate insights for research using standard validated techniques.

Put simply, data collection is the process of gathering information for a specific purpose. It can be used to answer research questions, make informed business decisions, or improve products and services.

To collect data, we must first identify what information we need and how we will collect it. We can also evaluate a hypothesis based on collected data. In most cases, data collection is the primary and most important step for research. The approach to data collection is different for different fields of study, depending on the required information.

There are many ways to collect information when doing research. The data collection methods that the researcher chooses will depend on the research question posed. Some data collection methods include surveys, interviews, tests, physiological evaluations, observations, reviews of existing records, and biological samples. Let’s explore them.

LEARN ABOUT: Best Data Collection Tools

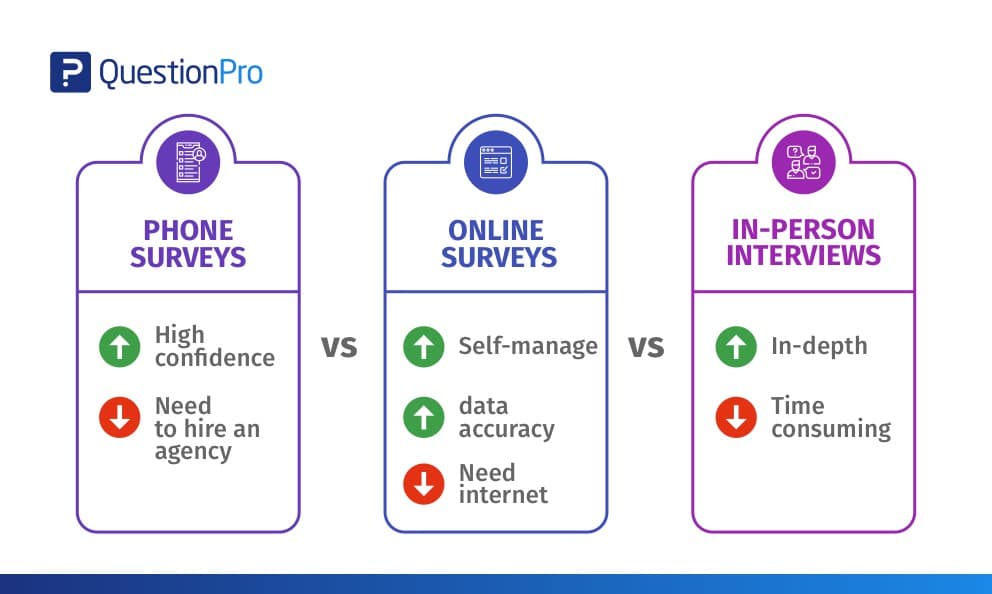

Phone vs. Online vs. In-Person Interviews

Essentially there are four choices for data collection – in-person interviews, mail, phone, and online. There are pros and cons to each of these modes.

In-person interviews

- Pros: In-depth and a high degree of confidence in the data

- Cons: Time-consuming, expensive, and can be dismissed as anecdotal

Mail

- Pros: Can reach anyone and everyone – no barrier

- Cons: Expensive, data collection errors, lag time

Phone

- Pros: High degree of confidence in the data collected, reach almost anyone

- Cons: Expensive, cannot self-administer, need to hire an agency

Online

- Pros: Cheap, can self-administer, very low probability of data errors

- Cons: Not all your customers might have an email address/be on the internet, customers may be wary of divulging information online.

In-person interviews always are better, but the big drawback is the trap you might fall into if you don’t do them regularly. It is expensive to regularly conduct interviews and not conducting enough interviews might give you false positives. Validating your research is almost as important as designing and conducting it.

We’ve seen many instances where, after the research is conducted, the results do not match the “gut feel” of upper management and are dismissed as anecdotal and a “one-time” phenomenon. To avoid such traps, we strongly recommend that data collection be done on an “ongoing and regular” basis.

This will help you compare and analyze the change in perceptions according to marketing for your products/services. The other issue here is sample size. To be confident with your research, you must interview enough people to weed out the fringe elements.
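How many people count as "enough" depends on the precision you need. One common rule of thumb, which is not taken from this article, is Cochran's formula for estimating a proportion, $n_0 = z^2 p(1-p)/e^2$, optionally followed by a finite population correction. The sketch below assumes simple random sampling; the function name `sample_size` and its defaults (95% confidence, worst-case proportion of 0.5) are illustrative choices.

```python
import math


def sample_size(margin_of_error, z=1.96, proportion=0.5, population=None):
    """Cochran's sample size for a proportion under simple random sampling.

    margin_of_error: desired half-width of the interval, e.g. 0.05 for +/-5%
    z:               z-score for the confidence level (1.96 for about 95%)
    proportion:      expected proportion; 0.5 is the most conservative choice
    population:      if given, apply the finite population correction
    """
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


print(sample_size(0.05))                   # 385
print(sample_size(0.05, population=2000))  # 323
```

These figures are a starting point only: they assume every selected person responds, so in practice you would invite more people to allow for nonresponse.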

A couple of years ago there was a lot of discussion about online surveys and their statistical analysis plan. The fact that not every customer had internet connectivity was one of the main concerns.

Although some of the discussions are still valid, the reach of the internet as a means of communication has become vital in the majority of customer interactions. According to the US Census Bureau, the number of households with computers doubled between 1997 and 2001.

In 2001, nearly 50% of households had a computer. Nearly 55% of all households with an income of more than $35,000 had internet access, rising to 70% for households with an annual income of $50,000. This data is from the US Census Bureau for 2001.

There are primarily three modes of data collection that can be employed to gather feedback – Mail, Phone, and Online. The method actually used for data collection is really a cost-benefit analysis. There is no slam-dunk solution but you can use the table below to understand the risks and advantages associated with each of the mediums:

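| Survey medium | Cost per response | Data quality/integrity | Reach (all U.S. households) |
| --- | --- | --- | --- |
| Paper | $20 – $30 | Medium | 100% |
| Phone | $20 – $35 | High | 95% |
| Online / Email | $1 – $5 | Medium | 50-70% |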

Keep in mind, the reach here is defined as “All U.S. Households.” In most cases, you need to look at how many of your own customers are online and determine your actual reach from that. If all your customers have email addresses, you have a 100% reach of your customers.
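The cost-benefit comparison in the table above can be turned into a quick budget estimate. This is a rough sketch that assumes the dollar figures are costs per completed response; the dictionary `MODES` and the functions `budget_range` and `cheapest_mode` are hypothetical names used only for illustration.

```python
# Figures copied from the table above.
# Assumption: the dollar range is the cost per completed response.
MODES = {
    "Paper": {"cost": (20, 30), "reach": (1.00, 1.00)},
    "Phone": {"cost": (20, 35), "reach": (0.95, 0.95)},
    "Online / Email": {"cost": (1, 5), "reach": (0.50, 0.70)},
}


def budget_range(mode: str, responses: int) -> tuple:
    """Return (lowest, highest) total cost in dollars for a number of responses."""
    low, high = MODES[mode]["cost"]
    return low * responses, high * responses


def cheapest_mode(min_reach: float) -> str:
    """Pick the mode with the lowest worst-case cost whose minimum reach suffices."""
    eligible = {
        name: info for name, info in MODES.items() if info["reach"][0] >= min_reach
    }
    if not eligible:
        raise ValueError("no mode satisfies the requested reach")
    return min(eligible, key=lambda name: eligible[name]["cost"][1])


print(budget_range("Online / Email", 500))  # (500, 2500)
print(budget_range("Paper", 500))           # (10000, 15000)
print(cheapest_mode(min_reach=0.9))         # Paper
```

For your own study, replace the reach figures with the share of your customers you can actually contact through each medium, as the paragraph above recommends.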

Another important thing to keep in mind is the ever-increasing dominance of cellular phones over landline phones. United States FCC rules prevent automated dialing of cellular phone numbers, and there is a noticeable trend toward people having a cellular phone as their only voice communication device.

This makes it impossible to reach customers who are dropping home phone lines in favor of going entirely wireless. Even if automated dialing is not used, another FCC rule prohibits phoning anyone who would have to pay for the call.

Multi-Mode Surveys

Multi-mode surveys, where the data is collected via different modes (online, paper, phone, etc.), are another option. It is fairly straightforward to set up an online survey and have data-entry operators enter the data from phone and paper surveys into the same system. That system can also be used to collect data directly from the respondents.

Data collection is an important aspect of research. Let’s consider an example of a mobile manufacturer, company X, which is launching a new product variant. To conduct research about features, price range, target market, competitor analysis, etc., data has to be collected from appropriate sources.

The marketing team can conduct various data collection activities such as online surveys or focus groups.

The survey should have all the right questions about features and pricing, such as “What are the top 3 features expected from an upcoming product?”, “How much are you likely to spend on this product?”, or “Which competitors provide similar products?”

To conduct a focus group, the marketing team should decide on the participants and the mediator. The topic of discussion and the objective behind the focus group should be clarified beforehand so that the discussion is conclusive.

Data collection methods are chosen depending on the available resources. For example, conducting questionnaires and surveys would require the least resources, while focus groups require moderately high resources.

Feedback is a vital part of any organization’s growth. Whether you conduct regular focus groups to elicit information from key players or your account manager calls all your marquee accounts to find out how things are going, these are all ways of seeing through your customers’ eyes: How are we doing? What can we do better?

Online surveys are just another medium to collect feedback from your customers, employees, and anyone your business interacts with. With the advent of do-it-yourself tools for online surveys, data collection on the internet has become really easy, cheap, and effective.

It is a well-established marketing fact that acquiring a new customer is 10 times more difficult and expensive than retaining an existing one. This is one of the fundamental driving forces behind the extensive adoption and interest in CRM and related customer retention tactics.

A research study by Rice University professor Dr. Paul Dholakia and Dr. Vicki Morwitz, published in Harvard Business Review, concluded that simply asking customers how an organization was performing proved, by itself, to be an effective customer retention strategy.

In the study, conducted over the course of a year, one set of customers was sent a satisfaction and opinion survey and the other set was not surveyed. Over the following year, the group that took the survey had twice as many people continuing and renewing their loyalty toward the organization.

The research study offered several interesting reasons, grounded in consumer psychology, for this phenomenon:

- Satisfaction surveys appeal to customers’ desire to be coddled and induce positive feelings. This stems from a part of human psychology that wants to “appreciate” a product or service one already likes or prefers. The survey is simply a medium to convey this: it is a vehicle to “interact” with the company, and it reinforces the customer’s commitment to the company.

- Surveys may increase awareness of auxiliary products and services. Surveys can be considered modes of both inbound and outbound communication. They are generally regarded as a source for data collection and analysis, and most people are unaware that consumer surveys can also serve as a medium for distributing information. It is important to note a few caveats here:

  a. In most countries, including the US, “selling under the guise of research” is illegal.

  b. However, we all know that information is distributed while collecting information.

  c. Other disclaimers may be included in the survey to ensure users are aware of this fact. For example: “We will collect your opinion and inform you about products and services that have come online in the last year…”

- Induced Judgments: The very act of asking people for their feedback can prompt them to form an opinion on something they otherwise would not have thought about. This is a subtle yet powerful effect that can be compared to the “Product Placement” strategy currently used for marketing products in mass media like movies and television shows. One example is the extensive and exclusive use of the Mini Cooper in the blockbuster movie “The Italian Job.” This strategy is questionable and should be used with great caution.

Surveys should be considered a critical tool in the customer journey dialog. The best thing about surveys is their ability to carry “bi-directional” information. The research conducted by Paul Dholakia and Vicki Morwitz shows that surveys not only get you the information that is critical for your business, but also enhance and build upon the established relationship you have with your customers.

Recent technological advances have made it incredibly easy to conduct real-time surveys and opinion polls. Online tools make it easy to frame questions and answers and create surveys on the Web. Distributing surveys via email, website links, or even integration with online CRM tools like Salesforce.com has made online surveying a quick-win solution.

So, you’ve decided to conduct an online survey. There are a few questions in your mind that you would like answered, and you are looking for a fast and inexpensive way to find out more about your customers, clients, etc.

First and foremost, you need to decide what the smart objectives of the study are. Ensure that you can phrase these objectives as questions or measurements. If you can’t, you are better off looking at other data sources like focus groups and other qualitative methods. The data collected via online surveys is predominantly quantitative in nature.

Review the basic objectives of the study. What are you trying to discover? What actions do you want to take as a result of the survey? Answers to these questions help in validating the collected data. Online surveys are just one way of collecting and quantifying data.

- Visualize all of the relevant information items you would like to have. What will the output survey research report look like? What charts and graphs will be prepared? What information do you need to be assured that action is warranted?

- Assign ranks to each topic (1 and 2) according to their priority, including the most important topics first. Revisit these items again to ensure that the objectives, topics, and information you need are appropriate. Remember, you can’t solve the research problem if you ask the wrong questions.

- How easy or difficult is it for the respondent to provide information on each topic? If it is difficult, is there an alternative medium to gain insights by asking a different question? This is probably the most important step. Online surveys have to be Precise, Clear and Concise. Due to the nature of the internet and the fluctuations involved, if your questions are too difficult to understand, the survey dropout rate will be high.

- Create a sequence for the topics that is unbiased. Make sure that the questions asked first do not bias the results of the next questions. Sometimes providing too much information, or disclosing the purpose of the study, can create bias. Once you have decided on a series of topics, you have the basic structure of a survey. It is always advisable to add an “Introductory” paragraph before the survey to explain the project objective and what is expected of the respondent. It is also sensible to have a “Thank You” text as well as information about where to find the results of the survey when they are published.

- Page Breaks – The attention span of respondents can be very low when it comes to a long scrolling survey. Add page breaks wherever possible. Having said that, a single question per page can also hamper response rates, as it increases both the time to complete the survey and the chances of dropouts.

- Branching – Create smart and effective surveys by implementing branching wherever required. Eliminate text such as “If you answered No to Q1 then Answer Q4”; it annoys respondents, which increases survey dropout rates. Design online surveys with branching logic so that respondents are automatically routed to the appropriate questions based on their previous responses.

- Write the questions. Initially, write a large number of survey questions, from which you can pick the ones best suited for the survey. Divide the survey into sections so that respondents do not get confused by a long list of questions.

- Sequence the questions so that they are unbiased.

- Repeat all of the steps above to find any major holes. Are the questions really answered? Have someone review it for you.

- Time the length of the survey. A survey should take less than five minutes. At three to four research questions per minute, you are limited to about 15 questions. One open-ended text question counts as three multiple-choice questions. Most online software tools will record the time respondents take to answer questions.

- Include a few open-ended survey questions that support your survey objective. This will be a type of feedback survey.

- Email the project survey to your test group, and then email the feedback survey afterward.

- This way, you can have your test group provide their opinion about the functionality as well as usability of your project survey by using the feedback survey.

- Make changes to your questionnaire based on the received feedback.

- Send the survey out to all your respondents!
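The branching and timing guidance in the steps above can be sketched in a few lines. This is a minimal illustration and not how any particular survey tool works: the `Question` class, the sample questions, and both helper functions are hypothetical. The timing rule uses the figures from the text (an open-ended question counts as three multiple-choice questions) and assumes the slower pace of three questions per minute.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Question:
    text: str
    open_ended: bool = False
    # Maps a specific answer to the id of the question to jump to.
    routes: Dict[str, str] = field(default_factory=dict)
    # Question shown next when no route matches (None ends the survey).
    default_next: Optional[str] = None


# Hypothetical four-question survey with one branch on Q1.
SURVEY = {
    "Q1": Question("Do you own a smartphone?", routes={"no": "Q4"}, default_next="Q2"),
    "Q2": Question("Which brand is it?", default_next="Q3"),
    "Q3": Question("What would you improve about it?", open_ended=True, default_next="Q4"),
    "Q4": Question("Which age group are you in?"),
}


def questions_shown(survey, answers, start="Q1"):
    """Follow the routes and return the ids of the questions a respondent sees."""
    path = []
    qid = start
    # The "not in path" check guards against accidental routing loops.
    while qid is not None and qid not in path:
        question = survey[qid]
        path.append(qid)
        qid = question.routes.get(answers.get(qid), question.default_next)
    return path


def estimated_minutes(survey, path, questions_per_minute=3.0):
    """Estimate completion time; an open-ended question counts as three."""
    units = sum(3 if survey[qid].open_ended else 1 for qid in path)
    return units / questions_per_minute


owner_path = questions_shown(SURVEY, {"Q1": "yes"})
skip_path = questions_shown(SURVEY, {"Q1": "no"})
print(owner_path, estimated_minutes(SURVEY, owner_path))  # ['Q1', 'Q2', 'Q3', 'Q4'] 2.0
print(skip_path)                                          # ['Q1', 'Q4']
```

Because routing is stored with each question, the respondent who answers “no” to Q1 never sees Q2 or Q3, and no “skip to Q4” instruction has to appear in the question text.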

Online surveys have, over the course of time, evolved into an effective alternative to expensive mail or telephone surveys. However, you must be aware of a few conditions that need to be met for online surveys. If you are trying to survey a sample representing the target population, please remember that not everyone is online.

Moreover, not everyone is receptive to an online survey. Generally, younger demographic segments are more inclined to respond to one.

Good survey design is crucial for accurate data collection. From question wording to response options, let’s explore how to create effective surveys that yield valuable insights with our tips for survey design.

- Writing Great Questions for data collection

Writing great questions can be considered an art. Art always requires a significant amount of hard work, practice, and help from others.

The questions in a survey need to be clear, concise, and unbiased. A poorly worded question or a question with leading language can result in inaccurate or irrelevant responses, ultimately impacting the data’s validity.

Moreover, the questions should be relevant and specific to the research objectives. Questions that are irrelevant or do not capture the necessary information can lead to incomplete or inconsistent responses too.

- Avoid loaded or leading words or questions

A small change in wording can noticeably change the results. Words such as could, should, and might are all used for almost the same purpose, but may produce a 20% difference in agreement with a question. For example, “The management could.. should.. might.. have shut the factory.”

Strong words that represent control or action, such as prohibit, produce similar results. For example, “Do you believe Donald Trump should prohibit insurance companies from raising rates?”

Sometimes the content is just biased. For instance, “You wouldn’t want to go to Rudolpho’s Restaurant for the organization’s annual party, would you?”
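As a rough aid when reviewing a draft questionnaire, a small script can flag the words mentioned above so that a person can take a second look. This is only a sketch: the word list is limited to the examples in this section, the function name is made up for illustration, and a word match says nothing about whether a question is actually biased.

```python
import re

# Words called out in this section; extend the set for your own review.
FLAGGED_WORDS = {"could", "should", "might", "prohibit"}


def flag_loaded_words(question):
    """Return the flagged words that appear in a question, in sorted order."""
    words = re.findall(r"[a-z']+", question.lower())
    return sorted(set(words) & FLAGGED_WORDS)


draft_questions = [
    "Should the management have shut the factory?",
    "Which features matter most to you?",
]

for question in draft_questions:
    print(question, flag_loaded_words(question))
```

The first draft question is flagged for “should” and the second is not flagged, which leaves the judgment about rewording to the survey author.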

- Misplaced questions