7.3 Quasi-Experimental Research

Learning objectives.

- Explain what quasi-experimental research is and distinguish it clearly from both experimental and correlational research.

- Describe three different types of quasi-experimental research designs (nonequivalent groups, pretest-posttest, and interrupted time series) and identify examples of each one.

The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. Although the independent variable is manipulated, participants are not randomly assigned to conditions or orders of conditions (Cook & Campbell, 1979). Because the independent variable is manipulated before the dependent variable is measured, quasi-experimental research eliminates the directionality problem. But because participants are not randomly assigned—making it likely that there are other differences between conditions—quasi-experimental research does not eliminate the problem of confounding variables. In terms of internal validity, therefore, quasi-experiments are generally somewhere between correlational studies and true experiments.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment—perhaps a type of psychotherapy or an educational intervention. There are many different kinds of quasi-experiments, but we will discuss just a few of the most common ones here.

Nonequivalent Groups Design

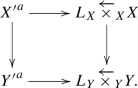

Recall that when participants in a between-subjects experiment are randomly assigned to conditions, the resulting groups are likely to be quite similar. In fact, researchers consider them to be equivalent. When participants are not randomly assigned to conditions, however, the resulting groups are likely to be dissimilar in some ways. For this reason, researchers consider them to be nonequivalent. A nonequivalent groups design, then, is a between-subjects design in which participants have not been randomly assigned to conditions.

Imagine, for example, a researcher who wants to evaluate a new method of teaching fractions to third graders. One way would be to conduct a study with a treatment group consisting of one class of third-grade students and a control group consisting of another class of third-grade students. This would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. For example, the parents of higher achieving or more motivated students might have been more likely to request that their children be assigned to Ms. Williams’s class. Or the principal might have assigned the “troublemakers” to Mr. Jones’s class because he is a stronger disciplinarian. Of course, the teachers’ styles, and even the classroom environments, might be very different and might cause different levels of achievement or motivation among the students. If at the end of the study there was a difference in the two classes’ knowledge of fractions, it might have been caused by the difference between the teaching methods—but it might have been caused by any of these confounding variables.

Of course, researchers using a nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables. But without true random assignment of the students to conditions, there remains the possibility of other important confounding variables that the researcher was not able to control.

Pretest-Posttest Design

In a pretest-posttest design, the dependent variable is measured once before the treatment is implemented and once after it is implemented. Imagine, for example, a researcher who is interested in the effectiveness of an antidrug education program on elementary school students’ attitudes toward illegal drugs. The researcher could measure the attitudes of students at a particular elementary school during one week, implement the antidrug program during the next week, and finally, measure their attitudes again the following week. The pretest-posttest design is much like a within-subjects experiment in which each participant is tested first under the control condition and then under the treatment condition. It is unlike a within-subjects experiment, however, in that the order of conditions is not counterbalanced because it typically is not possible for a participant to be tested in the treatment condition first and then in an “untreated” control condition.

If the average posttest score is better than the average pretest score, then it makes sense to conclude that the treatment might be responsible for the improvement. Unfortunately, one often cannot conclude this with a high degree of certainty because there may be other explanations for why the posttest scores are better. One category of alternative explanations goes under the name of history. Other things might have happened between the pretest and the posttest. Perhaps an antidrug program aired on television and many of the students watched it, or perhaps a celebrity died of a drug overdose and many of the students heard about it. Another category of alternative explanations goes under the name of maturation. Participants might have changed between the pretest and the posttest in ways that they were going to anyway because they are growing and learning. If it were a yearlong program, participants might become less impulsive or better reasoners and this might be responsible for the change.

Another alternative explanation for a change in the dependent variable in a pretest-posttest design is regression to the mean. This refers to the statistical fact that an individual who scores extremely on a variable on one occasion will tend to score less extremely on the next occasion. For example, a bowler with a long-term average of 150 who suddenly bowls a 220 will almost certainly score lower in the next game. Her score will “regress” toward her mean score of 150. Regression to the mean can be a problem when participants are selected for further study because of their extreme scores. Imagine, for example, that only students who scored especially low on a test of fractions are given a special training program and then retested. Regression to the mean all but guarantees that their scores will be higher even if the training program has no effect.

A closely related concept, and an extremely important one in psychological research, is spontaneous remission. This is the tendency for many medical and psychological problems to improve over time without any form of treatment. The common cold is a good example. If one were to measure symptom severity in 100 common cold sufferers today, give them a bowl of chicken soup every day, and then measure their symptom severity again in a week, they would probably be much improved. This does not mean that the chicken soup was responsible for the improvement, however, because they would have been much improved without any treatment at all. The same is true of many psychological problems. A group of severely depressed people today is likely to be less depressed on average in 6 months. In reviewing the results of several studies of treatments for depression, researchers Michael Posternak and Ivan Miller found that participants in waitlist control conditions improved an average of 10 to 15% before they received any treatment at all (Posternak & Miller, 2001). Thus one must generally be very cautious about inferring causality from pretest-posttest designs.
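Regression to the mean can be made concrete with a small simulation. The sketch below is only an illustration under stated assumptions: each observed score is modeled as a stable ability plus independent test-day noise, and the function name `simulate_retest`, the score scale, and the 10% selection rule are all hypothetical. No treatment of any kind is applied between the two tests.

```python
import random
from statistics import mean

def simulate_retest(n_students=10_000, cutoff_fraction=0.10, seed=0):
    """Select the lowest scorers on test 1 and retest them with no treatment."""
    rng = random.Random(seed)
    # Assumption: observed score = stable ability + independent test-day noise.
    abilities = [rng.gauss(50, 10) for _ in range(n_students)]
    test1 = [a + rng.gauss(0, 10) for a in abilities]
    test2 = [a + rng.gauss(0, 10) for a in abilities]  # nothing happens in between
    # Keep only the students with the lowest first-test scores.
    n_selected = int(n_students * cutoff_fraction)
    lowest = sorted(range(n_students), key=lambda i: test1[i])[:n_selected]
    return mean(test1[i] for i in lowest), mean(test2[i] for i in lowest)

first_mean, retest_mean = simulate_retest()
print(f"selected group, first test: {first_mean:.1f}")
print(f"selected group, retest:     {retest_mean:.1f}")
```

Under these assumptions the selected group scores higher on the retest even though nothing was done to them, because part of what made their first scores extreme was bad luck that does not repeat.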

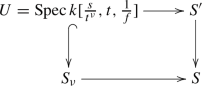

Does Psychotherapy Work?

Early studies on the effectiveness of psychotherapy tended to use pretest-posttest designs. In a classic 1952 article, researcher Hans Eysenck summarized the results of 24 such studies showing that about two-thirds of patients improved between the pretest and the posttest (Eysenck, 1952). But Eysenck also compared these results with archival data from state hospital and insurance company records showing that similar patients recovered at about the same rate without receiving psychotherapy. This suggested to Eysenck that the improvement that patients showed in the pretest-posttest studies might be no more than spontaneous remission. Note that Eysenck did not conclude that psychotherapy was ineffective. He merely concluded that there was no evidence that it was effective, and he wrote of “the necessity of properly planned and executed experimental studies into this important field” (p. 323). You can read the entire article here:

http://psychclassics.yorku.ca/Eysenck/psychotherapy.htm

Fortunately, many other researchers took up Eysenck’s challenge, and by 1980 hundreds of experiments had been conducted in which participants were randomly assigned to treatment and control conditions, and the results were summarized in a classic book by Mary Lee Smith, Gene Glass, and Thomas Miller (Smith, Glass, & Miller, 1980). They found that overall psychotherapy was quite effective, with about 80% of treatment participants improving more than the average control participant. Subsequent research has focused more on the conditions under which different types of psychotherapy are more or less effective.

In a classic 1952 article, researcher Hans Eysenck pointed out the shortcomings of the simple pretest-posttest design for evaluating the effectiveness of psychotherapy.

Wikimedia Commons – CC BY-SA 3.0.

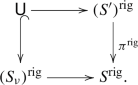

Interrupted Time Series Design

A variant of the pretest-posttest design is the interrupted time-series design. A time series is a set of measurements taken at intervals over a period of time. For example, a manufacturing company might measure its workers’ productivity each week for a year. In an interrupted time-series design, a time series like this is “interrupted” by a treatment. In one classic example, the treatment was the reduction of the work shifts in a factory from 10 hours to 8 hours (Cook & Campbell, 1979). Because productivity increased rather quickly after the shortening of the work shifts, and because it remained elevated for many months afterward, the researcher concluded that the shortening of the shifts caused the increase in productivity. Notice that the interrupted time-series design is like a pretest-posttest design in that it includes measurements of the dependent variable both before and after the treatment. It is unlike the pretest-posttest design, however, in that it includes multiple pretest and posttest measurements.

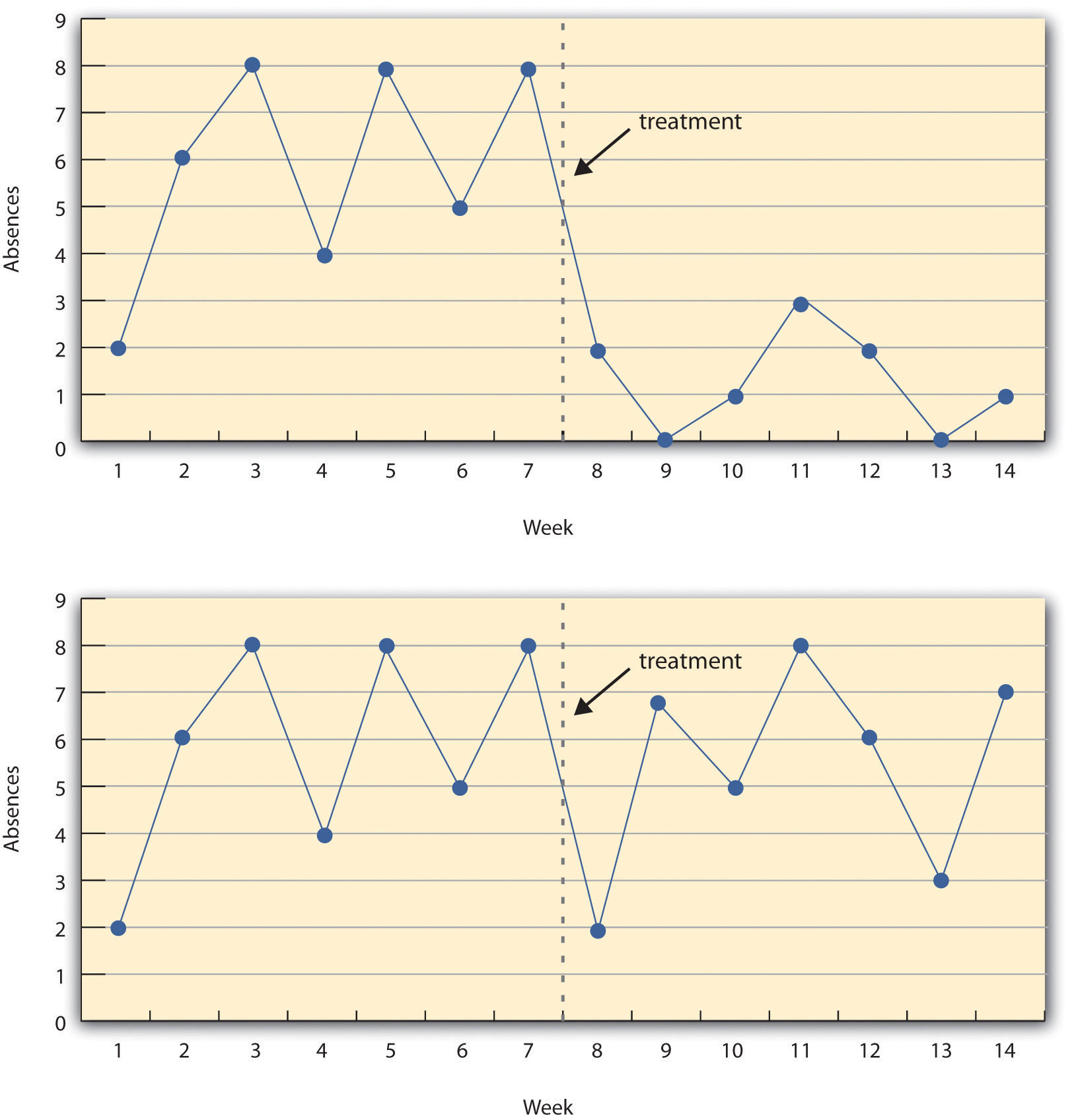

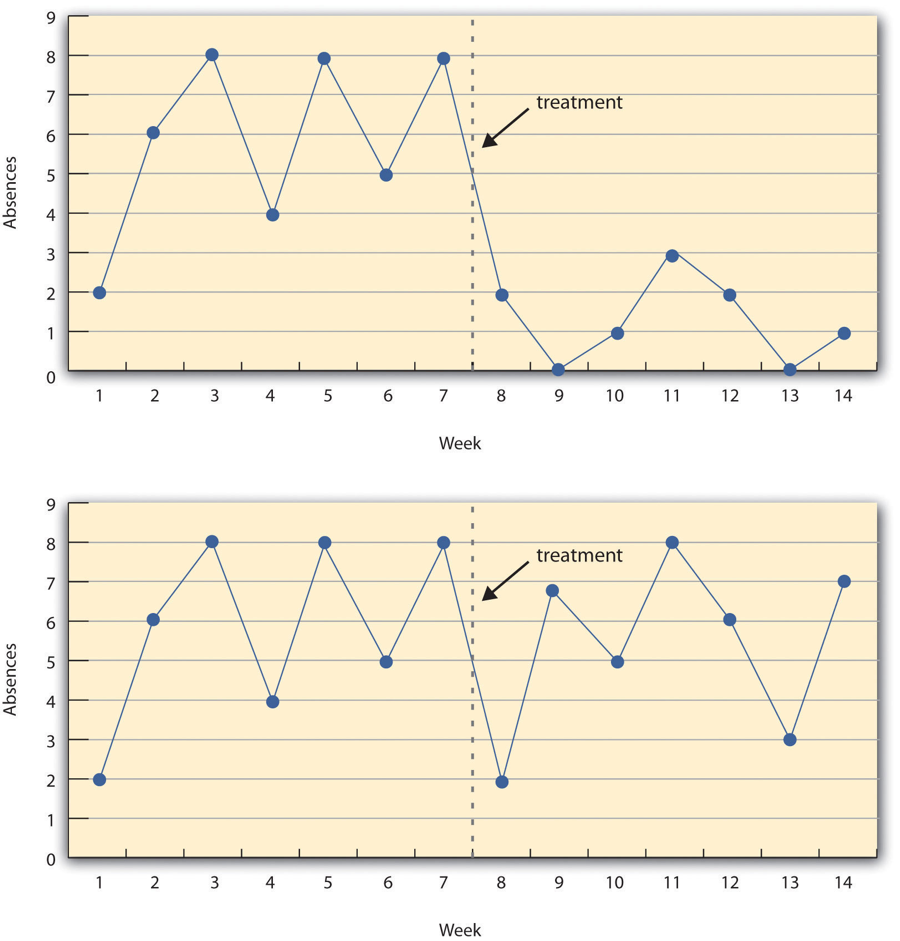

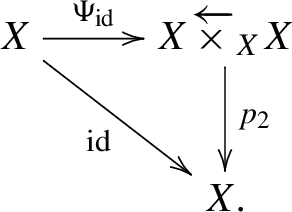

Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows data from a hypothetical interrupted time-series study. The dependent variable is the number of student absences per week in a research methods course. The treatment is that the instructor begins publicly taking attendance each day so that students know that the instructor is aware of who is present and who is absent. The top panel of Figure 7.5 shows how the data might look if this treatment worked. There is a consistently high number of absences before the treatment, and there is an immediate and sustained drop in absences after the treatment. The bottom panel of Figure 7.5 shows how the data might look if this treatment did not work. On average, the number of absences after the treatment is about the same as the number before. This figure also illustrates an advantage of the interrupted time-series design over a simpler pretest-posttest design. In the bottom panel, if there had been only one measurement of absences before the treatment at Week 7 and one afterward at Week 8, then it would have looked as though the treatment were responsible for the reduction. The multiple measurements both before and after the treatment suggest that the reduction between Weeks 7 and 8 is nothing more than normal week-to-week variation.

Figure 7.5 A Hypothetical Interrupted Time-Series Design

The top panel shows data that suggest that the treatment caused a reduction in absences. The bottom panel shows data that suggest that it did not.
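The advantage of multiple measurements can be illustrated with a few lines of arithmetic. In the sketch below, the weekly absence counts are invented to resemble the "treatment did not work" scenario and are not taken from the figure; it assumes the treatment begins between Week 7 and Week 8.

```python
from statistics import mean

# Invented weekly absence counts (not the figure's actual data).
absences_before = [6, 4, 7, 5, 4, 6, 8]  # Weeks 1-7, before attendance is taken
absences_after = [4, 6, 5, 7, 4, 6, 8]   # Weeks 8-14, after attendance is taken

# Simple pretest-posttest comparison: Week 7 versus Week 8 only.
single_week_change = absences_after[0] - absences_before[-1]

# Interrupted time-series comparison: all pre weeks versus all post weeks.
mean_change = mean(absences_after) - mean(absences_before)

print(f"Week 7 to Week 8 change: {single_week_change}")
print(f"Change in weekly average: {mean_change:.2f}")
```

With these numbers the single Week 7 to Week 8 comparison shows a drop of four absences, while the weekly averages before and after are identical, which is the pattern one would expect from ordinary week-to-week variation.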

Combination Designs

A type of quasi-experimental design that is generally better than either the nonequivalent groups design or the pretest-posttest design is one that combines elements of both. There is a treatment group that is given a pretest, receives a treatment, and then is given a posttest. But at the same time there is a control group that is given a pretest, does not receive the treatment, and then is given a posttest. The question, then, is not simply whether participants who receive the treatment improve but whether they improve more than participants who do not receive the treatment.

Imagine, for example, that students in one school are given a pretest on their attitudes toward drugs, then are exposed to an antidrug program, and finally are given a posttest. Students in a similar school are given the pretest, not exposed to an antidrug program, and finally are given a posttest. Again, if students in the treatment condition become more negative toward drugs, this could be an effect of the treatment, but it could also be a matter of history or maturation. If it really is an effect of the treatment, then students in the treatment condition should become more negative than students in the control condition. But if it is a matter of history (e.g., news of a celebrity drug overdose) or maturation (e.g., improved reasoning), then students in the two conditions would be likely to show similar amounts of change. This type of design does not completely eliminate the possibility of confounding variables, however. Something could occur at one of the schools but not the other (e.g., a student drug overdose), so students at the first school would be affected by it while students at the other school would not.
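The comparison described here, whether the treatment group changes more than the control group, can be sketched as a difference between two pretest-posttest changes. The attitude scores below are invented for illustration, and the scale is an assumption: higher numbers stand for more negative attitudes toward drugs.

```python
from statistics import mean

# Invented attitude scores (higher = more negative toward drugs).
treatment_pre = [3, 4, 3, 5, 4]
treatment_post = [5, 6, 5, 6, 6]
control_pre = [4, 3, 4, 4, 3]
control_post = [4, 4, 5, 4, 4]

# Change within each school from pretest to posttest.
treatment_change = mean(treatment_post) - mean(treatment_pre)
control_change = mean(control_post) - mean(control_pre)

# How much more the treatment school changed than the control school.
extra_change = treatment_change - control_change

print(f"treatment change: {treatment_change:.1f}")
print(f"control change:   {control_change:.1f}")
print(f"extra change in treatment group: {extra_change:.1f}")
```

In this made-up example both schools shift somewhat, as history or maturation would predict, but the treatment school shifts more. The extra change is the part that shared history or maturation cannot explain, although an event occurring at only one school still could.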

Finally, if participants in this kind of design are randomly assigned to conditions, it becomes a true experiment rather than a quasi experiment. In fact, it is the kind of experiment that Eysenck called for—and that has now been conducted many times—to demonstrate the effectiveness of psychotherapy.

Key Takeaways

- Quasi-experimental research involves the manipulation of an independent variable without the random assignment of participants to conditions or orders of conditions. Among the important types are nonequivalent groups designs, pretest-posttest designs, and interrupted time-series designs.

- Quasi-experimental research eliminates the directionality problem because it involves the manipulation of the independent variable. It does not eliminate the problem of confounding variables, however, because it does not involve random assignment to conditions. For these reasons, quasi-experimental research is generally higher in internal validity than correlational studies but lower than true experiments.

- Practice: Imagine that two college professors decide to test the effect of giving daily quizzes on student performance in a statistics course. They decide that Professor A will give quizzes but Professor B will not. They will then compare the performance of students in their two sections on a common final exam. List five other variables that might differ between the two sections that could affect the results.

Discussion: Imagine that a group of obese children is recruited for a study in which their weight is measured, then they participate for 3 months in a program that encourages them to be more active, and finally their weight is measured again. Explain how each of the following might affect the results:

- regression to the mean

- spontaneous remission

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.

Eysenck, H. J. (1952). The effects of psychotherapy: An evaluation. Journal of Consulting Psychology, 16, 319–324.

Posternak, M. A., & Miller, I. (2001). Untreated short-term course of major depression: A meta-analysis of outcomes from studies using wait-list control groups. Journal of Affective Disorders, 66, 139–146.

Smith, M. L., Glass, G. V., & Miller, T. I. (1980). The benefits of psychotherapy. Baltimore, MD: Johns Hopkins University Press.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Child Care and Early Education Research Connections

Experiments and quasi-experiments.

This page includes an explanation of the types, key components, validity, ethics, and advantages and disadvantages of experimental design.

An experiment is a study in which the researcher manipulates the level of some independent variable and then measures the outcome. Experiments are powerful techniques for evaluating cause-and-effect relationships. Many researchers consider experiments the "gold standard" against which all other research designs should be judged. Experiments are conducted both in the laboratory and in real life situations.

Types of Experimental Design

There are two basic types of research design:

- True experiments

- Quasi-experiments

The purpose of both is to examine the cause of certain phenomena.

True experiments, in which all the important factors that might affect the phenomena of interest are completely controlled, are the preferred design. Often, however, it is not possible or practical to control all the key factors, so it becomes necessary to implement a quasi-experimental research design.

Similarities between true and quasi-experiments:

- Study participants are subjected to some type of treatment or condition

- Some outcome of interest is measured

- The researchers test whether differences in this outcome are related to the treatment

Differences between true experiments and quasi-experiments:

- In a true experiment, participants are randomly assigned to either the treatment or the control group, whereas they are not assigned randomly in a quasi-experiment

- In a quasi-experiment, the control and treatment groups differ not only in terms of the experimental treatment they receive, but also in other, often unknown or unknowable, ways. Thus, the researcher must try to statistically control for as many of these differences as possible

- Because control is lacking in quasi-experiments, there may be several "rival hypotheses" competing with the experimental manipulation as explanations for observed results

Key Components of Experimental Research Design

The manipulation of predictor variables.

In an experiment, the researcher manipulates the factor that is hypothesized to affect the outcome of interest. The factor that is being manipulated is typically referred to as the treatment or intervention. The researcher may manipulate whether research subjects receive a treatment (e.g., antidepressant medicine: yes or no) and the level of treatment (e.g., 50 mg, 75 mg, 100 mg, and 125 mg).

Suppose, for example, a group of researchers was interested in the causes of maternal employment. They might hypothesize that the provision of government-subsidized child care would promote such employment. They could then design an experiment in which some subjects would be provided the option of government-funded child care subsidies and others would not. The researchers might also manipulate the value of the child care subsidies in order to determine if higher subsidy values might result in different levels of maternal employment.

Random Assignment

- Study participants are randomly assigned to different treatment groups

- All participants have the same chance of being in a given condition

- Participants are assigned to either the group that receives the treatment, known as the "experimental group" or "treatment group," or to the group which does not receive the treatment, referred to as the "control group"

- Random assignment neutralizes factors other than the independent and dependent variables, making it possible to directly infer cause and effect
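As a minimal sketch of what random assignment involves, the snippet below shuffles a list of participant IDs and splits it in half, so every participant has the same chance of landing in either group. The function name `randomly_assign` and the participant IDs are hypothetical, and real studies often use more elaborate procedures such as blocked or stratified randomization.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into treatment and control groups."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical participant IDs 1 through 20.
treatment, control = randomly_assign(range(1, 21), seed=42)
print("treatment group:", sorted(treatment))
print("control group:  ", sorted(control))
```

Because chance alone decides who goes where, pre-existing differences among participants are expected to balance out across the two groups as the sample grows.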

Random Sampling

Traditionally, experimental researchers have used convenience sampling to select study participants. However, as research methods have become more rigorous, and the problems with generalizing from a convenience sample to the larger population have become more apparent, experimental researchers are increasingly turning to random sampling. In experimental policy research studies, participants are often randomly selected from program administrative databases and randomly assigned to the control or treatment groups.

Validity of Results

The two types of validity of experiments are internal and external. It is often difficult to achieve both in social science research experiments.

Internal Validity

- When an experiment is internally valid, we can be confident that the independent variable (e.g., child care subsidies) caused the outcome of the study (e.g., maternal employment)

- When subjects are randomly assigned to treatment or control groups, we can assume that the independent variable caused the observed outcomes because the two groups should not have differed from one another at the start of the experiment

- For example, take the child care subsidy example above. Since research subjects were randomly assigned to the treatment (child care subsidies available) and control (no child care subsidies available) groups, the two groups should not have differed at the outset of the study. If, after the intervention, mothers in the treatment group were more likely to be working, we can assume that the availability of child care subsidies promoted maternal employment

One potential threat to internal validity in experiments occurs when participants either drop out of the study or refuse to participate in the study. If particular types of individuals drop out or refuse to participate more often than individuals with other characteristics, this is called differential attrition. For example, suppose an experiment was conducted to assess the effects of a new reading curriculum. If the new curriculum was so tough that many of the slowest readers dropped out of school, the school with the new curriculum would experience an increase in the average reading scores. The reason they experienced an increase in reading scores, however, is because the worst readers left the school, not because the new curriculum improved students' reading skills.
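The reading curriculum example can be worked through with a few invented numbers. In the sketch below, the scores and the dropout threshold are hypothetical, and no student's score changes at all. The only thing that changes is who remains in the school.

```python
from statistics import mean

# Invented reading scores for eight students before anyone drops out.
scores = [55, 60, 62, 70, 75, 80, 85, 90]

# Hypothetical rule: students scoring below this value leave the school.
DROPOUT_THRESHOLD = 65
remaining = [s for s in scores if s >= DROPOUT_THRESHOLD]

mean_before = mean(scores)
mean_after = mean(remaining)

print(f"average with all students:       {mean_before}")
print(f"average after the lowest leave:  {mean_after}")
```

The school average rises from about 72 to 80 purely because the three lowest scorers are no longer counted, which is how differential attrition can mimic a treatment effect.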

External Validity

- External validity is also of particular concern in social science experiments

- It can be very difficult to generalize experimental results to groups that were not included in the study

- Studies that randomly select participants from the most diverse and representative populations are more likely to have external validity

- The use of random sampling techniques makes it easier to generalize the results of studies to other groups

For example, a research study shows that a new curriculum improved reading comprehension of third-grade children in Iowa. To assess the study's external validity, you would ask whether this new curriculum would also be effective with third graders in New York or with children in other elementary grades.

Glossary terms related to validity:

- internal validity

- external validity

- differential attrition

It is particularly important in experimental research to follow ethical guidelines. Protecting the health and safety of research subjects is imperative. In order to assure subject safety, all researchers should have their project reviewed by an Institutional Review Board (IRB). The National Institutes of Health supplies strict guidelines for project approval. Many of these guidelines are based on the Belmont Report.

The basic ethical principles:

- Respect for persons -- requires that research subjects are not coerced into participating in a study and requires the protection of research subjects who have diminished autonomy

- Beneficence -- requires that experiments do not harm research subjects, and that researchers minimize the risks for subjects while maximizing the benefits for them

- Justice -- requires that all forms of differential treatment among research subjects be justified

Advantages and Disadvantages of Experimental Design

Advantages

The environment in which the research takes place can often be carefully controlled. Consequently, it is easier to estimate the true effect of the variable of interest on the outcome of interest.

Disadvantages

It is often difficult to assure the external validity of the experiment, due to the frequently nonrandom selection processes and the artificial nature of the experimental context.

Research Methodologies Guide

Quasi-Experimental Design

Quasi-experimental design is a unique research methodology because it is characterized by what it lacks. For example, Abraham & MacDonald (2011) state:

"Quasi-experimental research is similar to experimental research in that there is manipulation of an independent variable. It differs from experimental research because either there is no control group, no random selection, no random assignment, and/or no active manipulation."

This type of research is often performed in cases where a control group cannot be created or random selection cannot be performed. This is often the case in certain medical and psychological studies.

For more information on quasi-experimental design, review the resources below:

Where to Start

Listed below are a few tools and online guides that can help you start your quasi-experimental research. These include free online resources and resources available only through ISU Library.

- Quasi-Experimental Research Designs by Bruce A. Thyer. This pocket guide describes the logic, design, and conduct of the range of quasi-experimental designs, encompassing pre-experiments, quasi-experiments making use of a control or comparison group, and time-series designs. An introductory chapter describes the valuable role these types of studies have played in social work, from the 1930s to the present. Subsequent chapters delve into each design type's major features, the kinds of questions it is capable of answering, and its strengths and limitations.

- Experimental and Quasi-Experimental Designs for Research by Donald T. Campbell; Julian C. Stanley. Call Number: Q175 C152e. Written in 1967 but still used heavily today, this book examines research designs for experimental and quasi-experimental research, with examples and judgments about each design's validity.

Online Resources

- Quasi-Experimental Design: From the Web Center for Social Research Methods, this is a very good overview of quasi-experimental design.

- Experimental and Quasi-Experimental Research: From Colorado State University.

- Quasi-experimental design (Wikipedia, the free encyclopedia): Wikipedia can be a useful place to start your research; check the citations at the bottom of the article for more information.

- Last Updated: Dec 19, 2023 2:12 PM

- URL: https://instr.iastate.libguides.com/researchmethods

Nursing Shark

Your nursing school resource

Quasi-Experimental Design

Similar to a true experiment, a quasi-experimental design aims to establish a causal relationship between an independent and a dependent variable. However, unlike true experiments, quasi-experiments do not randomly assign participants to treatment and control groups. Instead, participants are assigned to groups based on pre-existing characteristics or circumstances.

Quasi-experimental designs are valuable research tools when conducting true experiments is not feasible or ethical. They allow researchers to study cause-and-effect relationships in real-world situations where random assignment or manipulation of variables is challenging or impossible.

Differences between quasi-experiments and true experiments

True experimental designs and quasi-experimental designs differ in terms of assignment to treatment, control over treatment, and the use of control groups.

Example of a true experiment vs a quasi-experiment

Assume you are interested in studying the effects of an educational intervention, such as a new teaching method or a tutoring program, on student academic performance.

True Experiment:

A researcher wants to study the effect of a new teaching method on student performance in mathematics. The researcher randomly assigns students from the same school and grade level to either the treatment group (receives the new teaching method) or the control group (receives the traditional teaching method).

The researcher has control over the implementation of the teaching methods and ensures that all other factors, such as curriculum, instructional time, and classroom environment, are kept consistent between the two groups.

Quasi-Experiment:

A researcher wants to study the effect of a new school policy that provides additional tutoring services on student performance in reading. However, the researcher cannot randomly assign students to groups. Instead, the researcher selects two schools: one school that has implemented the new tutoring policy (treatment group) and another school that has not implemented the policy (control group).

The researcher has no control over the implementation of the tutoring services or other factors that may differ between the two schools, such as teacher quality, socioeconomic status of the student population, or school resources.

In the true experiment, the random assignment of participants to groups and the researcher’s control over the treatment ensure that any observed differences in student performance can be attributed to the new teaching method, minimizing the influence of confounding variables.

In the quasi-experiment, the lack of random assignment and the researcher’s limited control over the treatment (tutoring policy) and other factors introduce potential confounding variables that may influence student performance. The researcher must account for these potential confounding variables in the analysis to strengthen the validity of the findings and draw more reliable conclusions about the effect of the tutoring policy.

Types of quasi-experimental designs

Quasi-experimental designs allow researchers to study phenomena and interventions in situations where true experiments are not feasible or ethical. Three types are described here:

Nonequivalent groups design

In this design, two or more groups are compared, but the participants are not randomly assigned to the groups. The groups may differ on important characteristics, and the researcher must account for these differences in the analysis.

Example: A researcher wants to study the effect of a new tutoring program on academic performance. Two existing classes are selected: one class receives the tutoring program (treatment group), and the other class does not (control group). Since the classes already exist and students were not randomly assigned to them, this is a nonequivalent groups design.

Regression discontinuity

This design is used when participants are assigned to treatment or control groups based on a specific cutoff score or threshold on a continuous variable.

Example: A school district implements a new reading intervention program for students who score below a certain threshold on a standardized reading test. Students just below the cutoff score receive the intervention (treatment group), while students just above the cutoff do not (control group). The researcher can compare the reading scores of the two groups to evaluate the effectiveness of the intervention.
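The comparison around the cutoff can be sketched in a few lines of Python. This is only an illustration: the cutoff of 50, the 6-point window, and every score below are hypothetical values invented for the example, and a real analysis would usually fit regression lines on each side of the cutoff rather than compare raw means.

```python
from statistics import mean

# Hypothetical records: (screening test score, later reading score).
# All numbers are made up for illustration only.
students = [
    (44, 61), (46, 63), (47, 62), (49, 66),   # below cutoff: received the intervention
    (51, 60), (52, 61), (54, 63), (55, 62),   # at or above cutoff: no intervention
    (30, 50), (70, 75),                       # far from the cutoff, excluded by the window
]

CUTOFF = 50      # assumed eligibility threshold
BANDWIDTH = 6    # assumed window (in score points) on each side of the cutoff


def local_difference(records, cutoff, bandwidth):
    """Mean outcome just below the cutoff minus mean outcome just above it."""
    below = [y for x, y in records if cutoff - bandwidth <= x < cutoff]
    above = [y for x, y in records if cutoff <= x <= cutoff + bandwidth]
    return mean(below) - mean(above)


estimate = local_difference(students, CUTOFF, BANDWIDTH)
print(f"Difference in mean reading score near the cutoff: {estimate:.2f}")
```

Restricting the comparison to students close to the cutoff is what makes the two groups plausibly similar; widening the window adds data but also adds students who differ more in prior ability.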

Natural experiments

These designs take advantage of naturally occurring events or circumstances that resemble experimental treatments. The researcher does not have control over the treatment or assignment to groups.

Example: A researcher wants to study the effect of a new state law that raises the minimum wage. Some cities in the state have already implemented the higher minimum wage (treatment group), while others have not (control group). The researcher can compare economic indicators, such as employment rates and consumer spending, between the two groups of cities to evaluate the impact of the minimum wage increase.

When to use quasi-experimental design

Quasi-experimental designs are often used when a true experiment is not feasible for practical reasons or would be unethical.

In some situations, it may be unethical or undesirable to randomly assign participants to treatment or control groups, especially when the treatment or intervention being studied involves potential risks or benefits. Quasi-experimental designs are suitable in these cases because they do not require random assignment.

For example, in medical research, it would be unethical to randomly assign participants to receive a potentially harmful treatment or to withhold a potentially beneficial treatment. In such cases, researchers may use a quasi-experimental design to study the effects of an existing treatment or intervention without randomly assigning participants.

In other cases, it may be difficult or impossible to randomly assign participants or manipulate the treatment due to practical constraints. Quasi-experimental designs are useful in these situations because they allow researchers to study phenomena in real-world settings or with pre-existing groups.

For instance, in educational research, it may not be feasible to randomly assign students to different teaching methods or interventions due to logistical or administrative constraints. In such cases, researchers may use a quasi-experimental design to study the effects of an educational program or policy by comparing existing groups of students or schools.

Advantages and disadvantages

Despite their limitations, quasi-experimental designs are valuable research methods when true experiments are not feasible or ethical. Here are some advantages and disadvantages:

Advantages

- Allow researchers to study phenomena that cannot be manipulated experimentally due to ethical or practical constraints.

- Provide insights into real-world situations and naturalistic settings, enhancing external validity.

- Generally less expensive and time-consuming than true experiments, as they do not require extensive experimental controls or setups.

Disadvantages

- Lack of random assignment and control over treatment can introduce confounding variables and reduce internal validity, making it more difficult to establish cause-and-effect relationships.

- Potential for selection biases and other threats to validity due to the non-random assignment of participants to groups.

- Limited generalizability due to the specific context and sample used in the study, which may not be representative of the broader population.

Frequently asked questions

What is a quasi-experiment?

A quasi-experiment is a type of research design that attempts to establish a cause-and-effect relationship. The main difference between this and a true experiment is that the groups are not randomly assigned.

Frequently asked questions: Methodology

Quantitative observations involve measuring or counting something and expressing the result in numerical form, while qualitative observations involve describing something in non-numerical terms, such as its appearance, texture, or color.

To make quantitative observations, you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature.

Scope of research is determined at the beginning of your research process, prior to the data collection stage. Sometimes called “scope of study,” your scope delineates what will and will not be covered in your project. It helps you focus your work and your time, ensuring that you’ll be able to achieve your goals and outcomes.

Defining a scope can be very useful in any research project, from a research proposal to a thesis or dissertation. A scope is needed for all types of research: quantitative, qualitative, and mixed methods.

To define your scope of research, consider the following:

- Budget constraints or any specifics of grant funding

- Your proposed timeline and duration

- Specifics about your population of study, your proposed sample size, and the research methodology you’ll pursue

- Any inclusion and exclusion criteria

- Any anticipated control, extraneous, or confounding variables that could bias your research if not accounted for properly.

Inclusion and exclusion criteria are predominantly used in non-probability sampling. In purposive sampling and snowball sampling, restrictions apply as to who can be included in the sample.

Inclusion and exclusion criteria are typically presented and discussed in the methodology section of your thesis or dissertation.

The purpose of theory-testing mode is to find evidence in order to disprove, refine, or support a theory. As such, generalisability is not the aim of theory-testing mode.

Due to this, the priority of researchers in theory-testing mode is to eliminate alternative causes for relationships between variables. In other words, they prioritise internal validity over external validity, including ecological validity.

Convergent validity shows how much a measure of one construct aligns with other measures of the same or related constructs.

On the other hand, concurrent validity is about how a measure matches up to some known criterion or gold standard, which can be another measure.

Although both types of validity are established by calculating the association or correlation between a test score and another variable, they represent distinct validation methods.

Validity tells you how accurately a method measures what it was designed to measure. There are 4 main types of validity:

- Construct validity: Does the test measure the construct it was designed to measure?

- Face validity: Does the test appear to be suitable for its objectives?

- Content validity: Does the test cover all relevant parts of the construct it aims to measure?

- Criterion validity: Do the results accurately measure the concrete outcome they are designed to measure?

Criterion validity evaluates how well a test measures the outcome it was designed to measure. An outcome can be, for example, the onset of a disease.

Criterion validity consists of two subtypes depending on the time at which the two measures (the criterion and your test) are obtained:

- Concurrent validity is a validation strategy where the scores of a test and the criterion are obtained at the same time.

- Predictive validity is a validation strategy where the criterion variables are measured after the scores of the test.

Attrition refers to participants leaving a study. It always happens to some extent – for example, in randomised controlled trials for medical research.

Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group. As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased.
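As a rough illustration of how a gap in dropout rates might be checked, the sketch below computes the attrition rate for each group and the difference between them. The group names and participant counts are hypothetical, and the helper `attrition_rate` is introduced here only for the example.

```python
def attrition_rate(enrolled, completed):
    """Share of enrolled participants who dropped out before the study ended."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    if not 0 <= completed <= enrolled:
        raise ValueError("completed must be between 0 and enrolled")
    return (enrolled - completed) / enrolled


# Hypothetical (enrolled, completed) counts for each group.
groups = {"intervention": (100, 70), "control": (100, 90)}

rates = {name: attrition_rate(*counts) for name, counts in groups.items()}
gap = rates["intervention"] - rates["control"]

for name, rate in rates.items():
    print(f"{name}: {rate:.0%} dropped out")
print(f"Difference in attrition rates: {gap:.0%}")
```

A large gap is only a warning sign. Whether it biases the results depends on how the people who left differ from those who stayed.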

Criterion validity and construct validity are both types of measurement validity. In other words, they both show you how accurately a method measures something.

While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which a test can predictively (in the future) or concurrently (in the present) measure something.

Construct validity is often considered the overarching type of measurement validity. You need to have face validity, content validity, and criterion validity in order to achieve construct validity.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.

Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective, and assesses content at surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analysing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Content validity shows you how accurately a test or other measurement method taps into the various aspects of the specific construct you are researching.

In other words, it helps you answer the question: “does the test measure all aspects of the construct I want to measure?” If it does, then the test has high content validity.

The higher the content validity, the more accurate the measurement of the construct.

If the test fails to include parts of the construct, or irrelevant parts are included, the validity of the instrument is threatened, which brings your results into question.

Construct validity refers to how well a test measures the concept (or construct) it was designed to measure. Assessing construct validity is especially important when you’re researching concepts that can’t be quantified and/or are intangible, like introversion. To ensure construct validity, your test should be based on known indicators of introversion (operationalisation).

On the other hand, content validity assesses how well the test represents all aspects of the construct. If some aspects are missing or irrelevant parts are included, the test has low content validity.

Construct validity has convergent and discriminant subtypes. They help determine whether a test measures the intended construct.

The reproducibility and replicability of a study can be ensured by writing a transparent, detailed method section and using clear, unambiguous language.

Reproducibility and replicability are related terms.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

- Reproducing research entails reanalysing the existing data in the same manner.

- Replicating (or repeating) the research entails reconducting the entire analysis, including the collection of new data.

Snowball sampling is a non-probability sampling method. Unlike probability sampling (which involves some form of random selection), the initial individuals selected to be studied are the ones who recruit new participants.

Because not every member of the target population has an equal chance of being recruited into the sample, selection in snowball sampling is non-random.

Snowball sampling is a non-probability sampling method, where there is not an equal chance for every member of the population to be included in the sample.

This means that you cannot use inferential statistics and make generalisations – often the goal of quantitative research. As such, a snowball sample is not representative of the target population, and is usually a better fit for qualitative research.

Snowball sampling relies on the use of referrals. Here, the researcher recruits one or more initial participants, who then recruit the next ones.

Participants share similar characteristics and/or know each other. Because of this, not every member of the population has an equal chance of being included in the sample, giving rise to sampling bias.

Snowball sampling is best used in the following cases:

- If there is no sampling frame available (e.g., people with a rare disease)

- If the population of interest is hard to access or locate (e.g., people experiencing homelessness)

- If the research focuses on a sensitive topic (e.g., extra-marital affairs)

Stratified sampling and quota sampling both involve dividing the population into subgroups and selecting units from each subgroup. The purpose in both cases is to select a representative sample and/or to allow comparisons between subgroups.

The main difference is that in stratified sampling, you draw a random sample from each subgroup (probability sampling). In quota sampling you select a predetermined number or proportion of units, in a non-random manner (non-probability sampling).

Random sampling or probability sampling is based on random selection. This means that each unit has an equal chance (i.e., equal probability) of being included in the sample.

On the other hand, convenience sampling involves stopping whoever happens to be available, which means that not everyone has an equal chance of being selected: it depends on the place, time, or day you are collecting your data.

Convenience sampling and quota sampling are both non-probability sampling methods. They both use non-random criteria like availability, geographical proximity, or expert knowledge to recruit study participants.

However, in convenience sampling, you continue to sample units or cases until you reach the required sample size.

In quota sampling, you first need to divide your population of interest into subgroups (strata) and estimate their proportions (quota) in the population. Then you can start your data collection, using convenience sampling to recruit participants, until the proportions in each subgroup coincide with the estimated proportions in the population.
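The quota procedure can be sketched as a simple loop: people are taken in the order they happen to be encountered (the convenience element) and accepted only while their subgroup's quota is still open. The names, subgroup labels, and quota sizes below are hypothetical, and `quota_sample` is a helper written for this example only.

```python
def quota_sample(stream, quotas, group_of):
    """Accept people in arrival order until every subgroup quota is filled."""
    remaining = dict(quotas)   # copy so the caller's quotas are not modified
    sample = []
    for person in stream:
        if not any(remaining.values()):
            break              # every quota is filled, stop recruiting
        group = group_of(person)
        if remaining.get(group, 0) > 0:
            sample.append(person)
            remaining[group] -= 1
    return sample


# Hypothetical passers-by in the order they were approached: (name, age group).
passers_by = [
    ("Ana", "under_30"), ("Ben", "under_30"), ("Cy", "under_30"),
    ("Dee", "30_plus"), ("Eli", "30_plus"), ("Flo", "under_30"),
    ("Gus", "30_plus"), ("Hal", "30_plus"),
]
quotas = {"under_30": 2, "30_plus": 3}   # assumed to mirror population proportions

sample = quota_sample(passers_by, quotas, group_of=lambda person: person[1])
names = [name for name, _ in sample]
print(names)
```

Nothing in the loop is random: who ends up in the sample depends entirely on who was encountered first, which is why quota sampling counts as a non-probability method.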

A sampling frame is a list of every member in the entire population. It is important that the sampling frame is as complete as possible, so that your sample accurately reflects your population.

Stratified and cluster sampling may look similar, but bear in mind that groups created in cluster sampling are heterogeneous, so the individual characteristics in the cluster vary. In contrast, groups created in stratified sampling are homogeneous, as units share characteristics.

Relatedly, in cluster sampling you randomly select entire groups and include all units of each group in your sample. However, in stratified sampling, you select some units of all groups and include them in your sample. In this way, both methods can ensure that your sample is representative of the target population.
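The contrast between the two selection rules can be shown side by side. The sketch below uses a made-up population of four schools with five students each; the school and student labels, the sample sizes, and the seed are all assumptions chosen for illustration.

```python
import random

# Hypothetical population: 4 schools (groups) with 5 students each.
population = {f"school{s}": [f"{s}_{i}" for i in range(5)] for s in "ABCD"}


def stratified_sample(groups, per_group, rng):
    """Draw a random sample of `per_group` units from every group."""
    return [unit for units in groups.values() for unit in rng.sample(units, per_group)]


def cluster_sample(groups, n_clusters, rng):
    """Randomly pick whole groups and keep all of their units."""
    chosen = rng.sample(sorted(groups), n_clusters)
    return [unit for name in chosen for unit in groups[name]]


rng = random.Random(42)   # fixed seed so the example is repeatable
strat = stratified_sample(population, per_group=2, rng=rng)
clust = cluster_sample(population, n_clusters=2, rng=rng)

print("Stratified:", strat)   # some students from every school
print("Cluster:   ", clust)   # every student from some schools
```

The stratified sample always touches all four schools, while the cluster sample contains complete schools but leaves others out entirely.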

When your population is large in size, geographically dispersed, or difficult to contact, it’s necessary to use a sampling method.

This allows you to gather information from a smaller part of the population, i.e. the sample, and make accurate statements by using statistical analysis. A few sampling methods include simple random sampling, convenience sampling, and snowball sampling.

The two main types of social desirability bias are:

- Self-deceptive enhancement (self-deception): The tendency to see oneself in a favorable light without realizing it.

- Impression management (other-deception): The tendency to inflate one’s abilities or achievements in order to make a good impression on other people.

Response bias refers to conditions or factors that take place during the process of responding to surveys, affecting the responses. One type of response bias is social desirability bias.

Demand characteristics are aspects of experiments that may give away the research objective to participants. Social desirability bias occurs when participants automatically try to respond in ways that make them seem likeable in a study, even if it means misrepresenting how they truly feel.

Participants may use demand characteristics to infer social norms or experimenter expectancies and act in socially desirable ways, so you should try to control for demand characteristics wherever possible.

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

Ethical considerations in research are a set of principles that guide your research designs and practices. These principles include voluntary participation, informed consent, anonymity, confidentiality, potential for harm, and results communication.

Scientists and researchers must always adhere to a certain code of conduct when collecting data from others.

These considerations protect the rights of research participants, enhance research validity, and maintain scientific integrity.

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe.

Research misconduct means making up or falsifying data, manipulating data analyses, or misrepresenting results in research reports. It’s a form of academic fraud.

These actions are committed intentionally and can have serious consequences; research misconduct is not a simple mistake or a point of disagreement but a serious ethical failure.

Anonymity means you don’t know who the participants are, while confidentiality means you know who they are but remove identifying information from your research report. Both are important ethical considerations.

You can only guarantee anonymity by not collecting any personally identifying information – for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, or videos.

You can keep data confidential by using aggregate information in your research report, so that you only refer to groups of participants rather than individuals.

Peer review is a process of evaluating submissions to an academic journal. Utilising rigorous criteria, a panel of reviewers in the same subject area decide whether to accept each submission for publication.

For this reason, academic journals are often considered among the most credible sources you can use in a research project – provided that the journal itself is trustworthy and well regarded.

In general, the peer review process involves the following steps:

- First, the author submits the manuscript to the editor.

- The editor then either rejects the manuscript and sends it back to the author, or sends it onward to the selected peer reviewer(s).

- Next, the peer review process occurs. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the edited manuscript is sent back to the author. They input the edits, and resubmit it to the editor for publication.

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. It also represents an excellent opportunity to get feedback from renowned experts in your field.

It acts as a first defence, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process.

Peer-reviewed articles are considered a highly credible source due to this stringent process they go through before publication.

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the published manuscript.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.

- In a single-blind study, only the participants are blinded.

- In a double-blind study, both participants and experimenters are blinded.

- In a triple-blind study, the assignment is hidden not only from participants and experimenters, but also from the researchers analysing the data.

Blinding is important to reduce bias (e.g., observer bias, demand characteristics) and ensure a study’s internal validity.

If participants know whether they are in a control or treatment group, they may adjust their behaviour in ways that affect the outcome that researchers are trying to measure. If the people administering the treatment are aware of group assignment, they may treat participants differently and thus directly or indirectly influence the final results.

Blinding means hiding who is assigned to the treatment group and who is assigned to the control group in an experiment.

Explanatory research is a research method used to investigate how or why something occurs when only a small amount of information is available pertaining to that topic. It can help you increase your understanding of a given topic.

Explanatory research is used to investigate how or why a phenomenon occurs. Therefore, this type of research is often one of the first stages in the research process, serving as a jumping-off point for future research.

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth. It is often used when the issue you’re studying is new, or the data collection process is challenging in some way.

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use exploratory research if you have a general idea or a specific question that you want to study but there is no preexisting knowledge or paradigm with which to study it.

To implement random assignment, assign a unique number to every member of your study’s sample.

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a die to randomly assign participants to groups.
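The numbering-and-drawing procedure above might look like this in Python. It is one possible sketch rather than a prescribed method: the function name `randomly_assign`, the group labels, the sample of 20 participants, and the seed are all assumptions made for the example.

```python
import random


def randomly_assign(participant_ids, groups=("control", "experimental"), seed=None):
    """Shuffle the participant numbers, then deal them out to the groups in turn."""
    rng = random.Random(seed)          # a seed makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)              # random order plays the role of the lottery
    assignment = {group: [] for group in groups}
    for position, pid in enumerate(shuffled):
        assignment[groups[position % len(groups)]].append(pid)
    return assignment


# Hypothetical sample: participants numbered 1 to 20.
assignment = randomly_assign(range(1, 21), seed=7)
for group, members in assignment.items():
    print(group, sorted(members))
```

Dealing the shuffled numbers out in turn keeps the group sizes equal, which a separate coin flip per participant would not guarantee.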

Random selection, or random sampling, is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalisability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

Clean data are valid, accurate, complete, consistent, unique, and uniform. Dirty data include inconsistencies and errors.

Dirty data can come from any part of the research process, including poor research design, inappropriate measurement materials, or flawed data entry.

Data cleaning takes place between data collection and data analyses. But you can use some methods even before collecting data.

For clean data, you should start by designing measures that collect valid data. Data validation at the time of data entry or collection helps you minimize the amount of data cleaning you’ll need to do.

After data collection, you can use data standardisation and data transformation to clean your data. You’ll also deal with any missing values, outliers, and duplicate values.
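A small sketch of these cleaning steps, using only the Python standard library. The records, the `weight_kg` field name, and the plausible range of 30 to 250 kg are hypothetical choices for illustration; real projects set such rules from their own measurement protocol.

```python
# Hypothetical raw records as they might arrive from data entry.
raw = [
    {"id": 1, "weight_kg": "70.5"},
    {"id": 2, "weight_kg": " 82 "},   # stray whitespace
    {"id": 2, "weight_kg": " 82 "},   # duplicate of the previous record
    {"id": 3, "weight_kg": ""},       # missing value
    {"id": 4, "weight_kg": "700"},    # implausible value, likely a typing error
    {"id": 5, "weight_kg": "64.0"},
]

PLAUSIBLE_KG = (30.0, 250.0)   # assumed range of believable adult weights


def clean(records, plausible):
    """Return (cleaned records, flagged problems) after basic cleaning steps."""
    seen, cleaned, flagged = set(), [], []
    for rec in records:
        if rec["id"] in seen:          # drop duplicate ids
            continue
        seen.add(rec["id"])
        text = rec["weight_kg"].strip()    # standardise the format
        if not text:
            flagged.append((rec["id"], "missing"))
            continue
        value = float(text)                # transform text into a number
        if not plausible[0] <= value <= plausible[1]:
            flagged.append((rec["id"], "outlier"))
            continue
        cleaned.append({"id": rec["id"], "weight_kg": value})
    return cleaned, flagged


cleaned, flagged = clean(raw, PLAUSIBLE_KG)
print(cleaned)
print(flagged)
```

Problem records are flagged rather than silently discarded, so that the decision to remove, correct, or impute them stays with the researcher.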

Data cleaning involves spotting and resolving potential data inconsistencies or errors to improve your data quality. An error is any value (e.g., recorded weight) that doesn’t reflect the true value (e.g., actual weight) of something that’s being measured.

In this process, you review, analyse, detect, modify, or remove ‘dirty’ data to make your dataset ‘clean’. Data cleaning is also called data cleansing or data scrubbing.

Data cleaning is necessary for valid and appropriate analyses. Dirty data contain inconsistencies or errors, but cleaning your data helps you minimise or resolve these.

Without data cleaning, you could end up with a Type I or II error in your conclusion. These types of erroneous conclusions can be practically significant with important consequences, because they lead to misplaced investments or missed opportunities.

Observer bias occurs when a researcher’s expectations, opinions, or prejudices influence what they perceive or record in a study. It usually affects studies when observers are aware of the research aims or hypotheses. This type of research bias is also called detection bias or ascertainment bias.

The observer-expectancy effect occurs when researchers influence the results of their own study through interactions with participants.

Researchers’ own beliefs and expectations about the study results may unintentionally influence participants through demand characteristics.

You can use several tactics to minimise observer bias.

- Use masking (blinding) to hide the purpose of your study from all observers.

- Triangulate your data with different data collection methods or sources.

- Use multiple observers and ensure inter-rater reliability.

- Train your observers to make sure data is consistently recorded between them.

- Standardise your observation procedures to make sure they are structured and clear.

Naturalistic observation is a valuable tool because of its flexibility, external validity, and suitability for topics that can’t be studied in a lab setting.

The downsides of naturalistic observation include its lack of scientific control, ethical considerations, and potential for bias from observers and subjects.

Naturalistic observation is a qualitative research method where you record the behaviours of your research subjects in real-world settings. You avoid interfering or influencing anything in a naturalistic observation.

You can think of naturalistic observation as ‘people watching’ with a purpose.

Closed-ended, or restricted-choice, questions offer respondents a fixed set of choices to select from. These questions are easier to answer quickly.

Open-ended or long-form questions allow respondents to answer in their own words. Because there are no restrictions on their choices, respondents can answer in ways that researchers may not have otherwise considered.

You can organise the questions logically, with a clear progression from simple to complex, or randomly between respondents. A logical flow helps respondents process the questionnaire more easily and quickly, but it may lead to bias. Randomisation can minimise the bias from order effects.

Questionnaires can be self-administered or researcher-administered.

Self-administered questionnaires can be delivered online or in paper-and-pen formats, in person or by post. All questions are standardised so that all respondents receive the same questions with identical wording.

Researcher-administered questionnaires are interviews that take place by phone, in person, or online between researchers and respondents. You can gain deeper insights by clarifying questions for respondents or asking follow-up questions.

In a controlled experiment, all extraneous variables are held constant so that they can’t influence the results. Controlled experiments require:

- A control group that receives a standard treatment, a fake treatment, or no treatment

- Random assignment of participants to ensure the groups are equivalent

Depending on your study topic, there are various other methods of controlling variables.

An experimental group, also known as a treatment group, receives the treatment whose effect researchers wish to study, whereas a control group does not. They should be identical in all other ways.

A true experiment (aka a controlled experiment) always includes at least one control group that doesn’t receive the experimental treatment.

However, some experiments use a within-subjects design to test treatments without a control group. In these designs, you usually compare one group’s outcomes before and after a treatment (instead of comparing outcomes between different groups).

For strong internal validity, it’s usually best to include a control group if possible. Without a control group, it’s harder to be certain that the outcome was caused by the experimental treatment and not by other variables.

A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analysing data from people using questionnaires.

A Likert scale is a rating scale that quantitatively assesses opinions, attitudes, or behaviours. It is made up of four or more questions that measure a single attitude or trait when response scores are combined.

To use a Likert scale in a survey, you present participants with Likert-type questions or statements, and a continuum of items, usually with five or seven possible responses, to capture their degree of agreement.

Individual Likert-type questions are generally considered ordinal data, because the response options have a clear rank order but are not necessarily evenly spaced.

Overall Likert scale scores are sometimes treated as interval data. These scores are considered to have directionality and even spacing between them.
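Combining item responses into an overall score can be sketched as follows. The five response labels, their 1 to 5 coding, and the sample answers are assumptions made for this example; actual instruments define their own labels and scoring rules.

```python
# Assumed coding of a five-point agreement scale.
LABELS = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def scale_score(responses):
    """Sum the numeric codes of one respondent's answers to all items."""
    return sum(LABELS[label.lower()] for label in responses)


# Hypothetical answers from one respondent to a four-item scale.
answers = ["agree", "strongly agree", "disagree", "agree"]
score = scale_score(answers)
print(f"Overall scale score: {score} (possible range {len(answers)} to {5 * len(answers)})")
```

Each single answer remains an ordinal category; only the summed score is the quantity that is sometimes treated as interval data.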

The type of data determines what statistical tests you should use to analyse your data.

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess. It should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Cross-sectional studies are less expensive and time-consuming than many other types of study. They can provide useful insights into a population’s characteristics and identify correlations for further research.

Sometimes only cross-sectional data are available for analysis; other times your research question may only require a cross-sectional study to answer it.

Cross-sectional studies cannot establish a cause-and-effect relationship or analyse behaviour over a period of time. To investigate cause and effect, you need to do a longitudinal study or an experimental study.

Longitudinal studies and cross-sectional studies are two different types of research design. In a cross-sectional study you collect data from a population at a specific point in time; in a longitudinal study you repeatedly collect data from the same sample over an extended period of time.

Longitudinal studies are better for establishing the correct sequence of events, identifying changes over time, and providing insight into cause-and-effect relationships, but they also tend to be more expensive and time-consuming than other types of studies.

The 1970 British Cohort Study, which has collected data on the lives of 17,000 Brits since their births in 1970, is one well-known example of a longitudinal study.

Longitudinal studies can last anywhere from weeks to decades, although they tend to be at least a year long.

A correlation reflects the strength and/or direction of the association between two or more variables.

- A positive correlation means that both variables change in the same direction.

- A negative correlation means that the variables change in opposite directions.

- A zero correlation means there’s no relationship between the variables.

A correlational research design investigates relationships between two variables (or more) without the researcher controlling or manipulating any of them. It’s a non-experimental type of quantitative research.

A correlation coefficient is a single number that describes the strength and direction of the relationship between your variables.

Different types of correlation coefficients might be appropriate for your data based on their levels of measurement and distributions. The Pearson product-moment correlation coefficient (Pearson’s r) is commonly used to assess a linear relationship between two quantitative variables.
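As a small worked example of Pearson’s r, the sketch below computes the coefficient directly from its definition (the covariance of the two variables divided by the product of their spreads). The function name and the data are invented for illustration.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when a variable does not vary")
    return sxy / sqrt(sxx * syy)

x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2, 4, 6, 8, 10]))  # 1.0  (positive: both rise together)
print(pearson_r(x, [10, 8, 6, 4, 2]))  # -1.0 (negative: one rises, one falls)
print(pearson_r(x, [4, 1, 0, 1, 4]))   # 0.0  (no linear relationship)
```

The last data set follows a clear U-shaped pattern even though r is zero, which is a reminder that Pearson’s r only describes linear relationships.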

Controlled experiments establish causality, whereas correlational studies only show associations between variables.

- In an experimental design, you manipulate an independent variable and measure its effect on a dependent variable. Other variables are controlled so they can’t impact the results.

- In a correlational design, you measure variables without manipulating any of them. You can test whether your variables change together, but you can’t be sure that one variable caused a change in another.

In general, correlational research is high in external validity while experimental research is high in internal validity.

The third variable and directionality problems are two main reasons why correlation isn’t causation.

The third variable problem means that a confounding variable affects both variables to make them seem causally related when they are not.

The directionality problem is when two variables correlate and might actually have a causal relationship, but it’s impossible to conclude which variable causes changes in the other.
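The third variable problem described above can be sketched with a partial correlation, which estimates the association between two variables after a third has been statistically held constant. The data below are constructed by hand so that `var_a` and `var_b` are each driven by `confounder` and have no link of their own; all names and numbers are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def partial_r(x, y, z):
    """Correlation between x and y with z held constant (first-order partial)."""
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Made-up data: each variable equals the confounder plus its own unrelated noise.
confounder = [1, 1, 1, 1, 5, 5, 5, 5]
var_a = [2, 0, 2, 0, 6, 4, 6, 4]
var_b = [2, 2, 0, 0, 6, 6, 4, 4]

print(round(pearson_r(var_a, var_b), 3))            # 0.8, a strong raw correlation
print(abs(partial_r(var_a, var_b, confounder)) < 1e-9)  # True: it vanishes once the confounder is controlled
```

A partial correlation only helps with third variables you have actually measured, and it says nothing about the directionality problem.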

As a rule of thumb, questions related to thoughts, beliefs, and feelings work well in focus groups. Take your time formulating strong questions, paying special attention to phrasing. Be careful to avoid leading questions, which can bias your responses.

Overall, your focus group questions should be:

- Open-ended and flexible

- Impossible to answer with ‘yes’ or ‘no’ (questions that start with ‘why’ or ‘how’ are often best)

- Unambiguous, getting straight to the point while still stimulating discussion

- Unbiased and neutral

Social desirability bias is the tendency for interview participants to give responses that will be viewed favourably by the interviewer or other participants. It occurs in all types of interviews and surveys, but is most common in semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias can be mitigated by ensuring participants feel at ease and comfortable sharing their views. Make sure to pay attention to your own body language and any physical or verbal cues, such as nodding or widening your eyes.

This type of bias in research can also occur in observations if the participants know they’re being observed. They might alter their behaviour accordingly.

A focus group is a research method that brings together a small group of people to answer questions in a moderated setting. The group is chosen due to predefined demographic traits, and the questions are designed to shed light on a topic of interest. It is one of four types of interviews.

The four most common types of interviews are:

- Structured interviews: The questions are predetermined in both topic and order.

- Semi-structured interviews: A few questions are predetermined, but other questions aren’t planned.

- Unstructured interviews: None of the questions are predetermined.

- Focus group interviews: The questions are presented to a group instead of one individual.

An unstructured interview is the most flexible type of interview, but it is not always the best fit for your research topic.

Unstructured interviews are best used when:

- You are an experienced interviewer and have a very strong background in your research topic, since it is challenging to ask spontaneous, colloquial questions

- Your research question is exploratory in nature. While you may have developed hypotheses, you are open to discovering new or shifting viewpoints through the interview process.

- You are seeking descriptive data, and are ready to ask questions that will deepen and contextualise your initial thoughts and hypotheses

- Your research depends on forming connections with your participants and making them feel comfortable revealing deeper emotions, lived experiences, or thoughts

A semi-structured interview is a blend of structured and unstructured types of interviews. Semi-structured interviews are best used when:

- You have prior interview experience. Spontaneous questions are deceptively challenging, and it’s easy to accidentally ask a leading question or make a participant uncomfortable.

- Your research question is exploratory in nature. Participant answers can guide future research questions and help you develop a more robust knowledge base for future research.

The interviewer effect is a type of bias that emerges when a characteristic of an interviewer (race, age, gender identity, etc.) influences the responses given by the interviewee.

There is a risk of an interviewer effect in all types of interviews, but it can be mitigated by writing high-quality interview questions.

A structured interview is a data collection method that relies on asking questions in a set order to collect data on a topic. Structured interviews are often quantitative in nature. They are best used when:

- You already have a very clear understanding of your topic. Perhaps significant research has already been conducted, or you have done some prior research yourself. Either way, you already possess a baseline for designing strong structured questions.

- You are constrained in terms of time or resources and need to analyse your data quickly and efficiently

- Your research question depends on strong parity between participants, with environmental conditions held constant

More flexible interview options include semi-structured interviews, unstructured interviews, and focus groups.

When conducting research, collecting original data has significant advantages:

- You can tailor data collection to your specific research aims (e.g., understanding the needs of your consumers or user testing your website).

- You can control and standardise the process for high reliability and validity (e.g., choosing appropriate measurements and sampling methods).

However, there are also some drawbacks: data collection can be time-consuming, labour-intensive, and expensive. In some cases, it’s more efficient to use secondary data that has already been collected by someone else, but the data might be less reliable.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organisations.

A mediator variable explains the process through which two variables are related, while a moderator variable affects the strength and direction of that relationship.

A confounder is a third variable that affects variables of interest and makes them seem related when they are not. In contrast, a mediator is the mechanism of a relationship between two variables: it explains the process by which they are related.

If something is a mediating variable:

- It’s caused by the independent variable

- It influences the dependent variable

- When it’s statistically controlled for, the association between the independent and dependent variables becomes weaker than when it isn’t considered

Including mediators and moderators in your research helps you go beyond studying a simple relationship between two variables for a fuller picture of the real world. They are important to consider when studying complex correlational or causal relationships.

Mediators are part of the causal pathway of an effect, and they tell you how or why an effect takes place. Moderators usually help you judge the external validity of your study by identifying the limitations of when the relationship between variables holds.

You can think of independent and dependent variables in terms of cause and effect: an independent variable is the variable you think is the cause, while a dependent variable is the effect.

In an experiment, you manipulate the independent variable and measure the outcome in the dependent variable. For example, in an experiment about the effect of nutrients on crop growth:

- The independent variable is the amount of nutrients added to the crop field.

- The dependent variable is the biomass of the crops at harvest time.

Defining your variables, and deciding how you will manipulate and measure them, is an important part of experimental design.

Discrete and continuous variables are two types of quantitative variables:

- Discrete variables represent counts (e.g., the number of objects in a collection).

- Continuous variables represent measurable amounts (e.g., water volume or weight).

Quantitative variables are any variables where the data represent amounts (e.g. height, weight, or age).

Categorical variables are any variables where the data represent groups. This includes rankings (e.g. finishing places in a race), classifications (e.g. brands of cereal), and binary outcomes (e.g. coin flips).

You need to know what type of variables you are working with to choose the right statistical test for your data and interpret your results.

Determining cause and effect is one of the most important parts of scientific research. It’s essential to know which is the cause – the independent variable – and which is the effect – the dependent variable.

You want to find out how blood sugar levels are affected by drinking diet cola and regular cola, so you conduct an experiment.

- The type of cola – diet or regular – is the independent variable.

- The level of blood sugar that you measure is the dependent variable – it changes depending on the type of cola.

A variable cannot be both independent and dependent at the same time, because the value of a dependent variable depends on an independent variable. It must be either the cause or the effect, not both.

You can include more than one independent or dependent variable in a study, but including more than one of either type requires multiple research questions.

For example, if you are interested in the effect of a diet on health, you can use multiple measures of health: blood sugar, blood pressure, weight, pulse, and many more. Each of these is its own dependent variable with its own research question.

You could also choose to look at the effect of exercise levels as well as diet, or even the additional effect of the two combined. Each of these is a separate independent variable.

To ensure the internal validity of an experiment, you should only change one independent variable at a time.

To ensure the internal validity of your research, you must consider the impact of confounding variables. If you fail to account for them, you might over- or underestimate the causal relationship between your independent and dependent variables, or even find a causal relationship where none exists.

A confounding variable is closely related to both the independent and dependent variables in a study. An independent variable represents the supposed cause, while the dependent variable is the supposed effect. A confounding variable is a third variable that influences both the independent and dependent variables.

Failing to account for confounding variables can cause you to wrongly estimate the relationship between your independent and dependent variables.