Where scientists empower society

Creating solutions for healthy lives on a healthy planet.

most-cited publisher

largest publisher

2.5 billion

article views and downloads

Find a journal

We have a home for your research. Our community-led journals cover more than 1,500 academic disciplines and are some of the largest and most cited in their fields.

Submit your research

Start your submission and get more impact for your research by publishing with us.

Author guidelines

Ready to publish? Check our author guidelines for everything you need to know about submitting, from choosing a journal and section to preparing your manuscript.

Peer review

Our efficient collaborative peer review means you’ll get a decision on your manuscript in an average of 61 days.

Article publishing charges (APCs) apply to articles that are accepted for publication by our external and independent editorial boards.

Press office

Visit our press office for key media contact information, as well as Frontiers’ media kit, including our embargo policy, logos, key facts, leadership bios, and imagery.

Institutional partnerships

Join more than 555 institutions around the world already benefiting from an institutional membership with Frontiers, including CERN, Max Planck Society, and the University of Oxford.

Publishing partnerships

Partner with Frontiers and make your society’s transition to open access a reality with our custom-built platform and publishing expertise.

Policy Labs

Connecting experts from business, science, and policy to strengthen the dialogue between scientific research and informed policymaking.

How we publish

All Frontiers journals are community-run and fully open access, so every research article we publish is immediately and permanently free to read.

Editor guidelines

Reviewing a manuscript? See our guidelines for everything you need to know about our peer review process.

Become an editor

Apply to join an editorial board and collaborate with an international team of carefully selected independent researchers.

My assignments

It’s easy to find and track your editorial assignments with our platform, 'My Frontiers' – saving you time to spend on your own research.

Scientists call for urgent action to prevent immune-mediated illnesses caused by climate change and biodiversity loss

Climate change, pollution, and collapsing biodiversity are damaging our immune systems, but improving the environment offers effective and fast-acting protection.

Safeguarding peer review to ensure quality at scale

Making scientific research open has never been more important. But for research to be trusted, it must be of the highest quality. Facing an industry-wide rise in fraudulent science, Frontiers has increased its focus on safeguarding quality.

Chronic stress and inflammation linked to societal and environmental impacts in new study

Scientists hypothesize that as-yet unrecognized inflammatory stress is spreading among people at unprecedented rates, affecting our cognitive ability to address climate change, war, and other critical issues.

Tiny crustaceans discovered preying on live jellyfish during harsh Arctic night

Scientists used DNA metabarcoding to show for the first time that jellyfish are an important food for amphipods during the Arctic polar night in waters off Svalbard, at a time of year when other food resources are scarce.

Why studying astronauts’ microbiomes is crucial to ensure deep space mission success

In a new Frontiers guest editorial, Prof Dr Lembit Sihver, director of CRREAT at the Nuclear Physics Institute of the Czech Academy of Sciences, and his co-authors explore the impact the microbiome has on human health in space.

Cake and cookies may increase Alzheimer’s risk: Here are five Frontiers articles you won’t want to miss

At Frontiers, we bring some of the world’s best research to a global audience. But with tens of thousands of articles published each year, it’s impossible to cover all of them. Here are just five amazing papers you may have missed.

2024's top 10 tech-driven Research Topics

Frontiers has compiled a list of 10 Research Topics that embrace the potential of technology to advance scientific breakthroughs and change the world for the better.

Scientific Journals

A nitrogen-fixing organelle, or “nitroplast,” has been identified in a marine alga on the basis of intracellular imaging and proteomic evidence. This discovery sheds light on the evolutionary transition from endosymbiont to organelle. The image depicts the cell architecture and synchronized cell division of the alga Braarudosphaera bigelowii with nitroplast UCYN-A (large brown spheres). See pages 160 and 217. Image: N. Burgess/Science; Data: Tyler Coale et al., University of California Santa Cruz

Trucks transporting bauxite along a mining hauling road in Guinea. The demand for minerals required for clean energy technologies has fueled extensive exploration into wildlife habitats. Junker et al. integrated a global mining dataset with great ape density distribution and found that up to one-third of Africa’s great ape population faces mining-related risks. Apes in West Africa could be most severely affected, where up to 82% of the population overlaps with mining locations. The findings suggest the need to make environmental data accessible to enable assessments of the impact of mining on wildlife populations. Credit: Geneviève Campbell

Cultivating Memory B Cell Responses to a Plant-Based Vaccine. CoVLP (coronavirus virus-like particle) is a promising COVID-19 vaccine produced in the weed Nicotiana benthamiana. A squalene-based adjuvant, AS03, can enhance immune responses to CoVLP vaccination, but how AS03 affects memory B cell responses to CoVLP is unknown. Grigoryan et al. studied immune responses in healthy individuals who received two doses of CoVLP with or without AS03. They found that AS03 promoted the progressive maturation of memory B cell responses over time, leading to enhanced neutralization of SARS-CoV-2 and increased memory B cell breadth. This month’s cover illustration depicts a syringe containing a plant-based SARS-CoV-2 vaccine. Credit: N. Jessup/Science Immunology

Special Issue on Legged Robots. Developing legged robots capable of complex motor skills is a major challenge for roboticists. Haarnoja et al. used deep reinforcement learning to train miniature humanoid robots, Robotis OP3, to play a game of one-versus-one soccer. The robots were capable of exhibiting not only agile movements, such as walking, kicking the ball, and rapid recovery from falls, but also emergent behaviors to adapt to the game scenario, such as subtle defensive moves and dynamic footwork in response to the opponent. This month’s cover is an image of the miniature humanoid robot kicking a ball. Credit: Google DeepMind

This week, Ribeiro et al. report that DNA damage induced by the blockade of lipid synthesis in prostate cancer increases the effectiveness of PARP inhibition. The image shows a tissue section of human prostate cancer. Image: Nigel Downer/Science Source

Disconnecting Inflammation from Pain. The cover shows immunostaining of vascular endothelium (CD31, magenta) and calcitonin gene-related peptide axons (CGRP, cyan) in the synovium from an individual with rheumatoid arthritis, indicating pain-sensitive neuron sprouting in the joint. Rheumatoid arthritis pain does not always correlate with inflammation in joints. Using machine learning, Bai et al. used different cohorts of patients to identify hundreds of genes that were both involved in patient-reported pain and not associated with inflammation. These genes were mostly expressed in fibroblasts lining the synovium that interacted with CGRP-expressing neurons. This study may help identify new targets for treating rheumatoid arthritis pain. Credit: Bai et al./Science Translational Medicine

April 9, 2024

PLOS ONE

An inclusive journal community working together to advance science by making all rigorous research accessible without barriers

Calling all experts!

PLOS ONE is seeking talented individuals to join our editorial board.

Computational Biology

See Elegans: Simple-to-use, accurate, and automatic 3D detection of neural activity from densely packed neurons

Lanza and colleagues present a novel method (See Elegans) for automatic neuron segmentation and tracking in C. elegans.

Image credit: Fig 2 by Lanza et al., CC BY 4.0

Pharmacology

Enhancing radioprotection: A chitosan-based chelating polymer is a versatile radioprotective agent for prophylactic and therapeutic interventions against radionuclide contamination

Durand and colleagues report the evaluation of a functionalized chitosan polymer for treating exposure to radioactive isotopes, including uranium, for which there are no suitable current countermeasures.

Image credit: Radioactive Materials Area by Kerry, CC BY 2.0

Mental Health

Cross-cultural variation in experiences of acceptance, camouflaging and mental health difficulties in autism: A registered report

In this Registered Report, Keating and colleagues explore the relationship between autism acceptance, camouflaging, and mental health in a cross-cultural sample of autistic adults.

Image credit: man-390342_1280 by PDPPics, Pixabay

The double-edged scalpel: Experiences and perceptions of pregnancy and parenthood during Canadian surgical residency training

Peters and colleagues survey female surgical trainees, who report experiencing higher rates of pregnancy complications when compared to non-surgical counterparts, more negative stigma and bias, and other social and logistical challenges. This highlights the need to create a culture where both birthing and non-birthing parents are empowered and supported.

Image credit: woman-1284353_1280 by Pexels, Pixabay

International Day of Women and Girls in Science – Interview with Dr. Swetavalli Raghavan

PLOS ONE Associate Editor Dr Johanna Pruller interviews Dr Swetavalli Raghavan, full professor and founder of Scientists & Co. about mentorship, role models, and the changing landscape for women in science.

Image credit: Dr Swetavalli Raghavan by EveryONE, CC BY 4.0

International Women’s Day – Interview with PLOS ONE Academic Editor Dr. Siaw Shi Boon

PLOS ONE Senior Editor Dr Jianhong Zhou interviews PLOS ONE Academic Editor Dr Siaw Shi Boon about her path to becoming a scientist, challenges facing women in science, and how to encourage more women to become scientists.

Image credit: Dr Siaw Shi Boon by EveryONE, CC BY 4.0

Editor Spotlight: Simon Porcher

In this interview, PLOS ONE Academic Editor Dr Simon Porcher discusses his role as editor, enhancing reproducibility in scientific reporting, and how we can act in future pandemics.

Image credit: Simon Porcher by EveryONE, CC BY 4.0

Child development

Childhood experiences and sleep problems: A cross-sectional study on the indirect relationship mediated by stress, resilience and anxiety

Ashour and colleagues investigate the relationship between childhood experiences and sleep quality in adulthood.

Image credit: Alarm Clock by Congerdesign, Pixabay

Sports and exercise medicine

Responsiveness of respiratory function in Parkinson’s Disease to an integrative exercise programme: A prospective cohort study

McMahon and colleagues report the effectiveness of an exercise intervention on respiratory function.

Image credit: Side view doctor looking at radiography by Freepik, Freepik

The great urban shift: Climate change is predicted to drive mass species turnover in cities

Filazzola and colleagues model how terrestrial wildlife within 60 Canadian and American cities will be affected by climate change.

Image credit: Fig 3 by Filazzola et al., CC BY 4.0

Accessibility

Color Quest: An interactive tool for exploring color palettes and enhancing accessibility in data visualization

Nelli presents an open-source tool for visualizing how various data plots appear to individuals with color blindness, towards improved accessibility.

Image credit: Fig 1 by Nelli et al., CC BY 4.0

Collections

Browse the latest collections of papers from across PLOS.

Watch this space for future collections of papers in PLOS ONE.

Conferences 2024

New opportunities to meet our editorial staff in 2024 will be announced soon.

Research Methods: How to Perform an Effective Peer Review

- What Is the Purpose of Peer Review?

- What Makes a Good Peer Reviewer?

- How Do You Decide Whether to Review a Paper?

- How Do You Complete a Peer Review?

- Limitations of Peer Review

- Conclusions

Elise Peterson Lu, Brett G. Fischer, Melissa A. Plesac, Andrew P.J. Olson; Research Methods: How to Perform an Effective Peer Review. Hosp Pediatr November 2022; 12 (11): e409–e413. https://doi.org/10.1542/hpeds.2022-006764

Scientific peer review has existed for centuries and is a cornerstone of the scientific publication process. Because the number of scientific publications has rapidly increased over the past decades, so has the number of peer reviews and peer reviewers. In this paper, drawing on the relevant medical literature and our collective experience as peer reviewers, we provide a user guide to the peer review process, including discussion of the purpose and limitations of peer review, the qualities of a good peer reviewer, and a step-by-step process of how to conduct an effective peer review.

Peer review has been a part of scientific publications since 1665, when the Philosophical Transactions of the Royal Society became the first publication to formalize a system of expert review. 1 , 2 It became an institutionalized part of science in the latter half of the 20th century and is now the standard in scientific research publications. 3 In 2012, there were more than 28 000 scholarly peer-reviewed journals, and more than 3 million peer-reviewed articles are now published annually. 3 , 4 However, even with this volume, most peer reviewers learn to review “on the (unpaid) job” and no standard training system exists to ensure quality and consistency. 5 Expectations and format vary between journals and most, but not all, provide basic instructions for reviewers. In this paper, we provide a general introduction to the peer review process and identify common strategies for success as well as pitfalls to avoid.

Modern peer review serves 2 primary purposes: (1) as “a screen before the diffusion of new knowledge” 6 and (2) as a method to improve the quality of published work. 1 , 5

As screeners, peer reviewers evaluate the quality, validity, relevance, and significance of research before publication to maintain the credibility of the publications they serve and their fields of study. 1 , 2 , 7 Although peer reviewers are not the final decision makers on publication (that role belongs to the editor), their recommendations affect editorial decisions and thoughtful comments influence an article’s fate. 6 , 8

As advisors and evaluators of manuscripts, reviewers have an opportunity and responsibility to give authors an outside expert’s perspective on their work. 9 They provide feedback that can improve methodology, enhance rigor, improve clarity, and redefine the scope of articles. 5 , 8 , 10 This often happens even if a paper is not ultimately accepted at the reviewer’s journal because peer reviewers’ comments are incorporated into revised drafts that are submitted to another journal. In a 2019 survey of authors, reviewers, and editors, 83% said that peer review helps science communication and 90% of authors reported that peer review improved their last paper. 11

Expertise: Peer reviewers should be up to date with current literature, practice guidelines, and methodology within their subject area. However, academic rank and seniority do not define expertise and are not actually correlated with performance in peer review. 13

Professionalism: Reviewers should be reliable and objective, aware of their own biases, and respectful of the confidentiality of the peer review process.

Critical skill: Reviewers should be organized, thorough, and detailed in their critique with the goal of improving the manuscript under their review, regardless of disposition. They should provide constructive comments that are specific and addressable, referencing literature when possible. A peer reviewer should leave a paper better than he or she found it.

Is the manuscript within your area of expertise? Generally, if you are asked to review a paper, it is because an editor felt that you were a qualified expert. In a 2019 survey, 74% of requested reviews were within the reviewer’s area of expertise. 11 This, of course, does not mean that you must be widely published in the area, only that you have enough expertise and comfort with the topic to critique and add to the paper.

Do you have any biases that may affect your review? Are there elements of the methodology, content area, or theory with which you disagree? Some disagreements between authors and reviewers are common, expected, and even helpful. However, if a reviewer fundamentally disagrees with an author’s premise such that he or she cannot be constructive, the review invitation should be declined.

Do you have the time? The average review for a clinical journal takes 5 to 6 hours, though many take longer depending on the complexity of the research and the experience of the reviewer. 1 , 14 Journals vary on the requested timeline for return of reviews, though it is usually 1 to 4 weeks. Peer review is often the longest part of the publication process and delays contribute to slower dissemination of important work and decreased author satisfaction. 15 Be mindful of your schedule and only accept a review invitation if you can reasonably return the review in the requested time.

Once you have determined that you are the right person and decided to take on the review, reply to the inviting e-mail or click the associated link to accept (or decline) the invitation. Journal editors invite a limited number of reviewers at a time and wait for responses before inviting others. A common complaint among journal editors surveyed was that reviewers would often take days to weeks to respond to requests, or not respond at all, making it difficult to find appropriate reviewers and prolonging an already long process. 5

Now that you have decided to take on the review, it is best to have a systematic way of both evaluating the manuscript and writing the review. Various suggestions exist in the literature, but we will describe our standard procedure for review, incorporating specific dos and don’ts summarized in Table 1.

Dos and Don’ts of Peer Review

First, read the manuscript once without making notes or forming opinions to get a sense of the paper as a whole. Assess the overall tone and flow and define what the authors identify as the main point of their work. Does the work overall make sense? Do the authors tell the story effectively?

Next, read the manuscript again with an eye toward review, taking notes and formulating thoughts on strengths and weaknesses. Consider the methodology and identify the specific type of research described. Refer to the corresponding reporting guideline if applicable (CONSORT for randomized controlled trials, STROBE for observational studies, PRISMA for systematic reviews). Reporting guidelines often include a checklist, flow diagram, or structured text giving a minimum list of information needed in a manuscript based on the type of research done. 16 This allows the reviewer to formulate a more nuanced and specific assessment of the manuscript.

Next, review the main findings, the significance of the work, and what contribution it makes to the field. Examine the presentation and flow of the manuscript but do not copy edit the text. At this point, you should start to write your review. Some journals provide a format for their reviews, but often it is up to the reviewer. In surveys of journal editors and reviewers, a review organized by manuscript section was the most favored, 5 , 6 so that is what we will describe here.

As you write your review, consider starting with a brief summary of the work that identifies the main topic, explains the basic approach, and describes the findings and conclusions. 12 , 17 Though not universally included in all reviews, we have found this step to be helpful in ensuring that the work is conveyed clearly enough for the reviewer to summarize it. Include brief notes on the significance of the work and what it adds to current knowledge. Critique the presentation of the work: is it clearly written? Is its length appropriate? List any major concerns with the work overall, such as major methodological flaws or inaccurate conclusions that should disqualify it from publication, though do not comment directly on disposition. Then perform your review by section:

Abstract: Is it consistent with the rest of the paper? Does it adequately describe the major points?

Introduction: This section should provide adequate background to explain the need for the study. Generally, classic or highly relevant studies should be cited, but citations do not have to be exhaustive. The research question and hypothesis should be clearly stated.

Methods: Evaluate both the methods themselves and the way in which they are explained. Does the methodology used meet the needs of the questions proposed? Is there sufficient detail to explain what the authors did and, if not, what needs to be added? For clinical research, examine the inclusion/exclusion criteria, control populations, and possible sources of bias. Reporting guidelines can be particularly helpful in determining the appropriateness of the methods and how they are reported.

Some journals will expect an evaluation of the statistics used, whereas others will have a separate statistician evaluate, and the reviewers are generally not expected to have an exhaustive knowledge of statistical methods. Clarify expectations if needed and, if you do not feel qualified to evaluate the statistics, make this clear in your review.

Results: Evaluate the presentation of the results. Is information given in sufficient detail to assess credibility? Are the results consistent with the methodology reported? Are the figures and tables consistent with the text, easy to interpret, and relevant to the work? Make note of data that could be better detailed in figures or tables, rather than included in the text. Make note of inappropriate interpretation in the results section (this should be in discussion) or rehashing of methods.

Discussion: Evaluate the authors’ interpretation of their results, how they address limitations, and the implications of their work. How does the work contribute to the field, and do the authors adequately describe those contributions? Make note of overinterpretation or conclusions not supported by the data.

The length of your review often correlates with your opinion of the quality of the work. If an article has major flaws that you think preclude publication, write a brief review that focuses on the big picture. Articles that may not be accepted but still represent quality work merit longer reviews aimed at helping the author improve the work for resubmission elsewhere.

Generally, do not include your recommendation on disposition in the body of the review itself. Acceptance or rejection is ultimately determined by the editor and including your recommendation in your comments to the authors can be confusing. A journal editor’s decision on acceptance or rejection may depend on more factors than just the quality of the work, including the subject area, journal priorities, other contemporaneous submissions, and page constraints.

Many submission sites include a separate question asking whether to accept, accept with major revision, or reject. If this specific format is not included, then add your recommendation in the “confidential notes to the editor.” Your recommendation should be consistent with the content of your review: don’t give a glowing review but recommend rejection or harshly criticize a manuscript but recommend publication. Last, regardless of your ultimate recommendation on disposition, it is imperative to use respectful and professional language and tone in your written review.

Although peer review is often described as the “gatekeeper” of science and characterized as a quality control measure, peer review is not ideally designed to detect fundamental errors, plagiarism, or fraud. In multiple studies, peer reviewers detected only 20% to 33% of intentionally inserted errors in scientific manuscripts. 18 , 19 Plagiarism similarly is not detected in peer review, largely because of the huge volume of literature available to plagiarize. Most journals now use computer software to identify plagiarism before a manuscript goes to peer review. Finally, outright fraud often goes undetected in peer review. Reviewers start from a position of respect for the authors and trust the data they are given barring obvious inconsistencies. Ultimately, reviewers are “gatekeepers, not detectives.” 7

Peer review is also limited by bias. Even with the best of intentions, reviewers bring biases including but not limited to prestige bias, affiliation bias, nationality bias, language bias, gender bias, content bias, confirmation bias, bias against interdisciplinary research, publication bias, conservatism, and bias of conflict of interest. 3 , 4 , 6 For example, peer reviewers score methodology higher and are more likely to recommend publication when prestigious author names or institutions are visible. 20 Although bias can be mitigated both by the reviewer and by the journal, it cannot be eliminated. Reviewers should be mindful of their own biases while performing reviews and work to actively mitigate them. For example, if English language editing is necessary, state this with specific examples rather than suggesting the authors seek editing by a “native English speaker.”

Peer review is an essential, though imperfect, part of the forward movement of science. Peer review can function as both a gatekeeper to protect the published record of science and a mechanism to improve research at the level of individual manuscripts. Here, we have described our strategy, summarized in Table 2, for performing a thorough peer review, with a focus on organization, objectivity, and constructiveness. By using a systematized strategy to evaluate manuscripts and an organized format for writing reviews, you can provide a relatively objective perspective in editorial decision-making. By providing specific and constructive feedback to authors, you contribute to the quality of the published literature.

Take-home Points

FUNDING: No external funding.

CONFLICT OF INTEREST DISCLOSURES: The authors have indicated they have no potential conflicts of interest to disclose.

Dr Lu performed the literature review and wrote the manuscript. Dr Fischer assisted in the literature review and reviewed and edited the manuscript. Dr Plesac provided background information on the process of peer review, reviewed and edited the manuscript, and completed revisions. Dr Olson provided background information and practical advice, critically reviewed and revised the manuscript, and approved the final manuscript.

- Online ISSN 2154-1671

- Print ISSN 2154-1663

Preserving the Quality of Scientific Research: Peer Review of Research Articles

- First Online: 20 January 2017

Pali U. K. De Silva & Candace K. Vance

Part of the book series: Fascinating Life Sciences (FLS)

Peer review of scholarly articles is a mechanism used to assess and preserve the trustworthiness of reporting of scientific findings. Since peer reviewing is a qualitative evaluation system that involves the judgment of experts in a field about the quality of research performed by their colleagues (and competitors), it inherently encompasses a strongly subjective element. Although this time-tested system, which has been evolving since the mid-eighteenth century, is being questioned and criticized for its deficiencies, it is still considered an integral part of the scholarly communication system, as no other procedure has been proposed to replace it. Therefore, to improve and strengthen the existing peer review process, it is important to understand its shortcomings and to continue the constructive deliberations of all participants within the scientific scholarly communication system. This chapter discusses the strengths, issues, and deficiencies of the peer review system, conventional closed models (single-blind and double-blind), and the new open peer review model and its variations that are being experimented with by some journals.

- Article peer review system

- Closed peer review

- Open peer review

- Scientific journal publishing

- Single blind peer reviewing

- Article retraction

- Nonselective review

- Post-publication review system

- Double blind peer reviewing

Evaluative criteria may also vary depending on the scope of the specific journal.

Krebs and Johnson ( 1937 ).

McClintock ( 1950 ).

Bombardier et al. ( 2000 ).

“Nature journals offer double-blind review” Nature announcement— http://www.nature.com/news/nature-journals-offer-double-blind-review-1.16931 .

Contains all versions of the manuscript, named reviewer reports, author responses, and (where relevant) editors’ comments (Moylan et al. 2014 ).

https://www.elsevier.com/about/press-releases/research-and-journals/peer-review-survey-2009-preliminary-findings .

Review guidelines, Frontiers in Neuroscience http://journal.frontiersin.org/journal/synaptic-neuroscience#review-guidelines .

Editorial policies - BioMed Central http://www.biomedcentral.com/getpublished/editorial-policies#peer+review .

Hydrology and Earth System Sciences Interactive Public Peer Review http://www.hydrology-and-earth-system-sciences.net/peer_review/interactive_review_process.html .

Copernicus Publications http://publications.copernicus.org/services/public_peer_review.html .

Copernicus Publications - Interactive Public Peer Review http://home.frontiersin.org/about/impact-and-tiering .

Biology Direct http://www.biologydirect.com/ .

F1000 Research http://f1000research.com .

GigaScience http://www.gigasciencejournal.com

Journal of Negative Results in Biomedicine http://www.jnrbm.com/ .

BMJOpen http://bmjopen.bmj.com/ .

PeerJ http://peerj.com/ .

ScienceOpen https://www.scienceopen.com .

ArXiv http://arxiv.org .

Retraction of articles from Springer journals. London: Springer, August 18, 2015 ( http://www.springer.com/gp/about-springer/media/statements/retraction-of-articles-from-springer-journals/735218 ).

COPE statement on inappropriate manipulation of peer review processes ( http://publicationethics.org/news/cope-statement-inappropriate-manipulation-peer-review-processes ).

Hindawi concludes an in-depth investigation into peer review fraud, July 2015 ( http://www.hindawi.com/statement/ ).

Wakefield, A. J., Murch, S. H., Anthony, A., Linnell, J., Casson, D. M., Malik, M., ... & Valentine, A. (1998). Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. The Lancet, 351 (9103), 637–641. (RETRACTED: see The Lancet, 375 (9713), p. 445)

A practice used by researchers to increase the number of articles publishing multiple papers using very similar pieces of a single dataset. The drug industry also uses this tactic to increase publications with positive findings on their products.

Neuroscience Peer Reviewer Consortium http://nprc.incf.org/ .

“About 80% of submitted manuscripts are rejected during this initial screening stage, usually within one week to 10 days.” http://www.sciencemag.org/site/feature/contribinfo/faq/ (accessed on October 18, 2016); “Nature has space to publish only 8% or so of the 200 papers submitted each week” http://www.nature.com/nature/authors/get_published/ (accessed on October 18, 2016).

Code of Conduct and Best Practice Guidelines for Journal Editors http://publicationethics.org/files/Code%20of%20Conduct_2.pdf .

Recommendations for the Conduct, Reporting, Editing, and Publication of Scholarly work in Medical Journals http://www.icmje.org/icmje-recommendations.pdf .

Alberts, B., Hanson, B., & Kelner, K. L. (2008). Reviewing peer review. Science, 321 (5885), 15.


Ali, P. A., & Watson, R. (2016). Peer review and the publication process. Nursing Open . doi: 10.1002/nop2.51 .

Baggs, J. G., Broome, M. E., Dougherty, M. C., Freda, M. C., & Kearney, M. H. (2008). Blinding in peer review: the preferences of reviewers for nursing journals. Journal of Advanced Nursing, 64 (2), 131–138.

Bjork, B.-C., Roos, A., & Lauri, M. (2009). Scientific journal publishing: yearly volume and open access availability. Information Research: An International Electronic Journal, 14 (1).


Bohannon, J. (2013). Who’s afraid of peer review. Science, 342 (6154).

Boldt, A. (2011). Extending ArXiv. org to achieve open peer review and publishing. Journal of Scholarly Publishing, 42 (2), 238–242.


Bombardier, C., Laine, L., Reicin, A., Shapiro, D., Burgos-Vargas, R., Davis, B., … & Kvien, T. K. (2000). Comparison of upper gastrointestinal toxicity of rofecoxib and naproxen in patients with rheumatoid arthritis. New England Journal of Medicine, 343 (21), 1520–1528

Bornmann, L. (2011). Scientific peer review. Annual Review of Information Science and Technology, 45 (1), 197–245.

Bornmann, L., & Daniel, H.-D. (2009). Reviewer and editor biases in journal peer review: An investigation of manuscript refereeing at Angewandte Chemie International Edition. Research Evaluation, 18 (4), 262–272.

Bornmann, L., & Daniel, H. D. (2010). Reliability of reviewers’ ratings when using public peer review: A case study. Learned Publishing, 23 (2), 124–131.

Bornmann, L., Mutz, R., & Daniel, H.-D. (2007). Gender differences in grant peer review: A meta-analysis. Journal of Informetrics, 1 (3), 226–238.

Borsuk, R. M., Aarssen, L. W., Budden, A. E., Koricheva, J., Leimu, R., Tregenza, T., et al. (2009). To name or not to name: The effect of changing author gender on peer review. BioScience, 59 (11), 985–989.

Bosch, X., Pericas, J. M., Hernández, C., & Doti, P. (2013). Financial, nonfinancial and editors’ conflicts of interest in high-impact biomedical journals. European Journal of Clinical Investigation, 43 (7), 660–667.

Brown, R. J. C. (2007). Double anonymity in peer review within the chemistry periodicals community. Learned Publishing, 20 (2), 131–137.

Budden, A. E., Tregenza, T., Aarssen, L. W., Koricheva, J., Leimu, R., & Lortie, C. J. (2008). Double-blind review favours increased representation of female authors. Trends in Ecology & Evolution, 23 (1), 4–6.

Burnham, J. C. (1990). The evolution of editorial peer review. JAMA, 263 (10), 1323–1329.


Callaham, M. L., & Tercier, J. (2007). The relationship of previous training and experience of journal peer reviewers to subsequent review quality. PLoS Med, 4 (1), e40.


Campbell, P. (2006). Peer Review Trial and Debate. Nature . http://www.nature.com/nature/peerreview/debate/

Campbell, P. (2008). Nature peer review trial and debate. Nature: International Weekly Journal of Science, 11

Campos-Arceiz, A., Primack, R. B., & Koh, L. P. (2015). Reviewer recommendations and editors’ decisions for a conservation journal: Is it just a crapshoot? And do Chinese authors get a fair shot? Biological Conservation, 186, 22–27.

Cantor, M., & Gero, S. (2015). The missing metric: Quantifying contributions of reviewers. Royal Society open science, 2 (2), 140540.

CDC. (2016). Measles: Cases and Outbreaks. Retrieved from http://www.cdc.gov/measles/cases-outbreaks.html

Ceci, S. J., & Williams, W. M. (2011). Understanding current causes of women’s underrepresentation in science. Proceedings of the National Academy of Sciences, 108 (8), 3157–3162.


Chan, A. W., Hróbjartsson, A., Haahr, M. T., Gøtzsche, P. C., & Altman, D. G. (2004). Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA, 291 (20), 2457–2465.

Charlton, B. G. (2004). Conflicts of interest in medical science: peer usage, peer review and ‘CoI consultancy’. Medical Hypotheses, 63 (2), 181–186.

Cressey, D. (2014). Journals weigh up double-blind peer review. Nature news .

Dalton, R. (2001). Peers under pressure. Nature, 413 (6852), 102–104.

DeVries, D. R., Marschall, E. A., & Stein, R. A. (2009). Exploring the peer review process: What is it, does it work, and can it be improved? Fisheries, 34 (6), 270–279. doi: 10.1577/1548-8446-34.6.270

Emerson, G. B., Warme, W. J., Wolf, F. M., Heckman, J. D., Brand, R. A., & Leopold, S. S. (2010). Testing for the presence of positive-outcome bias in peer review: A randomized controlled trial. Archives of Internal Medicine, 170 (21), 1934–1939.

Fanelli, D. (2010). Do pressures to publish increase scientists’ bias? An empirical support from US States Data. PLoS ONE, 5 (4), e10271.

Fang, F. C., Steen, R. G., & Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proceedings of the National Academy of Sciences, 109 (42), 17028–17033. doi: 10.1073/pnas.1212247109

Ferguson, C., Marcus, A., & Oransky, I. (2014). Publishing: The peer-review scam. Nature, 515 (7528), 480.

Ford, E. (2015). Open peer review at four STEM journals: An observational overview. F1000Research, 4 .

Fountain, H. (2014). Science journal pulls 60 papers in peer-review fraud. Science, 3, 06.

Freda, M. C., Kearney, M. H., Baggs, J. G., Broome, M. E., & Dougherty, M. (2009). Peer reviewer training and editor support: Results from an international survey of nursing peer reviewers. Journal of Professional Nursing, 25 (2), 101–108.

Gillespie, G. W., Chubin, D. E., & Kurzon, G. M. (1985). Experience with NIH peer review: Researchers’ cynicism and desire for change. Science, Technology and Human Values, 10 (3), 44–54.

Greaves, S., Scott, J., Clarke, M., Miller, L., Hannay, T., Thomas, A., et al. (2006). Overview: Nature’s peer review trial. Nature , 10.

Grieneisen, M. L., & Zhang, M. (2012). A comprehensive survey of retracted articles from the scholarly literature. PLoS ONE, 7 (10), e44118.


Grivell, L. (2006). Through a glass darkly. EMBO Reports, 7 (6), 567–570.

Harrison, C. (2004). Peer review, politics and pluralism. Environmental Science & Policy, 7 (5), 357–368.

Hartog, C. S., Kohl, M., & Reinhart, K. (2011). A systematic review of third-generation hydroxyethyl starch (HES 130/0.4) in resuscitation: Safety not adequately addressed. Anesthesia and Analgesia, 112 (3), 635–645.

Hojat, M., Gonnella, J. S., & Caelleigh, A. S. (2003). Impartial judgment by the “gatekeepers” of science: Fallibility and accountability in the peer review process. Advances in Health Sciences Education, 8 (1), 75–96.

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Med, 2 (8), e124.

James, M. J., Cook-Johnson, R. J., & Cleland, L. G. (2007). Selective COX-2 inhibitors, eicosanoid synthesis and clinical outcomes: A case study of system failure. Lipids, 42 (9), 779–785.

Janssen, S. J., Bredenoord, A. L., Dhert, W., de Kleuver, M., Oner, F. C., & Verlaan, J.-J. (2015). Potential conflicts of interest of editorial board members from five leading spine journals. PLoS ONE, 10 (6), e0127362.

Jefferson, T., Alderson, P., Wager, E., & Davidoff, F. (2002). Effects of editorial peer review: A systematic review. JAMA, 287 (21), 2784–2786.

Jelicic, M., & Merckelbach, H. (2002). Peer-review: Let’s imitate the lawyers! Cortex, 38 (3), 406–407.

Jinha, A. E. (2010). Article 50 million: An estimate of the number of scholarly articles in existence. Learned Publishing, 23 (3), 258–263.

Khan, K. (2010). Is open peer review the fairest system? No. Bmj, 341, c6425.

Kilwein, J. H. (1999). Biases in medical literature. Journal of Clinical Pharmacy and Therapeutics, 24 (6), 393–396.

Koonin, E. V., Landweber, L. F., & Lipman, D. J. (2013). Biology direct: Celebrating 7 years of open, published peer review. Biology direct, 8 (1), 1.

Kozlowski, L. T. (2016). Coping with the conflict-of-interest pandemic by listening to and doubting everyone, including yourself. Science and Engineering Ethics, 22 (2), 591–596.

Krebs, H. A., & Johnson, W. A. (1937). The role of citric acid in intermediate metabolism in animal tissues. Enzymologia, 4, 148–156.


Kriegeskorte, N., Walther, A., & Deca, D. (2012). An emerging consensus for open evaluation: 18 visions for the future of scientific publishing. Beyond open access: Visions for open evaluation of scientific papers by post-publication peer review , 5.

Langfeldt, L. (2006). The policy challenges of peer review: Managing bias, conflict of interests and interdisciplinary assessments. Research Evaluation, 15 (1), 31–41.

Lawrence, P. A. (2003). The politics of publication. Nature, 422 (6929), 259–261.

Lee, C. J., Sugimoto, C. R., Zhang, G., & Cronin, B. (2013). Bias in peer review. Journal of the American Society for Information Science and Technology, 64 (1), 2–17.

Lippert, S., Callaham, M. L., & Lo, B. (2011). Perceptions of conflict of interest disclosures among peer reviewers. PLoS ONE, 6 (11), e26900.

Link, A. M. (1998). US and non-US submissions: an analysis of reviewer bias. Jama , 280 (3), 246–247.

Lo, B., & Field, M. J. (Eds.). (2009). Conflict of interest in medical research, education, and practice . Washington, D.C.: National Academies Press.

Loonen, M. P. J., Hage, J. J., & Kon, M. (2005). Who benefits from peer review? An analysis of the outcome of 100 requests for review by Plastic and Reconstructive Surgery. Plastic and Reconstructive Surgery, 116 (5), 1461–1472.

Luukkonen, T. (2012). Conservatism and risk-taking in peer review: Emerging ERC practices. Research Evaluation , rvs001.

McClintock, B. (1950). The origin and behavior of mutable loci in maize. Proceedings of the National Academy of Sciences, 36 (6), 344–355.

McCullough, J. (1989). First comprehensive survey of NSF applicants focuses on their concerns about proposal review. Science, Technology and Human Values, 14 (1), 78–88.

McIntyre, W. F., & Evans, G. (2014). The Vioxx ® legacy: Enduring lessons from the not so distant past. Cardiology Journal, 21 (2), 203–205.

Moylan, E. C., Harold, S., O’Neill, C., & Kowalczuk, M. K. (2014). Open, single-blind, double-blind: Which peer review process do you prefer? BMC Pharmacology and Toxicology, 15 (1), 1.

Mulligan, A., Hall, L., & Raphael, E. (2013). Peer review in a changing world: An international study measuring the attitudes of researchers. Journal of the American Society for Information Science and Technology, 64 (1), 132–161.

Nath, S. B., Marcus, S. C., & Druss, B. G. (2006). Retractions in the research literature: misconduct or mistakes? Medical Journal of Australia, 185 (3), 152.


Nature Editorial (2008). Working double-blind. Nature, 451, 605–606.

Nature Neuroscience Editorial. (2006). Women in neuroscience: A numbers game. Nature Neuroscience, 9, 853.

Okike, K., Hug, K. T., Kocher, M. S., & Leopold, S. S. (2016). Single-blind vs double-blind peer review in the setting of author prestige. JAMA, 316 (12), 1315–1316.

Olson, C. M., Rennie, D., Cook, D., Dickersin, K., Flanagin, A., Hogan, J. W., … & Pace, B. (2002). Publication bias in editorial decision making. JAMA, 287 (21), 2825–2828.

Palmer, A. R. (2000). Quasireplication and the contract of error: lessons from sex ratios, heritabilities and fluctuating asymmetry. Annual Review of Ecology and Systematics , 441–480.

Peters, D. P., & Ceci, S. J. (1982). Peer-review practices of psychological journals: The fate of published articles, submitted again. Behavioral and Brain Sciences, 5 (02), 187–195.

PLOS MED Editors. (2008). Making sense of non-financial competing interests. PLOS Med, 5 (9), e199.

Pulverer, B. (2010). Transparency showcases strength of peer review. Nature, 468 (7320), 29–31.

Pöschl, U., & Koop, T. (2008). Interactive open access publishing and collaborative peer review for improved scientific communication and quality assurance. Information Services & Use, 28 (2), 105–107.

Relman, A. S. (1985). Dealing with conflicts of interest. New England Journal of Medicine, 313 (12), 749–751.

Rennie, J., & Chief, I. N. (2002). Misleading math about the Earth. Scientific American, 286 (1), 61.

Resch, K. I., Ernst, E., & Garrow, J. (2000). A randomized controlled study of reviewer bias against an unconventional therapy. Journal of the Royal Society of Medicine, 93 (4), 164–167.


Resnik, D. B., & Elmore, S. A. (2016). Ensuring the quality, fairness, and integrity of journal peer review: A possible role of editors. Science and Engineering Ethics, 22 (1), 169–188.

Ross, J. S., Gross, C. P., Desai, M. M., Hong, Y., Grant, A. O., Daniels, S. R., et al. (2006). Effect of blinded peer review on abstract acceptance. JAMA, 295 (14), 1675–1680.

Sandström, U. (2009, BRAZIL. JUL 14-17, 2009). Cognitive bias in peer review: A new approach. Paper presented at the 12th International Conference of the International-Society-for-Scientometrics-and-Informetrics.

Shatz, D. (2004). Peer review: A critical inquiry . Lanham, MD: Rowman & Littlefield.

Schneider, L. (2016, September 14). Beall-listed Frontiers empire strikes back. Retrieved from https://forbetterscience.wordpress.com/2016/09/14/beall-listed-frontiers-empire-strikes-back/

Schroter, S., Black, N., Evans, S., Carpenter, J., Godlee, F., & Smith, R. (2004). Effects of training on quality of peer review: Randomised controlled trial. BMJ, 328 (7441), 673.

Service, R. F. (2002). Scientific misconduct. Bell Labs fires star physicist found guilty of forging data. Science (New York, NY), 298 (5591), 30.

Shimp, C. P. (2004). Scientific peer review: A case study from local and global analyses. Journal of the Experimental Analysis of Behavior, 82 (1), 103–116.


Smith, R. (1999). Opening up BMJ peer review: A beginning that should lead to complete transparency. BMJ, 318, 4–5.

Smith, R. (2006). Peer review: A flawed process at the heart of science and journals. Journal of the Royal Society of Medicine, 99 (4), 178–182. doi: 10.1258/jrsm.99.4.178

Souder, L. (2011). The ethics of scholarly peer review: A review of the literature. Learned Publishing, 24 (1), 55–72.

Spielmans, G. I., Biehn, T. L., & Sawrey, D. L. (2009). A case study of salami slicing: pooled analyses of duloxetine for depression. Psychotherapy and Psychosomatics, 79 (2), 97–106.

Spier, R. (2002). The history of the peer-review process. Trends in Biotechnology, 20 (8), 357–358.

Squazzoni, F. (2010). Peering into peer review. Sociologica, 4 (3).

Squazzoni, F., & Gandelli, C. (2012). Saint Matthew strikes again: An agent-based model of peer review and the scientific community structure. Journal of Informetrics, 6 (2), 265–275.

Steen, R. G. (2010). Retractions in the scientific literature: is the incidence of research fraud increasing? Journal of Medical Ethics , jme-2010.

Stroebe, W., Postmes, T., & Spears, R. (2012). Scientific misconduct and the myth of self-correction in science. Perspectives on Psychological Science, 7 (6), 670–688.

Tite, L., & Schroter, S. (2007). Why do peer reviewers decline to review? A survey. Journal of Epidemiology and Community Health, 61 (1), 9–12.

Travis, G. D. L., & Collins, H. M. (1991). New light on old boys: cognitive and institutional particularism in the peer review system. Science, Technology and Human Values, 16 (3), 322–341.

Tregenza, T. (2002). Gender bias in the refereeing process? Trends in Ecology & Evolution, 17 (8), 349–350.

Valkonen, L., & Brooks, J. (2011). Gender balance in Cortex acceptance rates. Cortex, 47 (7), 763–770.

van Rooyen, S., Delamothe, T., & Evans, S. J. W. (2010). Effect on peer review of telling reviewers that their signed reviews might be posted on the web: Randomised controlled trial. BMJ, 341, c5729.

van Rooyen, S., Godlee, F., Evans, S., Black, N., & Smith, R. (1999). Effect of open peer review on quality of reviews and on reviewers’ recommendations: A randomised trial. British Medical Journal, 318 (7175), 23–27.

Walker, R., & Rocha da Silva, P. (2014). Emerging trends in peer review—A survey. Frontiers in neuroscience, 9, 169.

Walsh, E., Rooney, M., Appleby, L., & Wilkinson, G. (2000). Open peer review: A randomised controlled trial. The British Journal of Psychiatry, 176 (1), 47–51.

Walters, W. P., & Bajorath, J. (2015). On the evolving open peer review culture for chemical information science. F1000Research, 4 .

Ware, M. (2008). Peer review in scholarly journals: Perspective of the scholarly community-Results from an international study. Information Services and Use, 28 (2), 109–112.

Ware, M. (2011). Peer review: Recent experience and future directions. New Review of Information Networking , 16 (1), 23–53.

Webb, T. J., O’Hara, B., & Freckleton, R. P. (2008). Does double-blind review benefit female authors? Heredity, 77, 282–291.

Wellington, J., & Nixon, J. (2005). Shaping the field: The role of academic journal editors in the construction of education as a field of study. British Journal of Sociology of Education, 26 (5), 643–655.

Whittaker, R. J. (2008). Journal review and gender equality: A critical comment on Budden et al. Trends in Ecology & Evolution, 23 (9), 478–479.

Wiedermann, C. J. (2016). Ethical publishing in intensive care medicine: A narrative review. World Journal of Critical Care Medicine, 5 (3), 171.


Author information

Authors and affiliations.

Murray State University, Murray, Kentucky, USA

Pali U. K. De Silva & Candace K. Vance


Corresponding author

Correspondence to Pali U. K. De Silva .


Copyright information

© 2017 Springer International Publishing AG

About this chapter

De Silva, P.U.K., Vance, C.K. (2017). Preserving the Quality of Scientific Research: Peer Review of Research Articles. In: Scientific Scholarly Communication. Fascinating Life Sciences. Springer, Cham. https://doi.org/10.1007/978-3-319-50627-2_6


DOI: https://doi.org/10.1007/978-3-319-50627-2_6

Published: 20 January 2017

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-50626-5

Online ISBN: 978-3-319-50627-2

eBook Packages: Biomedical and Life Sciences (R0)


Peer Review in Scientific Publications: Benefits, Critiques, & A Survival Guide

Affiliations.

- 1 Clinical Biochemistry, Department of Pediatric Laboratory Medicine, The Hospital for Sick Children, University of Toronto, Toronto, Ontario, Canada.

- 2 Clinical Biochemistry, Department of Pediatric Laboratory Medicine, The Hospital for Sick Children, University of Toronto, Toronto, Ontario, Canada; Department of Laboratory Medicine and Pathobiology, University of Toronto, Toronto, Canada; Chair, Communications and Publications Division (CPD), International Federation of Clinical Chemistry and Laboratory Medicine (IFCC), Milan, Italy.

- PMID: 27683470

- PMCID: PMC4975196

Peer review has been defined as a process of subjecting an author's scholarly work, research or ideas to the scrutiny of others who are experts in the same field. It functions to encourage authors to meet the accepted high standards of their discipline and to control the dissemination of research data to ensure that unwarranted claims, unacceptable interpretations or personal views are not published without prior expert review. Despite its widespread use by most journals, the peer review process has also been widely criticised for the slowness with which new findings are published and for perceived bias on the part of editors and/or reviewers. Within the scientific community, peer review has become an essential component of the academic writing process. It helps ensure that papers published in scientific journals answer meaningful research questions and draw accurate conclusions based on professionally executed experimentation. Submission of low-quality manuscripts has become increasingly prevalent, and peer review acts as a filter to prevent this work from reaching the scientific community. The major advantage of a peer review process is that peer-reviewed articles provide a trusted form of scientific communication. Since scientific knowledge is cumulative and builds on itself, this trust is particularly important. Despite the positive impacts of peer review, critics argue that the peer review process stifles innovation in experimentation and acts as a poor screen against plagiarism. Despite its shortcomings, no foolproof system has yet been developed to take the place of peer review; however, researchers have been looking into electronic means of improving the peer review process. Unfortunately, the recent explosion in online-only/electronic journals has led to mass publication of a large number of scientific articles with little or no peer review. This poses a significant risk to advances in scientific knowledge and its future potential.
The current article summarizes the peer review process, highlights the pros and cons associated with different types of peer review, and describes new methods for improving peer review.

Keywords: journal; manuscript; open access; peer review; publication.


- Open access

- Published: 26 March 2024

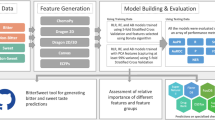

Predicting and improving complex beer flavor through machine learning

- Michiel Schreurs, ORCID: orcid.org/0000-0002-9449-5619 (1, 2, 3, na1)

- Supinya Piampongsant (1, 2, 3, na1)

- Miguel Roncoroni, ORCID: orcid.org/0000-0001-7461-1427 (1, 2, 3, na1)

- Lloyd Cool, ORCID: orcid.org/0000-0001-9936-3124 (1, 2, 3, 4)

- Beatriz Herrera-Malaver, ORCID: orcid.org/0000-0002-5096-9974 (1, 2, 3)

- Christophe Vanderaa, ORCID: orcid.org/0000-0001-7443-5427 (4)

- Florian A. Theßeling (1, 2, 3)

- Łukasz Kreft, ORCID: orcid.org/0000-0001-7620-4657 (5)

- Alexander Botzki, ORCID: orcid.org/0000-0001-6691-4233 (5)

- Philippe Malcorps (6)

- Luk Daenen (6)

- Tom Wenseleers, ORCID: orcid.org/0000-0002-1434-861X (4)

- Kevin J. Verstrepen, ORCID: orcid.org/0000-0002-3077-6219 (1, 2, 3)

Nature Communications volume 15, Article number: 2368 (2024)


- Chemical engineering

- Gas chromatography

- Machine learning

- Metabolomics

- Taste receptors

The perception and appreciation of food flavor depends on many interacting chemical compounds and external factors, and therefore proves challenging to understand and predict. Here, we combine extensive chemical and sensory analyses of 250 different beers to train machine learning models that allow predicting flavor and consumer appreciation. For each beer, we measure over 200 chemical properties, perform quantitative descriptive sensory analysis with a trained tasting panel and map data from over 180,000 consumer reviews to train 10 different machine learning models. The best-performing algorithm, Gradient Boosting, yields models that significantly outperform predictions based on conventional statistics and accurately predict complex food features and consumer appreciation from chemical profiles. Model dissection allows identifying specific and unexpected compounds as drivers of beer flavor and appreciation. Adding these compounds results in variants of commercial alcoholic and non-alcoholic beers with improved consumer appreciation. Together, our study reveals how big data and machine learning uncover complex links between food chemistry, flavor and consumer perception, and lays the foundation to develop novel, tailored foods with superior flavors.


Introduction

Predicting and understanding food perception and appreciation is one of the major challenges in food science. Accurate modeling of food flavor and appreciation could yield important opportunities for both producers and consumers, including quality control, product fingerprinting, counterfeit detection, spoilage detection, and the development of new products and product combinations (food pairing) [1–6]. Accurate models for flavor and consumer appreciation would contribute greatly to our scientific understanding of how humans perceive and appreciate flavor. Moreover, accurate predictive models would also facilitate and standardize existing food assessment methods and could supplement or replace assessments by trained and consumer tasting panels, which are variable, expensive and time-consuming [7–9]. Lastly, apart from providing objective, quantitative, accurate and contextual information that can help producers, models can also guide consumers in understanding their personal preferences [10].

Despite the myriad of applications, predicting food flavor and appreciation from its chemical properties remains a largely elusive goal in sensory science, especially for complex food and beverages [11, 12]. A key obstacle is the immense number of flavor-active chemicals underlying food flavor. Flavor compounds can vary widely in chemical structure and concentration, making them technically challenging and labor-intensive to quantify, even in the face of innovations in metabolomics, such as non-targeted metabolic fingerprinting [13, 14]. Sensory analysis is perhaps even more complicated. Flavor perception is highly complex, resulting from hundreds of different molecules interacting at the physicochemical and sensorial level. Sensory perception is often non-linear, characterized by complex and concentration-dependent synergistic and antagonistic effects [15–21] that are further convoluted by the genetics, environment, culture and psychology of consumers [22–24]. Perceived flavor is therefore difficult to measure, with problems of sensitivity, accuracy, and reproducibility that can only be resolved by gathering sufficiently large datasets [25]. Trained tasting panels are considered the prime source of quality sensory data, but they require meticulous training, have low throughput and are costly. Public databases containing consumer reviews of food products could provide a valuable alternative, especially for studying appreciation scores, which do not require formal training [25]. Public databases offer the advantage of amassing large amounts of data, increasing the statistical power to identify potential drivers of appreciation. However, public datasets suffer from biases, including a bias in the volunteers that contribute to the database, as well as confounding factors such as price, cult status and psychological conformity towards previous ratings of the product.

Classical multivariate statistics and machine learning methods have been used to predict flavor of specific compounds by, for example, linking structural properties of a compound to its potential biological activities or linking concentrations of specific compounds to sensory profiles [1, 26]. Importantly, most previous studies focused on predicting organoleptic properties of single compounds (often based on their chemical structure) [27–33], thus ignoring the fact that these compounds are present in a complex matrix in food or beverages and excluding complex interactions between compounds. Moreover, the classical statistics commonly used in sensory science [34–39] require a large sample size and sufficient variance amongst predictors to create accurate models. They are not fit for studying an extensive set of hundreds of interacting flavor compounds, since they are sensitive to outliers, have a high tendency to overfit and are less suited for non-linear and discontinuous relationships [40].

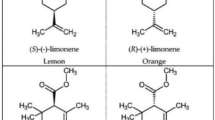

In this study, we combine extensive chemical analyses and sensory data of a set of different commercial beers with machine learning approaches to develop models that predict taste, smell, mouthfeel and appreciation from compound concentrations. Beer is particularly suited to model the relationship between chemistry, flavor and appreciation. First, beer is a complex product, consisting of thousands of flavor compounds that partake in complex sensory interactions [41–43]. This chemical diversity arises from the raw materials (malt, yeast, hops, water and spices) and biochemical conversions during the brewing process (kilning, mashing, boiling, fermentation, maturation and aging) [44, 45]. Second, the advent of the internet saw beer consumers embrace online review platforms, such as RateBeer (ZX Ventures, Anheuser-Busch InBev SA/NV) and BeerAdvocate (Next Glass, Inc.). In this way, the beer community provides massive datasets of beer flavor and appreciation scores, creating extraordinarily large sensory databases to complement the analyses of our professional sensory panel. Specifically, we characterize over 200 chemical properties of 250 commercial beers, spread across 22 beer styles, and link these to the descriptive sensory profiling data of a 16-person in-house trained tasting panel and data acquired from over 180,000 public consumer reviews. These unique and extensive datasets enable us to train a suite of machine learning models to predict flavor and appreciation from a beer’s chemical profile. Dissection of the best-performing models allows us to pinpoint specific compounds as potential drivers of beer flavor and appreciation. Follow-up experiments confirm the importance of these compounds and ultimately allow us to significantly improve the flavor and appreciation of selected commercial beers. Together, our study represents a significant step towards understanding complex flavors and reinforces the value of machine learning to develop and refine complex foods. In this way, it represents a stepping stone for further computer-aided food engineering applications [46].

To generate a comprehensive dataset on beer flavor, we selected 250 commercial Belgian beers across 22 different beer styles (Supplementary Fig. S1 ). Beers with ≤ 4.2% alcohol by volume (ABV) were classified as non-alcoholic and low-alcoholic. Blonds and Tripels constitute a significant portion of the dataset (12.4% and 11.2%, respectively) reflecting their presence on the Belgian beer market and the heterogeneity of beers within these styles. By contrast, lager beers are less diverse and dominated by a handful of brands. Rare styles such as Brut or Faro make up only a small fraction of the dataset (2% and 1%, respectively) because fewer of these beers are produced and because they are dominated by distinct characteristics in terms of flavor and chemical composition.

Extensive analysis identifies relationships between chemical compounds in beer

For each beer, we measured 226 different chemical properties, including common brewing parameters such as alcohol content, iso-alpha acids, pH, sugar concentration 47 , and over 200 flavor compounds (Methods, Supplementary Table S1 ). A large portion (37.2%) are terpenoids arising from hopping, responsible for herbal and fruity flavors 16 , 48 . A second major category consists of yeast metabolites, such as esters and alcohols, that result in fruity and solvent notes 48 , 49 , 50 . Other measured compounds are primarily derived from malt, or from other microbes such as non-Saccharomyces yeasts and bacteria (‘wild flora’). Compounds that arise from spices or staling are labeled under ‘Others’. Five attributes (caloric value, total acids, total esters, hop aroma and sulfur compounds) are calculated from multiple individually measured compounds.

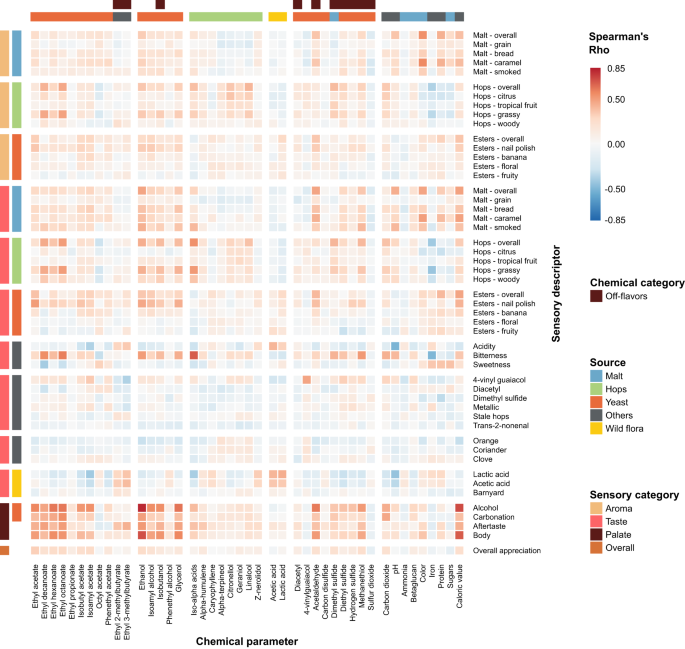

As a first step in identifying relationships between chemical properties, we determined correlations between the concentrations of the compounds (Fig. 1 , upper panel; Supplementary Data 1 and 2 ; Supplementary Fig. S2 ). For the sake of clarity, only a subset of the measured compounds is shown in Fig. 1 . Compounds of the same origin typically show a positive correlation, while absence of correlation hints at parameters varying independently. For example, the hop aroma compounds citronellol and alpha-terpineol show moderate correlations with each other (Spearman’s rho=0.39 and 0.57), but not with the bittering hop component iso-alpha acids (Spearman’s rho=0.16 and −0.07). This illustrates how brewers can independently modify hop aroma and bitterness by selecting hop varieties and dosage time. If hops are added early in the boiling phase, chemical conversions increase bitterness while aromas evaporate; conversely, late addition of hops preserves aroma but limits bitterness 51 . Similarly, hop-derived iso-alpha acids show a strong anti-correlation with lactic acid and acetic acid, likely reflecting growth inhibition of lactic acid and acetic acid bacteria, or the consequent use of fewer hops in sour beer styles, such as West Flanders ales and Fruit beers, that rely on these bacteria for their distinct flavors 52 . Finally, yeast-derived esters (ethyl acetate, ethyl decanoate, ethyl hexanoate, ethyl octanoate) and alcohols (ethanol, isoamyl alcohol, isobutanol, and glycerol) correlate with Spearman coefficients above 0.5, suggesting that these secondary metabolites are correlated with the yeast genetic background and/or fermentation parameters and may be difficult to influence individually, although the choice of yeast strain may offer some control 53 .
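To make the correlation measure concrete, the following is a minimal sketch of Spearman's rank correlation, computed as the Pearson correlation of tie-averaged ranks. It is an illustration only, not the authors' analysis pipeline: the function names and the concentration values are hypothetical, and a statistics library would normally be used instead of hand-written helpers.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical concentrations of two compounds in five beers (made-up values).
compound_a = [4.1, 7.3, 2.0, 9.8, 5.5]
compound_b = [1.2, 2.9, 0.8, 2.5, 1.9]
print(round(spearman_rho(compound_a, compound_b), 2))
```

Because the measure depends only on ranks, it captures monotonic but non-linear relationships and is less sensitive to extreme concentrations than a Pearson correlation on the raw values.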

Spearman rank correlations are shown. Descriptors are grouped according to their origin (malt (blue), hops (green), yeast (red), wild flora (yellow), Others (black)), and sensory aspect (aroma, taste, palate, and overall appreciation). Please note that for the chemical compounds, for the sake of clarity, only a subset of the total number of measured compounds is shown, with an emphasis on the key compounds for each source. For more details, see the main text and Methods section. Chemical data can be found in Supplementary Data 1 , correlations between all chemical compounds are depicted in Supplementary Fig. S2 and correlation values can be found in Supplementary Data 2 . See Supplementary Data 4 for sensory panel assessments and Supplementary Data 5 for correlation values between all sensory descriptors.

Interestingly, different beer styles show distinct patterns for some flavor compounds (Supplementary Fig. S3 ). These observations agree with expectations for key beer styles, and serve as a control for our measurements. For instance, Stouts generally show high values for color (darker), while hoppy beers contain elevated levels of iso-alpha acids, compounds associated with bitter hop taste. Acetic and lactic acid are not prevalent in most beers, with notable exceptions such as Kriek, Lambic, Faro, West Flanders ales and Flanders Old Brown, which use acid-producing bacteria (Lactobacillus and Pediococcus) or unconventional yeast (Brettanomyces) 54 , 55 . Glycerol, ethanol and esters show similar distributions across all beer styles, reflecting their common origin as products of yeast metabolism during fermentation 45 , 53 . Finally, low/no-alcohol beers contain low concentrations of glycerol and esters. This is in line with the production process for most of the low/no-alcohol beers in our dataset, which are produced through limiting fermentation or by stripping away alcohol via evaporation or dialysis, with both methods having the unintended side-effect of reducing the amount of flavor compounds in the final beer 56 , 57 .

Besides expected associations, our data also reveal less trivial associations between beer styles and specific parameters. For example, geraniol and citronellol, two monoterpenoids responsible for citrus, floral and rose flavors and characteristic of Citra hops, are found in relatively high amounts in Christmas, Saison, and Brett/co-fermented beers, where they may originate from terpenoid-rich spices such as coriander seeds instead of hops 58 .

Tasting panel assessments reveal sensorial relationships in beer

To assess the sensory profile of each beer, a trained tasting panel evaluated each of the 250 beers for 50 sensory attributes, including different hop, malt and yeast flavors, off-flavors and spices. Panelists used a tasting sheet (Supplementary Data 3 ) to score the different attributes. Panel consistency was evaluated by repeating 12 samples across different sessions and performing ANOVA. In 95% of cases no significant difference was found across sessions (p > 0.05), indicating good panel consistency (Supplementary Table S2 ).
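As a rough illustration of this kind of consistency check, the sketch below computes the F statistic of a one-way ANOVA that compares the scores one repeated beer received in different sessions. The exact ANOVA design used for the panel is not specified here, so the one-way layout, the function name and the scores are all assumptions made for illustration.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the groups."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    # Variation of the session means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own session mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical bitterness scores for one repeated beer in three sessions.
sessions = [
    [3.0, 3.5, 2.5, 3.0],
    [3.0, 4.0, 3.0, 3.5],
    [2.5, 3.0, 3.5, 3.0],
]
f_stat, df_between, df_within = one_way_anova_f(sessions)
print(f_stat, df_between, df_within)
```

The p-value follows from comparing the F statistic against the F distribution with the two degrees of freedom; in practice a library routine such as SciPy's `scipy.stats.f_oneway` would return both the statistic and the p-value directly. A small F (and p > 0.05) means the between-session differences are no larger than the scatter among panelists within a session.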

Aroma and taste perception reported by the trained panel are often linked (Fig. 1 , bottom left panel and Supplementary Data 4 and 5 ), with high correlations between hop aroma and taste (Spearman’s rho=0.83). Bitter taste was found to correlate with hop aroma and taste in general (Spearman’s rho=0.80 and 0.69), and particularly with “grassy” noble hops (Spearman’s rho=0.75). Barnyard flavor, most often associated with sour beers, is identified together with stale hops (Spearman’s rho=0.97) that are used in these beers. Lactic and acetic acid, which often co-occur, are correlated (Spearman’s rho=0.66). Interestingly, sweetness and bitterness are anti-correlated (Spearman’s rho=−0.48), confirming the hypothesis that they mask each other 59 , 60 . Beer body is highly correlated with alcohol (Spearman’s rho=0.79), and overall appreciation is found to correlate with multiple aspects that describe beer mouthfeel (alcohol and carbonation; Spearman’s rho=0.32 and 0.39), as well as with hop and ester aroma intensity (Spearman’s rho=0.39 and 0.35).

Similar to the chemical analyses, sensorial analyses confirmed typical features of specific beer styles (Supplementary Fig. S4 ). For example, sour beers (Faro, Flanders Old Brown, Fruit beer, Kriek, Lambic, West Flanders ale) were rated acidic, with flavors of both acetic and lactic acid. Hoppy beers were found to be bitter and showed hop-associated aromas like citrus and tropical fruit. Malt taste is most strongly detected among Scotch, stout/porter and strong ales, while low/no-alcohol beers, which often have a reputation for being ‘worty’ (reminiscent of unfermented, sweet malt extract), appear in the middle. Unsurprisingly, hop aromas are most strongly detected among hoppy beers. Like its chemical counterpart (Supplementary Fig. S3 ), acidity shows a right-skewed distribution, with the most acidic beers being Krieks, Lambics, and West Flanders ales.

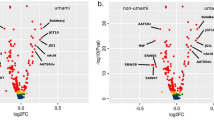

Tasting panel assessments of specific flavors correlate with chemical composition

We find that the concentrations of several chemical compounds strongly correlate with specific aromas or tastes, as evaluated by the tasting panel (Fig. 2 , Supplementary Fig. S5 , Supplementary Data 6 ). In some cases, these correlations confirm expectations and serve as a useful control for data quality. For example, iso-alpha acids, the bittering compounds in hops, strongly correlate with bitterness (Spearman’s rho=0.68), while ethanol and glycerol correlate with tasters’ perceptions of alcohol and body, the mouthfeel sensation of fullness (Spearman’s rho=0.82/0.62 and 0.72/0.57, respectively). In addition, darker color from roasted malts is a good indication of malt perception (Spearman’s rho=0.54).

Heatmap colors indicate Spearman’s Rho. Axes are organized according to sensory categories (aroma, taste, mouthfeel, overall), chemical categories and chemical sources in beer (malt (blue), hops (green), yeast (red), wild flora (yellow), Others (black)). See Supplementary Data 6 for all correlation values.

Interestingly, for some relationships between chemical compounds and perceived flavor, correlations are weaker than expected. For example, the rose-smelling phenethyl acetate only weakly correlates with floral aroma. This hints at more complex relationships and interactions between compounds and suggests a need for a more complex model than simple correlations. Lastly, we uncovered unexpected correlations. For instance, the esters ethyl decanoate and ethyl octanoate appear to correlate slightly with hop perception and bitterness, possibly due to their fruity flavor. Iron is anti-correlated with hop aromas and bitterness, most likely because it is also anti-correlated with iso-alpha acids. This could be a sign of metal chelation of hop acids 61 , given that our analyses measure unbound hop acids and total iron content, or could result from the higher iron content in dark and Fruit beers, which typically have less hoppy and bitter flavors 62 .

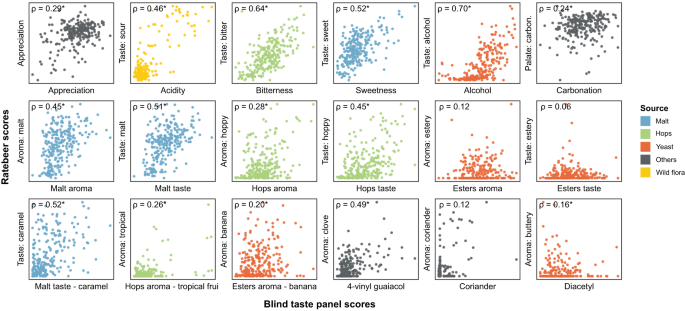

Public consumer reviews complement expert panel data

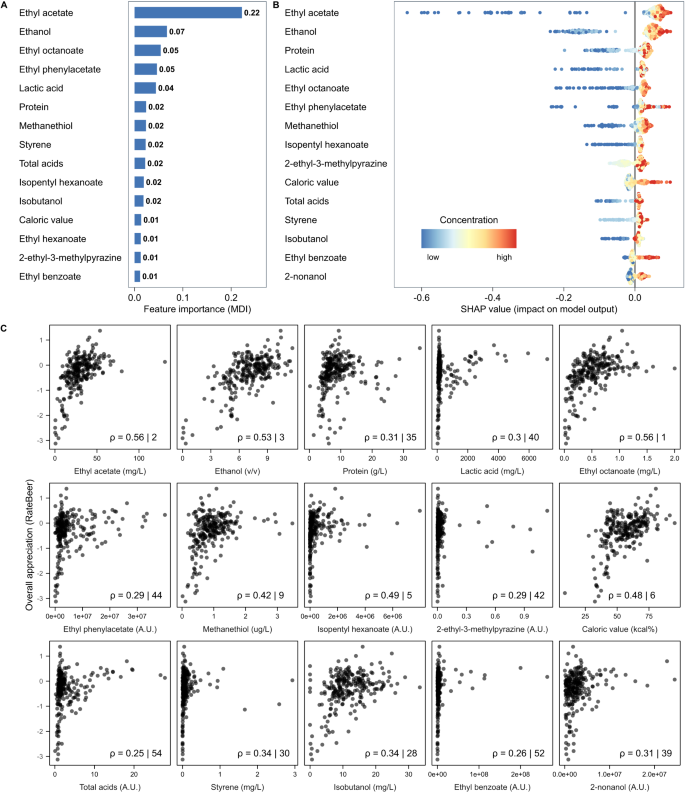

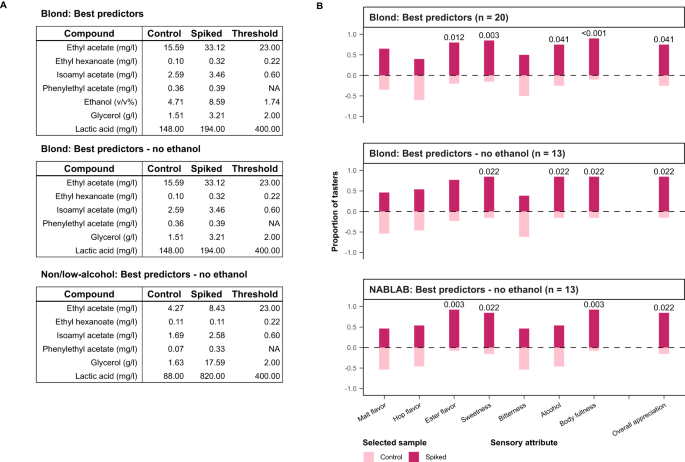

To complement and expand the sensory data of our trained tasting panel, we collected 180,000 reviews of our 250 beers from the online consumer review platform RateBeer. This provided numerical scores for beer appearance, aroma, taste, palate, overall quality as well as the average overall score.