
Neag School of Education

Educational Research Basics by Del Siegle

Experimental research.

The major feature that distinguishes experimental research from other types of research is that the researcher manipulates the independent variable. There are a number of experimental group designs in experimental research. Some of these qualify as experimental research, others do not.

- In true experimental research, the researcher not only manipulates the independent variable, he or she also randomly assigns individuals to the various treatment categories (i.e., control and treatment).

- In quasi-experimental research, the researcher does not randomly assign subjects to treatment and control groups. In other words, the treatment is not distributed among participants randomly. In some cases, a researcher may randomly assign one whole group to treatment and one whole group to control. In this case, quasi-experimental research involves using intact groups in an experiment, rather than assigning individuals at random to research conditions. (Some researchers define this latter situation differently. For our course, we will allow this definition.)

- In causal comparative (ex post facto) research, the groups are already formed. It does not meet the standards of an experiment because the independent variable is not manipulated.

The statistics by themselves have no meaning. They only take on meaning within the design of your study. If we just examine stats, bread can be deadly. The term validity is used three ways in research…

- In the sampling unit, we learn about external validity (generalizability).

- In the survey unit, we learn about instrument validity.

- In this unit, we learn about internal validity and external validity. Internal validity means that the differences found between groups on the dependent variable in an experiment were directly related to what the researcher did to the independent variable, and not due to some other unintended variable (confounding variable). Simply stated, the question addressed by internal validity is “Was the study done well?” Once the researcher is satisfied that the study was done well and the independent variable caused the change in the dependent variable (internal validity), then the researcher examines external validity (under what conditions [ecological] and with whom [population] can these results be replicated [Will I get the same results with a different group of people or under different circumstances?]). If a study is not internally valid, then considering external validity is a moot point (if the independent variable did not cause the change in the dependent variable, then there is no point in applying the results [generalizing the results] to other situations). Interestingly, as one tightens a study to control for threats to internal validity, one decreases the generalizability of the study (to whom and under what conditions one can generalize the results).

There are several common threats to internal validity in experimental research. They are described in our text. I have reviewed each below (this material is also included in the PowerPoint Presentation on Experimental Research for this unit):

- Subject Characteristics (Selection Bias/Differential Selection) — The groups may have been different from the start. If you were testing instructional strategies to improve reading and one group enjoyed reading more than the other group, that group may improve more in reading because its members enjoy it, rather than because of the instructional strategy you used.

- Loss of Subjects (Mortality) — All of the high- or low-scoring subjects may have dropped out or been missing from one of the groups. If we collected posttest data on a day when the honor society was on a field trip at the treatment school, the mean for the treatment group would probably be much lower than it really should have been.

- Location — Perhaps one group was at a disadvantage because of its location. The city may have been demolishing a building next to one of the schools in our study, creating constant distractions that interfere with our treatment.

- Instrumentation (Instrument Decay) — The testing instruments may not be scored similarly. Perhaps the person grading the posttest is fatigued and pays less attention to the last set of papers reviewed. It may be that those papers are from one of our groups and will receive different scores than the earlier group’s papers.

- Data Collector Characteristics — The subjects of one group may react differently to the data collector than the other group. A male interviewing males and females about their attitudes toward a type of math instruction may not receive the same responses from females as a female interviewing females would.

- Data Collector Bias — The person collecting data may favor one group, or some characteristic some subjects possess, over another. A principal who favors strict classroom management may rate students’ attention under different teaching conditions with a bias toward one of the teaching conditions.

- Testing — The act of taking a pretest or posttest may influence the results of the experiment. Suppose we were conducting a unit to increase student sensitivity to prejudice. As a pretest we have the control and treatment groups watch Schindler’s List and write a reaction essay. The pretest may have actually increased both groups’ sensitivity, and we find that our treatment group didn’t score any higher on a posttest given later than the control group did. If we hadn’t given the pretest, we might have seen differences in the groups at the end of the study.

- History — Something may happen at one site during our study that influences the results. Perhaps a classmate dies in a car accident at the control site for a study teaching children bike safety. The control group may actually demonstrate more concern about bike safety than the treatment group.

- Maturation – There may be natural changes in the subjects that can account for the changes found in a study. A critical thinking unit may appear more effective if it is taught during a time when children are developing abstract reasoning.

- Hawthorne Effect — The subjects may respond differently just because they are being studied. The name comes from a classic study in which researchers were studying the effect of lighting on worker productivity. As the intensity of the factory lights increased, so did the work productivity. One researcher suggested that they reverse the treatment and lower the lights. The productivity of the workers continued to increase. It appears that being observed by the researchers was increasing productivity, not the intensity of the lights.

- John Henry Effect — One group may view itself as being in competition with the other group and may work harder than it would under normal circumstances. This generally is applied to the control group “taking on” the treatment group. The term refers to the classic story of John Henry laying railroad track.

- Resentful Demoralization of the Control Group — The control group may become discouraged because it is not receiving the special attention that is given to the treatment group. They may perform lower than usual because of this.

- Regression (Statistical Regression) — A class that scores particularly low can be expected to score slightly higher on a retest just by chance. Likewise, a class that scores particularly high will have a tendency to score slightly lower by chance. The change in these scores may have nothing to do with the treatment.

- Implementation – The treatment may not be implemented as intended. A study where teachers are asked to use student modeling techniques may not show positive results, not because modeling techniques don’t work, but because the teacher didn’t implement them or didn’t implement them as they were designed.

- Compensatory Equalization of Treatment — Someone may feel sorry for the control group because they are not receiving much attention and give them special treatment. For example, a researcher could be studying the effect of laptop computers on students’ attitudes toward math. The teacher feels sorry for the class that doesn’t have computers and sponsors a popcorn party during math class. The control group begins to develop a more positive attitude about mathematics.

- Experimental Treatment Diffusion — Sometimes the control group actually implements the treatment. If two different techniques are being tested in two different third grades in the same building, the teachers may share what they are doing. Unconsciously, the control teacher may use some of the techniques she or he learned from the treatment teacher.
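
The statistical regression threat listed above can be seen in a small simulation. This is only a sketch under stated assumptions, not data from any study: each class is given a stable "true" ability, and each test score is that ability plus independent random noise. The function name `simulate_regression` and all of the means and standard deviations are hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_regression(n_classes=1000, seed=0):
    """Toy model: observed score = stable true ability + random test-day noise.

    No treatment is applied between the two tests, so any change in the
    lowest-scoring classes comes from chance alone.
    """
    rng = random.Random(seed)
    # Hypothetical parameters: true ability ~ N(70, 5), noise ~ N(0, 5).
    true_ability = [rng.gauss(70, 5) for _ in range(n_classes)]
    pretest = [t + rng.gauss(0, 5) for t in true_ability]
    posttest = [t + rng.gauss(0, 5) for t in true_ability]

    # Pick out roughly the 10% of classes with the lowest pretest scores.
    cutoff = sorted(pretest)[n_classes // 10]
    low = [i for i in range(n_classes) if pretest[i] <= cutoff]

    pre_mean = statistics.mean(pretest[i] for i in low)
    post_mean = statistics.mean(posttest[i] for i in low)
    return pre_mean, post_mean


pre_mean, post_mean = simulate_regression()
print(f"Lowest-scoring classes, pretest mean:  {pre_mean:.1f}")
print(f"Same classes, posttest mean:           {post_mean:.1f}")
```

In this toy model the classes selected for their low pretest scores tend to score closer to the overall average on the posttest even though nothing was done to them, which is why a gain in an extreme-scoring group cannot by itself be credited to a treatment.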

When planning a study, it is important to consider the threats to internal validity as we finalize the study design. After we complete our study, we should reconsider each of the threats to internal validity as we review our data and draw conclusions.

Del Siegle, Ph.D. Neag School of Education – University of Connecticut www.delsiegle.com

Using Science to Inform Educational Practices

Experimental Research

As you’ve learned, the only way to establish that there is a cause-and-effect relationship between two variables is to conduct a scientific experiment. Experiment has a different meaning in the scientific context than in everyday life. In everyday conversation, we often use it to describe trying something for the first time, such as experimenting with a new hairstyle or new food. However, in the scientific context, an experiment has precise requirements for design and implementation.

Video 2.8.1. Experimental Research Design provides explanation and examples for experimental research.

The Experimental Hypothesis

In order to conduct an experiment, a researcher must have a specific hypothesis to be tested. As you’ve learned, hypotheses can be formulated either through direct observation of the real world or after careful review of previous research. For example, if you think that children should not be allowed to watch violent programming on television because doing so would cause them to behave more violently, then you have basically formulated a hypothesis—namely, that watching violent television programs causes children to behave more violently. How might you have arrived at this particular hypothesis? You may have younger relatives who watch cartoons featuring characters using martial arts to save the world from evildoers, with an impressive array of punching, kicking, and defensive postures. You notice that after watching these programs for a while, your young relatives mimic the fighting behavior of the characters portrayed in the cartoon. Seeing behavior like this right after a child watches violent television programming might lead you to hypothesize that viewing violent television programming leads to an increase in the display of violent behaviors. These sorts of personal observations are what often lead us to formulate a specific hypothesis, but we cannot use limited personal observations and anecdotal evidence to test our hypothesis rigorously. Instead, to find out if real-world data supports our hypothesis, we have to conduct an experiment.

Designing an Experiment

The most basic experimental design involves two groups: the experimental group and the control group. The two groups are designed to be the same except for one difference: the experimental manipulation. The experimental group gets the experimental manipulation (that is, the treatment or variable being tested, in this case violent TV images) and the control group does not. Since the experimental manipulation is the only difference between the experimental and control groups, we can be more confident that any differences between the two are due to the experimental manipulation rather than to some other factor.

In our example of how violent television programming might affect violent behavior in children, we have the experimental group view violent television programming for a specified time and then measure their violent behavior. It is important for the control group to be treated similarly to the experimental group, with the exception that the control group does not receive the experimental manipulation. Therefore, we have the control group watch nonviolent television programming for the same amount of time as the experimental group and then measure their violent behavior in the same way.

We also need to define precisely, or operationalize, what is considered violent and nonviolent. An operational definition is a description of how we will measure our variables, and it is important in allowing others to understand exactly how and what a researcher measures in a particular experiment. In operationalizing violent behavior, we might choose to count only physical acts like kicking or punching as instances of this behavior, or we also may choose to include angry verbal exchanges. Whatever we determine, it is important that we operationalize violent behavior in such a way that anyone who hears about our study for the first time knows exactly what we mean by violence. This aids people’s ability to interpret our data as well as their capacity to repeat our experiment should they choose to do so.

Once we have operationalized what is considered violent television programming and what is considered violent behavior from our experiment participants, we need to establish how we will run our experiment. In this case, we might have participants watch a 30-minute television program (either violent or nonviolent, depending on their group membership) before sending them out to a playground for an hour where their behavior is observed and the number and type of violent acts are recorded.

Ideally, the people who observe and record the children’s behavior are unaware of who was assigned to the experimental or control group, in order to control for experimenter bias. Experimenter bias refers to the possibility that a researcher’s expectations might skew the results of the study. Remember, conducting an experiment requires a lot of planning, and the people involved in the research project have a vested interest in supporting their hypotheses. If the observers knew which child was in which group, it might influence how much attention they paid to each child’s behavior as well as how they interpreted that behavior. By keeping the observers blind to which child is in which group, we protect against those biases. In a single-blind study, only one party is blind to group assignments: typically the participants are unaware of which group they are in (experimental or control) while the researcher knows which participants are in each group.

In a double-blind study, both the researchers and the participants are blind to group assignments. Why would a researcher want to run a study where no one knows who is in which group? Because by doing so, we can control for both experimenter and participant expectations. If you are familiar with the phrase placebo effect, you already have some idea as to why this is an important consideration. The placebo effect occurs when people’s expectations or beliefs influence or determine their experience in a given situation. In other words, simply expecting something to happen can actually make it happen.

Why is that? Imagine that you are a participant in a study of a new drug, and you have just taken a pill that you think will improve your mood. Because you expect the pill to have an effect, you might feel better simply because you took the pill and not because of any drug actually contained in the pill. This is the placebo effect.

To make sure that any effects on mood are due to the drug and not due to expectations, the control group receives a placebo (in this case, a sugar pill). Now everyone gets a pill, and once again, neither the researcher nor the experimental participants know who got the drug and who got the sugar pill. Any differences in mood between the experimental and control groups can now be attributed to the drug itself rather than to experimenter bias or participant expectations.

Video 2.8.2. Introduction to Experimental Design introduces fundamental elements for experimental research design.

Independent and Dependent Variables

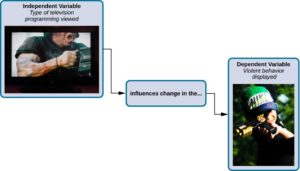

In a research experiment, we strive to study whether changes in one thing cause changes in another. To achieve this, we must pay attention to two important variables, or things that can be changed, in any experimental study: the independent variable and the dependent variable. An independent variable is manipulated or controlled by the experimenter. In a well-designed experimental study, the independent variable is the only important difference between the experimental and control groups. In our example of how violent television programs affect children’s display of violent behavior, the independent variable is the type of program (violent or nonviolent) viewed by participants in the study (Figure 2.8.1). A dependent variable is what the researcher measures to see how much effect the independent variable had. In our example, the dependent variable is the number of violent acts displayed by the experimental participants.

Figure 2.8.1. In an experiment, manipulations of the independent variable are expected to result in changes in the dependent variable.

We expect that the dependent variable will change as a function of the independent variable. In other words, the dependent variable depends on the independent variable. A good way to think about the relationship between the independent and dependent variables is with this question: What effect does the independent variable have on the dependent variable? Returning to our example, what effect does watching a half-hour of violent television programming or nonviolent television programming have on the number of incidents of physical aggression displayed on the playground?

Selecting and Assigning Experimental Participants

Now that our study is designed, we need to obtain a sample of individuals to include in our experiment. Our study involves human participants, so we need to determine who to include. Participants are the subjects of psychological research, and as the name implies, individuals who are involved in psychological research actively participate in the process. Often, psychological research projects rely on college students to serve as participants. In fact, the vast majority of research in psychology subfields has historically involved students as research participants (Sears, 1986; Arnett, 2008). But are college students truly representative of the general population? College students tend to be younger, more educated, more liberal, and less diverse than the general population. Although using students as test subjects is an accepted practice, relying on such a limited pool of research participants can be problematic because it is difficult to generalize findings to the larger population.

Our hypothetical experiment involves children, and we must first generate a sample of child participants. Samples are used because populations are usually too large to reasonably involve every member in our particular experiment (Figure 2.8.2). If possible, we should use a random sample (there are other types of samples, but for the purposes of this chapter, we will focus on random samples). A random sample is a subset of a larger population in which every member of the population has an equal chance of being selected. Random samples are preferred because if the sample is large enough we can be reasonably sure that the participating individuals are representative of the larger population. This means that the percentages of characteristics in the sample (sex, ethnicity, socioeconomic level, and any other characteristics that might affect the results) are close to those percentages in the larger population.

In our example, let’s say we decide our population of interest is fourth graders. But all fourth graders is a very large population, so we need to be more specific; instead, we might say our population of interest is all fourth graders in a particular city. We should include students from various income brackets, family situations, races, ethnicities, religions, and geographic areas of town. With this more manageable population, we can work with the local schools in selecting a random sample of around 200 fourth-graders that we want to participate in our experiment.

In summary, because we cannot test all of the fourth graders in a city, we want to find a group of about 200 that reflects the composition of that city. With a representative group, we can generalize our findings to the larger population without fear of our sample being biased in some way.
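
Drawing a random sample like the one described above can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the roster of 3,000 student IDs is made up, and a real study would draw from actual enrollment lists provided by the schools.

```python
import random

# Hypothetical roster: 3,000 fourth graders identified only by an ID string.
population = [f"student_{i:04d}" for i in range(3000)]

rng = random.Random(42)  # fixed seed so the same draw can be reproduced

# Random.sample draws without replacement, so no student is picked twice
# and every student on the roster has the same chance of being selected.
sample = rng.sample(population, k=200)

print(len(sample), "students selected")
```

Fixing the seed is a design choice for reproducibility; leaving it out would give a different sample on every run.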

Figure 2.8.2. Researchers may work with (a) a large population or (b) a sample group that is a subset of the larger population.

Now that we have a sample, the next step of the experimental process is to split the participants into experimental and control groups through random assignment. With random assignment, all participants have an equal chance of being assigned to either group. There is statistical software that will randomly assign each of the fourth graders in the sample to either the experimental or the control group.

Random assignment is critical for sound experimental design. With sufficiently large samples, random assignment makes it unlikely that there are systematic differences between the groups. So, for instance, it would be improbable that we would get one group composed entirely of males, a given ethnic identity, or a given religious ideology. This is important because if the groups were systematically different before the experiment began, we would not know the origin of any differences we find between the groups: Were the differences preexisting, or were they caused by manipulation of the independent variable? Random assignment allows us to assume that any differences observed between experimental and control groups result from the manipulation of the independent variable.
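
One simple way to carry out random assignment is to shuffle the list of participants and split it in half. The sketch below assumes a sample of 200 made-up student IDs; the function name `randomly_assign` is hypothetical and is not taken from any particular statistical package.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle the participants and split them into two groups of
    (nearly) equal size; with an odd count the control group gets one more."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"experimental": shuffled[:half], "control": shuffled[half:]}


sample = [f"student_{i:03d}" for i in range(200)]  # hypothetical sample
groups = randomly_assign(sample, seed=7)
print(len(groups["experimental"]), len(groups["control"]))
```

Because the order is shuffled before the split, no characteristic of a child (sex, reading level, classroom) can influence which group that child ends up in.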

Exercise 2.2 Randomization in Sampling and Assignment

Use this online tool to generate randomized numbers instantly and to learn more about random sampling and assignments.

Issues to Consider

While experiments allow scientists to make cause-and-effect claims, they are not without problems. True experiments require the experimenter to manipulate an independent variable, and that can complicate many questions that psychologists might want to address. For instance, imagine that you want to know what effect sex (the independent variable) has on spatial memory (the dependent variable). Although you can certainly look for differences between males and females on a task that taps into spatial memory, you cannot directly control a person’s sex. We categorize this type of research approach as quasi-experimental and recognize that we cannot make cause-and-effect claims in these circumstances.

Experimenters are also limited by ethical constraints. For instance, you would not be able to conduct an experiment designed to determine if experiencing abuse as a child leads to lower levels of self-esteem among adults. To conduct such an experiment, you would need to randomly assign some experimental participants to a group that receives abuse, and that experiment would be unethical.

Interpreting Experimental Findings

Once data is collected from both the experimental and the control groups, a statistical analysis is conducted to find out if there are meaningful differences between the two groups. The statistical analysis estimates how likely it is that a difference at least as large as the one found would occur by chance alone (and thus not be meaningful). In psychology, group differences are considered meaningful, or significant, if the probability of such a difference occurring by chance alone is 5 percent or less. Stated another way, if the independent variable truly had no effect and we repeated this experiment 100 times, we would expect chance to produce a difference this large in no more than about 5 of them.
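
The idea of asking how often chance alone would produce a difference this large can be made concrete with a permutation test. The text does not say which statistical analysis is used, so this is a swapped-in technique chosen for illustration, and the counts of violent acts below are made-up numbers, not results from any study. The function name `permutation_p_value` is hypothetical.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    Group labels are shuffled repeatedly; the p-value is the share of
    shuffles whose mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    return extreme / n_permutations


# Made-up counts of violent acts per child, for illustration only.
violent_tv = [5, 7, 6, 8, 9, 6, 7, 10, 8, 7]
nonviolent_tv = [3, 4, 2, 5, 4, 3, 6, 4, 3, 5]

p = permutation_p_value(violent_tv, nonviolent_tv)
print(f"Estimated p-value: {p:.4f}")
print("Significant at the 5 percent level" if p <= 0.05 else "Not significant")
```

Shuffling the labels simulates a world in which the type of program makes no difference, so the resulting proportion is an estimate of how often chance alone would match or exceed the observed gap between the groups.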

The greatest strength of experiments is the ability to assert that any significant differences in the findings are caused by the independent variable. This is because random selection, random assignment, and a design that limits the effects of both experimenter bias and participant expectancy should create groups that are similar in composition and treatment. Therefore, any difference between the groups is attributable to the independent variable, and now we can finally make a causal statement. If we find that watching a violent television program results in more violent behavior than watching a nonviolent program, we can safely say that watching violent television programs causes an increase in the display of violent behavior.

Candela Citations

- Experimental Research. Authored by: Nicole Arduini-Van Hoose. Provided by: Hudson Valley Community College. Retrieved from: https://courses.lumenlearning.com/edpsy/chapter/experimental-research/. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

- Experimental Research. Authored by: Nicole Arduini-Van Hoose. Provided by: Hudson Valley Community College. Retrieved from: https://courses.lumenlearning.com/adolescent/chapter/experimental-research/. Project: https://courses.lumenlearning.com/adolescent/chapter/experimental-research/. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

Educational Psychology Copyright © 2020 by Nicole Arduini-Van Hoose is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Methodologies for Conducting Education Research

Marisa Cannata. Last reviewed: 19 August 2020. Last modified: 15 December 2011. DOI: 10.1093/obo/9780199756810-0061

Introduction

Education is a diverse field, and the methodologies used in education research are necessarily diverse. The reasons for the methodological diversity of education research are many, including the fact that the field of education is composed of a multitude of disciplines and tensions between basic and applied research. For example, accepted methods of systematic inquiry in history, sociology, economics, and psychology vary, yet all of these disciplines help answer important questions posed in education. This methodological diversity has led to debates about the quality of education research and the perception of shifting standards of quality research. The citations selected for inclusion in this article provide a broad overview of methodologies and discussions of quality research standards across the different types of questions posed in educational research. The citations represent summaries of ongoing debates, articles or books that have had a significant influence on education research, and guides to those who wish to implement particular methodologies. Most of the sections focus on specific methodologies and provide advice or examples for studies employing these methodologies.

General Overviews

The interdisciplinary nature of education research has implications for how that research is conducted. There is no single best research design for all questions that guide education research. Even through many often heated debates about methodologies, the common strand is that research designs should follow the research questions. The following works offer an introduction to the debates, divides, and difficulties of education research. Schoenfeld 1999, Mitchell and Haro 1999, and Shulman 1988 provide perspectives on diversity within the field of education and the implications of this diversity on the debates about education research and difficulties conducting such research. National Research Council 2002 outlines the principles of scientific inquiry and how they apply to education. Published around the time No Child Left Behind required education policies to be based on scientific research, this book laid the foundation for much of the current emphasis on experimental and quasi-experimental research in education. To read another perspective on defining good education research, readers may turn to Hostetler 2005. Readers who want a general overview of various methodologies in education research and directions on how to choose between them should read Creswell 2009 and Green, et al. 2006. The American Educational Research Association (AERA), the main professional association focused on education research, has developed standards for how to report methods and findings in empirical studies. Those wishing to follow those standards should consult American Educational Research Association 2006.

American Educational Research Association. 2006. Standards for reporting on empirical social science research in AERA publications. Educational Researcher 35.6: 33–40.

DOI: 10.3102/0013189X035006033

The American Educational Research Association is the professional association for researchers in education. Publications by AERA are a well-regarded source of research. This article outlines the requirements for reporting original research in AERA publications.

Creswell, J. W. 2009. Research design: Qualitative, quantitative, and mixed methods approaches. 3d ed. Los Angeles: SAGE.

Presents an overview of qualitative, quantitative and mixed-methods research designs, including how to choose the design based on the research question. This book is particularly helpful for those who want to design mixed-methods studies.

Green, J. L., G. Camilli, and P. B. Elmore. 2006. Handbook of complementary methods for research in education. Mahwah, NJ: Lawrence Erlbaum.

Provides a broad overview of several methods of educational research. The first part provides an overview of issues that cut across specific methodologies, and subsequent chapters delve into particular research approaches.

Hostetler, K. 2005. What is “good” education research? Educational Researcher 34.6: 16–21.

DOI: 10.3102/0013189X034006016

Goes beyond methodological concerns to argue that “good” educational research should also consider the conception of human well-being. By using a philosophical lens on debates about quality education research, this article is useful for moving beyond qualitative-quantitative divides.

Mitchell, T. R., and A. Haro. 1999. Poles apart: Reconciling the dichotomies in education research. In Issues in education research. Edited by E. C. Lagemann and L. S. Shulman, 42–62. San Francisco: Jossey-Bass.

Chapter outlines several dichotomies in education research, including the tension between applied research and basic research and between understanding the purposes of education and the processes of education.

National Research Council. 2002. Scientific research in education. Edited by R. J. Shavelson and L. Towne. Committee on Scientific Principles for Education Research. Center for Education. Division of Behavioral and Social Sciences and Education. Washington, DC: National Academy Press.

This book was released around the time the No Child Left Behind law directed that policy decisions should be guided by scientific research. It is credited with starting the current debate about methods in educational research and the preference for experimental studies.

Schoenfeld, A. H. 1999. The core, the canon, and the development of research skills. Issues in the preparation of education researchers. In Issues in education research. Edited by E. C. Lagemann and L. S. Shulman, 166–202. San Francisco: Jossey-Bass.

Describes difficulties in preparing educational researchers due to the lack of a core and a canon in education. While the focus is on preparing researchers, it provides valuable insight into why debates over education research persist.

Shulman, L. S. 1988. Disciplines of inquiry in education: An overview. In Complementary methods for research in education. Edited by R. M. Jaeger, 3–17. Washington, DC: American Educational Research Association.

Outlines what distinguishes research from other modes of disciplined inquiry and the relationship between academic disciplines, guiding questions, and methods of inquiry.




Designing and Conducting Research in Education

- By: Clifford J. Drew, Michael L. Hardman & John L. Hosp

- Publisher: SAGE Publications, Inc.

- Publication year: 2008

- Online pub date: December 22, 2014

- Discipline: Education

- Methods: Experimental design, Research questions, Measurement

- DOI: https://doi.org/10.4135/9781483385648

- Keywords: feedback, instruments, population, students, teaching, threats, web sites

- Print ISBN: 9781412960748

- Online ISBN: 9781483385648


“The authors did an excellent job of engaging students by being empathetic to their anxieties while taking a research design course. The authors also present a convincing case of the relevancies of research in daily life by showing how information was used or misused to affect our personal and professional decisions.” —Cherng-Jyh Yen, George Washington University

A practice-oriented, non-mathematical approach to understanding, planning, conducting, and interpreting research in education

Practical and applied, Designing and Conducting Research in Education is the perfect first step for students who will be consuming research as well as for those who will be actively involved in conducting research. Readers will find up-to-date examinations of quantitative, qualitative, and mixed-methods research approaches which have emerged as important components in the toolbox of educational research. Real-world situations are presented in each chapter taking the reader through various challenges often encountered in the world of educational research.

Key Features: Examines quantitative, qualitative, and mixed-methods research approaches, which have emerged as important components in the toolbox of educational research; Explains each step of the research process very practically to help students plan and conduct a research project in education; Applies research in real-world situations by taking the reader through various challenges often encountered in field settings; Includes a chapter on ethical issues in conducting research; Provides a Student study site that offers the opportunity to interact with contemporary research articles in education; Instructor Resources on CD provide a Computerized test bank, Sample Syllabi, General Teaching Tips and more

Intended audience: This book provides an introduction to research that emphasizes the fundamental concepts of planning and design. The book is designed to be a core text for the very first course on research methods. In some fields the first course is offered at an undergraduate level whereas in others it is a beginning graduate class.

“The book is perfect for introductory students. The language is top notch, the examples are helpful, and the graphic features (tables, figures) are uncomplicated and contain important information in an easy-to-understand format. Excellent text!” —John Huss, Northern Kentucky University

“Designing and Conducting Research in Education is written in a style that is conducive to learning for the type of graduate students we teach here in the College of Education. I appreciate the ‘friendly’ tone and concise writing that the authors utilize.” —Steven Harris, Tarleton State University

“A hands on, truly accessible text on how to design and conduct research”

—Joan P. Sebastian, National University

Front Matter

- Acknowledgments

- Chapter 1 | The Foundations of Research

- Chapter 2 | The Research Process

- Chapter 3 | Ethical Issues in Conducting Research

- Chapter 4 | Participant Selection and Assignment

- Chapter 5 | Measures and Instruments

- Chapter 6 | Quantitative Research Methodologies

- Chapter 7 | Designing Nonexperimental Research

- Chapter 8 | Introduction to Qualitative Research and Mixed-Method Designs

- Chapter 9 | Research Design Pitfalls

- Chapter 10 | Statistics Choices

- Chapter 11 | Data Tabulation

- Chapter 12 | Descriptive Statistics

- Chapter 13 | Inferential Statistics

- Chapter 14 | Analyzing Qualitative Data

- Chapter 15 | Interpreting Results

Back Matter

- Appendix: Random Numbers Table

- About the Authors


13. Experimental design

Chapter outline.

- What is an experiment and when should you use one? (8 minute read)

- True experimental designs (7 minute read)

- Quasi-experimental designs (8 minute read)

- Non-experimental designs (5 minute read)

- Critical, ethical, and cultural considerations (5 minute read)

Content warning : examples in this chapter contain references to non-consensual research in Western history, including experiments conducted during the Holocaust and on African Americans (section 13.6).

13.1 What is an experiment and when should you use one?

Learning objectives.

Learners will be able to…

- Identify the characteristics of a basic experiment

- Describe causality in experimental design

- Discuss the relationship between dependent and independent variables in experiments

- Explain the links between experiments and generalizability of results

- Describe advantages and disadvantages of experimental designs

The basics of experiments

The first experiment I can remember using was for my fourth grade science fair. I wondered if latex- or oil-based paint would hold up to sunlight better. So, I went to the hardware store and got a few small cans of paint and two sets of wooden paint sticks. I painted one with oil-based paint and the other with latex-based paint of different colors and put them in a sunny spot in the back yard. My hypothesis was that the oil-based paint would fade the most and that more fading would happen the longer I left the paint sticks out. (I know, it’s obvious, but I was only 10.)

I checked in on the paint sticks every few days for a month and wrote down my observations. The first part of my hypothesis ended up being wrong—it was actually the latex-based paint that faded the most. But the second part was right, and the paint faded more and more over time. This is a simple example, of course—experiments get a heck of a lot more complex than this when we’re talking about real research.

Merriam-Webster defines an experiment as “an operation or procedure carried out under controlled conditions in order to discover an unknown effect or law, to test or establish a hypothesis, or to illustrate a known law.” Each of these three components of the definition will come in handy as we go through the different types of experimental design in this chapter. Most of us probably think of the physical sciences when we think of experiments, and for good reason—these experiments can be pretty flashy! But social science and psychological research follow the same scientific methods, as we’ve discussed in this book.

Experiments can be used in social sciences just like they can in physical sciences. It makes sense to use an experiment when you want to determine the cause of a phenomenon with as much accuracy as possible. Some types of experimental designs do this more precisely than others, as we’ll see throughout the chapter. If you’ll remember back to Chapter 11 and the discussion of validity, experiments are the best way to ensure internal validity, or the extent to which a change in your independent variable causes a change in your dependent variable.

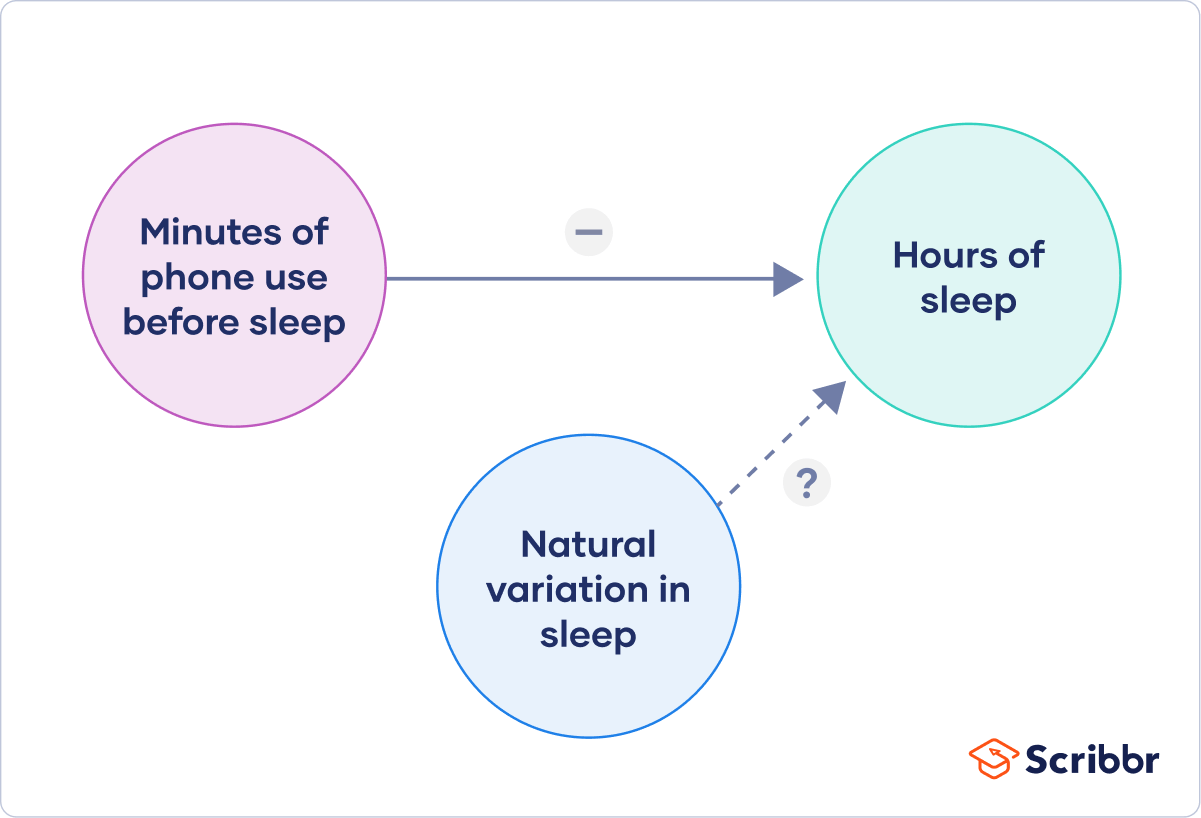

Experimental designs for research projects are most appropriate when trying to uncover or test a hypothesis about the cause of a phenomenon, so they are best for explanatory research questions. As we’ll learn throughout this chapter, different circumstances are appropriate for different types of experimental designs. Each type of experimental design has advantages and disadvantages, and some are better at controlling the effect of extraneous variables: those variables and characteristics that have an effect on your dependent variable, but aren’t the primary variable whose influence you’re interested in testing. For example, in a study that tries to determine whether aspirin lowers a person’s risk of a fatal heart attack, a person’s race would likely be an extraneous variable because you primarily want to know the effect of aspirin.

In practice, many types of experimental designs can be logistically challenging and resource-intensive. As practitioners, the likelihood that we will be involved in some of the types of experimental designs discussed in this chapter is fairly low. However, it’s important to learn about these methods, even if we might not ever use them, so that we can be thoughtful consumers of research that uses experimental designs.

While we might not use all of these types of experimental designs, many of us will engage in evidence-based practice during our time as social workers. A lot of research developing evidence-based practice, which has a strong emphasis on generalizability, will use experimental designs. You’ve undoubtedly seen one or two in your literature search so far.

The logic of experimental design

How do we know that one phenomenon causes another? The complexity of the social world in which we practice and conduct research means that causes of social problems are rarely cut and dried. Uncovering explanations for social problems is key to helping clients address them, and experimental research designs are one road to finding answers.

As you read about in Chapter 8 (and as we’ll discuss again in Chapter 15), just because two phenomena are related in some way doesn’t mean that one causes the other. Ice cream sales increase in the summer, and so does the rate of violent crime; does that mean that eating ice cream is going to make me murder someone? Obviously not, because ice cream is great. The reality of that relationship is far more complex: it could be that hot weather makes people more irritable and, at times, violent, while also making people want ice cream. More likely, though, there are other social factors not accounted for in the way we just described this relationship.
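To see how a third variable can manufacture a relationship like this, here is a minimal Python sketch using simulated data. Everything in it is an assumption made for illustration: the variable names, the 1,000 simulated days, and the numbers linking temperature to ice cream sales and to crime are invented, not drawn from any real data set. The sketch computes the raw correlation between sales and crime, then the partial correlation after accounting for temperature.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def partial_corr(xs, ys, zs):
    """Correlation between xs and ys after removing the linear effect of zs."""
    r_xy = pearson(xs, ys)
    r_xz = pearson(xs, zs)
    r_yz = pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


rng = random.Random(42)  # fixed seed so the simulation is repeatable

# Hypothetical world: temperature drives BOTH outcomes; neither drives the other.
temperature = [rng.uniform(0, 35) for _ in range(1000)]
ice_cream_sales = [2.0 * t + rng.gauss(0, 5) for t in temperature]
violent_crimes = [1.0 * t + rng.gauss(0, 5) for t in temperature]

raw_r = pearson(ice_cream_sales, violent_crimes)
adjusted_r = partial_corr(ice_cream_sales, violent_crimes, temperature)

print(f"raw correlation:                 {raw_r:.2f}")
print(f"correlation holding temp fixed:  {adjusted_r:.2f}")
```

In this made-up world the raw correlation is strong, while the correlation that holds temperature constant sits near zero. Real social data are messier, and a statistical adjustment like this only handles the variables you thought to measure, which is part of why experiments are attractive.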

Experimental designs can help clear up at least some of this fog by allowing researchers to isolate the effect of interventions on dependent variables by controlling extraneous variables. In true experimental design (discussed in the next section) and some quasi-experimental designs, researchers accomplish this with the control group and the experimental group. (The experimental group is sometimes called the “treatment group,” but we will call it the experimental group in this chapter.) The control group does not receive the intervention you are testing (they may receive no intervention or what is known as “treatment as usual”), while the experimental group does. (You will hopefully remember our earlier discussion of control variables in Chapter 8; conceptually, the use of the word “control” here is the same.)

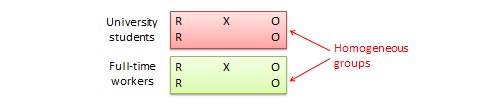

In a well-designed experiment, your control group should look almost identical to your experimental group in terms of demographics and other relevant factors. What if we want to know the effect of CBT on social anxiety, but we have learned in prior research that men tend to have a more difficult time overcoming social anxiety? We would want our control and experimental groups to have a similar gender mix because it would limit the effect of gender on our results, since ostensibly, both groups’ results would be affected by gender in the same way. If your control group has 5 women, 6 men, and 4 non-binary people, then your experimental group should be made up of roughly the same gender balance to help control for the influence of gender on the outcome of your intervention. (In reality, the groups should be similar along other dimensions, as well, and your group will likely be much larger.) The researcher will use the same outcome measures for both groups and compare them, and assuming the experiment was designed correctly, get a pretty good answer about whether the intervention had an effect on social anxiety.
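A quick way to check whether two groups look alike on a characteristic is to compare the share of each category in each group. The sketch below does that for gender, reusing the control group counts from the example above; the experimental group counts and the function names are hypothetical, chosen only for illustration.

```python
from collections import Counter


def composition(group):
    """Return the proportion of each category within a group."""
    counts = Counter(group)
    total = len(group)
    return {category: count / total for category, count in counts.items()}


def max_imbalance(group_a, group_b):
    """Largest absolute gap in category proportions between two groups."""
    comp_a = composition(group_a)
    comp_b = composition(group_b)
    categories = set(comp_a) | set(comp_b)
    return max(abs(comp_a.get(c, 0.0) - comp_b.get(c, 0.0)) for c in categories)


# Control group from the text: 5 women, 6 men, 4 non-binary people.
control = ["woman"] * 5 + ["man"] * 6 + ["non-binary"] * 4
# Hypothetical experimental group with a similar, but not identical, mix.
experimental = ["woman"] * 6 + ["man"] * 5 + ["non-binary"] * 4

imbalance = max_imbalance(control, experimental)
print(f"largest gap in gender proportions: {imbalance:.3f}")
```

A small gap suggests the groups are comparable on this one characteristic. How small is small enough is a judgment call, and in a real study you would run the same kind of check on every relevant factor, not only gender.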

You will also hear people talk about comparison groups, which are similar to control groups. The primary difference between the two is that a control group is populated using random assignment, but a comparison group is not. Random assignment entails using a random process to decide which participants are put into the control or experimental group (which participants receive an intervention and which do not). By randomly assigning participants to a group, you can reduce the effect of extraneous variables on your research because there won’t be a systematic difference between the groups.

Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other related fields. Random sampling also helps a great deal with generalizability, whereas random assignment increases internal validity.
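Because the two ideas are easy to mix up, it may help to see them as two separate steps in code. This is a minimal sketch, assuming a made-up population of 10,000 people and a sample of 100; the function names `draw_random_sample` and `randomly_assign` are hypothetical helpers written for this example.

```python
import random


def draw_random_sample(population, sample_size, rng):
    """Random SAMPLING: choose who takes part in the study at all."""
    return rng.sample(population, k=sample_size)


def randomly_assign(sample, rng):
    """Random ASSIGNMENT: split the people already in the study into two groups."""
    shuffled = list(sample)
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


rng = random.Random(13)  # fixed seed so the example is repeatable

population = [f"person_{i}" for i in range(10_000)]  # hypothetical population
sample = draw_random_sample(population, 100, rng)
experimental_group, control_group = randomly_assign(sample, rng)

print(len(sample), len(experimental_group), len(control_group))  # 100 50 50
```

The first step bears on who your results can be generalized to; the second bears on whether the two groups are comparable. A study can do either one without the other.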

We have already learned about internal validity in Chapter 11. The use of an experimental design will bolster internal validity since it works to isolate causal relationships. As we will see in the coming sections, some types of experimental design do this more effectively than others. It’s also worth considering that true experiments, which most effectively show causality, are often difficult and expensive to implement. Although other experimental designs aren’t perfect, they still produce useful, valid evidence and may be more feasible to carry out.
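The simulation below is one way to picture why random assignment protects internal validity. It is a sketch under invented assumptions: anxiety scores are made up, the "true" treatment effect is set to a 5-point drop, and in the non-random version people with milder symptoms are the ones who opt into treatment. None of these numbers come from real research.

```python
import random
from statistics import mean

TRUE_EFFECT = -5.0  # hypothetical: treatment lowers the anxiety score by 5 points


def outcome(severity, treated, rng):
    """Post-treatment score: baseline severity, plus the effect if treated, plus noise."""
    return severity + (TRUE_EFFECT if treated else 0.0) + rng.gauss(0, 5)


def estimated_effect(records):
    """Difference in mean outcome: treated group minus untreated group."""
    treated = [score for got_treatment, score in records if got_treatment]
    untreated = [score for got_treatment, score in records if not got_treatment]
    return mean(treated) - mean(untreated)


rng = random.Random(2024)
severities = [rng.gauss(50, 10) for _ in range(4000)]

# Random assignment: a coin flip decides who is treated.
randomized = []
for severity in severities:
    treated = rng.random() < 0.5
    randomized.append((treated, outcome(severity, treated, rng)))

# Self-selection: only people with milder symptoms end up in treatment.
self_selected = []
for severity in severities:
    treated = severity < 50
    self_selected.append((treated, outcome(severity, treated, rng)))

random_estimate = estimated_effect(randomized)
selected_estimate = estimated_effect(self_selected)

print(f"true effect:               {TRUE_EFFECT:.1f}")
print(f"random assignment says:    {random_estimate:.1f}")
print(f"self-selected groups say:  {selected_estimate:.1f}")
```

With random assignment the estimate lands close to the effect that was built in. With self-selected groups the treatment looks far more powerful than it is, because baseline severity (an extraneous variable) differs systematically between the groups.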

Key Takeaways

- Experimental designs are useful for establishing causality, but some types of experimental design do this better than others.

- Experiments help researchers isolate the effect of the independent variable on the dependent variable by controlling for the effect of extraneous variables.

- Experiments use a control/comparison group and an experimental group to test the effects of interventions. These groups should be as similar to each other as possible in terms of demographics and other relevant factors.

- True experiments have control groups with randomly assigned participants, while other types of experiments have comparison groups to which participants are not randomly assigned.

- Think about the research project you’ve been designing so far. How might you use a basic experiment to answer your question? If your question isn’t explanatory, try to formulate a new explanatory question and consider the usefulness of an experiment.

- Why is establishing a simple relationship between two variables not indicative of one causing the other?

13.2 True experimental design

- Describe a true experimental design in social work research

- Understand the different types of true experimental designs

- Determine what kinds of research questions true experimental designs are suited for

- Discuss advantages and disadvantages of true experimental designs

True experimental design, often considered to be the “gold standard” in research designs, is thought of as one of the most rigorous of all research designs. In this design, one or more independent variables are manipulated by the researcher (as treatments), subjects are randomly assigned to different treatment levels (random assignment), and the results of the treatments on outcomes (dependent variables) are observed. The unique strength of experimental research is its internal validity and its ability to establish causality through treatment manipulation, while controlling for the effects of extraneous variables. Sometimes the treatment level is no treatment, while other times it is simply a different treatment than that which we are trying to evaluate. For example, we might have a control group that is made up of people who will not receive any treatment for a particular condition. Or, a control group could consist of people who consent to treatment with DBT when we are testing the effectiveness of CBT.

As we discussed in the previous section, a true experiment has a control group and an experimental group, with participants randomly assigned to each. This is the most basic element of a true experiment. The next decision a researcher must make is when they need to gather data during their experiment. Do they take a baseline measurement and then a measurement after treatment, or just a measurement after treatment, or do they handle measurement another way? Below, we’ll discuss the three main types of true experimental designs. There are sub-types of each of these designs, but here, we just want to get you started with some of the basics.

Using a true experiment in social work research is often pretty difficult since, as I mentioned earlier, true experiments can be quite resource intensive. True experiments work best with relatively large sample sizes, and random assignment, a key criterion for a true experimental design, is hard (and unethical) to execute in practice when you have people in dire need of an intervention. Nonetheless, some of the strongest evidence bases are built on true experiments.

For the purposes of this section, let’s bring back the example of CBT for the treatment of social anxiety. We have a group of 500 individuals who have agreed to participate in our study, and we have randomly assigned them to the control and experimental groups. The folks in the experimental group will receive CBT, while the folks in the control group will receive more unstructured, basic talk therapy. These designs, as we talked about above, are best suited for explanatory research questions.
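To make the random assignment step concrete, here is a minimal Python sketch of how the 500 participants might be split into two groups. It is only an illustration: the function name `randomly_assign`, the numeric participant IDs, the even 250/250 split, and the seed value are all assumptions made for this example, not details of any actual study.

```python
import random

def randomly_assign(participant_ids, seed=None):
    """Shuffle participants and split them into two equal-sized groups.

    Hypothetical helper for illustration; real studies often use more
    elaborate schemes (e.g., stratified or blocked assignment).
    """
    rng = random.Random(seed)      # seeded so the split can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)          # every ordering is equally likely
    half = len(shuffled) // 2
    return {
        "experimental": shuffled[:half],  # will receive CBT
        "control": shuffled[half:],       # will receive basic talk therapy
    }

# Assumed ID scheme: participants numbered 1 through 500
participants = list(range(1, 501))
groups = randomly_assign(participants, seed=42)
print(len(groups["experimental"]), len(groups["control"]))
```

Because chance alone decides who lands in which group, any pre-existing differences between participants should, on average, be spread evenly across the two groups.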

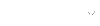

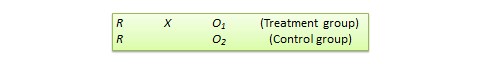

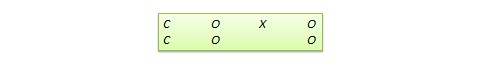

Before we get started, take a look at the table below. When explaining experimental research designs, we often use diagrams with abbreviations to visually represent the experiment. Table 13.1 starts us off by laying out what each of the abbreviations means.

Pretest and post-test control group design

In pretest and post-test control group design, participants are given a pretest of some kind to measure their baseline state before their participation in an intervention. In our social anxiety experiment, we would have participants in both the experimental and control groups complete some measure of social anxiety (most likely an established scale and/or a structured interview) before they start their treatment. As part of the experiment, we would have a defined time period during which the treatment would take place (let’s say 12 weeks, just for illustration). At the end of 12 weeks, we would give both groups the same measure as a post-test.

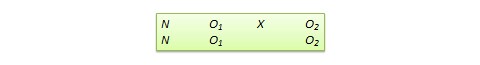

In the diagram, RA (random assignment group A) is the experimental group and RB is the control group. O₁ denotes the pre-test, Xₑ denotes the experimental intervention, and O₂ denotes the post-test. Let’s look at this diagram another way, using the example of CBT for social anxiety that we’ve been talking about.

In a situation where the control group received treatment as usual instead of no intervention, the diagram would look this way, with Xᵢ denoting treatment as usual (Figure 13.3).

Hopefully, these diagrams provide you a visualization of how this type of experiment establishes time order, a key component of a causal relationship. Did the change occur after the intervention? Assuming there is a change in the scores between the pretest and post-test, we would be able to say that yes, the change did occur after the intervention. Causality can’t exist if the change happened before the intervention, since this would mean that something else led to the change, not our intervention.
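As a rough illustration of how pretest and post-test scores might be compared, the Python sketch below computes the average change for each group and the gap between them. All scores are made up for the example (higher means more social anxiety), and the helper name `mean_change` is hypothetical. A real analysis would also test whether the difference is statistically significant.

```python
from statistics import mean

def mean_change(pretest, posttest):
    """Average of (post-test minus pretest) across participants."""
    return mean(post - pre for pre, post in zip(pretest, posttest))

# Hypothetical social anxiety scores for four participants per group
experimental_pre = [30, 28, 35, 32]
experimental_post = [20, 22, 25, 21]   # after 12 weeks of CBT
control_pre = [31, 29, 34, 30]
control_post = [28, 27, 33, 28]        # after 12 weeks of talk therapy

exp_change = mean_change(experimental_pre, experimental_post)
ctl_change = mean_change(control_pre, control_post)

# How much more did scores change in the experimental group?
difference = exp_change - ctl_change
print(exp_change, ctl_change, difference)
```

In this invented data, scores in the experimental group fall by 9.25 points on average, compared with 2 points in the control group. Because both measurements bracket the intervention, the change can be placed after the treatment in time.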

Post-test only control group design

Post-test only control group design involves only giving participants a post-test, just like it sounds (Figure 13.4).

But why would you use this design instead of using a pretest/post-test design? One reason could be the testing effect that can happen when research participants take a pretest. In research, the testing effect refers to “measurement error related to how a test is given; the conditions of the testing, including environmental conditions; and acclimation to the test itself” (Engel & Schutt, 2017, p. 444) [1]. (When we say “measurement error,” all we mean is inaccuracy in the way we measure the dependent variable.) Figure 13.4 is a visualization of this type of experiment. The testing effect isn’t always bad in practice: our initial assessments might help clients identify or put into words feelings or experiences they are having when they haven’t been able to do that before. In research, however, we might want to control its effects to isolate a cleaner causal relationship between intervention and outcome.

Going back to our CBT for social anxiety example, we might be concerned that participants would learn about social anxiety symptoms by virtue of taking a pretest. They might then identify that they have those symptoms on the post-test, even though they are not new symptoms for them. That could make our intervention look less effective than it actually is.

However, without a baseline measurement, establishing causality can be more difficult. If we don’t know someone’s state of mind before our intervention, how do we know our intervention did anything at all? Establishing time order is thus a little more difficult. You must balance this consideration with the benefits of this type of design.

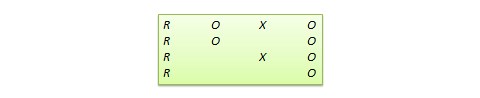

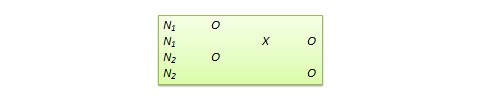

Solomon four group design

One way we can possibly measure how much the testing effect might change the results of the experiment is with the Solomon four group design. Basically, as part of this experiment, you have two control groups and two experimental groups. The first pair of groups receives both a pretest and a post-test. The other pair of groups receives only a post-test (Figure 13.5). This design helps address the problem of establishing time order in post-test only control group designs.

For our CBT project, we would randomly assign people to four different groups instead of just two. Groups A and B would take our pretest measures and our post-test measures, and groups C and D would take only our post-test measures. We could then compare the post-test results and see whether they differ significantly between the pretested groups (A and B) and the post-test only groups (C and D). If they do, we may have identified some kind of testing effect, which enables us to put our results into full context. We don’t want to draw a strong causal conclusion about our intervention when we have major concerns about testing effects without trying to determine the extent of those effects.

Solomon four group designs are less common in social work research, primarily because of the logistics and resource needs involved. Nonetheless, this is an important experimental design to consider when we want to address major concerns about testing effects.

- True experimental design is best suited for explanatory research questions.

- True experiments require random assignment of participants to control and experimental groups.

- Pretest/post-test research design involves two points of measurement—one pre-intervention and one post-intervention.

- Post-test only research design involves only one point of measurement, taken post-intervention. It is a useful design for minimizing the influence of testing effects on our results.

- Solomon four group research design involves both of the above types of designs, using 2 pairs of control and experimental groups. One pair receives both a pretest and a post-test, while the other pair receives only a post-test. This can help uncover the influence of testing effects.

- Think about a true experiment you might conduct for your research project. Which design would be best for your research, and why?

- What challenges or limitations might make it unrealistic (or at least very complicated!) for you to carry out your true experimental design in the real world as a student researcher?

- What hypothesis(es) would you test using this true experiment?

13.4 Quasi-experimental designs

- Describe a quasi-experimental design in social work research

- Understand the different types of quasi-experimental designs

- Determine what kinds of research questions quasi-experimental designs are suited for

- Discuss advantages and disadvantages of quasi-experimental designs

Quasi-experimental designs are a lot more common in social work research than true experimental designs. Although quasi-experiments don’t do as good a job of giving us robust proof of causality, they still allow us to establish time order, which is a key element of causality. The prefix quasi means “resembling,” so quasi-experimental research is research that resembles experimental research, but is not true experimental research. Nonetheless, given proper research design, quasi-experiments can still provide extremely rigorous and useful results.

There are a few key differences between true experimental and quasi-experimental research. The primary difference is that quasi-experimental research does not involve random assignment to control and experimental groups. Instead, we talk about comparison groups in quasi-experimental research. As a result, these types of experiments don’t control the effect of extraneous variables as well as a true experiment does.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment, perhaps a type of psychotherapy or an educational intervention. We’re able to eliminate some threats to internal validity, but we can’t do this as effectively as we can with a true experiment. Realistically, our CBT-social anxiety project is likely to be a quasi-experiment, based on the resources and participant pool we’re likely to have available.

It’s important to note that not all quasi-experimental designs have a comparison group. There are many different kinds of quasi-experiments, but we will discuss the three main types below: nonequivalent comparison group designs, time series designs, and ex post facto comparison group designs.

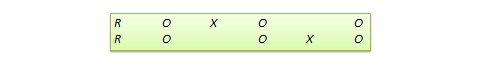

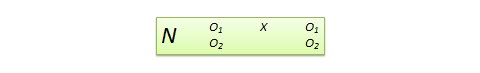

Nonequivalent comparison group design

You will notice that this type of design looks extremely similar to the pretest/post-test design that we discussed in section 13.3. But instead of random assignment to control and experimental groups, researchers use other methods to construct their comparison and experimental groups. A diagram of this design will also look very similar to pretest/post-test design, but you’ll notice we’ve removed the “R” from our groups, since they are not randomly assigned (Figure 13.6).

Researchers using this design select a comparison group that’s as close as possible based on relevant factors to their experimental group. Engel and Schutt (2017) [2] identify two different selection methods:

- Individual matching: Researchers take the time to match individual cases in the experimental group to similar cases in the comparison group. It can be difficult, however, to match participants on all the variables you want to control for.

- Aggregate matching: Instead of trying to match individual participants to each other, researchers try to match the population profile of the comparison and experimental groups. For example, researchers would try to match the groups on average age, gender balance, or median income. This is a less resource-intensive matching method, but researchers have to ensure that participants aren’t choosing which group (comparison or experimental) they are a part of.

As we’ve already talked about, this kind of design provides weaker evidence that the intervention itself leads to a change in outcome. Nonetheless, we are still able to establish time order using this method, and can thereby show an association between the intervention and the outcome. Like true experimental designs, this type of quasi-experimental design is useful for explanatory research questions.

What might this look like in a practice setting? Let’s say you’re working at an agency that provides CBT and other types of interventions, and you have identified a group of clients who are seeking help for social anxiety, as in our earlier example. Once you’ve obtained consent from your clients, you can create a comparison group using one of the matching methods we just discussed. If the group is small, you might match using individual matching, but if it’s larger, you’ll probably sort people by demographics to try to get similar population profiles. (You can do aggregate matching more easily when your agency has some kind of electronic records or database, but it’s still possible to do manually.)
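For readers who work with agency records electronically, the two matching methods might look something like the Python sketch below. Everything here is hypothetical: the client records, the choice of age and gender as matching variables, and the helper name `match_individuals`. The greedy nearest-age approach is just one simple way to do individual matching, not a method prescribed by Engel and Schutt.

```python
from statistics import mean

def match_individuals(experimental, pool):
    """Greedy one-to-one matching: pair each experimental client with the
    closest-aged, not-yet-used client of the same gender from the pool."""
    available = list(pool)
    matches = {}
    for person in experimental:
        candidates = [c for c in available if c["gender"] == person["gender"]]
        if not candidates:
            continue  # no acceptable match; this client stays unmatched
        best = min(candidates, key=lambda c: abs(c["age"] - person["age"]))
        matches[person["id"]] = best["id"]
        available.remove(best)  # each comparison client is used only once
    return matches

# Hypothetical client records
experimental = [
    {"id": "E1", "age": 24, "gender": "F"},
    {"id": "E2", "age": 41, "gender": "M"},
]
pool = [
    {"id": "P1", "age": 39, "gender": "M"},
    {"id": "P2", "age": 25, "gender": "F"},
    {"id": "P3", "age": 52, "gender": "F"},
]

matches = match_individuals(experimental, pool)
print(matches)

# Aggregate check: how close are the two groups on average age?
matched_ages = [c["age"] for c in pool if c["id"] in matches.values()]
age_gap = mean(p["age"] for p in experimental) - mean(matched_ages)
print(age_gap)
```

The first part pairs clients one by one, which is the idea behind individual matching. The final lines compare the groups' overall profile on a single variable, which is the kind of check aggregate matching relies on.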

Time series design