8.5 Writing Process: Creating an Analytical Report

Learning Outcomes

By the end of this section, you will be able to:

- Identify the elements of the rhetorical situation for your report.

- Find and focus a topic to write about.

- Gather and analyze information from appropriate sources.

- Distinguish among different kinds of evidence.

- Draft a thesis and create an organizational plan.

- Compose a report that develops ideas and integrates evidence from sources.

- Give and act on productive feedback on works in progress.

You might think that writing comes easily to experienced writers—that they draft stories and college papers all at once, sitting down at the computer and having sentences flow from their fingers like water from a faucet. In reality, most writers engage in a recursive process, pushing forward, stepping back, and repeating steps multiple times as their ideas develop and change. In broad strokes, the steps most writers go through are these:

- Planning and Organization. You will have an easier time drafting if you devote time at the beginning to consider the rhetorical situation for your report, understand your assignment, gather ideas and information, draft a thesis statement, and create an organizational plan.

- Drafting. When you have an idea of what you want to say and the order in which you want to say it, you’re ready to draft. As much as possible, keep going until you have a complete first draft of your report, resisting the urge to go back and rewrite. Save that for after you have completed a first draft.

- Review. Now is the time to get feedback from others, whether from your instructor, your classmates, a tutor in the writing center, your roommate, someone in your family, or someone else you trust to read your writing critically and give you honest feedback.

- Revising. With feedback on your draft, you are ready to revise. You may need to return to an earlier step and make large-scale revisions that involve planning, organizing, and rewriting, or you may need to work mostly on ensuring that your sentences are clear and correct.

Considering the Rhetorical Situation

Like other kinds of writing projects, a report starts with assessing the rhetorical situation: the circumstance in which a writer communicates with an audience of readers about a subject. As the writer of a report, you make choices based on the purpose of your writing, the audience who will read it, the genre of the report, and the expectations of the community and culture in which you are working. A graphic organizer like Table 8.1 can help you begin.

Summary of Assignment

Write an analytical report on a topic that interests you and that you want to know more about. The topic can be contemporary or historical, but it must be one that you can analyze and support with evidence from sources.

The following questions can help you think about a topic suitable for analysis:

- Why or how did ________ happen?

- What are the results or effects of ________?

- Is ________ a problem? If so, why?

- What are examples of ________ or reasons for ________?

- How does ________ compare to or contrast with other issues, concerns, or things?

Consult and cite three to five reliable sources. The sources do not have to be scholarly for this assignment, but they must be credible, trustworthy, and unbiased. Possible sources include academic journals, newspapers, magazines, reputable websites, government publications or agency websites, and visual sources such as TED Talks. You may also use the results of an experiment or survey, and you may want to conduct interviews.

Consider whether visuals and media will enhance your report. Can you present data you collect visually? Would a map, photograph, chart, or other graphic provide interesting and relevant support? Would video or audio allow you to present evidence that you would otherwise need to describe in words?

Another Lens. To gain another analytic view on the topic of your report, consider different people affected by it. Say, for example, that you have decided to report on recent high school graduates and the effect of the COVID-19 pandemic on the final months of their senior year. If you are a recent high school graduate, you might naturally gravitate toward writing about yourself and your peers. But you might also consider the adults in the lives of recent high school graduates—for example, teachers, parents, or grandparents—and how they view the same period. Or you might consider the same topic from the perspective of a college admissions department looking at their incoming freshman class.

Quick Launch: Finding and Focusing a Topic

Coming up with a topic for a report can be daunting because you can report on nearly anything. The topic can easily get too broad, trapping you in the realm of generalizations. The trick is to find a topic that interests you and focus on an angle you can analyze in order to say something significant about it. You can use a graphic organizer to generate ideas, or you can use a concept map similar to the one featured in Writing Process: Thinking Critically About a “Text.”

Asking the Journalist’s Questions

One way to generate ideas about a topic is to ask the five W (and one H) questions, also called the journalist’s questions: Who? What? When? Where? Why? How? Try answering the following questions to explore a topic:

- Who was or is involved in ________?

- What happened/is happening with ________? What were/are the results of ________?

- When did ________ happen? Is ________ happening now?

- Where did ________ happen, or where is ________ happening?

- Why did ________ happen, or why is ________ happening now?

- How did ________ happen?

For example, imagine that you have decided to write your analytical report on the effect of the COVID-19 shutdown on high school students by interviewing students on your college campus. Your questions and answers might look something like those in Table 8.2.

Asking Focused Questions

Another way to find a topic is to ask focused questions about it. For example, you might ask the following questions about the effect of the 2020 pandemic shutdown on recent high school graduates:

- How did the shutdown change students’ feelings about their senior year?

- How did the shutdown affect their decisions about post-graduation plans, such as work or going to college?

- How did the shutdown affect their academic performance in high school or in college?

- How did/do they feel about continuing their education?

- How did the shutdown affect their social relationships?

Any of these questions might be developed into a thesis for an analytical report. Table 8.3 shows more examples of broad topics and focusing questions.

Gathering Information

Because they are based on information and evidence, most analytical reports require you to do at least some research. Depending on your assignment, you may be able to find reliable information online, or you may need to do primary research by conducting an experiment, a survey, or interviews. For example, if you live among students in their late teens and early twenties, consider what they can tell you about their lives that you might be able to analyze. Returning to or graduating from high school, starting college, or returning to college in the midst of a global pandemic has provided them, for better or worse, with educational and social experiences that are shared widely by people their age and very different from the experiences older adults had at the same age.

Some report assignments will require you to do formal research, an activity that involves finding sources and evaluating them for reliability, reading them carefully, taking notes, and citing all words you quote and ideas you borrow. See Research Process: Accessing and Recording Information and Annotated Bibliography: Gathering, Evaluating, and Documenting Sources for detailed instruction on conducting research.

Whether you conduct in-depth research or not, keep track of the ideas that come to you and the information you learn. You can write or dictate notes using an app on your phone or computer, or you can jot notes in a journal if you prefer pen and paper. Then, when you are ready to begin organizing your report, you will have a record of your thoughts and information. Always track the sources of information you gather, whether from printed or digital material or from a person you interviewed, so that you can return to the sources if you need more information. And always credit the sources in your report.

Kinds of Evidence

Depending on your assignment and the topic of your report, certain kinds of evidence may be more effective than others. Other kinds of evidence may even be required. As a general rule, choose evidence that is rooted in verifiable facts and experience. In addition, select the evidence that best supports the topic and your approach to the topic, be sure the evidence meets your instructor’s requirements, and cite any evidence you use that comes from a source. The following list contains different kinds of frequently used evidence and an example of each.

Definition: An explanation of a key word, idea, or concept.

The U.S. Census Bureau refers to a “young adult” as a person between 18 and 34 years old.

Example: An illustration of an idea or concept.

The college experience in the fall of 2020 was starkly different from that of previous years. Students who lived in residence halls were assigned to small pods. On-campus dining services were limited. Classes were small and physically distanced or conducted online. Parties were banned.

Expert opinion: A statement by a professional in the field whose opinion is respected.

According to Louise Aronson, MD, geriatrician and author of Elderhood, people over the age of 65 are the happiest of any age group, reporting “less stress, depression, worry, and anger, and more enjoyment, happiness, and satisfaction” (255).

Fact: Information that can be proven correct or accurate.

According to data collected by the NCAA, the academic success of Division I college athletes between 2015 and 2019 was consistently high (Hosick).

Interview: An in-person, phone, or remote conversation that involves an interviewer posing questions to another person or people.

During our interview, I asked Betty about living without a cell phone during the pandemic. She said that before the pandemic, she hadn’t needed a cell phone in her daily activities, but she soon realized that she, and people like her, were increasingly at a disadvantage.

Quotation: The exact words of an author or a speaker.

In response to whether she thought she needed a cell phone, Betty said, “I got along just fine without a cell phone when I could go everywhere in person. The shift to needing a phone came suddenly, and I don’t have extra money in my budget to get one.”

Statistics: A numerical fact or item of data.

The Pew Research Center reported that approximately 25 percent of Hispanic Americans and 17 percent of Black Americans relied on smartphones for online access, compared with 12 percent of White people.

Survey: A structured interview in which respondents (the people who answer the survey questions) are all asked the same questions, either in person or through print or electronic means, and their answers tabulated and interpreted. Surveys discover attitudes, beliefs, or habits of the general public or segments of the population.

A survey of 3,000 mobile phone users in October 2020 showed that 54 percent of respondents used their phones for messaging, while 40 percent used their phones for calls (Steele).

Visuals: Graphs, figures, tables, photographs and other images, diagrams, charts, maps, videos, and audio recordings, among others.

Thesis and Organization

Drafting a Thesis

When you have a grasp of your topic, move on to the next phase: drafting a thesis. The thesis is the central idea that you will explore and support in your report; all paragraphs in your report should relate to it. In an essay-style analytical report, you will likely express this main idea in a thesis statement of one or two sentences toward the end of the introduction.

For example, if you found that the academic performance of student athletes was higher than that of non-athletes, you might write the following thesis statement:

Although a common stereotype is that college athletes barely pass their classes, an analysis of athletes’ academic performance indicates that athletes drop fewer classes, earn higher grades, and are more likely to be on track to graduate in four years when compared with their non-athlete peers.

The thesis statement often previews the organization of your writing. For example, in his report on the U.S. response to the COVID-19 pandemic in 2020, Trevor Garcia wrote the following thesis statement, which detailed the central idea of his report:

An examination of the U.S. response shows that a reduction of experts in key positions and programs, inaction that led to equipment shortages, and inconsistent policies were three major causes of the spread of the virus and the resulting deaths.

After you draft a thesis statement, ask these questions, and examine your thesis as you answer them. Revise your draft as needed.

- Is it interesting? A thesis for a report should answer a question that is worth asking and piques curiosity.

- Is it precise and specific? If you are interested in reducing pollution in a nearby lake, explain how to stop the zebra mussel infestation or reduce the frequent algae blooms.

- Is it manageable? Try to split the difference between having too much information and not having enough.

Organizing Your Ideas

As a next step, organize the points you want to make in your report and the evidence to support them. Use an outline, a diagram, or another organizational tool, such as Table 8.4.

Drafting an Analytical Report

With a tentative thesis, an organizational plan, and evidence, you are ready to begin drafting. For this assignment, you will report information, analyze it, and draw conclusions about the cause of something, the effect of something, or the similarities and differences between two things.

Introduction

Some students write the introduction first; others save it for last. Whenever you choose to write the introduction, use it to draw readers into your report. Make the topic of your report clear, and be concise and sincere. End the introduction with your thesis statement. Depending on your topic and the type of report, you can write an effective introduction in several ways. Opening a report with an overview is a tried-and-true strategy, as shown in the following example on the U.S. response to COVID-19 by Trevor Garcia. Notice how he opens the introduction with statistics and a comparison and follows it with a question that leads to the thesis statement (the final sentence).

With more than 83 million cases and 1.8 million deaths at the end of 2020, COVID-19 has turned the world upside down. By the end of 2020, the United States led the world in the number of cases, at more than 20 million infections and nearly 350,000 deaths. In comparison, the second-highest number of cases was in India, which at the end of 2020 had less than half the number of COVID-19 cases despite having a population four times greater than the U.S. (“COVID-19 Coronavirus Pandemic,” 2021). How did the United States come to have the world’s worst record in this pandemic? An examination of the U.S. response shows that a reduction of experts in key positions and programs, inaction that led to equipment shortages, and inconsistent policies were three major causes of the spread of the virus and the resulting deaths.

For a less formal report, you might want to open with a question, quotation, or brief story. The following example opens with an anecdote that leads to the thesis statement (the final sentence).

Betty stood outside the salon, wondering how to get in. It was June of 2020, and the door was locked. A sign posted on the door provided a phone number for her to call to be let in, but at 81, Betty had lived her life without a cell phone. Betty’s day-to-day life had been hard during the pandemic, but she had planned for this haircut and was looking forward to it; she had a mask on and hand sanitizer in her car. Now she couldn’t get in the door, and she was discouraged. In that moment, Betty realized how much Americans’ dependence on cell phones had grown in the months since the pandemic began. Betty and thousands of other senior citizens who could not afford cell phones or did not have the technological skills and support they needed were being left behind in a society that was increasingly reliant on technology.

Body Paragraphs: Point, Evidence, Analysis

Use the body paragraphs of your report to present evidence that supports your thesis. A reliable pattern to keep in mind for developing the body paragraphs of a report is point, evidence, and analysis:

- The point is the central idea of the paragraph, usually given in a topic sentence stated in your own words at or toward the beginning of the paragraph. Each topic sentence should relate to the thesis.

- The evidence you provide develops the paragraph and supports the point made in the topic sentence. Include details, examples, quotations, paraphrases, and summaries from sources if you conducted formal research. Synthesize the evidence you include by showing in your sentences the connections between sources.

- The analysis comes at the end of the paragraph. In your own words, draw a conclusion about the evidence you have provided and how it relates to the topic sentence.

The paragraph below illustrates the point, evidence, and analysis pattern. Drawn from a report about concussions among football players, the paragraph opens with a topic sentence about the NCAA and NFL and their responses to studies about concussions. The paragraph is developed with evidence from three sources. It concludes with a statement about helmets and players’ safety.

The NCAA and NFL have taken steps forward and backward to respond to studies about the danger of concussions among players. Responding to the deaths of athletes, documented brain damage, lawsuits, and public outcry (Buckley et al., 2017), the NCAA instituted protocols to reduce potentially dangerous hits during football games and to diagnose traumatic head injuries more quickly and effectively. Still, it has allowed players to wear more than one style of helmet during a season, raising the risk of injury because of imperfect fit. At the professional level, the NFL developed a helmet-rating system in 2011 in an effort to reduce concussions, but it continued to allow players to wear helmets with a wide range of safety ratings. The NFL’s decision created an opportunity for researchers to look at the relationship between helmet safety ratings and concussions. Cocello et al. (2016) reported that players who wore helmets with a lower safety rating had more concussions than players who wore helmets with a higher safety rating, and they concluded that safer helmets are a key factor in reducing concussions.

Developing Paragraph Content

In the body paragraphs of your report, you will likely use examples, draw comparisons, show contrasts, or analyze causes and effects to develop your topic.

Paragraphs developed with examples are common in reports. The paragraph below, adapted from a report by student John Zwick on the mental health of soldiers deployed during wartime, draws examples from three sources.

Throughout the Vietnam War, military leaders claimed that the mental health of soldiers was stable and that men who suffered from combat fatigue, now known as PTSD, were getting the help they needed. For example, the New York Times (1966) quoted military leaders who claimed that mental fatigue among enlisted men had “virtually ceased to be a problem,” occurring at a rate far below that of World War II. Ayres (1969) reported that Brigadier General Spurgeon Neel, chief American medical officer in Vietnam, explained that soldiers experiencing combat fatigue were admitted to the psychiatric ward, sedated for up to 36 hours, and given a counseling session with a doctor who reassured them that the rest was well deserved and that they were ready to return to their units. Although experts outside the military saw profound damage to soldiers’ psyches when they returned home (Halloran, 1970), the military stayed the course, treating acute cases expediently and showing little concern for the cumulative effect of combat stress on individual soldiers.

When you analyze causes and effects, you explain the reasons that certain things happened and/or their results. The report by Trevor Garcia on the U.S. response to the COVID-19 pandemic in 2020 is an example: his report examines the reasons the United States failed to control the coronavirus. The paragraph below, adapted from another student’s report written for an environmental policy course, explains the effect of white settlers’ views of forest management on New England.

The early colonists’ European ideas about forest management dramatically changed the New England landscape. White settlers saw the New World as virgin, unused land, even though indigenous people had been drawing on its resources for generations by using fire subtly to improve hunting, employing construction techniques that left ancient trees intact, and farming small, efficient fields that left the surrounding landscape largely unaltered. White settlers’ desire to develop wood-built and wood-burning homesteads surrounded by large farm fields led to forestry practices and techniques that resulted in the removal of old-growth trees. These practices defined the way the forests look today.

Compare and contrast paragraphs are useful when you wish to examine similarities and differences. You can use both comparison and contrast in a single paragraph, or you can use one or the other. The paragraph below, adapted from a student report on the rise of populist politicians, compares the rhetorical styles of populist politicians Huey Long and Donald Trump.

A key similarity among populist politicians is their rejection of carefully crafted sound bites and erudite vocabulary typically associated with candidates for high office. Huey Long and Donald Trump are two examples. When he ran for president, Long captured attention through his wild gesticulations on almost every word, dramatically varying volume, and heavily accented, folksy expressions, such as “The only way to be able to feed the balance of the people is to make that man come back and bring back some of that grub that he ain’t got no business with!” In addition, Long’s down-home persona made him a credible voice to represent the common people against the country’s rich, and his buffoonish style allowed him to express his radical ideas without sounding anti-communist alarm bells. Similarly, Donald Trump chose to speak informally in his campaign appearances, but the persona he projected was that of a fast-talking, domineering salesman. His frequent use of personal anecdotes, rhetorical questions, brief asides, jokes, personal attacks, and false claims made his speeches disjointed, but they gave the feeling of a running conversation between him and his audience. For example, in a 2015 speech, Trump said, “They just built a hotel in Syria. Can you believe this? They built a hotel. When I have to build a hotel, I pay interest. They don’t have to pay interest, because they took the oil that, when we left Iraq, I said we should’ve taken” (“Our Country Needs” 2020). While very different in substance, Long and Trump adopted similar styles that positioned them as the antithesis of typical politicians and their worldviews.

Conclusion

The conclusion should draw the threads of your report together and make its significance clear to readers. You may wish to review the introduction, restate the thesis, recommend a course of action, point to the future, or use some combination of these. Whichever way you approach it, the conclusion should not head in a new direction. The following example is the conclusion from a student’s report on the effect of a book about environmental movements in the United States.

Since its publication in 1949, environmental activists of various movements have found wisdom and inspiration in Aldo Leopold’s A Sand County Almanac. These audiences included Leopold’s conservationist contemporaries, environmentalists of the 1960s and 1970s, and the environmental justice activists who rose in the 1980s and continue to make their voices heard today. These audiences have read the work differently: conservationists looked to the author as a leader, environmentalists applied his wisdom to their movement, and environmental justice advocates have pointed out the flaws in Leopold’s thinking. Even so, like those before them, environmental justice activists recognize the book’s value as a testament to taking the long view and eliminating biases that may cloud an objective assessment of humanity’s interdependent relationship with the environment.

Citing Sources

You must cite the sources of information and data included in your report. Citations must appear in both the text and a bibliography at the end of the report.

The sample paragraphs in the previous section include examples of in-text citation using APA documentation style. Trevor Garcia’s report on the U.S. response to COVID-19 in 2020 also uses APA documentation style for citations in the text of the report and the list of references at the end. Your instructor may require another documentation style, such as MLA or Chicago.

Peer Review: Getting Feedback from Readers

You will likely engage in peer review with other students in your class by sharing drafts and providing feedback to help spot strengths and weaknesses in your reports. For peer review within a class, your instructor may provide assignment-specific questions or a form for you to complete as you work together.

If you have a writing center on your campus, it is well worth your time to make an online or in-person appointment with a tutor. You’ll receive valuable feedback and improve your ability to review not only your report but your overall writing.

Another way to receive feedback on your report is to ask a friend or family member to read your draft. Provide a list of questions or a form such as the one in Table 8.5 for them to complete as they read.

Revising: Using Reviewers’ Responses to Revise Your Work

When you receive comments from readers, including your instructor, read each comment carefully to understand what is being asked. Try not to get defensive, even though this response is completely natural. Remember that readers are like coaches who want you to succeed. They are looking at your writing from outside your own head, and they can identify strengths and weaknesses that you may not have noticed. Keep track of the strengths and weaknesses your readers point out. Pay special attention to those that more than one reader identifies, and use this information to improve your report and later assignments.

As you analyze each response, be open to suggestions for improvement, and be willing to make significant revisions to improve your writing. Perhaps you need to revise your thesis statement to better reflect the content of your draft. Maybe you need to return to your sources to better understand a point you’re trying to make in order to develop a paragraph more fully. Perhaps you need to rethink the organization, move paragraphs around, and add transition sentences.

Below is an early draft of part of Trevor Garcia’s report with comments from a peer reviewer:

To truly understand what happened, it’s important first to look back to the years leading up to the pandemic. Epidemiologists and public health officials had long known that a global pandemic was possible. In 2016, the U.S. National Security Council (NSC) published a 69-page document with the intimidating title Playbook for Early Response to High-Consequence Emerging Infectious Disease Threats and Biological Incidents. The document’s two sections address responses to “emerging disease threats that start or are circulating in another country but not yet confirmed within U.S. territorial borders” and to “emerging disease threats within our nation’s borders.” On 13 January 2017, the joint Obama-Trump transition teams performed a pandemic preparedness exercise; however, the playbook was never adopted by the incoming administration.

Peer Review Comment: Do the words in quotation marks need to be a direct quotation? It seems like a paraphrase would work here.

Peer Review Comment: I’m getting lost in the details about the playbook. What’s the Obama-Trump transition team?

In February 2018, the administration began to cut funding for the Prevention and Public Health Fund at the Centers for Disease Control and Prevention; cuts to other health agencies continued throughout 2018, with funds diverted to unrelated projects such as housing for detained immigrant children.

Peer Review Comment: This paragraph has only one sentence, and it’s more like an example. It needs a topic sentence and more development.

Three months later, Luciana Borio, director of medical and biodefense preparedness at the NSC, spoke at a symposium marking the centennial of the 1918 influenza pandemic. “The threat of pandemic flu is the number one health security concern,” she said. “Are we ready to respond? I fear the answer is no.”

Peer Review Comment: This paragraph is very short and a lot like the previous paragraph in that it’s a single example. It needs a topic sentence. Maybe you can combine them?

Peer Review Comment: Be sure to cite the quotation.

Reading these comments and those of others, Trevor decided to combine the three short paragraphs into one paragraph focusing on the fact that the United States knew a pandemic was possible but was unprepared for it. He developed the paragraph, using the short paragraphs as evidence and connecting the sentences and evidence with transitional words and phrases. Finally, he added in-text citations in APA documentation style to credit his sources. The revised paragraph is below:

Epidemiologists and public health officials in the United States had long known that a global pandemic was possible. In 2016, the National Security Council (NSC) published Playbook for Early Response to High-Consequence Emerging Infectious Disease Threats and Biological Incidents, a 69-page document on responding to diseases spreading within and outside of the United States. On January 13, 2017, the joint transition teams of outgoing president Barack Obama and then president-elect Donald Trump performed a pandemic preparedness exercise based on the playbook; however, it was never adopted by the incoming administration (Goodman & Schulkin, 2020). A year later, in February 2018, the Trump administration began to cut funding for the Prevention and Public Health Fund at the Centers for Disease Control and Prevention, leaving key positions unfilled. Other individuals who were fired or resigned in 2018 were the homeland security adviser, whose portfolio included global pandemics; the director for medical and biodefense preparedness; and the top official in charge of a pandemic response. None of them were replaced, leaving the White House with no senior person who had experience in public health (Goodman & Schulkin, 2020). Experts voiced concerns, among them Luciana Borio, director of medical and biodefense preparedness at the NSC, who spoke at a symposium marking the centennial of the 1918 influenza pandemic in May 2018: “The threat of pandemic flu is the number one health security concern,” she said. “Are we ready to respond? I fear the answer is no” (Sun, 2018, final para.).

A final word on working with reviewers’ comments: as you consider your readers’ suggestions, remember, too, that you remain the author. You are free to disregard suggestions that you think will not improve your writing. If you choose to disregard comments from your instructor, consider submitting a note explaining your reasons with the final draft of your report.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/writing-guide/pages/1-unit-introduction

- Authors: Michelle Bachelor Robinson, Maria Jerskey, featuring Toby Fulwiler

- Publisher/website: OpenStax

- Book title: Writing Guide with Handbook

- Publication date: Dec 21, 2021

- Location: Houston, Texas

- Book URL: https://openstax.org/books/writing-guide/pages/1-unit-introduction

- Section URL: https://openstax.org/books/writing-guide/pages/8-5-writing-process-creating-an-analytical-report

© Dec 19, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Overview of Analytic Studies

Introduction

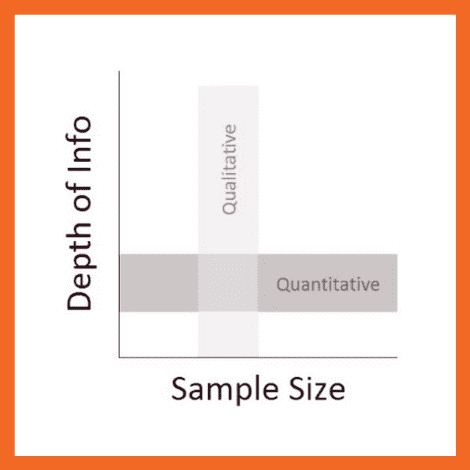

We search for the determinants of health outcomes, first, by relying on descriptive epidemiology to generate hypotheses about associations between exposures and outcomes. Analytic studies are then undertaken to test specific hypotheses. Samples of subjects are identified and information about exposure status and outcome is collected. The essence of an analytic study is that groups of subjects are compared in order to estimate the magnitude of association between exposures and outcomes.

In their book Epidemiology Matters, Katherine Keyes and Sandro Galea discuss three fundamental options for studying samples from a population.

Learning Objectives

After successfully completing this section, the student will be able to:

- Describe the difference between descriptive and scientific/analytic epidemiologic studies in terms of information/evidence provided for medicine and public health.

- Define and explain the distinguishing features of a cohort study.

- Describe and identify the types of epidemiologic questions that can be addressed by cohort studies.

- Define and distinguish among prospective and retrospective cohort studies using the investigator as the point of reference.

- Define and explain the distinguishing features of a case-control study.

- Explain the distinguishing features of an intervention study.

- Identify the study design when reading an article or abstract.

Cohort Type Studies

A cohort is a "group." In epidemiology a cohort is a group of individuals who are followed over a period of time, primarily to assess what happens to them, i.e., their health outcomes. In cohort type studies one identifies individuals who do not have the outcome of interest initially, and groups them in subsets that differ in their exposure to some factor, e.g., smokers and non-smokers. The different exposure groups are then followed over time in order to compare the incidence of health outcomes, such as lung cancer or heart disease. As an example, the Framingham Heart Study enrolled a cohort of 5,209 residents of Framingham, MA who were between the ages of 30 and 62 and who did not have cardiovascular disease when they were enrolled. These subjects differed from one another in many ways: whether they smoked, how much they smoked, body mass index, eating habits, exercise habits, gender, family history of heart disease, etc. The researchers assessed these and many other characteristics or "exposures" soon after the subjects had been enrolled and before any of them had developed cardiovascular disease. The many "baseline characteristics" were assessed in a number of ways including questionnaires, physical exams, laboratory tests, and imaging studies (e.g., x-rays). They then began "following" the cohort, meaning that they kept in contact with the subjects by phone, mail, or clinic visits in order to determine if and when any of the subjects developed any of the "outcomes of interest," such as myocardial infarction (heart attack), angina, congestive heart failure, stroke, diabetes and many other cardiovascular outcomes.

Over time some subjects eventually began to develop some of the outcomes of interest. Having followed the cohort in this fashion, it was eventually possible to use the information collected to evaluate many hypotheses about what characteristics were associated with an increased risk of heart disease. For example, if one hypothesized that smoking increased the risk of heart attacks, the subjects in the cohort could be sorted based on their smoking habits, and one could compare the subset of the cohort that smoked to the subset who had never smoked. For each such comparison that one wanted to make the cohort could be grouped according to whether they had a given exposure or not, and one could measure and compare the frequency of heart attacks (i.e., the incidence) between the groups. Incidence provides an estimate of risk, so if the incidence of heart attacks is 3 times greater in smokers compared to non-smokers, it suggests an association between smoking and risk of developing a heart attack. (Various biases might also be an explanation for an apparent association. We will learn about these later in the course.) The hallmark of analytical studies, then, is that they collect information about both exposure status and outcome status, and they compare groups to identify whether there appears to be an association or a link.

The Population "At Risk"

From the discussion above, it should be obvious that one of the basic requirements of a cohort type study is that none of the subjects have the outcome of interest at the beginning of the follow-up period, and time must pass in order to determine the frequency of developing the outcome.

- For example, if one wanted to compare the risk of developing uterine cancer between postmenopausal women receiving hormone-replacement therapy and those not receiving hormones, one would consider certain eligibility criteria for the members prior to the start of the study: 1) they should be female, 2) they should be post-menopausal, and 3) they should have a uterus. Among post-menopausal women there might be a number who had had a hysterectomy already, perhaps for persistent bleeding problems or endometriosis. Since these women no longer have a uterus, one would want to exclude them from the cohort, because they are no longer at risk of developing this particular type of cancer.

- Similarly, if one wanted to compare the risk of developing diabetes among nursing home residents who exercised and those who did not, it would be important to test the subjects for diabetes at the beginning of the follow-up period in order to exclude all subjects who already had diabetes and therefore were not "at risk" of developing diabetes.

Eligible subjects have to meet certain criteria to be included as subjects in a study (inclusion criteria). One of these would be that they did not have any of the diseases or conditions that the investigators want to study, i.e., the subjects must be "at risk," of developing the outcome of interest, and the members of the cohort to be followed are sometimes referred to as "the population at risk."

However, at times decisions about who is "at risk" and eligible get complicated.

Example #1: Suppose the outcome of interest is development of measles. There may be subjects who:

- Already were known to have had clinically apparent measles and are immune to subsequent measles infection

- Had sub-clinical cases of measles that went undetected (but the subject may still be immune)

- Had a measles vaccination that conferred immunity

- Had a measles vaccination that failed to confer immunity

In this case the eligibility criteria would be shaped by the specific scientific questions being asked. One might want to compare subjects known to have had clinically apparent measles to those who had not had clinical measles and had not had a measles vaccination. Or, one could take blood samples from all potential subjects in order to measure their antibody titers (levels) to the measles virus.

Example #2: Suppose you are studying an event that can occur more than once, such as a heart attack. Again, the eligibility criteria should be shaped to fit the scientific questions that are being answered. If one were interested in the risk of a first myocardial infarction, then obviously subjects who had already had a heart attack would not be eligible for study. On the other hand, if one were interested in tertiary prevention of heart attacks, the study cohort would include people who had had heart attacks or other clinical manifestations of heart disease, and the outcome of interest would be subsequent significant cardiac events or death.

Prospective and Retrospective Cohort Studies

Cohort studies can be classified as prospective or retrospective based on when outcomes occurred in relation to the enrollment of the cohort.

Prospective Cohort Studies

In a prospective study like the Nurses Health Study baseline information is collected from all subjects in the same way using exactly the same questions and data collection methods for all subjects. The investigators design the questions and data collection procedures carefully in order to obtain accurate information about exposures before disease develops in any of the subjects. After baseline information is collected, subjects in a prospective cohort study are then followed "longitudinally," i.e. over a period of time, usually for years, to determine if and when they become diseased and whether their exposure status changes. In this way, investigators can eventually use the data to answer many questions about the associations between "risk factors" and disease outcomes. For example, one could identify smokers and non-smokers at baseline and compare their subsequent incidence of developing heart disease. Alternatively, one could group subjects based on their body mass index (BMI) and compare their risk of developing heart disease or cancer.

Examples of Prospective Cohort Studies

- The Framingham Heart Study Home Page

- The Nurses Health Study Home Page

Pitfall: Note that in these prospective cohort studies a comparison of incidence between the groups can only take place after enough time has elapsed so that some subjects developed the outcomes of interest. Since the data analysis occurs after some outcomes have occurred, some students mistakenly would call this a retrospective study, but this is incorrect. The analysis always occurs after a certain number of events have taken place. The characteristic that distinguishes a study as prospective is that the subjects were enrolled, and baseline data was collected before any subjects developed an outcome of interest.

Retrospective Cohort Studies

In contrast, retrospective studies are conceived after some people have already developed the outcomes of interest. The investigators jump back in time to identify a cohort of individuals at a point in time before they have developed the outcomes of interest, and they try to establish their exposure status at that point in time. They then determine whether each subject subsequently developed the outcome of interest.

Suppose investigators wanted to test the hypothesis that working with the chemicals involved in tire manufacturing increases the risk of death. Since this is a fairly rare exposure, it would be advantageous to use a special exposure cohort such as employees of a large tire manufacturing factory. The employees who actually worked with chemicals used in the manufacturing process would be the exposed group, while clerical workers and management might constitute the "unexposed" group. However, rather than following these subjects for decades, it would be more efficient to use employee health and employment records over the past two or three decades as a source of data. In essence, the investigators are jumping back in time to identify the study cohort at a point in time before the outcome of interest (death) occurred. They can classify them as "exposed" or "unexposed" based on their employment records, and they can use a number of sources to determine subsequent outcome status, such as death (e.g., using health records, next of kin, National Death Index, etc.).

Retrospective cohort studies like the one described above are very efficient for studying rare or unusual exposures, but there are many potential problems here. Sometimes exposure status is not clear when it is necessary to go back in time and use whatever data is available, especially because the data being used was not designed to answer a health question. Even if it was clear who was exposed to tire manufacturing chemicals based on employee records, it would also be important to take into account (or adjust for) other differences that could have influenced mortality, i.e., confounding factors. For example, it might be important to know whether the subjects smoked, or drank, or what kind of diet they ate. However, it is unlikely that a retrospective cohort study would have accurate information on these many other risk factors.

Intervention Studies (Clinical Trials)

Intervention studies (clinical trials) are experimental research studies that compare the effectiveness of medical treatments, management strategies, prevention strategies, and other medical or public health interventions. Their design is very similar to that of a prospective cohort study. However, in cohort studies exposure status is determined by genetics, self-selection, or life circumstances, and the investigators just observe differences in outcome between those who have a given exposure and those who do not. In clinical trials exposure status (the treatment type) is assigned by the investigators. Ideally, assignment of subjects to one of the comparison groups should be done randomly in order to produce equal distributions of potentially confounding factors. Sometimes a group receiving a new treatment is compared to an untreated group or to a group receiving a placebo or a sham treatment; at other times, a new treatment is compared to an established treatment. For more on this topic see the module on Intervention Studies.

In summary, the characteristic that distinguishes a clinical trial from a cohort study is that the investigator assigns the exposure status in a clinical trial, while subjects' genetics, behaviors, and life circumstances determine their exposures in a cohort study.

Summarizing Data in a Cohort Study

Investigators often use contingency tables to summarize data. In essence, the table is a matrix that displays the combinations of exposure and outcome status. If one were summarizing the results of a study with two possible exposure categories and two possible outcomes, one would use a "two by two" table in which the numbers in the four cells indicate the number of subjects within each of the 4 possible categories of exposure and disease status.

For example, consider data from a retrospective cohort study conducted by the Massachusetts Department of Public Health (MDPH) during an investigation of an outbreak of Giardia lamblia in Milton, MA in 2003. The descriptive epidemiology indicated that almost all of the cases belonged to a country club in Milton. The club had an adult swimming pool and a wading pool for toddlers, and the investigators suspected that the outbreak may have occurred when an infected child with a dirty diaper contaminated the water in the kiddy pool. This hypothesis was tested by conducting a retrospective cohort study. The cases of Giardia lamblia had already occurred and had been reported to MDPH via the infectious disease surveillance system (for more information on surveillance, see the Surveillance module). The investigation focused on an obvious cohort: 479 members of the country club who agreed to answer the MDPH questionnaire. The questionnaire asked, among many other things, whether the subject had been exposed to the kiddy pool. The incidence of subsequent Giardia infection was then compared between subjects who had been exposed to the kiddy pool and those who had not.

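The table below summarizes the findings. A total of 479 subjects completed the questionnaire, and 124 of them indicated that they had been exposed to the kiddy pool. Of these, 16 subsequently developed Giardia infection, but 108 did not. Among the 355 subjects who denied kiddy pool exposure, 14 developed Giardia infection, and the other 341 did not.

| | Giardia infection | No Giardia infection | Total |
|---|---|---|---|
| Exposed to kiddy pool | 16 | 108 | 124 |
| Not exposed to kiddy pool | 14 | 341 | 355 |
| Total | 30 | 449 | 479 |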

Organizing the data this way makes it easier to compute the cumulative incidence in each group (16/124 = 12.9% and 14/355 = 3.9%, respectively). The incidence in each group provides an estimate of risk, and the groups can be compared in order to estimate the magnitude of association. (This will be addressed in much greater detail in the module on Measures of Association.) One way of quantifying the association is to calculate the relative risk (also called the risk ratio), i.e., dividing the incidence in the exposed group by the incidence in the unexposed group. In this case, the risk ratio is (12.9% / 3.9%) = 3.3. This suggests that subjects who were exposed to the kiddy pool had 3.3 times the risk of getting Giardia infections compared to those who were not, which is consistent with the kiddy pool being the source.
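The arithmetic above can be reproduced with a few lines of Python. This is only a minimal sketch: the counts come from the text, while the function and variable names are illustrative choices, not part of the MDPH investigation.

```python
# Counts reported in the text: kiddy pool exposure vs. subsequent Giardia infection.
exposed_cases, exposed_total = 16, 124
unexposed_cases, unexposed_total = 14, 355


def cumulative_incidence(cases, total):
    """Proportion of an initially disease-free group that developed the outcome."""
    return cases / total


ci_exposed = cumulative_incidence(exposed_cases, exposed_total)
ci_unexposed = cumulative_incidence(unexposed_cases, unexposed_total)

# Risk ratio (relative risk): incidence in the exposed / incidence in the unexposed.
risk_ratio = ci_exposed / ci_unexposed

print(f"Incidence, exposed:   {ci_exposed:.1%}")    # 12.9%
print(f"Incidence, unexposed: {ci_unexposed:.1%}")  # 3.9%
print(f"Risk ratio:           {risk_ratio:.1f}")    # 3.3
```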

Unanswered Questions

If the kiddy pool was the source of contamination responsible for this outbreak, why was it that:

- Only 16 people exposed to the kiddy pool developed the infection?

- There were 14 Giardia cases among people who denied exposure to the kiddy pool?

Think about these questions and try to come up with a possible explanation.

Possible Pitfall: Contingency tables can be oriented in several ways, and this can cause confusion when calculating measures of association.

There is no standard rule about how to set up contingency tables, and you will see them set up in different ways.

- With exposure status in rows and outcome status in columns

- With exposure status in columns and outcome status in rows

- With exposed group first followed by non-exposed group

- With non-exposed group first followed by exposed group

If you aren't careful, these different orientations can result in errors in calculating measures of association. One way to avoid confusion is to always set up your contingency tables in the same way. For example, in these learning modules the contingency tables almost always indicate outcome status in columns listing subjects who have the outcome of interest to the left of subjects who do not have the outcome, and exposure status of the exposed (or most exposed) group is listed in a row above those who are unexposed (or have less exposure).

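The table below illustrates this arrangement.

| | Outcome present | Outcome absent | Total |
|---|---|---|---|
| Exposed (or most exposed) | | | |
| Unexposed (or less exposed) | | | |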
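To see how orientation can lead to a wrong answer, the sketch below applies the same risk ratio formula to the Giardia counts given earlier, once in the arrangement described above and once with rows and columns swapped. The helper `risk_ratio` and its `exposure_in_rows` flag are hypothetical names used only for illustration.

```python
def risk_ratio(table, exposure_in_rows=True):
    """Risk ratio from a 2x2 table of counts.

    Expected layout when exposure_in_rows is True:
        [[exposed with outcome,   exposed without outcome],
         [unexposed with outcome, unexposed without outcome]]
    If exposure status is in the columns instead, the table is transposed first.
    """
    if not exposure_in_rows:
        table = [list(column) for column in zip(*table)]
    (a, b), (c, d) = table
    return (a / (a + b)) / (c / (c + d))


# Giardia data: exposure in rows, outcome in columns.
standard = [[16, 108],
            [14, 341]]

# The same data with exposure in columns and outcome in rows.
flipped = [[16, 14],
           [108, 341]]

rr_standard = risk_ratio(standard)
rr_flipped_declared = risk_ratio(flipped, exposure_in_rows=False)
rr_flipped_misread = risk_ratio(flipped)  # orientation ignored: wrong answer

print(f"{rr_standard:.2f} {rr_flipped_declared:.2f} {rr_flipped_misread:.2f}")
```

Declaring the orientation recovers the same risk ratio from either layout, whereas reading the flipped table as if it were in the standard layout gives a different, incorrect value.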

Case-Control Studies

Cohort studies have an intuitive logic to them, but they can be very problematic when:

- The outcomes being investigated are rare;

- There is a long time period between the exposure of interest and the development of the disease; or

- It is expensive or very difficult to obtain exposure information from a cohort.

In the first case, the rarity of the disease requires enrollment of very large numbers of people. In the second case, the long period of follow-up requires efforts to keep contact with and collect outcome information from individuals. In all three situations, cost and feasibility become an important concern.

A case-control design offers an alternative that is much more efficient. The goal of a case-control study is the same as that of cohort studies, i.e. to estimate the magnitude of association between an exposure and an outcome. However, case-control studies employ a different sampling strategy that gives them greater efficiency. As with a cohort study, a case-control study attempts to identify all people who have developed the disease of interest in the defined population. This is not because they are inherently more important to estimating an association, but because they are almost always rarer than non-diseased individuals, and one of the requirements of accurate estimation of the association is that there are reasonable numbers of people in both the numerators (cases) and denominators (people or person-time) in the measures of disease frequency for both exposed and reference groups. However, because most of the denominator is made up of people who do not develop disease, the case-control design avoids the need to collect information on the entire population by selecting a sample of the underlying population.

Rothman describes the case-control strategy as follows:

To illustrate this consider the following hypothetical scenario in which the source population is Plymouth County in Massachusetts, which has a total population of 6,647 (hypothetical). Thirteen people in the county have been diagnosed with an unusual disease and seven of them have a particular exposure that is suspected of being an important contributing factor. The chief problem here is that the disease is quite rare.

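If I somehow had exposure and outcome information on all of the subjects in the source population and looked at the association using a cohort design, it might look like this:

| | Diseased | Non-diseased | Total |
|---|---|---|---|
| Exposed | 7 | 1,000 | 1,007 |
| Non-exposed | 6 | 5,634 | 5,640 |
| Total | 13 | 6,634 | 6,647 |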

Therefore, the incidence in the exposed individuals would be 7/1,007 = 0.70%, and the incidence in the non-exposed individuals would be 6/5,640 = 0.11%. Consequently, the risk ratio would be (7/1,007) / (6/5,640) ≈ 6.5 (using the unrounded incidences), suggesting that those who had the risk factor (exposure) had about 6.5 times the risk of getting the disease compared to those without the risk factor. This is a strong association.

In this hypothetical example, I had data on all 6,647 people in the source population, and I could compute the probability of disease (i.e., the risk or incidence) in both the exposed group and the non-exposed group, because I had the denominators for both the exposed and non-exposed groups.

The problem, of course, is that I usually don't have the resources to get the data on all subjects in the population. If I took a random sample of even 5-10% of the population, I might not have any diseased people in my sample.

An alternative approach would be to use surveillance databases or administrative databases to find most or all 13 of the cases in the source population and determine their exposure status. However, instead of enrolling all of the other 6,634 residents, suppose I were to just take a sample of the non-diseased population. In fact, suppose I only took a sample of 1% of the non-diseased people and I then determined their exposure status. The data might look something like this:

| | Diseased | Sample of non-diseased | Total |
|---|---|---|---|
| Exposed | 7 | 10 | unknown |
| Non-exposed | 6 | 56 | unknown |

With this sampling approach I can no longer compute the probability of disease in each exposure group, because I no longer have the denominators in the last column. In other words, I don't know the exposure distribution for the entire source population. However, the small control sample of non-diseased subjects gives me a way to estimate the exposure distribution in the source population. So, I can't compute the probability of disease in each exposure group, but I can compute the odds of disease in the case-control sample.

The Odds Ratio

The odds of disease among the exposed sample are 7/10, and the odds of disease in the non-exposed sample are 6/56. If I compute the odds ratio, I get (7/10) / (6/56) ≈ 6.5, very close to the risk ratio that I computed from data for the entire population. We will consider odds ratios and case-control studies in much greater depth in a later module. However, for the time being the key things to remember are that:

- The sampling strategy for a case-control study is very different from that of cohort studies, despite the fact that both have the goal of estimating the magnitude of association between the exposure and the outcome.

- In a case-control study there is no "follow-up" period. One starts by identifying diseased subjects and determines their exposure distribution; one then takes a sample of the source population that produced those cases in order to estimate the exposure distribution in the overall source population that produced the cases. [In cohort studies none of the subjects have the outcome at the beginning of the follow-up period.]

- In a case-control study, you cannot measure incidence, because you start with diseased people and non-diseased people, so you cannot calculate relative risk.

- The case-control design is very efficient. In the example above the case-control study of only 79 subjects produced an odds ratio (about 6.5) that was a very close approximation to the risk ratio obtained from the data in the entire population.

- Case-control studies are particularly useful when the outcome is rare, i.e., uncommon in both exposed and non-exposed people.
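As a rough check on the hypothetical Plymouth County example, the sketch below computes the risk ratio from the full source population and the odds ratio from the case-control sample, using only the counts stated in the text. The variable names are illustrative assumptions.

```python
# Full (hypothetical) source population: cases and totals by exposure status.
exposed_cases, exposed_total = 7, 1007
unexposed_cases, unexposed_total = 6, 5640

# Cohort-style analysis: ratio of the two incidences (risks).
risk_ratio = (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)

# Case-control sample: all 13 cases plus a 1% sample of the non-diseased people.
exposed_controls, unexposed_controls = 10, 56

# Odds of disease within each exposure group of the sample, then their ratio.
odds_exposed = exposed_cases / exposed_controls
odds_unexposed = unexposed_cases / unexposed_controls
odds_ratio = odds_exposed / odds_unexposed

n_subjects = exposed_cases + unexposed_cases + exposed_controls + unexposed_controls

print(f"Risk ratio (6,647 people): {risk_ratio:.2f}")
print(f"Odds ratio ({n_subjects} subjects): {odds_ratio:.2f}")
```

With unrounded inputs both measures come out at roughly 6.5, which illustrates why a small, well-chosen control sample can stand in for the whole source population when the disease is rare.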

The Difference Between "Probability" and "Odds"?

- The odds are defined as the probability that the event will occur divided by the probability that the event will not occur.

If the probability of an event occurring is Y, then the probability of the event not occurring is 1-Y. (Example: If the probability of an event is 0.80 (80%), then the probability that the event will not occur is 1-0.80 = 0.20, or 20%.)

The odds of an event represent the ratio of the (probability that the event will occur) / (probability that the event will not occur). This could be expressed as follows:

Odds of event = Y / (1-Y)

So, in this example, if the probability of the event occurring = 0.80, then the odds are 0.80 / (1-0.80) = 0.80/0.20 = 4 (i.e., 4 to 1).

- If a race horse runs 100 races and wins 25 times and loses the other 75 times, the probability of winning is 25/100 = 0.25 or 25%, but the odds of the horse winning are 25/75 = 0.333 or 1 win to 3 losses.

- If the horse runs 100 races and wins 5 and loses the other 95 times, the probability of winning is 0.05 or 5%, and the odds of the horse winning are 5/95 = 0.0526.

- If the horse runs 100 races and wins 50, the probability of winning is 50/100 = 0.50 or 50%, and the odds of winning are 50/50 = 1 (even odds).

- If the horse runs 100 races and wins 80, the probability of winning is 80/100 = 0.80 or 80%, and the odds of winning are 80/20 = 4 to 1.

NOTE that when the probability is low, the odds and the probability are very similar.
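The race horse examples can be tabulated with a short Python sketch of the formula Odds = Y / (1-Y). The function name `odds` is an illustrative choice; the win counts are the ones used in the examples above.

```python
def odds(probability):
    """Convert a probability Y (0 <= Y < 1) into odds, Y / (1 - Y)."""
    if not 0 <= probability < 1:
        raise ValueError("probability must be at least 0 and less than 1")
    return probability / (1 - probability)


# Wins out of 100 races, as in the race horse examples.
for wins in (5, 25, 50, 80):
    p = wins / 100
    print(f"probability = {p:.2f}   odds = {odds(p):.4f}")
```

The first row shows the point made in the note: at a probability of 0.05 the odds are about 0.0526, nearly the same number, while at 0.80 the odds are 4 and the two measures diverge sharply.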

On Sept. 8, 2011 the New York Times ran an article on the economy in which the writer began by saying "If history is a guide, the odds that the American economy is falling into a double-dip recession have risen sharply in recent weeks and may even have reached 50 percent." Further down in the article the author quoted the economist who had been interviewed for the story. What the economist had actually said was, "Whether we reach the technical definition [of a double-dip recession] I think is probably close to 50-50."

Question: Was the author correct in saying that the "odds" of a double-dip recession may have reached 50 percent?

Which Study Design Is Best?

Decisions regarding which study design to use rest on a number of factors including:

- Uncommon Outcome: If the outcome of interest is uncommon or rare, a case-control study would usually be best.

- Uncommon Exposure: When studying an uncommon exposure, the investigators need to enroll an adequate number of subjects who have that exposure. In this situation a cohort study is best.

- Ethics of Assigning Subjects to an Exposure: If you wanted to study the association between smoking and lung cancer, it wouldn't be ethical to conduct a clinical trial in which you randomly assigned half of the subjects to smoking.

- Resources: If you have limited time, money, and personnel to gather data, it is unlikely that you will be able to conduct a prospective cohort study. A case-control study or a retrospective cohort study would be better options. The best one to choose would be dictated by whether the outcome was rare or the exposure of interest was rare.

There are some situations in which more than one study design could be used.

Smoking and Lung Cancer: For example, when investigators first sought to establish whether there was a link between smoking and lung cancer, they did a study by finding hospital subjects who had lung cancer and a comparison group of hospital patients who had diseases other than cancer. They then compared the prior exposure histories with respect to smoking and many other factors. They found that past smoking was much more common in the lung cancer cases, and they concluded that there was an association. The advantages to this approach were that they were able to collect the data they wanted relatively quickly and inexpensively, because they started with people who already had the disease of interest.

However, there were several limitations to the study they had done. The study design did not allow them to measure the incidence of lung cancer in smokers and non-smokers, so they couldn't measure the absolute risk of smoking. They also didn't know what other diseases smoking might be associated with, and, finally, they were concerned about some of the biases that can creep into this type of study.

As a result, these investigators then initiated another study. They invited all of the male physicians in the United Kingdom to fill out questionnaires regarding their health status and their smoking status. They then focused on the healthy physicians who were willing to participate, and the investigators mailed follow-up questionnaires to them every few years. They also had a way of finding out the cause of death for any subjects who became ill and died. The study continued for about 50 years. Along the way the investigators periodically compared the incidence of death among non-smoking physicians and physicians who smoked small, moderate or heavy amounts of tobacco.

These studies were useful, because they were able to demonstrate that smokers had an increased risk of over 20 different causes of death. They were also able to measure the incidence of death in different categories, so they knew the absolute risk for each cause of death. Of course, the downside to this approach was that it took a long time, and it was very costly. So, both a case-control study and a prospective cohort study provided useful information about the association between smoking and lung cancer and other diseases, but there were distinct advantages and limitations to each approach.

Hepatitis Outbreak in Marshfield, MA

In 2004 there was an outbreak of hepatitis A on the South Shore of Massachusetts. Over a period of a few weeks there were 20 cases of hepatitis A that were reported to the MDPH, and most of the infected persons were residents of Marshfield, MA. Marshfield's health department requested help in identifying the source from MDPH. The investigators quickly performed descriptive epidemiology. The epidemic curve indicated a point source epidemic, and most of the cases lived in the Marshfield area, although some lived as far away as Boston. They conducted hypothesis-generating interviews, and taken together, the descriptive epidemiology suggested that the source was one of five or six food establishments in the Marshfield area, but it wasn't clear which one. Consequently, the investigators wanted to conduct an analytic study to determine which restaurant was the source. Which study design should have been conducted? Think about the scenario and decide which design you would choose.


Case-control studies are particularly efficient for rare diseases because they begin by identifying a sufficient number of diseased people (or people who have some "outcome" of interest) to enable you to do an analysis that tests associations. Case-control studies can be done in just about any circumstance, but they are particularly useful when you are dealing with rare diseases or diseases for which there is a very long latent period, i.e., a long time between the causative exposure and the eventual development of disease.


Analytical studies: a framework for quality improvement design and analysis

Conducting studies for learning is fundamental to improvement. Deming emphasised that the reason for conducting a study is to provide a basis for action on the system of interest. He classified studies into two types depending on the intended target for action. An enumerative study is one in which action will be taken on the universe that was studied. An analytical study is one in which action will be taken on a cause system to improve the future performance of the system of interest. The aim of an enumerative study is estimation, while an analytical study focuses on prediction. Because of the temporal nature of improvement, the theory and methods for analytical studies are a critical component of the science of improvement.

Introduction: enumerative and analytical studies

Designing studies that make it possible to learn from experience and take action to improve future performance is an essential element of quality improvement. These studies use the now traditional theory established through the work of Fisher, 1 Cox, 2 Campbell and Stanley, 3 and others that is widely used in biomedical research. These designs are used to discover new phenomena that lead to hypothesis generation, and to explore causal mechanisms, 4 as well as to evaluate efficacy and effectiveness. They include observational, retrospective, prospective, pre-experimental, quasiexperimental, blocking, factorial and time-series designs.

In addition to these classifications of studies, Deming 5 defined a distinction between analytical and enumerative studies which has proven to be fundamental to the science of improvement. Deming based his insight on the distinction between these two approaches that Walter Shewhart had made in 1939 as he helped develop measurement strategies for the then-emerging science of ‘quality control.’ 6 The difference between the two concepts lies in the extrapolation of the results that is intended, and in the target for action based on the inferences that are drawn.

A useful way to appreciate that difference is to contrast the inferences that can be made about the water sampled from two different natural sources (figure 1). The enumerative approach is like the study of water from a pond. Because conditions in the bounded universe of the pond are essentially static over time, analyses of random samples taken from the pond at a given time can be used to estimate the makeup of the entire pond. Statistical methods, such as hypothesis testing and confidence intervals (CIs), can be used to make decisions and define the precision of the estimates.

Figure 1. Environment in enumerative and analytical study. Internal validity diagram from Fletcher et al. 7

The analytical approach, in contrast, is like the study of water from a river. The river is constantly moving, and its physical properties are changing (eg, due to snow melt, changes in rainfall, dumping of pollutants). The properties of water in a sample from the river at any given time may not describe the river after the samples are taken and analysed. In fact, without repeated sampling over time, it is difficult to make predictions about water quality, since the river will not be the same river in the future as it was at the time of the sampling.

Deming first discussed these concepts in a 1942 paper, 8 as well as in his 1950 textbook, 9 and in a 1975 paper used the enumerative/analytical terminology to characterise specific study designs. 5 While most books on experimental design describe methods for the design and analysis of enumerative studies, Moen et al 10 describe methods for designing and learning from analytical studies. These methods are graphical and focus on prediction of future performance. The concept of analytical studies became a key element in Deming's ‘system of profound knowledge’ that serves as the intellectual foundation for improvement science. 11 The knowledge framework for the science of improvement, which combines elements of psychology, the Shewhart view of variation, the concept of systems, and the theory of knowledge, informs a number of key principles for the design and analysis of improvement studies:

- Knowledge about improvement begins and ends in experimental data but does not end in the data in which it begins.

- Observations, by themselves, do not constitute knowledge.

- Prediction requires theory regarding mechanisms of change and understanding of context.

- Random sampling from a population or universe (assumed by most statistical methods) is not possible when the population of interest is in the future.

- The conditions during studies for improvement will be different from the conditions under which the results will be used. The major source of uncertainty concerning their use is the difficulty of extrapolating study results to different contexts and under different conditions in the future.

- The wider the range of conditions included in an improvement study, the greater the degree of belief in the validity and generalisation of the conclusions.

The classification of studies into enumerative and analytical categories depends on the intended target for action as the result of the study:

- Enumerative studies assume that when actions are taken as the result of a study, they will be taken on the material in the study population or ‘frame’ that was sampled.

More specifically, the study universe in an enumerative study is the bounded group of items (eg, patients, clinics, providers, etc) possessing certain properties of interest. The universe is defined by a frame, a list of identifiable, tangible units that may be sampled and studied. Random selection methods are assumed in the statistical methods used for estimation, decision-making and drawing inferences in enumerative studies. Their aim is estimation about some aspect of the frame (such as a description, comparison or the existence of a cause–effect relationship) and the resulting actions taken on this particular frame. One feature of an enumerative study is that a 100% sample of the frame provides the complete answer to the questions posed by the study (given the methods of investigation and measurement). Statistical methods such as hypothesis tests, CIs and probability statements are appropriate to analyse and report data from enumerative studies. Estimating the infection rate in an intensive care unit for the last month is an example of a simple enumerative study.

- Analytical studies assume that the actions taken as a result of the study will be on the process or causal system that produced the frame studied, rather than the initial frame itself. The aim is to improve future performance.

In contrast to enumerative studies, an analytical study accepts as a given that when actions are taken on a system based on the results of a study, the conditions in that system will inevitably have changed. The aim of an analytical study is to enable prediction about how a change in a system will affect that system's future performance, or prediction as to which plans or strategies for future action on the system will be superior. For example, the task may be to choose among several different treatments for future patients, methods of collecting information or procedures for cleaning an operating room. Because the population of interest is open and continually shifts over time, random samples from that population cannot be obtained in analytical studies, and traditional statistical methods are therefore not useful. Rather, graphical methods of analysis and summary of the repeated samples reveal the trajectory of system behaviour over time, making it possible to predict future behaviour. Use of a Shewhart control chart to monitor and create learning to reduce infection rates in an intensive care unit is an example of a simple analytical study.

The following scenarios give examples to clarify the nature of these two types of studies.

Scenario 1: enumerative study—observation

To estimate how many days new patients must wait for an appointment with the primary care physicians contracted with a health plan, a researcher selected a random sample of 150 such physicians from the current active list and called each of their offices to schedule an appointment. The time to the next available appointment ranged from 0 to 180 days, with a mean of 38 days (95% CI 35.6 to 39.6).

This is an enumerative study, since results are intended to be used to estimate the waiting time for appointments with the plan's current population of primary care physicians.

Scenario 2: enumerative study—hypothesis generation

The researcher in scenario 1 noted that on occasion, she was offered an earlier visit with a nurse practitioner (NP) who worked with the physician being called. Additional information revealed that 20 of the 150 physicians in the study worked with one or more NPs. The next available appointment for the 130 physicians without an NP averaged 41 days (95% CI 39 to 43 days) and was 18 days (95% CI 18 to 26 days) for the 20 practices with NPs, a difference of 23 days (a 56% shorter mean waiting time).
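The difference and the percentage quoted above follow directly from the two subgroup means. A minimal check, using only the figures reported in the scenario (the variable names are illustrative):

```python
# Subgroup means reported in scenario 2.
mean_wait_without_np = 41  # days, 130 physicians without an NP
mean_wait_with_np = 18     # days, 20 practices with an NP

difference = mean_wait_without_np - mean_wait_with_np
relative_reduction = difference / mean_wait_without_np

print(f"difference: {difference} days")
print(f"relative reduction: {relative_reduction:.0%}")
```

The percentage is expressed relative to the mean for practices without an NP, which is why 23 days corresponds to a 56% shorter wait.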

This subgroup analysis suggested that the involvement of NPs helps to shorten waiting times, although it does not establish a cause–effect relationship; that is, it was a ‘hypothesis-generating’ study. In any event, this was clearly an enumerative study, since its results were intended to help understand the impact of NPs on waiting times in the particular population of practices. Its results suggested that NPs might influence waiting times, but only for practices in this health plan during the time of the study. The study treated the conditions in the health plan as static, like those in a pond.

Scenario 3: enumerative study—comparison

To find out if administrative changes in a health plan had increased member satisfaction in access to care, the customer service manager replicated a phone survey he had conducted a year previously, using a random sample of 300 members. The percentage of patients who were satisfied with access had increased from 48.7% to 60.7% (Fisher exact test, p<0.004).

This enumerative comparison study was used to estimate the impact of the improvement work during the last year on the members in the plan. Attributing the increase in satisfaction to the improvement work assumes that other conditions in the study frame were static.
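For readers who want to see how a Fisher exact test of this kind is computed, the following is a minimal sketch using only the Python standard library. It rests on assumptions not stated in the scenario: that both surveys had 300 respondents, and that the counts of satisfied members were 146 and 182, back-calculated from the reported 48.7% and 60.7%. The function name is illustrative, and the two-sided rule used here (summing the probabilities of all tables no more likely than the observed one) is one common convention.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2
    denominator = comb(total, col1)

    def prob(k):
        # Probability that k of the col1 first-column counts fall in row 1.
        return comb(row1, k) * comb(row2, col1 - k) / denominator

    observed = prob(a)
    k_min = max(0, col1 - row2)
    k_max = min(row1, col1)
    return sum(
        p
        for p in (prob(k) for k in range(k_min, k_max + 1))
        if p <= observed * (1 + 1e-9)  # small tolerance for float ties
    )


# ASSUMED counts: 300 respondents per survey, back-calculated from
# the reported percentages (146/300 = 48.7%, 182/300 = 60.7%).
satisfied_before, unsatisfied_before = 146, 154
satisfied_after, unsatisfied_after = 182, 118

p_value = fisher_exact_two_sided(
    satisfied_before, unsatisfied_before, satisfied_after, unsatisfied_after
)
print(f"two-sided p-value: {p_value:.4f}")
```

A small p-value only says that a difference this large would be unlikely if satisfaction had not changed in the frame; as the text notes, attributing it to the improvement work still requires assuming that other conditions were static.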

Scenario 4: analytical study—learning with a Shewhart chart

Each primary care clinic in a health plan reported its ‘time until the third available appointment’ twice a month, which allowed the quality manager to plot the mean waiting time for all of the clinics on Shewhart charts. Waiting times had been stable for a 12-month period through August, but the manager then noted a special cause (increase in waiting time) in September. On stratifying the data by region, she found that the special cause resulted from increases in waiting time in the Northeast region. Discussion with the regional manager revealed a shortage of primary care physicians in this region, which was predicted to become worse in the next quarter. Making some temporary assignments and increasing physician recruiting efforts resulted in stabilisation of this measure.

Documenting common and special cause variation in measures of interest through the use of Shewhart charts and run charts based on judgement samples is probably the simplest and commonest type of analytical study in healthcare. Such charts, when stable, provide a rational basis for predicting future performance.
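The logic of scenario 4 can be sketched in a few lines. The example below is a simplification under stated assumptions: it uses an individuals (XmR) chart rather than whatever chart type the quality manager actually used, the waiting times are hypothetical, and it applies only the most basic rule for a special cause (a point outside the control limits). The limits use the conventional constant 2.66 multiplied by the mean moving range.

```python
from statistics import mean


def individuals_chart_limits(baseline):
    """Return (centre, lower, upper) for an individuals (XmR) chart.

    Limits are centre +/- 2.66 * mean moving range, the conventional
    constant for moving ranges of two consecutive points.
    """
    centre = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    spread = 2.66 * mean(moving_ranges)
    return centre, centre - spread, centre + spread


# HYPOTHETICAL mean waiting times in days, invented for illustration.
baseline = [20, 22, 21, 19, 20, 23, 21, 20, 22, 19, 21, 20]  # stable period
new_points = [21, 29]                                        # later reports

centre, lower, upper = individuals_chart_limits(baseline)

# Basic special-cause rule: any new point outside the control limits.
special_causes = [x for x in new_points if x < lower or x > upper]

print(f"centre line: {centre:.2f}")
print(f"control limits: {lower:.2f} to {upper:.2f}")
print(f"special causes: {special_causes}")
```

Computing the limits from the stable baseline and then judging new points against them mirrors the prediction-oriented use of the chart: the stable period defines what the system is expected to produce, and a point outside the limits signals that something in the system has changed.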

Scenario 5: analytical study—establishing a cause–effect relationship

The researcher mentioned in scenarios 1 and 2 planned a study to test the existence of a cause–effect relationship between the inclusion of NPs in primary care offices and waiting time for new patient appointments. The variation in patient characteristics in this health plan appeared to be great enough to make the study results useful to other organisations. For the study, she recruited about 100 of the plan's practices that currently did not use NPs, and obtained funding to facilitate hiring NPs in up to 50 of those practices.