- Privacy Policy

Home » Variables in Research – Definition, Types and Examples

Variables in Research – Definition, Types and Examples

Table of Contents

Variables in Research

Definition:

In Research, Variables refer to characteristics or attributes that can be measured, manipulated, or controlled. They are the factors that researchers observe or manipulate to understand the relationship between them and the outcomes of interest.

Types of Variables in Research

Types of Variables in Research are as follows:

Independent Variable

This is the variable that is manipulated by the researcher. It is also known as the predictor variable, as it is used to predict changes in the dependent variable. Examples of independent variables include age, gender, dosage, and treatment type.

Dependent Variable

This is the variable that is measured or observed to determine the effects of the independent variable. It is also known as the outcome variable, as it is the variable that is affected by the independent variable. Examples of dependent variables include blood pressure, test scores, and reaction time.

Confounding Variable

This is a variable that can affect the relationship between the independent variable and the dependent variable. It is a variable that is not being studied but could impact the results of the study. For example, in a study on the effects of a new drug on a disease, a confounding variable could be the patient’s age, as older patients may have more severe symptoms.

Mediating Variable

This is a variable that explains the relationship between the independent variable and the dependent variable. It is a variable that comes in between the independent and dependent variables and is affected by the independent variable, which then affects the dependent variable. For example, in a study on the relationship between exercise and weight loss, the mediating variable could be metabolism, as exercise can increase metabolism, which can then lead to weight loss.

Moderator Variable

This is a variable that affects the strength or direction of the relationship between the independent variable and the dependent variable. It is a variable that influences the effect of the independent variable on the dependent variable. For example, in a study on the effects of caffeine on cognitive performance, the moderator variable could be age, as older adults may be more sensitive to the effects of caffeine than younger adults.

Control Variable

This is a variable that is held constant or controlled by the researcher to ensure that it does not affect the relationship between the independent variable and the dependent variable. Control variables are important to ensure that any observed effects are due to the independent variable and not to other factors. For example, in a study on the effects of a new teaching method on student performance, the control variables could include class size, teacher experience, and student demographics.

Continuous Variable

This is a variable that can take on any value within a certain range. Continuous variables can be measured on a scale and are often used in statistical analyses. Examples of continuous variables include height, weight, and temperature.

Categorical Variable

This is a variable that can take on a limited number of values or categories. Categorical variables can be nominal or ordinal. Nominal variables have no inherent order, while ordinal variables have a natural order. Examples of categorical variables include gender, race, and educational level.

Discrete Variable

This is a variable that can only take on specific values. Discrete variables are often used in counting or frequency analyses. Examples of discrete variables include the number of siblings a person has, the number of times a person exercises in a week, and the number of students in a classroom.

Dummy Variable

This is a variable that takes on only two values, typically 0 and 1, and is used to represent categorical variables in statistical analyses. Dummy variables are often used when a categorical variable cannot be used directly in an analysis. For example, in a study on the effects of gender on income, a dummy variable could be created, with 0 representing female and 1 representing male.

Extraneous Variable

This is a variable that has no relationship with the independent or dependent variable but can affect the outcome of the study. Extraneous variables can lead to erroneous conclusions and can be controlled through random assignment or statistical techniques.

Latent Variable

This is a variable that cannot be directly observed or measured, but is inferred from other variables. Latent variables are often used in psychological or social research to represent constructs such as personality traits, attitudes, or beliefs.

Moderator-mediator Variable

This is a variable that acts both as a moderator and a mediator. It can moderate the relationship between the independent and dependent variables and also mediate the relationship between the independent and dependent variables. Moderator-mediator variables are often used in complex statistical analyses.

Variables Analysis Methods

There are different methods to analyze variables in research, including:

- Descriptive statistics: This involves analyzing and summarizing data using measures such as mean, median, mode, range, standard deviation, and frequency distribution. Descriptive statistics are useful for understanding the basic characteristics of a data set.

- Inferential statistics : This involves making inferences about a population based on sample data. Inferential statistics use techniques such as hypothesis testing, confidence intervals, and regression analysis to draw conclusions from data.

- Correlation analysis: This involves examining the relationship between two or more variables. Correlation analysis can determine the strength and direction of the relationship between variables, and can be used to make predictions about future outcomes.

- Regression analysis: This involves examining the relationship between an independent variable and a dependent variable. Regression analysis can be used to predict the value of the dependent variable based on the value of the independent variable, and can also determine the significance of the relationship between the two variables.

- Factor analysis: This involves identifying patterns and relationships among a large number of variables. Factor analysis can be used to reduce the complexity of a data set and identify underlying factors or dimensions.

- Cluster analysis: This involves grouping data into clusters based on similarities between variables. Cluster analysis can be used to identify patterns or segments within a data set, and can be useful for market segmentation or customer profiling.

- Multivariate analysis : This involves analyzing multiple variables simultaneously. Multivariate analysis can be used to understand complex relationships between variables, and can be useful in fields such as social science, finance, and marketing.

Examples of Variables

- Age : This is a continuous variable that represents the age of an individual in years.

- Gender : This is a categorical variable that represents the biological sex of an individual and can take on values such as male and female.

- Education level: This is a categorical variable that represents the level of education completed by an individual and can take on values such as high school, college, and graduate school.

- Income : This is a continuous variable that represents the amount of money earned by an individual in a year.

- Weight : This is a continuous variable that represents the weight of an individual in kilograms or pounds.

- Ethnicity : This is a categorical variable that represents the ethnic background of an individual and can take on values such as Hispanic, African American, and Asian.

- Time spent on social media : This is a continuous variable that represents the amount of time an individual spends on social media in minutes or hours per day.

- Marital status: This is a categorical variable that represents the marital status of an individual and can take on values such as married, divorced, and single.

- Blood pressure : This is a continuous variable that represents the force of blood against the walls of arteries in millimeters of mercury.

- Job satisfaction : This is a continuous variable that represents an individual’s level of satisfaction with their job and can be measured using a Likert scale.

Applications of Variables

Variables are used in many different applications across various fields. Here are some examples:

- Scientific research: Variables are used in scientific research to understand the relationships between different factors and to make predictions about future outcomes. For example, scientists may study the effects of different variables on plant growth or the impact of environmental factors on animal behavior.

- Business and marketing: Variables are used in business and marketing to understand customer behavior and to make decisions about product development and marketing strategies. For example, businesses may study variables such as consumer preferences, spending habits, and market trends to identify opportunities for growth.

- Healthcare : Variables are used in healthcare to monitor patient health and to make treatment decisions. For example, doctors may use variables such as blood pressure, heart rate, and cholesterol levels to diagnose and treat cardiovascular disease.

- Education : Variables are used in education to measure student performance and to evaluate the effectiveness of teaching strategies. For example, teachers may use variables such as test scores, attendance, and class participation to assess student learning.

- Social sciences : Variables are used in social sciences to study human behavior and to understand the factors that influence social interactions. For example, sociologists may study variables such as income, education level, and family structure to examine patterns of social inequality.

Purpose of Variables

Variables serve several purposes in research, including:

- To provide a way of measuring and quantifying concepts: Variables help researchers measure and quantify abstract concepts such as attitudes, behaviors, and perceptions. By assigning numerical values to these concepts, researchers can analyze and compare data to draw meaningful conclusions.

- To help explain relationships between different factors: Variables help researchers identify and explain relationships between different factors. By analyzing how changes in one variable affect another variable, researchers can gain insight into the complex interplay between different factors.

- To make predictions about future outcomes : Variables help researchers make predictions about future outcomes based on past observations. By analyzing patterns and relationships between different variables, researchers can make informed predictions about how different factors may affect future outcomes.

- To test hypotheses: Variables help researchers test hypotheses and theories. By collecting and analyzing data on different variables, researchers can test whether their predictions are accurate and whether their hypotheses are supported by the evidence.

Characteristics of Variables

Characteristics of Variables are as follows:

- Measurement : Variables can be measured using different scales, such as nominal, ordinal, interval, or ratio scales. The scale used to measure a variable can affect the type of statistical analysis that can be applied.

- Range : Variables have a range of values that they can take on. The range can be finite, such as the number of students in a class, or infinite, such as the range of possible values for a continuous variable like temperature.

- Variability : Variables can have different levels of variability, which refers to the degree to which the values of the variable differ from each other. Highly variable variables have a wide range of values, while low variability variables have values that are more similar to each other.

- Validity and reliability : Variables should be both valid and reliable to ensure accurate and consistent measurement. Validity refers to the extent to which a variable measures what it is intended to measure, while reliability refers to the consistency of the measurement over time.

- Directionality: Some variables have directionality, meaning that the relationship between the variables is not symmetrical. For example, in a study of the relationship between smoking and lung cancer, smoking is the independent variable and lung cancer is the dependent variable.

Advantages of Variables

Here are some of the advantages of using variables in research:

- Control : Variables allow researchers to control the effects of external factors that could influence the outcome of the study. By manipulating and controlling variables, researchers can isolate the effects of specific factors and measure their impact on the outcome.

- Replicability : Variables make it possible for other researchers to replicate the study and test its findings. By defining and measuring variables consistently, other researchers can conduct similar studies to validate the original findings.

- Accuracy : Variables make it possible to measure phenomena accurately and objectively. By defining and measuring variables precisely, researchers can reduce bias and increase the accuracy of their findings.

- Generalizability : Variables allow researchers to generalize their findings to larger populations. By selecting variables that are representative of the population, researchers can draw conclusions that are applicable to a broader range of individuals.

- Clarity : Variables help researchers to communicate their findings more clearly and effectively. By defining and categorizing variables, researchers can organize and present their findings in a way that is easily understandable to others.

Disadvantages of Variables

Here are some of the main disadvantages of using variables in research:

- Simplification : Variables may oversimplify the complexity of real-world phenomena. By breaking down a phenomenon into variables, researchers may lose important information and context, which can affect the accuracy and generalizability of their findings.

- Measurement error : Variables rely on accurate and precise measurement, and measurement error can affect the reliability and validity of research findings. The use of subjective or poorly defined variables can also introduce measurement error into the study.

- Confounding variables : Confounding variables are factors that are not measured but that affect the relationship between the variables of interest. If confounding variables are not accounted for, they can distort or obscure the relationship between the variables of interest.

- Limited scope: Variables are defined by the researcher, and the scope of the study is therefore limited by the researcher’s choice of variables. This can lead to a narrow focus that overlooks important aspects of the phenomenon being studied.

- Ethical concerns: The selection and measurement of variables may raise ethical concerns, especially in studies involving human subjects. For example, using variables that are related to sensitive topics, such as race or sexuality, may raise concerns about privacy and discrimination.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Quantitative Variable – Definition, Types and...

Control Variable – Definition, Types and Examples

Ratio Variable – Definition, Purpose and Examples

Dichotomous Variable – Definition Types and...

Confounding Variable – Definition, Method and...

Interval Variable – Definition, Purpose and...

- USC Libraries

- Research Guides

Organizing Your Social Sciences Research Paper

- Independent and Dependent Variables

- Purpose of Guide

- Design Flaws to Avoid

- Glossary of Research Terms

- Reading Research Effectively

- Narrowing a Topic Idea

- Broadening a Topic Idea

- Extending the Timeliness of a Topic Idea

- Academic Writing Style

- Applying Critical Thinking

- Choosing a Title

- Making an Outline

- Paragraph Development

- Research Process Video Series

- Executive Summary

- The C.A.R.S. Model

- Background Information

- The Research Problem/Question

- Theoretical Framework

- Citation Tracking

- Content Alert Services

- Evaluating Sources

- Primary Sources

- Secondary Sources

- Tiertiary Sources

- Scholarly vs. Popular Publications

- Qualitative Methods

- Quantitative Methods

- Insiderness

- Using Non-Textual Elements

- Limitations of the Study

- Common Grammar Mistakes

- Writing Concisely

- Avoiding Plagiarism

- Footnotes or Endnotes?

- Further Readings

- Generative AI and Writing

- USC Libraries Tutorials and Other Guides

- Bibliography

Definitions

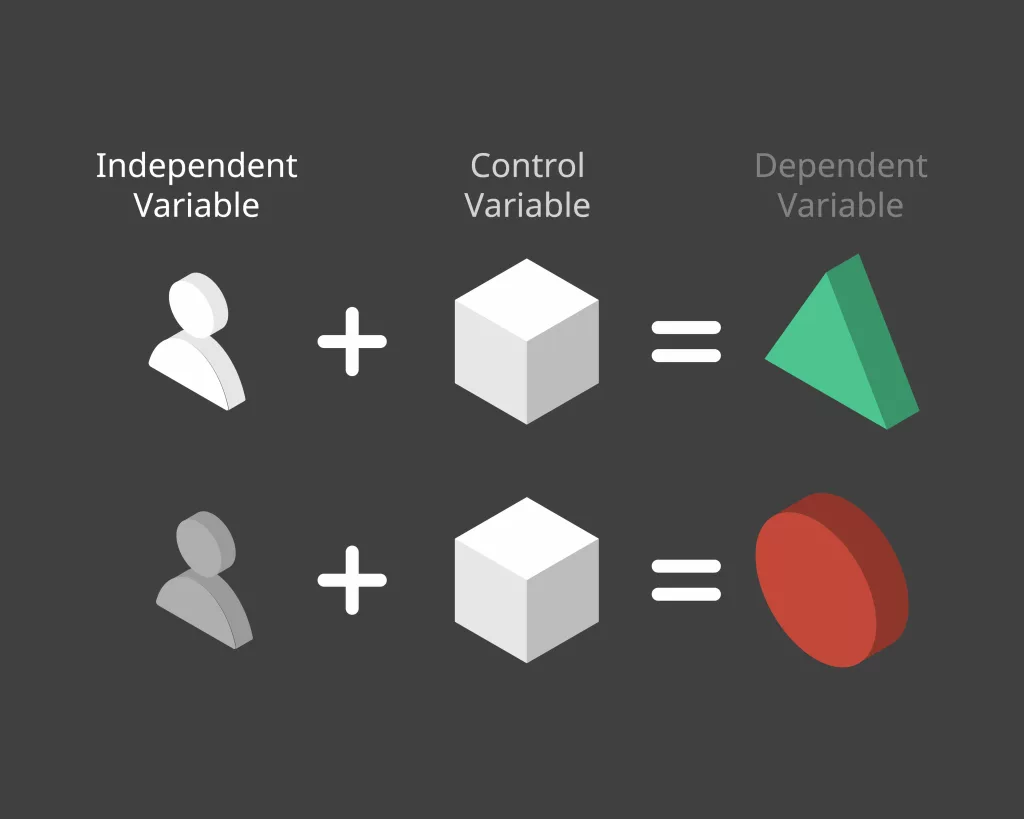

Dependent Variable The variable that depends on other factors that are measured. These variables are expected to change as a result of an experimental manipulation of the independent variable or variables. It is the presumed effect.

Independent Variable The variable that is stable and unaffected by the other variables you are trying to measure. It refers to the condition of an experiment that is systematically manipulated by the investigator. It is the presumed cause.

Cramer, Duncan and Dennis Howitt. The SAGE Dictionary of Statistics . London: SAGE, 2004; Penslar, Robin Levin and Joan P. Porter. Institutional Review Board Guidebook: Introduction . Washington, DC: United States Department of Health and Human Services, 2010; "What are Dependent and Independent Variables?" Graphic Tutorial.

Identifying Dependent and Independent Variables

Don't feel bad if you are confused about what is the dependent variable and what is the independent variable in social and behavioral sciences research . However, it's important that you learn the difference because framing a study using these variables is a common approach to organizing the elements of a social sciences research study in order to discover relevant and meaningful results. Specifically, it is important for these two reasons:

- You need to understand and be able to evaluate their application in other people's research.

- You need to apply them correctly in your own research.

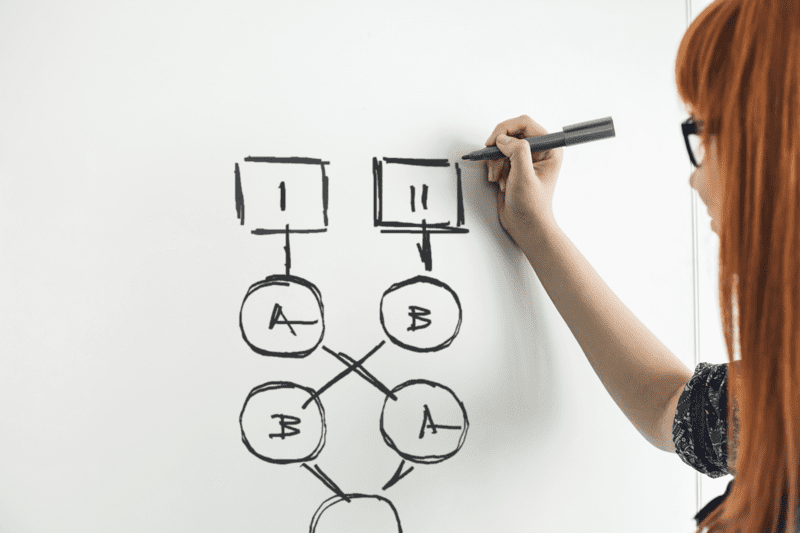

A variable in research simply refers to a person, place, thing, or phenomenon that you are trying to measure in some way. The best way to understand the difference between a dependent and independent variable is that the meaning of each is implied by what the words tell us about the variable you are using. You can do this with a simple exercise from the website, Graphic Tutorial. Take the sentence, "The [independent variable] causes a change in [dependent variable] and it is not possible that [dependent variable] could cause a change in [independent variable]." Insert the names of variables you are using in the sentence in the way that makes the most sense. This will help you identify each type of variable. If you're still not sure, consult with your professor before you begin to write.

Fan, Shihe. "Independent Variable." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 592-594; "What are Dependent and Independent Variables?" Graphic Tutorial; Salkind, Neil J. "Dependent Variable." In Encyclopedia of Research Design , Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 348-349;

Structure and Writing Style

The process of examining a research problem in the social and behavioral sciences is often framed around methods of analysis that compare, contrast, correlate, average, or integrate relationships between or among variables . Techniques include associations, sampling, random selection, and blind selection. Designation of the dependent and independent variable involves unpacking the research problem in a way that identifies a general cause and effect and classifying these variables as either independent or dependent.

The variables should be outlined in the introduction of your paper and explained in more detail in the methods section . There are no rules about the structure and style for writing about independent or dependent variables but, as with any academic writing, clarity and being succinct is most important.

After you have described the research problem and its significance in relation to prior research, explain why you have chosen to examine the problem using a method of analysis that investigates the relationships between or among independent and dependent variables . State what it is about the research problem that lends itself to this type of analysis. For example, if you are investigating the relationship between corporate environmental sustainability efforts [the independent variable] and dependent variables associated with measuring employee satisfaction at work using a survey instrument, you would first identify each variable and then provide background information about the variables. What is meant by "environmental sustainability"? Are you looking at a particular company [e.g., General Motors] or are you investigating an industry [e.g., the meat packing industry]? Why is employee satisfaction in the workplace important? How does a company make their employees aware of sustainability efforts and why would a company even care that its employees know about these efforts?

Identify each variable for the reader and define each . In the introduction, this information can be presented in a paragraph or two when you describe how you are going to study the research problem. In the methods section, you build on the literature review of prior studies about the research problem to describe in detail background about each variable, breaking each down for measurement and analysis. For example, what activities do you examine that reflect a company's commitment to environmental sustainability? Levels of employee satisfaction can be measured by a survey that asks about things like volunteerism or a desire to stay at the company for a long time.

The structure and writing style of describing the variables and their application to analyzing the research problem should be stated and unpacked in such a way that the reader obtains a clear understanding of the relationships between the variables and why they are important. This is also important so that the study can be replicated in the future using the same variables but applied in a different way.

Fan, Shihe. "Independent Variable." In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 592-594; "What are Dependent and Independent Variables?" Graphic Tutorial; “Case Example for Independent and Dependent Variables.” ORI Curriculum Examples. U.S. Department of Health and Human Services, Office of Research Integrity; Salkind, Neil J. "Dependent Variable." In Encyclopedia of Research Design , Neil J. Salkind, editor. (Thousand Oaks, CA: SAGE, 2010), pp. 348-349; “Independent Variables and Dependent Variables.” Karl L. Wuensch, Department of Psychology, East Carolina University [posted email exchange]; “Variables.” Elements of Research. Dr. Camille Nebeker, San Diego State University.

- << Previous: Design Flaws to Avoid

- Next: Glossary of Research Terms >>

- Last Updated: Jun 18, 2024 10:45 AM

- URL: https://libguides.usc.edu/writingguide

- What is New

- Download Your Software

- Behavioral Research

- Software for Consumer Research

- Software for Human Factors R&D

- Request Live Demo

- Contact Sales

Sensor Hardware

We carry a range of biosensors from the top hardware producers. All compatible with iMotions

iMotions for Higher Education

Imotions for business.

What is the Observer Effect?

Morten Pedersen

Neuroaesthetics: Decoding the Brain’s Love for Art and Beauty

Consumer Insights

News & Events

- iMotions Lab

- iMotions Online

- Eye Tracking

- Eye Tracking Screen Based

- Eye Tracking VR

- Eye Tracking Glasses

- Eye Tracking Webcam

- FEA (Facial Expression Analysis)

- Voice Analysis

- EDA/GSR (Electrodermal Activity)

- EEG (Electroencephalography)

- ECG (Electrocardiography)

- EMG (Electromyography)

- Respiration

- iMotions Lab: New features

- iMotions Lab: Developers

- EEG sensors

- Sensory and Perceptual

- Consumer Inights

- Human Factors R&D

- Work Environments, Training and Safety

- Customer Stories

- Published Research Papers

- Document Library

- Customer Support Program

- Help Center

- Release Notes

- Contact Support

- Partnerships

- Mission Statement

- Ownership and Structure

- Executive Management

- Job Opportunities

Publications

- Newsletter Sign Up

Roles of Independent and Dependent Variables in Research

Explore the essential roles of independent and dependent variables in research. This guide delves into their definitions, significance in experiments, and their critical relationship. Learn how these variables are the foundation of research design, influencing hypothesis testing, theory development, and statistical analysis, empowering researchers to understand and predict outcomes of research studies.

Table of Contents

Introduction.

At the very base of scientific inquiry and research design , variables act as the fundamental steps, guiding the rhythm and direction of research. This is particularly true in human behavior research, where the quest to understand the complexities of human actions and reactions hinges on the meticulous manipulation and observation of these variables. At the heart of this endeavor lie two different types of variables, namely: independent and dependent variables, whose roles and interplay are critical in scientific discovery.

Understanding the distinction between independent and dependent variables is not merely an academic exercise; it is essential for anyone venturing into the field of research. This article aims to demystify these concepts, offering clarity on their definitions, roles, and the nuances of their relationship in the study of human behavior, and in science generally. We will cover hypothesis testing and theory development, illuminating how these variables serve as the cornerstone of experimental design and statistical analysis.

The significance of grasping the difference between independent and dependent variables extends beyond the confines of academia. It empowers researchers to design robust studies, enables critical evaluation of research findings, and fosters an appreciation for the complexity of human behavior research. As we delve into this exploration, our objective is clear: to equip readers with a deep understanding of these fundamental concepts, enhancing their ability to contribute to the ever-evolving field of human behavior research.

Chapter 1: The Role of Independent Variables in Human Behavior Research

In the realm of human behavior research, independent variables are the keystones around which studies are designed and hypotheses are tested. Independent variables are the factors or conditions that researchers manipulate or observe to examine their effects on dependent variables, which typically reflect aspects of human behavior or psychological phenomena. Understanding the role of independent variables is crucial for designing robust research methodologies, ensuring the reliability and validity of findings.

Defining Independent Variables

Independent variables are those variables that are changed or controlled in a scientific experiment to test the effects on dependent variables. In studies focusing on human behavior, these can range from psychological interventions (e.g., cognitive-behavioral therapy), environmental adjustments (e.g., noise levels, lighting, smells, etc), to societal factors (e.g., social media use). For example, in an experiment investigating the impact of sleep on cognitive performance, the amount of sleep participants receive is the independent variable.

Selection and Manipulation

Selecting an independent variable requires careful consideration of the research question and the theoretical framework guiding the study. Researchers must ensure that their chosen variable can be effectively, and consistently manipulated or measured and is ethically and practically feasible, particularly when dealing with human subjects.

Manipulating an independent variable involves creating different conditions (e.g., treatment vs. control groups) to observe how changes in the variable affect outcomes. For instance, researchers studying the effect of educational interventions on learning outcomes might vary the type of instructional material (digital vs. traditional) to assess differences in student performance.

Challenges in Human Behavior Research

Manipulating independent variables in human behavior research presents unique challenges. Ethical considerations are paramount, as interventions must not harm participants. For example, studies involving vulnerable populations or sensitive topics require rigorous ethical oversight to ensure that the manipulation of independent variables does not result in adverse effects.

Practical limitations also come into play, such as controlling for extraneous variables that could influence the outcomes. In the aforementioned example of sleep and cognitive performance, factors like caffeine consumption or stress levels could confound the results. Researchers employ various methodological strategies, such as random assignment and controlled environments, to mitigate these influences.

Chapter 2: Dependent Variables: Measuring Human Behavior

The dependent variable in human behavior research acts as a mirror, reflecting the outcomes or effects resulting from variations in the independent variable. It is the aspect of human experience or behavior that researchers aim to understand, predict, or change through their studies. This section explores how dependent variables are measured, the significance of their accurate measurement, and the inherent challenges in capturing the complexities of human behavior.

Defining Dependent Variables

Dependent variables are the responses or outcomes that researchers measure in an experiment, expecting them to vary as a direct result of changes in the independent variable. In the context of human behavior research, dependent variables could include measures of emotional well-being, cognitive performance, social interactions, or any other aspect of human behavior influenced by the experimental manipulation. For instance, in a study examining the effect of exercise on stress levels, stress level would be the dependent variable, measured through various psychological assessments or physiological markers.

Measurement Methods and Tools

Measuring dependent variables in human behavior research involves a diverse array of methodologies, ranging from self-reported questionnaires and interviews to physiological measurements and behavioral observations. The choice of measurement tool depends on the nature of the dependent variable and the objectives of the study.

- Self-reported Measures: Often used for assessing psychological states or subjective experiences, such as anxiety, satisfaction, or mood. These measures rely on participants’ introspection and honesty, posing challenges in terms of accuracy and bias.

- Behavioral Observations: Involve the direct observation and recording of participants’ behavior in natural or controlled settings. This method is used for behaviors that can be externally observed and quantified, such as social interactions or task performance.

- Physiological Measurements: Include the use of technology to measure physical responses that indicate psychological states, such as heart rate, cortisol levels, or brain activity. These measures can provide objective data about the physiological aspects of human behavior.

Reliability and Validity

The reliability and validity of the measurement of dependent variables are critical to the integrity of human behavior research.

- Reliability refers to the consistency of a measure; a reliable tool yields similar results under consistent conditions.

- Validity pertains to the accuracy of the measure; a valid tool accurately reflects the concept it aims to measure.

Ensuring reliability and validity often involves the use of established measurement instruments with proven track records, pilot testing new instruments, and applying rigorous statistical analyses to evaluate measurement properties.

Challenges in Measuring Human Behavior

Measuring human behavior presents challenges due to its complexity and the influence of multiple, often interrelated, variables. Researchers must contend with issues such as participant bias, environmental influences, and the subjective nature of many psychological constructs. Additionally, the dynamic nature of human behavior means that it can change over time, necessitating careful consideration of when and how measurements are taken.

Section 3: Relationship between Independent and Dependent Variables

Understanding the relationship between independent and dependent variables is at the core of research in human behavior. This relationship is what researchers aim to elucidate, whether they seek to explain, predict, or influence human actions and psychological states. This section explores the nature of this relationship, the means by which it is analyzed, and common misconceptions that may arise.

The Nature of the Relationship

The relationship between independent and dependent variables can manifest in various forms—direct, indirect, linear, nonlinear, and may be moderated or mediated by other variables. At its most basic, this relationship is often conceptualized as cause and effect: the independent variable (the cause) influences the dependent variable (the effect). For instance, increased physical activity (independent variable) may lead to decreased stress levels (dependent variable).

Analyzing the Relationship

Statistical analyses play a pivotal role in examining the relationship between independent and dependent variables. Techniques vary depending on the nature of the variables and the research design, ranging from simple correlation and regression analyses for quantifying the strength and form of relationships, to complex multivariate analyses for exploring relationships among multiple variables simultaneously.

- Correlation Analysis : Used to determine the degree to which two variables are related. However, it’s crucial to note that correlation does not imply causation.

- Regression Analysis : Goes a step further by not only assessing the strength of the relationship but also predicting the value of the dependent variable based on the independent variable.

- Experimental Design : Provides a more robust framework for inferring causality, where manipulation of the independent variable and control of confounding factors allow researchers to directly observe the impact on the dependent variable.

Causality vs. Correlation

A fundamental consideration in human behavior research is the distinction between causality and correlation. Causality implies that changes in the independent variable cause changes in the dependent variable. Correlation, on the other hand, indicates that two variables are related but does not establish a cause-effect relationship. Confounding variables may influence both, creating the appearance of a direct relationship where none exists. Understanding this distinction is crucial for accurate interpretation of research findings.

Common Misinterpretations

The complexity of human behavior and the myriad factors that influence it often lead to challenges in interpreting the relationship between independent and dependent variables. Researchers must be wary of:

- Overestimating the strength of causal relationships based on correlational data.

- Ignoring potential confounding variables that may influence the observed relationship.

- Assuming the directionality of the relationship without adequate evidence.

This exploration highlights the importance of understanding independent and dependent variables in human behavior research. Independent variables act as the initiating factors in experiments, influencing the observed behaviors, while dependent variables reflect the results of these influences, providing insights into human emotions and actions.

Ethical and practical challenges arise, especially in experiments involving human participants, necessitating careful consideration to respect participants’ well-being. The measurement of these variables is critical for testing theories and validating hypotheses, with their relationship offering potential insights into causality and correlation within human behavior.

Rigorous statistical analysis and cautious interpretation of findings are essential to avoid misconceptions. Overall, the study of these variables is fundamental to advancing human behavior research, guiding researchers towards deeper understanding and potential interventions to improve the human condition.

Free 44-page Experimental Design Guide

For Beginners and Intermediates

- Introduction to experimental methods

- Respondent management with groups and populations

- How to set up stimulus selection and arrangement

Last edited

About the author

See what is next in human behavior research

Follow our newsletter to get the latest insights and events send to your inbox.

Related Posts

The Impact of Gaze Tracking Technology: Applications and Benefits

The Ultimatum Game

The Stag Hunt (Game Theory)

Unlocking the Potential of VR Eye Trackers: How They Work and Their Applications

You might also like these.

Exploring Mobile Eye Trackers: How Eye Tracking Glasses Work and Their Applications

Understanding Screen-Based Eye Trackers: How They Work and Their Applications

Product guides.

Case Stories

Explore Blog Categories

Best Practice

Collaboration, product news, research fundamentals, research insights, 🍪 use of cookies.

We are committed to protecting your privacy and only use cookies to improve the user experience.

Chose which third-party services that you will allow to drop cookies. You can always change your cookie settings via the Cookie Settings link in the footer of the website. For more information read our Privacy Policy.

- gtag This tag is from Google and is used to associate user actions with Google Ad campaigns to measure their effectiveness. Enabling this will load the gtag and allow for the website to share information with Google. This service is essential and can not be disabled.

- Livechat Livechat provides you with direct access to the experts in our office. The service tracks visitors to the website but does not store any information unless consent is given. This service is essential and can not be disabled.

- Pardot Collects information such as the IP address, browser type, and referring URL. This information is used to create reports on website traffic and track the effectiveness of marketing campaigns.

- Third-party iFrames Allows you to see thirdparty iFrames.

Variables in Research | Types, Definiton & Examples

Introduction

What is a variable, what are the 5 types of variables in research, other variables in research.

Variables are fundamental components of research that allow for the measurement and analysis of data. They can be defined as characteristics or properties that can take on different values. In research design , understanding the types of variables and their roles is crucial for developing hypotheses , designing methods , and interpreting results .

This article outlines the the types of variables in research, including their definitions and examples, to provide a clear understanding of their use and significance in research studies. By categorizing variables into distinct groups based on their roles in research, their types of data, and their relationships with other variables, researchers can more effectively structure their studies and achieve more accurate conclusions.

A variable represents any characteristic, number, or quantity that can be measured or quantified. The term encompasses anything that can vary or change, ranging from simple concepts like age and height to more complex ones like satisfaction levels or economic status. Variables are essential in research as they are the foundational elements that researchers manipulate, measure, or control to gain insights into relationships, causes, and effects within their studies. They enable the framing of research questions, the formulation of hypotheses, and the interpretation of results.

Variables can be categorized based on their role in the study (such as independent and dependent variables ), the type of data they represent (quantitative or categorical), and their relationship to other variables (like confounding or control variables). Understanding what constitutes a variable and the various variable types available is a critical step in designing robust and meaningful research.

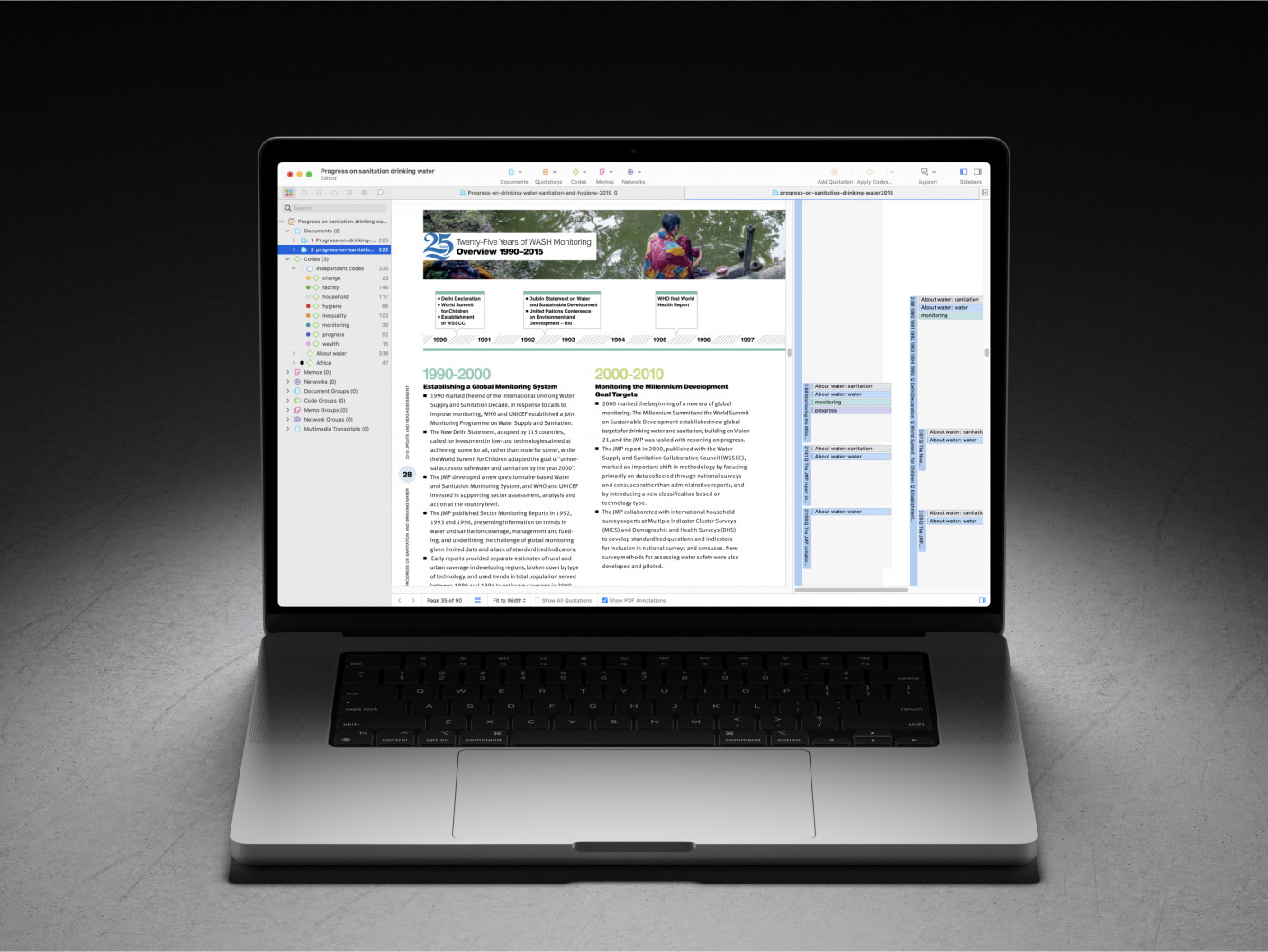

ATLAS.ti makes complex data easy to understand

Turn to our powerful data analysis tools to make the most of your research. Get started with a free trial.

Variables are crucial components in research, serving as the foundation for data collection , analysis , and interpretation . They are attributes or characteristics that can vary among subjects or over time, and understanding their types is essential for any study. Variables can be broadly classified into five main types, each with its distinct characteristics and roles within research.

This classification helps researchers in designing their studies, choosing appropriate measurement techniques, and analyzing their results accurately. The five types of variables include independent variables, dependent variables, categorical variables, continuous variables, and confounding variables. These categories not only facilitate a clearer understanding of the data but also guide the formulation of hypotheses and research methodologies.

Independent variables

Independent variables are foundational to the structure of research, serving as the factors or conditions that researchers manipulate or vary to observe their effects on dependent variables. These variables are considered "independent" because their variation does not depend on other variables within the study. Instead, they are the cause or stimulus that directly influences the outcomes being measured. For example, in an experiment to assess the effectiveness of a new teaching method on student performance, the teaching method applied (traditional vs. innovative) would be the independent variable.

The selection of an independent variable is a critical step in research design, as it directly correlates with the study's objective to determine causality or association. Researchers must clearly define and control these variables to ensure that observed changes in the dependent variable can be attributed to variations in the independent variable, thereby affirming the reliability of the results. In experimental research, the independent variable is what differentiates the control group from the experimental group, thereby setting the stage for meaningful comparison and analysis.

Dependent variables

Dependent variables are the outcomes or effects that researchers aim to explore and understand in their studies. These variables are called "dependent" because their values depend on the changes or variations of the independent variables.

Essentially, they are the responses or results that are measured to assess the impact of the independent variable's manipulation. For instance, in a study investigating the effect of exercise on weight loss, the amount of weight lost would be considered the dependent variable, as it depends on the exercise regimen (the independent variable).

The identification and measurement of the dependent variable are crucial for testing the hypothesis and drawing conclusions from the research. It allows researchers to quantify the effect of the independent variable , providing evidence for causal relationships or associations. In experimental settings, the dependent variable is what is being tested and measured across different groups or conditions, enabling researchers to assess the efficacy or impact of the independent variable's variation.

To ensure accuracy and reliability, the dependent variable must be defined clearly and measured consistently across all participants or observations. This consistency helps in reducing measurement errors and increases the validity of the research findings. By carefully analyzing the dependent variables, researchers can derive meaningful insights from their studies, contributing to the broader knowledge in their field.

Categorical variables

Categorical variables, also known as qualitative variables, represent types or categories that are used to group observations. These variables divide data into distinct groups or categories that lack a numerical value but hold significant meaning in research. Examples of categorical variables include gender (male, female, other), type of vehicle (car, truck, motorcycle), or marital status (single, married, divorced). These categories help researchers organize data into groups for comparison and analysis.

Categorical variables can be further classified into two subtypes: nominal and ordinal. Nominal variables are categories without any inherent order or ranking among them, such as blood type or ethnicity. Ordinal variables, on the other hand, imply a sort of ranking or order among the categories, like levels of satisfaction (high, medium, low) or education level (high school, bachelor's, master's, doctorate).

Understanding and identifying categorical variables is crucial in research as it influences the choice of statistical analysis methods. Since these variables represent categories without numerical significance, researchers employ specific statistical tests designed for a nominal or ordinal variable to draw meaningful conclusions. Properly classifying and analyzing categorical variables allow for the exploration of relationships between different groups within the study, shedding light on patterns and trends that might not be evident with numerical data alone.

Continuous variables

Continuous variables are quantitative variables that can take an infinite number of values within a given range. These variables are measured along a continuum and can represent very precise measurements. Examples of continuous variables include height, weight, temperature, and time. Because they can assume any value within a range, continuous variables allow for detailed analysis and a high degree of accuracy in research findings.

The ability to measure continuous variables at very fine scales makes them invaluable for many types of research, particularly in the natural and social sciences. For instance, in a study examining the effect of temperature on plant growth, temperature would be considered a continuous variable since it can vary across a wide spectrum and be measured to several decimal places.

When dealing with continuous variables, researchers often use methods incorporating a particular statistical test to accommodate a wide range of data points and the potential for infinite divisibility. This includes various forms of regression analysis, correlation, and other techniques suited for modeling and analyzing nuanced relationships between variables. The precision of continuous variables enhances the researcher's ability to detect patterns, trends, and causal relationships within the data, contributing to more robust and detailed conclusions.

Confounding variables

Confounding variables are those that can cause a false association between the independent and dependent variables, potentially leading to incorrect conclusions about the relationship being studied. These are extraneous variables that were not considered in the study design but can influence both the supposed cause and effect, creating a misleading correlation.

Identifying and controlling for a confounding variable is crucial in research to ensure the validity of the findings. This can be achieved through various methods, including randomization, stratification, and statistical control. Randomization helps to evenly distribute confounding variables across study groups, reducing their potential impact. Stratification involves analyzing the data within strata or layers that share common characteristics of the confounder. Statistical control allows researchers to adjust for the effects of confounders in the analysis phase.

Properly addressing confounding variables strengthens the credibility of research outcomes by clarifying the direct relationship between the dependent and independent variables, thus providing more accurate and reliable results.

Beyond the primary categories of variables commonly discussed in research methodology , there exists a diverse range of other variables that play significant roles in the design and analysis of studies. Below is an overview of some of these variables, highlighting their definitions and roles within research studies:

- Discrete variables : A discrete variable is a quantitative variable that represents quantitative data , such as the number of children in a family or the number of cars in a parking lot. Discrete variables can only take on specific values.

- Categorical variables : A categorical variable categorizes subjects or items into groups that do not have a natural numerical order. Categorical data includes nominal variables, like country of origin, and ordinal variables, such as education level.

- Predictor variables : Often used in statistical models, a predictor variable is used to forecast or predict the outcomes of other variables, not necessarily with a causal implication.

- Outcome variables : These variables represent the results or outcomes that researchers aim to explain or predict through their studies. An outcome variable is central to understanding the effects of predictor variables.

- Latent variables : Not directly observable, latent variables are inferred from other, directly measured variables. Examples include psychological constructs like intelligence or socioeconomic status.

- Composite variables : Created by combining multiple variables, composite variables can measure a concept more reliably or simplify the analysis. An example would be a composite happiness index derived from several survey questions .

- Preceding variables : These variables come before other variables in time or sequence, potentially influencing subsequent outcomes. A preceding variable is crucial in longitudinal studies to determine causality or sequences of events.

Master qualitative research with ATLAS.ti

Turn data into critical insights with our data analysis platform. Try out a free trial today.

- Get new issue alerts Get alerts

- IARS MEMBER LOGIN

Secondary Logo

Journal logo.

Colleague's E-mail is Invalid

Your message has been successfully sent to your colleague.

Save my selection

Fundamentals of Research Data and Variables: The Devil Is in the Details

Vetter, Thomas R. MD, MPH

From the Department of Surgery and Perioperative Care, Dell Medical School at the University of Texas at Austin, Austin, Texas.

Accepted for publication June 26, 2017.

Published ahead of print August 4, 2017.

Funding: None.

The author declares no conflicts of interest.

Reprints will not be available from the author.

Address correspondence to Thomas R. Vetter, MD, MPH, Department of Surgery and Perioperative Care, Dell Medical School at the University of Texas at Austin, Dell Pediatric Research Institute, Suite 1.114, 1400 Barbara Jordan Blvd, Austin, TX 78723. Address e-mail to [email protected] .

Designing, conducting, analyzing, reporting, and interpreting the findings of a research study require an understanding of the types and characteristics of data and variables. Descriptive statistics are typically used simply to calculate, describe, and summarize the collected research data in a logical, meaningful, and efficient way. Inferential statistics allow researchers to make a valid estimate of the association between an intervention and the treatment effect in a specific population, based upon their randomly collected, representative sample data. Categorical data can be either dichotomous or polytomous. Dichotomous data have only 2 categories, and thus are considered binary. Polytomous data have more than 2 categories. Unlike dichotomous and polytomous data, ordinal data are rank ordered, typically based on a numerical scale that is comprised of a small set of discrete classes or integers. Continuous data are measured on a continuum and can have any numeric value over this continuous range. Continuous data can be meaningfully divided into smaller and smaller or finer and finer increments, depending upon the precision of the measurement instrument. Interval data are a form of continuous data in which equal intervals represent equal differences in the property being measured. Ratio data are another form of continuous data, which have the same properties as interval data, plus a true definition of an absolute zero point, and the ratios of the values on the measurement scale make sense. The normal (Gaussian) distribution (“bell-shaped curve”) is of the most common statistical distributions. Many applied inferential statistical tests are predicated on the assumption that the analyzed data follow a normal distribution. The histogram and the Q–Q plot are 2 graphical methods to assess if a set of data have a normal distribution (display “normality”). The Shapiro-Wilk test and the Kolmogorov-Smirnov test are 2 well-known and historically widely applied quantitative methods to assess for data normality. Parametric statistical tests make certain assumptions about the characteristics and/or parameters of the underlying population distribution upon which the test is based, whereas nonparametric tests make fewer or less rigorous assumptions. If the normality test concludes that the study data deviate significantly from a Gaussian distribution, rather than applying a less robust nonparametric test, the problem can potentially be remedied by judiciously and openly: (1) performing a data transformation of all the data values; or (2) eliminating any obvious data outlier(s).

Der teufel steckt im detail [The devil is in the details]

Friedrich Wilhelm Nietzsche (1844–1900)

Designing, conducting, analyzing, reporting, and interpreting the findings of a research study require an understanding of the types and characteristics of data and variables. This basic statistical tutorial discusses the following fundamental concepts about research data and variables:

- Population parameter versus sample variable;

- Types of research variables;

- Descriptive statistics versus inferential statistics;

- Primary data versus secondary data and analyses;

- Measurement scales and types of data;

- Normal versus non-normal data distribution;

- Assessing for normality of data;

- Parametric versus nonparametric statistical tests; and

- Data transformation to achieve normality.

POPULATION PARAMETER VERSUS SAMPLE VARIABLE

In conducting a research study, one ideally would obtain the pertinent data from all the members of the specific, targeted population, which defines the population parameter. However, this is seldom feasible, unless the entire targeted population is relatively small, and all its members are easily and readily accessible. 1–4

Pertinent data instead are typically collected on a random, representative subset or sample chosen from the members of the overall specific population, which defines the sample variable. The unknown population parameter, representing the characteristic or association of interest, is then estimated from this chosen study sample, with a varying degree of accuracy or precision. One essentially extrapolates from this sample to make conclusions about the population. 1–4

TYPES OF RESEARCH VARIABLES

When undertaking research, there are 4 basic types of variables to consider and define: 1 , 5–7

- Independent variable: A variable that is believed to be the cause of some of the observed effect or association and one that is directly manipulated by the researcher during the study or experiment.

- Dependent variable: A variable that is believed to be directly affected by changes in an independent variable and one that is directly measured by the researcher during the study or experiment.

- Predictor variable: A variable that is believed to predict another variable and one that is identified, determined, and/or controlled by the researcher during the study or experiment (essentially synonymous with an independent variable).

- Outcome variable: A variable that is believed to change as a result of a change in a predictor variable and one that is directly measured by the researcher during the study or experiment (essentially synonymous with a dependent variable).

DESCRIPTIVE STATISTICS VERSUS INFERENTIAL STATISTICS

Descriptive statistics are specific methods used simply to calculate, describe, and summarize the collected research data in a logical, meaningful, and efficient way. Descriptive statistics are reported numerically in the text and tables or in graphical forms. 1 , 8 Descriptive statistics will be the topic of the next basic tutorial in this series.

Researchers often pose a hypothesis (“if this is done, then this occurs” or “if this occurs, then this happens”) and seek to describe and to compare the quantitative or qualitative characteristics of 2 or more populations: 1 with and 1 without a specific intervention, or before and after the intervention in the same group. Purely descriptive statistics alone do not allow a conclusion to be made about association or effect and thus cannot answer a research hypothesis. 1 , 8

Inferential statistics involves using available data about a sample variable to make a valid inference (estimate) about its corresponding, underlying, but unknown population parameter. Inferential statistics also allow researchers to make a valid estimate of the association between an intervention and the treatment effect (causal-effect) in a specific population, based upon their randomly collected, representative sample data. 1 , 3 , 8

For example, Castro-Alves et al 9 recently reported on their prospective, randomized, placebo-controlled, double-blinded trial, in which the perioperative administration of duloxetine improved postoperative quality of recovery after abdominal hysterectomy. Based upon their study sample, these researchers made the valid inference that duloxetine appears to be an effective medication to improve postoperative quality of recovery in all similar patients undergoing abdominal hysterectomy. 9

PRIMARY DATA VERSUS SECONDARY DATA AND ANALYSIS

Frequently, there is confusion about the terms primary data and primary data analysis versus secondary data and secondary data analysis. 10

Primary data are intentionally and originally collected for the purposes of a specific research study, and it is a priori planned primary data analysis. 10–12 Primary data are usually collected prospectively but can be collected retrospectively. 12 Valid and reliable primary clinical data collection tends to be time consuming, labor intensive, and costly, especially if undertaken on a large scale and/or at multiple, independent, care-delivery locations or sites. 13

For example, a large-scale randomized study is being undertaken in 40 centers in 5 countries over 3 years to determine whether a stronger association (and thus more likely causality) exists between relatively deep anesthesia, as guided by the bispectral index, and increased postoperative mortality. 14

Likewise, the General Anesthesia compared to Spinal anesthesia study is an ongoing prospective randomized, controlled, multisite, trial designed to assess the influence of general anesthesia on neurodevelopment at 5 years of age. 15

Secondary data are initially collected for other purposes, and these existing data are subsequently used for a research study and its secondary data analyses. 11 , 16 Examples include the myriad of bedside clinical data recorded for routine patient care and administrative claims data utilized for billing and third-party payer purposes. 13

Such hospital administrative data (health care claims data) represent an important alternative data source that can be used to answer a broad range of research questions, including perioperative and critical care medicine, which would be difficult to study with a prospective randomized controlled trial. 17 , 18

Secondary clinical data can also be gathered and coalesced into a large-scale research data repository or warehouse, which is intentionally created for quality assurance, performance improvement, health services, or clinical outcomes research purposes. Data on study-specific variables are then extracted (“abstracted”) from one of these already existing secondary data sources. 16 , 19 , 20 An example is the National Anesthesia Clinical Outcomes Registry, developed by the Anesthesia Quality Institute of the American Society of Anesthesiologists. 21

Despite the resources needed for their creation, maintenance, and extraction, secondary data are typically less time consuming, labor intensive, and costly than primary data, especially if needed on a large scale (eg, health services and outcomes research questions in perioperative and critical care medicine). 22 However, the possible study variables are limited to those that already exist. 16 , 20 , 22 Furthermore, the validity of the findings of the research study can be adversely affected by a poorly constructed or executed secondary data collection or extraction process (“garbage in—garbage out”). 16 , 22

The term “secondary analysis of existing data” is generally preferred to the traditional term “secondary data analysis” because the former avoids the need to decide whether the data used in an analysis are primary or secondary. 10 An example is the predefined secondary analysis of existing data, prospectively collected in the Vascular Events in Non-Cardiac Surgery Patients Cohort Evaluation study, which assessed the association between preoperative heart rate and myocardial injury after noncardiac surgery. 23

MEASUREMENT SCALES AND TYPES OF DATA

Categorical data.

Some demographic and clinical characteristics can be parsed into and described using separate, discrete categories. The key distinction is the lack of rank order to these discrete categories. Categorical data can also be called nominal data (from the Latin word, nomen, for “name”), implying that there is no ordering to the categories, but rather simply names. Categorical data can be either dichotomous (2 categories) or polytomous (more than 2 categories). 1 , 5 , 24 , 25

Dichotomous data have only 2 categories, and thus are considered binary (yes or no; positive or negative). 1 , 5 , 24 , 25 Many clinical outcomes (eg, postoperative nausea/vomiting, myocardial infarction, stroke, sepsis, and mortality) can be recorded and reported as dichotomous data.

Polytomous data have more than 2 categories. Examples of such data include sex (man, woman, or transgender), race/ethnicity (American Indian or Alaska Native, Asian, black or African American, Hispanic or Latino, Native Hawaiian or other Pacific Islander, and white or Caucasian), body habitus (ectomorph, mesomorph, or endomorph), hair color (black, brown, blond, or red), blood type (A, B, AB, or O), and diet (carnivore, omnivore, vegetarian, or vegan). 1 , 5 , 6 , 24 , 25

Ordinal Data

Unlike nominal or categorical data, ordinal data follow a logical order. Ordinal data are rank ordered, typically based on a numerical scale that is comprised of a small set of discrete classes or integers. 1 , 5 , 24 , 25 A key characteristic is that the response categories have a rank order, but the intervals between the values cannot be presumed to be equal. 26 The numeric Likert scale (1 = strongly disagree to 5 = strongly agree), which is commonly used to measure respondent attitude, generates ordinal data. 26 Other examples of ordinal data include socioeconomic status (low, medium, or high), highest educational level completed (elementary, middle school, high school, college, or postcollege graduate), the American Society of Anesthesiologists Physical Status Score (I, II, III, IV, or V), and the 11-point numerical rating scale (0–10) for pain intensity.

Discrete Data.

Categorical data that are counts or integers (eg, the number of episodes of intraoperative bradycardia or hypotension experienced by a patient) are typically called discrete data. Discrete data may be more appropriately analyzed using different statistical methods than ordinal data 27 ; however, in practice, the same methods are often used for these 2 variable types. In general, “discrete data variables” refer to those which can only take on certain specific values and are thus distinguished from continuous data, which are discussed next.

Continuous (Interval or Ratio) Data

Continuous data are measured on a continuum and can have or occupy any numeric value over this continuous range. Continuous data can be meaningfully divided into smaller and smaller or finer and finer increments, depending upon the sensitivity or precision of the measurement instrument. 25

Interval data are a form of continuous data in which equal intervals represent equal differences in the property being measured. 1 , 5 , 6 , 28 For example, the 1° difference between a temperature of 37° and 36° is the same 1° difference as between a temperature of 36° and 35°. However, when using the Fahrenheit or Celsius scale, a temperature of 100° is not twice as hot as 50° because a temperature of 0° on either scale does not mean “no heat” (but this would be true for Kelvin temperature). 28 This leads us naturally to a definition of ratio data.

Ratio data are another form of continuous data, which have the same properties as interval data, plus a true definition of an absolute zero point, and the ratios of the values on the measurement scale must make sense. 1 , 5 , 6 , 28 Age, height, weight, heart rate, and blood pressure are also ratio data. For example, a weight of 4 g is twice the weight of 2 g. 28 The visual analog scale (VAS) pain intensity tool generates ratio data. 29 A VAS score of 0 represents no pain, and a VAS score of 60 actually represents twice as much pain as a VAS score of 30.

NORMAL VERSUS NON-NORMAL DATA DISTRIBUTION

A statistical distribution is a graph of the possible specific values or the intervals of values of a variable (on the x-axis) and how often the observed values occur (on the y-axis). There are multiple types of data distribution, including the normal (Gaussian) distribution, binomial distribution, and Poisson distribution. 30 , 31 The so-inclined reader is referred to a more in-depth discussion of the various types or patterns of data distribution. 32

The normal (Gaussian) distribution (the “bell-shaped curve”) ( Figure 1 ) is one of the most common statistical distributions. 33 , 34 Many applied inferential statistical tests are predicated on the assumption that the analyzed data follow a normal distribution. Therefore, the normal distribution is also one of the most relevant to basic inferential statistics. 30 , 31 , 33–35

METHODS FOR ASSESSING DATA NORMALITY

The histogram and the Q–Q plot are 2 graphical methods to visually assess if a set of data have a normal distribution (display “normality”). The Shapiro-Wilk test and Kolmogorov-Smirnov test are 2 well-known and historically widely applied quantitative methods to assess for data normality. 36 Graphical methods and quantitative testing can complement one another; therefore, it is preferable that data normality be assessed both visually and with a statistical test. 30 , 37 , 38 However, if one is uncertain about how to correctly interpret the more subjective histogram or the Q–Q plot, it is better to rely instead on a numerical test statistic. 37 See the study by Kuhn et al 39 for an example.

The histogram or frequency distribution of the study data can be used to graphically assess for normality. If the study data are normally distributed, the histogram or frequency distribution of these data will fall within the shape of a bell curve ( Figure 2A ), whereas if the study data are not normally distributed, the histogram or frequency distribution of these data will fall outside the shape of a bell curve ( Figure 2B ). 35 When applicable, authors state in their manuscript their use of a histogram to assess the normality of their primary outcome data, but they do not reproduce this graph. See the study by Blitz et al 40 for an example.

One can also use the output of a quantile–quantile or Q–Q plot to graphically assess if a set of data plausibly came from a normal distribution. The Q–Q plot is a scatterplot of the quantiles of a theoretical normal data set (on x-axis) and the quantiles of the actual sample data set (on y-axis). If the data are normally distributed, the data points on the Q–Q plot will be closely aligned with the 45°, reference diagonal line ( Figure 3A ). If the individual data points stray from the reference diagonal line in an obviously nonlinear fashion, the data are not normally distributed ( Figure 3B ). When applicable, authors state in their manuscript their use of a Q–Q plot to assess the normality of their primary outcome data, but they do not reproduce this graph. See the study by Jæger et al 41 for an example.

Shapiro-Wilk Test and Kolmogorov-Smirnov Test

Both the Shapiro-Wilk and the Kolmogorov-Smirnov tests compare the scores in the study sample with a normally distributed set of scores with the same mean and SD; their null hypothesis is that sample distribution is normal. Therefore, if the test is significant ( P < .05), the sample data distribution is non-normal. 30 , 36 When applicable, authors should state in their manuscript which test was used to assess the normality of their primary outcome data and report its corresponding P value.

The Shapiro-Wilk test is more appropriate for small sample sizes (N ≤ 50), but it can also be validly applied with large sample sizes. The Shapiro-Wilk test provides greater power than the Kolmogorov-Smirnov test (even with its Lilliefors correction). For these reasons, the Shapiro-Wilk test has been recommended as the numerical means for assessing data normality. 30 , 36 , 37

PARAMETRIC VERSUS NONPARAMETRIC STATISTICAL TESTS

The details and appropriate use of the wide array of available inferential statistical tests will be the topics of several future tutorials in this current series.

These statistical tests are commonly classified as parametric versus nonparametric. This distinction is generally predicated on the number and rigor of the assumptions (requirements) regarding the underlying study population. 42 Parametric statistical tests make certain assumptions about the characteristics and/or parameters of the underlying population distribution upon which the test is based, whereas nonparametric tests make fewer or less rigorous assumptions. 42

Specifically, parametric statistical tests assume that the data have been sampled from a specific probability distribution (a normal distribution); nonparametric statistical tests make no such distribution assumption. 43 , 44 In general, parametric tests are more powerful (“robust”) than nonparametric tests, and so if possible, a parametric test should be applied. 43

DATA TRANSFORMATION TO ACHIEVE NORMALITY

Researchers may find that their available study data are not normally distributed, ostensibly calling into question the validity of using a more robust parametric statistical test.

While the results of the above tests of normality are typically reported (including in Anesthesia & Analgesia ), they are not a panacea. With small sample sizes, these normality tests do not have much power to detect a non-Gaussian distribution. With large sample sizes, minor deviations from the Gaussian “ideal” might be deemed “statistically significant” by a normality test; however, the commonly applied parametric t test and analysis of variance are then fairly tolerant of a violation of the normality assumption. 36 , 45 The decision to apply a parametric test versus a nonparametric test is thus sometimes a difficult one, requiring thought and perspective, and should not be simply automated. 36

If the normality test concludes that study data deviate significantly from a Gaussian distribution, rather than applying a less robust nonparametric test, the problem can potentially be remedied by judiciously and openly: (1) performing a data transformation of all the data values; or (2) eliminating any obvious data outlier(s). 36 , 46 Most commonly, logarithmic, square root, or reciprocal data transformation are applied to achieve data normality. 47 See the studies by Law et al 48 and Maquoi et al 49 for examples.

CONCLUSIONS

A basic understanding of data and variables is required to design, conduct, analyze, report, and interpret, as well as to understand and apply, the findings of a research study. The assumption of study data demonstrating a normal (Gaussian) distribution, and the corresponding choice of a parametric versus nonparametric statistical test, can be a complex and vexing issue. As will be discussed in detail in future tutorials, the type and characteristics of study data and variables essentially determine the appropriate descriptive statistics and inferential statistical tests to apply.

DISCLOSURES

Name: Thomas R. Vetter, MD, MPH.

Contribution: This author wrote and revised the manuscript.

This manuscript was handled by: Jean-Francois Pittet, MD.

- Cited Here |

- Google Scholar

- + Favorites

- View in Gallery

Readers Of this Article Also Read

Magic mirror, on the wall—which is the right study design of them all—part i, descriptive statistics: reporting the answers to the 5 basic questions of who,..., in the beginning—there is the introduction—and your study hypothesis, significance, errors, power, and sample size: the blocking and tackling of..., diagnostic testing and decision-making: beauty is not just in the eye of the....

| Elements of Research | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Frequently asked questionsWhy are independent and dependent variables important. Determining cause and effect is one of the most important parts of scientific research. It’s essential to know which is the cause – the independent variable – and which is the effect – the dependent variable. Frequently asked questions: MethodologyAttrition refers to participants leaving a study. It always happens to some extent—for example, in randomized controlled trials for medical research. Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group . As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased . Action research is conducted in order to solve a particular issue immediately, while case studies are often conducted over a longer period of time and focus more on observing and analyzing a particular ongoing phenomenon. Action research is focused on solving a problem or informing individual and community-based knowledge in a way that impacts teaching, learning, and other related processes. It is less focused on contributing theoretical input, instead producing actionable input. Action research is particularly popular with educators as a form of systematic inquiry because it prioritizes reflection and bridges the gap between theory and practice. Educators are able to simultaneously investigate an issue as they solve it, and the method is very iterative and flexible. A cycle of inquiry is another name for action research . It is usually visualized in a spiral shape following a series of steps, such as “planning → acting → observing → reflecting.” To make quantitative observations , you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature. Criterion validity and construct validity are both types of measurement validity . In other words, they both show you how accurately a method measures something. While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which a test can predictively (in the future) or concurrently (in the present) measure something. Construct validity is often considered the overarching type of measurement validity . You need to have face validity , content validity , and criterion validity in order to achieve construct validity. Convergent validity and discriminant validity are both subtypes of construct validity . Together, they help you evaluate whether a test measures the concept it was designed to measure.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.